Extraction of Sunflower Lodging Information Based on UAV Multi-Spectral Remote Sensing and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

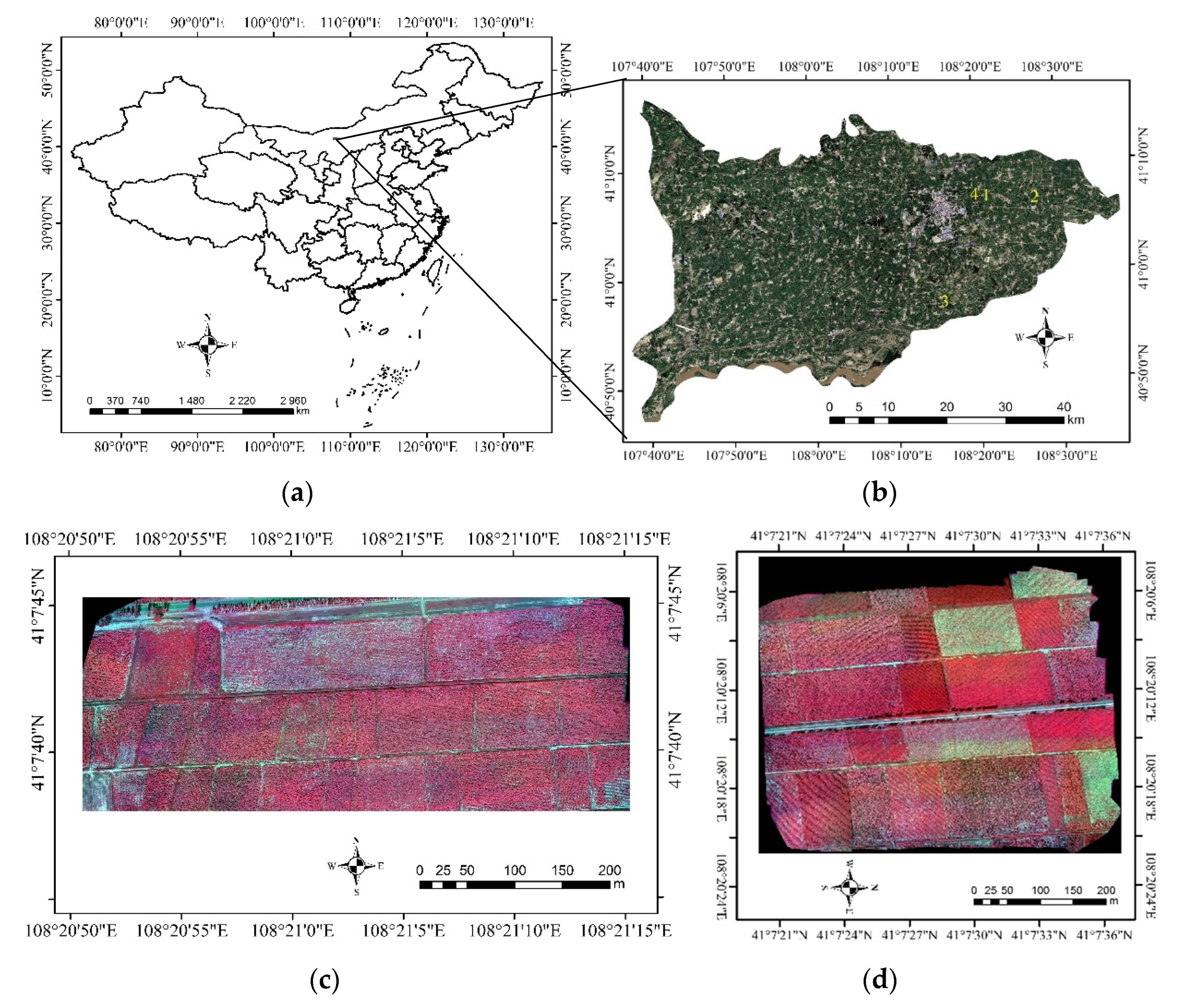

2.1. Study Area and Data

2.1.1. Selection of Study Areas

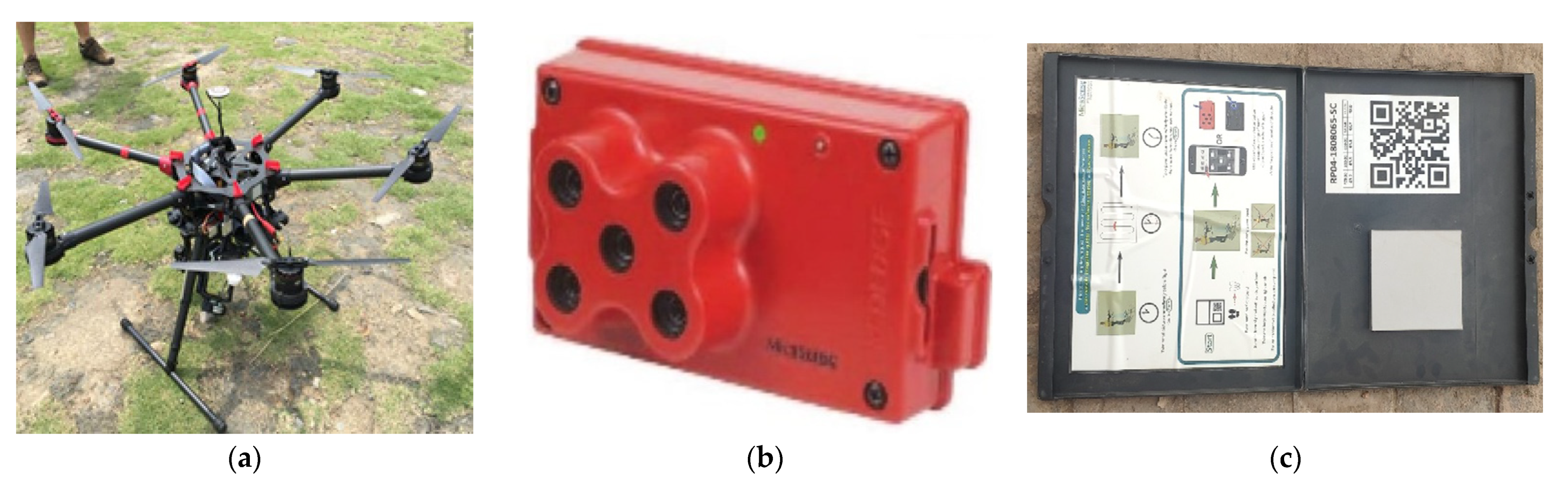

2.1.2. Data Acquisition

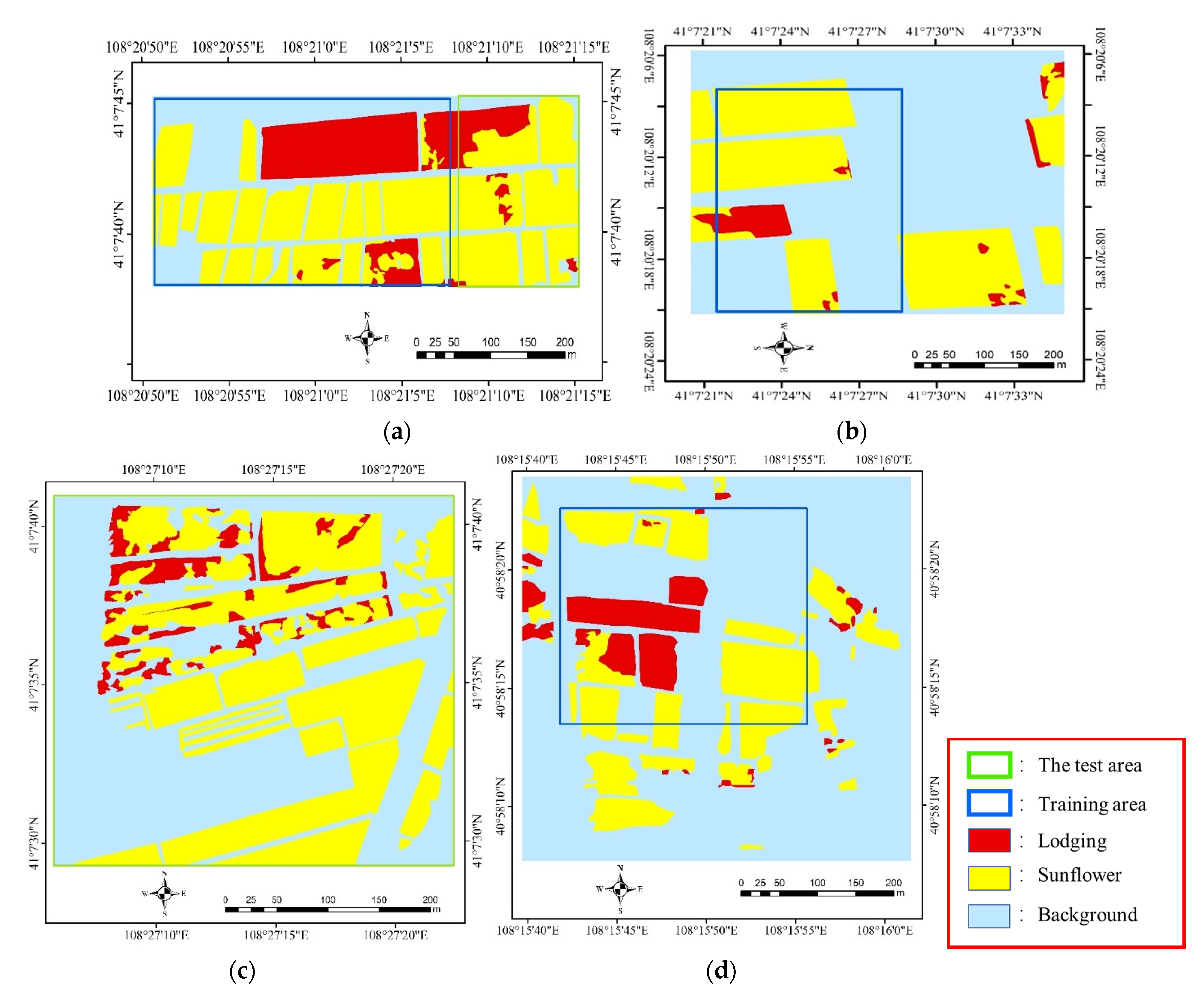

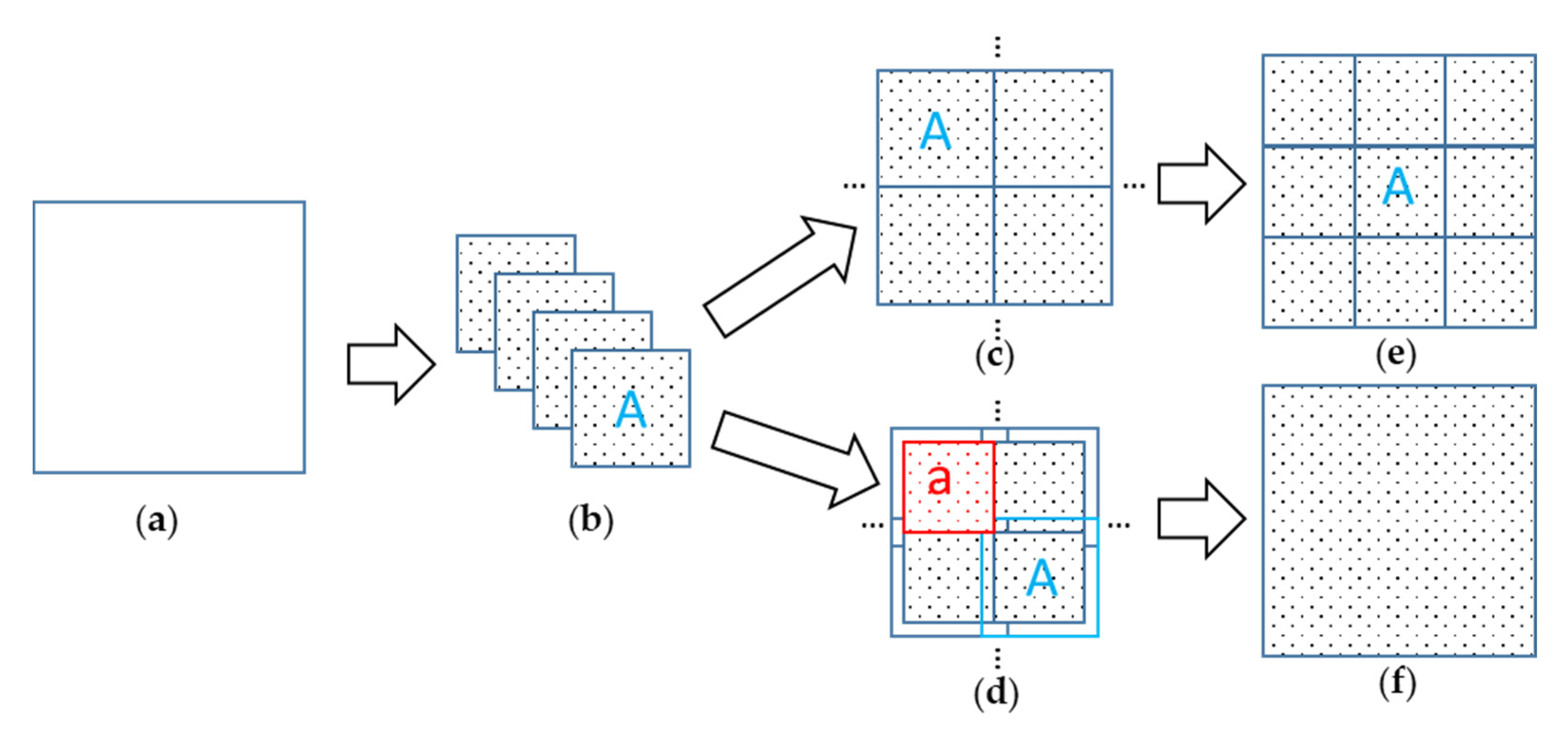

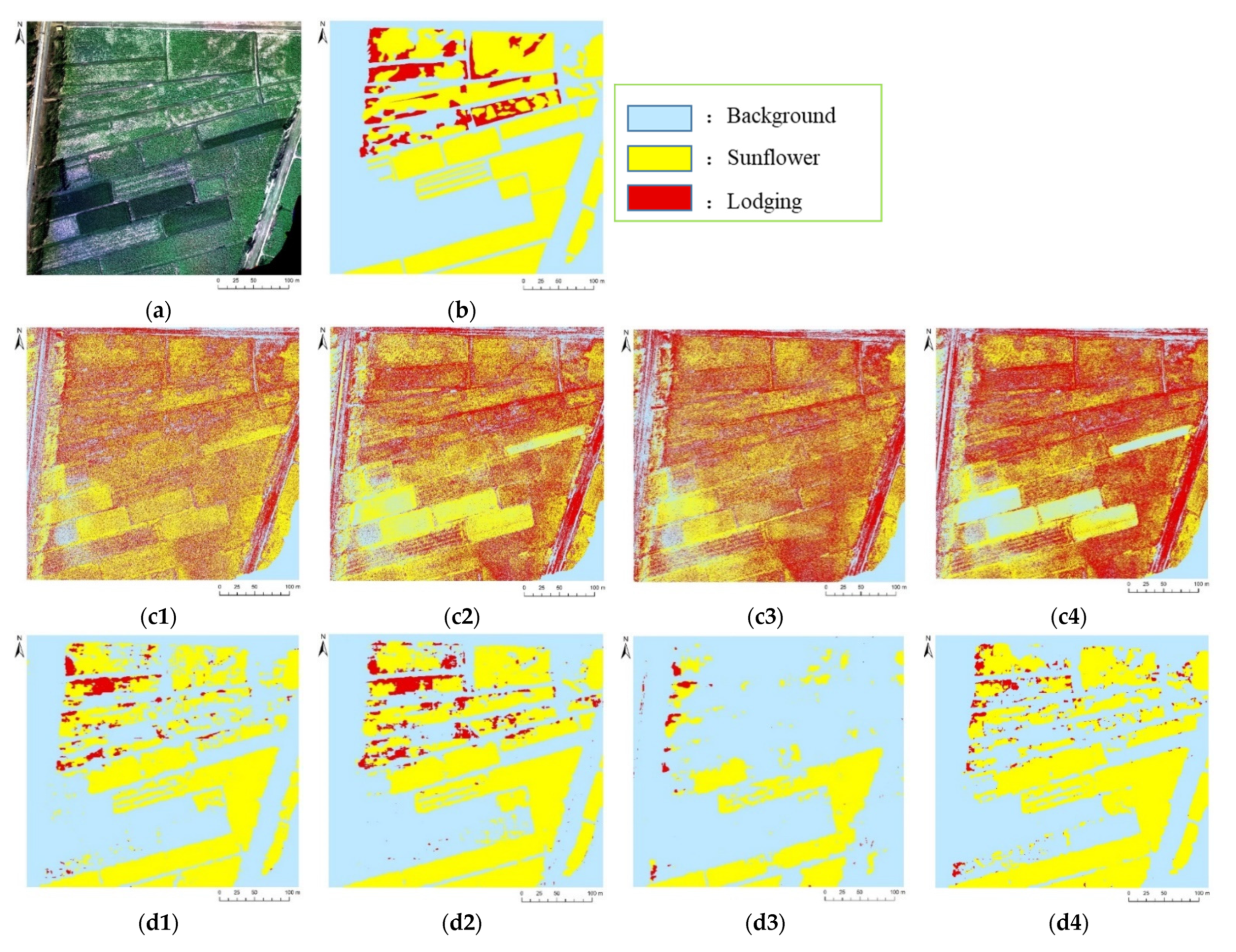

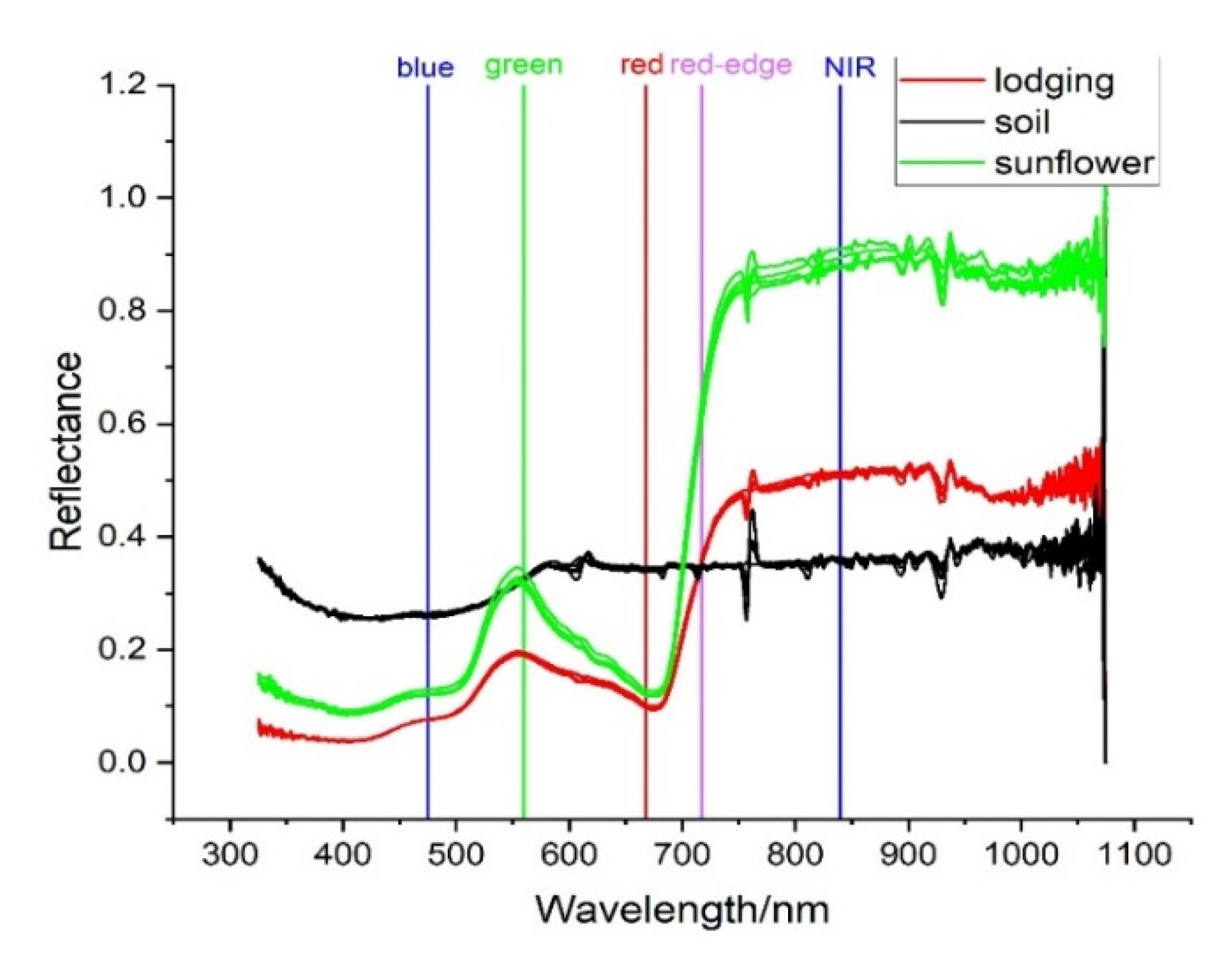

2.1.3. Data Set Construction

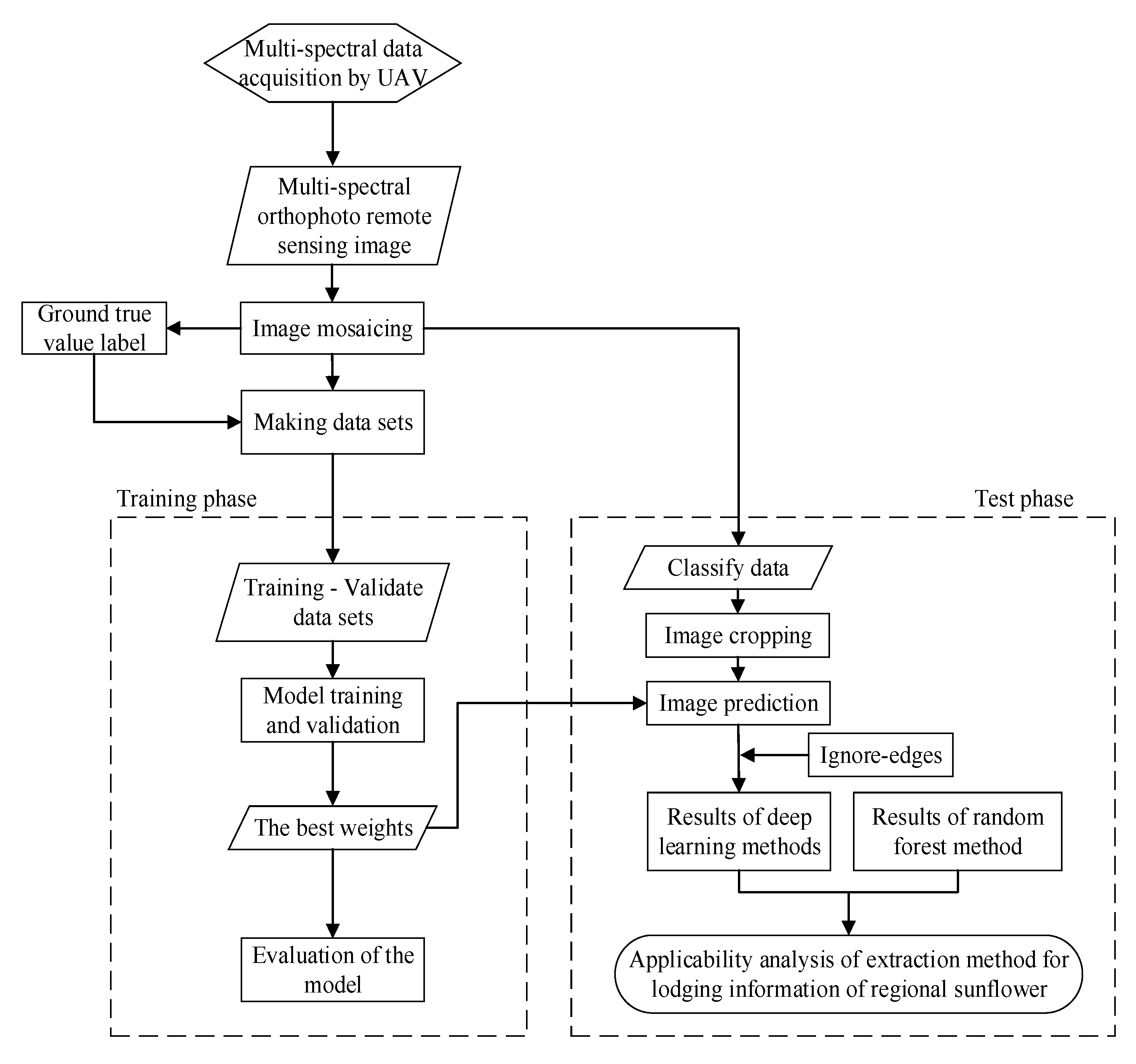

2.2. Methods

2.2.1. Extracting Sunflower Lodging

2.2.2. Random Forest Method

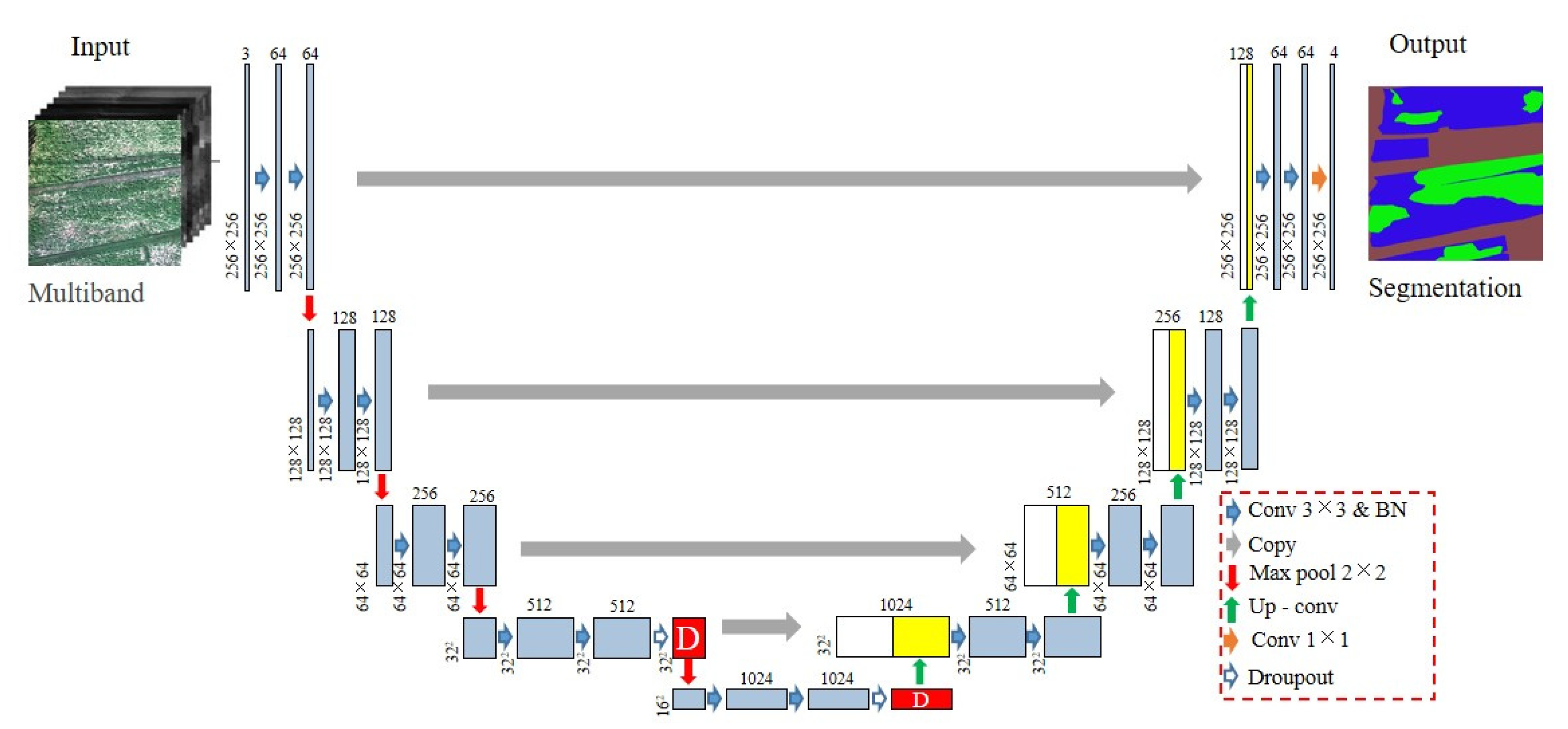

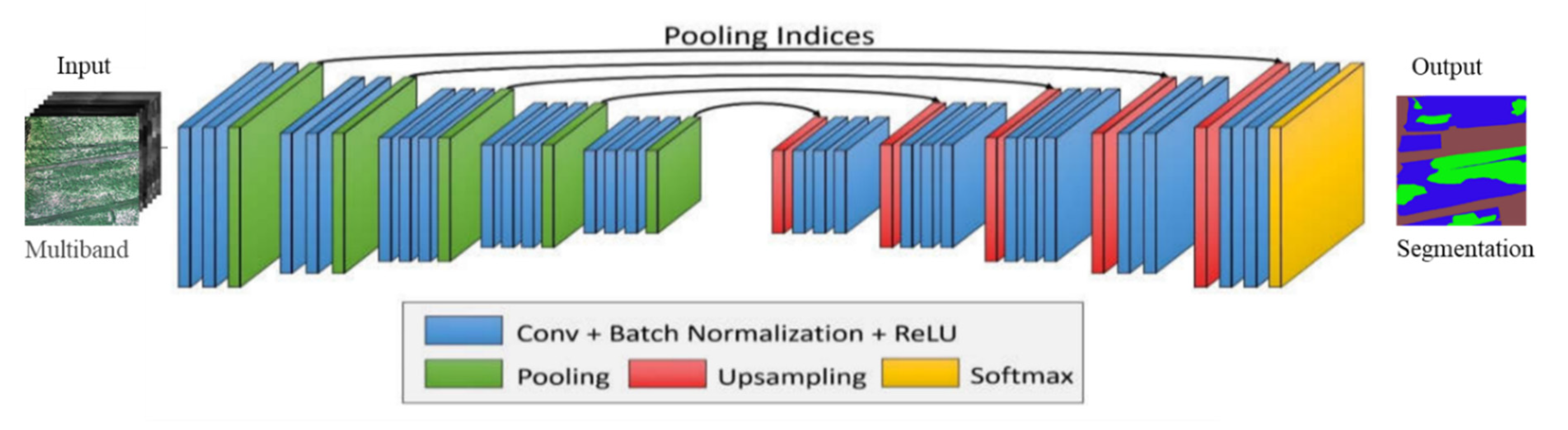

2.2.3. U-Net and SegNet Methods

2.2.4. Model Training

2.2.5. Prediction of Results

2.2.6. Accuracy Evaluation Method

3. Results

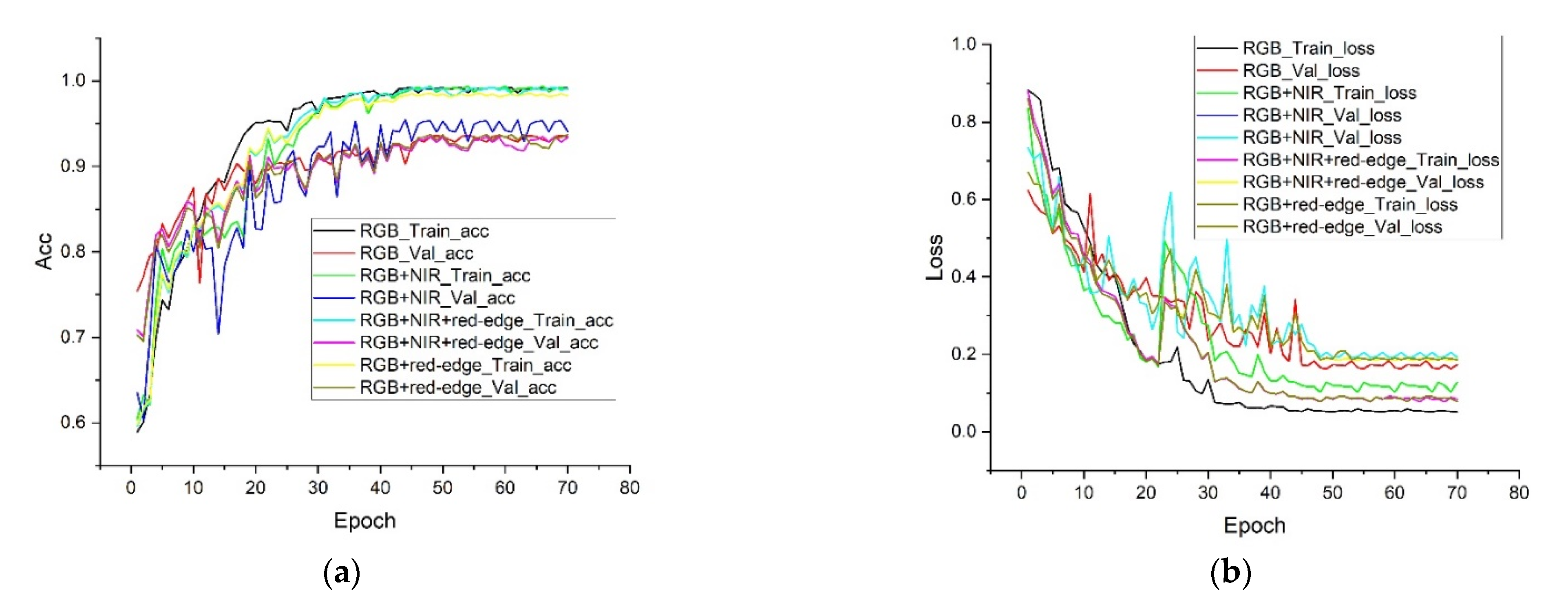

3.1. Results of Model Training and Validation

3.2. Results of Sunflower Lodging Test

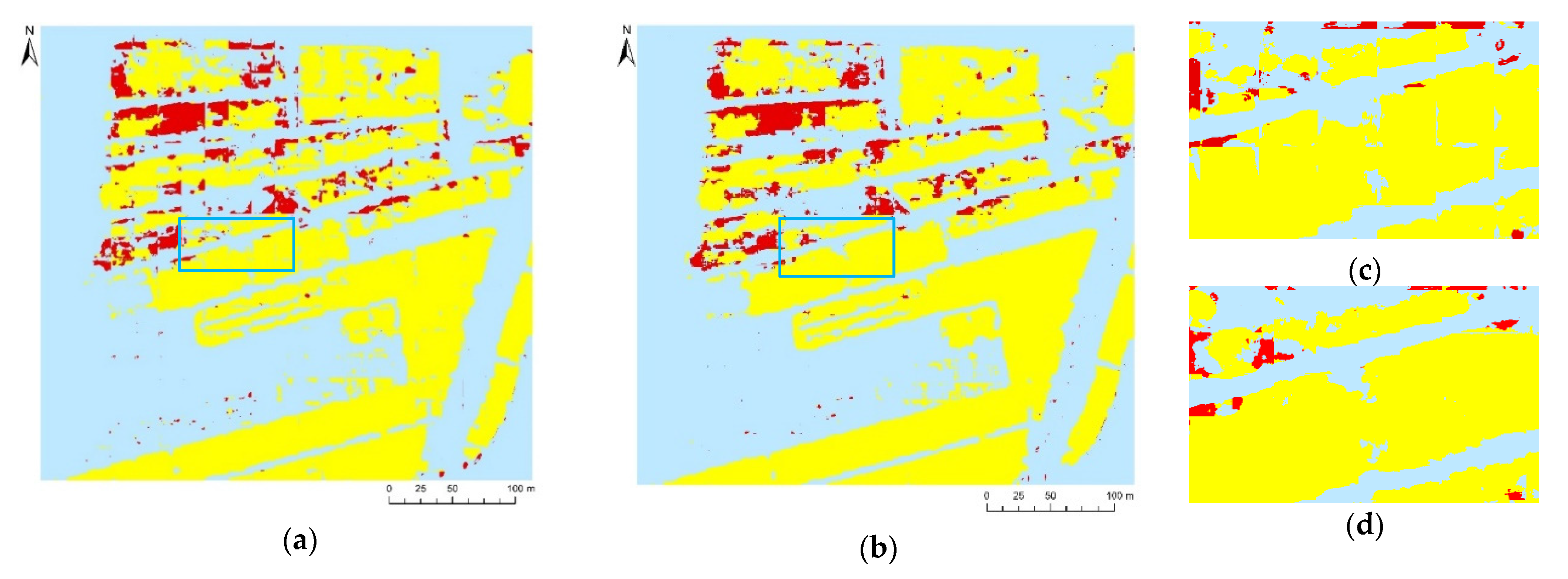

3.3. Direct Splicing Region Prediction Results

4. Discussion

4.1. Comparison of Classification of Zones of Sunflower Lodging

4.2. Comparative Analysis of Related Studies

4.3. Limitations of SegNet and U-Net in Extracting Sunflower Lodging

4.4. Summary and Prospect

5. Conclusions

- Compared with the random forest method, the deep learning methods classified areas of sunflower lodging more accurately. Deep learning can mine deep image features and avoids the "salt-and-pepper" effect of pixel-level classification. In the experiments, the overall accuracy of the deep learning methods was about 40 percentage points higher than that of the random forest method. However, the commonly used SegNet and U-Net models do not generalize well to lodging areas where crop growth is complex.

- Using UAV multi-spectral images, the effect of adding multi-spectral bands to RGB images on the extraction of sunflower lodging information was studied for several band combinations. The results of both deep learning methods showed that adding the NIR band increases classification accuracy, whereas adding the red-edge band reduces it. Thus, although more classification-related information can improve accuracy, not all information is directly useful for classification, and bands that degrade it need to be filtered out.

- Compared with the traditional method, the proposed method for regional prediction of sunflower lodging, which ignores the edge information of each image, removed stitching traces and improved classification accuracy by 2%. These results can provide technical support for accurate regional prediction of sunflower lodging.
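
The edge-ignoring prediction described in the last conclusion can be illustrated with a short sketch. It is not the authors' implementation: the function name, the tile size, the margin width, and the reflect padding are all assumptions made for illustration. The idea shown is only that each tile is predicted with some overlap and the outer margin of every predicted tile is discarded before the results are assembled.

```python
import numpy as np

def predict_ignoring_edges(image, predict_tile, tile=256, margin=32):
    """Classify a large image tile by tile, keeping only each tile's center.

    image:        (H, W, C) array.
    predict_tile: callable mapping a (tile, tile, C) array to a (tile, tile)
                  array of class labels (hypothetical stand-in for a model).
    tile, margin: illustrative values, not taken from the paper (margin > 0).
    """
    h, w, _ = image.shape
    core = tile - 2 * margin  # side length of the region kept from each tile
    # Extra padding so the image size becomes a multiple of the core size.
    pad_h = (-h) % core
    pad_w = (-w) % core
    # Reflect-pad by one margin on every side (an assumed choice) so that
    # border pixels also fall inside the kept center of some tile.
    padded = np.pad(
        image,
        ((margin, margin + pad_h), (margin, margin + pad_w), (0, 0)),
        mode="reflect",
    )
    out = np.zeros((h + pad_h, w + pad_w), dtype=np.int64)
    for top in range(0, h + pad_h, core):
        for left in range(0, w + pad_w, core):
            window = padded[top:top + tile, left:left + tile]
            labels = predict_tile(window)
            # Discard the outer margin of the prediction, keep the center.
            out[top:top + core, left:left + core] = labels[margin:-margin,
                                                           margin:-margin]
    return out[:h, :w]
```

With a trained network, `predict_tile` would wrap the model's forward pass and an argmax over the class dimension. Neighboring tiles then overlap by twice the margin, so each output pixel comes from the center of exactly one tile.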

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned aerial vehicle |
| RGB | Red, green, and blue bands |
| NIR | Near-infrared band |
| GCPs | Ground control points |
| IoU | Intersection-over-union |
| OA | Overall accuracy |
| Val | Validation |
| Acc | Accuracy |

Appendix A

| Model | Information | F1-Score (%): Background | F1-Score (%): Sunflower | F1-Score (%): Lodging | IoU (%): Background | IoU (%): Sunflower | IoU (%): Lodging | OA (%) |
|---|---|---|---|---|---|---|---|---|
| SegNet | Red-edge | 81.84 | 69.58 | 5.69 | 69.27 | 53.35 | 2.93 | 75.80 |
| SegNet | Red-edge + NIR | 90.48 | 87.22 | 30.97 | 82.61 | 77.34 | 18.32 | 87.06 |

| UAV parameter | Value | Camera parameter | Value |
|---|---|---|---|
| Wheelbase/mm | 900 | Camera model | MicaSense RedEdge-M |
| Takeoff mass/kg | 4.7–8.2 | Pixels | 1280 × 960 |
| Payload/g | 820 | Number of bands | 5 |
| Endurance time/min | 20 | Wavelength/nm | 400–900 |
| Digital communication distance/km | 3 | Focal length/mm | 5.5 |
| Battery power/(mA·h) | 16,000 | Field of view/(°) | 47.2 |
| Cruising speed/(m·s⁻¹) | 5 | | |

| Component | Specification |
|---|---|
| Operating system | Windows 10 Enterprise 64-bit (DirectX 12) |
| CPU | Intel(R) Core(TM) i9-10920X CPU @ 3.50 GHz (12 cores/GPU node) |
| RAM | 64 GB/GPU node |
| Accelerator | NVIDIA GeForce RTX 3090 24 GB |
| Image | TensorFlow-19.08-py3 |
| Libraries | Python 3.6.13, NumPy 1.19.5, scikit-learn 0.24.1, TensorFlow-GPU 2.4.1, Keras 2.4.3, Jupyter Notebook, CUDA 11.2 |

| Evaluation Metric | Formula |
|---|---|
| Precision | |
| Recall | |
| Accuracy | |
| Overall accuracy | |
| F1-score | |
| Intersection-over-Union | |
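
The formula column of the table above is empty in this copy, so the following sketch uses the standard textbook definitions of these metrics, computed per class from a confusion matrix. It is assumed, not confirmed by the source, that the article's formulas match them. The function name and the example matrix in the usage note are illustrative only.

```python
import numpy as np

def segmentation_metrics(conf):
    """Per-class precision, recall, F1 and IoU, plus overall accuracy.

    conf[i, j] counts pixels whose true class is i and predicted class is j.
    Assumes every class occurs at least once in both truth and prediction,
    otherwise some ratios divide by zero.
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)               # correctly classified pixels per class
    fp = conf.sum(axis=0) - tp       # predicted as the class, but wrong
    fn = conf.sum(axis=1) - tp       # belonging to the class, but missed
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    oa = tp.sum() / conf.sum()       # share of all pixels labelled correctly
    return {"precision": precision, "recall": recall,
            "f1": f1, "iou": iou, "oa": oa}
```

For example, `segmentation_metrics([[50, 5, 5], [10, 80, 10], [0, 5, 35]])` evaluates a made-up three-class matrix (background, sunflower, lodging). Under these definitions the per-class F1-score and IoU are tied by IoU = F1 / (2 − F1), which gives a quick consistency check between the two columns of a results table.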

| Information | Random Forest: Train_Acc (%) | Random Forest: Val_Acc (%) | SegNet: Train_Acc (%) | SegNet: Train_Loss (%) | SegNet: Val_Acc (%) | SegNet: Val_Loss (%) | U-Net: Train_Acc (%) | U-Net: Train_Loss (%) | U-Net: Val_Acc (%) | U-Net: Val_Loss (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| RGB | 81.09 | 67.00 | 99.17 | 5.13 | 93.47 | 17.31 | 98.99 | 11.96 | 95.10 | 19.20 |
| RGB + NIR | 99.10 | 79.77 | 99.67 | 4.73 | 94.07 | 16.71 | 99.01 | 8.07 | 95.21 | 14.24 |
| RGB + red-edge | 98.61 | 77.96 | 98.17 | 5.83 | 93.17 | 17.39 | 98.79 | 11.55 | 92.89 | 33.33 |
| RGB + NIR + red-edge | 99.96 | 85.56 | 99.87 | 4.33 | 94.67 | 16.11 | 99.27 | 10.21 | 96.52 | 15.52 |
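
The four entries in the "Information" column can be read as channel subsets of the five-band image. The sketch below shows one way such inputs might be assembled. The channel order in `BAND_INDEX`, the dictionary names, and the function name are assumptions for illustration; the source does not state how the bands were stacked.

```python
import numpy as np

# Assumed channel order of the stacked five-band image (not from the source).
BAND_INDEX = {"blue": 0, "green": 1, "red": 2, "nir": 3, "red_edge": 4}

# Band combinations named in the "Information" column of the table above.
COMBINATIONS = {
    "RGB": ["red", "green", "blue"],
    "RGB + NIR": ["red", "green", "blue", "nir"],
    "RGB + red-edge": ["red", "green", "blue", "red_edge"],
    "RGB + NIR + red-edge": ["red", "green", "blue", "nir", "red_edge"],
}

def select_bands(image, combination):
    """Return the channels of an (H, W, 5) image for one band combination."""
    idx = [BAND_INDEX[name] for name in COMBINATIONS[combination]]
    return image[..., idx]
```

A model for a given combination then takes an input with as many channels as the list has entries, for example four channels for "RGB + NIR".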

| Model | Information | Test Area | F1-Score (%): Background | F1-Score (%): Sunflower | F1-Score (%): Lodging | IoU (%): Background | IoU (%): Sunflower | IoU (%): Lodging | OA (%) |
|---|---|---|---|---|---|---|---|---|---|
| Random Forest | RGB | 2 | 54.14 | 46.16 | 15.05 | 37.12 | 30.00 | 8.14 | 41.80 |
| Random Forest | RGB + NIR | 2 | 52.62 | 46.84 | 15.58 | 35.71 | 30.58 | 8.45 | 42.87 |
| Random Forest | RGB + red-edge | 2 | 50.09 | 46.32 | 13.96 | 33.41 | 30.14 | 7.50 | 40.36 |
| Random Forest | RGB + NIR + red-edge | 2 | 51.69 | 48.27 | 15.07 | 34.86 | 31.81 | 8.15 | 43.29 |
| SegNet | RGB | 2 | 87.71 | 83.08 | 41.71 | 78.11 | 71.05 | 26.35 | 83.98 |
| SegNet | RGB | 1 | 81.71 | 93.40 | 62.30 | 69.08 | 87.62 | 55.24 | 88.53 |
| SegNet | RGB + NIR | 2 | 91.08 | 88.58 | 51.68 | 83.62 | 79.50 | 34.85 | 88.23 |
| SegNet | RGB + NIR | 1 | 84.32 | 94.59 | 84.82 | 72.89 | 89.75 | 69.77 | 90.75 |
| SegNet | RGB + red-edge | 2 | 77.56 | 57.36 | 11.83 | 63.35 | 40.21 | 6.28 | 69.47 |
| SegNet | RGB + red-edge | 1 | 75.99 | 93.37 | 37.12 | 61.27 | 87.56 | 22.79 | 85.61 |
| SegNet | RGB + NIR + red-edge | 2 | 87.87 | 85.73 | 23.93 | 78.37 | 75.02 | 13.59 | 84.49 |
| SegNet | RGB + NIR + red-edge | 1 | 80.80 | 93.85 | 65.87 | 67.79 | 88.42 | 59.76 | 87.79 |
| U-Net | RGB | 2 | 84.39 | 79.36 | 35.43 | 72.99 | 65.78 | 21.53 | 80.62 |
| U-Net | RGB | 1 | 77.92 | 91.27 | 58.80 | 63.82 | 83.94 | 52.28 | 85.36 |
| U-Net | RGB + NIR | 2 | 89.58 | 86.43 | 45.37 | 81.12 | 76.11 | 29.34 | 86.47 |
| U-Net | RGB + NIR | 1 | 82.46 | 93.19 | 78.85 | 70.16 | 87.24 | 62.66 | 88.26 |
| U-Net | RGB + red-edge | 2 | 81.55 | 70.12 | 7.83 | 68.84 | 53.99 | 4.08 | 75.56 |
| U-Net | RGB + red-edge | 1 | 72.25 | 90.33 | 48.99 | 56.55 | 82.36 | 30.66 | 84.54 |
| U-Net | RGB + NIR + red-edge | 2 | 89.75 | 84.03 | 39.34 | 81.41 | 72.46 | 24.49 | 84.22 |
| U-Net | RGB + NIR + red-edge | 1 | 83.19 | 92.78 | 61.61 | 71.22 | 86.54 | 54.78 | 87.69 |
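
The gap between the deep learning methods and random forest stated in the conclusions can be checked with simple arithmetic on the overall accuracy values for test area 2, the only area with random forest results in the table above. The sketch below copies those values from the table; the variable and function names are illustrative.

```python
# Overall accuracy (%) on test area 2, copied from the table above.
oa_area2 = {
    "RGB": {"Random Forest": 41.80, "SegNet": 83.98, "U-Net": 80.62},
    "RGB + NIR": {"Random Forest": 42.87, "SegNet": 88.23, "U-Net": 86.47},
    "RGB + red-edge": {"Random Forest": 40.36, "SegNet": 69.47, "U-Net": 75.56},
    "RGB + NIR + red-edge": {"Random Forest": 43.29, "SegNet": 84.49, "U-Net": 84.22},
}

def oa_gain_over_random_forest(table):
    """Difference in OA (percentage points) between each model and random forest."""
    gains = {}
    for info, scores in table.items():
        baseline = scores["Random Forest"]
        gains[info] = {
            model: round(value - baseline, 2)
            for model, value in scores.items()
            if model != "Random Forest"
        }
    return gains

gains = oa_gain_over_random_forest(oa_area2)
for info, per_model in gains.items():
    print(info, per_model)
```

For RGB + NIR the differences are 45.36 (SegNet) and 43.60 (U-Net) percentage points, while the smallest difference, 29.11, occurs for SegNet with RGB + red-edge.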

| Information | Model | F1-Score (%): Background | F1-Score (%): Sunflower | F1-Score (%): Lodging | IoU (%): Background | IoU (%): Sunflower | IoU (%): Lodging | OA (%) |
|---|---|---|---|---|---|---|---|---|
| RGB + NIR | SegNet | 89.41 | 85.95 | 47.55 | 80.84 | 75.36 | 31.19 | 86.03 |
| RGB + NIR | U-Net | 88.66 | 84.95 | 44.59 | 79.63 | 73.84 | 28.70 | 85.28 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, G.; Han, W.; Huang, S.; Ma, W.; Ma, Q.; Cui, X. Extraction of Sunflower Lodging Information Based on UAV Multi-Spectral Remote Sensing and Deep Learning. Remote Sens. 2021, 13, 2721. https://doi.org/10.3390/rs13142721