Deep Learning for Detection of Visible Land Boundaries from UAV Imagery

Abstract

1. Introduction

1.1. Deep Learning for Cadastral Mapping

1.2. Objective of the Study

2. Materials and Methods

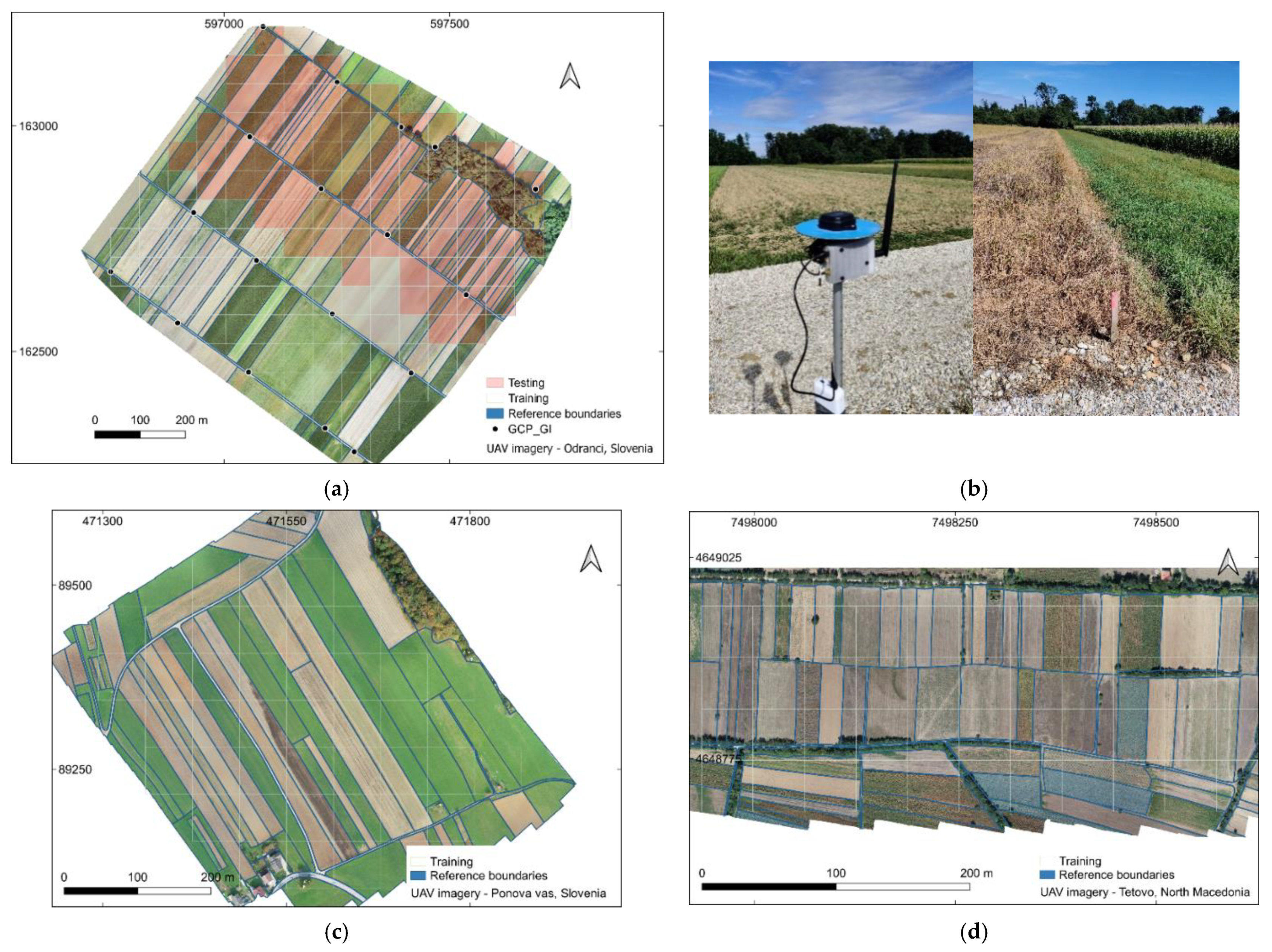

2.1. UAV Data

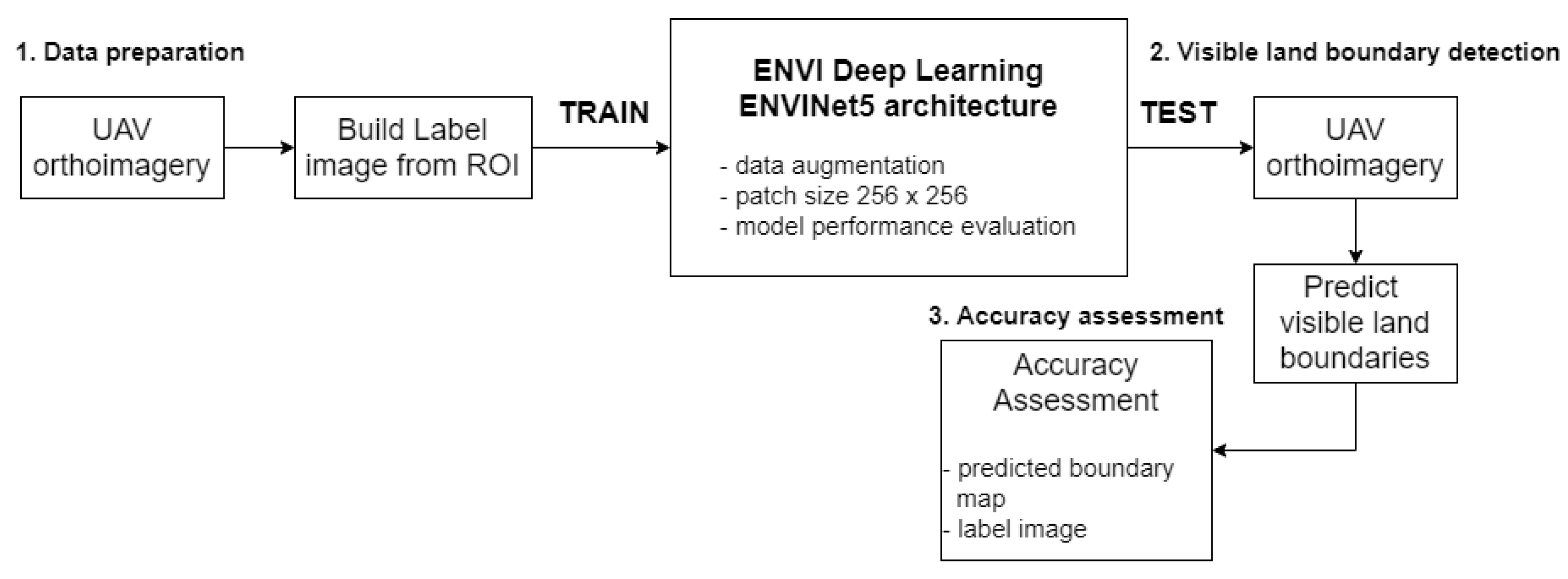

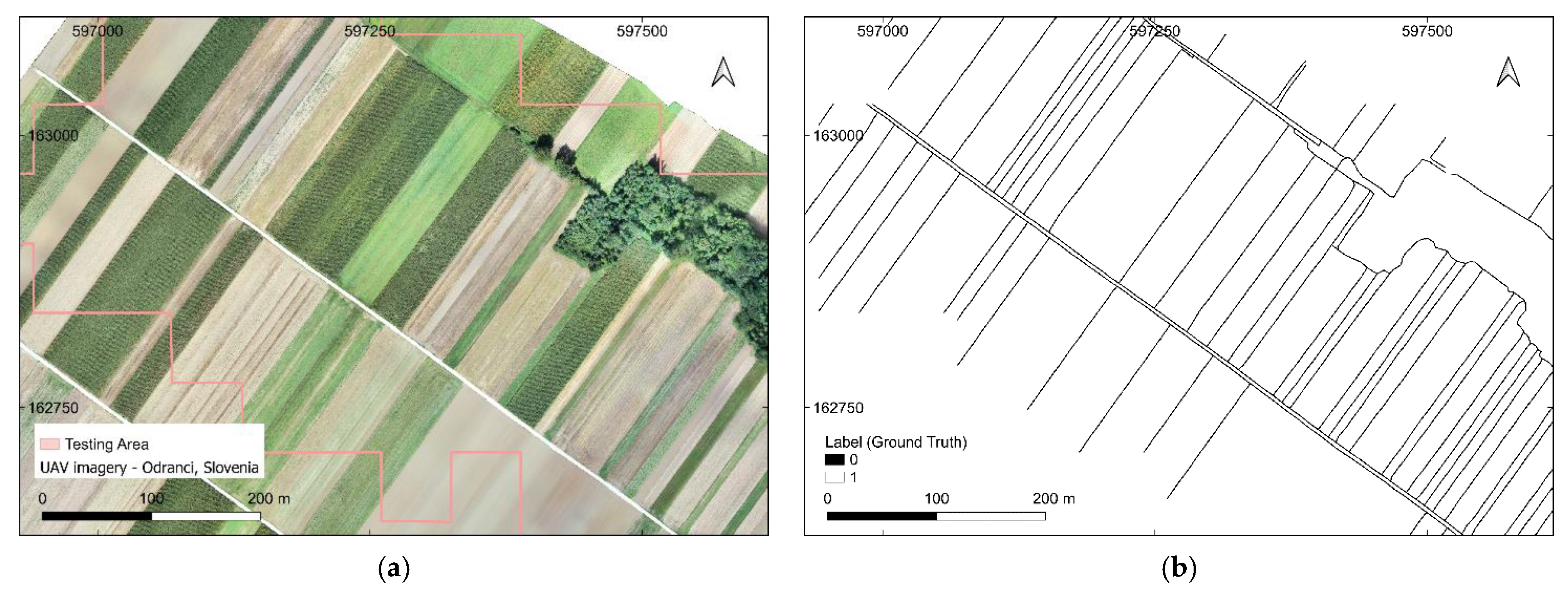

2.2. Detection of Visible Land Boundaries

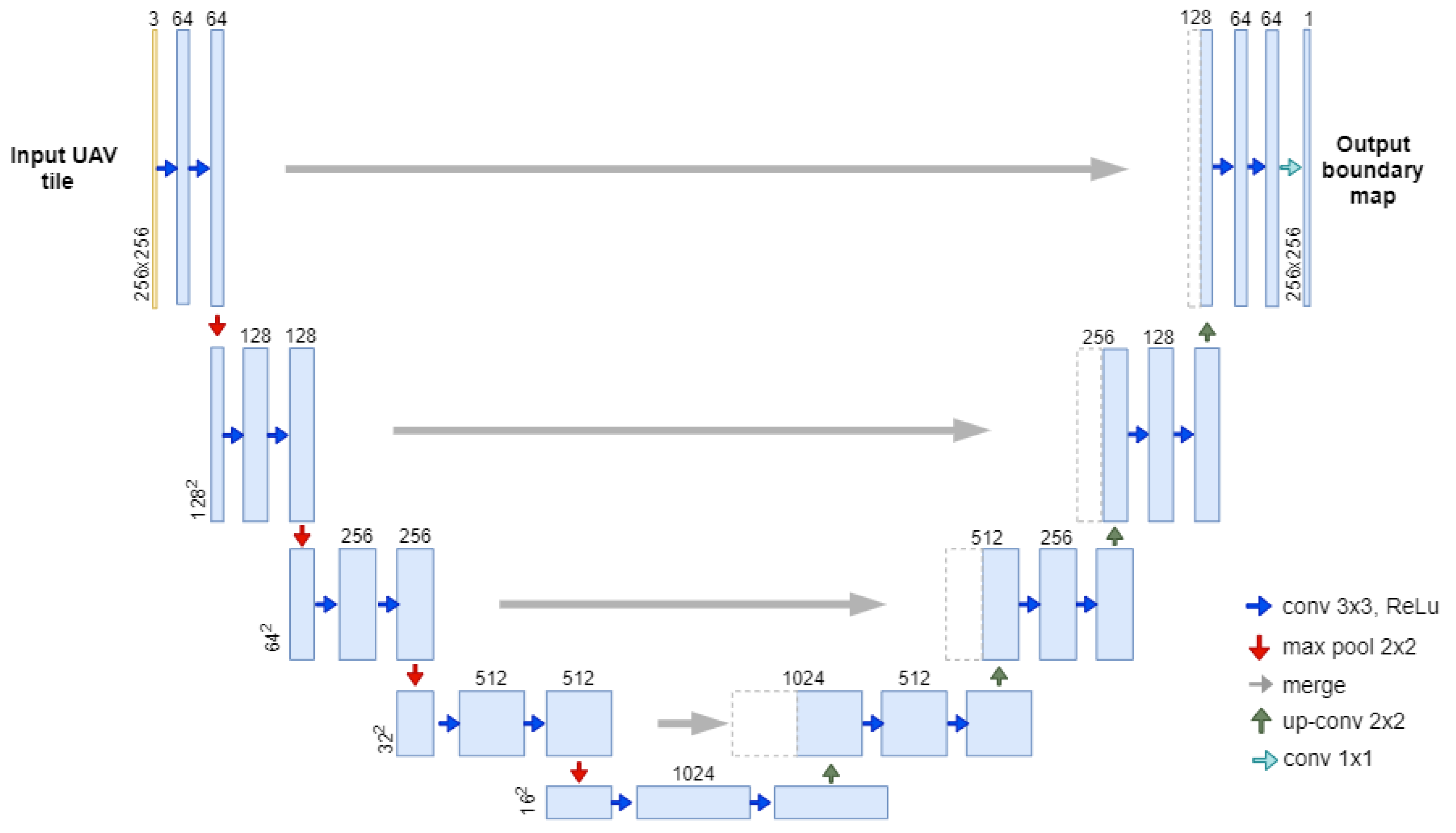

2.2.1. U-Net

2.2.2. ENVI Deep Learning

2.3. Accuracy Assessment

3. Results

3.1. CNN Architecture

3.2. Detection of Visible Land Boundaries by U-Net

3.3. Comparison with ENVI Deep Learning—ENVINet5

4. Discussion

4.1. CNN Architecture and Implementation

4.2. Detection of Visible Land Boundaries

4.3. Boundary Mapping Approach

4.4. Application of Detected Visible Boundaries

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Location | UAV Model | Camera/Focal Length (mm) | Overlap Forward/Sideward (%) | Flight Altitude | GSD (cm) | Coverage Area (ha) | Purpose |
|---|---|---|---|---|---|---|---|
| Odranci, Slovenia | DJI Phantom 4 Pro | 1” CMOS/24 mm | 80/70 | 90 m | 2.35 | 63.9 | Training and Testing |
| Ponova vas, Slovenia | DJI Phantom 4 Pro | 1” CMOS/24 mm | 80/70 | 80 m | 2.01 | 25.0 | Training |
| Tetovo, North Macedonia | DJI Phantom 4 Pro | 1” CMOS/24 mm | 80/70 | 110 m | 2.85 | 24.3 | Training |

| | | Ground Truth: Boundary | Ground Truth: No Boundary |
|---|---|---|---|
| Prediction | Boundary | TP | FP |
| Prediction | No boundary | FN | TN |
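The cell labels in the confusion matrix above map onto the four accuracy measures reported in the results tables. The surviving text does not spell out the formulas, so the block below assumes the usual definitions, with TP, FP, FN and TN counted in pixels:

$$
\begin{aligned}
\text{Overall accuracy} &= \frac{TP + TN}{TP + FP + FN + TN}, &
\text{Recall} &= \frac{TP}{TP + FN}, \\
\text{Precision} &= \frac{TP}{TP + FP}, &
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
\end{aligned}
$$

Because boundary pixels are a small fraction of each image, overall accuracy is dominated by TN, which is why recall, precision and the F1 score are the more informative measures here.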

| Settings | Component | Parameters |
|---|---|---|
| Trainable layers | pooling layer | maxpooling 2D |
| Trainable layers | connected layer | layer depth = 1024; activation = ReLU |
| Trainable layers | dropout layer | dropout rate = 0.8 |
| Trainable layers | logistic layer | activation layer = sigmoid |
| Learning optimizer | SGD optimizer | learning rate = 0.001; momentum = 0.9 |
| Training | UAV images 256 × 256 × 3, data augmentation, validation split 0.3 | number of epochs = 100; batch size = 32; steps per epoch = training samples/number of epochs |
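To make the optimizer row concrete, here is a minimal pure-Python sketch of one SGD-with-momentum update using the tabulated learning rate (0.001) and momentum (0.9). It assumes the classical (non-Nesterov) form of the rule; the function name `sgd_momentum_step` and the toy quadratic loss are hypothetical and are not taken from the study's code.

```python
def sgd_momentum_step(weights, grads, velocity, learning_rate=0.001, momentum=0.9):
    """Apply one classical SGD-with-momentum update to a list of weights.

    velocity <- momentum * velocity - learning_rate * gradient
    weight   <- weight + velocity
    """
    new_velocity = [momentum * v - learning_rate * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Toy example (assumption): minimise f(w) = w^2, whose gradient is 2w.
weights, velocity = [1.0], [0.0]
for step in range(3):
    grads = [2.0 * w for w in weights]
    weights, velocity = sgd_momentum_step(weights, grads, velocity)
    print(step, weights[0])
```

With momentum 0.9, each step reuses 90% of the previous update direction, so the small learning rate still accumulates into progressively larger steps while consecutive gradients point the same way.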

| Predicted Boundary Map | Overall Accuracy (%) | Recall | Precision | F1 Score |
|---|---|---|---|---|
| Boundary ≤ 0.9 | 94.5 | 0.654 | 0.413 | 0.506 |
| Boundary ≤ 0.7 | 96.2 | 0.480 | 0.565 | 0.519 |
| Boundary ≤ 0.5 | 96.5 | 0.348 | 0.675 | 0.459 |
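The row labels above suggest that the continuous prediction map was reclassified into a binary boundary map at three cut-offs. The sketch below assumes that a pixel is labelled "boundary" when its predicted value is less than or equal to the threshold, which is consistent with recall falling and precision rising as the threshold drops from 0.9 to 0.5. The function name `reclassify` and the 3 × 3 example values are hypothetical.

```python
def reclassify(prediction, threshold):
    """Return a binary map: 1 = boundary (value <= threshold), 0 = no boundary."""
    return [[1 if value <= threshold else 0 for value in row] for row in prediction]


# Hypothetical 3 x 3 prediction map (values in [0, 1]); not data from the study.
prediction = [
    [0.95, 0.85, 0.97],
    [0.60, 0.40, 0.75],
    [0.99, 0.92, 0.98],
]

for threshold in (0.9, 0.7, 0.5):
    mask = reclassify(prediction, threshold)
    boundary_pixels = sum(sum(row) for row in mask)
    print(threshold, boundary_pixels)  # 0.9 -> 4, 0.7 -> 2, 0.5 -> 1
```

A lower threshold keeps only the pixels the model is most confident about, so fewer pixels are labelled as boundary. That reduces false positives (higher precision) at the cost of more missed boundary pixels (lower recall).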

| Model | Prediction | Ground Truth: Boundary | Ground Truth: No Boundary |
|---|---|---|---|
| U-Net | Boundary | 137,056 | 195,156 |
| U-Net | No boundary | 72,524 | 8,966,912 |
| ENVINet5 | Boundary | 175,559 | 325,076 |
| ENVINet5 | No boundary | 34,021 | 8,836,992 |

| Predicted Boundary Map | Overall Accuracy (%) | Recall | Precision | F1 Score |
|---|---|---|---|---|
| U-Net | 94.5 | 0.654 | 0.413 | 0.506 |
| ENVINet5 | 96.2 | 0.838 | 0.351 | 0.494 |
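The recall, precision and F1 values in this table can be recomputed from the pixel counts in the confusion matrices for U-Net and ENVINet5. The sketch below assumes the standard definitions of these measures; the function name `boundary_metrics` is hypothetical, and the counts are copied from the confusion-matrix table.

```python
def boundary_metrics(tp, fp, fn, tn):
    """Compute standard accuracy measures from confusion-matrix pixel counts."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    overall_accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "overall_accuracy": overall_accuracy,
        "recall": recall,
        "precision": precision,
        "f1": f1,
    }


# Pixel counts as listed in the confusion-matrix table.
unet = boundary_metrics(tp=137_056, fp=195_156, fn=72_524, tn=8_966_912)
envinet5 = boundary_metrics(tp=175_559, fp=325_076, fn=34_021, tn=8_836_992)

for name, metrics in (("U-Net", unet), ("ENVINet5", envinet5)):
    print(name, {key: round(value, 3) for key, value in metrics.items()})
```

Under these definitions the recomputed recall, precision and F1 score agree with the tabulated values for both models, as does the overall accuracy for ENVINet5 (about 96.2%). The overall accuracy recomputed from the U-Net counts comes out at about 97.1%, which does not match the tabulated 94.5%; the reason for that difference cannot be recovered from the tables alone.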

| U-Net: pros | U-Net: cons | ENVI Deep Learning: pros | ENVI Deep Learning: cons |
|---|---|---|---|

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fetai, B.; Račič, M.; Lisec, A. Deep Learning for Detection of Visible Land Boundaries from UAV Imagery. Remote Sens. 2021, 13, 2077. https://doi.org/10.3390/rs13112077