Abstract

Owing to differences in factors such as lighting conditions, shooting environments, and acquisition time, adjacent images exhibit a compound brightness difference, which comprises a local brightness difference and a radiometric difference. This paper proposes a method, named local to global radiometric balance, to eliminate the compound brightness difference of adjacent images after mosaicking. It consists of a brightness compensation model and a brightness approach model. Firstly, the weighted average value of each row and column of the image is calculated to express the brightness change; secondly, the brightness compensation model is built from the weighted average values; thirdly, combined with the blocking method, the brightness compensation model is applied to the image. Based on the compensated values, the brightness approach model is established so that the gray values of adjacent images approach each other at the mosaic line. The standard deviation, mean square error (MSE), and mean value are used as indices of the brightness balance effect. Three groups of experiments show that, compared with the brightness stretch method, the histogram equalization method, and the radiometric balance method, the local to global radiometric balance method not only achieves compound brightness balance but also gives better visual results.

1. Introduction

To meet different requirements, such as the production of seamless data, researchers have to mosaic multiple remotely sensed images that are collected at different satellite orbital altitudes, by different sensors, and/or on different dates [1]. Due to varying conditions, such as solar illumination, shooting environments, and sensor characteristics, these images exhibit radiometric differences from scene to scene as well as local brightness differences [2]. As a result, it is difficult to achieve a satisfactory local to global brightness balance, seamless mosaic lines, and a complete mosaicked image product [3].

For this reason, many researchers have worked to minimize radiometric differences between adjacent images, that is, to achieve global brightness balance. Radiometric balance methods can be categorized into two types: (1) the blur path method; and (2) the histogram equalization method [4,5].

To achieve image brightness balance using the blur path method, researchers first select the overlap area in the mosaicked images and then stretch the brightness of that area [6]. This method includes radiometric balancing and brightness stretching. Zhou et al. [1] proposed radiometric balancing to eliminate the radiometric difference of images. This method adjusts the mean value of adjacent images and uses the principle of weight distribution to give the darker area a higher brightness and the lighter area a lower brightness, so as to achieve brightness balance. However, because of the weight distribution, this method cannot avoid gray scale blocking [7]. Brightness stretching achieves brightness balance of the image with a fixed formula and includes linear and non-linear brightness stretching. Linear brightness stretching adjusts the gray scale of the image by changing the slope of a linear formula. It is relatively simple, but cannot handle the complicated brightness conditions of optical images [8]. Therefore, non-linear brightness stretching, which uses trigonometric functions, gamma functions, etc., was proposed to handle images with multiple brightness types. However, non-linear brightness stretching still changes the brightness of the image according to a fixed formula [9].

To achieve brightness balance using the histogram equalization method, the histogram of the target image is adjusted according to the mosaic information between adjacent images; as a result, the effect relies on the histogram of the reference image [10]. Wang et al. [11] proposed an adaptive histogram equalization method to achieve image brightness balance. Kim et al. [12] pointed out that the adaptive histogram equalization method achieves brightness balance by changing all possible gray values in the target image according to the reference image information. Wongsritong et al. [13] divided the histogram equalization method into global histogram equalization and local histogram equalization: when the reference area is small, global histogram equalization is used, and when the reference area is large, local histogram equalization is used. However, Wongsritong et al. did not indicate how to define the size of the reference area. Yu et al. [14] proposed using the overlap area between adjacent images as the reference area, with 60% as the boundary: when the overlap area is more than 60%, local histogram equalization is used, and when it is less than 60%, global histogram equalization is used. However, this method cannot achieve brightness balance when the overlap area between adjacent images is less than 25%. To improve the quality of the histogram of the reference image, this method sets restrictions according to image brightness characteristics, such as the richness of image texture information, but it relies on processing a large number of images [15]. Kansal et al. [16] proposed controlling the contrast of the target image by clipping the histogram of the reference image during histogram equalization. Although this method reduces the impact of contrast changes, it leads to the loss of some grayscale information of the reference image.
To overcome the limitation of the histogram, Zhou et al. [17] proposed using the average value and standard deviation ratio of the target image as the limiting condition. This method does not need a specified reference image, but the processing accuracy is reduced.

In summary, both the blur path method and the histogram equalization method require the brightness information of the overlapping area of adjacent images [18], and both change the gray values in the overlapping area to achieve brightness balance [19]. The histogram equalization method relies on the reference image, so its brightness balance effect is limited by the distribution of the reference image histogram; if the reference image is not specified, accuracy cannot be guaranteed [20]. The blur path method is not limited by the reference image, but the buffer zone needs to be selected according to the brightness difference of the image, and the effect is constrained by the fixed formula. When the buffer zone is small, this method produces gray scale blocking [21]. Moreover, the mosaicked image may have a compound brightness difference, whereas the above methods can only achieve radiometric balance [22].

To solve the above problems, this paper proposes a local to global radiometric balance method. Compared with the blur path method and the histogram equalization method, this method does not rely on scenes, models, or overlapping area information. At the same time, the compound brightness difference of the image is eliminated [23,24]. The main contributions of our study are summarized as follows.

- The proposed method takes into account the adaptive allocation of gray values and achieves local brightness balance; moreover, it is not affected by fixed formulas.

- The proposed method is independent of the reference image. In other words, the images can be balanced in the case of incomplete information, such as the lack of overlapping area information of adjacent images.

2. Local to Global Radiometric Balancing

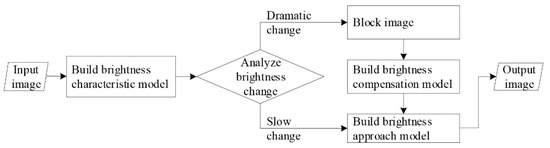

The overall flowchart of the proposed method is shown in Figure 1. The local to global radiometric balance method eliminates compound brightness differences in four steps: (a) calculate the brightness characteristic value; (b) analyze the brightness change of the image; (c) divide the image into blocks if its brightness changes dramatically, and establish the brightness compensation model for the blocks to achieve local brightness balance; (d) establish the brightness approach model so that the gray values of adjacent optical images approach each other at the mosaic line, achieving global brightness balance.

Figure 1.

Flowchart of the proposed method.

2.1. Brightness Compensation Model of Local to Global Radiometric Balancing

2.1.1. Brightness Characteristic

The brightness characteristic is an important index for measuring the change of image brightness. The brightness compensation model is built according to the value of the brightness characteristic to achieve the local brightness balance of the image.

Currently, the average gray value of an image is used to represent the brightness characteristic [25]. Setting the size of the image is . The brightness characteristic value of image can be expressed as follows [26]:

where, is the brightness characteristic value of the image, is the gray value of the image.

The brightness characteristic value of the image can be calculated by using Equation (1). However, Equation (1) cannot avoid the influence of the texture information of the image, which results in errors in the brightness characteristic value [27]. Therefore, this paper uses a weighted average value to calculate the brightness characteristic value of the image. The weighted average value of image brightness can be expressed as follows:

where, is the brightness characteristic value of the image, is the weighted average value of image brightness in the row direction is the weighted average value of image brightness in the column direction. and are the weight of the row direction and column direction of the image, respectively.

The weight of the unit pixel can be expressed as follows:

where, and are the gray values of unit pixels adjacent to in the row direction and the column direction of image respectively (, ).

Equation (3) shows that the more slowly the gray value changes between adjacent pixels, the greater the weight; the more sharply it changes, the lower the weight. Note that when the gray value of a pixel equals that of its adjacent pixel in the row or column direction, the weight is 1.
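The weighting idea can be illustrated with a short Python sketch. The weight function `1 / (1 + |difference|)` used here is an assumption, chosen only because it reproduces the behaviour described above (weight 1 for equal neighbours, smaller weights for larger gray-value jumps); it is not the paper's Equation (3). The function names `neighbour_weights` and `weighted_profiles` are hypothetical, and gray values are assumed to be floats.

```python
import numpy as np

def neighbour_weights(img, axis):
    """Hypothetical weight 1 / (1 + |g - g_next|) along `axis`.

    Equal neighbours give weight 1; large jumps give weights near 0.
    The last pixel along the axis has no next neighbour, so it reuses
    the difference to its previous neighbour. Needs >= 2 pixels on the axis.
    """
    img = np.asarray(img, dtype=float)
    diff = np.abs(np.diff(img, axis=axis))
    pad = [(0, 0)] * img.ndim
    pad[axis] = (0, 1)                      # repeat the final difference
    diff = np.pad(diff, pad, mode="edge")
    return 1.0 / (1.0 + diff)

def weighted_profiles(img):
    """Weighted average brightness of every row and every column."""
    img = np.asarray(img, dtype=float)
    w_row = neighbour_weights(img, axis=1)  # neighbours within each row
    w_col = neighbour_weights(img, axis=0)  # neighbours within each column
    row_profile = (w_row * img).sum(axis=1) / w_row.sum(axis=1)
    col_profile = (w_col * img).sum(axis=0) / w_col.sum(axis=0)
    return row_profile, col_profile

# A bright outlier (1.0) pulls the plain row mean up more than the weighted one.
demo = np.array([[0.2, 0.2, 0.2, 1.0],
                 [0.2, 0.2, 0.2, 0.2]])
rows, cols = weighted_profiles(demo)
print(rows, demo.mean(axis=1))
```

Under this assumed weight, pixels next to sharp texture edges contribute less to the row and column averages, which is the effect the weighted average is meant to achieve.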

Substituting Equation (3) into Equation (2), the brightness characteristic values are described in Equation (4):

2.1.2. The Brightness Compensation Model

Based on the brightness characteristic, the brightness compensation value can be calculated. The brightness compensation value uses the target value as a limiting condition to suppress the brightness changes in the row and column directions of the image. Yu et al. [14] proposed that the ratio of the standard deviation of the image to the coefficient of variation can be used as the target value. Therefore, the target value of the image can be expressed as follows:

where, is the standard deviation of the image, is the coefficient of variation. can be expressed as follows:

where, is the gray average value of image which can be calculated by Equation (1).
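As a minimal sketch of the target value described above, the following Python snippet computes the standard deviation, the coefficient of variation, and their ratio. It assumes the usual definition of the coefficient of variation (standard deviation divided by mean gray value); under that assumption the ratio reduces to the mean gray value. The function names are hypothetical.

```python
import numpy as np

def coefficient_of_variation(img):
    """Standard deviation divided by mean gray value (assumed definition)."""
    img = np.asarray(img, dtype=float)
    mean = img.mean()
    if mean == 0:
        raise ValueError("coefficient of variation is undefined for zero mean")
    return float(img.std() / mean)

def target_value(img):
    """Ratio of the standard deviation to the coefficient of variation."""
    img = np.asarray(img, dtype=float)
    cv = coefficient_of_variation(img)
    if cv == 0:
        # Flat image: std / cv is 0 / 0; its limiting value is the mean.
        return float(img.mean())
    return float(img.std() / cv)

demo = np.array([[0.2, 0.4],
                 [0.6, 0.8]])
print(coefficient_of_variation(demo), target_value(demo))
```

With these definitions, the target value of the example equals its mean gray value of 0.5 up to rounding.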

Combining Equations (4) and (5), the brightness compensation value of the image can be expressed as follows:

where, is the brightness compensation value of image, is the target value of image, is the brightness characteristic value of the image.

Combining Equation (4) and Equation (7), the brightness compensation value of the image in the row direction can be expressed as follows:

where, is the brightness compensation value of image in the row direction.

Similarly, the brightness compensation value of image in the column direction can be expressed as follows:

where, is the brightness compensation value of image in the column direction.
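The row and column compensation values can be sketched as follows. Two assumptions are made and should be read as such: the compensation value is taken to be the target value minus the brightness characteristic value, and plain row and column means stand in for the weighted averages. The function name `row_col_compensation` is hypothetical.

```python
import numpy as np

def row_col_compensation(img):
    """Return (row_comp, col_comp) under the assumed form target - characteristic."""
    img = np.asarray(img, dtype=float)
    target = img.mean()                     # assumed target value
    row_comp = target - img.mean(axis=1)    # one value per row
    col_comp = target - img.mean(axis=0)    # one value per column
    return row_comp, col_comp

# The top row is darker than the bottom row, so it receives a positive value.
demo = np.array([[0.2, 0.2],
                 [0.6, 0.6]])
row_comp, col_comp = row_col_compensation(demo)
print(row_comp, col_comp)
```

Rows or columns darker than the target receive a positive compensation value and brighter ones a negative value, which matches the intent of suppressing brightness changes in both directions.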

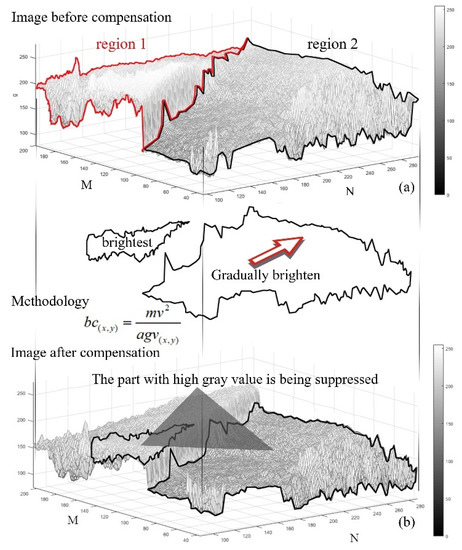

The principle of brightness compensation is shown in Figure 2.

Figure 2.

The principle of brightness compensation. (a) Image before compensation. (b) Image after compensation.

2.1.3. Blocking and Compensating on Image

According to Equation (9), we need (a) to obtain the weight from the difference between the gray values of adjacent pixels, and (b) to calculate the brightness compensation value [27]. However, Yu et al. [14] pointed out that the texture of ground features has a great impact on the coefficient of variation. Furthermore, Chen et al. [28] showed that the larger the gray value difference between adjacent pixels of the image, the larger the coefficient of variation and the lower the accuracy. According to Equation (8), the coefficient of variation affects the calculation of the brightness compensation value. Therefore, this paper uses the block and extract method to find the block(s) with a lower coefficient of variation for calculating the brightness compensation value. The block and extract method should follow the principles below [14].

Principle 1.

There is no overlap between blocks after the image is divided.

Principle 2.

When more than one block is extracted, the extracted blocks must be continuous in the row and column directions.

Principle 3.

If the number of extracted blocks is equal to the number of divided blocks, it is meaningless to use the blocking method.

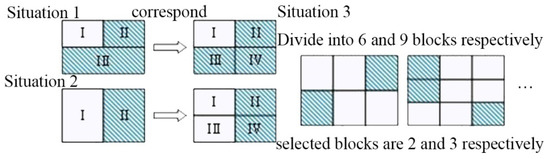

As mentioned above, the block and extract method divides the image into four blocks. As shown in Figure 3, dividing the image into fewer than four blocks is equivalent to a four-block division, whereas dividing it into more than four blocks violates Principle 2.

Figure 3.

Special cases in image block.

As shown in Figure 3: in situation 1, dividing the image into three blocks is equivalent to dividing it into four blocks when two and three blocks are extracted, respectively. In situation 2, dividing the image into two blocks is equivalent to dividing it into four blocks when one and two blocks are extracted, respectively. In situation 3, discontinuity appears when the image is divided into more than four blocks [29].

Let the blocks be named , , , . To select the blocks with a lower coefficient of variation for calculating the brightness compensation value, block(s) are extracted by setting a threshold, which can be expressed as follows:

where, is coefficient of variation of ().

According to Equation (10), corollaries are shown as follow:

Corollary 1.

Compared with other blocks, blocks with a high coefficient of variation will be excluded ().

Corollary 2.

The block with low coefficient of variation can still be selected when the coefficients of variation of each block are similar ().
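The block and extract step can be sketched in Python as follows. The threshold used here, the mean of the four block coefficients of variation, is only an assumed stand-in for Equation (10); it is chosen because it behaves as the two corollaries describe (blocks with a comparatively high coefficient of variation are excluded, and at least one block is always selected). The function names are hypothetical, and Principles 2 and 3 are not enforced in this sketch.

```python
import numpy as np

def split_into_four(img):
    """Divide the image into four non-overlapping blocks (Principle 1)."""
    img = np.asarray(img, dtype=float)
    r, c = img.shape[0] // 2, img.shape[1] // 2
    return [img[:r, :c], img[:r, c:], img[r:, :c], img[r:, c:]]

def select_blocks(img):
    """Return (block CVs, threshold, indices of the selected blocks)."""
    blocks = split_into_four(img)
    cvs = np.array([b.std() / b.mean() for b in blocks])
    threshold = cvs.mean()  # ASSUMPTION: stand-in for Equation (10)
    selected = [k for k, cv in enumerate(cvs)
                if cv <= threshold or np.isclose(cv, threshold)]
    return cvs, threshold, selected

# Blocks 0 and 3 are smooth; blocks 1 and 2 are strongly textured.
demo = np.array([[0.50, 0.52, 0.10, 0.90],
                 [0.51, 0.50, 0.90, 0.10],
                 [0.10, 0.90, 0.50, 0.51],
                 [0.90, 0.10, 0.52, 0.50]])
cvs, threshold, selected = select_blocks(demo)
print(cvs, threshold, selected)
```

In the example only the two smooth blocks fall below the assumed threshold, so only they would be used to calculate the brightness compensation value.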

Accordingly, the brightness compensation value of the image is calculated by interpolation.

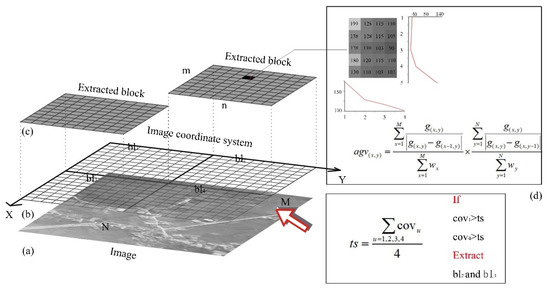

If the blocks are extracted are and , the extraction process as shown in Figure 4.

Figure 4.

Block and extract: (a) original image, (b) creating the coordinate system, (c) extracting blocks, (d) example of calculating the brightness compensation.

As shown in Figure 4, (a) the image is divided into four blocks; (b) the blocks are extracted according to the threshold; (c) the weighted average values of image brightness are calculated; finally, the brightness compensation values are calculated according to Equation (7).

Above of all, setting is the set of brightness compensation value of the column in the image. Setting is the set of brightness compensation value of the row in the image. Then the brightness compensation model can be expressed as follows:

where, is the brightness compensation model, and,

According to Equation (11), the image after local brightness balance process can be expressed as follows:

where, is the image after local brightness balance process, “⊙” represents the Manhattan accumulation.
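A possible reading of the compensation model is sketched below in Python. It assumes that the model is a matrix built from the row and column compensation values and that the "⊙" operator amounts to element-wise addition of that matrix to the image; both points are assumptions, as are the use of plain means in place of the weighted averages and the normalization of gray values to [0, 1]. The function name `compensate` is hypothetical.

```python
import numpy as np

def compensate(img):
    """Add a row-plus-column compensation matrix to the image."""
    img = np.asarray(img, dtype=float)
    target = img.mean()
    row_comp = target - img.mean(axis=1)               # one value per row
    col_comp = target - img.mean(axis=0)               # one value per column
    model = row_comp[:, None] + col_comp[None, :]      # same shape as img
    return np.clip(img + model, 0.0, 1.0)

# Synthetic image with a smooth brightness gradient in both directions.
i, j = np.meshgrid(np.arange(4), np.arange(5), indexing="ij")
demo = 0.3 + 0.05 * i + 0.02 * j
balanced = compensate(demo)
print(demo.std(), balanced.std())
```

For an image whose brightness is a sum of a row trend and a column trend, this construction flattens the image to its mean gray value, so the standard deviation drops to approximately zero.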

2.2. Brightness Approach Model of Local to Global Radiometric Balancing

The brightness compensation model is used to eliminate the local brightness differences in images with compound brightness differences. However, the radiometric difference caused by mosaicking adjacent images (the adjacent images are named images 1, 2, ..., n) still exists. The position of the mosaic line in the image can be expressed as follows:

According to Equations (13) and (14), the image which has compound brightness difference after brightness compensating can be expressed as follows:

where, is the image after local brightness balance, is the brightness compensation value of image to the left of the mosaic line, is the brightness compensation value of the image to the right of the mosaic line, and are the size of the image after mosaicking.

The brightness approach value is calculated to eliminate the radiometric difference of the image on the basis of the local brightness balance. It achieves radiometric balance by making the gray values the same at the mosaic line.

Firstly, the average of the mean gray values of the images is regarded as the approach value to achieve brightness approach at the mosaic line. According to Equation (1), the mean gray values after local brightness balance are described in Equation (16):

where, and are the mean gray value of images at mosaic line to the left and right respectively. and are the brightness compensation values, respectively.

According to Equation (16), the brightness approach value can be described by Equation (17):

where, is the brightness approach value. According to the brightness approach value, the approach coefficients on both sides of the mosaic line can be expressed as follows:

where, and are the approach coefficients on both sides of the mosaic line, respectively, and are the gray value on both sides of the mosaic line, respectively.

Then, the model of brightness approach value on rows is described in Equation (19):

where, is the brightness approach value on rows, which can darken the image, is the brightness approach value on rows, which can brighten the image. Similarly, the brightness approach value on the column is described as and .

Secondly, combined with Equations (18) and (19), the brightness approach model can be expressed as follows:

where,

Setting the elements of and be 1, when the image only has the radiometric difference on column direction. Otherwise, setting the elements of and be 1.

Thirdly, the image elements that need to be darkened are set to 1 and all other elements are set to 0, which can be expressed as follows:

Combining Equations (20) and (21), the result that darkens the image is expressed as follows:

where, is the result that darkens the image.

Similarly, the result that brightens the image can be expressed as follows:

where is the result that brighten the image.

Finally, the result by the brightness approach model can be expressed as follows:

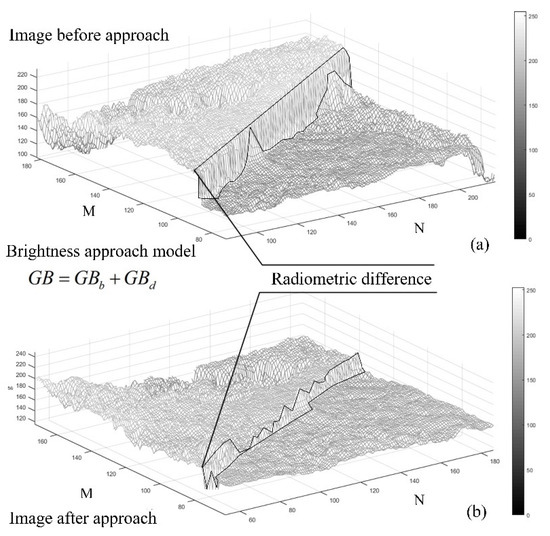

where, is the result by brightness approach model. Based on Figure 2b, the principle of radiometric balance can be expressed as in Figure 5.

Figure 5.

The principle of brightness approach. (a) Image before approach. (b) Image after approach.

Combining the brightness compensation model and the brightness approach model, the local to global radiometric balancing for image can be expressed as follows:

where, is the result after local to global radiometric balancing.
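The brightness approach step can be illustrated with a deliberately simplified Python sketch. It assumes a vertical mosaic line at column index `seam`, takes the approach value as the average of the mean gray values on both sides of the line, and then applies a uniform shift to each side through 0/1 masks. The uniform shift is a stand-in for the row and column approach coefficients of the model above, not a reproduction of them; `brightness_approach`, `seam`, and `band` are hypothetical names, and gray values are assumed to lie in [0, 1].

```python
import numpy as np

def brightness_approach(img, seam, band=1):
    """Shift both sides of a vertical mosaic line toward a common value.

    seam: column index of the first pixel to the right of the mosaic line.
    band: number of columns on each side used to estimate the mean gray value.
    """
    img = np.asarray(img, dtype=float)
    if not 0 < seam < img.shape[1]:
        raise ValueError("seam must split the image into two non-empty parts")
    left_mean = img[:, max(seam - band, 0):seam].mean()
    right_mean = img[:, seam:seam + band].mean()
    approach = 0.5 * (left_mean + right_mean)   # average of the two means

    # Masks: 1 on the named side of the mosaic line, 0 elsewhere.
    left_mask = np.zeros_like(img)
    left_mask[:, :seam] = 1.0
    right_mask = 1.0 - left_mask

    shift = (left_mask * (approach - left_mean)
             + right_mask * (approach - right_mean))
    return np.clip(img + shift, 0.0, 1.0), approach

# A bright image (0.7) mosaicked next to a dark image (0.3).
demo = np.hstack([np.full((3, 4), 0.7), np.full((3, 4), 0.3)])
balanced, approach = brightness_approach(demo, seam=4)
print(approach, balanced[0])
```

In the example the brighter side is darkened and the darker side is brightened until both meet at the approach value, which is the behaviour the darkening and brightening results are meant to combine.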

3. Experiments and Results

Using local to global radiometric balancing to achieve a brightness balance of image with compound brightness difference takes place through the following 3 steps:

Step 1. Block the image and select the blocks according to the threshold. The threshold can be calculated by Equation (10).

Step 2. Establish the brightness compensation model. The model achieves local brightness balance of the image and can be calculated by Equation (12).

Step 3. Establish the brightness approach model. The model achieves global radiometric balance of the image and can be calculated by Equation (20).

3.1. Data Set

As shown in Figure 6, in order to prove that the local to global radiometric balancing is suitable for brightness equalization of multiple types of image sets, we have selected three sets of images taken by the argon satellite and Landsat satellite for experiments.

Figure 6.

The image sets used to test local to global radiometric balancing. (a) and (c) Images taken by argon KH-5. (b) Image taken by Landsat.

As shown in Figure 6, firstly, these images have the following characteristics:

- The images were taken by satellites with different flying altitudes.

- The images were collected over a long time span (1963–2011).

- The images had different resolutions.

Secondly, these images had different requirements:

- Figure 6a has local brightness differences. The brightness compensation method needs to be used to process the image to achieve brightness balance.

- Figure 6b has a radiometric difference. The brightness approach method needs to be used to process the image to achieve brightness balance.

- Figure 6c has a compound brightness difference. The local to global radiometric balancing needs to be used to achieve brightness balance.

3.2. The Experiments and Results

3.2.1. Local Brightness Balance

As shown in Figure 6a, the image was taken in 1967 in Greenland by ARGON KH-5. The satellite has a ground resolution of 140 m and a scan width of 556 km. The brightness compensation model is used to achieve local brightness balance of image.

The local brightness balance is achieved through the following steps:

(a) As shown in Figure 7a, the image is divided into blocks and the coefficient of variation of each block is calculated according to Equation (6). The coefficients of variation of the blocks are shown in Table 1.

Figure 7.

Blocking and selecting. (a) Blocking image. (b) Selecting blocks

Table 1.

The coefficients of variation of each block.

As shown in Figure 7b, according to Equation (10) and Table 1, the threshold of the image is 0.3880. Blocks Ⅰ and Ⅳ (as shown in Figure 7b) are therefore selected.

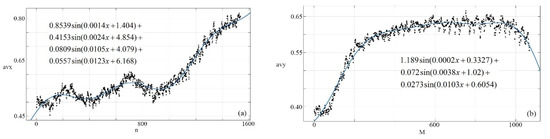

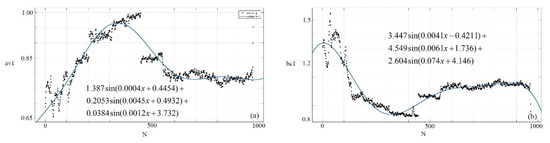

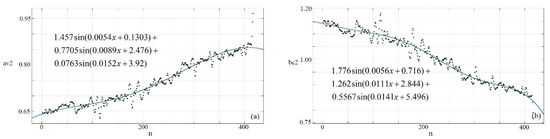

(b) The weight is calculated by Equation (3), and the brightness characteristic value of blocks Ⅰ and Ⅳ is calculated by Equation (4). As shown in Figure 8, the model of the brightness characteristic is established by fitting these values.

Figure 8.

The model of brightness characteristic. (a) The model of row direction. (b) The model of column direction.

As shown in Figure 8, is the brightness characteristic value of row direction of block Ⅰ and Ⅳ, is the brightness characteristic value of column direction of block Ⅰ and Ⅳ, is the number of blocks, is the number of image. As shown in Figure 9, according to the mean value of brightness which is 0.5946, the brightness compensation model is established by Equation (7).

Figure 9.

The model of brightness compensation. (a) The model of row direction. (b) The model of column direction.

As shown in Figure 9, is the brightness characteristic value of row direction of block Ⅰ and Ⅳ, is the brightness characteristic value of column direction of block Ⅰ and Ⅳ.

Finally, the image is processed combining the interpolation method and Equation (12). The result of local brightness balancing is shown in Figure 10.

Figure 10.

The result of local brightness balancing. (a) The image before process. (b) The image after process.

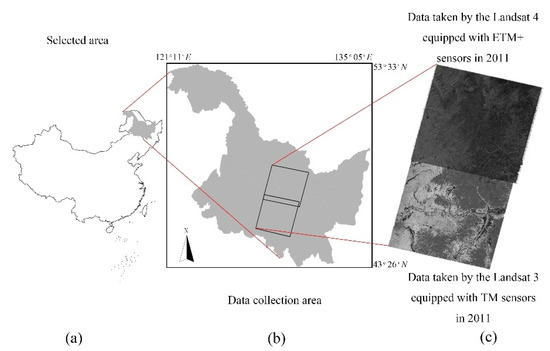

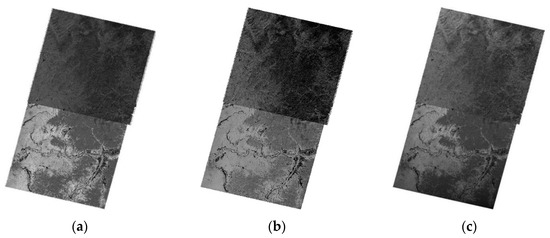

3.2.2. Global Radiometric Balance

The images were taken in 2003 and 2011 over Heilongjiang Province, China, by Landsat 3 and 4. As shown in Figure 11, the two satellites carry different sensors with different numbers of bands, and the images were acquired at different times.

Figure 11.

Image sources. (a) Selected area. (b) Image collection area. (c) Image obtained.

As shown in Figure 11, the image has obvious radiometric differences. The brightness approach model of local to global radiometric balancing is used to achieve brightness balance through the following steps:

Step 1. Calculate the brightness approach value that darkens the image, according to Equation (19).

Step 2. Calculate the brightness approach value that brightens the image, according to Equation (19).

Step 3. Build the brightness approach model according to Equation (20).

Step 4. Process the image according to Equations (21) to (23).

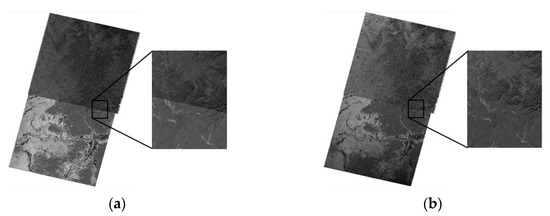

The original image and result by using brightness approach model are shown in Figure 12.

Figure 12.

Comparison of the original image and the result of the brightness approach model. (a) Original image. (b) Result of the brightness approach model.

3.2.3. Compound Brightness Balance

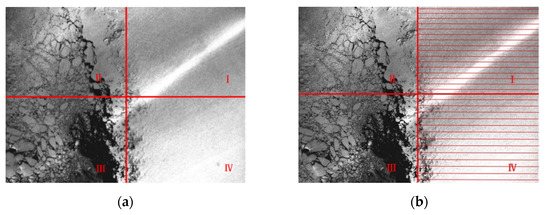

As shown in Figure 13a, the image has a compound brightness difference. The brightness compensation model and the brightness approach model of local to global radiometric balancing are combined to achieve brightness balance. As shown in Figure 13b and Table 2, each image is divided into four blocks, and the coefficient of variation of each block is calculated. For statistical convenience, the brighter region is named image 1 and the darker region image 2. Note that blocks with too small an area are not counted.

Figure 13.

Selecting the blocks of images. (a) Original image. (b) Selected blocks.

Table 2.

The coefficients of variation of each block.

According to Equation (10), the thresholds of images 1 and 2 are 0.1890 and 0.1335, respectively. As shown in Figure 13b, block Ⅰ of image 1 and blocks Ⅰ and Ⅲ of image 2 are selected.

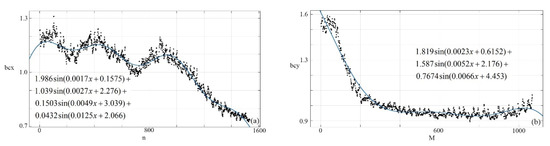

As shown in Figure 14, the model of brightness characteristic and compensation of image 1 are established through fitting the value.

Figure 14.

The model of brightness characteristic and compensation of image 1. (a) Model of brightness characteristic. (b) Model of brightness compensation.

where, is brightness characteristic value of image 1, is the brightness compensation value of image 1.

As shown in Figure 15, following the same procedure as for image 1, the models of the brightness characteristic and compensation of image 2 are established by fitting the values.

Figure 15.

The model of brightness characteristic and compensation of image 2. (a) Model of brightness characteristic. (b) Model of brightness compensation.

where is brightness characteristic value of image 2, is the brightness compensation value of image 2.

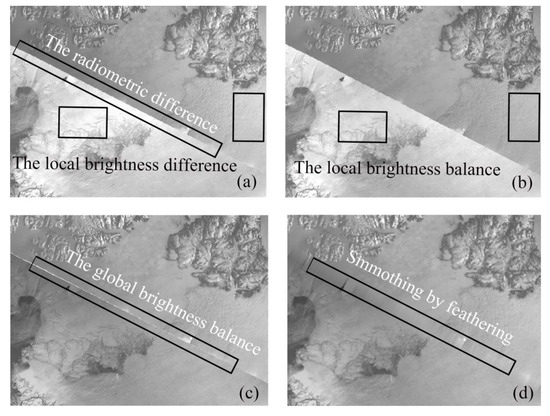

The whole process is shown in Figure 16. According to Equation (12), the result after brightness compensation model of local to global radiometric balancing is shown in Figure 16b. Compared with the original image, the result achieves local brightness balance.

Figure 16.

The process of local to global brightness balancing. (a) Original image. (b) The result after using brightness compensation model of local to global radiometric balancing. (c) The result after using brightness approach model of local to global radiometric balancing. (d) The result after feathering.

However, the result after the brightness compensation model of local to global radiometric balancing still has radiometric difference. Therefore, the brightness approach model of local to global radiometric balancing is used to achieve global brightness balance.

4. Discussion

By comparing the results of the different methods with the original image, we discuss the effects in terms of vision and index.

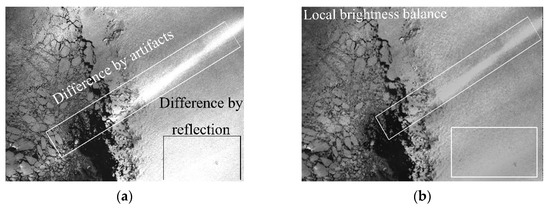

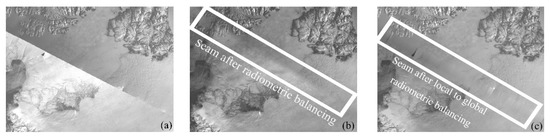

4.1. In Terms of Vision

In the experiment of local brightness balance, as shown in Figure 17a, the original image has local brightness differences caused by artifacts and reflections. Brightness stretching is the most commonly used method for achieving local brightness balance. As shown in Figure 17b, brightness stretching reduces the difference between the artifacts and the surrounding gray values, but it cannot eliminate the brightness difference caused by reflections. As shown in Figure 17c, the brightness compensation method of local to global radiometric balancing is more effective in eliminating the difference caused by reflections, and image details are preserved.

Figure 17.

Comparing the result by different methods. (a) Original image. (b) Result by brightness stretch [1]. (c) Result by brightness compensation method.

In the experiment of global brightness balance, as shown in Figure 18a, the original image has an obvious radiometric difference because its parts were taken at different times by different sensors. The result of the histogram equalization method with an unknown overlapping area is shown in Figure 18b. The effect is poor: on the one hand, the contrast of the original image is enhanced; on the other hand, the radiometric difference is not eliminated. The result of the brightness approach model of local to global radiometric balancing is shown in Figure 18c. It should be emphasized that both experiments were conducted without overlapping area information and without reference images.

Figure 18.

Comparison of results by different methods. (a) Original image. (b) Result by using histogram equalization method. (c) Result by using local to global radiometric balancing.

In the experiment of compound brightness balance, the original image is shown in Figure 19a. The image has an obvious compound brightness difference. The result of radiometric balancing is shown in Figure 19b. Global brightness is roughly balanced, but gray scale blocking appears as a consequence of the local brightness difference. As shown in Figure 19c, the result of local to global radiometric balancing has a better visual effect than the former.

Figure 19.

Comparison of results by different methods. (a) Original image. (b) Result by using radiometric balancing [1]. (c) Result by using local to global radiometric balancing.

4.2. In Terms of Index

The indices include the standard deviation, MSE, and mean value, as shown in Table 3. The standard deviation expresses the contrast. The MSE expresses the degree of deviation from the original image. The mean value expresses how the overall gray level compares with that of the original image. Comparing the indices of the results of the different methods, we find the following:

Table 3.

The index of results by different methods.

In the experiment of local brightness balance, the standard deviation of the result of the brightness compensation model of local to global radiometric balancing is 0.0675 lower than that of the original image (0.2465) and is similar to that of the result of brightness stretching (the difference is 0.0019). The result of the brightness compensation model has a lower MSE than the result of brightness stretching (the difference is 0.0131), and its mean value is similar to that of the original image.

In the experiment of global brightness balance, the standard deviation of the original image is 0.1750. With an unknown overlapping area, the standard deviation of the result of the histogram equalization method is 0.1825, and that of the result of the brightness approach model of local to global radiometric balancing is 0.1207. These indices show that the brightness approach model of local to global radiometric balancing has a better effect when the mean and standard deviation values are similar.

In the experiment of compound brightness balance, the standard deviation of the original image is 0.1986. The standard deviation of the result of radiometric balancing is 0.1693, and that of the result of local to global radiometric balancing is 0.1042. Compared with the original image, local to global radiometric balancing reduces the standard deviation by 0.0944, whereas radiometric balancing reduces it by only 0.0293. In addition, the result of local to global radiometric balancing has a lower MSE than the result of radiometric balancing, and its mean value is closer to that of the original image. Therefore, local to global radiometric balancing is better than radiometric balancing.
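The three indices used in this section can be computed with a few lines of Python. This is a minimal sketch that assumes both images are arrays of the same shape with gray values on a common scale (the reported values suggest a normalized [0, 1] range, which is an assumption here); the function name `balance_indices` is hypothetical.

```python
import numpy as np

def balance_indices(original, result):
    """Standard deviation and mean of the result, and MSE against the original."""
    original = np.asarray(original, dtype=float)
    result = np.asarray(result, dtype=float)
    if original.shape != result.shape:
        raise ValueError("images must have the same shape")
    return {
        "std": float(result.std()),                          # contrast
        "mse": float(np.mean((result - original) ** 2)),     # deviation from original
        "mean": float(result.mean()),                        # overall gray level
    }

original = np.array([[0.0, 1.0],
                     [0.0, 1.0]])
result = np.full((2, 2), 0.5)
print(balance_indices(original, result))
```

A lower standard deviation indicates a more even brightness, while a low MSE and a mean value close to that of the original image indicate that the balancing has not distorted the image content.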

5. Conclusions

We have proposed local to global radiometric balancing to eliminate the compound brightness difference. Its brightness compensation model is used to achieve local brightness balance. Compared with brightness stretching, the result of this model has a lower MSE and a mean value closer to that of the original image. Its brightness approach model is used to achieve radiometric balance. Compared with the histogram equalization method, the result of this model has a lower standard deviation. When processing images with compound brightness difference, local to global radiometric balancing yields a lower standard deviation and a lower MSE than radiometric balancing. The main innovation of our study is that the proposed method does not depend on information about the overlapping area or on reference images.

Author Contributions

Conceptualization, G.Z. and X.L.; methodology, X.L.; software, X.L.; validation, W.Z. and S.L.; resources, G.Z.; writing—original draft preparation, X.L.; supervision, G.Z., W.Z. and S.L.; project administration, G.Z.; funding acquisition, G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guangxi universities scientific research basic capacity improvement project (grant number 2021KY1670); the National Natural Science Foundation of China (grant numbers 41431179 and 41961065); the Guangxi Innovative Development Grand Grant (grant numbers GuikeAA18118038 and GuikeAA18242048); the National Key Research and Development Program of China (grant number 2016YFB0502501); and the BaGuiScholars program of Guangxi (Guoqing Zhou).

Acknowledgments

The authors would like to thank the reviewers for their constructive comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).