This research phase evaluated whether solo CNNs can detect complex wetland classes with acceptable accuracy. To do so, the overall and producer’s accuracies were used to evaluate the capability of the different models to identify the wetland classes (i.e., bog, fen, marsh, swamp, and shallow water) as well as the non-wetland classes (i.e., urban, upland, and deep water).
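As a minimal sketch of how these two measures are usually derived from a confusion matrix: overall accuracy is the share of all samples that were classified correctly, and producer’s accuracy is, for each reference class, the share of its samples that received the correct label. The function name, the convention that rows are reference classes, and the toy counts are illustrative assumptions, not values from this study.

```python
def accuracies(confusion, class_names):
    """Return (overall accuracy, per-class producer's accuracy), both in percent.

    Assumed layout: confusion[i][j] is the number of reference samples of
    class i that were predicted as class j (rows = reference, columns = predicted).
    """
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    overall = 100.0 * correct / total

    producers = {}
    for i, name in enumerate(class_names):
        row_total = sum(confusion[i])  # all reference samples of this class
        producers[name] = (
            100.0 * confusion[i][i] / row_total if row_total else float("nan")
        )
    return overall, producers


# Toy counts for three classes (hypothetical, for illustration only).
classes = ["bog", "fen", "marsh"]
toy_confusion = [
    [8, 1, 1],  # reference bog
    [2, 6, 2],  # reference fen
    [0, 5, 5],  # reference marsh
]
overall, producers = accuracies(toy_confusion, classes)
print(round(overall, 2), producers)
```

In this toy case, the class with the fewest correct samples on its row (marsh) has the lowest producer’s accuracy, even though the overall accuracy averages across all classes.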

To evaluate the time cost of the different CNN models, their training time was assessed, as shown in Figure 12. The Inception-ResNet and DenseNet models required the longest training times, at 1392 and 1181 min, respectively. In contrast, the ResNet18 model required the least training time, at 73 min. The comparison revealed the advantage of shallow CNNs over deep CNNs in terms of overall accuracy and time. This is because the deeper CNNs have more parameters to be fine-tuned, which increases the time and computational costs. In addition, CNN models with a high number of layers require larger training samples to achieve their full potential, so limited training data may result in a lower level of accuracy. It is worth highlighting that the experiments were run on an Intel i5-6200U Central Processing Unit (CPU) at 2.30 GHz with 8 GB of Random Access Memory (RAM), operating on 64-bit Windows 10.

We also evaluated the efficiency of different solo CNN models in each study area in terms of producer’s accuracy, as described in more detail in the following subsections.

4.2. Grand Falls Study Area

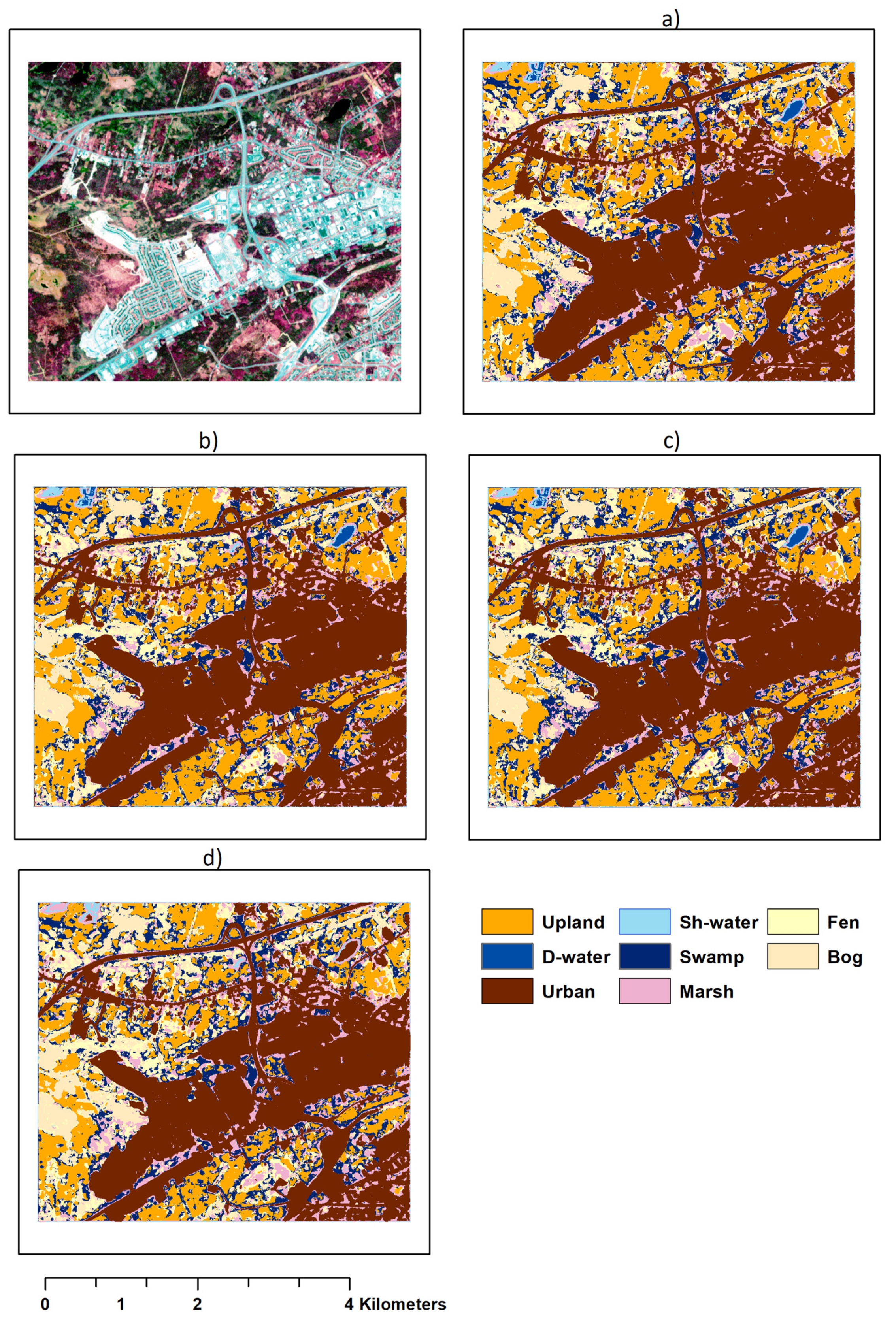

A part of the Grand Falls study area of approximately 4.2 km by 4.9 km was used for the classification mapping (Figure 14). As in the Avalon area, the CNN models performed poorly at distinguishing the bog, fen, and marsh classes, likely due to their spectral similarity and the small amount of training data. In the Grand Falls, most of the swamp areas were incorrectly classified as bog, followed by fen, marsh, and upland classes. Also, shallow water was classified as deep water in some cases, potentially because of spectral similarities (Table 4). Results indicated that all CNN models achieved a high producer’s accuracy for recognizing the non-wetland classes of urban and upland. This can generally be explained by their larger number of training data relative to that of the wetland classes.

The CNN models had a lower producer’s accuracy in the Grand Falls than in the Avalon and Gros Morne regions due to the relative complexity of this study area. Fen, marsh, and swamp regions were nevertheless better recognized by the CNN models in this study region than in the Avalon. This could be attributed to the higher number of training data in the Grand Falls for the fen and marsh classes relative to the Avalon and Gros Morne regions. However, the models performed worse on the shallow water class than in the Avalon.

In addition, as the number of training data for the urban, deep water, and upland classes was lower in the Grand Falls, the overall accuracy obtained in this area was much lower than in the Avalon and Gros Morne. GoogLeNet and ShuffleNet, with overall accuracies of 79.34% and 79.07%, respectively, were superior to the other CNN models, while ResNet18 had the lowest overall accuracy (Table 4, Figure 11, and Figure 14).

In the Grand Falls, with much less training data, CNN networks including ShuffleNet and GoogLeNet were superior to the deeper CNN models of Inception-ResNet and ResNet101. This is because ShuffleNet and GoogLeNet have fewer parameters to be fine-tuned. There was a higher number of training data for the wetland classes in the Grand Falls; consequently, the classification accuracy for these classes was higher in this study area than in the Avalon and Gros Morne. On the other hand, the training data for the non-wetland classes were relatively scarce in the Grand Falls, resulting in a lower overall accuracy, ranging from 73.87% to 79.34% (Figure 11 and Figure 14).

4.3. Gros Morne Study Area

A part of the Gros Morne study area of about 4.2 km by 4.9 km was used for the classification mapping (Figure 15). In the Gros Morne region, the solo CNN models again misclassified the bog, fen, and marsh classes. Most of the swamp areas were recognized as the upland class, and most of the CNNs had difficulty separating shallow and deep water. It is worth mentioning that the swamp and upland classes may have similar structure and vegetation types, specifically in the low-water seasons. Consequently, their spectral reflectance can be similar, which leads to misclassification. Generally, wetlands are a complex environment in which some classes, specifically bog, fen, and marsh, have similar spectral signatures. All the solo CNNs achieved a high level of accuracy for the non-wetland classes of urban, deep water, and upland (Table 5 and Figure 15). In this study area, as in the Avalon, there were fewer training data for the wetland classes of fen, marsh, swamp, and shallow water. Consequently, the performance of the solo CNNs on these classes was relatively poor compared to the Grand Falls. Moreover, as the number of training data for the non-wetland classes of deep water and upland and the wetland class of bog was higher in this region, the achieved overall accuracy was higher than in the Avalon and Grand Falls. With an overall accuracy of 90.14%, the Inception-ResNet network was superior to the other solo CNNs (Figure 11, Figure 14, and Table 5).

There were more training data for bog, urban, deep water, and upland classes in the Avalon and Gros Morne. As a result, CNN models including MobileNet and Inception-ResNet with more parameters outperformed the CNN networks of ShuffleNet and GoogLeNet with fewer parameters.

4.4. Results of Ensemble Models

In this study, the main objective of integrating CNN models was to improve the wetland classification accuracy. As such, the probability layers extracted from the three solo CNN models with the highest wetland classification accuracy in each study area were fused using four different approaches: RF, BTree, BOT, and majority voting. The overall accuracy from a comparison of the predicted and reference classes is presented in Figure 16. Overall, the RF, BOT, and BTree models showed higher accuracy in the Gros Morne region, followed by the Avalon and Grand Falls regions. In the Avalon area, the BOT classifier improved the overall accuracy of wetland classes by 6.43% through the ensemble of the DenseNet, ResNet18, and Xception networks (i.e., the models with the best results for wetland classification). Overall accuracy in the Avalon was generally lower than in the Gros Morne study area. With the ensemble of Inception-ResNet, Xception, and MobileNet using the BTree algorithm, the overall accuracy was improved by 3.36% in the Gros Morne study area. The Grand Falls had the lowest overall accuracy of the three areas; there, the BTree obtained higher accuracy than the majority voting, BOT, and RF classifiers, improving the overall accuracy by about 8.16% using the ensemble of GoogLeNet, Xception, and MobileNet.
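One plausible way to picture the input to the supervised fusion step is sketched below: for each sample, the class-probability vectors of the three selected CNNs are concatenated into a single feature vector, which a tree-based classifier (RF, BTree, or BOT) would then be trained on. The exact feature layout is an assumption, the function name is hypothetical, the probabilities are made-up toy values, and the classifier itself is deliberately left out.

```python
def stack_probabilities(per_model_probs):
    """Concatenate per-model class probabilities into one feature vector per sample.

    per_model_probs: list with one entry per CNN; each entry is a list with one
    class-probability list per sample. All models must cover the same samples.
    """
    n_samples = len(per_model_probs[0])
    if any(len(model) != n_samples for model in per_model_probs):
        raise ValueError("all models must provide probabilities for the same samples")
    return [
        [p for model in per_model_probs for p in model[s]]
        for s in range(n_samples)
    ]


# Toy example: 3 CNNs, 2 samples, 2 classes (hypothetical probabilities).
cnn_a = [[0.7, 0.3], [0.2, 0.8]]
cnn_b = [[0.6, 0.4], [0.4, 0.6]]
cnn_c = [[0.9, 0.1], [0.5, 0.5]]
features = stack_probabilities([cnn_a, cnn_b, cnn_c])
print(features)  # one row per sample, 3 models x 2 classes = 6 values each
```

With eight land cover classes and three CNNs, each sample would carry 24 probability values under this layout.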

In the Avalon region, the BTree classifier improved the results of the best solo CNN (i.e., MobileNet) for the classification of the marsh, swamp, and fen classes by 36.68%, 25.76%, and 20.01%, respectively. However, the classification accuracies of shallow water and bog decreased by 12.5% and 3.29%, respectively (Table 6 and Figure 17).

In the Grand Falls region, the BTree classifier improved the shallow water, marsh, swamp, and bog classification of the best solo CNN (i.e., GoogLeNet) by 30.28%, 24.27%, 15.99%, and 5.72%, respectively. However, the classification accuracy of fen decreased by 6.27%. The results of ensemble modeling indicated a significant improvement in wetland classification compared to that of the solo CNNs (Table 7 and Figure 18).

It is worth noting that, in the Gros Morne region, even though the overall accuracy did not increase substantially, the ensemble models achieved better classification accuracies for the wetland classes of swamp, fen, marsh, and shallow water. In more detail, the classification accuracy of these classes improved by 62.06%, 32.95%, 26.09%, and 9.79%, respectively, using the BTree classifier compared to that of the best solo CNN (i.e., Inception-ResNet) (Table 8 and Figure 19). However, the classification accuracy of bog decreased by 14.38%.

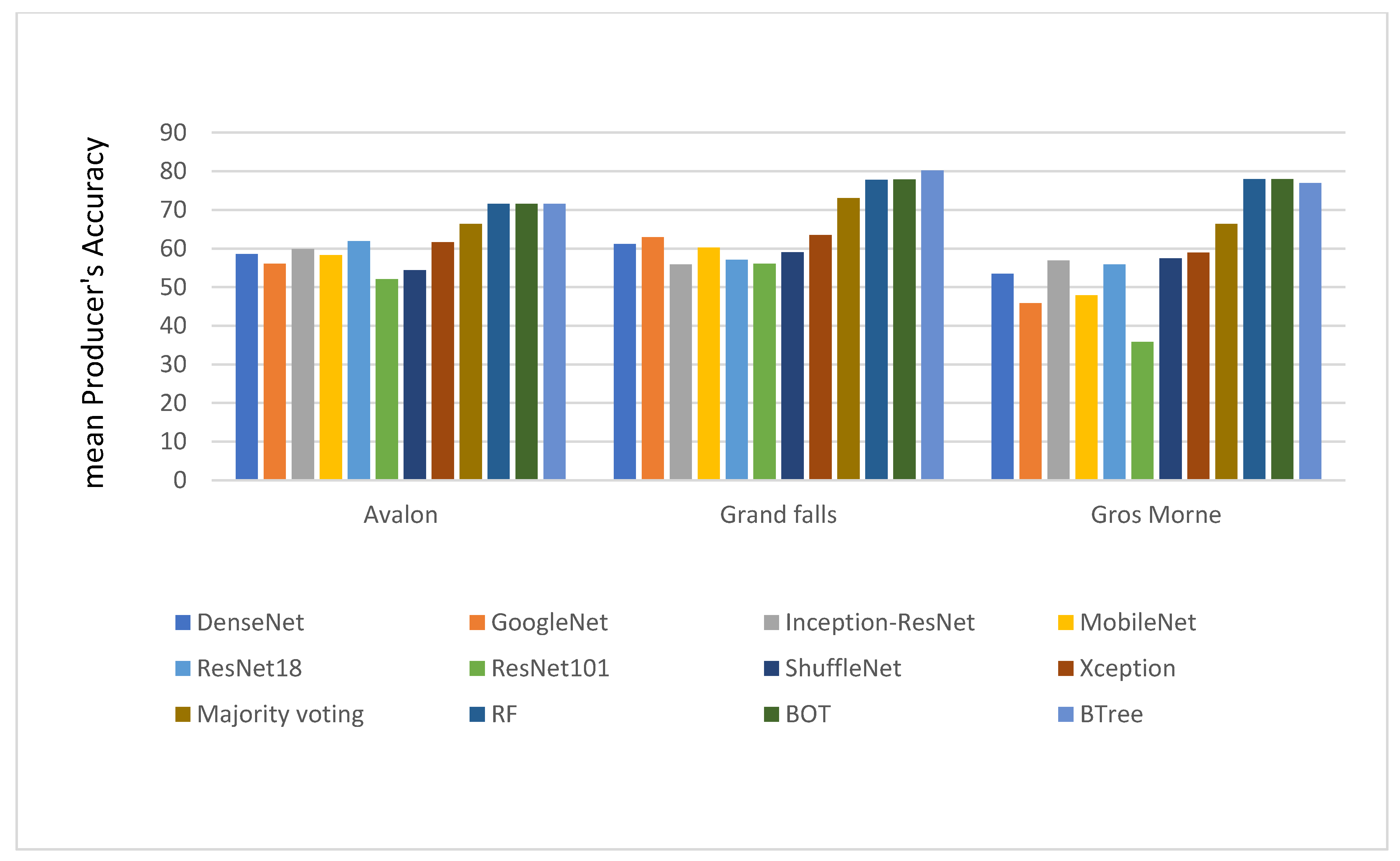

To evaluate the efficiency and effectiveness of the solo CNNs and ensemble models for the classification of the wetland classes of bog, fen, marsh, swamp, and shallow water, their mean producer’s accuracy was assessed and summarized in Figure 20.

The comparison revealed the superiority of the ensemble models compared to the solo CNN networks in terms of the mean producer’s accuracy. Results indicated a strong agreement between the predicted and reference wetland classes in the Gros Morne region using the ensemble models. In more detail, the ensemble model of the RF algorithm had the highest accuracy with a mean producer’s accuracy of 78%, where it improved the results of the best solo CNN model for the wetland classification (i.e., Xception with a mean producer’s accuracy of 58.96%) by more than 19%. In the Grand Falls, the ensemble model of the BTree improved the accuracy of the best solo CNN model (i.e., Xception with a mean producer’s accuracy of 63.51%) by 16.7%, with a mean producer’s accuracy of 80.21%. Finally, the Avalon area had the least agreement between the predicted and reference wetland classes using the ensemble models.

In the Avalon, the BTree classifier improved the results of the best solo CNN model, ResNet18 (with a mean producer’s accuracy of 61.96%), by 9.63%, reaching a mean producer’s accuracy of 71.59%. Results obtained by the solo and ensemble CNNs indicated the advantage of shallower CNN models, including ResNet18 and Xception, over very deep learning models, such as DenseNet. In addition, the classification accuracies achieved by the solo CNN models were substantially improved by the ensemble models in all three study areas for the wetland classification of bog, fen, marsh, swamp, and shallow water (Table 6, Table 7, and Table 8).
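The reported gains are differences in percentage points between the mean producer’s accuracy of the best solo CNN and that of the best ensemble. The short sketch below recomputes them from the figures quoted in the text. The function names are hypothetical, and the mean is assumed to be an unweighted average over the five wetland classes.

```python
WETLAND_CLASSES = ["bog", "fen", "marsh", "swamp", "shallow water"]


def mean_producers_accuracy(producers, classes=WETLAND_CLASSES):
    """Unweighted mean of per-class producer's accuracies (assumed definition)."""
    return sum(producers[c] for c in classes) / len(classes)


def gain_in_points(solo, ensemble):
    """Improvement of the ensemble over the solo model, in percentage points."""
    return round(ensemble - solo, 2)


# (best solo CNN, best ensemble) mean producer's accuracy as quoted in the text.
reported = {
    "Gros Morne": (58.96, 78.00),   # Xception vs. RF ensemble
    "Grand Falls": (63.51, 80.21),  # Xception vs. BTree ensemble
    "Avalon": (61.96, 71.59),       # ResNet18 vs. BTree ensemble
}
gains = {area: gain_in_points(solo, ens) for area, (solo, ens) in reported.items()}
print(gains)
```

The recomputed differences match the gains stated in the text: just over 19 points for Gros Morne, 16.7 for the Grand Falls, and 9.63 for the Avalon.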

The number of parameters that must be fine-tuned for each solo CNN is presented in Table 9. It is evident from Table 9 that the Inception-ResNet, ResNet101, and MobileNet networks, with approximately 50.2, 42.5, and 40.5 million parameters, respectively, had the highest number of parameters to be fine-tuned. On the other hand, ShuffleNet, GoogLeNet, and ResNet18, with about 1, 6, and 11.2 million parameters, respectively, had the fewest parameters.

The solo CNNs with a higher number of parameters (e.g., Inception-ResNet) require more training data to reach their full classification potential. Such data are rarely available in remote sensing applications, specifically in wetland classification. As discussed in the previous sections, creating a large training data set is labor-intensive and quite costly in remote sensing. Overall, this research demonstrated that, with a limited number of training data, CNN networks with fewer parameters (e.g., ShuffleNet) had better classification performance.

Moreover, the results demonstrated that the supervised classifiers, including BTree, BOT, and RF, were superior in terms of overall accuracy and mean producer’s accuracy to the unsupervised majority voting classifier in the Avalon, Grand Falls, and Gros Morne. Their different data fusion strategy can explain their better classification results. In the majority voting classifier, the results of the best CNNs are simply fused by taking the majority value. In contrast, in supervised tree-based classifiers such as the BTree algorithm, a classifier is trained once more on the CNN outputs to minimize the classification error, resulting in much better classification accuracy.
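The majority voting rule can be sketched in a few lines: each CNN casts one vote per sample, and the most frequent label wins, with no further training involved. The function name and the tie-breaking rule (fall back to the earliest-listed model among the tied labels) are assumptions for illustration; the source does not state how ties were resolved.

```python
from collections import Counter


def majority_vote(predictions):
    """Fuse per-model label predictions by majority voting.

    predictions: list with one entry per model; each entry is a list of
    predicted labels, one per sample. Ties are broken in favor of the label
    voted for by the earliest-listed model (an assumed convention).
    """
    fused = []
    for votes in zip(*predictions):  # votes for one sample across all models
        counts = Counter(votes)
        best = max(counts.values())
        fused.append(next(v for v in votes if counts[v] == best))
    return fused


# Toy labels from three models for three samples (hypothetical).
model_1 = ["bog", "fen", "marsh"]
model_2 = ["bog", "marsh", "swamp"]
model_3 = ["fen", "marsh", "fen"]
fused = majority_vote([model_1, model_2, model_3])
print(fused)
```

For the third sample all three models disagree, so the vote carries no information and the outcome depends entirely on the tie-breaking rule. A trained fusion classifier can instead learn from the reference data which model to trust for which class.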