An Imaging Network Design for UGV-Based 3D Reconstruction of Buildings

Abstract

1. Introduction

- Next Best View Planning: starting from initial viewpoints, the research question is where the next viewpoints should be placed. Most approaches use Next Best View (NBV) methods to plan viewpoints without prior geometric information about the target object in the form of a 3D model. Generally, NBV methods iteratively find the next best viewpoint based on a cost-planning function and information from previously planned viewpoints. These methods also use partial geometric information of the target object, reconstructed from the planned viewpoints, to plan future sensor placements [21]. To find the next best viewpoints, the area scanned from the initial viewpoints is represented in one of three ways: triangular meshes [22], volumetric representations [23], or surfel representations [24].

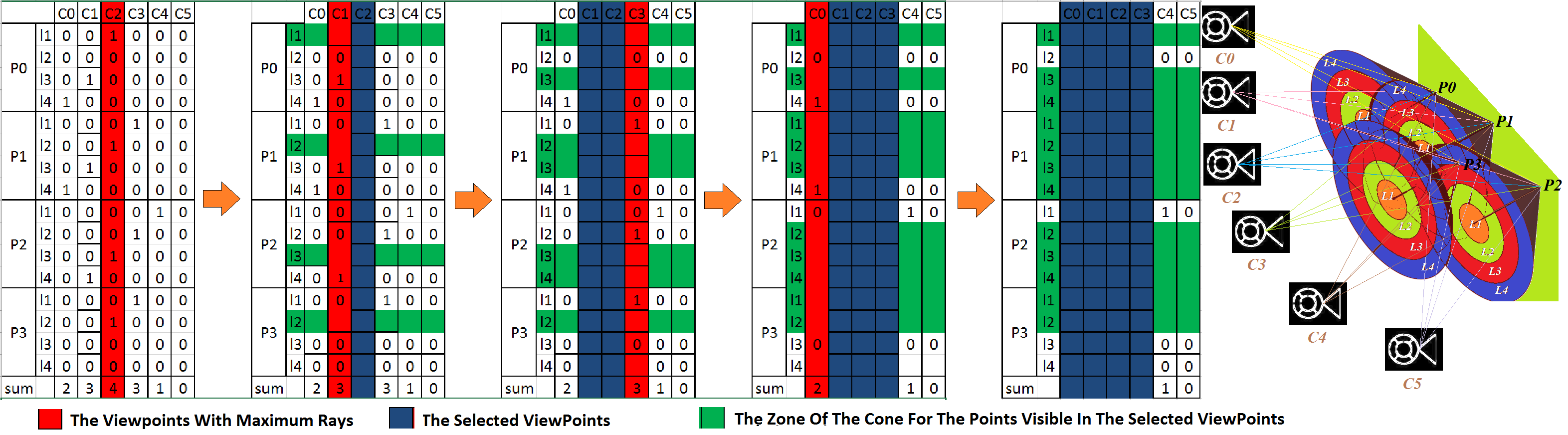

- Clustering and Selecting the Vantage Viewpoints: given a dense imaging network, clustering and selecting the vantage images is the primary goal [18]. Usually, in this category, the core functionality is performed by defining a visibility matrix between sparse surface points (rows) and the camera poses (columns), which can be estimated through a structure from motion procedure [15,16,25,26,27].

- Complete Imaging Network Design (also known as model-based design): contrary to the previous methods, complete imaging network design is performed without any initial network, but an initial geometric model of the object should be available. The common approaches in this category are classified into set theory, graph theory and computational geometry [28].
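The visibility-matrix idea behind the clustering and selecting category can be illustrated with a short sketch. It assumes a boolean matrix with surface points as rows and camera poses as columns, and uses a plain greedy coverage rule as a stand-in; the function name `greedy_select`, the `min_views` parameter and the greedy rule itself are hypothetical and are not taken from the cited works.

```python
import numpy as np

def greedy_select(visibility, min_views=3):
    """Greedily pick camera columns until every point is seen by
    min_views selected cameras (or by all cameras that can see it)."""
    vis = np.asarray(visibility, dtype=bool)
    n_points, n_cams = vis.shape
    # A point cannot require more views than the number of cameras seeing it.
    target = np.minimum(vis.sum(axis=1), min_views)
    counts = np.zeros(n_points, dtype=int)
    selected = []
    remaining = set(range(n_cams))
    while np.any(counts < target) and remaining:
        deficit = counts < target
        # Gain of a camera = number of under-covered points it observes.
        best = max(sorted(remaining),
                   key=lambda c: int(np.sum(vis[:, c] & deficit)))
        if np.sum(vis[:, best] & deficit) == 0:
            break  # no remaining camera improves coverage
        selected.append(best)
        remaining.discard(best)
        counts += vis[:, best]
    return selected

# Toy example: 4 surface points (rows) and 4 camera poses (columns).
vis = np.array([[1, 1, 0, 0],
                [1, 1, 0, 0],
                [1, 0, 1, 0],
                [1, 0, 1, 1]])
print(greedy_select(vis, min_views=2))
```

In this toy matrix the fourth camera is never selected, because every point it observes already has two views once the first three cameras are chosen.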

- (i) It proposes a method that suggests reachable camera poses, delivered either to a robot as numerical pose values or to a human operator as vectors on a metric map.

- (ii) In contrast to the imaging network design methods which have been developed to generate initial viewpoints located on an ellipse or a sphere at an optimal range from the object, the initial viewpoints are here placed within the maximum and minimum optimal ranges on a two-dimensional map.

- (iii)

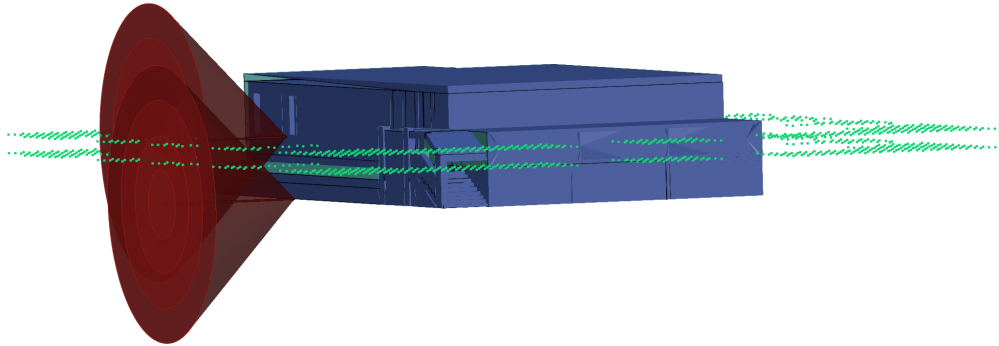

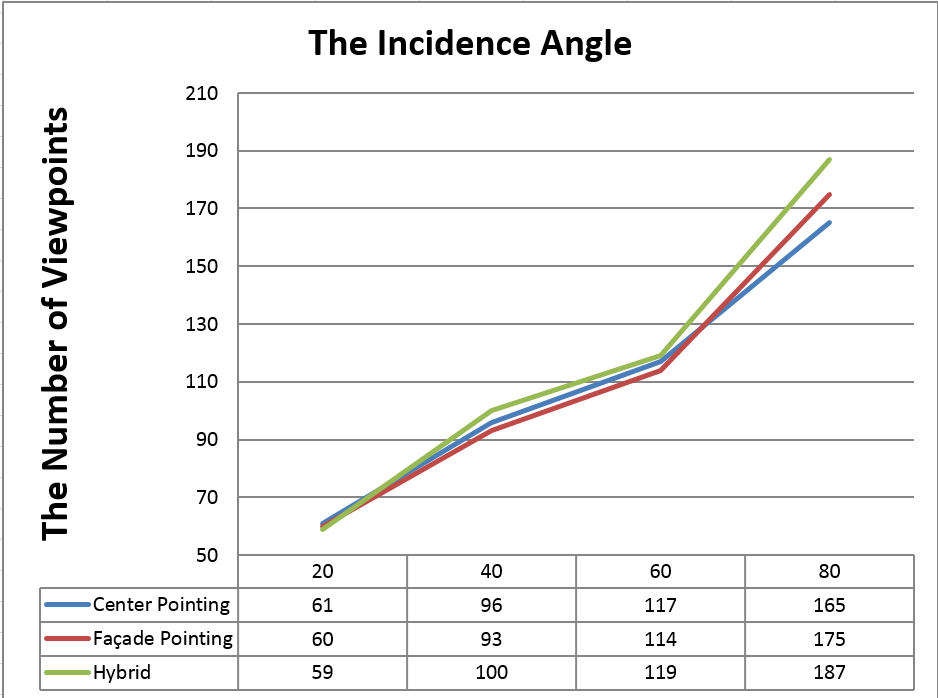

- Contrary to other imaging network design methods developed for building 3D reconstruction (e.g., [21]), the presented method takes into account range-related constraints in defining the suitable range from the building. Moreover, clustering and selecting approach is accomplished using a visibility matrix defined based on a four-zone cone instead of filtering for coverage with only three rays for each point and filtering for accuracy without considering the impact of a viewpoint in dense 3D reconstruction. Additionally, in the presented method, four different definitions of viewpoints are examined to evaluate the best viewpoint directions.

- (iv) To evaluate the proposed methods, a simulated environment including textured buildings in ROS Gazebo, as well as a ROS-based simulated UGV equipped with a 2D LiDAR, a DSLR camera and an IMU, are provided and freely available at https://github.com/hosseininaveh/Moor_For_BIM (accessed on 13 May 2021). Researchers can use them to evaluate their own methods.

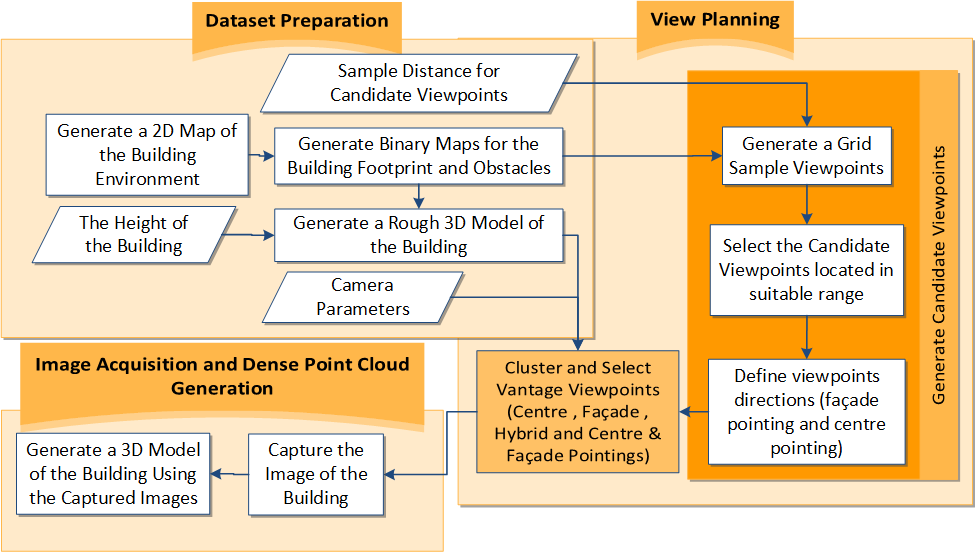

2. Materials and Methods

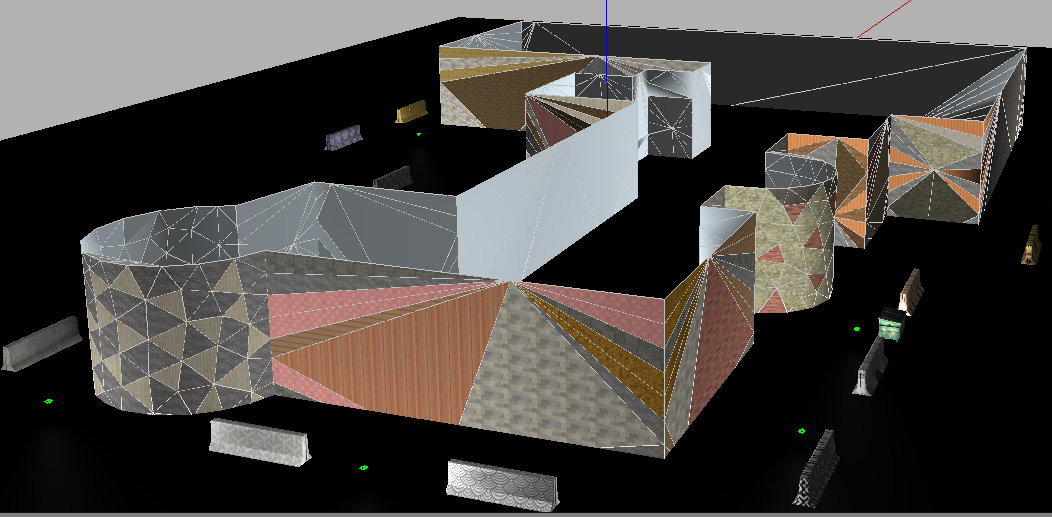

- A dataset is created for running the proposed view planning algorithm, including a 2D map of the building; an initial 3D model of the building, generated simply by extruding the map to the height of the building; camera calibration parameters; and a minimum distance for candidate viewpoints (in order to keep the correct Ground Sample Distance (GSD) for 3D reconstruction purposes).

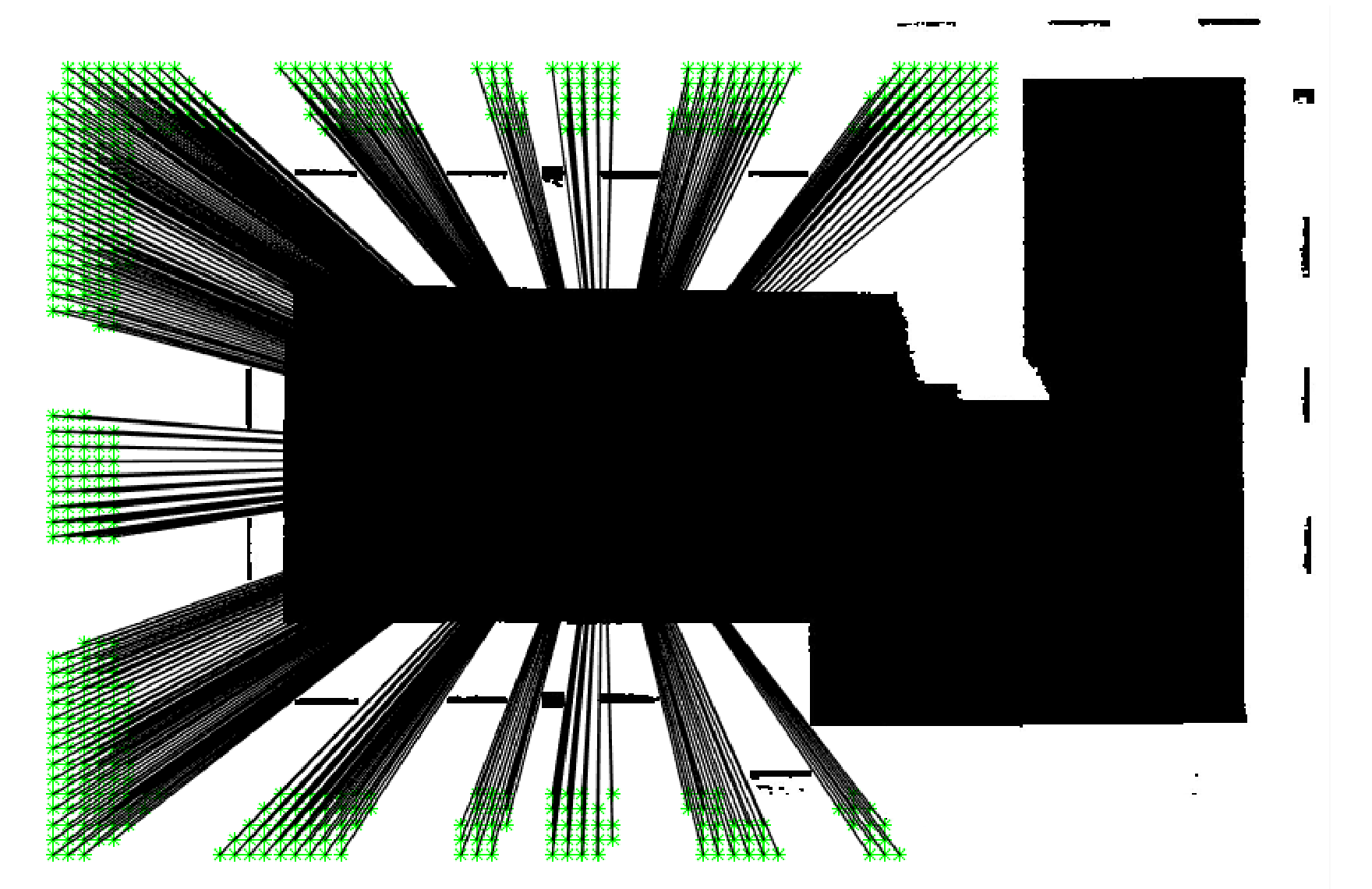

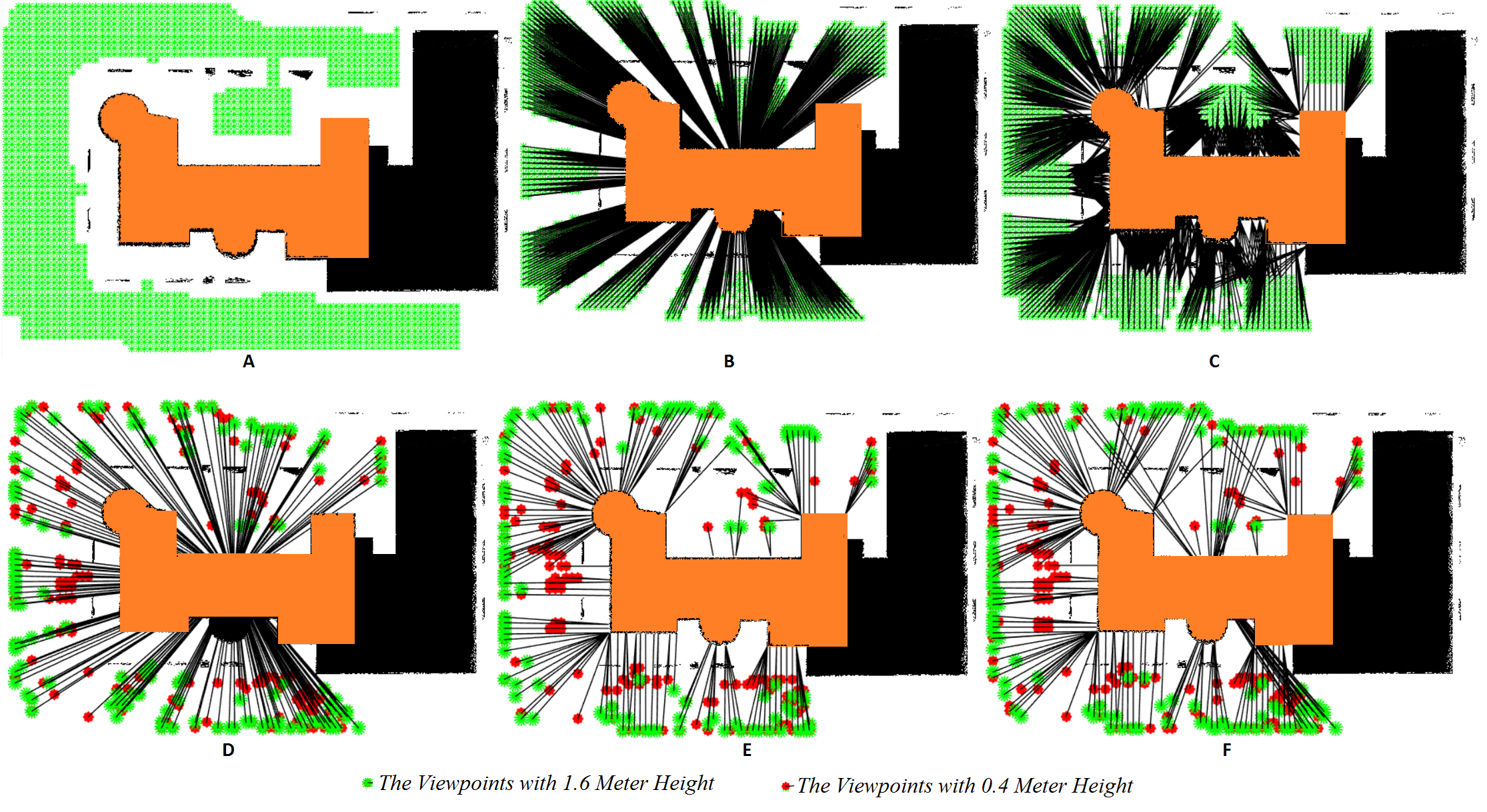

- A set of candidate viewpoints is provided by generating a grid of sample viewpoints on binary maps extracted from the 2D map and selecting the viewpoints located in a suitable range, considering the imaging network constraints. The direction of the camera at each viewpoint is calculated by pointing towards the façade (called façade pointing), pointing towards the centre of the building (called centre pointing), or using both directions at each viewpoint location (centre & façade pointing). The candidate viewpoints in each pose are duplicated at two different heights (0.4 and 1.6 m).

- The generated candidate viewpoints, the camera calibration parameters and the initial 3D model of the building are used in the process of clustering and selecting vantage viewpoints with four different approaches including: centre pointing, façade pointing, hybrid, and centre & façade pointing.

- Given the viewpoint poses selected in the above-mentioned approaches, a set of images is captured at the designed viewpoints and processed with photogrammetric methods to generate dense 3D point clouds.
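The candidate generation step in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function `candidate_viewpoints` and its parameters (`resolution_m`, `d_min_m`, `d_max_m`, `step_px`) are hypothetical names, the distance to the building is computed by brute force, and obstacles and the other imaging network constraints are ignored. Only the two camera heights (0.4 and 1.6 m) come from the text.

```python
import numpy as np

def candidate_viewpoints(building_mask, resolution_m, d_min_m, d_max_m,
                         step_px=5, heights_m=(0.4, 1.6)):
    """Return an (N, 3) array of x, y, z candidates whose distance to the
    nearest building cell lies within [d_min_m, d_max_m]."""
    mask = np.asarray(building_mask, dtype=bool)
    rows, cols = mask.shape
    # Building cells in metres (x along columns, y along rows).
    b_r, b_c = np.nonzero(mask)
    building_xy = np.column_stack([b_c, b_r]) * resolution_m
    # Regular grid of sample locations over the whole map.
    g_r, g_c = np.meshgrid(np.arange(0, rows, step_px),
                           np.arange(0, cols, step_px), indexing="ij")
    grid_rc = np.column_stack([g_r.ravel(), g_c.ravel()])
    # Drop samples that fall on the building itself.
    grid_rc = grid_rc[~mask[grid_rc[:, 0], grid_rc[:, 1]]]
    grid_xy = grid_rc[:, ::-1] * resolution_m
    # Brute-force distance from each sample to the nearest building cell.
    diff = grid_xy[:, None, :] - building_xy[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
    xy = grid_xy[(dist >= d_min_m) & (dist <= d_max_m)]
    # Duplicate every kept location at each camera height.
    return np.vstack([np.column_stack([xy, np.full(len(xy), h)])
                      for h in heights_m])

# Toy map: 21 x 21 cells of 1 m with a 5 x 5 m building in the middle.
mask = np.zeros((21, 21), dtype=bool)
mask[8:13, 8:13] = True
vps = candidate_viewpoints(mask, 1.0, d_min_m=3.0, d_max_m=5.0, step_px=1)
print(vps.shape)
```

For large maps, a distance transform would be a more efficient replacement for the brute-force distance computation used here.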

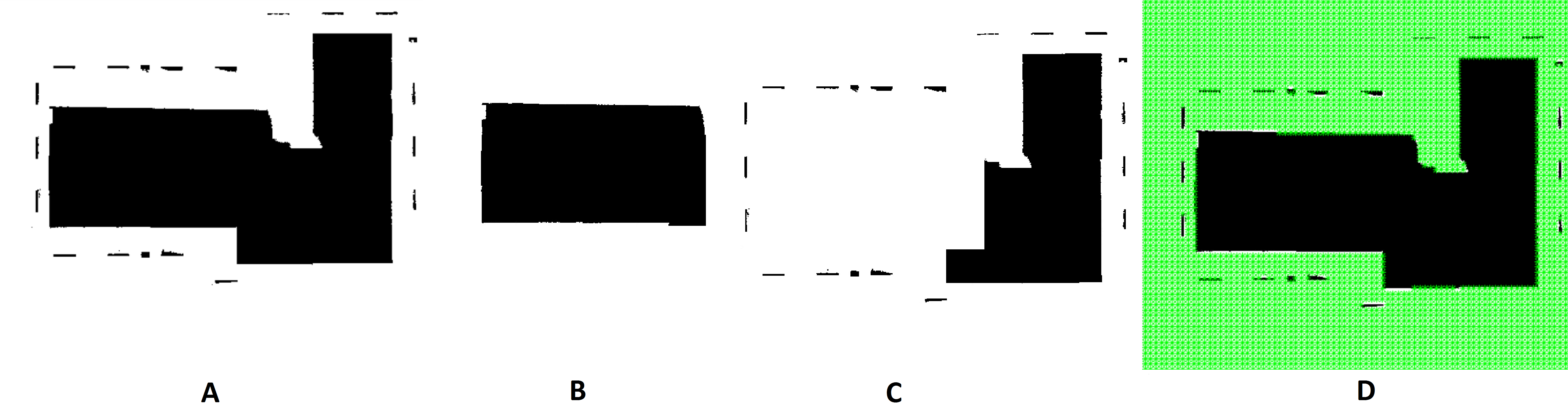

2.1. Dataset Preparation

2.2. Generating Candidate Viewpoints

2.2.1. Generating a Grid of Sample Viewpoints

2.2.2. Selecting the Candidate Viewpoints Located in a Suitable Range

2.2.3. Defining Viewpoint Directions

- (i) the camera is looking at the centre of the building (centre pointing) [29]: the directions of the viewpoints are generated by estimating the centre of the building in the binary image, computed as the centroid derived from image moments on the building map [57], and defining the vector between each viewpoint location and the estimated centre.

- (ii) the camera in each location is looking at the nearest point on the façade (façade pointing): some of the points on the façade may not be visible because they are located behind obstacles or on the corners of complex buildings. These points are recognized by running the Harris corner detector on the map of the building to find the building corners and by recognizing the edge points located in front of large obstacles within the range buffer. Given these points, the directions of the six nearest viewpoints to these points are modified to point towards them.

- (iii) two directions (centre & façade pointing) are defined for each viewpoint location: the directions of the viewpoints from both previous approaches are considered.
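The first two direction definitions can be sketched in a few lines. The sketch assumes a binary building map with pixel coordinates (x along columns, y along rows) and expresses each direction as a yaw angle from `atan2`; the function names and the yaw convention are hypothetical, and the Harris-corner-based correction for hidden façade points is not included.

```python
import math
import numpy as np

def building_centroid(mask):
    """Centroid (x, y) in pixels from the raw image moments m00, m10, m01."""
    mask = np.asarray(mask, dtype=float)
    ys, xs = np.mgrid[0:mask.shape[0], 0:mask.shape[1]]
    m00 = mask.sum()
    return (xs * mask).sum() / m00, (ys * mask).sum() / m00

def centre_pointing_yaw(viewpoint_xy, centroid_xy):
    """Yaw (radians) of the vector from the viewpoint to the centroid."""
    return math.atan2(centroid_xy[1] - viewpoint_xy[1],
                      centroid_xy[0] - viewpoint_xy[0])

def facade_pointing_yaw(viewpoint_xy, mask):
    """Yaw (radians) towards the nearest building pixel."""
    ys, xs = np.nonzero(mask)
    d2 = (xs - viewpoint_xy[0]) ** 2 + (ys - viewpoint_xy[1]) ** 2
    i = int(np.argmin(d2))
    return math.atan2(ys[i] - viewpoint_xy[1], xs[i] - viewpoint_xy[0])

# Toy map: a wide building occupying rows 4-6 and columns 2-8.
mask = np.zeros((11, 11))
mask[4:7, 2:9] = 1
centre = building_centroid(mask)
viewpoint = (2, 0)
print(centre_pointing_yaw(viewpoint, centre),
      facade_pointing_yaw(viewpoint, mask))
```

For this viewpoint the two definitions give different directions: centre pointing looks obliquely towards the middle of the building, while façade pointing looks straight at the closest wall pixel. The third definition simply keeps both yaw values for the same location.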

2.3. Clustering and Selecting Vantage Viewpoints

2.4. Image Acquisition and Dense Point Cloud Generation

3. Results

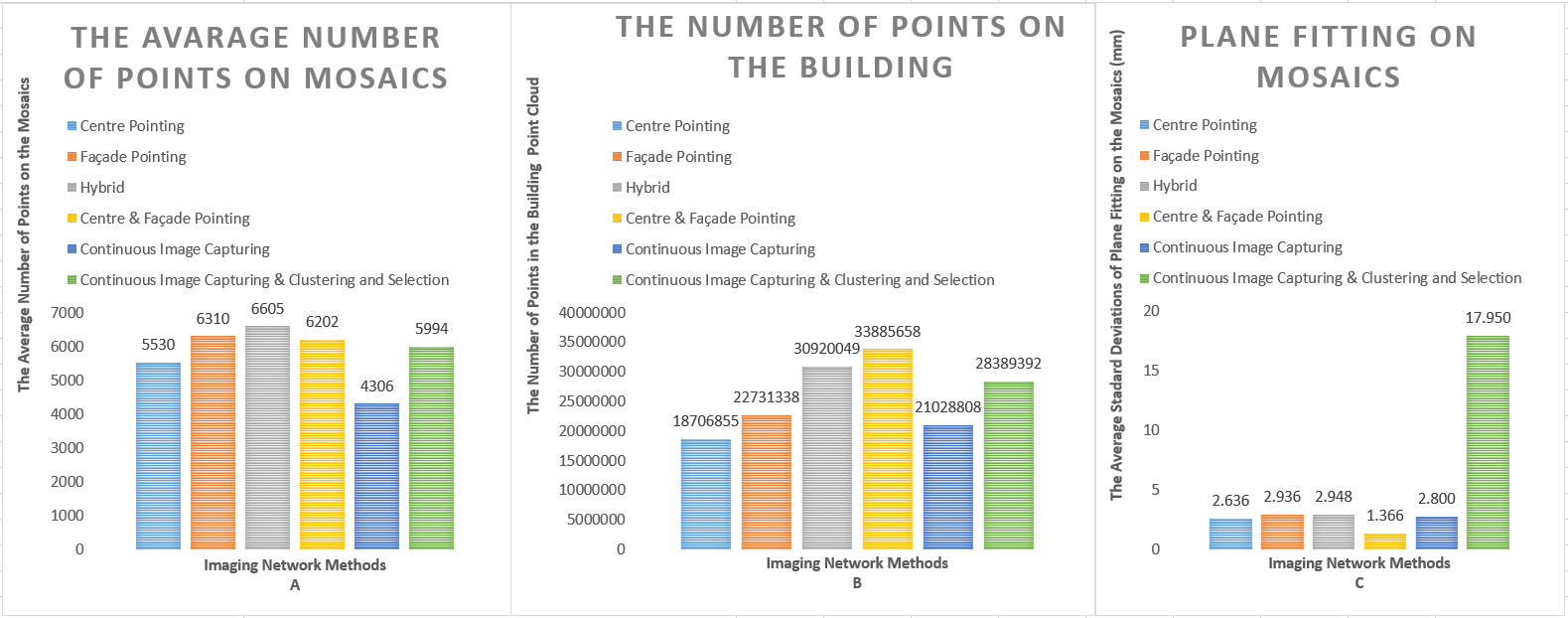

3.1. Simulation Experiments on a Building with Rectangular Footprint

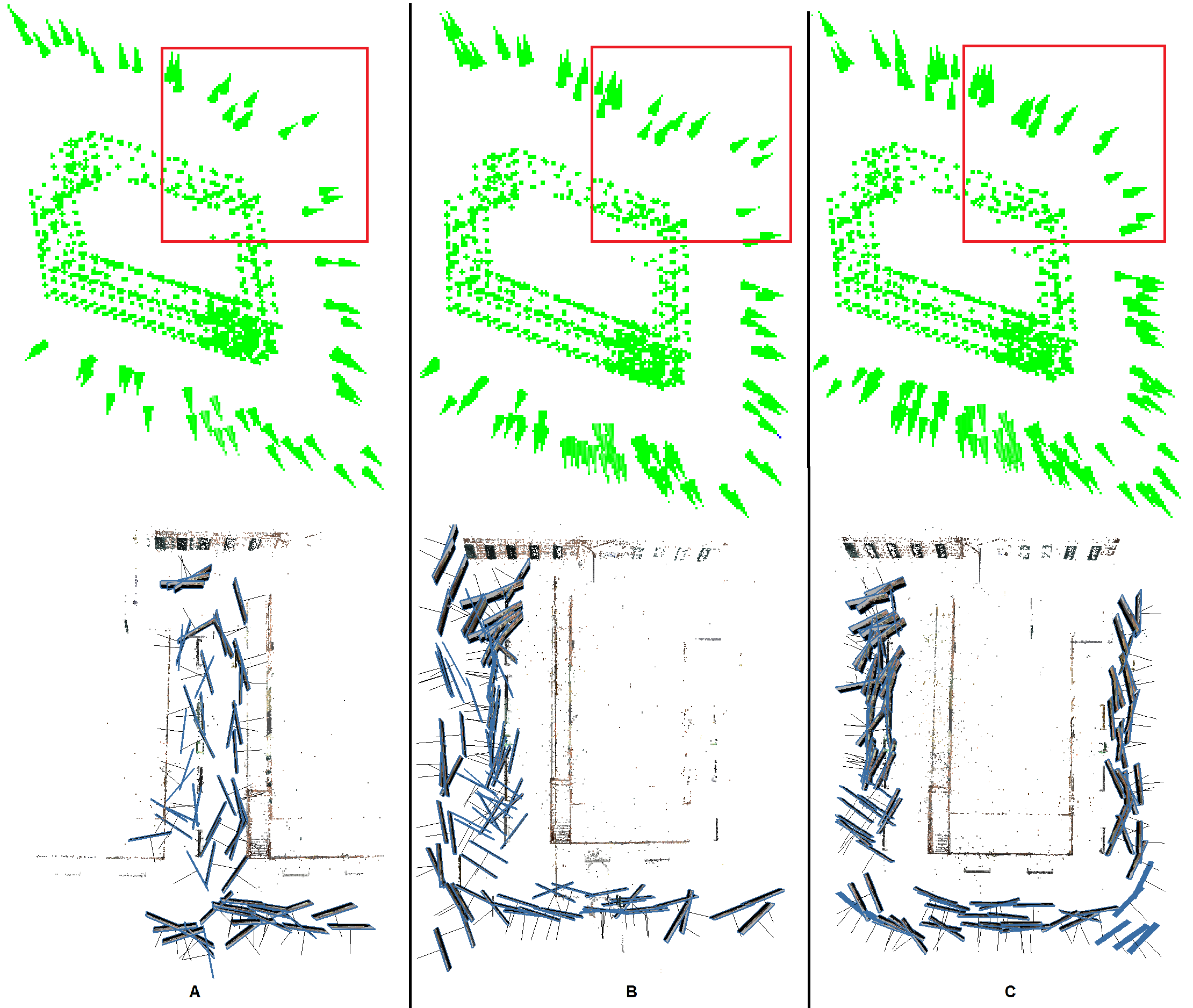

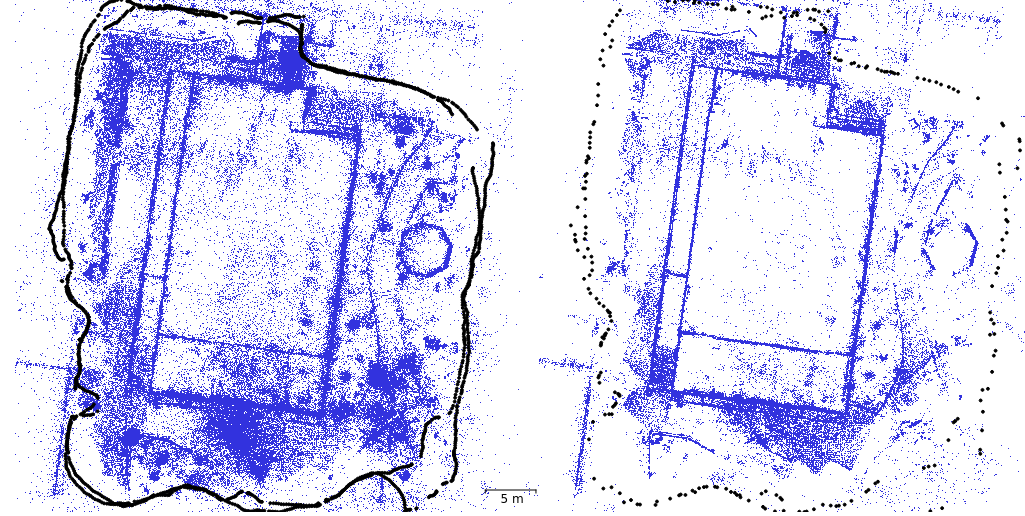

3.1.1. Generating Initial Candidate Viewpoints

3.1.2. Selecting the Candidate Viewpoints Located in a Suitable Range

3.1.3. Defining Viewpoint Directions

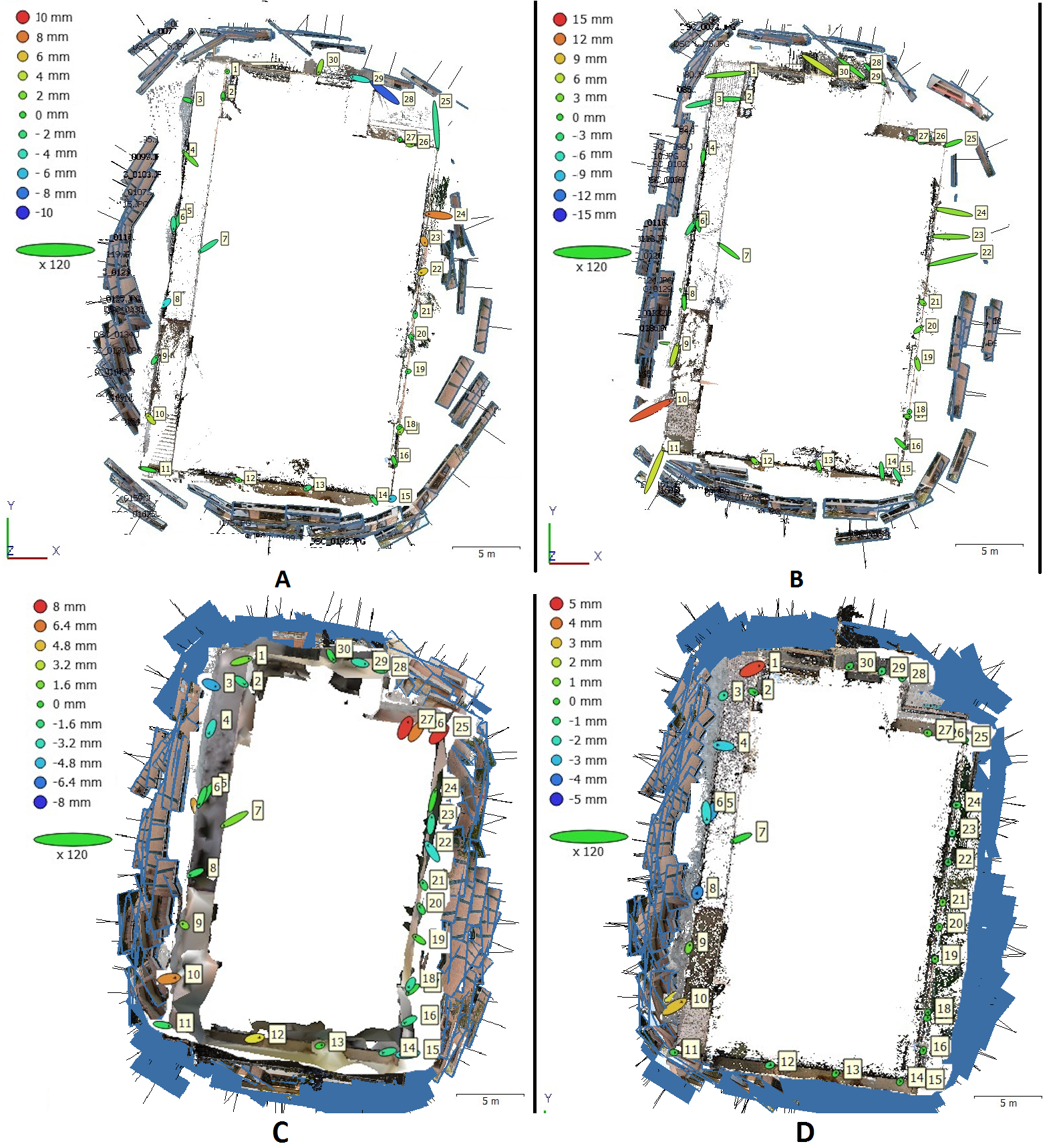

3.1.4. Clustering and Selecting Vantage Viewpoints

- Centre pointing approach: 96 viewpoints were selected out of 1020 initial candidate viewpoints;

- Façade pointing: 107 viewpoints were selected out of 5218 initial viewpoints;

- Hybrid approach: 119 viewpoints were selected out of 6238 initial candidates;

- Centre & façade pointing: 213 viewpoints were chosen as a dataset including the output of both of the first two approaches.

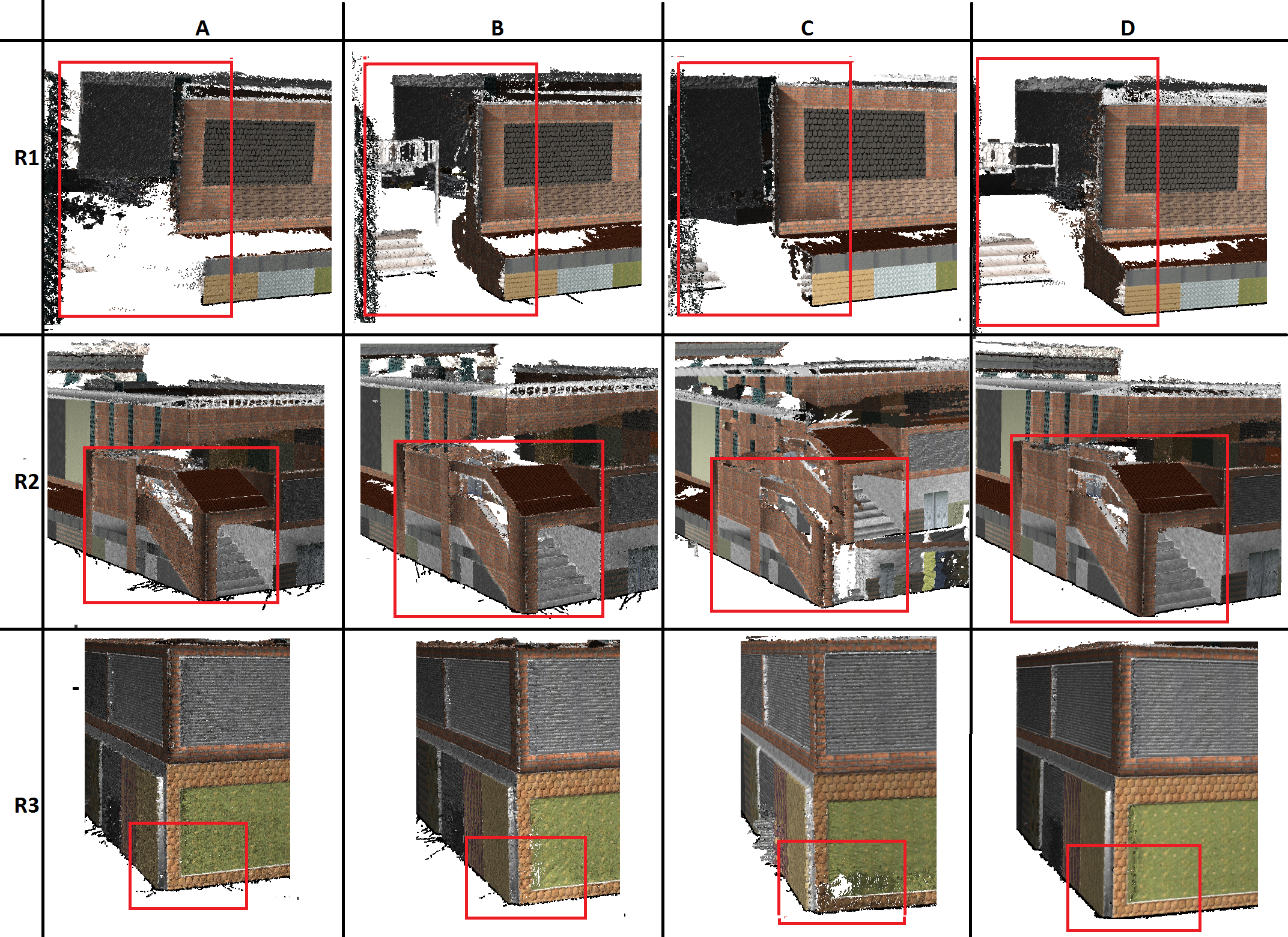

3.1.5. Image Acquisition and Dense Point Cloud Generation

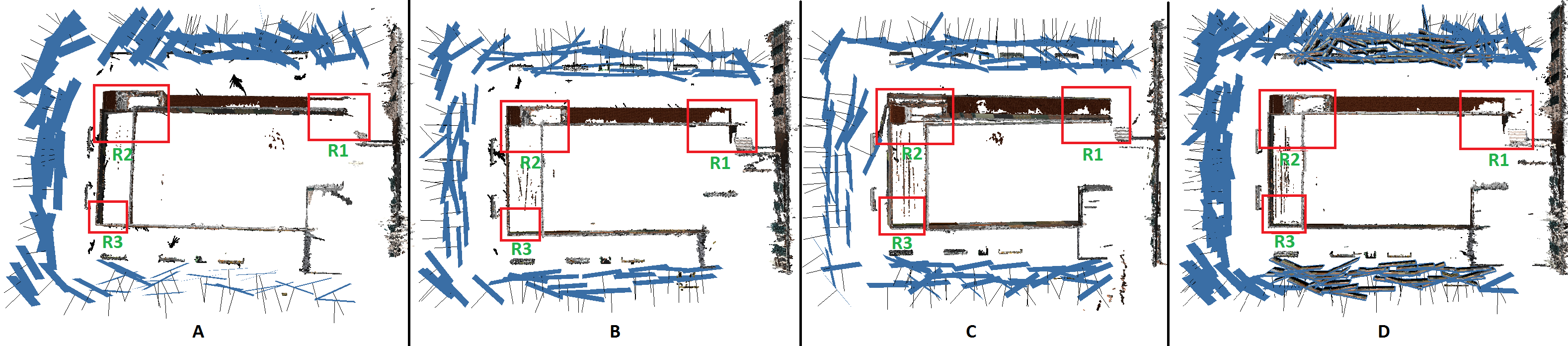

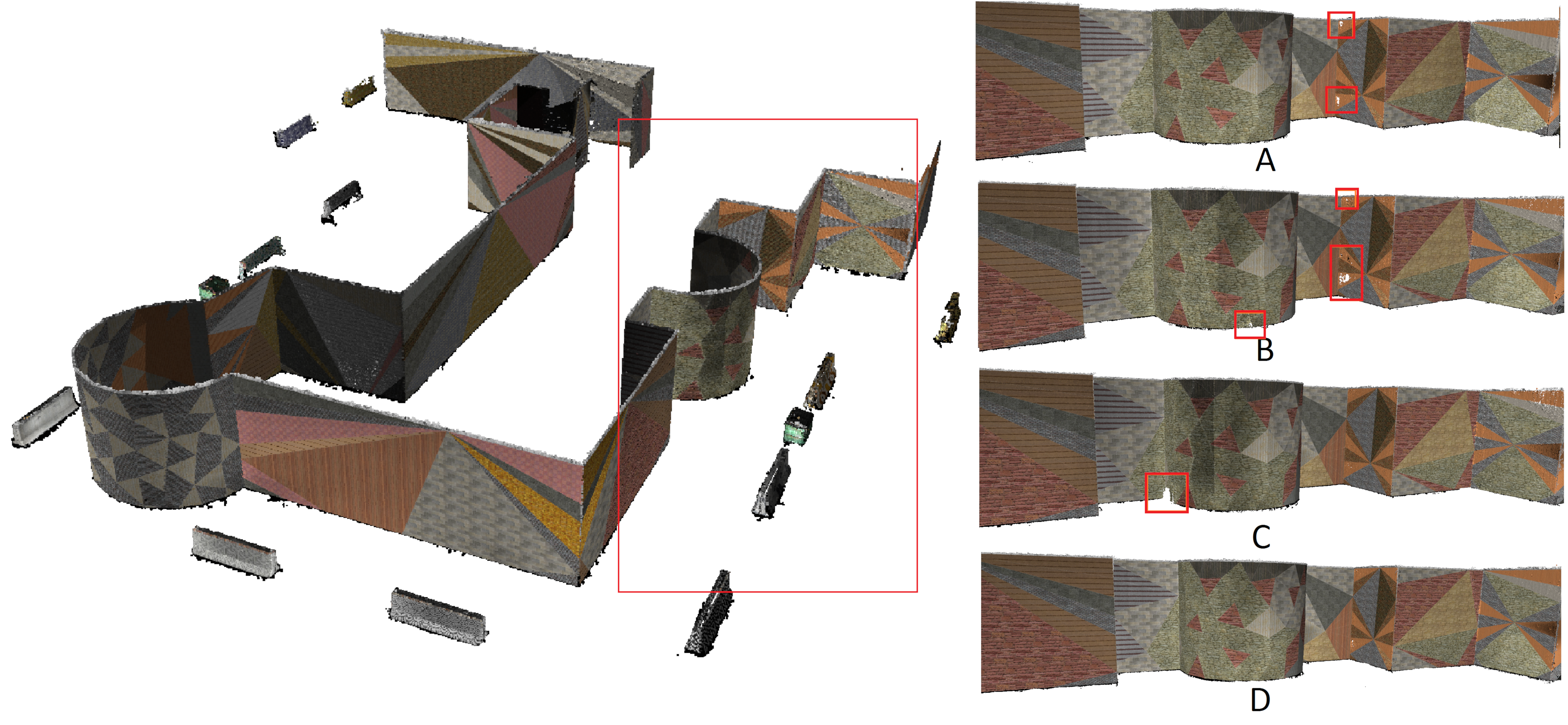

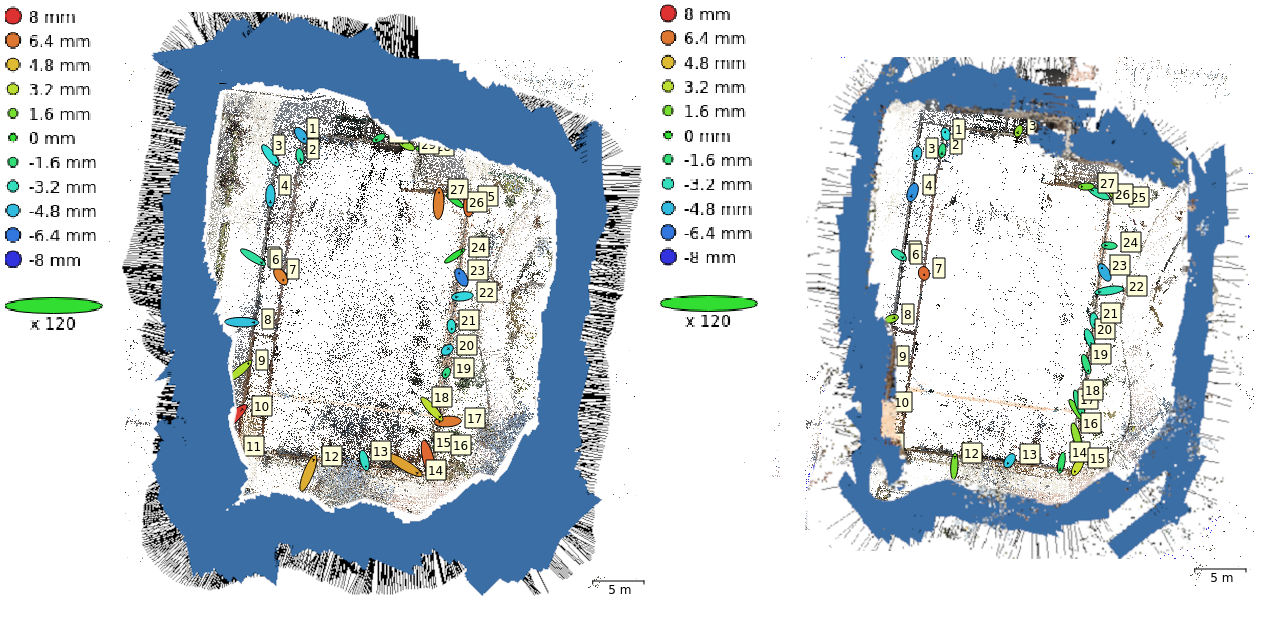

3.2. Simulation Experiments on a Building with Complex Shape

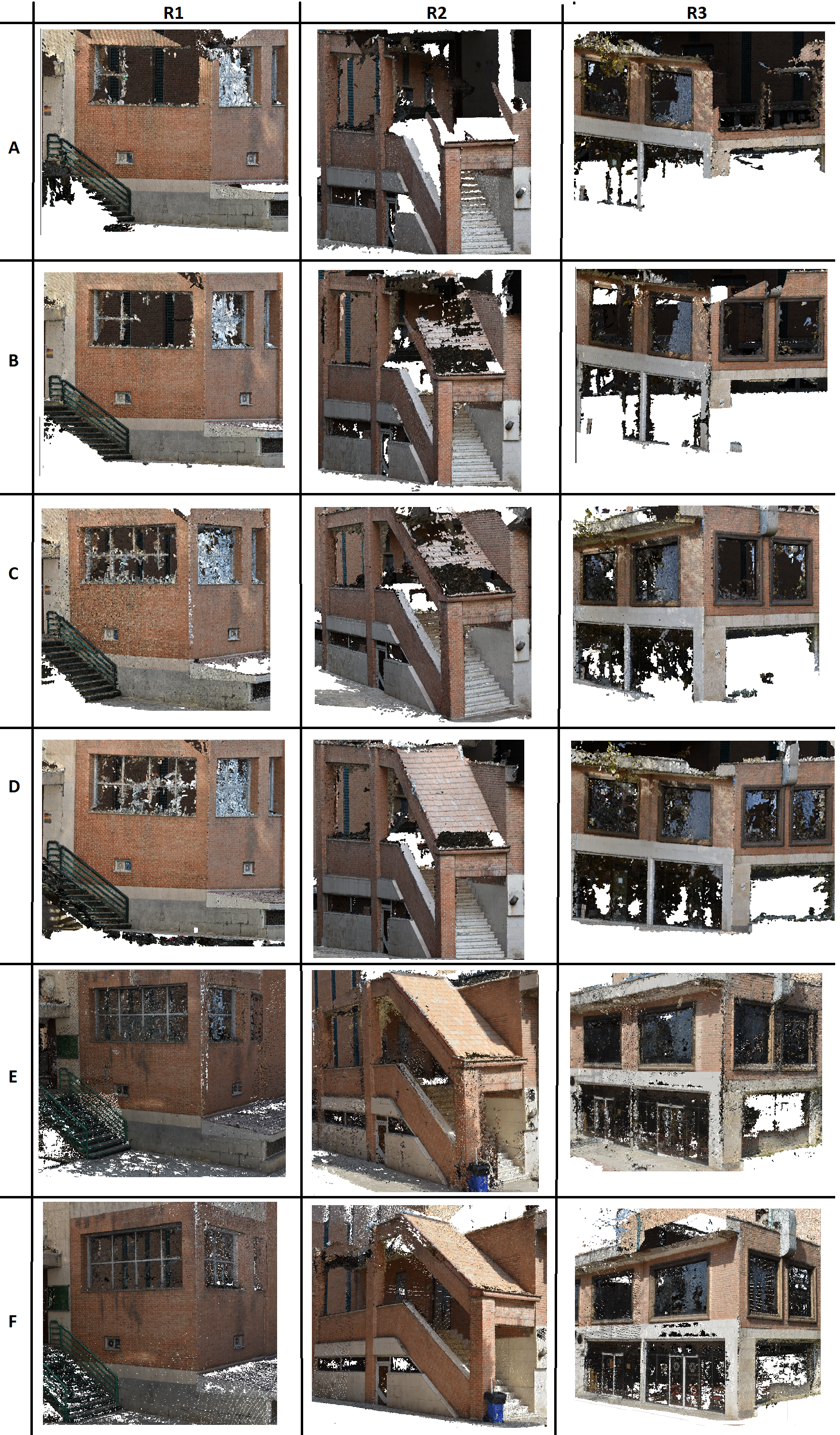

3.3. Real-World Experiments

4. Discussion

5. Conclusions

- Develop another imaging network for a UGV equipped with a digital camera mounted on a pan-tilt unit; so far, it was assumed that the robot is equipped with a camera fixed to the body of the robot, with no rotations allowed.

- Deploy the proposed imaging network on a mini-UAV; in this work, the top parts of the building were ignored (not seen) for 3D reconstruction purposes due to onboard camera limitations, whereas the fusion with UAV images would allow a complete survey of a building.

- Use the clustering and selection approach for key frame selection of video sequences for 3D reconstruction purposes.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Adán, A.; Quintana, B.; Prieto, S.; Bosché, F. An autonomous robotic platform for automatic extraction of detailed semantic models of buildings. Autom. Constr. 2020, 109, 102963.

- Al-Kheder, S.; Al-Shawabkeh, Y.; Haala, N. Developing a documentation system for desert palaces in Jordan using 3D laser scanning and digital photogrammetry. J. Archaeol. Sci. 2009, 36, 537–546.

- Valero, E.; Bosché, F.; Forster, A. Automatic Segmentation of 3D Point Clouds of Rubble Masonry Walls, and Its Application To Building Surveying, Repair and Maintenance. Autom. Constr. 2018, 96, 29–39.

- Macdonald, L.; Ahmadabadian, A.H.; Robson, S.; Gibb, I. High Art Revisited: A Photogrammetric Approach. In Electronic Visualisation and the Arts; BCS Learning and Development Limited: Swindon, UK, 2014; pp. 192–199.

- Noh, Z.; Sunar, M.S.; Pan, Z. A Review on Augmented Reality for Virtual Heritage System. In Transactions on Petri Nets and Other Models of Concurrency XV; von Koutny, M., Pomello, L., Kordon, F., Eds.; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2009; pp. 50–61.

- Nocerino, E.; Menna, F.; Remondino, F. Accuracy of typical photogrammetric networks in cultural heritage 3D modeling projects. ISPRS Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2014, 45, 465–472.

- Logothetis, S.; Delinasiou, A.; Stylianidis, E. Building Information Modelling for Cultural Heritage: A review. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 177–183.

- Remondino, F.; Nocerino, E.; Toschi, I.; Menna, F. A Critical Review of Automated Photogrammetric Processing Of Large Datasets. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 591–599.

- Amini, A.S.; Varshosaz, M.; Saadatseresht, M. Development of a New Stereo-Panorama System Based on off-The-Shelf Stereo Cameras. Photogramm. Rec. 2014, 29, 206–223.

- Watkins, S.; Burry, J.; Mohamed, A.; Marino, M.; Prudden, S.; Fisher, A.; Kloet, N.; Jakobi, T.; Clothier, R. Ten questions concerning the use of drones in urban environments. Build. Environ. 2020, 167, 106458.

- Alsadik, B.; Remondino, F. Flight Planning for LiDAR-Based UAS Mapping Applications. ISPRS Int. J. Geo Inf. 2020, 9, 378.

- Koch, T.; Körner, M.; Fraundorfer, F. Automatic and Semantically-Aware 3D UAV Flight Planning for Image-Based 3D Reconstruction. Remote Sens. 2019, 11, 1550.

- Hosseininaveh, A.; Robson, S.; Boehm, J.; Shortis, M. Stereo-Imaging Network Design for Precise and Dense 3d Reconstruction. Photogramm. Rec. 2014, 29, 317–336.

- Hosseininaveh, A.; Robson, S.; Boehm, J.; Shortis, M. Image selection in photogrammetric multi-view stereo methods for metric and complete 3D reconstruction. In Proceedings of the SPIE-The International Society for Optical Engineering, Munich, Germany, 23 May 2013; Volume 8791.

- Hosseininaveh, A.; Serpico, S.; Robson, M.; Hess, J.; Boehm, I.; Pridden, I.; Amati, G. Automatic Image Selection in Photogrammetric Multi-View Stereo Methods. In Proceedings of the International Symposium on Virtual Reality, Archaeology and Intelligent Cultural Heritage, Brighton, UK, 19–21 November 2012.

- Hosseininaveh, A.; Yazdan, R.; Karami, A.; Moradi, M.; Ghorbani, F. Clustering and selecting vantage images in a low-cost system for 3D reconstruction of texture-less objects. Measurement 2017, 99, 185–191.

- Vasquez-Gomez, J.I.; Sucar, L.E.; Murrieta-Cid, R.; Lopez-Damian, E. Volumetric Next-best-view Planning for 3D Object Reconstruction with Positioning Error. Int. J. Adv. Robot. Syst. 2014, 11, 159.

- Alsadik, B.; Gerke, M.; Vosselman, G. Automated Camera Network Design for 3D Modeling of Cultural Heritage Objects. J. Cult. Herit. 2013, 14, 515–526.

- Mahami, H.; Nasirzadeh, F.; Ahmadabadian, A.H.; Nahavandi, S. Automated Progress Controlling and Monitoring Using Daily Site Images and Building Information Modelling. Buildings 2019, 9, 70.

- Mahami, H.; Nasirzadeh, F.; Ahmadabadian, A.H.; Esmaeili, F.; Nahavandi, S. Imaging network design to improve the automated construction progress monitoring process. Constr. Innov. 2019, 19, 386–404.

- Palanirajan, H.K.; Alsadik, B.; Nex, F.; Elberink, S.O. Efficient Flight Planning for Building Façade 3d Reconstruction. ISPRS Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 495–502.

- Kriegel, S.; Bodenmüller, T.; Suppa, M.; Hirzinger, G. A surface-based Next-Best-View approach for automated 3D model completion of unknown objects. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4869–4874.

- Isler, S.; Sabzevari, R.; Delmerico, J.; Scaramuzza, D. An Information Gain Formulation for Active Volumetric 3D Reconstruction. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3477–3484.

- Monica, R.; Aleotti, J. Surfel-Based Next Best View Planning. IEEE Robot. Autom. Lett. 2018, 3, 3324–3331.

- Furukawa, Y. Clustering Views for Multi-View Stereo (CMVS). 2010. Available online: https://www.di.ens.fr/cmvs/ (accessed on 13 May 2021).

- Furukawa, Y.; Curless, B.; Seitz, S.M.; Szeliski, R. Towards Internet-scale multi-view stereo. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1434–1441.

- Agarwal, S.; Furukawa, Y.; Snavely, N.; Simon, I.; Curless, B.; Seitz, S.M.; Szeliski, R. Building Rome in a day. Commun. ACM 2011, 10, 105–112.

- Scott, W.R.; Roth, G.; Rivest, J.-F. View Planning for Automated Three-Dimensional Object Reconstruction and Inspection. ACM Comput. Surv. 2003, 35, 64–96.

- Hosseininaveh, A.A.; Sargeant, B.; Erfani, T.; Robson, S.; Shortis, M.; Hess, M.; Boehm, J. Towards Fully Automatic Reliable 3D Acquisition: From Designing Imaging Network to a Complete and Accurate Point Cloud. Robot. Auton. Syst. 2014, 62, 1197–1207.

- Vasquez-Gomez, J.I.; Sucar, L.E.; Murrieta-Cid, R. View Planning for 3D Object Reconstruction with a Mobile Manipulator Robot. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4227–4233.

- Fraser, S. Network Design Considerations for Non-Topographic Photogrammetry. Photogramm. Eng. Remote Sens. 1984, 50, 1115–1126.

- Tarbox, G.H.; Gottschlich, S.N. Planning for Complete Sensor Coverage in Inspection. Comput. Vis. Image Underst. 1995, 61, 84–111.

- Scott, W.R. Model-based view planning. Mach. Vis. Appl. 2007, 20, 47–69.

- Chen, S.; Li, Y. Automatic Sensor Placement for Model-Based Robot Vision. IEEE Trans. Syst. Man, Cybern. Part B 2004, 34, 393–408.

- Karaszewski, M.; Adamczyk, M.; Sitnik, R. Assessment of next-best-view algorithms performance with various 3D scanners and manipulator. ISPRS J. Photogramm. Remote Sens. 2016, 119, 320–333.

- Zhou, X.; Yi, Z.; Liu, Y.; Huang, K.; Huang, H. Survey on path and view planning for UAVs. Virtual Real. Intell. Hardw. 2020, 2, 56–69.

- Nocerino, E.; Menna, F.; Remondino, F.; Saleri, R. Accuracy and Block Deformation Analysis in Automatic UAV and Terrestrial Photogrammetry–Lesson Learnt. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 2, 203–208.

- Jing, W.; Polden, J.; Tao, P.Y.; Lin, W.; Shimada, K. View planning for 3D shape reconstruction of buildings with unmanned aerial vehicles. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–6.

- Zheng, X.; Wang, F.; Li, Z. A multi-UAV cooperative route planning methodology for 3D fine-resolution building model reconstruction. ISPRS J. Photogramm. Remote Sens. 2018, 146, 483–494.

- Almadhoun, R.; Abduldayem, A.; Taha, T.; Seneviratne, L.; Zweiri, Y. Guided Next Best View for 3D Reconstruction of Large Complex Structures. Remote Sens. 2019, 11, 2440.

- Mendoza, M.; Vasquez-Gomez, J.I.; Taud, H.; Sucar, L.E.; Reta, C. Supervised learning of the next-best-view for 3d object reconstruction. Pattern Recognit. Lett. 2020, 133, 224–231.

- Huang, R.; Zou, D.; Vaughan, R.; Tan, P. Active Image-Based Modeling with a Toy Drone. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1–8.

- Hepp, B.; Nießner, M.; Hilliges, O. Plan3d: Viewpoint and Trajectory Optimization for Aerial Multi-View Stereo Reconstruction. ACM Trans. Graph. 2018, 38, 1–17.

- Krause, A.; Golovin, D. Submodular Function Maximization. Tractability 2014, 3, 71–104.

- Roberts, M.; Shah, S.; Dey, D.; Truong, A.; Sinha, S.; Kapoor, A.; Hanrahan, P.; Joshi, N. Submodular Trajectory Optimization for Aerial 3D Scanning. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5334–5343.

- Smith, N.; Moehrle, N.; Goesele, M.; Heidrich, W. Aerial Path Planning for Urban Scene Reconstruction: A Continuous Optimization Method and Benchmark. ACM Trans. Graph. 2019, 37, 183.

- Arce, S.; Vernon, C.A.; Hammond, J.; Newell, V.; Janson, J.; Franke, K.W.; Hedengren, J.D. Automated 3D Reconstruction Using Optimized View-Planning Algorithms for Iterative Development of Structure-from-Motion Models. Remote Sens. 2020, 12, 2169.

- Yuhong. Robot Operating System (ROS) Tutorials (Indigo Ed.). 2018. Available online: http://wiki.ros.org/ROS/Tutorials (accessed on 26 June 2018).

- Gazebo. Gazebo Tutorials. 2014. Available online: http://gazebosim.org/tutorials (accessed on 9 March 2020).

- Gazebo. Tutorial: ROS Integration Overview. 2014. Available online: http://gazebosim.org/tutorials?tut=ros_overview (accessed on 9 March 2020).

- Husky. Available online: http://wiki.ros.org/husky_navigation/Tutorials (accessed on 27 June 2018).

- Grisetti, G.; Stachniss, C.; Burgard, W. Improved Techniques for Grid Mapping with Rao-Blackwellized Particle Filters. IEEE Trans. Robot. 2007, 23, 34–46.

- Wu, C. Visualsfm: A Visual Structure from Motion System. 2011. Available online: http://ccwu.me/vsfm/doc.html (accessed on 13 May 2021).

- Trimble Inc. Sketchup Pro 2016. 2016. Available online: https://www.sketchup.com/ (accessed on 3 November 2016).

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Hosseininaveh, A. Photogrammetric Multi-View Stereo and Imaging Network Design; University College London: London, UK, 2014.

- Ahmadabadian, H.; Robson, S.; Boehm, J.; Shortis, M.; Wenzel, K.; Fritsch, D. A Comparison of Dense Matching Algorithms for Scaled Surface Reconstruction Using Stereo Camera Rigs. ISPRS J. Photogramm. Remote Sens. 2013, 78, 157–167.

- Mousavi, V.; Khosravi, M.; Ahmadi, M.; Noori, N.; Haghshenas, S.; Hosseininaveh, A.; Varshosaz, M. The performance evaluation of multi-image 3D reconstruction software with different sensors. Measurement 2018, 120, 1–10.

- Agisoft PhotoScan Software. Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 30 January 2020).

- Grisetti, G.; Stachniss, C.; Burgard, W. Improving Grid-based SLAM with Rao-Blackwellized Particle Filters by Adaptive Proposals and Selective Resampling. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005.

- Hosseininaveh, A.; Remondino, F. An Autonomous Navigation System for Image-Based 3D Reconstruction of Façade Using a Ground Vehicle Robot. Autom. Constr. 2021. under revision.

| Constraint or symbol | Description |
|---|---|
| The maximum distance from the object: min(, , ) | |
| (mm) [56] | |
| F | The focal length of the camera (mm) |
| D | The maximum length of the object (mm) |
| K | The number of images in each station |
| Q | The design factor (between 0.4 and 0.7) |
| Sp | The expected relative precision (1/Sp) |
| The image measurement error (half a pixel size) (mm) | |
| (mm) | |
| DT | The expected minimum distance between two points in the final point cloud (mm) |
| Dt | The minimum distance between two recognizable points in the image (pixel) |
| Ires | The image resolution or pixel size (mm) |
| The angle between the ray coming from the camera and the surface plane (radians) | |
| (mm) [56] | |
| The field of view of the camera: | |
| Di | The maximum object length to be seen in the image (mm) |
| Hi | The minimum image frame size (mm) |
| The minimum distance from the object: max(, ) | |
| The minimum distance from the object by considering the depth of field: (mm) [56] | |
| DHF | The hyperfocal distance: (mm) |
| Fstop | The F number of the camera |
| C | The circle of confusion () |
| DZ | The camera focus distance ( obtained from Table 1) |
| (mm) [56] | |
| HO | The height of the object (mm) |
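The expressions for the hyperfocal distance, the depth-of-field limit and the field of view did not survive in the table above. As a hedged reminder, the standard thin-lens relations are given below using the table's symbols (F, Fstop, C, DZ, Hi); the symbol D_near for the near limit of the depth of field is introduced here for illustration, and these textbook forms are not necessarily the exact expressions used in [56].

```latex
% Standard thin-lens relations (textbook forms, assumed here)
D_{HF} = \frac{F^{2}}{F_{stop}\, C} + F
\qquad
D_{near} = \frac{D_{Z}\,\left(D_{HF} - F\right)}{D_{HF} + D_{Z} - 2F}
\qquad
FOV = 2 \arctan\!\left(\frac{H_{i}}{2F}\right)
```

When the focus distance is much larger than the focal length, the near limit is well approximated by D_HF · D_Z / (D_HF + D_Z).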

| | Centre Pointing | Façade Pointing | Hybrid | Centre & Façade Pointing |
|---|---|---|---|---|
| The Number of Initial Viewpoints | 2386 | 10,240 | 12,626 | ------ |
| The Number of Selected Viewpoints | 292 | 278 | 301 | 570 |
| The Number of Points in the Point Cloud | 9,175,444 | 11,156,211 | 10,648,205 | 11,630,850 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hosseininaveh, A.; Remondino, F. An Imaging Network Design for UGV-Based 3D Reconstruction of Buildings. Remote Sens. 2021, 13, 1923. https://doi.org/10.3390/rs13101923
