Cross-Examination of Similarity, Difference and Deficiency of Gauge, Radar and Satellite Precipitation Measuring Uncertainties for Extreme Events Using Conventional Metrics and Multiplicative Triple Collocation

Abstract

1. Introduction

- Evaluate the applicability of multiplicative triple collocation (MTC) to extreme events by cross-examining three products with traditional metrics;

- Further examine the stability of the MTC method's performance across multiple extreme events;

- Understand gauge rainfall product uncertainties in extreme events, which are often associated with splash-out, wind undercatch, and interpolation;

- Understand satellite quantitative precipitation estimate (QPE) uncertainties in extreme events, which are associated with signals that are only indirectly tied to surface precipitation and with coarse spatiotemporal resolution;

- Understand radar QPE uncertainties in extreme events, which are associated with incorrect Z-R formulations, non-weather signals, and inadequate sampling.

2. Materials and Methods

2.1. Study Domain

2.2. Datasets Description

2.3. TC Evaluations

2.3.1. Assumptions

2.3.2. Expressions
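
As a rough illustration of how an MTC estimate can be computed, the sketch below follows the commonly used covariance-based form of triple collocation applied to log-transformed rain rates (the multiplicative error model R_i = a_i T^{b_i} e^{ε_i} becomes linear after taking logarithms). It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `mtc`, the `min_rain` cutoff used to exclude zero-rain samples, and the clipping of noisy estimates are all illustrative choices, and the three inputs are assumed to be collocated series with mutually independent errors.

```python
import numpy as np


def mtc(x1, x2, x3, min_rain=1e-3):
    """Covariance-based triple collocation in log space (hypothetical helper).

    Returns (rmse, cc): per-product error standard deviation of the
    log-transformed rain rate and correlation with the unknown truth.
    """
    data = np.vstack([x1, x2, x3]).astype(float)

    # The logarithm is undefined for zero rain, so keep only samples where
    # all three products report rain above an assumed cutoff.
    valid = np.all(data > min_rain, axis=0)
    logs = np.log(data[:, valid])

    # 3x3 sample covariance matrix of the log-transformed products.
    q = np.cov(logs)

    # Error variance of each product: Q_ii - Q_ij * Q_ik / Q_jk.
    err_var = np.array([
        q[0, 0] - q[0, 1] * q[0, 2] / q[1, 2],
        q[1, 1] - q[0, 1] * q[1, 2] / q[0, 2],
        q[2, 2] - q[0, 2] * q[1, 2] / q[0, 1],
    ])

    # Squared correlation with the truth: Q_ij * Q_ik / (Q_ii * Q_jk).
    cc_sq = np.array([
        q[0, 1] * q[0, 2] / (q[0, 0] * q[1, 2]),
        q[0, 1] * q[1, 2] / (q[1, 1] * q[0, 2]),
        q[0, 2] * q[1, 2] / (q[2, 2] * q[0, 1]),
    ])

    # Sampling noise can push estimates slightly out of range; clip them.
    rmse = np.sqrt(np.clip(err_var, 0.0, None))
    cc = np.sqrt(np.clip(cc_sq, 0.0, 1.0))
    return rmse, cc
```

Because the estimates are expressed in log space, the returned RMSE is a multiplicative (relative) error measure rather than a value in mm/h, and the result is sensitive to how zero-rain samples are excluded.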

2.3.3. Data Preparation

2.4. Conventional Statistical Metrics

2.5. Hierarchical Evaluation

3. Results

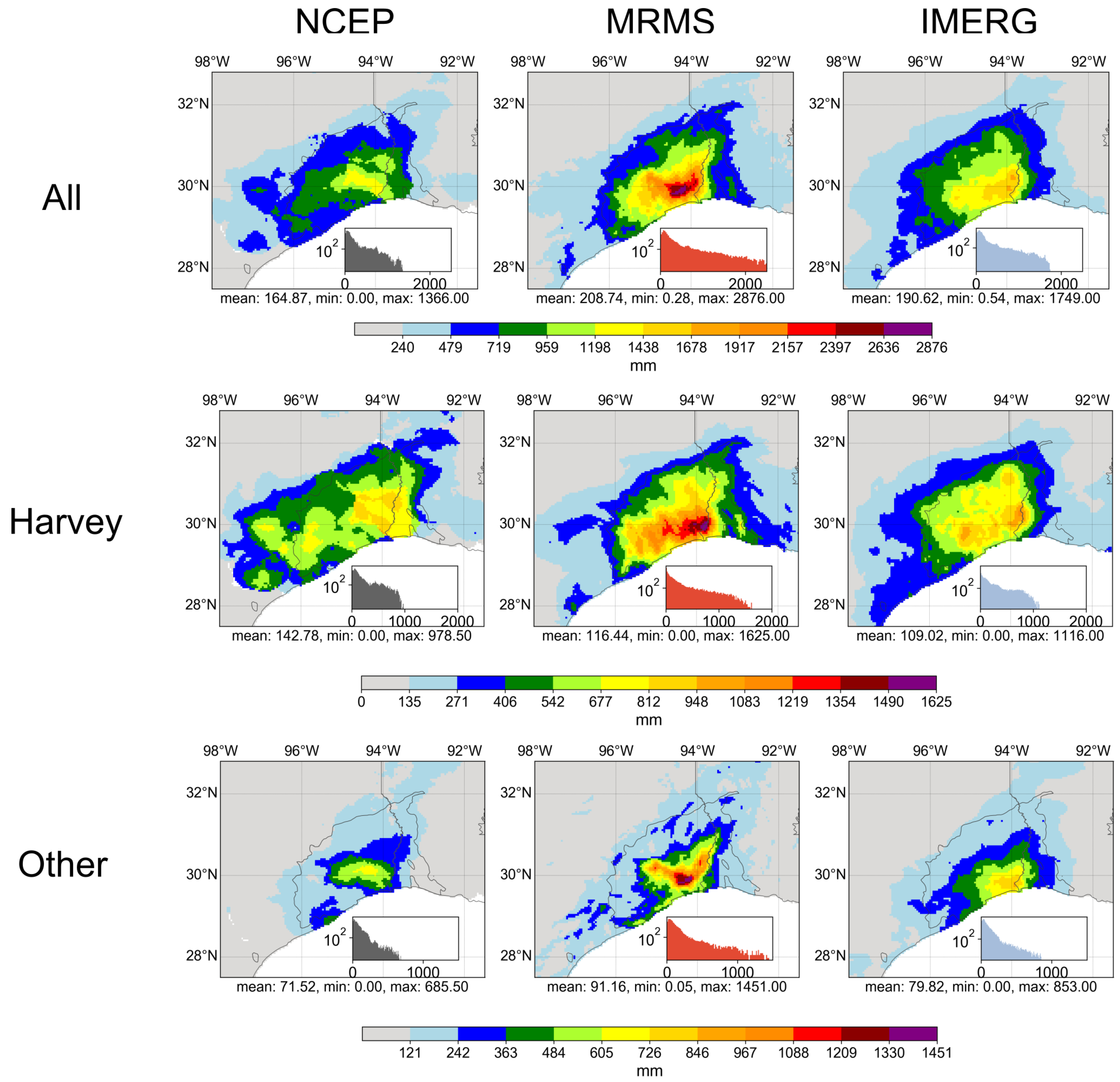

3.1. Cross-Events Comparison

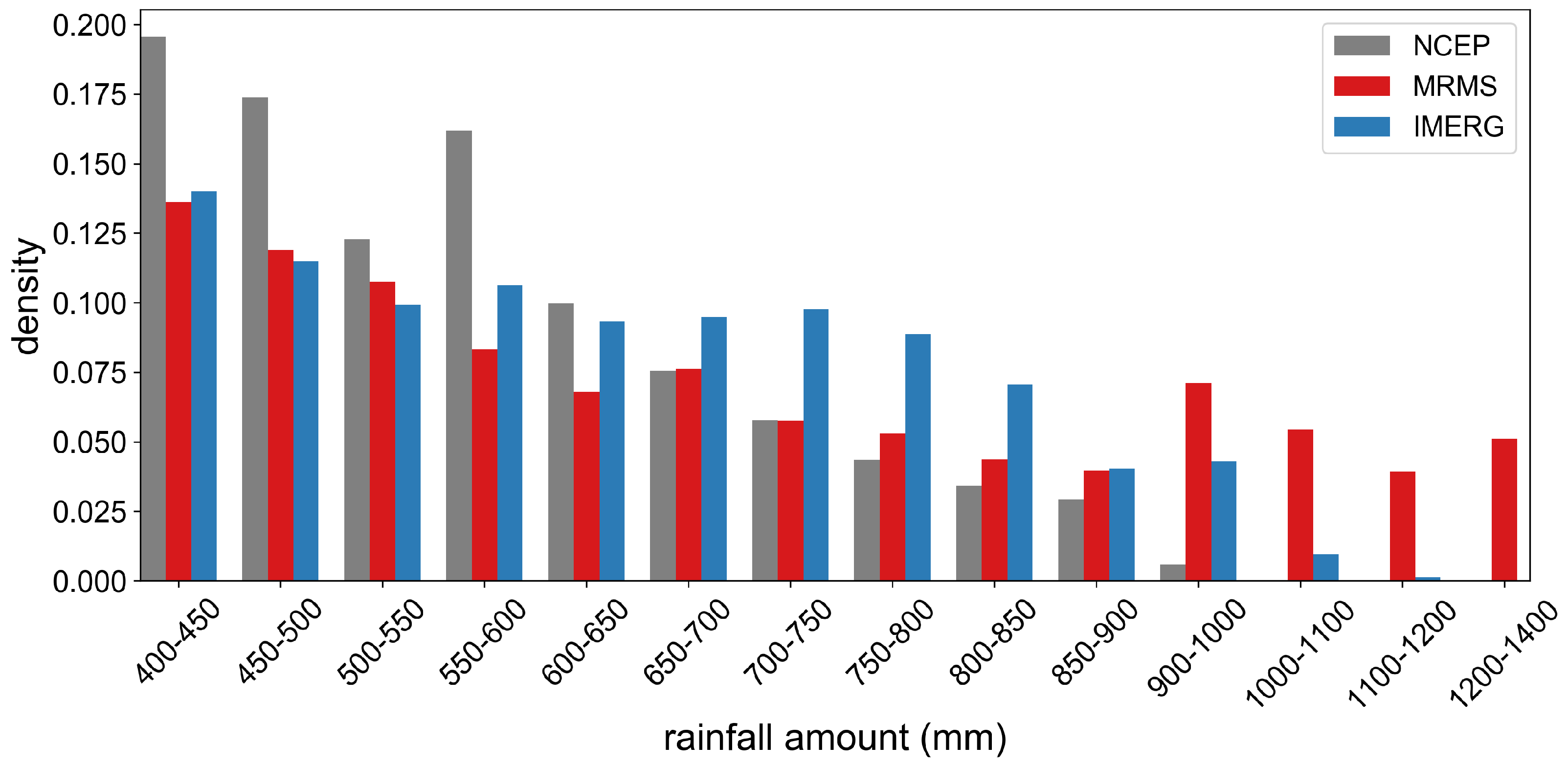

3.1.1. Conventional Inter-Comparison

3.1.2. MTC Comparison

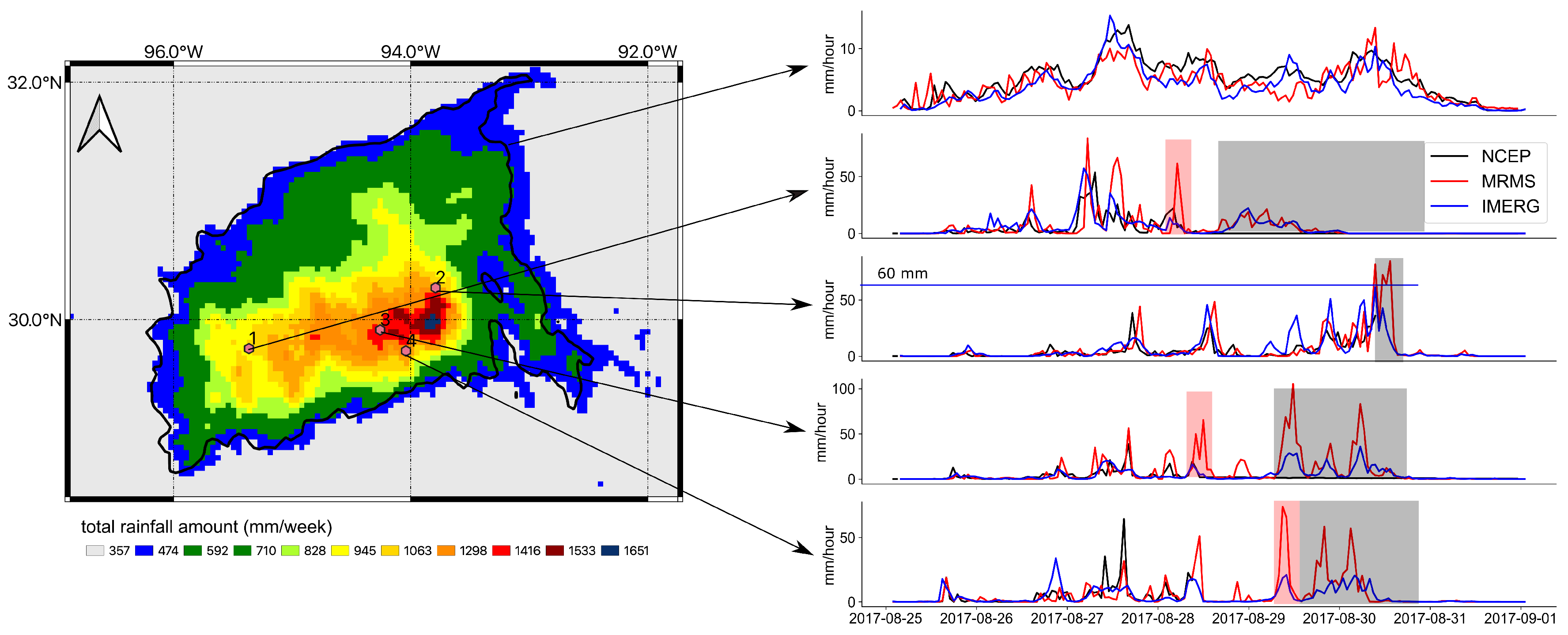

3.2. Hurricane Harvey Analysis

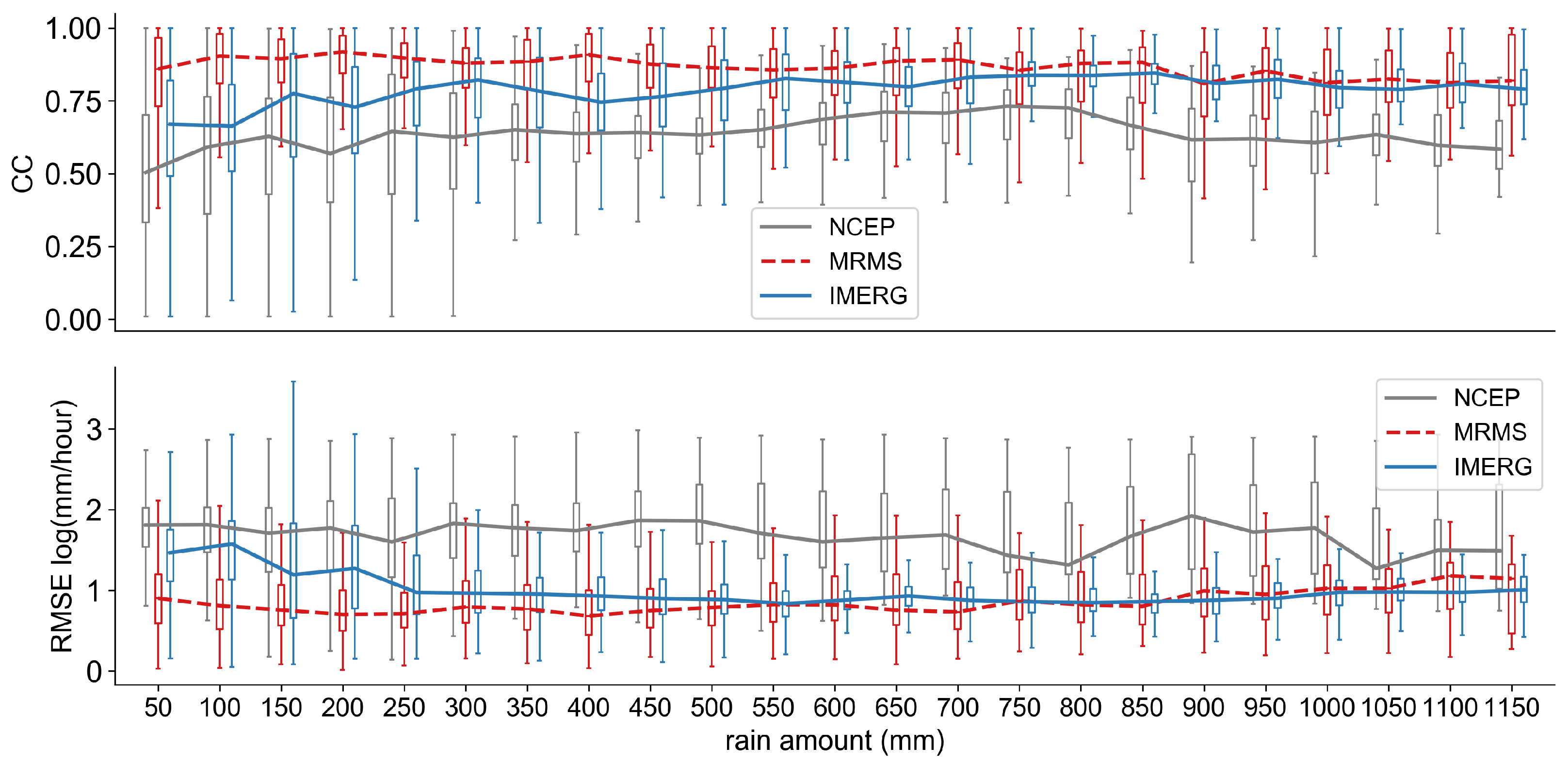

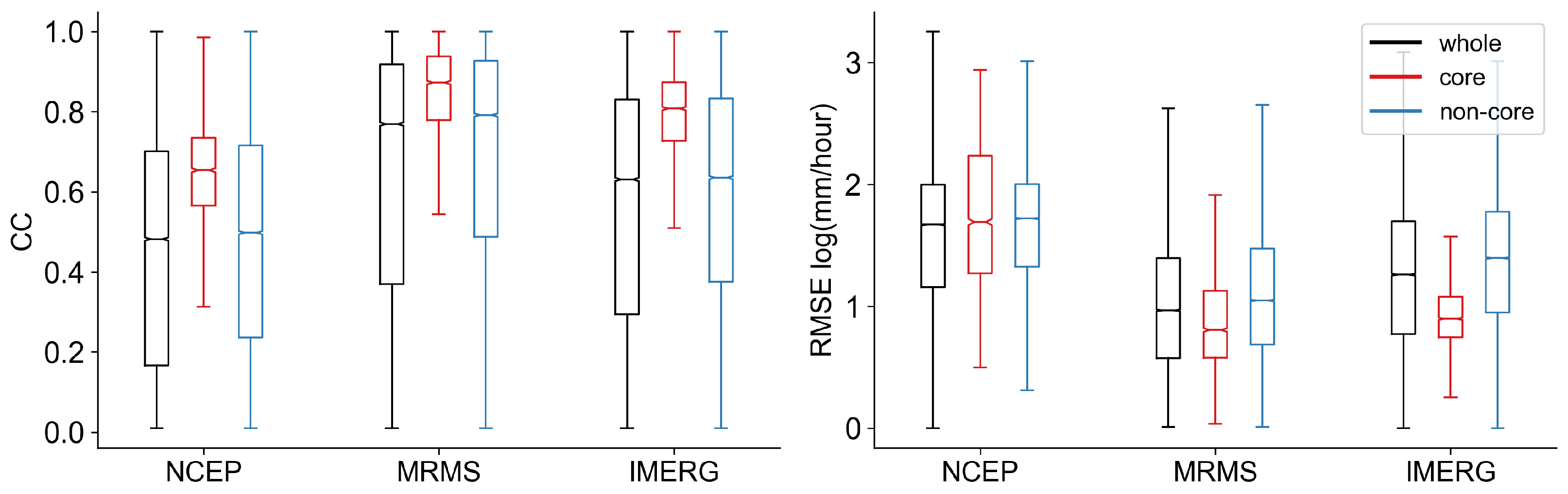

3.2.1. Conventional Inter-Comparison and MTC Results

3.2.2. Further Exploration of MTC Results

3.3. Storm Core of Harvey Event

4. Discussions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Hurricane/Storm | Start Date | End Date | Duration | Maximum Rainfall Amount |
|---|---|---|---|---|
| Harvey | 25 August 2017 | 31 August 2017 | 7 days | 1625 mm |
| Bill | 16 June 2015 | 18 June 2015 | 3 days | 496 mm |
| Cindy | 22 June 2017 | 23 June 2017 | 2 days | 233 mm |
| Imelda | 18 September 2019 | 21 September 2019 | 4 days | 1126 mm |

| Category | Metric | Equation | Best Value | Conditional Values |
|---|---|---|---|---|
| Continuous Indices | Correlation coefficient | | 1 | |
| Continuous Indices | RMS difference (RMSD) | | 0 | |
| Categorical Indices | POD | | 1 | |
| Categorical Indices | FAR | | 0 | |
| Categorical Indices | CSI | | 1 | |
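
The indices listed in the table above can be sketched with their standard textbook definitions, as below. This is an illustrative sketch, not the paper's code: the function names, the rain/no-rain `threshold`, and the choice of which product plays the role of reference in each pair are assumptions. POD, FAR, and CSI are taken here as the usual contingency-table scores (probability of detection, false alarm ratio, critical success index).

```python
import numpy as np


def continuous_metrics(est, ref):
    """Correlation coefficient and RMS difference between two products."""
    est = np.asarray(est, dtype=float)
    ref = np.asarray(ref, dtype=float)
    cc = np.corrcoef(est, ref)[0, 1]
    rmsd = np.sqrt(np.mean((est - ref) ** 2))
    return cc, rmsd


def categorical_metrics(est, ref, threshold=0.1):
    """POD, FAR and CSI from a rain/no-rain contingency table.

    `threshold` (same units as the inputs) is an assumed cutoff that
    separates rain from no rain.
    """
    est_rain = np.asarray(est, dtype=float) >= threshold
    ref_rain = np.asarray(ref, dtype=float) >= threshold

    hits = np.sum(est_rain & ref_rain)           # both report rain
    misses = np.sum(~est_rain & ref_rain)        # only the reference reports rain
    false_alarms = np.sum(est_rain & ~ref_rain)  # only the estimate reports rain

    def ratio(num, den):
        # Return NaN instead of dividing by zero when a score is undefined.
        return num / den if den > 0 else float("nan")

    pod = ratio(hits, hits + misses)
    far = ratio(false_alarms, hits + false_alarms)
    csi = ratio(hits, hits + misses + false_alarms)
    return pod, far, csi
```

Note that the categorical scores are not symmetric: swapping the estimate and the reference exchanges misses and false alarms, so POD and FAR change while CSI does not.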

| Metric | Product(s) | All | Harvey | Other |
|---|---|---|---|---|
| Max. total rain (mm) | NCEP | 1366 | 979 | 686 |
| Max. total rain (mm) | MRMS | 2876 | 1625 | 1451 |
| Max. total rain (mm) | IMERG | 1749 | 1116 | 853 |
| RMSD (mm/h) | NCEP/IMERG | 2.51 | 1.44 | 1.87 |
| RMSD (mm/h) | NCEP/MRMS | 3.08 | 1.55 | 2.54 |
| RMSD (mm/h) | IMERG/MRMS | 2.71 | 1.42 | 2.20 |
| Temporal CC | NCEP/IMERG | 0.52 | 0.48 | 0.48 |
| Temporal CC | NCEP/MRMS | 0.49 | 0.54 | 0.40 |
| Temporal CC | IMERG/MRMS | 0.62 | 0.56 | 0.60 |
| Spatial CC | NCEP/IMERG | 0.85 | 0.90 | 0.85 |
| Spatial CC | NCEP/MRMS | 0.80 | 0.87 | 0.87 |
| Spatial CC | IMERG/MRMS | 0.91 | 0.94 | 0.87 |
| POD | NCEP/IMERG | 0.64 | 0.52 | 0.56 |
| POD | NCEP/MRMS | 0.72 | 0.58 | 0.62 |
| POD | IMERG/MRMS | 0.70 | 0.69 | 0.62 |
| FAR | NCEP/IMERG | 0.28 | 0.47 | 0.39 |
| FAR | NCEP/MRMS | 0.29 | 0.39 | 0.42 |
| FAR | IMERG/MRMS | 0.19 | 0.41 | 0.27 |
| CSI | NCEP/IMERG | 0.52 | 0.44 | 0.41 |
| CSI | NCEP/MRMS | 0.57 | 0.52 | 0.43 |
| CSI | IMERG/MRMS | 0.60 | 0.51 | 0.51 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Z.; Chen, M.; Gao, S.; Hong, Z.; Tang, G.; Wen, Y.; Gourley, J.J.; Hong, Y. Cross-Examination of Similarity, Difference and Deficiency of Gauge, Radar and Satellite Precipitation Measuring Uncertainties for Extreme Events Using Conventional Metrics and Multiplicative Triple Collocation. Remote Sens. 2020, 12, 1258. https://doi.org/10.3390/rs12081258
