Abstract

Change detection (CD), one of the primary applications of multitemporal satellite images, is the process of identifying changes in the Earth’s surface occurring over a period of time using images of the same geographic area acquired on different dates. CD methods are divided into pixel-based change detection (PBCD) and object-based change detection (OBCD). Although PBCD is more popular owing to its simple algorithms and relatively easy quantitative analysis, applying it to very high resolution (VHR) images often results in misdetection or noise. For this reason, researchers have focused on extending PBCD results to OBCD maps for VHR images. In this paper, we propose a weighted Dempster-Shafer theory (wDST) fusion method that generates an OBCD map by combining multiple PBCD results. The proposed wDST approach automatically calculates and assigns a certainty weight to each object of a PBCD result according to the stability of the object. Moreover, when a PBCD result is extended to an OBCD result, the wDST method limits the tendency of the number of changed objects to decrease or increase by accounting for the ratio of changed pixels to the total number of pixels in the image. First, we performed co-registration between the VHR multitemporal images to minimize their geometric dissimilarity. Then, we segmented the co-registered pair of multitemporal VHR images. Three change intensity images were generated using change vector analysis (CVA), iteratively reweighted multivariate alteration detection (IRMAD), and principal component analysis (PCA). These three intensity images were used to generate different binary PBCD maps, which were then fused with the segmented image using the wDST to generate the OBCD map. Finally, the accuracy of the proposed CD technique was assessed using a manually digitized map. Two VHR multitemporal datasets were used to test the proposed approach.
Experimental results confirmed the superiority of the proposed method over existing PBCD methods and the OBCD method using the majority voting technique.

1. Introduction

With the development of various optical satellite sensors capable of acquiring very high resolution (VHR) images, such images have been used in a wide range of remote sensing applications. Among them, change detection (CD), the process of identifying changes in the surface of the Earth occurring over a period of time using images covering the same geographic area acquired on different dates, has proved to be a popular technique [1,2,3,4,5]. VHR imagery allows us to recognize and differentiate between various types of complex objects (e.g., buildings, trees, and roads) in an acquired image [6,7]. For VHR remotely sensed imagery, accurate CD results can be obtained thanks to abundant spatial and contextual information [8,9]. Applications such as urban expansion monitoring [10,11], changed building detection [12], forest observation [13], and flood monitoring [14] can benefit from CD using VHR multitemporal images.

Among the numerous CD techniques that have been developed, the most common and easiest to use is unsupervised pixel-based change detection (PBCD). It obtains information on land cover change by measuring the change in intensity through a pixel-wise comparison of multitemporal images. Image ratio, image difference, and change vector analysis are the representative and most popular PBCD approaches for obtaining change intensity images [15,16,17,18]. A binary threshold is then estimated to separate the pixels of the change intensity image into changed and unchanged classes [19].

However, the performance of PBCD algorithms may decrease when they are applied to multitemporal VHR imagery [20]. This is because PBCD takes an individual pixel as its basic unit without accounting for the spatial context of the image [21]. As a result, salt-and-pepper noise appears in the CD results owing to the heterogeneity of pixel-level semantic meaning and to misregistration between the VHR images [22]. Using an object, which is a group of spatially adjacent and spectrally similar pixels [23], instead of a pixel as the basic unit can be a solution to these problems in VHR image CD [24]. Object-based change detection (OBCD) extracts meaningful objects by segmenting the input images and is thus consistent with the original idea of using CD to identify differences in the state of an observed object or phenomenon [1,25,26].

Based on our review of the literature, OBCD methods can be categorized into two groups: (1) those fusing spatial features, which take into consideration the texture, shape, and topology features of the objects, in the change analysis [23,24,27]; and (2) those utilizing the object as the processing unit to improve the completeness and accuracy of the final result [28,29,30]. Many studies have focused on using the spatial features of the objects. For example, spatial and shape features were exploited together to enhance the ability of features to detect building changes [12]. In [11], an unsupervised approach for OBCD in urban environments was proposed with a focus on individual buildings using object-based difference features. To improve the accuracy of CD in urban areas using bi-temporal VHR remote sensing images, an OBCD scheme combining multiple features and ensemble learning has been proposed [24]. A rule-based approach based on spectral, spatial, and texture features was also introduced for detecting landslides [31]. However, combining the features is complicated in the rule-based approach, and well-developed software such as eCognition is required to handle features at the object level. Owing to the complexity of images and change patterns, it is also difficult to identify the proper features for improving the CD results. On the other hand, utilizing the object as the processing unit to combine PBCD results has recently been studied because of its simple and intuitive methodology. In [28], a traditional PBCD result was extended to an OBCD result using the majority voting technique, which determines whether an object has changed by calculating the ratio of changed to unchanged pixels within the object. As spatial resolution increases, the reflectance variability of individual objects in urban areas also increases, so no single PBCD method can achieve satisfactory performance.
Several PBCD results were therefore adaptively combined through object-level operations using the majority voting strategy [19,29,30]. However, when multiple CD results are fused, uncertainty remains the primary problem, because the multiple results reflect both the inaccuracy of each result and the conflict among different decisions.
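To make the object-level majority voting of [28] concrete, the sketch below extends one binary PBCD map to an OBCD map. It is a minimal NumPy illustration, not the implementation of [28]; it assumes that the segmentation is an integer label image aligned with a boolean PBCD map, and the function name `majority_vote_obcd` and the tie rule (ties count as unchanged) are our own assumptions.

```python
import numpy as np

def majority_vote_obcd(pbcd, segments):
    """Label each segment as changed if its changed pixels outnumber unchanged ones.

    pbcd     : bool array (H, W), True = changed pixel in the PBCD map
    segments : int array (H, W), non-negative segment label of each pixel
    Returns a bool array (H, W) holding one decision per segment.
    """
    labels = segments.ravel()
    changed = np.bincount(labels, weights=pbcd.ravel().astype(float))
    total = np.bincount(labels)
    # Strict majority; ties are treated as unchanged (assumption).
    # Labels absent from the image have total == 0 and are never looked up.
    is_changed = changed > (total - changed)
    return is_changed[segments]
```

With a single segment covering a scene in which changed pixels are a minority, every pixel ends up labeled unchanged, which is the object-size effect discussed later in this section.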

Recently, this problem was addressed by using Dempster-Shafer theory (DST) to fuse different CD results with the segmented image [32]. DST is a decision theory that effectively fuses multiple pieces of evidence (i.e., multiple CD results) from different sources [33,34,35,36]. One important advantage of DST is that it provides explicit estimates of the uncertainty between CD results from different data sources [37,38]. Thus, the fusion of CD results using DST aims to improve the reliability of decisions by exploiting complementary information while decreasing their uncertainty [39]. In DST, the most critical issue is how to select the uncertainty weight, which expresses the reliability of each PBCD result. However, in previous DST-based research, the uncertainty weight was selected empirically from accuracy assessments against manually digitized CD reference data [32]. Moreover, the same weight value was allocated to every object of a given PBCD result, although the CD decision was made at the object level. Because an equally allocated uncertainty weight ignores the properties of each object, it is unlikely to properly reflect the changes in VHR multitemporal images.

Another problem with the DST fusion and majority voting techniques arises because the changed areas in a dataset are generally small compared with the unchanged areas. Accordingly, changed regions detected in the PBCD result tend to be removed when the result is extended to the OBCD result, because the ratio of changed to unchanged pixels within an object is also likely to be small. This tendency becomes more severe as the segment size increases. For example, if the whole study area were a single segment, extending the PBCD to the OBCD using majority voting or DST fusion would yield no changed areas, because the number of changed pixels in the site is smaller than that of unchanged pixels. As the number of segments increases, this tendency weakens; however, the false changes caused by the PBCD methods also increase.

To minimize the problems caused by using the DST and majority voting techniques to fuse PBCD results, we propose a weighted DST (wDST) fusion method that extends PBCD results to OBCD. The method automatically calculates and assigns the certainty weight for each object of the PBCD result while considering the stability of the object. Moreover, the proposed wDST method can control the number of changed objects by considering the total numbers of changed and unchanged pixels extracted by each method. To this end, we first co-registered the multitemporal images to minimize geometric dissimilarity. Then, the co-registered multitemporal VHR images were segmented. Three change intensity images were generated using change vector analysis (CVA) [15], iteratively reweighted multivariate alteration detection (IRMAD) [40], and principal component analysis (PCA) [41]. These three intensity images were used to generate binary PBCD maps, which were then fused with the segmented image using the wDST to generate one OBCD map. Finally, the accuracy of the proposed CD technique was assessed using a manually digitized map. Two VHR multitemporal datasets were used to test the proposed approach. To verify the superiority of the proposed method, the results were compared with those obtained using existing PBCD methods and the OBCD method using the majority voting technique.

The contributions of this paper are as follows. First, the proposed CD method considers both spectral and spatial information by using the PBCD maps and the segmentation image, respectively. Second, fusing multiple PBCD methods can minimize the uncertainty associated with selecting a single method. Third, the proposed wDST fusion allocates the certainty weight of each object in each PBCD method according to the stability of the object. Moreover, the proposed method can achieve reliable CD results regardless of the object size, owing to a weight that controls the ratio of changed to unchanged pixels in the dataset. Finally, it is an unsupervised approach that requires no reference samples of changes.

The remaining sections of this paper are organized as follows. Section 2 describes the proposed method for the wDST-based OBCD. The experiments and their results are addressed in Section 3. Section 4 discusses the experimental results in detail. Finally, Section 5 concludes the paper.

2. Methodology

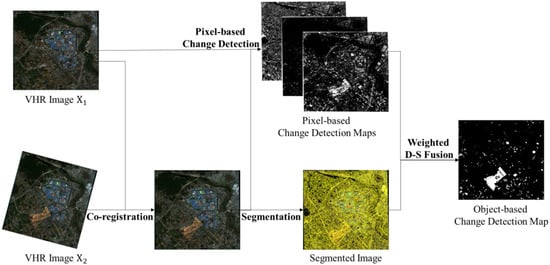

The overall process of the proposed CD approach is shown in Figure 1. Fine co-registration was performed as a preprocessing step to minimize the geometric dissimilarities between the VHR bi-temporal images [42]. Then, three PBCD methods, namely CVA, IRMAD, and PCA, were applied. In parallel, a segmentation image was generated from the images using the multiresolution segmentation technique. Finally, the wDST fusion was performed to extend the PBCD results to an OBCD map. Each step is explained in detail in the following subsections.

Figure 1.

Flowchart of the proposed approach.

2.1. Co-Registration

To perform effective and reliable CD, accurate geometric preprocessing such as orthorectification is required for multitemporal VHR images [43,44]. However, orthorectification requires ancillary data such as digital elevation models and ground control points [43]. Instead, we carried out co-registration between the VHR multitemporal images to minimize their geometric misalignment. Specifically, we exploited a phase-based correlation method in locally determined templates between the images to detect the conjugate points (CPs) used to construct the transformation model [45]. To detect well-distributed CPs over the multitemporal images, local templates were constructed over the entire image at a regular interval. The location of the corresponding template in the other image was determined from the coordinate information in the metadata. Then, phase correlation was conducted to find a similarity peak, whose position corresponds to the optimal translation between the corresponding local templates. The phase correlation method extracts the translation difference between images in the x and y directions [45,46] by searching in the frequency domain. Let $T_1$ and $T_2$ represent corresponding local template images of the multitemporal images that differ only by a translation $(\Delta x, \Delta y)$:

$$T_2(x, y) = T_1(x - \Delta x,\, y - \Delta y)$$

The phase correlation $PC$ between the two template images is calculated as:

$$PC(x, y) = \mathcal{F}^{-1}\left\{ \frac{\mathcal{F}(T_2)\,\mathcal{F}(T_1)^{*}}{\left| \mathcal{F}(T_2)\,\mathcal{F}(T_1)^{*} \right|} \right\}$$

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ are the 2D Fourier and 2D inverse Fourier transformations, respectively, and $^{*}$ denotes the complex conjugate. For an ideal translation, $PC$ is an impulse whose peak is located at $(\Delta x, \Delta y)$.

Since the location of the peak can be interpreted as the translation difference between the two corresponding local templates, we used the peak location of the phase correlation between the template images to extract well-distributed CPs. More specifically, the centroid of each local template in the reference image was selected as a CP. The corresponding CP in the other image was then obtained by shifting the centroid of the corresponding template by the translation at which the phase correlation showed the highest similarity value.
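The template matching described above can be sketched with NumPy's FFT routines. This is a minimal illustration, assuming two equally sized template arrays and an integer-pixel translation; the function name `phase_correlation_shift` is hypothetical, the shift is returned in (row, column) order, and the windowing and sub-pixel peak refinement that practical implementations often add are omitted.

```python
import numpy as np

def phase_correlation_shift(t1, t2):
    """Estimate the integer shift (dy, dx) such that t2 is t1 translated by (dy, dx).

    Computes PC = IFFT( F(t2) * conj(F(t1)) / |F(t2) * conj(F(t1))| ) and
    returns the location of its peak (wrapped to signed offsets) and the peak value.
    """
    f1 = np.fft.fft2(t1)
    f2 = np.fft.fft2(t2)
    cross = f2 * np.conj(f1)
    cross /= np.maximum(np.abs(cross), 1e-12)  # keep phase only; avoid division by zero
    pc = np.real(np.fft.ifft2(cross))
    peak = np.unravel_index(np.argmax(pc), pc.shape)
    # The DFT is circular: peaks beyond half the template size are negative shifts.
    shift = tuple(int(p) if p <= n // 2 else int(p) - n for p, n in zip(peak, pc.shape))
    return shift, float(pc.max())
```

For a template whose centroid is at (r, c) in the reference image, the conjugate point in the other image would then be (r + dy, c + dx) under this sign convention.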

After extracting the CPs, an improved piecewise linear (IPL) transformation [42] was used to warp the subject image to the coordinates of the reference image. IPL is an advanced version of the piecewise linear transformation, which constructs triangular regions from the corresponding CPs to warp one image onto the other. The IPL method specifically improves the co-registration performance outside the triangular regions by extracting additional pseudo-CPs along the boundary of the image to be warped. Interested readers can refer to [42] for details.

2.2. Image Segmentation

A segmentation image was generated using the multiresolution segmentation method built into the eCognition software for object-based analysis [47]. This technique creates polygonal objects by grouping pixels with a bottom-up strategy, starting from small image objects. In the beginning, highly correlated adjacent pixels are grown into segmented objects. The process selects random seed pixels that are best suited for potential merging and then maximizes the homogeneity within each object and the heterogeneity among different objects. This procedure repeats until all object conditions, which are controlled by three parameters (scale, shape versus color, and compactness), are satisfied. The scale parameter affects the segmentation size and is proportional to the size of the objects; as its value increases, the image is divided more coarsely. The shape parameter is a weight between the object shape and its spectral color: the smaller its value, the greater the influence of spectral characteristics on the segmentation. The compactness parameter relates to the ratio of the boundary to the area of the whole object. Among these, the scale parameter has a strong impact on the CD performance [48]. Therefore, we set the shape and compactness parameters to 0.1 and 0.5, respectively, and conducted the experiments while varying the scale parameter from 50 to 500 at an interval of 50 to find a reliable range of scale parameters for each CD dataset. The segmentation was carried out on the stacked image of all bands of the two co-registered images.

2.3. Pixel-Based Change Detection (PBCD)

Owing to the complexity of multitemporal VHR images, it is often difficult to obtain an accurate CD result from a single PBCD method. Therefore, we exploited three independent PBCD methods and fused their CD results using the wDST fusion method. Three popular and effective unsupervised PBCD methods were considered: CVA, IRMAD, and PCA.

The CVA method is a classical CD method and has been the foundation of numerous studies [15,49]. Change vectors were obtained by subtracting the spectral values of corresponding bands, and the change intensity image was calculated as the Euclidean norm of the change vectors:

$$I_{CVA} = \sqrt{\sum_{k=1}^{N} \left( Y_k - X_k \right)^2}$$

where $I_{CVA}$ is the change intensity of the CVA, and $X_k$ and $Y_k$ are the $k$-th bands ($k = 1, \ldots, N$) of the bi-temporal images $X$ and $Y$.
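A minimal NumPy sketch of the CVA change intensity follows, assuming both co-registered images are stored band-first as arrays of shape (N, H, W); the function name is illustrative.

```python
import numpy as np

def cva_intensity(x, y):
    """Change vector analysis magnitude.

    x, y : arrays of shape (N, H, W) holding the N co-registered bands
           of the two acquisition dates.
    Returns the (H, W) Euclidean norm of the per-pixel change vector y - x.
    """
    diff = y.astype(float) - x.astype(float)
    return np.sqrt(np.sum(diff ** 2, axis=0))
```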

The IRMAD method is based on canonical correlation analysis, which finds pairs of linear combinations of two sets of multivariate variables with the highest correlation [40]. The change intensity image of the IRMAD is calculated by the chi-squared distance as:

$$I_{IRMAD} = \sum_{k=1}^{N} \left( \frac{U_k - V_k}{\sigma_k} \right)^2$$

where $\sigma_k$ is the standard deviation of the $k$-th MAD band $U_k - V_k$. $U_k$ and $V_k$ can be derived as:

$$U_k = \mathbf{a}_k^{T} X, \qquad V_k = \mathbf{b}_k^{T} Y$$

where $\mathbf{a}_k$ and $\mathbf{b}_k$ are the transformation vectors calculated from the canonical correlation analysis, and $X$ and $Y$ are the bi-temporal images. During the iterations, IRMAD assigns high weights to likely unchanged pixels, which reduces the negative effect of changed pixels on the estimation until convergence.
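The iteration can be sketched as follows. This is a compact NumPy/SciPy illustration of the standard IRMAD scheme (weighted canonical correlation analysis, MAD variates, and chi-squared-based reweighting), assuming the two images are flattened to arrays of shape (N bands, P pixels); it is not the implementation used in this study, and the names `irmad`, `max_iter`, and `tol` are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh
from scipy.stats import chi2

def irmad(x, y, max_iter=30, tol=1e-6):
    """Iteratively reweighted MAD.

    x, y : float arrays of shape (N, P): N bands and P pixels for the two dates.
    Returns (chi_sq, rho): the per-pixel chi-squared change statistic and the
    canonical correlations of the last iteration (ascending order).
    """
    n_bands, n_pix = x.shape
    w = np.ones(n_pix)
    rho_old = np.zeros(n_bands)
    for _ in range(max_iter):
        # Weighted means and covariances; weights favour likely unchanged pixels.
        sw = w.sum()
        xc = x - (x * w).sum(axis=1, keepdims=True) / sw
        yc = y - (y * w).sum(axis=1, keepdims=True) / sw
        sxx = (xc * w) @ xc.T / sw
        syy = (yc * w) @ yc.T / sw
        sxy = (xc * w) @ yc.T / sw
        # CCA as a generalized eigenproblem: Sxy Syy^-1 Syx a = rho^2 Sxx a.
        syy_inv_syx = np.linalg.solve(syy, sxy.T)
        lhs = sxy @ syy_inv_syx
        lhs = (lhs + lhs.T) / 2  # symmetrize against round-off
        eigvals, a = eigh(lhs, sxx)  # columns satisfy a^T Sxx a = 1
        rho = np.sqrt(np.clip(eigvals, 0.0, 1.0))
        b = syy_inv_syx @ a / np.maximum(rho, 1e-12)  # b^T Syy b = 1
        # MAD variates U_k - V_k have variance 2 (1 - rho_k).
        mad = a.T @ xc - b.T @ yc
        var = np.maximum(2.0 * (1.0 - rho), 1e-12)
        chi_sq = np.sum(mad ** 2 / var[:, None], axis=0)
        # New weights: probability of no change under a chi-squared(N) model.
        w = chi2.sf(chi_sq, df=n_bands)
        if np.max(np.abs(rho - rho_old)) < tol:
            break
        rho_old = rho
    return chi_sq, rho
```

After reshaping to (H, W), the returned chi-squared statistic plays the role of the IRMAD change intensity image.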

The PCA-based CD method analyzes the images using their absolute-valued difference image. After applying a non-overlapping mask of size h × h (h was set to 4 in this study) to the difference image, eigenvectors are extracted using PCA. A feature vector for each pixel of the difference image is then extracted by projecting the mask data around the pixel onto the eigenvector space. Then, a change intensity is calculated as:

where $\mathbf{e}$ is the eigenvector of the covariance matrix and $\bar{x}$ is the average pixel value.
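The block-wise PCA step can be sketched as below. Because the exact intensity formula is not reproduced here, the sketch makes two labeled assumptions: it keeps a fixed number of leading eigenvectors (`n_components`), and it reduces each pixel's projected feature vector to a scalar intensity with the Euclidean norm. Only the block size h = 4 comes from the text; the function name is hypothetical.

```python
import numpy as np

def pca_change_intensity(diff, h=4, n_components=3):
    """Sketch of a PCA-based change intensity for an absolute difference image.

    diff         : (H, W) absolute difference image.
    h            : block / window size (h = 4 in the text).
    n_components : number of leading eigenvectors kept (an assumption).
    """
    H, W = diff.shape
    # Non-overlapping h x h blocks form the rows of the PCA data matrix.
    hb, wb = H // h, W // h
    blocks = (diff[:hb * h, :wb * h]
              .reshape(hb, h, wb, h)
              .swapaxes(1, 2)
              .reshape(-1, h * h))
    mean = blocks.mean(axis=0)
    cov = np.cov(blocks, rowvar=False)           # np.cov centres the data itself
    _, eigvecs = np.linalg.eigh(cov)             # eigenvalues in ascending order
    basis = eigvecs[:, ::-1][:, :n_components]   # leading eigenvectors
    # h x h window starting at every pixel (edge padding at the borders).
    padded = np.pad(diff, ((0, h - 1), (0, h - 1)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (h, h))
    feats = (windows.reshape(H, W, h * h) - mean) @ basis
    # HYPOTHETICAL reduction to a scalar: Euclidean norm of each feature vector.
    return np.linalg.norm(feats, axis=-1)
```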

The values of the three change intensity images are then normalized to [0, 1]. Finally, the Otsu threshold was applied to each normalized change intensity image to obtain binary PBCD maps that label each pixel as changed or unchanged.
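Normalization and Otsu thresholding can be sketched with NumPy alone. This is a minimal histogram-based implementation, assuming 256 bins, that labels pixels at or above the threshold as changed; the function names are illustrative, and a library routine such as `skimage.filters.threshold_otsu` could be used instead.

```python
import numpy as np

def normalize01(img):
    """Min-max normalize a change intensity image to [0, 1]."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)

def otsu_threshold(img01, bins=256):
    """Otsu's threshold on a [0, 1] image: maximize the between-class variance."""
    hist, edges = np.histogram(img01, bins=bins, range=(0.0, 1.0))
    p = hist / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(p)               # weight of the lower (unchanged) class
    mu = np.cumsum(p * centers)     # cumulative first moment
    mu_t = mu[-1]                   # global mean
    denom = w0 * (1.0 - w0)
    between = np.zeros_like(denom)
    valid = denom > 1e-12
    between[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / denom[valid]
    k = int(np.argmax(between))
    return edges[k + 1]             # upper edge of the last "unchanged" bin

def binarize(intensity):
    """Binary PBCD map: True where the normalized intensity is >= the Otsu threshold."""
    img01 = normalize01(intensity)
    return img01 >= otsu_threshold(img01)
```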

2.4. Weighted D-S Theory (wDST) Fusion

Owing to the complexity of VHR images, no single method can provide a consistent means of detecting landscape changes. To improve the CD performance and decrease the uncertainty arising from the selection of a single CD technique, a criterion that combines the complementary information of the three PBCD maps is needed. To this end, wDST was utilized in the proposed method. In [32], DST was used to fuse multiple PBCD maps. However, the certainty weight of each PBCD map, which is the fundamental DST parameter used in calculating change, no change, and uncertainty, was set manually. In addition, the same certainty weight was allocated to all objects of a given PBCD map without considering the objects’ properties. To solve these problems and further enhance the CD performance, we automatically allocated the certainty weight to each segmented object according to the homogeneity of the change intensity images.

The wDST, which is based on the same concept as the DST, measures the probability of an event by fusing the basic probability assignment functions (BPAFs) of the individual PBCD maps [36,50]. Let $\Theta$ denote the space of hypotheses; in CD applications, $\Theta$ is the set of hypotheses about change and no change, and its power set is $2^{\Theta}$. For a subset $A$ of $\Theta$, $m(A)$ indicates the BPAF of $A$, representing the degree of belief in $A$. The BPAF satisfies the following constraints [32]:

$$m(\emptyset) = 0, \qquad 0 \le m(A) \le 1, \qquad \sum_{A \subseteq \Theta} m(A) = 1$$

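Assuming that we have $n$ independent PBCD maps, $m_i$ indicates the BPAF computed from the $i$-th PBCD map, $i = 1, \ldots, n$. The fused BPAF $m(A)$, which denotes the probability of $A$ obtained by fusing the probabilities of the maps, is then computed with Dempster's rule of combination:

$$m(A) = \frac{1}{1 - K} \sum_{A_1 \cap \cdots \cap A_n = A} \; \prod_{i=1}^{n} m_i(A_i), \qquad A \neq \emptyset$$

$$K = \sum_{A_1 \cap \cdots \cap A_n = \emptyset} \; \prod_{i=1}^{n} m_i(A_i)$$

where $K$ measures the degree of conflict among the PBCD maps.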

In the CD problem, the space of hypotheses is $\Theta = \{U, C\}$, where $U$ indicates no change and $C$ indicates change. Therefore, the three nonempty subsets of $\Theta$ are $\{U\}$, $\{C\}$, and $\{U, C\}$, which represent no change, change, and uncertainty, respectively. The BPAFs of the three PBCD maps, generated by the CVA, IRMAD, and PCA methods, are computed at the object level using the segmentation image. For each object $j$ in the VHR images, the BPAFs of $\{U\}$, $\{C\}$, and $\{U, C\}$ for PBCD map $i$ are defined as [32]:

where $N_u^{ij}$ and $N_c^{ij}$ indicate the numbers of unchanged and changed pixels in object $j$ of PBCD map $i$, respectively, $N^{j}$ indicates the total number of pixels in object $j$, $\omega_i$ is a weight that controls the BPAFs of change and no change in PBCD map $i$, and $\beta_i^j$ measures the certainty weight of PBCD map $i$ in object $j$. If $\beta_i^j$ is large, the BPAF of uncertainty will be small. The main difference between the wDST and the DST lies in the parameters $\omega_i$ and $\beta_i^j$.
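The fusion of per-object BPAFs can be sketched on the frame {U, C}, with each BPAF stored as a triple (m_U, m_C, m_UC). Dempster's rule itself is standard; the per-object BPAF below, however, is a hypothetical form assumed only for illustration (pixel ratios scaled by a certainty weight, with the remainder assigned to uncertainty) and is not the exact definition of [32] or of the wDST.

```python
from functools import reduce

def object_bpaf(n_unchanged, n_changed, beta):
    """HYPOTHETICAL per-object BPAF (m_U, m_C, m_UC) for one PBCD map.

    Assumed form for illustration only: the within-object pixel ratios are
    scaled by the certainty weight beta, and 1 - beta goes to {U, C}.
    """
    n = n_unchanged + n_changed
    return (beta * n_unchanged / n, beta * n_changed / n, 1.0 - beta)

def dempster_combine(m1, m2):
    """Dempster's rule on the frame {U, C}; each mass is (m_U, m_C, m_UC)."""
    u1, c1, t1 = m1
    u2, c2, t2 = m2
    conflict = u1 * c2 + c1 * u2  # K: mass falling on the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: the sources cannot be combined")
    norm = 1.0 - conflict
    m_u = (u1 * u2 + u1 * t2 + t1 * u2) / norm
    m_c = (c1 * c2 + c1 * t2 + t1 * c2) / norm
    m_t = (t1 * t2) / norm
    return (m_u, m_c, m_t)

def fuse(masses):
    """Combine any number of sources pairwise (Dempster's rule is associative)."""
    return reduce(dempster_combine, masses)
```

For one object, calling `fuse` on the three triples obtained from the CVA, IRMAD, and PCA maps would give the fused masses of no change, change, and uncertainty; a fully uncertain source (0, 0, 1) leaves the other sources unchanged.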

The easiest way to determine the certainty weight is to calculate CD accuracies against a manually digitized reference map while varying its value [32]. However, such a reference map is difficult to construct in practical CD applications, and finding the optimal parameter values through repeated experiments is time-consuming. Therefore, we propose an approach that automatically allocates the weight according to the stability of the change intensity of each object. The certainty weight is calculated as:

where $\sigma_i^j$ indicates the standard deviation of the change intensity image of PBCD method $i$ within object $j$. If the change intensity is homogeneous within the object, the certainty weight is large, and vice versa. Accordingly, the certainty weight is calculated automatically while considering the stability of the change intensity of each object.
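As an illustration of an object-wise certainty weight, the sketch below computes the standard deviation of a [0, 1] change intensity image within each segment and maps it to a weight. The mapping 1 - 2σ is a hypothetical choice, not the paper's formula; it is used here only because the standard deviation of values in [0, 1] cannot exceed 0.5, so the weight stays in [0, 1] and decreases as an object becomes less homogeneous.

```python
import numpy as np

def object_std(intensity, segments):
    """Per-segment standard deviation of a change intensity image."""
    labels = segments.ravel()
    vals = intensity.ravel().astype(float)
    n = np.maximum(np.bincount(labels), 1)          # avoid division by zero
    mean = np.bincount(labels, weights=vals) / n
    mean_sq = np.bincount(labels, weights=vals ** 2) / n
    return np.sqrt(np.clip(mean_sq - mean ** 2, 0.0, None))  # clip round-off

def certainty_weight(intensity, segments):
    """HYPOTHETICAL certainty weight per segment label: 1 - 2 * std, clipped to [0, 1].

    Homogeneous objects get weights near 1; maximally mixed objects get 0.
    """
    return np.clip(1.0 - 2.0 * object_std(intensity, segments), 0.0, 1.0)
```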

Apart from the setting of the certainty weight, another problem of the general DST is caused by the fact that the changed areas are relatively small compared with the unchanged areas in most CD cases. This implies that extending the PBCD to the OBCD using the DST or majority voting is likely to reduce the changed areas, because those fusion methods count the numbers of changed and unchanged pixels within each segment and use their ratio to determine whether the object has changed. The larger the objects become, the larger the portion of the changed areas that is removed.

To minimize the tendency of the changed areas to shrink as the object size increases, we allocate the weight $\omega_i$ of the BPAF in PBCD map $i$ as:

where $N_c^{i}$ and $N_u^{i}$ indicate the numbers of changed and unchanged pixels extracted in PBCD map $i$, respectively. The weight $\omega_i$ reduces the effect of the BPAF of the class occupying large areas (generally the unchanged regions, although it can also control the opposite case depending on the scene). The final decision that an object is changed is made when the following rule is satisfied [32]:

where the fused BPAFs $m(\{U\})$, $m(\{C\})$, and $m(\{U, C\})$ of no change, change, and uncertainty are calculated by Equations (9) and (10). In this way, the three PBCD maps are fused by considering the object information and their uncertainty, regardless of the object size.
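The role of the class-balancing weight can be illustrated with a hypothetical form. The sketch below assumes that the weight is the scene-wide ratio of changed to unchanged pixels in a PBCD map and that it rescales the unchanged count of each object before the pixel ratios are formed; both choices are our assumptions, not the paper's equations, and the function names are illustrative.

```python
import numpy as np

def class_weight(pbcd):
    """HYPOTHETICAL omega_i: changed-to-unchanged pixel ratio of a whole PBCD map.

    omega < 1 when changes are rare (the usual case); omega > 1 when they dominate.
    """
    n_c = int(np.count_nonzero(pbcd))
    n_u = pbcd.size - n_c
    return n_c / max(n_u, 1)

def weighted_object_bpaf(n_unchanged, n_changed, beta, omega):
    """HYPOTHETICAL wDST-style BPAF (m_U, m_C, m_UC) for one object.

    The unchanged count is rescaled by omega before the pixel ratios are formed,
    so an object is judged relative to the scene-wide class imbalance.
    """
    eff_u = omega * n_unchanged
    total = eff_u + n_changed
    if total == 0:
        return (0.0, 0.0, 1.0)  # no evidence: all mass on uncertainty
    return (beta * eff_u / total, beta * n_changed / total, 1.0 - beta)
```

In this illustration, an object with 20% changed pixels in a map where 10% of all pixels are changed receives more change mass than no-change mass, whereas with omega = 1 the same object would be dominated by its unchanged pixels.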

3. Experimental Results

3.1. Experiments on the First Dataset

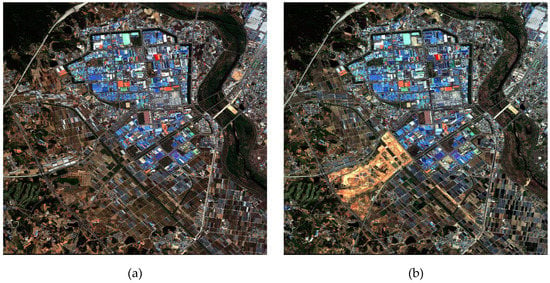

Experiments were conducted to evaluate the performance of the proposed wDST-based OBCD method. WorldView-3 multispectral images with a spatial resolution of 1.24 m were employed to construct the first dataset. The images were acquired over Gwangju city, South Korea. The area includes industrial areas, residences, agricultural lands, rivers, and changed regions related to large-scale urban development. The two images were acquired on 26 May 2017 and 4 May 2018, respectively. They were co-registered by applying the phase-based correlation method [45] with IPL transformation warping [42]. After co-registration, the images consisted of 4717 × 4508 pixels (Figure 2). We did not pansharpen the multispectral images, because their spatial resolution is high enough to describe the scene in detail, especially for binary CD as opposed to multiple-change detection [51,52,53].

Figure 2.

Bitemporal WorldView-3 imagery acquired over Gwangju city, South Korea on (a) 26 May 2017 and (b) 4 May 2018.

Four multispectral bands (i.e., blue, green, red, and near-infrared) of the images were used in the CD procedure. The PBCD results obtained by the CVA, IRMAD, and PCA methods were fused into the OBCD map by the wDST. For the wDST fusion, the segmentation image was generated with a scale parameter of 500. To evaluate the performance of the proposed OBCD method, the three PBCD results (i.e., CVA [15], IRMAD [40], and PCA [41]) prior to fusion and their OBCD extensions using the majority voting technique [28] were generated for comparison. Additionally, the three PBCD results were combined with the dual majority voting technique to generate an OBCD result [29]. All OBCD results were generated with the same segmentation image as the proposed method (i.e., scale, shape, and compactness parameter values of 500, 0.1, and 0.5, respectively).

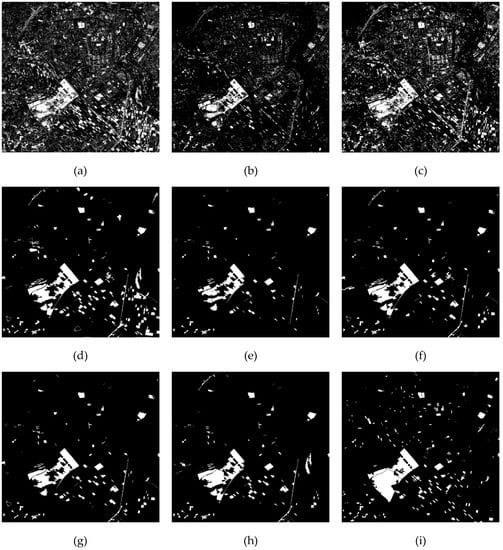

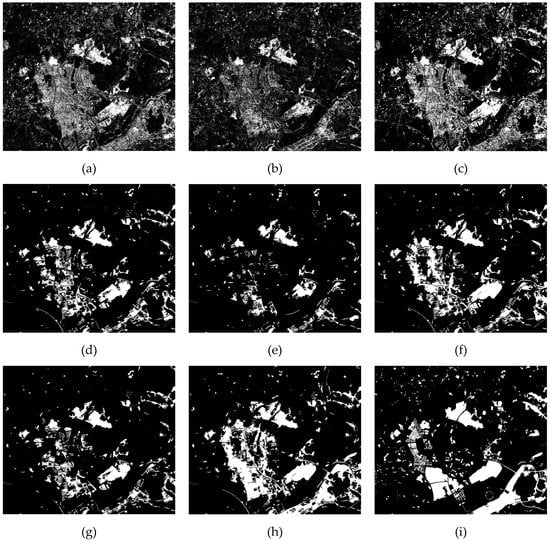

The generated CD results and the reference map manually digitized by image analysis experts for accuracy assessment are presented in Figure 3. The reference map contains 1,424,917 changed and 19,839,319 unchanged pixels. As the PBCD results show (Figure 3a–c), salt-and-pepper noise-like changed pixels were mistakenly detected. This noise tended to be reduced when the results were extended to the OBCD (Figure 3d–f). The fused results obtained with the majority voting and the proposed wDST techniques were even more effective in removing the noise (Figure 3g,h), and they were visually more similar to the reference map. The OBCD results over dramatically changed regions are magnified in Figure 4 to compare CD performance.

Figure 3.

Results of change detection in the first dataset: (a) pixel-based change detection (PBCD) using change vector analysis (CVA), (b) PBCD using iteratively reweighted-multivariate alteration detection (IRMAD), (c) PBCD using principal component analysis (PCA), (d) object-based change detection (OBCD) using CVA, (e) OBCD using IRMAD, (f) OBCD using PCA, (g) OBCD using majority voting, (h) OBCD using weighted Dempster-Shafer theory (wDST) and (i) reference map.

Figure 4.

Results of magnified object-based change detection in the first dataset: (a) image acquired on 26 May 2017, (b) image acquired on 4 May 2018, (c) OBCD using CVA, (d) OBCD using IRMAD, (e) OBCD using PCA, (f) OBCD using majority voting, (g) OBCD using wDST and (h) reference map.

To evaluate CD performance quantitatively, the false alarm rate (FAR), missed rate (MR), overall accuracy (OA), kappa coefficient, and F1-score were calculated for each generated CD result against the reference map. FAR is the number of false alarms divided by the total number of unchanged samples in the reference map. MR is the number of missed detections divided by the number of changed samples in the reference map. OA is the proportion of samples whose CD labels agree with the reference map. The kappa coefficient measures the agreement between the CD result and the reference map beyond that expected by chance, and it is more reliable than OA given the imbalance between changed and unchanged reference samples. The F1-score is a comprehensive measure widely used for detection problems in computer vision. It is the harmonic mean of precision and recall. Precision can be seen as a measure of exactness or quality, whereas recall is a measure of completeness or quantity. In simple terms, high precision means that an algorithm returned substantially more relevant results than irrelevant ones, while high recall means that it returned most of the relevant results.
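The five measures can be computed from confusion-matrix counts as sketched below, with kappa using the chance agreement implied by the marginal totals; the function name and dictionary keys are illustrative.

```python
def cd_metrics(tp, fp, fn, tn):
    """Binary change-detection accuracy measures from confusion-matrix counts.

    tp: changed in both result and reference; fp: false alarms;
    fn: missed changes; tn: unchanged in both.
    """
    n = tp + fp + fn + tn
    far = fp / (fp + tn)  # false alarms / unchanged reference samples
    mr = fn / (tp + fn)   # missed detections / changed reference samples
    oa = (tp + tn) / n
    # Agreement expected by chance, from the row and column totals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"FAR": far, "MR": mr, "OA": oa, "kappa": kappa, "F1": f1}
```

For context, changed pixels make up about 6.7% of the first dataset's reference map (1,424,917 of 21,264,236 pixels), an imbalance under which OA alone can look high even for a poor change map.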

The numerical evaluation for the first dataset is presented in Table 1, with the highest accuracy for each indicator highlighted in bold. The results tended to improve when the PBCD results were extended to OBCD results: the F1-scores of the CVA-, IRMAD-, and PCA-based CD results improved from 0.374 to 0.610, from 0.375 to 0.596, and from 0.510 to 0.610, respectively. The IRMAD method tended to under-extract the changed regions compared with the CVA and PCA methods, as confirmed by its small FAR but large MR. The OBCD result using majority voting slightly improved the kappa and F1-score values compared with the CVA-, IRMAD-, and PCA-based OBCD results. The proposed method achieved the highest OA, kappa, and F1-score, at 95.877%, 0.633, and 0.655, respectively.

Table 1.

Assessment of change detection accuracy results in the first dataset.

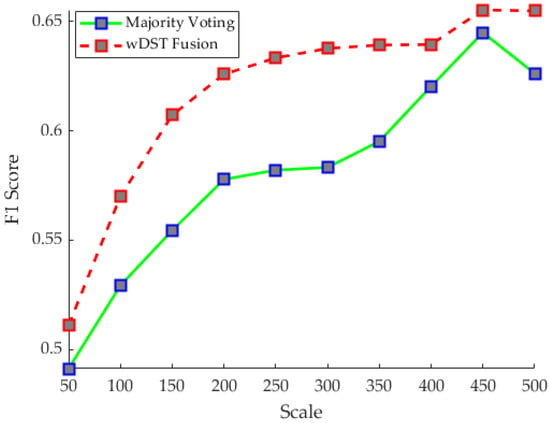

To analyze the effect of the scale parameter in the segmentation process on OBCD performance, the F1-score was calculated while varying the scale from 50 to 500 at an interval of 50. The F1-scores of the OBCD results obtained using majority voting were calculated in the same way. Both results are provided in Figure 5. In both cases, the F1-score tended to increase as the scale parameter increased; beyond a certain scale, the increase stopped and the F1-score became more stable. The proposed wDST showed better results than majority voting regardless of the scale.

Figure 5.

Sensitivity analysis of scale parameters for object-based change detection (first dataset).

3.2. Experiments on the Second Dataset

KOMPSAT-3 multispectral images with a spatial resolution of 2.8 m were used to construct the second dataset. The study area is located in Sejong city, an administrative city in South Korea that has been under development since 2007, including the relocation of the central administrative agencies. Large-scale high-rise buildings and complexes have been constructed in a short period, which accounts for the major changes in the image pair. The co-registration procedure applied to the first dataset was also used here, and the co-registered images have a size of 3879 × 3344 pixels. The co-registered image pair of the second dataset is shown in Figure 6.

Figure 6.

Bitemporal Kompsat-3 imagery acquired over Sejong city on (a) 16 November 2013, and (b) 26 February 2019.

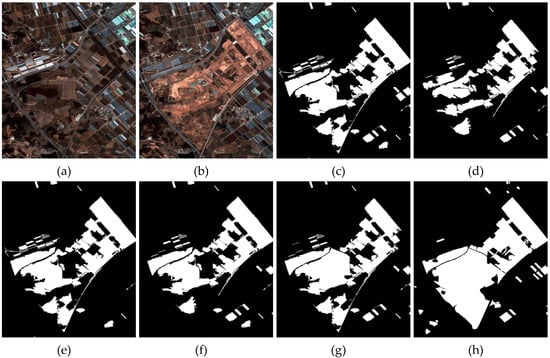

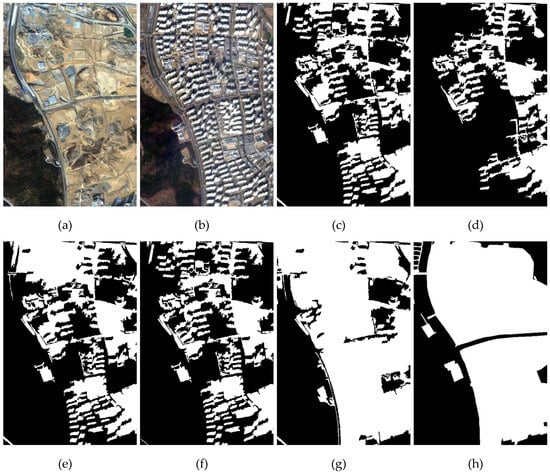

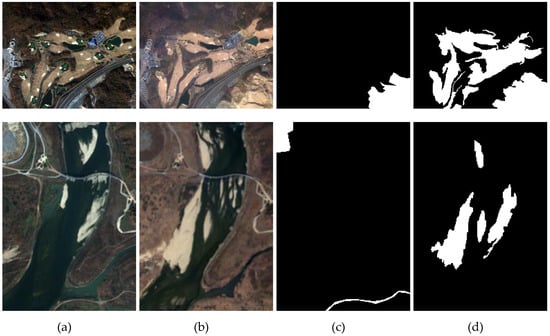

The same PBCD methods and parameter values as for the first dataset were used. The obtained CD results are shown in Figure 7. The reference map contains 2,029,749 changed and 10,941,627 unchanged pixels. As with the first dataset, the PBCD results contained a large number of false alarms, whereas the OBCD results removed many isolated errors. However, except for the proposed method, the OBCD results tended to under-detect the changed regions, because too many of them were removed when the majority voting technique was applied. Figure 8 shows magnified OBCD results for a portion of the dataset where majority voting caused this under-detection, whereas the proposed method still detected the large-scale changes.

Figure 7.

Results of change detection in the second dataset: (a) PBCD using CVA, (b) PBCD using IRMAD, (c) PBCD using PCA, (d) OBCD using CVA, (e) OBCD using IRMAD, (f) OBCD using PCA, (g) OBCD using majority voting, (h) OBCD using wDST and (i) reference map.

Figure 8.

Results of magnified object-based change detection in the second dataset: (a) image acquired on 16 November 2013, (b) image acquired on 26 February 2019, (c) OBCD using CVA, (d) OBCD using IRMAD, (e) OBCD using PCA, (f) OBCD using majority voting, (g) OBCD using wDST and (h) reference map.

Table 2 summarizes the quantitative evaluation for the second dataset. Among the PBCD methods, IRMAD extracted fewer changed regions, whereas CVA and PCA extracted relatively larger ones. As in the first experiment, accuracy tended to improve when the PBCD results were extended to OBCD results, because applying the general majority voting technique within objects tends to remove false alarms. However, the improvement was modest. In the second dataset, a large portion of the scene changed, so the changed regions that the OBCD fusion removed along with the false alarms offset much of the gain. In contrast, the proposed wDST method detected the extensively changed areas and achieved the best MR, kappa, and F1-score values of 0.322, 0.555, and 0.630, respectively.

Table 2.

Assessment of change detection accuracy results in the second dataset.

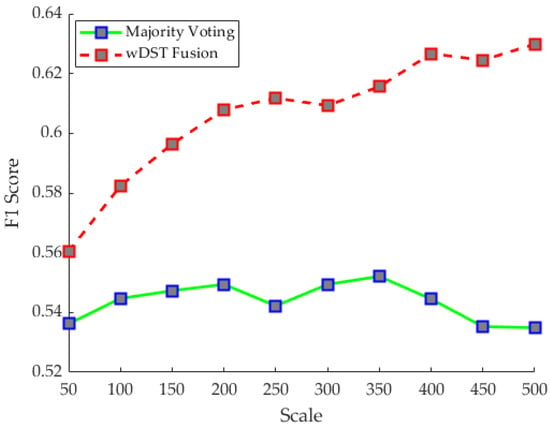
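Table 2 reports MR, kappa, and F1-score, which are not redefined in this section. The sketch below computes them from the pixel-level confusion counts of a binary change map against the reference map, using the standard F1 and Cohen's kappa formulas. It assumes that MR denotes the missed rate FN/(TP + FN); that definition should be checked against the one given earlier in the paper. The class and function names are illustrative, and the counts in the usage lines are toy values, not the Table 2 data.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Pixel counts of a binary change map compared with a reference map."""
    tp: int  # changed in both the CD map and the reference map
    fp: int  # changed in the CD map only (false alarm)
    fn: int  # changed in the reference map only (missed change)
    tn: int  # unchanged in both maps


def missed_rate(c: Confusion) -> float:
    # Assumed definition: share of reference changes that the CD map missed.
    return c.fn / (c.tp + c.fn)


def f1_score(c: Confusion) -> float:
    # Harmonic mean of precision and recall for the "changed" class.
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    return 2 * precision * recall / (precision + recall)


def kappa(c: Confusion) -> float:
    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    n = c.tp + c.fp + c.fn + c.tn
    p_observed = (c.tp + c.tn) / n
    p_chance = ((c.tp + c.fp) * (c.tp + c.fn) + (c.fn + c.tn) * (c.fp + c.tn)) / n**2
    return (p_observed - p_chance) / (1 - p_chance)


# Toy counts for illustration only.
toy = Confusion(tp=60, fp=20, fn=20, tn=100)
print(missed_rate(toy), f1_score(toy), round(kappa(toy), 4))  # 0.25 0.75 0.5833
```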

The F1-score values obtained with different segmentation scales are shown in Figure 9. For the majority voting method, accuracy decreased as the scale increased from 350 to 500. This is because, when majority voting was applied to large objects, a large portion of the changed pixels in the PBCD map were relabeled as unchanged in the OBCD map. Because the second dataset contains a relatively large proportion of changed regions, this underestimation reduced accuracy. In contrast, the wDST method achieved higher accuracy than majority voting across the tested scales. This demonstrates the effectiveness of the proposed wDST-based fusion method, which can control the balance between the proportions of changed and unchanged regions in the dataset.

Figure 9.

Sensitivity analysis of scale parameters for object-based change detection (second dataset).
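The scale effect described above can be illustrated with a small sketch of object-level majority voting. The exact voting rule is defined earlier in the paper and is not restated here, so this sketch assumes a simple rule: a segment is labeled changed when more than half of its pixels are changed in a PBCD map, and the resulting OBCD maps are then fused by pixel-wise majority. The function names are hypothetical, and `segments` is assumed to be an integer label image produced by any segmentation.

```python
import numpy as np


def extend_to_objects(pbcd: np.ndarray, segments: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Label every pixel of a segment as changed if more than `threshold` of its pixels are changed."""
    labels = segments.ravel()
    changed = pbcd.ravel().astype(np.float64)
    n_seg = int(labels.max()) + 1
    # Number of changed pixels and total number of pixels in each segment.
    changed_count = np.bincount(labels, weights=changed, minlength=n_seg)
    size = np.bincount(labels, minlength=n_seg)
    seg_is_changed = changed_count > threshold * size
    # Broadcast the per-segment decision back to the pixel grid.
    return seg_is_changed[segments]


def majority_vote(maps: list[np.ndarray]) -> np.ndarray:
    """Pixel-wise majority over several binary change maps."""
    votes = np.stack(maps).astype(np.int64).sum(axis=0)
    return 2 * votes > len(maps)


# Toy 1 x 10 PBCD map in which the first four pixels are changed.
pbcd = np.array([[1, 1, 1, 1, 0, 0, 0, 0, 0, 0]], dtype=bool)
one_large_object = np.zeros((1, 10), dtype=np.int64)
two_small_objects = np.array([[0, 0, 0, 0, 0, 1, 1, 1, 1, 1]])

print(extend_to_objects(pbcd, one_large_object).sum())   # 0: the changed pixels are voted away
print(extend_to_objects(pbcd, two_small_objects).sum())  # 5: the first object is kept as changed
```

With one large object, the four changed pixels are outvoted by the six unchanged ones, which mirrors how coarse segmentation can erase real changes in a scene with many changed regions.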

4. Discussion

Compared with the existing CD methods considered, the proposed wDST fusion-based CD approach achieved the highest accuracy in terms of the F1-score and kappa values. To facilitate practical application of the proposed method, this section discusses the analysis in more detail.

The first issue is the influence of the scale parameter. For the first dataset, accuracy improved as the scale increased. This is mainly attributable to the high spatial resolution of the WorldView multispectral imagery: at a spatial resolution of 1.2 m, very small objects cannot adequately describe the semantic meaning of the scene. Therefore, objects constructed with small scale values are over-segmented, leading to relatively poor CD performance. The second dataset showed the opposite trend: when the majority voting technique was applied, accuracy decreased as the scale parameter increased. This implies that smaller objects can effectively describe the terrain in the second dataset, which was constructed from KOMPSAT-3 imagery with a relatively coarser spatial resolution of 2.8 m. Applying the proposed wDST fusion method reduced the variation in accuracy across scales. The proposed approach achieved reliable results regardless of scale because the weight parameter controlled the impact of the ratio between changed and unchanged regions in the scene.

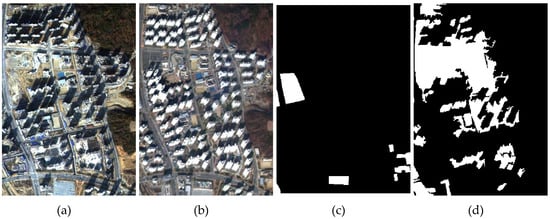

In measuring accuracy, some changed areas that are not of interest (e.g., seasonal changes in vegetated areas and shadow-related changes) were not included in the change reference map. In particular, the seasonal dissimilarity in the second dataset (images collected in November and February) caused severe change detection errors (Figure 10). Moreover, the scene includes many high-rise buildings, whose relief displacements and shadows vary in magnitude and direction between the two images. These relief displacements and shadows also led to falsely detected changes (Figure 11). Because the study site images are large (4717 × 4508 pixels and 3879 × 3344 pixels for the first and second datasets, respectively), it was difficult to digitize all the changes occurring in the sites. The study sites were up to 10 times larger than those in other related studies [28,29,30,31,32]. Despite these limitations, the proposed approach improved accuracy compared with the other existing methods, demonstrating its effectiveness.

Figure 10.

Examples of false alarms resulting from seasonal dissimilarity between multitemporal images: (a) image acquired in November, (b) image acquired in February, (c) reference map and (d) proposed method.

Figure 11.

Examples of false alarms resulting from high-rise buildings: (a) image acquired in November, (b) image acquired in February, (c) reference map and (d) proposed method.

In terms of computational efficiency, the proposed wDST fusion requires two more parameters than conventional DST fusion: the BPAF weight (Equation (12)) and the certainty weight (Equation (11)). The BPAF weight is calculated only once per PBCD method (i.e., three times in our case). The certainty weight, however, must be calculated for every combination of PBCD method and segment, that is, as many times as the number of PBCD methods multiplied by the number of segments. Consequently, calculating the certainty weight can take considerable time when a large number of segments is generated. Nevertheless, automatically determining the weight according to the stability of each segment is still more efficient than allocating weights empirically.
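The cost argument above follows from how evidence is fused per segment. Equations (11) and (12) are not reproduced in this section, so the sketch below does not implement them. As a stand-in, it uses Dempster's rule of combination on the two-class frame {changed, unchanged} together with Shafer's classical discounting, in which a weight in [0, 1] scales each source's evidence and moves the remainder to ignorance. All names and mass values are hypothetical. Because the discounting weight is supplied per source and per segment, a full run over an image evaluates one weight for each (PBCD method, segment) pair, which is the scaling discussed in the text.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Mass:
    """Mass function on {changed (c), unchanged (u)}; theta is the mass on ignorance."""
    c: float
    u: float
    theta: float


def discount(m: Mass, alpha: float) -> Mass:
    """Shafer discounting: keep a fraction alpha of the evidence, move the rest to theta."""
    return Mass(alpha * m.c, alpha * m.u, 1.0 - alpha + alpha * m.theta)


def combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule of combination for a two-class frame."""
    conflict = m1.c * m2.u + m1.u * m2.c
    norm = 1.0 - conflict
    if norm <= 0.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    c = (m1.c * m2.c + m1.c * m2.theta + m1.theta * m2.c) / norm
    u = (m1.u * m2.u + m1.u * m2.theta + m1.theta * m2.u) / norm
    theta = m1.theta * m2.theta / norm
    return Mass(c, u, theta)


def fuse_segment(masses: list[Mass], weights: list[float]) -> Mass:
    """Discount each PBCD source for one segment, then combine all sources."""
    discounted = [discount(m, w) for m, w in zip(masses, weights)]
    return reduce(combine, discounted)


# Hypothetical evidence from three PBCD maps for a single segment.
m_cva = Mass(0.6, 0.2, 0.2)
m_irmad = Mass(0.3, 0.5, 0.2)
m_pca = Mass(0.5, 0.3, 0.2)
fused = fuse_segment([m_cva, m_irmad, m_pca], weights=[0.9, 0.5, 0.8])
label = "changed" if fused.c > fused.u else "unchanged"
```

A weight of 0 turns a source into vacuous evidence that leaves the combination unchanged, while a weight of 1 uses the source at full strength; this is one simple way to let less stable sources count for less.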

It should be noted that the main contribution of the proposed method is to fuse multiple PBCD results into an OBCD result while automatically considering the stability of the PBCD results in each object. Therefore, the proposed method can be applied to any type of change that the PBCD methods target. For example, if the PBCD results focus on a specific application such as urban expansion monitoring, forest observation, or flood monitoring, the extended OBCD result will reflect the same type of change.

5. Conclusions

We proposed a weighted DST (wDST) fusion method to extend multiple PBCD maps to an OBCD map. The proposed wDST method fuses multiple CD maps by automatically allocating a weight to each object in each PBCD map according to the stability of the object. Moreover, the proposed method achieves reliable CD results irrespective of the object size by considering the ratio of changed to unchanged regions in the scene. Two VHR multitemporal datasets were used to evaluate the proposed approach. Comparative analysis with existing PBCD and OBCD methods on these datasets verified the superiority of the proposed method, which yielded the highest F1-score and kappa values.

To improve CD results, particularly for VHR multitemporal datasets, additional consideration should be given to site properties and acquisition conditions. For example, at the second site, high-rise buildings caused severe CD errors owing to dissimilarities in relief displacement and building shadows. Seasonal dissimilarities between the images also caused CD errors in regions that were not the focus of the analysis. These issues will be addressed in our future work to improve CD performance on VHR datasets. Furthermore, we will extend the proposed method to the multiple change detection problem.

Author Contributions

Conceptualization, Y.H. and S.L.; Methodology, Y.H.; Software, A.J.; Validation, S.J. and Y.H.; Investigation, A.J. and S.J.; Writing-Original Draft Preparation, Y.H.; Writing-Review and Editing, S.L.; Funding Acquisition, Y.H. and S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Kyungpook National University Bokhyeon Research Fund, 2017, and by the National Natural Science Foundation of China (Grant Number 41601354).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Singh, A. Review article digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

2. Coppin, P.; Jonckheere, I.; Nackaerts, K.; Muys, B.; Lambin, E. Review article digital change detection methods in ecosystem monitoring: A review. Int. J. Remote Sens. 2004, 25, 1565–1596.

3. Zhu, Z. Change detection using Landsat time series: A review of frequencies, preprocessing, algorithms, and applications. ISPRS J. Photogramm. Remote Sens. 2017, 130, 370–384.

4. Mahabir, R.; Croitoru, A.; Crooks, A.; Agouris, P.; Stefanidis, A. A critical review of high and very high-resolution remote sensing approaches for detecting and mapping slums: Trends, challenges and emerging opportunities. Urban Sci. 2018, 2, 8.

5. Hansen, M.C.; Loveland, T.R. A review of large area monitoring of land cover change using Landsat data. Remote Sens. Environ. 2012, 122, 66–74.

6. Dalla Mura, M.; Benediktsson, J.A.; Bovolo, F.; Bruzzone, L. An unsupervised technique based on morphological filters for change detection in very high resolution images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 433–437.

7. Falco, N.; Dalla Mura, M.; Bovolo, F.; Benediktsson, J.A.; Bruzzone, L. Change detection in VHR images based on morphological attribute profiles. IEEE Geosci. Remote Sens. Lett. 2012, 10, 636–640.

8. Li, Z.; Shi, W.; Zhang, H.; Hao, M. Change detection based on Gabor wavelet features for very high resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 783–787.

9. Liu, S.; Du, Q.; Tong, X.; Samat, A.; Bruzzone, L. Unsupervised change detection in multispectral remote sensing images via spectral-spatial band expansion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3578–3587.

10. Lin, C.; Zhang, P.; Bai, X.; Wang, X.; Du, P. Monitoring artificial surface expansion in ecological redline zones by multi-temporal VHR images. In Proceedings of the 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019.

11. Leichtle, T.; Geiß, C.; Wurm, M.; Lakes, T.; Taubenböck, H. Unsupervised change detection in VHR remote sensing imagery–An object-based clustering approach in a dynamic urban environment. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 15–27.

12. Liu, H.; Yang, M.; Chen, J.; Hou, J.; Deng, M. Line-constrained shape feature for building change detection in VHR remote sensing imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 410.

13. Schepaschenko, D.; See, L.; Lesiv, M.; Bastin, J.F.; Mollicone, D.; Tsendbazar, N.-E.; Bastin, L.; McCallum, I.; Bayas, J.C.L.; Baklanov, A.; et al. Recent advances in forest observation with visual interpretation of very high-resolution imagery. Surv. Geophys. 2019, 40, 839–862.

14. Byun, Y.; Han, Y.; Chae, T. Image fusion-based change detection for flood extent extraction using bi-temporal very high-resolution satellite images. Remote Sens. 2015, 7, 10347–10363.

15. Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236.

16. Ridd, M.K.; Liu, J. A comparison of four algorithms for change detection in an urban environment. Remote Sens. Environ. 1998, 63, 95–100.

17. Carvalho Júnior, O.A.; Guimarães, R.F.; Gillespie, A.R.; Silva, N.C.; Gomes, R.A.T. A new approach to change vector analysis using distance and similarity measures. Remote Sens. 2011, 3, 2473–2493.

18. Liu, S.; Marinelli, D.; Bruzzone, L.; Bovolo, F. A review of change detection in multitemporal hyperspectral images: Current techniques, applications, and challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 140–158.

19. Zheng, Z.; Cao, J.; Lv, Z.; Benediktsson, J.A. Spatial–spectral feature fusion coupled with multi-scale segmentation voting decision for detecting land cover change with VHR remote sensing images. Remote Sens. 2019, 11, 1903.

20. Lu, J.; Li, J.; Chen, G.; Zhao, L.; Xiong, B.; Kuang, G. Improving pixel-based change detection accuracy using an object-based approach in multitemporal SAR Flood Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3486–3496.

21. Chen, G.; Hay, G.J.; Carvalho, L.M.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

22. Han, Y.; Bovolo, F.; Bruzzone, L. Edge-based registration-noise estimation in VHR multitemporal and multisensor images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1231–1235.

23. Tang, Y.; Zhang, L.; Huang, X. Object-oriented change detection based on the Kolmogorov–Smirnov test using high-resolution multispectral imagery. Int. J. Remote Sens. 2011, 32, 5719–5740.

24. Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-based change detection in urban areas from high spatial resolution images based on multiple features and ensemble learning. Remote Sens. 2018, 10, 276.

25. Keyport, R.N.; Oommen, T.; Martha, T.R.; Sajinkumar, K.S.; Gierke, J.S. A comparative analysis of pixel- and object-based detection of landslides from very high-resolution images. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 1–11.

26. Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

27. Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-based change detection in urban areas: The effects of segmentation strategy, scale, and feature space on unsupervised methods. Remote Sens. 2016, 8, 761.

28. Lv, Z.; Liu, T.; Wan, Y.; Benediktsson, J.A.; Zhang, X. Post-processing approach for refining raw land cover change detection of very-high-resolution remote sensing images. Remote Sens. 2018, 10, 472.

29. Cui, G.; Lv, Z.; Li, G.; Atli Benediktsson, J.; Lu, Y. Refining land cover classification maps based on dual-adaptive majority voting strategy for very high resolution remote sensing images. Remote Sens. 2018, 10, 1238.

30. Cai, L.; Shi, W.; Zhang, H.; Hao, M. Object-oriented change detection method based on adaptive multi-method combination for remote-sensing images. Int. J. Remote Sens. 2016, 37, 5457–5471.

31. Feizizadeh, B.; Blaschke, T.; Tiede, D.; Moghaddam, M.H.R. Evaluating fuzzy operators of an object-based image analysis for detecting landslides and their changes. Geomorphology 2017, 293, 240–254.

32. Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban change detection based on dempster-shafer theory for multitemporal very-high-resolution imagery. Remote Sens. 2018, 10, 980.

33. Du, P.; Liu, S.; Xia, J.; Zhao, Y. Information fusion techniques for change detection from multi-temporal remote sensing images. Inf. Fusion 2013, 14, 19–27.

34. Du, P.; Liu, S.; Gamba, P.; Tan, K.; Xia, J. Fusion of difference images for change detection over urban areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1076–1086.

35. Dutta, P. An uncertainty measure and fusion rule for conflict evidences of big data via dempster–shafer theory. Int. J. Image Data Fusion 2018, 9, 152–169.

36. Luo, H.; Wang, L.; Shao, Z.; Li, D. Development of a multi-scale object-based shadow detection method for high spatial resolution image. Remote Sens. Lett. 2015, 6, 59–68.

37. Hegarat-Mascle, S.L.; Bloch, I.; Vidal-Madjar, D. Application of dempster-shafer evidence theory to unsupervised classification in multisource remote sensing. IEEE Trans. Geosci. Remote Sens. 1997, 35, 1018–1031.

38. Hao, M.; Shi, W.; Zhang, H.; Wang, Q.; Deng, K. A scale-driven change detection method incorporating uncertainty analysis for remote sensing images. Remote Sens. 2016, 8, 745.

39. Lu, Y.H.; Trinder, J.C.; Kubik, K. Automatic building detection using the dempster-shafer algorithm. Photogramm. Eng. Remote Sens. 2006, 72, 395–403.

40. Nielsen, A.A. The regularized iteratively reweighted MAD method for change detection in multi- and hyperspectral data. IEEE Trans. Image Process. 2007, 16, 463–478.

41. Celik, T. Unsupervised change detection in satellite images using principal component analysis and K-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

42. Han, Y.; Kim, T.; Yeom, J. Improved piecewise linear transformation for precise warping of very-high-resolution remote sensing images. Remote Sens. 2019, 11, 2235.

43. Aguilar, M.A.; del Mar Saldana, M.; Aguilar, F.J. Assessing geometric accuracy of the orthorectification process from GeoEye-1 and WorldView-2 panchromatic images. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 427–435.

44. Gašparović, M.; Dobrinić, D.; Medak, D. Geometric accuracy improvement of WorldView–2 imagery using freely available DEM data. Photogramm. Rec. 2019, 34, 266–281.

45. Han, Y.; Choi, J.; Jung, J.; Chang, A.; Oh, S.; Yeom, J. Automated co-registration of multi-sensor orthophotos generated from unmanned aerial vehicle platforms. J. Sens. 2019, 2019, 2962734.

46. Reddy, B.S.; Chatterji, B.N. An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans. Image Process. 1996, 5, 1266–1271.

47. Belgiu, M.; Drǎguţ, L. Comparing supervised and unsupervised multiresolution segmentation approaches for extracting buildings from very high resolution imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 67–75.

48. Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

49. Liu, S.; Du, Q.; Tong, X.; Samat, A.; Bruzzone, L.; Bovolo, F. Multiscale morphological compressed change vector analysis for unsupervised multiple change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4124–4137.

50. Shafer, G. Dempster-shafer theory. Encycl. Artif. Intell. 1992, 1, 330–331.

51. Padwick, C.; Deskevich, M.; Pacifici, F.; Smallwood, S. WorldView-2 pansharpening. In Proceedings of the ASPRS 2010 Annual Conference, San Diego, CA, USA, 26–30 April 2010.

52. Belfiore, O.R.; Parente, C. Orthorectification and pan-sharpening of WorldView-2 satellite imagery to produce high resolution coloured ortho-photos. Mod. Appl. Sci. 2015, 9, 122–130.

53. Gašparović, M.; Jogun, T. The effect of fusing Sentinel-2 bands on land-cover classification. Int. J. Remote Sens. 2018, 39, 822–841.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).