Multi-Source and Multi-Temporal Image Fusion on Hypercomplex Bases

Abstract

1. Introduction

1.1. Necessity for Analysis Ready Data

1.2. Applications Based on Multi-SAR System

1.3. Multi-SAR System with Image Fusion

- Is there a flexible descriptor for multi-spectral data with more than four channels that delivers Kennaugh-like elements as utilized in the Multi-SAR System?

- How can multi-source images (e.g., from SAR and Optics) which are transformed to a common basis be fused without loss of information?

- Is it possible to consider not only spectral or polarimetric, but also further dimensions such as “temporal” or “spatial” in a similar way?

- Does the fusion of multi-source data really produce more stable class signatures than the simple channel combination?

- How does a reduction of the image bit depth for storage reasons affect the stability of the class signatures?

2. Methodology

2.1. Consistent Data Frame

- All entries of A share the same absolute value, which is determined by the dimension of A and is needed to fulfil the orthogonality requirement. This also implies an equal weight of all input channels corresponding to a uniform look factor.

- The first row of A denotes an equally weighted sum over all input channels giving the total intensity (SAR) or reflectance (Optics), respectively. This entry will be necessary for the normalization of the complete set of Kennaugh-like elements later on.

- All other rows are composed of as many negative as positive entries; thus their sum, and consequently their expectation value, equals zero. Zero means “no information” in this element, whereas any deviation from zero indicates spectral or temporal information, respectively.

- The matrix A is orthogonal. The inverse transform is thus simply given by the transpose of A. As the matrix A is predefined and not estimated for each data set separately, as is usual in PCA for example, it is guaranteed that the transform can simply be inverted.

- The transposed matrix neutralizes the transform. This characteristic directly results from the orthogonality stated above and underlines that the basis change preserves information. The transform therefore only introduces new coordinate axes in the feature space.
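The properties listed above can be checked numerically on a small example. The following is a minimal sketch that assumes a normalized Sylvester-type Hadamard matrix as a stand-in for A. This is one matrix that satisfies all five properties, but it is not necessarily identical to the hypercomplex bases introduced in the following subsections. The function name `sylvester_basis`, the normalization by the square root of the dimension, and the pixel values in `x` are assumptions made purely for illustration.

```python
import numpy as np


def sylvester_basis(order: int) -> np.ndarray:
    """Build a normalized 2**order x 2**order Sylvester-type Hadamard matrix."""
    block = np.array([[1.0, 1.0], [1.0, -1.0]])
    a = np.array([[1.0]])
    for _ in range(order):
        a = np.kron(a, block)  # doubles the matrix size at each step
    return a / np.sqrt(a.shape[0])  # scale rows to unit length


A = sylvester_basis(2)  # 4 x 4 example (assumed stand-in, see lead-in)
n = A.shape[0]

# Hypothetical pixel with four input channels (made-up values).
x = np.array([0.12, 0.10, 0.09, 0.30])

k = A @ x         # forward transform to Kennaugh-like elements
x_back = A.T @ k  # inverse transform via the transpose

print(np.allclose(np.abs(A), 1 / np.sqrt(n)))  # equal absolute values
print(np.all(A[0] > 0))                        # first row: equally weighted sum
print(np.allclose(A[1:].sum(axis=1), 0.0))     # all other rows sum to zero
print(np.allclose(A @ A.T, np.eye(n)))         # orthogonality
print(np.allclose(x_back, x))                  # transpose undoes the transform
```

Each check prints `True` for this example. Because the matrix is fixed in advance rather than estimated from the data, the same `A.T` inverts the transform for every pixel and every data set.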

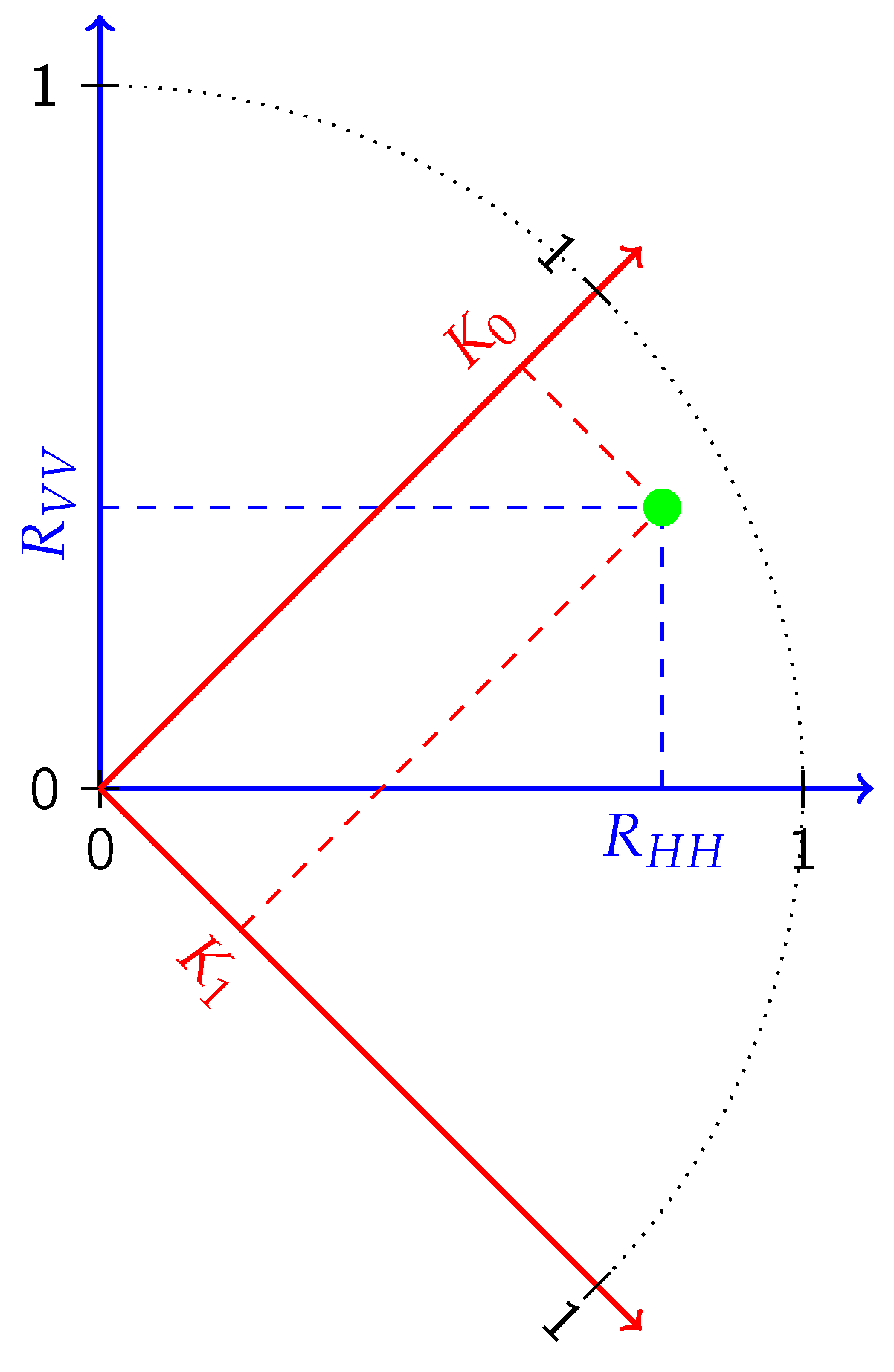

2.1.1. The Complex Basis

2.1.2. The Quaternion Basis

2.1.3. The Octonion Basis

2.1.4. The Sedenion Basis

2.1.5. Higher Order Bases

2.2. Multi-Source Image Fusion

2.2.1. Polarimetric Fusion

2.2.2. SAR Sharpening

2.2.3. Spectral Fusion

2.2.4. SAR-Optical Fusion

2.2.5. Image Fusion with Arbitrary Layers

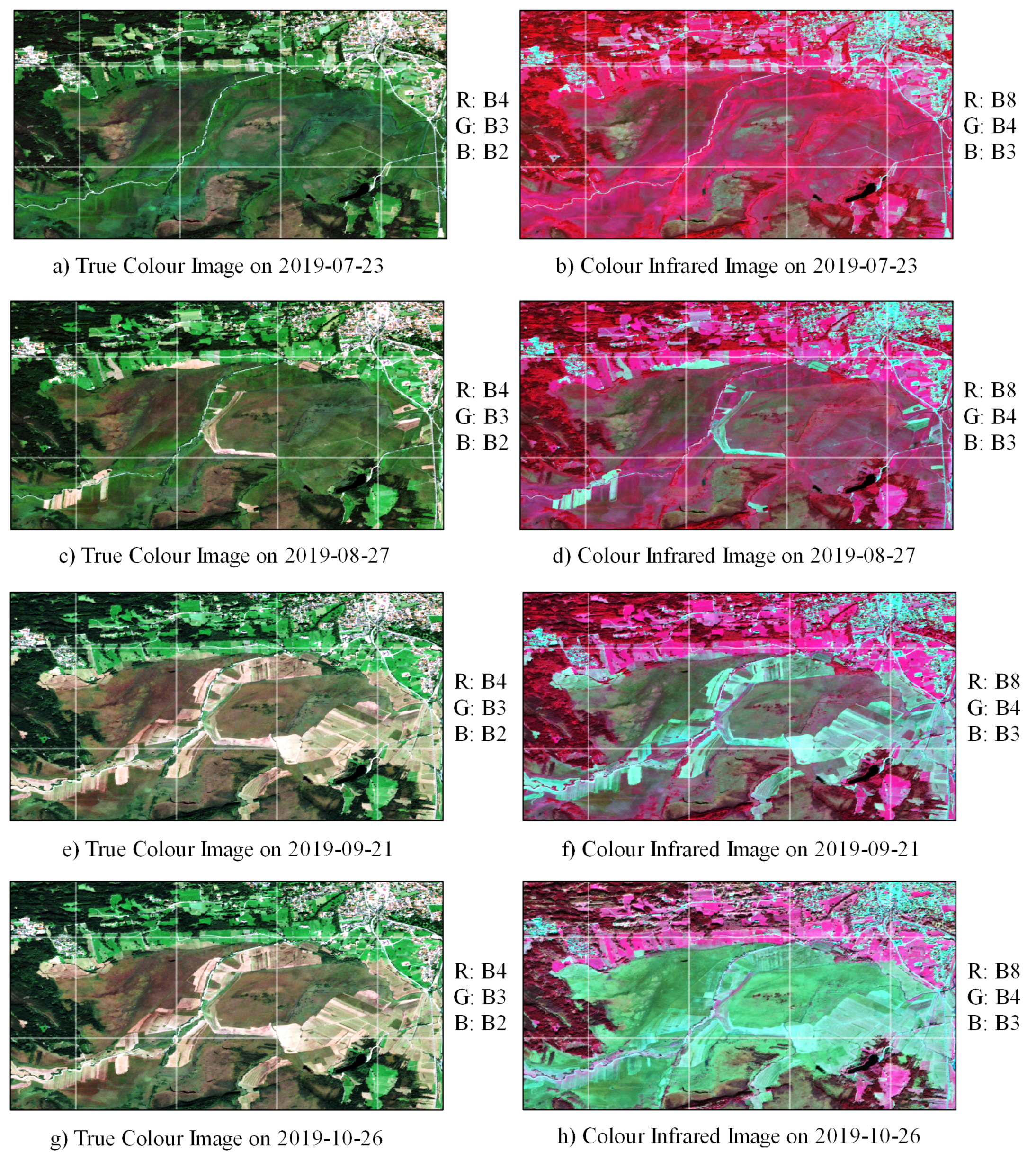

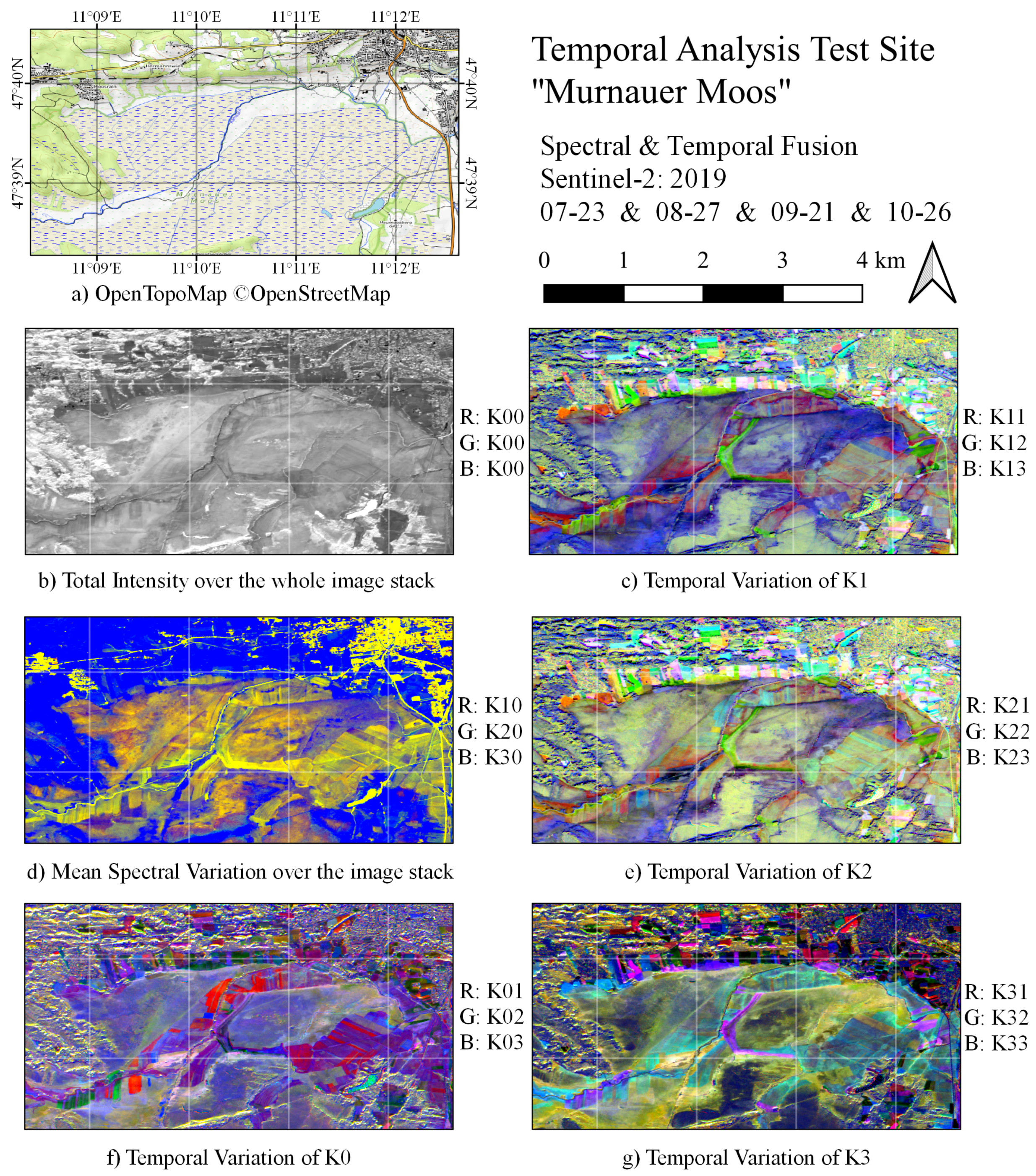

2.3. Multi-Temporal Image Fusion

2.3.1. Change Detection

2.3.2. Gradients and Curvature

2.3.3. General Time Series Analysis

2.4. Data Scaling Approach

2.4.1. Linear Scale

2.4.2. Hyperbolic Tangent Normalized Scale

2.4.3. Logarithmic Scale

2.5. Evaluation Approach

2.5.1. Visual Inspection

2.5.2. Class Signatures

2.5.3. Maximum Likelihood Contingency Evaluation

2.5.4. Similarity of Signatures

2.5.5. Intra- and Inter-Class Similarities

2.5.6. Gain of Intra- vs. Inter-Class-Similarity

3. Results

3.1. Multi-Temporal Image Fusion

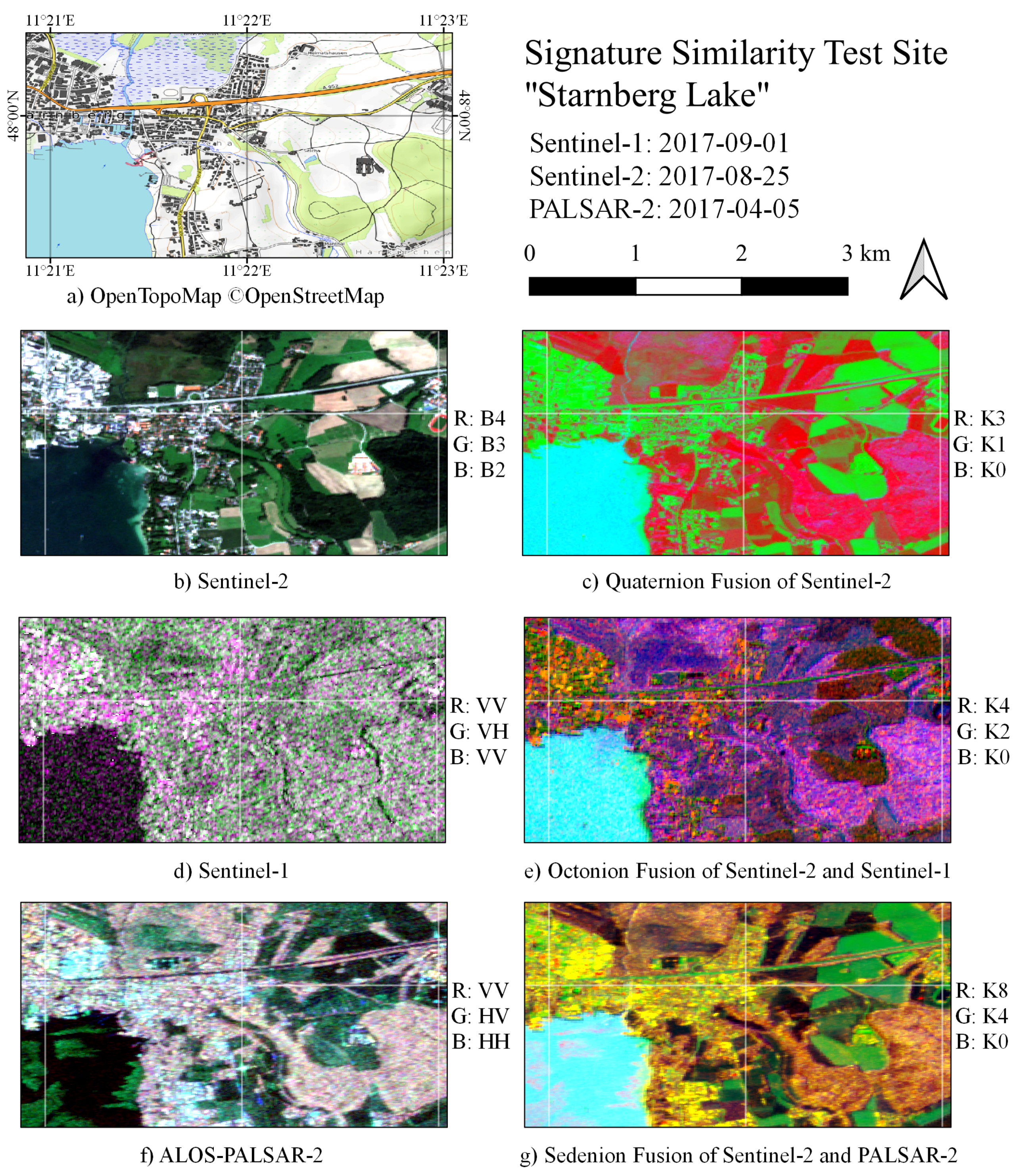

3.2. Multi-Source Image Fusion

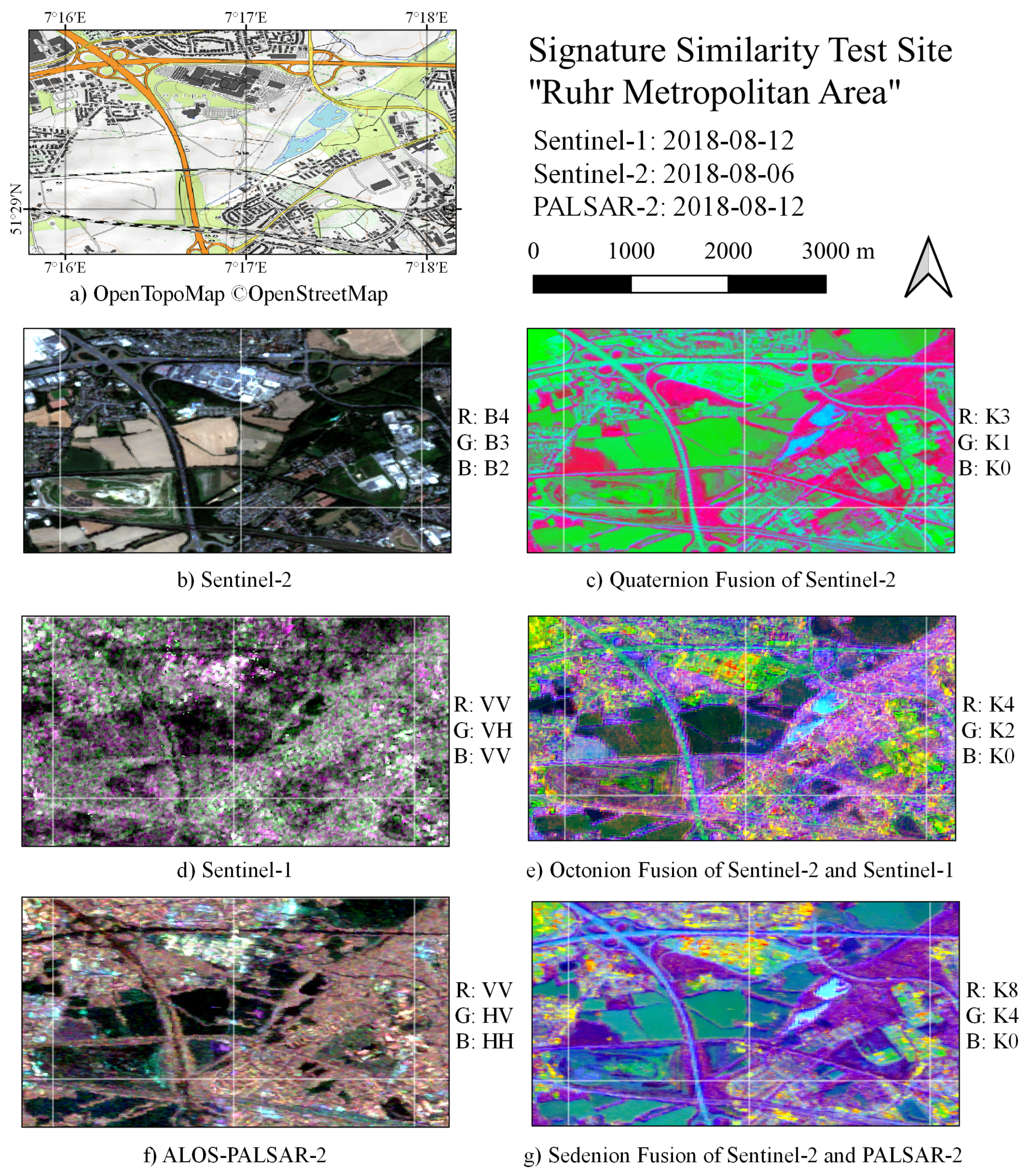

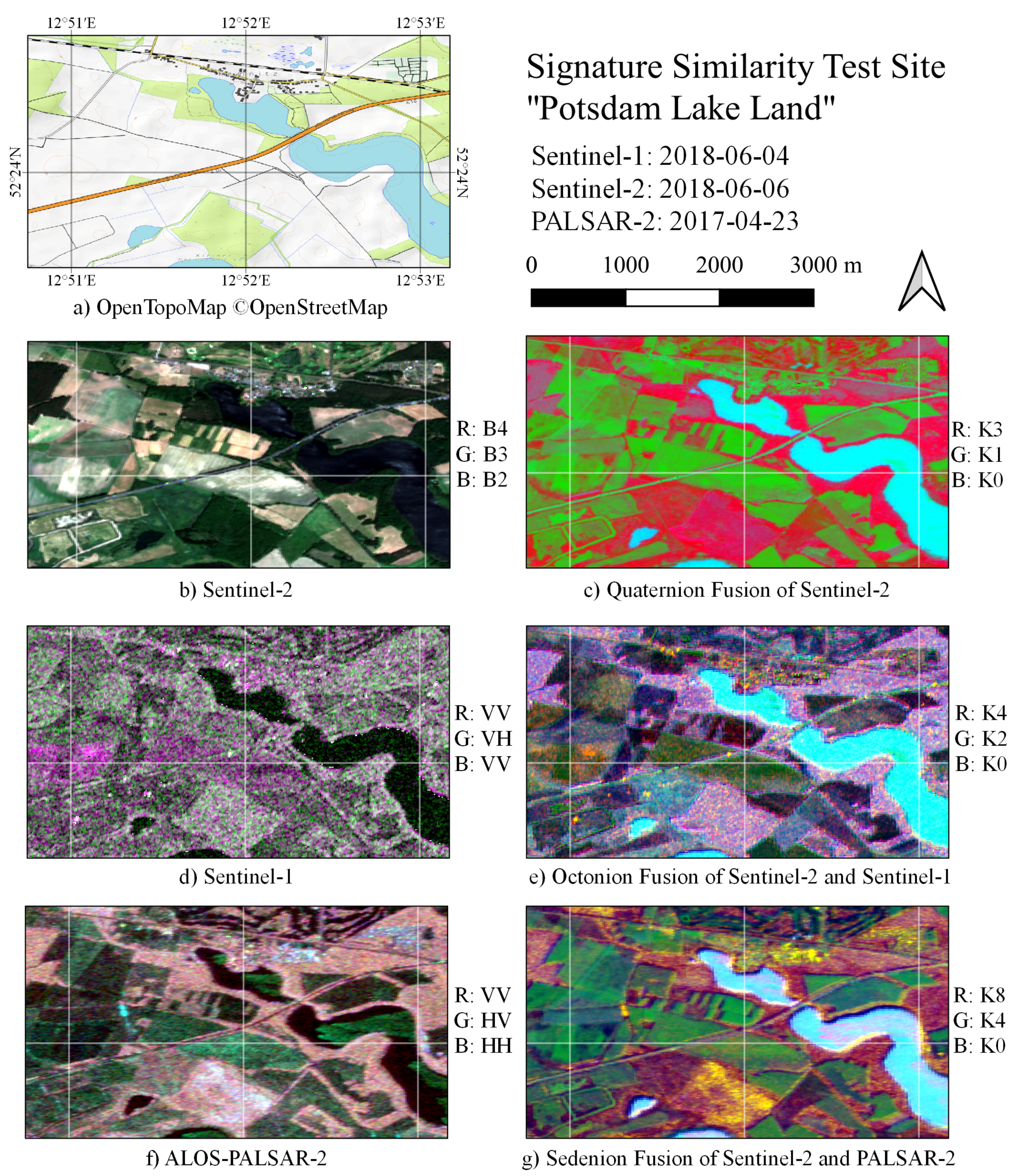

3.2.1. Fused Images

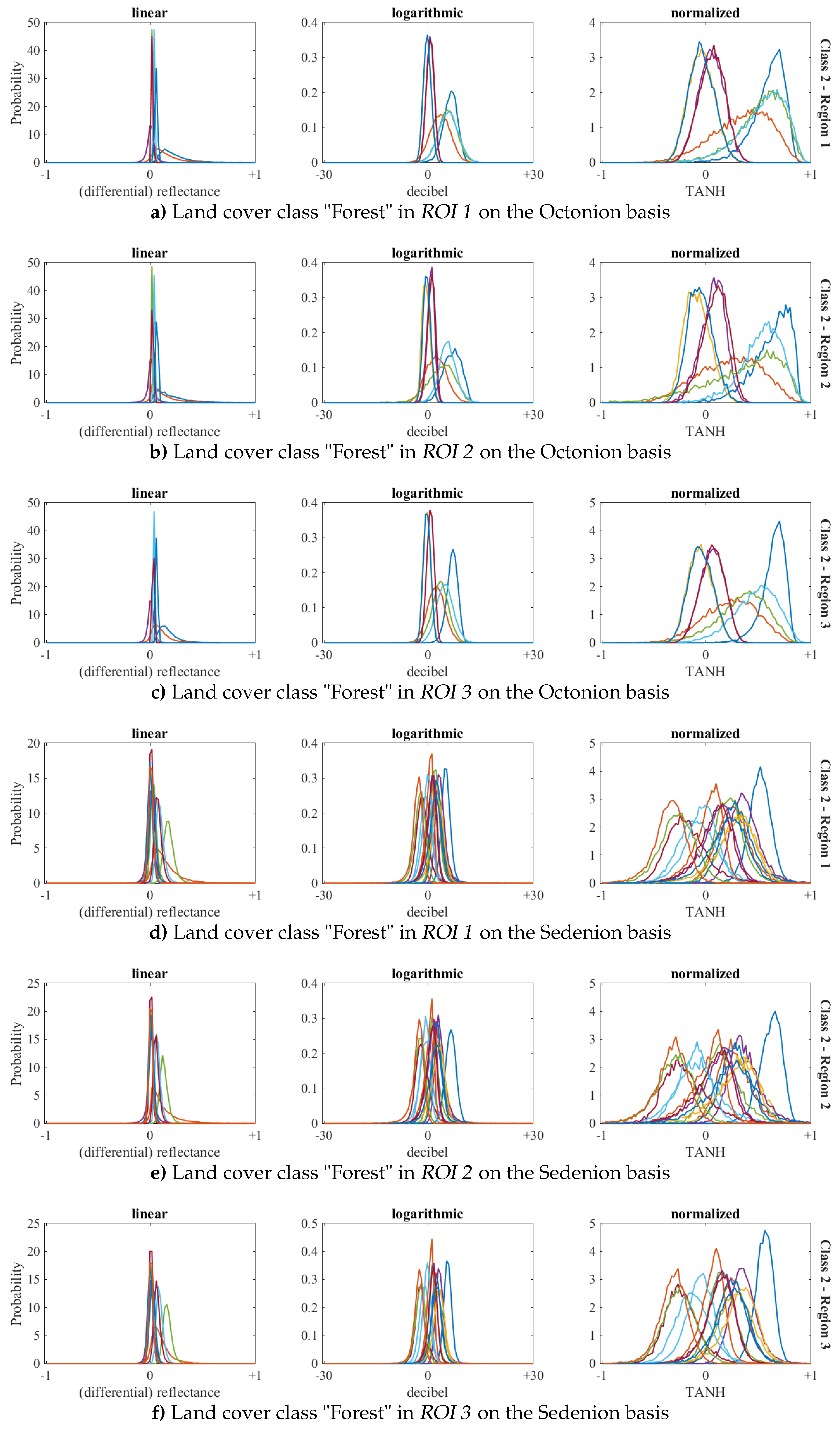

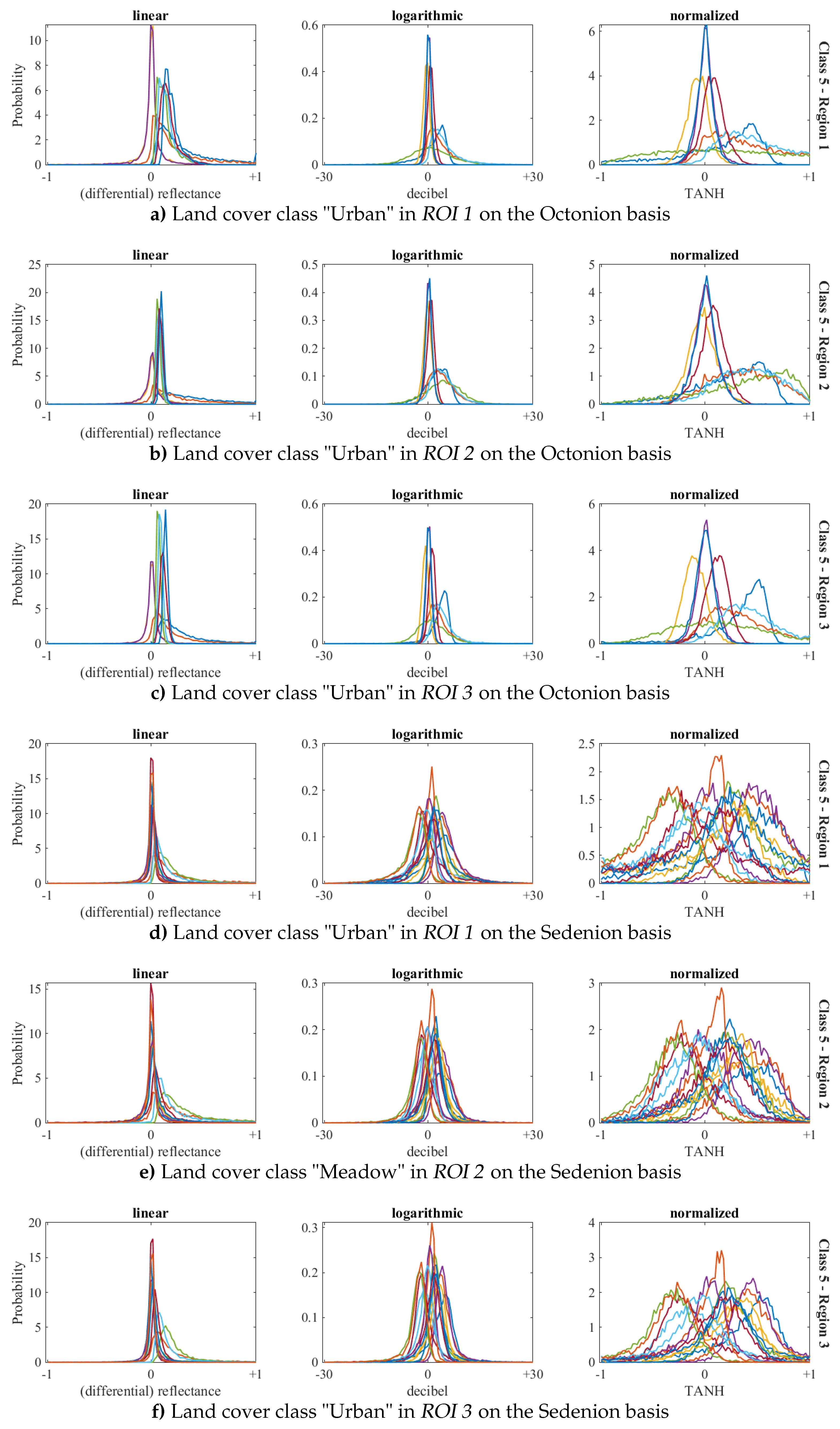

3.2.2. Signature Stability

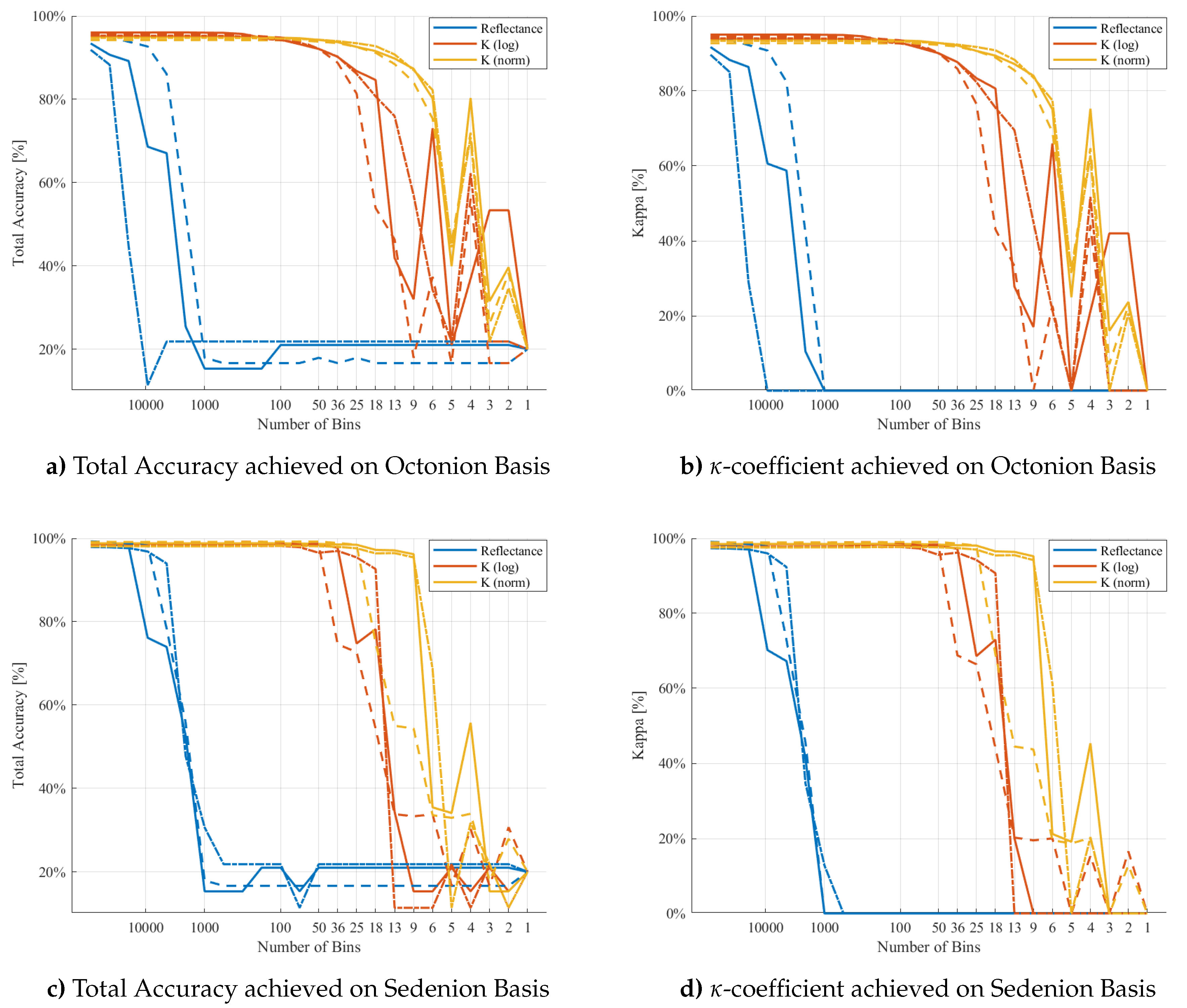

3.2.3. Maximum Likelihood Contingency

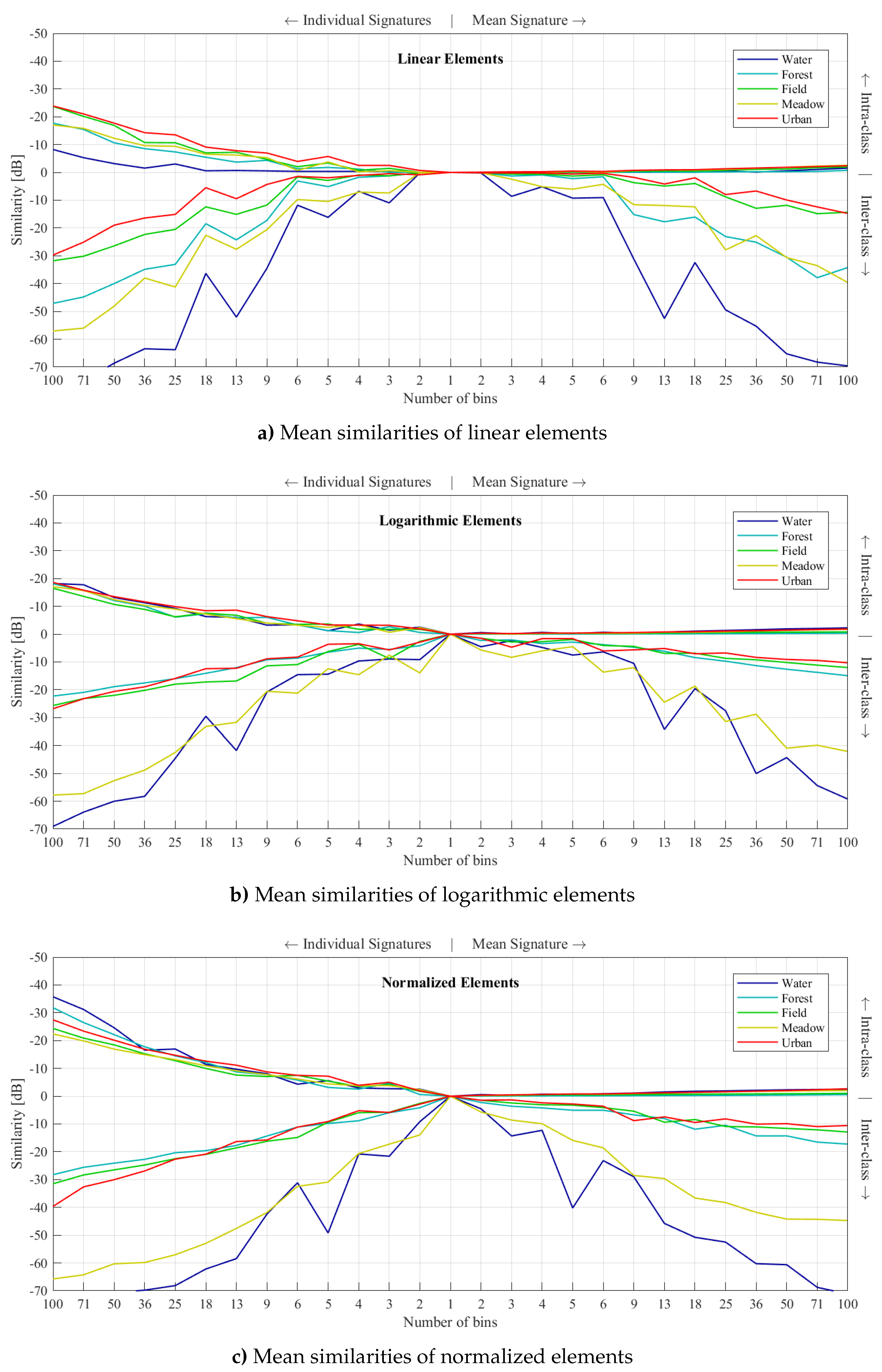

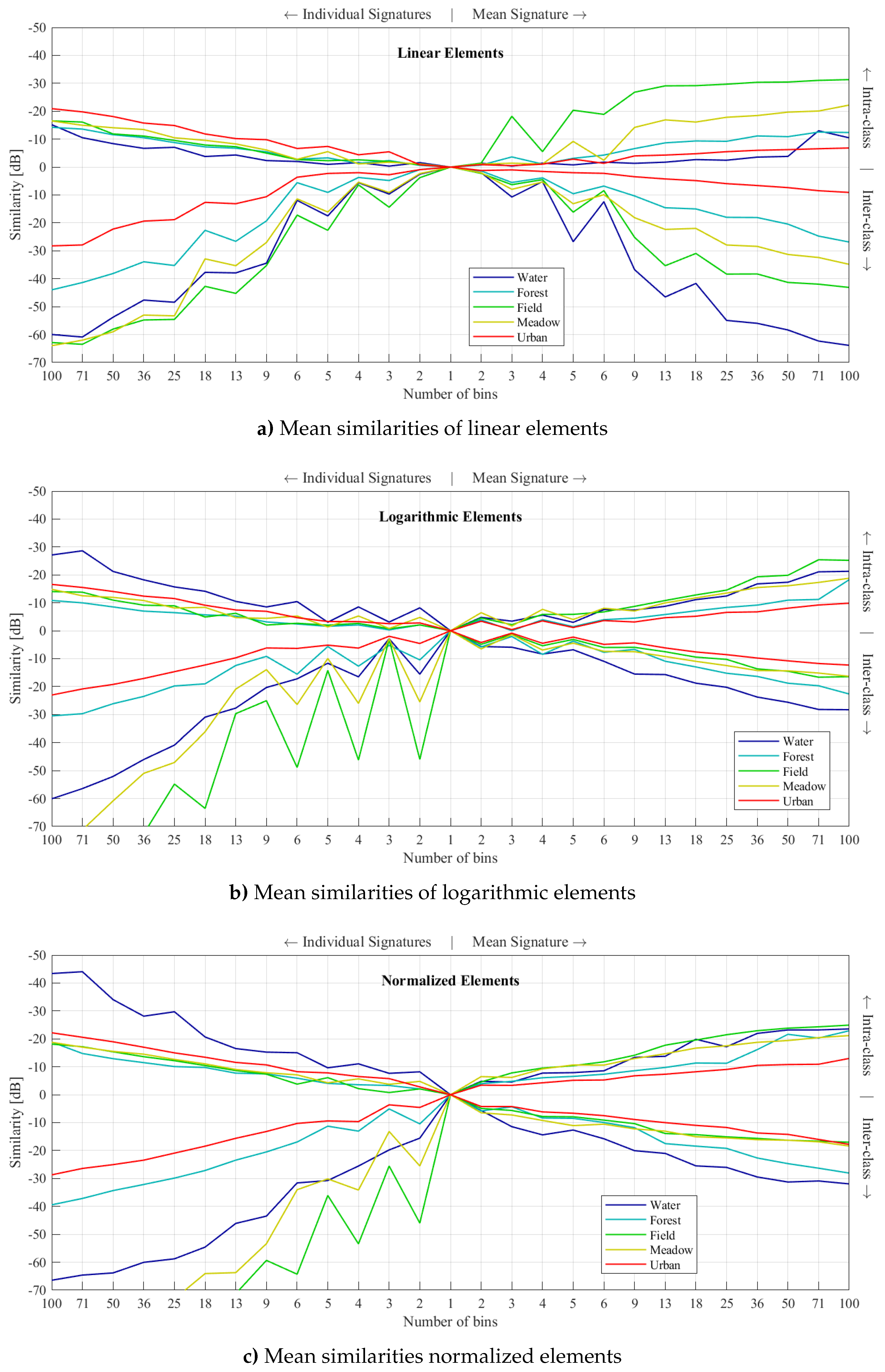

3.2.4. Signature Similarity

- upper left: the mean intra-class similarities of the individual object signatures

- upper right: the mean intra-class similarities of the mean class signatures

- lower left: the mean inter-class similarities of the individual object signatures

- lower right: the mean inter-class similarities of the mean class signatures

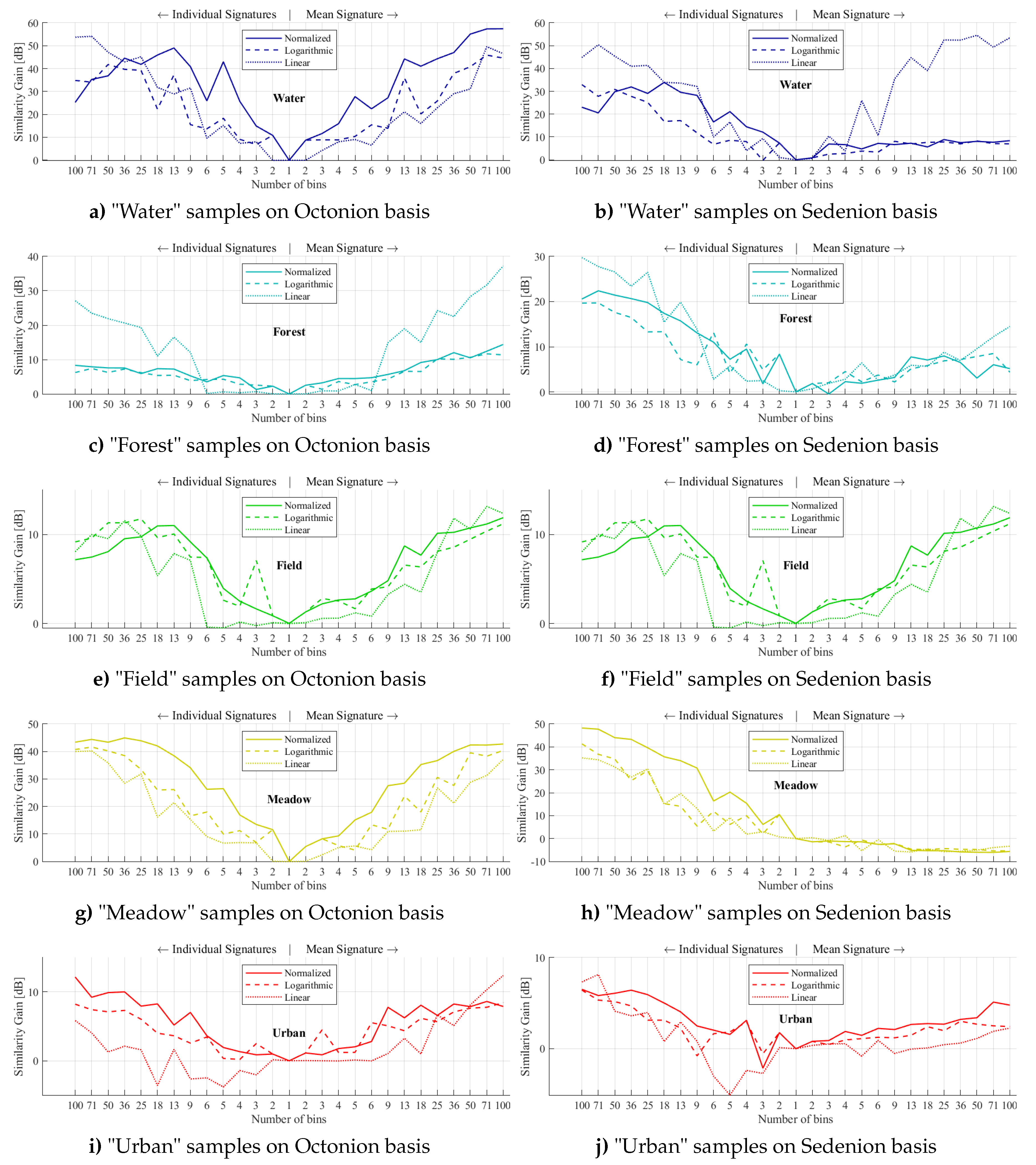

3.2.5. Similarity Gain

4. Discussion

4.1. Multi-Temporal Image Fusion

4.2. Multi-Source Image Fusion

4.2.1. Visual Inspection

4.2.2. Mean Class Signatures

4.2.3. Maximum Likelihood Contingency

4.2.4. Signature Similarity

4.2.5. Similarity Gain

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| ARD | Analysis Ready Data |

| ASTER | Advanced Spaceborne Thermal Emission and Reflection Radiometer |

| BOA | Bottom of Atmosphere |

| CEOS | Committee on Earth Observation Satellites |

| CNN | Convolutional Neural Networks |

| DEM | Digital Elevation Model |

| DFD | German Remote Sensing Data Center |

| DLR | German Aerospace Center |

| EO | Earth Observation |

| ETM | Enhanced Thematic Mapper |

| FP | fully polarimetric (HH, HV, VH, VV) |

| GRD | Ground Range Detected |

| L2A | Level 2A images |

| MLE | Maximum Likelihood Estimation |

| MSML | Multi-Scale Multi-Looking |

| PCA | Principal Component Analysis |

| PDF | Probability density function |

| RGB | Red Green Blue |

| ROI | Region of Interest |

| SAR | Synthetic Aperture Radar |

| SDC | Swiss Data Cube |

| SLC | Single look complex |

| SRTM | Shuttle Radar Topography Mission |

| TANH | Hyperbolic Tangent |

| USGS | United States Geological Survey |

| UTM | Universal Transverse Mercator Coordinate System |

| WGS84 | World Geodetic System from 1984 |

| Reflectance Values | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Channel | T1 | T2 | T3 | T4 | Blue | Green | Red | Infrared |
| Moment | | | | | | | | |
| 1 | 0.109 | 0.102 | 0.096 | 0.071 | 0.012 | 0.020 | 0.018 | 0.106 |
| 2 | 0.137 | 0.133 | 0.127 | 0.100 | 0.019 | 0.026 | 0.027 | 0.133 |
| 3 | 0.159 | 0.161 | 0.158 | 0.129 | 0.037 | 0.038 | 0.045 | 0.053 |
| 4 | 0.196 | 0.202 | 0.199 | 0.165 | 0.081 | 0.079 | 0.083 | 0.172 |
| 5 | 0.221 | 0.234 | 0.231 | 0.195 | 0.143 | 0.138 | 0.140 | 0.142 |
| Kennaugh-Like Elements | | | | | | | | |
| Channel | | | | | | | | |
| Moment | | | | | | | | |
| 1 | 0.276 | 0.027 | 0.039 | 0.018 | 0.136 | 0.097 | 0.088 | 0.083 |
| 2 | 0.306 | 0.035 | 0.050 | 0.028 | 0.176 | 0.128 | 0.114 | 0.109 |
| 3 | 0.169 | 0.013 | 0.035 | 0.024 | 0.206 | 0.148 | 0.131 | 0.124 |
| 4 | 0.354 | 0.071 | 0.086 | 0.074 | 0.260 | 0.188 | 0.165 | 0.158 |
| 5 | 0.317 | 0.072 | 0.106 | 0.110 | 0.302 | 0.211 | 0.184 | 0.177 |
| Linear Scale | | | | | | | | |
| 1 | 0.597 | 0.078 | 0.119 | 0.047 | 0.180 | 0.249 | 0.231 | 0.214 |
| 2 | 0.620 | 0.098 | 0.154 | 0.067 | 0.224 | 0.305 | 0.289 | 0.263 |
| 3 | 0.037 | 0.034 | 0.110 | 0.045 | 0.225 | 0.312 | 0.266 | 0.269 |
| 4 | 0.649 | 0.137 | 0.220 | 0.108 | 0.308 | 0.386 | 0.377 | 0.336 |
| 5 | 0.362 | 0.104 | 0.215 | 0.100 | 0.334 | 0.408 | 0.329 | 0.355 |
| Normalized Scale | | | | | | | | |
| 1 | 6.361 | 0.687 | 1.057 | 0.412 | 1.790 | 2.841 | 2.445 | 2.210 |
| 2 | 6.727 | 0.867 | 1.395 | 0.589 | 2.400 | 3.510 | 3.068 | 2.748 |
| 3 | 1.839 | 0.332 | 1.140 | 0.410 | 2.959 | 3.785 | 2.986 | 2.936 |
| 4 | 7.309 | 1.243 | 2.127 | 0.984 | 4.086 | 4.612 | 4.088 | 3.618 |
| 5 | 5.390 | 1.059 | 2.334 | 0.957 | 4.937 | 4.966 | 3.780 | 3.894 |
| Logarithmic Scale | | | | | | | | |
| variability | spectral | temporal | | | | | | |

| ROI & Coordinate Reference | Sensor | Product | Date |
|---|---|---|---|
| ROI 1 | ALOS-PALSAR-2 | Stripmap FP | 2017-04-05 |
| Starnberg Lake | Sentinel-1 | IW (VV&VH) | 2017-09-01 |
| UTM Zone 32N | Sentinel-2 | MSI-L2A | 2017-08-25 |
| ROI 2 | ALOS-PALSAR-2 | Stripmap FP | 2018-08-12 |
| Ruhr Metropolitan Area | Sentinel-1 | IW (VV&VH) | 2018-08-12 |
| UTM Zone 31N | Sentinel-2 | MSI-L2A | 2018-08-06 |
| ROI 3 | ALOS-PALSAR-2 | Stripmap FP | 2017-04-23 |
| Potsdam Lake Land | Sentinel-1 | IW (VV&VH) | 2018-06-04 |
| UTM Zone 33N | Sentinel-2 | MSI-L2A | 2018-06-06 |

| bins | 100 | 71 | 50 | 36 | 25 | 18 | 13 | 9 | 6 | 5 | 4 | 3 | 2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ROI 1 | “Starnberg Lake” | | | | | | | | | | | | |
| norm | 15.6 | 16.6 | 15.9 | 17.1 | 15.9 | 15.9 | 16.2 | 12.5 | 8.9 | 11.7 | 7.4 | 4.6 | 3.9 |
| log | 15.0 | 14.7 | 15.9 | 14.0 | 13.6 | 8.7 | 12.5 | 5.7 | 5.3 | 4.3 | 3.8 | 2.3 | 3.9 |
| lin | 26.7 | 26.2 | 23.6 | 18.3 | 21.6 | 9.7 | 12.2 | 9.9 | 1.8 | 3.6 | 1.3 | 1.6 | 0.0 |
| ROI 2 | “Ruhr Metropolitan Area” | | | | | | | | | | | | |
| norm | 21.2 | 22.3 | 22.3 | 21.7 | 22.4 | 20.3 | 17.9 | 16.2 | 11.9 | 12.9 | 7.4 | 5.6 | 4.7 |
| log | 20.0 | 20.1 | 19.0 | 17.3 | 16.3 | 12.6 | 12.9 | 7.5 | 7.0 | 6.7 | 5.5 | 3.9 | 4.7 |
| lin | 31.0 | 32.0 | 31.5 | 32.0 | 27.9 | 23.0 | 26.4 | 23.5 | 3.4 | 8.0 | 2.5 | 2.5 | 0.1 |
| ROI 3 | “Potsdam Lake Land” | | | | | | | | | | | | |
| norm | 21.5 | 22.5 | 23.9 | 26.3 | 24.9 | 24.1 | 21.9 | 17.4 | 13.1 | 15.3 | 9.1 | 6.3 | 5.4 |
| log | 23.9 | 22.5 | 22.2 | 22.0 | 17.6 | 14.3 | 15.2 | 9.9 | 10.1 | 5.7 | 5.4 | 5.7 | 5.4 |
| lin | 28.4 | 30.4 | 27.2 | 25.2 | 24.6 | 13.7 | 20.6 | 13.7 | 4.2 | 4.9 | 3.2 | 3.5 | 0.2 |

| bins | 100 | 71 | 50 | 36 | 25 | 18 | 13 | 9 | 6 | 5 | 4 | 3 | 2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ROI 1 | “Starnberg Lake” | | | | | | | | | | | | |
| norm | 34.5 | 34.0 | 36.1 | 37.0 | 35.4 | 33.5 | 30.7 | 25.4 | 21.7 | 13.2 | 19.9 | 7.9 | 15.4 |
| log | 36.4 | 33.7 | 31.5 | 27.2 | 22.8 | 22.2 | 11.5 | 8.5 | 16.4 | 6.5 | 16.1 | 1.3 | 15.4 |
| lin | 32.1 | 33.1 | 30.9 | 27.2 | 28.1 | 20.4 | 21.4 | 18.2 | 6.1 | 8.0 | 2.1 | 5.7 | 0.7 |
| ROI 2 | “Ruhr Metropolitan Area” | | | | | | | | | | | | |
| norm | 33.4 | 34.6 | 35.4 | 36.6 | 37.1 | 35.7 | 34.6 | 30.3 | 27.8 | 15.2 | 24.8 | 11.4 | 19.0 |
| log | 35.8 | 34.2 | 33.8 | 31.0 | 27.1 | 28.6 | 13.8 | 13.0 | 20.3 | 7.6 | 20.1 | 2.6 | 19.0 |
| lin | 33.8 | 35.3 | 34.8 | 34.2 | 32.3 | 27.8 | 30.1 | 26.1 | 7.3 | 16.1 | 1.6 | 7.6 | 0.3 |
| ROI 3 | “Potsdam Lake Land” | | | | | | | | | | | | |
| norm | 34.6 | 35.3 | 33.5 | 32.5 | 31.0 | 29.3 | 27.1 | 23.3 | 20.4 | 14.2 | 19.0 | 9.0 | 13.8 |
| log | 31.1 | 29.6 | 28.2 | 24.7 | 21.9 | 21.4 | 13.4 | 10.3 | 16.8 | 5.1 | 14.7 | 3.4 | 13.8 |
| lin | 32.6 | 33.4 | 31.7 | 30.0 | 30.4 | 22.3 | 26.0 | 20.0 | 6.2 | 12.2 | 1.5 | 5.4 | 1.0 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schmitt, A.; Wendleder, A.; Kleynmans, R.; Hell, M.; Roth, A.; Hinz, S. Multi-Source and Multi-Temporal Image Fusion on Hypercomplex Bases. Remote Sens. 2020, 12, 943. https://doi.org/10.3390/rs12060943
