Abstract

This article spanned a new, consistent framework for production, archiving, and provision of analysis ready data (ARD) from multi-source and multi-temporal satellite acquisitions and a subsequent image fusion. The core of the image fusion was an orthogonal transform of the reflectance channels from optical sensors on hypercomplex bases delivered in Kennaugh-like elements, which are well-known from polarimetric radar. In this way, SAR and Optics could be fused into one image data set sharing the characteristics of both: the sharpness of Optics and the texture of SAR. The special properties of Kennaugh elements regarding their scaling (linear, logarithmic, normalized) applied likewise to the new elements and guaranteed their robustness towards noise, radiometric sub-sampling, and therewith data compression. This study combined Sentinel-1 and Sentinel-2 on an Octonion basis as well as Sentinel-2 and ALOS-PALSAR-2 on a Sedenion basis. The validation using signatures of typical land cover classes showed that the efficient archiving in 4 bit images still guaranteed an accuracy of over 90% in the class assignment. Due to the stability of the resulting class signatures, the fuzziness to be caught by Machine Learning Algorithms was minimized at the same time. Thus, this methodology was predestined to act as a new standard for ARD remote sensing data with a subsequent image fusion processed in so-called data cubes.

1. Introduction

The introduction addresses the necessity for Analysis Ready Data (ARD), presents the Multi-SAR System, an evaluated framework for the production of polarimetric ARD, and explains how the Multi-SAR System is expanded with a new approach for the image fusion of optical and SAR data. Finally, we formulate the research questions that will be answered in the following sections.

1.1. Necessity for Analysis Ready Data

Earth Observation (EO) is turning more and more to ‘Big Earth Data’ [1]. By the end of 2018, the Copernicus Sentinel products had a total data volume of 10 PiB with a daily average growth of 15 TiB in November 2018 [2]. The complexity of data processing, computational power, and storage increases with the data volume. This means that even sophisticated users of EO data invest more time and effort into data preparation than into data interpretation. The idea is to support EO users in transforming the immense amounts of data into useful information and decision-ready products [3]. This challenge can be met by pre-processing EO data to a minimum set of requirements and organizing them in a standardized, consistent, and transparent manner. It requires the possibility of an immediate analysis without additional user effort, interoperability, and processing time as well as open and easy data access. The specifications are regulated within the Committee on Earth Observation Satellites (CEOS) for ARD [4]. ARD products representing surface reflectance are commonly used and their custom pre-processing workflow and provision are already standardized. Since 2008, the United States Geological Survey (USGS) has provided the Landsat data as analysis-ready products in a single, universally accessible, centralized global archive [5]. The focus of the Swiss Data Cube (SDC) is a consistent and comparable data processing and storage of different sensors. Therefore, it uses the same framework for data discovery, downloading, and processing of Landsat as well as of Sentinel-2 ARD products [3,6]. The provision of ARD products representing radar backscatter started with the continuous and global coverage of Sentinel-1 imagery. The cloud-based platform of Google Earth Engine provides processed Level-1 Ground Range Detected (GRD) Sentinel-1 data.
The pre-processing contains border and thermal noise removal, radiometric and geometric calibration using the Shuttle Radar Topography Mission (SRTM) and Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) Digital Elevation Models (DEM), and conversion to decibel. It is implemented by the Sentinel Toolbox and allows a subsequent processing in the Python and JavaScript languages [7]. Truckenbrodt et al. [8] analysed the capability of possible software solutions like the Sentinel Application Platform (SNAP) and GAMMA for the automatic production of Sentinel-1 ground range detected ARD products. They concluded that SNAP offers a convenient and user-friendly graphical user interface and, additionally, is open source. However, the error messages were difficult to interpret without prior knowledge, and especially the complex Java structure complicates the search for errors. The basic command line interface of the licensed GAMMA software required experience in the application and development of new workflows. In return, it was very robust and fast. The analyses of Ticehurst et al. [9] went beyond the usage of Sentinel-1 radar backscatter data and equally involved polarimetric radar data. They developed ARD products from SAR including normalized radar backscatter (), eigenvector-based dual-polarization decomposition, and interferometric coherence based on SNAP. Their feasibility study showed a good agreement between SNAP and GAMMA with minor systematic geometric and radiometric differences which can be neglected.

The above-mentioned backscatter ARD products are mostly based on Sentinel-1 GRD data. However, CEOS demands Level-1 Single Look Complex (SLC) ARD products in order to likewise exploit the polarimetric information. This requires a standardized provision of polarimetric radar ARD including speckle filtering, polarimetric decomposition, and normalization of the data in order to reduce their storage demand [10]. We present the Multi-SAR System, an evaluated framework for the production of polarimetric ARD. The Multi-SAR System contains ortho-rectification, Kennaugh decomposition, multi-looking, radiometric calibration, image enhancement using the multi-scale multi-looking (MSML) approach or Schmittlets, and normalization based on the hyperbolic tangent function for the final (almost) lossless data compression according to References [11,12]. The system can be used for the processing of all SAR data in level 1 (focused, slant range, single-look, complex) independent of their geometric, radiometric, and polarimetric resolution. Its main benefit is the usage of the same workflow and the same parameter configuration file independent of the different SAR input data formats and interfaces. The final result is a SAR image in level 2 represented in a consistent ARD format. It is implemented at the German Remote Sensing Data Center (DFD) within the German Aerospace Center (DLR) at Oberpfaffenhofen.

1.2. Applications Based on Multi-SAR System

With respect to the preprocessing of SAR images acquired by different sensors in different modes, a first idea of how to establish a consistent frame for the ARD provision was mentioned in Reference [13], where the Kennaugh elements were chosen to perform a robust change detection on wetlands. Only two years later, the normalized Kennaugh elements were introduced as a consistent frame for the fusion of multi-sensor and multi-polarized SAR data allowing for simple data analysis with standard and open-source software, effective data compression, and even change detection in combination with advanced locally adaptive filtering techniques [11]. Refining these filtering techniques by multi-directional kernels led to the Schmittlet approach that even enabled the analysis of image texture in the best available (total) intensity image [12]. The change detection capability was used for mapping wind throw areas in mid-European forests from TerraSAR-X images [14]. The usefulness of the normalized Kennaugh elements in the context of wetland remote sensing, especially in semi-arid regions, was proven several times using TerraSAR-X, RADARSAT-2, and even archived data of RADARSAT-1 and ENVISAT-ASAR [15,16,17]. Due to its flexibility concerning the input data, different polarization combinations could be evaluated at the same time for surface water monitoring purposes [18]. The advanced preprocessing finally enabled an almost daily estimation of the water gauge in the Forggensee in southern Germany via the flooded area [19]. Furthermore, the Kennaugh decomposition was successfully utilized in coastal applications for the mapping of bivalve beds on exposed intertidal flats from dual-co-polarized TerraSAR-X data [20]. Going further north, the normalized Kennaugh elements excelled as input features for the classification of Tundra environments in northern Canada.
The relation between snow depth, topography, and vegetation was studied on TerraSAR-X time series preprocessed to Kennaugh elements [21]. Even for the understanding of snow covered sea ice processes from TerraSAR-X, the Kennaugh preprocessing proved to be essential [22]. Regarding glaciated areas, the polarimetric information captured in Kennaugh elements helped to detect the supraglacial meltwater drainage on the Devon Ice Cap, Canada. Alpine glaciers were also analysed by normalized Kennaugh elements: one study evaluated the correlation between ground-based radar measurements and space-borne multi-polarized SAR images over the Vernagtferner in the Oetztal Alps, Austria [23]; another study evaluated time series of Sentinel-1 data over several summers in order to discriminate wet snow from firn as a first step towards a mass balance estimation from space [24]. Apart from natural landscapes, the Kennaugh elements provided a valuable input for the mapping of slums from polarimetric SAR data [25]. In combination with the Schmittlet index and a highly sophisticated, multi-scale classification approach, called histogram classification, the discrimination of formal from informal settlements by multi-aspect SAR images became feasible [26]. The histogram classification, which is based on a similarity measure for the comparison of two discrete local histograms, was further studied for typical land cover classification based on reflectance data only [27]. Recently, a very promising new approach was published on how to generate Kennaugh-like elements from optical multi-spectral data [28] in order to perform a SAR-sharpening gathering typical characteristics of optical and SAR images in one layer. Although the sharpening of SAR by the fusion of multi-mode images delivered excellent results, the fusion of SAR and Optics still showed some weaknesses; for example, the combined use of SAR and Optics without radiometric fusion was still superior to the fused data set [29].
Additionally, it was restricted to four-channel images like the 10 m layers of Sentinel-2 or the typical channels of an aerial image. Thus, the fusion of four channels from SAR and four channels from Optics resulted in four channels again. Although the fused channels showed the typical characteristics of both inputs, they did not provide more degrees of freedom, which seems to be essential. Finally, the multiplicative fusion ignores the signal-to-noise ratio of the input channels and thus possibly amplifies noise contained in the input channels instead of reducing it. A parallel study [30] proved that the Kennaugh-like provision of multi-spectral data from Optics has advantages over the standard data preparation in reflectance values when using the special multi-scale histogram classification algorithm. In summary, the remaining problem can be seen in the reduction of the image channels when the images are fused instead of being orthogonally transformed into Kennaugh-like elements.

1.3. Multi-SAR System with Image Fusion

A step forward in the production of ARD would be the image fusion of surface reflectance and polarimetric ARD. Image fusion creates new images that are more suitable for the purposes of human visual perception and object recognition. The detailed overview of existing image fusion methods in References [31,32] showed impressively that the development of new methods is an ongoing process. The image fusion techniques can be categorized into spatial domain and transform domain fusion [33]. Ha et al. suggested two additional groups for categorization containing optimization approaches like Bayesian data fusion and other techniques like adaptive methods, smoothing filter-based intensity modulations, and spatial and temporal adaptive reflectance fusion models [31,34]. Owing to the simplicity and the predominant use of the first two groups, we neglect the two latter groups. The spatial domain fusion contains, among others, the intensity-hue-saturation transform [35,36], component substitution [37,38], colour composition, principal component analysis (PCA) [39,40], and high-pass filtering [39,41]. It is based on pixel level processing [42]. These methods are characterized by the usage of simple fusion rules and hence a low computing time. The fused images retain their high spatial quality and preserve their spectral information content. However, the disadvantage is the spectral degradation which reduces the contrast levels in the fused image and hence could negatively affect the classification results [43,44]. The transform domain fusion comprises multi-resolution [45], wavelet transform [46,47], Laplacian and Gaussian pyramid [48], Bandelet Transform, Contourlet [49,50], and Curvelet [51,52]. The Fourier transform of the entire image into the frequency domain overcomes the limitations of the spatial domain methods. As it enhances the properties of the image like brightness, contrast, or distribution of gray levels, it provides a higher spectral content.
Additionally, these methods preserve more spatial features and have a better signal-to-noise ratio compared to pixel-based approaches. However, the transform domain methods are more complex than approaches of the spatial domain and lead to inadequate fusion images when applied to images from different sensors [43,44,53,54].

In recent years, deep learning has achieved great success in image fusion and can be regarded as a new category besides the spatial and transform domain. Its beginnings date back to the 1960s, when Ivakhnenko and Lapa published their first general, working learning algorithm for supervised deep feedforward multilayer perceptrons [55]. The term ‘deep learning’ was first introduced to the machine learning community in 1986 [56]. However, only the increase in and the availability of labelled data helped deep learning to its breakthrough. Since then, it has been used more and more in computer vision and image processing for classification and segmentation [32]. The approach is based on artificial neural networks like Convolutional Neural Networks (CNN) [57,58,59], Convolutional Sparse Coding [57,60], or Stacked Autoencoders [61]. The main advantage of this approach lies in its ability to outperform nearly every other machine learning algorithm. It supplies improvements for unstable and complex problems [32,57]. In addition, the same neural network can be applied to many different applications and data types. Like the spatial domain fusion, it is executed at the pixel level [42]. In the same way, multi-source data can be fused using, for example, CMIR-NET, which learns from two separate but labelled data sets [62]. Compared to other techniques, a high performance can only be achieved with a very large amount of data. As the model of the neural network must be trained, its accuracy depends on the quality and size of the training data. However, the most important disadvantage is that the output is not explainable as the prediction is not traceable. In a nutshell, the strength of the machine learning methods can be seen in the robust handling of more or less stochastic variations in labelled data. In contrast to that, our preprocessing approach for data fusion is simple, deterministic, robust, fast, and completely independent of labelled data.

The aim of the Multi-SAR System is to reduce stochastic variations in SAR data combinations to a minimum so that simple threshold classification is already enough to detect temporal changes [11]. With respect to the up-to-date machine learning approaches, the introduction of Kennaugh-like elements, analogous to the Kennaugh elements of the Multi-SAR System, is expected to produce highly stable physical measures in order to reduce the stochastic variation in the class assignment. In consequence, the remaining variation to be captured by the machine learning methods is significantly lower and therefore, the results based on Kennaugh-like elements are expected to be even better than those based on standard reflectance values. Therefore, the Multi-SAR System is expanded to the fusion of SAR and optical images. The current method uses hypercomplex bases which produce Kennaugh-like elements for optical data and hence enable the fusion of SAR and optical data to one image in a second step. It is performed on pixel level with an optional spatial or temporal filtering as known from the Schmittlets [12] implemented in the Multi-SAR System. We summarize the main points explained above in five research questions to be answered in the article:

- Is there a flexible descriptor for multi-spectral data with more than four channels that delivers Kennaugh-like elements as utilized in the Multi-SAR System?

- How can multi-source images (e.g., from SAR and Optics) which are transformed to a common basis be fused without loss of information?

- Is it possible to consider not only spectral or polarimetric, but also further dimensions such as “temporal” or “spatial” in a similar way?

- Does the fusion of multi-source data really produce more stable class signatures than the simple channel combination?

- How does a reduction of the image bit depth for storage reasons affect the stability of the class signatures?

The methodology section will introduce the novel data fusion approach as well as the methods for its evaluation. The results section illustrates selected examples which are interpreted in the discussion section before the main conclusions are formulated.

2. Methodology

This section comprises the methodology needed for the generation of a consistent data frame, for the subsequent data fusion, low-loss compression, and finally, the generation and validation of stable class signatures as input to machine learning algorithms.

2.1. Consistent Data Frame

The basis for any data fusion was a consistent data frame into which each set of layers can be projected. With respect to polarimetric SAR data, the Kennaugh decomposition was first renewed in Reference [13] and then extended to any set of multi-polarized SAR data available nowadays in Reference [11]. The calculation of Kennaugh-like elements even from multi-spectral data in order to sharpen SAR images was recently published in Reference [28]. The core of this technique is an orthogonal transform by a matrix A with the following characteristics:

- All entries of A share the same absolute value given by the dimension of A which is needed to fulfil the orthogonality requirement. This also implies an equal weight of all input channels corresponding to a uniform look factor.

- The first row of A denotes an equally weighted sum over all input channels giving the total intensity (SAR) or reflectance (Optics), respectively. This entry will be necessary for the normalization of the complete set of Kennaugh-like elements later on.

- All other rows are composed of as many negative as positive entries, thus their sum and consequently their expectation value equal zero. Zero means “no information” in this element, whereas any deviation from zero indicates spectral or temporal information, respectively.

- The matrix A is orthogonal. The inverse transform thus is simply given by the transpose of A. As the matrix A is predefined and not estimated for each data set separately, as is usual in PCA for example, it is guaranteed that the transform can simply be inverted.

- The transposed matrix neutralizes the transform. This characteristic directly results from the orthogonality stated above and underlines that the basis change preserves information. The transform therefore only introduces new coordinate axes in the feature space.

For instance, the transformation matrix to derive two Kennaugh elements from twin-polarized radar measurements can be described as follows according to [11]:

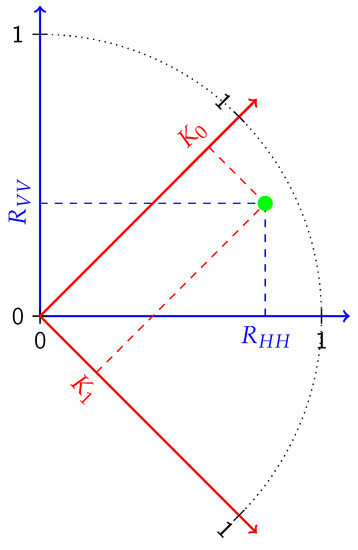

Similar formulations can be found for dual-cross-polarizations with intensity measurements exclusively. The quantum leap in comparison to other decomposition strategies lies in its flexibility and the strict distinction between reliable reflectance (or intensity) and often noisy phase information, thus in its radiometric stability. From a mathematical point of view, the matrix A defined above is most interesting: due to its symmetry, the matrix is its own inverse, that is, A · A = I, and therewith produces the neutral element in multiplication and likewise in addition. These characteristics result from the special choice of the new axes, cf. Figure 1 in the following.

Figure 1.

The orthogonal transform from reflectance (blue) to Kennaugh elements (red) in the two dimensional space of one measurement (green) according to Equation (2).

2.1.1. The Complex Basis

More generally, the transform can be expressed via a linear transform of a two-element vector, where the two columns of the transformation matrix span a basis of the complex plane.

For that reason, the transformation matrix is now denoted by C. It linearly transforms the vector of reflectance measures with into Kennaugh elements with the well-known properties, cf. [11]. Thanks to its orthogonality, this transform is restricted to a rotation and an inversion of the incident axes as shown in Figure 1. The scale remains untouched, that is, the transform neither amplifies nor damps the original signal. Even the relative angles between the channels are equal in order to prevent additional correlations.
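The stated properties can be checked numerically. The following minimal sketch assumes that C has entries of absolute value 1/√2 with a single negative sign; the exact order and orientation of the rows in the original equation may differ. The input values and the helper names `matvec` and `matmul` are hypothetical and serve illustration only.

```python
import math

# Assumed two-channel basis: all entries share the absolute value 1/sqrt(2).
S = 1.0 / math.sqrt(2.0)
C = [[S, S],
     [S, -S]]

def matvec(m, v):
    """Multiply a matrix (list of rows) with a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(m, n):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*n))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in m]

r = [0.30, 0.10]        # hypothetical intensities of two channels
k = matvec(C, r)        # k[0]: scaled sum, k[1]: scaled difference
r_back = matvec(C, k)   # C is symmetric and orthogonal, so it undoes itself
CC = matmul(C, C)       # expected to be the 2x2 identity matrix

# The Euclidean length of the measurement vector is preserved by the transform.
norm_in = math.sqrt(sum(x * x for x in r))
norm_out = math.sqrt(sum(x * x for x in k))
```

Under these assumptions, `CC` equals the identity up to rounding, `r_back` reproduces `r`, and `norm_in` equals `norm_out`, which reflects that the signal is neither amplified nor damped.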

2.1.2. The Quaternion Basis

The transform given above is only applicable to two-dimensional spaces. Regarding dual-polarized acquisitions with fixed phase measurements or the up-to-date compact-hybrid mode, four channels are available in Kennaugh elements. Beyond SAR, most airborne camera or scanning systems provide a four-channel image containing Blue, Green, Red, and Infrared. For this case, an extended approach was already published in Reference [28] that formulated a four-by-four matrix with the required characteristics. For reasons of conformity, an equivalent matrix is now created by the substitution of each element in C (see Equation (2)) by another complex number. Referring the coefficients to numbers, the four-by-four matrix Q is created, which constitutes the Quaternion basis.

The only difference to the A matrix published in Reference [28] is the order and orientation of the single dimensions, which is irrelevant for its application. This matrix has already been used for the fusion of images from Sentinel-1 and Sentinel-2. The inspection of the polarimetric information content after the image fusion proved that the polarimetric information of the input images was retained or even complemented [28].

2.1.3. The Octonion Basis

In order to serve higher-dimensional problems in the same way, the elements 1 or of matrix Q (see Equation (3)) again are replaced by complex entries leading to the Octonion basis with eight dimensions.

Eight channels are especially needed for the fusion of Sentinel-1 (dual-polarized) and Sentinel-2 (10 m pixel) images when both the polarimetric and the spectral information shall be preserved. The article cited above was titled “SAR sharpening” and thus only cared for the polarimetric information content [28]. Due to the reduction of the dimensionality from eight to four, at least a minimal data loss is indisputable. Using the new Equation (4), the eight input channels again result in eight output channels, thus the information-preserving transform is guaranteed.

2.1.4. The Sedenion Basis

When exploiting the complete information contained in a Sentinel-2 image, thirteen channels are involved, of which channels 8 and 8a only differ in their spectral and spatial resolution, leaving twelve independent channels. In combination with the four Kennaugh elements of Sentinel-1, the need for a sixteen-channel transform arises, which can be fulfilled by a fusion on the Sedenion basis.

Thus, the Sedenion basis is able to reproduce the whole information content of a combined Sentinel-1 and Sentinel-2 image which will be of highest interest for monitoring purposes in the near future due to the high temporal sampling (at least once a week) and the easy data access at no charge.

2.1.5. Higher Order Bases

In view of upcoming satellite missions like EnMAP with a hyperspectral sensor on board and therewith a much higher number of channels, the extension to high-dimensional spaces could be of interest. Due to the consistent spanning of higher-order vector spaces by simple substitution of the input elements, the extension to 32 dimensions (Equation (6)), to 64 dimensions (Equation (7)), to 128 dimensions (Equation (8)), or even more is possible without (theoretical) limitations.

In the case of a slightly lower number of distinct input channels, the respective channel can simply be set to zero, leading to an equally lower number of independent output elements as already stated in the context of the Kennaugh decomposition in Reference [11]. Thus, a very flexible, but completely consistent feature space for the fusion of arbitrary reflectance and even further rasterized GIS layers is given. At this point we can answer the first research question: multi-layer data sets can easily be transformed to Kennaugh-like elements using extensions of the complex plane defined in (2) to so-called hypercomplex bases.
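The repeated substitution can be sketched in a few lines. The example below is a minimal illustration under the assumption that each entry x of a basis is replaced by the block (x, x; x, −x)/√2, which corresponds to a Kronecker product with the two-dimensional basis. The resulting matrices satisfy the characteristics listed in Section 2.1, but the order and orientation of their rows may differ from the matrices in Equations (3) to (8). The function names and channel values are hypothetical.

```python
import math

def next_basis(m):
    """Double the dimension: replace each entry x by the block
    (x, x; x, -x) / sqrt(2), i.e., a Kronecker product with the 2x2 basis."""
    s = 1.0 / math.sqrt(2.0)
    out = []
    for row in m:
        out.append([x * s * c for x in row for c in (1.0, 1.0)])
        out.append([x * s * c for x in row for c in (1.0, -1.0)])
    return out

def basis(dim):
    """Build a basis of the given dimension (must be a power of two)."""
    if dim < 1 or dim & (dim - 1):
        raise ValueError("dimension must be a power of two")
    m = [[1.0]]
    while len(m) < dim:
        m = next_basis(m)
    return m

def transform(m, v):
    """Apply the basis to a vector of input channels."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def is_orthogonal(m, tol=1e-9):
    """Check that the rows of m are orthonormal."""
    n = len(m)
    return all(
        abs(sum(m[i][k] * m[j][k] for k in range(n)) - (1.0 if i == j else 0.0)) < tol
        for i in range(n) for j in range(n)
    )

O = basis(8)                                      # eight dimensions
channels = [0.12, 0.10, 0.08, 0.35, 0.20, 0.05]   # six hypothetical channels
padded = channels + [0.0] * (8 - len(channels))   # missing channels set to zero
k = transform(O, padded)                          # eight Kennaugh-like elements
restored = transform(O, k)                        # symmetric basis inverts itself
```

Here `k[0]` carries the equally weighted sum of all channels, and `restored` reproduces the zero-padded input, which illustrates that the basis change preserves information in this construction.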

2.2. Multi-Source Image Fusion

The following section summarizes the strategies for data fusion on the hypercomplex bases introduced above subdivided to the typically available data sets.

2.2.1. Polarimetric Fusion

The fusion of multi-polarized data sets to pseudo-polarimetric images was realized by the completion of redundant Kennaugh elements in one acquisition by the distinct Kennaugh elements of another acquisition [28]. This additive approach was successfully applied to data acquired in the pursuit-monostatic phase of the TanDEM-X mission for the generation of pseudo-quad-polarized images from two dual-polarized acquisitions [63].

This symbolic formulation can be further refined by introducing the individual spatial resolution [28], that is, the look factor as weight of the input channels. For clarity reasons, only the equally weighted, and therewith the orthogonal case, was considered.

2.2.2. SAR Sharpening

Multi-polarized images generally suffer from a reduced spatial coverage and resolution. To avoid the reduced spatial sampling, an intensity image of higher spatial resolution can be introduced in the same way pan-sharpening is done on optical imagery. In the simplest case, the total intensity of the multi-polarized image is completely replaced.

Though exemplarily shown by a four-channel image, it can be transferred to any polarimetric combination without any limitation. The intensity layer is not restricted to SAR intensities, but can even be given by the panchromatic channel of an airborne camera system as proven in Reference [28].

2.2.3. Spectral Fusion

The fusion of multi-spectral data requires the primary transform from reflectance values to Kennaugh-like elements as already presented in Reference [28]. If the channels are drawn from different sensors and , the matrix multiplication can be divided in two additive parts.

For simplicity, the approach is demonstrated in four-dimensional space, but without loss of generality.
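The additive split follows directly from the linearity of the matrix multiplication. The sketch below illustrates it in four dimensions under the assumption that the four-by-four basis has entries ±1/2 in the arrangement shown; the order and orientation of the rows in the original Q may differ. The sensor values are hypothetical.

```python
# Assumed four-dimensional basis with entries of absolute value 1/2.
Q = [[0.5,  0.5,  0.5,  0.5],
     [0.5, -0.5,  0.5, -0.5],
     [0.5,  0.5, -0.5, -0.5],
     [0.5, -0.5, -0.5,  0.5]]

def transform(m, v):
    """Apply the basis to a vector of input channels."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Two hypothetical sensors, each contributing two of the four channels.
sensor_a = [0.08, 0.11, 0.0, 0.0]   # channels 1-2, remaining slots set to zero
sensor_b = [0.0, 0.0, 0.09, 0.40]   # channels 3-4, remaining slots set to zero

k_a = transform(Q, sensor_a)        # transformed separately ...
k_b = transform(Q, sensor_b)
k_fused = [x + y for x, y in zip(k_a, k_b)]   # ... and added afterwards

# Reference: transform of the stacked four-channel vector in one step.
k_joint = transform(Q, [0.08, 0.11, 0.09, 0.40])
```

Both routes give the same elements, so each sensor can be transformed on its own and the results can be summed afterwards.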

2.2.4. SAR-Optical Fusion

The problem occurring when fusing multi-polarized SAR and multi-spectral optical data lies in the combination of already transformed Kennaugh elements in the case of SAR and reflectance values in the case of optical images. We simply circumvented this problem by replacing in Equation (11) and obtained the following equation.

2.2.5. Image Fusion with Arbitrary Layers

The property reflectance in Optics or intensity with respect to SAR essentially denoted that this input layer was subject to a ratio scale, that is, there was a well-defined origin and the ratio between entries played the key role. It further implied that the data range was restricted to positive values. Therewith, any layer in ratio scale could be transformed, in combination with others, to the Kennaugh space. If the ratio scale was not assured and only a cardinal scale could be accepted, for example because of negative entries, the layer had to be included in a scaled version of the Kennaugh-like elements which will be presented in the following Section 2.4. The summary of this section answered the second research question: simple matrix multiplication allowed us to separate the calculation of Kennaugh elements from SAR and Kennaugh-like elements from Optics and to fuse the transformed elements afterwards. The retention of information was guaranteed because of the orthogonality of the transform. The fusion was performed in an additive way which considers the signal-to-noise ratio. Furthermore, the Kennaugh elements from SAR were well-known for their robustness towards noise.

2.3. Multi-Temporal Image Fusion

The multi-source image fusion mentioned above was designed to fuse images of different sensors, but taken at (approximately) the same time. This section introduces a methodology for generating multi-temporal Kennaugh-like matrices from multi-source Kennaugh-like elements by a matrix multiplication from the right with one of the orthogonal matrices given above. Instead of multi-temporal and multi-spectral dimensions, multi-row and multi-column measurements could be processed in the same way in order to represent spatial characteristics, for example, as a supplement or even substitute to spatial convolutions in machine learning algorithms.

2.3.1. Change Detection

The simplest case is the comparison of two images, in general a pre-event image at time and a post-event image at time . The multi-temporal Kennaugh matrix was calculated by a right multiplication with the basis of the complex plane C which results in the sum and the difference of the two input images.

This conformed to the normalized intensity difference in Reference [11] and the additive formulation of the differential Kennaugh elements envisaged in Reference [64]. As the first element of K referred to an intensity measure, the requirements for a subsequent normalization were fulfilled. The quaternion basis Q for the multi-source fusion was chosen without limiting the generality: the number of dimensions in temporal and spectral direction were completely independent.
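The right multiplication can be illustrated with a small example. The sketch below assumes that the Kennaugh-like elements of the two dates are arranged as the columns of a matrix and that C has entries of absolute value 1/√2; the sign convention of the difference (pre-event minus post-event) is an assumption, and all values are hypothetical.

```python
import math

S = 1.0 / math.sqrt(2.0)
C = [[S, S],
     [S, -S]]   # assumed two-dimensional basis

def matmul(m, n):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*n))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in m]

# Rows: four Kennaugh-like elements of one pixel (first row: intensity).
# Columns: pre-event and post-event acquisition (hypothetical values).
K = [[0.60, 0.40],
     [0.10, 0.10],
     [-0.05, 0.05],
     [0.02, 0.00]]

K_mt = matmul(K, C)                # right multiplication with the temporal basis
sums = [row[0] for row in K_mt]    # scaled sum of both dates per element
diffs = [row[1] for row in K_mt]   # scaled difference (pre minus post) per element
```

The first entry of `sums` remains an intensity measure, and an unchanged element such as the second row yields a difference of zero.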

2.3.2. Gradients and Curvature

Considering more than two images, for example, four dates , , , and , more descriptors than sum and difference could be evaluated. The temporal combination then was realized by the quaternion basis Q likewise resulting in a four by four matrix of "differential" Kennaugh-like elements.

The matrix could be interpreted as follows: the first column refers to the sum of all Kennaugh elements, that is, to a most stable mean image or a low pass filter. The second column highlighted high frequency gradients or a high pass filter. The third column calculated the mean temporal gradient or a band pass filter. The fourth column produced the temporal curvature. All descriptors were given in critical sampling, but representing the whole information contained in the source products due to the orthogonality of the transformation matrix.
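The interpretation of the four columns can be made tangible with idealized time series. The sketch below assumes a four-by-four basis with entries ±1/2 whose columns are ordered as described above (mean, alternation, mean gradient, curvature); the actual order and signs in Q may differ. The function name `temporal_descriptors` and the input series are hypothetical.

```python
# Assumed temporal basis; columns: mean, alternation, gradient, curvature.
Q = [[0.5,  0.5,  0.5,  0.5],
     [0.5, -0.5,  0.5, -0.5],
     [0.5,  0.5, -0.5, -0.5],
     [0.5, -0.5, -0.5,  0.5]]

def temporal_descriptors(series):
    """Right-multiply one element observed at four dates with Q."""
    return [sum(series[i] * Q[i][j] for i in range(4)) for j in range(4)]

constant = temporal_descriptors([1.0, 1.0, 1.0, 1.0])     # only the mean responds
alternating = temporal_descriptors([1.0, 0.0, 1.0, 0.0])  # high-frequency column responds
step = temporal_descriptors([1.0, 1.0, 0.0, 0.0])         # mean gradient column responds
bowl = temporal_descriptors([1.0, 0.0, 0.0, 1.0])         # curvature column responds
```

Besides the mean in the first column, each idealized series activates exactly one further column, which matches the reading as low pass, high pass, gradient, and curvature in this assumed arrangement.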

2.3.3. General Time Series Analysis

The Sentinel-1 mission with its repeat pass acquisitions every six days delivers sixteen images for a quarter of a year. In this case, the temporal fusion could simply be replaced by the Sedenion basis S without limitation of the generality.

With respect to longer time spans, hypercomplex bases of higher order were also possible, for example, the Z matrix for half a year or the V matrix for a whole year, see Section 2.1.5. The inclusion of further image layers would require the substitution of Q at the left of Equation (15) and would therefore increase the number of rows, that is, lead to longer vectors. In any case, the concept guaranteed that the first element always consists of an intensity measure that could be used for the normalization of all other elements. To conclude, we could affirm the third research question: it was possible to introduce time as a new dimension in the same way that multi-source images were combined. Thus, the consistency of the framework was guaranteed. The identical procedure equally allows the inclusion of spatial features like multi-row and multi-column image chips for a robust description of spatial patterns.
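The two-sided structure (one basis on the left for the layers, one on the right for the dates) can be sketched generically. The helper `sylvester_hadamard` and the random input stack are hypothetical; Sylvester-Hadamard matrices are used only as assumed stand-ins for the hypercomplex bases Q and S of Section 2.1.

```python
import numpy as np

def sylvester_hadamard(order: int) -> np.ndarray:
    """Return the Sylvester-Hadamard matrix of size order x order (order = 2**n)."""
    if order < 1 or order & (order - 1):
        raise ValueError("order must be a power of two")
    h2 = np.array([[1.0, 1.0], [1.0, -1.0]])
    h = np.array([[1.0]])
    while h.shape[0] < order:
        h = np.kron(h, h2)
    return h

rng = np.random.default_rng(0)
# Hypothetical stack: 4 image layers (rows) x 16 acquisitions (columns).
stack = rng.uniform(0.01, 0.5, size=(4, 16))

left = sylvester_hadamard(4)    # stand-in for the spectral basis (Q)
right = sylvester_hadamard(16)  # stand-in for the temporal basis (S)

K = left @ stack @ right

# The first element collects the sum over all layers and dates, that is, an
# intensity measure that can normalize all remaining elements.
K_norm = K / K[0, 0]
```

Adding image layers only changes the size of `left`, and adding dates only changes the size of `right`, which mirrors the independence of the two dimensions described above.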

2.4. Data Scaling Approach

This section treats the possible scaling variants of the defined elements which can be linear, logarithmic, or even normalized to a closed range with respect to the application. The origin of the elements—vectors from multi-source or matrices from multi-temporal data—is completely irrelevant.

2.4.1. Linear Scale

All image data from optical remote sensing satellites were delivered in reflectance values, mostly multiplied by a calibration factor in order to guarantee integer values. SAR data, in contrast, were given as real and imaginary parts in single look slant range products or as amplitude data in the case of ground range or geocoded products. The conversion from the delivered scaling to intensity values could be found in the respective product specifications. The data range of linearly scaled reflectance data is given by whereas the mode of the exponential distribution could be found slightly above zero. Adequate storage in digital numbers was therefore quite difficult, which resulted in a high radiometric sampling of usually at least 16 bit nowadays.

2.4.2. Hyperbolic Tangent Normalized Scale

In order to reduce the bit depth without noteworthy loss of information, a normalization approach was introduced in Reference [11]. The core of this hyperbolic tangent (TANH) normalization was a division of all Kennaugh elements except the first by the total reflectance contained in the first element (the first element of the vector or of the main diagonal, respectively) and the reduction of this first element to a reference intensity, similar to differential Kennaugh elements [13] where the pre-event intensity served as reference for the post-event intensity.

The resulting normalized elements shared a closed data range between −1 and +1, which simplified not only the interpretation based on, for example, Support Vector Machines, but also the conversion to digital numbers. The standard sampling of 16 bit enables a data range of ±48 dB with a maximum radiometric sampling of dB. The reduction to 8 bit still covered a range of ±24 dB with a maximum radiometric sampling of still dB. The range where the radiometric sampling was better than 1 dB is given by ±41.7 dB and ±17.5 dB, respectively. Thus, the loss of information should be negligible in comparison to a conversion of the linearly scaled variables. The possible reduction of the radiometric sampling to only a few bins enabled new classification approaches like the histogram classification [26].
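The quoted decibel ranges can be checked numerically with a small sketch. It rests on two assumptions that are not spelled out in this section: a normalized value k relates to decibels via dB = (20 / ln 10) · artanh(k), and the outermost quantization level of a b-bit image lies at 1 − 2^(1−b). The function names are hypothetical.

```python
import math

DB_PER_NEPER = 20.0 / math.log(10.0)  # about 8.686 (assumed conversion factor)

def db_range(bits: int) -> float:
    """Largest |dB| value representable with `bits` bit over ]-1, +1[."""
    k_max = 1.0 - 2.0 ** (1 - bits)   # outermost quantization level (assumed)
    return DB_PER_NEPER * math.atanh(k_max)

def db_range_finer_than(bits: int, limit_db: float = 1.0) -> float:
    """|dB| range within which one quantization step stays below `limit_db`."""
    step = 2.0 ** (1 - bits)          # step width in normalized units
    # d(dB)/dk = DB_PER_NEPER / (1 - k**2); solve step * d(dB)/dk = limit_db.
    k_limit = math.sqrt(1.0 - DB_PER_NEPER * step / limit_db)
    return DB_PER_NEPER * math.atanh(k_limit)

for bits in (16, 8):
    print(bits, round(db_range(bits), 1), round(db_range_finer_than(bits), 1))
```

Under these assumptions the sketch lands close to the figures quoted above (about ±48 dB and ±24 dB for the full range, and roughly ±41.7 dB and ±17.5 dB for the range sampled finer than 1 dB).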

2.4.3. Logarithmic Scale

The reason why TANH normalized values could be expressed in decibel, thus in logarithmic scale was pointed out in Reference [11]. It could be shown that the area hyperbolic tangent of the normalized elements gave the variables in logarithmic scale which differed from the unit decibel only by a constant factor which was the same for all normalized Kennaugh-like elements including .
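As a short worked derivation of the constant factor, assume that the normalized intensity takes the form k = (I − 1)/(I + 1) for an intensity I relative to the reference intensity. This form is not restated in this section and is an assumption here.

```latex
k = \frac{I - 1}{I + 1} = \tanh\!\left(\tfrac{1}{2}\ln I\right)
\quad\Longrightarrow\quad
\operatorname{artanh}(k) = \tfrac{1}{2}\ln I ,
\qquad
10\,\log_{10} I = \frac{20}{\ln 10}\,\operatorname{artanh}(k)
\approx 8.686\,\operatorname{artanh}(k).
```

Under this assumption the constant factor between the area hyperbolic tangent and the decibel value is 20 / ln 10.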

The logarithmic scale was suitable when layers in cardinal scale should be included on the one hand, see Section 2.2.5. On the other hand, it resulted in layers with more or less a normal distribution which was required by many parameter-based classification approaches like the maximum likelihood classification. Regarding data compression, the logarithmic scaling was superior to the linear scaling because of the variable sampling rate (high around zero and lower towards infinity), but inferior to the normalized scaling because of its open data range .

2.5. Evaluation Approach

The evaluation of data fusion approaches was not a trivial task. Most scientists conducted a whole classification for each data set as well as for the fused one separately and compared the classification results. The improvement was expected to show up in a lower number of misclassifications using the fused data set. This was truly an adequate approach for a predefined combination of data set, classification task, and algorithm. As we were aiming at a universal approach for the data preparation, it was neither reasonable to focus on one application case nor possible to cover all possible applications. Therefore, we decided in favour of the following validation strategy composed of traditional and novel methods.

2.5.1. Visual Inspection

Though very subjective, the visual inspection of imagery still was the most reliable interpretation technique with a view to extremely complex or high-dimensional tasks. The high dimensionality was present in the multi-source and multi-temporal fusion. Regarding the Quaternion transform, four spectral (or polarimetric) dimensions met four temporal dimensions, which resulted in sixteen elements. The semantic subsampling to a few land cover classes was also very difficult, because the classes had to represent single temporal developments which could be highly variable [15]. Therefore, the multi-temporal fusion was validated by a visual inspection of RGB (Red-Green-Blue) compositions of the single multi-spectral elements along the temporal dimension, which is provided by the single columns of the matrix. To support the conclusions drawn from visual inspection, the first five moments of the resulting distribution were calculated along the temporal and the spectral dimension separately. They were normalized to a linear deviation, that is, the mean absolute deviation for the first moment, the standard deviation for the second moment, the cube root of the third central moment, and so forth.
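The normalization of the moments to linear deviations can be sketched as follows. The function name `linear_moments` is hypothetical, and the signed root for odd moments is an assumption of this sketch, since the handling of negative odd moments is not specified in the text.

```python
import numpy as np

def linear_moments(values, max_order: int = 5) -> list:
    """Central moments 1..max_order, each reduced to the unit of the data."""
    x = np.asarray(values, dtype=float).ravel()
    centred = x - x.mean()
    out = [float(np.mean(np.abs(centred)))]      # order 1: mean absolute deviation
    for order in range(2, max_order + 1):
        m = float(np.mean(centred ** order))     # central moment of this order
        # Signed root keeps the sign of odd moments (assumption of this sketch).
        out.append(float(np.sign(m) * np.abs(m) ** (1.0 / order)))
    return out

# Hypothetical 4 spectral x 4 temporal matrix of one image element.
K = np.arange(16, dtype=float).reshape(4, 4)
along_time = [linear_moments(row) for row in K]        # one result per spectral row
along_spectrum = [linear_moments(col) for col in K.T]  # one result per temporal column
```

The second entry is the (population) standard deviation, so all five values share the unit of the input data and can be compared directly.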

2.5.2. Class Signatures

The multi-source image fusion resulted in a feature vector which was much easier to interpret. The signatures of different land cover classes were expected to be quite stable within the region of interest and thus could be described by the distribution of the belonging pixels in the normalized Kennaugh space. Correlations between the single Kennaugh elements, which are taken into account in some parametric classifiers like the Maximum Likelihood Estimation (MLE), are of minor importance to modern classifiers like Random Forest or the multi-scale histogram classification [30], owing to the immense computing demand when evaluating multi-variable histograms with common statistical measures like mutual information. Our study will consider both: standard MLE and similarity estimation.

2.5.3. Maximum Likelihood Contingency Evaluation

One traditional method to compare class signatures with individual signatures was given by MLE. This parametric approach described the class signatures by multi-variate normal distributions defined via a mean vector and a covariance matrix. The discriminator for each pixel vector in logarithmic scale [65] then unfolded to

Each pixel in the reference data set was attributed to the class with the maximum likelihood. After this step, a contingency matrix of the training areas, similar to the confusion matrix for classifications, was calculated. As this procedure had to be performed for varying radiometric resolutions, that is, different binnings, only the total accuracy and the κ-coefficient were reported as indicators for the quality of the class description.
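The procedure can be sketched with the textbook Gaussian maximum likelihood discriminant, g_i(x) = −ln|Σ_i| − (x − μ_i)ᵀ Σ_i⁻¹ (x − μ_i) with equal priors. This is the standard form and may differ in constants from the equation used in the article; all function names and the synthetic two-class data are hypothetical.

```python
import numpy as np

def fit_class(samples: np.ndarray):
    """Mean vector and covariance matrix of one class (rows = pixels)."""
    return samples.mean(axis=0), np.cov(samples, rowvar=False)

def discriminant(x: np.ndarray, mean: np.ndarray, cov: np.ndarray) -> np.ndarray:
    """Gaussian log-likelihood up to a constant, equal priors assumed."""
    diff = x - mean
    maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    return -np.linalg.slogdet(cov)[1] - maha

def contingency(true_labels, predicted, n_classes: int) -> np.ndarray:
    """Count matrix with true classes in rows and assigned classes in columns."""
    table = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(table, (true_labels, predicted), 1)
    return table

def accuracy_and_kappa(table: np.ndarray):
    """Total accuracy and Cohen's kappa of a contingency matrix."""
    total = table.sum()
    observed = np.trace(table) / total
    expected = (table.sum(axis=0) * table.sum(axis=1)).sum() / total ** 2
    return observed, (observed - expected) / (1.0 - expected)

# Hypothetical two-class training data in a three-element feature space.
rng = np.random.default_rng(1)
classes = [rng.normal(loc, 0.5, size=(200, 3)) for loc in (0.0, 3.0)]
params = [fit_class(c) for c in classes]
x = np.vstack(classes)
y = np.repeat(np.arange(2), 200)
scores = np.stack([discriminant(x, m, c) for m, c in params], axis=1)
table = contingency(y, scores.argmax(axis=1), 2)
overall_accuracy, kappa = accuracy_and_kappa(table)
```

Because the same samples serve for fitting and for assignment, `table` is a contingency matrix of the training areas rather than a confusion matrix from independent control samples.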

2.5.4. Similarity of Signatures

As parametric approaches like the MLE always imply a certain distribution, non-parametric methods evaluating the empirically derived distributions exclusively such as the Correlation Coefficient, the Kullback-Leibler-Divergence, the Cross-Entropy, or the similarity were preferred nowadays. The similarity was designed especially for the comparison of discrete histograms from normalized remote sensing data with or without consideration of the inter-channel correlations [26]. For the case of normalized elements without noteworthy correlation, it unfolded to

where n denotes the maximum number of bins and m the number of dimensions compared, that is, Kennaugh-like elements. The root does not result directly from the derivation, but it balances the varying number of input elements when testing scenarios with varying numbers of input channels. The similarity had a closed data range from 0 to 1, like any probability value. For visualization purposes the unit decibel was preferable and will be used in Section 3.

2.5.5. Intra- and Inter-Class Similarities

Using the signatures defined in Section 2.5.2 and the similarity measure given in Section 2.5.4, intra-class and inter-class similarities were derived and compared in order to estimate the impact of the data fusion and scaling on the class separability. As the similarity could only be calculated for two signatures at a time, the arithmetic mean of all possible combinations was chosen as the representative value for the intra- and inter-class similarities. We expected high intra-class similarities and low inter-class similarities. In the optimal case, they do not converge until the radiometric sampling is very low, for example, binary. In addition to several diagrams that depict the influence for each class separately, the mean gain over all classes was given in numbers. It is composed of the difference between the mean intra-class and the mean inter-class similarity, indicating the relative increase in class similarity. The mean similarities were calculated as the arithmetic mean over all samples of the single classes. Because the similarities are averaged in logarithmic scale, this equals the geometric mean of the individual class similarities, which means that it is very sensitive towards possible low outliers.

2.5.6. Gain of Intra- vs. Inter-Class-Similarity

In favour of a more comprehensive interpretation, the manifold results from the preceding section were rearranged and combined to a gain in similarity, that is, the extent to which the mean similarity of signatures was increased or decreased. The gain was simply defined as the difference between the mean logarithmic similarity of objects belonging to the same class and the mean logarithmic similarity of objects belonging to other classes. This reflected the logarithmic quotient of the respective similarities and could again be given in the unit decibel. It provided a measure equivalent to the total accuracy and κ-coefficient evaluated for the MLE comparison with mean class signatures, but derived from the similarity of individual signatures exclusively this time.
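A minimal sketch of this gain, assuming that the decibel value of a similarity s is 10 · log10(s) and that pairwise similarities are already available as a matrix. The function name and the example values are hypothetical.

```python
import numpy as np

def similarity_gain_db(similarity: np.ndarray, labels: np.ndarray) -> float:
    """Mean intra-class minus mean inter-class similarity in decibel."""
    sim_db = 10.0 * np.log10(similarity)              # assumed dB convention
    same = labels[:, None] == labels[None, :]
    off_diagonal = ~np.eye(len(labels), dtype=bool)   # skip self-comparisons
    intra = sim_db[same & off_diagonal].mean()
    inter = sim_db[~same].mean()
    return float(intra - inter)

# Hypothetical similarities of four objects, two per class. The matrix need
# not be symmetric, because the similarity measure is not commutative.
labels = np.array([0, 0, 1, 1])
similarity = np.array([[1.00, 0.50, 0.01, 0.02],
                       [0.40, 1.00, 0.02, 0.01],
                       [0.01, 0.02, 1.00, 0.50],
                       [0.02, 0.01, 0.40, 1.00]])
gain = similarity_gain_db(similarity, labels)
```

Averaging in decibel corresponds to a geometric mean in linear scale, so the gain equals the logarithmic quotient of the geometric mean intra-class and inter-class similarities.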

3. Results

The capability of the transforms shown in the previous section will be demonstrated in the following by selected examples. Understandably, the choice of examples was not sufficient to cover all possible applications, but enough to provide a rough impression of the potential inherent to the novel technique.

3.1. Multi-Temporal Image Fusion

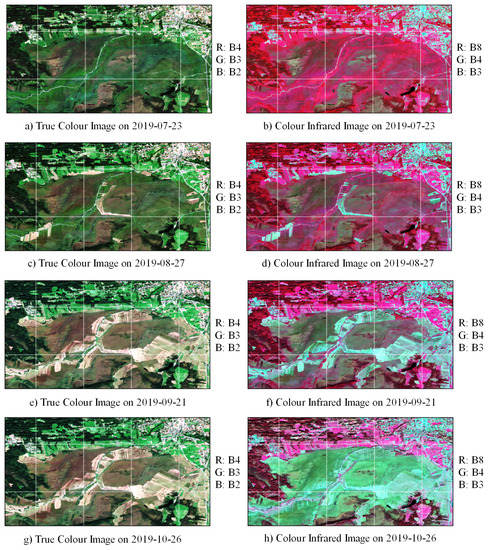

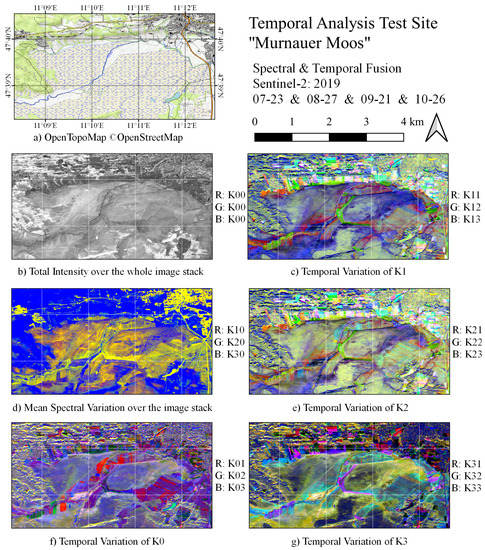

The multi-temporal image fusion presented in Section 2.3 was applied to a data set consisting of four Sentinel-2 geocoded and atmospherically corrected L2A images over the “Murnauer Moos” near the Alps in southern Germany. The area is characterized by restored, quasi natural moor areas, agricultural land, forests, and built-up areas. Only the bottom-of-atmosphere (BOA) reflectance values contained in the 10 m layers are considered in this example for reasons of simplicity. The almost cloud-free images were acquired on 2019-07-23, 2019-08-27, 2019-09-21, and 2019-10-26. True Colour and Colour Infrared images of the data stack are depicted in Figure 2. The reflectance measures were transformed to multi-spectral Kennaugh elements according to Equation (3) and then combined to multi-temporal elements according to Equation (14). The single rows of the resulting multi-spectral and multi-temporal Kennaugh matrix are displayed in Figure 3, gathered to multi-temporal RGB images, that is, each channel refers to one spectral Kennaugh element according to the indication to the right of the images.

Figure 2.

Sentinel-2 time series of True Colour Images (left column) and Colour Infrared Images (right column) from July to October 2019 over the “Murnauer Moos” in southern Germany. For coordinate reference, scale, and north arrow see Figure 3a.

Figure 3.

Multi-temporal combination of the Kennaugh elements to the four-by-four matrix calculated from the Sentinel-2 10 m layers imaged in July, August, September, and October 2019 over the “Murnauer Moos”, see Figure 2. The depicted channels are indicated on the right side of each image. The coordinates are given in latitude and longitude on the WGS84 ellipsoid.

The normalized moments (e.g., the standard deviation as compared in Reference [66]) along the temporal and the spectral dimension are listed in Table 1 divided in the four spectral or temporal channels remaining in the respective case.

Table 1.

Empirical central moments one to five normalized to linear deviations of the distributions adopted by the multi-spectral and multi-temporal reflectance images and Kennaugh-like elements fused on a two-dimensional Quaternion basis for the “Murnauer Moos” test site.

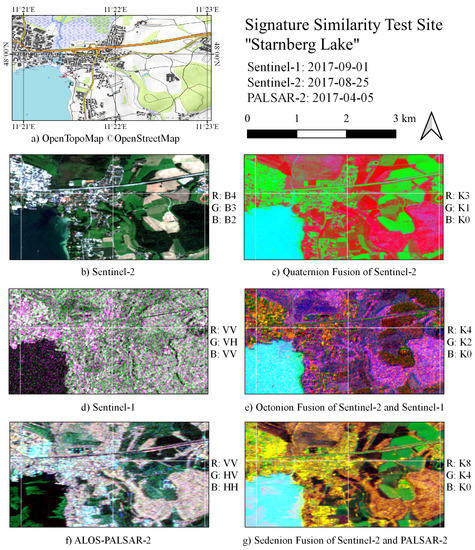

3.2. Multi-Source Image Fusion

This section summarizes the results of the multi-source image fusion to one data set consisting of Sentinel-1, Sentinel-2, and ALOS-PALSAR-2 acquisitions for the three regions of interest (ROI) in Germany, namely the “Starnberg Lake” (ROI 1) in southern Germany, the “Ruhr Metropolitan Area” (ROI 2) in western Germany, and the “Potsdam Lake Land” (ROI 3) near the capital in eastern Germany. Detailed descriptions of the input data can be found in Table 2. All images were projected into the earth-fixed UTM coordinate system with a uniform pixel spacing of 10 m by 10 m, resulting in slightly varying look factors according to [28], which are ignored in the current study for reasons of simplicity. The following figures are all reprojected to latitude and longitude in WGS84.

Table 2.

The remote sensing data subject to the presented study.

The SAR data were preprocessed by the Multi-SAR System [11], exclusively available at DLR (Oberpfaffenhofen), to geocoded, calibrated, and normalized Kennaugh elements. The multi-spectral data were provided in geocoded and radiometrically calibrated BOA reflectance values and transformed into Kennaugh-like elements according to the formulas given above. The study considers two cases: a fusion on the Octonion basis including Sentinel-1 () and Sentinel-2 () and a fusion on the Sedenion basis including ALOS-PALSAR-2 () and Sentinel-2 (). In all three test sites, sample segments were manually selected in order to represent the following land cover classes: (1) Water, (2) Forest, (3) Field, (4) Meadow, and (5) Urban. The class signatures were derived from labelled segments generated in a previous study [29].

3.2.1. Fused Images

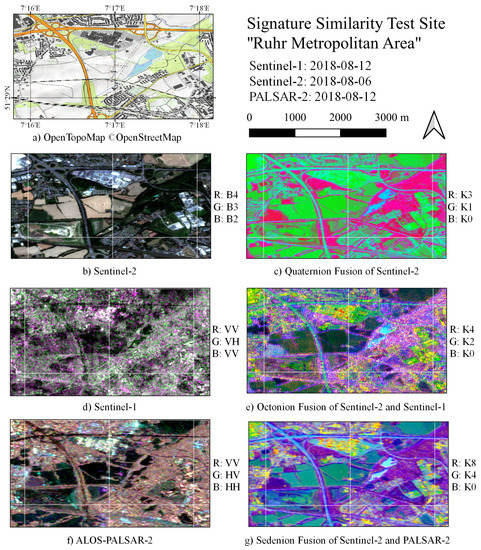

The visualization of Kennaugh-like elements was not an easy task because of their central distribution where the deviation from zero in both directions indicated information. The common visualization comprises three reflectance channels: Red, Green, and Blue with a non-negative exponential distribution. Therefore, the input data sets were presented in the accustomed way: True Colour Images for multi-spectral images of Sentinel-2 and Bourgeaud decomposition (i.e., the intensity channels) for SAR images of Sentinel-1 and ALOS-PALSAR-2. Out of the collection of fused Kennaugh-like elements, only a selection of three elements (indicated on the right side of each image) is depicted in logarithmic scale. A subset of the images for the first region of interest can be found in Figure 4. Selected subsets of the second and the third test site are illustrated in the same way in Figure 5 and Figure 6.

Figure 4.

Subset of ROI 1: the “Starnberg Lake” south of Munich (coordinate reference WGS84).

Figure 5.

Subset of ROI 2: the “Ruhr Metropolitan Area” (coordinate reference WGS84).

Figure 6.

Subset of ROI 3: the “Potsdam Lake Land” south-west of Berlin (coordinate reference WGS84).

3.2.2. Signature Stability

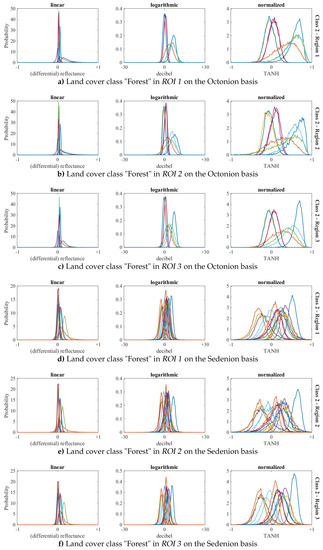

The illustration of multi-spectral data with more than three channels was quite difficult. In optical remote sensing the four-channel information was typically divided into one true colour image (TCI) and one colour infra-red image (CIR) as illustrated in Figure 2, being well aware of the high redundancy. In order to generate an illustration of all eight or sixteen channels of the fused data set, histograms of three different scaling variants were computed. The linear scaling in the left column of Figure 7 and Figure 8 equals the standard methodology working on reflectance values (optical remote sensing) and covariance matrices (radar remote sensing), respectively. Due to the orthogonality of the transform between reflectance channels and Kennaugh-like elements [28] as well as between the covariance matrix and the Kennaugh elements [64], the statement drawn from the histograms was transferable. Elements in linear scale consist of one reflectance value (the total intensity) and several reflectance differences ranging from to . The second variant considered in the middle column of Figure 7 and Figure 8 was the logarithmic scaling in the unit decibel (dB), which is quite usual in radar remote sensing and electronic engineering in general. This scaling adopted a multiplicative characteristic of the input data that was supposed to be composed of intensity quotients. The typical range of decibel values, though theoretically unlimited, was restricted to −30 dB to +30 dB. The normalized Kennaugh-like elements displayed in the right column of Figure 7 and Figure 8 shared the unique property of a closed value range between −1 and +1, which allowed for the most effective radiometric sampling, as studied in the following section. In order to show the advantages of our approach, two of the five classes of the study that were not easy to describe with traditional methods due to their high variability were chosen for demonstration, namely “Forest” in Figure 7 and “Urban” in Figure 8.

Figure 7.

The distribution of the Kennaugh-like elements on the Octonion basis fusing Sentinel-1 and Sentinel-2 (a–c) and on the Sedenion basis fusing ALOS-PALSAR-2 and Sentinel-2 (d–f) for class 2 “Forest” in linear, logarithmic, and normalized scaling per regions of interest.

Figure 8.

The distribution of the Kennaugh-like elements on the Octonion basis fusing Sentinel-1 and Sentinel-2 (a–c) and on the Sedenion basis fusing ALOS-PALSAR-2 and Sentinel-2 (d–f) for class 5 “Urban” in linear, logarithmic, and normalized scaling per ROI.

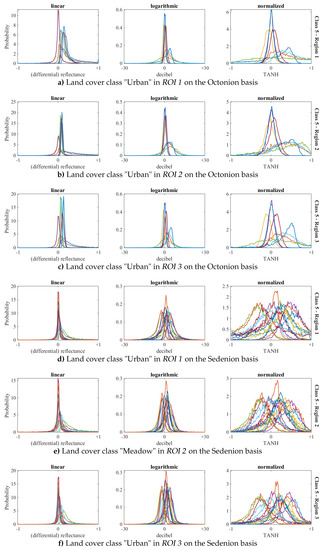

3.2.3. Maximum Likelihood Contingency

The total accuracy and the κ-coefficient achieved by an MLE of the class assignment within the reference data are depicted in Figure 9 with respect to the ROI (line style), the input data (line colour), and the binning (right axis). For only one bin, the total accuracy dropped to the level to be expected when five equally distributed classes were assigned randomly, that is, one in five. The κ-coefficient lowered to zero in this case, underlining that the class assignment became purely random.

Figure 9.

Total Accuracy and κ-coefficient of the contingency matrices achieved using a Maximum-Likelihood class discriminator in relation to the test site (“Starnberg Lake” solid, “Ruhr Metropolitan Area” dashed, and “Potsdam Lake Land” dot-dashed lines), the channel combination and scaling (linear reflectance, logarithmic Kennaugh-like elements, and normalized Kennaugh-like elements, see legend), and the radiometric sampling given by the number of bins.

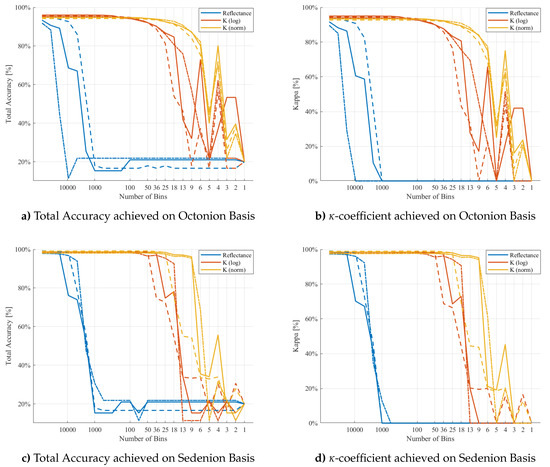

3.2.4. Signature Similarity

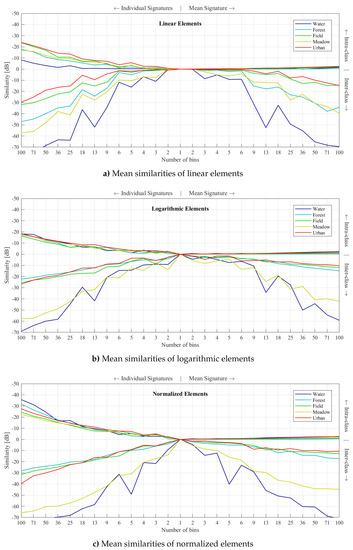

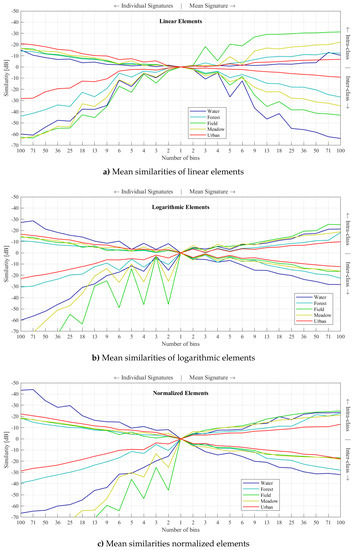

The similarity of two signatures was calculated via Equation (19). Lacking symmetry, the similarity measure was not commutative, that is, the similarity of class 1 to class 2 in general differed from the similarity of class 2 to class 1. Therefore, both cases were considered. For reasons of clarity, only the mean similarities of the respective sample class were kept in Figure 10 for the Octonion fusion and Figure 11 for the Sedenion fusion. The diagrams were split into four parts:

- upper left: the mean intra-class similarities of the individual object signatures

- upper right: the mean intra-class similarities of the mean class signatures

- lower left: the mean inter-class similarities of the individual object signatures

- lower right: the mean inter-class similarities of the mean class signatures

All similarities were provided in logarithmic scaling in the decibel unit. The searched class was indicated by the colour according to the legends contained in the respective figures.

Figure 10.

Mean intra- (upper part) and inter-class (lower part) signature similarity on the Octonion basis using (a) linear, (b) logarithmic, and (c) normalized elements evaluated with individual (left side) and mean signatures (right side) for the “Potsdam Lake Land” test site.

Figure 11.

Mean intra- (upper part) and inter-class (lower part) signature similarity on the Sedenion basis using (a) linear, (b) logarithmic, and (c) normalized elements evaluated with individual (left side) and mean signatures (right side) for the “Starnberg Lake” test site.

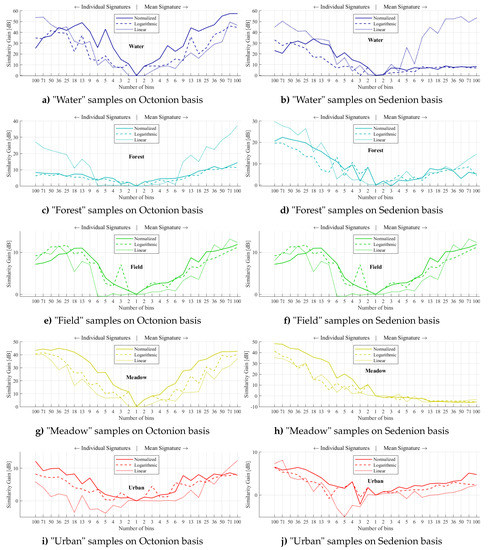

3.2.5. Similarity Gain

The mean gain in class similarity obtained by comparing the intra-class to the inter-class similarities is listed in Table 3 for the fusion on the Octonion basis and in Table 4 for the fusion on the Sedenion basis. Though the analysis was performed separately for the three test sites, the results were quite similar. Obviously, the Sedenion fusion with its 16 channels even raised the gain in comparison to the Octonion fusion with its 8 channels. As expected, the normalized Kennaugh-like elements showed the highest gain for low radiometric sampling. The gain in the logarithmic scale was remarkably variable. The linear scale was only applicable with higher radiometric sampling. In the case of the Sedenion fusion (see Table 4) it only seldom exceeded the gain of normalized scaling. Regarding the Octonion fusion in Table 3, the linear scaling produced higher values from 20 bins upwards. In order to provide a more detailed view of the single classes under study, selected examples of the similarity gain per class are plotted in Figure 12.

Table 3.

Enhancement of the mean class distinction using the Octonion basis in different scaling variants (rows) and for a varying number of bins (columns) for the three test sites, evaluated over all samples and given in decibel [dB].

Table 4.

Enhancement of the mean class distinction using the Sedenion basis in different scaling variants (rows) and for a varying number of bins (columns) for the three test sites, evaluated over all samples and given in decibel [dB].

Figure 12.

Similarity gain of intra- vs. inter-class comparison for individual (left part) and mean signatures (right part) in three different scalings: normalized (solid), logarithmic (dashed), and linear (dotted) on the Octonion (left) and the Sedenion basis (right) evaluated for each class under study separately.

4. Discussion

This section interprets the results shown above and draws the conclusions concerning the application of the novel data fusion approach. In order to avoid interfering influences from varying classification methods and parameters as studied by [67], we base the whole interpretation on statistical measures and the identical set of class signatures.

4.1. Multi-Temporal Image Fusion

The benefit from the multi-temporal image fusion becomes obvious when comparing Figure 3 with Figure 2. The standard method based on reflectance measurements in several spectral channels suffers from the high correlation of the input channels. Therefore, mostly brightness changes are clearly visible, see Figure 2. The changes in brightness are summarized in the temporal variation of the total intensity in Figure 3f. The multi-temporal combination of the spectral properties in Figure 3d indicates that the mean spectral information content is quite low, which was expected when looking at the single images in Figure 2. The really characteristic changes for single land cover classes are all captured in the temporal variation of spectral Kennaugh elements. Agricultural land, for example, shows up in diverse colours. Especially in Figure 3f,g the moor areas reveal a fine structure which was not visible at all in the standard display of Figure 2. The statistical evaluation in Table 1 reveals three points: (1) The spectral variability in the four acquisitions (left part of the table) is equally low for reflectance values, gets variable with the transformation to linear Kennaugh-like elements, and increases significantly with the scaling of the Kennaugh-like elements. (2) The temporal variability in the right part of the table is even lower for the single spectral channels. The transformation to Kennaugh-like elements causes an increase which is again intensified when applying the typical scaling. (3) All channels show comparably high values over all five moments, that is, the underlying distributions seem to be very complex, which inhibits the use of simple parametric estimators. Thus, the use of non-parametric approaches like the similarity is highly recommended. A similar validation method was already used by [68] in order to evaluate the gain by the fusion of hyperspectral and panchromatic data.
Due to the complexity of the landscape, the clustering to multi-temporal class signatures is quite a difficult task and will therefore be subject to future studies. The use of enhanced clustering methods like self-organizing maps, for example, would go far beyond the scope of this article. According to [69], the multi-temporal combination promises up to 91% overall accuracy and 89% for the κ-coefficient with a relatively high thematic sampling of nine land cover classes when using enhanced classification methods. To sum up, the combination to multi-temporal Kennaugh-like elements highlights phenomena that were not apparent in the original reflectance data.

4.2. Multi-source Image Fusion

This section interprets the results of the image fusion on the Octonion and the Sedenion basis shown above. The quantitative evaluation will focus on the multi-source Kennaugh elements and take the signature stability and similarity over the classes provided in the reference data set into account.

4.2.1. Visual Inspection

The subset of the “Starnberg Lake” test site in Figure 4 depicts relatively smooth water and meadow areas in the optical true colour image whereas settlements appear much more heterogeneous. The SAR intensity images share the typical characteristics: smooth surfaces with respect to the wavelength are dark. The ALOS-PALSAR-2 image in Figure 4f reveals some wind effects that cause a higher surface scattering in the cross-polarized channel. Regarding the meadows, Sentinel-1 shows a strong texture (Figure 4d), but ALOS-PALSAR-2 shows relatively smooth areas (Figure 4f) due to the respective wavelength. The quite clear segments reported by the optical image are preserved and overlaid with the SAR texture when fusing both image sources on the Octonion basis (Figure 4e) or the Sedenion basis (Figure 4g). Even the open water receives a certain texture that reflects the local wind situation affecting the polarimetric scattering behaviour as described above.

Figure 5 illustrates a subset of the “Ruhr Metropolitan Area” test site. The settlements are clearly distinguishable by their blue tone in the optical true colour image (Figure 5b). In the Sentinel-1 intensity image they cannot be discriminated from the surrounding forests and meadows. The ALOS-PALSAR-2 enables the distinction between meadows that appear dark due to their smoothness with respect to the wavelength and higher objects. The appearance of settlements depends on the orientation, that is, there are very bright spots, but also areas with a mean intensity that can hardly be discriminated from forests. The fused image unites characteristics of SAR and Optics. Settlements are mapped in yellow to red in both the Octonion (Figure 5e) and the Sedenion (Figure 5g) fusion according to their orientation. Agricultural land receives a slight texture that allows for a more detailed analysis of the crop. Water surfaces again appear in pale blue with a clear shape.

The most impressive example for the data fusion can be found in Figure 6 where a subset of the “Potsdam Lake Land” is illustrated. The clear boundaries visible in the optical image are combined with a refined SAR texture. Higher vegetation (e.g., forest) receives a fine and very diverse texture, possibly allowing for a more detailed analysis. Low vegetation is coloured in green tones according to the wavelength: with a higher variation in C-band (Figure 6e) and a lower variation in L-band (Figure 6g). Even the infrastructure is clearly mapped in the Sedenion fusion. Smooth water is exactly shaped in pale blue with only a slight pink texture in the cross-polarized channel of L-band, cf. Figure 6f,e. In summary, the fused images illustrate scattering characteristics which were not visible at all in the single acquisitions. As the visual impression is often misleading [65], the three test sites are validated quantitatively in the following.

4.2.2. Mean Class Signatures

The mean class signatures of the land cover classes “Forest” and “Urban” are provided in Figure 7 and Figure 8 for the fusion on the Octonion basis (a–c) and on the Sedenion basis (d–f), respectively. The elements in linear scale show only minor deviations from zero over all classes, resulting in very slim peaks in the probability density functions (PDF), but with a few very high and very low values. This effect even intensifies with the increasing number of input channels from the Octonion to the Sedenion basis. The logarithmic scale provides diverse, but almost normally distributed elements, see middle column. The limitation to the range of [−30 dB, +30 dB] concerns only few values. Regarding typical noise levels of around dB, the values exceeding the limits can be seen as noise. Some undulations become visible that indicate a higher radiometric sampling than provided by the input data. These undulations even increase in the normalized scaling, see Figure 8. The closed data range and the width of the distributions allow a lower radiometric sampling with only negligible loss of information, which will be studied in the following section. The signatures over the three test sites appear quite similar. The fusion on the Octonion basis provides more distinct histograms than the Sedenion fusion, which can be attributed to the different input data, see Section 3.

4.2.3. Maximum Likelihood Contingency

The total accuracy and κ-coefficient of the contingency matrix derived from an MLE class assignment prove the advantage of the presented methodology. For a high radiometric sampling, the three methods deliver identical results. But as soon as the radiometric sampling is reduced to less than 10,000 bins, the class assignment based on reflectance values becomes more or less random (blue lines in Figure 9). The logarithmic Kennaugh-like elements deliver stable results even for a radiometric sampling with only 50 bins in the Octonion case (cf. Figure 9a,b) and even 25 in the Sedenion case (Figure 9c,d). Regarding the normalized scaling, the accuracy of the class assignment is still clearly over 80% both in the total accuracy and the κ-coefficient for 3 bit sampling with 8 bins. For lower sampling rates, the location of the bins seems to play a key role because the neighbouring entries vary by 50% and more. A binary image (1 bit) still provides a class distinction with a total accuracy of 30% to 40% and a κ-coefficient of 20% in some ROIs. This high variability for low sampling rates is confirmed by the signature similarity analysis in the following. With respect to the fourth research question we have to admit that Kennaugh-like elements seem to provide the same accuracy in the class assignment as traditional methods as long as the bit depth is sufficient, that is, 16 bit at least. With reduced bit depths, the novel descriptor surpasses reflectance values by far.
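Why the location of the bins matters at low sampling rates can be illustrated with a uniform quantizer over the closed range of the normalized elements. Uniform bins are an assumption of this sketch (the binning scheme of the study is not restated here), and the function names and input values are hypothetical.

```python
import numpy as np

def quantize(k, n_bins: int) -> np.ndarray:
    """Map normalized values in ]-1, +1[ to bin indices 0..n_bins-1 (uniform bins)."""
    k = np.asarray(k, dtype=float)
    idx = np.floor((k + 1.0) / 2.0 * n_bins).astype(int)
    return np.clip(idx, 0, n_bins - 1)

def bin_centres(n_bins: int) -> np.ndarray:
    """Normalized value represented by each bin index."""
    return (np.arange(n_bins) + 0.5) * 2.0 / n_bins - 1.0

k = np.array([-0.9, -0.05, 0.05, 0.9])   # hypothetical normalized elements
for n in (2, 3, 8):
    print(n, quantize(k, n), bin_centres(n))
```

With two bins, the small negative and the small positive value fall into different bins because a bin edge lies at zero. With three bins, both fall into the central bin and their sign is lost, which is one plausible reading of the strong variation between neighbouring bin counts.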

Owing to the great diversity of possible data combinations, the literature reports different methods for individual data sets and varying accuracy parameters. A combination of Sentinel-1 and Sentinel-2 using an object-based classifier promises an overall accuracy of 91% and a κ-coefficient of 89%. The classification of a THAICHOTE and Cosmo-Skymed fusion performed by SVM reaches an overall accuracy of 77% and a κ-coefficient of 67%, whereas an object-based method delivers 82% for the overall accuracy and 75% for the κ-coefficient [70]. The combination of TerraSAR-X and Quickbird data fused by different methods leads to overall accuracies from 75% (reflectance layers) and 82% (including texture measures) to 91% using refined methods [71]. The latter value is confirmed by a study that uses an INFOFUSE algorithm on WorldView-2 images, texture measures, and a digital surface model [72]. With respect to a simple maximum likelihood classification, the values drop to 82% total accuracy and 80% for the κ-coefficient. Higher accuracy values are exclusively reported for the fusion of multi-scale data acquired by the same sensor, in general for the purpose of pan-sharpening. For the Enhanced Thematic Mapper (ETM) of Landsat, 82% overall accuracy and 79% for the κ-coefficient are reported in Reference [65] when using the maximum likelihood approach. When fusing the multispectral channels with the panchromatic channel of IKONOS by the SFIM or Gram-Schmidt approach, the overall accuracy reaches almost 99% with a κ-coefficient of 98% according to [73]. Overall, the calculated accuracy measure depends on many different parameters [67]. One difference could be the usage of the contingency from the training samples in our case, and presumably in the latter article, in contrast to the confusion matrix from control samples.

4.2.4. Signature Similarity

The diagrams in Figure 10 and Figure 11 show the influence of the radiometric sampling on the class distinction. High values near zero in the intra-class similarity (upper part) and very low values in the inter-class similarity (lower part) should be guaranteed even with a very low number of bins. The distinction using linear elements on the Octonion basis is possible starting with three bins, see Figure 10a. On the Sedenion basis, even a radiometric sampling with only two bins enables a weak distinction of the classes. Figure 11c shows an extraordinarily high intra-class deviation of the mean signatures of the class “Water”, which is not acceptable, but a clear effect of the generally low backscattering. Apart from that, the intra-class similarities seldom exceed −20 dB, whereas the inter-class similarities can reach up to −90 dB, which is excellent. Looking at the similarity in logarithmic scale in Figure 11b, the high sampling around zero results in several lobes in the inter-class similarity of the individual signatures for fewer than nine bins. An even number of bins obviously leads to a better class distinction. This effect was already observed in the MLE contingency analysis discussed above. The class assignment is now possible to a certain degree even with only two bins. The same applies to the normalized elements that can be found in Figure 10c for the Octonion basis and in Figure 11c for the Sedenion basis. The main difference to the similarity in linear elements becomes visible when comparing Figure 10a to Figure 11a: the similarity of linearly scaled signatures decreases with an increasing number of bins quite symmetrically in the intra- and inter-class direction. This behaviour is independent of the class. 
The signatures of normalized elements show a stronger increase of around 10 dB in the inter-class direction than in the intra-class direction, proving that the similarity of a signature to signatures of the same class is ten times higher than its similarity to other classes, which is essential for the classification based on normalized elements with reduced radiometric sampling. At this point, we can answer the last research question: the reduction from typically 16 bit to 8 bit images still assures excellent class signatures using the new consistent multi-sensor framework. The radiometric sampling of 8 bit equals 256 bins. In this study, 100 bins already promise a class distinction of at least 10 dB and at most 75 dB using individual signatures, see left edge of Figure 10c. The reduction to 4 bit (or 16 bins) still allows a maximum class discrimination power of about 40 dB. Switching to 3 bit images with only 8 bins further reduces the class distinction. It is still measurable, however, reaching 5 dB (or 80% in the MLE analysis) on individual signatures in Figure 11c, which means that the intra-class similarity is about three times higher than the inter-class similarity.
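The conversions between bit depth and number of bins and between a difference in decibels and a linear factor can be retraced with the short sketch below. It assumes the usual power-type definition of the decibel (factor = 10^(dB/10)), which matches the statement that 10 dB corresponds to a factor of ten; the function names are hypothetical.

```python
def bins_from_bits(bits):
    """Number of radiometric bins available at a given bit depth."""
    return 2 ** bits

def db_to_factor(db):
    """Linear factor corresponding to a difference in decibels.

    Assumption: power-type decibels, i.e. factor = 10 ** (dB / 10).
    """
    return 10 ** (db / 10)

# Bit depths discussed in the text: 8 bit, 4 bit and 3 bit.
for bits in (8, 4, 3):
    print(bits, "bit ->", bins_from_bits(bits), "bins")

# Class distinction values discussed in the text.
for db in (5, 10, 40, 75):
    print(db, "dB -> factor", db_to_factor(db))
```

Under this assumption, 5 dB corresponds to a factor of roughly three and 40 dB to a factor of ten thousand.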

Only one study reported in the literature evaluates the classification accuracy as a function of the compression [66]. This study states an overall accuracy of 45% using a maximum likelihood classification and a compression ratio of 1:50. In our case, this means that the original sampling of 16 bit is reduced to one fiftieth, that is, a reduction from 65536 bins to 1311 bins or approximately 10 bit. The usage of a neural network classification method still promises 49%. The preparation in Kennaugh-like elements in logarithmic or normalized scale already reaches comparable similarity values with only two bins for most classes. With regard to the maximum likelihood method, whose accuracy is plotted in Figure 9, the total accuracy exceeds the threshold of 50% with 3 bit, that is, with only 8 bins. In terms of compression, this equals 8:65536 or 1:8192 for the radiometric compression exclusively.
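The arithmetic behind this comparison can be checked with a few lines. The sketch follows the simplification made in the text, namely that a compression ratio is interpreted as a purely radiometric reduction of the number of bins; the function names are hypothetical.

```python
import math

def equivalent_radiometric_sampling(full_bits, compression_ratio):
    """Bins and bit depth left if the number of bins is divided by the ratio."""
    full_bins = 2 ** full_bits
    bins = full_bins / compression_ratio
    return bins, math.log2(bins)

def radiometric_compression_ratio(full_bits, reduced_bits):
    """Ratio of bin counts between the full and the reduced bit depth."""
    return 2 ** full_bits // 2 ** reduced_bits

# A ratio of 1:50 applied to 16 bit data (65536 bins).
bins, bits = equivalent_radiometric_sampling(16, 50)
print(math.ceil(bins), "bins, about", round(bits, 1), "bit")

# Reduction from 16 bit to 3 bit (8 bins).
print("1:%d" % radiometric_compression_ratio(16, 3))
```

Note that the ratio compares the number of bins (8 against 65536) and not the number of bits (3 against 16); in terms of storage per pixel, the saving from 16 bit to 3 bit is considerably smaller than 1:8192.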

4.2.5. Similarity Gain

The similarity gain of intra-class over inter-class similarity has not been studied in the literature so far. Most publications on data fusion for land cover classification compare the accuracy before and after the fusion. For instance, this was done in [74] for the fusion of two SAR images and one optical image in a neural network. The compound classification produced an increase from 84% to 92% on average. Regarding the ETM+ fusion, the accuracy of 62% for the original image reaches 66% at most for different fusion algorithms [75]. Another study on the ETM+ fusion in combination with a supervised classification [76] reports a rise from 83% to 90% using the fused image. A higher spatial resolution seems to amplify the impact of the classification algorithm. The combination of Quickbird multispectral layers with its panchromatic layer classified by a maximum likelihood approach allows for a total accuracy of 58% before and 59% after the fusion, which is an almost negligible gain. Using the Euclidean distance instead promises 91% after image fusion in comparison to 87% before [77]. The values provided by these studies can be compared to the accuracy values plotted in Figure 9. For a high radiometric sampling, we cannot state any gain using our fused and normalized images. But with the reduction of the radiometric sampling to 1000 bins, the overall accuracy when classifying the original images falls to values around 20%, which is the expected value for the random assignment of five equally frequent classes. Hence, for images with a radiometric sampling between 50 bins and 1000 bins, we can state an increase from 20% to more than 90%, which equals an increase by more than 70 percentage points.
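The 20% baseline and the resulting gain can be written out explicitly. The derivation assumes that the random assignment is independent of the true class and that all K classes are equally frequent, as stated in the text.

```latex
\begin{align}
  \mathrm{E}[\text{accuracy}]
    &= \sum_{k=1}^{K} P(\text{true}=k)\,P(\text{assigned}=k)
     = \sum_{k=1}^{K} \frac{1}{K}\cdot\frac{1}{K}
     = \frac{1}{K}, \\
  K = 5 \;&\Rightarrow\; \mathrm{E}[\text{accuracy}] = 20\%, \\
  \Delta &= 90\% - 20\% = 70 \text{ percentage points}.
\end{align}
```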

The gain of the intra-class over the inter-class similarity, that is, the class discrimination, will be studied quantitatively in the following. The Octonion fusion in Table 3 indicates that the linear scaling is superior only for high radiometric sampling. For fewer than 20 bins, the gain is higher using logarithmic and normalized elements. With respect to 16 bins, which equals a 4 bit image, the values for normalized elements still range around 20 dB, which means that the similarity of samples belonging to the same class is around one hundred times higher than the similarity of samples belonging to different classes. Hence, the class discrimination is assured. The fusion on the Sedenion basis presented in Table 4 enables a gain in similarity of more than 30 dB, which equals a thousand times the inter-class similarity. The gain using linear elements decreases almost continuously with the reduction of the radiometric sampling to zero. The gain achieved using the logarithmic or normalized elements only seldom falls below 10 dB, that is, the intra-class similarity is almost always at least ten times higher than the inter-class similarity, independently of the radiometric sampling. The additional increase of the Sedenion fusion over the Octonion fusion is due to the higher number of channels and the completely different composition of the channels: Sentinel-1 with Sentinel-2 for the Octonion fusion and Sentinel-2 with ALOS-PALSAR-2 for the Sedenion fusion. The choice of the reference samples has a further impact that cannot be denied. In any case, the SAR-Optics fusion on the Sedenion basis would also allow the reduction of the radiometric sampling to a 3 bit image with 8 bins and would still provide a class discrimination of factor ten, which is unbeaten so far with respect to the low storage requirement. The images can thus be stored with 3 bit only, which enables further compression with the typical spatial approaches available for most image formats.

Figure 12 illustrates the similarity gain for certain land cover classes separately. In almost all subfigures, be it for the Octonion fusion (left column) or the Sedenion fusion (right column), the normalized Kennaugh-like elements provide the highest similarity gain, see solid line. Only for the “Water” class in the Sedenion fusion (Figure 12b) do linear elements seem to outperform the logarithmic and normalized elements. This effect can be attributed to the very low response leading to unstable spectral properties which have to be covered by the classification algorithm. In the case of “Forest”, a similar effect can be observed in the Octonion fusion (Figure 12c). The “Meadow” class indicates a negative similarity gain, that is, a loss of accuracy using mean signatures in all three scaling variants, see Figure 12h. The high variability of the “Meadow” class in terms of soil conditions, soil moisture, and plant phenology might be one reason for that. With regard to classification approaches, this means that traditional parametric methods like MLE are not sufficient to describe the “Meadow” class as one homogeneous syntactic class. Thus, the “Meadow” areas have to be divided into several syntactic classes with a homogeneous appearance, or modern machine learning approaches have to be utilized for the automated interpretation. Due to the low radiometric sampling needed for an adequate class distinction, the pre-processing to normalized Kennaugh-like elements even supports the usage of Machine Learning: (1) The images are small and easy to store. (2) The bins replace the quantiles that are often used as an extended feature space. (3) Inter-channel correlations play only a minor role as shown above. (4) Data fusion is supported in a multi-spectral, multi-sensor, and multi-temporal manner. (5) Even the description of spatial features in image chips as realized in CNNs is possible, though not evaluated in detail so far.

5. Conclusions

This article develops a consistent data frame for the fusion of arbitrary collocated raster data sets. The core of the novel fusion technique is an orthogonal transform on hypercomplex bases producing Kennaugh-like elements. The combination can cover multi-spectral, multi-source, and multi-temporal data sets. The description of spatial characteristics by multi-row and multi-column combination is envisaged. Multi-source image fusion results in vectors of Kennaugh-like elements. Multi-source and multi-temporal image fusion produces Kennaugh matrices with the image channel dimensionality along the rows and the time dimensionality along the columns. These linear intensities and intensity differences, respectively, can be further scaled to logarithmic (almost normally distributed) and even normalized elements characterized by a closed data range and a high resilience towards the reduction of the radiometric sampling to 4 bit or even less. The method is exemplarily applied to Sentinel-1, Sentinel-2, and ALOS-PALSAR-2 single acquisitions of three different regions of interest in Germany and to a time series of Sentinel-2 over a wetland near the Alps. Due to its flexibility regarding the scaling, any data set with additive or multiplicative characteristics can be used as input layer. A qualitative assessment of the multi-temporal image fusion indicated that the Kennaugh-like elements highlight characteristics that were not visible at all in the standard preparation. Furthermore, the quantitative analysis of multi-source Kennaugh-like elements underlined the stability of the signatures describing typical land cover classes, which are distinguishable even with an extremely reduced radiometric sampling of only two bins. The latter point is of importance with respect to modern classification techniques like histogram classifications and Random Forest approaches. The Random Forest method, for example, combines a large number of random decision trees in order to stabilize the decision. 
Each node is a binary sampling, which is now simply possible using normalized Kennaugh-like elements in a binary radiometric sampling. This article can only present the basic idea of a data fusion approach and deliver first hints on its suitability with the help of selected experiments. Its practical use has to be evaluated in future studies covering more landscapes, different sensors, and different classification algorithms. With respect to sharpening approaches for the fusion of image data with different spatial resolutions, the inclusion of weights representing the look factors might be one further technical development. One application-oriented focus will be the analysis of polarimetric SAR time series over Mid-European forests in order to detect and map calamities in a timely manner. The presented methodology shall further be implemented as an extension of the Multi-SAR System in order to support ARD data provision to scientific users. The long-term goal will be to establish the normalized Kennaugh-like elements on hypercomplex bases as a future standard for multi-dimensional ARD sets in so-called data cubes.

Author Contributions