1. Introduction

Interest in the data-intensive monitoring of croplands and forests has grown sharply in recent years, driven by the availability of free Remote Sensing (RS) data from satellite missions such as Landsat and Sentinel, and by the development of Unmanned Aerial Vehicle (UAV) technologies for data acquisition [1,2,3,4,5,6,7,8,9]. Satellite imagery (SI) decreases the cost of repeated data acquisition of the Earth’s surface, promoting the construction of time series based on remotely acquired data. The regular acquisition of information facilitates the development of better algorithms for the identification and monitoring of land cover over time [5,6,10,11]. Close-range photogrammetric surveys using sensors installed on UAV platforms have additional benefits in comparison with free SI, namely at farm or parcel scales, where the spatial resolution of SI cannot provide satisfactory geospatial detail [3]. UAV photogrammetric surveys are a good method to retrieve very high-resolution imagery at smaller scales, despite the need for accurate planning and costly field campaigns. This is the case, for example, in agroforestry landscapes, where different strata of vegetation coexist [3,8].

UAV imagery and SI are frequently used to extract information about land cover types in agroforestry environments. Those products are used for the identification of pastures or grassland, cork oak woodlands and other agricultural fields. Supervised image classification and mapping is increasingly common for the monitoring of grass and pasture parcels [2,4,11]. The automatic supervised classification workflow used in these applications depends on the sensor, the type of images, the class nomenclature, the classification algorithms, the choice between pixel-based and object-based classification, the training and testing data samples and the evaluation of results [12,13], each of which influences computational performance. More recently, Geographical Object-Based Image Analysis (GEOBIA) classification has been replacing traditional classification methods like the pixel-based approach [2,8,14,15]. GEOBIA performs image segmentation by grouping pixels with similar characteristics, generating sets of homogeneous and contiguous segments or regions [15,16,17]. It is particularly recommended for classifying very high-resolution imagery, such as UAV products [16,17,18].

Sown Biodiverse Pastures (SBP) are a Portuguese innovation from the 1960s, installed predominantly in the Alentejo region and in Montado agroforestry landscapes, which are of high biodiversity value [19,20]. These high-yield pastures can reduce the environmental impacts of grazing systems, mainly through carbon sequestration [21,22,23]. SBP are a mix of up to 20 species or varieties of annual legumes and grasses. Legumes ensure a natural source of nitrogen for pasture growth, enabling the acceleration of nutrient recycling and the increase of carbon sequestration in soils, which contributes to the reduction of carbon emissions compared to production systems based on naturalized grasslands [19,20]. These effects are, however, dependent on the correct management of SBP and their location. The development of techniques and methods for intensively monitoring SBP parcels is becoming increasingly important for the optimization of management practices. Close-range UAV imagery is a promising technique to contribute towards the goal of developing better methods for the monitoring of SBP [4].

At the moment, the algorithms available in the literature are incapable of assisting in the remote monitoring of SBP. As many SBP are installed in low-density agroforestry areas or other areas with significant distances between trees [19,20], the tree strata hinder any automatic signal acquisition from the pasture strata. The spectral signature of SBP is also highly variable in time. SBP have steep seasonal variations throughout their growth cycle, due to weather conditions, agricultural practices and management of the animal production system [19,20]. This temporal variation of the reflectance measured by the sensors can be a limitation for one-period analyses, but also provides an opportunity for improved image classification algorithms [24,25]. Many studies use the temporal patterns of vegetation change for agricultural and forestry applications to construct RS time series-based algorithms for imagery classification, using different band combinations with synthetic bands representing spectral vegetation indices, and integrating different sensors [12,14,24,25,26,27,28,29]. The use of RS time series generates large amounts of data that require computationally efficient classification algorithms [26]. Machine Learning (ML) algorithms are particularly useful for this purpose. In the case of optical satellite imagery, the data available for time series classification depends on the revisiting time of the plots surveyed, and can be affected by cloud coverage and shadows, missing data and satellite/sensor problems [5,26]. UAV imagery is less affected by those problems, but presents additional challenges such as the need to cope with the shadows of objects [2]. Many authors have studied this problem, mainly tree shadows in open agroforestry farms [2,30,31], but the methods they propose have problems of their own. The most common strategies for reducing the effects of tree shadows are the acquisition of UAV imagery at the maximum sun elevation angle, the acquisition of more sources of data or the application of additional radiometric corrections [2,30,31]. For relatively large areas, acquiring imagery at the maximum sun elevation angle is impractical because missions tend to be long enough for the position of the sun to change. Additional data sources may also be unavailable, and the use of further corrections can impose a significant delay on the postprocessing of data.

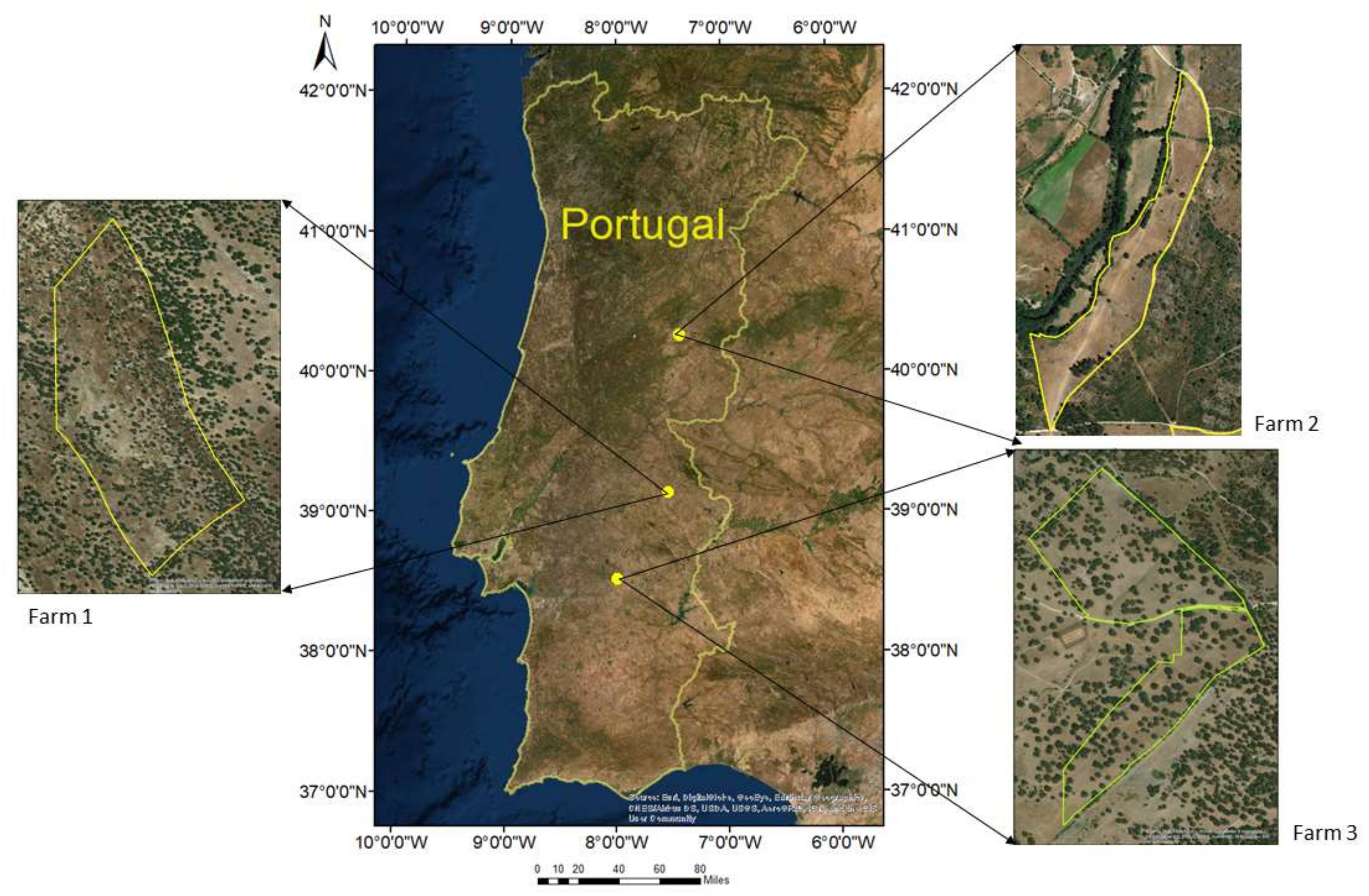

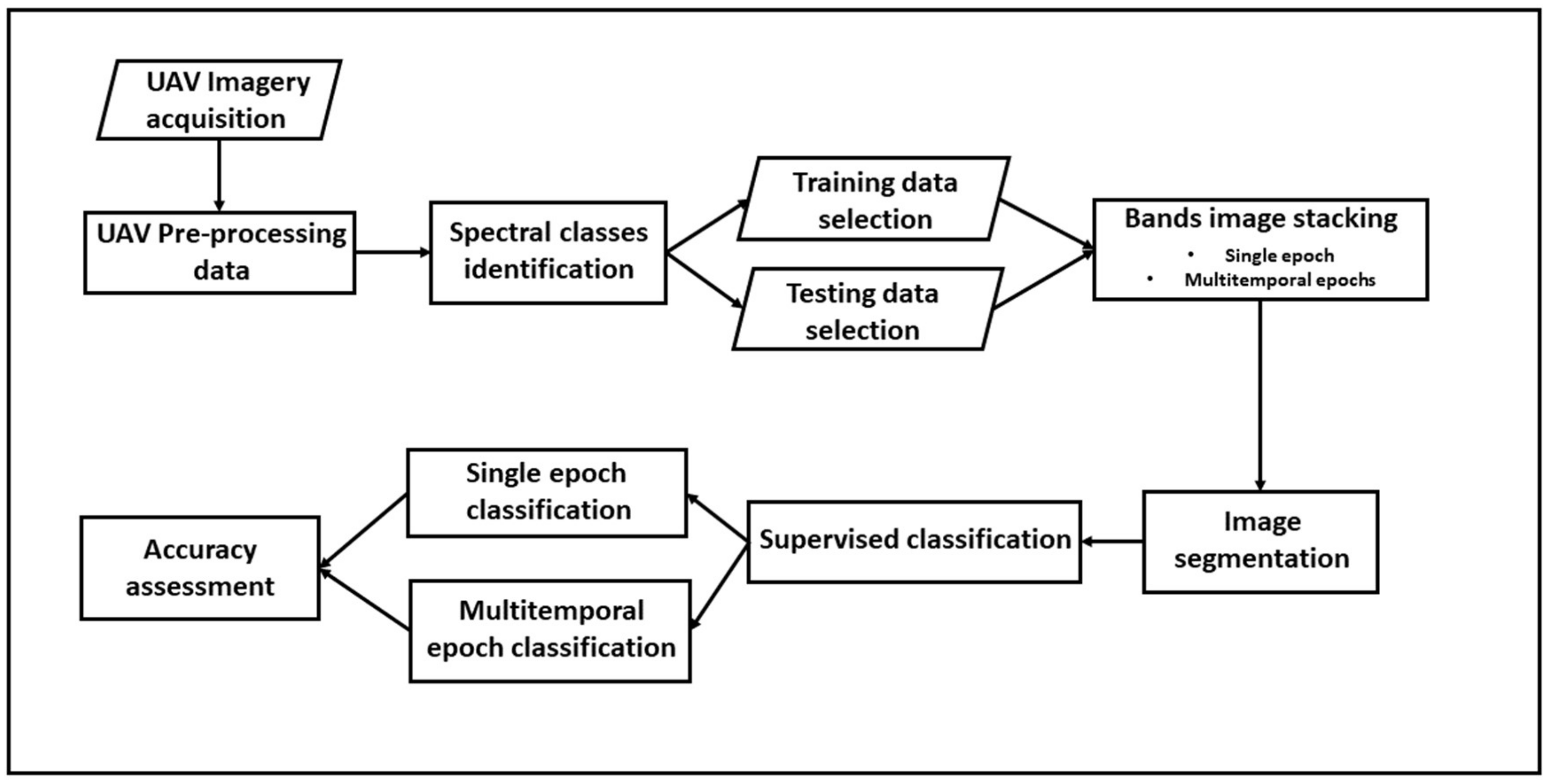

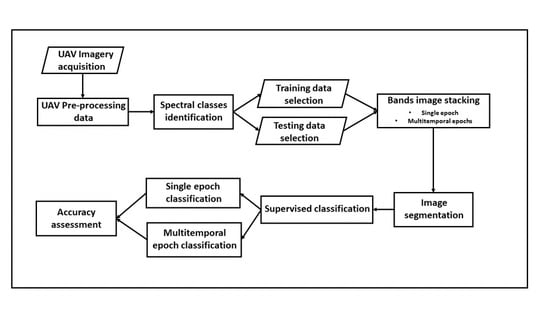

Here, we tested multiple algorithms for the identification and extraction of SBP areas in agroforestry landscapes using GEOBIA classification and UAV surveys conducted on three farms in Portugal. Three ML supervised classification algorithms implemented in the open-source software Orfeo Toolbox (OTB), developed by the Centre National d’Études Spatiales [32], were evaluated, namely Random Forest (RF), Support Vector Machine (SVM) and Artificial Neural Networks (ANN). We used two main approaches for the classification of SBP areas: (1) a baseline approach consisting of single period or single image classification, using the period of maximum Normalized Difference Vegetation Index (NDVI); and (2) multi-temporal image stacking, generating a time series that captures the temporal variation of pastures in different epochs. This second approach is hypothesized to reduce the misclassification due to shadows and flowers, avoiding the acquisition of additional sources of data or additional radiometric corrections. We obtained one classification map for each procedure and carried out an accuracy assessment of each.
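The difference between the two approaches can be illustrated with a minimal Python sketch. It is not the OTB workflow used in this study: the reflectance values, the `epochs` dictionary, the helper names and the choice of the mean NDVI over segments as the criterion for the "period of maximum NDVI" are all assumptions made for illustration, and the sketch assumes that the same segments are available in every epoch.

```python
from statistics import mean


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return (nir - red) / total if total else 0.0


# Hypothetical mean reflectances per segment (illustrative values only).
# Index i refers to the same segment in every epoch.
epochs = {
    "autumn": [{"red": 0.10, "nir": 0.30}, {"red": 0.05, "nir": 0.40}],
    "spring": [{"red": 0.05, "nir": 0.45}, {"red": 0.04, "nir": 0.44}],
    "summer": [{"red": 0.20, "nir": 0.25}, {"red": 0.05, "nir": 0.38}],
}


def segment_features(segment):
    """Feature vector for one segment in one epoch: red, NIR and NDVI."""
    return [segment["red"], segment["nir"], ndvi(segment["nir"], segment["red"])]


def mean_ndvi(segments):
    """Mean NDVI over all segments of one epoch (assumed selection criterion)."""
    return mean(ndvi(s["nir"], s["red"]) for s in segments)


# Approach 1 (baseline): keep only the epoch with the highest mean NDVI.
best_epoch = max(epochs, key=lambda name: mean_ndvi(epochs[name]))
single_epoch_features = [segment_features(s) for s in epochs[best_epoch]]

# Approach 2 (multitemporal): concatenate the features of every epoch per segment.
stacked_features = [
    [value for name in epochs for value in segment_features(epochs[name][i])]
    for i in range(len(epochs[best_epoch]))
]

print(best_epoch, len(single_epoch_features[0]), len(stacked_features[0]))
```

In this toy setting, the stacked feature vector of each segment is three times as long as the single-epoch one, so a classifier trained on it can exploit the seasonal trajectory of a segment rather than a single snapshot.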

This study was the first application of GEOBIA classification using ML algorithms included in the open-source OTB software for SBP classification, with the aim of implementing an operational workflow for the remote monitoring of SBP, and of pastures in general, in Montado areas. Moreover, we proposed a new multitemporal approach to attenuate the interference of tree shadows and flower patches that is potentially applicable to other pasture systems.

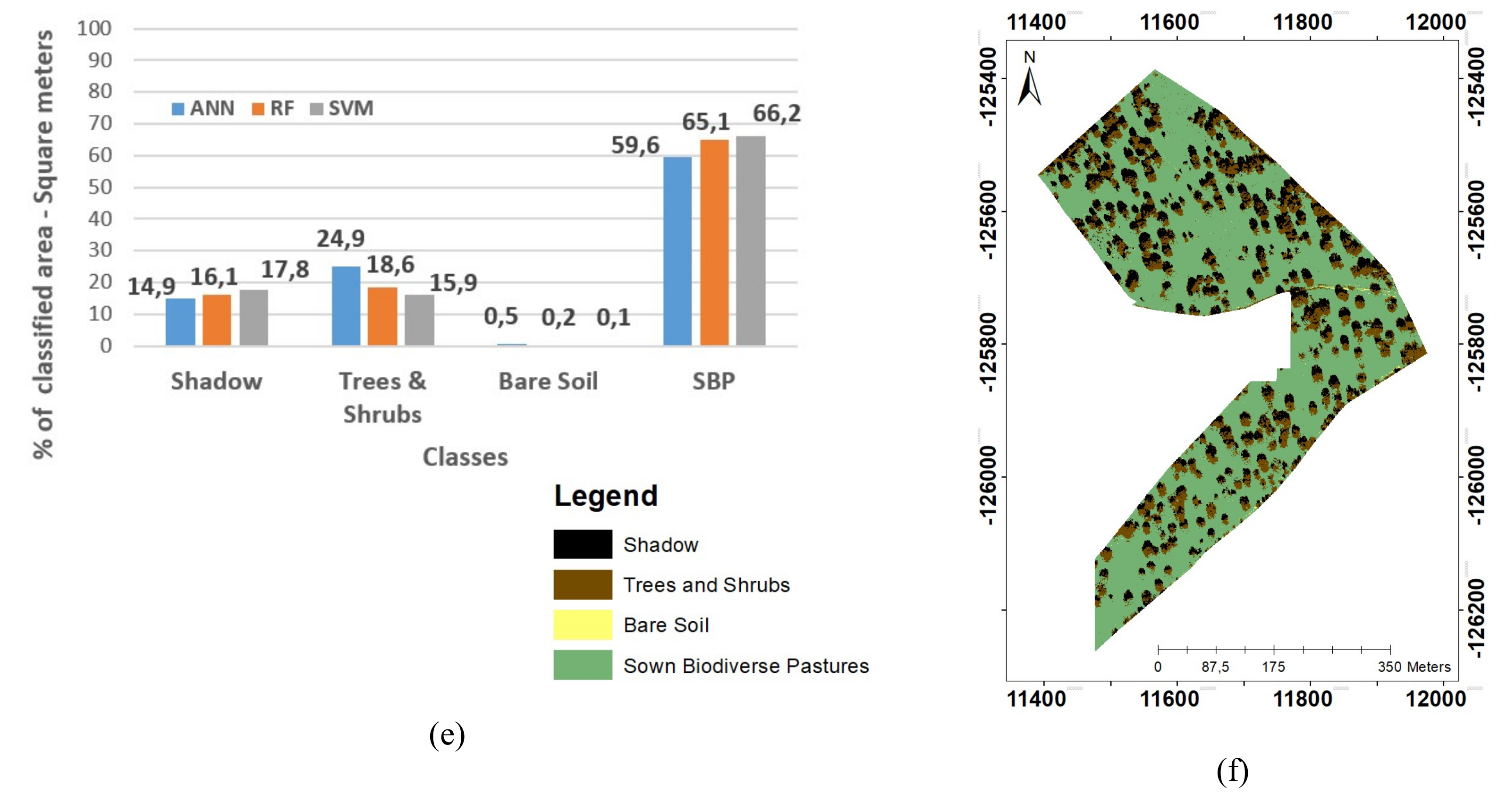

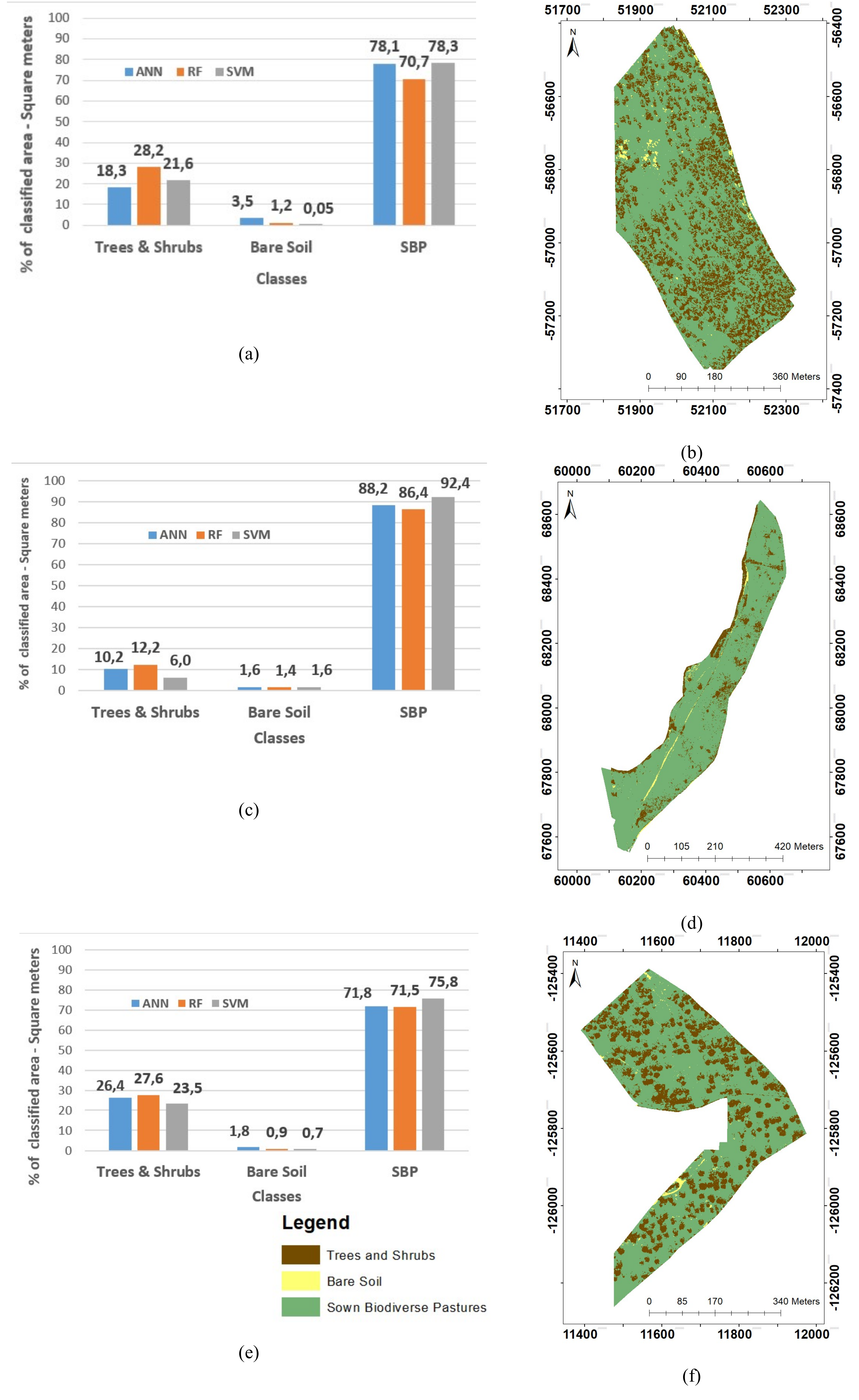

4. Discussion

Out of the many methodological options studied in this paper for identifying SBP areas using RS data, the option that stands out is multitemporal classification using RF. The RF classifications were more accurate than the SVM and ANN classifications (see Table 3, Table 4 and Table 5) in both single epoch and multitemporal classifications. However, in general, SBP and other classes were identified with high accuracy (overall accuracy higher than 79%), regardless of the algorithm. A previous study applied RF and SVM to identify grassland, trees and bare soil land classes in non-SBP Montado areas using UAV imagery and OTB [2]. That study also determined that the RF and SVM algorithms had a similar accuracy (~97% and ~95%, respectively), which is similar to the performance we obtained.

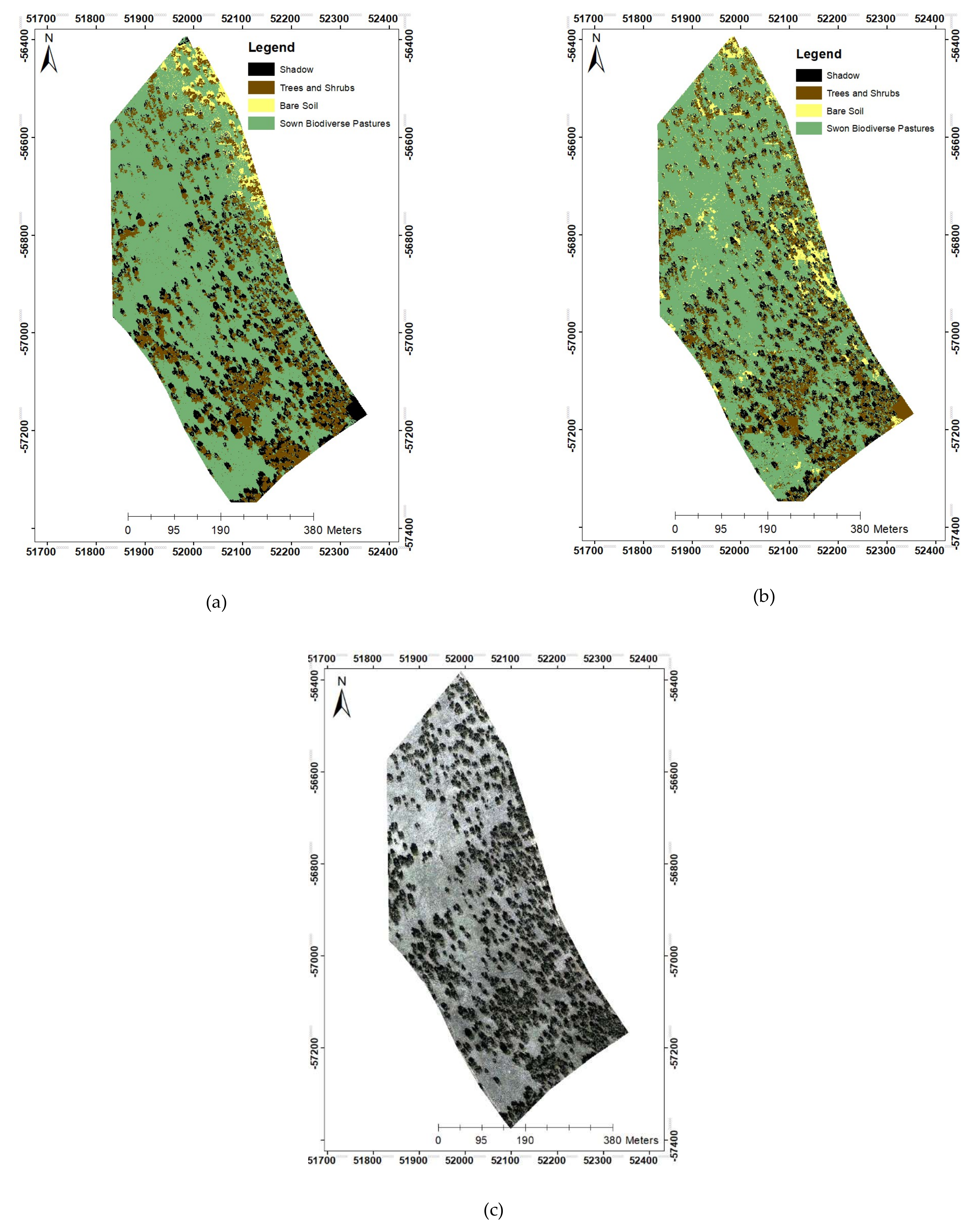

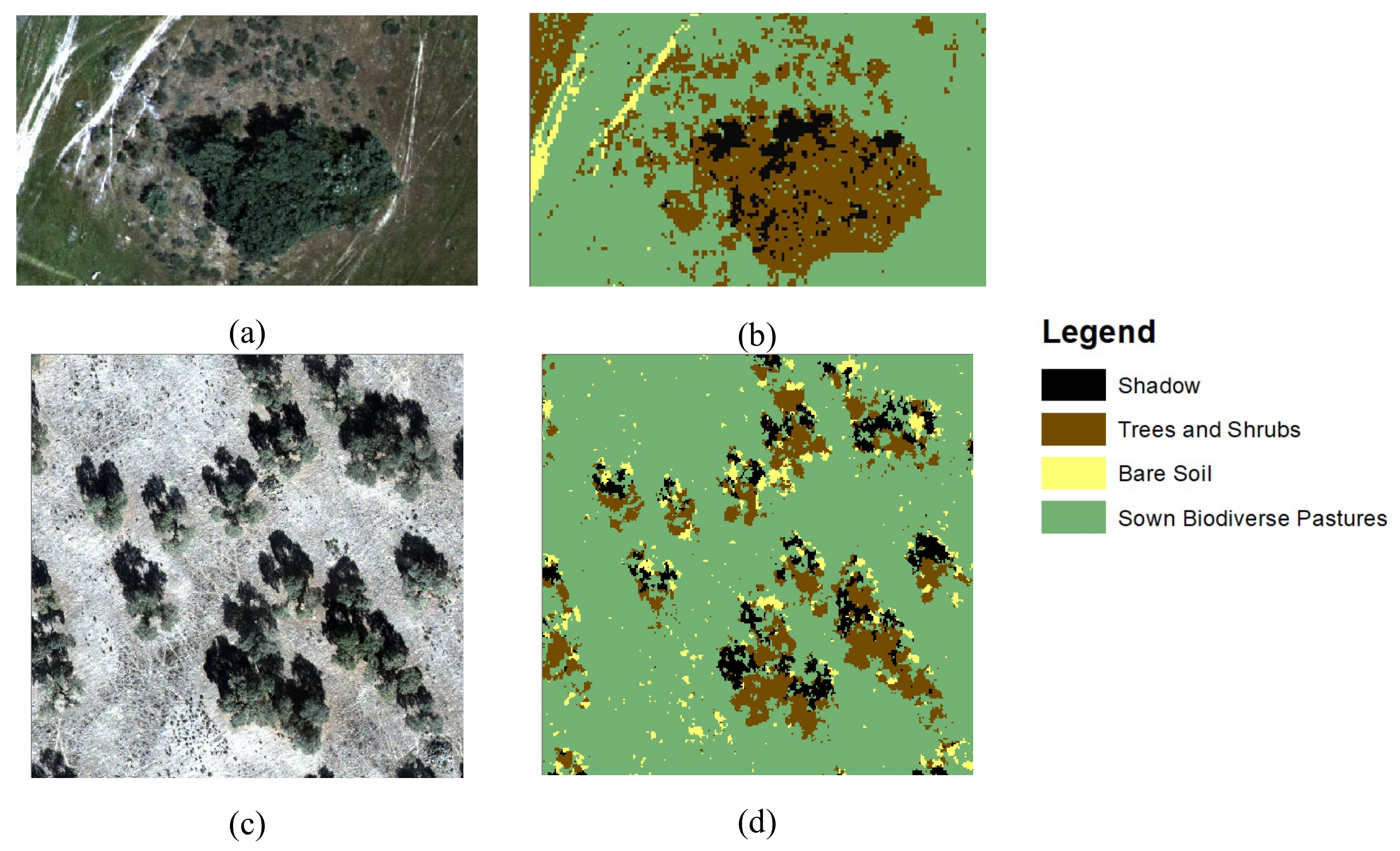

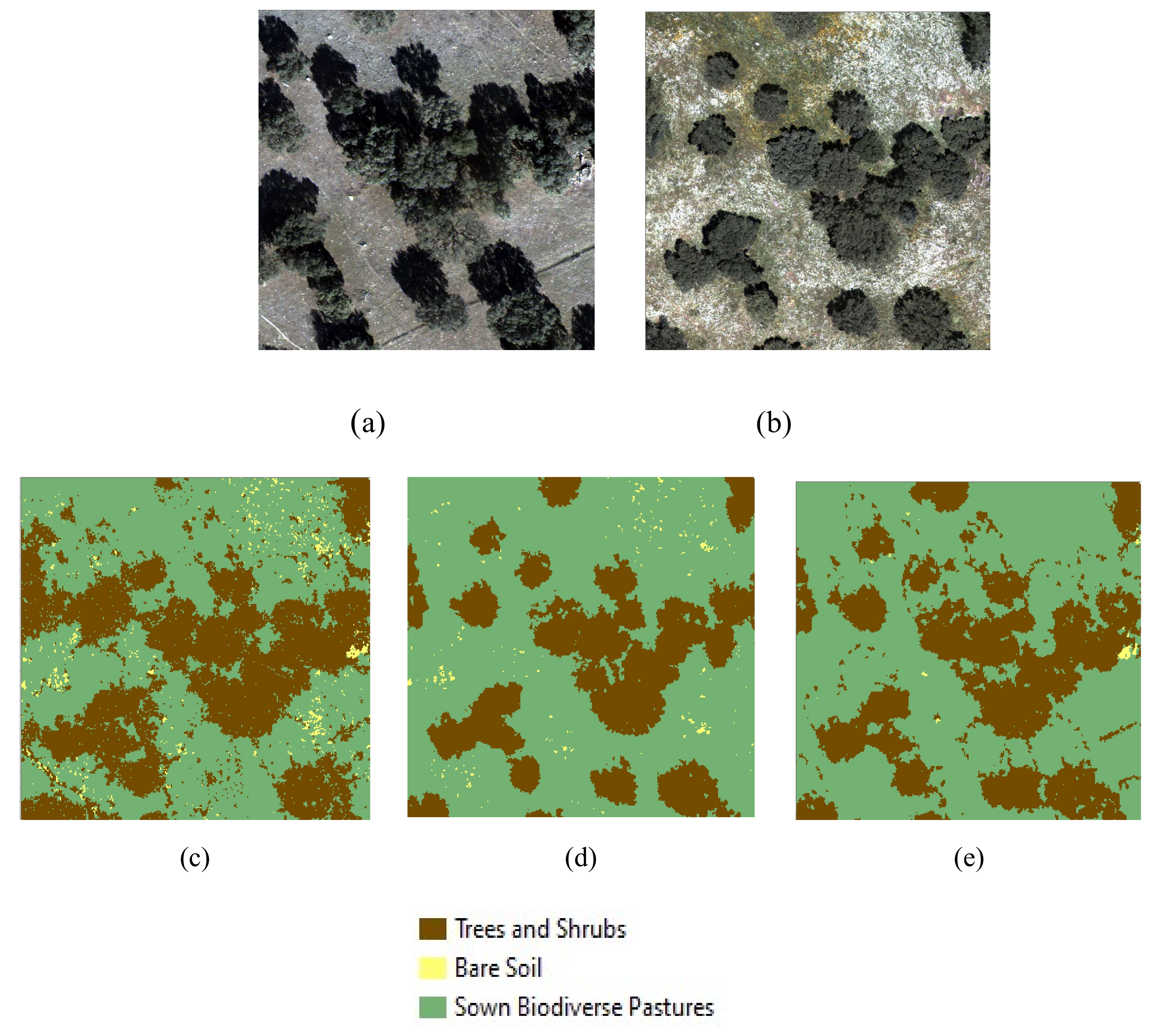

A visual analysis of the classification maps shows a common classification error in all farms, which is most prevalent with the ANN and RF algorithms: some objects that represent the boundary of the shadows were classified as trees and shrubs (see Figure 5). This confusion is due to the spectral transition from the shadow to the pastures. This caused an increase of 10% in the size of the area classified as containing trees in farm 1 using the multitemporal RF classification. This problem has also been reported elsewhere in the literature for trees and shadow classification in cork oak environments [2], but at the moment, we are unable to explain why some algorithms are more sensitive than others to this issue.

The bare soil class covered less than 8% of the parcels. This class included mostly the small roads that exist in the farms, tracks created by frequent movements of animals and some smaller regions of confusion with flower patches in the spring. Although additional data would be needed to fine-tune the algorithms and more accurately classify those regions of the image, the largest and most clearly visible bare soil patches were correctly identified. The lowest producer's accuracy for the bare soil class was found in farm 3 for multitemporal RF and SVM classifications. Most bare soil areas in this farm were correctly identified. However, in some of the images, shrubs lack vigorous leaves and can easily be confused with soil. This confusion could have lowered the producer's accuracy of this class.

Trees and shrubs have similar spectral behavior and shapes, and for this reason, we merged them into a single class. For this class, the DSM had an important role in distinguishing trees from the remaining classes, namely SBP, due to the high altimetric differences [2,11,43]. However, within this class, segmentation was difficult owing to the small dimensions of the shrub objects. Thus, we chose a lower-scale segmentation in order to detect slight spectral and radiometric differences in shrubs and trees, testing multiple values of range radius to avoid over- and under-segmentation. High range radii (over 10) tended to result in under-segmentation of trees and shrubs, hampering the correct identification of shrubs. Low range radii resulted in over-segmentation, and consequently, poor computational performance. Thus, we adopted a range radius between 6 and 8, which worked better for low-scale segmentation, and correctly identified most visible shrubs. This segmentation problem in very high-resolution images has been widely reported in the literature [2,44], with some authors suggesting that a range radius between 6 and 7 can lead to satisfactory results in segmentation using OTB [2]. In some studies [14], multitemporal classification increased the number of segments due to the temporal variability of classes. Our segmentation process led us to the same conclusion, mainly for the SBP class.

Still, RF and SVM were capable of correctly identifying most trees. The main issues were found in multitemporal classification using ANN, which erroneously identified some SBP areas as trees and shrubs in farms 2 and 3, decreasing overall accuracy in comparison with single epoch classification. Raczko and Zagajewski [45] compared RF, SVM and ANN to identify trees in forested areas. They concluded that the RF and SVM algorithms are easier to parameterize than ANN. This conclusion agrees with the tree classification results presented and discussed here. Testing why multitemporal ANN classification displays those issues was beyond the scope of this study, but it should be regarded as a topic of future research.

Multitemporal classifications had higher overall accuracies and kappa indices than single epoch classifications in most cases because they took into account the temporal variability of vegetation and climate [46]. The land cover-specific variability of reflectance of the different strata was particularly important to distinguish between trees/shrubs and pasture. While perennial-leaf trees, which are the most frequent in these Montado regions, remain approximately constant in shape and color throughout the year, the characteristics of SBP vary widely. SBP go through a green stage in autumn/early winter, flower and see a boom in productivity in the spring, and completely dry out in the summer. These changes are clearly seen in our UAV data for the study period. Farm 1 is particularly sensitive to the classification approach, as overall accuracy from multitemporal classification was about 4% higher. In our study, we only used three epochs to evaluate the influence of temporal variability. A previous study [46] considered different time steps of UAV imagery classification. It found that the overall accuracy of results increased by 3% with two or more images compared with using a single image. Our results agree with this observation. This could potentially indicate that the results would be unaffected by adding more epochs. However, the improvement in accuracy is unevenly distributed between the classes and particularly affects classes covering smaller areas (such as bare soil, in our case). The addition of further epochs comes at a cost due to the time and resources required to carry out UAV flights, and therefore, the cost-benefit of adding more epochs should be carefully evaluated in the future. There are additional approaches for multitemporal analyses that can be tested. For example, very high resolution (VHR) satellite data can be used to increase the temporal resolution of UAV images through fusion of data acquired with different spatial and radiometric resolutions, such as approaches based on the concept of “super-resolution” [47].
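The accuracy measures compared throughout this discussion (overall accuracy, kappa index, and producer's and user's accuracy) can all be derived from a confusion matrix. The following Python sketch shows the standard formulas; the matrix values and the function name `accuracy_metrics` are hypothetical and do not reproduce the results in Tables 3 to 5.

```python
def accuracy_metrics(matrix, labels):
    """Compute accuracy measures from a square confusion matrix.

    matrix[i][j] is the number of validation samples whose reference
    class is labels[i] and whose predicted class is labels[j].
    """
    k = len(labels)
    n = sum(sum(row) for row in matrix)
    diagonal = [matrix[i][i] for i in range(k)]
    row_totals = [sum(matrix[i]) for i in range(k)]  # reference totals
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # predicted totals

    overall = sum(diagonal) / n
    # Agreement expected by chance, used by Cohen's kappa.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (overall - expected) / (1 - expected) if expected != 1 else 0.0

    # Producer's accuracy: share of reference samples that were correctly mapped.
    producers = {labels[i]: diagonal[i] / row_totals[i] if row_totals[i] else 0.0
                 for i in range(k)}
    # User's accuracy: share of mapped samples that match the reference.
    users = {labels[j]: diagonal[j] / col_totals[j] if col_totals[j] else 0.0
             for j in range(k)}
    return {"overall": overall, "kappa": kappa,
            "producers": producers, "users": users}


# Hypothetical confusion matrix (rows: reference, columns: predicted).
labels = ["SBP", "trees_shrubs", "bare_soil"]
matrix = [
    [45, 3, 2],
    [4, 28, 0],
    [6, 1, 11],
]

metrics = accuracy_metrics(matrix, labels)
print(round(metrics["overall"], 3), round(metrics["kappa"], 3))
```

In this made-up example, bare soil has a much lower producer's accuracy than SBP even though the overall accuracy is high, which mirrors how small classes can be affected disproportionately by a few misclassified objects.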

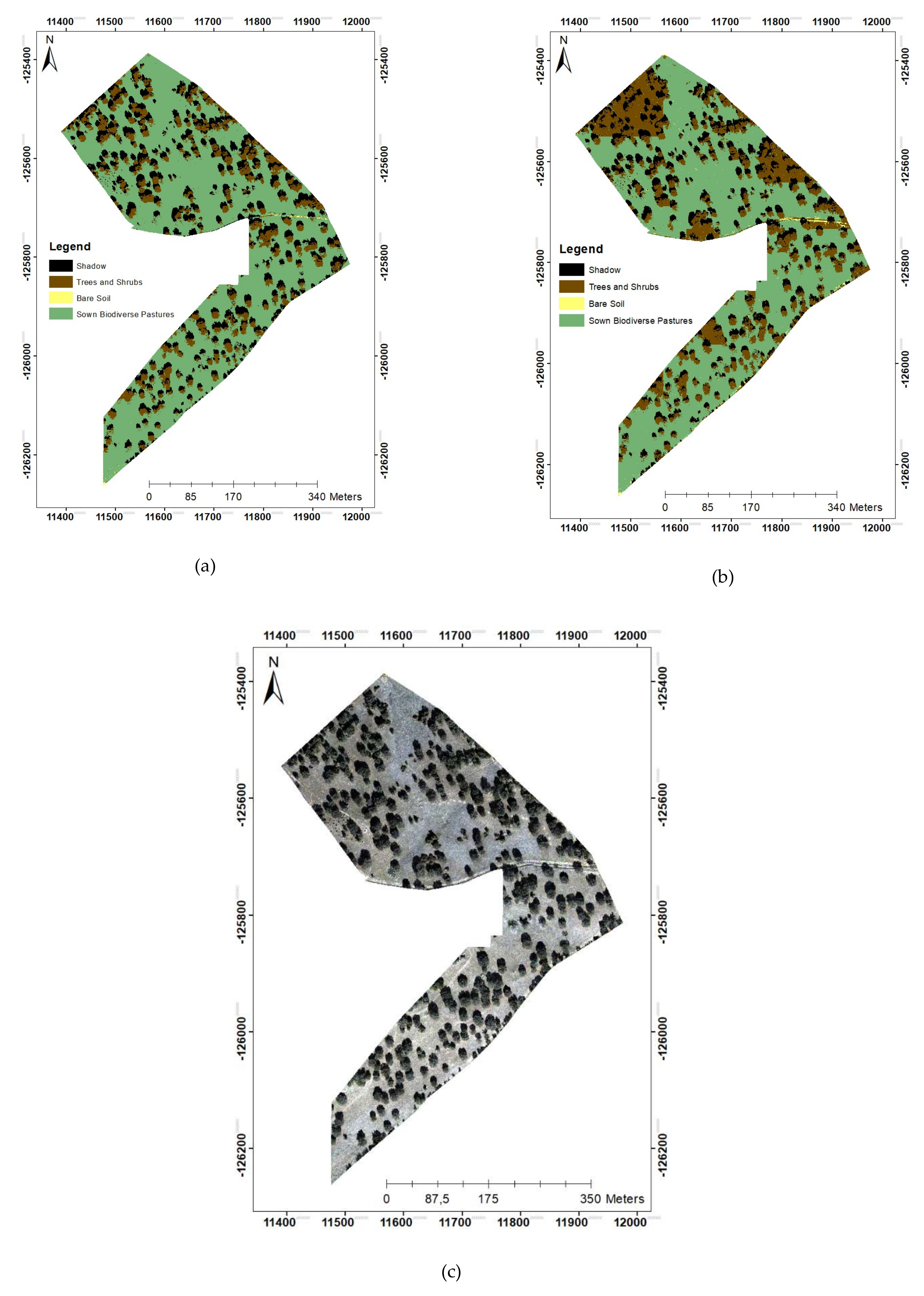

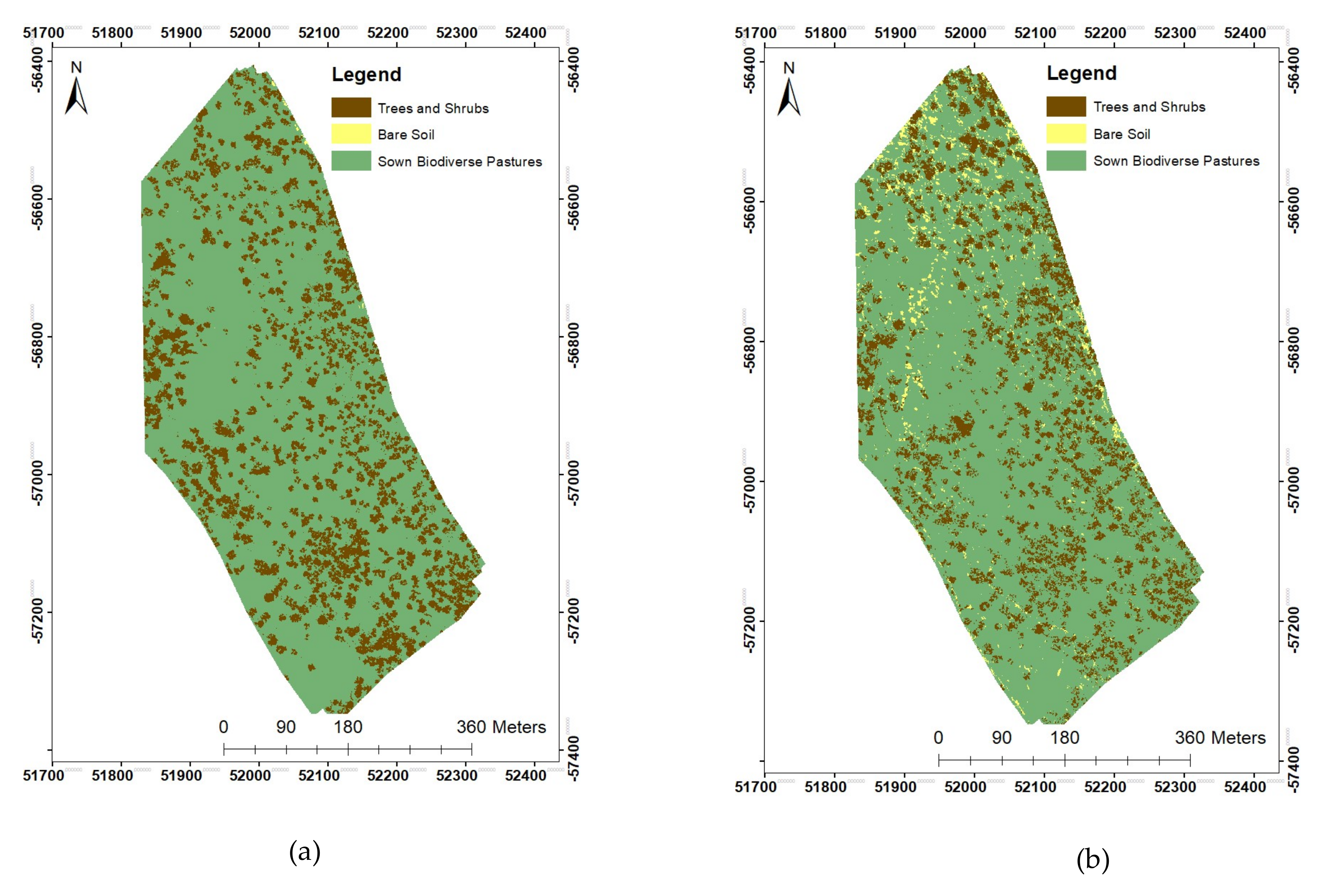

Finally, farm 3 displays very prominent flowering in SBP areas (Figure 3) during spring, particularly due to Chamaemelum mixtum. The radiometry of those flowers is very similar to bare soil radiometry. Therefore, single epoch classification tends to underestimate SBP area by assigning flower patches to the bare soil class. Multitemporal classification fixed the majority of these issues, as most flower patches were classified as SBP (Figure 5 and Figure 6). Similarly, the effect of tree and shrub shadows was attenuated through multitemporal classification. This problem is widespread in RS-based classifications of land classes in agroforestry systems, and was dealt with here without the need for additional radiometric correction methods [2,31]. Decreasing the effects of shadows and flowers enabled us to identify SBP areas with greater accuracy, as shown by the increased producer's and user's accuracy of the SBP class. The approach developed here is therefore promising as a general strategy to reduce similar effects in classification problems for other grassland systems in open Montado agroforestry areas. The reduction of the misclassification due to shadows and flowers in multitemporal classification also reduced the differences in estimated total SBP area between the algorithms, which were sharper for single epoch classification. Thus, the multitemporal classification harmonized the performance of the different classifiers.