Non-locally Enhanced Feature Fusion Network for Aircraft Recognition in Remote Sensing Images

Abstract

1. Introduction

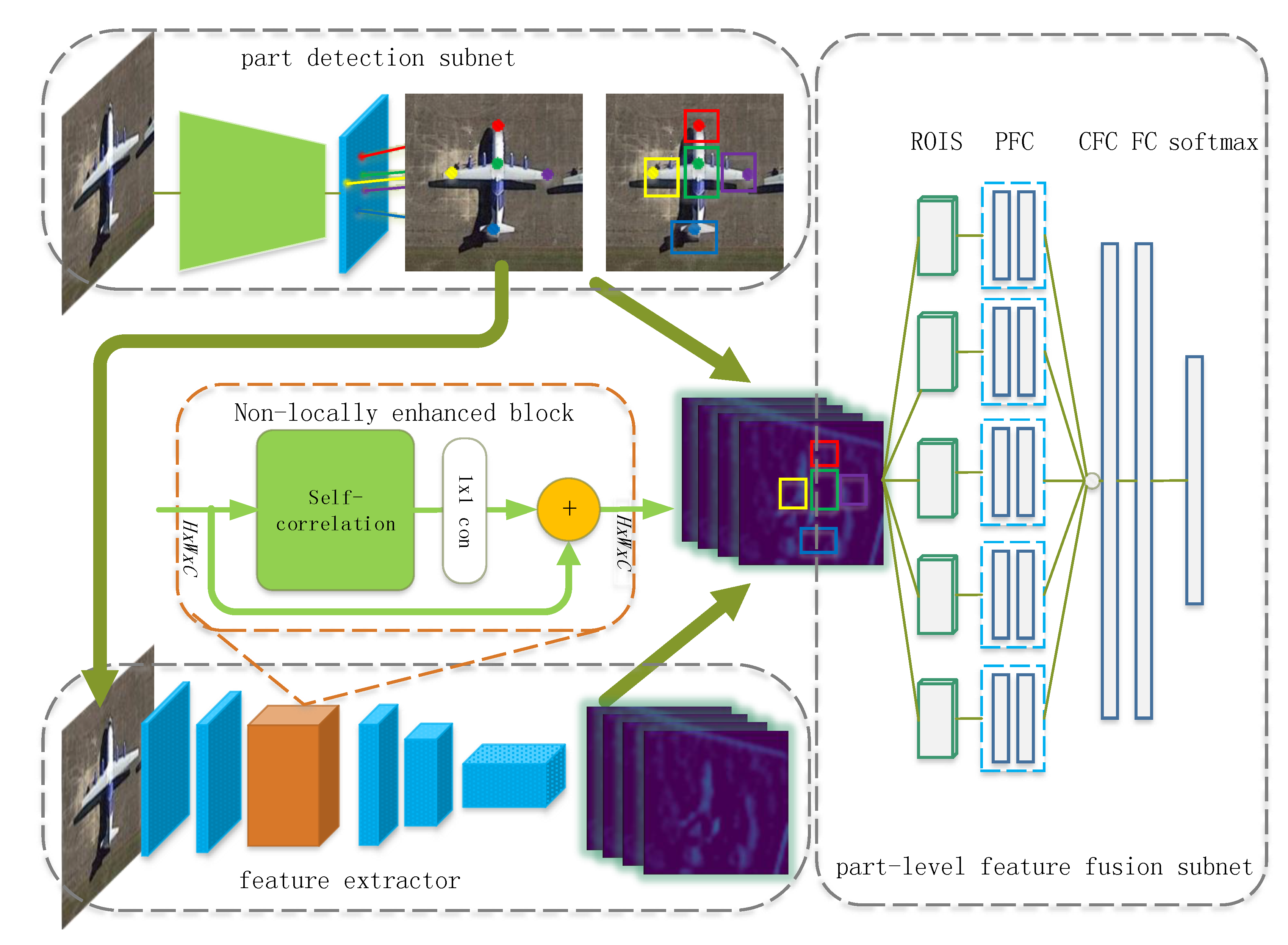

2. Proposed Method

- (1) We take an image (denoted Image I) from the dataset; the nose of the aircraft in Image I may point in any direction. We feed Image I into the part detection sub-network and obtain 5 key points of the aircraft;

- (2) We use the detected key points to correct the posture of the aircraft in Image I (Image I is rotated accordingly), and generate 5 part bounding boxes according to the strategy described in Section 2.2.1;

- (3) We feed the rotated Image I into the feature extractor and obtain the feature maps of the whole image;

- (4) We map the part bounding boxes generated in Step 2 onto the feature maps generated in Step 3 and obtain the corresponding feature sub-maps of each part;

- (5) We further extract detailed features of each part and then integrate these features, as described in Section 2.2.2.
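Steps 2 and 4 each rest on a small geometric computation, which can be sketched as follows. This is only an illustration under stated assumptions: the key points are not named in this section, so the `nose` and `tail` arguments are hypothetical, as is the choice of "nose pointing up" as the canonical posture. The `stride` argument stands for the cumulative downsampling factor of the feature extractor at the chosen layer, which is also an assumption here.

```python
import math

def rotation_to_upright(nose, tail):
    """Angle in degrees between the tail-to-nose axis and the 'up' direction.

    Points are (x, y) in image coordinates, where y grows downward.
    Hypothetical helper: the nose/tail key points are an assumption.
    """
    dx = nose[0] - tail[0]
    dy = nose[1] - tail[1]
    # 0 when the nose is directly above the tail, +90 when it points right.
    return math.degrees(math.atan2(dx, -dy))

def box_to_feature_map(box, stride):
    """Map a pixel-space box (x1, y1, x2, y2) to feature-map cell indices.

    The box is expanded outward to whole cells so that no part pixels are lost.
    """
    x1, y1, x2, y2 = box
    return (math.floor(x1 / stride), math.floor(y1 / stride),
            math.ceil(x2 / stride), math.ceil(y2 / stride))

# Example: an aircraft whose nose points right needs a 90 degree correction.
angle = rotation_to_upright(nose=(20, 10), tail=(0, 10))
cells = box_to_feature_map((32, 48, 96, 100), stride=16)
```

Rounding the box outward is one possible design choice; rounding to the nearest cell would give tighter sub-maps at the cost of clipping part boundaries.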

2.1. Non-locally Enhanced Module

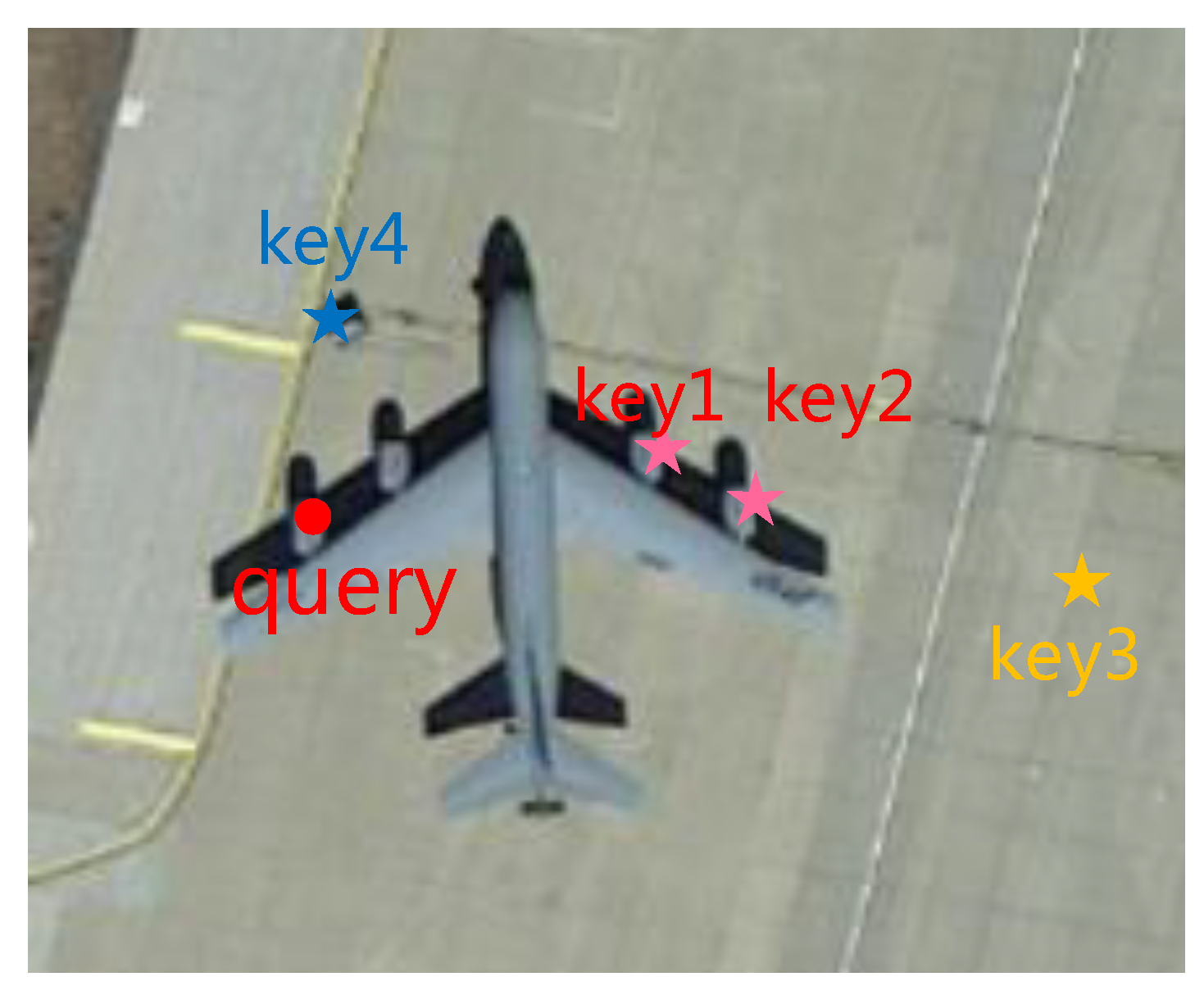

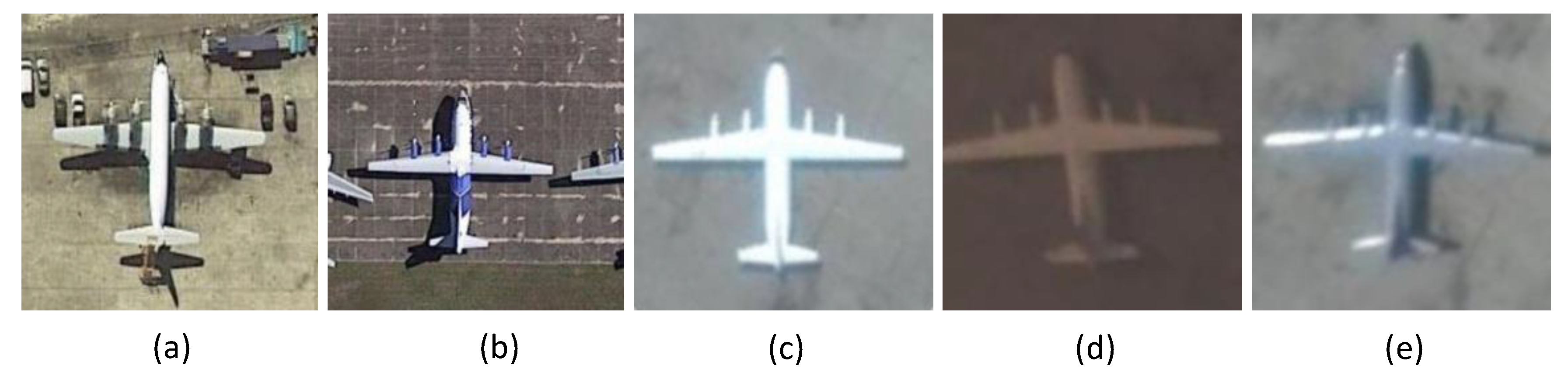

2.1.1. Long-distance Correlation of Aircraft in Remote Sensing Images

2.1.2. Principle of Non-locally Enhanced Operation

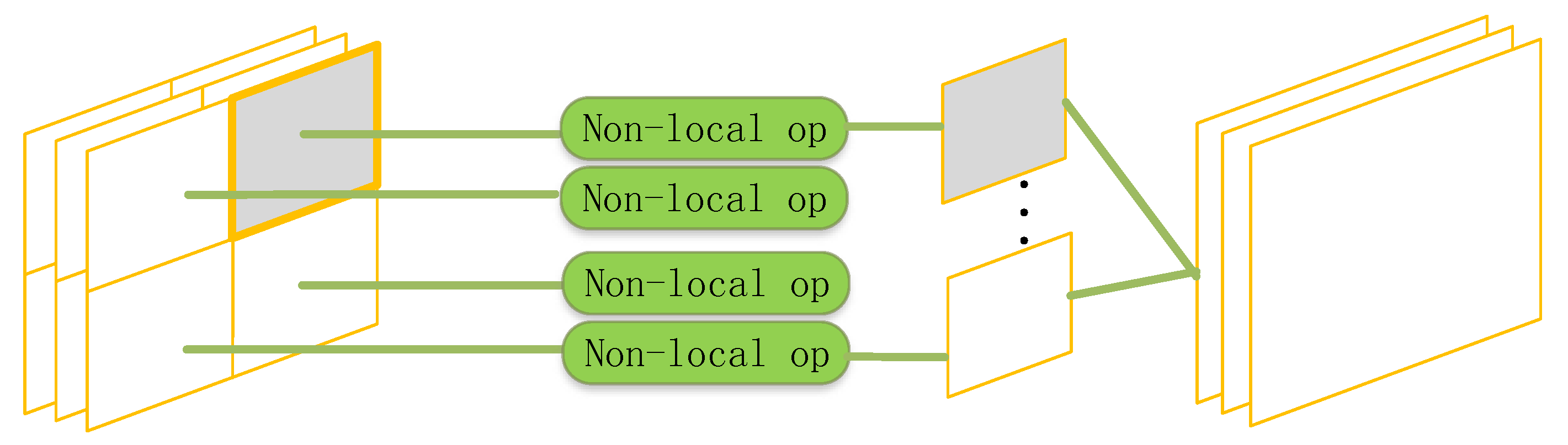

2.1.3. The Realization of Non-locally Enhanced Module

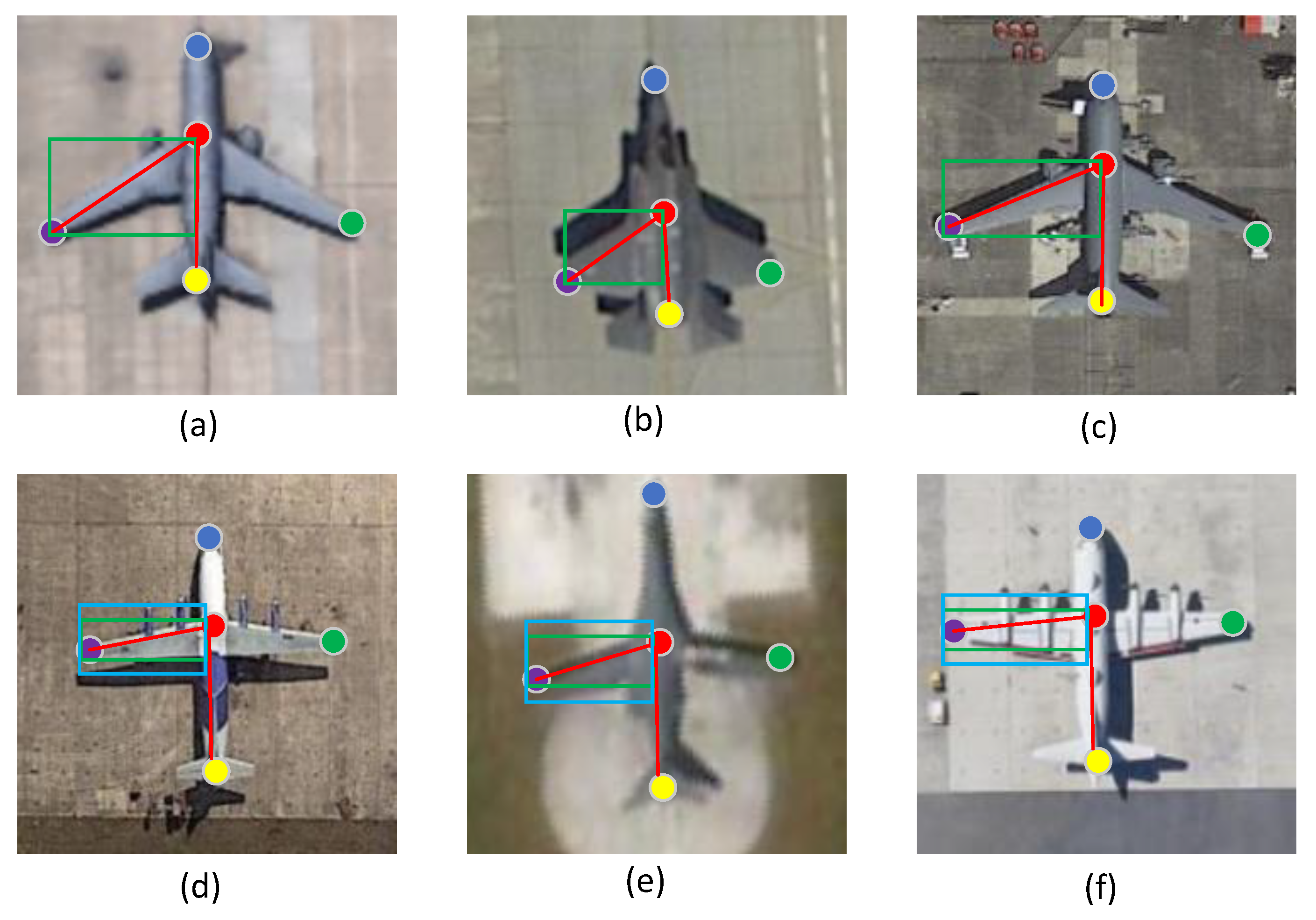

2.2. Part-level Feature Fusion

2.2.1. The Strategy to Generate Bounding Boxes of Part

2.2.2. Feature Extraction and Feature Fusion of Parts

2.3. Hard Example Mining

3. Experiments and Results

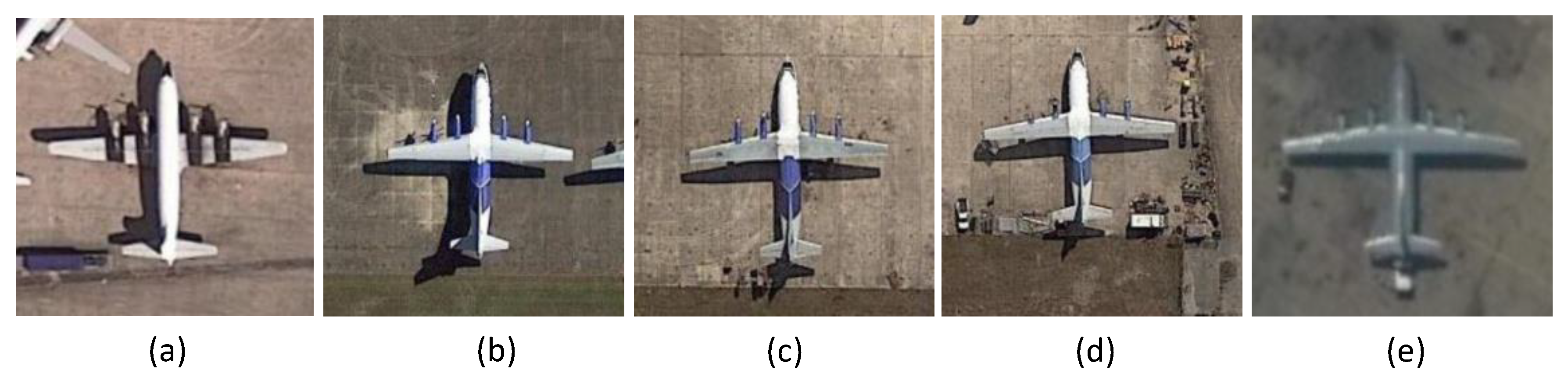

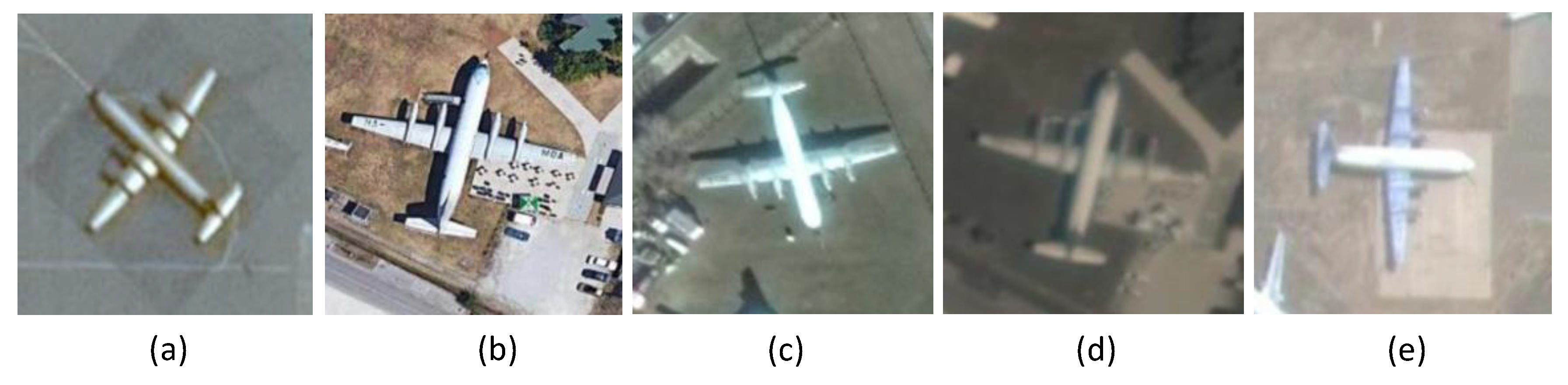

3.1. Dataset

3.2. Implementation Details

3.3. The Results of the Proposed Method

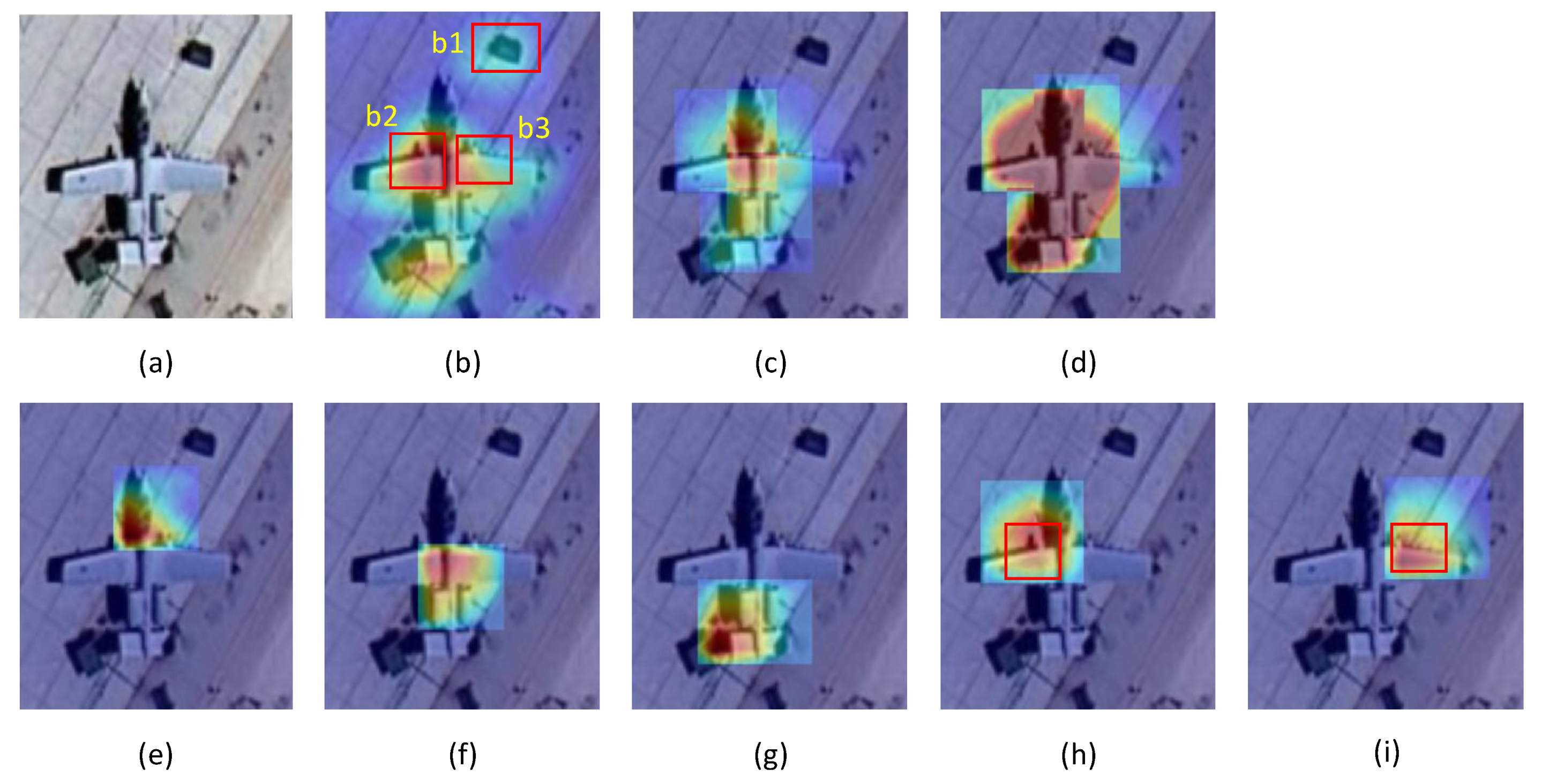

3.4. The Influence of PFF

- (1) Stitched together, the part boxes form a mask of the target, which segments the target from the whole image and makes the network focus on the target itself, without interference from irrelevant objects and background outside the target.

- (2) The part fully connected layer (PFC) allows the network to learn the details inside each part and to better distinguish the nuances between subclasses.
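The first point can be made concrete with a small sketch that rasterizes the union of several part boxes into a binary mask. The box coordinates below are made up for illustration, and the function name `union_mask` is hypothetical; the actual box generation strategy is the one in Section 2.2.1.

```python
def union_mask(height, width, boxes):
    """Return a height x width grid with 1 inside any box and 0 elsewhere.

    Boxes are (x1, y1, x2, y2) with exclusive right/bottom edges and are
    clipped to the image bounds.
    """
    mask = [[0] * width for _ in range(height)]
    for x1, y1, x2, y2 in boxes:
        for y in range(max(0, y1), min(height, y2)):
            for x in range(max(0, x1), min(width, x2)):
                mask[y][x] = 1
    return mask

# Two overlapping illustrative part boxes on a 4 x 4 grid.
mask = union_mask(4, 4, [(0, 0, 2, 2), (1, 1, 3, 3)])
covered = sum(sum(row) for row in mask)
```

Everything outside the union stays at 0, which is the sense in which the stitched part boxes exclude background and neighbouring objects.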

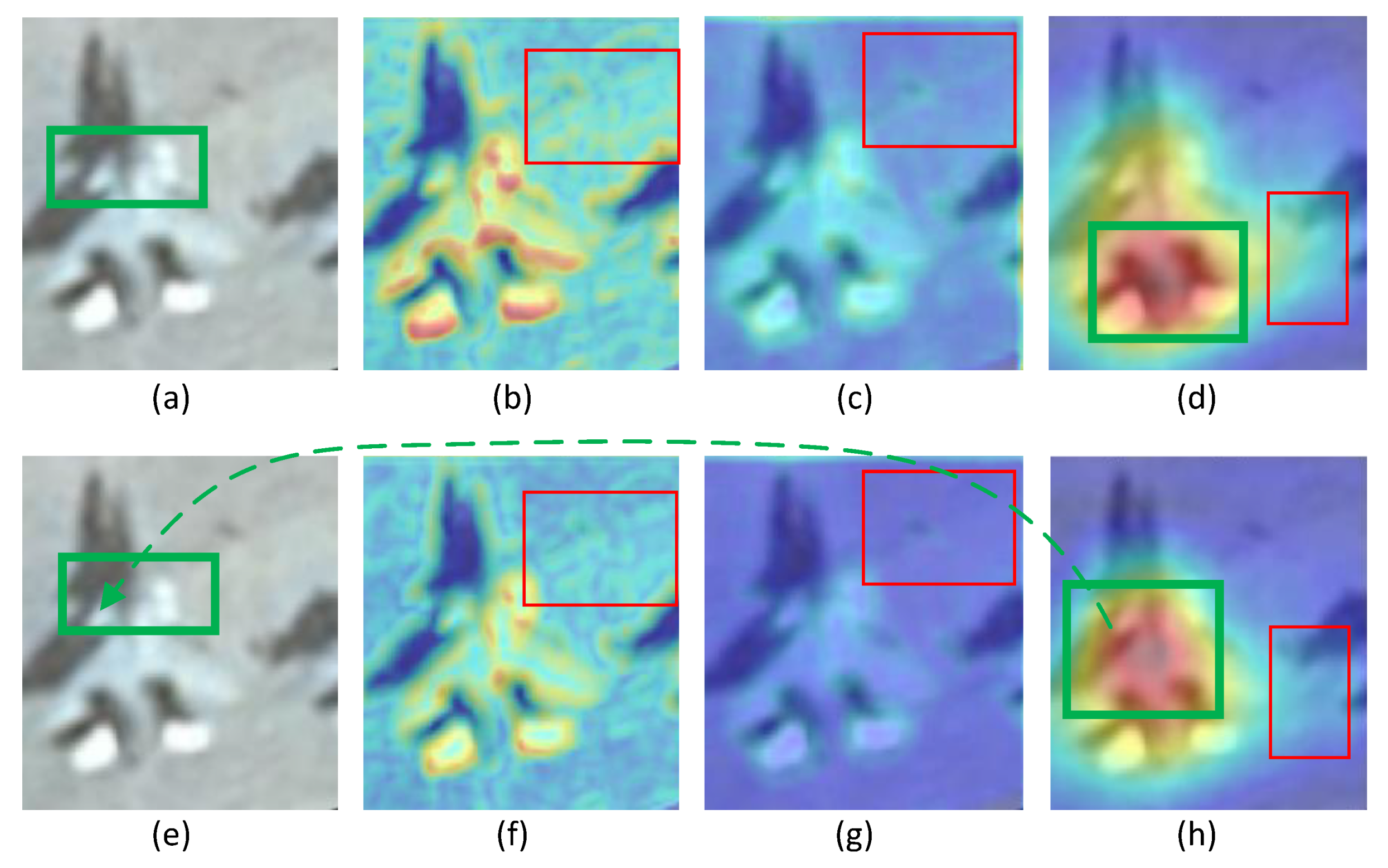

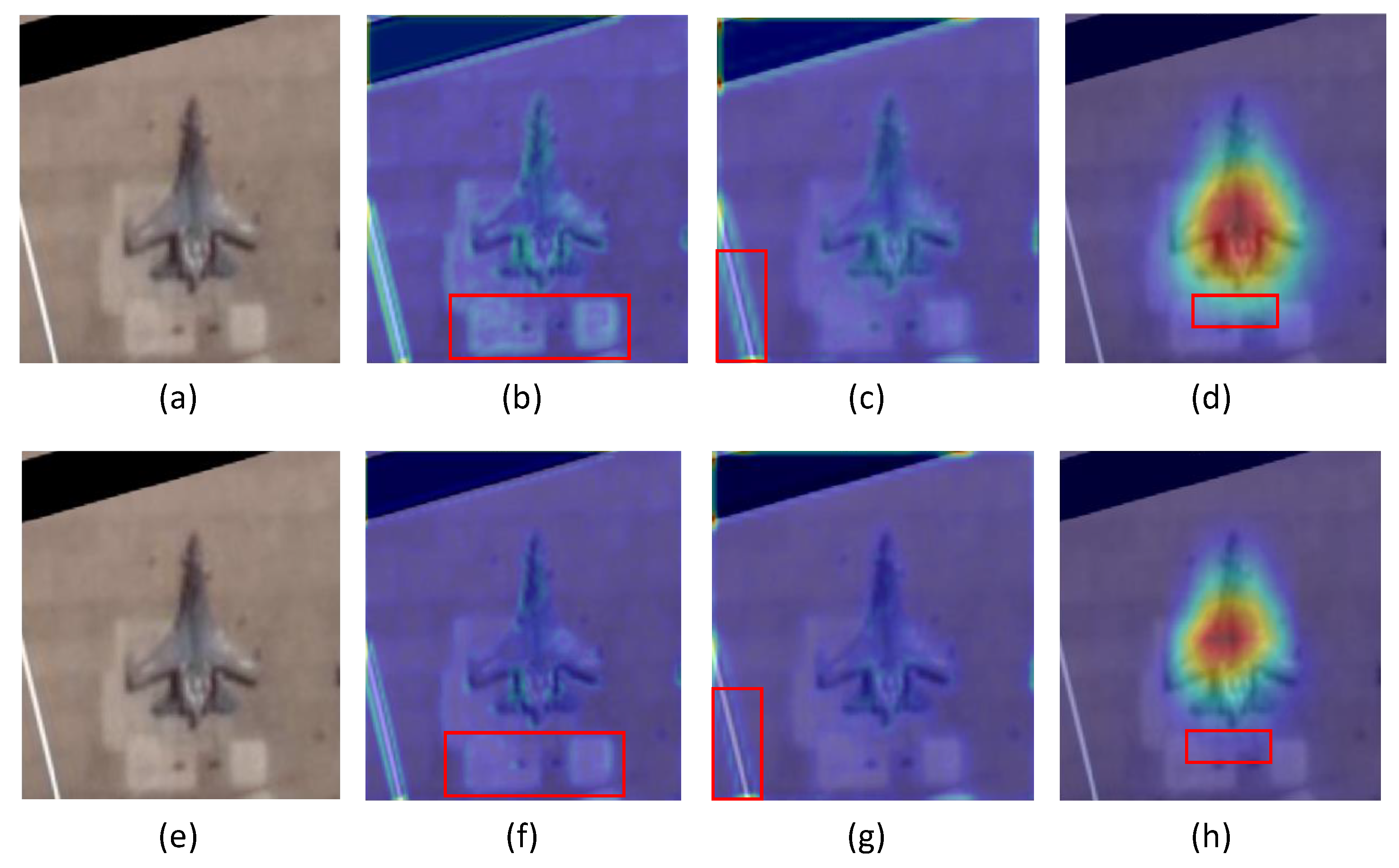

3.5. The Influence of Non-locally Enhanced Operation

- (1) With the non-locally enhanced module added, the focused area on the conv3_1 heatmap is more accurate and more concentrated than without the module, indicating that non-locally enhanced operations can guide the network to focus on effective details and ignore useless features.

- (2) With the non-locally enhanced module added, the heatmap of conv2_2 changes significantly compared with that without the module. Because all parameters in the network are trained jointly, the module influences not only the feature maps that follow it but also the feature maps that precede it.

- (3) In the high-level semantic feature maps of conv5_4, the heatmap with the non-locally enhanced module is significantly more focused on the aircraft itself and rarely diffuses to irrelevant areas such as the ground, indicating that the effect of the module in shallow layers is transferred to the high-level semantics and improves the final representation and classification ability of the feature extractor.
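For readers who want a concrete picture of the kind of operation discussed above, the following is a minimal sketch of a non-local operation in the style of the non-local neural networks of Wang et al. listed in the references. It is a simplification and not necessarily the module of Section 2.1.3: the learned embeddings are replaced by the identity, the output projection is omitted, and the function name `non_local` is hypothetical. Each position is updated with a softmax-weighted sum over all positions, plus a residual connection.

```python
import math

def non_local(features):
    """Apply a simplified non-local operation to N position vectors.

    features: list of N vectors (lists of C floats), one per spatial position.
    Returns z_i = x_i + sum_j softmax_j(x_i . x_j) * x_j for every position i.
    Assumption: identity embeddings and no learned output projection.
    """
    out = []
    for xi in features:
        # Pairwise similarity between position i and every position j.
        scores = [sum(a * b for a, b in zip(xi, xj)) for xj in features]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        # Weighted average of all positions, channel by channel.
        yi = [sum(w * xj[c] for w, xj in zip(weights, features)) / total
              for c in range(len(xi))]
        # Residual connection keeps the original feature.
        out.append([a + b for a, b in zip(xi, yi)])
    return out

# Two identical positions attend to each other equally.
z = non_local([[1.0, 0.0], [1.0, 0.0]])
```

Because every position attends to every other position, a response at one wing tip can depend on the other wing tip regardless of the distance between them, which a stack of small convolutions only achieves after many layers.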

3.6. The Comparative Experiment of Loss Functions

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Wu, Q.; Hao, S.; Xian, S.; Zhang, D.; Wang, H. Aircraft Recognition in High-Resolution Optical Satellite Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2014, 12, 112–116.

2. Zhang, Y.; Sun, H.; Zuo, J.; Wang, H.; Xu, G.; Sun, X. Aircraft type recognition in remote sensing images based on feature learning with conditional generative adversarial networks. Remote Sens. 2018, 10, 1123.

3. Huang, H.; Huang, J.; Feng, Y.; Liu, Z.; Wang, T.; Chen, L.; Zhou, Y. Aircraft Type Recognition Based on Target Track. J. Phys. Conf. Ser. 2018, 1061, 012015.

4. Fu, K.; Dai, W.; Zhang, Y.; Wang, Z.; Yan, M.; Sun, X. Multicam: Multiple class activation mapping for aircraft recognition in remote sensing images. Remote Sens. 2019, 11, 544.

5. Dudani, S.A.; Breeding, K.J.; McGhee, R.B. Aircraft identification by moment invariants. IEEE Trans. Comput. 1977, 100, 39–46.

6. Liu, F.; Peng, Y.U.; Liu, K. Research concerning aircraft recognition of remote sensing images based on ICA Zernike invariant moments. CAAI Trans. Intell. Syst. 2011, 6, 51–56.

7. Zhang, Y.N.; Yao, G.Q. Plane Recognition Based on Moment Invariants and Neural Networks. Comput. Knowl. Technol. 2009, 5, 3771–3778.

8. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999.

9. Hsieh, J.W.; Chen, J.M.; Chuang, C.H.; Fan, K.C. Aircraft type recognition in satellite images. IEE Proc. Vis. Image Signal Process. 2005, 152, 307–315.

10. Xu, C.; Duan, H. Artificial bee colony (ABC) optimized edge potential function (EPF) approach to target recognition for low-altitude aircraft. Pattern Recognit. Lett. 2010, 31, 1759–1772.

11. Ge, L.; Xian, S.; Fu, K.; Wang, H. Aircraft Recognition in High-Resolution Satellite Images Using Coarse-to-Fine Shape Prior. IEEE Geosci. Remote Sens. Lett. 2013, 10, 573–577.

12. An, Z.; Fu, K.; Wang, S.; Zuo, J.; Wang, H. Aircraft Recognition Based on Landmark Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1413–1417.

13. Shao, D.; Zhang, Y.; Wei, W. An aircraft recognition method based on principal component analysis and image model-matching. Chin. J. Stereol. Image Anal. 2009, 3, 7.

14. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

15. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

17. Henan, W.; Dejun, L.; Hongwei, W.; Ying, L.; Xiaorui, S. Research on aircraft object recognition model based on neural networks. In Proceedings of the 2012 International Conference on Computer Science and Electronics Engineering, Hangzhou, China, 23–25 March 2012.

18. Fang, Z.; Yao, G.; Zhang, Y. Target recognition of aircraft based on moment invariants and BP neural network. In Proceedings of the World Automation Congress 2012, Puerto Vallarta, Mexico, 24–28 June 2012.

19. Diao, W.; Xian, S.; Dou, F.; Yan, M.; Wang, H.; Fu, K. Object recognition in remote sensing images using sparse deep belief networks. Remote Sens. Lett. 2015, 6, 745–754.

20. Zuo, J.; Xu, G.; Fu, K.; Xian, S.; Hao, S. Aircraft Type Recognition Based on Segmentation With Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, PP, 1–5.

21. Kim, H.; Choi, W.C.; Kim, H. A hierarchical approach to extracting polygons based on perceptual grouping. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 2–5 October 1994.

22. Randall, J.; Guan, L.; Zhang, X.; Li, W. Hierarchical cluster model for perceptual image processing. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002.

23. Michaelsen, E.; Doktorski, L.; Soergel, U.; Stilla, U. Perceptual grouping for building recognition in high-resolution SAR images using the GESTALT-system. In Proceedings of the 2007 Urban Remote Sensing Joint Event, Paris, France, 11–13 April 2007.

24. Csurka, G.; Dance, C.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision, ECCV, Prague, Czech Republic, 11–14 May 2004.

25. Batista, N.C.; Lopes, A.P.B.; Araújo, A.d.A. Detecting buildings in historical photographs using bag-of-keypoints. In Proceedings of the 2009 XXII Brazilian Symposium on Computer Graphics and Image Processing, Rio de Janeiro, Brazil, 11–15 October 2009.

26. Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the IEEE Computer Society Conference on Computer Vision & Pattern Recognition, San Diego, CA, USA, 20–26 June 2005.

27. Zhong, Y.; Feng, R.; Zhang, L. Non-Local Sparse Unmixing for Hyperspectral Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1889–1909.

28. Deledalle, C.A.; Tupin, F.; Denis, L. Polarimetric SAR estimation based on non-local means. In Proceedings of the Geoscience & Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010.

29. Iwabuchi, H.; Hayasaka, T. A multi-spectral non-local method for retrieval of boundary layer cloud properties from optical remote sensing data. Remote Sens. Environ. 2003, 88, 294–308.

30. Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-based R-CNNs for fine-grained category detection. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

31. Uijlings, J.R.R.; Sande, K.E.A.V.D.; Gevers, T.; Smeulders, A.W.M. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

32. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014.

33. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset. 2011. Available online: http://www.vision.caltech.edu/visipedia/CUB-200-2011.html (accessed on 13 December 2016).

34. Huang, S.; Xu, Z.; Tao, D.; Zhang, Y. Part-Stacked CNN for Fine-Grained Visual Categorization. Computer Vision & Pattern Recognition. 2016. Available online: https://arxiv.org/abs/1512.08086 (accessed on 26 December 2015).

35. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

36. Peng, Y.; He, X.; Zhao, J. Object-Part Attention Model for Fine-grained Image Classification. IEEE Trans. Image Process. 2017, 1.

37. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

38. Li, G.; He, X.; Zhang, W.; Chang, H.; Dong, L.; Lin, L. Non-locally enhanced encoder-decoder network for single image de-raining. arXiv 2018, arXiv:1808.01491.

39. Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

40. Li, B.; Liu, Y.; Wang, X. Gradient harmonized single-stage detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27–31 January 2019.

41. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

42. Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A Benchmark Data Set for Performance Evaluation of Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

43. Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

44. Smith, L.N. Cyclical learning rates for training neural networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

45. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

| Method | Accuracy |
|---|---|
| AlexNet [14] | 70.89% |
| VGG19 [15] | 80.39% |
| ResNet18 [16] | 79.41% |
| Segmentation [20] | 83.25% |
| Extractor + Non-local | 86.55% |
| Extractor + PFF | 87.38% |
| Extractor + Non-local + PFF (Proposed Method) | 88.56% |

| Location of non-local module | Accuracy |
|---|---|
| After conv1_2 | 88.17% |
| After conv2_2 | 88.56% |
| After conv3_4 | 88.36% |
| After conv4_4 | 87.34% |
| After conv5_4 | 86.78% |

| Loss Function | Accuracy |
|---|---|
| Cross-Entropy | 88.56% |
| Focal Loss, γ = 1 | 88.64% |
| Focal Loss, γ = 2 | 88.78% |
| GHM-C Loss | 89.12% |
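The two focal loss rows differ only in the focusing parameter, usually written γ. As a hedged illustration of what that parameter does, the sketch below evaluates the standard per-sample focal loss, -(1 - p)^γ · log(p), where p is the predicted probability of the correct class. The optional class-balancing weight α of the original formulation is omitted, the probabilities are made-up values, and nothing here reproduces the training setup behind the table.

```python
import math

def focal_loss(p_true, gamma):
    """Per-sample focal loss without the alpha weighting term.

    p_true: predicted probability of the correct class, in (0, 1].
    gamma:  focusing parameter; gamma = 0 gives plain cross-entropy.
    """
    return -((1.0 - p_true) ** gamma) * math.log(p_true)

# An easy example (p = 0.9) is down-weighted far more than a hard one (p = 0.3).
easy = [focal_loss(0.9, g) for g in (0, 1, 2)]
hard = [focal_loss(0.3, g) for g in (0, 1, 2)]
```

Raising γ shrinks the loss of well-classified samples by the factor (1 - p)^γ, so the gradient is dominated by hard examples, which is the motivation for hard example mining in Section 2.3.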

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xiong, Y.; Niu, X.; Dou, Y.; Qie, H.; Wang, K. Non-locally Enhanced Feature Fusion Network for Aircraft Recognition in Remote Sensing Images. Remote Sens. 2020, 12, 681. https://doi.org/10.3390/rs12040681
