1. Introduction

The risk factors that endanger the integrity of cultural heritage have risen at alarming rate during the last decades. In addition to the natural erosion or weather events, other effects caused by climate change or some human acts of vandalism or terrorism must be considered [

1,

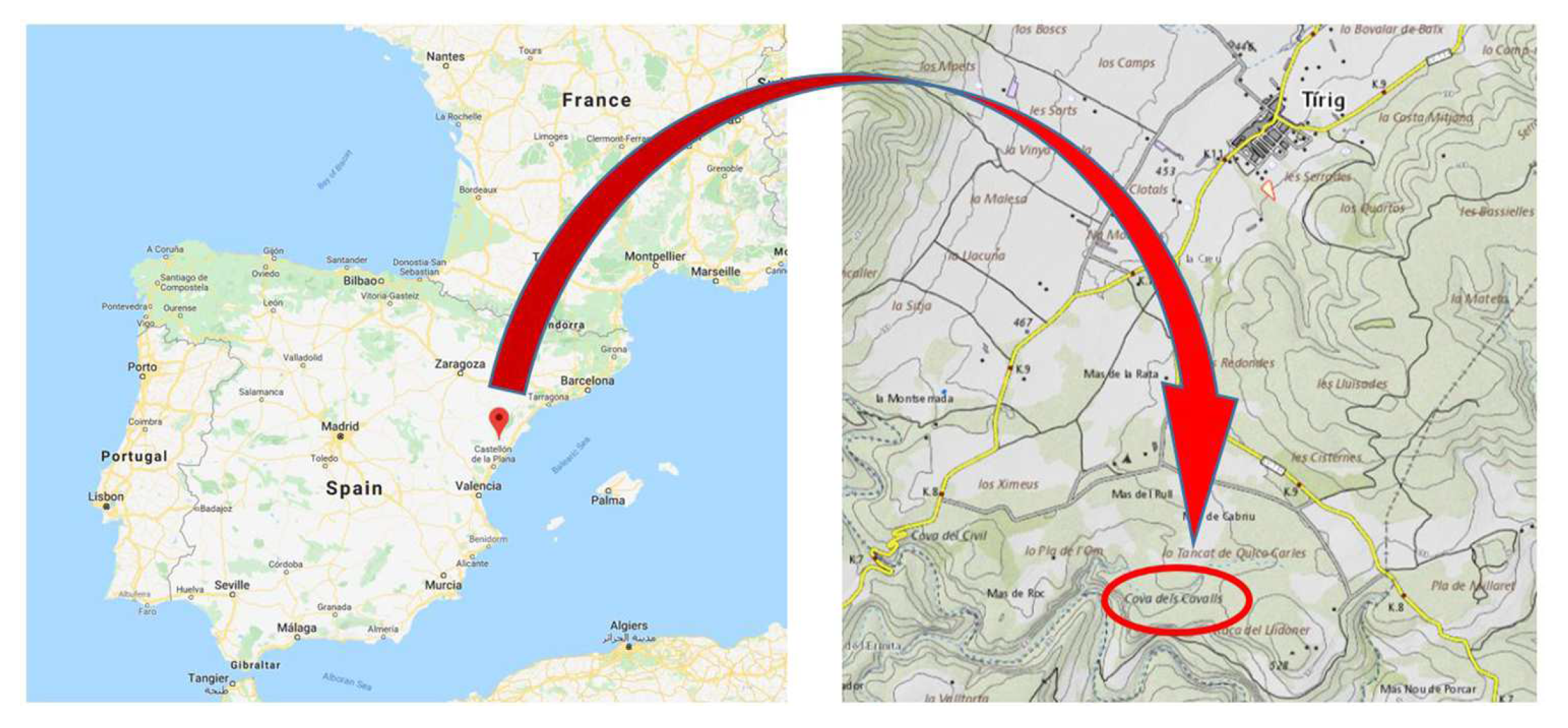

2]. Especially vulnerable are those heritage items located in open-air emplacements such as archaeological rock art painting caves. Thus, it is not surprising that the scientific community, particularly the heads of cultural heritage conservation, and society in general, are very sensitive about the potential threats that endanger our historical places.

Exhaustive and precise documentation is vital in order to adopt urgent and concrete actions to preserve our heritage objects, especially those in danger. To address this priority task, traditional methodologies should be supported by new state-of-the-art techniques. In this respect, the integration of geomatics techniques such as photogrammetry, remote sensing or 3D modelling is indispensable at present, and has been a considerable step forward for new cultural heritage applications [3]. Previous examples of such applications include 3D photogrammetric and laser scanning documentation [4,5,6]; automatic change detection techniques [2]; monitoring for deformation control [7]; microgravimetric surveying techniques [8]; imaging analysis [9,10]; and enhancement methods [11,12], to name just a few.

The use of novel technologies allows users to produce graphic and metrically accurate archaeological documentation, usually in a fast and effective way. However, there are still issues to be addressed, such as the precise specification of color, which is an essential attribute in cultural heritage documentation. An accurate measurement of color allows the current state of historical objects to be described, and gives trustworthy information about their degradation or aging over time [13].

Precise color recording is a priority, yet not trivial, task in heritage documentation [14,15]. Traditional methodologies for color description in archaeology are mostly based on visual observations, which are strictly subjective. In order to obtain precise color data, it is necessary to rely on objective and rigorous methodologies based on colorimetric criteria.

Although current cultural heritage documentation tasks increasingly rely on digital imaging techniques, it is well known that the color signals recorded by the camera sensor (generally in RGB format) are not colorimetrically sound [16]. The digital values acquired by the sensor are device dependent; that is, different camera sensors will record different responses even for the same scene under identical lighting conditions. Moreover, the RGB data do not directly correspond with the independent, physically based tristimulus values based on the Commission Internationale de l’Éclairage (CIE) standard colorimetric observer, nor do they satisfy the Luther–Ives condition [17].

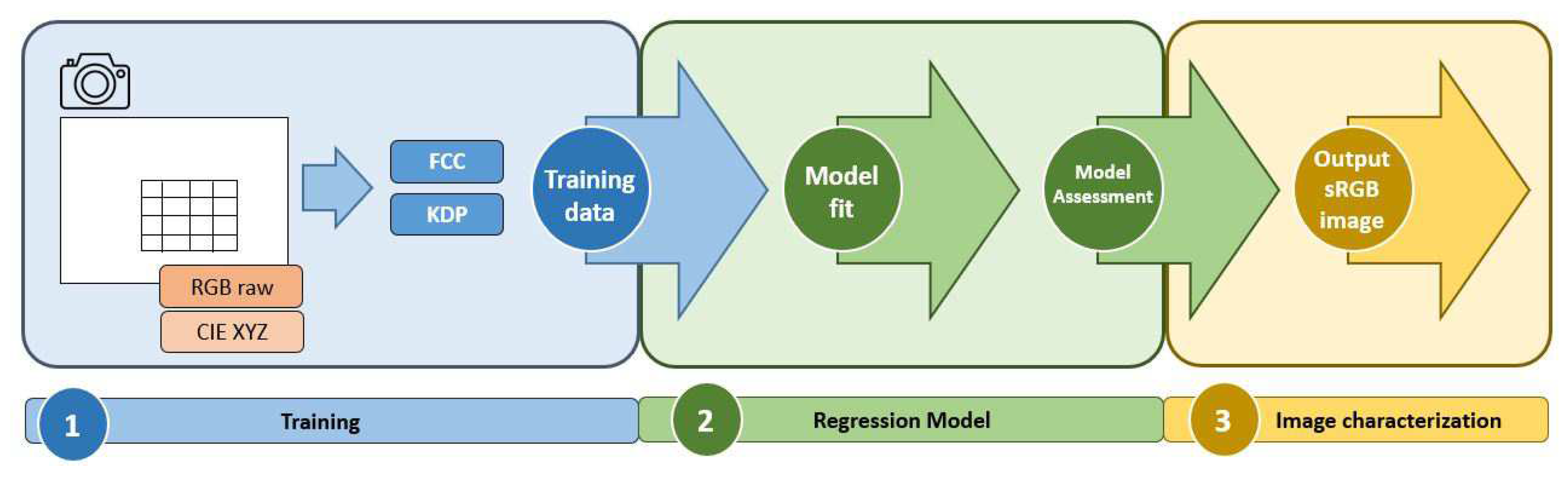

Therefore, a rigorous processing framework is still necessary in order to collect color data using a digital device in a meaningful way. A common and accepted approach is digital camera characterization [18,19,20]. The fundamental goal of the characterization procedure is to determine the mathematical relationship between the input device-dependent digital camera responses and the output device-independent tristimulus values of an input image scene. Thus, as a result of camera characterization, the tristimulus values for the full scene can be predicted on a pixel-by-pixel basis from the original digital image values captured [21].
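As a rough illustration of what such a mathematical relationship can look like, the sketch below fits a second-order polynomial by least squares from camera RGB values to CIE XYZ values and then applies it pixel by pixel. The polynomial model, the function names and the data layout are assumptions made for this example only; they are not necessarily the regression model used in this work.

```python
import numpy as np

def poly_features(rgb):
    """Second-order polynomial expansion of an (N, 3) array of RGB values."""
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.column_stack(
        [np.ones_like(r), r, g, b, r * g, r * b, g * b, r * r, g * g, b * b]
    )

def fit_characterization(train_rgb, train_xyz):
    """Least-squares coefficients mapping expanded RGB to CIE XYZ.

    train_rgb: (N, 3) camera responses of the training patches.
    train_xyz: (N, 3) measured tristimulus values of the same patches.
    """
    design = poly_features(np.asarray(train_rgb, dtype=float))
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(train_xyz, dtype=float), rcond=None)
    return coeffs  # shape (10, 3)

def characterize(image_rgb, coeffs):
    """Predict XYZ for every pixel of an (H, W, 3) image."""
    h, w, _ = image_rgb.shape
    flat = np.asarray(image_rgb, dtype=float).reshape(-1, 3)
    return (poly_features(flat) @ coeffs).reshape(h, w, 3)
```

With measured patch data, `fit_characterization` would be called once on the training patches and `characterize` on each image taken under the same acquisition conditions.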

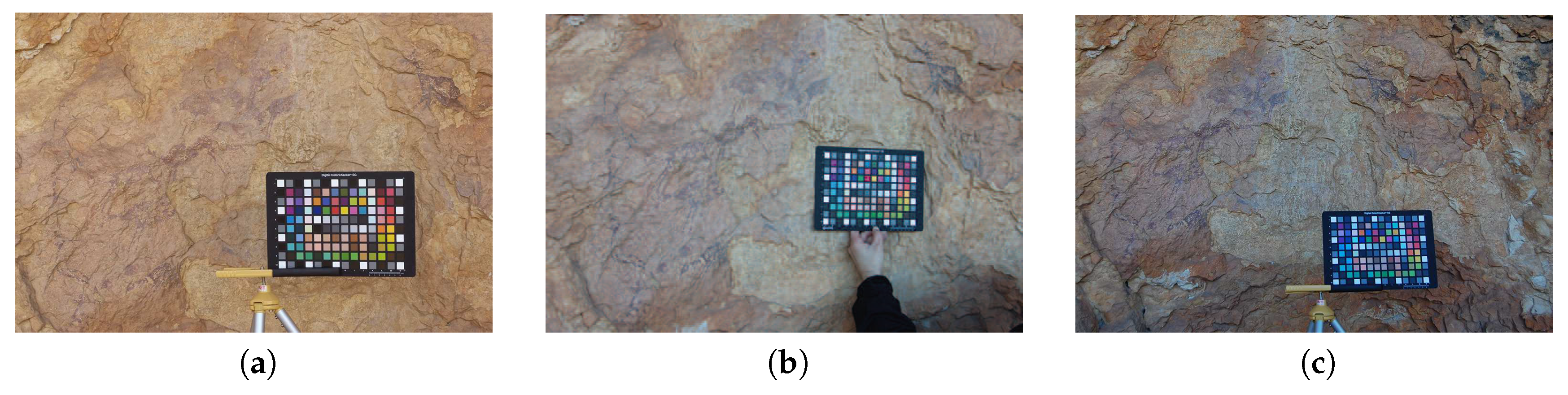

Some factors to consider during the characterization procedure are the camera built-in sensor, the camera parameters during the photographic acquisition phase, the size and color properties of the training data [22,23], and the regression model used [24]. The sensor has an effect not only in terms of noise but also on the characterized output images [25]. Thus, the interpolation method selected must be properly adapted to the data in order to offer a suitable graphic solution. The number of color patches measured and their distribution also play a decisive role [26].

Manufactured color charts are designed to cover the maximum range of imaged colors in photographic or industrial applications. However, in most applications it is not necessary to use the entire set of color patches as a training set, since redundant data could be entered into the regression model. In addition, color charts are not developed specifically for archaeological purposes, where only a few colors are present in natural scenes. Thus, an adequate selection of training samples for the regression model is crucial for quality estimations [22,27,28]. Our previous studies showed that accurate results can be achieved using a reduced number of properly selected samples [24].

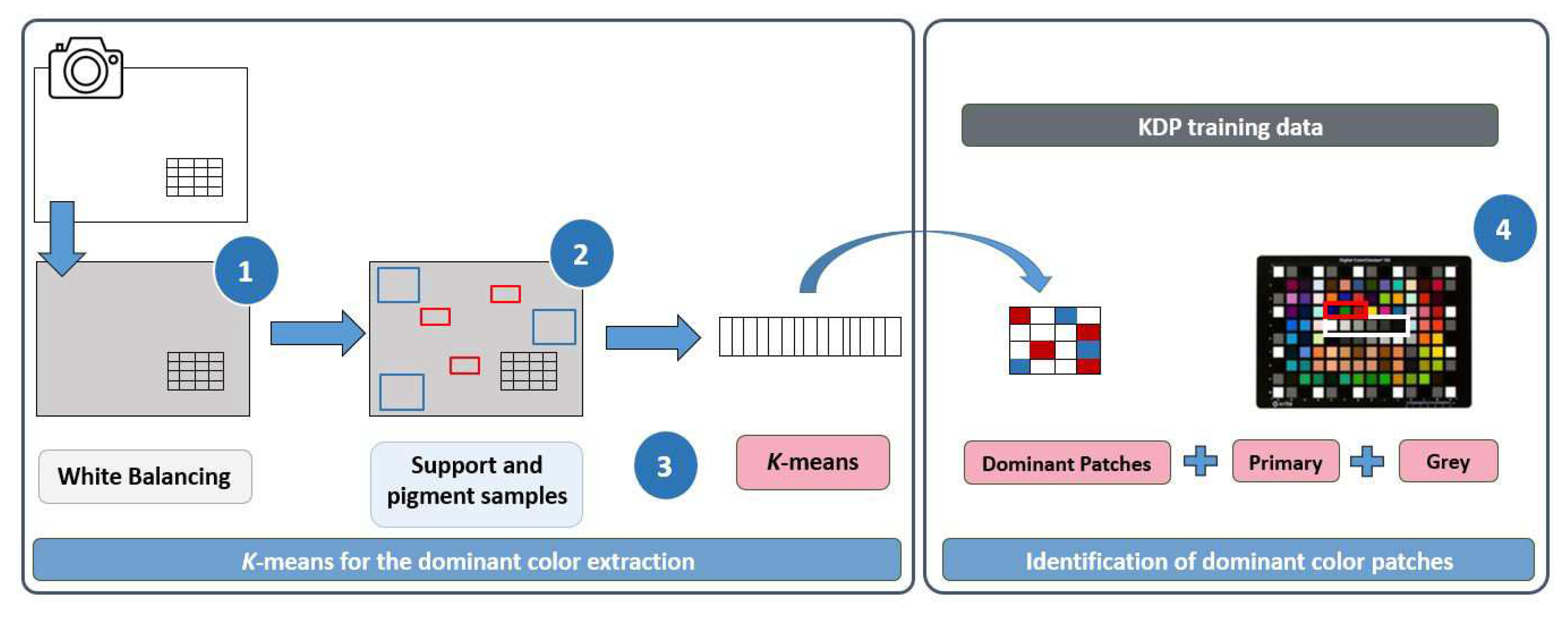

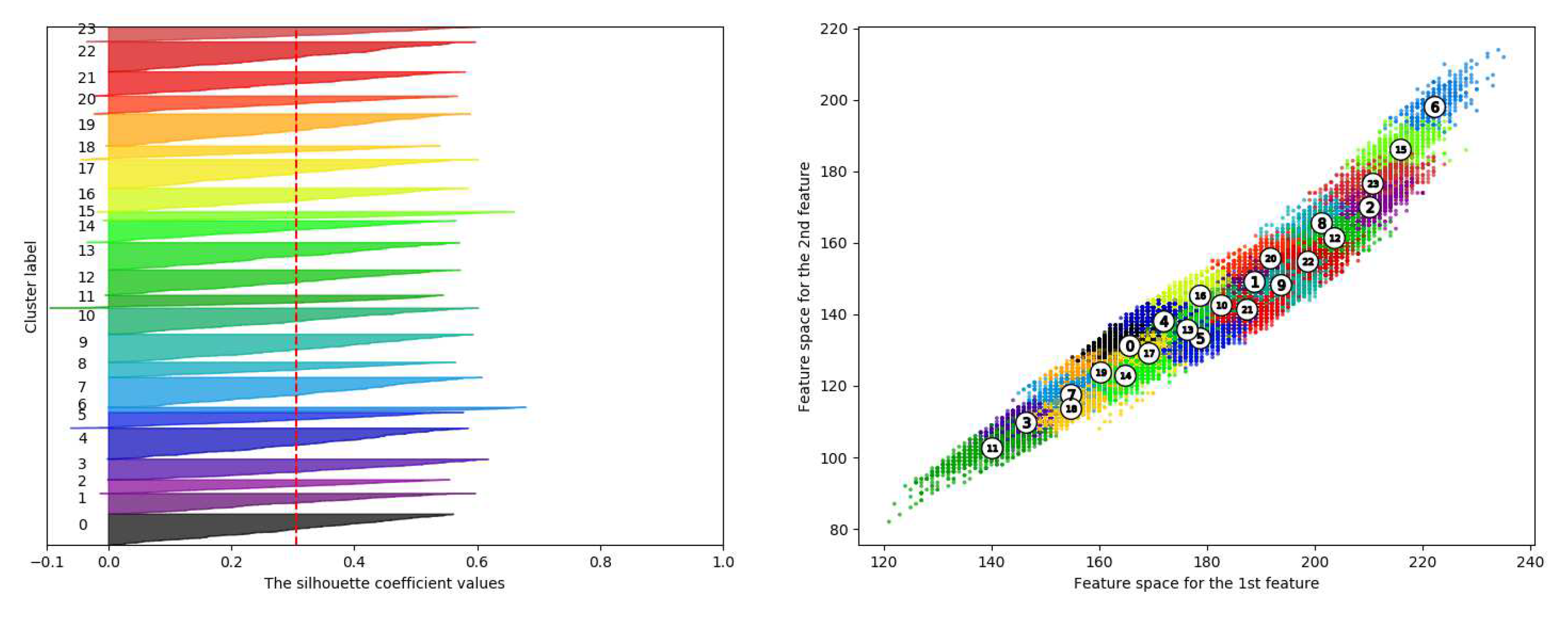

Accordingly, and following the lines established in our previous investigations, in this paper we propose a novel framework for the optimal selection of color samples as training data. The algorithm is based on a K-means clustering technique. We call this procedure Patch Adaptive Selection with K-means, or P-ASK. The use of the P-ASK framework allows us to extract the dominant colors from a digital image and to identify their corresponding chips in the color chart used as a colorimetric reference.

The aim of the P-ASK dominant color patch selection is to carry out the characterization with this color sample set, instead of using the common approach based on the whole color chart. With the P-ASK approach, it is possible to reduce the number of training samples without loss of colorimetric quality by using the samples most representative of the chromatic range of the input scene. However, the main contribution of this paper is not only the framework for the K-means methodology but also the practical assessment of the results, specifically for properly recording and documenting the color of rock art scenes.
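The two steps just described, extracting dominant colors and identifying the corresponding chart chips, can be sketched as follows. This is a minimal illustration using the `KMeans` class from scikit-learn; the function names, the nearest-neighbor matching by Euclidean distance and the assumption that pixels and chart patches are expressed in the same color space are choices made for this example, not a description of the exact P-ASK implementation.

```python
import numpy as np
from sklearn.cluster import KMeans

def dominant_colors(pixels, k, seed=0):
    """Cluster an (N, 3) array of pixel colors and return the k centroids."""
    km = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(pixels)
    return km.cluster_centers_

def select_patches(centers, chart_colors):
    """Indices of the chart patches closest to each dominant color.

    centers:      (k, 3) dominant colors from the scene.
    chart_colors: (P, 3) colors of the chart patches, same color space.
    Several centroids may map to the same patch, so duplicates are removed.
    """
    # Pairwise Euclidean distances, shape (k, P)
    dist = np.linalg.norm(centers[:, None, :] - chart_colors[None, :, :], axis=2)
    return np.unique(dist.argmin(axis=1))
```

The returned indices identify the reduced training set, which would then replace the full chart when fitting the characterization model.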

Several methodologies for optimal color sample selection can be found in the literature, such as those based on metric formulas [27], adaptive training sets [26,29], or the minimization of root-mean-square (RMS) errors [30]. Olejnik-Krugly and Korytkowski developed a procedure based on ICC profiles using a standard ColorChecker and a set of custom color targets obtained by means of direct measurements on the artwork [31].

Clustering analysis is widely applied for the extraction of representative samples [28,29,32,33]. Eckhard et al. proposed a novel clustering-based adaptive global training set selection and compared different well-established global methods for the colorimetric quality assessment of printed inks [28]. In particular, Xu et al. applied a K-means clustering algorithm to multispectral images to extract a set of training samples directly from the art painting for spectral image reconstruction applications [33]. These studies showed that better results were achieved using optimal color samples.

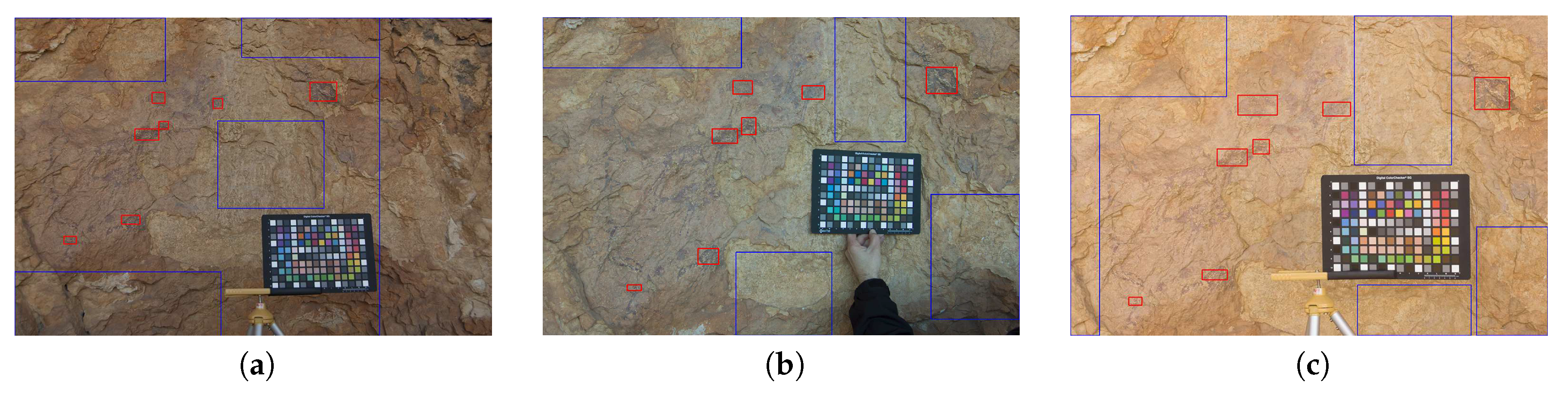

We tested the P-ASK framework proposed in this paper on a set of rock art scenes captured with different digital cameras. The characterization approach with dominant colors offered numerous advantages, as reported in the following sections. The rest of the paper is structured as follows. In Section 2, we describe in detail the theoretical basis and the equipment required to perform the proposed P-ASK framework. Section 3 contains the results obtained from the processing of laboratory images and rock art scenes. Finally, in Section 4 and Section 5 we present the discussion and the main conclusions of this research.

4. Discussion

The generic aim of clustering applications is the natural grouping of specimens that share similar properties within a data set. In this study, we established the P-ASK framework, which leverages the K-means technique to automatically select a simplified set of training color patches that properly captures the dominant color characteristics of rock art scenes.

Since the K-means algorithm is sensitive to the initial approximations given by the user [56,57], a set of preliminary experiments was conducted to set proper values for the number of clusters, the working algorithm and the initialization method. According to our results, the suggested parameters to run K-means were appropriate, and the algorithm implemented in the KMeans class of the scikit-learn library proved to be a valid option for clustering operations as well.

However, the K-means algorithm is slow for large data sets, particularly those based on digital images. In this paper, we suggest an alternative approach to extract the dominant colors, which consists of using a reduced number of image samples clipped from the full image. By using image sampling, the running time was considerably reduced. For instance, the run time decreased from 18.2 min to 4.9 min when using the Fujifilm IS PRO image for K = 24 clusters (cf. Table 3 with Table 1 in Section 3).
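The sampling idea can be sketched as below: instead of clustering every pixel, only the pixels inside a few rectangular clips are collected and passed to K-means. The clip format `(row, col, height, width)` and the function name are hypothetical, introduced here only to illustrate the approach.

```python
import numpy as np

def pixels_from_clips(image, clips):
    """Stack the pixels of rectangular clips into a single (N, C) array.

    image: (H, W, C) array.
    clips: iterable of (row, col, height, width) tuples, in pixel units.
    """
    channels = image.shape[2]
    parts = [
        image[r:r + h, c:c + w].reshape(-1, channels)
        for r, c, h, w in clips
    ]
    return np.vstack(parts)
```

The resulting array is much smaller than the full image, which is where the reduction in clustering time comes from; the choice of clips then determines which scene colors are represented.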

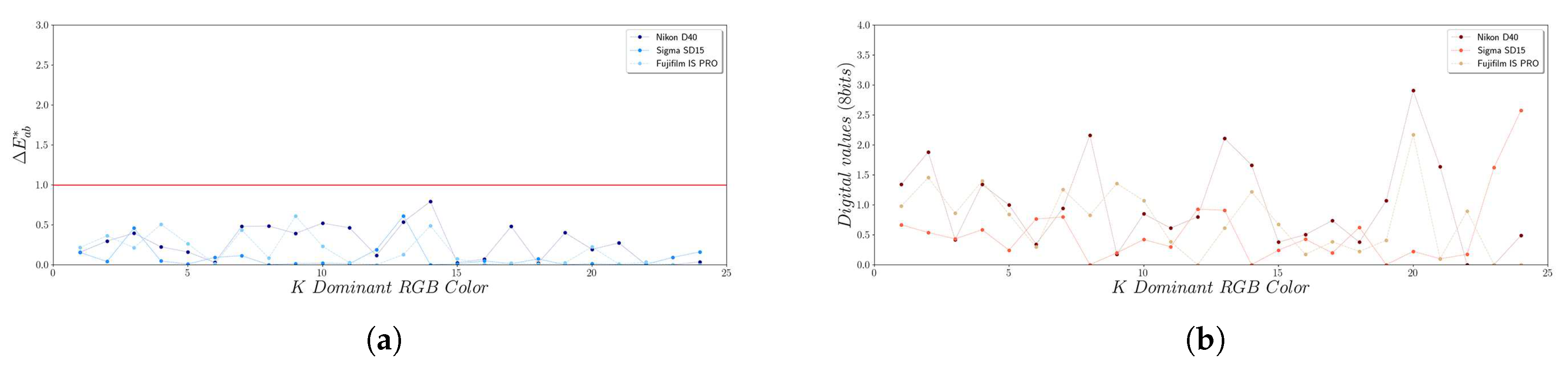

The experiment conducted to identify the effect of randomness on the K-means clustering technique showed that the dominant color set returned from multiple runs was highly consistent [48]. In this regard, Figure 8 shows color differences of less than 1 CIELAB unit for the dominant colors obtained in 100 realizations, with similarly negligible differences in units of pixel RGB digital values.
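A consistency check of this kind can be sketched as follows, assuming the dominant colors of each run are already expressed in CIELAB and that the color difference is the Euclidean CIELAB distance (the CIE 1976 formula). Both the formula choice and the nearest-center matching between runs are assumptions of this example.

```python
import numpy as np

def delta_e_ab(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance between CIELAB triplets."""
    diff = np.asarray(lab1, dtype=float) - np.asarray(lab2, dtype=float)
    return np.linalg.norm(diff, axis=-1)

def max_run_to_run_difference(reference, other):
    """Largest difference between each reference center and its closest
    center in another run. Both inputs are (k, 3) arrays of CIELAB values;
    cluster order may differ between runs, hence the nearest-center match.
    """
    dist = delta_e_ab(reference[:, None, :], other[None, :, :])  # (k, k)
    return dist.min(axis=1).max()
```

Repeating the clustering with different random seeds and evaluating `max_run_to_run_difference` against a reference run gives one number per realization, which can be compared against a threshold such as 1 CIELAB unit.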

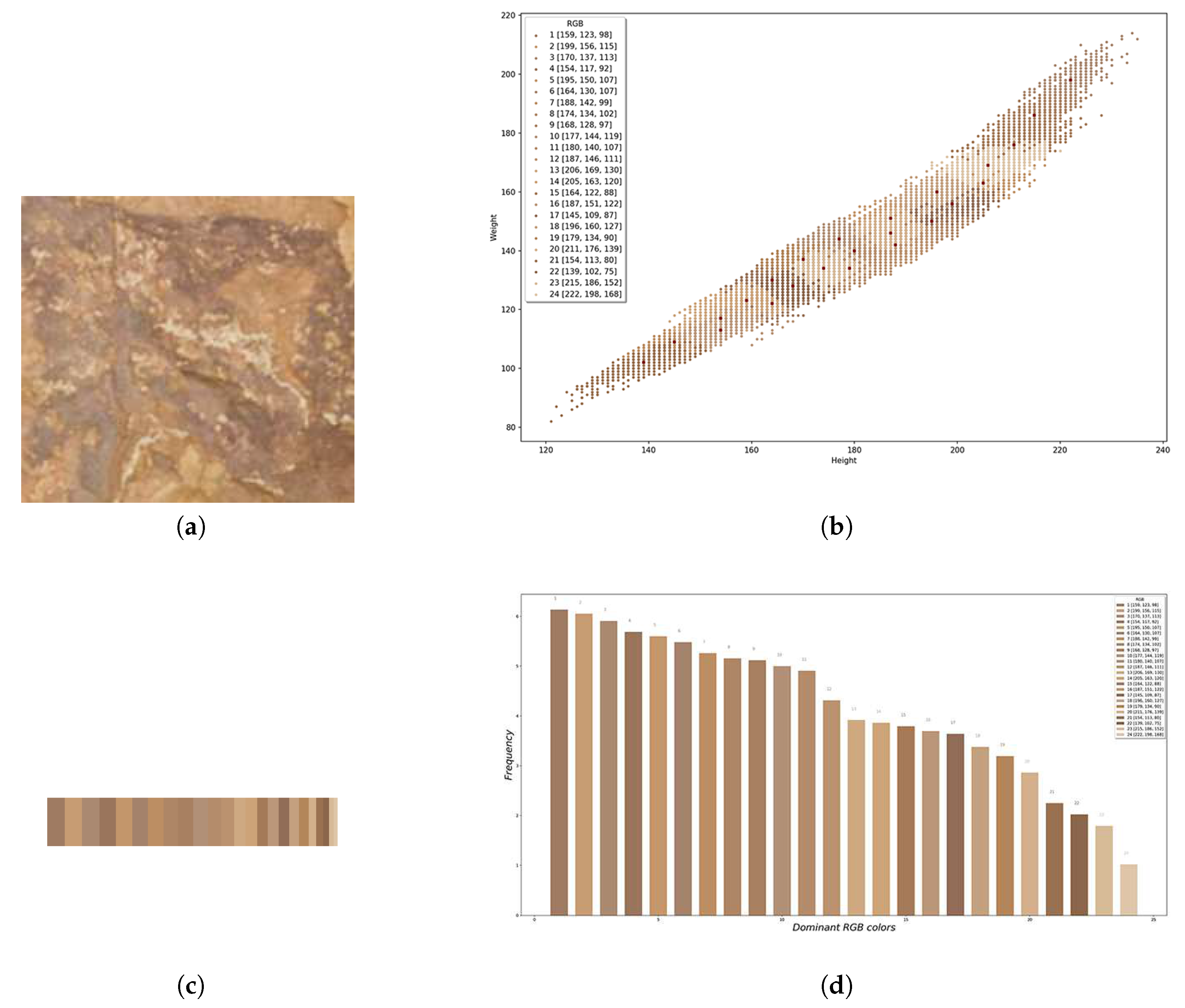

Moreover, the capability of P-ASK to identify the dominant colors in the ColorChecker offered very good results (see Table 4 and Figure 13), and allowed us to extract a set of color chips from the chart to create a reduced training data set for camera characterization in a simple and fast way. By using this framework, we showed that the characterization approach based on the KDP training data gives accurate results which parallel those obtained in common practice, that is, using the whole set of color chips for model learning.

Although previous studies recommend the use of large training data sets for camera characterization [

22,

41,

73,

74], the results obtained with the P-ASK framework show that it is possible to use a reduced number of training samples without loss of accuracy in colorimetric terms. It is worth noting that the CIE XYZ residuals (

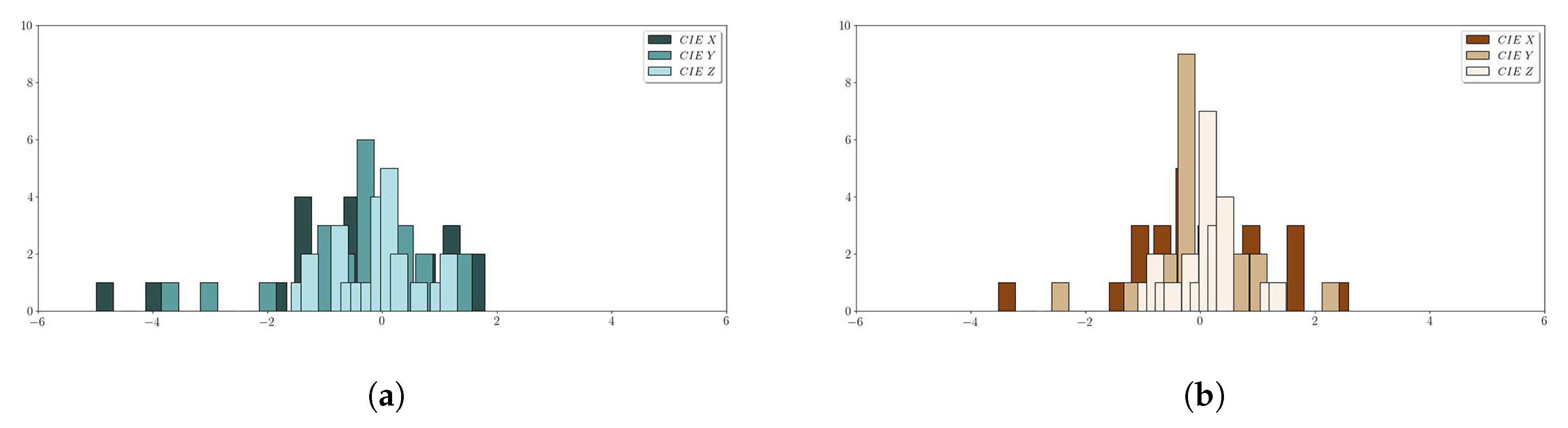

Table 5) and the

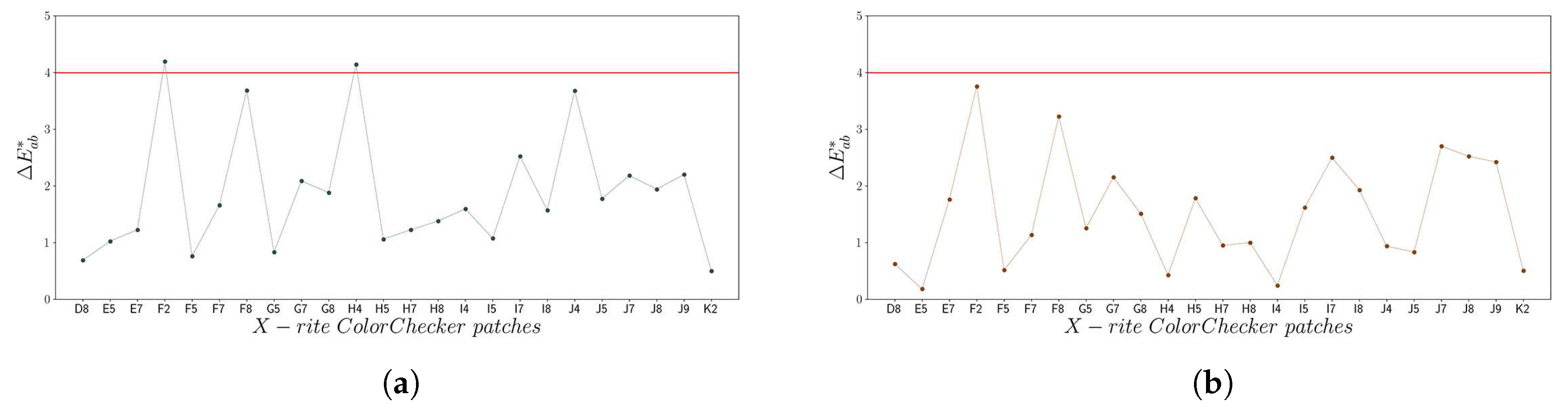

color differences (

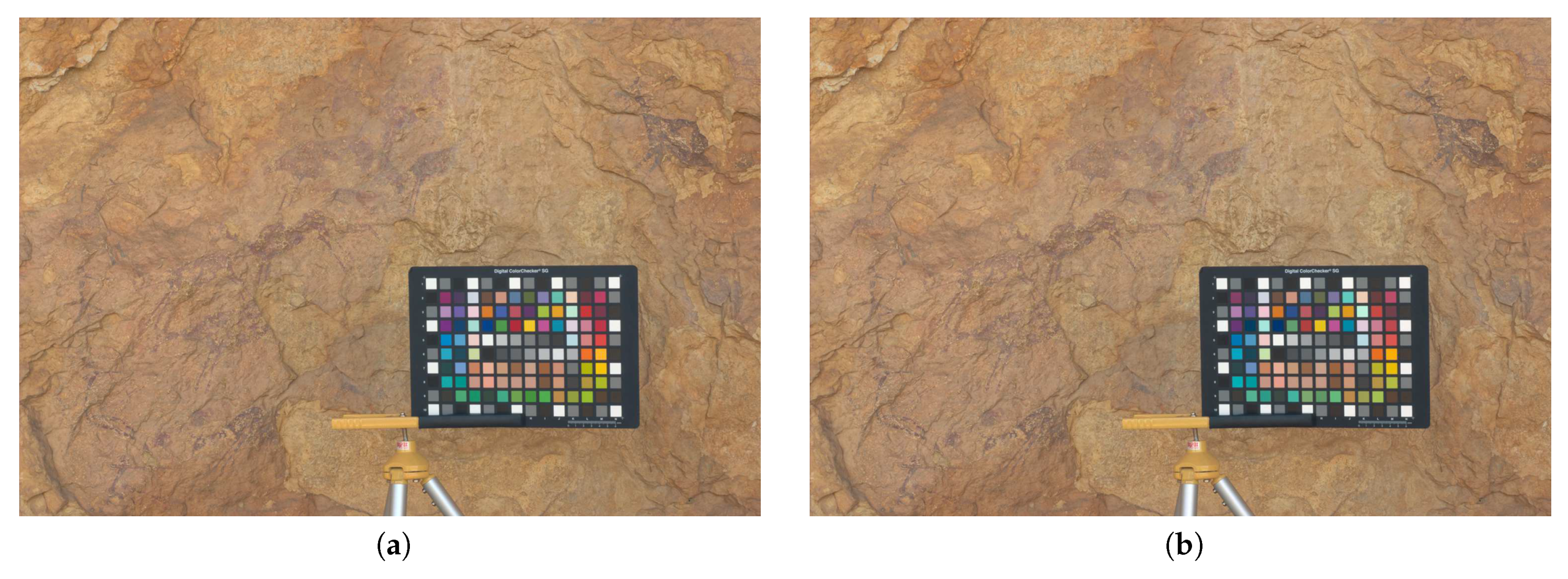

Table 6) obtained using the KPD data set are in fact slightly better. In view of the outcome images, it is evident that sRGB characterized images were satisfactorily obtained (

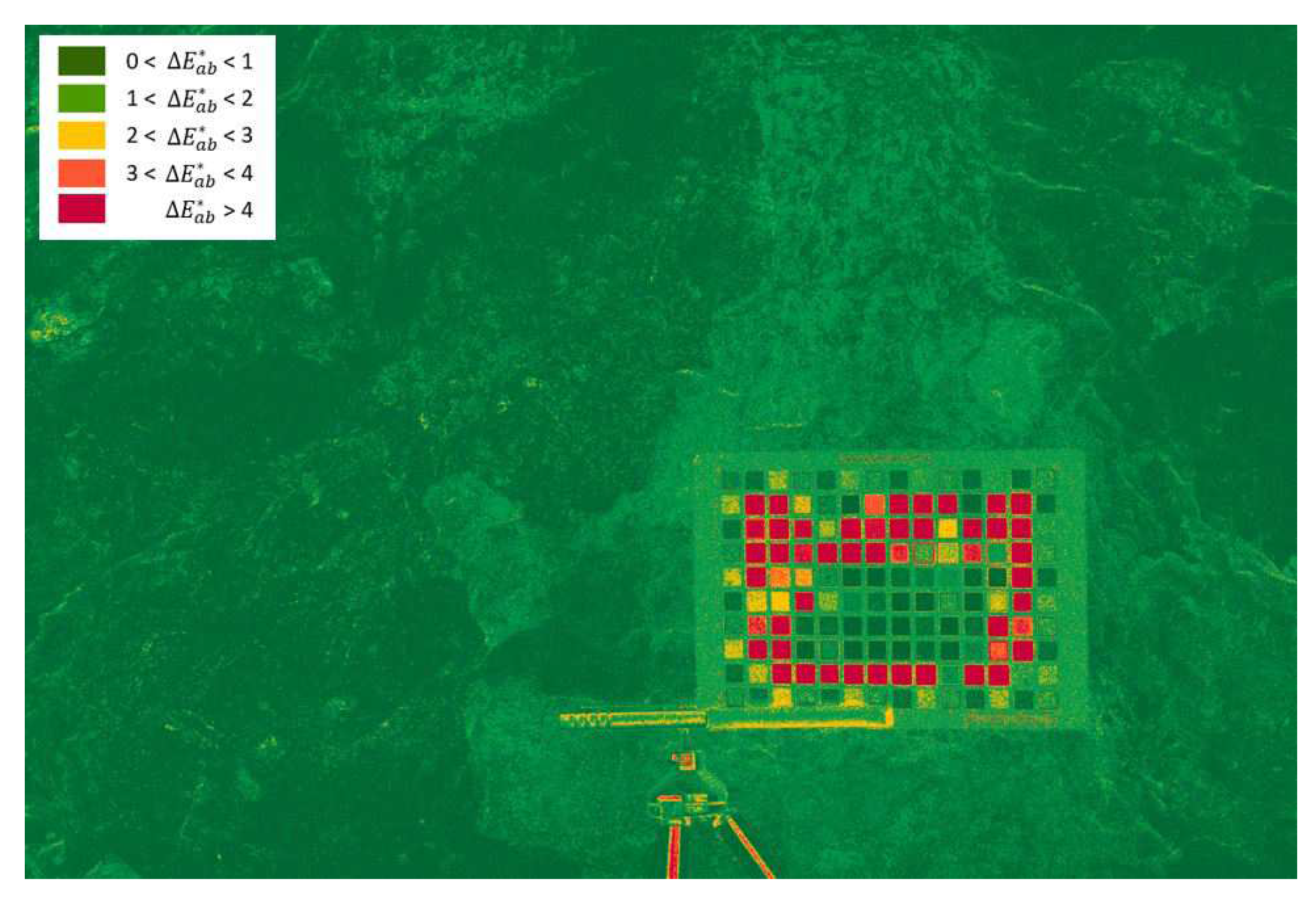

Figure 16), which is confirmed by the color difference map image where differences under 2 CIELAB units prevail over the whole image, particularly in the area of interest for archaeological applications (

Figure 17).

As an aside, we would like to note that the specific imaging hardware, i.e., the digital camera used for graphical documentation, is a key point that must be considered prior to characterization. We know from previous research that built-in imaging sensors have a considerable impact on the final outcome. In this sense, we presented the results of the Fujifilm IS PRO camera, which is known to work very well, not only in the characterization procedure [25], but also in terms of image noise [75].

5. Conclusions

In this paper, we propose a new P-ASK framework for consistent and accurate archaeological color documentation. The graphic and numeric results obtained after the characterization of rock art images are highly encouraging and confirm that P-ASK is a suitable technique to use in the context of the image-based camera characterization procedure. Our characterization approach based on a reduced set of dominant colors offers several advantages. On the one hand, it reduces the training set size, and thereby the computing time required for model training is shorter than in the regular approach with all color chips. On the other hand, there is an improvement derived from the use of a reduced number of specific color patches, which fit the chromatic range of the scene better and yield better results in the output characterized images. We consider this point a major contribution of this paper.

The tests conducted in our study confirmed that P-ASK is robust to the randomness of the K-means stage and works very well with a specific sampling of the elements of interest in the rock art scene. This point leads us to envision future research lines focused on developing specific color charts for archaeological applications, and even for specific cultural heritage objects, monuments and sites. In summary, the results achieved in this study show that P-ASK is a suitable tool for the proper and rigorous use of color camera characterization in archaeological documentation, and can be integrated into the regular documentation workflow with minimum effort and resources.