Quantitative Landscape Assessment Using LiDAR and Rendered 360° Panoramic Images

Abstract

1. Introduction

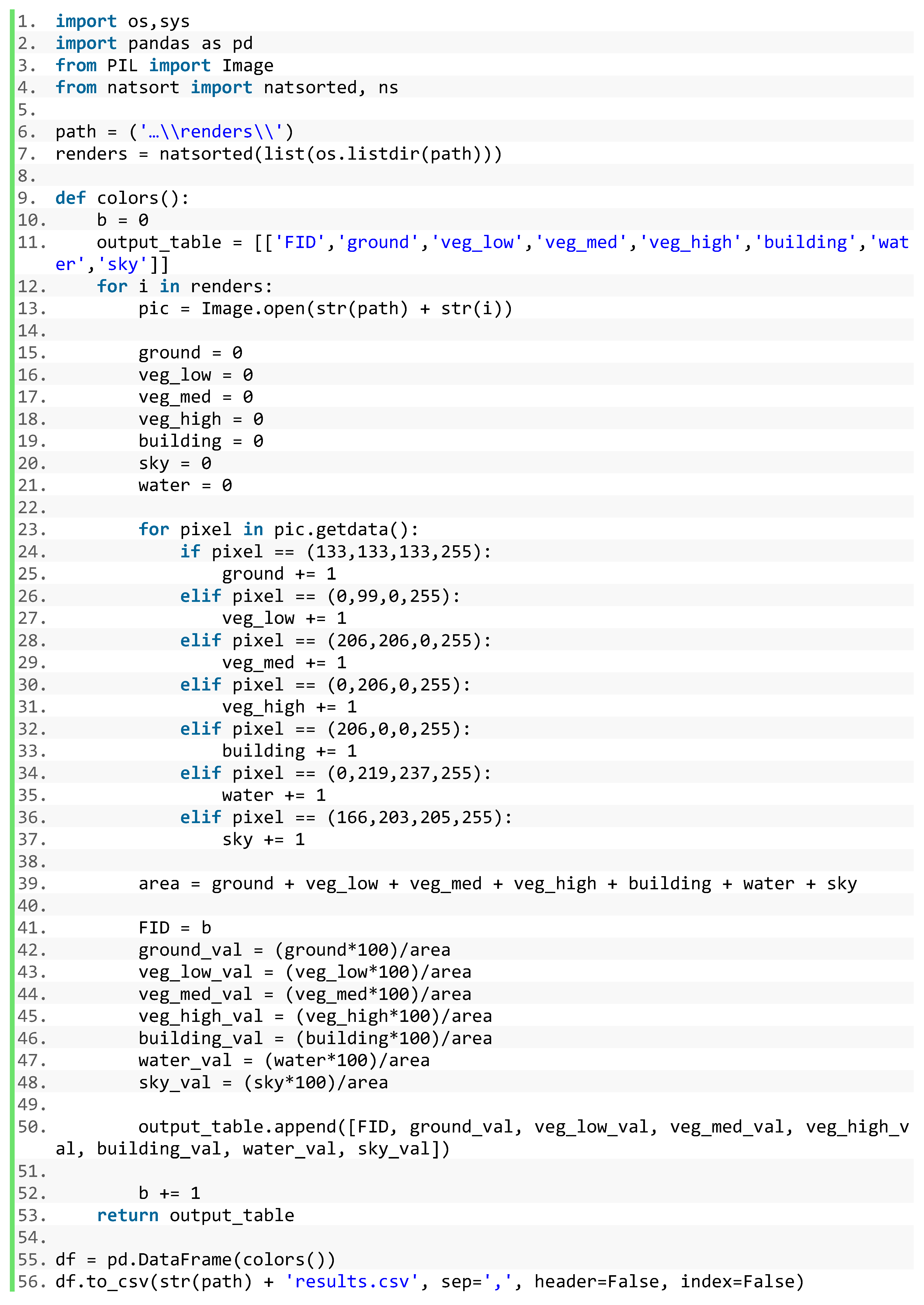

2. Methodology

2.1. Data

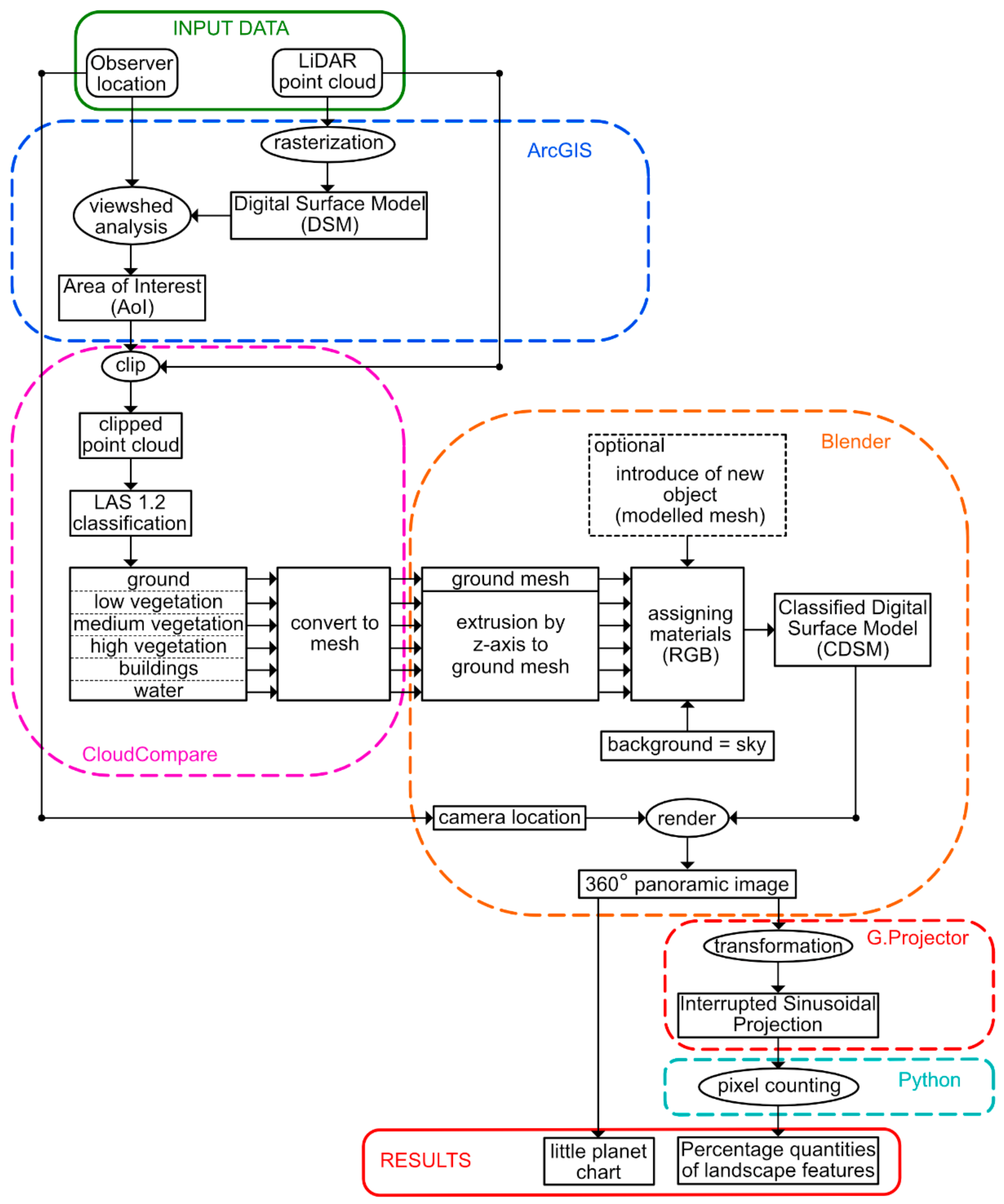

2.2. Classified Digital Surface Model (CDSM) Development

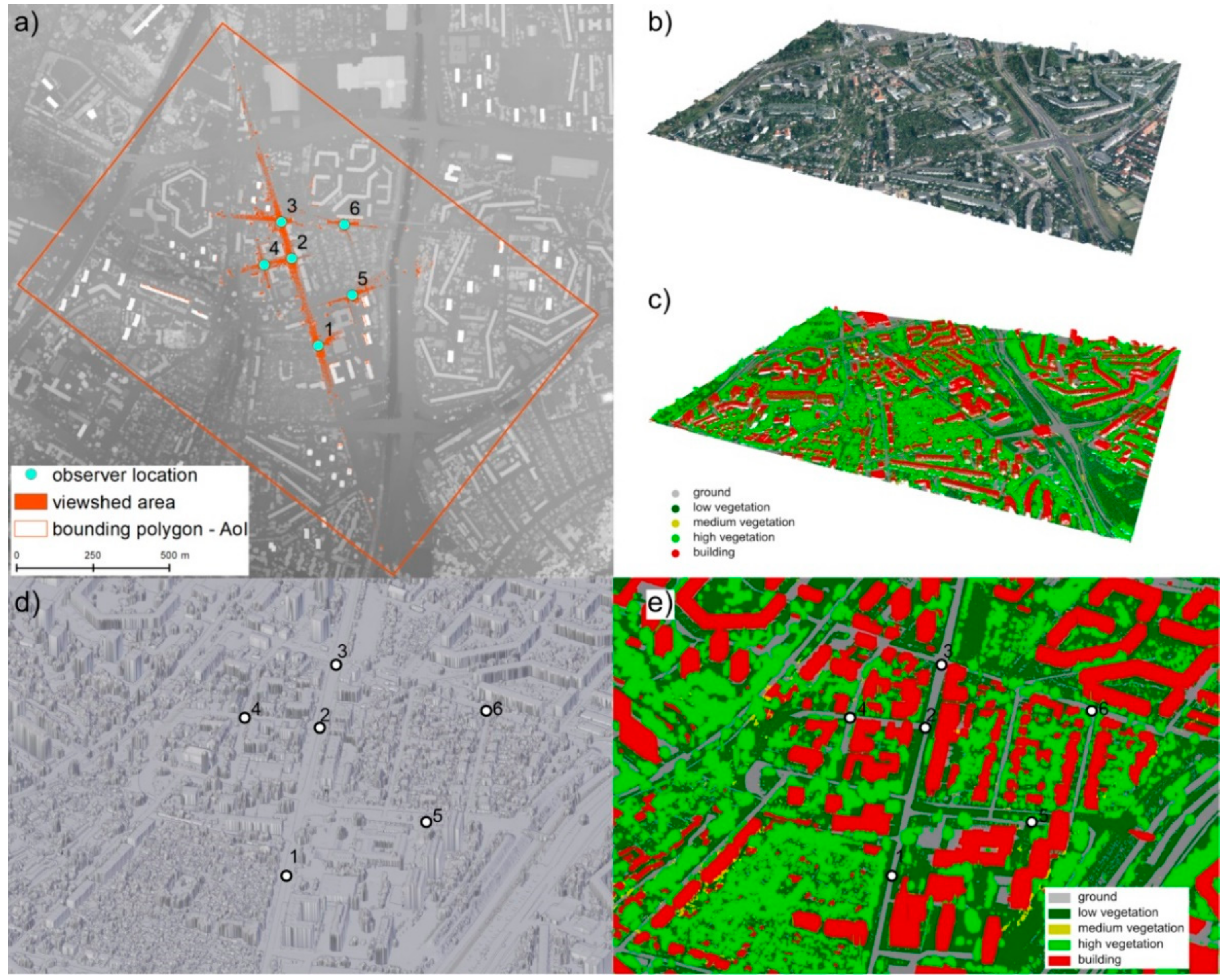

2.3. 360° Panoramic Images Rendering

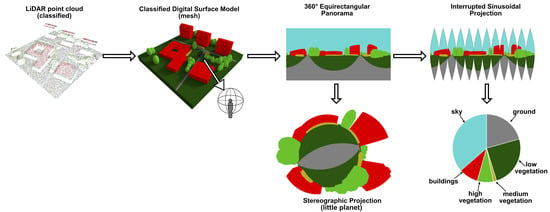

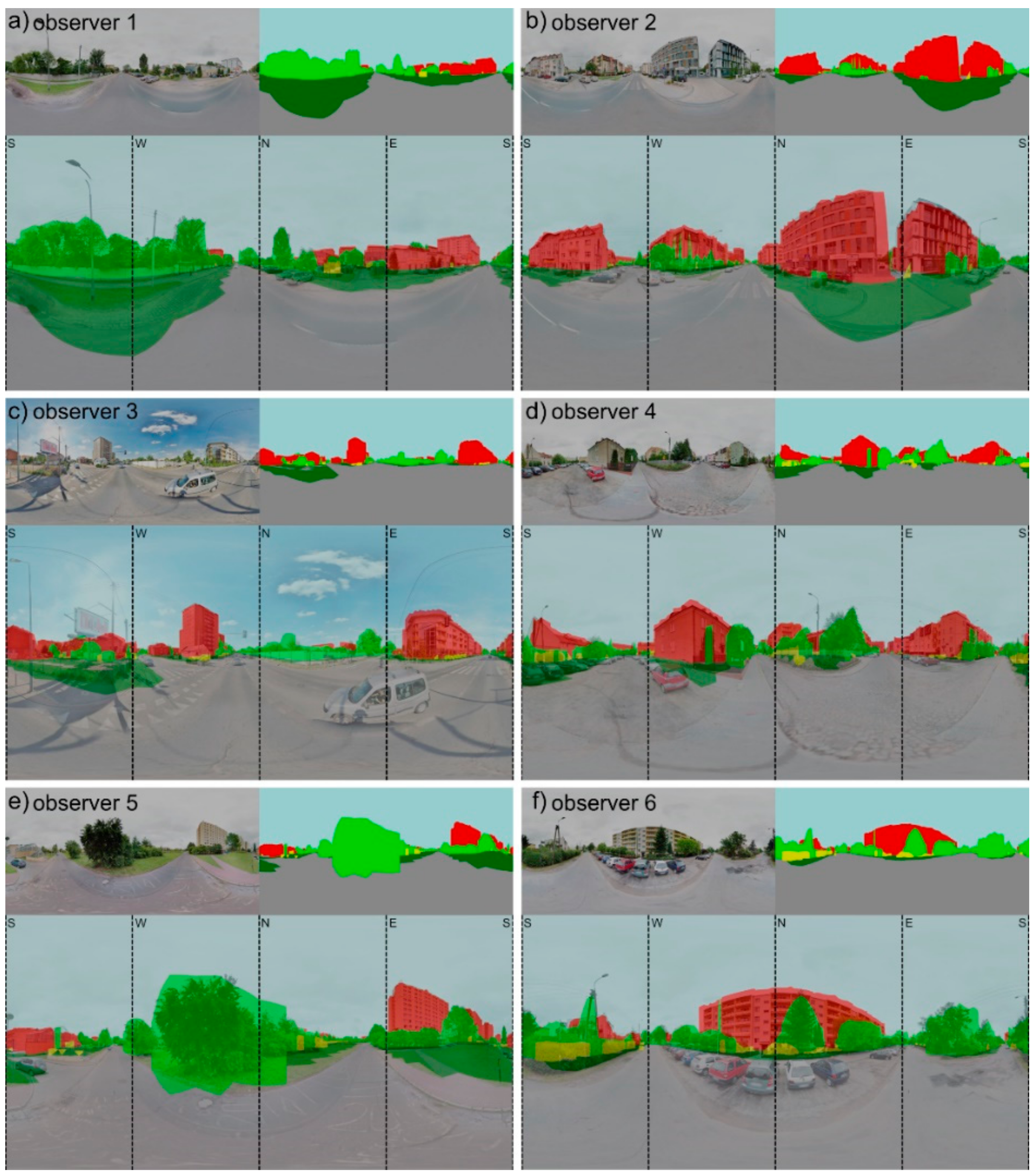

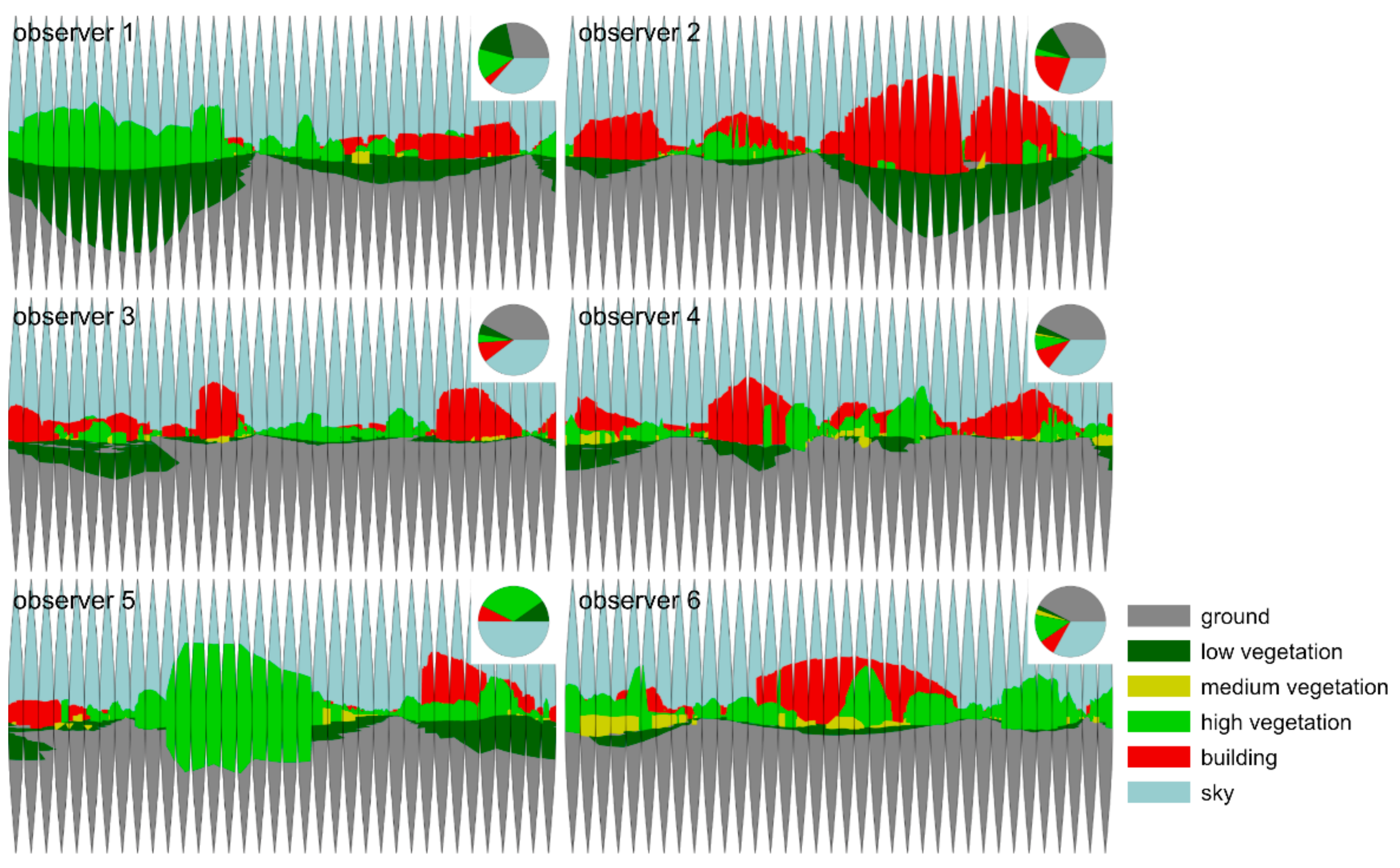

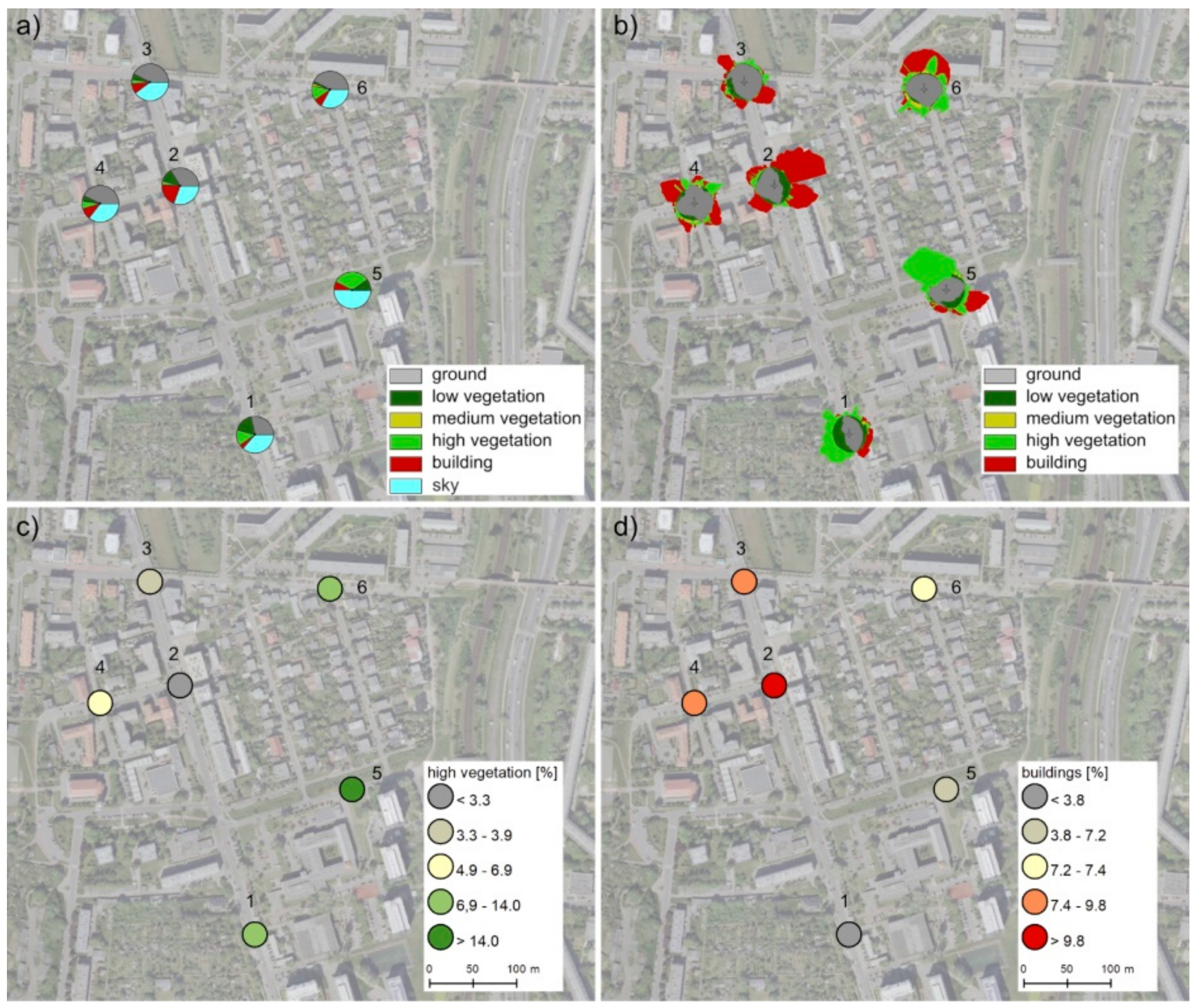

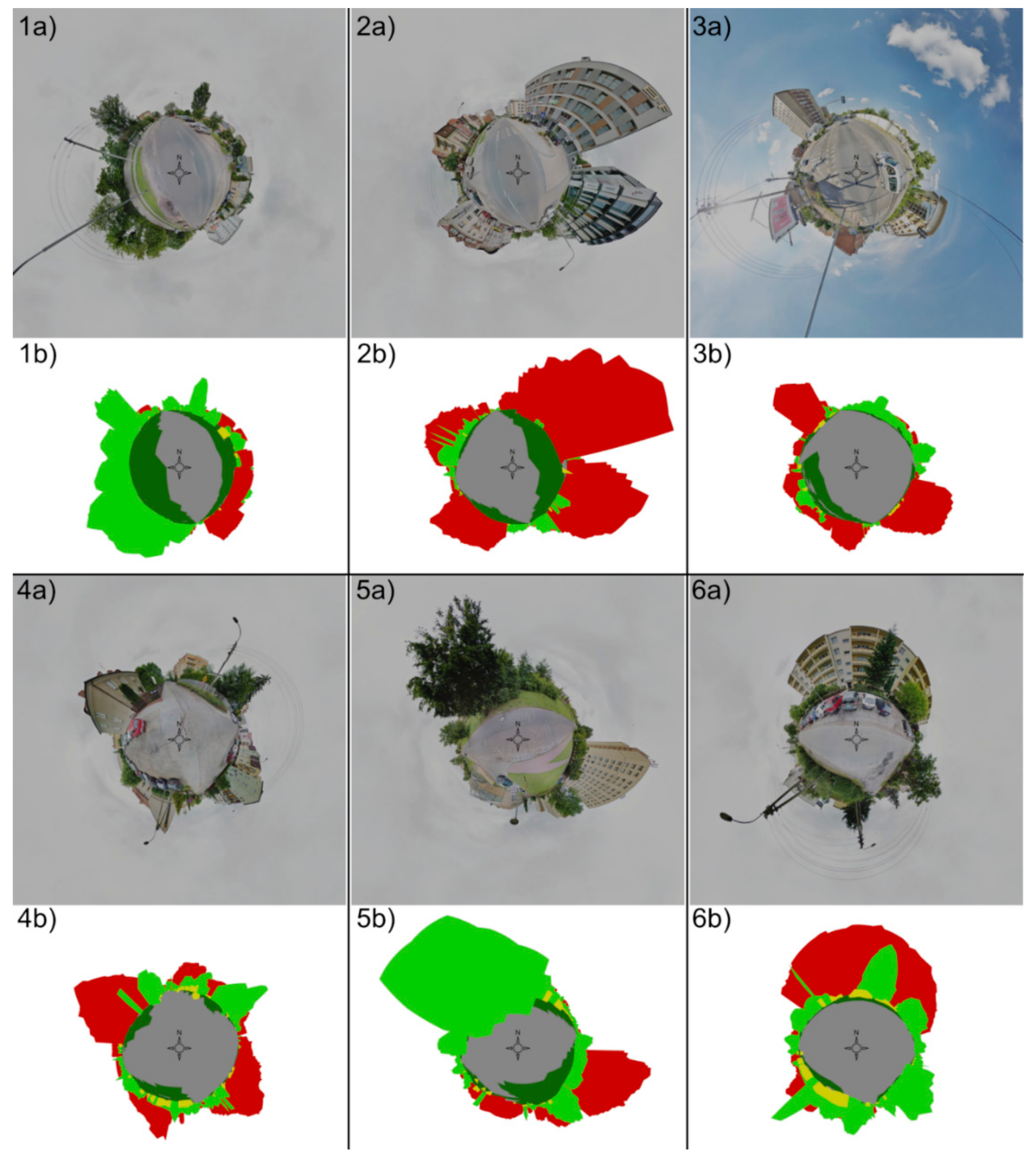

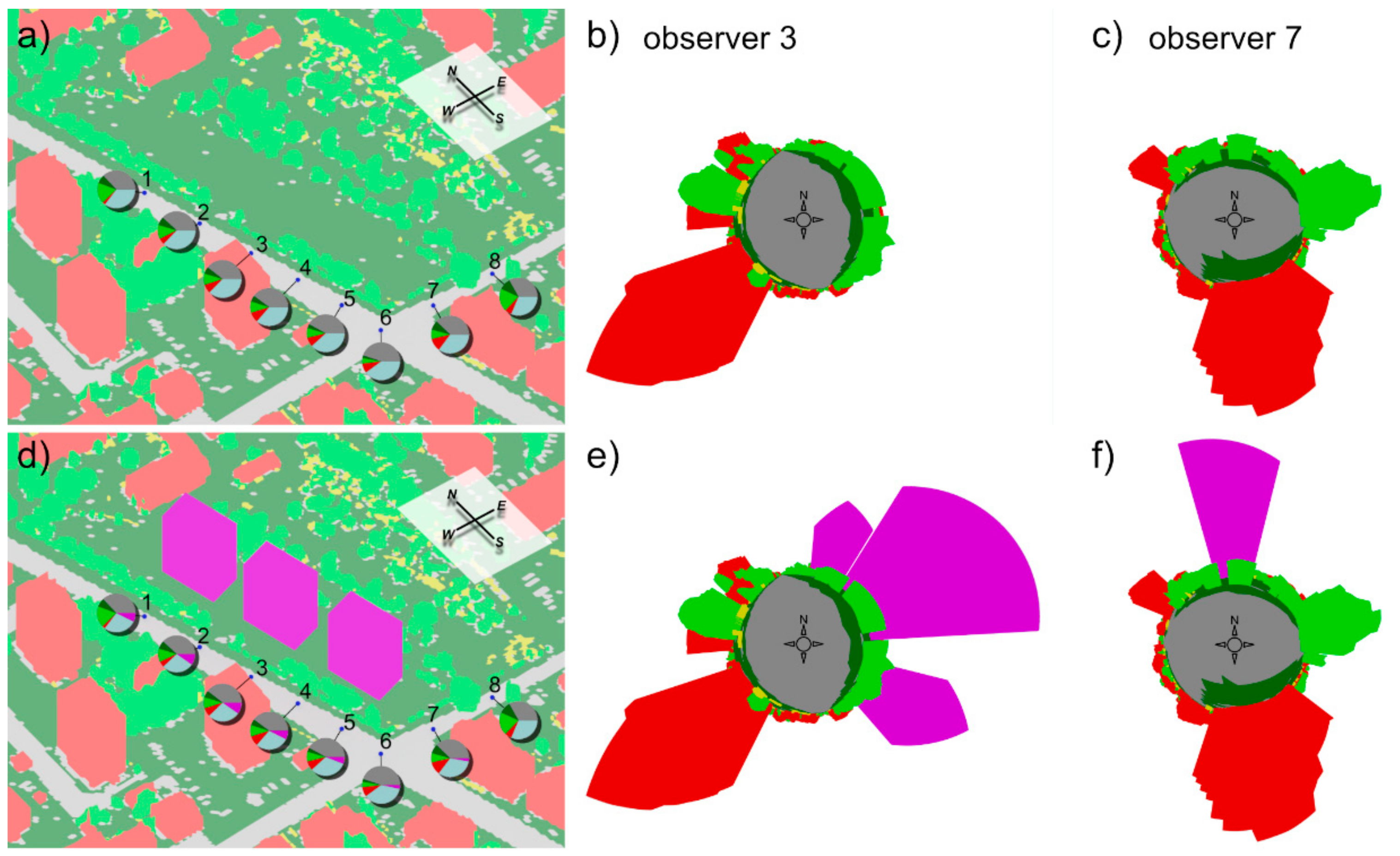

2.4. Presentation of the Outputs

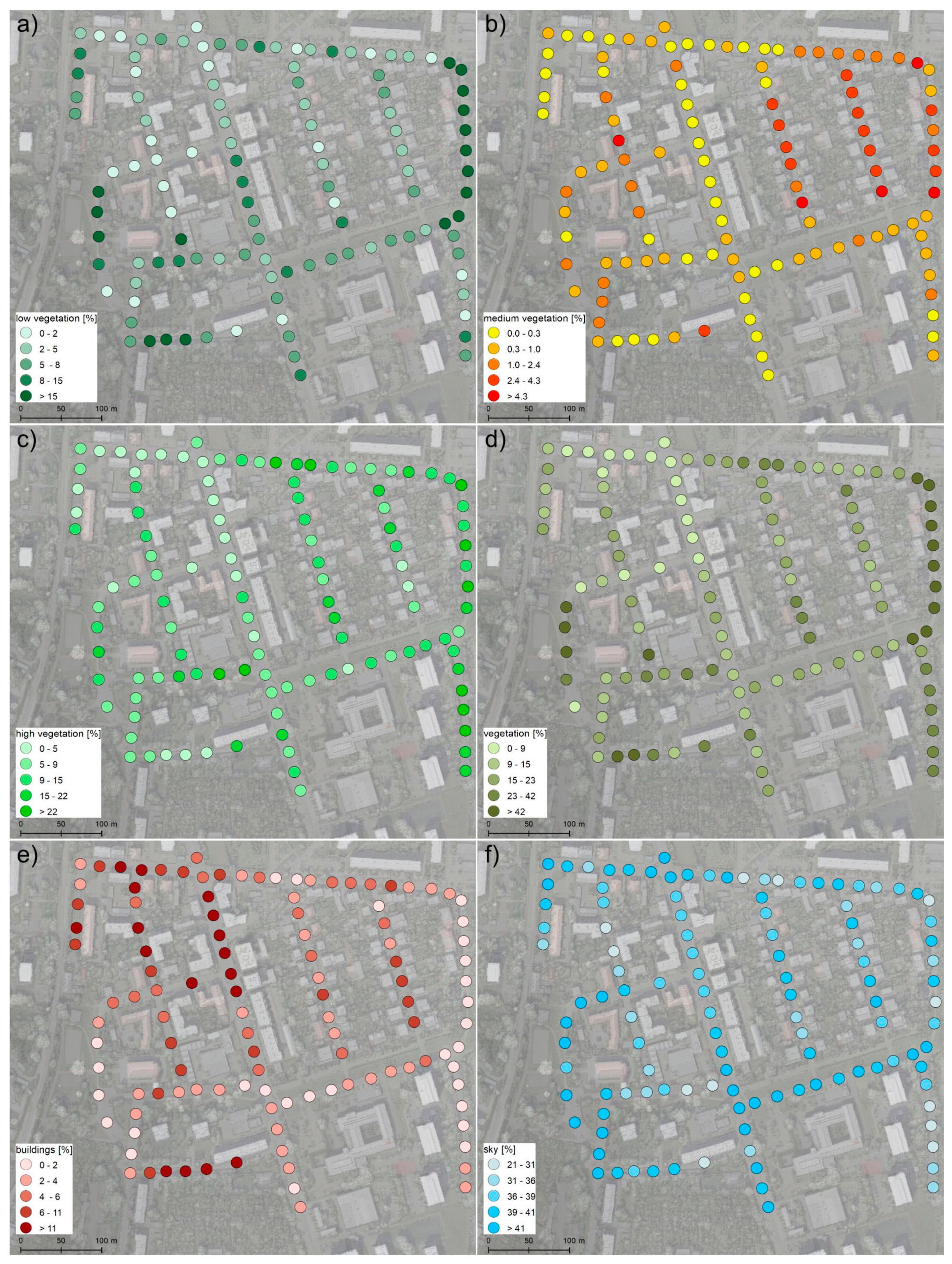

3. Results

4. Discussion

5. Conclusions

- The main advantages of the QLA360 method are observer independence, a 360° field of view, automatic operation, scalability, and easy presentation and interpretation of results.

- The QLA360 method allows for quantitative analysis of landscape elements from the perspective of an observer in a 360° field of view, based on classified LiDAR point clouds.

- A quantitative assessment of landscape features can be performed for any location without additional field studies.

- The use of GIS tools and 3D graphics software in the QLA360 method makes it possible to assess changes in the landscape caused by the introduction of new elements such as trees, buildings, and infrastructure.

- The method is based on processed LiDAR point clouds developed in accordance with ASPRS standards; it therefore allows the classification of landscape features to be standardized, gives comparable results, and can be easily applied in practice.

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B

References

- Council of Europe. Council of European Landscape Convention, Florence, Explanatory Report. CETS No. 176; Council of Europe: Strasbourg, France, 2000.

- Aronson, M.F.J.; Lepczyk, C.A.; Evans, K.L.; Goddard, M.A.; Lerman, S.B.; MacIvor, J.S.; Nilon, C.H.; Vargo, T. Biodiversity in the city: Key challenges for urban green space management. Front. Ecol. Environ. 2017, 15, 189–196.

- Badach, J.; Raszeja, E. Developing a framework for the implementation of landscape and greenspace indicators in sustainable urban planning. Waterfront landscape management: Case studies in Gdańsk, Poznań and Bristol. Sustainability 2019, 11, 2291.

- Estoque, R.C.; Murayama, Y.; Myint, S.W. Effects of landscape composition and pattern on land surface temperature: An urban heat island study in the megacities of Southeast Asia. Sci. Total Environ. 2017, 577, 349–359.

- Houet, T.; Verburg, P.H.; Loveland, T.R. Monitoring and modelling landscape dynamics. Landsc. Ecol. 2010, 25, 163–167.

- De Vries, S.; Buijs, A.E.; Langers, F.; Farjon, H.; Van Hinsberg, A.; Sijtsma, F.J. Measuring the attractiveness of Dutch landscapes: Identifying national hotspots of highly valued places using Google Maps. Appl. Geogr. 2013, 45, 220–229.

- Walz, U.; Stein, C. Indicator for a monitoring of Germany’s landscape attractiveness. Ecol. Indic. 2018, 94, 64–73.

- Hedblom, M.; Hedenås, H.; Blicharska, M.; Adler, S.; Knez, I.; Mikusiński, G.; Svensson, J.; Sandström, S.; Sandström, P.; Wardle, D.A. Landscape perception: Linking physical monitoring data to perceived landscape properties. Landsc. Res. 2019, 00, 1–14.

- Olszewska, A.A.; Marques, P.F.; Ryan, R.L.; Barbosa, F. What makes a landscape contemplative? Environ. Plan. B Urban Anal. City Sci. 2018, 45, 7–25.

- White, M.; Smith, A.; Humphryes, K.; Pahl, S.; Snelling, D.; Depledge, M. Blue space: The importance of water for preference, affect, and restorativeness ratings of natural and built scenes. J. Environ. Psychol. 2010, 30, 482–493.

- Sakici, C. Assessing landscape perceptions of urban waterscapes. Anthropologist 2015, 21, 182–196.

- Dupont, L.; Ooms, K.; Antrop, M.; Van Eetvelde, V. Comparing saliency maps and eye-tracking focus maps: The potential use in visual impact assessment based on landscape photographs. Landsc. Urban Plan. 2016, 148, 17–26.

- Tang, J.; Long, Y. Measuring visual quality of street space and its temporal variation: Methodology and its application in the Hutong area in Beijing. Landsc. Urban Plan. 2019, 191, 103436.

- Chen, Z.; Xu, B. Enhancing urban landscape configurations by integrating 3D landscape pattern analysis with people’s landscape preferences. Environ. Earth Sci. 2016, 75, 1018.

- Chen, Z.; Xu, B.; Devereux, B. Urban landscape pattern analysis based on 3D landscape models. Appl. Geogr. 2014, 55, 82–91.

- Biljecki, F.; Ledoux, H.; Stoter, J. Generating 3D city models without elevation data. Comput. Environ. Urban Syst. 2017, 64, 1–18.

- Lindberg, F.; Grimmond, C.S.B.; Gabey, A.; Huang, B.; Kent, C.W.; Sun, T.; Theeuwes, N.E.; Järvi, L.; Ward, H.C.; Capel-Timms, I.; et al. Urban Multi-scale Environmental Predictor (UMEP): An integrated tool for city-based climate services. Environ. Model. Softw. 2018, 99, 70–87.

- Lukač, N.; Štumberger, G.; Žalik, B. Wind resource assessment using airborne LiDAR data and smoothed particle hydrodynamics. Environ. Model. Softw. 2017, 95, 1–12.

- Alavipanah, S.; Haase, D.; Lakes, T.; Qureshi, S. Integrating the third dimension into the concept of urban ecosystem services: A review. Ecol. Indic. 2017, 72, 374–398.

- Anderson, K.; Hancock, S.; Casalegno, S.; Griffiths, A.; Griffiths, D.; Sargent, F.; McCallum, J.; Cox, D.T.C.; Gaston, K.J. Visualising the urban green volume: Exploring LiDAR voxels with tangible technologies and virtual models. Landsc. Urban Plan. 2018, 178, 248–260.

- Schröter, K.; Lüdtke, S.; Redweik, R.; Meier, J.; Bochow, M.; Ross, L.; Nagel, C.; Kreibich, H. Flood loss estimation using 3D city models and remote sensing data. Environ. Model. Softw. 2018, 105, 118–131.

- Wu, Q.; Guo, F.; Li, H.; Kang, J. Measuring landscape pattern in three dimensional space. Landsc. Urban Plan. 2017, 167, 49–59.

- Zheng, Z.; Du, S.; Wang, Y.C.; Wang, Q. Mining the regularity of landscape-structure heterogeneity to improve urban land-cover mapping. Remote Sens. Environ. 2018, 214, 14–32.

- Bishop, I.D.; Miller, D.R. Visual assessment of off-shore wind turbines: The influence of distance, contrast, movement and social variables. Renew. Energy 2007, 32, 814–831.

- Lindemann-Matthies, P.; Briegel, R.; Schüpbach, B.; Junge, X. Aesthetic preference for a Swiss alpine landscape: The impact of different agricultural land-use with different biodiversity. Landsc. Urban Plan. 2010, 98, 99–109.

- Molnarova, K.; Sklenicka, P.; Stiborek, J.; Svobodova, K.; Salek, M.; Brabec, E. Visual preferences for wind turbines: Location, numbers and respondent characteristics. Appl. Energy 2012, 92, 269–278.

- De Vries, S.; de Groot, M.; Boers, J. Eyesores in sight: Quantifying the impact of man-made elements on the scenic beauty of Dutch landscapes. Landsc. Urban Plan. 2012, 105, 118–127.

- Kim, W.H.; Choi, J.H.; Lee, J.S. Objectivity and Subjectivity in Aesthetic Quality Assessment of Digital Photographs. IEEE Trans. Affect. Comput. 2018.

- Lee, J.T.; Kim, H.U.; Lee, C.; Kim, C.S. Photographic composition classification and dominant geometric element detection for outdoor scenes. J. Vis. Commun. Image Represent. 2018, 55, 91–105.

- Srivastava, S.; Vargas Muñoz, J.E.; Lobry, S.; Tuia, D. Fine-grained landuse characterization using ground-based pictures: A deep learning solution based on globally available data. Int. J. Geogr. Inf. Sci. 2018.

- Wang, W.; Zhao, M.; Wang, L.; Huang, J.; Cai, C.; Xu, X. A multi-scene deep learning model for image aesthetic evaluation. Signal Process. Image Commun. 2016, 47, 511–518.

- Gong, F.Y.; Zeng, Z.C.; Zhang, F.; Li, X.; Ng, E.; Norford, L.K. Mapping sky, tree, and building view factors of street canyons in a high-density urban environment. Build. Environ. 2018, 134, 155–167.

- Li, X.; Ratti, C. Mapping the spatial distribution of shade provision of street trees in Boston using Google Street View panoramas. Urban For. Urban Green. 2018, 31, 109–119.

- Middel, A.; Lukasczyk, J.; Zakrzewski, S.; Arnold, M.; Maciejewski, R. Urban form and composition of street canyons: A human-centric big data and deep learning approach. Landsc. Urban Plan. 2019, 183, 122–132.

- Zeng, L.; Lu, J.; Li, W.; Li, Y. A fast approach for large-scale Sky View Factor estimation using street view images. Build. Environ. 2018, 135, 74–84.

- Simensen, T.; Halvorsen, R.; Erikstad, L. Methods for landscape characterisation and mapping: A systematic review. Land Use Policy 2018, 75, 557–569.

- ASPRS LAS Specification. 2008. Available online: https://www.asprs.org/a/society/committees/standards/asprs_las_format_v12.pdf (accessed on 23 January 2020).

- Wróżyński, R.; Pyszny, K.; Sojka, M.; Przybyła, C.; Murat-Błażejewska, S. Ground volume assessment using “Structure from Motion” photogrammetry with a smartphone and a compact camera. Open Geosci. 2017, 9, 281–294.

- Wróżyński, R.; Sojka, M.; Pyszny, K. The application of GIS and 3D graphic software to visual impact assessment of wind turbines. Renew. Energy 2016, 96, 625–635.

- Jenny, B.; Šavrič, B.; Arnold, N.D.; Marston, B.E.; Preppernau, C.A. Choosing a Map Projection. Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2017; pp. 213–228.

- Leonard, L.; Miles, B.; Heidari, B.; Lin, L.; Castronova, A.M.; Minsker, B.; Lee, J.; Scaife, C.; Band, L.E. Development of a participatory Green Infrastructure design, visualization and evaluation system in a cloud supported jupyter notebook computing environment. Environ. Model. Softw. 2019, 111, 121–133.

- Kazak, J.; van Hoof, J.; Szewranski, S. Challenges in the wind turbines location process in Central Europe—The use of spatial decision support systems. Renew. Sustain. Energy Rev. 2017, 76, 425–433.

- Pettit, C.; Bakelmun, A.; Lieske, S.N.; Glackin, S.; Hargroves, K.C.; Thomson, G.; Shearer, H.; Dia, H.; Newman, P. Planning support systems for smart cities. City Cult. Soc. 2018, 12, 13–24.

- Jiang, B.; Deal, B.; Pan, H.Z.; Larsen, L.; Hsieh, C.H.; Chang, C.Y.; Sullivan, W.C. Remotely-sensed imagery vs. eye-level photography: Evaluating associations among measurements of tree cover density. Landsc. Urban Plan. 2017, 157, 270–281.

- Liang, J.; Gong, J.; Sun, J.; Zhou, J.; Li, W.; Li, Y.; Liu, J.; Shen, S. Automatic sky view factor estimation from street view photographs—A big data approach. Remote Sens. 2017, 9, 411.

- Zhang, F.; Zhang, D.; Liu, Y.; Lin, H. Representing place locales using scene elements. Comput. Environ. Urban Syst. 2018, 71, 153–164.

- Jeong, J.; Yoon, T.S.; Park, J.B. Towards a meaningful 3D map using a 3D lidar and a camera. Sensors 2018, 18, 2571.

- Shen, Q.; Zeng, W.; Ye, Y.; Arisona, S.M.; Schubiger, S.; Burkhard, R.; Qu, H. StreetVizor: Visual Exploration of Human-Scale Urban Forms Based on Street Views. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1004–1013.

- Babahajiani, P.; Fan, L.; Kämäräinen, J.K.; Gabbouj, M. Urban 3D segmentation and modelling from street view images and LiDAR point clouds. Mach. Vis. Appl. 2017, 28, 679–694.

- ASPRS LAS Specification. 2019. Available online: http://www.asprs.org/wp-content/uploads/2019/03/LAS_1_4_r14.pdf (accessed on 23 January 2020).

- Maslov, N.; Claramunt, C.; Wang, T.; Tang, T. Method to estimate the visual impact of an offshore wind farm. Appl. Energy 2017, 204, 1422–1430.

- Hayek, U.W. Exploring Issues of Immersive Virtual Landscapes for Participatory Spatial Planning Support. J. Digit. Landsc. Archit. 2016, 1, 100–108.

- Biljecki, F.; Heuvelink, G.B.M.; Ledoux, H.; Stoter, J. The effect of acquisition error and level of detail on the accuracy of spatial analyses. Cartogr. Geogr. Inf. Sci. 2018, 45, 156–176.

- Park, Y.; Guldmann, J. Creating 3D city models with building footprints and LIDAR point cloud classification: A machine learning approach. Comput. Environ. Urban Syst. 2019, 75, 76–89.

- Germanchis, T.; Pettit, C.; Cartwright, W. Building a 3D geospatial virtual environment on computer gaming technology. J. Spat. Sci. 2004, 49, 89–96.

- Wallace, L.; Lucieer, A.; Watson, C.S. Evaluating tree detection and segmentation routines on very high resolution UAV LiDAR data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7619–7628.

- Jaakkola, A.; Hyyppä, J.; Yu, X.; Kukko, A.; Kaartinen, H.; Liang, X.; Hyyppä, H.; Wang, Y. Autonomous collection of forest field reference—The outlook and a first step with UAV laser scanning. Remote Sens. 2017, 9, 785.

- Bailey, B.N.; Ochoa, M.H. Semi-direct tree reconstruction using terrestrial LiDAR point cloud data. Remote Sens. Environ. 2018, 208, 133–144.

- Bremer, M.; Wichmann, V.; Rutzinger, M. Multi-temporal fine-scale modelling of Larix decidua forest plots using terrestrial LiDAR and hemispherical photographs. Remote Sens. Environ. 2018, 206, 189–204.

| Classification Value | Description |
|---|---|
| 0 | Created, never classified |
| 1 | Unclassified |
| 2 | Ground |
| 3 | Low Vegetation |
| 4 | Medium Vegetation |
| 5 | High Vegetation |
| 6 | Building |
| 7 | Low Point (noise) |
| 8 | Model Key-point (mass points) |
| 9 | Water |
| 10 | Reserved for ASPRS Definition |
| 11 | Reserved for ASPRS Definition |
| 12 | Overlap Points |
| 13–31 | Reserved for ASPRS Definition |
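The classification values listed above can be expressed as a simple lookup. The following is a minimal sketch, assuming the point classification codes have already been read from a LAS file into a plain list of integers; the names `ASPRS_CLASSES`, `describe_class`, and `tally_classes` are hypothetical helpers introduced for illustration and are not part of the QLA360 workflow.

```python
from collections import Counter

# Classification values and descriptions copied from the table above.
ASPRS_CLASSES = {
    0: "Created, never classified",
    1: "Unclassified",
    2: "Ground",
    3: "Low Vegetation",
    4: "Medium Vegetation",
    5: "High Vegetation",
    6: "Building",
    7: "Low Point (noise)",
    8: "Model Key-point (mass points)",
    9: "Water",
    12: "Overlap Points",
}
# Values 10, 11 and 13-31 are reserved in the table.
RESERVED = "Reserved for ASPRS Definition"


def describe_class(value: int) -> str:
    """Return the table description for a classification value (0-31)."""
    if not 0 <= value <= 31:
        raise ValueError(f"classification value out of range: {value}")
    return ASPRS_CLASSES.get(value, RESERVED)


def tally_classes(codes):
    """Count points per class description (hypothetical helper)."""
    return Counter(describe_class(c) for c in codes)
```

For example, `tally_classes([2, 2, 6, 9])` counts two ground points, one building point, and one water point.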

| Class | RGB Value |
|---|---|
| ground | 133, 133, 133 |
| low vegetation | 0, 99, 0 |
| medium vegetation | 206, 206, 0 |
| high vegetation | 0, 206, 0 |
| building | 206, 0, 0 |
| water | 0, 219, 237 |
| sky | 166, 203, 205 |
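Given the class colors above, the share of each landscape class in a rendered image could be estimated by counting pixels per color. The sketch below is illustrative only: it assumes the render contains exact class colors (no anti-aliasing or shading), treats every pixel equally without any weighting for panoramic projection distortion, and uses hypothetical names (`CLASS_COLORS`, `class_shares`). Whether the original workflow applies such a weighting is not stated in this section.

```python
from collections import Counter

# Class colors copied from the table above (RGB, 0-255).
CLASS_COLORS = {
    (133, 133, 133): "ground",
    (0, 99, 0): "low vegetation",
    (206, 206, 0): "medium vegetation",
    (0, 206, 0): "high vegetation",
    (206, 0, 0): "building",
    (0, 219, 237): "water",
    (166, 203, 205): "sky",
}


def class_shares(pixels):
    """Return the percentage of pixels per class.

    `pixels` is any iterable of (R, G, B) triples. Colors that are not in
    the table are reported under "other" (an assumption of this sketch).
    """
    counts = Counter(CLASS_COLORS.get(tuple(p), "other") for p in pixels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no pixels supplied")
    return {name: 100.0 * n / total for name, n in counts.items()}


# Toy four-pixel "image": two sky pixels, one building, one ground.
demo = [(166, 203, 205), (166, 203, 205), (206, 0, 0), (133, 133, 133)]
shares = class_shares(demo)
```

For the toy input, `shares` holds 50% sky, 25% building, and 25% ground. A real panorama would first need to be flattened into a sequence of RGB triples by an image library of the reader's choice.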

| Classes | Source |
|---|---|
| Road, Sidewalk, Building, Fence, Pole, Vegetation, Vehicle | Jeong et al. [47] |
| Sky, Pervious surface, Trees and plants, Building, Impervious surface, Non-permanent object | Middel et al. [34] |
| Greenery, Sky, Building, Road, Vehicle, Others | Shen et al. [48] |
| Sky, Buildings, Pole, Road marking, Road, Pavement | Tang and Long [13] |
| Building, Road, Car, Sign, Pedestrian, Tree, Sky, Water | Babahajiani et al. [49] |
| Ground, Low vegetation, Medium vegetation, High vegetation, Building, Water, Sky | Our study |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wróżyński, R.; Pyszny, K.; Sojka, M. Quantitative Landscape Assessment Using LiDAR and Rendered 360° Panoramic Images. Remote Sens. 2020, 12, 386. https://doi.org/10.3390/rs12030386
