Unsupervised Haze Removal for High-Resolution Optical Remote-Sensing Images Based on Improved Generative Adversarial Networks

Abstract

1. Introduction

- The training and testing data sets consist of unpaired hazy and haze-free remote-sensing images. Because the cycle structure enables unsupervised training, it greatly reduces the effort needed to prepare data sets.

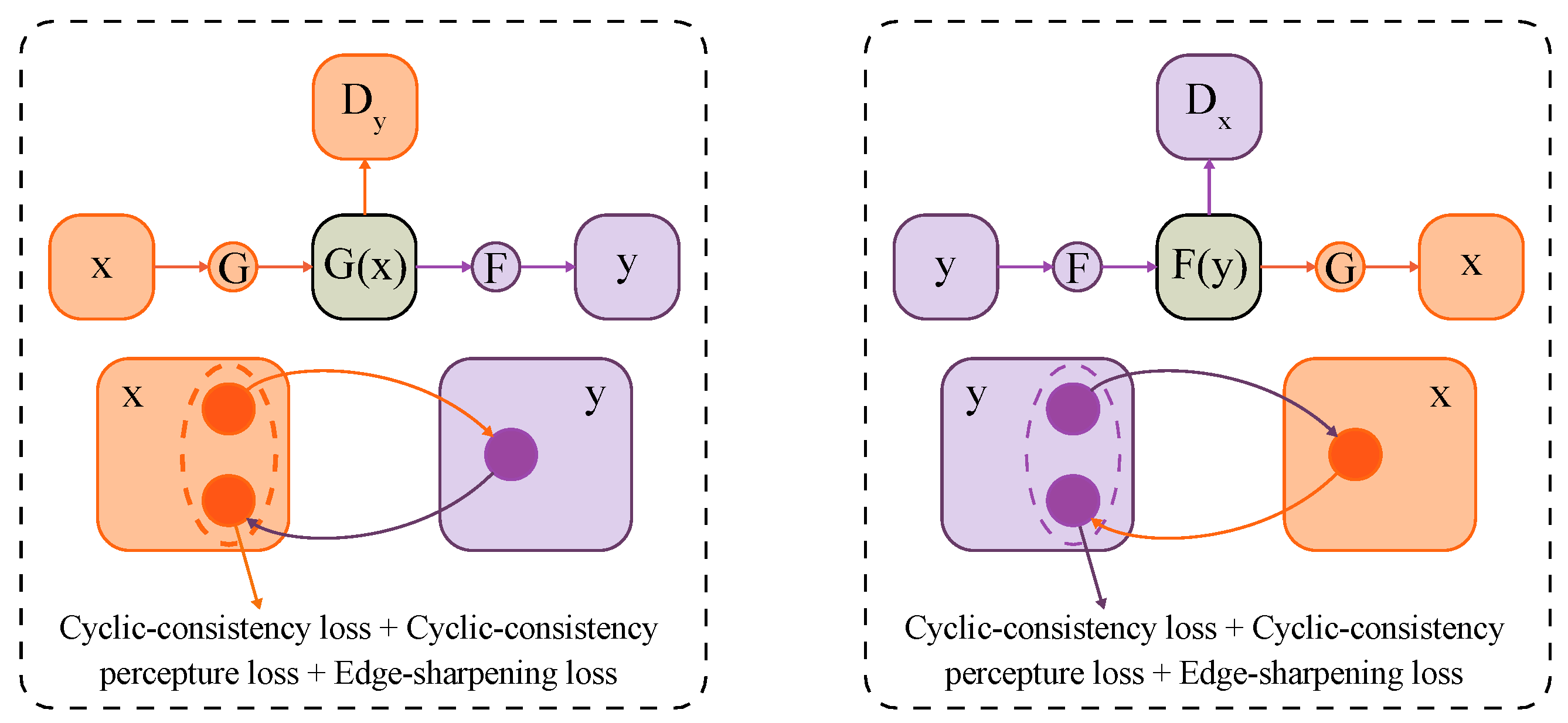

- In the generators G (dehazing model) and F (haze-adding model), DenseNet blocks replace the ResNet blocks, because they can recover high-frequency information in remote-sensing images.

- In remote-sensing interpretation applications, haze-free images with sharp edges show contour and texture information clearly, which leads to more accurate results. In this study, we therefore designed an edge-sharpening loss and introduced a cyclic perceptual-consistency loss into the loss function.

- For the cyclic perceptual-consistency loss, the model uses transfer learning: the perceptual feature-extraction model is retrained on a self-built data set of classified remote-sensing images, so that it can accurately learn the features of ground objects.
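To make the cycle structure concrete, the sketch below writes out a CycleGAN-style cycle-consistency loss (the L1 distance between an image and its reconstruction after passing through both generators) together with a cyclic perceptual-consistency loss that compares the same reconstructions in a feature space. It is a minimal sketch under stated assumptions, not the authors' implementation: the toy generators `G` and `F`, the random-projection feature extractor `phi`, and the use of squared error for the perceptual term are hypothetical stand-ins for the CNN generators and the retrained perceptual model described above.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error between two arrays."""
    return float(np.mean(np.abs(a - b)))


def cycle_consistency_loss(G, F, x, y):
    """x -> G -> F should reproduce x, and y -> F -> G should reproduce y (L1 distance).

    G: hazy -> haze-free (dehazing); F: haze-free -> hazy (haze adding).
    """
    return l1(F(G(x)), x) + l1(G(F(y)), y)


def cyclic_perceptual_loss(G, F, phi, x, y):
    """Same two cycles, compared in the feature space of phi (mean squared error, assumed)."""
    term_x = np.mean((phi(F(G(x))) - phi(x)) ** 2)
    term_y = np.mean((phi(G(F(y))) - phi(y)) ** 2)
    return float(term_x + term_y)


# Hypothetical toy stand-ins: in the paper G and F are CNN generators and the
# feature extractor is a retrained perceptual model, not a random projection.
rng = np.random.default_rng(0)
W = rng.standard_normal((8, 3))


def phi(img):
    """Map an (H, W, 3) image to (H, W, 8) 'features' with a fixed linear projection."""
    return img @ W.T


def G(img):
    return img - 0.1  # pretend dehazing: slightly darker


def F(img):
    return img + 0.1  # pretend haze synthesis: slightly brighter


x = rng.random((16, 16, 3))  # unpaired "hazy" sample
y = rng.random((16, 16, 3))  # unpaired "haze-free" sample
print(cycle_consistency_loss(G, F, x, y))            # close to 0: G and F invert each other
print(cyclic_perceptual_loss(G, F, phi, x, y))       # close to 0 as well
print(cycle_consistency_loss(G, lambda z: z, x, y))  # about 0.2 with an identity F
```

Because the toy `G` and `F` are exact inverses, both losses are numerically zero; replacing `F` with the identity leaves a constant 0.1 offset in each cycle, so the cycle loss becomes 0.2. Neither loss needs paired hazy and haze-free images, which is what makes unsupervised training possible.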

2. Remote-Sensing Image Dehazing Algorithm

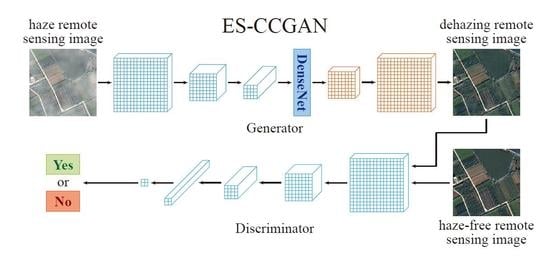

2.1. Edge-Sharpening Cycle-Consistent Adversarial Network (ES-CCGAN) Dehazing Architecture

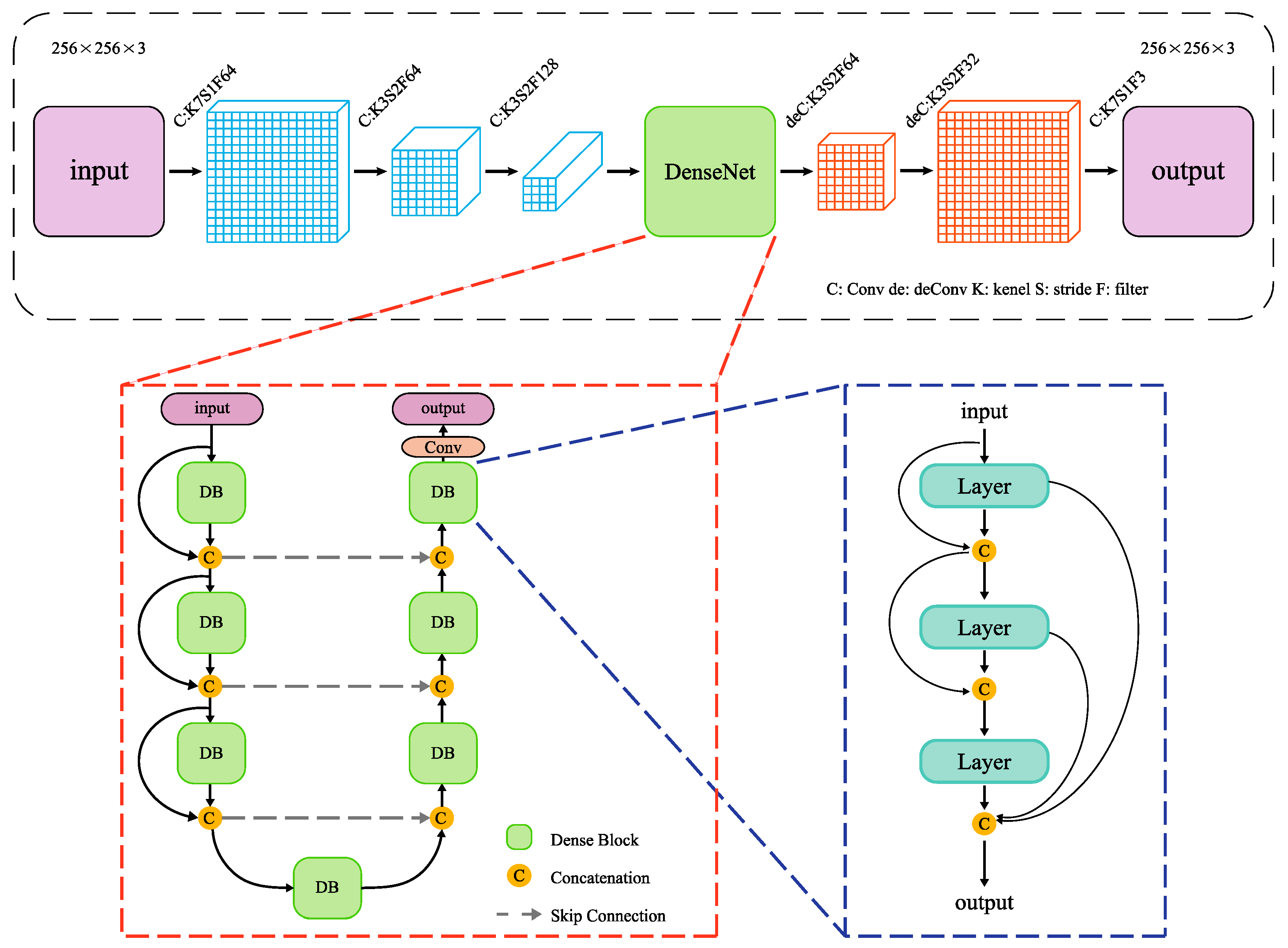

2.1.1. Generation Network

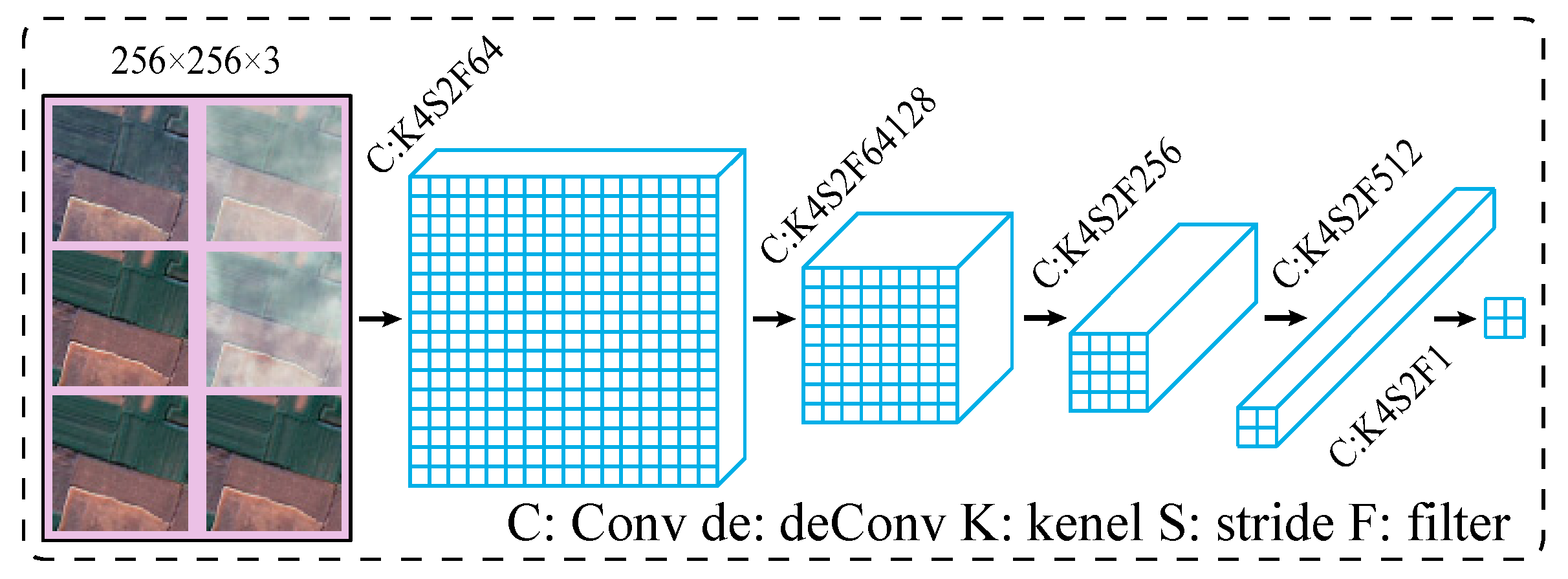

2.1.2. Discriminator Network

2.2. ES-CCGAN Loss Function

2.2.1. Adversarial Loss

2.2.2. Cycle-Consistency Loss and Cyclic Perceptual-Consistency Loss

2.2.3. Edge-Sharpening Loss

The edge-smoothed image $\tilde{y}$ is generated from each haze-free image in three steps:

- (1) Ground-object edge pixels are detected in the haze-free remote-sensing image with a standard Canny edge detector, which locates edge pixels accurately.

- (2) The edge regions of ground objects are dilated around the detected edge pixels.

- (3) Gaussian smoothing is applied within the dilated edge regions to obtain $\tilde{y}$; the smoothing weakens the edges and gives a more natural-looking result.
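The three steps can be sketched in NumPy as follows. This is an illustrative sketch under assumptions, not the paper's code: to stay dependency-free it replaces the Canny detector of step (1) with a simple thresholded Sobel gradient, uses a square structuring element for the dilation in step (2), and a separable Gaussian filter for step (3). The function names and the default values of `thresh`, `radius`, and `sigma` are hypothetical, and the input is assumed to be a single-band (grayscale) image.

```python
import numpy as np


def sobel_edges(gray, thresh):
    """Stand-in for Canny (assumption): mark pixels whose Sobel gradient magnitude exceeds thresh."""
    kx = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
    ky = kx.T
    h, w = gray.shape
    p = np.pad(gray, 1, mode="edge")
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            window = p[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return np.hypot(gx, gy) > thresh


def dilate(mask, radius):
    """Binary dilation with a (2 * radius + 1) x (2 * radius + 1) square structuring element."""
    h, w = mask.shape
    p = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for i in range(2 * radius + 1):
        for j in range(2 * radius + 1):
            out |= p[i:i + h, j:j + w]
    return out


def gaussian_blur(img, sigma):
    """Separable Gaussian filter with edge padding."""
    r = max(1, int(round(3 * sigma)))
    t = np.arange(-r, r + 1)
    k = np.exp(-t ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    h, w = img.shape
    p = np.pad(img, r, mode="edge")
    rows = sum(k[i] * p[:, i:i + w] for i in range(2 * r + 1))    # horizontal pass
    return sum(k[i] * rows[i:i + h, :] for i in range(2 * r + 1))  # vertical pass


def edge_smoothed(gray, thresh=1.0, radius=2, sigma=1.0):
    """Steps (1)-(3): detect edges, dilate them, and blur only inside the dilated region."""
    edges = sobel_edges(gray, thresh)
    region = dilate(edges, radius)
    return np.where(region, gaussian_blur(gray, sigma), gray)


y = np.zeros((32, 32))
y[:, 16:] = 1.0  # toy "haze-free" image with one vertical edge
y_tilde = edge_smoothed(y)
# The step of 1.0 in y is spread over several columns in y_tilde.
print(np.abs(np.diff(y, axis=1)).max(), np.abs(np.diff(y_tilde, axis=1)).max())
```

Pixels outside the dilated band keep their original values, while the jump across the edge is spread over several pixels. For context, CartoonGAN's edge-promoting adversarial loss, which is built on the same three steps, shows such edge-smoothed images to the discriminator as additional fake samples so that the generator is pushed towards sharp edges; how ES-CCGAN combines $\tilde{y}$ with its other loss terms is not reproduced here.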

2.2.4. Full Objective of ES-CCGAN

3. Experiments

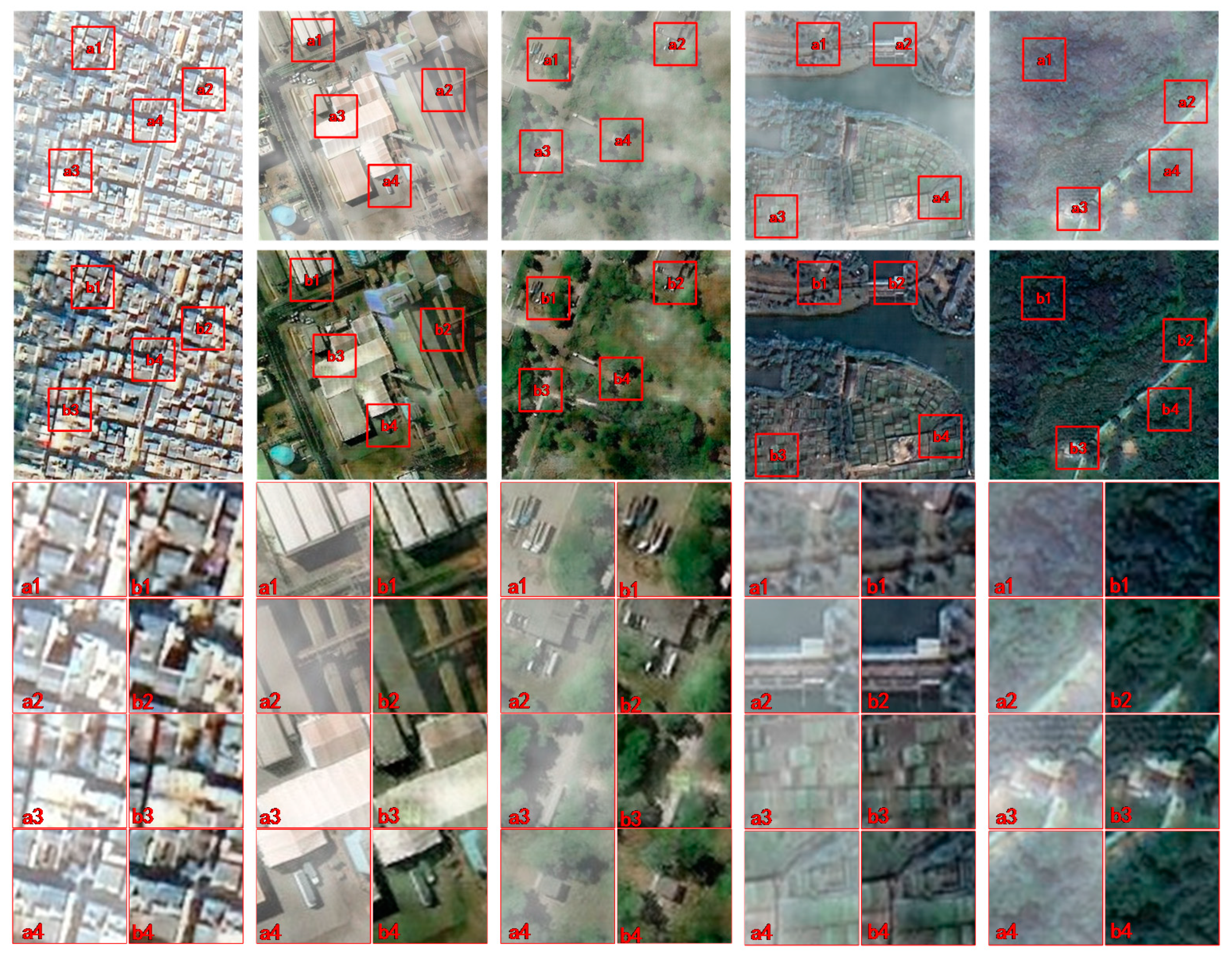

3.1. Experimental Data Set

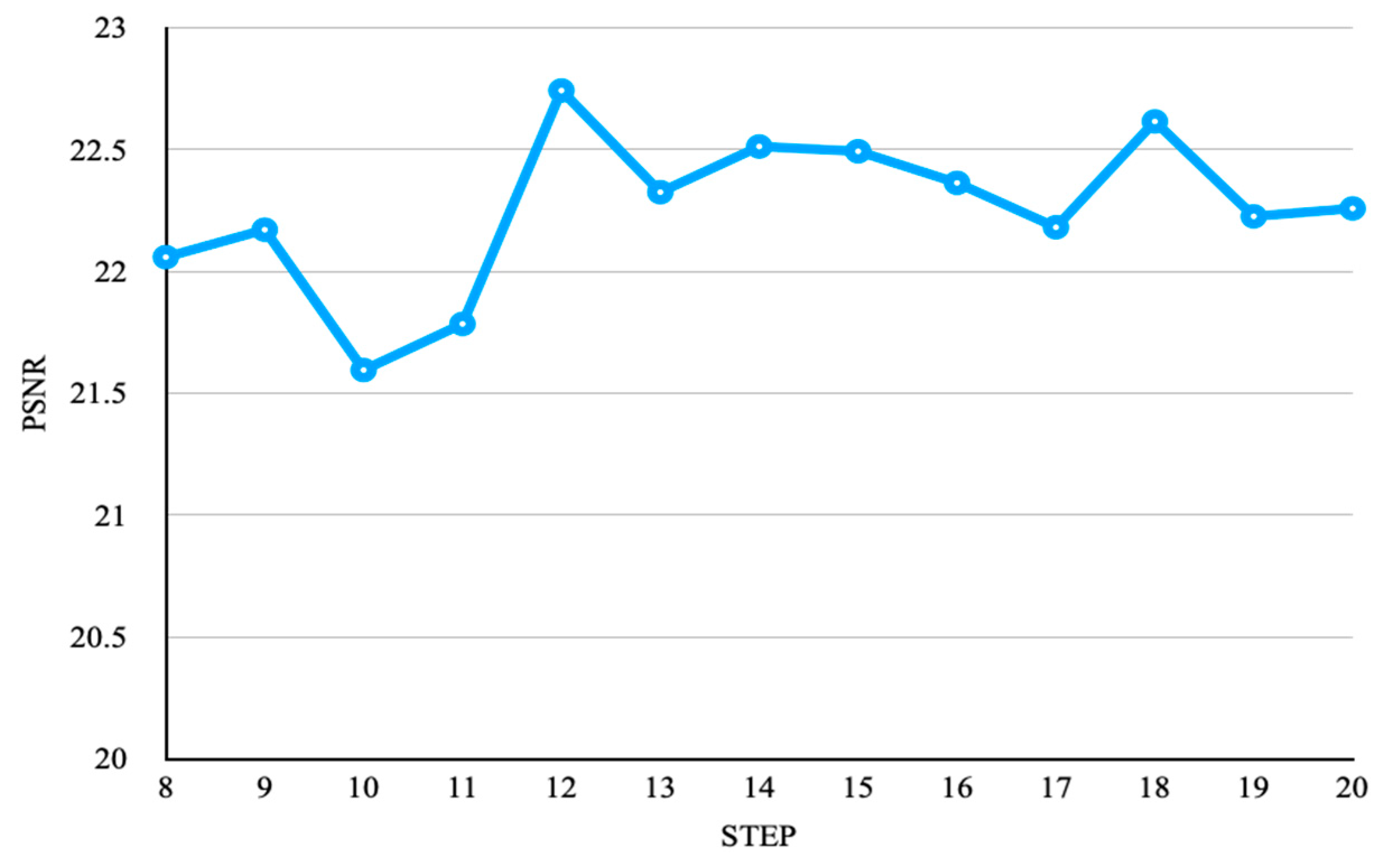

3.2. Network Parameters

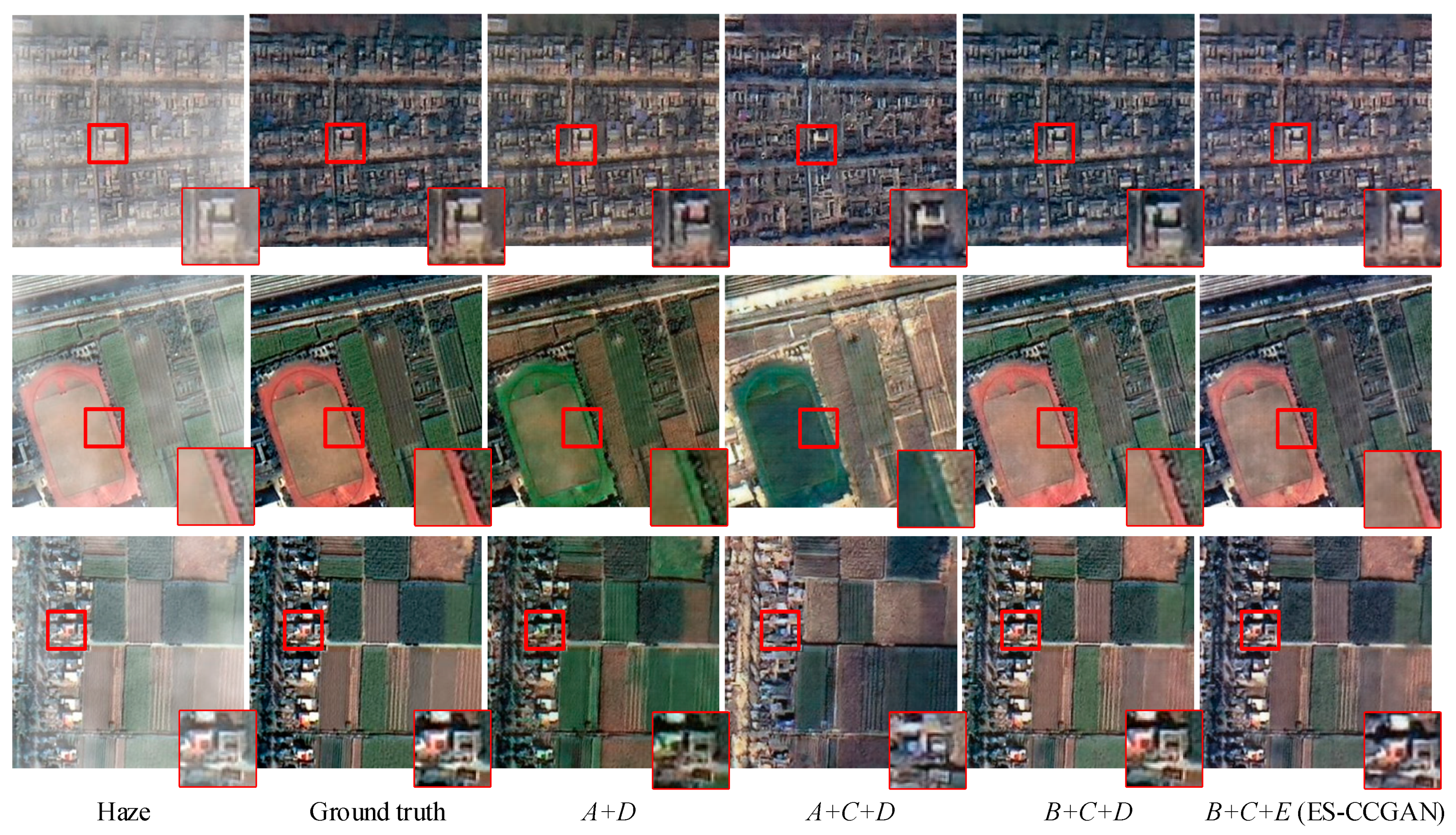

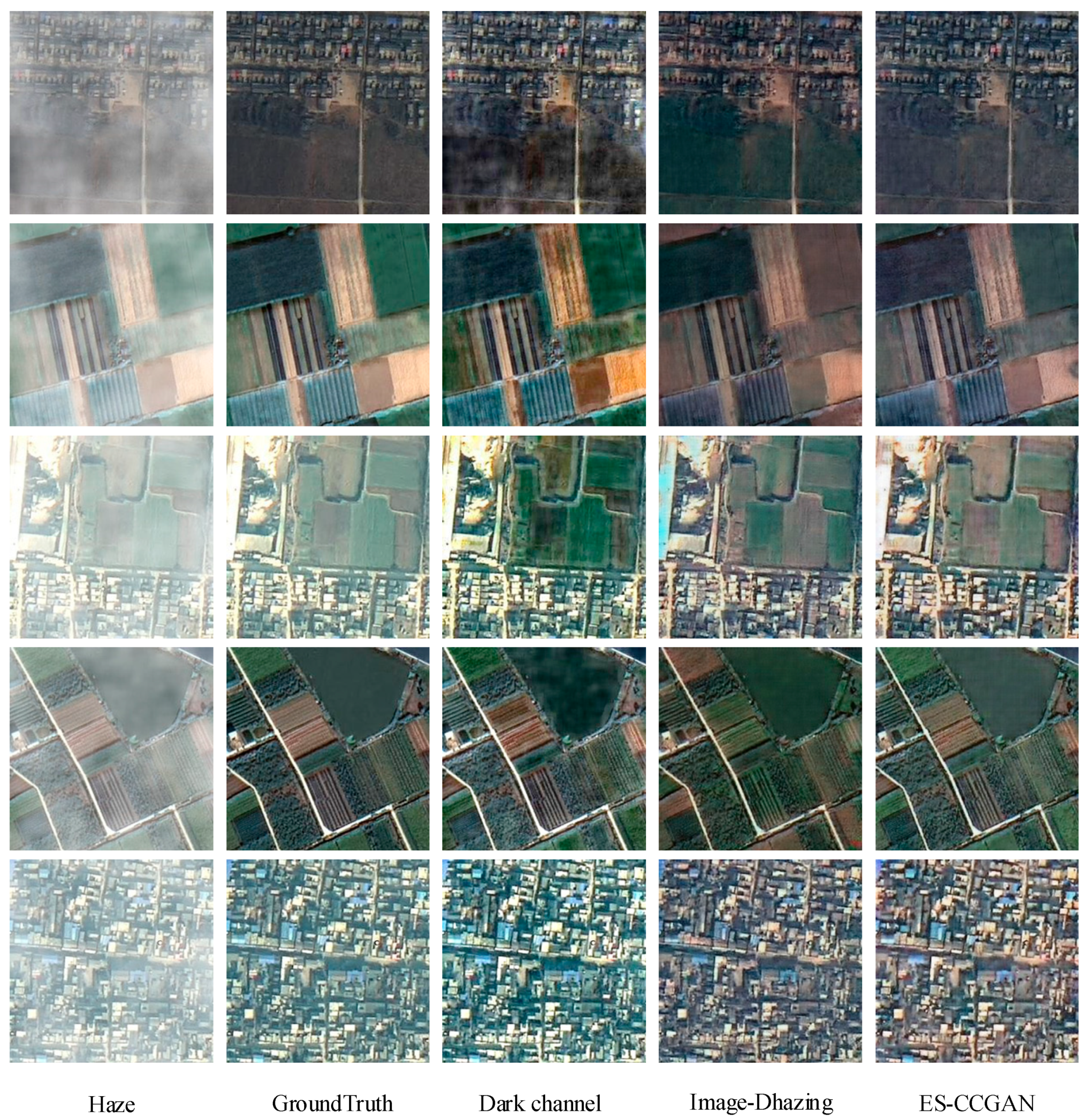

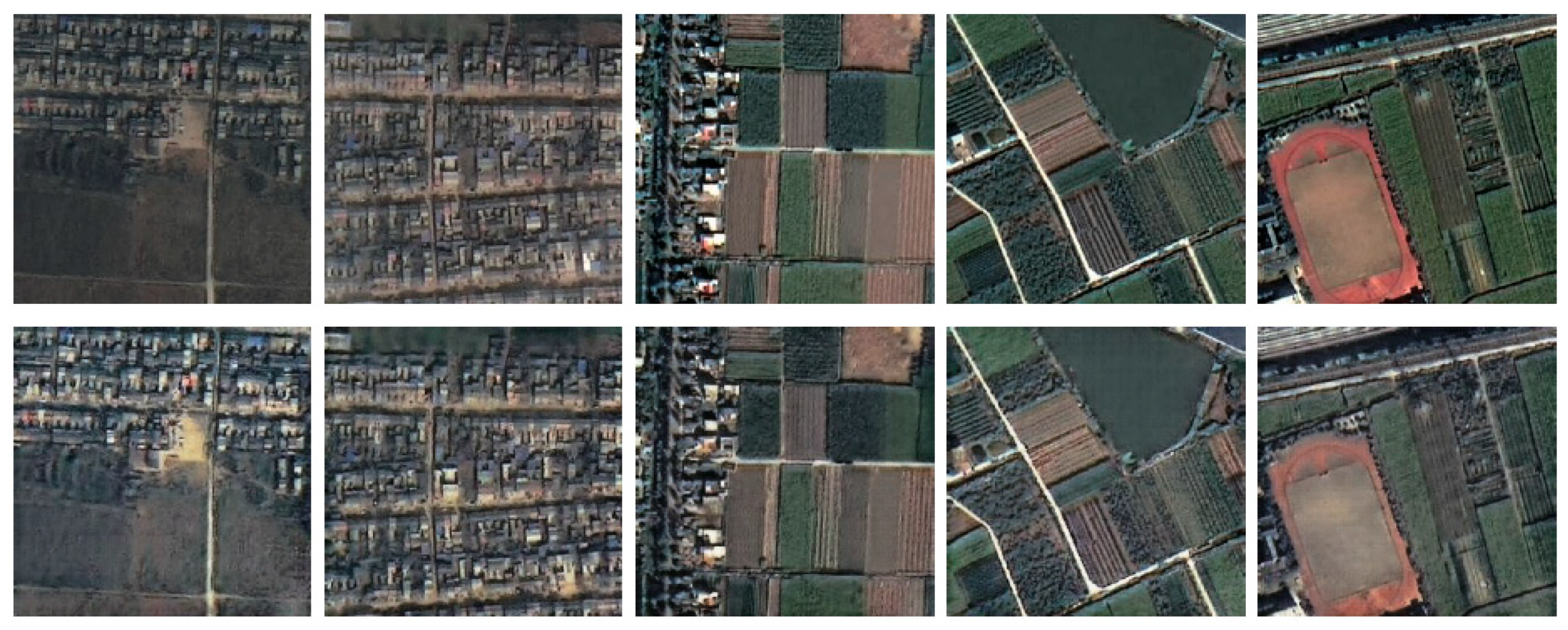

3.3. Experimental Results

4. Discussion

4.1. Some Effects of the Proposed Method

4.2. Comparison with Other Dehazing Methods

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Generator network parameters.

| Operation | Kernel Size | Strides | Filters | Normalization | Activation Function |
|---|---|---|---|---|---|
| Conv1 | 7 × 7 | 1 × 1 | 32 | Yes | tanh |
| Conv2 | 3 × 3 | 2 × 2 | 64 | Yes | relu |
| Conv3 | 3 × 3 | 2 × 2 | 128 | Yes | relu |
| DenseNet | -- | -- | -- | -- | -- |
| deConv1 | 3 × 3 | 2 × 2 | 64 | Yes | relu |
| deConv2 | 3 × 3 | 2 × 2 | 32 | Yes | relu |
| deConv3 | 7 × 7 | 1 × 1 | 3 | Yes | relu |
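The encoder-decoder shape of the generator follows from the kernel sizes and strides in the table. The short sketch below traces the feature-map size through the layers; it assumes "same"-style padding of `k // 2`, an output padding of `stride - 1` for the transposed convolutions, and a DenseNet stage that keeps the spatial size, none of which is stated in the table. Channel counts are copied from the Filters column, and the DenseNet row is left as `None` because the table does not give it.

```python
def conv_out(n, k, s):
    """Output size of a convolution with padding k // 2 (assumed)."""
    p = k // 2
    return (n + 2 * p - k) // s + 1


def deconv_out(n, k, s):
    """Output size of a transposed convolution with padding k // 2 and output padding s - 1 (assumed)."""
    p = k // 2
    return (n - 1) * s - 2 * p + k + (s - 1)


# (name, kind, kernel, stride, filters) from the generator table.
GENERATOR = [
    ("Conv1", "conv", 7, 1, 32),
    ("Conv2", "conv", 3, 2, 64),
    ("Conv3", "conv", 3, 2, 128),
    ("DenseNet", "keep", None, None, None),
    ("deConv1", "deconv", 3, 2, 64),
    ("deConv2", "deconv", 3, 2, 32),
    ("deConv3", "deconv", 7, 1, 3),
]


def trace_shapes(size):
    """Return (name, height, width, filters) after each layer for a square input."""
    shapes = []
    for name, kind, k, s, filters in GENERATOR:
        if kind == "conv":
            size = conv_out(size, k, s)
        elif kind == "deconv":
            size = deconv_out(size, k, s)
        # kind == "keep": the DenseNet stage is assumed not to change the spatial size
        shapes.append((name, size, size, filters))
    return shapes


for row in trace_shapes(256):
    print(row)
```

With a 256 × 256 input, the two stride-2 convolutions reduce the feature maps to 64 × 64 before the DenseNet stage, and the two stride-2 transposed convolutions restore 256 × 256 with three output channels, so the dehazed image has the same size as the input.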

Discriminator network parameters.

| Operation | Kernel Size | Strides | Normalization | Activation Function |
|---|---|---|---|---|
| Conv1 | 4 × 4 | 2 × 2 | Yes | leaky-relu |
| Conv2 | 4 × 4 | 2 × 2 | Yes | leaky-relu |
| Conv3 | 4 × 4 | 2 × 2 | Yes | leaky-relu |
| Conv4 | 4 × 4 | 2 × 2 | Yes | leaky-relu |
| Conv5 | 4 × 4 | 1 × 1 | No | None |
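Every layer of the discriminator is convolutional, so, assuming it is used as a patch-based (PatchGAN-style) discriminator as in CycleGAN, each value of its output map judges one patch of the input. The size of that patch is the receptive field, which can be computed from the kernel sizes and strides in the table alone; the helper below is a generic sketch of that calculation, not code from the paper.

```python
def receptive_field(layers):
    """Return (receptive field, cumulative stride) for a stack of (kernel, stride) layers."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each layer widens the field by (k - 1) steps of the current jump
        jump *= stride             # distance in input pixels between adjacent outputs
    return rf, jump


# Conv1-Conv5 from the discriminator table: 4 x 4 kernels with strides 2, 2, 2, 2, 1.
DISCRIMINATOR = [(4, 2), (4, 2), (4, 2), (4, 2), (4, 1)]
print(receptive_field(DISCRIMINATOR))  # (94, 16)

# The common 70 x 70 PatchGAN layout: three stride-2 layers, then two stride-1 layers.
print(receptive_field([(4, 2)] * 3 + [(4, 1)] * 2))  # (70, 8)
```

Four stride-2 layers followed by one stride-1 layer give a 94 × 94 receptive field with an output stride of 16, somewhat larger than the 70 × 70 patches of the standard PatchGAN layout reproduced in the second call.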

Structure of the DenseNet part of the generator.

| Layer | Filters |
|---|---|
| Input | 3 |
| Dense block (2 layers) | 288 |
| Dense block (4 layers) | 352 |
| Dense block (5 layers) | 432 |
| Dense block (5 layers) | 512 |
| Dense block (3 layers) | 432 |
| Dense block (2 layers) | 368 |
| Dense block (1 layer) | 64 |
| 1 × 1 Convolution | 256 |
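The difference between the DenseNet blocks and the ResNet blocks they replace is the connection pattern: a residual block adds its output to its input, whereas each layer of a dense block receives the concatenation of all earlier feature maps, so a block with L layers and growth rate k adds L × k channels. The toy NumPy block below illustrates only that connectivity; the 1 × 1 convolutions with random weights, the function name, and the growth rate of 16 are assumptions for illustration.

```python
import numpy as np


def dense_block(x, num_layers, growth_rate, rng):
    """Toy dense block: every layer sees the concatenation of all earlier feature maps.

    x has shape (channels, H, W). Each layer is a 1 x 1 convolution + ReLU with random
    (hypothetical) weights, standing in for the convolution layers of a real DenseNet.
    """
    features = [x]
    for _ in range(num_layers):
        inputs = np.concatenate(features, axis=0)
        weights = rng.standard_normal((growth_rate, inputs.shape[0])) * 0.01
        new = np.maximum(np.einsum("oc,chw->ohw", weights, inputs), 0.0)  # 1 x 1 conv + ReLU
        features.append(new)  # concatenated, not added as in a ResNet block
    return np.concatenate(features, axis=0)


rng = np.random.default_rng(0)
x = rng.random((288, 8, 8))
out = dense_block(x, num_layers=4, growth_rate=16, rng=rng)
print(out.shape)  # (352, 8, 8)
```

With 288 input channels, four layers, and the assumed growth rate of 16, the block outputs 288 + 4 × 16 = 352 channels. This happens to match the step between the first two dense blocks in the table (and the next two steps, to 432 and 512, are also consistent with 16 channels per layer), but the table itself does not state the growth rate.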

Quantitative evaluation of the dehazing results by scene category (mean, with standard deviation in parentheses).

| Category | Urban | Industrial | Suburban | River | Forest |
|---|---|---|---|---|---|
| SSIM (SD) | 0.92 (0.02) | 0.74 (0.06) | 0.91 (0.01) | 0.90 (0.01) | 0.90 (0.01) |
| FSIM (SD) | 0.94 (0.01) | 0.84 (0.01) | 0.93 (0.01) | 0.93 (0.01) | 0.93 (0.01) |
| PSNR (SD) | 23.55 (2.40) | 19.43 (0.97) | 23.96 (0.78) | 24.03 (1.41) | 26.47 (0.94) |
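PSNR is the simplest of the three metrics and can be written in a few lines: PSNR = 10 log10(MAX² / MSE), where MSE is the mean squared error between the dehazed image and the haze-free reference. The sketch below assumes 8-bit images (MAX = 255) and also shows one way to produce the "mean (SD)" entries; whether the tables use the sample or the population standard deviation is not stated, so the `ddof=1` choice is an assumption. SSIM and FSIM rely on local structural statistics and are omitted here.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diff = np.asarray(reference, dtype=float) - np.asarray(test, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def mean_sd(values):
    """Summarise per-image scores as 'mean (SD)' using the sample SD (assumed)."""
    v = np.asarray(values, dtype=float)
    return f"{v.mean():.2f} ({v.std(ddof=1):.2f})"


print(psnr(np.zeros((4, 4)), np.ones((4, 4))))  # about 48.13 dB (MSE = 1)
print(mean_sd([1.0, 2.0, 3.0]))                 # 2.00 (1.00)
```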

Comparison with other dehazing methods (mean, with standard deviation in parentheses).

| Method | Dark Channel | CycleDehaze | Intermediate Result | DehazeNet | GFN | Ours |
|---|---|---|---|---|---|---|
| SSIM (SD) | 0.93 (0.02) | 0.55 (0.08) | 0.90 (0.01) | 0.69 (0.19) | 0.86 (0.054) | 0.91 (0.02) |
| FSIM (SD) | 0.95 (0.02) | 0.77 (0.02) | 0.93 (0.01) | 0.84 (0.09) | 0.92 (0.02) | 0.93 (0.01) |
| PSNR (SD) | 21.16 (2.19) | 17.28 (2.69) | 21.66 (3.05) | 14.85 (3.39) | 18.82 (1.96) | 22.46 (3.81) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hu, A.; Xie, Z.; Xu, Y.; Xie, M.; Wu, L.; Qiu, Q. Unsupervised Haze Removal for High-Resolution Optical Remote-Sensing Images Based on Improved Generative Adversarial Networks. Remote Sens. 2020, 12, 4162. https://doi.org/10.3390/rs12244162
