1. Introduction

Hyperspectral imaging simultaneously captures the radiance of the earth’s surface in several hundred contiguous wavelength bands [1]. Despite their usually coarse spatial resolution, the acquired hyperspectral images record abundant spectral information about the imaged areas [2]. This information is valuable for various remote sensing applications, such as monitoring and management of environmental changes, agricultural land use, and mineral exploitation [3,4,5]. Hyperspectral images take the form of 3D cubes with two spatial dimensions and one spectral dimension (i.e., the number of bands) [1,6]. Spatial pixels in a hyperspectral image correspond to the reflectance of the materials on the earth’s surface. Hyperspectral image classification requires a classifier that learns to predict the class label of each pixel, given a fraction of labeled pixels for training [7,8,9].

In recent literature, the design of deep learning classifiers [10,11,12,13] has been at the forefront of research efforts, leading to dramatic improvements in classification accuracy. Deep learning classifiers directly extract representative features from labeled samples and specify a parameterized mapping from data space to label space. In essence, the performance of deep learning classifiers relies heavily on the volume of labeled training samples [14,15]. A deep learning classifier can achieve remarkable performance for hyperspectral image classification given sufficient labeled samples, but it normally performs poorly when learning with limited labeled samples [16]. Classifying hyperspectral images with limited labeled samples is in high demand, because collecting and labeling hyperspectral images is far more labor- and material-intensive than doing the same for natural images [17,18,19,20]. Specifically, collecting hyperspectral images requires deploying specialized imaging spectrometers. In addition, the coarse spatial resolution and the large number of bands make labeling difficult. Under these circumstances, learning an effective deep learning classifier with limited labeled hyperspectral samples becomes an ill-posed problem.

Active learning provides a potential means of alleviating this deficiency. Active learning does not change the internal structure of a deep learning classifier; rather, it acts as an efficient labeling strategy. The fundamental principle of the active learning protocol lies in building the training set of labeled samples iteratively [21] by querying informative unlabeled samples and assigning them labels over multiple loops. Pool-based active learning and active learning by synthesis are two representative paradigms [22]. We concentrate on the pool-based paradigm, which is the scheme employed by almost all active learning-based hyperspectral image classification methods [17,18,23,24,25,26,27,28]. Given a pool (i.e., a set) of labeled hyperspectral samples and a pool of unlabeled hyperspectral samples, active learning algorithms strategically query a fixed number (referred to as the ‘budget’ in active learning) of the most informative samples from the unlabeled pool [22]. The queried samples are then labeled and added to the labeled pool to facilitate model improvement [29].

Which samples are queried depends on certain criteria, referred to as acquisition heuristics. There is no generic criterion, and active learning practitioners design each sophisticated acquisition heuristic carefully. Existing active learning-based hyperspectral classifiers normally employ off-the-shelf uncertainty-based algorithms [30,31], such as least confidence [32], entropy sampling [32], and Bayesian active learning by disagreement (BALD) [33]. These algorithms query unlabeled samples by evaluating uncertainty from the output of the classifier. However, the classifier's representational power is bounded by the number of labeled samples at hand. In this scenario, these active deep learning methods tend to underestimate the feature variability of hyperspectral images, which spans both the spectral and the spatial domains.
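
The three uncertainty-based heuristics named above can be sketched as scoring functions over a classifier's softmax outputs. The following minimal, framework-free Python sketch is illustrative only and is not the implementation used by any cited work. The function names are hypothetical, and BALD is shown in its common Monte Carlo form, assuming `mc_probs` holds the class probabilities of one sample from several stochastic (e.g., dropout-enabled) forward passes. All three scores depend only on the classifier output, which is the dependence discussed above.

```python
import math


def least_confidence(probs):
    """Score = 1 - max class probability; higher means less confident."""
    return 1.0 - max(probs)


def entropy(probs):
    """Shannon entropy (natural log) of one class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def bald(mc_probs):
    """Mutual information between the prediction and the model parameters,
    estimated from several stochastic forward passes of the same sample."""
    num_passes = len(mc_probs)
    num_classes = len(mc_probs[0])
    mean_probs = [sum(run[c] for run in mc_probs) / num_passes for c in range(num_classes)]
    expected_entropy = sum(entropy(run) for run in mc_probs) / num_passes
    return entropy(mean_probs) - expected_entropy


def query_top_k(scores, k):
    """Indices of the k samples with the highest uncertainty scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


pool = [[0.9, 0.1], [0.5, 0.5], [0.6, 0.4]]
print(query_top_k([entropy(p) for p in pool], k=2))  # → [1, 2]
```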

To address this limitation and comprehensively capture the feature variability of hyperspectral images, we propose a feature-oriented adversarial active learning (FAAL) strategy in this article. We exploit the high-level features obtained from an intermediate layer of a deep learning classifier rather than its output. We employ a deep learning classifier that combines 3D convolutional layers, 2D convolutional layers, and dense layers (i.e., fully connected layers) [11]. The use of 3D convolutional layers accords with the 3D nature of hyperspectral images and facilitates feature extraction [34,35] from both the spectral and the spatial domains. Moreover, we simply divide the classifier into (i) a convolutional module, which learns the high-level features of hyperspectral samples, and (ii) a dense module, which learns to perform predictions. Further, we develop an acquisition heuristic based on adversarial learning with the high-level features. To derive the acquisition heuristic, we add a generative adversarial network (GAN) alongside the deep learning classifier. The GAN comprises two subnetworks: (i) a feature generator that generates fake high-level features, and (ii) a feature discriminator that discriminates between the real high-level features extracted by the convolutional module and the fake high-level features generated by the feature generator. The two subnetworks co-evolve during adversarial learning. Trained with both real and fake high-level features, the feature discriminator comprehensively captures the feature variability of hyperspectral images and acquires a powerful and generalized discriminative capability. We use the well-trained feature discriminator as the acquisition heuristic to query informative unlabeled samples. This discriminator is a purely parameterized yet simple neural network. The informativeness of each sample is measured by the probability estimated by the well-trained feature discriminator.

The divided deep learning classifier and the feature-oriented GAN form the full FAAL framework. Multiple active learning loops, in which both the classifier and the GAN are trained with ever-increasing labeled data, make the classifier robust and generalized for hyperspectral image classification. Overall, the GAN undertakes a pretext task [36] for the main classification task of the classifier.

Our contributions are summarized as follows.

We develop an active deep learning framework, referred to as feature-oriented adversarial active learning (FAAL), for classifying hyperspectral images with limited labeled samples. The FAAL framework integrates a deep learning classifier with an active learning strategy. This improves the learning ability of the deep learning classifier for classifying hyperspectral images with limited labeled samples.

To the best of our knowledge, neither the focus on high-level features nor the adversarial learning methodology has been explored for active learning-based hyperspectral image classification. In contrast, the active learning within our FAAL framework is characterized by an acquisition heuristic which is established via high-level feature-oriented adversarial learning. Such exploration enables our FAAL framework to comprehensively capture the feature variability of hyperspectral images and thus yield an effective hyperspectral image classification scheme.

Our FAAL framework achieves state-of-the-art performance on two public hyperspectral image datasets for classifying hyperspectral images with limited labeled samples. The effectiveness of both the full FAAL framework and the adversarially learned acquisition heuristic is validated by rigorous experimental evaluations.

The rest of this article is structured as follows. Section 2 gives some preliminary knowledge of our work. Section 3 describes our FAAL framework in detail. Section 4 provides experimental evaluations and discussion. Finally, Section 5 concludes this article with several directions for future research.

3. Feature-Oriented Adversarial Active Learning

Our feature-oriented adversarial active learning (FAAL) framework performs hyperspectral image classification in a spatial-spectral manner [51,52]. We begin by preprocessing hyperspectral images with dimensionality reduction (e.g., retaining 30 spectral bands) [53,54]. Next, we apply a spatial window (e.g., of size 25) to them and obtain a group of hyperspectral image cubes (e.g., of size 25 × 25 × 30). Our FAAL framework receives such hyperspectral image cubes as input. Each cube comprises an object pixel (i.e., a spectrum to be classified) and its spatial neighbor pixels in the hyperspectral image after dimensionality reduction. Without loss of generality, we refer to such a cube as a sample.
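
As a rough illustration of how a sample is formed, the sketch below cuts a window × window × bands cube centered on one pixel of a dimensionality-reduced scene. It assumes a nested-list image indexed as `image[row][col][band]` and zero padding at the scene borders. Both choices, and the name `extract_cube`, are assumptions of this sketch rather than details given in the text.

```python
def extract_cube(image, row, col, window=25):
    """Return a window x window x bands cube centered on pixel (row, col).

    `image` is a nested list indexed as image[row][col][band] (already reduced
    to, e.g., 30 bands). Neighbors falling outside the scene are zero-padded,
    which is an assumption of this sketch.
    """
    height, width, bands = len(image), len(image[0]), len(image[0][0])
    half = window // 2
    cube = []
    for r in range(row - half, row + half + 1):
        cube_row = []
        for c in range(col - half, col + half + 1):
            if 0 <= r < height and 0 <= c < width:
                cube_row.append(list(image[r][c]))
            else:
                cube_row.append([0.0] * bands)
        cube.append(cube_row)
    return cube


# Toy 6 x 5 scene with 30 bands; pixel (r, c) holds the value r * 10 + c in every band.
scene = [[[float(r * 10 + c)] * 30 for c in range(5)] for r in range(6)]
sample = extract_cube(scene, row=0, col=0)
print(len(sample), len(sample[0]), len(sample[0][0]))  # → 25 25 30
```

With a window of 25, the object pixel sits at index 12 of both spatial axes of the cube.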

Let $(x_i^L, y_i^L)$ be the $i$th sample-label pair in the labeled pool of size $L$, and let $x_j^U$ be the $j$th sample in the unlabeled pool of size $U$. For generality, we omit subscripts unless otherwise specified. Active learning-based hyperspectral classifiers aim to query the most informative hyperspectral samples from the unlabeled pool for labeling, according to a specific acquisition heuristic. Our FAAL framework is composed of a deep learning classifier and a generative adversarial network (GAN). It performs the active query based on adversarial learning with high-level features. The adversarial learning makes the discriminator subnetwork of the GAN increasingly powerful and generalized in its discriminative capability, so that the discriminator is in nature an acquisition heuristic.

Figure 1 illustrates the adversarial learning with high-level features. Figure 2 shows the feature map changes within the GAN. Figure 3 displays the active query of unlabeled samples, which takes place outside training. More details are given in the following subsections.

3.1. High-Level Features from Classifier Division

A typical deep learning classifier extracts representative features layer by layer. We employ a classifier that combines 3D convolutional layers, 2D convolutional layers, and dense layers (i.e., fully connected layers). It is broadly adapted from the one developed by Roy et al. [11]. The use of 3D convolutional layers accords with the 3D nature of hyperspectral images, which facilitates spatial-spectral feature extraction for the downstream classification task. Assuming that there are $N$ labeled samples for training, we adopt the standard softmax cross entropy as the cost for the classifier, i.e., $\mathcal{L}_{C}$:

$$\mathcal{L}_{C} = -\frac{1}{N}\sum_{i=1}^{N} y_i \cdot \log\left(\mathrm{Softmax}(\hat{y}_i)\right), \tag{1}$$

where $y_i$ and $\hat{y}_i$ denote the groundtruth and the prediction of the $i$th sample, respectively. $\mathrm{Softmax}(\cdot)$ indicates a softmax operation.
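
Equation (1) can be sketched in plain Python as follows, assuming one-hot groundtruth vectors and raw (pre-softmax) predictions. The function names are hypothetical. In TensorFlow, `tf.nn.softmax_cross_entropy_with_logits` computes the per-sample term in a fused, batched form.

```python
import math


def softmax(logits):
    """Numerically stable softmax of one logit vector."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(labels, logits):
    """Mean over N samples of -sum_c y_ic * log(Softmax(y_hat_i)_c), cf. Equation (1).

    `labels` are one-hot groundtruth vectors y_i; `logits` are raw predictions y_hat_i.
    """
    n = len(labels)
    total = 0.0
    for y, y_hat in zip(labels, logits):
        probs = softmax(y_hat)
        total -= sum(y_c * math.log(p_c) for y_c, p_c in zip(y, probs) if y_c > 0)
    return total / n


loss = softmax_cross_entropy([[1, 0], [0, 1]], [[0.0, 0.0], [0.0, 0.0]])
print(round(loss, 4))  # → 0.6931
```

Uninformative (all-zero) logits give a cost of $\log 2$ for two classes, as expected for a uniform prediction.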

We divide the classifier into two modules by splitting it between two intermediate layers, and we consider the resulting high-level feature space. Specifically, we adopt a simple division strategy that derives (i) a convolutional module, which includes all 3D and 2D convolutional operations at the head of the classifier, and (ii) a dense module, which stacks the dense layers at the tail, as shown in the upper half of Figure 1. For simplicity, we use Conv3D, Conv2D, and Dense to represent 3D convolutional layers, 2D convolutional layers, and dense layers, respectively. Given input labeled samples $x$, the convolutional module learns to extract representative high-level features $f$. The dense module transforms $f$ into the final predictions $\hat{y}$ via multiple layer-wise non-linear transformations.
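
To make the division concrete, the sketch below traces tensor shapes through the convolutional module. It assumes the HybridSN-style configuration of Roy et al. [11]: three Conv3D layers with 8, 16, and 32 kernels of sizes 3 × 3 × 7, 3 × 3 × 5, and 3 × 3 × 3, then one 3 × 3 Conv2D with 64 kernels, all with stride 1 and no padding. These layer settings are an assumption. Under them, a 25 × 25 × 30 sample yields a 17 × 17 × 64 feature map, i.e., the 18496 × 1 high-level feature $f$ quoted in Section 3.2. The dense module would then map this vector to the prediction $\hat{y}$.

```python
def conv3d_valid(shape, kernel, filters):
    """Output shape (H, W, B, C) of a stride-1, unpadded ('valid') 3D convolution."""
    h, w, b, _ = shape
    kh, kw, kb = kernel
    return (h - kh + 1, w - kw + 1, b - kb + 1, filters)


def conv2d_valid(shape, kernel, filters):
    """Output shape (H, W, C) of a stride-1, unpadded 2D convolution."""
    h, w, _ = shape
    kh, kw = kernel
    return (h - kh + 1, w - kw + 1, filters)


# Assumed HybridSN-style layers (Roy et al.); FAAL's exact configuration may differ.
shape = (25, 25, 30, 1)                          # one sample: 25 x 25 window, 30 bands
for kernel, filters in [((3, 3, 7), 8), ((3, 3, 5), 16), ((3, 3, 3), 32)]:
    shape = conv3d_valid(shape, kernel, filters)
h, w, b, c = shape
shape = (h, w, b * c)                            # fold spectral and channel axes for Conv2D
shape = conv2d_valid(shape, (3, 3), 64)
feature_size = shape[0] * shape[1] * shape[2]    # length of the flattened feature f
print(shape, feature_size)  # → (17, 17, 64) 18496
```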

With such a division, the mapping from data space to label space passes through high-level feature space. It can thus be considered a sequential combination of two mappings. The convolutional module accounts for the mapping from data space to high-level feature space, and the dense module completes the mapping from high-level feature space to label space. We implement the active query using the high-level features in high-level feature space rather than the output of the classifier, i.e., data points in label space. The representational power of the classifier is bounded by the number of labeled samples at hand, so its compact output can hardly reflect the feature abundance of hyperspectral images. Exploring the intermediate high-level features alleviates this bias to a certain extent. Besides, we leverage additional fake high-level features generated by a GAN to further help capture the feature variability of hyperspectral images during the active query, as introduced in the next subsection.

3.2. Adversarial Learning with High-Level Features

We train a GAN independently of the classifier, as shown in the lower half of Figure 1. The GAN is composed of a feature generator $G$ and a feature discriminator $D$. Specifically, $G$ maps noise $z$ into high-level feature space to generate fake high-level features $\hat{f} = G(z)$. For uniformity, we use $E$ here to denote the convolutional module of the classifier. $D$ treats $f = E(x)$, the high-level features extracted by the convolutional module of the classifier, as real. It treats $\hat{f} = G(z)$, the features generated from noise, as fake, and it learns to discriminate between them. To be precise, $D$ receives high-level features ($f$ or $\hat{f}$) as input and estimates the probability that they are real. The output probabilities are real values between 0 and 1.

Neither $G$ nor $D$ is elaborately designed.

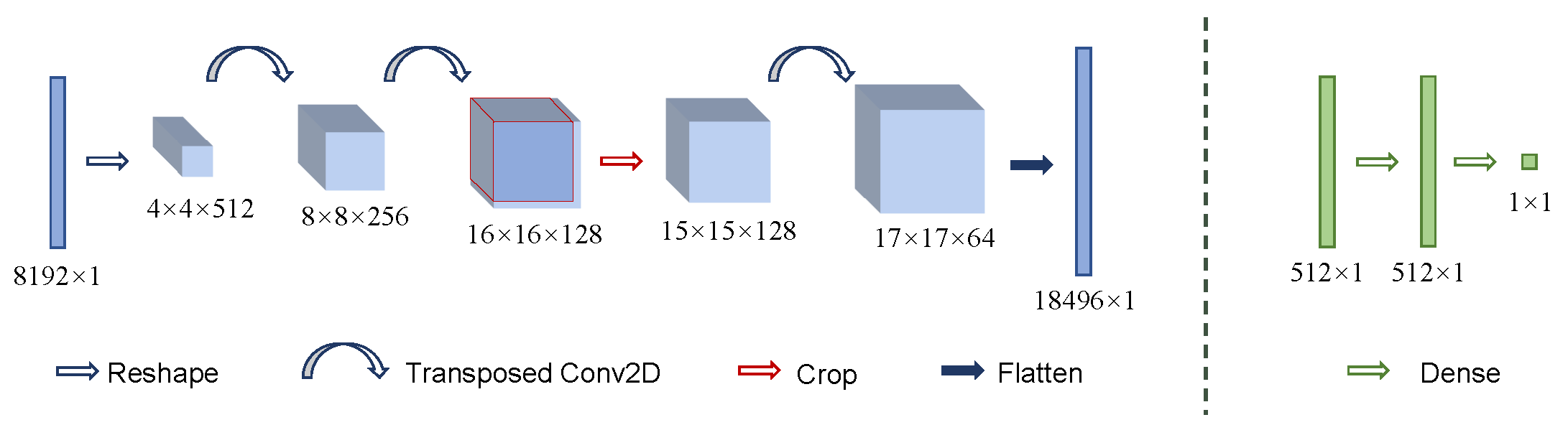

Figure 2 illustrates the feature map changes within $G$ and $D$, respectively, given a feature size of 18496 × 1. $G$ begins with a dense layer that expands the low-dimensional input noise $z$ (e.g., 100 × 1) to a size (e.g., 8192 × 1) that can be reshaped into a small stack of feature maps (e.g., 4 × 4 × 512). We use 2D transposed convolutional layers (Transposed Conv2D) to up-scale the feature maps. In particular, we crop the 16 × 16 feature maps to 15 × 15 by discarding the last row and the last column. This allows them to be up-sampled smoothly to match the target size (i.e., 17 × 17 × 64). Flattening feature maps of that size yields the generated fake high-level features. To build $D$, we simply use three dense layers that transform the input real/fake high-level features into real-valued probabilities.
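
The generator sizes above can be checked with a short shape trace. This sketch assumes two stride-2 'same' transposed convolutions (4 → 8 → 16), the described crop (16 → 15), and a final stride-1 'valid' transposed convolution with a 3 × 3 kernel (15 → 17). The kernel sizes and strides are our assumptions, chosen only to reproduce the stated sizes. The output-length rule follows Keras' `Conv2DTranspose` when `output_padding` is unset.

```python
def transposed_conv_out(size, kernel, stride, padding):
    """Spatial output length of a 2D transposed convolution, following the
    rule Keras uses for Conv2DTranspose when output_padding is unset."""
    if padding == "same":
        return size * stride
    if padding == "valid":
        return size * stride + max(kernel - stride, 0)
    raise ValueError(f"unsupported padding: {padding}")


dense_units = 4 * 4 * 512                      # dense layer expands z (100 x 1) to 8192 x 1
size = 4                                       # reshape 8192 -> 4 x 4 x 512
size = transposed_conv_out(size, kernel=4, stride=2, padding="same")   # 4 -> 8
size = transposed_conv_out(size, kernel=4, stride=2, padding="same")   # 8 -> 16
size -= 1                                      # crop: drop last row and column, 16 -> 15
size = transposed_conv_out(size, kernel=3, stride=1, padding="valid")  # 15 -> 17
fake_feature_size = size * size * 64           # flatten 17 x 17 x 64
print(dense_units, size, fake_feature_size)    # → 8192 17 18496
```

Without the crop, the same final layer would produce 18 × 18 maps, which is why one row and one column are discarded first.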

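$G$ and $D$ co-evolve during adversarial learning, in which they are trained adversarially and alternately. $D$ is commonly trained prior to $G$. The cost for the feature discriminator, $\mathcal{L}_{D}$, takes the form of the standard binary cross entropy:

$$\mathcal{L}_{D} = -\mathbb{E}_{x \sim p_{\mathrm{data}}}\left[\log D(E(x))\right] - \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right], \tag{2}$$

where $p_{\mathrm{data}}$ and $p_{z}$ are the empirical distribution of the current labeled samples and an easy-to-sample prior distribution (e.g., a Gaussian distribution) of noise, respectively. $\mathbb{E}$ indicates an expectation operation.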
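
Equation (2) can be estimated on a mini-batch by replacing each expectation with a batch mean. The sketch below does this in plain Python. The name `discriminator_loss` and the small `eps` guard are assumptions of this illustration.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Empirical binary cross entropy of Equation (2).

    d_real: outputs D(E(x)) for features of current labeled samples (target 1).
    d_fake: outputs D(G(z)) for generated features (target 0).
    Expectations are replaced by batch means; eps guards against log(0).
    """
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # → 1.3863
```

A discriminator that cannot tell real from fake (all outputs 0.5) incurs $2\log 2$, and the cost falls toward zero as it separates the two sets.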

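To ensure that both $G$ and $D$ have strong gradients during training [55], the cost for the feature generator, $\mathcal{L}_{G}$, keeps the form of cross entropy but becomes:

$$\mathcal{L}_{G} = -\mathbb{E}_{z \sim p_{z}}\left[\log D(G(z))\right], \tag{3}$$

where $p_{z}$ is the prior distribution of noise.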
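
Why Equation (3) gives stronger gradients can be seen by writing $D(G(z)) = \sigma(a)$ for a discriminator logit $a$. The minimax generator cost $\log(1 - \sigma(a))$ has derivative $-\sigma(a)$, which vanishes when $D$ confidently rejects fakes. By contrast, $-\log \sigma(a)$ has derivative $-(1 - \sigma(a))$, which stays close to $-1$. The following sketch, with hypothetical function names, evaluates both at an illustrative logit.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating cost of Equation (3): batch mean of -log D(G(z))."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


def grad_wrt_logit(a):
    """Derivatives with respect to the discriminator logit a, where D(G(z)) = sigmoid(a)."""
    saturating = -sigmoid(a)              # d/da of log(1 - sigmoid(a))
    non_saturating = -(1.0 - sigmoid(a))  # d/da of -log(sigmoid(a))
    return saturating, non_saturating


# Early in training, D confidently rejects fakes, e.g. logit a = -6 (D ≈ 0.0025).
sat, non_sat = grad_wrt_logit(-6.0)
print(f"{sat:.4f} {non_sat:.4f}")  # → -0.0025 -0.9975
```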

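In addition, we follow the disparity measurement [56] to encourage diverse feature synthesis, and we extend $\mathcal{L}_{G}$ with a regularization term $\mathcal{L}_{R}$:

$$\mathcal{L}_{R} = \frac{d(z_1, z_2)}{d\left(G(z_1), G(z_2)\right)}, \tag{4}$$

where $d(\cdot,\cdot)$ denotes a distance. Minimizing $\mathcal{L}_{R}$ explicitly maximizes the ratio of the distance between two generated features $\hat{f}_1 = G(z_1)$ and $\hat{f}_2 = G(z_2)$ to the distance between the two corresponding noise vectors $z_1$ and $z_2$.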
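
The following is a minimal sketch of the regularizer in Equation (4). It assumes the mean absolute difference as the distance $d$ and a small `eps` in the denominator to avoid division by zero. These choices, the weight in `generator_total_loss`, and all function names are assumptions rather than the settings of [56] or of FAAL.

```python
def mean_abs_distance(u, v):
    """Mean absolute difference between two equal-length vectors (assumed distance d)."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def diversity_regularizer(f1, f2, z1, z2, eps=1e-5):
    """Equation (4) as sketched here: d(z1, z2) / d(G(z1), G(z2)).

    Minimizing this ratio pushes generated features apart relative to the
    distance between the noise vectors that produced them.
    """
    return mean_abs_distance(z1, z2) / (mean_abs_distance(f1, f2) + eps)


def generator_total_loss(adv_loss, reg_loss, weight=1.0):
    """G's cost: Equation (3) plus a weighted Equation (4); the weight is hypothetical."""
    return adv_loss + weight * reg_loss


z1, z2 = [0.0, 0.0], [1.0, 1.0]
collapsed = diversity_regularizer([0.5, 0.5], [0.5, 0.5], z1, z2)  # identical outputs
diverse = diversity_regularizer([0.0, 0.0], [2.0, 2.0], z1, z2)
print(collapsed > diverse)  # → True
```

A generator that maps different noise vectors to the same feature (mode collapse) is heavily penalized, which is the diversity pressure the regularizer is meant to add.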

Trained with both real and fake high-level features, $D$ captures the feature variability of hyperspectral images and acquires a powerful and generalized discriminative capability. It estimates the probability that the input features are real rather than fake (generated). We freeze a well-trained $D$ and take its estimated probabilities as a criterion for measuring whether an unlabeled sample is already well represented. The well-trained $D$ is in nature an acquisition heuristic for active learning, and it is a purely parameterized yet simple neural network. In general, samples that are not yet well represented yield high uncertainty [57]. The adversarially learned acquisition heuristic therefore remains uncertainty-based in spirit, although it does not measure uncertainty explicitly. Such implicit measurement through feature discrimination improves how well the feature variability of hyperspectral images is captured. Besides, the adversarially learned acquisition heuristic is task-agnostic, so we believe it can be extended to other applications. Investigating this property is beyond the scope of this article.

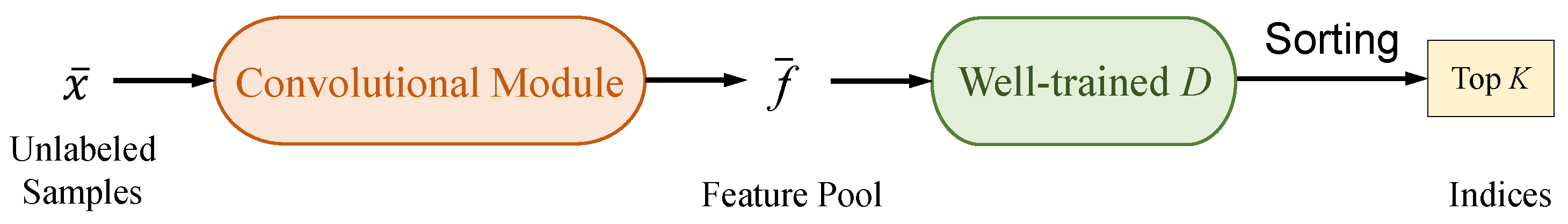

3.3. Active Query of Unlabeled Samples

The above adversarial learning provides an acquisition heuristic for the subsequent active query. Overall, the GAN undertakes a pretext task [36] for the main classification task. During adversarial learning, $D$ learns to output high probability values when receiving real high-level features as input and low probability values when given fake high-level features. When a frozen, well-trained $D$ generalizes to new input, it is intuitively sound that $D$ would output low probability values if the new input is not well represented by the pool of $f$, and high values otherwise.

Figure 3 illustrates the active query of unlabeled samples. Our acquisition heuristic performs the active query on high-level features rather than directly on hyperspectral samples. We feed the hyperspectral samples $x^U$ in the unlabeled pool into the frozen, trained convolutional module of the classifier to obtain a pool of high-level features $f^U = E(x^U)$. We then feed those features into the frozen, trained $D$ as the aforementioned new input. Assume the budget of each active learning loop is $K$. We sort the estimated output probabilities in ascending order and query the $K$ samples with the smallest values. The output of the active query is a series of indices, with which we can easily trace back to the corresponding unlabeled samples. After being labeled, the queried samples are merged with the labeled samples at hand and removed from the unlabeled pool.
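
The query step reduces to sorting and indexing. In the sketch below, `probs_real` stands for the outputs of the frozen $D$ on $E(x^U)$. The function names and the `oracle` callable, which stands in for a human annotator, are hypothetical.

```python
def active_query(unlabeled_ids, probs_real, budget):
    """Return the ids of the `budget` unlabeled samples whose features D scores lowest.

    probs_real[i] is the frozen discriminator's estimate that the high-level
    feature of unlabeled sample i is "real", i.e. already well represented.
    """
    order = sorted(range(len(unlabeled_ids)), key=lambda i: probs_real[i])
    return [unlabeled_ids[i] for i in order[:budget]]


def update_pools(labeled, unlabeled, queried, oracle):
    """Label the queried samples, merge them into the labeled pool, and drop them from the unlabeled pool."""
    queried_set = set(queried)
    labeled = labeled + [(i, oracle(i)) for i in queried]
    unlabeled = [i for i in unlabeled if i not in queried_set]
    return labeled, unlabeled


unlabeled = [10, 11, 12, 13, 14]
scores = [0.91, 0.12, 0.55, 0.08, 0.73]   # hypothetical outputs of the frozen D
picked = active_query(unlabeled, scores, budget=2)
labeled, unlabeled = update_pools([], unlabeled, picked, oracle=lambda i: i % 3)
print(picked, unlabeled)  # → [13, 11] [10, 12, 14]
```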

The GAN provides adversarial learning with high-level features, yielding a purely parameterized yet simple acquisition heuristic for actively querying unlabeled samples to be labeled. The feature variability of hyperspectral images is taken into consideration. Multiple active learning loops progressively improve the performance of the classifier for classifying hyperspectral images with limited labeled samples. We refer to the full strategy/framework as feature-oriented adversarial active learning (FAAL).

3.4. Workflow of Full Framework

The two distinctive components of our FAAL framework, i.e., a deep learning classifier and a GAN, are trained alternately and separately. The classifier is trained prior to the GAN. Training one of them requires temporarily freezing the other.

The training process has two stages. First, the two components are trained with the initial labeled samples. Second, they are trained with ever-increasing labeled samples (or high-level features) over multiple active learning loops. Note that the GAN does not need to be trained in the last active learning loop, because no further query follows it. Algorithm 1 summarizes the workflow of our FAAL framework.

| Algorithm 1 Feature-oriented adversarial active learning. |
| --- |
| 1: repeat |
| 2: Update classifier initially: |
| Minimize Equation (1) with initial labeled samples. |
| 3: Update GAN initially: |
| a. Freeze classifier and obtain real high-level features $f$ of current labeled samples. |
| b. Generate fake high-level features $\hat{f}$ from noise $z$. |
| c. Update $D$ by minimizing Equation (2). |
| d. Generate two groups of fake high-level features $\hat{f}_1$ and $\hat{f}_2$ from noise $z_1$ and $z_2$, respectively. |
| e. Update $G$ by minimizing Equations (3) and (4). |
| 4: for $t = 1, \dots, T-1$ do ($T$: number of active learning loops) |
| 5: Active query of unlabeled samples: |
| a. Freeze $D$. |
| b. Query the $K$ unlabeled high-level features with minimum estimated probabilities. |
| c. Trace back to the unlabeled samples and label them. |
| d. Merge newly labeled samples with previous ones. |
| e. Remove the queried samples from the unlabeled pool. |
| 6: Update classifier using current labeled samples. |
| 7: Update GAN using the high-level features of the current labeled samples. |
| 8: end for |
| 9: for $t = T$ do |
| 10: Active query of unlabeled samples. |
| 11: Update classifier using current labeled samples. |
| 12: end for |
| 13: until reaching the given threshold. |
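
The control flow of Algorithm 1 can be sketched with the heavy components abstracted as callables. This is a hypothetical skeleton, not the released code. The callables are placeholders, the two `for` blocks of Algorithm 1 are folded into one loop that skips the GAN update in the last iteration, and the outer repeat/until loop is omitted.

```python
def faal_workflow(labeled, unlabeled, num_loops, budget,
                  train_classifier, train_gan, score_unlabeled, oracle):
    """Control flow of Algorithm 1 with every heavy component passed in as a callable.

    train_classifier / train_gan fit the two components on the current labeled
    pool (the GAN would use their high-level features), score_unlabeled returns
    the frozen D's probability for each unlabeled sample, and oracle returns a label.
    """
    train_classifier(labeled)                      # step 2: initial classifier update
    train_gan(labeled)                             # step 3: initial GAN update
    for loop in range(1, num_loops + 1):
        probs = score_unlabeled(unlabeled)         # frozen D applied to E(x^U)
        order = sorted(range(len(unlabeled)), key=lambda i: probs[i])
        queried = [unlabeled[i] for i in order[:budget]]
        queried_set = set(queried)
        labeled = labeled + [(x, oracle(x)) for x in queried]
        unlabeled = [x for x in unlabeled if x not in queried_set]
        train_classifier(labeled)                  # steps 6 and 11
        if loop < num_loops:                       # step 7: no GAN update in the last loop
            train_gan(labeled)
    return labeled, unlabeled


gan_calls = []
initial = [(i, i % 16) for i in range(80)]         # 5 seeds x 16 classes (dummy ids/labels)
pool = list(range(80, 1080))                       # 1000 unlabeled sample ids
final_labeled, final_pool = faal_workflow(
    initial, pool, num_loops=5, budget=34,
    train_classifier=lambda data: None,            # placeholder: no real training
    train_gan=lambda data: gan_calls.append(len(data)),
    score_unlabeled=lambda xs: [0.5] * len(xs),    # placeholder: constant scores
    oracle=lambda x: x % 16,
)
print(len(final_labeled), len(final_pool), gan_calls)  # → 250 830 [80, 114, 148, 182, 216]
```

With the default Indian Pines settings of Section 4.2, the GAN is trained five times (initially and in the first four loops), and the labeled pool ends at 250 samples.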

4. Experimental Results and Discussion

We rigorously evaluated our FAAL framework on the task of classifying hyperspectral images with limited labeled samples. We did not use any data augmentation method. We adopted two public hyperspectral image datasets and organized three groups of experimental comparisons on them. All quantitative comparisons were assessed using three common evaluation metrics: overall accuracy (OA), average accuracy (AA), and the kappa coefficient (KAPPA). Larger values indicate better performance. All reported results were averaged over ten runs. In each run, the initial labeled samples were randomly sampled without fixing random seeds. In all quantitative comparisons, the best results are marked in bold.
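
For reference, the three metrics can be computed from a confusion matrix as shown below. OA is the fraction of correctly classified test pixels, AA is the mean per-class accuracy, and KAPPA corrects OA for chance agreement. The function name is hypothetical. AA here averages only over classes present in the groundtruth, which is an assumption of this sketch.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Overall accuracy (OA), average accuracy (AA), and Cohen's kappa (KAPPA)."""
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        confusion[t][p] += 1                      # rows: groundtruth, columns: prediction
    total = len(y_true)
    oa = sum(confusion[c][c] for c in range(num_classes)) / total
    # AA: mean per-class accuracy (recall) over classes that occur in y_true
    row_sums = [sum(confusion[c]) for c in range(num_classes)]
    recalls = [confusion[c][c] / row_sums[c] for c in range(num_classes) if row_sums[c] > 0]
    aa = sum(recalls) / len(recalls)
    # Chance agreement from the groundtruth (row) and prediction (column) marginals
    col_sums = [sum(confusion[r][c] for r in range(num_classes)) for c in range(num_classes)]
    p_e = sum(row_sums[c] * col_sums[c] for c in range(num_classes)) / (total * total)
    kappa = (oa - p_e) / (1.0 - p_e)
    return oa, aa, kappa


metrics = classification_metrics([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 1], num_classes=2)
print(tuple(round(m, 4) for m in metrics))  # → (0.8333, 0.875, 0.6667)
```

Because AA weights every class equally, it is the metric most sensitive to errors on small classes, which matters for the class-imbalanced Indian Pines dataset.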

4.1. Datasets

We adopted two public hyperspectral image datasets, i.e., Indian Pines and Pavia University (both available at http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes). The Indian Pines scene was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor, and the Pavia University scene was collected by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor. The ground sites of the two scenes are the Indian Pines test site, USA, and the University of Pavia, Italy, respectively.

Table 1 lists more basic information on the two datasets, including sensor, size, number of available bands, spectral range, ground sample distance (GSD, i.e., spatial resolution), and number of labeled classes. By comparison, the Indian Pines dataset exhibits relatively heavy class imbalance, and the Pavia University dataset has fewer labeled classes. Class information and the number of labeled spectra per class for the two datasets are given in Table 2 and Table 3, respectively.

4.2. Implementation Details

We implement our FAAL framework in Python with the TensorFlow library. Our experimental environment contains 512 GB of random access memory (RAM) and NVIDIA Tesla K80 graphics processing unit (GPU) computing accelerators (11 GB memory). We retain 30 bands for each dataset after dimensionality reduction. The spatial window size for spatial-spectral classification is 25. We set the dimensionality of the noise to 100. The learning rates for the classifier and the GAN are 0.001 and 0.0002, respectively. In addition, we set a small decay rate of 0.000006 for training the classifier. The number of active learning loops is five. We initialize the labeled pool by randomly selecting five samples per class. We build the unlabeled pool by randomly sampling another 1000 samples, leaving the rest for testing. By default, the budgets are set to 34 for Indian Pines and 41 for Pavia University. After all active learning loops finish, there are 250 samples in the labeled pool for each of the two datasets. The number of training epochs is 45 for both initial training and active training. The total training time is about eleven minutes. Code is available at https://github.com/gxwangupc/FAAL.
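
The default budgets follow from simple arithmetic. The final pool size is the number of classes times the initial samples per class, plus the number of loops times the budget. The sketch assumes the standard class counts of the two groundtruth maps (16 for Indian Pines, 9 for Pavia University), which are listed in Table 1 rather than here. The last line reproduces the 320-sample Pavia University setting used in Section 4.4. The function name is hypothetical.

```python
def labeled_pool_size(num_classes, per_class, budget, loops):
    """Labeled samples after all loops: initial per-class seeds plus one budget per loop."""
    return num_classes * per_class + loops * budget


print(labeled_pool_size(16, 5, 34, 5))   # → 250 (Indian Pines, default)
print(labeled_pool_size(9, 5, 41, 5))    # → 250 (Pavia University, default)
print(labeled_pool_size(9, 10, 46, 5))   # → 320 (Pavia University, Section 4.4 setting)
```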

4.3. Analysis of the Naive Classifier

We start by analyzing our employed deep learning classifier (i.e., the basic classifier of our FAAL framework), which is configured with 3D convolutional layers, 2D convolutional layers, and dense layers. The configuration is generally adapted from Roy et al. [11].

We test two dimensionality reduction strategies. One is the traditional principal component analysis (PCA) [53]. The other is superpixel-wise principal component analysis (SuperPCA) [54], which performs PCA on segmented homogeneous regions [58,59]. To build a training set for the naive classifier, we randomly sample five samples per class. We then randomly choose an additional 170 disjoint samples for Indian Pines and 205 for Pavia University. In total, there are 250 labeled samples for each dataset.

The naive classifier receives all 250 labeled samples at once. However, these samples are selected purely at random, regardless of their informativeness. An active classifier (naive classifier + active learning) takes informativeness into account while iteratively adding queried samples. Its training starts with an extremely small number of labeled samples and runs multiple times, i.e., in loops, during which samples are queried regardless of class. The model trained in the current loop can be regarded as a pre-trained model from which training resumes in the next loop.

Table 4 assesses the naive classifier and our FAAL framework on the two datasets with the above two dimensionality reduction strategies, in terms of OA, AA, and KAPPA. The results show that reducing dimensionality with SuperPCA delivers better performance than with PCA. This indicates that a sophisticated dimensionality reduction strategy plays a significant role in the downstream task. Hence, we preprocess hyperspectral data only with SuperPCA in the following evaluations. We observe that the naive classifier obtains reasonable results overall, which suggests that the configuration of our employed deep learning classifier is quite effective. A major source of this capability may be that the use of 3D convolutional layers accords with the 3D nature of hyperspectral images. Overall, our FAAL framework performs better than the naive classifier under the same dimensionality reduction strategy. This highlights that an active learning strategy surpasses the naive setting, i.e., randomly selecting samples and training at once, in classifying hyperspectral images with limited labeled samples. It also confirms that the informativeness of samples matters greatly when learning with limited labeled samples.

4.4. Comparison with Other Active Learning Classifiers

We selected three state-of-the-art methods for comparison. Each of them performs spatial-spectral hyperspectral image classification based on active learning. Zhang et al. [26] combine active learning with hierarchical segmentation, which is responsible for extracting spatial information. Zhang et al. [25] incorporate active learning with adaptive multi-view generation and an ensemble strategy. Cao et al. [17] integrate active learning and a convolutional neural network, followed by a Markov random field for fine-tuning.

The following methods are included as baselines:

- AL-SV-HSeg (a single-view active learning framework with hierarchical segmentation) in Zhang et al. [26];
- AL-SV (a single-view active learning framework), AL-MV (a multi-view active learning framework), AL-MV-HSeg (a multi-view active learning framework with hierarchical segmentation), and AL-MVE-HSeg (a multi-view active learning ensemble framework with hierarchical segmentation) in Zhang et al. [25];
- AL-CNN-MRF (an active deep learning framework with a Markov random field) in Cao et al. [17].

We compare our FAAL framework directly with their reported results. We use the same number of labeled samples as Zhang et al. [25,26]: five samples per class initially and 250 samples in total at the end.

Table 5 reports the comparison results on Indian Pines in terms of OA, AA, KAPPA, and the accuracy of each class. When 250 labeled samples are used in total, our FAAL framework achieves the best performance on all three overall metrics. Notably, FAAL trained with 250 labeled samples obtains a higher AA than AL-CNN-MRF trained with 416 labeled samples in total. When trained with 300 labeled samples, our FAAL framework consolidates these gains and surpasses AL-CNN-MRF in terms of OA.

Table 6 gives the comparison results on Pavia University. Our FAAL framework trained with 250 labeled samples achieves higher OA and KAPPA than AL-SV-HSeg but a lower AA. For comparison with the reported results of AL-CNN-MRF trained with 321 labeled samples in total, we instead initialize the labeled pool by randomly selecting ten samples per class and query 46 unlabeled samples in each active learning loop. This setting uses 320 labeled samples in total. Although its OA is lower than that of AL-CNN-MRF, our FAAL framework surpasses AL-CNN-MRF in terms of AA.

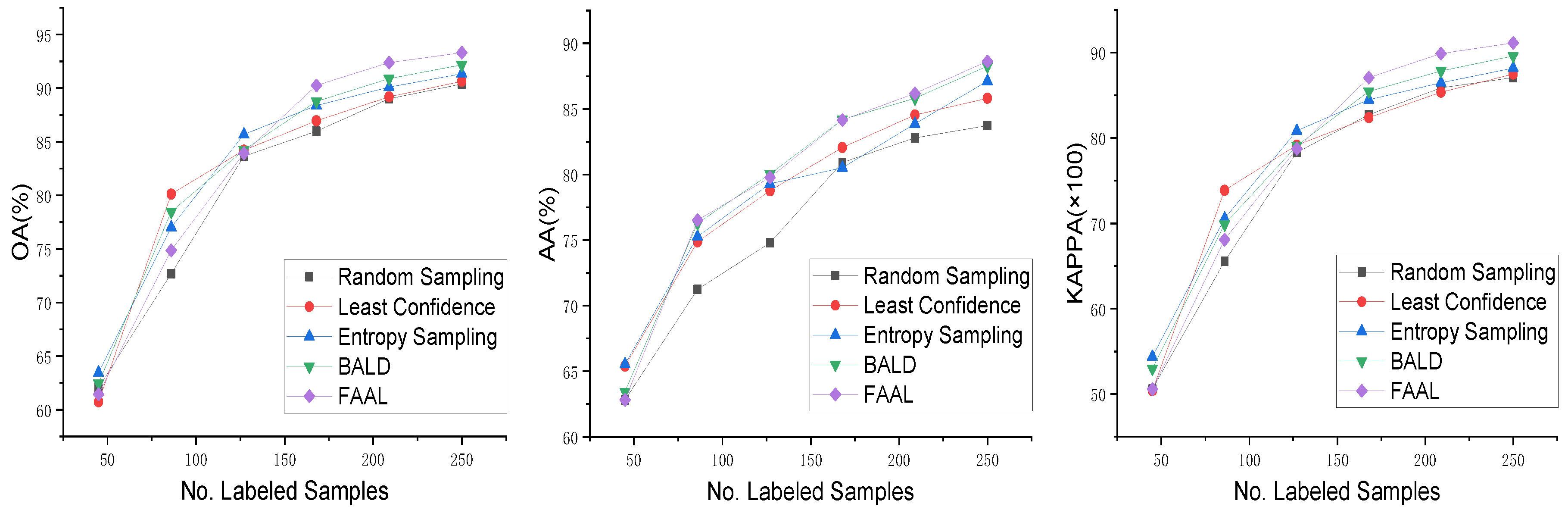

4.5. Study on Acquisition Heuristics

Finally, we compare the adversarially learned acquisition heuristic of our FAAL framework with off-the-shelf acquisition heuristics. We apply random sampling (i.e., querying unlabeled samples randomly within each active learning loop), least confidence [32], entropy sampling [32], and BALD [33] to our employed classifier, one at a time. The numbers of labeled samples follow the default settings.

Table 7 and Table 8 list quantitative comparisons on the two datasets, Indian Pines and Pavia University, respectively. We observe that our FAAL framework outperforms the classifiers equipped with the other acquisition heuristics. An intuitive reason is that our FAAL framework makes active query decisions based on the high-level features instead of the output of the classifier. Adversarial learning with the high-level features enables our FAAL framework to comprehensively capture the feature variability of hyperspectral images.

Figure 4 and Figure 5 illustrate the qualitative results on Indian Pines and Pavia University, respectively. Groundtruth maps are provided first for reference. Classification maps predicted by the classifier with random sampling, least confidence, entropy sampling, BALD, and our adversarially learned acquisition heuristic (i.e., our FAAL framework) are given separately. Overall, the adversarially learned acquisition heuristic of our FAAL framework yields superior visual results.

Figure 6 and Figure 7 compare the classification performance after each active learning loop on Indian Pines and Pavia University, respectively, measured by OA, AA, and KAPPA. Not surprisingly, the curves obtained by the classifier with random sampling are the lowest almost all the time. In the first two active learning loops, the curves obtained with the adversarially learned acquisition heuristic of our FAAL framework are intertwined with those of the other acquisition heuristics. In the remaining three loops, they pull ahead of them.