Uncertainty Analysis of Object-Based Land-Cover Classification Using Sentinel-2 Time-Series Data

Abstract

1. Introduction

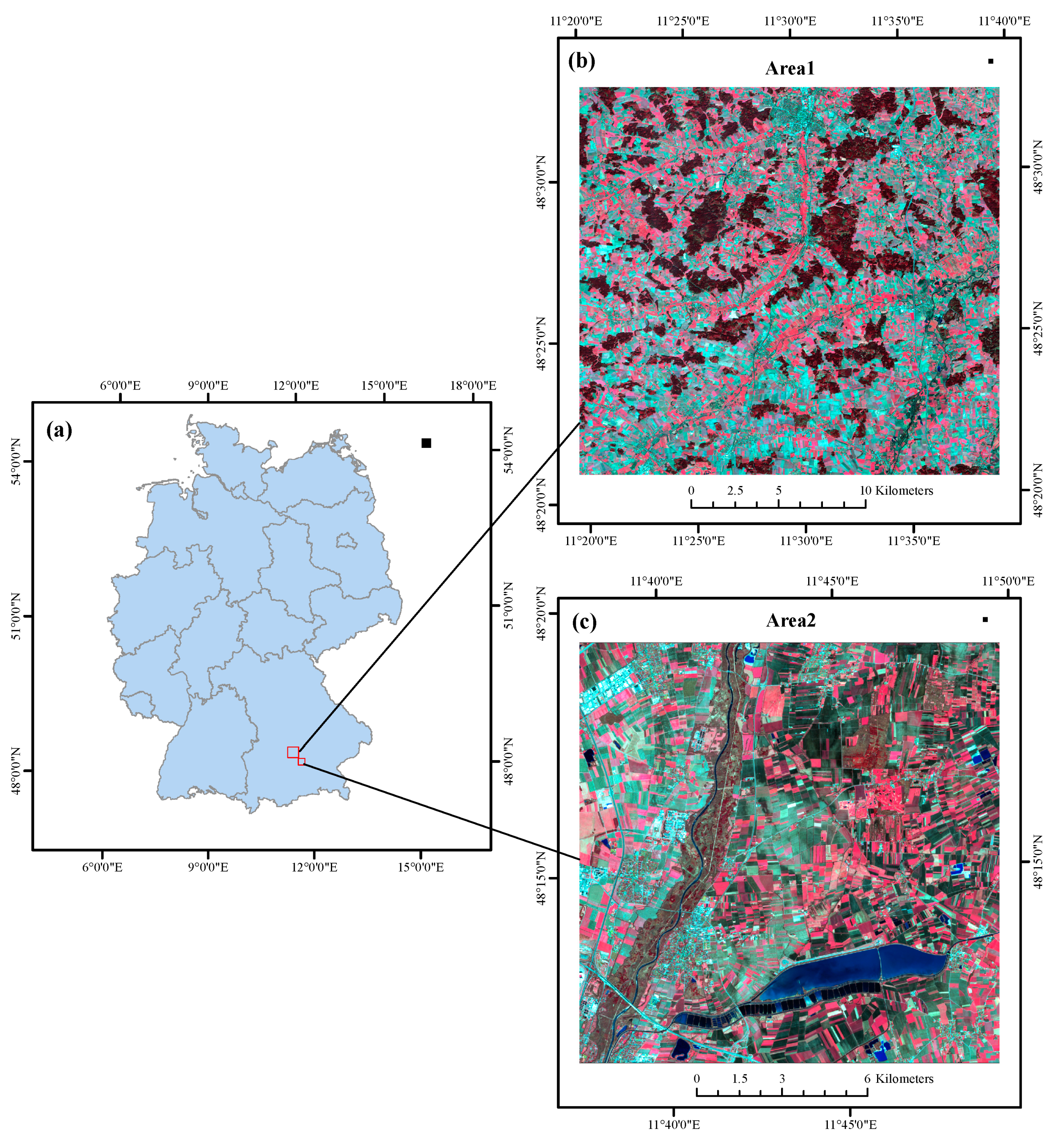

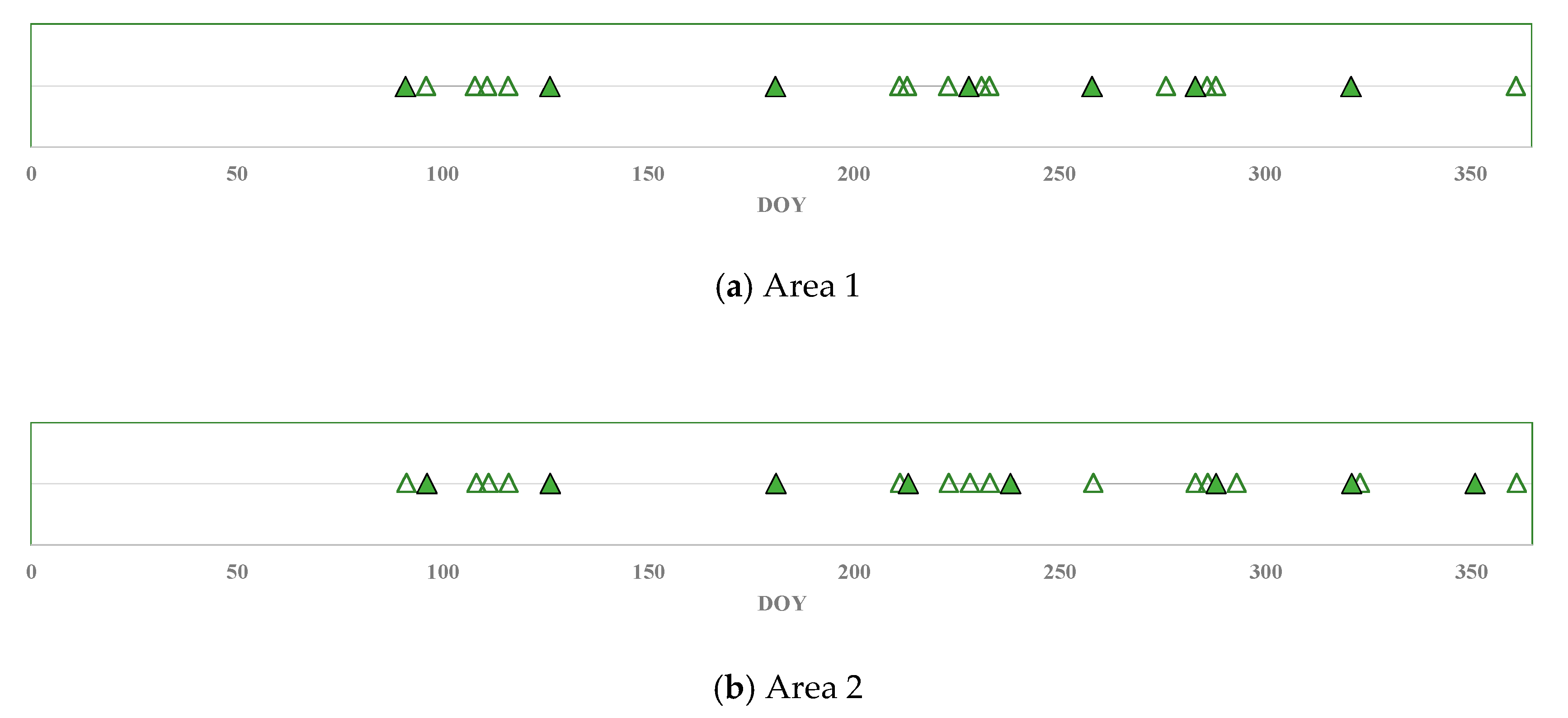

2. Study Area and Dataset

3. Methods

3.1. Segmentation of Multi-Temporal Images

3.2. Training and Validation of Data Collection

3.3. Classification Using Random Forest

3.4. Filtering Feature Subset and Temporal Characteristics Analysis

3.5. Accuracy Evaluation and Statistical Tests

4. Results

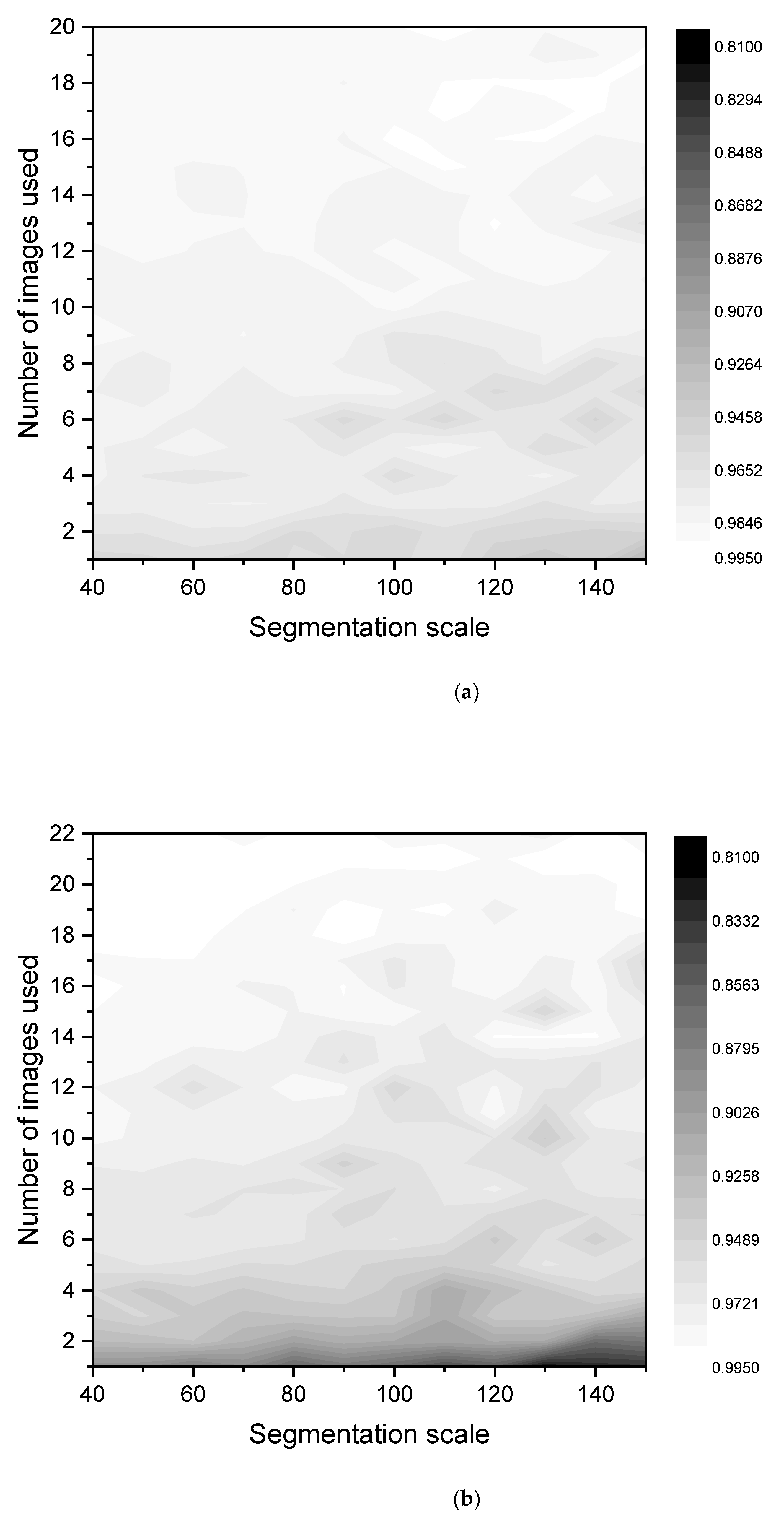

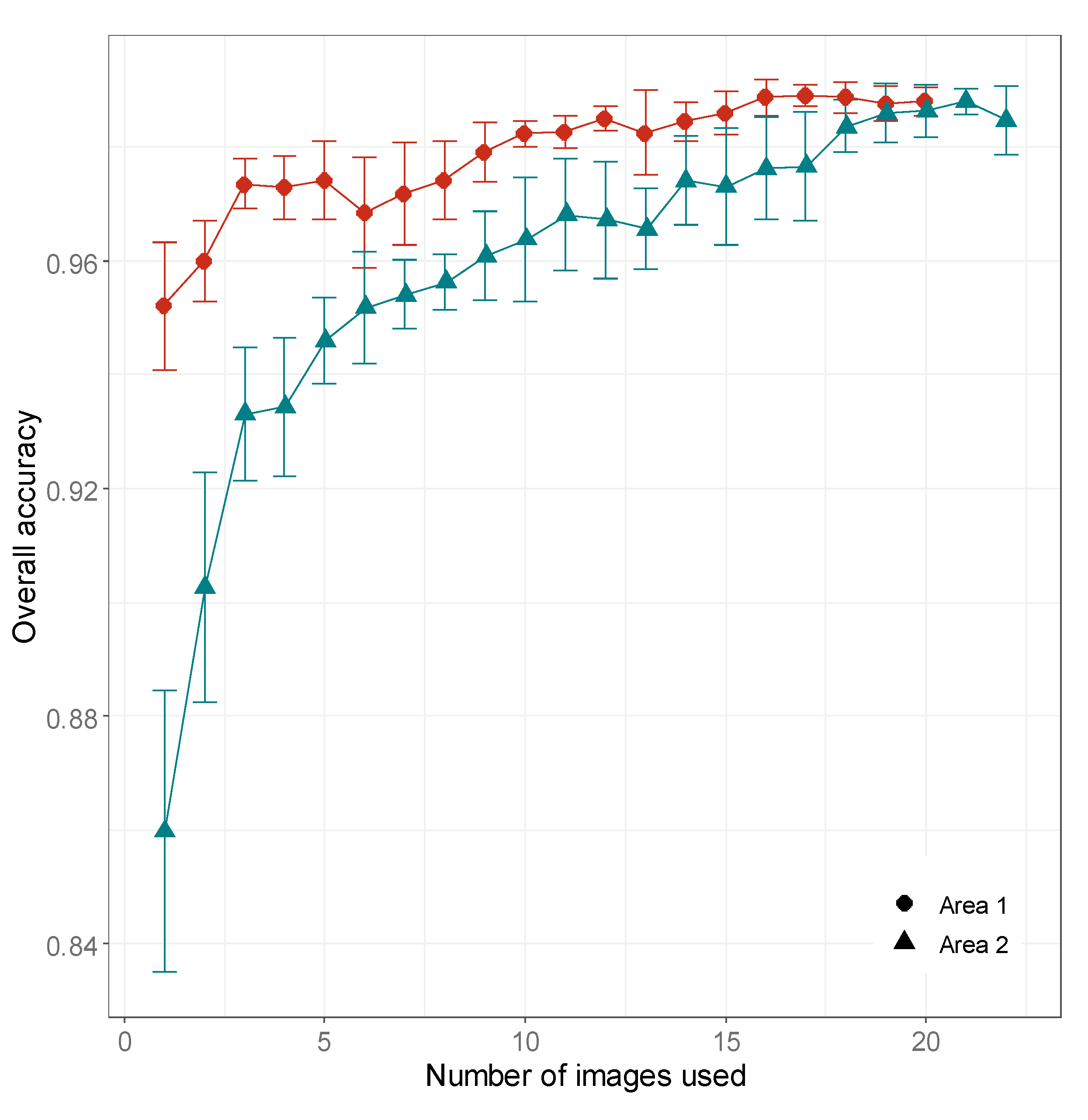

4.1. Influence of Multi-Temporal Images and Segmentation Scale on Overall Accuracy (OA)

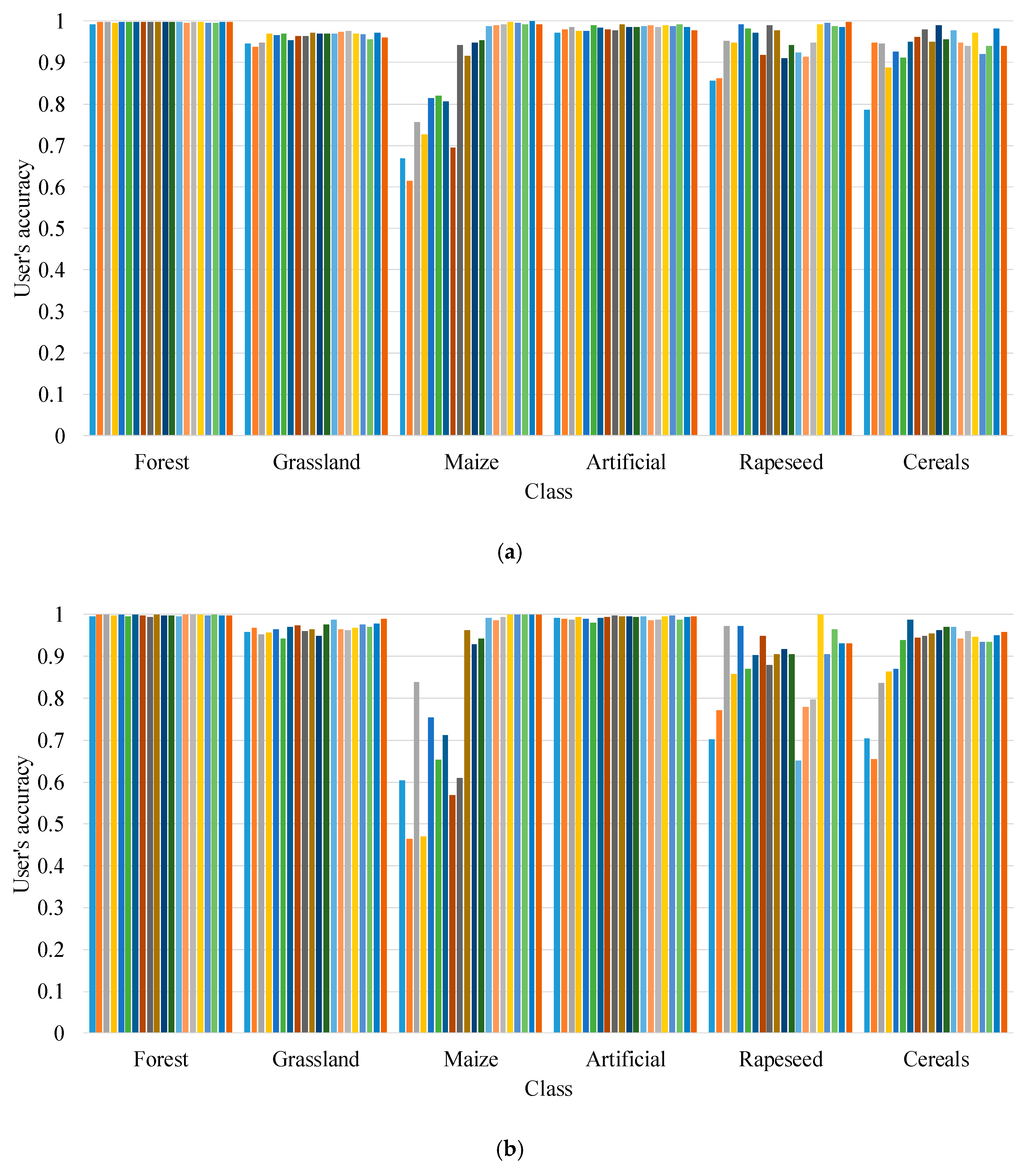

4.2. Effect of Multi-Temporal Images on Category Accuracy

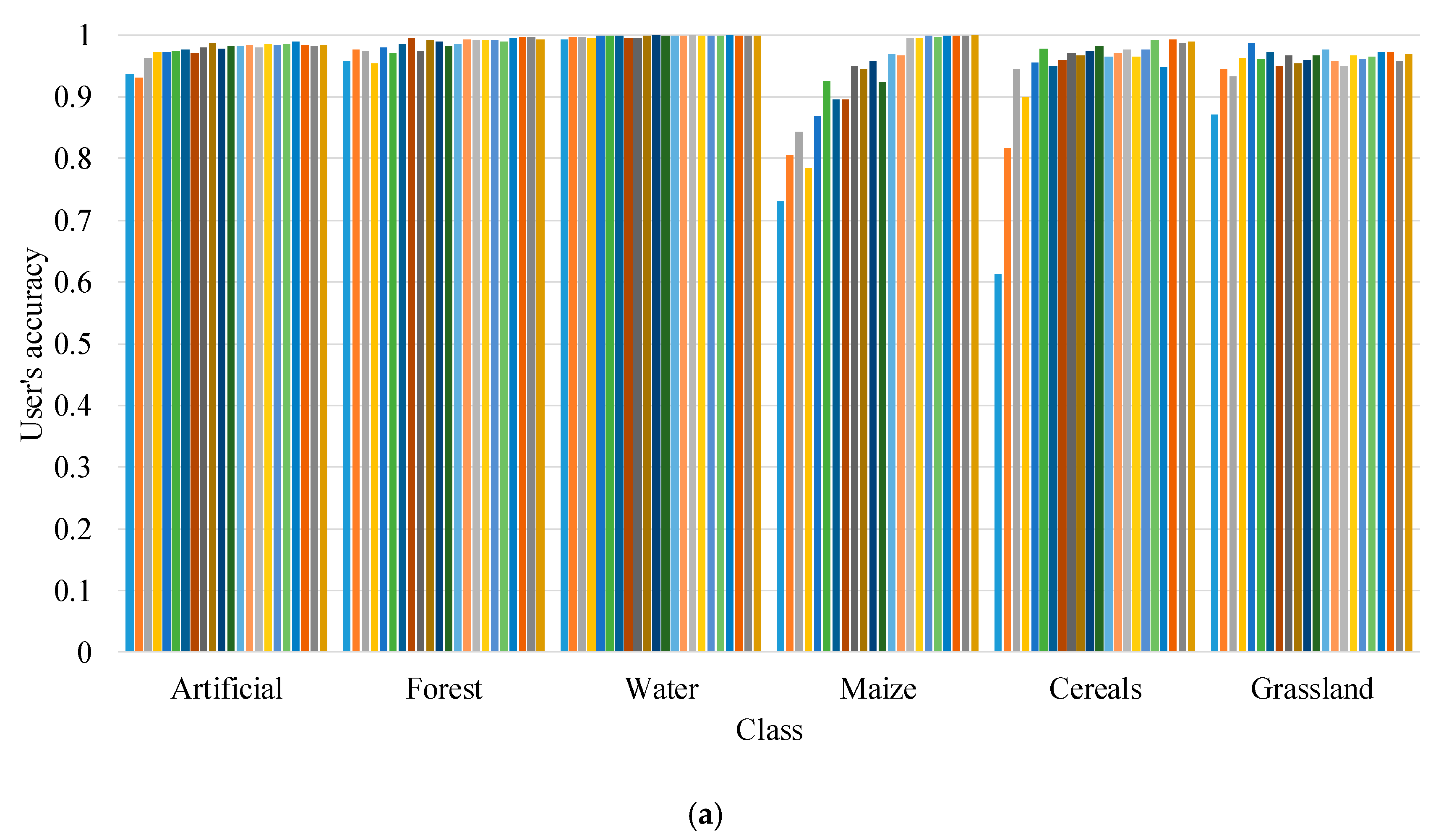

4.3. Effect of Segmentation Scale on Category Accuracy

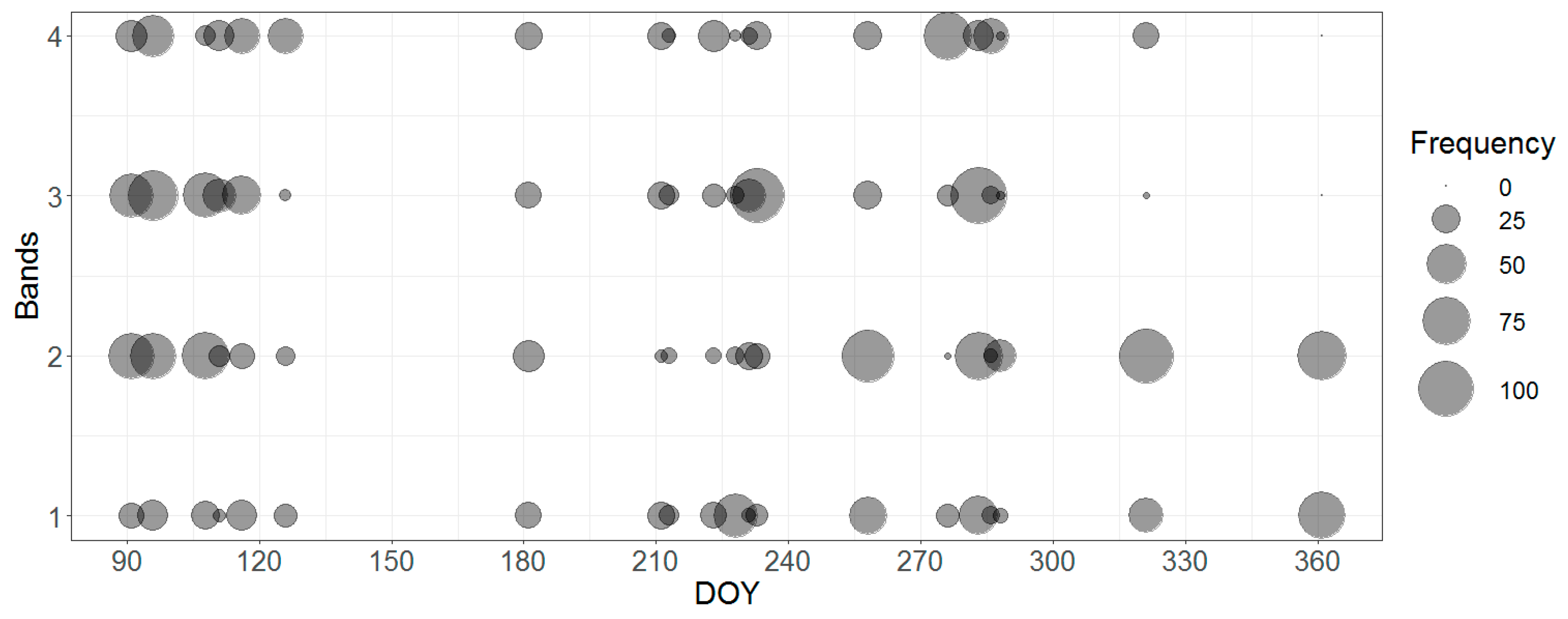

4.4. Feature Selection Response

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Selected by Area 1 | Selected by Area 2 | File Name of Sentinel-2 Image Scenes |
|---|---|---|
| 2 April 2018 | 2 April 2018 | S2A_MSIL2A_20180402T102021_N0207_R065_T32UPU_20180402T155007 |
| 7 April 2018 | 7 April 2018 | S2B_MSIL2A_20180407T102019_N0207_R065_T32UPU_20180407T143030 |
| 19 April 2018 | 19 April 2018 | S2A_MSIL2A_20180419T101031_N0207_R022_T32UPU_20180419T111252 |
| 22 April 2018 | 22 April 2018 | S2A_MSIL2A_20180422T102031_N0207_R065_T32UPU_20180422T141352 |
| 27 April 2018 | 27 April 2018 | S2B_MSIL2A_20180427T102019_N0207_R065_T32UPU_20180427T123359 |
| 7 May 2018 | 7 May 2018 | S2B_MSIL2A_20180507T102019_N0207_R065_T32UPU_20180507T125310 |
| 1 July 2018 | 1 July 2018 | S2A_MSIL2A_20180701T102021_N0208_R065_T32UPU_20180701T141038 |
| 31 July 2018 | 31 July 2018 | S2A_MSIL2A_20180731T102021_N0208_R065_T32UPU_20180731T133841 |
| 2 August 2018 | 2 August 2018 | S2B_MSIL2A_20180802T101019_N0208_R022_T32UPU_20180926T110335 |
| 12 August 2018 | 12 August 2018 | S2B_MSIL2A_20180812T101019_N0208_R022_T32UPU_20180812T153601 |
| 17 August 2018 | 17 August 2018 | S2A_MSIL2A_20180817T101021_N0208_R022_T32UPU_20180817T150139 |
| 20 August 2018 | - | S2A_MSIL2A_20180820T102021_N0208_R065_T32UPU_20180820T161429 |
| 22 August 2018 | 22 August 2018 | S2B_MSIL2A_20180822T101019_N0208_R022_T32UPU_20180822T161243 |
| - | 27 August 2018 | S2A_MSIL2A_20180827T101021_N0208_R022_T32UPU_20180827T152355 |
| 16 September 2018 | 16 September 2018 | S2A_MSIL2A_20180916T101021_N0208_R022_T32UPU_20180916T132415 |
| 4 October 2018 | - | S2B_MSIL2A_20181004T102019_N0208_R065_T32UPU_20181004T151558 |
| 11 October 2018 | 11 October 2018 | S2B_MSIL2A_20181011T101019_N0209_R022_T32UPU_20181011T131546 |
| 14 October 2018 | 14 October 2018 | S2B_MSIL2A_20181014T102019_N0209_R065_T32UPU_20181014T165307 |
| 16 October 2018 | 16 October 2018 | S2A_MSIL2A_20181016T101021_N0209_R022_T32UPU_20181016T131706 |
| - | 21 October 2018 | S2B_MSIL2A_20181021T101039_N0209_R022_T32UPU_20181021T151822 |
| 18 November 2018 | 18 November 2018 | S2A_MSIL2A_20181118T102311_N0210_R065_T32UPU_20181118T120023 |
| - | 20 November 2018 | S2B_MSIL2A_20181120T101319_N0210_R022_T32UPU_20181120T151547 |
| - | 18 December 2018 | S2A_MSIL2A_20181218T102431_N0211_R065_T32UPU_20181218T115057 |
| 28 December 2018 | 28 December 2018 | S2A_MSIL2A_20181228T102431_N0211_R065_T32UPU_20181228T114836 |
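The scene names in the table above follow the compact Sentinel-2 product naming convention (mission, processing level, sensing time, processing baseline, relative orbit, tile, product discriminator). As a minimal sketch, not part of the original workflow, the following Python snippet shows how the sensing date, relative orbit, and tile could be read back from such a name. The helper name `parse_s2_name` and the regular expression are illustrative assumptions.

```python
import re
from datetime import datetime

# Illustrative pattern for compact Sentinel-2 product names (an assumption of
# this sketch; it only covers the L1C/L2A names of the form listed in the table).
S2_NAME = re.compile(
    r"^(?P<mission>S2[AB])_(?P<level>MSIL1C|MSIL2A)_"
    r"(?P<sensing>\d{8}T\d{6})_N(?P<baseline>\d{4})_"
    r"R(?P<orbit>\d{3})_T(?P<tile>[0-9A-Z]{5})_"
    r"(?P<discriminator>\d{8}T\d{6})$"
)


def parse_s2_name(name):
    """Split a Sentinel-2 product name into its named components."""
    match = S2_NAME.match(name)
    if match is None:
        raise ValueError(f"not a recognised Sentinel-2 product name: {name}")
    info = match.groupdict()
    # Convert the sensing timestamp to a datetime for easy comparison.
    info["sensing"] = datetime.strptime(info["sensing"], "%Y%m%dT%H%M%S")
    return info


# First scene from the table above.
scene = parse_s2_name(
    "S2A_MSIL2A_20180402T102021_N0207_R065_T32UPU_20180402T155007"
)
print(scene["sensing"].date(), scene["orbit"], scene["tile"])
# prints: 2018-04-02 065 32UPU
```

Parsing the names this way gives a quick check that each listed date matches the sensing time embedded in its file name, and that all scenes belong to tile 32UPU.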


| Defined Class (Code) | CLC Description | LUCAS Description | Study Area 1 (ha) | Study Area 2 (ha) |
|---|---|---|---|---|
| Maize (11) | 211-Non-irrigated arable land | B16-Maize | 1.1728 | 501.3447 |
| Rapeseed (12) | 211-Non-irrigated arable land | B32-Rape and turnip rape | 0.6796 | - |
| Cereals (13) | 211-Non-irrigated arable land | B11-Common wheat; B13-Barley; B15-Oats | 1.1603 | 290.0912 |
| Forest (2) | 312-Coniferous forest; 313-Mixed forest; 311-Broadleaved forest | C21-Coniferous woodland; C31, C32-Mixed woodland; C10-Broadleaved woodland | 17.2707 | 762.3008 |
| Artificial land (3) | 111-Continuous urban fabric; 112-Discontinuous urban fabric; 121-Industrial or commercial units | A22-Artificial non-built up areas; A11, A12-Roofed built-up areas | 6.1375 | 738.8659 |
| Grassland (4) | 231-Pastures | E20-Grassland without tree/shrub cover; E10-Grassland with sparse tree/shrub cover | 2.4517 | 236.0831 |
| Water areas (5) | 512-Water bodies | G10-Inland water bodies | 0.2653 | 726.3547 |

| Scale | 50 | 60 | 70 | 80 | 90 | 100 | 110 | 120 | 130 | 140 | 150 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| p value | 0.012 | 0.393 | 0.449 | 0.158 | 0.870 | 0.263 | 0.454 | 0.211 | 0.672 | 0.175 | 0.679 |
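As a hedged illustration of how the p values above might be read, the short Python sketch below flags the segmentation scales whose p value falls under a significance level. The 0.05 threshold is an assumption of this sketch, and this section names neither the test behind the values nor the level the authors applied.

```python
# Segmentation scales and p values copied from the table above.
scales = list(range(50, 151, 10))
p_values = [0.012, 0.393, 0.449, 0.158, 0.870, 0.263,
            0.454, 0.211, 0.672, 0.175, 0.679]

# Assumed significance level; not stated in this section.
ALPHA = 0.05

# Keep only the scales whose p value is below the assumed threshold.
significant = [s for s, p in zip(scales, p_values) if p < ALPHA]
print(significant)
# prints: [50]
```

Under that assumed threshold, only scale 50 would count as a significant difference. The other ten scales would not.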

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, L.; Schmitt, M.; Zhu, X. Uncertainty Analysis of Object-Based Land-Cover Classification Using Sentinel-2 Time-Series Data. Remote Sens. 2020, 12, 3798. https://doi.org/10.3390/rs12223798
