Correcting Image Refraction: Towards Accurate Aerial Image-Based Bathymetry Mapping in Shallow Waters

Abstract

1. Introduction

1.1. Related Work and Contribution

2. Datasets

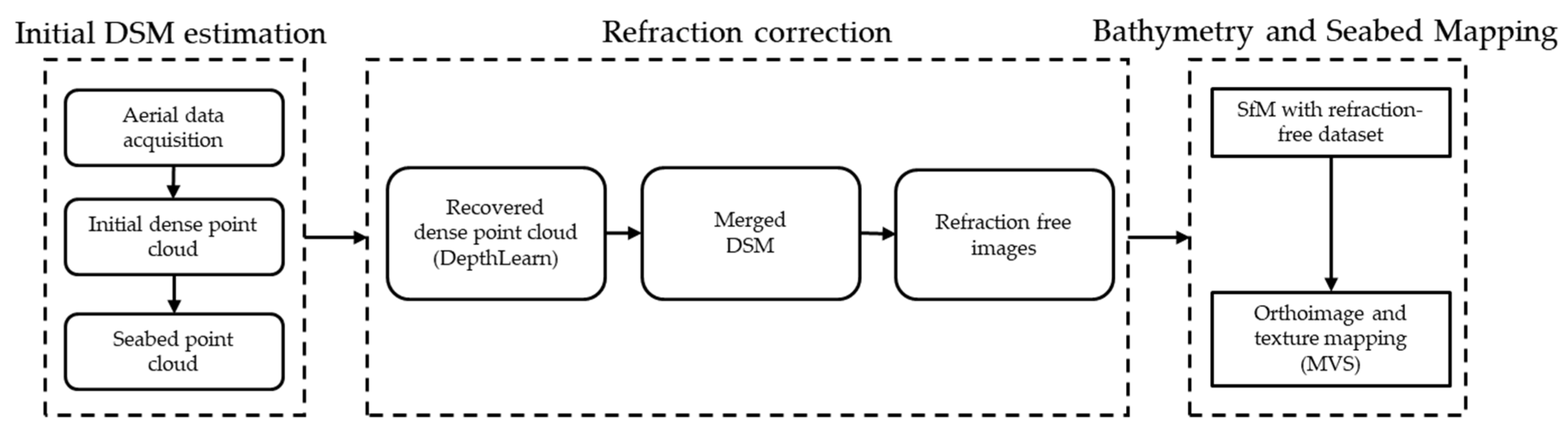

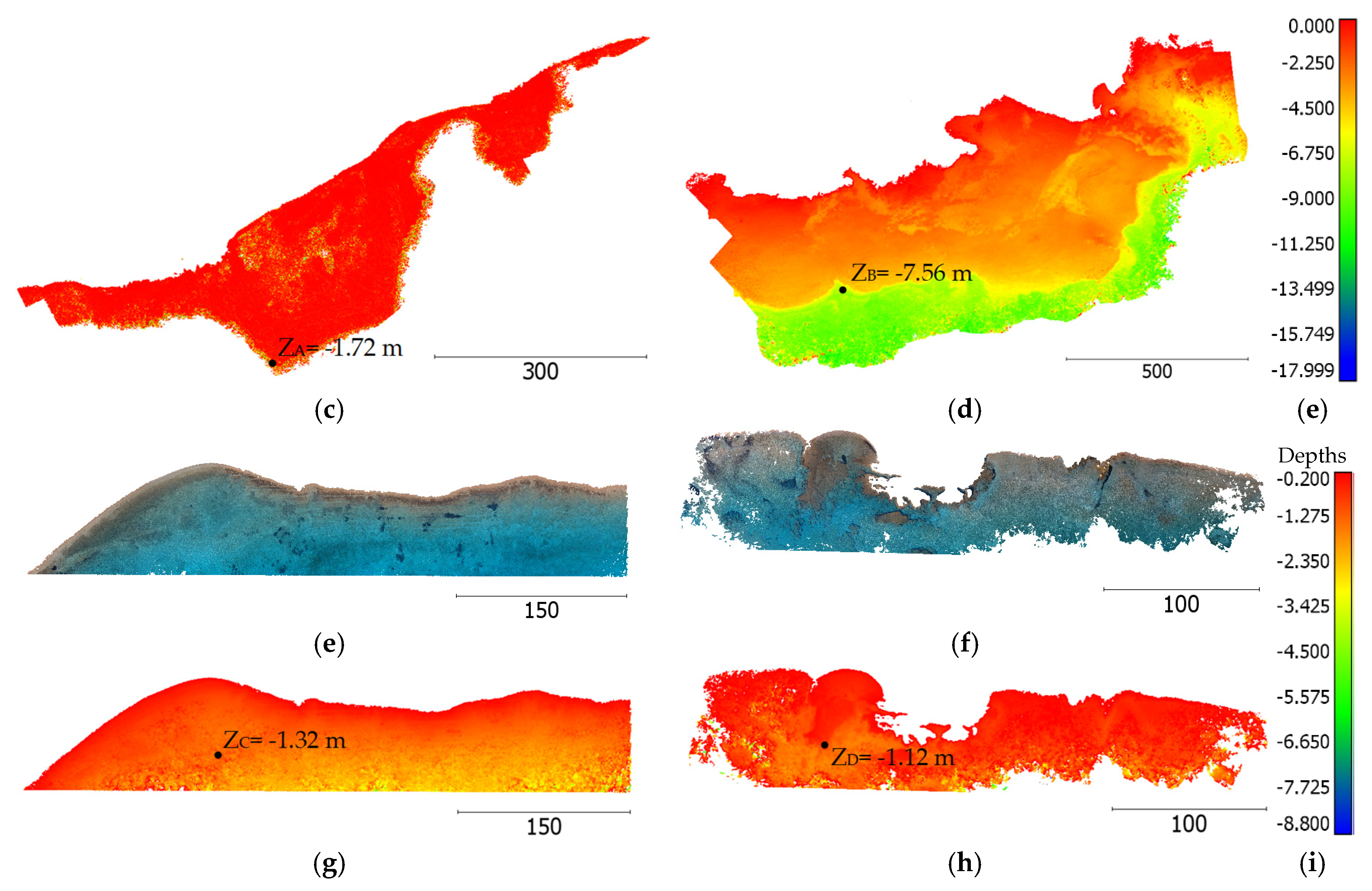

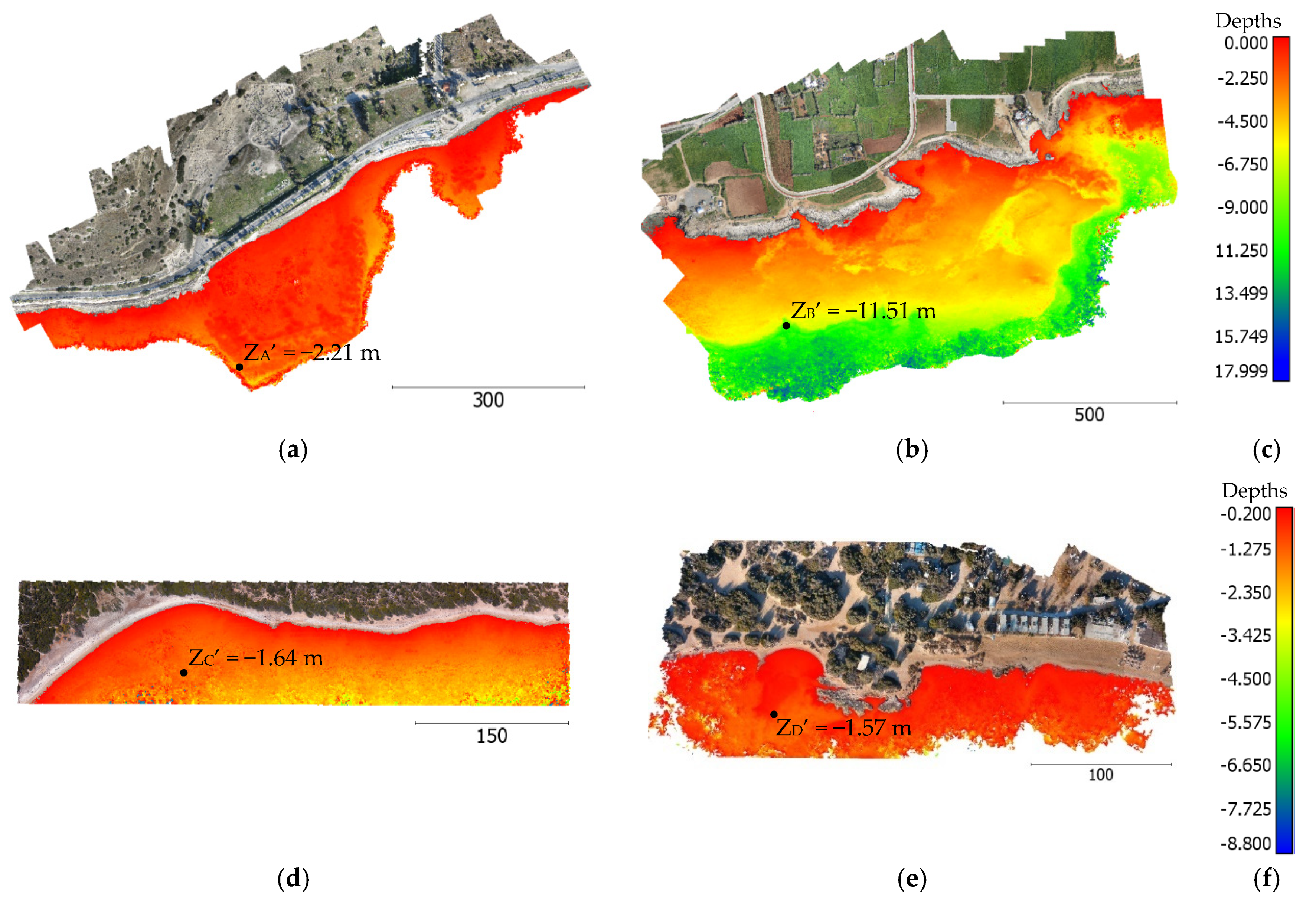

2.1. Agia Napa Test Area

2.2. Amathounta Test Area

2.3. Cyclades-1 Test Area

2.4. Cyclades-2 Test Area

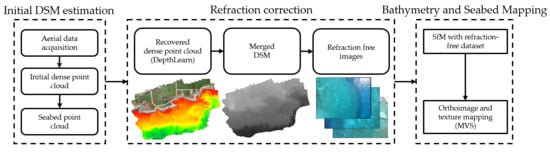

3. Proposed Methodology

3.1. Initial DSM Estimation

3.1.1. Initial Dense Point Cloud

3.1.2. Seabed Point Cloud Extraction

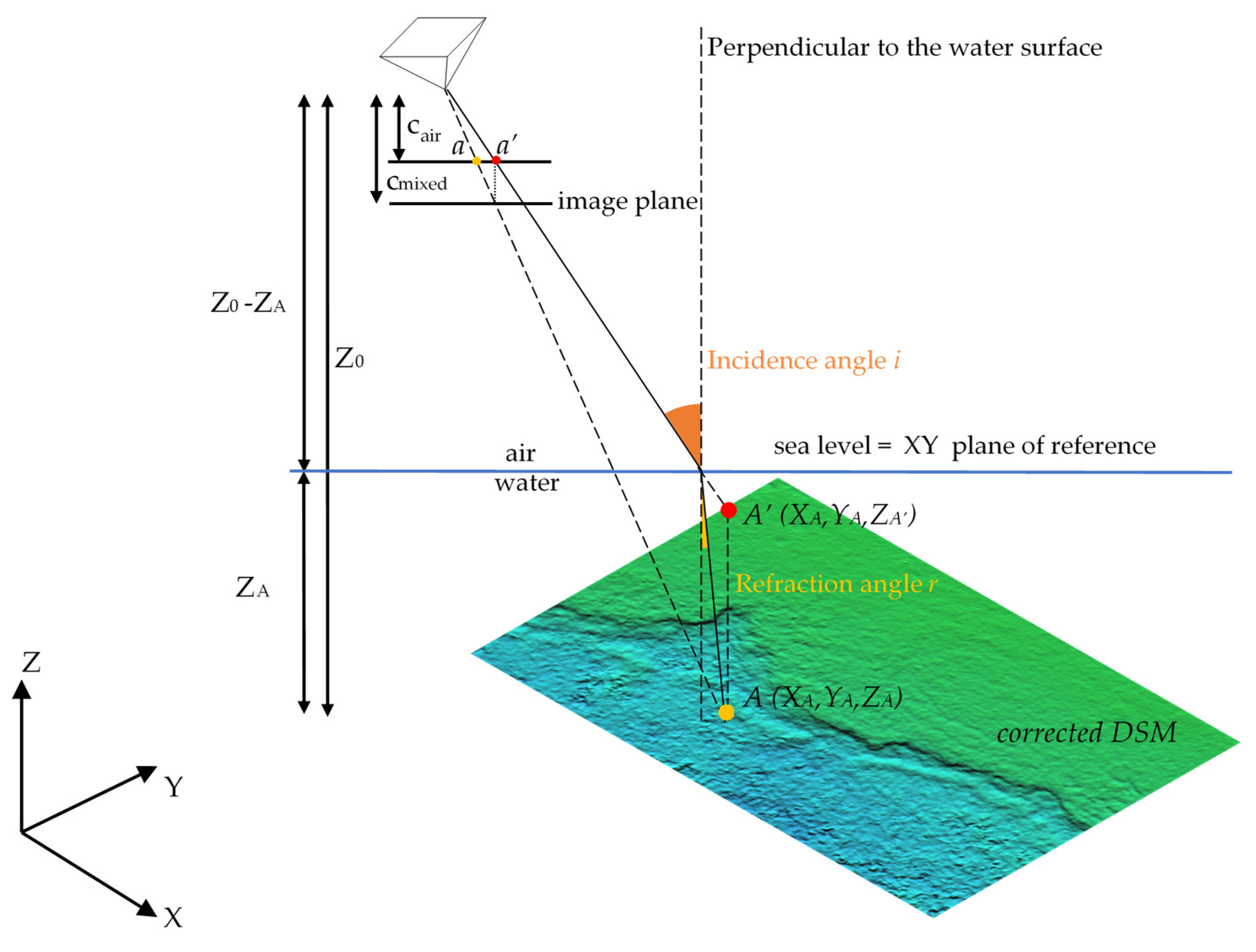

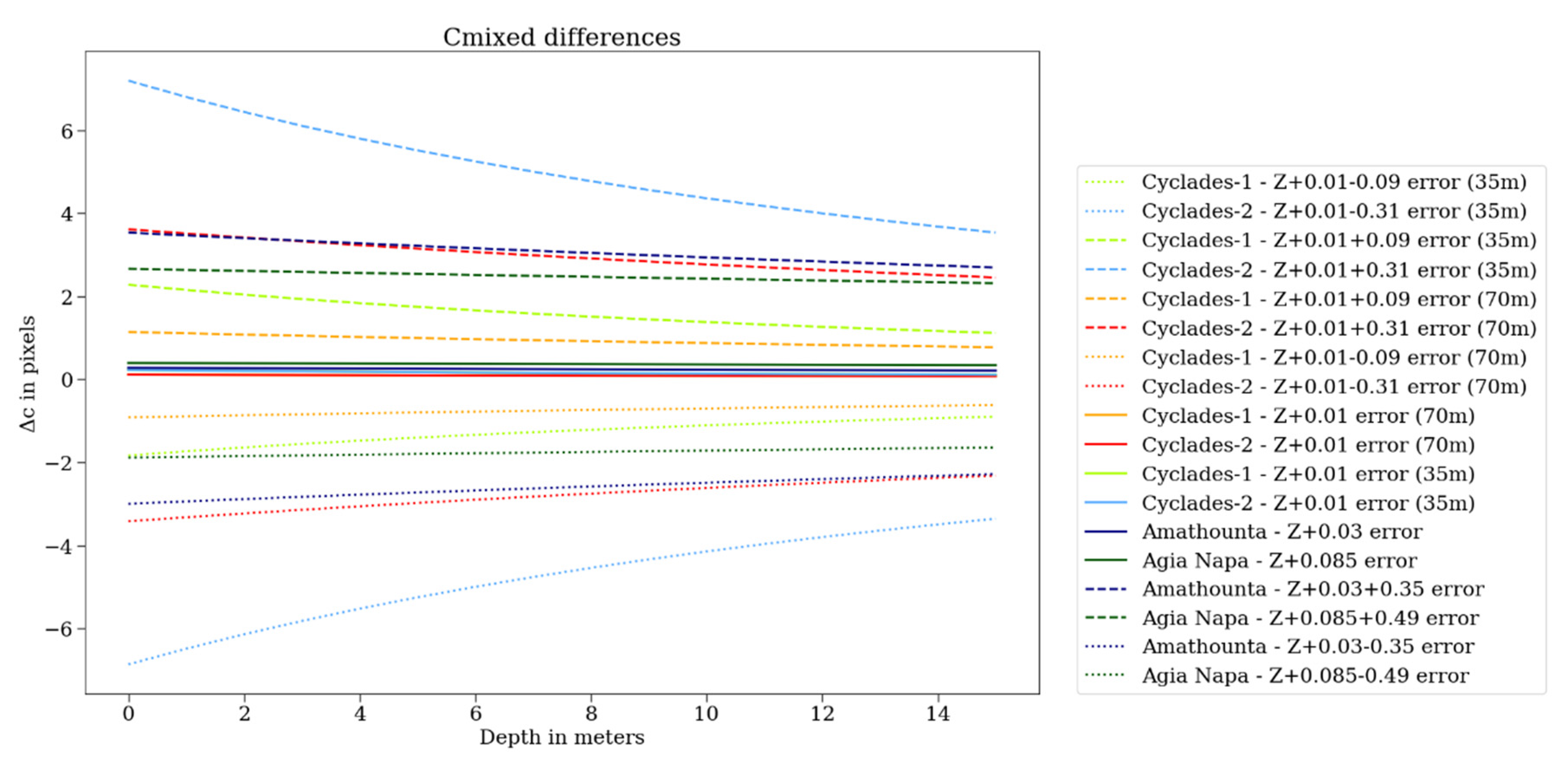

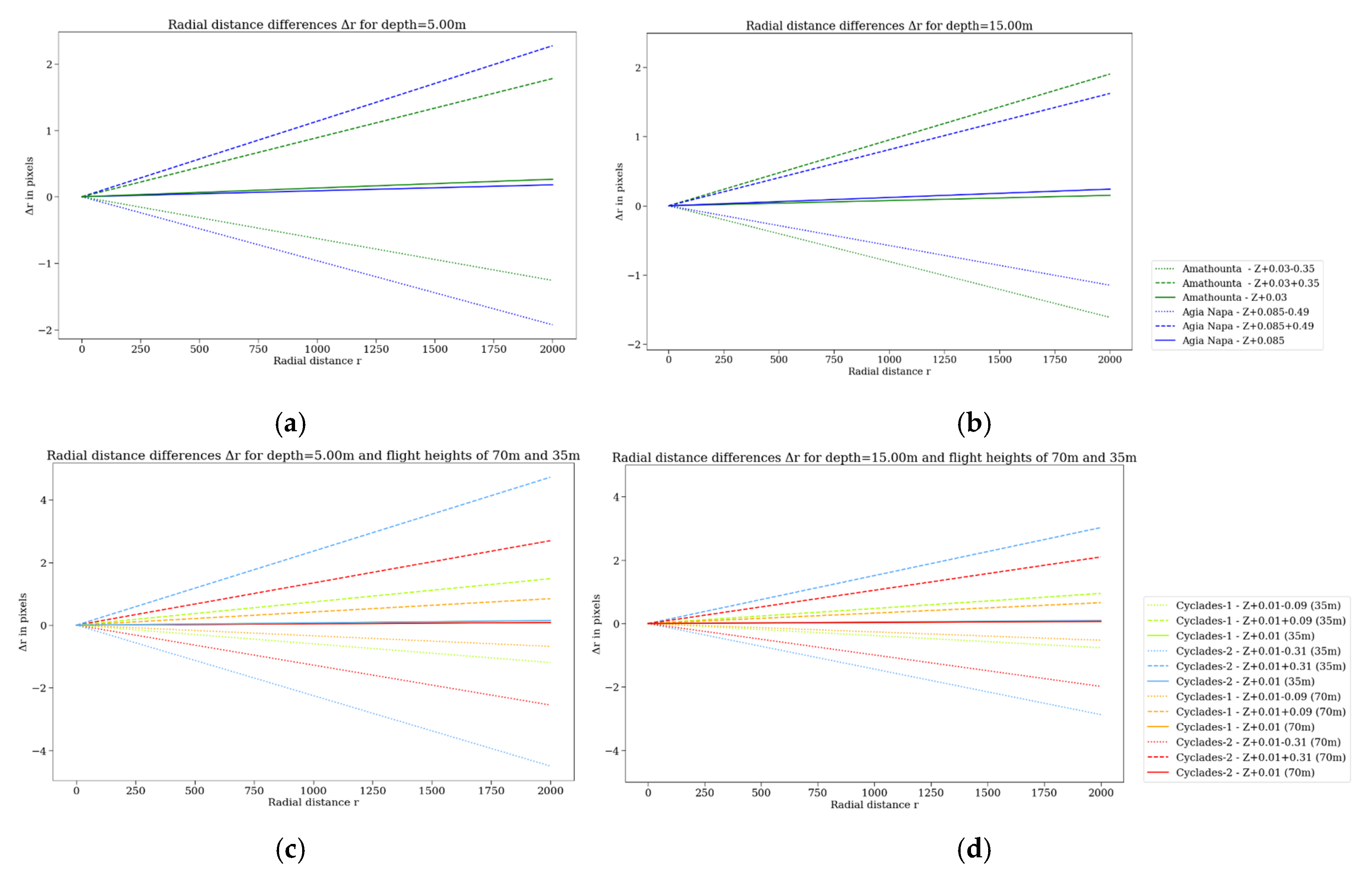

3.2. Refraction Correction

3.2.1. SVR-Based Refraction Correction on the Dense Point Clouds

3.2.2. Merged DSM Generation Using the Corrected Dense Point Clouds

3.2.3. Refraction Correction in the Image Space

3.3. Bathymetry and Seabed Mapping

SfM with Refraction-Free Dataset to Generate Orthoimages and Textured 3D Models

4. Experimental Results and Validation

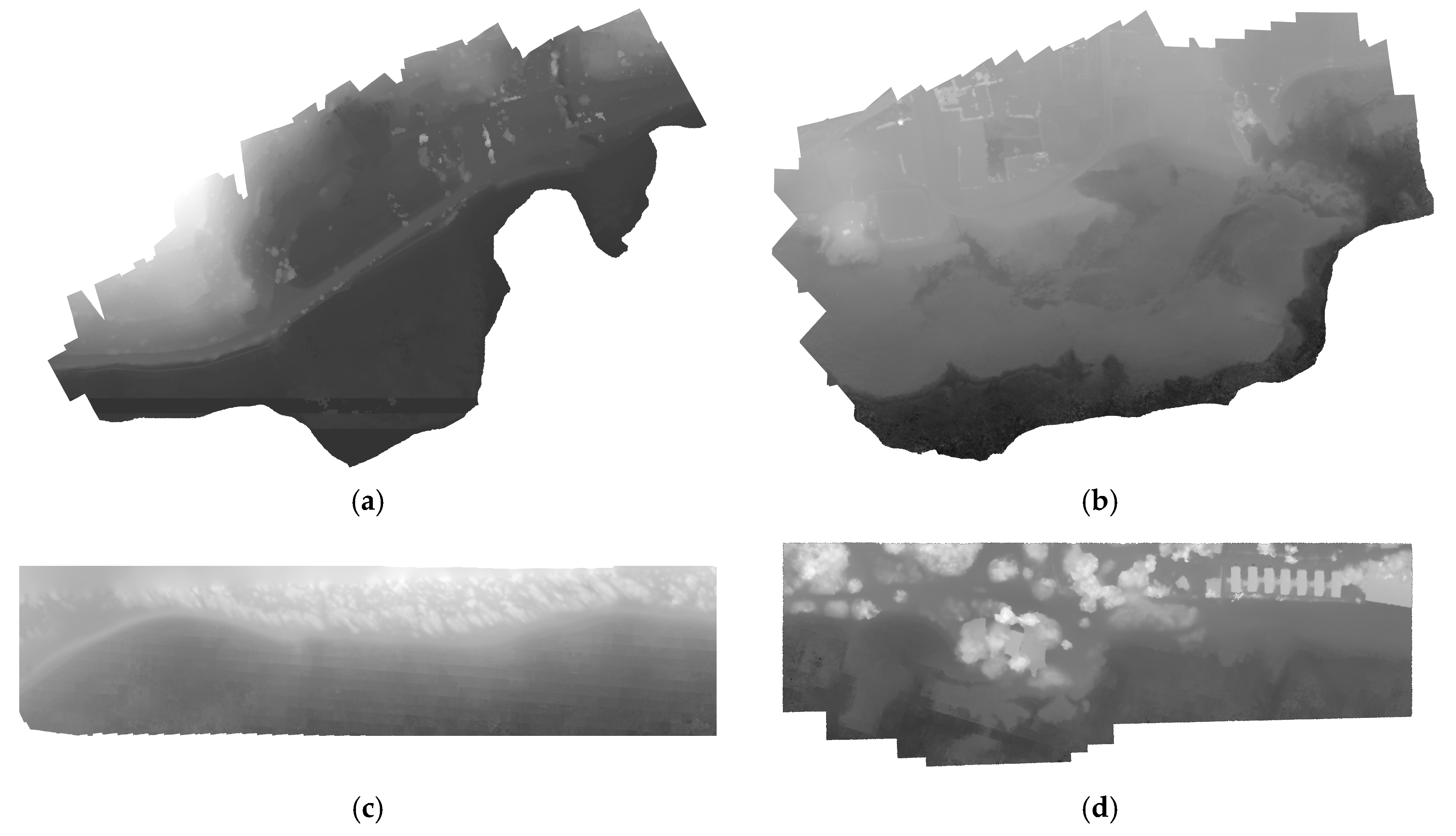

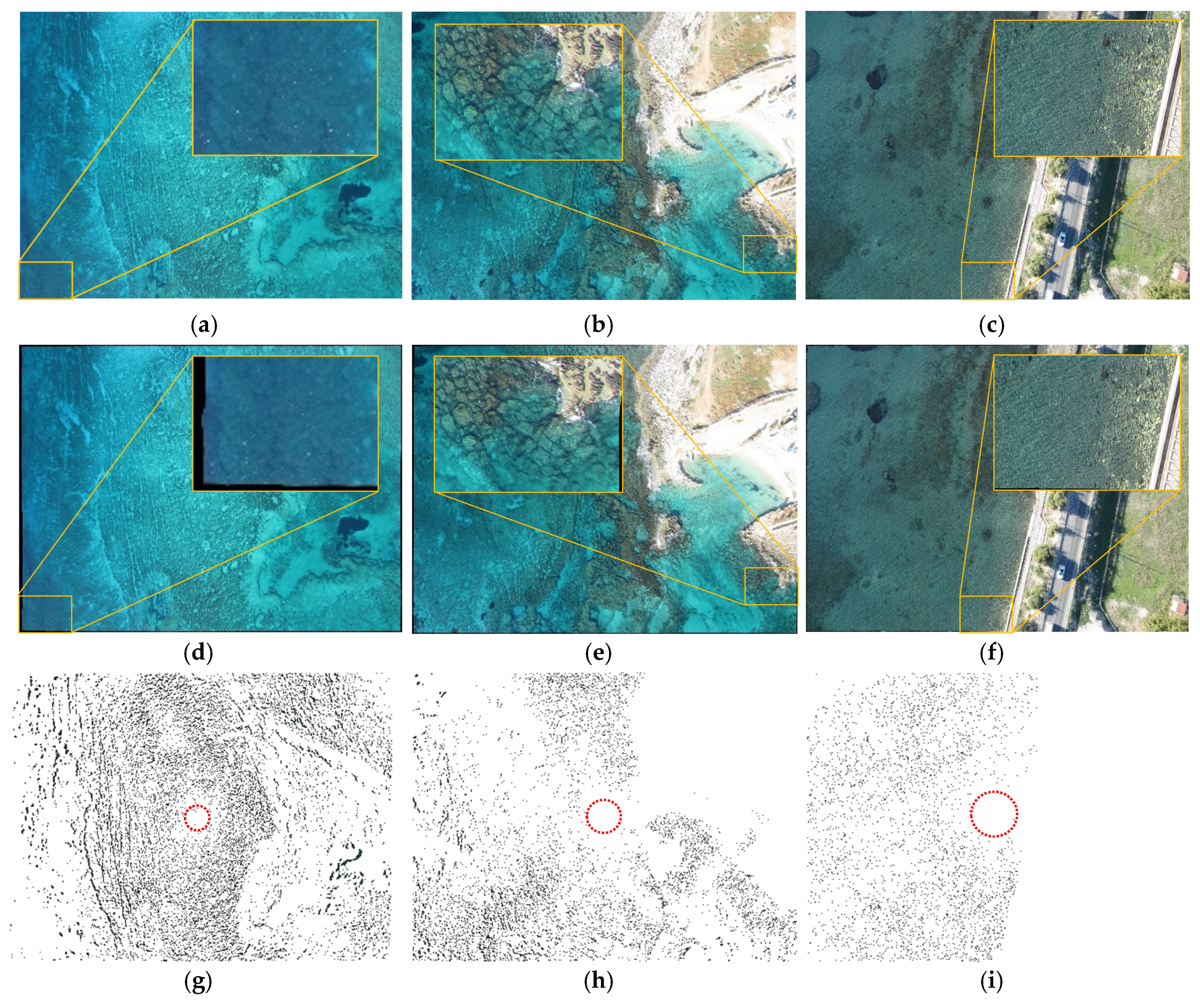

4.1. Refraction-Free Images

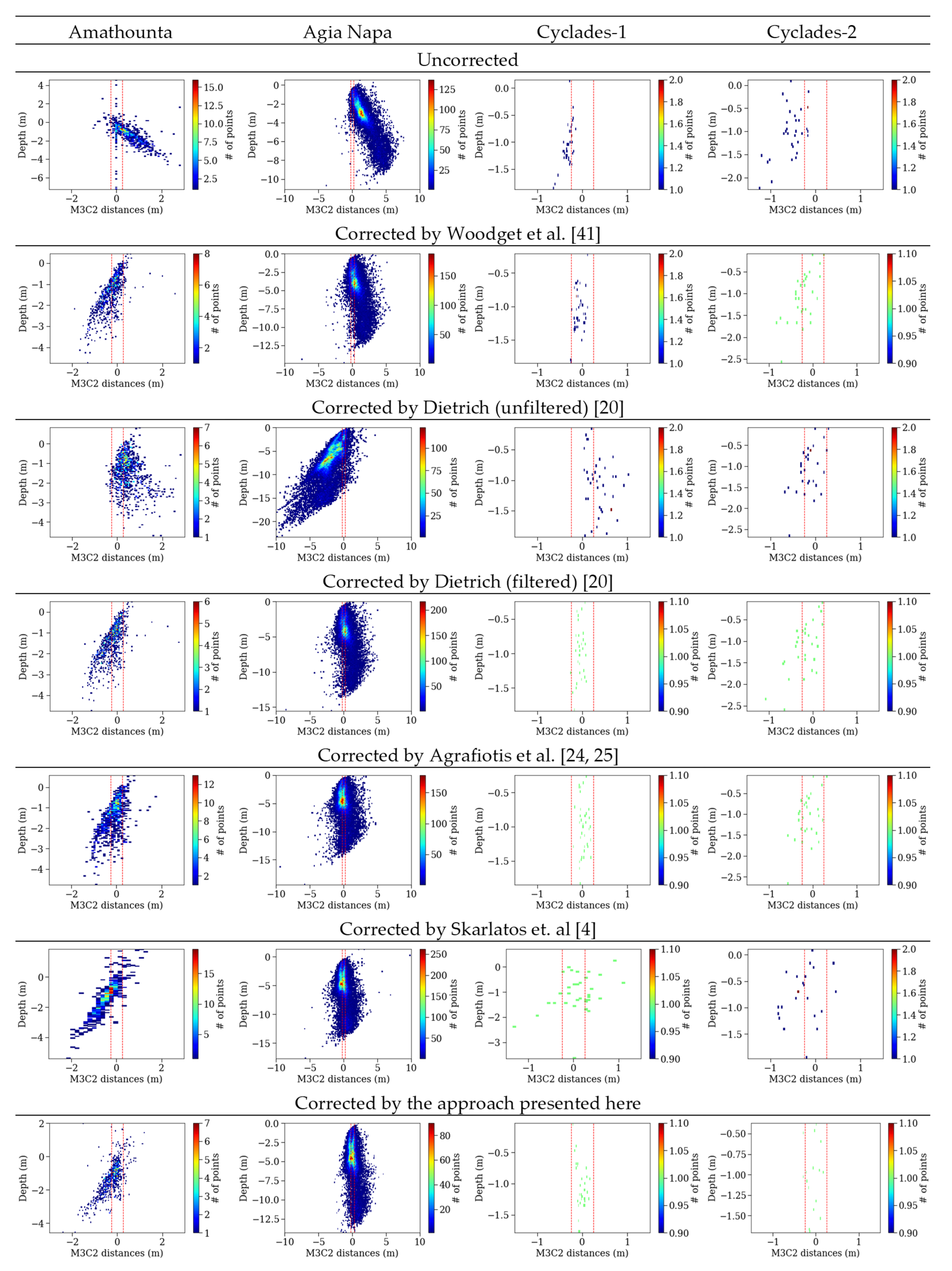

4.2. Assessing Quantitatively the Improvements on the SfM Results

4.2.1. Ground Truth Data

4.2.2. Qualitative and Quantitative Assessment on the Produced Sparse Point Clouds

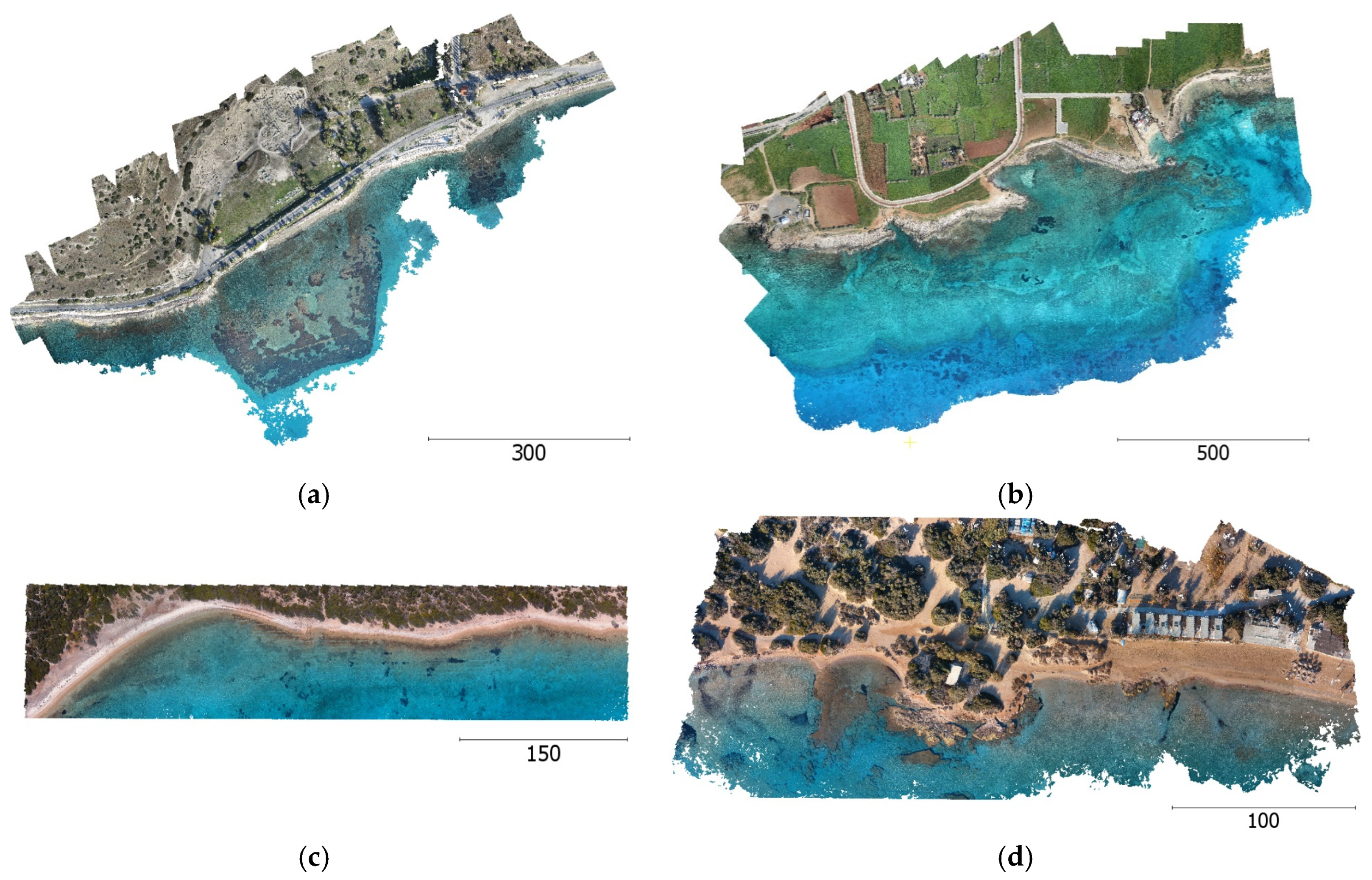

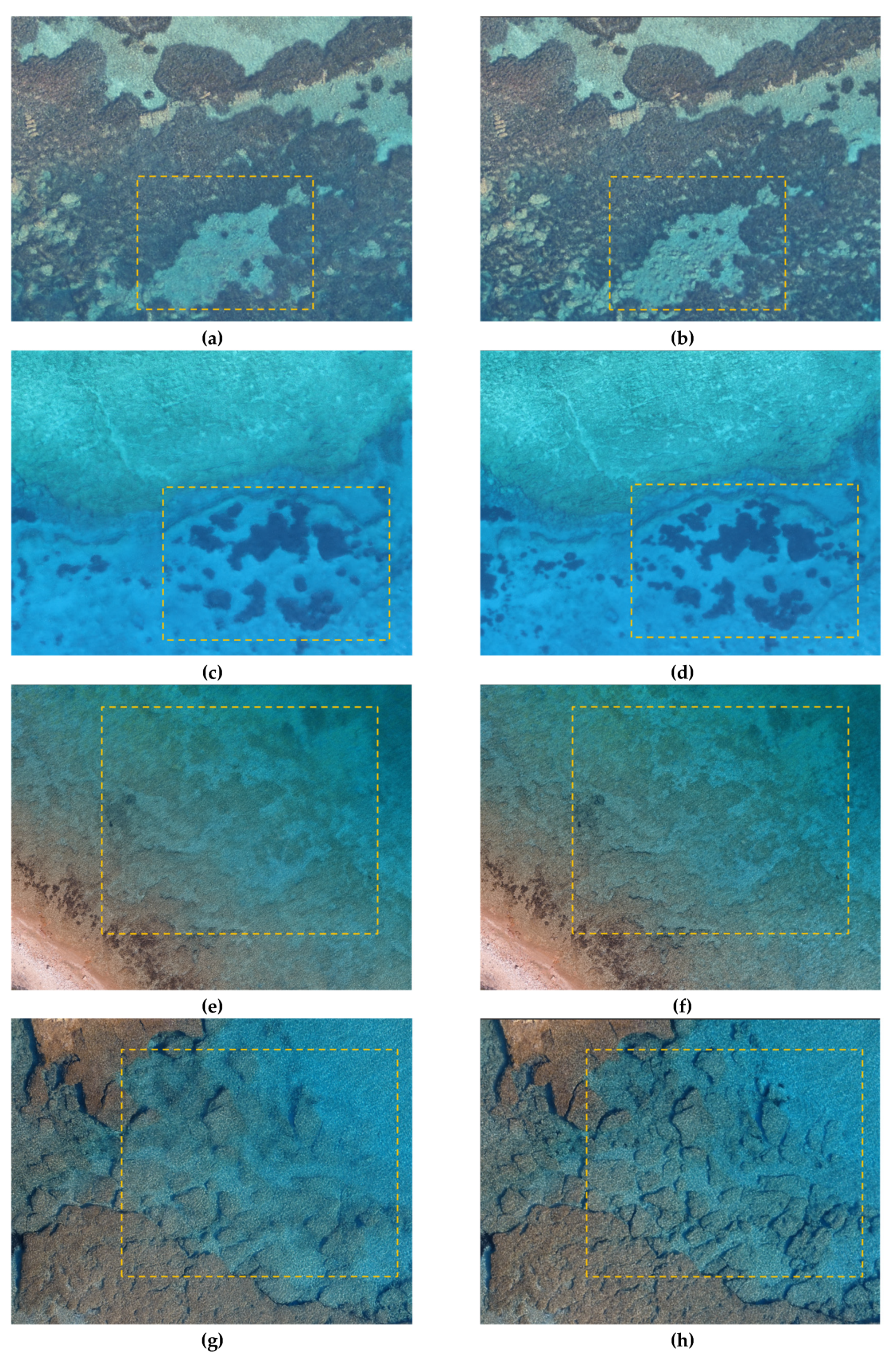

4.3. Assessing Qualitatively the Improvements on the Textured Seabed Models

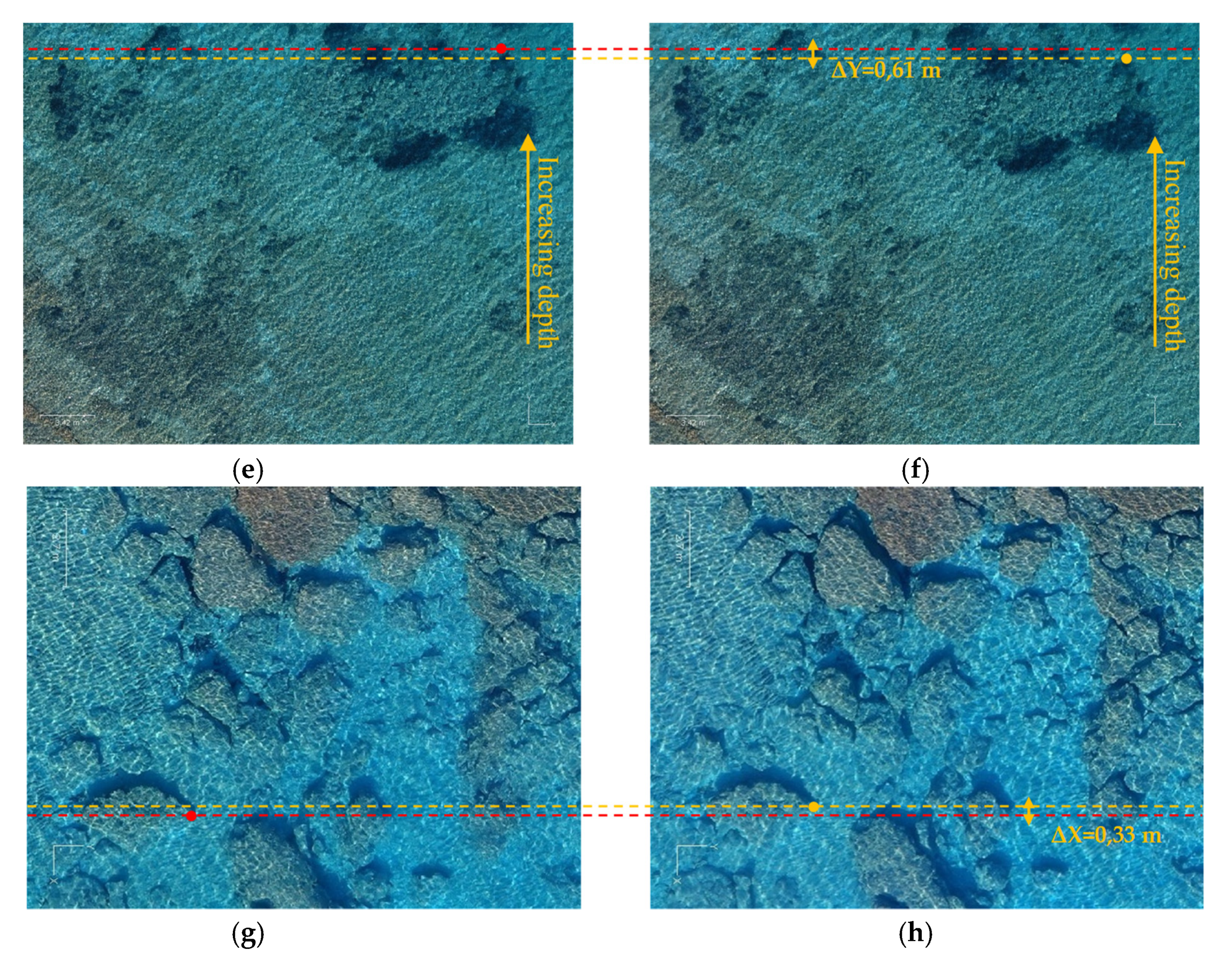

4.4. Assessing Quantitatively the Improvements on the Orthoimages

4.5. Error Propagation within the Sequential Steps of the Proposed Approach

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Agrafiotis, P.; Skarlatos, D.; Forbes, T.; Poullis, C.; Skamantzari, M.; Georgopoulos, A. Underwater Photogrammetry in Very Shallow Waters: Main Challenges and Caustics Effect Removal. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 15–22.

- Karara, H.M. Non-Topographic Photogrammetry, 2nd ed.; American Society for Photogrammetry and Remote Sensing: Falls Church, VA, USA, 1989.

- Kasvi, E.; Salmela, J.; Lotsari, E.; Kumpula, T.; Lane, S.N. Comparison of remote sensing based approaches for mapping bathymetry of shallow, clear water rivers. Geomorphology 2019, 333, 180–197.

- Skarlatos, D.; Agrafiotis, P. A Novel Iterative Water Refraction Correction Algorithm for Use in Structure from Motion Photogrammetric Pipeline. J. Mar. Sci. Eng. 2018, 6, 77.

- Green, E.; Mumby, P.; Edwards, A.; Clark, C. Remote Sensing: Handbook for Tropical Coastal Management; United Nations Educational, Scientific and Cultural Organization (UNESCO): Paris, France, 2000.

- Georgopoulos, A.; Agrafiotis, P. Documentation of a Submerged Monument Using Improved Two Media Techniques. In Proceedings of the 2012 18th International Conference on Virtual Systems and Multimedia, Milan, Italy, 2–5 September 2012; pp. 173–180.

- Butler, J.B.; Lane, S.N.; Chandler, J.H.; Porfiri, E. Through-water close range digital photogrammetry in flume and field environments. Photogramm. Rec. 2002, 17, 419–439.

- Tewinkel, G.C. Water depths from aerial photographs. Photogramm. Eng. 1963, 29, 1037–1042.

- Shmutter, B.; Bonfiglioli, L. Orientation problem in two-medium photogrammetry. Photogramm. Eng. 1967, 33, 1421–1428.

- Maas, H.-G. On the Accuracy Potential in Underwater/Multimedia Photogrammetry. Sensors 2015, 15, 18140–18152.

- Wang, Z. Principles of Photogrammetry (with Remote Sensing); Publishing House of Surveying and Mapping: Beijing, China, 1990.

- Shan, J. Relative orientation for two-media photogrammetry. Photogramm. Rec. 1994, 14, 993–999.

- Fryer, J.G. Photogrammetry through shallow waters. Aust. J. Geod. Photogramm. Surv. 1983, 38, 25–38.

- Elfick, M.H.; Fryer, J.G. Mapping in shallow water. Int. Arch. Photogramm. Remote Sens. 1984, 25, 240–247.

- Ferreira, R.; Costeira, J.P.; Silvestre, C.; Sousa, I.; Santos, J.A. Using Stereo Image Reconstruction to Survey Scale Models of Rubble-Mound Structures. In Proceedings of the First International Conference on the Application of Physical Modelling to Port and Coastal Protection, Porto, Portugal, 8–10 May 2006.

- Mulsow, C. A flexible multi-media bundle approach. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 472–477.

- Wolff, K.; Förstner, W. Exploiting the multi view geometry for automatic surfaces reconstruction using feature based matching in multi media photogrammetry. IAPRS 2000, 33, 900–907.

- Ke, X.; Sutton, M.A.; Lessner, S.M.; Yost, M. Robust stereo vision and calibration methodology for accurate three-dimensional digital image correlation measurements on submerged objects. J. Strain Anal. Eng. Des. 2008, 43, 689–704.

- Byrne, P.M.; Honey, F.R. Air Survey and Satellite Imagery Tools for Shallow Water Bathymetry. In Proceedings of the 20th Australian Survey Congress, Darwin, Australia, 20 May 1977; pp. 103–119.

- Dietrich, J.T. Bathymetric Structure-from-Motion: Extracting shallow stream bathymetry from multi-view stereo photogrammetry. Earth Surf. Process. Landf. 2017, 42, 355–364.

- Murase, T.; Tanaka, M.; Tani, T.; Miyashita, Y.; Ohkawa, N.; Ishiguro, S.; Suzuki, Y.; Kayanne, H.; Yamano, H. A photogrammetric correction procedure for light refraction effects at a two-medium boundary. Photogramm. Eng. Remote Sens. 2008, 74, 1129–1136.

- Mandlburger, G. Through-Water Dense Image Matching for Shallow Water Bathymetry. Photogramm. Eng. Remote Sens. 2019, 85, 445–455.

- Agrafiotis, P.; Georgopoulos, A. Camera Constant In The Case Of Two Media Photogrammetry. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2015, 40, 1–6.

- Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. Shallow Water Bathymetry Mapping From UAV Imagery Based On Machine Learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W10, 9–16.

- Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sens. 2019, 11, 2225.

- Okamoto, A. Wave influences in two-media photogrammetry. Photogramm. Eng. Remote Sens. 1982, 48, 1487–1499.

- Fryer, J.G.; Kniest, H.T. Errors in Depth Determination Caused by Waves in Through-Water Photogrammetry. Photogramm. Rec. 1985, 11, 745–753.

- Harwin, S.; Lucieer, A.; Osborn, J. The Impact of the Calibration Method on the Accuracy of Point Clouds Derived Using Unmanned Aerial Vehicle Multi-View Stereopsis. Remote Sens. 2015, 7, 11933–11953.

- Rusu, R.B.; Cousins, S. 3d Is Here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

- Brown, D.C. Lens distortion for close-range Photogrammetry. Photogramm. Eng. 1971, 37, 855–866.

- Brown, D.C. Calibration of close range cameras. Int. Arch. Photogram. 1972, 19.

- Mullen, R. Manual of Photogrammetry; ASPRS Publications: Bethesda, MD, USA, 2004.

- Quan, X.; Fry, E. Empirical equation for the index of refraction of seawater. Appl. Opt. 1995, 34, 3477–3480.

- Green, P.J.; Sibson, R. Computing Dirichlet tessellations in the plane. Comput. J. 1978, 21, 168–173.

- Goshtasby, A.A. Piecewise linear mapping functions for image registration. Pattern Recognit. 1986, 19, 459–466.

- Goshtasby, A.A. 2-D and 3-D Image Registration: For Medical, Remote Sensing, and Industrial Applications; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Bianco, S.; Ciocca, G.; Marelli, D. Evaluating the performance of structure from motion pipelines. J. Imaging 2018, 4, 98.

- Leica HawkEye, III. Available online: https://leica-geosystems.com/-/media/files/leicageosystems/products/datasheets/leica_hawkeye_iii_ds.ashx?la=en (accessed on 7 August 2019).

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (NZ). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26.

- CloudCompare (Version 2.11 Alpha) [GPL Software]. Available online: http://www.cloudcompare.org/ (accessed on 15 July 2019).

- Woodget, A.S.; Carbonneau, P.E.; Visser, F.; Maddock, I.P. Quantifying submerged fluvial topography using hyperspatial resolution UAS imagery and structure from motion photogrammetry. Earth Surf. Process. Landf. 2015, 40, 47–64.

- Dietrich, J.T. pyBathySfM v4.0; GitHub: San Francisco, CA, USA, 2019; Available online: https://github.com/geojames/pyBathySfM (accessed on 24 October 2019).

- Guenther, G.C.; Cunningham, A.G.; LaRocque, P.E.; Reid, D.J. Meeting the Accuracy Challenge in Airborne Bathymetry; National Oceanic Atmospheric Administration/Nesdis: Silver Spring, MD, USA, 2000.

| Test Site | Amathounta | Agia Napa | Cyclades-1 | Cyclades-2 |
|---|---|---|---|---|
| # Images | 182 | 383 | 449 | 203 |
| Control points used | 29 | 40 | 14 | 11 |
| Average (Avg.) flying height [m] | 103 | 209 | 88/70/35 | 75/33 |
| Avg. base-to-height (B/H) ratio along strip | 0.39 | 0.35 | 0.28/0.31/0.28 | 0.24/0.22 |
| Avg. base-to-height (B/H) ratio across strip | 0.66 | 0.62 | 0.5/0.47/0.46 | 0.42/0.46 |
| Avg. along strip overlap | 65% | 69% | 84%/82%/84% | 86%/87% |
| Avg. across strip overlap | 54% | 57% | 62%/64%/64% | 68%/64% |
| Image footprint on the ground [m] | 149 × 111 | 301 × 226 | 152 × 114 / 121 × 91 / 61 × 45 | 130 × 97 / 57 × 43 |
| GSD [m] | 0.033 | 0.063 | 0.038/0.030/0.015 | 0.032/0.014 |
| RMSX [m] | 0.028 | 0.050 | 0.015 | 0.020 |
| RMSY [m] | 0.033 | 0.047 | 0.010 | 0.014 |
| RMSZ [m] | 0.046 | 0.074 | 0.019 | 0.021 |
| Reprojection error on all points [pix] | 0.645 | 1.106 | 1.12 | 0.86 |
| Reprojection error in control points [pix] | 1.48 | 0.76 | 0.28 | 0.30 |
| Pixel size [μm] | 1.55 | 1.55 | 1.56 | 1.56 |
| Total # of tie points (uncorrected images) | 28.5 K | 135 K | 186 K | 72 K |
| Adjusted camera constant c [pixels] | 2827.05 | 2852.34 | 2352.23 | 2334.39 |

| Test Site | # of Points | Source | Point Density [points/m²] | Average Pulse Spacing [m] | Flying Height [m] | Nominal Bathymetric Accuracy [m] |
|---|---|---|---|---|---|---|
| Amathounta | 1 K | LiDAR | 0.4 | - | 600 | 0.15 |
| Agia Napa | 75 K | LiDAR | 1.1 | 1.65 | 600 | 0.15 |
| Cyclades-1 | 23 | Total Station | - | - | - | 0.05 |
| Cyclades-2 | 34 | Total Station | - | - | - | 0.05 |

| Data That the Correction Is Applied on | Derived Point Clouds from Different Methods | Amathounta x̄ [m] | Amathounta s [m] | Agia Napa x̄ [m] | Agia Napa s [m] | Cyclades-1 x̄ [m] | Cyclades-1 s [m] | Cyclades-2 x̄ [m] | Cyclades-2 s [m] |
|---|---|---|---|---|---|---|---|---|---|
| | # of check points | 1 K | | 75 K | | 23 | | 34 | |
| Uncorrected Images | | 0.67 | 2.19 | 1.71 | 1.18 | 0.32 | 0.10 | 0.54 | 0.29 |
| Point clouds | Woodget et al., [42] | −0.27 | 0.40 | 0.63 | 1.02 | −0.80 | 0.10 | −0.23 | 0.26 |
| Point clouds | Dietrich, [20] | 0.49 | 0.54 | −1.55 | 1.49 | 0.38 | 0.25 | −0.15 | 0.24 |
| Point clouds | Dietrich (Filt.), [20] | −0.22 | 0.40 | 0.43 | 0.72 | −0.06 | 0.09 | −0.20 | −0.30 |
| Point clouds | Agrafiotis et al., [24,25] | −0.09 | 0.43 | −0.03 | 0.61 | 0.02 | 0.09 | −0.04 | 0.10 |
| Images | Skarlatos et al., [4] | −0.39 | 0.88 | −0.05 | 0.74 | 0.15 | 0.42 | −0.28 | 0.36 |
| Images | This paper | −0.19 | 0.28 | −0.04 | 0.37 | −0.02 | 0.09 | −0.06 | 0.14 |
| | IHO limit [43] | ±0.25 | | ±0.25 | | ±0.25 | | ±0.25 | |
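
As a reading aid for the comparison table above, the following minimal Python sketch checks which methods keep the first tabulated value per site within the ±0.25 m IHO limit at all four test sites. It rests on assumptions that are not stated in the surviving text: that this first value is the mean difference to the check points, and that the limit is compared against the magnitude of that value only, not against s. The names `MEAN_DIFF_M`, `IHO_LIMIT_M`, `within_limit` and `methods_within_limit` are hypothetical and serve illustration only.

```python
# First tabulated value [m] per site, copied from the table above and
# assumed here to be the mean difference to the check points
# (order: Amathounta, Agia Napa, Cyclades-1, Cyclades-2).
MEAN_DIFF_M = {
    "Uncorrected images": (0.67, 1.71, 0.32, 0.54),
    "Woodget et al. [42]": (-0.27, 0.63, -0.80, -0.23),
    "Dietrich [20]": (0.49, -1.55, 0.38, -0.15),
    "Dietrich (Filt.) [20]": (-0.22, 0.43, -0.06, -0.20),
    "Agrafiotis et al. [24,25]": (-0.09, -0.03, 0.02, -0.04),
    "Skarlatos et al. [4]": (-0.39, -0.05, 0.15, -0.28),
    "This paper": (-0.19, -0.04, -0.02, -0.06),
}

# Limit taken from the last row of the table. Assumption: it is applied
# to the magnitude of the tabulated mean only.
IHO_LIMIT_M = 0.25


def within_limit(means, limit=IHO_LIMIT_M):
    """Return True if every per-site mean lies inside [-limit, +limit]."""
    return all(abs(m) <= limit for m in means)


def methods_within_limit(table=MEAN_DIFF_M, limit=IHO_LIMIT_M):
    """List the methods whose means meet the limit at all sites."""
    return [name for name, means in table.items() if within_limit(means, limit)]


if __name__ == "__main__":
    for name in methods_within_limit():
        print(name)
```

Under these assumptions, only the rows "Agrafiotis et al., [24,25]" and "This paper" stay within the limit at every site. The standard deviations are deliberately left out of the check, so this is a screening of the tabulated means rather than a full accuracy assessment.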

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Agrafiotis, P.; Karantzalos, K.; Georgopoulos, A.; Skarlatos, D. Correcting Image Refraction: Towards Accurate Aerial Image-Based Bathymetry Mapping in Shallow Waters. Remote Sens. 2020, 12, 322. https://doi.org/10.3390/rs12020322
