Abstract

To improve navigation accuracy during global navigation satellite system (GNSS) outages, an algorithm based on long short-term memory (LSTM) is proposed for aiding the inertial navigation system (INS). The LSTM algorithm generates pseudo GNSS position increments that substitute for the GNSS signal. Almost all existing INS aiding algorithms, such as the multilayer perceptron neural network (MLP), model INS errors from INS outputs and ignore the dependence on past vehicle dynamic information, which results in poor navigation accuracy. LSTM, by contrast, is a dynamic neural network that constructs a relationship between present and past information. Therefore, the LSTM algorithm is adopted to attain a more stable and reliable navigation solution during GNSS outages. A set of actual vehicle data was used to verify the navigation accuracy of the proposed algorithm. During 180 s GNSS outages, the test results show that the LSTM algorithm improves navigation accuracy by 95% compared with the pure INS algorithm and by 50% compared with the MLP algorithm.

1. Introduction

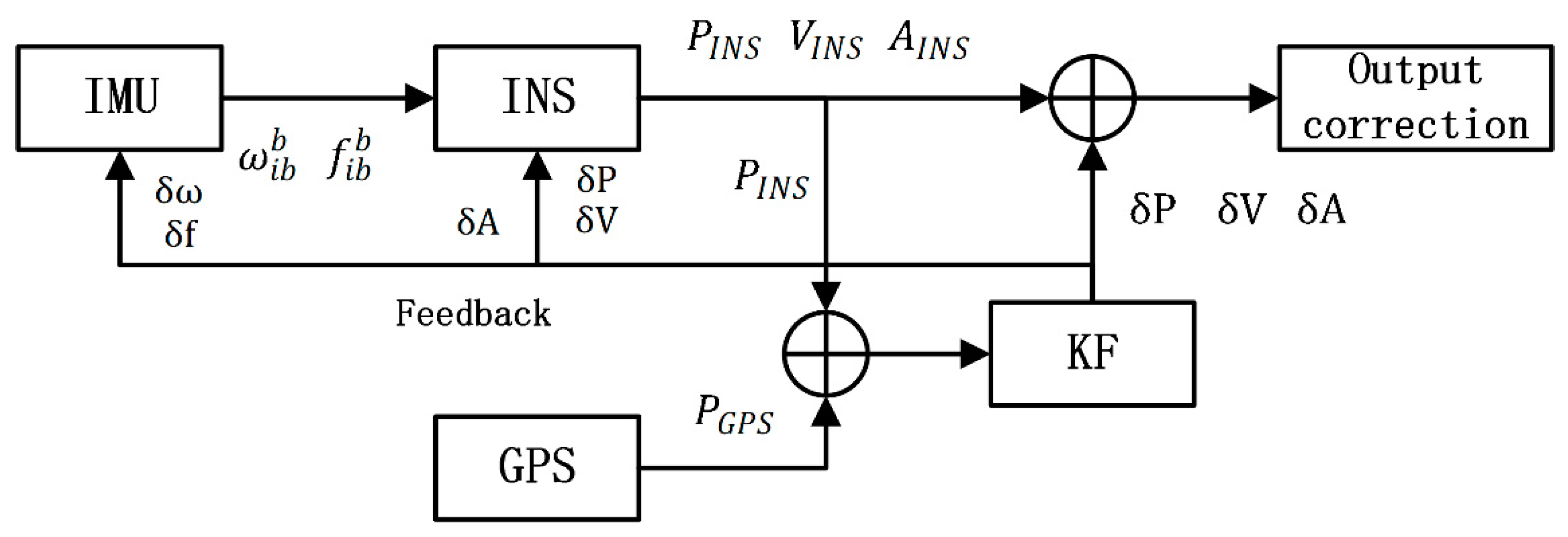

The inertial navigation system (INS) and global navigation satellite system (GNSS) are two of the most important approaches for providing position and attitude information for geographical references [1]. The INS provides high accuracy over short periods and is not affected by the environment. However, a standalone INS solution degrades over time because of the large errors in the raw measurements of inertial measurement units (IMU) [2]. In this study, the signal from GNSS is adopted to provide high-precision navigation solutions. Under decent visibility conditions, GNSS can provide continuous and accurate navigation information over a long period of time. However, GNSS alone cannot give reliable positions all of the time, as the satellite signal may be blocked or corrupted by high buildings, viaducts, tunnels, mountains, multi-path reflections, and bad weather conditions [3,4,5]. Because of their complementary properties, INS and GNSS are commonly integrated by a Kalman filter (KF) to provide continuous and high-precision navigation [3,4,5,6]. In an INS/GNSS system, GNSS aids INS by estimating its errors in the KF [7,8]. In turn, INS bridges GNSS signal gaps and helps GNSS signal reacquisition after an outage [9,10]. However, when the GNSS signal is blocked, the KF cannot be updated with GNSS measurements. The INS/GNSS system then falls back to a pure INS mode, whose position error diverges over time [2,3,4,11]. Therefore, an improved fusion algorithm is needed to improve the INS navigation performance when the GNSS signal is lost.

With the development of artificial intelligence (AI) and big data, many AI methods have been explored to improve navigation accuracy during GNSS outages [12,13,14,15,16,17,18]. Artificial neural networks (ANN) are currently the most widely used methods for modeling complex nonlinear problems, and many researchers have built a variety of neural networks for aiding INS when the GNSS signal is lost. Rashad and his team first used a radial basis function neural network to model the INS position and the position error between INS and GNSS [12,13]. El-Sheimy used time, velocity, and yaw as inputs to model the position error and velocity, showing a more stable and accurate result [14]. In later works, an improved autoregressive delay-dependent neural network model was applied. Its input features are the current and past one-step position and velocity from IMU and INS, while the output is the INS position error [15]. Another method based on the ensemble model has been explored to improve the generalization of the algorithm by combining many weak learners into a strong learner [7,15,17]. Furthermore, the support vector machine and genetic algorithm were also explored to overcome the over-fitting and local-minimum problems of neural networks [18]. More recently, factor graph optimization has been widely used for multi-sensor fusion in autonomous systems [19]. This method uses a factor graph model to represent the joint probability distribution function. An efficient incremental inference algorithm over the factor graph is applied, which yields a near-optimal inertial navigation solution.

However, almost all of the above methods are based on a static neural network, such as the radial basis function neural network (RBF) or multilayer perceptron neural network (MLP). The idea behind all of the above approaches is to model the current and past one-step INS information by training the AI model while the GNSS signal is available. Almost all of the existing INS aiding algorithms try to improve the navigation solutions during GNSS outages by predicting the INS errors from previous INS outputs. Their major drawback is that they cannot store more past vehicle dynamic information when dealing with time series data [20]. Therefore, during a long GNSS outage, none of the above INS aiding algorithms may be able to provide accurate and stable navigation results [21]. Furthermore, the main shortcoming of the MLP with a sliding window is the rapid increase in computational complexity, as the number of neurons in the input layer has to equal the number of past samples [22]. For all of the above reasons, this study suggests that an LSTM neural network, which can perform highly nonlinear dynamic mapping and store past information, can overcome the drawbacks of those models when identifying a nonlinear dynamic process [23]. LSTM is also a basic unit in the recurrent neural network (RNN), which can select and store significant information [24], and it has been widely used in a variety of dynamic processes, such as natural language processing (NLP) [25] and time series prediction [26,27].

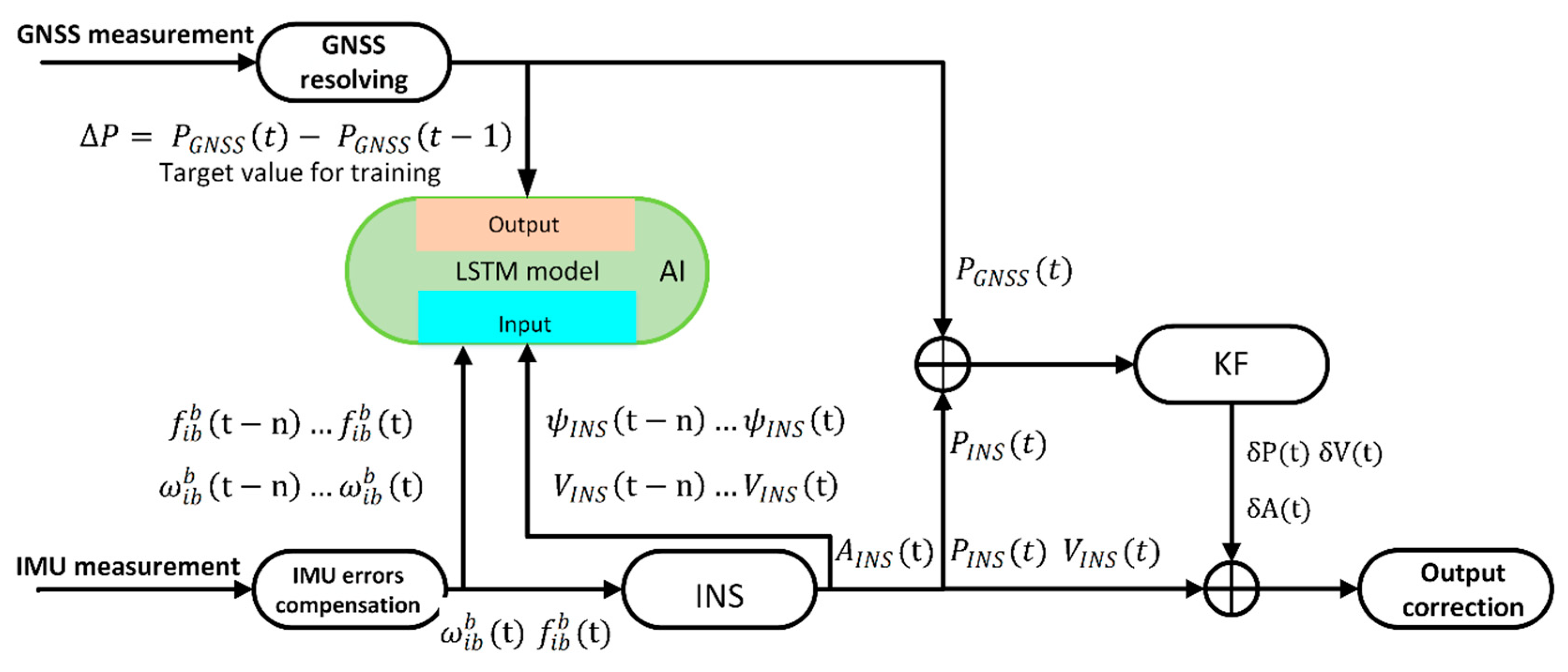

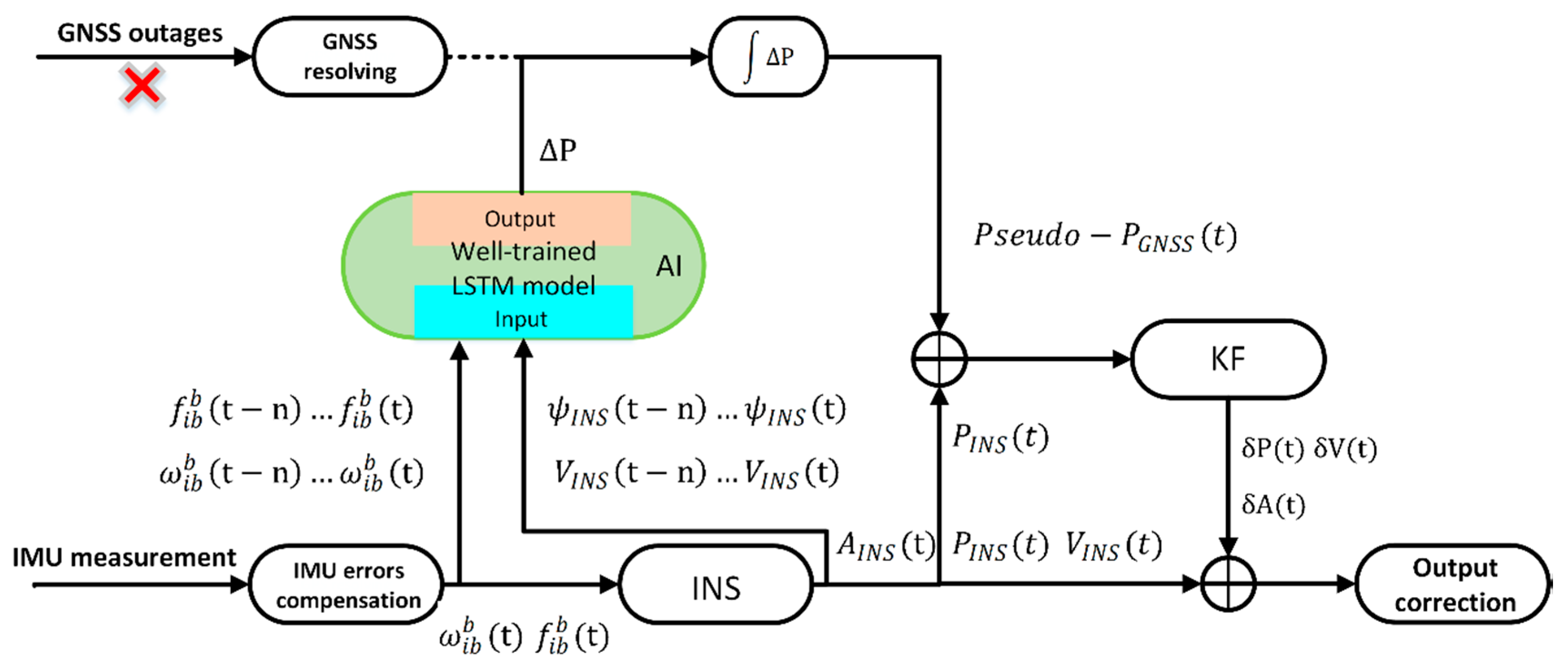

To maintain good INS performance during GNSS outages, a novel AI method based on LSTM is proposed to overcome the drawbacks of the methods discussed above. When the GNSS signal is available, the INS velocity and yaw and the IMU output are used as the input features for training the LSTM model, while the output of the model is the position increment of the GNSS. Once the GNSS signal is lost for a short time, the INS information is fed into the LSTM model to generate the pseudo GNSS increment. After accumulating the pseudo GNSS increments, the pseudo GNSS position is sent to the KF to correct the INS navigation results. Actual test data are used to evaluate the proposed LSTM algorithm, which is also compared with the traditional MLP algorithm. The test results demonstrate that the LSTM algorithm improves navigation accuracy more than the MLP algorithm during a long period of GNSS outages.

The rest of the paper is organized as follows: Section 2 introduces the simple INS/GNSS loosely coupled navigation system. The LSTM architecture and training method for time series prediction are illustrated in Section 3. Section 4 presents an improved model of the LSTM fusion algorithm for aiding INS. The actual road experiments are presented and discussed in Section 5. Finally, Section 6 presents the conclusions.

3. Structure of Memory Unit of LSTM

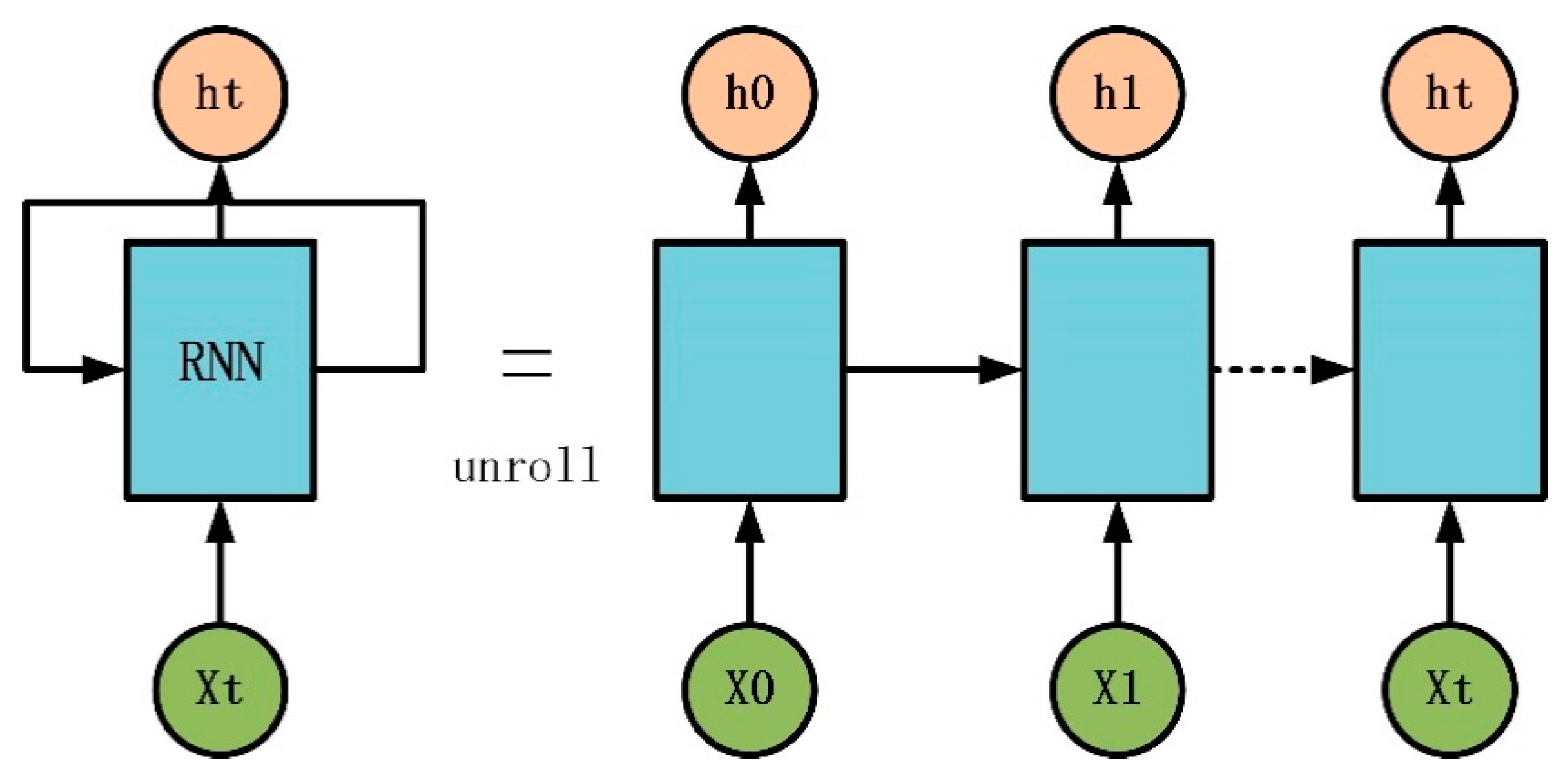

The recurrent neural network is now widely used in sequence-based prediction tasks such as pedestrian trajectory prediction [32] and vehicle trajectory prediction [33]. Unlike traditional multilayer perceptron neural networks, a basic RNN has a loop that allows information to persist.

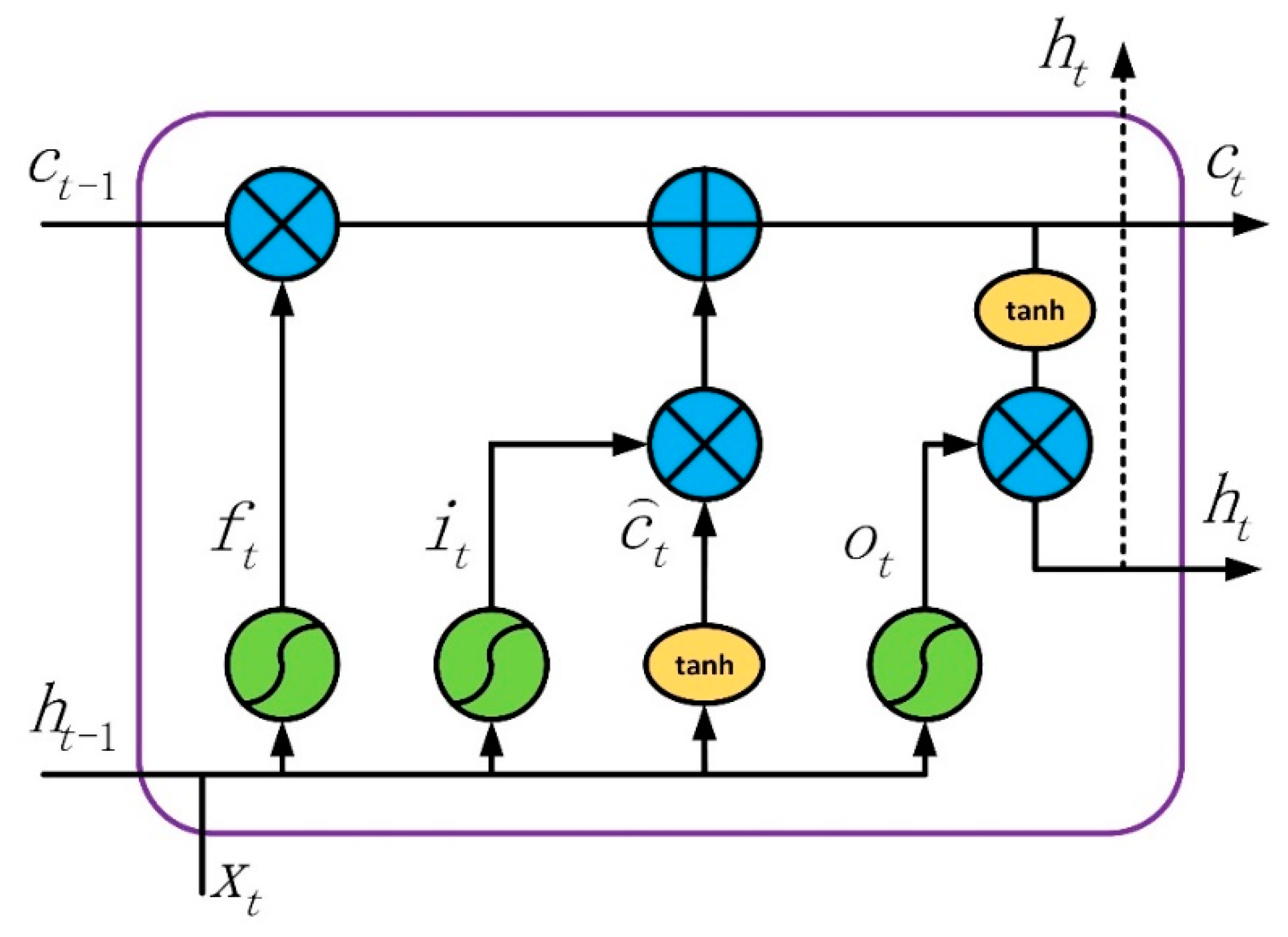

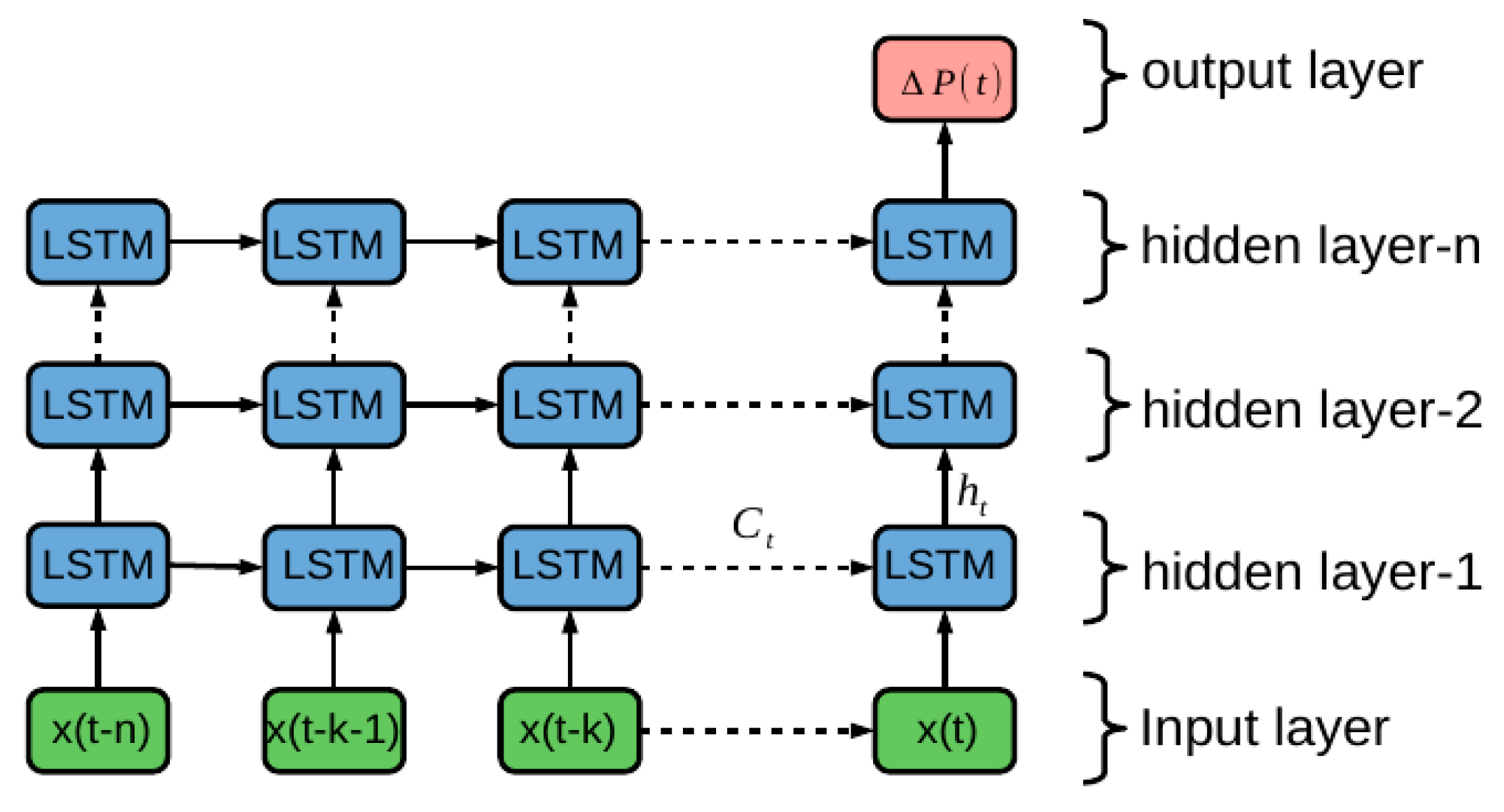

In Figure 2, the chain-like architecture indicates that the RNN is naturally suited to sequences and lists. However, a traditional RNN lacks the capability to handle long-term dependency [34]. The long short-term memory network (LSTM), by contrast, is a special RNN with the ability to learn long-term dependency; its structure is shown in Figure 3.

Figure 2.

Unrolled recurrent neural network. RNN—recurrent neural network.

Figure 3.

Long short-term memory network (LSTM) block architecture.

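The LSTM block above has three gates, namely the input gate $i_t$, the forget gate $f_t$, and the output gate $o_t$, all filled in green. Moreover, $c_t$ is the state of the cell, $x_t$ is the input data, and the previous state is $c_{t-1}$. The blue circles denote the multiplications and additions. Based on the information flow in the structure, the mathematical model of LSTM can be summarized in its standard form as

$$
\begin{aligned}
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

where $\odot$ denotes the Hadamard product, $\sigma$ denotes the sigmoid nonlinearity, $h_t$ is the block output, and $W$ and $b$ are the weight matrices and bias vectors of each gate. Stacking and temporally concatenating the basic LSTM unit leads to more powerful networks, which have already been applied to a variety of time series problems [35].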
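The gate structure described above can be sketched in NumPy as one forward step of a standard LSTM cell without peephole connections. The stacked weight layout and the names `lstm_step` and `lstm_forward` are assumptions of this sketch and are not taken from the authors' implementation, which relies on a deep learning framework.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid used by the three gates."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One forward step of a standard LSTM cell (no peepholes).

    W has shape (4 * hidden, hidden + n_inputs) and b has shape (4 * hidden,).
    Rows are stacked as: input gate, forget gate, output gate, candidate state.
    This layout is an assumption of the sketch.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    i_t = sigmoid(z[0:hidden])                 # input gate
    f_t = sigmoid(z[hidden:2 * hidden])        # forget gate
    o_t = sigmoid(z[2 * hidden:3 * hidden])    # output gate
    g_t = np.tanh(z[3 * hidden:4 * hidden])    # candidate cell state
    c_t = f_t * c_prev + i_t * g_t             # Hadamard products
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t


def lstm_forward(xs, W, b, hidden):
    """Run the cell over a sequence xs of shape (time_steps, n_inputs)."""
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, b)
    return h, c
```

Because the cell state `c_t` is carried from step to step and only rescaled by the forget gate, information from earlier inputs can persist across the whole sequence.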

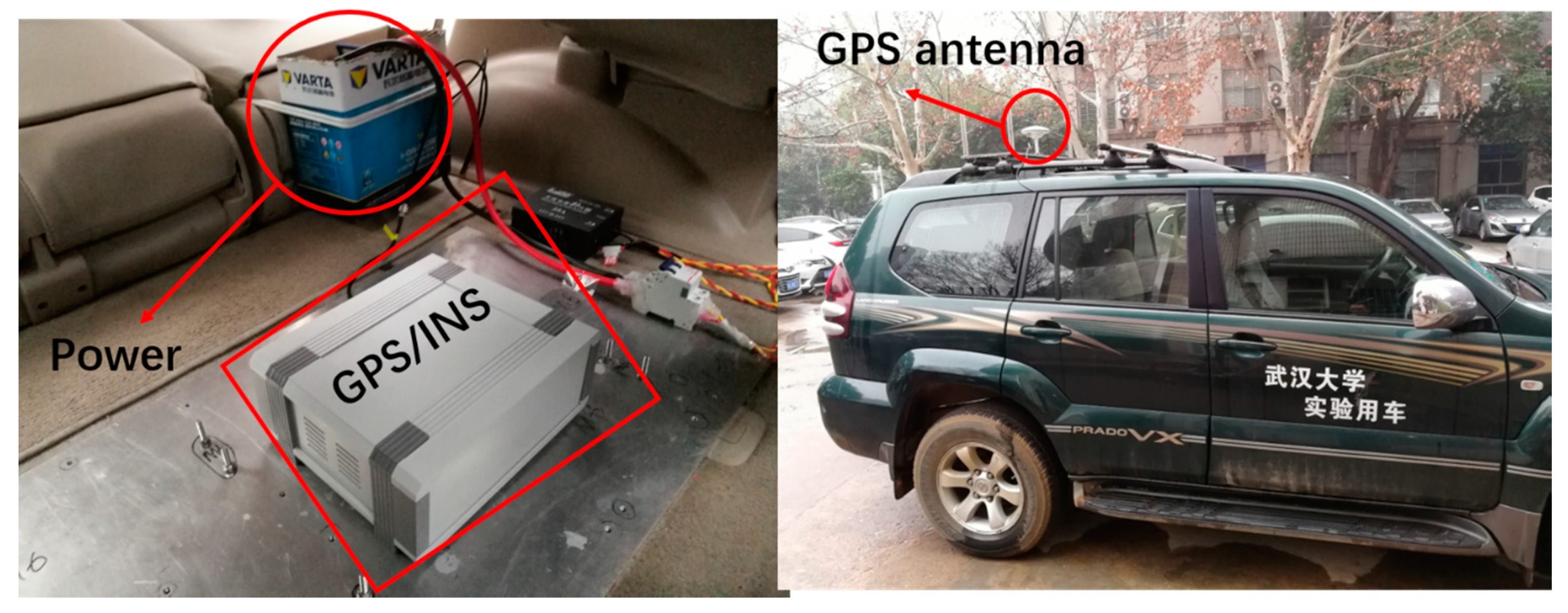

5. Road Experimental Testing Results

The test data acquisition platform is shown in Figure 8. The sensor specifications (features) are described in Table 1. The ICM-20602 is a low-cost six-axis MEMS (microelectromechanical systems) motion-tracking device, while the Ublox-M8P is a high-precision GNSS module. The reference navigation solution (i.e., true position, velocity, and attitude) is recorded by a loosely coupled GNSS/INS system with RTK during the vehicle experiment. After all the experimental data were collected, GNSS signal outages were simulated in software to evaluate the performance of the different neural network algorithms. The aim of this study is to improve the navigation performance of a low-cost IMU during GNSS outages by utilizing an AI model based on LSTM.

Figure 8.

Vehicle with navigation system equipment.

Table 1.

Features.

The proposed LSTM-based method for aiding the INS/GNSS integrated system was tested and analyzed in both the training and prediction modes, whose procedure and diagram are illustrated in Figure 4 and Figure 5, respectively. The parameter tuning and the influence of the number of time steps and hidden units in the LSTM model are also explored. The proposed AI model is trained and tested at different times.

5.1. Data Description

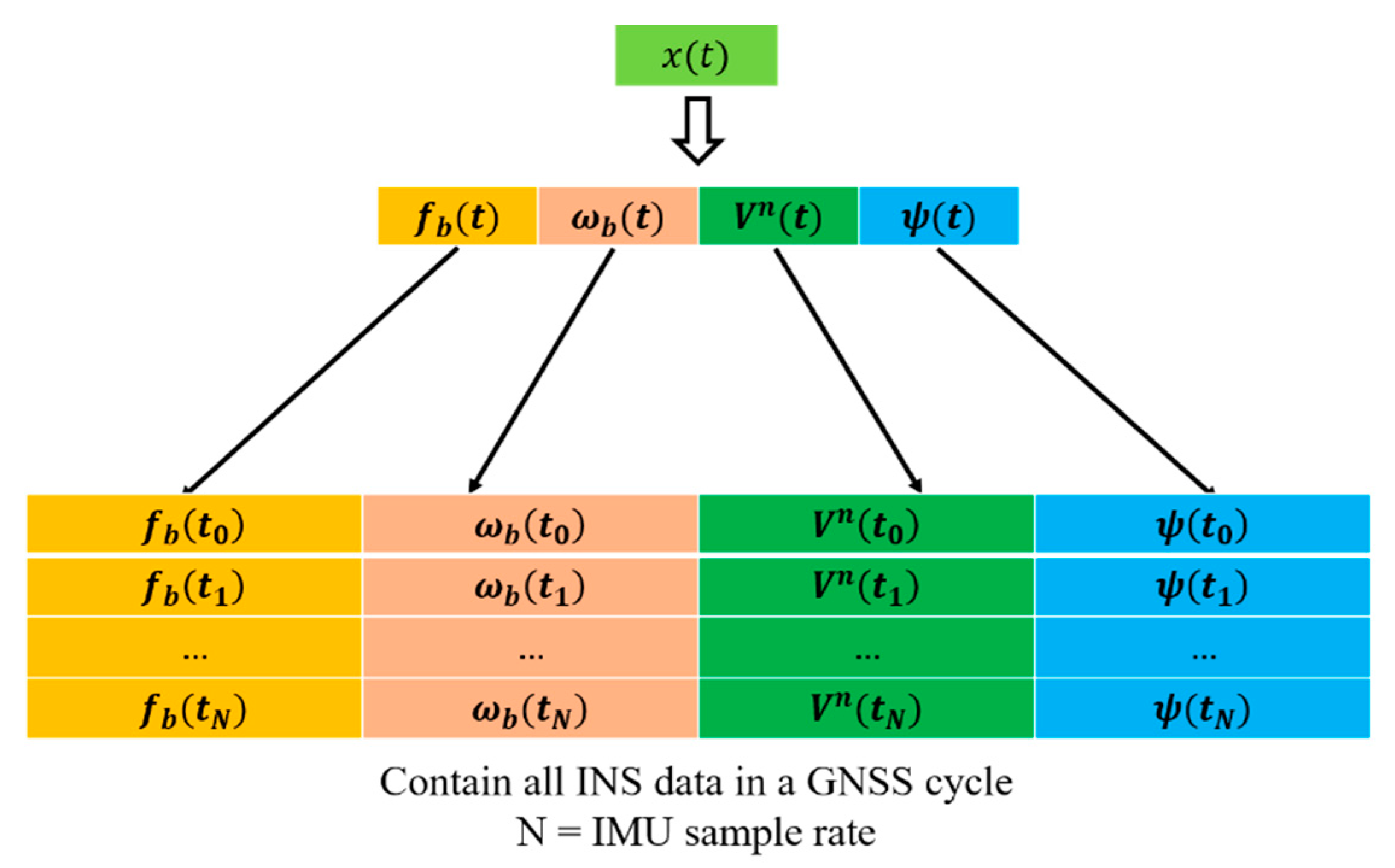

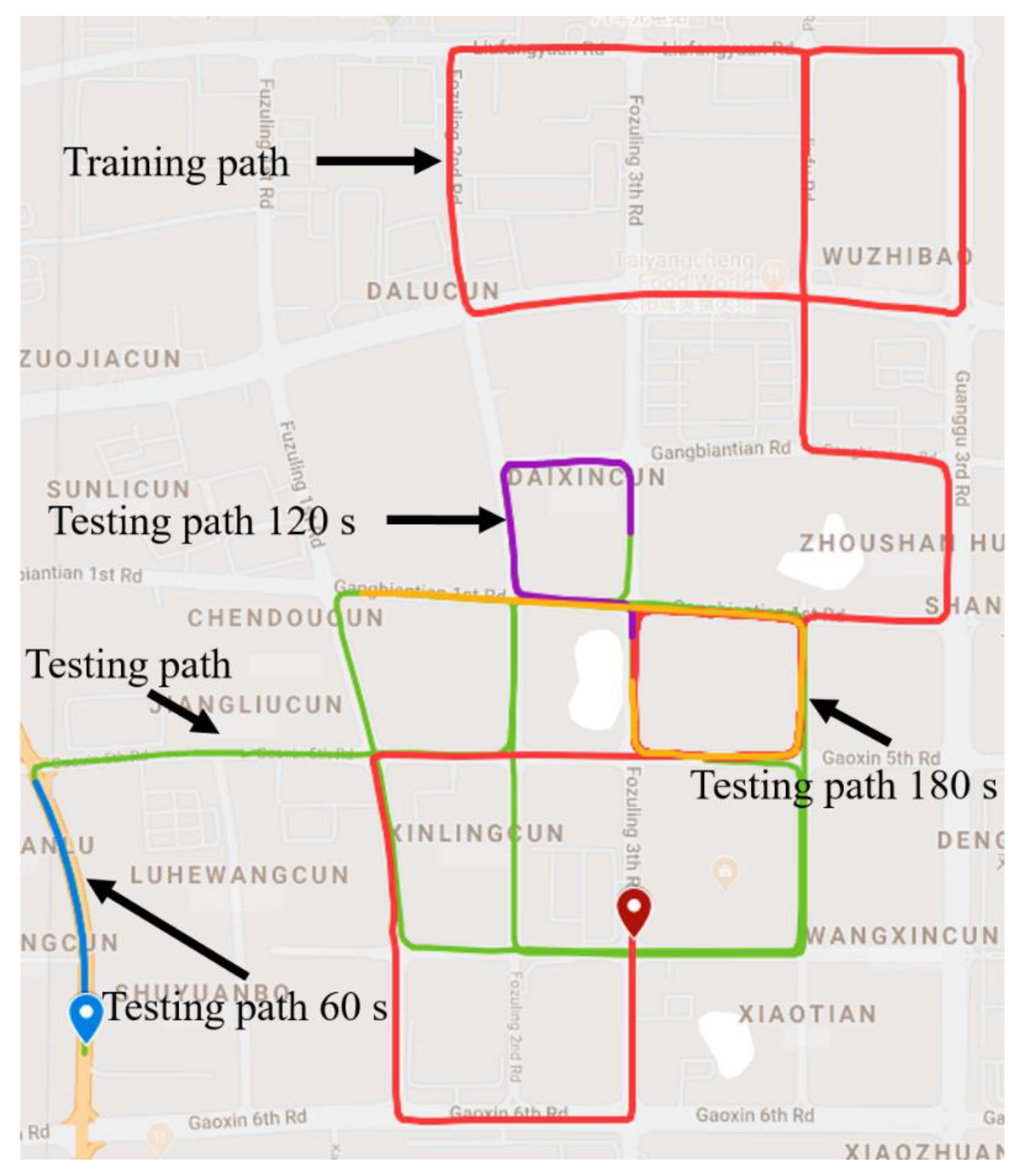

The trajectory of this experiment, shown in Figure 9, was collected in Wuchang, Hubei Province, China. The sampling rates of the INS and GNSS are 50 Hz and 1 Hz, respectively. The red icon and blue icon represent the start point and end point, respectively. Along the red line, the whole system works in loosely coupled mode. During that time, the GNSS data and INS data were integrated to maintain continuous and high-precision navigation results, while the LSTM-based model was being trained. The input features of the LSTM module include the specific force, angular rate, yaw, and velocity at the current and past seconds, while the position increments calculated from the GNSS serve as the output.

Figure 9.

Trajectory for training path.

When the vehicle was running along the three parts of the test path where GNSS outages were assumed, that is, where the GNSS signal outages were simulated, the navigation results depended only on pure INS reckoning.
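Simulating an outage in software amounts to withholding the GNSS measurements inside chosen time intervals. The following is a minimal sketch of such a mask; the function name `gnss_available` and the half-open interval convention are assumptions of the sketch, and the interval used in the example is the 60 s outage between 475,330 s and 475,390 s discussed in Section 5.3.1.

```python
import numpy as np


def gnss_available(t, outages):
    """Return a boolean mask that is False inside any simulated outage.

    t       : array of GNSS epoch times in seconds
    outages : iterable of (start, end) intervals, treated as [start, end)
    """
    t = np.asarray(t, dtype=float)
    mask = np.ones(t.shape, dtype=bool)
    for start, end in outages:
        mask &= ~((t >= start) & (t < end))
    return mask


# 1 Hz GNSS epochs around the 60 s outage (hypothetical time span)
t_gnss = np.arange(475_300, 475_420)
available = gnss_available(t_gnss, [(475_330, 475_390)])
```

Epochs where `available` is False would be skipped in the Kalman filter update, so the filter only propagates the INS solution there.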

In the experiment, the trajectory data from INS and GNSS (in Figure 9) were split into two parts: the first part of 4400 s, marked by the red line, is used as the training set; the second part of 1400 s, marked by the green line, is used as the test set. In addition, three parts of the test path lasting 60 s, 120 s, and 180 s, which are marked in Figure 9, were selected to evaluate the performance of the proposed algorithm. In practice, whenever a GNSS signal is available, all of the data from INS are used to train the AI model. Once the GNSS signal is unavailable, the well-trained model substitutes for the GNSS to supply the position information to the Kalman filter, so as to decrease the errors of INS. All of the data have been scaled to between −1 and 1 to accelerate the training process.
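As an illustration of how such a data set can be arranged for a recurrent model, the sketch below builds fixed-length windows of past features paired with the GNSS position increment at the last step, and then splits them chronologically. It assumes the features have already been brought to one row per second; `make_windows` and `chronological_split` are hypothetical helper names, not functions from the authors' code.

```python
import numpy as np


def make_windows(features, increments, time_steps):
    """Pair each window of `time_steps` consecutive feature rows with the
    position increment at the window's last row.

    features   : (N, F) array, one row per second (assumed pre-resampled)
    increments : (N, D) array of GNSS position increments
    returns X of shape (N - time_steps + 1, time_steps, F) and
            y of shape (N - time_steps + 1, D)
    """
    features = np.asarray(features, dtype=float)
    increments = np.asarray(increments, dtype=float)
    n = features.shape[0] - time_steps + 1
    X = np.stack([features[k:k + time_steps] for k in range(n)])
    y = increments[time_steps - 1:]
    return X, y


def chronological_split(X, y, n_train):
    """Keep temporal order: the first n_train samples train, the rest test."""
    return (X[:n_train], y[:n_train]), (X[n_train:], y[n_train:])
```

A chronological split, rather than a random one, keeps the test windows strictly later in time than the training windows, which matches the red and green segments of the trajectory.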

In a typical machine learning application, the data set is usually iterated over many times using a gradient descent algorithm. However, navigation is a real-time application, so in practice the data must be normalized using a section of road data. In this experiment, the off-line mode is used to explore the feasibility of the neural network methods in terms of improving the INS navigation solutions when the GNSS signal is lost. Furthermore, different features have different numerical values and ranges. Normalization and standardization are mainly used to make calculations more convenient. For example, when two variables differ greatly in magnitude, using both of them simultaneously may cause numerical calculation errors. Therefore, the input data need to be scaled to make calculations more convenient and convergence faster.
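A minimal sketch of scaling to the range −1 to 1 is given below. It fits the per-feature minimum and maximum on one section of data and reuses them elsewhere, which is one way to read the remark about normalizing by a section of road data; the class name `MinMaxScaler11` and the handling of constant columns are assumptions of the sketch.

```python
import numpy as np


class MinMaxScaler11:
    """Scale each feature column linearly so the fitted data spans [-1, 1]."""

    def fit(self, data):
        data = np.asarray(data, dtype=float)
        self.lo = data.min(axis=0)
        self.hi = data.max(axis=0)
        # Avoid division by zero for constant columns (assumed behaviour).
        self.span = np.where(self.hi > self.lo, self.hi - self.lo, 1.0)
        return self

    def transform(self, data):
        data = np.asarray(data, dtype=float)
        return 2.0 * (data - self.lo) / self.span - 1.0

    def inverse_transform(self, scaled):
        scaled = np.asarray(scaled, dtype=float)
        return (scaled + 1.0) * self.span / 2.0 + self.lo
```

The inverse transform is needed at prediction time, because the model outputs scaled position increments that must be converted back to metres before they are accumulated.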

5.2. Design the LSTM Network

The AI model comprises two LSTM layers and a fully-connected layer activated by the softmax function. In designing an LSTM network, several hyperparameters need careful tuning, namely: (1) the number of hidden units, and (2) the time-step length. Setting too many hidden units or too long a time-step length makes the algorithm take longer to converge and may lead to an over-fitting problem. In the experiment, the values of the other parameters are shown in Table 2.

Table 2.

Parameter set.

Of the training set, 20% of the data is reserved as a validation set for adjusting the model parameters. That is, 80% of the training set is used to train the model, and the remaining 20% is used to evaluate the performance of the model. The criterion for selecting parameters is the root mean square error (RMSE) on the validation set. A smaller value generally means better generalization ability of the model. In this study, a grid search method is adopted to find the best parameter combination, in which three time-step lengths (1, 4, and 8) and three numbers of LSTM hidden units (32, 64, and 128) are evaluated. Three time-step lengths and three hidden-unit settings give nine combinations.
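The selection procedure described above can be sketched as follows. The grid values come from the text; the `evaluate` callable, which is expected to train a model for one combination and return its validation RMSE, is a placeholder supplied by the caller, and taking the last 20% of the training samples as the validation set is an assumption, since the text does not say how the split is drawn.

```python
from itertools import product

TIME_STEPS = (1, 4, 8)
HIDDEN_UNITS = (32, 64, 128)


def split_train_validation(n_samples, validation_fraction=0.2):
    """Index ranges for an 80/20 split; the last block is held out (assumed)."""
    n_val = int(round(n_samples * validation_fraction))
    n_train = n_samples - n_val
    return range(0, n_train), range(n_train, n_samples)


def grid_search(evaluate, time_steps=TIME_STEPS, hidden_units=HIDDEN_UNITS):
    """Evaluate every (time_steps, hidden_units) pair and return the pair
    with the lowest validation RMSE together with all results."""
    results = {
        (t, h): evaluate(t, h) for t, h in product(time_steps, hidden_units)
    }
    best = min(results, key=results.get)
    return best, results
```

For example, `grid_search(lambda t, h: train_and_validate(t, h))` would run the nine combinations, where `train_and_validate` is a hypothetical user function wrapping the actual model training.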

The training time and validation RMSE were calculated and compared for each combination, and the final parameters were then selected from the best of these combinations. The experimental results are displayed in Table 3, which indicates that a time-step length of 4 and 64 hidden units give the LSTM algorithm the lowest RMSE. A low RMSE implies high confidence in the prediction method. The differences in prediction performance across time steps and hidden units are related to the overfitting problem, which produces large RMSE values.

Table 3.

Root mean square error (RMSE) with different time steps and hidden units.

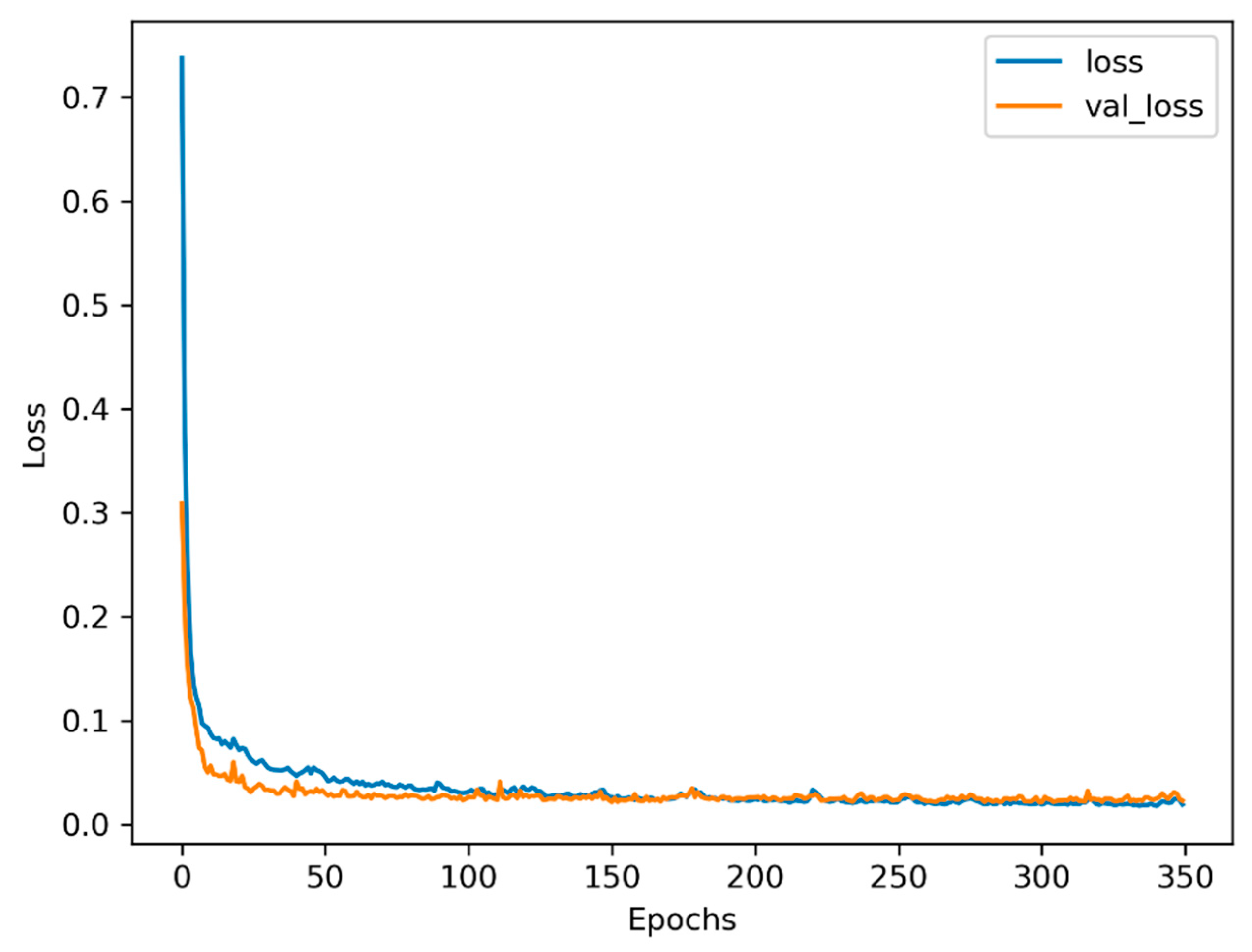

Figure 10 shows how the losses vary with epochs during the training process. It can be seen that the training and validation losses decrease to their minimum values after about 150 epochs. After that, both curves flatten, which suggests that early stopping would save time without losing any performance.

Figure 10.

Training and validation loss with 64 hidden units and four time steps.
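The early-stopping idea suggested by the flat part of the loss curves can be sketched with a simple patience rule: stop once the validation loss has failed to improve for a given number of consecutive epochs. The function name and the `patience` and `min_delta` parameters are illustrative assumptions and do not describe settings used in the experiment.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return the 0-based epoch at which training would stop.

    Training stops when the validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs. If that never happens,
    the index of the last epoch is returned.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss      # new best validation loss
            wait = 0
        else:
            wait += 1        # one more epoch without improvement
            if wait >= patience:
                return epoch
    return len(val_losses) - 1
```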

5.3. Experiment Results

In this section, the navigation performance of the LSTM model is compared with that of MLP. MLP uses a time window length of four to predict the future position increment, and its input features are the same as those of LSTM, including the specific force, angular rate, velocity, and yaw. To have the same number of hidden units as LSTM, MLP has two hidden layers, each with 64 hidden units, in addition to the input and output layers. The algorithms were implemented in Python 3.6 with TensorFlow 1.9, a widely used deep learning framework developed by Google [41], and were trained with the Adam optimizer [42].

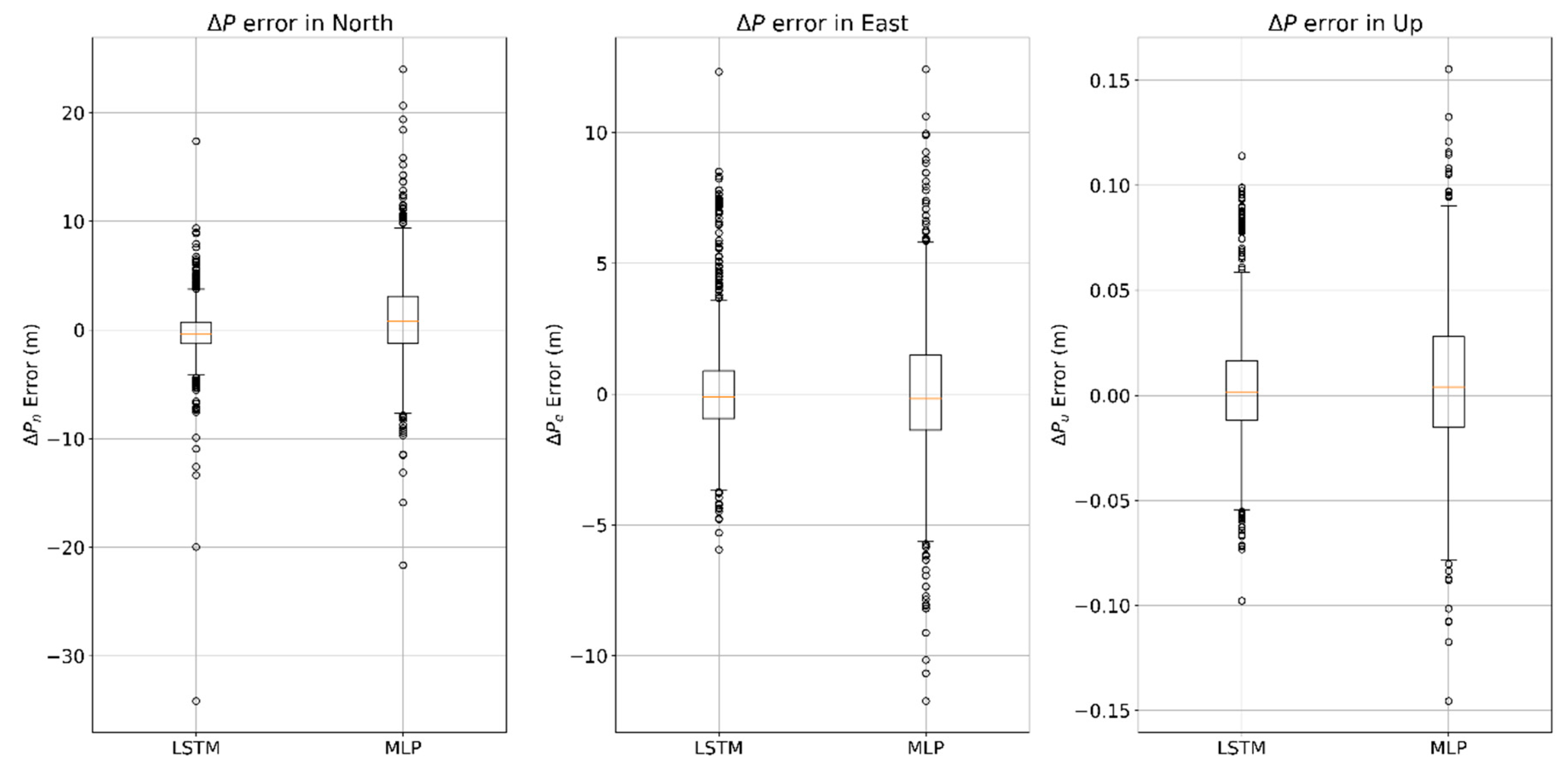

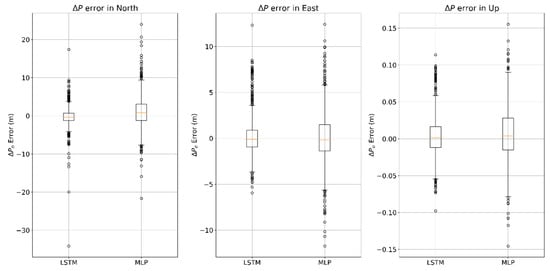

In the training procedure of the above algorithms (LSTM and MLP), the 4400 s training set, including the above features (the specific force, angular rate, velocity, and yaw), is employed to train the MLP and LSTM models, respectively. Part of the test set containing the same features is then used to assess the trained models, so that their effectiveness in predicting the position increments can be compared; the position increment consists of latitude, longitude, and height. Comparison results are shown in Figure 11 and summarized in Table 4.

Figure 11.

Comparison of multilayer perceptron neural network (MLP) and LSTM algorithms in terms of prediction error.

Table 4.

Mean absolute error and standard deviation of the prediction error for different algorithms. MAE: mean absolute error; LSTM: long short-term memory; MLP: multilayer perceptron neural network.

The distributions of the prediction errors of the MLP and LSTM algorithms are shown in Figure 11. The mean absolute errors (MAE) of the predicted position increments in the north direction for the LSTM and MLP algorithms are 1.573 m and 3.053 m, respectively, and the corresponding standard deviations are 2.506 m and 4.109 m. In the east direction, the MAEs are 1.422 m and 1.965 m, respectively, and the standard deviations are 2.138 m and 2.686 m. Table 4 shows that the prediction of the LSTM algorithm is more reliable and stable than that of the traditional MLP algorithm.
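For reference, the two statistics reported above can be computed per direction as in the sketch below. It assumes the standard deviation is taken over the signed prediction error rather than its absolute value, which the text does not state; `mae_and_std` is a hypothetical helper name.

```python
import numpy as np


def mae_and_std(predicted, reference):
    """Per-column mean absolute error and standard deviation of the error.

    predicted, reference : (N, D) arrays, e.g. columns = (north, east).
    The standard deviation is computed on the signed error (assumption).
    """
    err = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    return np.mean(np.abs(err), axis=0), np.std(err, axis=0)
```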

To compare the performance of the different models during GNSS outages more specifically, three test paths of different lengths were selected, namely 60 s, 120 s, and 180 s. Because the application is a land vehicle, the position and velocity in the north and east directions, as well as the heading, were selected to show how well the different algorithms improve the navigation results during GNSS outages.

5.3.1. 60-s Outage Experiment Results

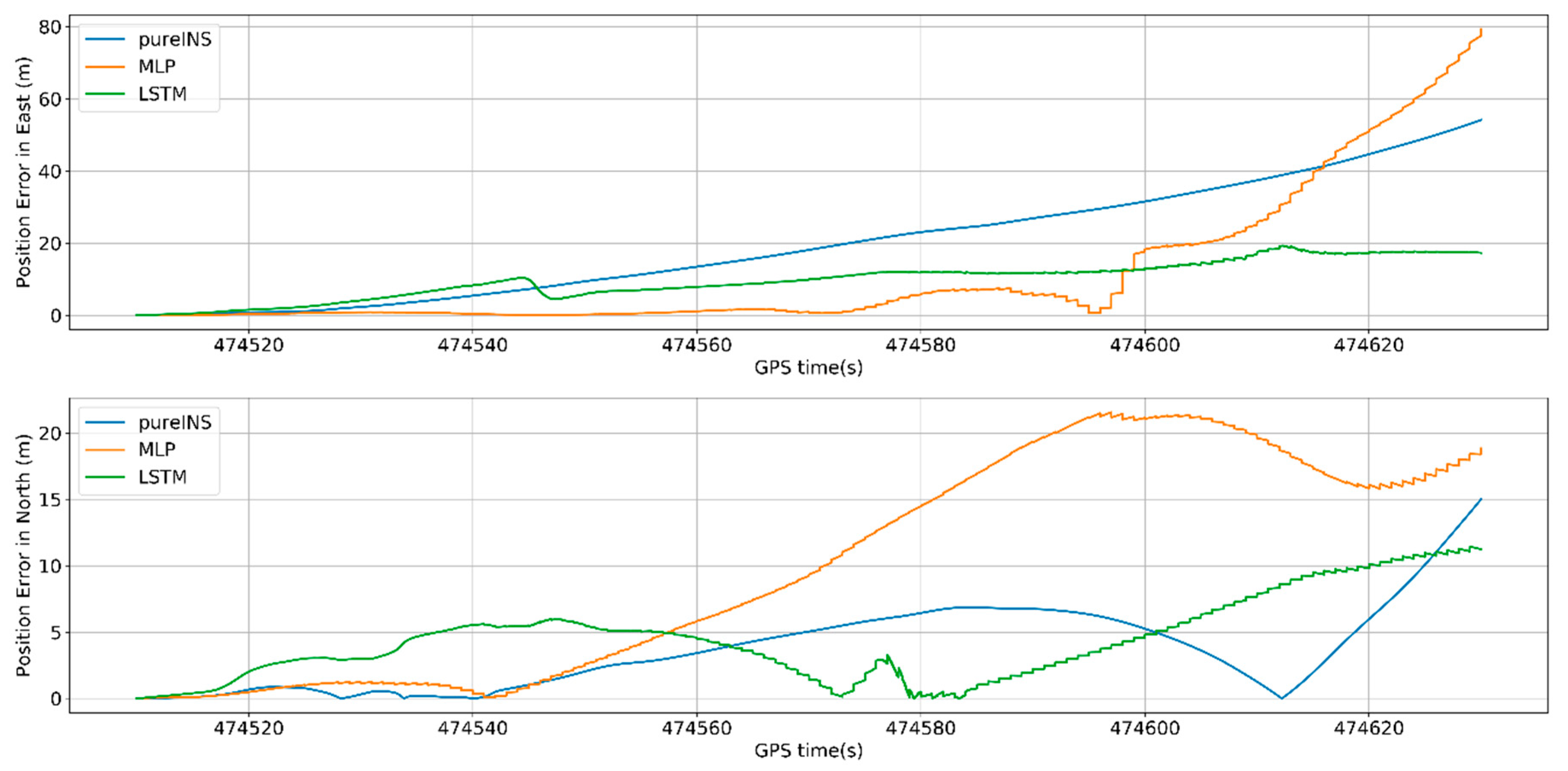

Figure 12, Figure 13 and Figure 14 show the navigation results of the different algorithms during the 60 s GNSS outage. Before 475,330 s, while the GNSS signal is still available, the whole system works in loosely coupled mode and the three algorithms show the same results. Once the GNSS outage is assumed, the navigation performance of the three methods differs.

Figure 12.

Position errors of 60 s outages with different algorithms.

Figure 13.

Velocity errors of 60 s outages with different algorithms.

Figure 14.

Heading errors of 60 s outages with different algorithms.

In Figure 12 and Figure 13, the proposed LSTM algorithm generally outperforms the MLP algorithm and pure INS. The navigation errors of all three methods gradually deteriorate with time during the GNSS signal outage. In Figure 12, the max position error in east of pure INS, MLP, and LSTM is 42.4, 10.7, and 1.5 m, respectively, while the max position error in north is 15.6, 21.1, and 9.25 m. In Figure 13, the max velocity error in east of pure INS, MLP, and LSTM is 0.58, 0.95, and 0.12 m/s, respectively, while the max velocity error in north is 1.5, 0.24, and 0.32 m/s. In Figure 14, the heading error of the three algorithms is 1.15°, 0.95°, and 0.18°. All the results are summarized in Table 5. At 475,390 s, the system returns to the loosely coupled mode, and the navigation error quickly converges for all three methods.

Table 5.

Max error of position, velocity, and heading during 60 s GNSS outages in different algorithms.
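The maximum errors tabulated for an outage can be obtained by restricting the error series to the outage interval, as in the following sketch. The helper name `max_abs_error` and the inclusive interval bounds are assumptions; the reference series stands for the RTK-based reference solution.

```python
import numpy as np


def max_abs_error(t, estimate, reference, start, end):
    """Largest absolute error between `estimate` and `reference`
    over the epochs with start <= t <= end (per column if 2-D)."""
    t = np.asarray(t, dtype=float)
    in_outage = (t >= start) & (t <= end)
    err = np.abs(np.asarray(estimate, dtype=float)
                 - np.asarray(reference, dtype=float))
    return err[in_outage].max(axis=0)
```

For the 60 s case this would be called with `start=475330` and `end=475390` on each of the position, velocity, and heading series.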

Figure 15 indicates that LSTM can greatly decrease the navigation error of pure INS. At the end of the GNSS outage, the LSTM algorithm reduces the position error by 80% compared with the pure INS mode.

Figure 15.

60 s outages trajectory.

5.3.2. 120-s Outage Experiment Results

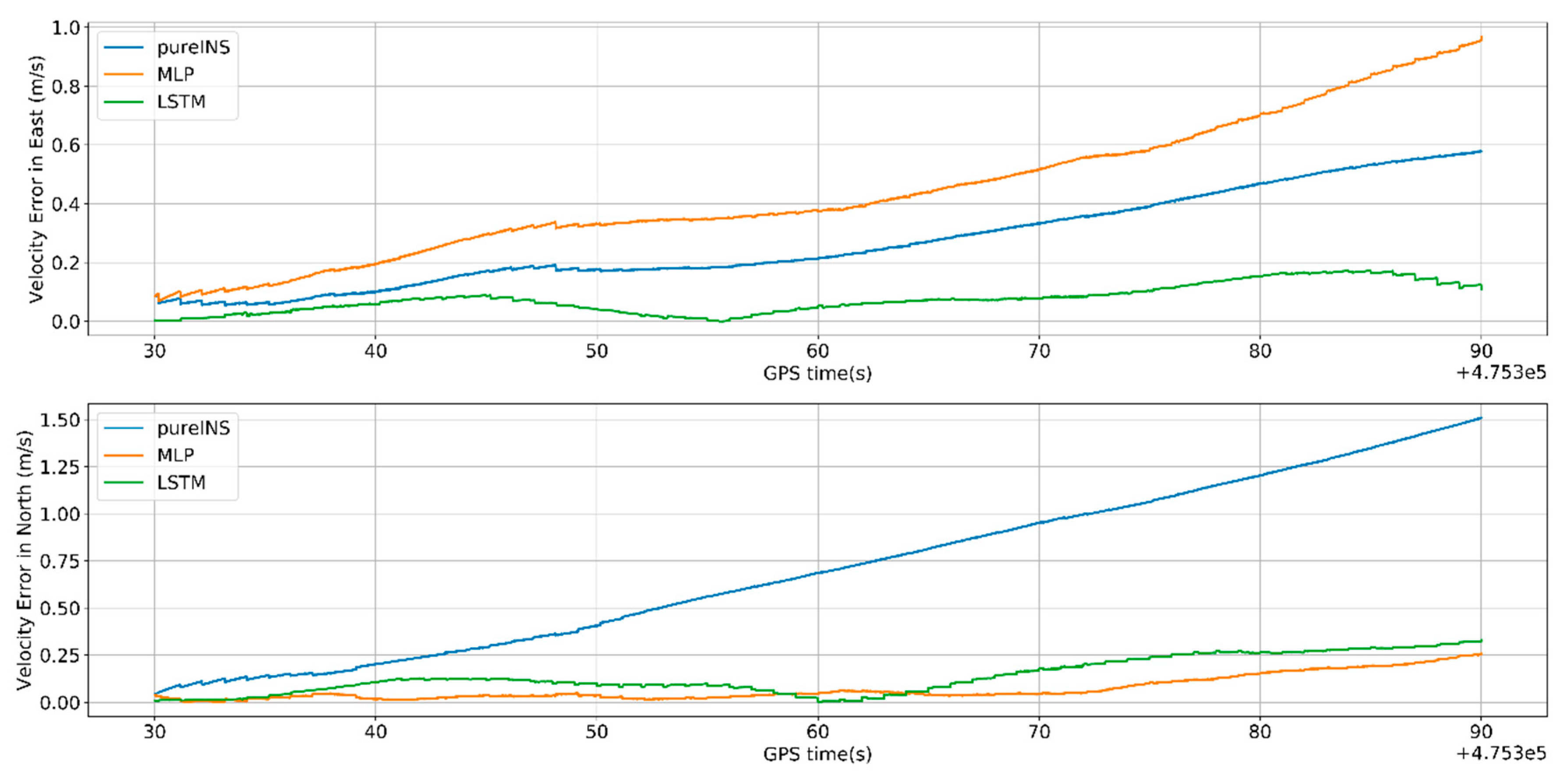

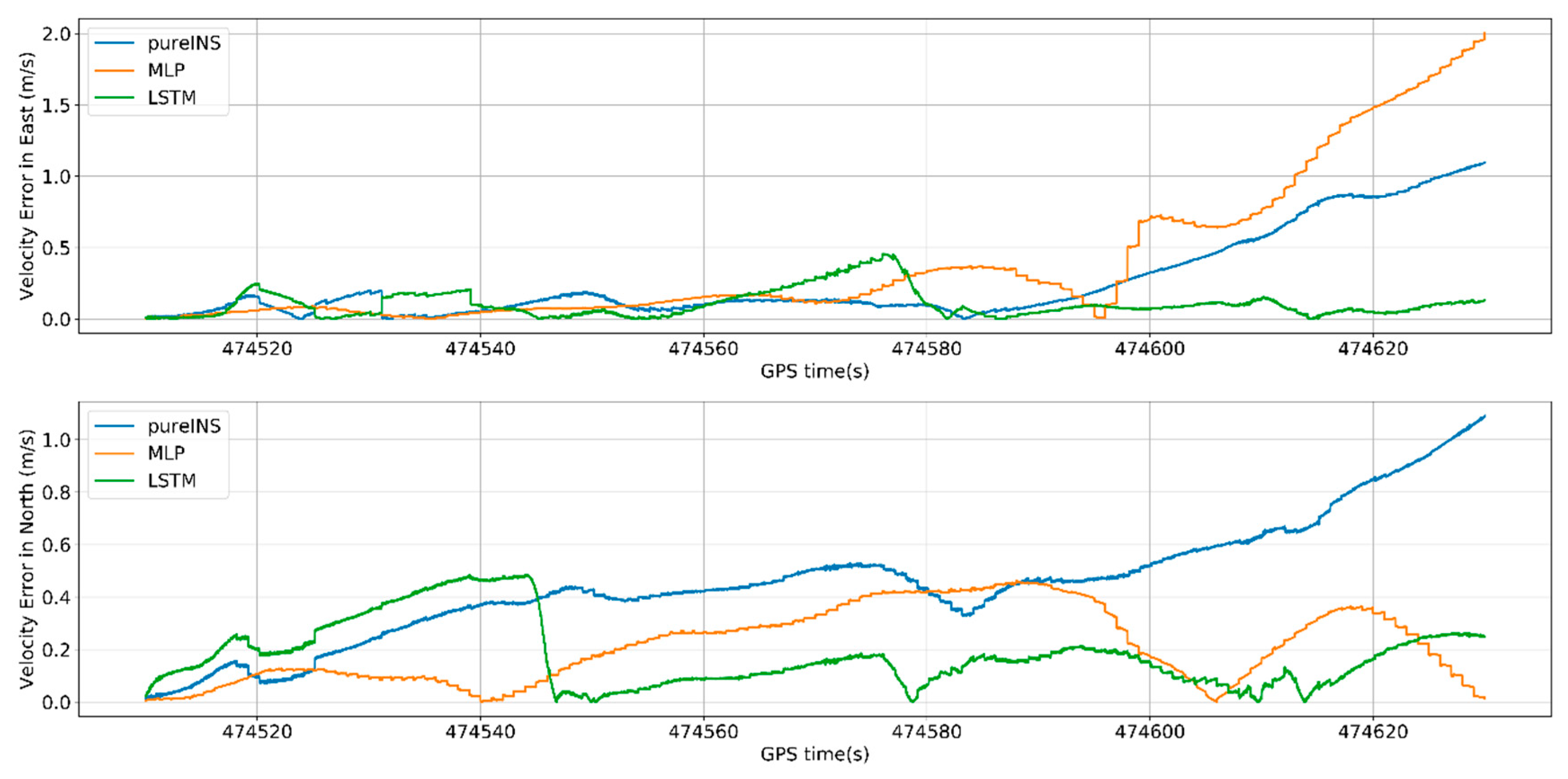

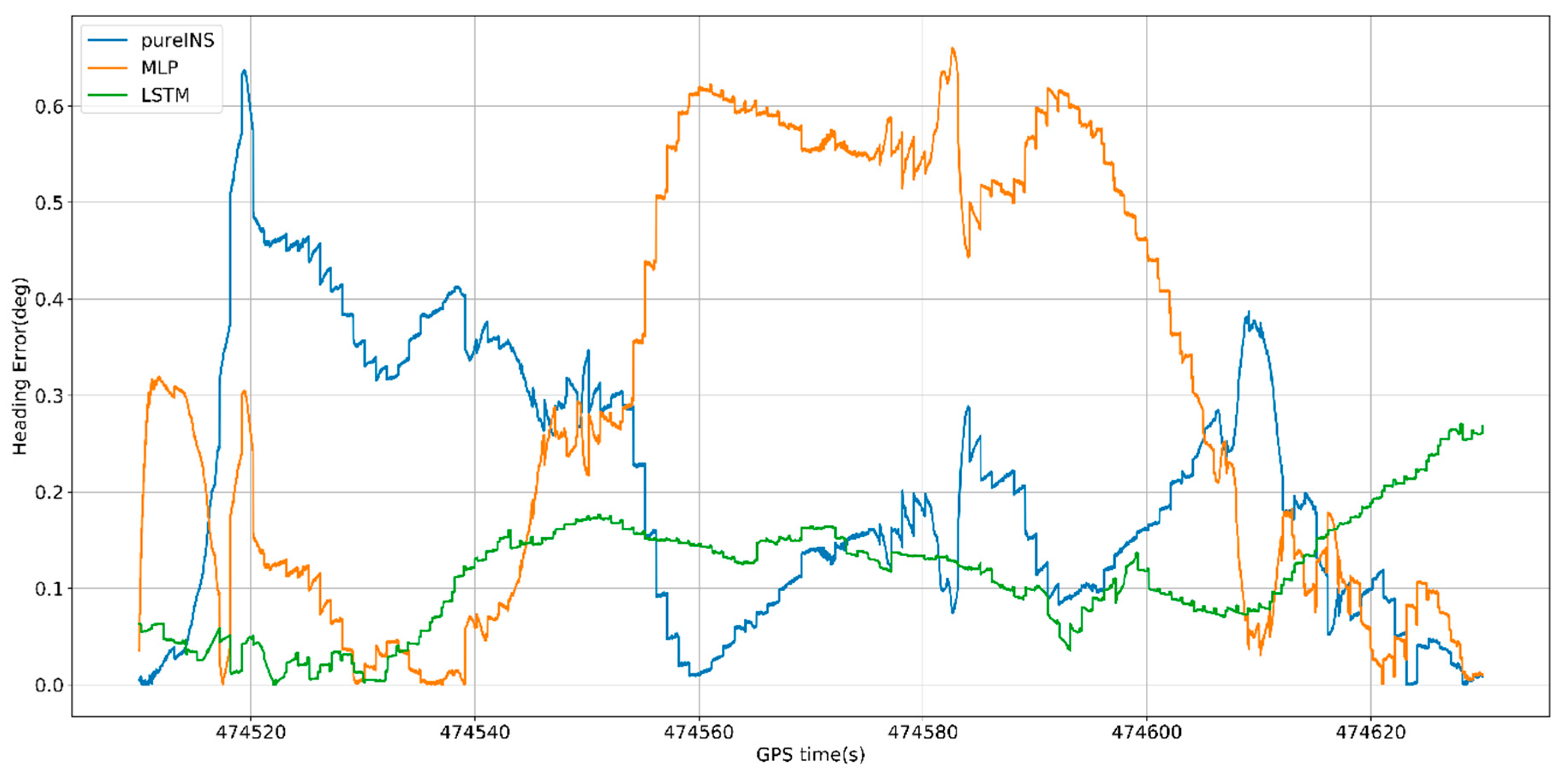

In this section, a longer GNSS outage and a more complicated scene were selected to test the performance of the different algorithms. Figure 16, Figure 17 and Figure 18 show the navigation results of the different algorithms during the 120 s GNSS outage.

Figure 16.

Position errors of 120 s outages with different algorithms.

Figure 17.

Velocity errors of 120 s outages with different algorithms.

Figure 18.

Heading errors of 120 s outages with different algorithms.

In Figure 16, at 474,630 s, the max position error in east of pure INS, MLP, and LSTM is 54.2, 79.7, and 19.4 m, respectively, while the max position error in north is 14.9, 18.6, and 11.3 m. In Figure 17, the max velocity error in east of pure INS, MLP, and LSTM is 1.1, 2.0, and 0.46 m/s, respectively, while the max velocity error in north is 1.1, 0.45, and 0.32 m/s. In Figure 18, the heading error of the three algorithms is 0.63°, 0.62°, and 0.27°. All the results are summarized in Table 6.

Table 6.

Max error of position, velocity, and heading during 120 s GNSS outages in different algorithms.

From Figure 16 and Figure 17, it can be seen that LSTM performs worse in the north direction during the first 40 s, but over the last 80 s LSTM performs better than MLP for position and velocity in both directions. In terms of time-averaged performance, LSTM also performs better than MLP.

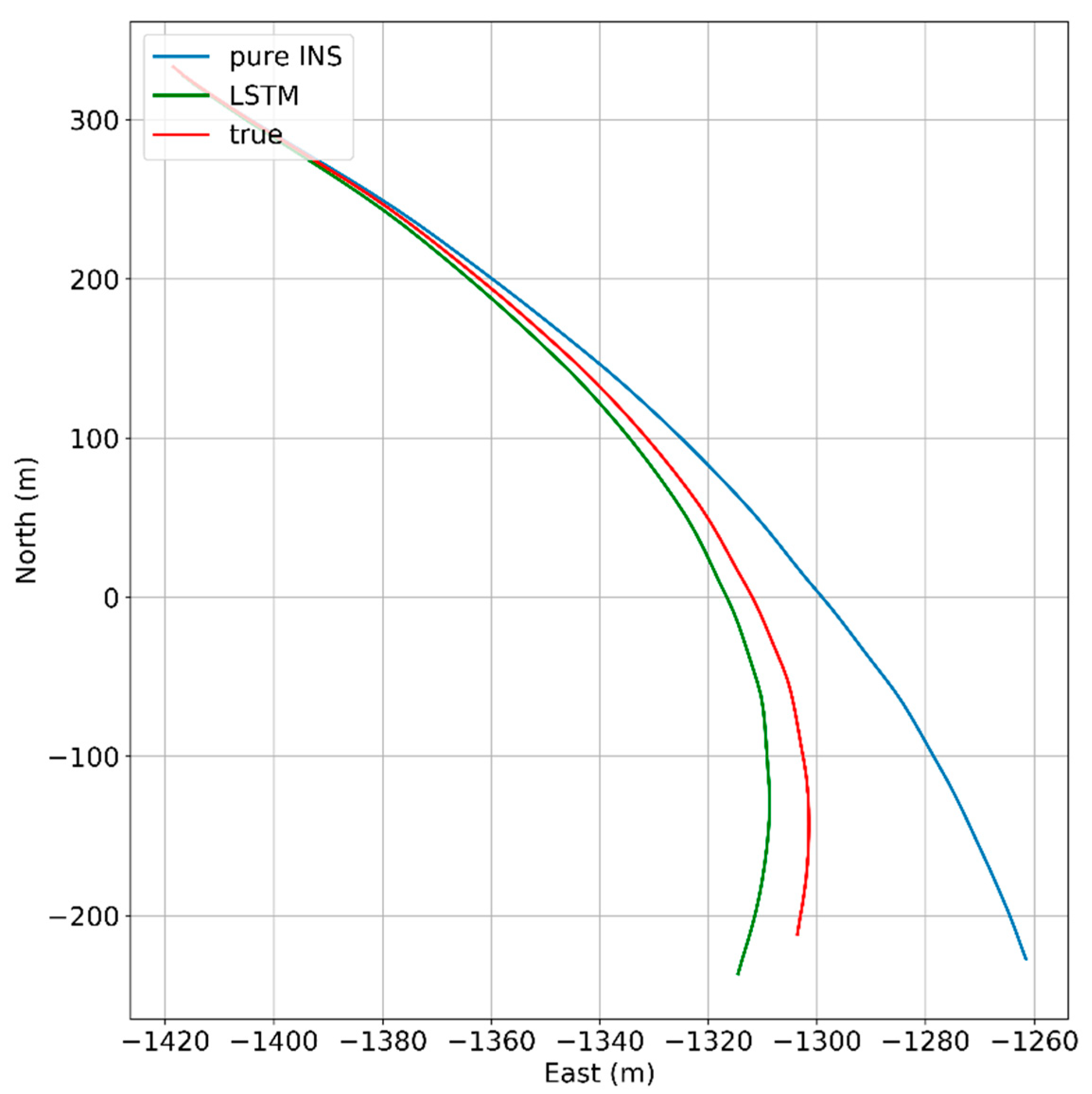

In Figure 19, under the longer GNSS signal outage and the complicated scene with three bends, the performance of all algorithms degrades, but LSTM still achieves higher navigation accuracy than pure INS. At the end of the GNSS outage, the LSTM algorithm reduces the position error by 68.5% compared with pure INS.

Figure 19.

120 s outages trajectory.

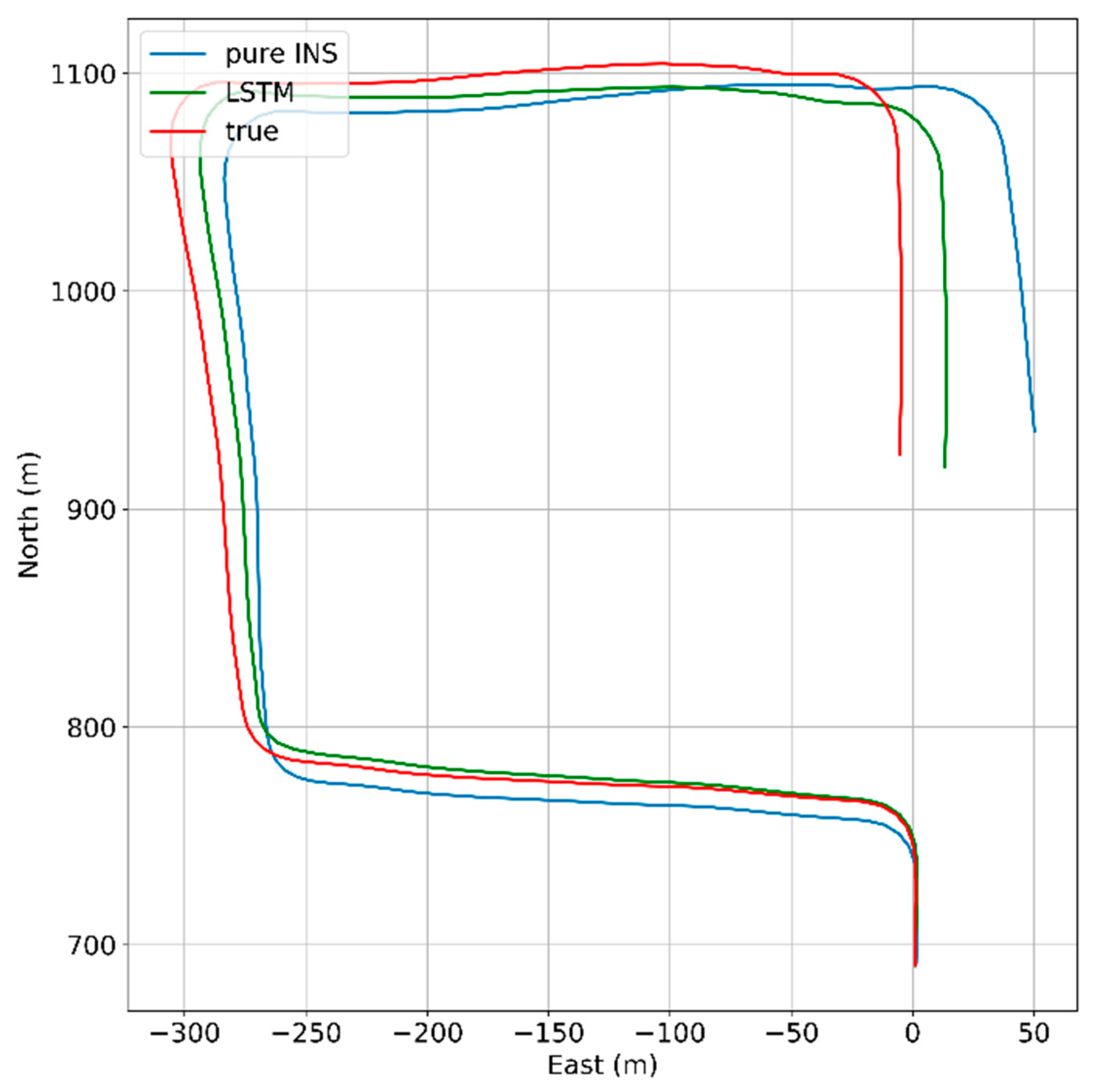

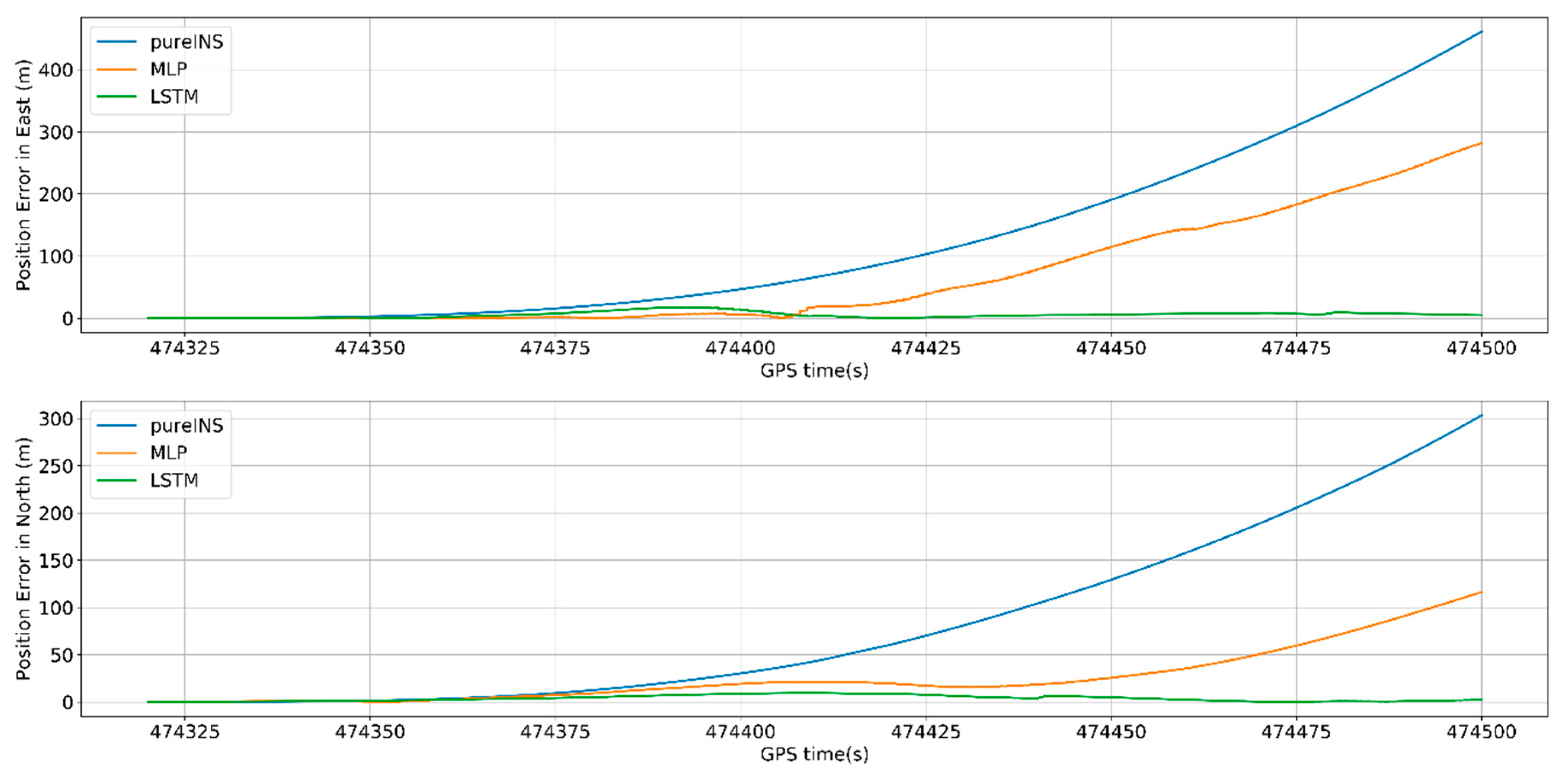

5.3.3. 180-s Outage Experiment Results

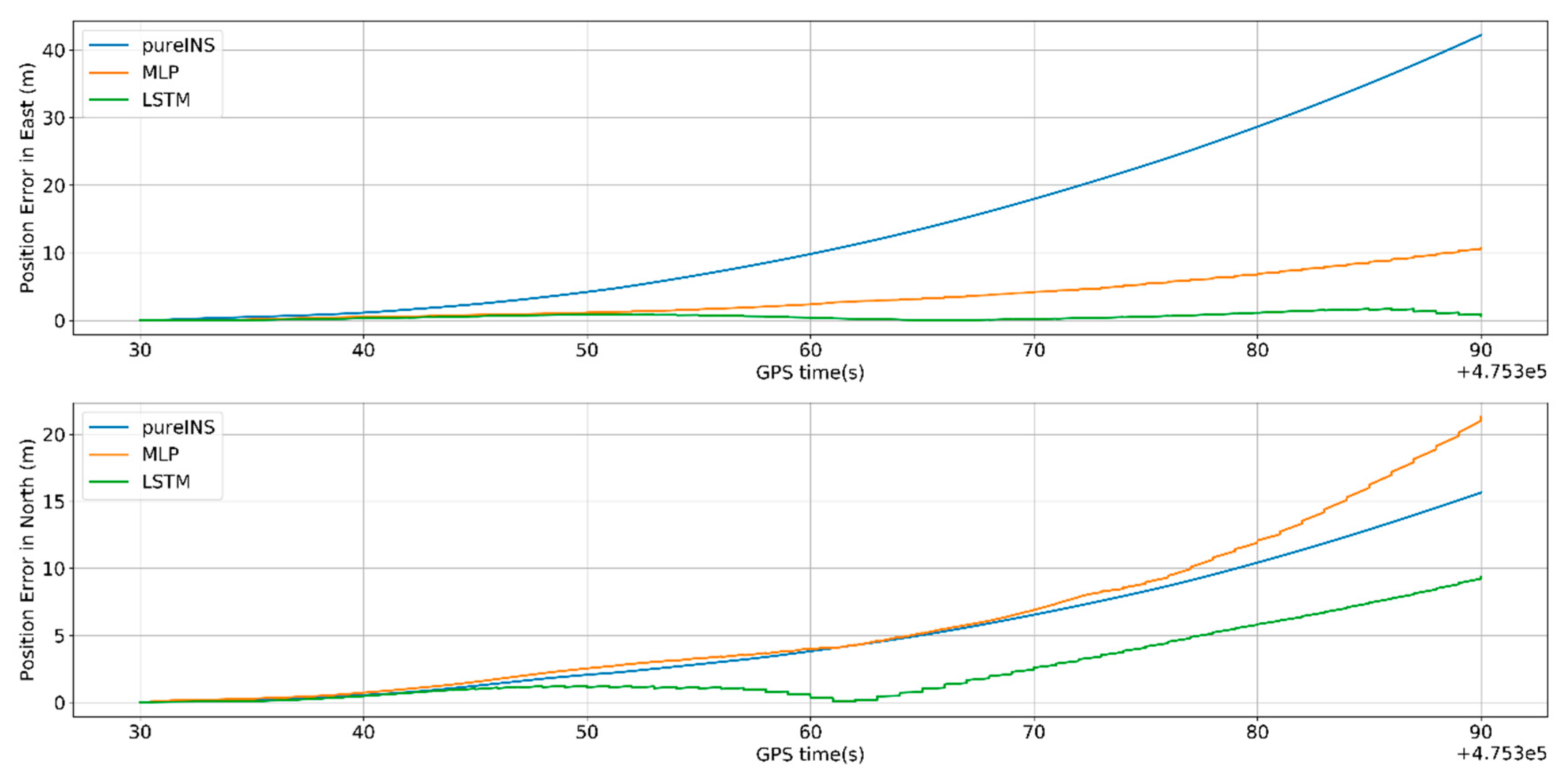

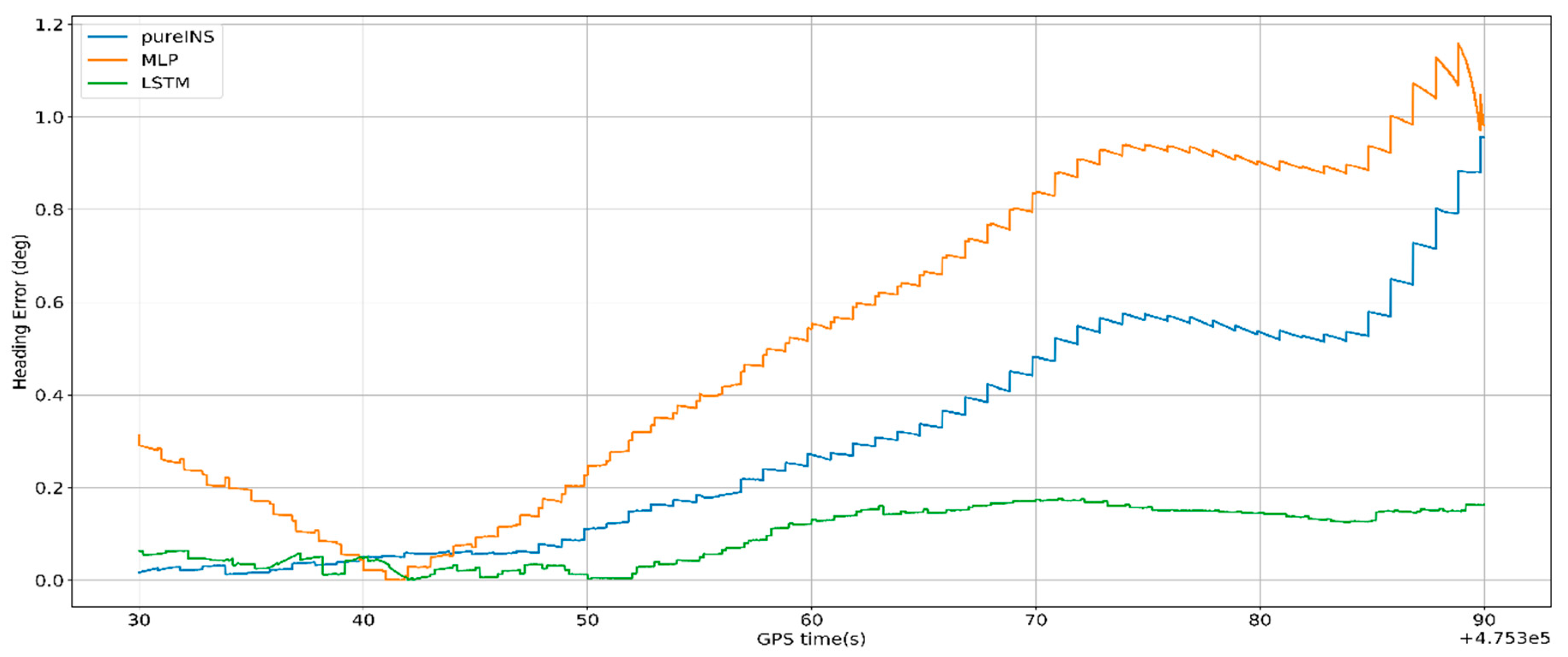

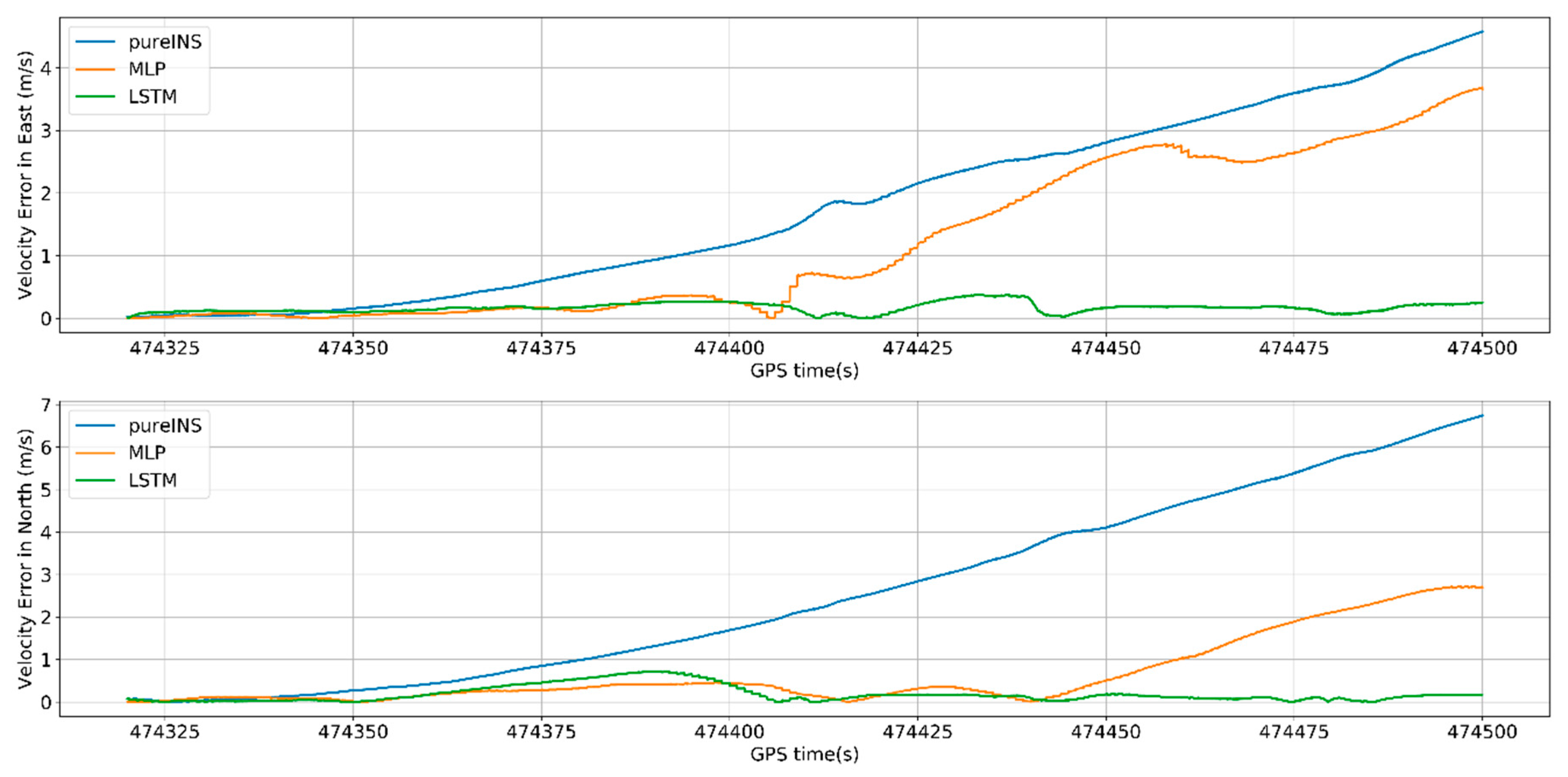

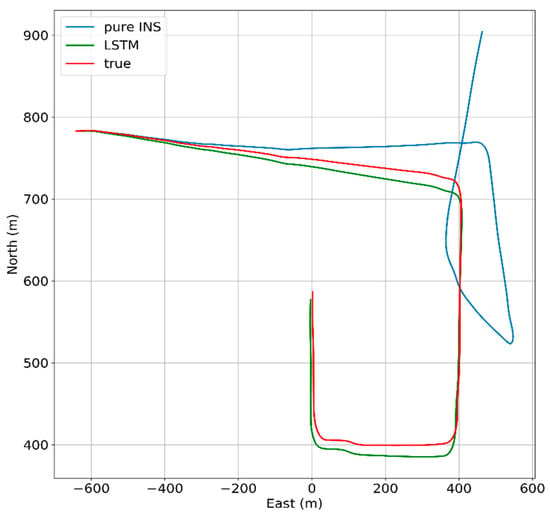

In this section, a 180 s GNSS outage was selected to test the performance of the different algorithms. Figure 20, Figure 21 and Figure 22 show the navigation results of the different algorithms during the 180 s GNSS outage.

Figure 20.

Position errors of 180 s outages with different algorithms.

Figure 21.

Velocity errors of 180 s outages with different algorithms.

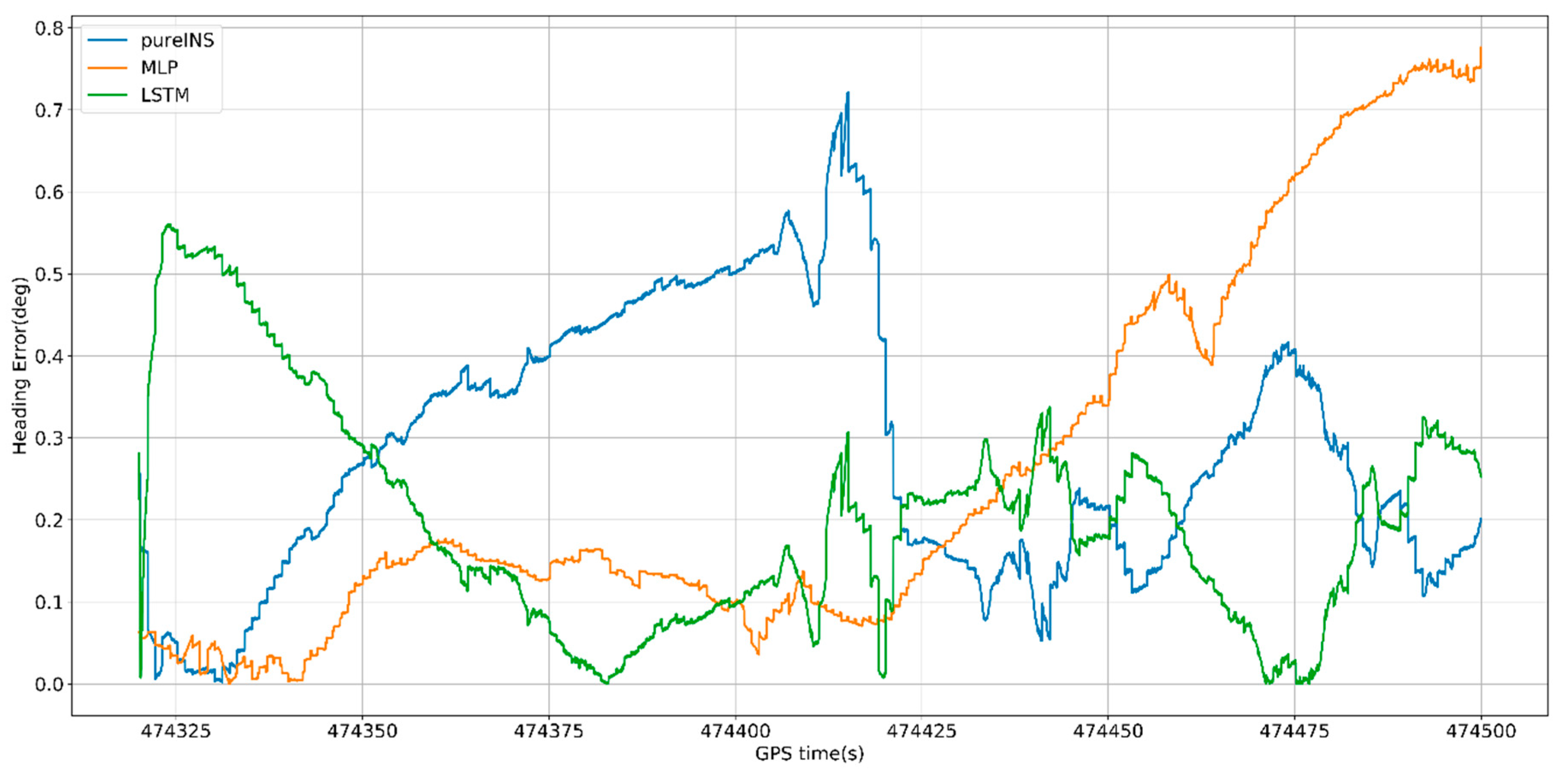

Figure 22.

Heading errors of 180 s outages with different algorithms.

In Figure 20, the proposed LSTM algorithm is clearly better than the MLP algorithm and pure INS. At 474,500 s, the max position error in east of pure INS, MLP, and LSTM is 460, 280, and 17 m, respectively, while the max position error in north is 303, 115, and 10 m. In Figure 21, the max velocity error in east of pure INS, MLP, and LSTM is 4.6, 3.7, and 0.4 m/s, respectively, while the max velocity error in north is 6.7, 2.8, and 0.7 m/s. In Figure 22, the heading error of the three algorithms is 0.7°, 0.75°, and 0.55°. All the results are summarized in Table 7.

Table 7.

Max error of position, velocity, and heading during 180 s GNSS outages in different algorithms.

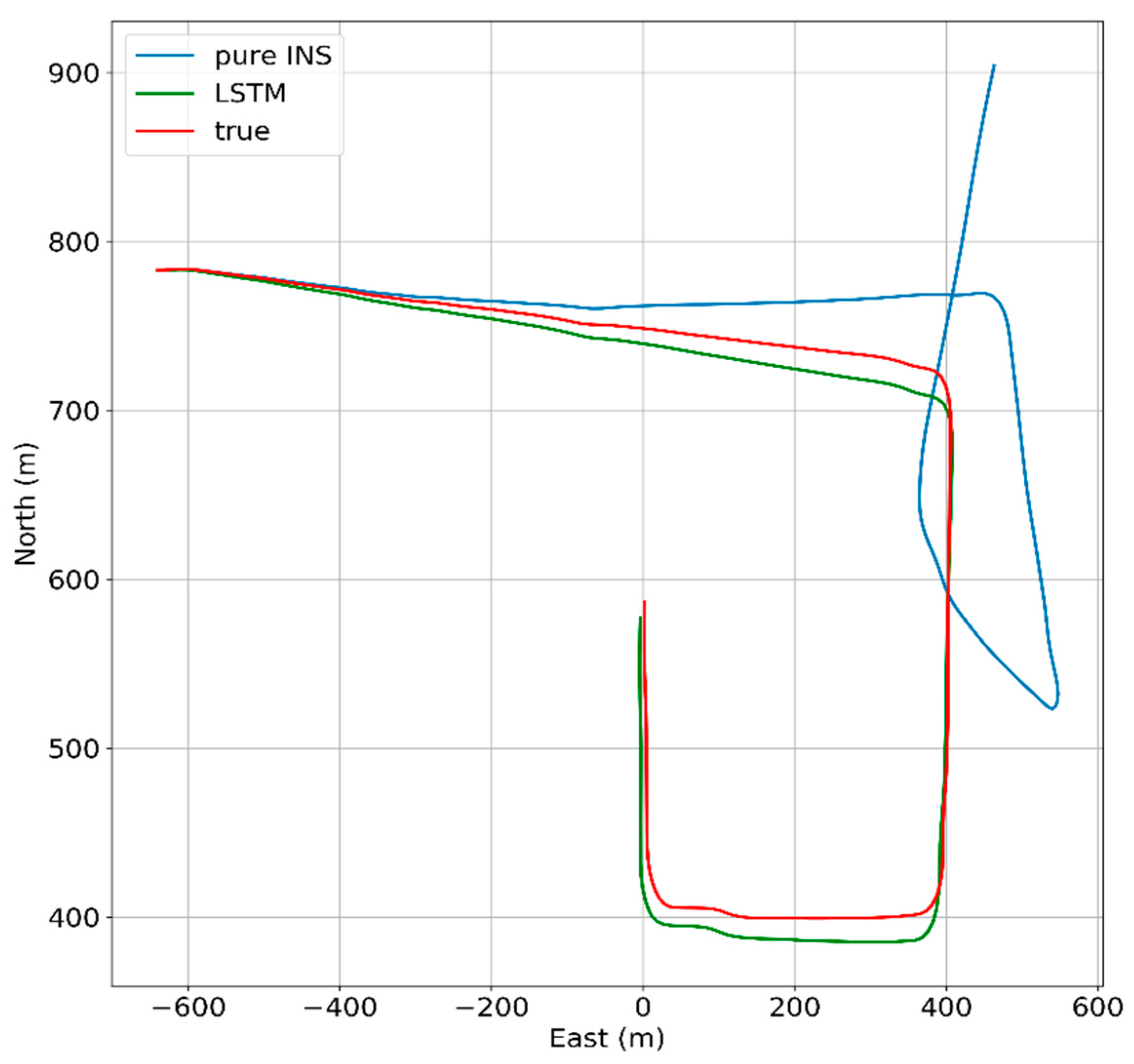

In Figure 23, under the 180 s GNSS signal outage and the complicated scene with three bends, the performance of all algorithms degrades, but LSTM still reduces the navigation errors. At the end of the GNSS outage, the LSTM algorithm reduces the position error by 95.5% compared with pure INS.

Figure 23.

LSTM 180 s outages trajectory.
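The relative improvements quoted in this section follow the usual relation, improvement = (baseline error − improved error) / baseline error. As a worked illustration, the sketch below applies it to the maximum east position errors reported for the 180 s outage (460 m for pure INS, 280 m for MLP, 17 m for LSTM). These are maximum errors rather than end-of-outage errors, so the results are not expected to reproduce the 95.5% figure exactly; the function name is hypothetical.

```python
def improvement_percent(baseline_error, improved_error):
    """Relative error reduction in percent with respect to the baseline."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return 100.0 * (baseline_error - improved_error) / baseline_error


# Max east position errors during the 180 s outage, in metres (from the text)
vs_ins = improvement_percent(460.0, 17.0)   # about 96.3
vs_mlp = improvement_percent(280.0, 17.0)   # about 93.9
```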

5.4. Experiment Summary

When the GNSS signal is not available, the whole system switches to prediction mode. The measurements from INS, namely the specific force, velocity, yaw, and angular rate, are sent to a well-trained AI-based model to obtain predicted position increments. Accumulating these position increments then gives the pseudo-GNSS position, which acts as a substitute for the true GNSS position; the difference between it and the INS position constitutes part of the measurement vector of the Kalman filter. Finally, the Kalman filter corrects the INS errors to obtain the corrected position, attitude, and velocity. The test results indicate that the MLP algorithm, which cannot handle the time dependency between current and past vehicle dynamics, is not a proper model for aiding INS during GNSS signal outages. When the GNSS signal is lost, the LSTM algorithm obtains a more accurate navigation result because of its capability of constructing the relationship between current and past information.
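The prediction-mode data flow summarized above can be sketched in two small steps: accumulate the predicted increments from the last valid GNSS fix, then use the INS-minus-pseudo-GNSS position difference in a linear Kalman measurement update. The state layout, the sign convention of the measurement, and the function names are assumptions of this sketch; the full loosely coupled filter of the paper has more states and is not reproduced here.

```python
import numpy as np


def pseudo_gnss_positions(last_fix, predicted_increments):
    """Accumulate predicted position increments starting from the last
    valid GNSS position to obtain pseudo-GNSS positions."""
    inc = np.asarray(predicted_increments, dtype=float)
    return np.asarray(last_fix, dtype=float) + np.cumsum(inc, axis=0)


def kf_position_update(x, P, ins_pos, pseudo_pos, H, R):
    """Standard Kalman measurement update on an error state x.

    The measurement is the INS position minus the pseudo-GNSS position
    (sign convention assumed). H maps the error state to position error.
    """
    z = np.asarray(ins_pos, dtype=float) - np.asarray(pseudo_pos, dtype=float)
    S = H @ P @ H.T + R                    # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)         # Kalman gain
    x_new = x + K @ (z - H @ x)
    P_new = (np.eye(P.shape[0]) - K @ H) @ P
    return x_new, P_new
```

The estimated error state would then be fed back to correct the INS position, velocity, and attitude, exactly as it is when real GNSS measurements are available.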

6. Conclusions

This paper proposes a novel approach that estimates pseudo measurements with the LSTM algorithm to reduce the accumulating INS errors when the GNSS signal is blocked. Even in challenging environments, such as complete GNSS outages, this hybrid approach, which incorporates an LSTM neural network, leads to a substantial decrease in the position error of the vehicle. In this research, the input features and output of the LSTM module were carefully analyzed. The input features, including the specific force, angular rate, velocity, and yaw, represent the dynamic vehicle information well. The estimated output was selected as the position increment, which avoids introducing additional errors caused by mixing the information of GNSS and INS. Compared with the MLP algorithm, a traditional static neural network, the proposed LSTM-based algorithm obtains more accurate and stable predictions of navigation results because of its strong capability of dealing with sequential processes. Thus, the LSTM algorithm provides a more accurate and reliable way to develop a navigation scheme during GNSS outages.

Author Contributions

This paper is a collaborative work by all of the authors. Conceptualization, J.J. and W.F.; Data curation, W.F., J.J., Y.T. (Yifeng Tao), S.L., and Y.G.; Formal analysis, J.J. and W.F.; Funding acquisition, J.J.; Investigation, W.F., J.J., P.Y., and Y.T. (Yifeng Tao); Methodology, W.F., Y.T. (Yanan Tang), S.L., and J.J.; Project administration, J.J.; Software, W.F., Y.T. (Yifeng Tao), S.L., Y.G., and Y.T. (Yanan Tang); Supervision, J.J.; Validation, W.F., Y.T. (Yifeng Tao), and Y.T. (Yanan Tang); Writing (original draft), W.F. and J.J.; Writing (review and editing), W.F., J.J., J.L., P.Y., and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (2018YFB0505200 and 2018YFB0505201) and the Fundamental Research Funds for the Central Universities (2042018kf0253).

Acknowledgments

Part of this work is supported by the Collaborative Innovation Center of Geospatial Technology, Wuhan University, who also provided the experimental sites and testers.

Conflicts of Interest

The authors declare that they have no conflict of interest to disclose.

References

1. Srinivas, P.; Anil, K. Overview of architecture for GNSS-INS integration. In Proceedings of the 2017 Recent Developments in Control, Automation & Power Engineering (RDCAPE), Noida, India, 26–27 October 2017; pp. 433–438.

2. Groves, P.D.; Wang, L. The four key challenges of advanced multi-sensor navigation and positioning. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium - PLANS 2014, Monterey, CA, USA, 5–8 May 2014; pp. 773–792.

3. Gao, S. Multi-sensor optimal data fusion for INS/GNSS/SAR integrated navigation system. Aerosp. Sci. Technol. 2009, 13, 232–237.

4. Li, X.; Chen, W. Multi-sensor fusion methodology for enhanced land vehicle positioning. Inf. Fusion 2019, 46, 51–62.

5. Havyarimana, V.; Hanyurwimfura, D. A novel hybrid approach based-SRG model for vehicle position prediction in multi-GNSS outage conditions. Inf. Fusion 2018, 41, 1–8.

6. Abdolkarimi, E.S.; Mosavi, M.R. Optimization of the low-cost INS/GNSS navigation system using ANFIS for high speed vehicle application. In Proceedings of the 2015 Signal Processing and Intelligent Systems Conference (SPIS), Tehran, Iran, 16–17 December 2015; pp. 93–98.

7. Adusumilli, S.; Bhatt, D. A low-cost INS/GNSS integration methodology based on random forest regression. Expert Syst. Appl. 2013, 40, 4653–4659.

8. Ye, W.; Liu, Z. Enhanced Kalman Filter using Noisy Input Gaussian Process Regression for Bridging GNSS Outages in a POS. J. Navig. 2018, 71, 565–584.

9. Yang, Y.X. Adaptive Navigation and Kinematic Positioning, 2nd ed.; Surveying and Mapping Press: Beijing, China, 2008; pp. 18–19. ISBN 978-7-5030-4005-4.

10. Du, S.; Gao, Y. Inertial aided cycle slip detection and identification for integrated PPP GNSS and INS. Sensors 2012, 12, 14344–14362.

11. Xu, Z. Novel hybrid of LS-SVM and Kalman filter for GNSS/INS integration. J. Navig. 2010, 63, 289–299.

12. Sharaf, R.; Noureldin, A. Online INS/GNSS integration with a radial basis function neural network. IEEE Aerosp. Electron. Syst. Mag. 2005, 20, 8–14.

13. Sharaf, R.; Noureldin, A. Sensor integration for satellite-based vehicular navigation using neural networks. IEEE Trans. Neural Netw. 2007, 18, 589–594.

14. El-Sheimy, N.; Chiang, K.-W.; Noureldin, A. The utilization of artificial neural networks for multisensor system integration in navigation and positioning instruments. IEEE Trans. Instrum. Meas. 2006, 55, 1606–1615.

15. Jaradat, M.A.K.; Abdel-Hafez, M.F. Non-linear autoregressive delay-dependent INS/GNSS navigation system using neural networks. IEEE Sens. J. 2017, 17, 1105–1115.

16. Li, J.; Song, N. Improving positioning accuracy of vehicular navigation system during GNSS outages utilizing ensemble learning algorithm. Inf. Fusion 2017, 35, 1–10.

17. Adusumilli, S.; Bhatt, D. A novel hybrid approach utilizing principal component regression and random forest regression to bridge the period of GNSS outages. Neurocomputing 2015, 166, 185–192.

18. Tan, X. GA-SVR and pseudo-position-aided GNSS/INS integration during GNSS outage. J. Navig. 2015, 68, 678–696.

19. Indelman, V.; Williams, S.; Kaess, M.; Dellaert, F. Information fusion in navigation systems via factor graph based incremental smoothing. Robot. Auton. Syst. 2013, 61, 721–738.

20. Dai, H.F.; Bian, H.W.; Wang, R.Y.; Ma, H. An INS/GNSS integrated navigation in GNSS denied environment using recurrent neural network. Def. Technol. 2019.

21. Malleswaran, M.; Vaidehi, V.; Sivasankari, N. A novel approach to the integration of GNSS and INS using recurrent neural networks with evolutionary optimization techniques. Aerosp. Sci. Technol. 2014, 32, 169–179.

22. Noureldin, A.; El-Shafie, A.; Bayoumi, M. GNSS/INS integration utilizing dynamic neural networks for vehicular navigation. Inf. Fusion 2011, 12, 48–57.

23. Hasan, A.M.; Samsudin, K. GNSS/INS integration based on dynamic ANFIS network. Int. J. Control Autom. 2012, 5, 1–21.

24. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

25. Wu, Y.; Schuster, M.; Chen, Z. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv 2016, arXiv:1609.08144.

26. Ma, X. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197.

27. Duan, Y.; Lv, Y.; Wang, F.Y. Travel time prediction with LSTM neural network. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1053–1058.

28. Zhang, Y. Hybrid algorithm based on MDF-CKF and RF for GNSS/INS system during GNSS outages. IEEE Access 2018, 6, 35343–35354.

29. Trawny, N.; Mourikis, A.I. Vision-aided inertial navigation for pin-point landing using observations of mapped landmarks. J. Field Robot. 2007, 24, 357–378.

30. Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems; Artech House: London, UK, 2013.

31. Julier, S.J.; Uhlmann, J.K. New extension of the Kalman filter to nonlinear systems. Aerosense Int. Soc. Opt. Photonics 1997.

32. Fernando, T. Soft hardwired attention: An LSTM framework for human trajectory prediction and abnormal event detection. Neural Netw. 2018, 108, 466–478.

33. Zhao, Z.; Chen, W. LSTM network: A deep learning approach for short-term traffic forecast. IET Intell. Transp. Syst. 2017, 11, 68–75.

34. Saleh, K.; Mohammed, H.; Saied, N. Intent prediction of vulnerable road users from motion trajectories using stacked LSTM network. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017.

35. Shi, X. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

36. Bhatt, D. A novel hybrid fusion algorithm to bridge the period of GNSS outages using low-cost INS. Expert Syst. Appl. 2014, 41, 2166–2173.

37. Abdel-Hamid, W.; Noureldin, A. Adaptive fuzzy prediction of low-cost inertial-based positioning errors. IEEE Trans. Fuzzy Syst. 2007, 15, 519–529.

38. Farrell, J.; Barth, M. The Global Positioning System and Inertial Navigation; McGraw-Hill: New York, NY, USA, 1999; Volume 61.

39. Goshen-Meskin, D.; Bar-Itzhack, I.Y. Unified approach to inertial navigation system error modeling. J. Guid. Control Dyn. 1992, 15, 648–653.

40. Liu, W.; Wen, Y. Large-margin softmax loss for convolutional neural networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 507–516.

41. Abadi, M.; Barham, P. Tensorflow: A system for large-scale machine learning. In Proceedings of the OSDI’16: 12th USENIX conference on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

42. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference for Learning Representations, San Diego, CA, USA, 7–9 May 2015.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).