Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures

Abstract

1. Introduction

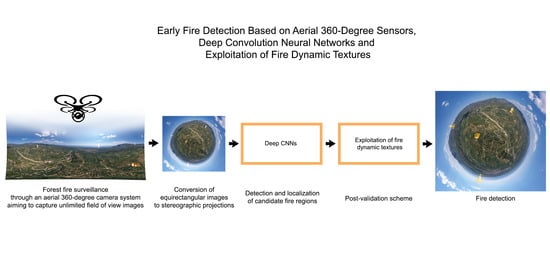

- We propose a novel early fire detection remote sensing system that uses aerial 360-degree digital cameras in an operationally efficient and time-efficient manner. It aims to overcome the limited field of view of state-of-the-art systems and their reliance on human-controlled capture of specified targets.

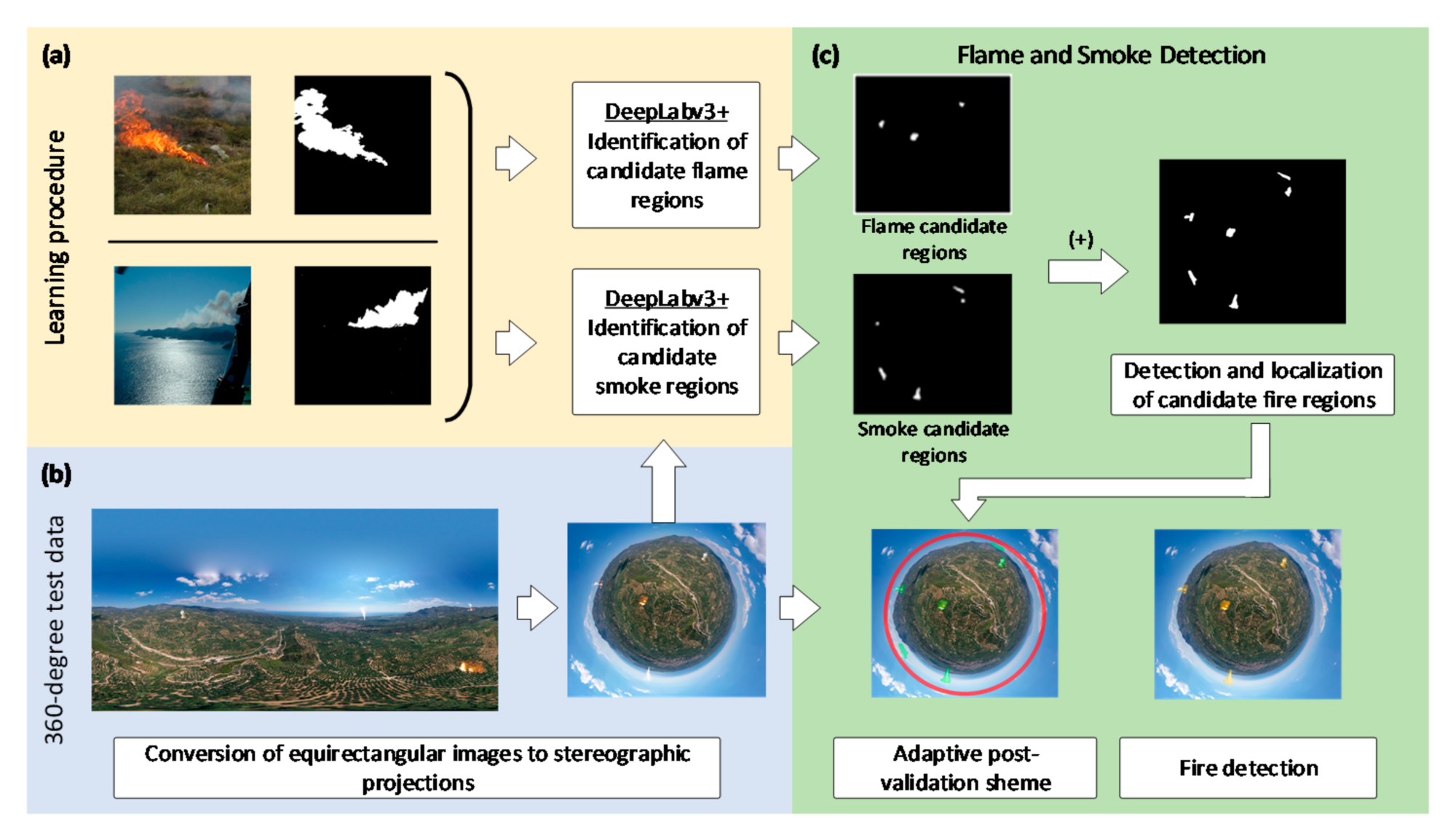

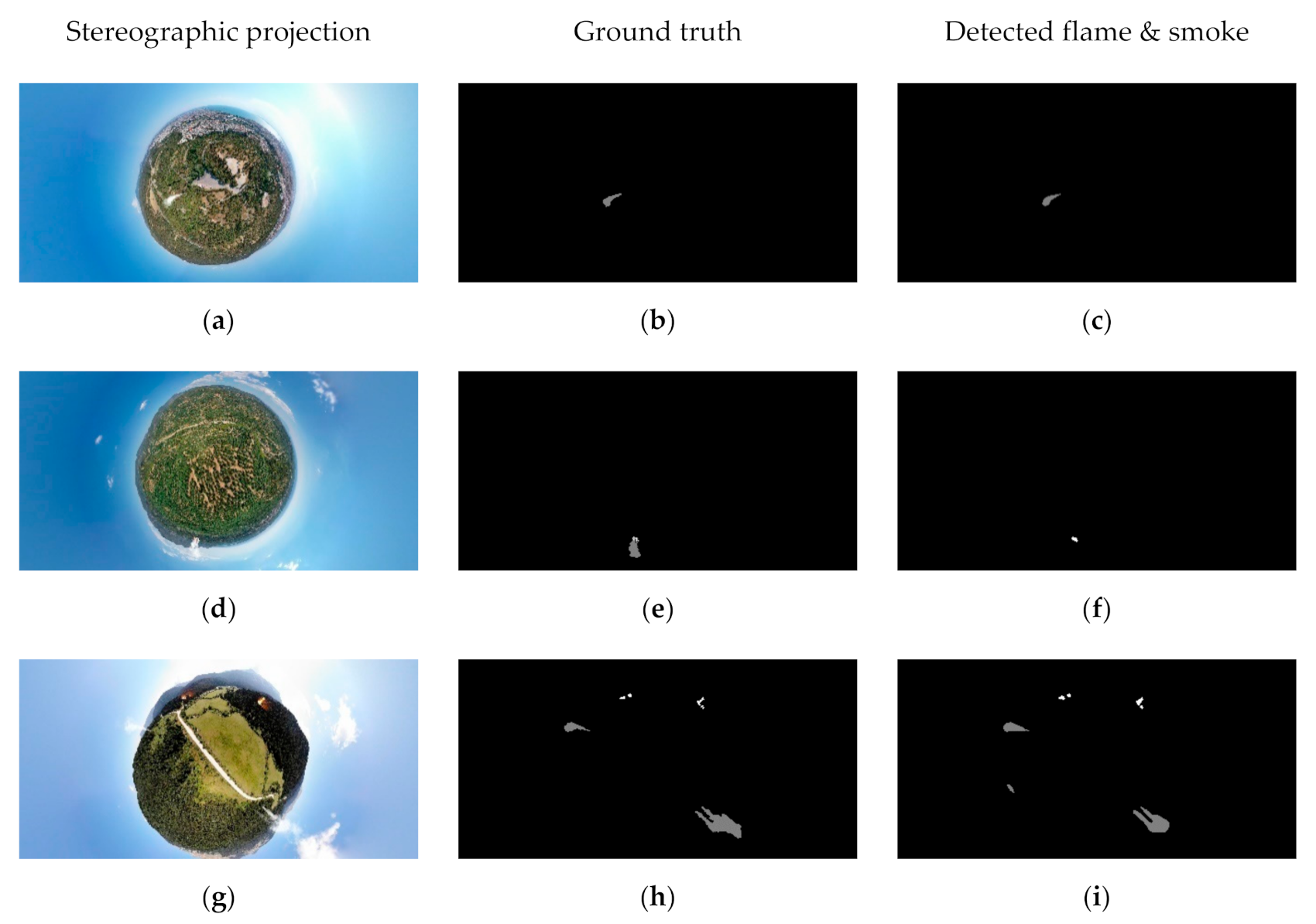

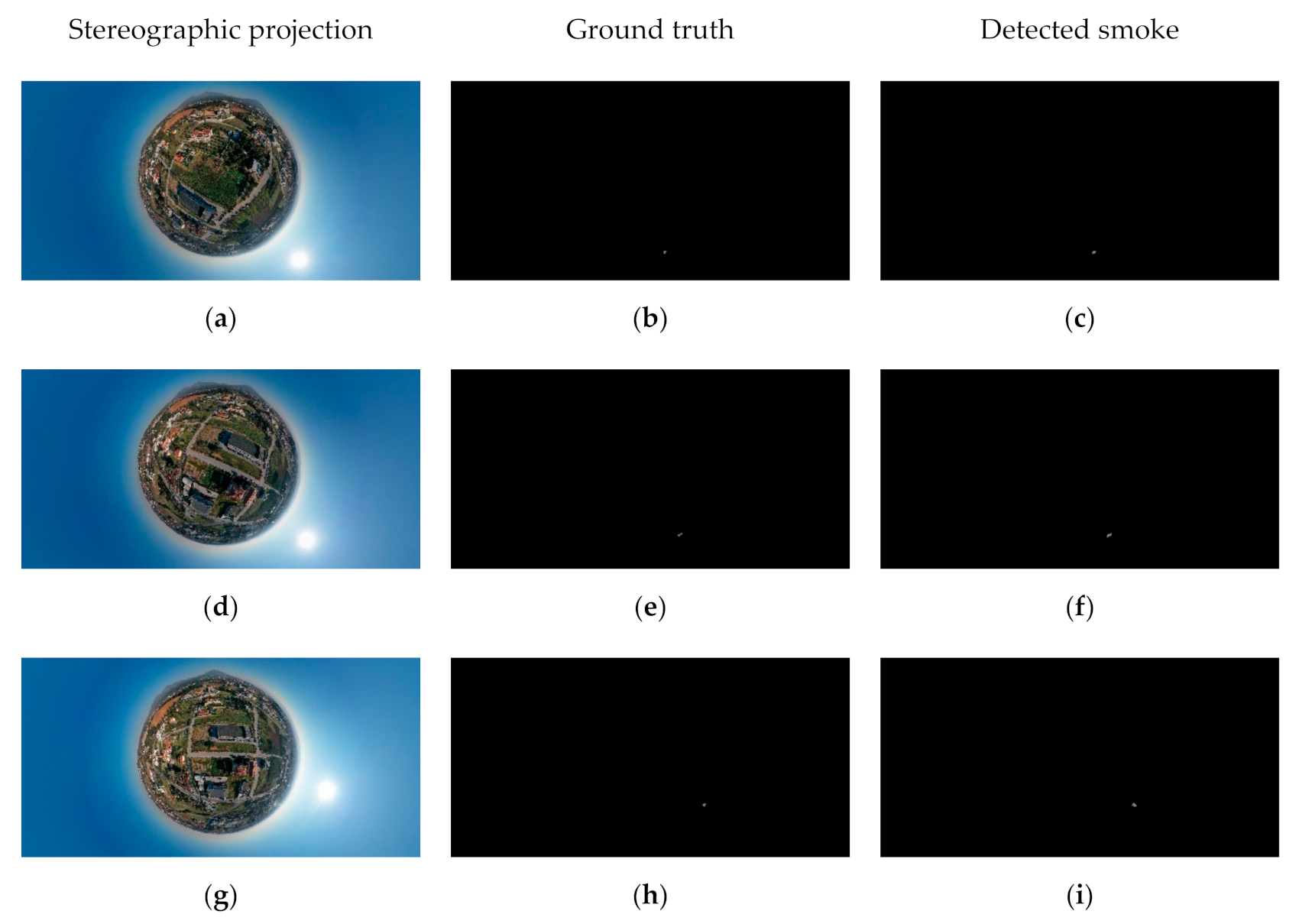

- We propose a novel fire detection method based on the extraction of stereographic projections, which detects both flame and smoke with two deep convolutional neural networks. Specifically, we first perform flame and smoke segmentation, identifying candidate fire regions with two DeepLab v3+ models. The detected regions are then combined and validated by taking into account the environmental appearance of the test image under examination.
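The stereographic projection step mentioned in the second contribution can be illustrated with a minimal sketch. It assumes a single nadir-centred ("little planet") view and nearest-neighbour sampling; the function name `stereographic_from_equirect` and the parameters `out_size` and `plane_extent` are hypothetical and not taken from the paper, which may use different projection centres and interpolation.

```python
import numpy as np

def stereographic_from_equirect(equi, out_size=256, plane_extent=2.0):
    """Sample a nadir-centred stereographic view from an equirectangular image.

    equi: array of shape (H, W) or (H, W, C), row 0 = zenith, last row = nadir.
    plane_extent: half-width of the projection plane (assumed parameter);
                  larger values include more of the sky.
    """
    h, w = equi.shape[:2]
    # Coordinates on the projection plane, centred on the nadir.
    coords = np.linspace(-plane_extent, plane_extent, out_size)
    x, y = np.meshgrid(coords, coords)
    # Inverse stereographic mapping for a unit sphere: r = 2 * tan(c / 2).
    r = np.hypot(x, y)
    c = 2.0 * np.arctan(r / 2.0)      # angular distance from the nadir
    lon = np.arctan2(y, x)            # longitude in [-pi, pi]
    lat = c - np.pi / 2.0             # latitude, nadir = -pi/2
    # Convert spherical coordinates to equirectangular pixel indices.
    u = ((lon + np.pi) / (2.0 * np.pi) * w).astype(int) % w
    v = np.clip(((np.pi / 2.0 - lat) / np.pi * h).astype(int), 0, h - 1)
    return equi[v, u]

# Synthetic check: every pixel stores its own row index.
equi = np.tile(np.arange(64)[:, None], (1, 128))
view = stereographic_from_equirect(equi, out_size=33)
print(view.shape, view[16, 16], view[0, 0])
```

In this sketch the centre of the output looks straight down and the rows of the source image closer to the horizon appear towards the edges. Each projected view could then be passed to the flame and smoke segmentation networks.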

2. Materials and Methods

2.1. Data Description

2.2. Stereographic Projection of Equirectangular Raw Projections

2.3. Detection and Localization of Candidate Fire Regions

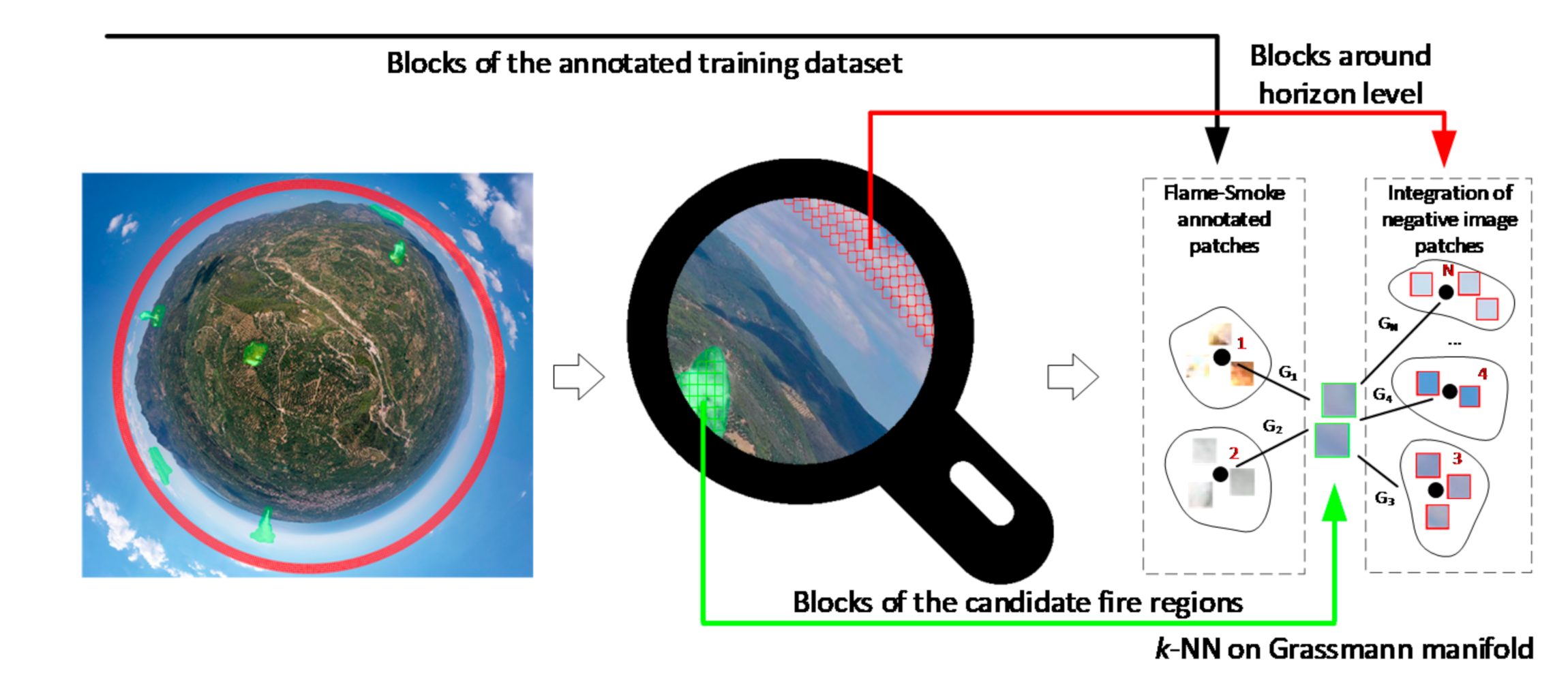

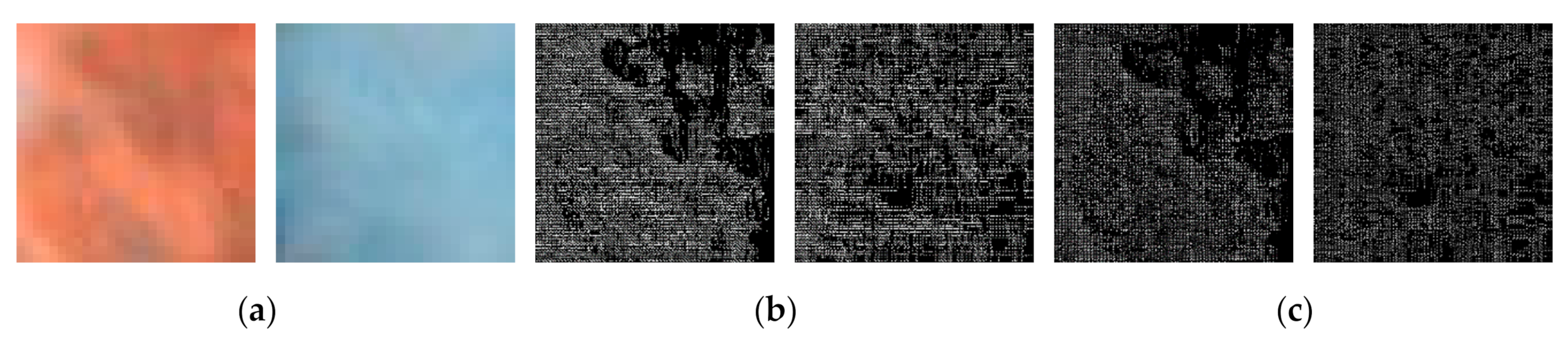

2.4. Adaptive Post-Validation Scheme

3. Experimental Results

3.1. Ablation Analysis

3.2. Comparison Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Method | Flame mIoU | Flame F-score | Smoke mIoU | Smoke F-score | Flame or smoke mIoU | Flame or smoke F-score | Flame or smoke precision | Flame or smoke recall |
|---|---|---|---|---|---|---|---|---|
| DeepLab v3+ | 76.5% | 81.3% | 65.2% | 80.1% | 71.2% | 81.4% | 68.9% | 99.3% |
| Proposed | 78.2% | 94.8% | 70.4% | 93.9% | 77.1% | 94.6% | 90.3% | 99.3% |
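As a consistency check on the flame-or-smoke columns, the F-score can be recomputed from the reported precision and recall. This is a minimal sketch that assumes the reported F-score is the balanced F1 measure (the harmonic mean of precision and recall), which the table itself does not state; `f1_score` is an illustrative helper name.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed definition of F-score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Precision and recall for flame-or-smoke detection, taken from the table above.
rows = {"DeepLab v3+": (0.689, 0.993), "Proposed": (0.903, 0.993)}
for name, (p, r) in rows.items():
    print(f"{name}: F1 = {100 * f1_score(p, r):.1f}%")
```

Under that assumption both rows reproduce the reported values (81.4% and 94.6%), so the gain of the proposed method in this column comes from higher precision at unchanged recall.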

| Method | Flame mIoU | Flame F-score | Smoke mIoU | Smoke F-score | Flame or smoke mIoU | Flame or smoke F-score |
|---|---|---|---|---|---|---|
| SSD | 61.2% | 69.7% | 59.1% | 67.3% | 59.8% | 67.6% |
| FireNet | 62.9% | 72.2% | 60.5% | 70.5% | 61.4% | 71.1% |
| YOLO v3 | 71.4% | 80.6% | 68.2% | 78.3% | 69.5% | 78.8% |
| Faster R-CNN | 65.8% | 72.7% | 64.1% | 70.6% | 65.0% | 71.5% |
| Faster R-CNN/Grassmannian VLAD encoding | 74.4% | 83.4% | 69.9% | 87.4% | 73.8% | 87.4% |
| U-Net | 68.4% | 74.4% | 64.8% | 71.3% | 67.4% | 71.9% |
| Proposed | 78.2% | 94.8% | 70.4% | 93.9% | 77.1% | 94.6% |
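For readers unfamiliar with the mIoU columns, the sketch below shows one common way to compute it for binary masks: intersection over union per image, averaged over the test images. This is an assumption for illustration only. The averaging convention used to produce the reported figures is not given in this excerpt, and the helper names `iou` and `mean_iou` are hypothetical.

```python
import numpy as np

def iou(pred, target):
    """Intersection over union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Both masks are empty: treat as perfect agreement (a convention).
        return 1.0
    return float(np.logical_and(pred, target).sum() / union)

def mean_iou(preds, targets):
    """Average the per-image IoU over a set of mask pairs."""
    return float(np.mean([iou(p, t) for p, t in zip(preds, targets)]))

# Toy example: one partially correct mask and one exact match.
pred_a, gt_a = [[1, 1], [0, 0]], [[1, 0], [0, 0]]
pred_b, gt_b = [[0, 1], [0, 1]], [[0, 1], [0, 1]]
print(mean_iou([pred_a, pred_b], [gt_a, gt_b]))
```

For detectors that output bounding boxes rather than masks, the same ratio can be computed after rasterising each box into a mask.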

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177. https://doi.org/10.3390/rs12193177
