Incorporating Deep Features into GEOBIA Paradigm for Remote Sensing Imagery Classification: A Patch-Based Approach

Abstract

1. Introduction

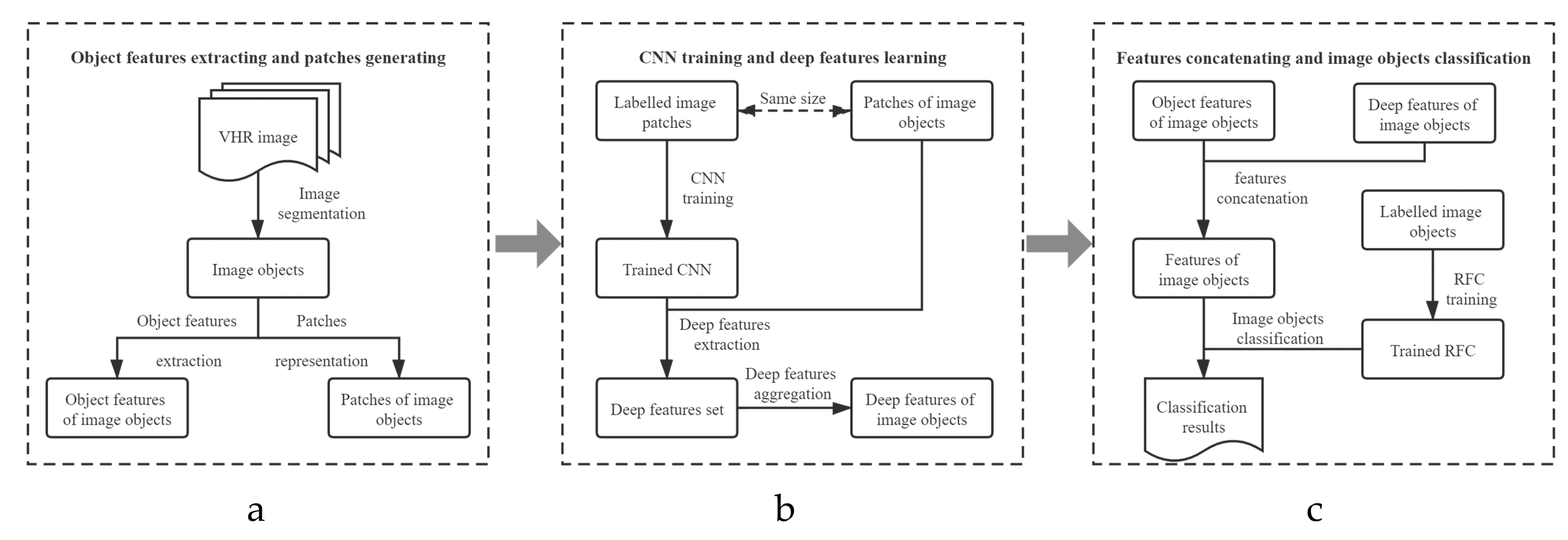

2. Methodology

- Object feature extraction and patch generation. The VHR image was segmented into image objects using the multiresolution segmentation method [35] in eCognition, and object features were extracted at the same time. However, image objects are irregular polygons, whereas a CNN takes regular image patches as input. To extract deep features for image objects, a patch representation strategy was therefore proposed that represents every image object as a set of regular image patches.

- CNN training and deep feature learning. A reference map of the VHR image was produced by careful visual interpretation, and labelled patches were obtained by random sampling from this map. A CNN model was trained on the labelled image patches to learn the deep features of patches. A deep feature aggregation approach was then applied to obtain the deep features of image objects.

- Feature concatenation and image object classification. The object features and deep features of each image object were concatenated into a single feature vector. Labelled image objects were selected from the reference map and used to train a random forest classifier (RFC), and the trained RFC was finally used to classify all image objects.
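The three steps above can be illustrated with a small sketch. This section does not spell out the patch representation or the aggregation rule, so the sketch makes assumptions that are not the authors' method: (1) patches tile the object's bounding box and a patch is kept when its centre pixel lies inside the object; (2) patch-level deep features are aggregated by mean and max pooling; (3) `toy_cnn` is a hypothetical stand-in for the trained CNN's feature extractor. All function names are illustrative.

```python
from statistics import fmean
from typing import List, Sequence, Set, Tuple

Pixel = Tuple[int, int]  # (row, col) in image coordinates


def object_to_patches(obj: Set[Pixel], patch_size: int) -> List[Pixel]:
    """Represent an irregular image object as top-left corners of square patches.

    Hypothetical strategy: tile the object's bounding box with non-overlapping
    patch_size x patch_size windows and keep every window whose centre pixel
    lies inside the object.
    """
    rows = [r for r, _ in obj]
    cols = [c for _, c in obj]
    half = patch_size // 2
    corners = [
        (r, c)
        for r in range(min(rows), max(rows) + 1, patch_size)
        for c in range(min(cols), max(cols) + 1, patch_size)
        if (r + half, c + half) in obj
    ]
    if not corners:
        # Objects smaller than one window still get a single patch centred on
        # the object's centroid; such corners may fall outside the image, so a
        # real implementation would pad the image first.
        cr, cc = round(fmean(rows)), round(fmean(cols))
        corners.append((cr - half, cc - half))
    return corners


def aggregate(patch_features: Sequence[Sequence[float]]) -> List[float]:
    """Pool per-patch deep features into one object-level vector.

    Assumption: mean pooling and max pooling per dimension, concatenated.
    """
    dims = range(len(patch_features[0]))
    mean = [fmean(f[d] for f in patch_features) for d in dims]
    peak = [max(f[d] for f in patch_features) for d in dims]
    return mean + peak


def object_vector(object_features: Sequence[float],
                  patch_features: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate hand-crafted object features with aggregated deep features."""
    return list(object_features) + aggregate(patch_features)


def toy_cnn(corner: Pixel) -> List[float]:
    """Hypothetical stand-in for the trained CNN's patch feature extractor."""
    return [float(corner[0]), float(corner[1])]


if __name__ == "__main__":
    # Toy L-shaped object: a 16x8 vertical bar plus an 8x8 foot on the right.
    obj = {(r, c) for r in range(16) for c in range(8)}
    obj |= {(r, c) for r in range(8, 16) for c in range(8, 16)}
    corners = object_to_patches(obj, patch_size=8)
    print(corners)  # [(0, 0), (8, 0), (8, 8)]
    feats = [toy_cnn(p) for p in corners]
    # Object area stands in for the eCognition object features.
    print(object_vector([float(len(obj))], feats))
```

In a full pipeline, the concatenated vectors of the labelled objects would be used to train a random forest (for instance scikit-learn's `RandomForestClassifier`), which then predicts a class for every image object. Mean and max pooling are chosen here only because they give a fixed-length vector regardless of how many patches an object contains.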

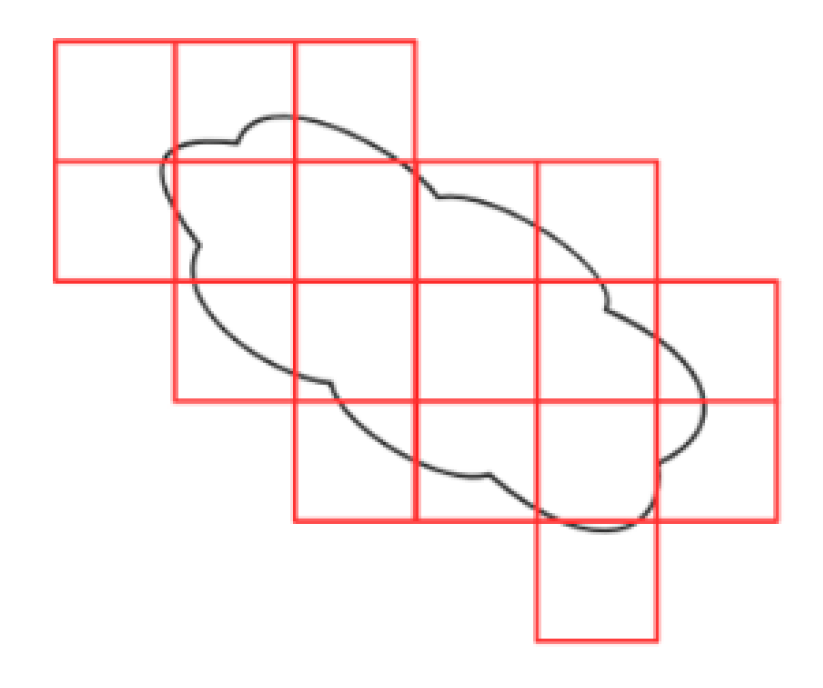

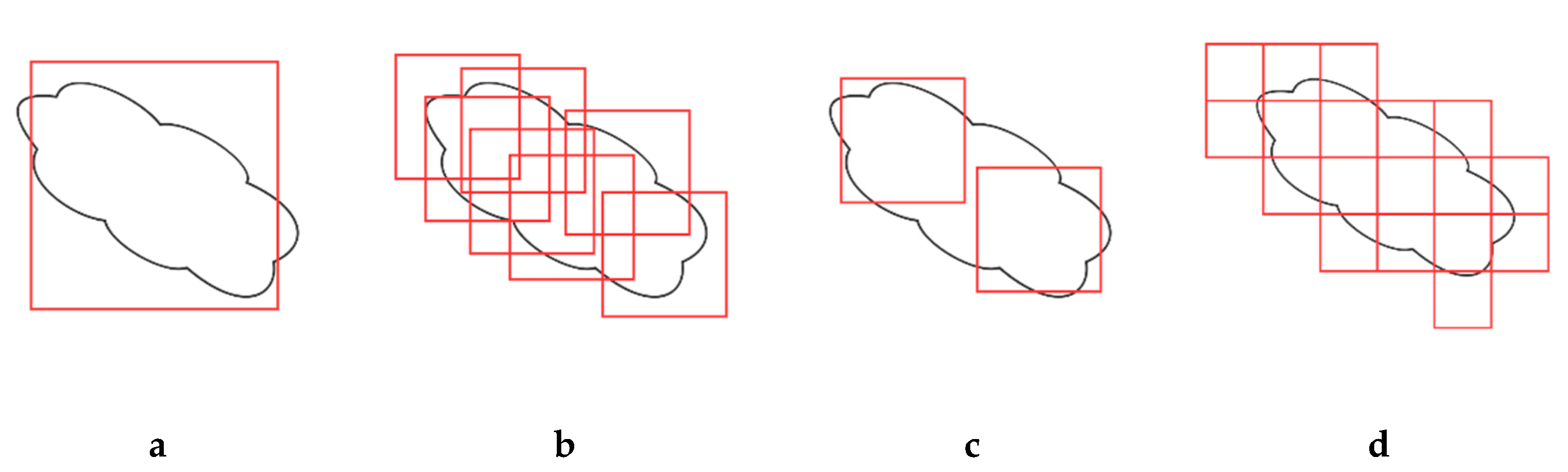

2.1. Patch Representations of Image Objects

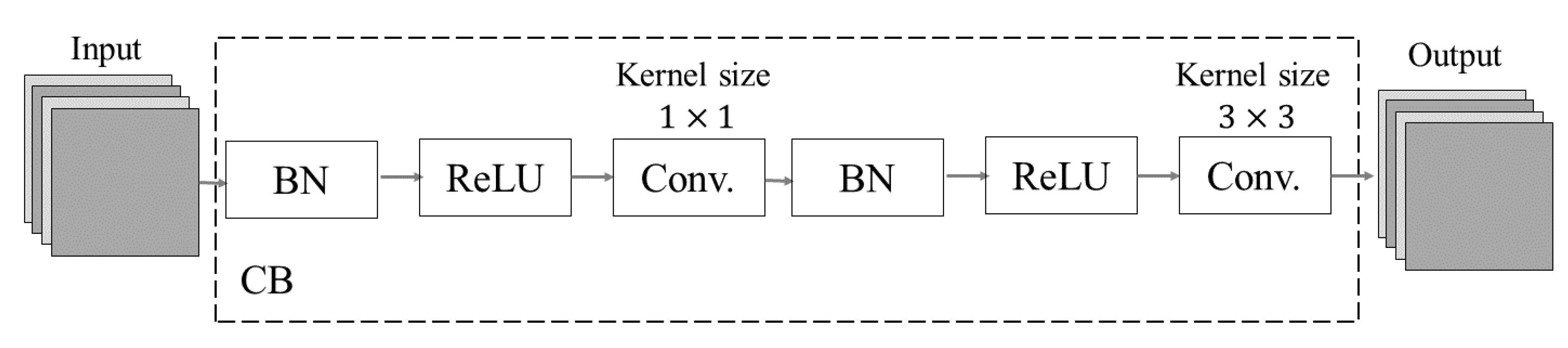

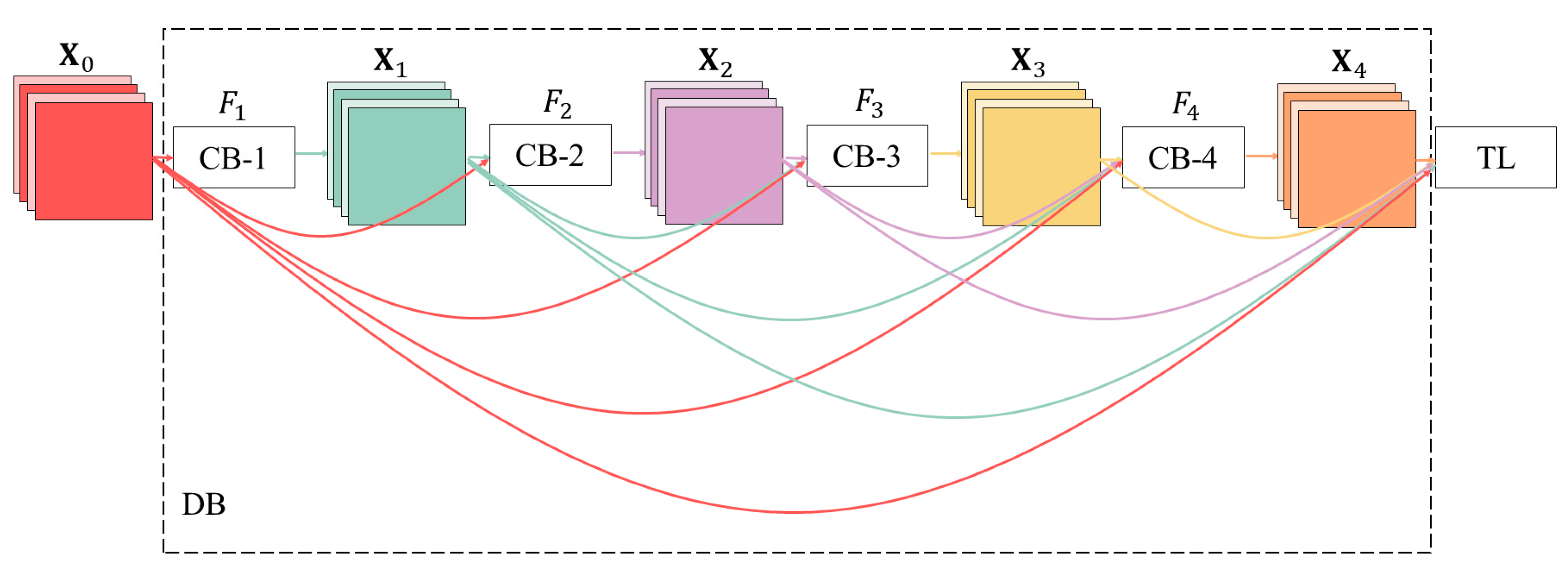

2.2. Convolutional Neural Networks

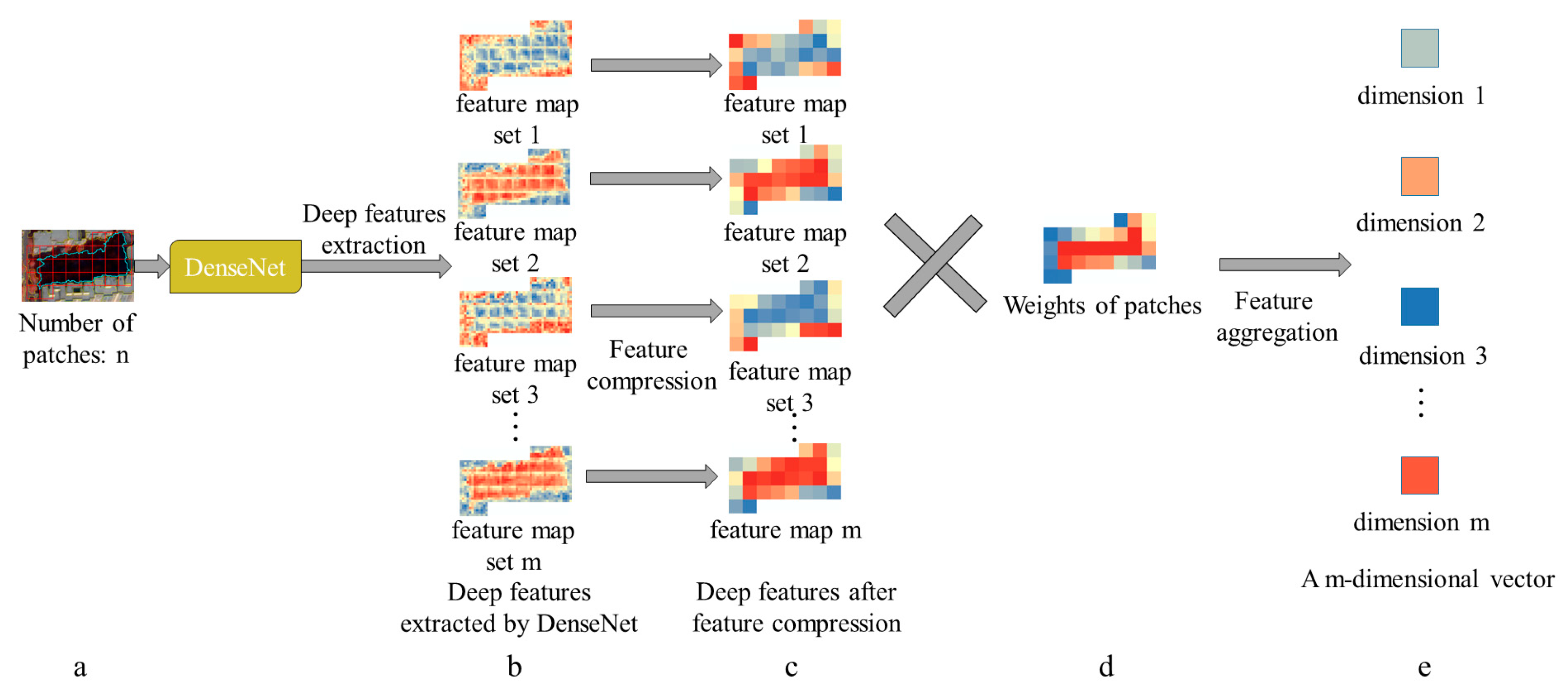

2.3. Deep Feature Aggregation of Image Objects

2.4. Image Object Classification Using Random Forest Classifier

2.5. Accuracy Assessment

3. Datasets and Parameter Settings

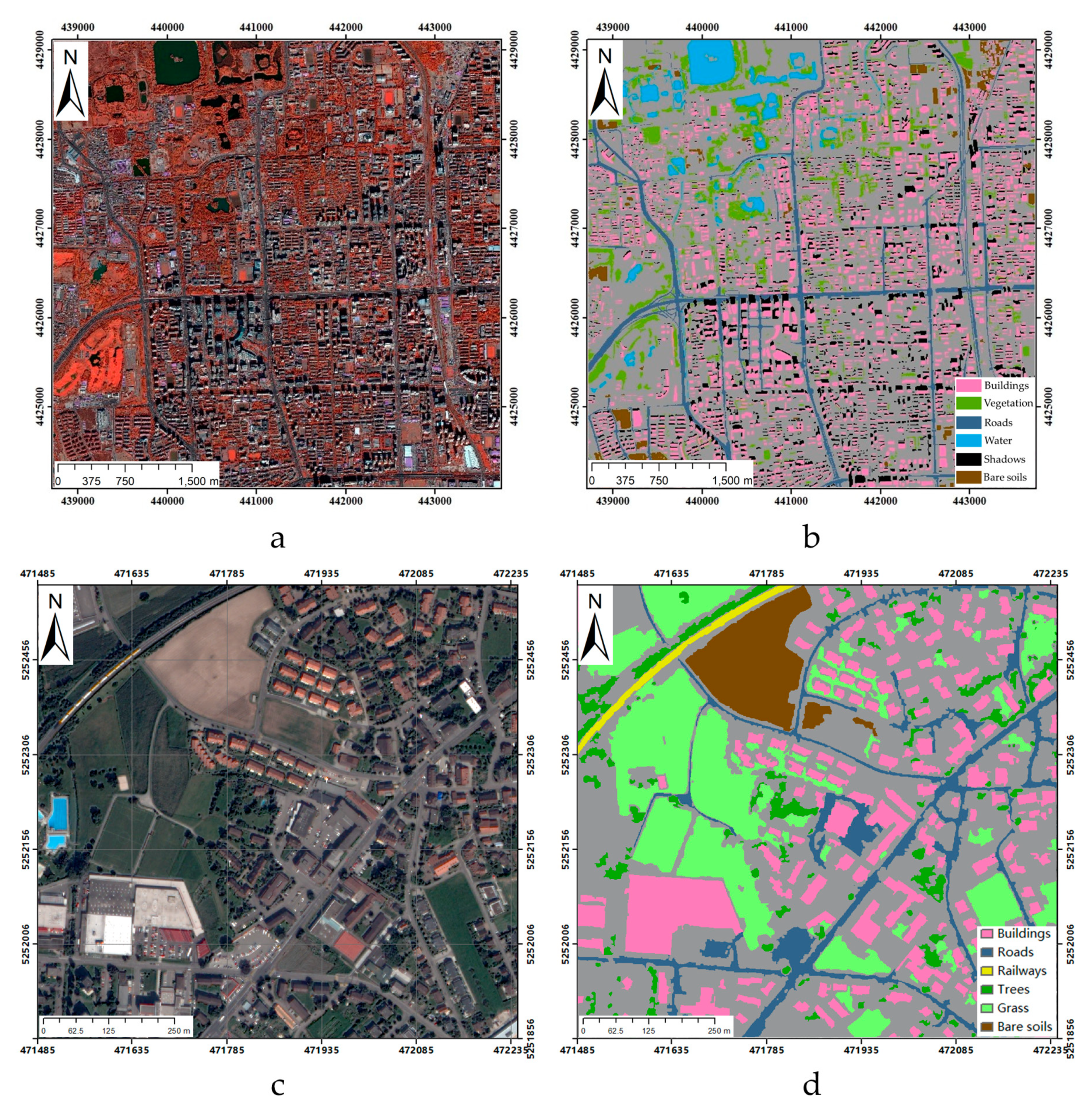

3.1. Image Datasets

3.2. Training and Validation Datasets

3.3. Parameter Settings

4. Results and Analysis

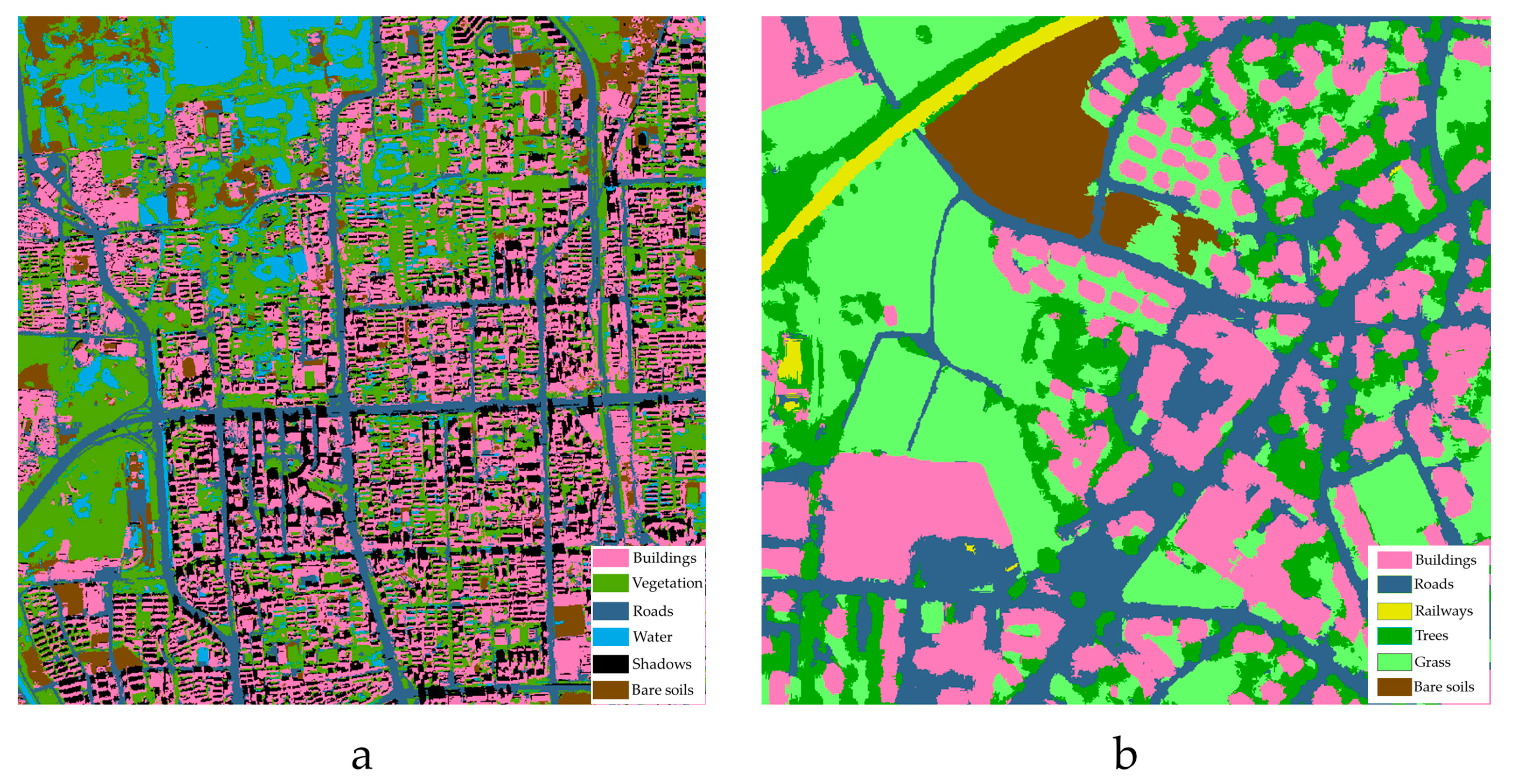

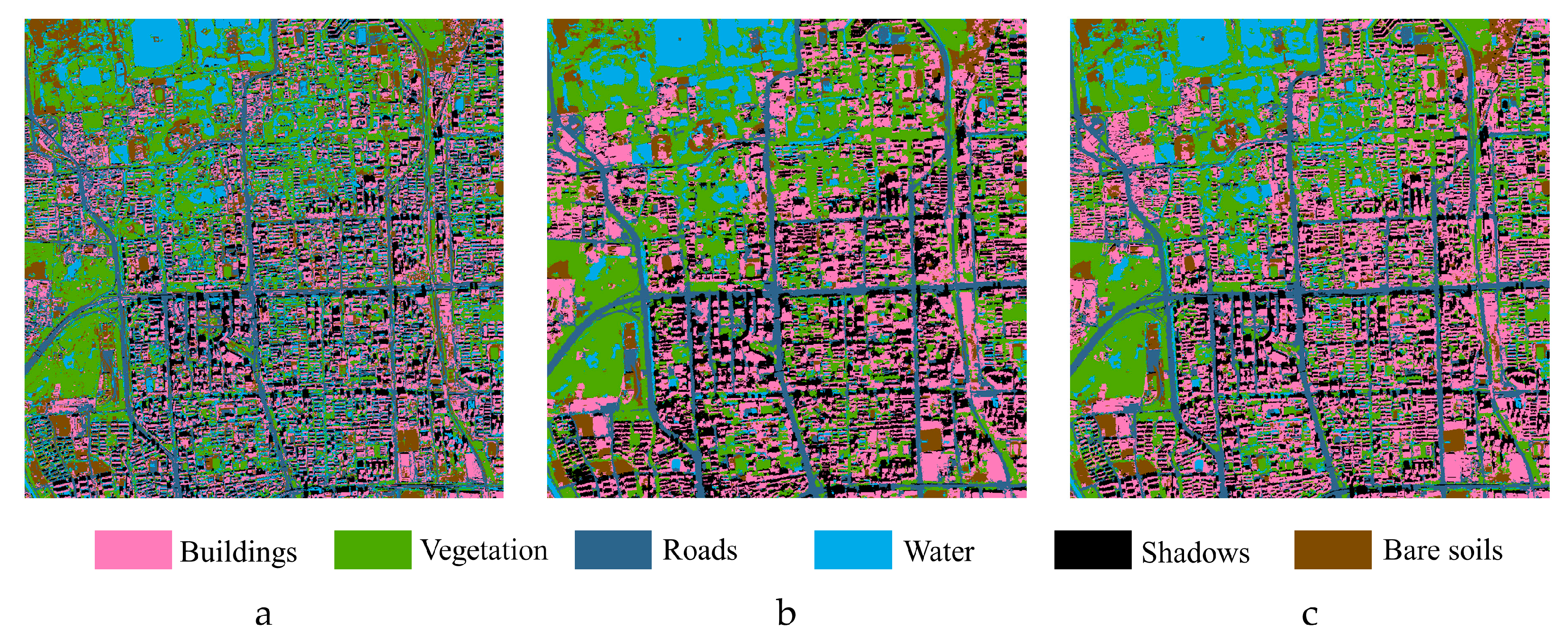

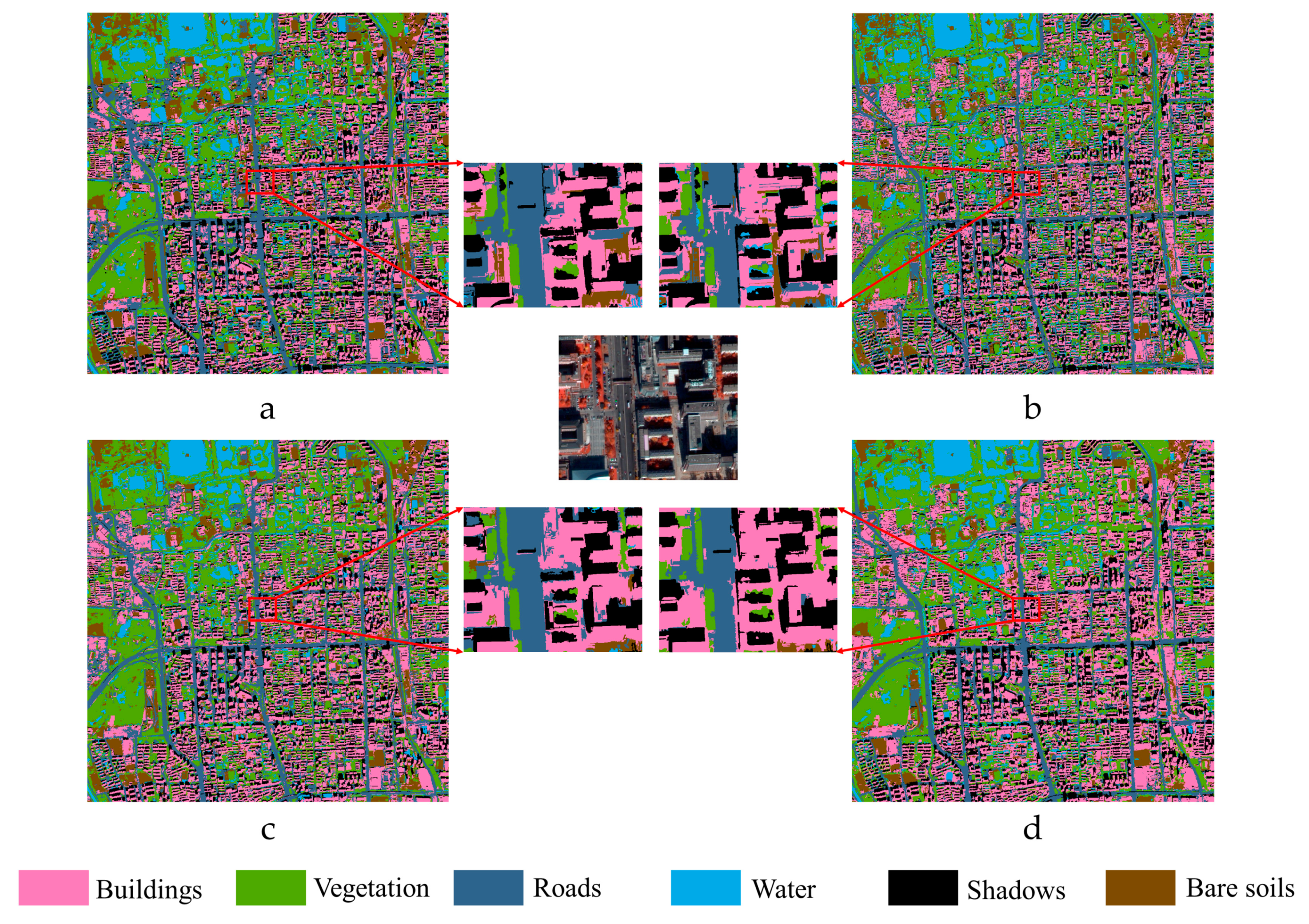

4.1. Land Cover Classification Results

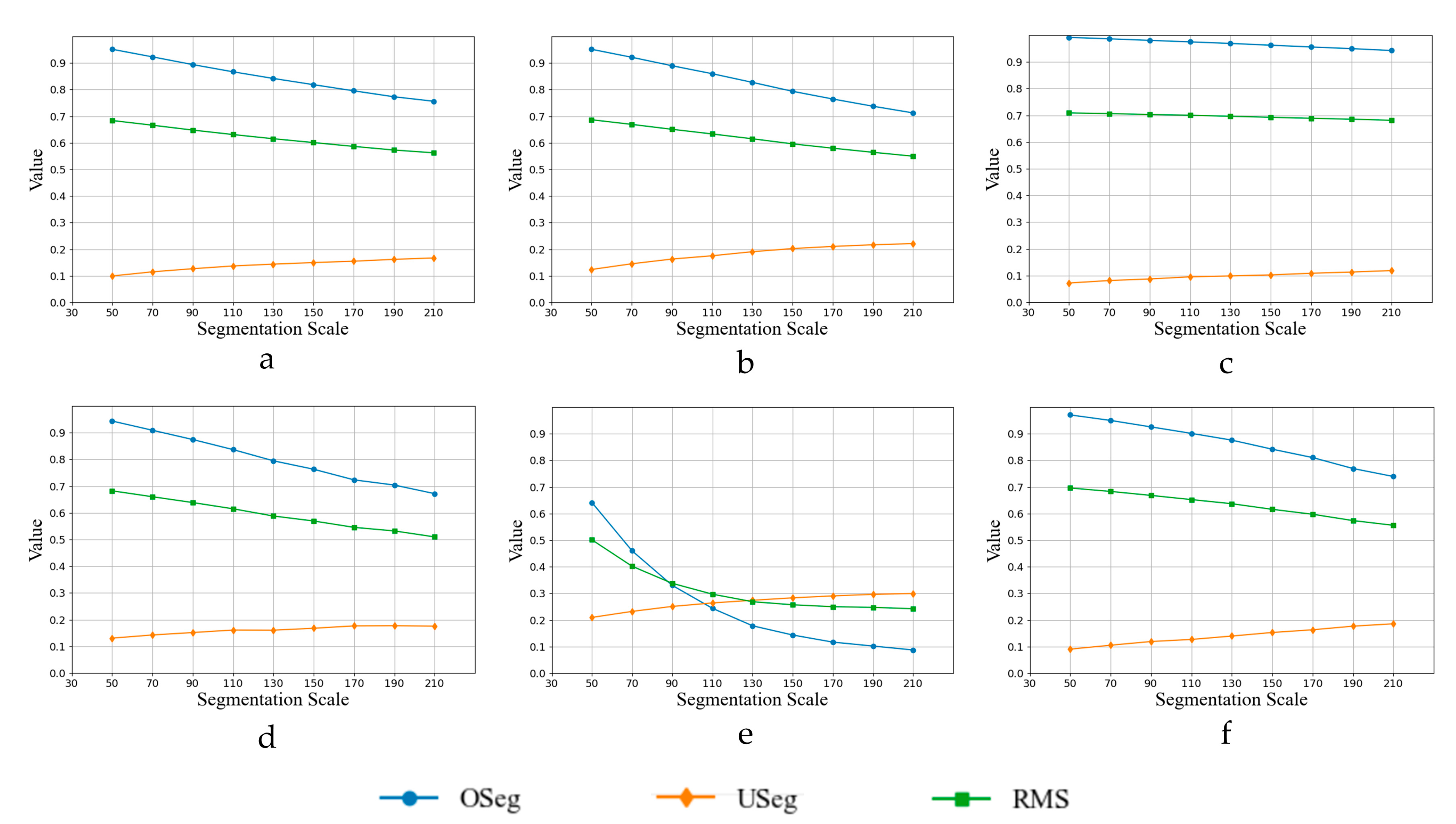

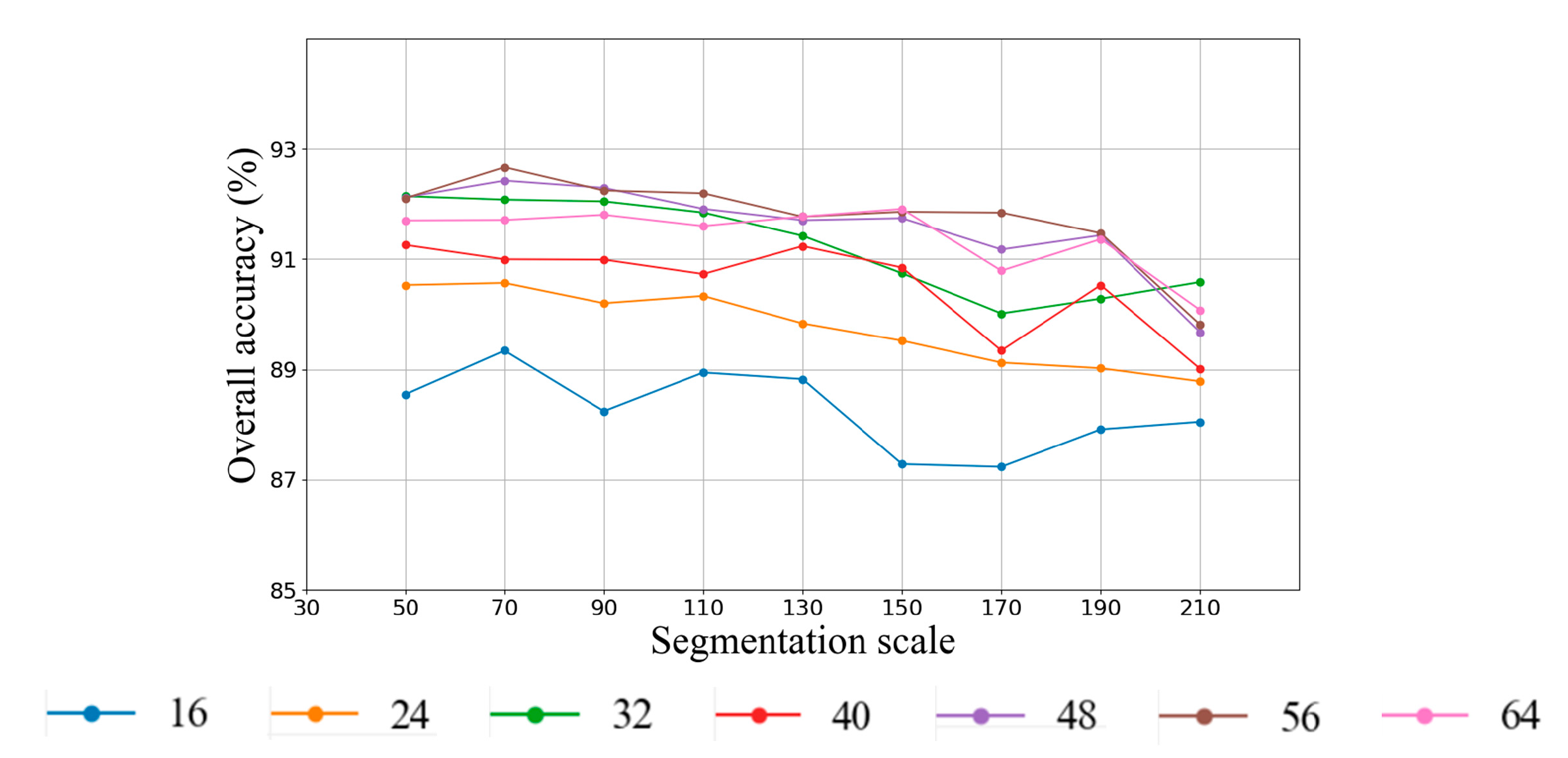

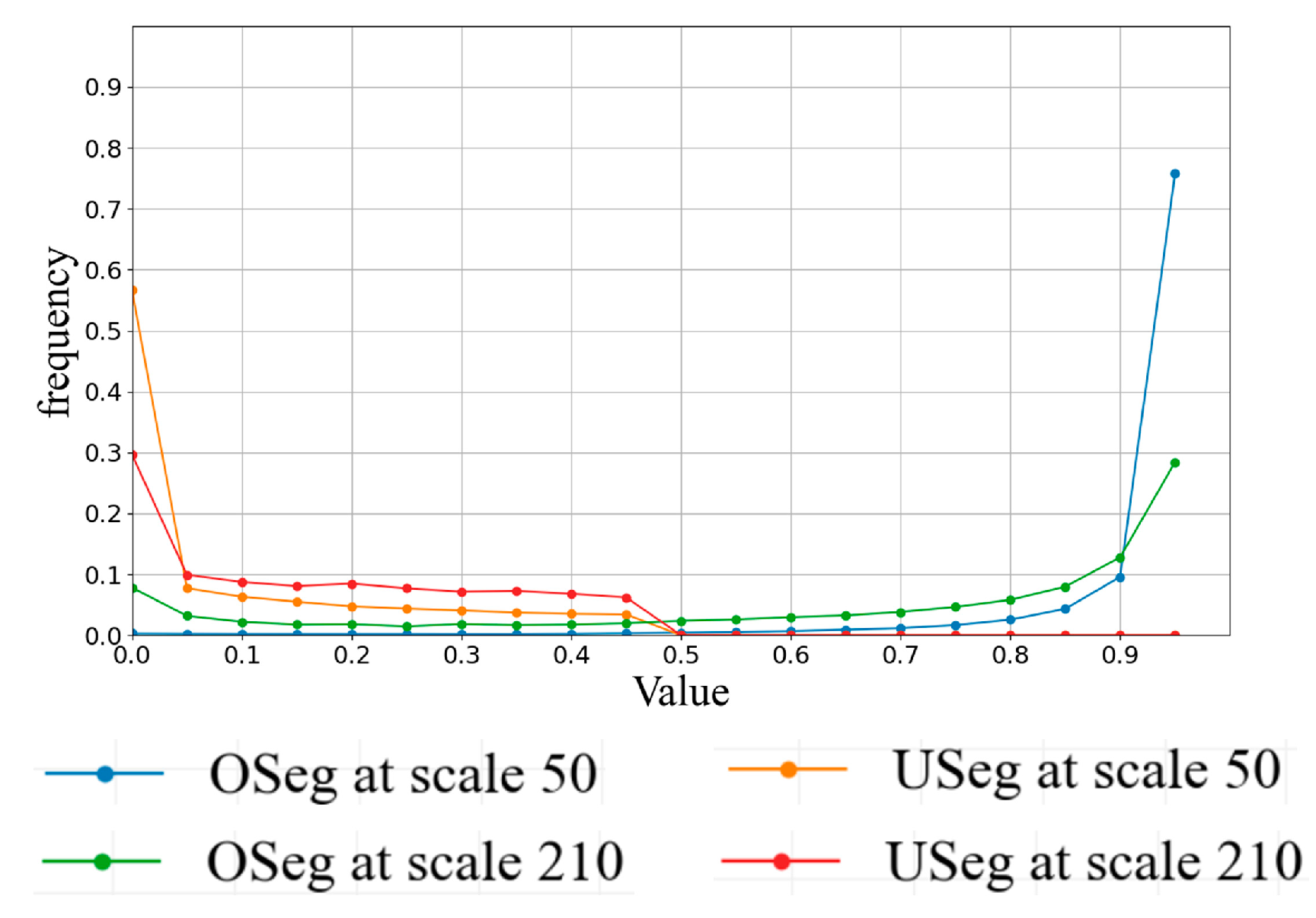

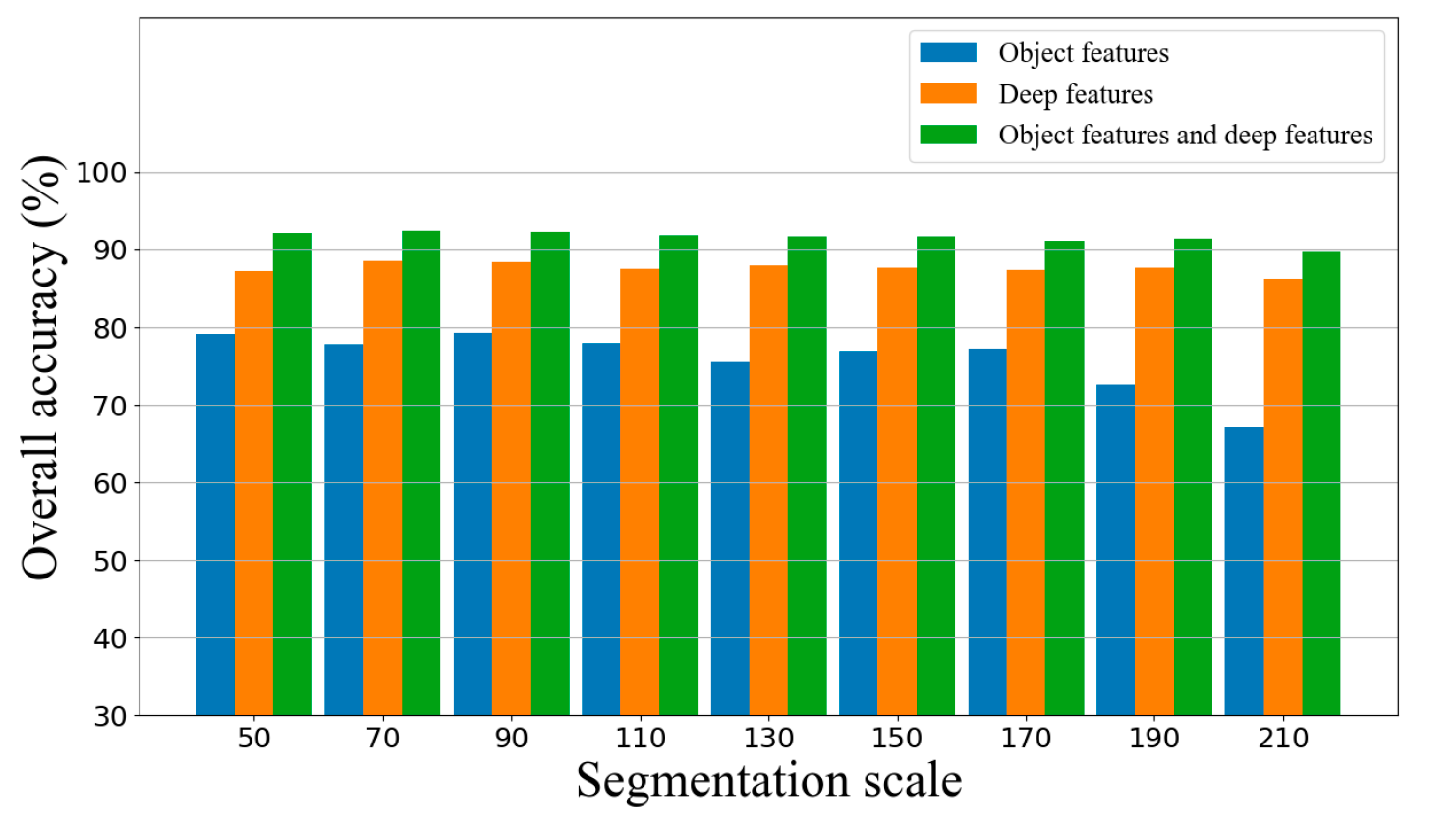

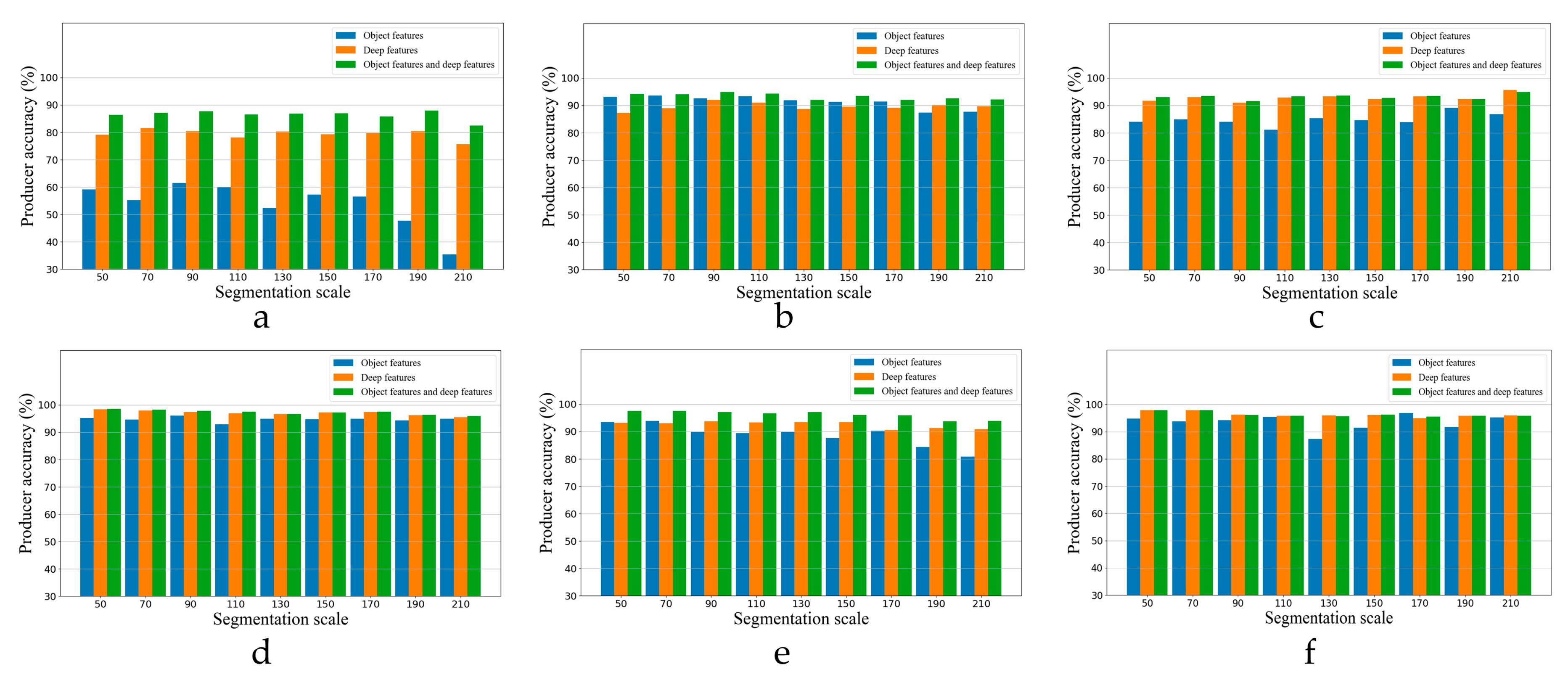

4.2. Influences of Segmentation Scales

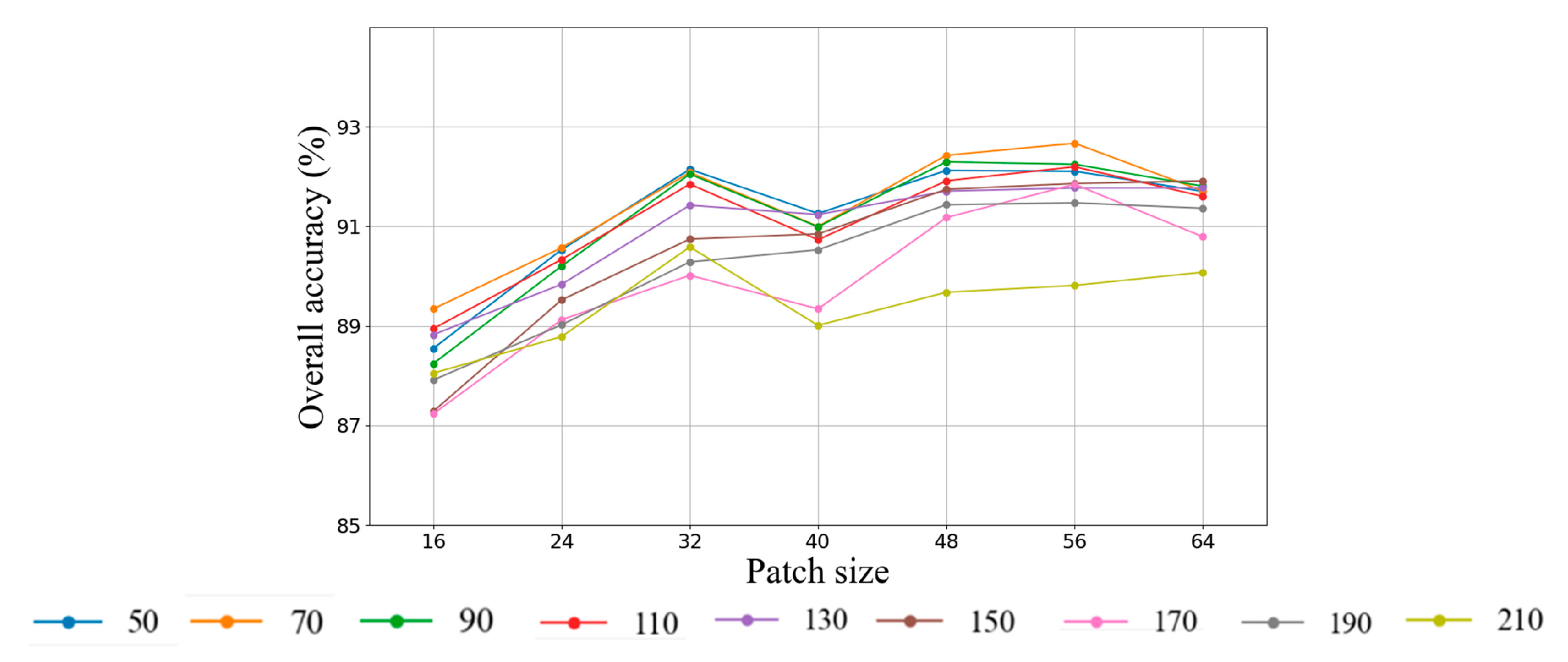

4.3. Influences of Patch Sizes on Classification

5. Discussion

5.1. Object Features vs. Deep Features

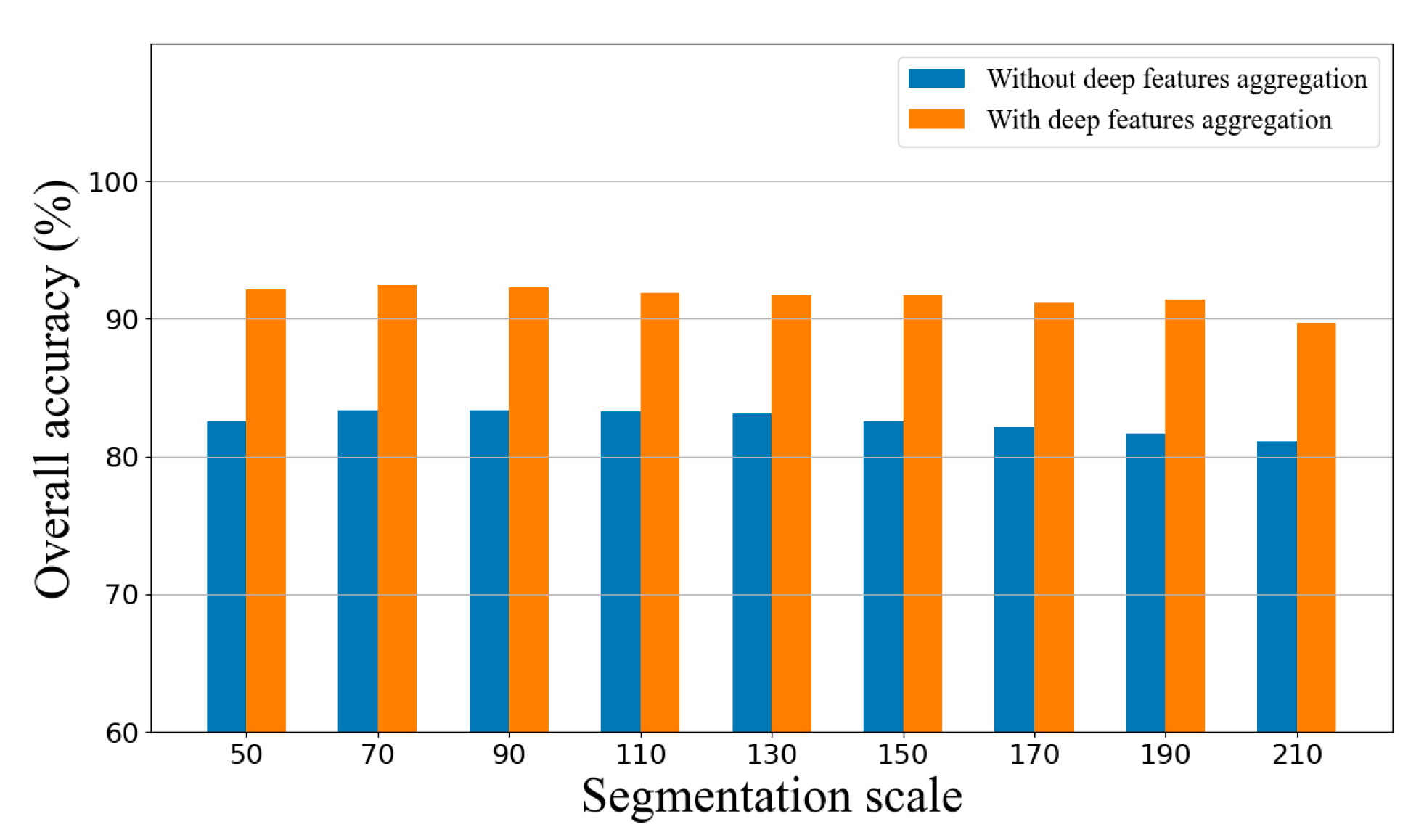

5.2. The Importance of Deep Feature Aggregation

5.3. Comparison with the State-Of-The-Art Methods

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Martinez, S.; Mollicone, D. From Land Cover to Land Use: A Methodology to Assess Land Use from Remote Sensing Data. Remote Sens. 2012, 4, 1024–1045.

2. Pesaresi, M.; Guo, H.D.; Blaes, X.; Ehrlich, D.; Ferri, S.; Gueguen, L.; Halkia, M.; Kauffmann, M.; Kemper, T.; Lu, L.L.; et al. A Global Human Settlement Layer from Optical HR/VHR RS Data: Concept and First Results. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 2102–2131.

3. Zhang, L.P.; Huang, X.; Huang, B.; Li, P.X. A Pixel Shape Index Coupled with Spectral Information for Classification of High Spatial Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2950–2961.

4. Huang, X.; Zhang, L.P.; Li, P.X. Classification and Extraction of Spatial Features in Urban Areas Using High-Resolution Multispectral Imagery. IEEE Geosci. Remote Sens. Lett. 2007, 4, 260–264.

5. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

6. Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

7. Ma, L.; Cheng, L.; Li, M.C.; Liu, Y.X.; Ma, X.X. Training set size, scale, and features in Geographic Object-Based Image Analysis of very high resolution unmanned aerial vehicle imagery. ISPRS J. Photogramm. Remote Sens. 2015, 102, 14–27.

8. Du, S.H.; Zhang, F.L.; Zhang, X.Y. Semantic classification of urban buildings combining VHR image and GIS data: An improved random forest approach. ISPRS J. Photogramm. Remote Sens. 2015, 105, 107–119.

9. Griffith, D.C.; Hay, G.J. Integrating GEOBIA, Machine Learning, and Volunteered Geographic Information to Map Vegetation over Rooftops. ISPRS Int. J. Geo-Inf. 2018, 7, 462.

10. De Luca, G.; Silva, J.M.N.; Cerasoli, S.; Araujo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-Based Land Cover Classification of Cork Oak Woodlands Using UAV Imagery and Orfeo ToolBox. Remote Sens. 2019, 11, 1238.

11. Ribeiro, F.F.; Roberts, D.A.; Hess, L.L.; Davis, F.W.; Caylor, K.K.; Daldegan, G.A. Geographic Object-Based Image Analysis Framework for Mapping Vegetation Physiognomic Types at Fine Scales in Neotropical Savannas. Remote Sens. 2020, 12, 1721.

12. Zhang, F.; Du, B.; Zhang, L.P.; Xu, M.Z. Weakly Supervised Learning Based on Coupled Convolutional Neural Networks for Aircraft Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5553–5563.

13. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

14. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

15. Chen, Y.S.; Jiang, H.L.; Li, C.Y.; Jia, X.P.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

16. Chen, X.Y.; Xiang, S.M.; Liu, C.L.; Pan, C.H. Vehicle Detection in Satellite Images by Hybrid Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

17. Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

18. Chen, Z.; Zhang, T.; Ouyang, C. End-to-End Airplane Detection Using Transfer Learning in Remote Sensing Images. Remote Sens. 2018, 10, 139.

19. Ghorbanzadeh, O.; Meena, S.R.; Blaschke, T.; Aryal, J. UAV-Based Slope Failure Detection Using Deep-Learning Convolutional Neural Networks. Remote Sens. 2019, 11, 2046.

20. Liu, Y.F.; Zhong, Y.F.; Qin, Q.Q. Scene Classification Based on Multiscale Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7109–7121.

21. De Lima, R.P.; Marfurt, K. Convolutional Neural Network for Remote-Sensing Scene Classification: Transfer Learning Analysis. Remote Sens. 2020, 12, 86.

22. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

23. Zhao, W.Z.; Du, S.H.; Wang, Q.; Emery, W.J. Contextually guided very-high-resolution imagery classification with semantic segments. ISPRS J. Photogramm. Remote Sens. 2017, 132, 48–60.

24. Yang, M.D.; Tseng, H.H.; Hsu, Y.C.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sens. 2020, 12, 633.

25. Abdi, O. Climate-Triggered Insect Defoliators and Forest Fires Using Multitemporal Landsat and TerraClimate Data in NE Iran: An Application of GEOBIA TreeNet and Panel Data Analysis. Sensors 2019, 19, 3965.

26. Feng, F.; Wang, S.T.; Wang, C.Y.; Zhang, J. Learning Deep Hierarchical Spatial-Spectral Features for Hyperspectral Image Classification Based on Residual 3D-2D CNN. Sensors 2019, 19, 5276.

27. Liu, B.; Yu, X.C.; Zhang, P.Q.; Yu, A.Z.; Fu, Q.Y.; Wei, X.P. Supervised Deep Feature Extraction for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1909–1921.

28. Zhao, W.Z.; Du, S.H.; Emery, W.J. Object-Based Convolutional Neural Network for High-Resolution Imagery Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 3386–3396.

29. Liu, T.; Abd-Elrahman, A. An Object-Based Image Analysis Method for Enhancing Classification of Land Covers Using Fully Convolutional Networks and Multi-View Images of Small Unmanned Aerial System. Remote Sens. 2018, 10, 457.

30. Liu, T.; Abd-Elrahman, A.; Morton, J.; Wilhelm, V.L. Comparing Fully Convolutional Networks, Random Forest, Support Vector Machine, and Patch-Based Deep Convolutional Neural Networks for Object-Based Wetland Mapping Using Images from Small Unmanned Aircraft System. GISci. Remote Sens. 2018, 55, 243–264.

31. Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully Convolutional Networks and Geographic Object-Based Image Analysis for the Classification of VHR Imagery. Remote Sens. 2019, 11, 597.

32. Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and Segmentation of Satellite Orthoimagery Using Convolutional Neural Networks. Remote Sens. 2016, 8, 329.

33. Zhang, C.; Sargent, I.; Pan, X.; Li, H.P.; Gardiner, A.; Hare, J.; Atkinson, P.M. An object-based convolutional neural network (OCNN) for urban land use classification. Remote Sens. Environ. 2018, 216, 57–70.

34. Fu, T.Y.; Ma, L.; Li, M.C.; Johnson, B.A. Using convolutional neural network to identify irregular segmentation objects from very high-resolution remote sensing imagery. J. Appl. Remote Sens. 2018, 12, 025010.

35. Baatz, M.; Schäpe, A. Multiresolution Segmentation: An Optimization Approach for High Quality Multi-Scale Image Segmentation. In Angewandte Geographische Informations-Verarbeitung, XII; Strobl, J., Ed.; Wichmann Verlag: Karlsruhe, Germany; Berlin, Germany, 2000; Volume 58, pp. 12–23.

36. Lee, H.; Kwon, H. Going Deeper With Contextual CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2017, 26, 4843–4855.

37. Szegedy, C.; Liu, W.; Jia, Y.Q.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

38. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

39. He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 27–30 June 2016.

40. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

41. Hu, F.; Xia, G.S.; Hu, J.W.; Zhang, L.P. Transferring Deep Convolutional Neural Networks for the Scene Classification of High-Resolution Remote Sensing Imagery. Remote Sens. 2015, 7, 14680–14707.

42. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

43. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

44. Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

45. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

46. Pontius, R.G.; Millones, M. Death to Kappa: Birth of Quantity Disagreement and Allocation Disagreement for Accuracy Assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

47. Stein, A.; Aryal, J.; Gort, G. Use of the Bradley-Terry Model to Quantify Association in Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 852–856.

48. Clinton, N.; Holt, A.; Scarborough, J.; Yan, L.; Gong, P. Accuracy Assessment Measures for Object-based Image Segmentation Goodness. Photogramm. Eng. Remote Sens. 2010, 76, 289–299.

49. Volpi, M.; Ferrari, V. Semantic segmentation of urban scenes by learning local class interactions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 11–12 June 2015.

50. Ming, D.P.; Li, J.; Wang, J.Y.; Zhang, M. Scale Parameter Selection by Spatial Statistics for GeOBIA: Using Mean-Shift Based Multi-Scale Segmentation as an Example. ISPRS J. Photogramm. Remote Sens. 2015, 106, 28–41.

| Buildings | Roads | Vegetation | Water | Shadows | Bare Soils |
|---|---|---|---|---|---|
| 84.99 | 94.79 | 91.25 | 97.77 | 97.05 | 96.07 |
| 96.71 | 94.82 | 84.66 | 83.77 | 89.89 | 81.92 |

| Buildings | Roads | Railways | Trees | Grass | Bare Soils |
|---|---|---|---|---|---|
| 99.08 | 99.31 | 99.80 | 98.34 | 98.82 | 99.93 |
| 99.45 | 98.37 | 98.02 | 95.87 | 99.59 | 99.99 |

| Patch Size | Patch Representations of Image Objects | Deep Features (Only One Feature Map Is Shown) |
|---|---|---|
| 16 | | |
| 24 | | |
| 32 | | |
| 40 | | |
| 48 | | |
| 56 | | |
| 64 | | |

| Method | (%) | Class | (%) | (%) |
|---|---|---|---|---|
| Fu et al. | 83.34 | Buildings | 69.10 | 95.43 |
| | | Vegetation | 91.57 | 94.18 |
| | | Roads | 88.06 | 65.29 |
| | | Water | 94.89 | 82.46 |
| | | Shadows | 93.86 | 85.10 |
| | | Bare soils | 96.09 | 59.25 |
| Zhao et al. | 87.43 | Buildings | 76.65 | 96.60 |
| | | Vegetation | 93.27 | 97.30 |
| | | Roads | 90.60 | 74.68 |
| | | Water | 97.53 | 80.21 |
| | | Shadows | 96.07 | 89.93 |
| | | Bare soils | 94.69 | 59.78 |
| Zhang et al. | 88.78 | Buildings | 82.75 | 96.01 |
| | | Vegetation | 93.83 | 94.21 |
| | | Roads | 93.29 | 76.46 |
| | | Water | 94.50 | 81.66 |
| | | Shadows | 88.95 | 90.97 |
| | | Bare soils | 96.62 | 74.53 |
| This research | 91.21 | Buildings | 84.99 | 96.71 |
| | | Vegetation | 94.79 | 94.82 |
| | | Roads | 91.25 | 84.66 |
| | | Water | 97.77 | 83.77 |
| | | Shadows | 97.05 | 89.89 |
| | | Bare soils | 96.07 | 81.92 |

| Method | (%) | Class | (%) | (%) |
|---|---|---|---|---|
| Fu et al. | 95.40 | Buildings | 91.79 | 98.16 |
| | | Roads | 96.79 | 86.22 |
| | | Railways | 99.97 | 98.97 |
| | | Trees | 94.19 | 87.37 |
| | | Grass | 96.63 | 98.44 |
| | | Bare soils | 99.94 | 99.51 |
| Zhao et al. | 96.42 | Buildings | 95.87 | 98.69 |
| | | Roads | 97.23 | 93.69 |
| | | Railways | 98.05 | 92.05 |
| | | Trees | 95.47 | 83.69 |
| | | Grass | 95.76 | 98.82 |
| | | Bare soils | 99.88 | 99.08 |
| Zhang et al. | 98.53 | Buildings | 97.90 | 99.45 |
| | | Roads | 98.43 | 97.68 |
| | | Railways | 98.93 | 97.49 |
| | | Trees | 96.76 | 95.30 |
| | | Grass | 99.21 | 98.60 |
| | | Bare soils | 99.56 | 99.91 |
| This research | 99.05 | Buildings | 99.08 | 99.45 |
| | | Roads | 99.31 | 98.37 |
| | | Railways | 99.80 | 98.02 |
| | | Trees | 98.34 | 95.87 |
| | | Grass | 98.82 | 99.59 |
| | | Bare soils | 99.93 | 99.99 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, B.; Du, S.; Du, S.; Zhang, X. Incorporating Deep Features into GEOBIA Paradigm for Remote Sensing Imagery Classification: A Patch-Based Approach. Remote Sens. 2020, 12, 3007. https://doi.org/10.3390/rs12183007


Chicago/Turabian Style: Liu, Bo, Shihong Du, Shouji Du, and Xiuyuan Zhang. 2020. "Incorporating Deep Features into GEOBIA Paradigm for Remote Sensing Imagery Classification: A Patch-Based Approach" Remote Sensing 12, no. 18: 3007. https://doi.org/10.3390/rs12183007
