Simulations of Leaf BSDF Effects on Lidar Waveforms

Abstract

1. Introduction

1.1. Preface

1.2. Errors Due to Leaf Scattering Assumptions in Optical Remote Sensing Simulations

1.3. Normalizing Lidar Intensity Data

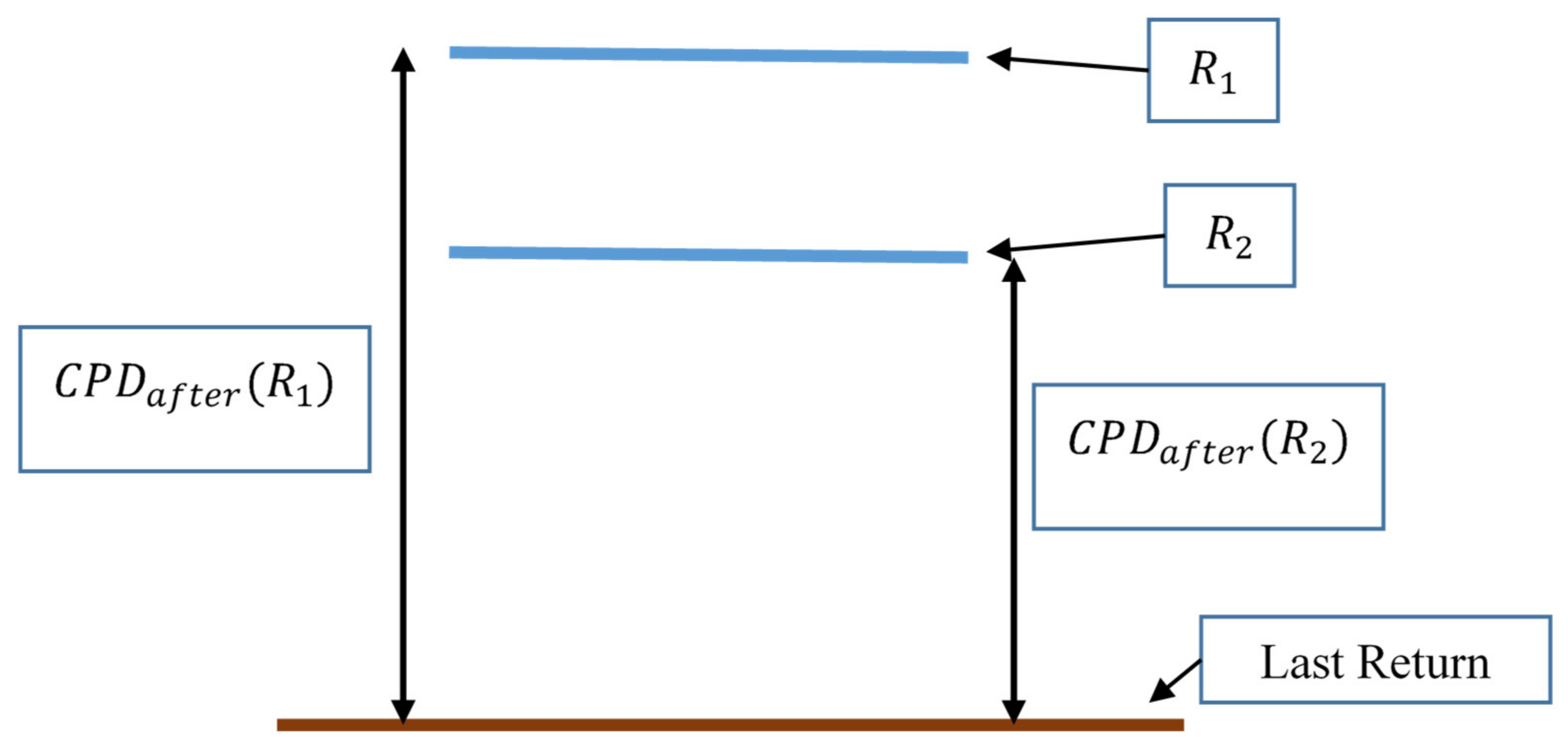

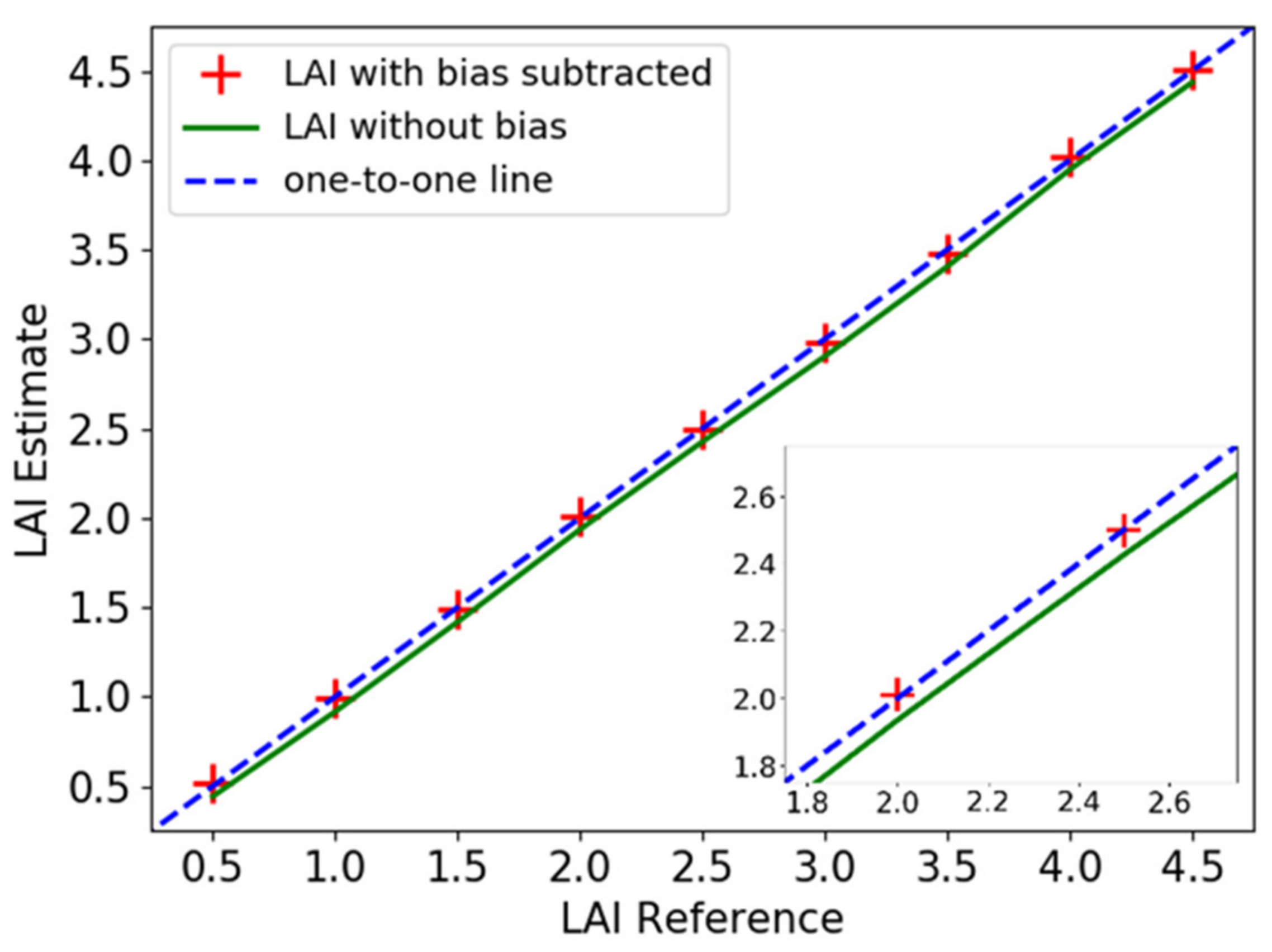

1.4. Extracting LAI and LADen from Lidar Data

1.5. Simulating Lidar Signals

1.6. Objectives

- Quantify the contribution of leaf transmission to waveform lidar intensity by comparing opaque leaves with realistically transmissive leaves;

- Determine the sensitivity of lidar waveforms to Lambertian vs. realistic BSDF leaves; and

- Analyze the effects of leaf BSDF on LAI derived from waveform intensity data.

2. Materials and Methods

2.1. Introduction to the Simulation Method

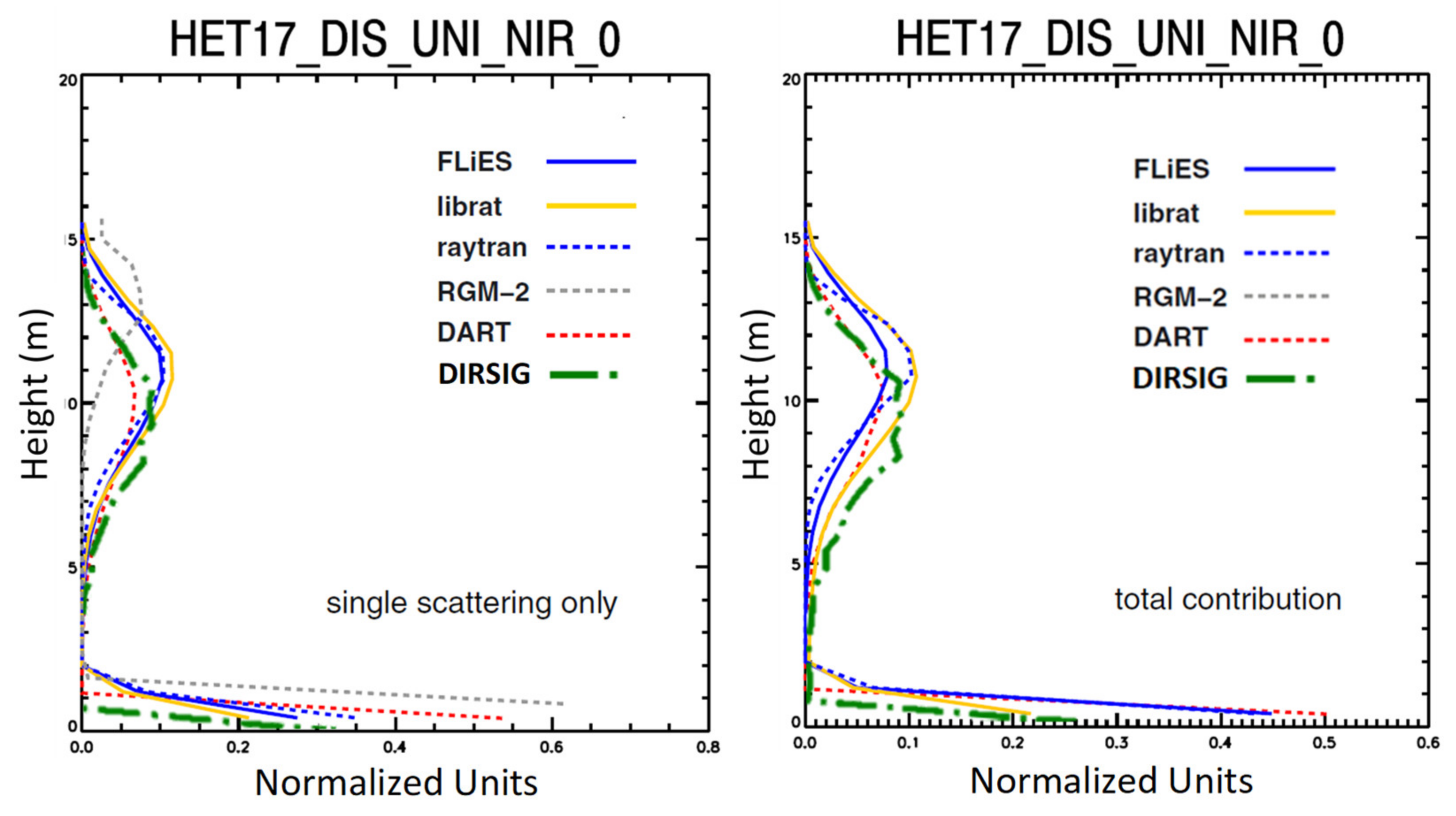

2.2. RAMI Comparison

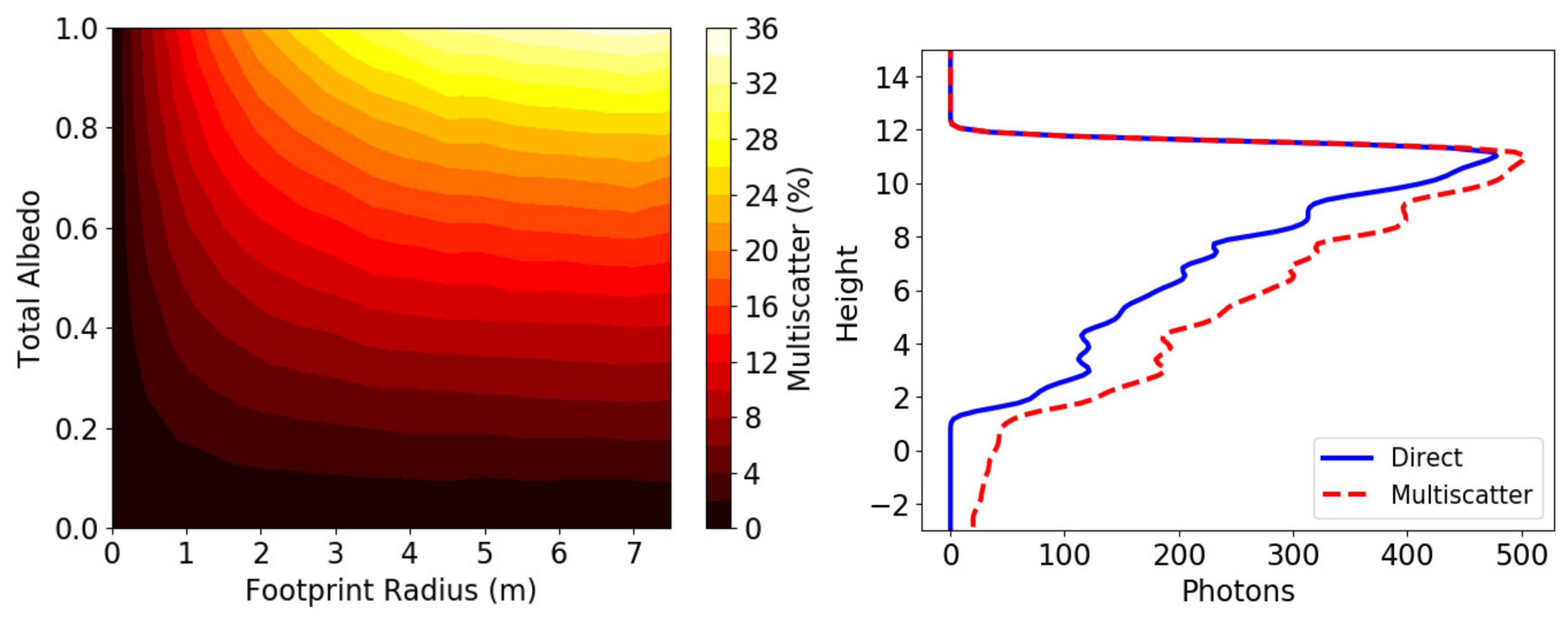

2.3. Multiscatter Contribution Comparison

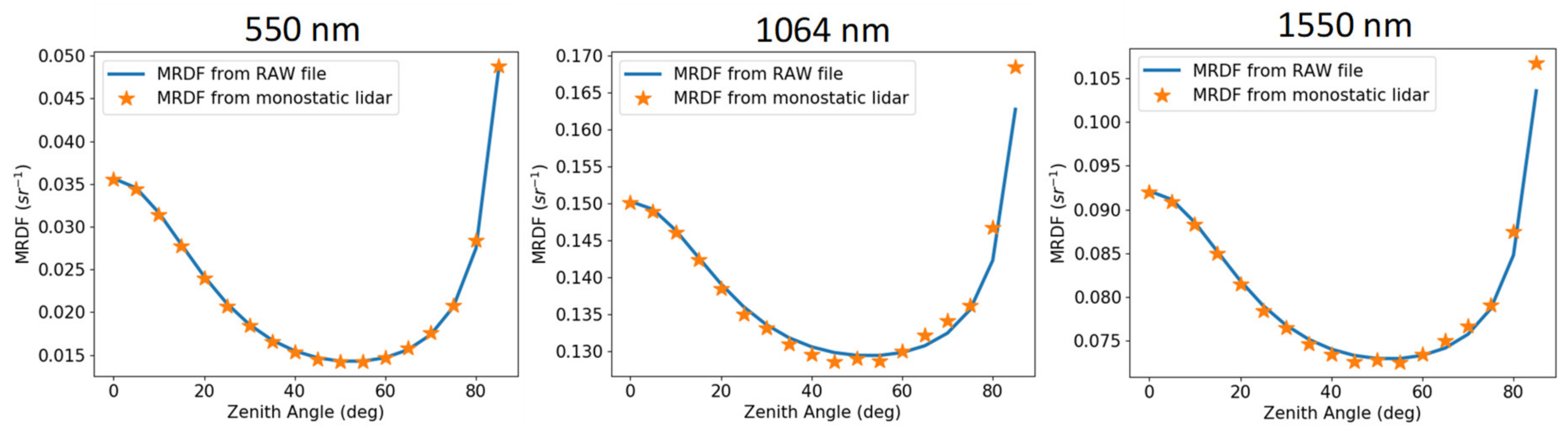

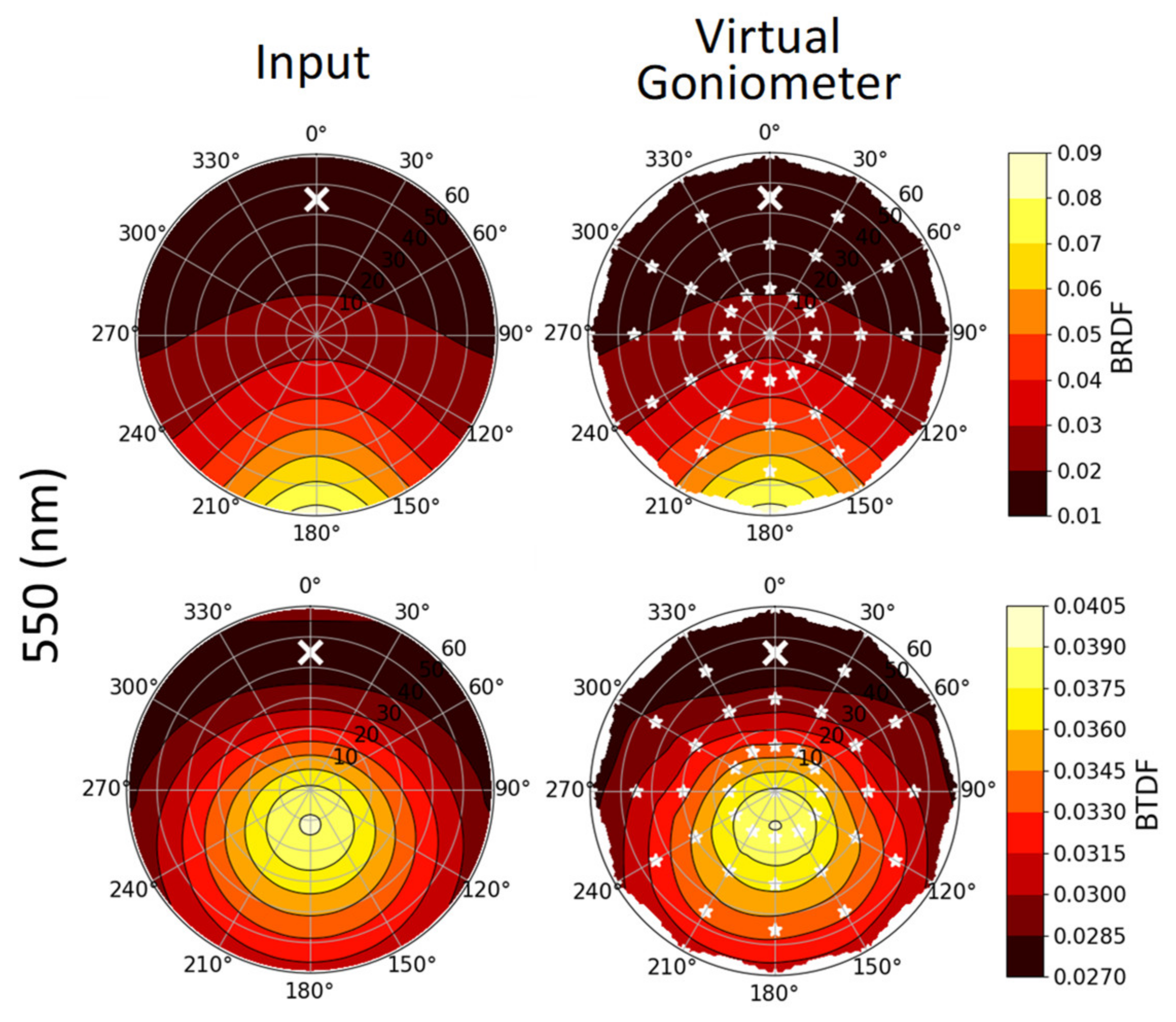

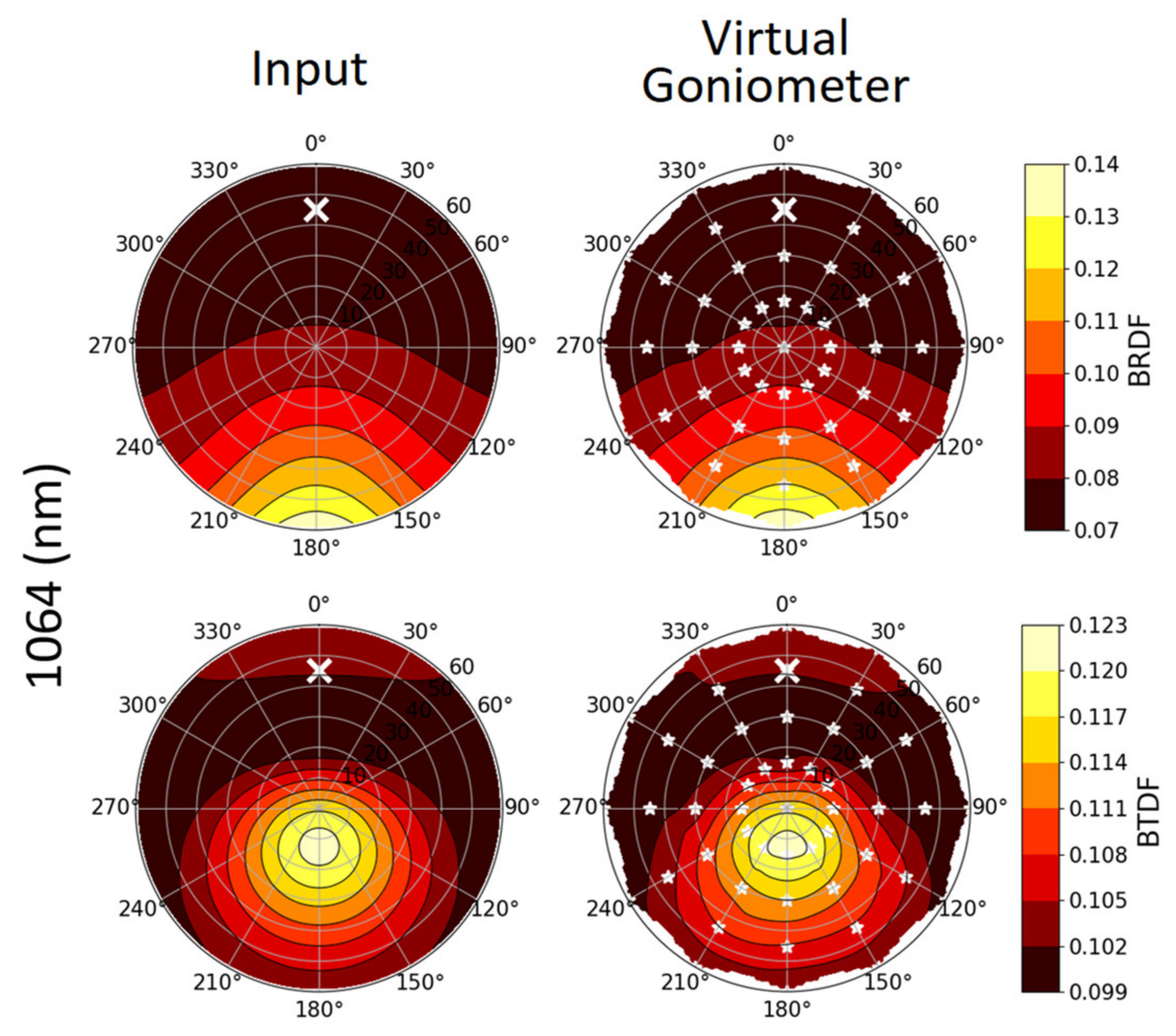

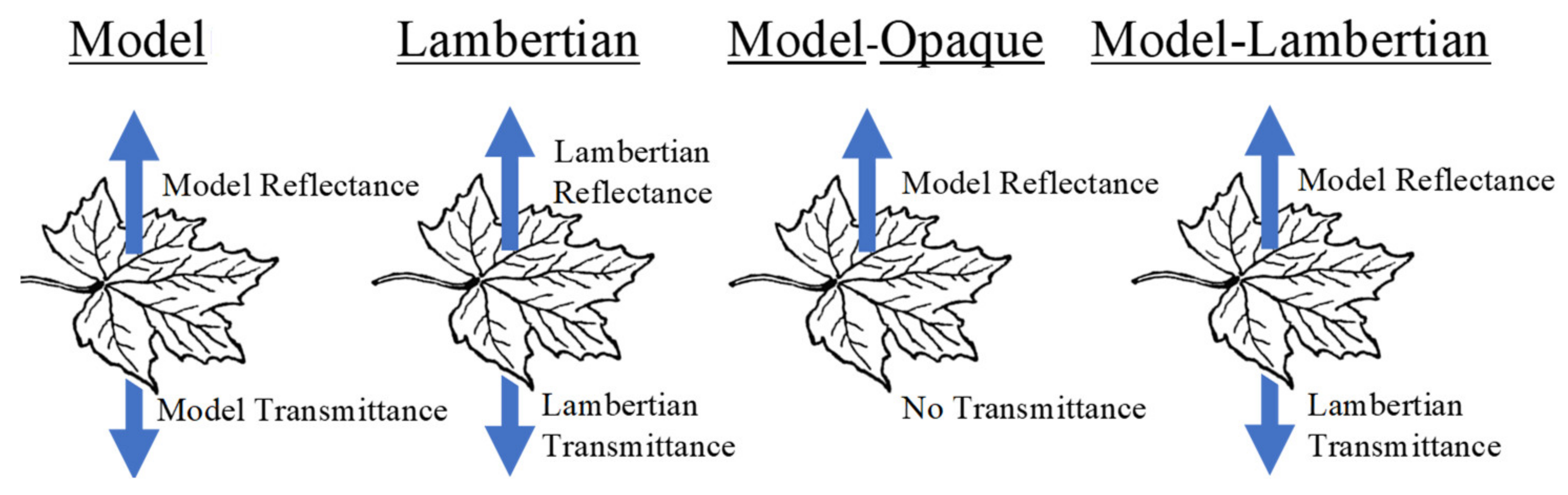

2.4. Data-Driven BSDF in DIRSIG: Description and Verification

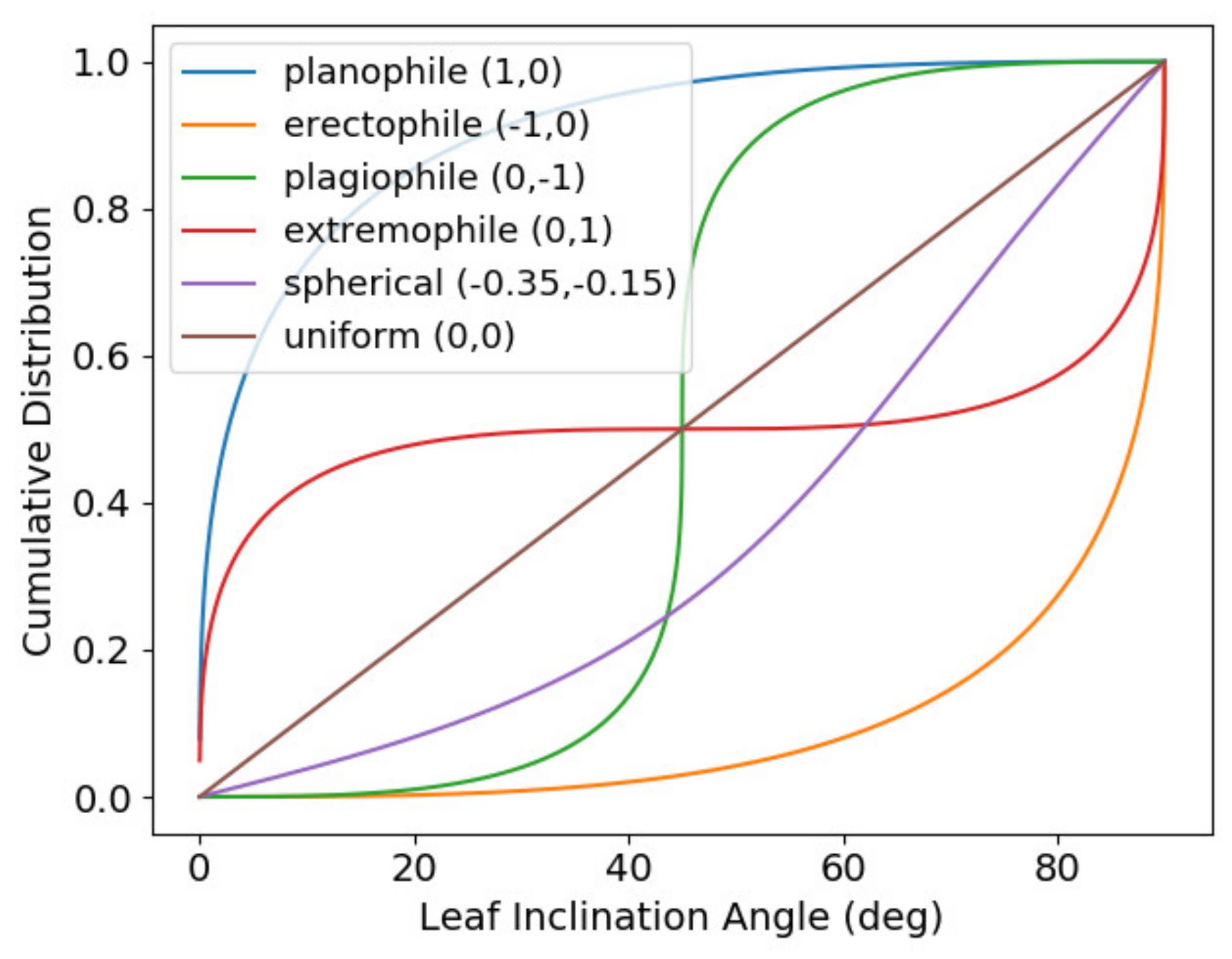

2.5. Leaf Angle Distribution Usage

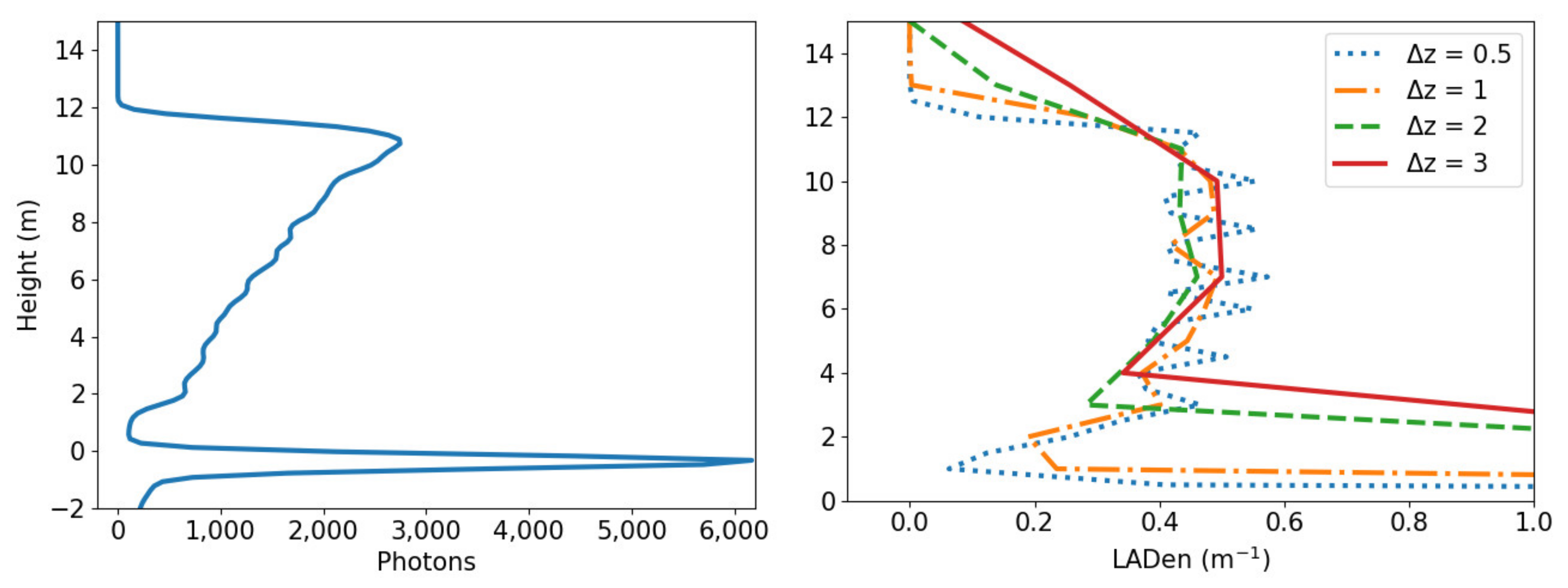

2.6. Leaf Area Density and Leaf Area Index

2.7. Sensitivity Study Overview

2.8. Waveform Comparison Metrics

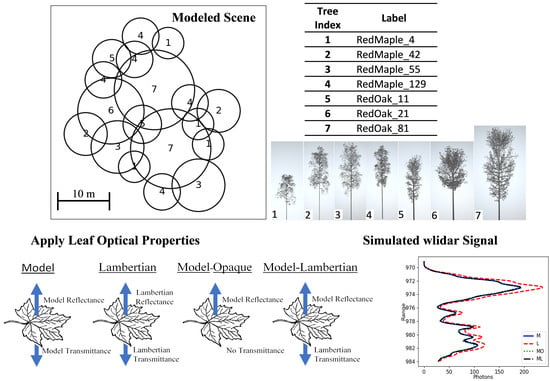

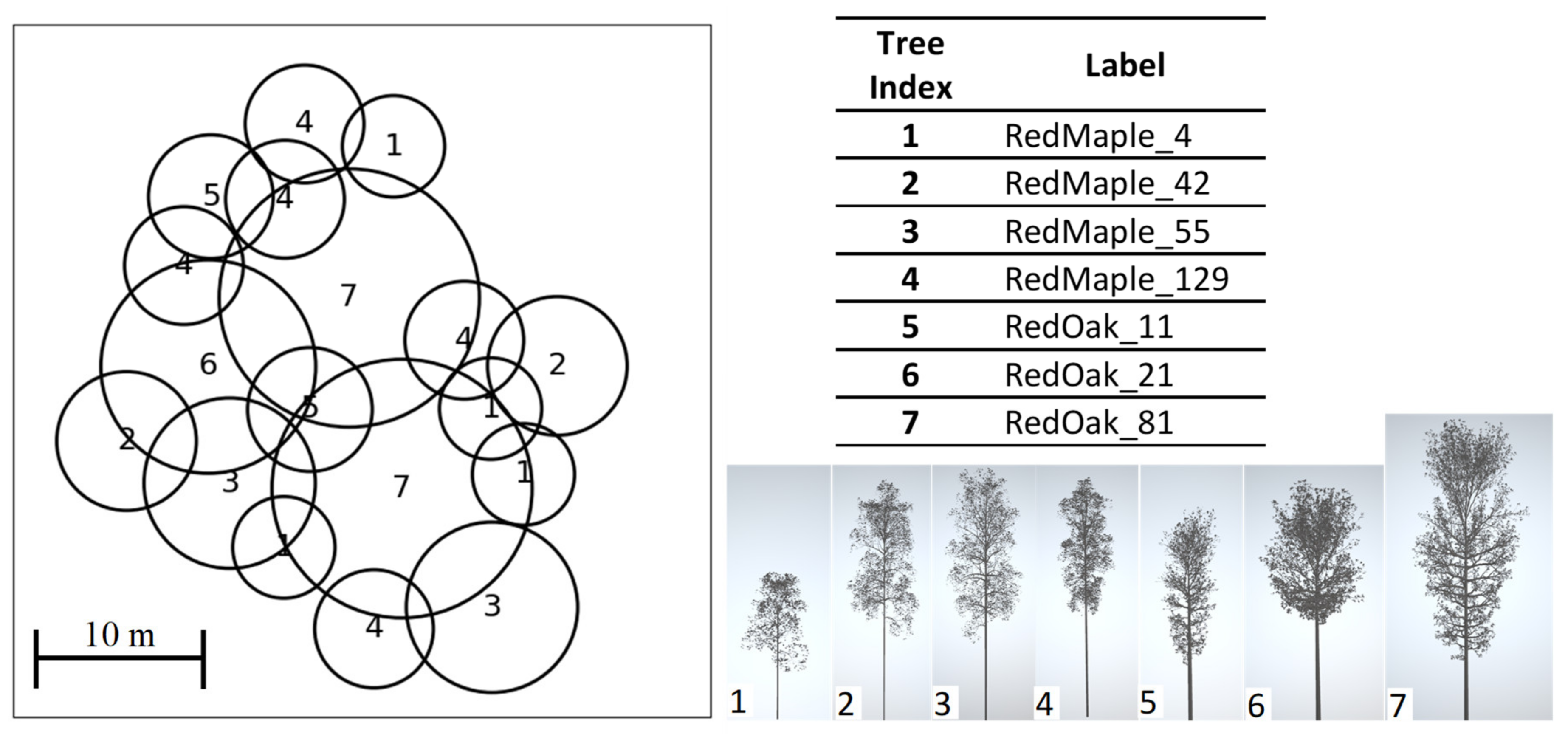

2.9. Maple and Oak Grove Scene

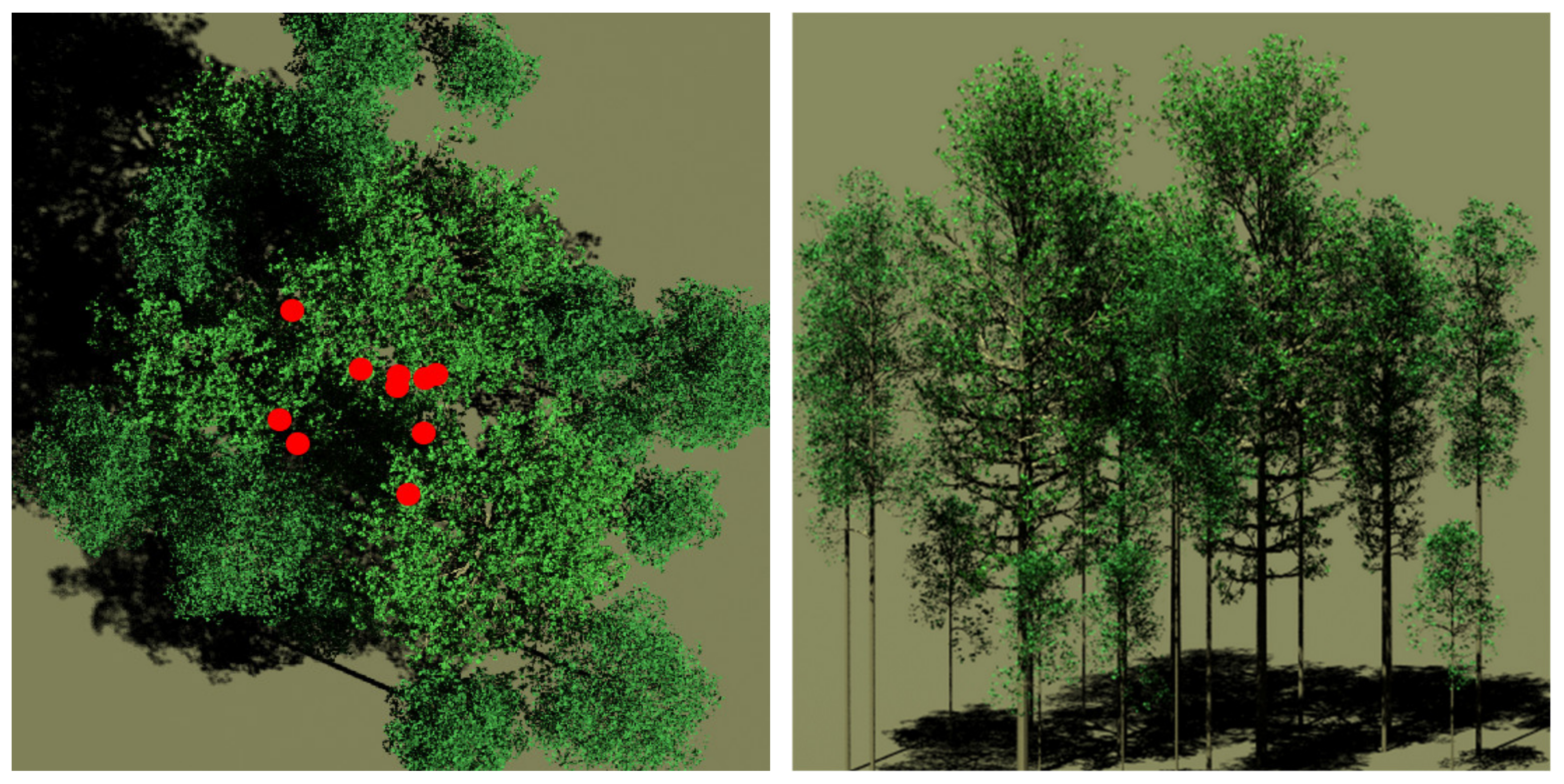

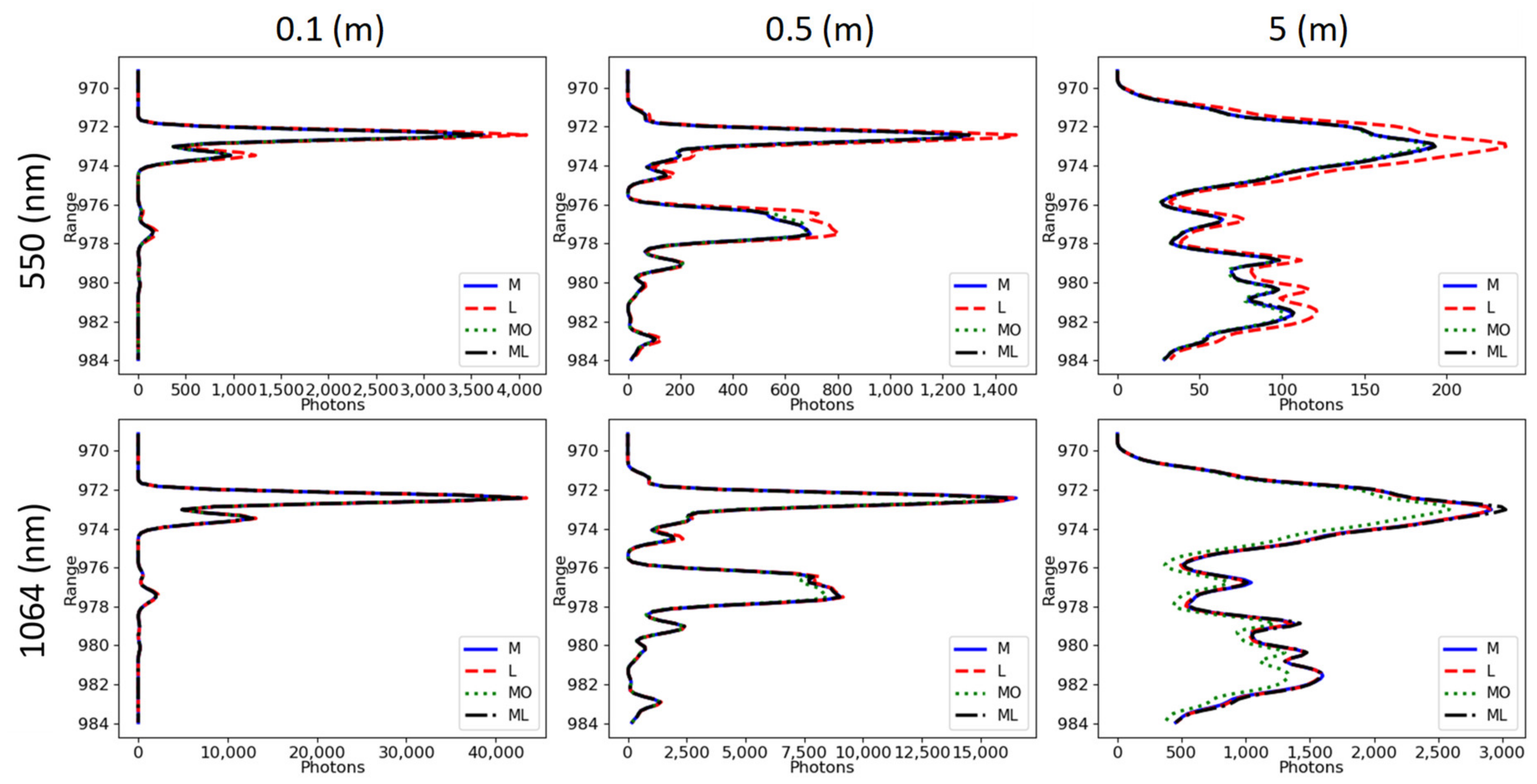

3. Results

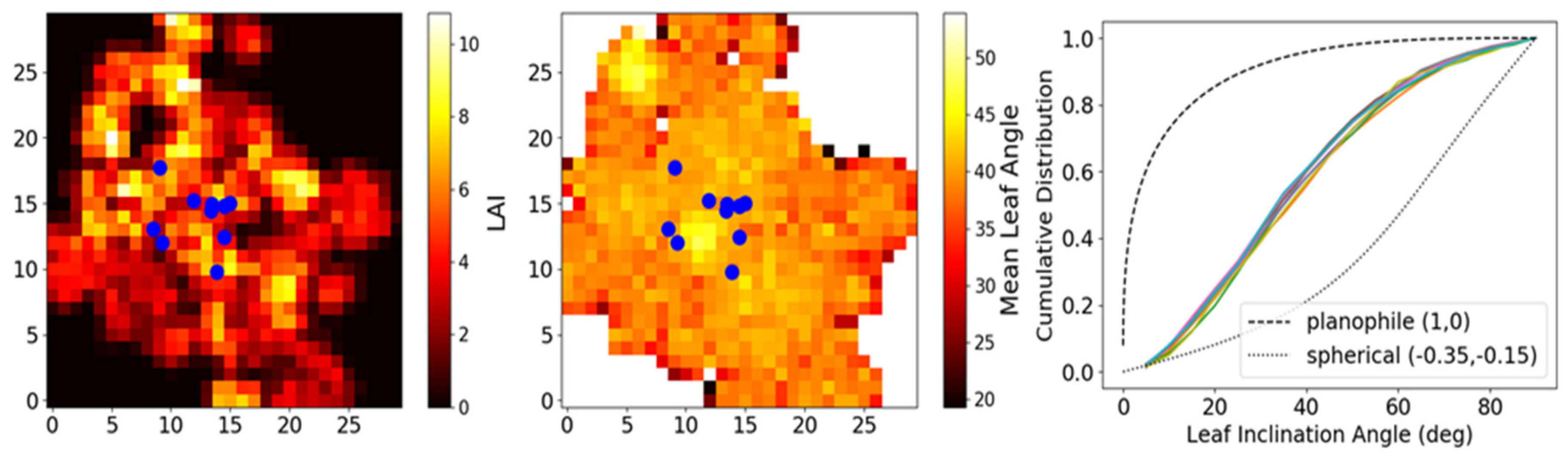

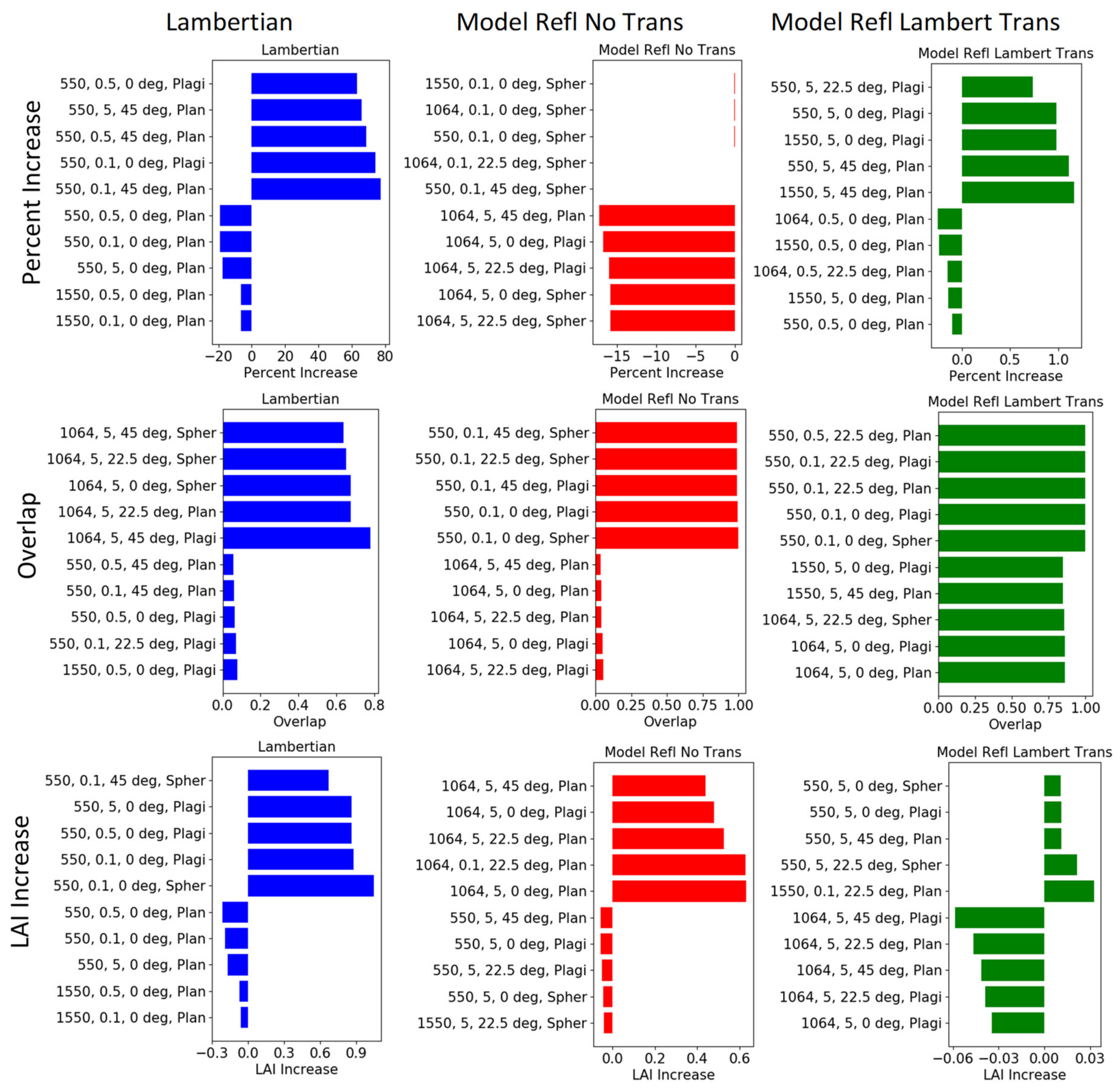

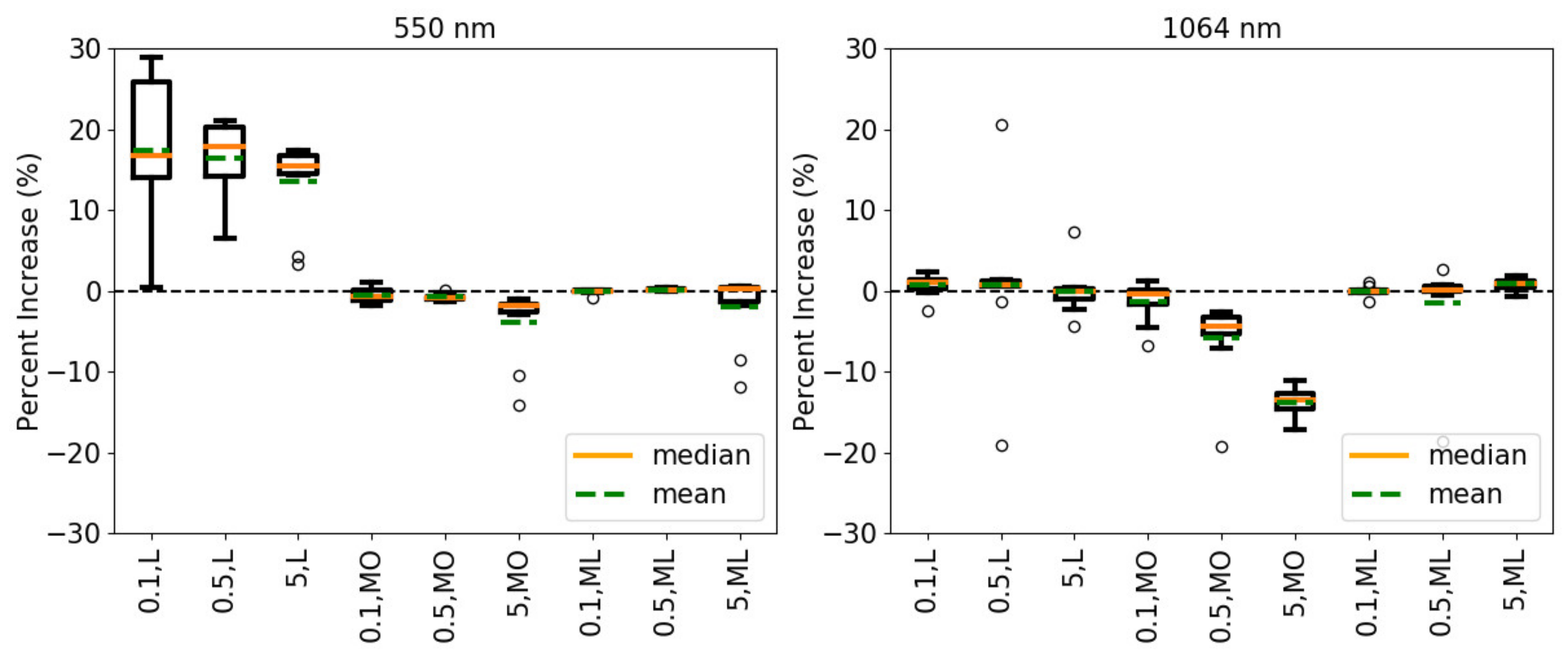

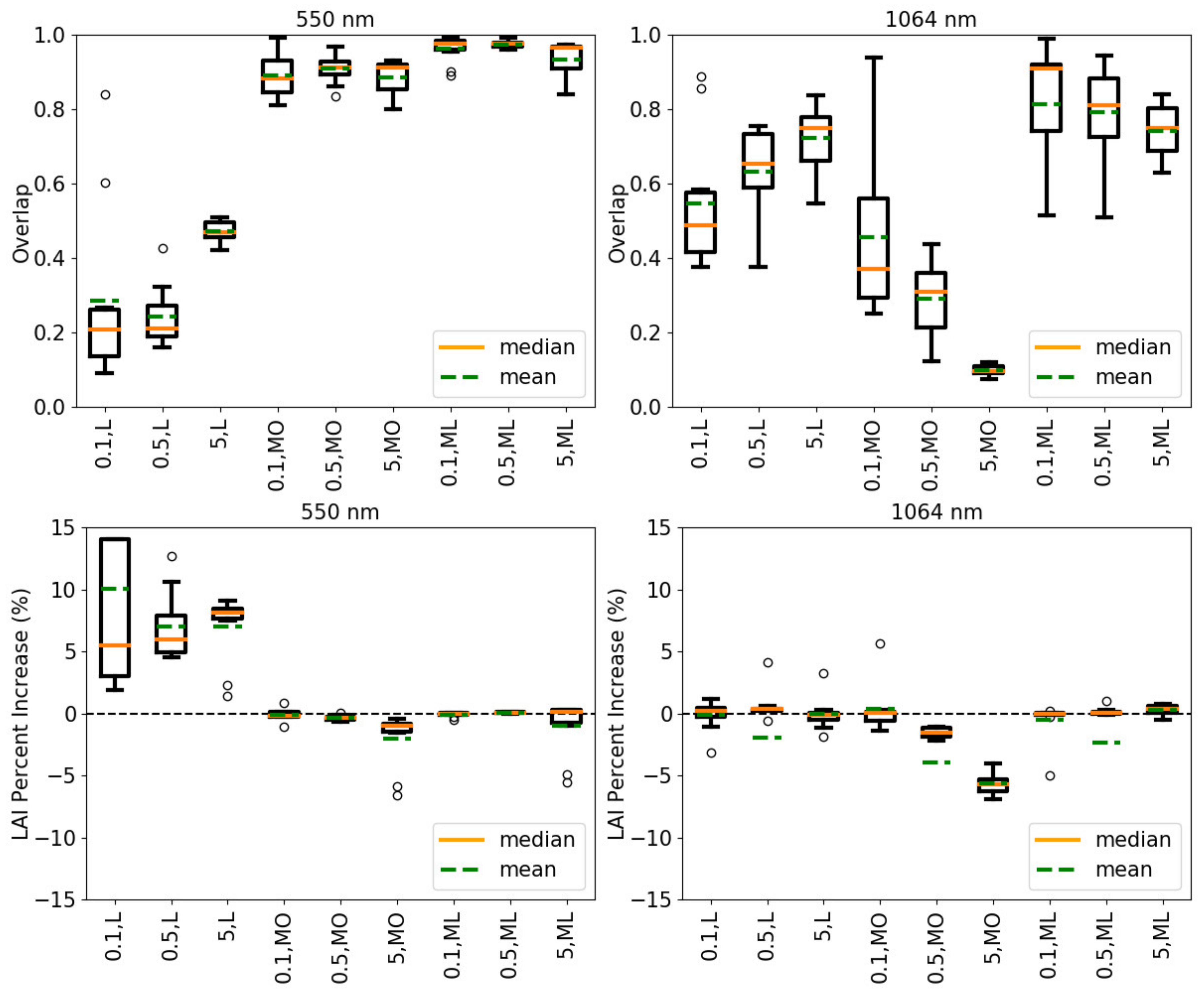

3.1. Vegetation Layer Results

3.2. Maple and Oak Grove Scene Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Percent Increase | 550, 0.1 | 550, 0.5 | 550, 5 | 1064, 0.1 | 1064, 0.5 | 1064, 5 | 1550, 0.1 | 1550, 0.5 | 1550, 5 |

|---|---|---|---|---|---|---|---|---|---|

| 0 deg, Sp, L | 25.66% | 22.38% | 22.32% | 3.72% | 3.01% | 1.85% | 6.02% | 5.14% | 4.28% |

| 0 deg, Sp, MO | −0.04% | −0.58% | −2.61% | −0.07% | −1.67% | −15.85% | −0.09% | −1.50% | −8.74% |

| 0 deg, Sp, ML | 0.01% | 0.11% | 0.68% | 0.00% | −0.04% | 0.42% | 0.01% | 0.05% | 0.50% |

| 0 deg, Plan, L | −19.03% | −19.07% | −17.44% | −4.42% | −4.52% | −3.97% | −6.47% | −6.55% | −5.82% |

| 0 deg, Plan, MO | −0.10% | −0.90% | −2.24% | −0.32% | −2.94% | −13.12% | −0.29% | −2.47% | −7.98% |

| 0 deg, Plan, ML | −0.03% | −0.10% | −0.02% | −0.05% | −0.25% | −0.09% | −0.06% | −0.24% | −0.14% |

| 0 deg, Plag, L | 73.95% | 63.22% | 60.02% | 8.19% | 7.12% | 4.99% | 13.49% | 12.02% | 10.86% |

| 0 deg, Plag, MO | −0.10% | −0.96% | −3.63% | −0.30% | −2.41% | −16.70% | −0.26% | −2.08% | −9.61% |

| 0 deg, Plag, ML | 0.01% | 0.19% | 0.98% | −0.01% | 0.00% | 0.70% | −0.01% | 0.11% | 0.98% |

| 22.5 deg, Sp, L | 33.82% | 30.23% | 29.43% | 4.61% | 3.91% | 2.43% | 7.51% | 6.69% | 5.68% |

| 22.5 deg, Sp, MO | −0.13% | −0.75% | −3.00% | −0.01% | −1.90% | −15.83% | −0.20% | −1.71% | −8.78% |

| 22.5 deg, Sp, ML | 0.03% | 0.13% | 0.66% | −0.01% | −0.03% | 0.69% | 0.01% | 0.10% | 0.66% |

| 22.5 deg, Plan, L | 9.87% | 17.48% | 18.20% | 1.36% | 2.27% | 1.86% | 2.38% | 4.07% | 4.05% |

| 22.5 deg, Plan, MO | −0.23% | −1.24% | −3.05% | −0.71% | −3.38% | −14.18% | −0.57% | −2.83% | −8.71% |

| 22.5 deg, Plan, ML | 0.00% | 0.00% | 0.32% | −0.04% | −0.15% | −0.07% | −0.01% | −0.04% | 0.28% |

| 22.5 deg, Plag, L | 41.30% | 40.11% | 39.35% | 5.32% | 4.97% | 3.29% | 8.75% | 8.47% | 7.68% |

| 22.5 deg, Plag, MO | −0.18% | −0.93% | −3.38% | −0.10% | −2.40% | −15.97% | −0.28% | −2.13% | −9.23% |

| 22.5 deg, Plag, ML | 0.00% | 0.20% | 0.73% | −0.03% | −0.03% | 0.33% | 0.00% | 0.09% | 0.73% |

| 45 deg, Sp, L | 35.91% | 39.51% | 35.47% | 4.64% | 4.93% | 3.01% | 7.69% | 8.33% | 6.60% |

| 45 deg, Sp, MO | 0.00% | −0.70% | −2.89% | −0.15% | −1.79% | −15.29% | −0.23% | −1.60% | −8.81% |

| 45 deg, Sp, ML | −0.02% | 0.15% | 0.58% | 0.00% | −0.02% | 0.63% | −0.01% | 0.08% | 0.66% |

| 45 deg, Plan, L | 77.44% | 68.79% | 65.75% | 8.35% | 7.57% | 5.60% | 13.86% | 12.78% | 11.70% |

| 45 deg, Plan, MO | −0.59% | −1.41% | −5.11% | −0.94% | −3.05% | −17.24% | −0.91% | −2.64% | −10.83% |

| 45 deg, Plan, ML | 0.09% | 0.29% | 1.11% | −0.01% | −0.01% | 0.56% | 0.07% | 0.19% | 1.17% |

| 45 deg, Plag, L | 30.35% | 23.37% | 17.72% | 4.06% | 3.14% | 1.50% | 6.73% | 5.37% | 3.53% |

| 45 deg, Plag, MO | −0.11% | −0.71% | −2.70% | −0.12% | −1.93% | −14.32% | −0.18% | −1.70% | −8.28% |

| 45 deg, Plag, ML | 0.03% | 0.10% | 0.36% | −0.01% | 0.02% | 0.46% | 0.02% | 0.07% | 0.49% |

| Overlap | 550, 0.1 | 550, 0.5 | 550, 5 | 1064, 0.1 | 1064, 0.5 | 1064, 5 | 1550, 0.1 | 1550, 0.5 | 1550, 5 |

|---|---|---|---|---|---|---|---|---|---|

| 0 deg, Sp, L | 0.2301 | 0.1865 | 0.278 | 0.3254 | 0.4424 | 0.6758 | 0.2509 | 0.2572 | 0.4672 |

| 0 deg, Sp, MO | 0.9975 | 0.9555 | 0.8668 | 0.9761 | 0.639 | 0.0689 | 0.9769 | 0.6928 | 0.1593 |

| 0 deg, Sp, ML | 0.999 | 0.9934 | 0.9611 | 0.9945 | 0.98 | 0.8796 | 0.9959 | 0.9869 | 0.919 |

| 0 deg, Plan, L | 0.0881 | 0.1194 | 0.1773 | 0.147 | 0.1878 | 0.3409 | 0.1017 | 0.1314 | 0.2101 |

| 0 deg, Plan, MO | 0.9863 | 0.8966 | 0.8277 | 0.8712 | 0.3311 | 0.0416 | 0.8908 | 0.413 | 0.1224 |

| 0 deg, Plan, ML | 0.9965 | 0.9872 | 0.9882 | 0.9772 | 0.9137 | 0.8608 | 0.977 | 0.922 | 0.9271 |

| 0 deg, Plag, L | 0.0804 | 0.063 | 0.0842 | 0.1143 | 0.1397 | 0.3807 | 0.0913 | 0.0759 | 0.1397 |

| 0 deg, Plag, MO | 0.9918 | 0.9381 | 0.837 | 0.9099 | 0.5044 | 0.0503 | 0.9298 | 0.5902 | 0.1355 |

| 0 deg, Plag, ML | 0.999 | 0.989 | 0.9487 | 0.9905 | 0.9863 | 0.859 | 0.9947 | 0.9716 | 0.8451 |

| 22.5 deg, Sp, L | 0.2287 | 0.1662 | 0.2524 | 0.3179 | 0.3596 | 0.6534 | 0.2557 | 0.2153 | 0.4231 |

| 22.5 deg, Sp, MO | 0.9896 | 0.9483 | 0.8753 | 0.9462 | 0.6135 | 0.065 | 0.9487 | 0.6688 | 0.2145 |

| 22.5 deg, Sp, ML | 0.9982 | 0.9914 | 0.9679 | 0.9926 | 0.9841 | 0.8548 | 0.9957 | 0.9712 | 0.9019 |

| 22.5 deg, Plan, L | 0.0763 | 0.196 | 0.3274 | 0.2255 | 0.4595 | 0.6776 | 0.1092 | 0.2628 | 0.4516 |

| 22.5 deg, Plan, MO | 0.9732 | 0.891 | 0.8358 | 0.7504 | 0.3313 | 0.0425 | 0.8086 | 0.4088 | 0.1525 |

| 22.5 deg, Plan, ML | 0.9989 | 0.9986 | 0.9821 | 0.9824 | 0.9493 | 0.8881 | 0.9949 | 0.984 | 0.9285 |

| 22.5 deg, Plag, L | 0.0687 | 0.1121 | 0.1689 | 0.1472 | 0.2646 | 0.5686 | 0.0866 | 0.1448 | 0.2694 |

| 22.5 deg, Plag, MO | 0.985 | 0.9374 | 0.8562 | 0.9414 | 0.5179 | 0.0526 | 0.9205 | 0.5924 | 0.1833 |

| 22.5 deg, Plag, ML | 0.9987 | 0.9868 | 0.9675 | 0.9868 | 0.9805 | 0.893 | 0.993 | 0.9688 | 0.8923 |

| 45 deg, Sp, L | 0.1812 | 0.1275 | 0.2485 | 0.291 | 0.3128 | 0.6369 | 0.2158 | 0.1794 | 0.4123 |

| 45 deg, Sp, MO | 0.9871 | 0.9599 | 0.8986 | 0.856 | 0.6701 | 0.0766 | 0.9033 | 0.7213 | 0.2662 |

| 45 deg, Sp, ML | 0.99 | 0.9909 | 0.9777 | 0.9795 | 0.9613 | 0.8877 | 0.975 | 0.975 | 0.9159 |

| 45 deg, Plan, L | 0.061 | 0.0576 | 0.1055 | 0.1267 | 0.1331 | 0.3971 | 0.0822 | 0.078 | 0.1474 |

| 45 deg, Plan, MO | 0.9584 | 0.9191 | 0.8274 | 0.7537 | 0.4523 | 0.0361 | 0.7833 | 0.5442 | 0.1678 |

| 45 deg, Plan, ML | 0.9934 | 0.9825 | 0.9588 | 0.993 | 0.9814 | 0.8743 | 0.9808 | 0.9582 | 0.8481 |

| 45 deg, Plag, L | 0.1395 | 0.223 | 0.4765 | 0.307 | 0.4612 | 0.7817 | 0.1974 | 0.3022 | 0.6126 |

| 45 deg, Plag, MO | 0.9903 | 0.9505 | 0.8922 | 0.9053 | 0.625 | 0.0673 | 0.9438 | 0.6854 | 0.2666 |

| 45 deg, Plag, ML | 0.9985 | 0.9937 | 0.986 | 0.9941 | 0.9792 | 0.8785 | 0.994 | 0.9809 | 0.9223 |

| LAI | 550, 0.1 | 550, 0.5 | 550, 5 | 1064, 0.1 | 1064, 0.5 | 1064, 5 | 1550, 0.1 | 1550, 0.5 | 1550, 5 |

|---|---|---|---|---|---|---|---|---|---|

| 0 deg, Sp, L | 5.0463 | 4.5001 | 4.5566 | 4.1367 | 4.0651 | 4.0431 | 4.2234 | 4.1114 | 4.1026 |

| 0 deg, Sp, MO | 3.9983 | 3.9859 | 3.9569 | 3.998 | 3.9756 | 4.3485 | 3.9967 | 3.9685 | 3.9645 |

| 0 deg, Sp, ML | 4.0006 | 4.0029 | 4.0106 | 4 | 3.9987 | 3.9754 | 4.0003 | 4.0005 | 3.9872 |

| 0 deg, Plan, L | 3.808 | 3.7838 | 3.8298 | 3.9577 | 3.9517 | 3.9523 | 3.9373 | 3.9288 | 3.9431 |

| 0 deg, Plan, MO | 3.9991 | 3.9908 | 3.9854 | 3.9978 | 3.9794 | 4.6313 | 3.9975 | 3.9748 | 4.0366 |

| 0 deg, Plan, ML | 3.9998 | 3.999 | 4.0008 | 3.9996 | 3.9971 | 3.9723 | 3.9995 | 3.9972 | 3.9881 |

| 0 deg, Plag, L | 4.8775 | 4.8639 | 4.859 | 4.1038 | 4.0983 | 4.068 | 4.1699 | 4.1672 | 4.1393 |

| 0 deg, Plag, MO | 3.9983 | 3.9821 | 3.9439 | 3.9967 | 3.9735 | 4.4781 | 3.9966 | 3.9683 | 3.9795 |

| 0 deg, Plag, ML | 4.0001 | 4.0035 | 4.0109 | 4 | 3.9983 | 3.9656 | 3.9999 | 4.0013 | 3.9888 |

| 22.5 deg, Sp, L | 4.498 | 4.5845 | 4.6436 | 4.069 | 4.0739 | 4.0435 | 4.1126 | 4.1274 | 4.1095 |

| 22.5 deg, Sp, MO | 3.9978 | 3.984 | 3.9606 | 4.0007 | 3.9755 | 4.3375 | 3.9969 | 3.9684 | 3.9599 |

| 22.5 deg, Sp, ML | 4.0005 | 4.0036 | 4.0214 | 3.9995 | 3.9989 | 3.9722 | 4 | 4.0016 | 3.9839 |

| 22.5 deg, Plan, L | 3.9771 | 4.1693 | 4.1501 | 3.9522 | 4.0227 | 3.9962 | 4.0191 | 4.0401 | 4.0256 |

| 22.5 deg, Plan, MO | 3.9997 | 3.9863 | 3.972 | 4.6291 | 3.9745 | 4.5246 | 4.1428 | 3.9707 | 4 |

| 22.5 deg, Plan, ML | 3.9999 | 3.9999 | 4.0037 | 4.0084 | 3.9979 | 3.9532 | 4.0324 | 3.9994 | 3.9812 |

| 22.5 deg, Plag, L | 4.3665 | 4.5566 | 4.5504 | 4.0506 | 4.0702 | 4.0225 | 4.0828 | 4.1196 | 4.1018 |

| 22.5 deg, Plag, MO | 3.9981 | 3.9842 | 3.9503 | 4.0002 | 3.9755 | 4.3937 | 3.9973 | 3.9692 | 3.9754 |

| 22.5 deg, Plag, ML | 4 | 4.0034 | 4.0071 | 3.9997 | 3.999 | 3.9612 | 4 | 4.0007 | 3.9881 |

| 45 deg, Sp, L | 4.6692 | 4.5464 | 4.3977 | 4.0864 | 4.0697 | 4.0145 | 4.1431 | 4.118 | 4.0733 |

| 45 deg, Sp, MO | 4.0001 | 3.9884 | 3.9646 | 3.9977 | 3.9829 | 4.43 | 3.9957 | 3.9772 | 3.9842 |

| 45 deg, Sp, ML | 3.9995 | 4.0025 | 4.0034 | 4.0001 | 3.9991 | 3.9778 | 3.9998 | 4.0009 | 3.9912 |

| 45 deg, Plan, L | 4.6175 | 4.5557 | 4.432 | 4.0761 | 4.0673 | 4.0214 | 4.1247 | 4.1135 | 4.0776 |

| 45 deg, Plan, MO | 3.9936 | 3.9843 | 3.9431 | 3.9915 | 3.9795 | 4.4404 | 3.9912 | 3.9742 | 3.9687 |

| 45 deg, Plan, ML | 4.001 | 4.0033 | 4.0112 | 3.9998 | 3.9987 | 3.9588 | 4.0007 | 4.0016 | 4.0005 |

| 45 deg, Plag, L | 4.3773 | 4.2722 | 4.1884 | 4.0525 | 4.0375 | 3.9813 | 4.0865 | 4.064 | 4.035 |

| 45 deg, Plag, MO | 3.9984 | 3.9908 | 3.9719 | 3.9989 | 3.9858 | 4.3718 | 3.9976 | 3.9799 | 3.9948 |

| 45 deg, Plag, ML | 4.0004 | 4.0012 | 4.0037 | 3.9999 | 3.9992 | 3.9416 | 4.0002 | 4.0004 | 3.9913 |

References

- McRoberts, R.E.; Tomppo, E.O. Remote sensing support for national forest inventories. Remote Sens. Environ. 2006, 110, 412–419. [Google Scholar] [CrossRef]

- Franklin, S. Remote Sensing for Sustainable Forest Management; CRC Press: Boca Raton, FL, USA, 2010. [Google Scholar]

- Berk, A.; Conforti, P.; Kennett, R.; Perkins, T.; Hawes, F.; Van Den Bosch, J. MODTRAN® 6: A major upgrade of the MODTRAN® radiative transfer code. In Proceedings of the 2014 6th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lausanne, Switzerland, 24–27 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–4. [Google Scholar]

- Gastellu-Etchegorry, J.P.; Yin, T.; Lauret, N.; Cajgfinger, T.; Gregoire, T.; Grau, E.; Feret, J.B.; Lopes, M.; Guilleux, J.; Dedieu, G.; et al. Discrete anisotropic radiative transfer (DART 5) for modeling airborne and satellite spectroradiometer and LIDAR acquisitions of natural and urban landscapes. Remote Sens. 2015, 7, 1667–1701. [Google Scholar] [CrossRef]

- Han, Y. JCSDA Community Radiative Transfer Model (CRTM): Version 1; U.S. Department of Commerce: Washington, DC, USA, 2006.

- Stamnes, K.; Tsay, S.-C.; Wiscombe, W.; Laszlo, I. DISORT, A General-Purpose Fortran Program for Discrete-Ordinate-Method Radiative Transfer in Scattering and Emitting Layered Media: Documentation of Methodology; NASA Technical Reports; NASA: Washington, DC, USA, 2000.

- Fiorino, S.T.; Bartell, R.J.; Krizo, M.J.; Caylor, G.L.; Moore, K.P.; Harris, T.R.; Cusumano, S.J. A first principles atmospheric propagation & characterization tool: The laser environmental effects definition and reference (LEEDR). In Proceedings of the Atmospheric Propagation of Electromagnetic Waves II; International Society for Optics and Photonics: Bellingham, WA, USA, 2008; Volume 6878, p. 68780B. [Google Scholar]

- Disney, M.I.; Kalogirou, V.; Lewis, P.; Prieto-Blanco, A.; Hancock, S.; Pfeifer, M. Simulating the impact of discrete-return lidar system and survey characteristics over young conifer and broadleaf forests. Remote Sens. Environ. 2010, 114, 1546–1560. [Google Scholar] [CrossRef]

- Govaerts, Y.M.; Verstraete, M.M. Raytran: A Monte Carlo ray-tracing model to compute light scattering in three-dimensional heterogeneous media. IEEE Trans. Geosci. Remote Sens. 1998, 36, 493–505. [Google Scholar] [CrossRef]

- Ni, W.; Li, X.; Woodcock, C.E.; Caetano, M.R.; Strahler, A.H. An analytical hybrid GORT model for bidirectional reflectance over discontinuous plant canopies. IEEE Trans. Geosci. Remote Sens. 1999, 37, 987–999. [Google Scholar] [CrossRef]

- Goodenough, A.A.; Brown, S.D. DIRSIG 5: Core design and implementation. In Proceedings of the Algorithms Technol. Multispectral, Hyperspectral, Ultraspectral Imag XVIII, Baltimore, MD, USA, 9 May 2012; Volume 8390. [Google Scholar]

- Morsdorf, F.; Nichol, C.; Malthus, T.; Woodhouse, I.H. Assessing forest structural and physiological information content of multi-spectral LiDAR waveforms by radiative transfer modelling. Remote Sens. Environ. 2009, 113, 2152–2163. [Google Scholar] [CrossRef]

- Ross, J.K.; Marshak, A.L. Calculation of canopy bidirectional reflectance using the Monte Carlo method. Remote Sens. Environ. 1988, 24, 213–225. [Google Scholar] [CrossRef]

- Chelle, M.; Andrieu, B. Radiative models for architectural modeling. Agronomie 1999, 19, 225–240. [Google Scholar] [CrossRef][Green Version]

- Newton, A.; Muller, J.; Pearson, J. Spot Dem Shading For Landsat-tm Topographic correction. In Proceedings of the IGARSS’91 Remote Sensing: Global Monitoring for Earth Management; IEEE: New York, NY, USA, 1991; Volume 2, pp. 655–659. [Google Scholar]

- Burgess, D.W.; Lewis, P.; Muller, J.-P. Topographic effects in AVHRR NDVI data. Remote Sens. Environ. 1995, 54, 223–232. [Google Scholar] [CrossRef]

- Antyufeev, V.S.; Marshak, A.L. Inversion of Monte Carlo model for estimating vegetation canopy parameters. Remote Sens. Environ. 1990, 33, 201–209. [Google Scholar] [CrossRef]

- Kuo, S.D.; Schott, J.R.; Chang, C.Y. Synthetic image generation of chemical plumes for hyperspectral applications. Opt. Eng. 2000, 39, 1047–1056. [Google Scholar] [CrossRef][Green Version]

- Hancock, S.; Armston, J.; Hofton, M.; Sun, X.; Tang, H.; Duncanson, L.I.; Kellner, J.R.; Dubayah, R. The GEDI simulator: A large-footprint waveform lidar simulator for calibration and validation of spaceborne missions. Earth SP Sci. 2019, 6, 294–310. [Google Scholar] [CrossRef] [PubMed]

- Schott, J.R.; Brown, S.D.; Raqueño, R.V.; Gross, H.N.; Robinson, G. An Advanced Synthetic Image Generation Model and its Application to Multi/Hyperspectral Algorithm Development. Can. J. Remote Sens. 1999, 25, 99–111. [Google Scholar] [CrossRef]

- Kuusk, A. A two-layer canopy reflectance model. J. Quant. Spectrosc. Radiat. Transf. 2001, 71, 1–9. [Google Scholar] [CrossRef]

- Huang, D.; Knyazikhin, Y.; Wang, W.; Deering, D.W.; Stenberg, P.; Shabanov, N.; Tan, B.; Myneni, R.B. Stochastic transport theory for investigating the three-dimensional canopy structure from space measurements. Remote Sens. Environ. 2008, 112, 35–50. [Google Scholar] [CrossRef]

- Shabanov, N.V.; Huang, D.; Knjazikhin, Y.; Dickinson, R.E.; Myneni, R.B. Stochastic radiative transfer model for mixture of discontinuous vegetation canopies. J. Quant. Spectrosc. Radiat. Transf. 2007, 107, 236–262. [Google Scholar] [CrossRef][Green Version]

- Qin, W.; Gerstl, S.A. 3-D Scene Modeling of Semidesert Vegetation Cover and its Radiation Regime. Remote Sens. Environ. 2000, 74, 145–162. [Google Scholar] [CrossRef]

- Yang, B.; Knyazikhin, Y.; Zhao, H.; Ma, Y. Contribution of leaf specular reflection to canopy reflectance under black soil case using stochastic radiative transfer model. Agric. For. Meteorol. 2018, 263, 477–482. [Google Scholar] [CrossRef]

- Yang, B.; Knyazikhin, Y.; Mõttus, M.; Rautiainen, M.; Stenberg, P.; Yan, L.; Chen, C.; Yan, K.; Choi, S.; Park, T.; et al. Estimation of leaf area index and its sunlit portion from DSCOVR EPIC data: Theoretical basis. Remote Sens. Environ. 2017, 198, 69–84. [Google Scholar] [CrossRef]

- Xie, D.; Qin, W.; Wang, P.; Shuai, Y.; Zhou, Y.; Zhu, Q. Influences of Leaf-Specular Reflection on Canopy BRF Characteristics: A Case Study of Real Maize Canopies with a 3-D Scene BRDF Model. IEEE Trans. Geosci. Remote Sens. 2017, 55, 619–631. [Google Scholar] [CrossRef]

- Ross, J.; Marshak, A. The influence of leaf orientation and the specular component of leaf reflectance on the canopy bidirectional reflectance. Remote Sens. Environ. 1989, 27, 251–260. [Google Scholar] [CrossRef]

- Walter-Shea, E.A. Laboratory and Field Measurements of Leaf Spectral Properties and Canopy Architecture and their Effects on Canopy Reflectance. Ph.D. Thesis, University of Nebraska, Lincoln, NE, USA, 1987. [Google Scholar]

- Kampe, T.U.; Johnson, B.R.; Kuester, M.A.; Keller, M. NEON: The first continental-scale ecological observatory with airborne remote sensing of vegetation canopy biochemistry and structure. J. Appl. Remote Sens. 2010, 4, 43510. [Google Scholar] [CrossRef]

- Brock, J.C.; Wright, C.W.; Clayton, T.D.; Nayegandhi, A. LIDAR optical rugosity of coral reefs in Biscayne National Park, Florida. Coral Reefs 2004, 23, 48–59. [Google Scholar] [CrossRef]

- Blair, J.B.; Rabine, D.L.; Hofton, M.A. The Laser Vegetation Imaging Sensor: A medium-altitude, digitisation-only, airborne laser altimeter for mapping vegetation and topography. ISPRS J. Photogramm. Remote Sens. 1999, 54, 115–122. [Google Scholar] [CrossRef]

- Cook, B.D.; Nelson, R.F.; Middleton, E.M.; Morton, D.C.; McCorkel, J.T.; Masek, J.G.; Ranson, K.J.; Ly, V.; Montesano, P.M. NASA Goddard’s LiDAR, hyperspectral and thermal (G-LiHT) airborne imager. Remote Sens. 2013, 5, 4045–4066. [Google Scholar] [CrossRef]

- Means, J.E.; Acker, S.A.; Harding, D.J.; Blair, J.B.; Lefsky, M.A.; Cohen, W.B.; Harmon, M.E.; McKee, W.A. Use of Large-Footprint Scanning Airborne Lidar To Estimate Forest Stand Characteristics in the Western Cascades of Oregon. Remote Sens. Environ. 1999, 67, 298–308. [Google Scholar] [CrossRef]

- Hollaus, M.; Mücke, W.; Roncat, A.; Pfeifer, N.; Briese, C. Full-waveform airborne laser scanning systems and their possibilities in forest applications. In Forestry Applications of Airborne Laser Scanning; Springer: Berlin/Heidelberg, Germany, 2014; pp. 43–61. [Google Scholar]

- Schutz, B.E.; Zwally, H.J.; Shuman, C.A.; Hancock, D.; DiMarzio, J.P. Overview of the ICESat mission. Geophys. Res. Lett. 2005, 32. [Google Scholar] [CrossRef]

- Abdalati, W.; Zwally, H.J.; Bindschadler, R.; Csatho, B.; Farrell, S.L.; Fricker, H.A.; Harding, D.; Kwok, R.; Lefsky, M.; Markus, T. The ICESat-2 laser altimetry mission. Proc. IEEE 2010, 98, 735–751. [Google Scholar] [CrossRef]

- Stavros, E.N.; Schimel, D.; Pavlick, R.; Serbin, S.; Swann, A.; Duncanson, L.; Fisher, J.B.; Fassnacht, F.; Ustin, S.; Dubayah, R. ISS observations offer insights into plant function. Nat. Ecol. Evol. 2017, 1, 1–5. [Google Scholar] [CrossRef]

- Gastellu-Etchegorry, J.-P.; Yin, T.; Lauret, N.; Grau, E.; Rubio, J.; Cook, B.D.; Morton, D.C.; Sun, G. Simulation of satellite, airborne and terrestrial LiDAR with DART (I): Waveform simulation with quasi-Monte Carlo ray tracing. Remote Sens. Environ. 2016, 184, 418–435. [Google Scholar] [CrossRef]

- Wagner, W.; Ullrich, A.; Ducic, V.; Melzer, T.; Studnicka, N. Gaussian decomposition and calibration of a novel small-footprint full-waveform digitising airborne laser scanner. ISPRS J. Photogramm. Remote Sens. 2006, 60, 100–112. [Google Scholar] [CrossRef]

- Korpela, I.; Ørka, H.O.; Hyyppä, J.; Heikkinen, V.; Tokola, T. Range and AGC normalization in airborne discrete-return LiDAR intensity data for forest canopies. ISPRS J. Photogramm. Remote Sens. 2010, 65, 369–379. [Google Scholar] [CrossRef]

- Wagner, W.; Hyyppa, J.; Ullrich, A.; Lehner, H.; Briese, C.; Kaasalainen, S. Radiometric calibration of full-waveform small-footprint airborne laser scanners. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 163–168. [Google Scholar]

- Jutzi, B.; Gross, H.; Sensing, R.; Karlsruhe, U. Normalization of Lidar Intensity Data Based on Range and Surface Incidence Angle. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 38, 213–218. [Google Scholar]

- Gross, H.; Thoennessen, U. Extraction of lines from laser point clouds. Symp. ISPRS Comm. III Photogramm. Comput. Vis. 2006, 36, 86–91. [Google Scholar]

- Zhu, X.; Wang, T.; Darvishzadeh, R.; Skidmore, A.K.; Niemann, K.O. 3D leaf water content mapping using terrestrial laser scanner backscatter intensity with radiometric correction. ISPRS J. Photogramm. Remote Sens. 2015, 110, 14–23. [Google Scholar] [CrossRef]

- Beckmann, P.; Spizzichino, A. The scattering of Electromagnetic Waves from Rough Surfaces; Pergamon Press: New York, NY, USA, 1987. [Google Scholar]

- Lefsky, M.A.; Cohen, W.B.; Acker, S.A.; Parker, G.G.; Spies, T.A.; Harding, D. Lidar Remote Sensing of the Canopy Structure and Biophysical Properties of Douglas-Fir Western Hemlock Forests. Remote Sens. Environ. 1999, 70, 339–361. [Google Scholar] [CrossRef]

- McGlinchy, J.; Van Aardt, J.A.N.; Erasmus, B.; Asner, G.P.; Mathieu, R.; Wessels, K.; Knapp, D.; Kennedy-Bowdoin, T.; Rhody, H.; Kerekes, J.P. Extracting structural vegetation components from small-footprint waveform lidar for biomass estimation in savanna ecosystems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 480–490. [Google Scholar] [CrossRef]

- Sarrazin, M.J.D.; Van Aardt, J.A.N.; Asner, G.P.; McGlinchy, J.; Messinger, D.W.; Wu, J. Fusing small-footprint waveform LiDAR and hyperspectral data for canopy-level species classification and herbaceous biomass modeling in savanna ecosystems. Can. J. Remote Sens. 2012, 37, 653–665. [Google Scholar] [CrossRef]

- Heinzel, J.; Koch, B. Exploring full-waveform LiDAR parameters for tree species classification. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 152–160. [Google Scholar] [CrossRef]

- Höfle, B.; Hollaus, M.; Hagenauer, J. Urban vegetation detection using radiometrically calibrated small-footprint full-waveform airborne LiDAR data. ISPRS J. Photogramm. Remote Sens. 2012, 67, 134–147. [Google Scholar] [CrossRef]

- Weiss, M.; Baret, F.; Smith, G.J.; Jonckheere, I.; Coppin, P. Review of methods for in situ leaf area index (LAI) determination: Part II. Estimation of LAI, errors and sampling. Agric. For. Meteorol. 2004, 121, 37–53. [Google Scholar] [CrossRef]

- Bonan, G.B.; Williams, M.; Fisher, R.A.; Oleson, K.W. Modeling stomatal conductance in the earth system: Linking leaf water-use efficiency and water transport along the soil–plant–atmosphere continuum. Geosci. Model Dev. 2014, 7, 2193–2222. [Google Scholar] [CrossRef]

- Kamoske, A.G.; Dahlin, K.M.; Stark, S.C.; Serbin, S.P. Leaf area density from airborne LiDAR: Comparing sensors and resolutions in a temperate broadleaf forest ecosystem. For. Ecol. Manag. 2019, 433, 364–375. [Google Scholar] [CrossRef]

- Stark, S.C.; Leitold, V.; Wu, J.L.; Hunter, M.O.; de Castilho, C.V.; Costa, F.R.C.; McMahon, S.M.; Parker, G.G.; Shimabukuro, M.T.; Lefsky, M.A.; et al. Amazon forest carbon dynamics predicted by profiles of canopy leaf area and light environment. Ecol. Lett. 2012, 15, 1406–1414. [Google Scholar] [CrossRef] [PubMed]

- Becknell, J.M.; Desai, A.R.; Dietze, M.C.; Schultz, C.A.; Starr, G.; Duffy, P.A.; Franklin, J.F.; Pourmokhtarian, A.; Hall, J.; Stoy, P.C. Assessing interactions among changing climate, management, and disturbance in forests: A macrosystems approach. Bioscience 2015, 65, 263–274. [Google Scholar] [CrossRef]

- MacArthur, R.H.; Horn, H.S. Foliage profile by vertical measurements. Ecology 1969, 50, 802–804. [Google Scholar] [CrossRef]

- Lefsky, M.A.; Cohen, W.B.; Parker, G.G.; Harding, D.J. Lidar remote sensing for ecosystem studies: Lidar, an emerging remote sensing technology that directly measures the three-dimensional distribution of plant canopies, can accurately estimate vegetation structural attributes and should be of particular inte. Bioscience 2002, 52, 19. [Google Scholar] [CrossRef]

- Leblanc, S.G.; Chen, J.M.; Fernandes, R.; Deering, D.W.; Conley, A. Methodology comparison for canopy structure parameters extraction from digital hemispherical photography in boreal forests. Agric. For. Meteorol. 2005, 129, 187–207. [Google Scholar] [CrossRef]

- Cutini, A.; Matteucci, G.; Mugnozza, G.S. Estimation of leaf area index with the Li-Cor LAI 2000 in deciduous forests. For. Ecol. Manag. 1998, 105, 55–65. [Google Scholar] [CrossRef]

- Solberg, S.; Næsset, E.; Hanssen, K.H.; Christiansen, E. Mapping defoliation during a severe insect attack on Scots pine using airborne laser scanning. Remote Sens. Environ. 2006, 102, 364–376. [Google Scholar] [CrossRef]

- Gatziolis, D.; Andersen, H.-E. A Guide to LIDAR Data Acquisition and Processing for the Forests of the Pacific Northwest; General Technical Report PNW-GTR-768; Pacific Northwest Research Station, Forest Service, United States Department of Agriculture: Portland, OR, USA, 2008; 768. [CrossRef]

- Wagner, W.; Ullrich, A.; Melzer, T.; Briese, C.; Kraus, K. From Single-Pulse to Full-Waveform Airborne Laser Scanners: Potential and Practical Challenges. Int. Arch. Photogramm. Remote Sens. Geoinf. Sci. 2004, 35, 414–419. [Google Scholar]

- Hagstrom, S.T. Voxel-Based LIDAR Analysis and Applications. Ph.D. Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2014. [Google Scholar]

- Blair, J.B.; Hofton, M.A. Modeling laser altimeter return waveforms over complex vegetation using high-resolution elevation data. Geophys. Res. Lett. 1999, 26, 2509–2512. [Google Scholar] [CrossRef]

- Chauve, A.; Mallet, C.; Bretar, F.; Durrieu, S.; Deseilligny, M.P.; Puech, W. Processing full-waveform lidar data: Modelling raw signals. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences 2007; ISPRS: Hannover, Germany, 2008; pp. 102–107. [Google Scholar]

- Kobayashi, H.; Iwabuchi, H. A coupled 1-D atmosphere and 3-D canopy radiative transfer model for canopy reflectance, light environment, and photosynthesis simulation in a heterogeneous landscape. Remote Sens. Environ. 2008, 112, 173–185. [Google Scholar] [CrossRef]

- Goel, N.S.; Rozehnal, I.; Thompson, R.L. A computer graphics based model for scattering from objects of arbitrary shapes in the optical region. Remote Sens. Environ. 1991, 36, 73–104. [Google Scholar] [CrossRef]

- Ni-Meister, W.; Jupp, D.L.B.; Dubayah, R. Modeling lidar waveforms in heterogeneous and discrete canopies. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1943–1958. [Google Scholar] [CrossRef]

- Burton, R.R.; Schott, J.R.; Brown, S.D. Elastic ladar modeling for synthetic imaging applications. In Proceedings of the International Symposium on Optical Science and Technology, Seattle, WA, USA, 7–11 July 2002; Volume 4816, p. 144. [Google Scholar]

- Plachetka, T. POV Ray: Persistence of vision parallel raytracer. In Proceedings of the Spring Conference on Computer Graphics, Budmerice, Slovakia, 23–25 April 1998; Volume 123. [Google Scholar]

- Goodwin, N.R.; Coops, N.C.; Culvenor, D.S. Development of a simulation model to predict LiDAR interception in forested environments. Remote Sens. Environ. 2007, 111, 481–492. [Google Scholar] [CrossRef]

- North, P.R.J.; Rosette, J.A.B.; Suárez, J.C.; Los, S.O. A Monte Carlo radiative transfer model of satellite waveform LiDAR. Int. J. Remote Sens. 2010, 31, 1343–1358. [Google Scholar] [CrossRef]

- Kotchenova, S.Y.; Shabanov, N.V.; Knyazikhin, Y.; Davis, A.B.; Dubayah, R.; Myneni, R.B. Modeling Lidar waveforms with time-dependent stochastic radiative transfer theory for remote estimations of forest structure. J. Geophys. Res. Atmos. 2003, 108. [Google Scholar] [CrossRef]

- Calders, K.; Lewis, P.; Disney, M.; Verbesselt, J.; Herold, M. Investigating assumptions of crown archetypes for modelling LiDAR returns. Remote Sens. Environ. 2013, 134, 39–49. [Google Scholar] [CrossRef]

- Qin, H.; Wang, C.; Xi, X.; Tian, J.; Zhou, G. Simulating the Effects of the Airborne Lidar Scanning Angle, Flying Altitude, and Pulse Density for Forest Foliage Profile Retrieval. Appl. Sci. 2017, 7, 712. [Google Scholar] [CrossRef]

- Morsdorf, F.; Frey, O.; Koetz, B.; Meier, E. Ray tracing for modeling of small footprint airborne laser scanning returns. In Proceedings of the ISPRS Workshop ‘Laser Scanning 2007 and SilviLaser 2007’, Espoo, Finland, 12–14 September 2007; ISPRS: Hanover, Germany; Volume 36, pp. 249–299. [Google Scholar]

- Blevins, D.D. Modeling Multiple Scattering and Absorption for a Differential Absorption LIDAR System. Ph.D. Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2005. [Google Scholar]

- Wu, J.; Cawse-Nicholson, K.; VanAardt, J. 3D Tree Reconstruction from Simulated Small Footprint Waveform Lidar. Am. Soc. Photogramm. Remote Sens. 2013, 79, 1147–1157. [Google Scholar] [CrossRef]

- Romanczyk, P.; van Aardt, J.; Cawse-Nicholson, K.; Kelbe, D.; McGlinch, J.; Krause, K. Assessing the impact of broadleaf tree structure on airborne full-waveform small-footprint LiDAR signals through simulation. Can. J. Remote Sens. 2013, 39, S60–S72. [Google Scholar] [CrossRef]

- Wu, J.; Van Aardt, J.A.N.; McGlinchy, J.; Asner, G.P. A robust signal preprocessing chain for small-footprint waveform lidar. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3242–3255. [Google Scholar] [CrossRef]

- Wu, J.; Van Aardt, J.A.N.; Asner, G.P. A comparison of signal deconvolution algorithms based on small-footprint LiDAR waveform simulation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2402–2414. [Google Scholar] [CrossRef]

- Widlowski, J.L.; Pinty, B.; Lopatka, M.; Atzberger, C.; Buzica, D.; Chelle, M.; Disney, M.; Gastellu-Etchegorry, J.P.; Gerboles, M.; Gobron, N.; et al. The fourth radiation transfer model intercomparison (RAMI-IV): Proficiency testing of canopy reflectance models with ISO-13528. J. Geophys. Res. Atmos. 2013, 118, 6869–6890. [Google Scholar] [CrossRef]

- Lidar Modality Handbook. Available online: https://dirsig.cis.rit.edu/docs/new/lidar.html (accessed on 11 June 2019).

- Goodenough, A.A.; Brown, S.D. DIRSIG5: Next-generation remote sensing data and image simulation framework. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4818–4833. [Google Scholar] [CrossRef]

- Roth, B.D.; Saunders, M.G.; Bachmann, C.M.; van Aardt, J. On Leaf BRDF Estimates and Their Fit to Microfacet Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1761–1771. [Google Scholar] [CrossRef]

- Roth, B. Broad Leaf Bidirectional Scattering Distribution Functions (BSDFs). Remote Sens. 2020. [Google Scholar] [CrossRef]

- Katsev, I.L.; Zege, E.P.; Prikhach, A.S.; Polonsky, I.N. Efficient technique to determine backscattered light power for various atmospheric and oceanic sounding and imaging systems. JOSA A 1997, 14, 1338–1346. [Google Scholar] [CrossRef]

- Verhoef, W. Light scattering by leaf layers with application to canopy reflectance modeling: The SAIL model. Remote Sens. Environ. 1984, 16, 125–141. [Google Scholar] [CrossRef]

- De Wit, C.T. Photosynthesis of Leaf Canopies; Wageningen University: Wageningen, The Netherlands, 1965. [Google Scholar]

- Pisek, J.; Sonnentag, O.; Richardson, A.D.; Mõttus, M. Is the spherical leaf inclination angle distribution a valid assumption for temperate and boreal broadleaf tree species? Agric. For. Meteorol. 2013, 169, 186–194. [Google Scholar] [CrossRef]

- Zhao, K.; Popescu, S. Lidar-based mapping of leaf area index and its use for validating GLOBCARBON satellite LAI product in a temperate forest of the southern USA. Remote Sens. Environ. 2009, 113, 1628–1645. [Google Scholar] [CrossRef]

- Bouvier, M.; Durrieu, S.; Fournier, R.A.; Renaud, J.-P. Generalizing predictive models of forest inventory attributes using an area-based approach with airborne LiDAR data. Remote Sens. Environ. 2015, 156, 322–334.

- Richardson, J.J.; Moskal, L.M.; Kim, S.-H. Modeling approaches to estimate effective leaf area index from aerial discrete-return LIDAR. Agric. For. Meteorol. 2009, 149, 1152–1160.

- Romanczyk, P. Extraction of Vegetation Biophysical Structure from Small-Footprint Full-Waveform Lidar Signals. Ph.D. Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2015.

- Woodhouse, I.H.; Nichol, C.; Sinclair, P.; Jack, J.; Morsdorf, F.; Malthus, T.J.; Patenaude, G. A multispectral canopy LiDAR demonstrator project. IEEE Geosci. Remote Sens. Lett. 2011, 8, 839–843.

- Wei, G.; Shalei, S.; Bo, Z.; Shuo, S.; Faquan, L.; Xuewu, C. Multi-wavelength canopy LiDAR for remote sensing of vegetation: Design and system performance. ISPRS J. Photogramm. Remote Sens. 2012, 69, 1–9.

- Thomas, J.J. Terrain Classification Using Multi-Wavelength LiDAR Data. Master’s Thesis, Naval Postgraduate School, Monterey, CA, USA, September 2015.

- Bunnik, N. The Multispectral Reflectance of Shortwave Radiation by Agricultural Crops in Relation with Their Morphological and Optical Properties. Ph.D. Thesis, Wageningen University, Wageningen, The Netherlands, 1978.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Altitude | 2000 m | Gate Range | 1.323 × 10^−5 to 1.335 × 10^−5 s |
| Wavelength | 1064 nm | Bin Size | 4 ns (0.6 m) |
| Laser Spectral Width | 0.0003 μm | Receive Radius | 0.05 m |
| Pulse Energy | 100 mJ | Detector length | 100 μm square |
| Beam Shape | cylinder “Rect” | Focal Length | 0.004 m |
| Beam Divergence | 0.025 rad | Spatial subsampling | 100 × 100 |
| Pulse Length | 1 × 10^−21 s | Maximum events in photon map per pulse | 4,000,000 |
| Temporal Pulse Shape | Gaussian | Maximum source bundles per pulse | 8,000,000 |
| Photon Map (PM) search radius | 1 cm | Maximum bounces per photon bundle | 10 |
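
The timing entries in the table above (2000 m altitude, a 4 ns bin quoted as 0.6 m, and the listed gate range) are linked by the two-way travel time of light. The short sketch below checks that arithmetic. It assumes the vacuum speed of light and a nadir-looking sensor. The function names are illustrative and are not part of DIRSIG.

```python
# Minimal sketch of two-way lidar timing arithmetic.
# Assumptions (not from the source): vacuum speed of light, nadir geometry,
# and hypothetical helper names chosen for illustration only.

C = 299_792_458.0  # speed of light in vacuum, m/s


def round_trip_time(range_m: float) -> float:
    """Two-way travel time (s) to a target at the given one-way range (m)."""
    return 2.0 * range_m / C


def bin_depth(bin_s: float) -> float:
    """One-way range extent (m) covered by a single time bin (s)."""
    return C * bin_s / 2.0


def n_bins(gate_start_s: float, gate_stop_s: float, bin_s: float) -> int:
    """Number of time bins that fit inside the range gate."""
    return round((gate_stop_s - gate_start_s) / bin_s)


if __name__ == "__main__":
    # Values taken from the table: 2000 m altitude, 4 ns bins,
    # gate from 1.323e-5 s to 1.335e-5 s.
    print(f"ground return time: {round_trip_time(2000.0):.4e} s")
    print(f"bin depth:          {bin_depth(4e-9):.3f} m")
    print(f"bins in gate:       {n_bins(1.323e-5, 1.335e-5, 4e-9)}")
```

Under these assumptions the ground return falls inside the listed gate, and a 4 ns bin corresponds to roughly 0.6 m of range, consistent with the table.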

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Altitude | 1000 m | Gate Range | 6.55 × 10^−6 to 6.75 × 10^−5 s |
| Wavelength | 1064 nm | Bin Size | 1 ns |
| Laser Spectral Width | 1 × 10^−5 μm | Receive Radius | 0.025 m |
| Pulse Energy | 0.2 mJ | Detector length | 250 μm square |
| Beam Shape | Gaussian | Focal Length | varied |
| Beam Divergence | varied | Spatial subsampling | 101 × 101 |
| Pulse Length | 3 ns | Maximum events in photon map per pulse | 400,000 |
| Temporal Pulse Shape | Gaussian | Maximum source bundles per pulse | 800,000 |
| PM search radius | 1 cm | Maximum bounces per photon bundle | 10 (for multiscatter) |

| Parameter | Values | | | |
|---|---|---|---|---|
| Wavelength | 550 nm | 1064 nm | 1550 nm | |
| Footprint Diameter | 0.1 m | 0.5 m | 5 m | |
| Zenith View Angle | 0° | 22.5° | 45° | |
| LAD | Spherical | Planophile | Plagiophile | |
| BSDF | Model | Lambertian | Model-Opaque | Model-Lambertian |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Altitude | Varied (1000 m distance to target) | Gate Range | 6.55 × 10^−6 to 6.75 × 10^−5 s |
| Wavelength | 550, 1064, 1550 nm | Bin Size | 1 ns |
| Laser Spectral Width | 1 × 10^−5 μm | Receive Radius | 0.025 m |
| Pulse Energy | 0.2 mJ | Detector length | 250 μm square |
| Beam Shape | Gaussian | Focal Length | 1.25, 0.25, 0.025 m |
| Beam Divergence | 1 × 10^−4, 5 × 10^−4, 5 × 10^−3 m | Spatial subsampling | 101 × 101 |
| Pulse Length | 3 ns | Maximum events in photon map per pulse | 400,000 |
| Temporal Pulse Shape | Gaussian | Maximum source bundles per pulse | 800,000 |
| PM search radius | 1 cm | Maximum bounces per photon bundle | 10 (for multiscatter) |
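
The three beam divergence values in the table above line up with the 0.1 m, 0.5 m, and 5 m footprint diameters of the sensitivity study when taken over the stated 1000 m distance to target. The sketch below illustrates that relation. It assumes the divergence values are full angles in radians (the table lists the unit as m) and a flat target normal to the beam. The helper name is hypothetical.

```python
# Minimal sketch: footprint diameter from beam divergence and range.
# Assumptions (not from the source): divergence is a full angle in radians,
# the target is flat and perpendicular to the beam, and the beam starts
# from a point (exit aperture size is ignored).
import math


def footprint_diameter(range_m: float, full_divergence_rad: float) -> float:
    """Footprint diameter (m) at the given range for a full-angle divergence."""
    return 2.0 * range_m * math.tan(full_divergence_rad / 2.0)


if __name__ == "__main__":
    target_range_m = 1000.0  # distance to target from the table
    for divergence in (1e-4, 5e-4, 5e-3):  # values from the table
        diameter = footprint_diameter(target_range_m, divergence)
        print(f"divergence {divergence:.0e} -> footprint {diameter:.3f} m")
```

For angles this small the tangent is effectively linear, so the footprint is close to range times divergence.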

| Loc # | X | Y | LAI | Mean Leaf Angle |
|---|---|---|---|---|
| 0 | 15 | 15 | 5.61 | 38.9° |
| 1 | 14.5 | 15 | 4.14 | 41° |
| 2 | 8.52 | 12.43 | 2.58 | 40.8° |
| 3 | 13.45 | 13.03 | 4.09 | 39.2° |
| 4 | 9.04 | 14.92 | 5.31 | 40° |
| 5 | 14.54 | 17.75 | 5.61 | 39.9° |
| 6 | 13.86 | 14.82 | 6.08 | 38.9° |
| 7 | 11.91 | 9.81 | 2.82 | 39.2° |
| 8 | 9.29 | 15.22 | 1.02 | 40.6° |
| 9 | 13.39 | 14.46 | 4.09 | 39.2° |

| Parameter | Values |
|---|---|
| Wavelength | 550 nm, 1064 nm |
| Footprint Diameter | 0.1 m, 0.5 m, 5 m |
| Zenith View Angle | 0° |
| BSDF | Model, Lambertian, Model-Opaque, Model-Lambertian |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Roth, B.D.; Goodenough, A.A.; Brown, S.D.; van Aardt, J.A.; Saunders, M.G.; Krause, K. Simulations of Leaf BSDF Effects on Lidar Waveforms. Remote Sens. 2020, 12, 2909. https://doi.org/10.3390/rs12182909
