Introducing GEOBIA to Landscape Imageability Assessment: A Multi-Temporal Case Study of the Nature Reserve “Kózki”, Poland

Abstract

1. Introduction

1.1. The Importance of Image Segmentation Quality as a Prerequisite for Imageability Indicator Calculations

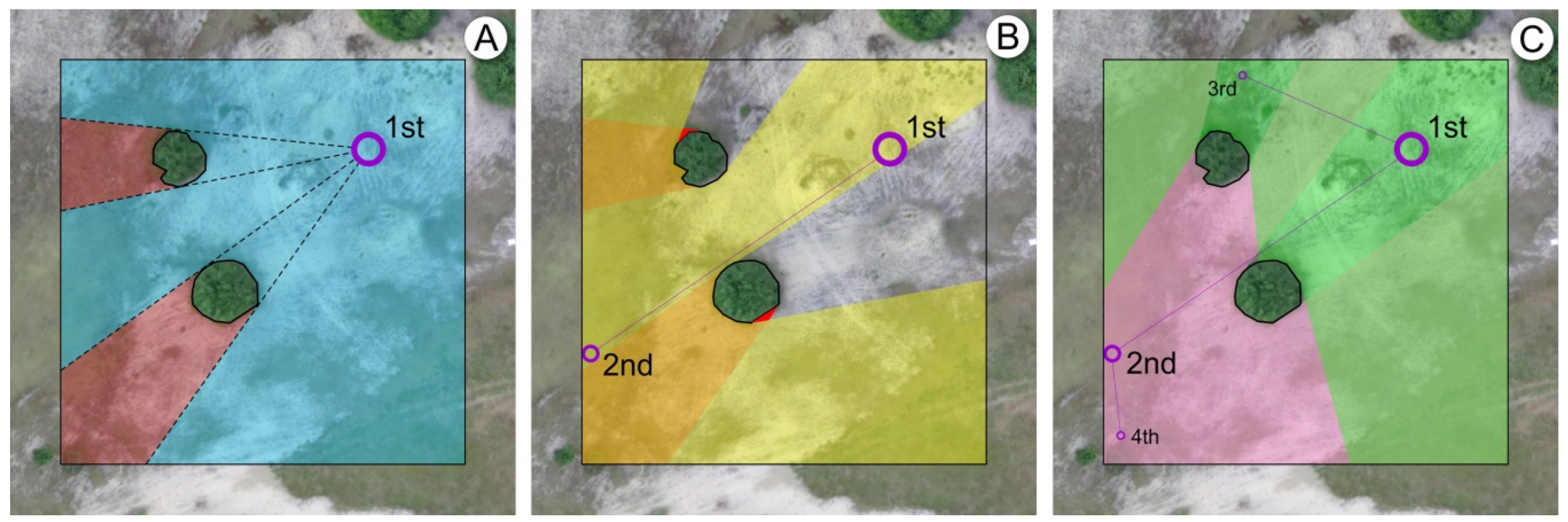

1.2. Measuring Imageability with the Use of Viewpoints

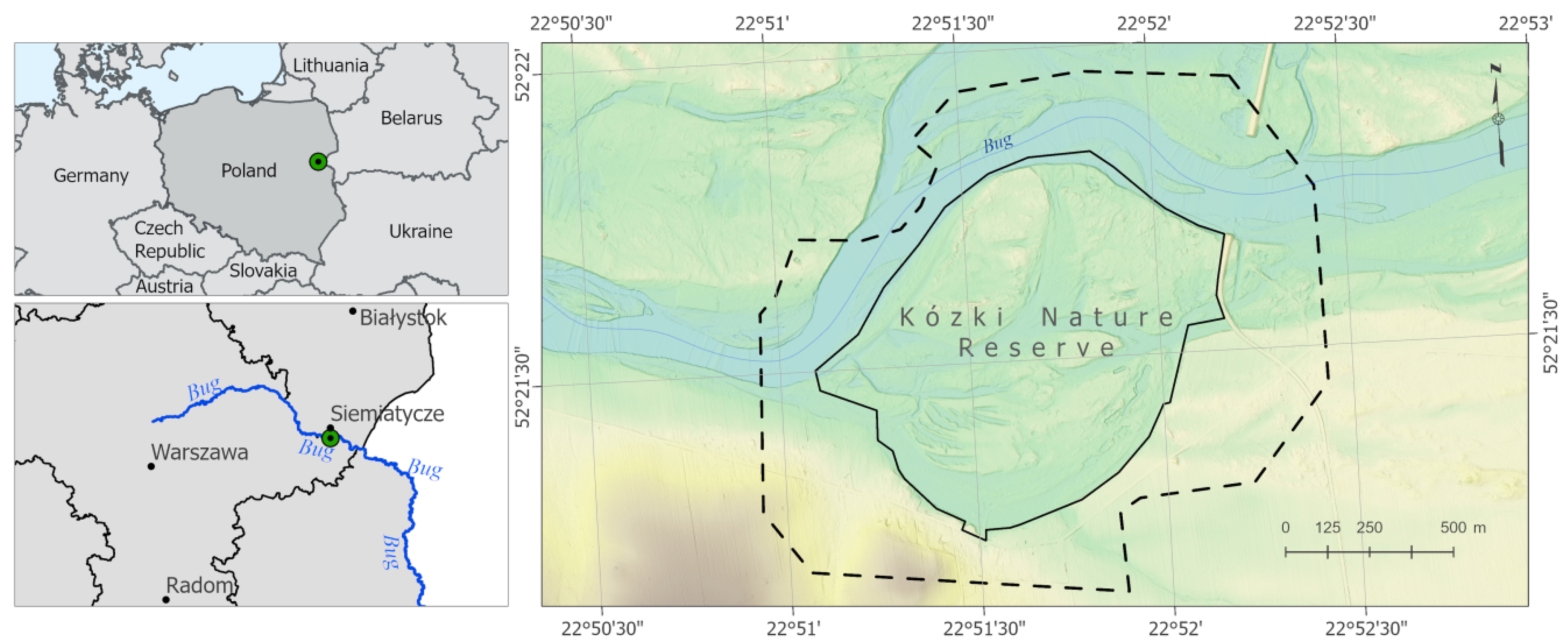

2. Study Area

3. Materials and Methods

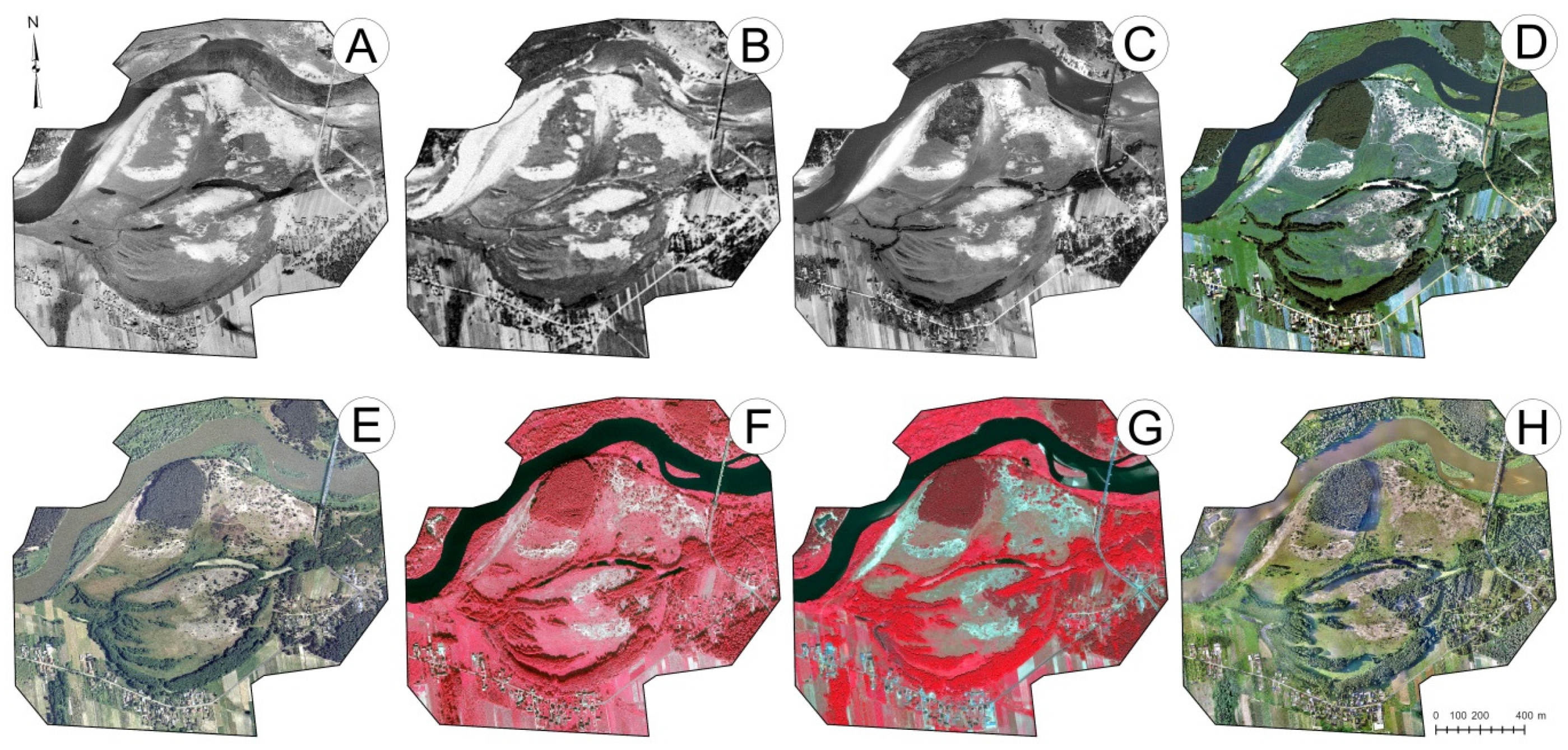

3.1. Remote Sensing Imagery Pre-Processing

3.2. Imagery Processing Method

3.3. Segmentation and Segment Evaluation Method

3.4. Image Classifications

3.4.1. Land-Cover Class Nomenclature

3.4.2. GEOBIA Classification Methodology

3.5. Isovist and Imageability Indicator Method

4. Results

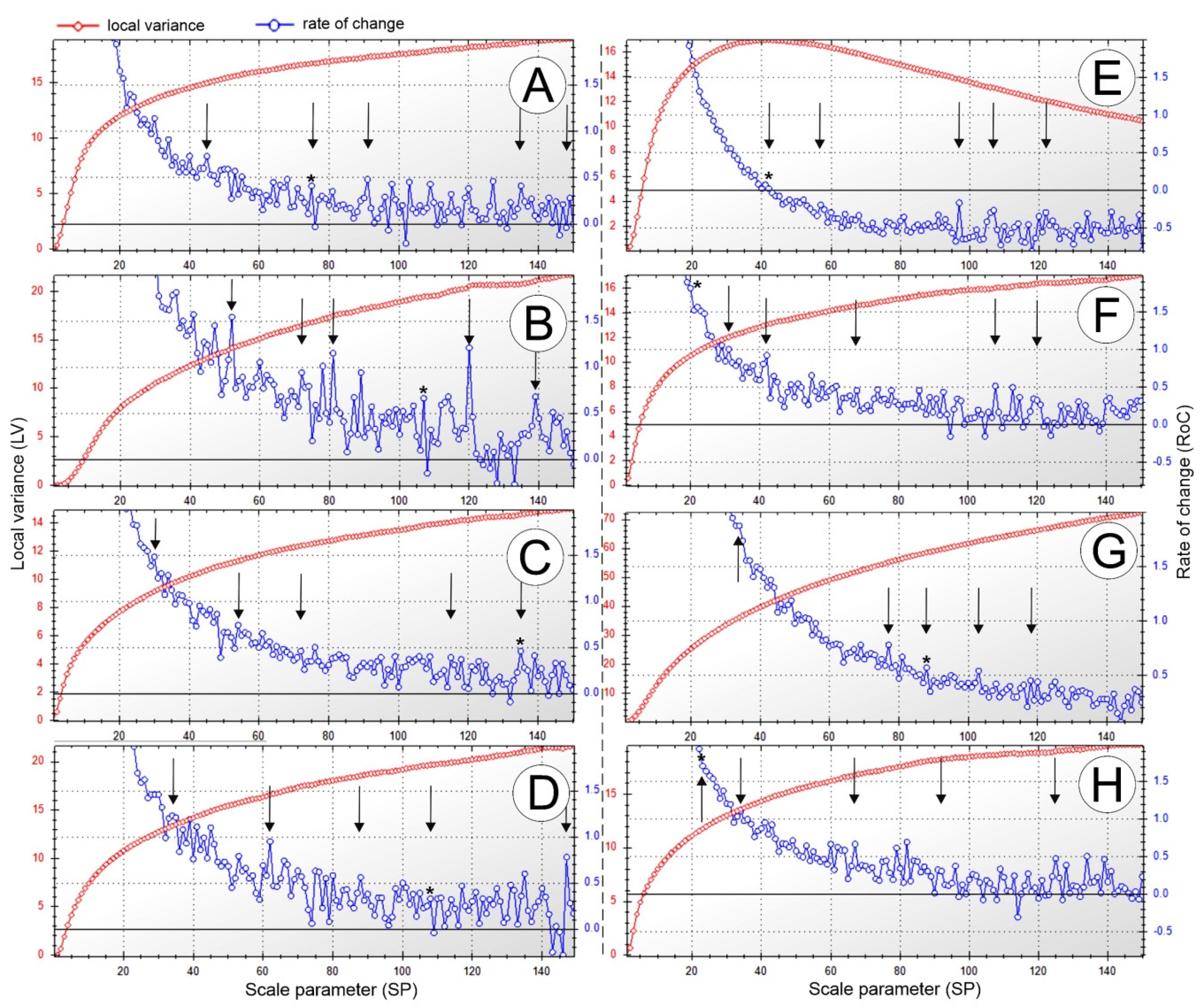

4.1. Obtaining Optimal Segmentation Parameters

4.1.1. SP Candidate Results

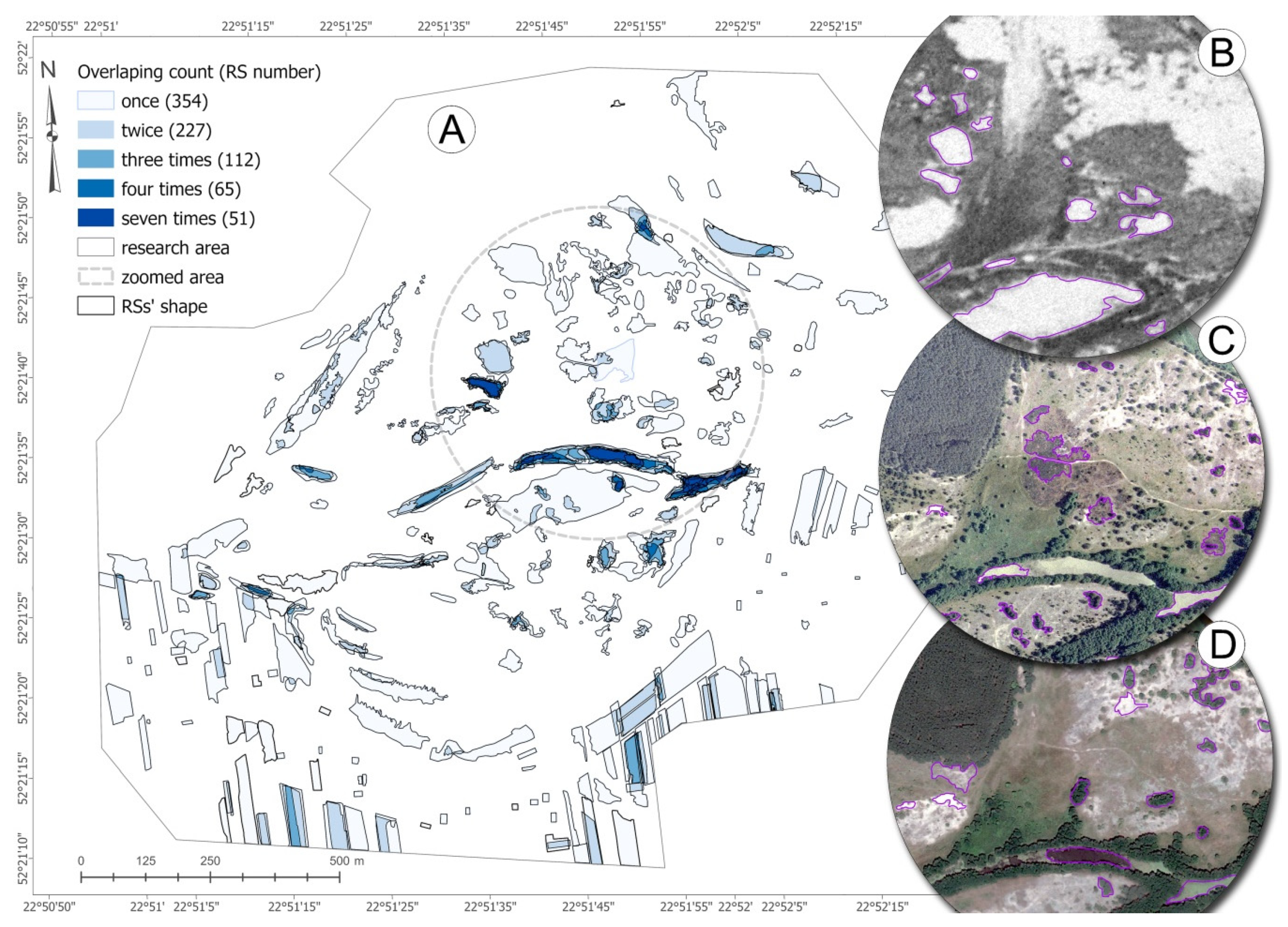

4.1.2. The Reference Segment Digitalization Results

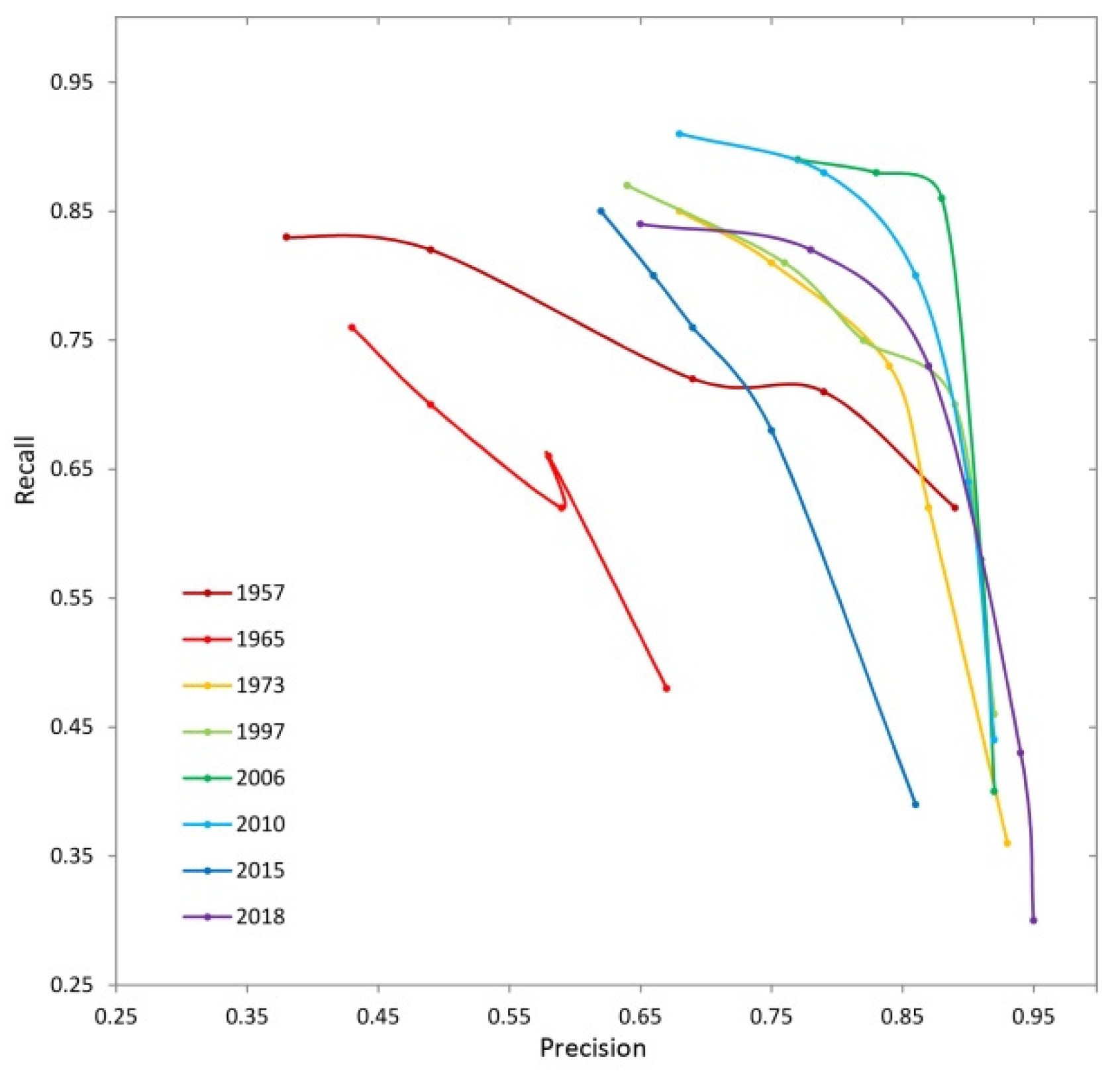

4.1.3. Segmentation Accuracy Results

4.2. Segment Classification Accuracy Results

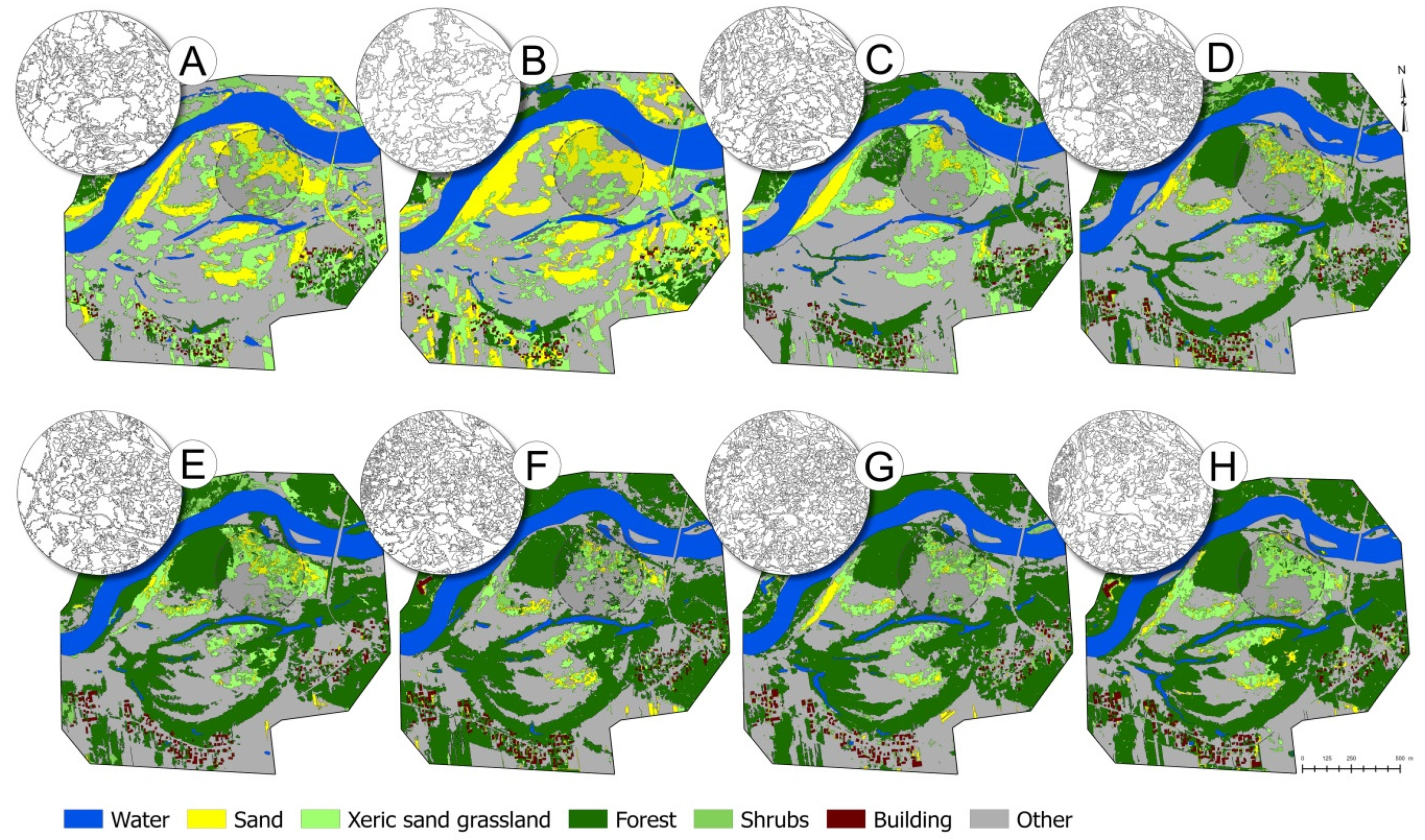

4.3. Land Cover Changes

4.4. The Imageability of Changing Landscape Interpretation

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. The Segmentation Results

| SP Value | Time-Frame | Number of Segments | Mean Segment Size (ha) | Min Segment Size (ha) | Max Segment Size (ha) |
|---|---|---|---|---|---|
| 34 | 2015 | 33,275 | 0.0060 | 0.0001 | 0.3204 |
| 34 | 2018 | 15,058 | 0.0132 | 0.0001 | 0.2327 |
| 72 | 1965 | 1240 | 0.1610 | 0.0035 | 2.7375 |
| 72 | 1973 | 3077 | 0.0649 | 0.0005 | 1.7865 |
| 88 | 1997 | 2622 | 0.0761 | 0.0005 | 3.1234 |
| 88 | 2006 | 2909 | 0.0686 | 0.0005 | 2.4855 |
| 108 | 1997 | 1813 | 0.1101 | 0.0009 | 3.3752 |
| 108 | 2010 | 1512 | 0.1321 | 0.0013 | 2.2697 |
| 135 | 1957 | 792 | 0.252 | 0.0020 | 2.1776 |
| 135 | 1973 | 1048 | 0.1906 | 0.0025 | 2.0154 |

| Time-Frame | SP Candidate | Precision | Recall | F-Score (Zhang 2015) | Jaccard Index | Accuracy (e) | Segmentation Error |
|---|---|---|---|---|---|---|---|
| 1957 | SP 45 | 0.89 | 0.62 | 0.67 | 0.58 | 0.80 | 0.28 |
| 1957 | SP 75 * | 0.79 | 0.71 | 0.68 | 0.60 | 0.83 | 0.23 |
| 1957 | SP 91 | 0.69 | 0.72 | 0.60 | 0.52 | 0.81 | 0.31 |
| 1957 | SP 135 | 0.49 | 0.82 | 0.48 | 0.42 | 0.78 | 0.44 |
| 1957 | SP 149 | 0.38 | 0.83 | 0.38 | 0.32 | 0.75 | 0.55 |
| 1965 | SP 52 | 0.67 | 0.48 | 0.51 | 0.40 | 0.73 | 0.28 |
| 1965 | SP 72 | 0.58 | 0.66 | 0.57 | 0.46 | 0.78 | 0.26 |
| 1965 | SP 81 | 0.59 | 0.62 | 0.56 | 0.45 | 0.78 | 0.26 |
| 1965 | SP 120 | 0.49 | 0.70 | 0.51 | 0.40 | 0.79 | 0.38 |
| 1965 | SP 139 | 0.43 | 0.76 | 0.45 | 0.36 | 0.79 | 0.47 |
| 1973 | SP 30 | 0.93 | 0.36 | 0.47 | 0.34 | 0.68 | 0.49 |
| 1973 | SP 54 | 0.87 | 0.62 | 0.68 | 0.58 | 0.80 | 0.24 |
| 1973 | SP 72 | 0.84 | 0.73 | 0.76 | 0.67 | 0.85 | 0.14 |
| 1973 | SP 115 | 0.75 | 0.81 | 0.74 | 0.66 | 0.86 | 0.18 |
| 1973 | SP 135 * | 0.68 | 0.85 | 0.71 | 0.62 | 0.86 | 0.22 |
| 1997 | SP 34 | 0.92 | 0.46 | 0.56 | 0.44 | 0.74 | 0.41 |
| 1997 | SP 62 | 0.89 | 0.70 | 0.75 | 0.66 | 0.85 | 0.19 |
| 1997 | SP 88 | 0.82 | 0.75 | 0.74 | 0.65 | 0.86 | 0.18 |
| 1997 | SP 108 * | 0.76 | 0.81 | 0.74 | 0.65 | 0.86 | 0.19 |
| 1997 | SP 147 | 0.64 | 0.87 | 0.68 | 0.60 | 0.86 | 0.24 |
| 2006 | SP 42 * | 0.92 | 0.40 | 0.51 | 0.38 | 0.69 | 0.44 |
| 2006 | SP 57 | 0.91 | 0.58 | 0.65 | 0.55 | 0.77 | 0.29 |
| 2006 | SP 88 | 0.88 | 0.86 | 0.86 | 0.78 | 0.90 | 0.08 |
| 2006 | SP 107 | 0.83 | 0.88 | 0.84 | 0.76 | 0.89 | 0.11 |
| 2006 | SP 122 | 0.77 | 0.89 | 0.80 | 0.71 | 0.88 | 0.14 |
| 2010 | SP 31 | 0.92 | 0.44 | 0.54 | 0.42 | 0.72 | 0.42 |
| 2010 | SP 41 | 0.90 | 0.64 | 0.70 | 0.60 | 0.82 | 0.24 |
| 2010 | SP 68 | 0.86 | 0.80 | 0.80 | 0.72 | 0.88 | 0.15 |
| 2010 | SP 108 | 0.79 | 0.88 | 0.80 | 0.72 | 0.89 | 0.14 |
| 2010 | SP 120 | 0.68 | 0.91 | 0.72 | 0.64 | 0.87 | 0.23 |
| 2015 | SP 34 | 0.86 | 0.39 | 0.50 | 0.36 | 0.64 | 0.42 |
| 2015 | SP 77 | 0.75 | 0.68 | 0.68 | 0.58 | 0.79 | 0.21 |
| 2015 | SP 97 | 0.69 | 0.76 | 0.68 | 0.59 | 0.82 | 0.22 |
| 2015 | SP 104 | 0.66 | 0.80 | 0.66 | 0.58 | 0.81 | 0.25 |
| 2015 | SP 118 | 0.62 | 0.85 | 0.64 | 0.56 | 0.81 | 0.29 |
| 2018 | SP 23 * | 0.95 | 0.30 | 0.42 | 0.29 | 0.62 | 0.56 |
| 2018 | SP 34 | 0.94 | 0.43 | 0.52 | 0.42 | 0.70 | 0.44 |
| 2018 | SP 67 | 0.87 | 0.73 | 0.75 | 0.67 | 0.84 | 0.18 |
| 2018 | SP 92 | 0.78 | 0.82 | 0.75 | 0.67 | 0.87 | 0.18 |
| 2018 | SP 125 | 0.65 | 0.84 | 0.67 | 0.57 | 0.84 | 0.27 |
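
As a minimal sketch of how area-based measures of the kind tabulated above can be computed for a single segment and its reference polygon, the Python snippet below treats both as sets of pixel coordinates. The function name `segment_overlap_metrics` and the toy pixel sets are illustrative assumptions, not the authors' implementation. The tabulated values may also have been aggregated over many segments, so they need not satisfy the single-pair F-score formula used here.

```python
def segment_overlap_metrics(segment, reference):
    """Area-based overlap measures for one segment and one reference object.

    Both arguments are sets of (row, col) pixel coordinates (an assumed,
    simplified representation of the polygons).
    """
    overlap = len(segment & reference)   # pixels shared by both objects
    union = len(segment | reference)     # pixels covered by either object

    precision = overlap / len(segment) if segment else 0.0
    recall = overlap / len(reference) if reference else 0.0
    if precision + recall > 0:
        f_score = 2 * precision * recall / (precision + recall)
    else:
        f_score = 0.0
    jaccard = overlap / union if union else 0.0

    return {
        "precision": precision,
        "recall": recall,
        "f_score": f_score,
        "jaccard": jaccard,
    }


# Hypothetical example: two 4 x 4 pixel squares offset by two columns.
reference = {(r, c) for r in range(4) for c in range(0, 4)}
segment = {(r, c) for r in range(4) for c in range(2, 6)}

metrics = segment_overlap_metrics(segment, reference)
print(metrics)
```

In this toy case half of the segment lies inside the reference and half of the reference is covered by the segment, so precision and recall are both 0.5, while the Jaccard index is lower because the union is larger than either object.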

Appendix B. The Segment Classification Results

Per-class accuracy (c-Class and r-Class columns) is given as producer/user/kappa.

| Date (SP) | Classifier | Overall Accuracy | Overall Kappa | forest | shrubs | c-grass | open sand | other |
|---|---|---|---|---|---|---|---|---|
| 1957 (SP 75) | RF | 0.91 | 0.89 | 0.91/0.95/0.89 | 0.96/0.96/0.94 | 0.88/0.91/0.85 | 0.96/0.96/0.94 | 0.88/0.81/0.84 |
| 1957 (SP 75) | SVM | 0.77 | 0.71 | 0.79/1/0.75 | 0.96/0.92/0.94 | 0.4/0.83/0.33 | 0.8/0.95/0.75 | 0.92/0.50/0.87 |
| 1957 (SP 75) | KNN | 0.79 | 0.73 | 0.83/0.90/0.79 | 0.88/0.95/0.85 | 0.56/0.77/0.48 | 0.80/0.80/0.74 | 0.88/0.61/0.83 |
| 1965 (SP 71) | RF | 0.67 | 0.56 | 0.8/1/0.75 | excluded | 0.2/0.57/0.12 | 1/0.54/1 | 0.7/0.7/0.6 |
| 1965 (SP 71) | SVM | 0.58 | 0.45 | 0.8/0.88/0.74 | excluded | 0.1/0.16/0.05 | 1/0.64/1 | 0.45/0.47/0.27 |
| 1965 (SP 71) | KNN | 0.73 | 0.65 | 0.85/0.94/0.80 | excluded | 0.4/0.72/0.30 | 0.95/0.70/0.92 | 0.75/0.62/0.64 |
| 1973 (SP 71) | RF | 0.78 | 0.73 | 1/0.92/1 | 0.96/1/0.95 | 0.36/0.52/0.25 | 0.8/1/0.76 | 0.8/0.54/0.71 |
| 1973 (SP 71) | SVM | 0.85 | 0.82 | 1/0.92/1 | 0.96/0.96/0.95 | 0.84/0.65/0.78 | 0.6/1/0.54 | 0.88/0.84/0.84 |
| 1973 (SP 71) | KNN | 0.68 | 0.61 | 1/0.78/1 | 0.88/1/0.85 | 0.28/0.36/0.15 | 0.68/0.94/0.62 | 0.6/0.44/0.45 |
| 1997 (SP 62) | RF | 0.89 | 0.87 | 0.92/0.79/0.89 | 0.76/1/0.71 | 0.92/0.92/0.9 | 0.92/1/0.90 | 0.96/0.82/0.94 |
| 1997 (SP 62) | SVM | 0.66 | 0.58 | 0.4/0.58/0.30 | 0.8/1/0.76 | 0.24/1/0.20 | 0.96/0.96/0.95 | 0.92/0.40/0.85 |
| 1997 (SP 62) | KNN | 0.52 | 0.40 | 0.32/0.36/0.17 | 0.56/1/0.50 | 0.08/1/0.06 | 0.76/0.73/0.69 | 0.88/0.36/0.76 |
| 2006 (SP 97) | RF | 0.86 | 0.83 | 0.88/0.81/0.84 | 0.84/0.87/0.80 | 0.72/0.9/0.66 | 0.96/0.96/0.95 | 0.92/0.79/0.89 |
| 2006 (SP 97) | SVM | 0.55 | 0.44 | 0.84/0.84/0.79 | 0.12/0.37/0.05 | 0.8/0.48/0.69 | 0.86/1/0.84 | 0.16/0.14/0.08 |
| 2006 (SP 97) | KNN | 0.46 | 0.33 | 0.56/0.29/0.27 | 0.56/1/0.50 | 0.36/0.56/0.26 | 0.69/0.94/0.64 | 0.16/0.14/0.07 |
| 2010 (SP 65) | RF_3b | 0.85 | 0.82 | 0.96/0.75/0.94 | 0.72/1/0.67 | 0.76/1/0.71 | 1/0.96/1 | 0.84/0.70/0.78 |
| 2010 (SP 65) | -SVM_5b | 0.60 | 0.51 | 0.52/0.76/0.44 | 0.62/1/0.57 | 0.41/0.47/0.29 | 0.48/1/0.42 | 1/0.43/1 |
| 2010 (SP 65) | -KNN_5b | 0.51 | 0.38 | 0.24/0.75/0.18 | 0.62/1/0.57 | 0.29/0.46/0.19 | 0.4/1/0.34 | 1/0.33/1 |
| 2015 (SP 97) | RF | 0.92 | 0.91 | 0.96/0.92/0.94 | 0.88/1/0.85 | 1/0.83/1 | 1/1/1 | 0.8/0.90/0.75 |
| 2015 (SP 97) | SVM | 0.80 | 0.75 | 1/1/1 | 0.84/1/0.80 | 0.48/1/0.42 | 0.68/1/0.62 | 1/0.5/1 |
| 2015 (SP 97) | KNN | 0.58 | 0.48 | 0.88/0.5/0.81 | 0.72/1/0.67 | 0.4/0.76/0.33 | 0.64/1/0.58 | 0.28/0.20/0.01 |
| 2018 (SP 70) | RF | 0.91 | 0.89 | 1/0.89/1 | 0.88/1/0.85 | 0.92/0.88/0.89 | 0.84/1/0.80 | 0.92/0.85/0.89 |
| 2018 (SP 70) | SVM (DT) | 0.84 | 0.81 | 0.96/0.8/0.94 | 0.72/1/0.67 | 0.88/0.81/0.84 | 0.84/1/0.80 | 0.84/0.75/0.79 |
| 2018 (SP 70) | KNN (svm) | 0.63 | 0.54 | 0.28/0.41/0.16 | 0.48/1/0.42 | 0.72/0.78/0.65 | 0.72/1/0.67 | 0.96/0.44/0.92 |
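
For readers who want to reproduce measures of this kind, the sketch below derives overall accuracy, Cohen's kappa, and per-class producer's and user's accuracy from a confusion matrix. It assumes that rows hold reference classes and columns hold predicted classes. The function name `accuracy_measures` and the 2 × 2 example matrix are hypothetical and are not taken from the study, and the per-class kappa reported in the table is not computed here.

```python
def accuracy_measures(matrix):
    """Overall accuracy, Cohen's kappa, producer's and user's accuracy.

    matrix[i][j] counts samples of reference class i that were assigned
    to class j by the classifier (assumed convention).
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    row_sums = [sum(row) for row in matrix]  # reference totals
    col_sums = [sum(matrix[i][j] for i in range(n_classes))
                for j in range(n_classes)]   # predicted totals
    diagonal = [matrix[i][i] for i in range(n_classes)]

    observed = sum(diagonal) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (observed - expected) / (1 - expected) if expected != 1 else 0.0

    producer = [d / r if r else 0.0 for d, r in zip(diagonal, row_sums)]
    user = [d / c if c else 0.0 for d, c in zip(diagonal, col_sums)]

    return {
        "overall_accuracy": observed,
        "kappa": kappa,
        "producer": producer,
        "user": user,
    }


# Hypothetical two-class confusion matrix (rows: reference, columns: predicted).
example = [[20, 5],
           [10, 15]]

result = accuracy_measures(example)
print(result)
```

Producer's accuracy divides each diagonal count by its reference total, whereas user's accuracy divides by the predicted total, which is why the two can differ markedly for the same class.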

References

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Arvor, D.; Durieux, L.; Andrés, S.; Laporte, M.-A. Advances in geographic object-based image analysis with ontologies: A review of main contributions and limitations from a remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2013, 82, 125–137.

- Souza-Filho, P.W.M.; Nascimento, W.R.; Santos, D.C.; Weber, E.J.; Silva, R.O.; Siqueira, J.O. A GEOBIA Approach for Multitemporal Land-Cover and Land-Use Change Analysis in a Tropical Watershed in the Southeastern Amazon. Remote Sens. 2018, 10, 1683.

- Chen, G.; Weng, Q.; Hay, G.J.; He, Y. Geographic object-based image analysis (GEOBIA): Emerging trends and future opportunities. GISci. Remote Sens. 2018, 55, 159–182.

- Dornik, A.; Drăguţ, L.; Urdea, P. Classification of Soil Types Using Geographic Object-Based Image Analysis and Random Forests. Pedosphere 2018, 28, 913–925.

- Hegyi, A.; Vernica, M.-M.; Drăguţ, L. An object-based approach to support the automatic delineation of magnetic anomalies. Archaeol. Prospect. 2019, 27, 1–10.

- Drăguţ, L.; Eisank, C.; Strasser, T. Local variance for multi-scale analysis in geomorphometry. Geomorphology 2011, 130, 162–172.

- Hay, G.J.; Niemann, K.O.; McLean, G.F. An object-specific image-texture analysis of H-resolution forest imagery. Remote Sens. Environ. 1996, 55, 108–122.

- Wężyk, P.; Hawryło, P.; Janus, B.; Weidenbach, M.; Szostak, M. Forest cover changes in Gorce NP (Poland) using photointerpretation of analogue photographs and GEOBIA of orthophotos and nDSM based on image-matching based approach. Eur. J. Remote Sens. 2018, 51, 501–510.

- Chen, G.; Hay, G.J. An airborne lidar sampling strategy to model forest canopy height from Quickbird imagery and GEOBIA. Remote Sens. Environ. 2011, 115, 1532–1542.

- Vogels, M.F.A.; de Jong, S.M.; Sterk, G.; Addink, E.A. Agricultural cropland mapping using black-and-white aerial photography, Object-Based Image Analysis and Random Forests. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 114–123.

- Pinto, A.T.; Gonçalves, J.A.; Beja, P.; Honrado, J.P. From archived historical aerial imagery to informative orthophotos: A framework for retrieving the past in long-term socioecological research. Remote Sens. 2019, 11, 1388.

- Nita, M.D.; Munteanu, C.; Gutman, G.; Abrudan, I.V.; Radeloff, V.C. Widespread forest cutting in the aftermath of World War II captured by broad-scale historical Corona spy satellite photography. Remote Sens. Environ. 2018, 204, 322–332.

- Kadmon, R.; Harari-Kremer, R. Studying long-term vegetation dynamics using digital processing of historical aerial photographs. Remote Sens. Environ. 1999, 68, 164–176.

- Ellis, E.C.; Wang, H.; Xiao, H.S.; Peng, K.; Liu, X.P.; Li, S.C.; Ouyang, H.; Cheng, X.; Yang, L.Z. Measuring long-term ecological changes in densely populated landscapes using current and historical high resolution imagery. Remote Sens. Environ. 2006, 100, 457–473.

- Kupidura, P. The Comparison of Different Methods of Texture Analysis for Their Efficacy for Land Use Classification in Satellite Imagery. Remote Sens. 2019, 11, 1233.

- Sertel, E.; Topaloğlu, R.H.; Şallı, B.; Yay Algan, I.; Aksu, G.A. Comparison of Landscape Metrics for Three Different Level Land Cover/Land Use Maps. ISPRS Int. J. Geo. Inf. 2018, 7, 408.

- Riedler, B.; Lang, S. A spatially explicit patch model of habitat quality, integrating spatio-structural indicators. Ecol. Indic. 2018, 94, 128–141.

- Ode, Å.; Tveit, M.; Fry, G. Capturing landscape visual character using indicators: Touching base with landscape aesthetic theory. Landsc. Res. 2008, 33, 89–117.

- Tveit, M.; Ode, A.; Fry, G. Key visual concepts in a framework for analyzing visual landscape character. Landsc. Res. 2006, 31, 229–255.

- Frazier, A.E.; Kedron, P. Landscape Metrics: Past Progress and Future Directions. Curr. Landsc. Ecol. Rep. 2017, 2, 63–72.

- Schirpke, U.; Tasser, E.; Tappeiner, U. Predicting scenic beauty of mountain regions. Landsc. Urban. Plan. 2013, 111, 1–12.

- Gong, L.; Zhang, Z.; Xu, C. Developing a Quality Assessment Index System for Scenic Forest Management: A Case Study from Xishan Mountain, Suburban Beijing. Forests 2015, 6, 225–243.

- Hermes, J.; Albert, C.; von Haaren, C. Assessing the aesthetic quality of landscapes in Germany. Ecosyst. Serv. 2018, 31, 296–307.

- Fry, G.; Tveit, M.S.; Ode, Å.; Velarde, M.D. The ecology of visual landscapes: Exploring the conceptual common ground of visual and ecological landscape indicators. Ecol. Indic. 2009, 9, 933–947.

- Robert, S. Assessing the visual landscape potential of coastal territories for spatial planning. A case study in the French Mediterranean. Land Use Policy 2018, 72, 138–151.

- Castilla, G.; Hay, G.J. Image objects and geographic objects. In Object-Based Image Analysis; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Heidelberg/Berlin, Germany, 2008; pp. 91–110.

- Bock, M.; Xofis, P.; Mitchley, J.; Rossner, G.; Wissen, M. Object-Oriented Methods for Habitat Mapping at Multiple Scales—Case Studies from Northern Germany and Wye Downs, UK. J. Nat. Conserv. 2005, 13, 75–89.

- Hossain, M.D.; Chen, D. Segmentation for Object-Based Image Analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.

- Baatz, M.; Schäpe, A. Multiresolution segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informations-Verarbeitung; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Herbert Wichmann-Verlag: Heidelberg, Germany, 2000; Volume XII, pp. 12–23.

- Munyati, C. Optimising multiresolution segmentation: Delineating savannah vegetation boundaries in the Kruger National Park, South Africa, using Sentinel 2 MSI Imagery. Int. J. Remote Sens. 2018, 39, 5997–6019.

- Fu, Z.; Sun, Y.; Fan, L.; Han, Y. Multiscale and multifeature segmentation of high-spatial resolution remote sensing images using superpixels with mutual optimal strategy. Remote Sens. 2018, 10, 1289.

- Möller, M.; Lymburner, L.; Volk, M. The comparison index: A tool for assessing the accuracy of image segmentation. Int. J. Appl. Earth Obs. Geoinf. 2007, 9, 311–321.

- Duro, D.C.; Franklin, S.E.; Dubé, M. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

- Jhonnerie, R.; Siregar, V.P.; Nababan, B.; Prasetyo, L.B.; Wouthuyzen, S. Random Forest Classification for Mangrove Land Cover Mapping Using Landsat 5 TM and Alos Palsar Imageries. Procedia Environ. Sci. 2015, 24, 215–221.

- Drăguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. Remote Sens. 2014, 88, 119–127.

- The ESP Software Repository. Available online: http://research.enjoymaps.ro/downloads/ (accessed on 4 May 2020).

- Woodcock, C.E.; Strahler, A.H. The factor of scale in remote sensing. Remote Sens. Environ. 1987, 21, 311–332.

- Kim, M.; Madden, M.; Warner, T. Estimation of optimal image object size for the segmentation of forest stands with multispectral IKONOS imagery. In Object-Based Image Analysis-Spatial Concepts for Knowledge Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Heidelberg/Berlin, Germany, 2008; pp. 291–307.

- Kavzoglu, T.; Yildiz Erdemir, M.; Tonbul, H. A region-based multi-scale approach for object-based image analysis. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 241–247.

- Drăguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Zhang, X.; Fritts, J.E.; Goldman, S.A. Image segmentation evaluation: A survey of unsupervised methods. Comput. Vis. Image Underst. 2008, 110, 260–280.

- Yang, J.; He, Y.; Weng, Q. An Automated Method to Parameterize Segmentation Scale by Enhancing Intrasegment Homogeneity and Intersegment Heterogeneity. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1282–1286.

- Yang, L.; Mansaray, L.R.; Huang, J.; Wang, L. Optimal segmentation scale parameter, feature subset and classification algorithm for geographic object-based crop recognition using multisource satellite imagery. Remote Sens. 2019, 11, 514.

- Dorren, L.; Maier, B.; Seijmonsbergen, A. Improved Landsat-based forest mapping in steep mountainous terrain using object-based classification. Forest Ecol. Manag. 2003, 183, 31–46.

- Kim, M.; Madden, M.; Warner, T. Forest type mapping using object-specific texture measures from multispectral IKONOS imagery: Segmentation quality and image classification issues. Photogramm. Eng. Remote Sens. 2009, 75, 819–830.

- Csillik, O. Fast segmentation and classification of very high resolution remote sensing data using SLIC superpixels. Remote Sens. 2017, 9, 243.

- Lu, L.; Tao, Y.; Di, L. Object-Based Plastic-Mulched Landcover Extraction Using Integrated Sentinel-1 and Sentinel-2 Data. Remote Sens. 2018, 10, 1820.

- Wang, B.; Zhang, Z.; Wang, X.; Zhao, X.; Yi, L.; Hu, S. Object-based mapping of gullies using optical images: A case study in the black soil region, Northeast of China. Remote Sens. 2020, 12, 487.

- Belgiu, M.; Drăguţ, L. Comparing supervised and unsupervised multiresolution segmentation approaches for extracting buildings from very high resolution imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 67–75.

- Ode, A.; Miller, D. Analysing the relationship between indicators of landscape complexity and preference. Environ. Plan. B Plan. Des. 2011, 38, 24–40.

- Lynch, K. The Image of the City; The MIT Press: Cambridge, MA, USA, 1960.

- Shanken, A.M. The visual culture of planning. J. Plan. Hist. 2018, 17, 300–319.

- Chmielewski, T.J.; Kułak, A.; Michalik-Snieżek, M.; Lorens, B. Physiognomic structure of agro-forestry landscapes: Method of evaluation and guidelines for design, on the example of the West Polesie Biosphere Reserve. Int. Agrophys. 2016, 30, 415–429.

- Benedikt, M.L. To Take Hold of Space: Isovists and Isovist Fields. Environ. Plan. B Plann. Des. 1997, 6, 47–65.

- Doherty, M.F. Computation of Minimal Isovist Sets. Technical Report. Maryland University College Park Centre for Automation Research (ADA157624), 89. 1984. Available online: https://apps.dtic.mil/sti/citations/ADA157624 (accessed on 1 July 2020).

- Gobster, P.H. Visions of nature: Conflict and compatibility in urban park restoration. Landsc. Urban. Plan. 2001, 56, 35–51.

- Skalski, J. Komfort Dalekiego Patrzenia a Krajobraz Dolin Rzecznych W Miastach Rzecznych Na Nizinach. Teka Komisji Architektury, Urbanistyki i Studiów Krajobrazowych 2005, 1, 44–52.

- Smardon, R.C.; Palmer, J.F.; Felleman, J.P. Foundations for Visual Project Analysis; Wiley: New York, NY, USA, 1986.

- Olszewska, A.; Marques, P.F.; Barbosa, F. Enhancing Urban Landscape with Neuroscience Tools: Lessons from the Human Brain. Citygreen 2015, 1, 60.

- Olszewska, A.; Marques, P.F.; Ryan, R.L.; Barbosa, F. What makes a landscape contemplative? Environ. Plan. B Urban. Analy. City Sci. 2018, 45, 7–25.

- Kulik, M.; Warda, M.; Leśniewska, P. Monitoring the diversity of psammophilous grassland communities in the Kózki Nature Reserve under grazing and non-grazing conditions. J. Water Land Dev. 2013, 19, 59–67.

- Warda, M.; Kulik, M.; Gruszecki, T. Description of selected grass communities in the “Kozki” nature reserve and a test of their active protection through the grazing of sheep of the Świniarka race. Ann. UMCS Agric. 2011, 66, 1–8.

- Kulik, M.; Patkowski, K.; Warda, M.; Lipiec, A.; Bojar, W.; Gruszecki, T.M. Assessment of biomass nutritive value in the context of animal welfare and conservation of selected Natura 2000 habitats (4030, 6120 and 6210) in eastern Poland. Glob. Ecol. Conserv. 2019, 19, e00675.

- Benedikt, M.L.; Mcelhinney, S. Isovists and the Metrics of Architectural Space. In Proceedings 107th ACSA Annual Meeting; Ficca, J., Kulper, A., Eds.; ACSA Press: Pittsburgh, PA, USA, 2019; pp. 1–10.

- Nagarajan, S.; Schenk, T. Feature-based registration of historical aerial images by Area Minimization. ISPRS J. Photogramm. Remote Sens. 2016, 116, 15–23.

- Brinkmann, K.; Hoffmann, E.; Buerkert, A. Spatial and temporal dynamics of Urban Wetlands in an Indian Megacity over the past 50 years. Remote Sens. 2020, 12, 662.

- Galiatsatos, N.; Donoghue, D.N.M.; Philip, G. High resolution elevation data derived from stereoscopic CORONA imagery with minimal ground control: An approach using Ikonos and SRTM data. Photogramm. Eng. Remote Sens. 2008, 74, 1093–1106.

- Gheyle, W.; Bourgeois, J.; Goossens, R.; Jacobsen, K. Scan Problems in Digital CORONA Satellite Images from USGS Archives. Photogramm. Eng. Remote Sens. 2011, 77, 1257–1264.

- Luman, D.E.; Stohr, C.; Hunt, L. Digital reproduction of historical aerial photographic prints for preserving a deteriorating archive. Photogramm. Eng. Remote Sens. 1997, 63, 1171–1179.

- The Web Mapping Services (WMS) of Polish National Geoportal. Available online: https://mapy.geoportal.gov.pl/wss/service/img/guest/ORTO/MapServer/WMSServer (accessed on 10 August 2020).

- Ford, M. Shoreline changes interpreted from multi-temporal aerial photographs and high resolution satellite images: Wotje Atoll, Marshall Islands. Remote Sens. Environ. 2013, 135, 130–140.

- Casana, J.; Cothren, J. Stereo analysis, DEM extraction and orthorectification of CORONA satellite imagery: Archaeological applications from the Near East. Antiquity 2008, 82, 732–749.

- Ma, R. Rational function model in processing historical aerial photographs. Photogramm. Eng. Remote Sens. 2013, 79, 337.

- Laben Craig, A.; Bernard, V.B. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Coeurdevey, L.; Fernandez, K. Pleiades Imagery User Guide. Report No. USRPHR-DT-125-SPOT-2.0. Airbus Defense and Space Intelligence, 2012, France CNES, 106. Available online: https://www.intelligence-airbusds.com/en/8718-user-guides (accessed on 4 May 2020).

- Niedzielski, T.; Witek, M.; Miziński, B.; Remisz, J. The Description of UAV Campaign in Kózki Nature Reserve. Biuletyn Informacyjny Instytutu Geografii i Rozwoju Regionalnego 2017, 7–10, 17–18. Available online: http://www.geogr.uni.wroc.pl/data/files/lib-biuletyn_igrr_2017_07_08_09_10.pdf (accessed on 13 April 2020).

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Su, T.; Zhang, S. Local and global evaluation for remote sensing image segmentation. ISPRS J. Photogramm. Remote Sens. 2017, 130, 256–276.

- Lucieer, A.; Stein, A. Existential uncertainty of spatial objects segmented from satellite sensor imagery. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2518–2521.

- Lucieer, A. Uncertainties in segmentation and their visualisation. Ph.D. Thesis, International Institute for Geo-Information Science and Earth Observation (ITC) and the University of Utrecht, Utrecht, The Netherlands, 2004.

- Clinton, N.; Holt, A.; Scarborough, J.; Yan, L.I.; Gong, P. Accuracy assessment measures for object-based image segmentation goodness. Photogramm. Eng. Remote Sens. 2010, 76, 289–299.

- Whiteside, T.G.; Maier, S.W.; Boggs, G.S. Area-based and location-based validation of classified image objects. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 117–130.

- Sicre, M.; Fieuzal, R.; Baup, F. Contribution of multispectral (optical and radar) satellite images to the classification of agricultural surfaces. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101972.

- Cai, L.; Shi, W.; Miao, Z.; Hao, M. Accuracy assessment measures for object extraction from remote sensing images. Remote Sens. 2018, 10, 303.

- Zhang, X.; Feng, X.; Xiao, P.; He, G.; Zhu, L. Segmentation quality evaluation using region-based precision and recall measures for remote sensing images. ISPRS J. Photogramm. Remote Sens. 2015, 102, 73–84.

- Marpu, P.R.; Neubert, M.; Herold, H.; Niemeyer, I. Enhanced evaluation of image segmentation results. J. Spat. Sci. 2010, 55, 55–68.

- Montaghi, A.; Larsen, R.; Greve, M.H. Accuracy assessment measures for image segmentation goodness of the land parcel identification system (LPIS) in Denmark. Remote Sens. Lett. 2013, 4, 946–955.

- Dubes, R.C. How many clusters are best?—An experiment. Pattern Recognit. 1987, 20, 645–663.

- Polak, M.; Zhang, H.; Pi, M. An evaluation metric for image segmentation of multiple objects. Image Vis. Comput. 2009, 27, 1223–1227.

- Anderson, J.R. A Land Use and Land Cover Classification System for Use with Remote Sensor Data; U.S. Government Printing Office: Washington, DC, USA, 1976; 28p.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

- Foody, G.M. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sens. Environ. 2020, 239, 111630.

- Mahesh, P. Random Forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Sabat-Tomala, A.; Raczko, E.; Zagajewski, B. Comparison of support vector machine and random forest algorithms for invasive and expansive species classification using airborne hyperspectral data. Remote Sens. 2020, 12, 516.

- Mallinis, G.; Koutsias, N.; Tsakiri-Strati, M.; Karteris, M. Object-Based Classification Using Quickbird Imagery for Delineating Forest Vegetation Polygons in a Mediterranean Test Site. ISPRS J. Photogramm. Remote Sens. 2008, 63, 237–250.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. Syst. Man Cybern. IEEE Trans. 1973, 6, 610–621.

- Batty, M.; Rana, S. The Automatic Definition and Generation of Axial Lines and Axial Maps. Environ. Plan. B: Plan. Des. 2004, 31, 615–640.

- Jones, E.G.; Wong, S.; Milton, A.; Sclauzero, J.; Whittenbury, H.; McDonnell, M.D. The impact of pan-sharpening and spectral resolution on vineyard segmentation through machine learning. Remote Sens. 2020, 12, 934.

- Xiao, P.; Zhang, X.; Zhang, H.; Hu, R.; Feng, X. Multiscale optimized segmentation of urban green cover in high resolution remote sensing image. Remote Sens. 2018, 10, 1813.

- Weitkamp, G.; Lammeren, R.; van Bregt, A. Validation of isovist variables as predictors of perceived landscape openness. Landsc. Urban. Plan. 2014, 125, 140–145. [Google Scholar] [CrossRef]

- Wang, Y.; Dou, W. A fast candidate viewpoints filtering algorithm for multiple viewshed site planning. Int. J. Geogr. Inf. Sci. 2020, 34, 448–463. [Google Scholar] [CrossRef]

- Shi, X.; Xue, B. Deriving a minimum set of viewpoints for maximum coverage over any given digital elevation model data. Int. J. Digit. Earth 2016, 9, 1153–1167. [Google Scholar] [CrossRef]

- Chmielewski, S.; Lee, D. GIS-Based 3D visibility modeling of outdoor advertising in urban areas. In Proceedings of the 15th International Multidisciplinary Scientific GeoConference SGEM, Albena, Bulgaria, 18–24 June 2015; pp. 923–930. [Google Scholar]

- Turner, A.; Doxa, M.; O’Sullivan, D.; Penn, A. From isovists to visibility graphs: A methodology for the analysis of architectural space. Environ. Plan. B Plan. Des. 2001, 28, 103–121. [Google Scholar] [CrossRef] [Green Version]

- Suleiman, W.; Joliveau, T.; Favier, E. A New Algorithm for 3D Isovists. In Advances in Spatial Data Handling; Timpf, S., Laube, P., Eds.; Springer: Heidelberg/Berlin, Germany, 2013; pp. 157–173. [Google Scholar]

- Varoudis, T.; Psarra, S. Beyond two dimensions: Architecture through three-dimensional visibility graph analysis. J. Space Syntax 2014, 5, 91–108. [Google Scholar]

- Tobler, W.R. A computer model simulation of urban growth in the Detroit region. Econ. Geogr. 1970, 46, 234–240. [Google Scholar] [CrossRef]

- Fisher, P.F. An Exploration of Probable Viewsheds in Landscape Planning. Environ. Plan. B Plan. Des. 1995, 22, 527–546. [Google Scholar] [CrossRef]

- Bartie, P.; Reitsma, F.; Kingham, S.; Mills, S. Incorporating vegetation into visual exposure modelling in urban environments. Int. J. Geogr. Inf. Sci. 2011, 5, 851–868. [Google Scholar] [CrossRef]

- O’Neill, R.V.; Hunsaker, C.T.; Jackson, B.L.; Jones, K.B.; Riiters, K.H.; Wickham, J.D. Scale problems in reporting landscape pattern at regional scale. Landsc. Ecol. 1996, 11, 169–180. [Google Scholar] [CrossRef]

- Kim-Prieto, C.; Diener, E.; Tamir, M.; Scollon, C.; Diener, M. Integrating the Diverse Definitions of Happiness: A Time-Sequential Framework of Subjective Well-Being. J. Happiness Stud. 2005, 6, 261–300. [Google Scholar] [CrossRef]

- Helbich, M. Toward dynamic urban environmental exposure assessments in mental health research. Environ. Res. 2018, 161, 129–135. [Google Scholar] [CrossRef]

- Navickas, L.; Olszewska, A.; Mantadelis, T. CLASS: Contemplative landscape automated scoring system. In Proceedings of the 24th Mediterranean Conference on Control and Automation (MED), Athens, Greece, 21–24 June 2016; pp. 1180–1185. [Google Scholar]

- Council of Europe. The European Landscape Convention Text. 2007. Available online: https://www.coe.int/en/web/conventions/full-list/-/conventions/treaty/176 (accessed on 20 August 2020).

- Cassatella, C. Landscape Indicators. Assessing and Monitoring Landscape Quality; Cassatella, C., Peano, A., Eds.; Springer: Dordrecht, The Netherland, 2011. [Google Scholar] [CrossRef]

- Dramstad, W.E.; Tveit, M.S.; Fjellstad, W.J.; Fry, G.L. Relationships between visual landscape preferences and map-based indicators of landscape structure. Landsc. Urban. Plan. 2006, 78, 465–474. [Google Scholar] [CrossRef]

- Uuemaa, E.; Roosaare, J.; Mander, U. Landscape metrics as indicators of river water quality at catchment scale. Nord. Hydrol. 2007, 38, 125–138.

- Walz, U.; Stein, C. Indicator for a monitoring of Germany’s landscape attractiveness. Ecol. Indic. 2018, 94, 64–73.

- Lustig, A.; Stouffer, D.B.; Roigé, M.; Worner, S.P. Towards more predictable and consistent landscape metrics across spatial scales. Ecol. Indic. 2015, 57, 11–21.

- Herbst, H.; Förster, M.; Kleinschmit, B. Contribution of landscape metrics to the assessment of scenic quality: The example of the landscape structure plan Havelland/Germany. Landsc. Online 2009, 10, 1–17.

- Xiong, J.; Thenkabail, P.S.; Gumma, M.K.; Teluguntla, P.; Poehnelt, J.; Congalton, R.G.; Yadav, K.; Thau, D. Automated cropland mapping of continental Africa using Google Earth Engine cloud computing. ISPRS J. Photogramm. Remote Sens. 2017, 126, 225–244.

- Midekisa, A.; Holl, F.; Savory, D.J.; Andrade-Pacheco, R.; Gething, P.W.; Bennett, A.; Sturrock, H.J. Mapping land cover change over continental Africa using Landsat and Google Earth Engine cloud computing. PLoS ONE 2017, 12, e0184926.

- Cushman, S.A.; McGarigal, K.; Neel, M.C. Parsimony in landscape metrics: Strength, universality, and consistency. Ecol. Indic. 2008, 8, 691–703.

- Fry, G. Culture and nature versus culture or nature. In The New Dimensions of the European Landscape; Jongman, R., Ed.; Springer: Dordrecht, The Netherlands, 2002; pp. 75–81.

| Date | Type | Scale/GSD | Spectral Resolution | Camera/Project Info/RMSE |
|---|---|---|---|---|
| 1957 (August) | Archival aerial imagery, mono-coverage | 1:18,000/0.2 m | Grayscale | RC5/unknown/3.6 * |
| 1965 (26 September) | CORONA KH-4A | 2.8 m | Grayscale | 70 mm Panoramic, Forward, Stereo Medium/Mission 1024-1/3.3 m * |
| 1973 (July) | Archival aerial imagery, mono-coverage | 1:17,000/0.18 m | Grayscale | RC8/unknown/2.1 * |
| 1997 (month unknown) | Orthophoto | 0.5 m | RGB | RC20/PHARE LPIS48/1.5 m |
| 2006 (month unknown) | Orthophoto | 0.5 m | RGB | RC20/LPIS_Centrum/1.5 m |
| 2010 (month unknown) | Orthophoto | 0.25 m | RGB + CIR | Unknown/LPIS40/0.75 m |
| 2015 (4 July) | Pleiades-1B Ortho (Level 3) after radiometric and geometric correction | 0.5 m (pansharpened) | RGB + NIR | Digital 12 bits/not specified/0.35 m |
| 2018 (26 June) | UAV eBee flight campaign (Orthophoto) | 0.04 m | RGB + CIR | Canon S100/BIOSTRATEG2/297267/14/NCBR/2016/RMSE 0.39 m |

| Class Name | Status | LCCL-1 (USGS) | LCCL-1 (CLC) | LCCL-2 (USGS) | LCCL-2 (CLC) | LCCL-3 (CLC) |
|---|---|---|---|---|---|---|
| Built-up areas | c-class | - | Artificial surfaces (1) | Residential | - | Road and rail networks |
| Forest | c-class | Forest land | - | Deciduous, evergreen, mixed forest land, forest wetland, orchards | Forests (31) | Broad-leaved forest (311), coniferous forest (312), mixed forest (313), fruit trees and berry plantations (222), agro-forestry areas (244) |
| Shrubs | c-class | - | - | Shrub | Scrub (32) | |
| Xeric sandy grassland | s-class | - | - | Herbaceous | Herbaceous (32) | Moors and heathland (322) |
| Open sands | s-class | - | - | Sandy areas other than beaches | Open spaces with little or no vegetation (33) | Sparsely vegetated areas (333) |
| Water | s-class | Water | Water bodies (5) | Lakes | Inland waters (51) | Water bodies (512) |
| Other (agricultural background) | s-class | - | Agricultural area (2) | Cropland and pastures, bare ground | Arable land (21), pasture (23) | Non-irrigated arable land (211), pasture (231), natural grasslands (321) |

| Metric | 1957 | 1965 | 1973 | 1997 | 2006 | 2010 | 2015 | 2018 |
|---|---|---|---|---|---|---|---|---|
| NP | 479 | 261 | 541 | 598 | 561 | 572 | 465 | 510 |
| PD | 1328.2 | 1348.5 | 1317 | 981 | 682.9 | 643.6 | 538.1 | 576.6 |

| Year | Vn Primary (count) | Vn Secondary (count) | Vn Total (count) | VD Primary (n/km²) | VD Secondary (n/km²) | VD Total (n/km²) | VS Primary (km) | VS Secondary (km) | VS Total (km) |
|---|---|---|---|---|---|---|---|---|---|
| 1957 | 3 | 123 | 126 | 1.5 | 61.5 | 63 | 0.82 | 0.13 | 0.13 |
| 1965 | 2 | 136 | 138 | 1 | 68 | 69 | 1.00 | 0.12 | 0.12 |
| 1973 | 6 | 147 | 153 | 3 | 73.5 | 76.5 | 0.58 | 0.12 | 0.11 |
| 1997 | 8 | 229 | 237 | 4 | 114.5 | 118.5 | 0.50 | 0.09 | 0.09 |
| 2006 | 11 | 239 | 250 | 5.5 | 119.5 | 125 | 0.43 | 0.09 | 0.09 |
| 2010 | 11 | 223 | 234 | 5.5 | 111.5 | 117 | 0.43 | 0.09 | 0.09 |
| 2015 | 10 | 234 | 244 | 5 | 117 | 122 | 0.45 | 0.09 | 0.09 |
| 2018 | 9 | 216 | 225 | 4.5 | 108 | 112.5 | 0.47 | 0.10 | 0.09 |
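
The three indicator groups in the table above appear to be arithmetically linked. The following Python sketch is an illustration only and rests on two assumptions inferred from the tabulated values rather than stated in the table: that VD equals Vn divided by a reference area of 2 km² (every VD entry is exactly half of its Vn entry), and that VS equals 1/√VD (which reproduces the tabulated VS values to two decimals). The names `AREA_KM2`, `density`, and `spacing` are hypothetical.

```python
import math

# Assumption inferred from the table: VD = Vn / 2 in every row,
# which implies a reference area of 2 km^2.
AREA_KM2 = 2.0


def density(count: int, area_km2: float = AREA_KM2) -> float:
    """Return the density VD (n/km^2) for a count Vn."""
    return count / area_km2


def spacing(count: int, area_km2: float = AREA_KM2) -> float:
    """Return the spacing VS (km), assumed here to be 1 / sqrt(VD)."""
    return 1.0 / math.sqrt(density(count, area_km2))


# Vn values (primary, secondary) copied from the table above.
VN = {
    1957: (3, 123),
    1965: (2, 136),
    1973: (6, 147),
    1997: (8, 229),
    2006: (11, 239),
    2010: (11, 223),
    2015: (10, 234),
    2018: (9, 216),
}

if __name__ == "__main__":
    for year, (primary, secondary) in VN.items():
        total = primary + secondary
        # Print total count, total density, and total spacing per time-frame.
        print(year, total, density(total), round(spacing(total), 2))
```

If these assumptions hold, the sketch can be used to check the table for transcription errors: any row whose VD or VS value deviates from the recomputed one would need to be verified against the original article.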

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chmielewski, S.; Bochniak, A.; Natapov, A.; Wężyk, P. Introducing GEOBIA to Landscape Imageability Assessment: A Multi-Temporal Case Study of the Nature Reserve “Kózki”, Poland. Remote Sens. 2020, 12, 2792. https://doi.org/10.3390/rs12172792
