Hierarchical Multi-Label Object Detection Framework for Remote Sensing Images

Abstract

1. Introduction

- We are the first to tackle the hierarchical multi-label object detection problem in the setting of hierarchically partially annotated datasets.

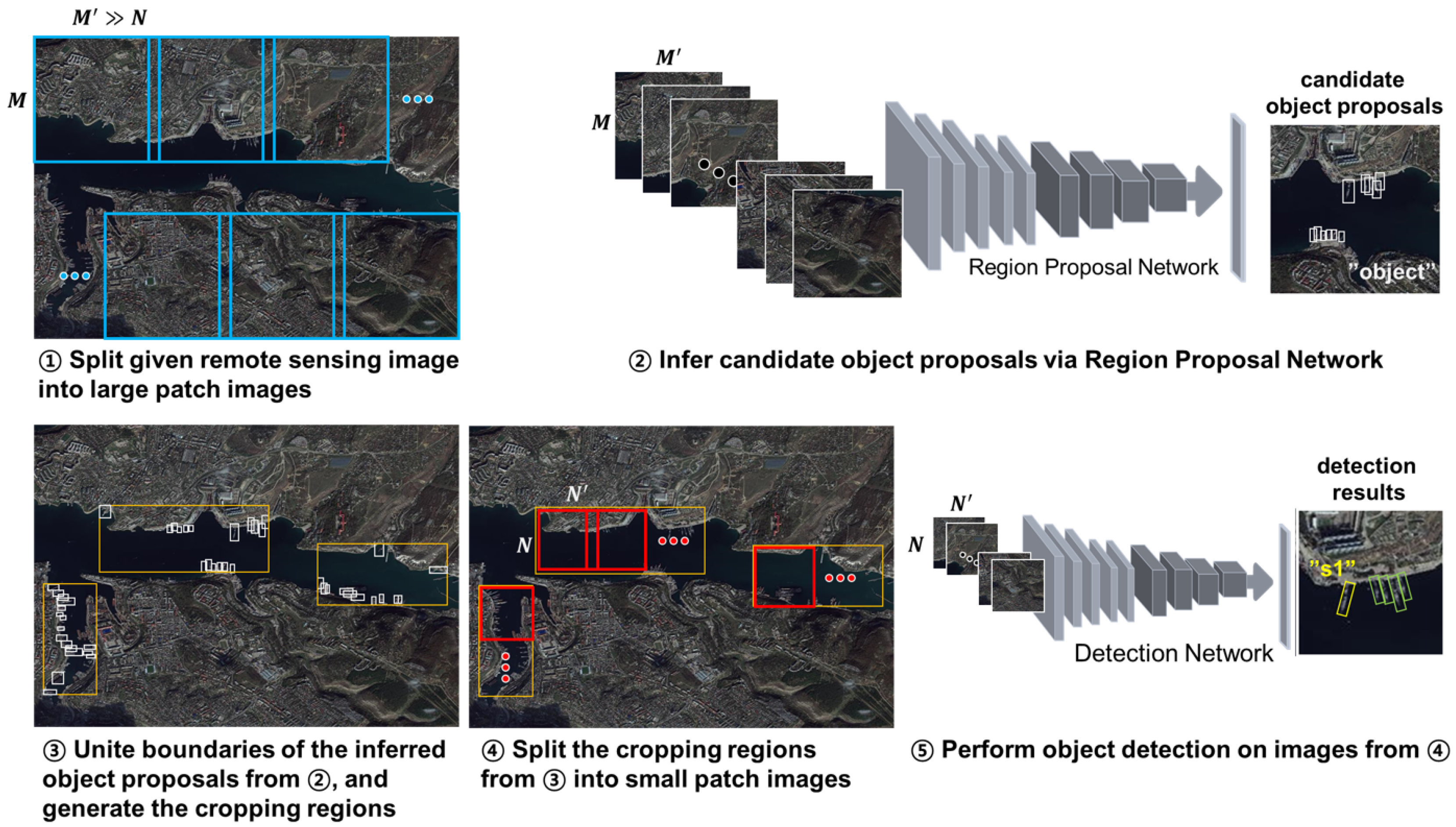

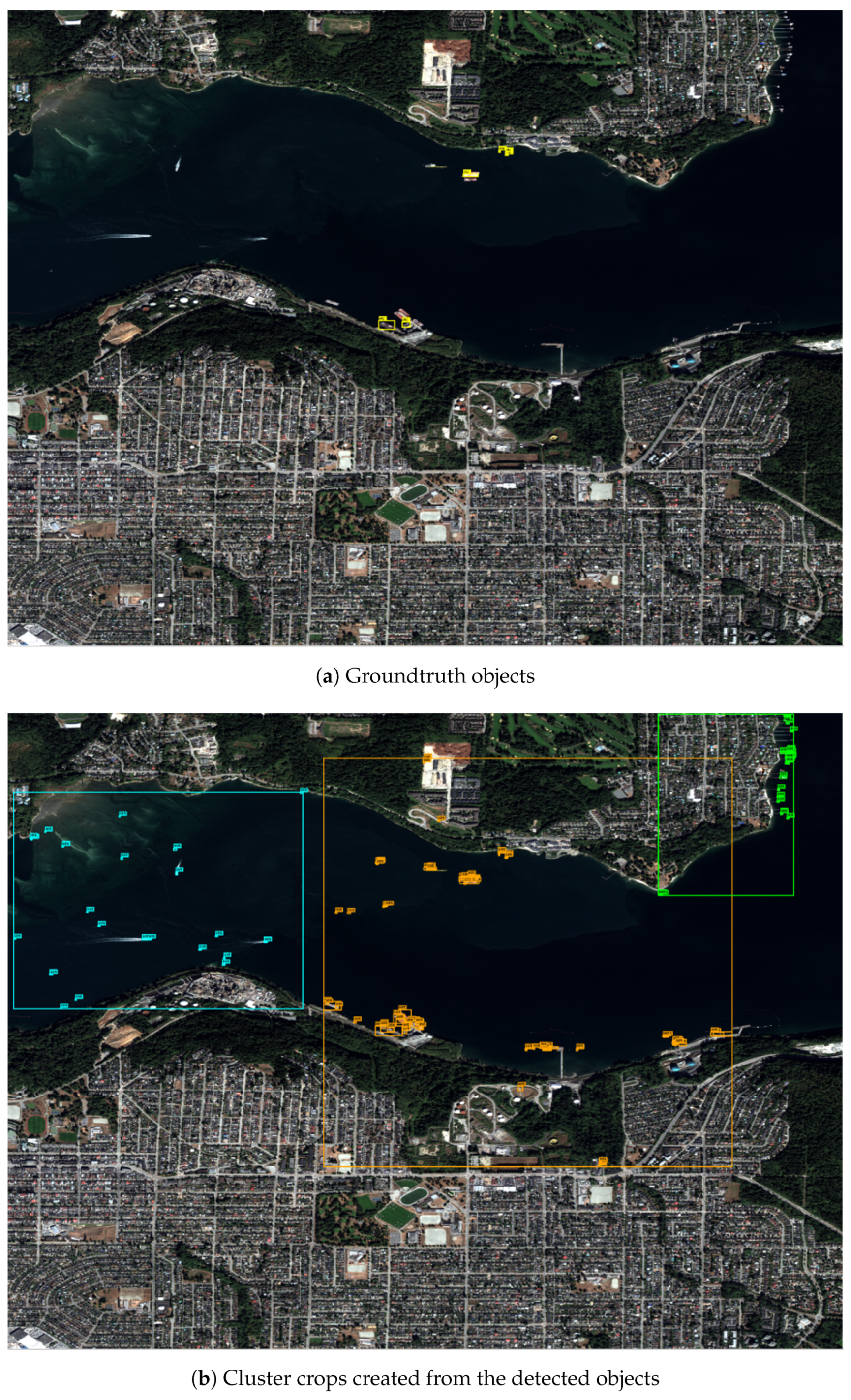

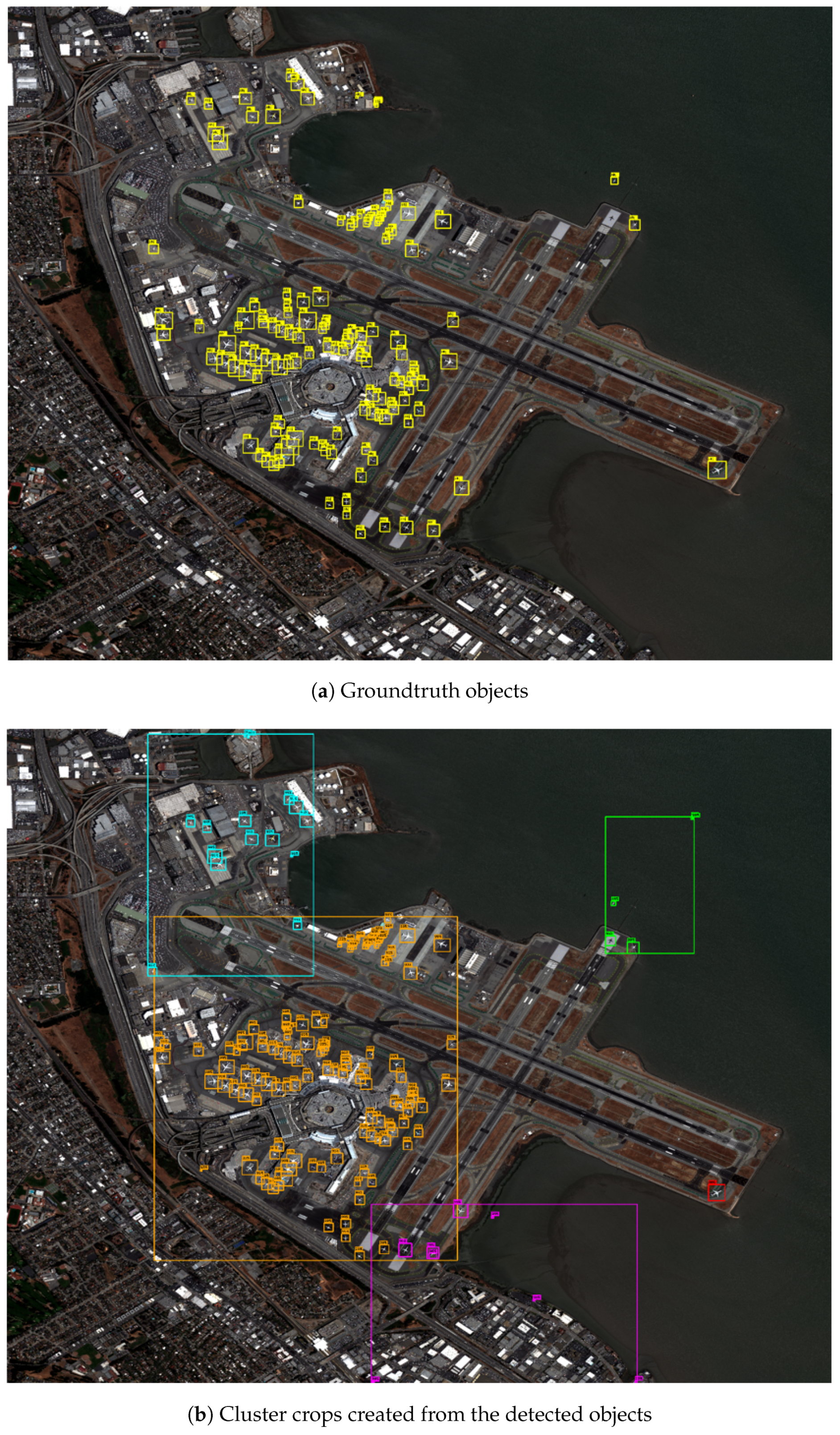

- We propose a clustering-guided cropping strategy that infers object proposals with a deep detector and then groups them with a clustering algorithm, enabling more efficient inference in the subsequent object detection stage.

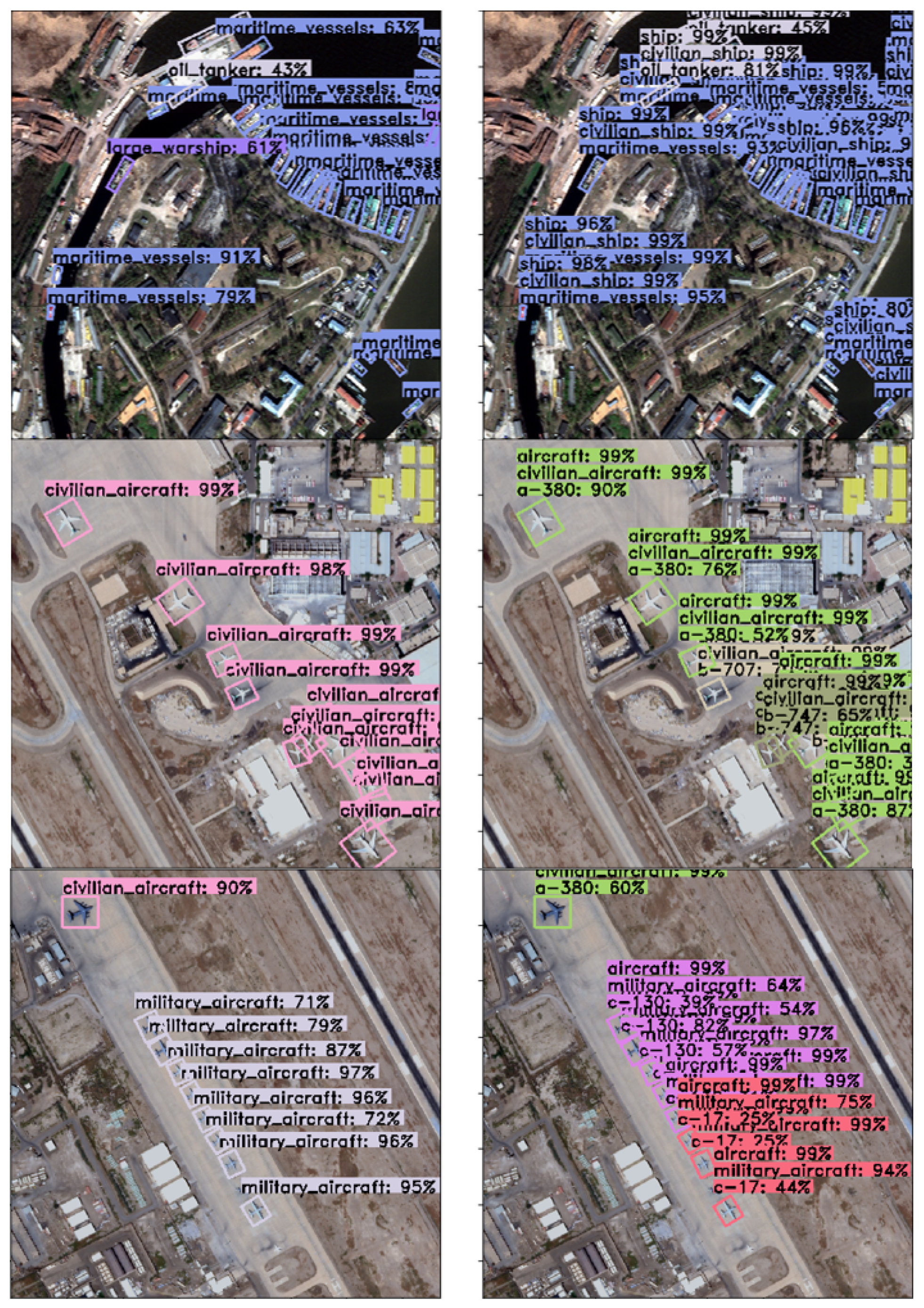

- We introduce DHCR (Decoupled Hierarchical Classification Refinement), a hierarchical multi-label object detection pipeline composed of a detection network with multiple classifiers and a classification network for hierarchical multi-labeling. DHCR can be trained on arbitrarily hierarchically annotated datasets and improves object detection performance.

- Extensive qualitative and quantitative experiments on our own datasets of WorldView-3 and SkySat satellite images suggest that the proposed framework is a practical solution for cropping regions and detecting objects with hierarchical multi-labels.

2. Previous Work

2.1. Object Detection and Applications of CNN in Remote Sensing Images

2.2. Region Proposal Methods for Aerial Images

2.3. CNN-based Hierarchical Multi-Label Classification

3. Proposed Methodology

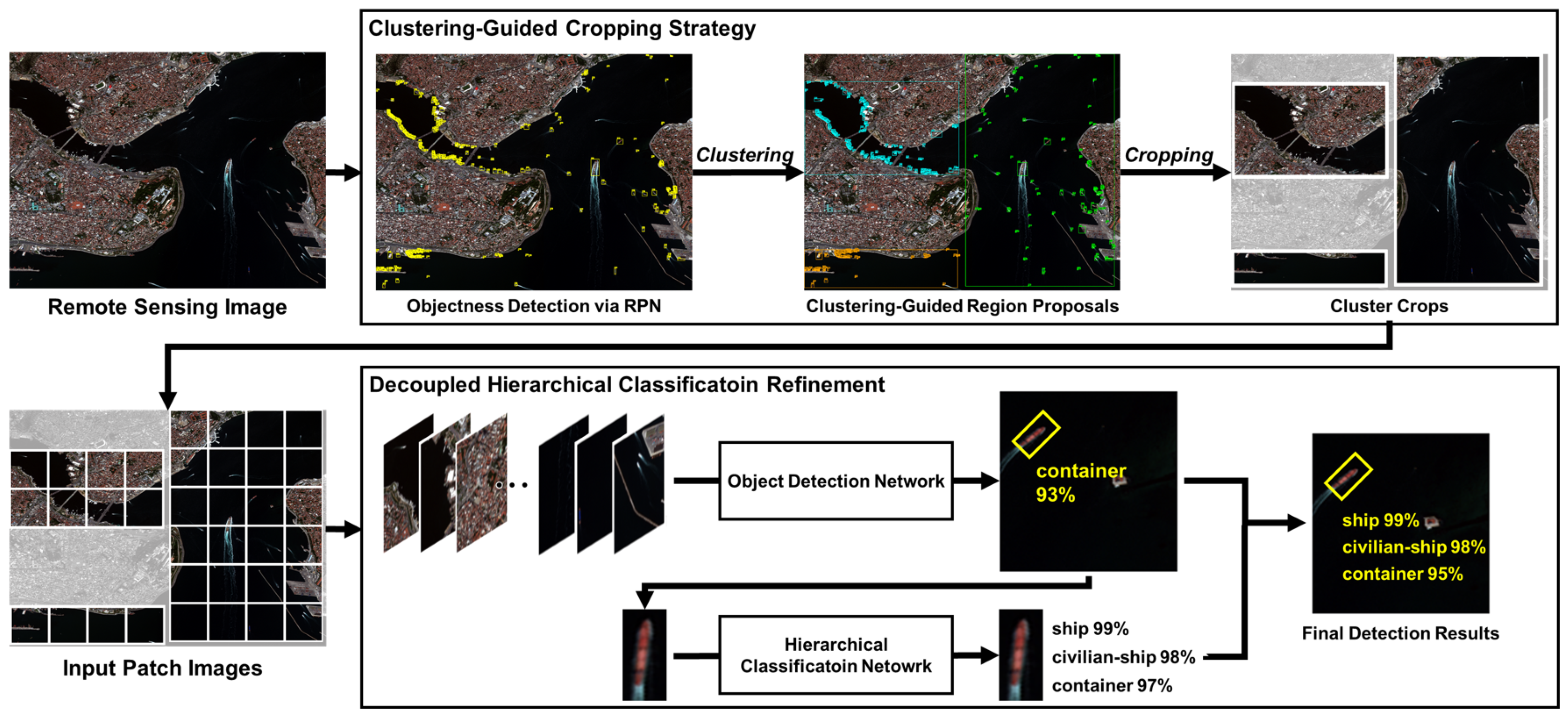

3.1. Overview of the Proposed Hierarchical Multi-Label Object Detection Framework

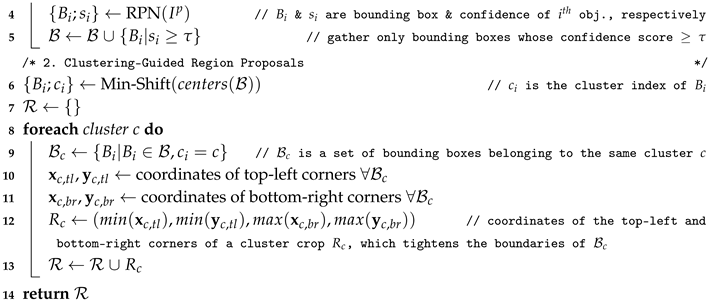

3.2. Clustering-Guided Cropping Strategy

Algorithm 1: Clustering-Guided Cropping Strategy

Input: a remote sensing image I, a score threshold
Output: coordinates of cluster crops
/* 1. Inference of Candidate Object Proposals via RPN */
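A minimal Python sketch of the cropping idea, under stated assumptions: proposals are axis-aligned boxes `(x1, y1, x2, y2, score)` already produced by an RPN; clustering uses a flat-kernel mean shift on box centers (the reference list includes Comaniciu and Meer's mean shift, but the kernel, the 500-pixel `bandwidth`, and the mode-merging rule here are assumptions); and each cluster crop is the union box of its members, padded and clipped to the image. All function and parameter names are hypothetical, and the default score threshold (0.98) and padding (300) are simply values explored in the sensitivity analysis. This is not the authors' implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical proposal format: (x1, y1, x2, y2, score) in full-image pixels.
Proposal = Tuple[float, float, float, float, float]
Crop = Tuple[float, float, float, float]


def mean_shift_labels(points: Sequence[Tuple[float, float]], bandwidth: float,
                      max_iter: int = 100, tol: float = 1e-3) -> List[int]:
    """Flat-kernel mean shift: move each point to the mean of its neighbors
    within `bandwidth` until it stops moving, then group nearby modes."""
    modes = []
    for start in points:
        x, y = start
        for _ in range(max_iter):
            nbrs = [q for q in points if math.dist((x, y), q) <= bandwidth]
            nx = sum(q[0] for q in nbrs) / len(nbrs)
            ny = sum(q[1] for q in nbrs) / len(nbrs)
            moved = math.dist((x, y), (nx, ny))
            x, y = nx, ny
            if moved < tol:
                break
        modes.append((x, y))
    # Assumption: modes closer than half the bandwidth form one cluster.
    centers: List[Tuple[float, float]] = []
    labels: List[int] = []
    for m in modes:
        for i, c in enumerate(centers):
            if math.dist(m, c) < bandwidth / 2:
                labels.append(i)
                break
        else:
            centers.append(m)
            labels.append(len(centers) - 1)
    return labels


def cluster_crops(proposals: Sequence[Proposal], width: int, height: int,
                  score_thr: float = 0.98, bandwidth: float = 500.0,
                  pad: float = 300) -> List[Crop]:
    """Return one padded, image-clipped crop (x1, y1, x2, y2) per cluster."""
    # 1. Keep only proposals scored higher than the threshold.
    kept = [p for p in proposals if p[4] > score_thr]
    if not kept:
        return []
    # 2. Cluster the proposal centers.
    centers = [((p[0] + p[2]) / 2, (p[1] + p[3]) / 2) for p in kept]
    labels = mean_shift_labels(centers, bandwidth)
    # 3. Take the union box of each cluster's proposals.
    boxes: Dict[int, Crop] = {}
    for p, lab in zip(kept, labels):
        x1, y1, x2, y2 = boxes.get(lab, p[:4])
        boxes[lab] = (min(x1, p[0]), min(y1, p[1]), max(x2, p[2]), max(y2, p[3]))
    # 4. Pad each union box and clip it to the image bounds.
    return [(max(0, x1 - pad), max(0, y1 - pad),
             min(width, x2 + pad), min(height, y2 + pad))
            for x1, y1, x2, y2 in boxes.values()]
```

Increasing `pad` enlarges each crop and therefore the number of patches to infer, which is the trade-off examined in the padding-size sensitivity analysis; raising `score_thr` clusters fewer proposals and tends to yield smaller or fewer crops.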

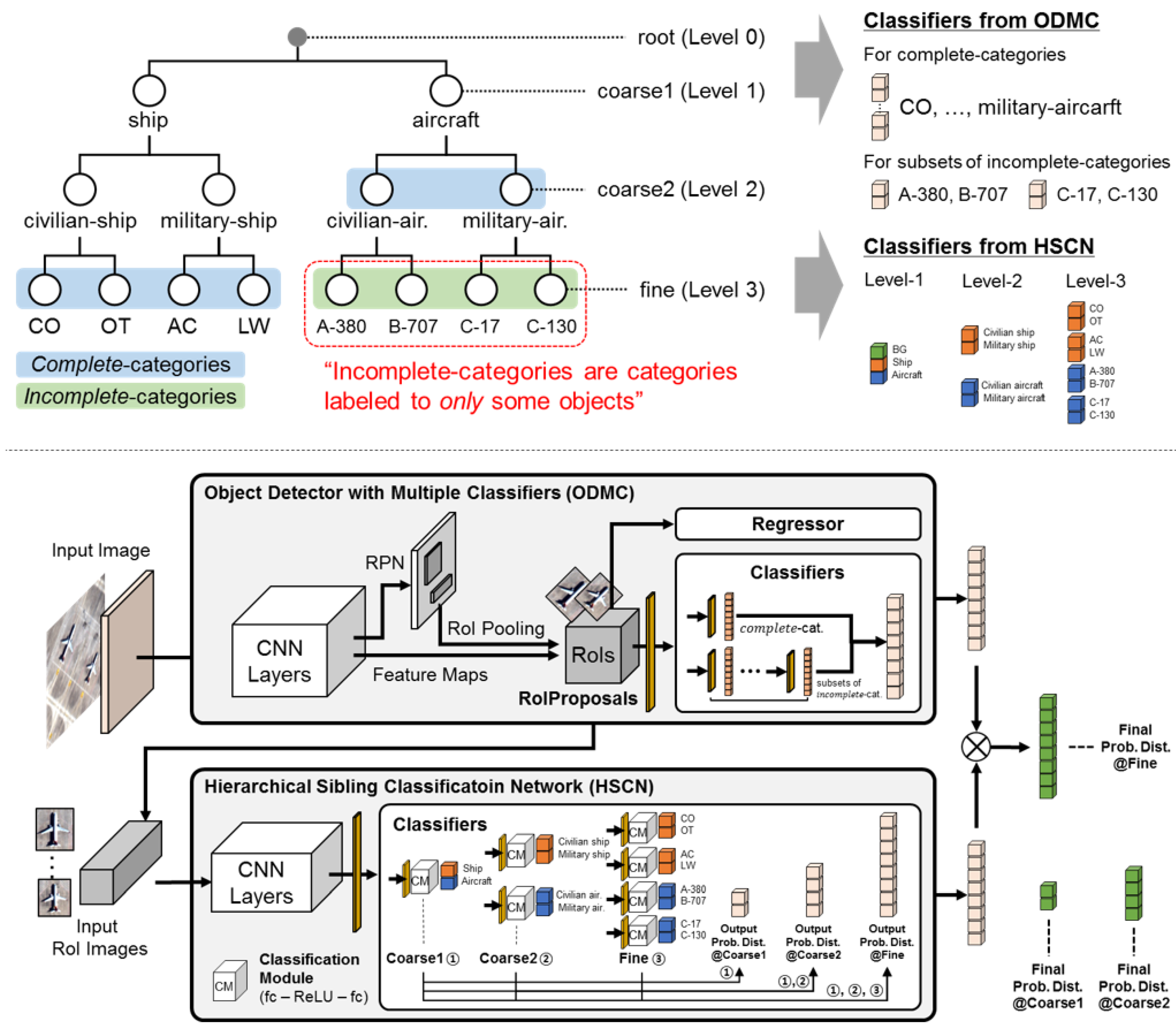

3.3. Decoupled Hierarchical Classification Refinement

3.3.1. Pipeline Design

- RoIProposals from ODMC: As in typical two-stage detectors, this module generates RoIs that are likely to contain target objects in an input patch image.

- Regressor from ODMC: For the RoIs produced by the RoIProposals from ODMC module, this module refines the localization box of each RoI. After this bounding-box refinement, we create the input RoI images of HSCN in an upright position.

- Classifiers from ODMC: For the RoIs produced by the RoIProposals from ODMC module, this module produces fine-level predictions over both complete and incomplete categories based on the outputs of multiple classifiers. Based on these predictions, false negative boxes are determined and appended to the input RoI images of HSCN.

- Classifiers from HSCN: This module not only refines the fine-level classification results of the Classifiers from ODMC module but also predicts probability distributions over the categories at each coarse level.
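Coarse-level predictions can be related to fine-level ones through the label hierarchy. As a hypothetical illustration only (this is not the HSCN architecture, and the three-level hierarchy and label names below are invented), the sketch marginalizes a fine-level distribution into the coarse levels by summing the probabilities of each parent's children, then takes the argmax label at every level.

```python
from typing import Dict, Tuple

# Hypothetical three-level hierarchy: fine label -> (coarse2 parent, coarse1 parent).
HIERARCHY: Dict[str, Tuple[str, str]] = {
    "fine_a": ("coarse2_x", "coarse1_p"),
    "fine_b": ("coarse2_x", "coarse1_p"),
    "fine_c": ("coarse2_y", "coarse1_q"),
}


def marginalize(fine_probs: Dict[str, float]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Sum fine-level probabilities into their coarse2 and coarse1 parents."""
    coarse2: Dict[str, float] = {}
    coarse1: Dict[str, float] = {}
    for label, p in fine_probs.items():
        c2, c1 = HIERARCHY[label]
        coarse2[c2] = coarse2.get(c2, 0.0) + p
        coarse1[c1] = coarse1.get(c1, 0.0) + p
    return coarse2, coarse1


def argmax_per_level(fine_probs: Dict[str, float]) -> Tuple[str, str, str]:
    """Return the most probable (coarse1, coarse2, fine) labels."""
    coarse2, coarse1 = marginalize(fine_probs)
    return (max(coarse1, key=coarse1.get),
            max(coarse2, key=coarse2.get),
            max(fine_probs, key=fine_probs.get))


print(argmax_per_level({"fine_a": 0.3, "fine_b": 0.3, "fine_c": 0.4}))
# ('coarse1_p', 'coarse2_x', 'fine_c')
```

The example shows that per-level decisions can disagree: the most likely fine label (`fine_c`) sits under `coarse1_q`, while the summed coarse probabilities favor `coarse1_p`. This is worth keeping in mind when comparing fine-only outputs with separate coarse-level predictions such as those of HSCN.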

3.3.2. Training

3.3.3. Inference

4. Experiments

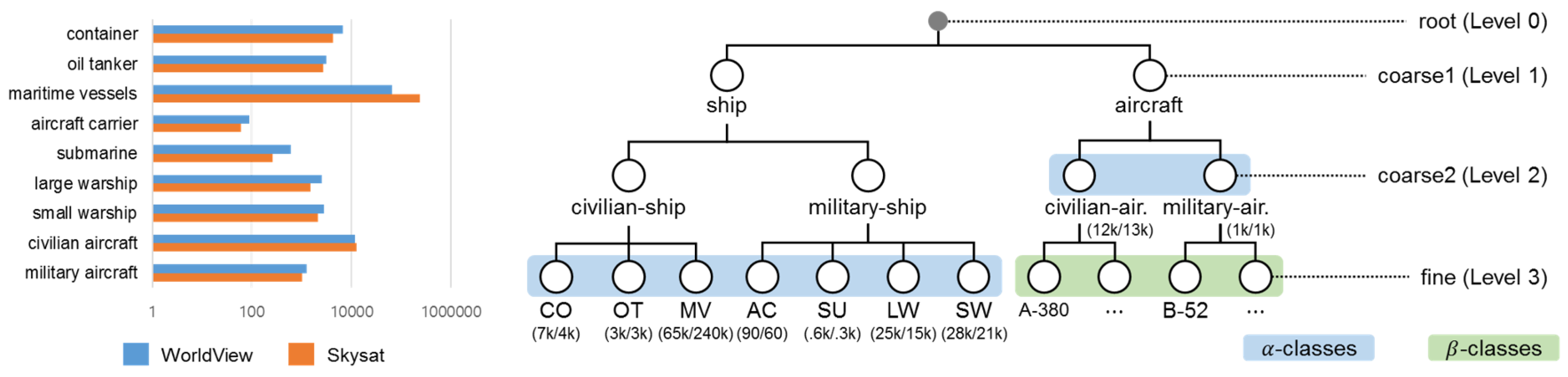

4.1. Dataset Description

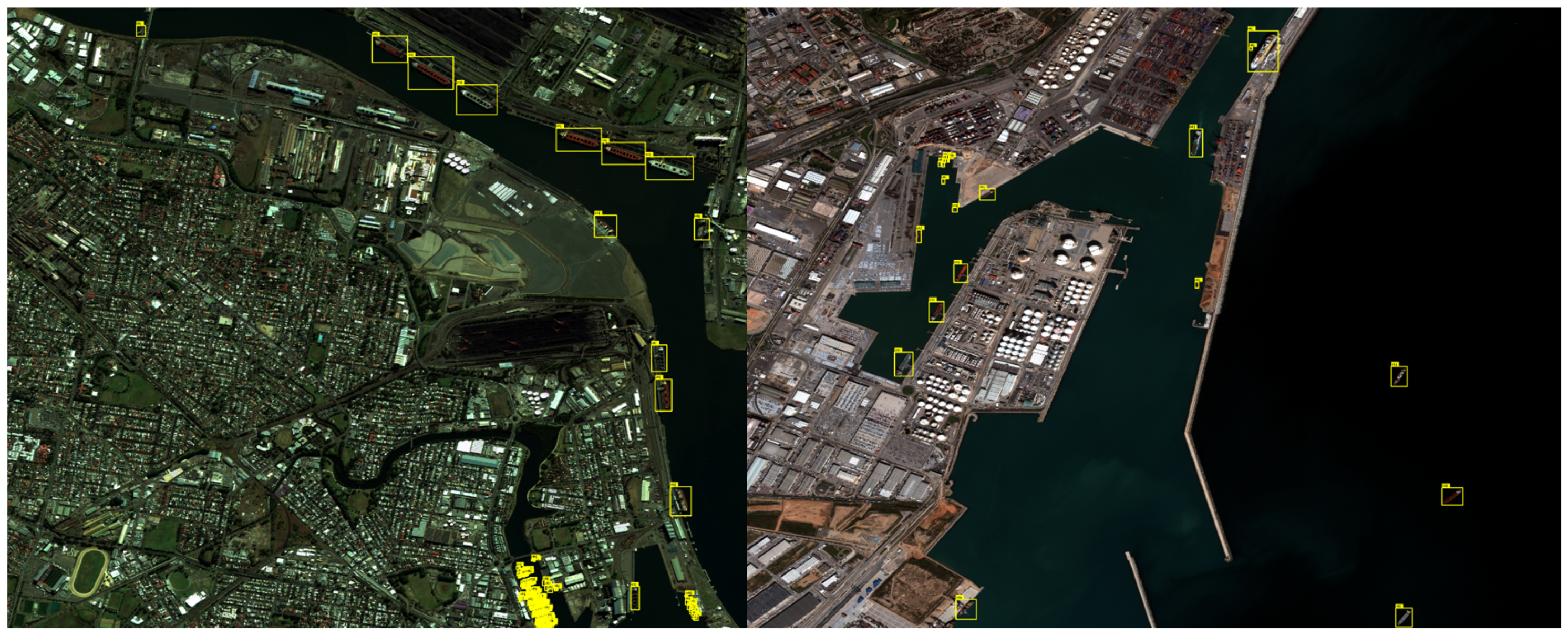

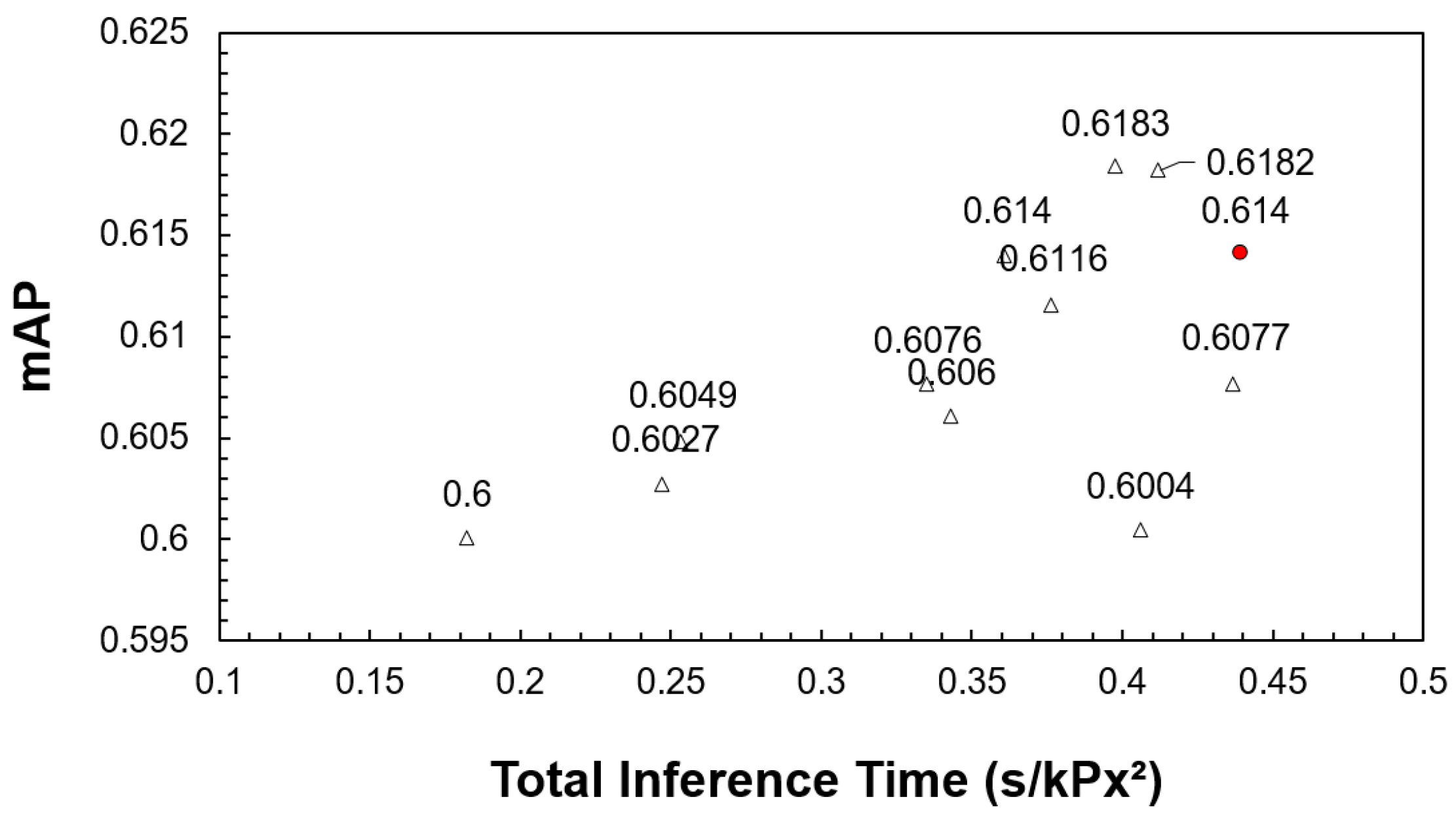

4.2. Experiments on Clustering-Guided Cropping Strategy

4.2.1. Implementation Details

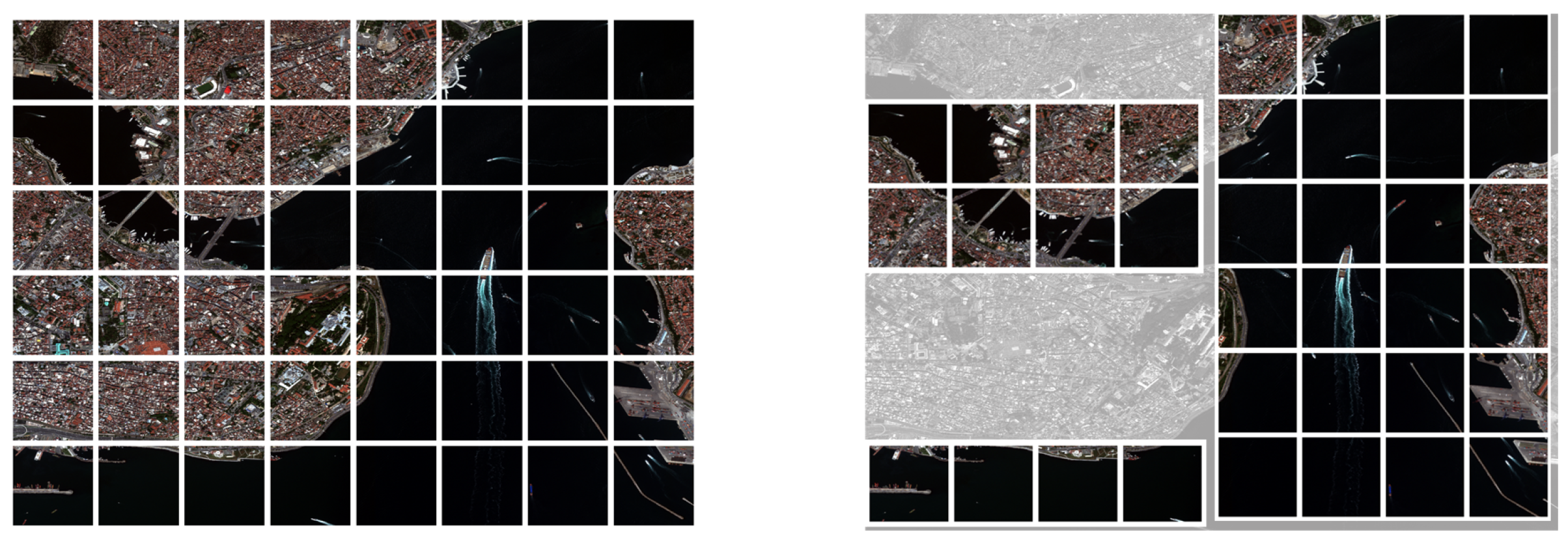

4.2.2. Qualitative Results

4.2.3. Sensitivity Analysis

- Number of patches to be inferred: For uniform cropping, we count the number of patches created from the whole images, while for clustering-guided cropping, we count the number of patches created from the cluster crops.

- Number of groundtruth objects: the number of groundtruth objects contained in the output cluster crops. With uniform cropping, no groundtruth objects are lost unless they are truncated.

- Total inference time (s/img): the average running time per image from the beginning of the object detection framework until the end.

- Average of mean Average Precision (Avg. of mAP) (%): detection performance over the incomplete categories. mAP is a standard metric for evaluating object detection and is computed as the mean of AP over all categories, where AP is the average precision over recall values from 0 to 1 (the area under the precision-recall curve). Precision is the fraction of detections that are true positives, and recall is the fraction of groundtruth positives that are correctly detected. A higher mAP therefore indicates better performance.
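The AP and mAP definitions above can be made concrete with a short sketch. It assumes detections have already been matched to groundtruth (so each one is flagged as a true or false positive) and uses all-point interpolation as in PASCAL VOC 2010 and later; the paper does not state its interpolation scheme, so this is one common choice rather than the authors' evaluation code. `average_precision` and `mean_ap` are hypothetical names.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one category.

    `is_tp[i]` says whether detection i matched a not-yet-matched groundtruth
    object; the IoU matching itself is assumed to be done beforehand.
    """
    if num_gt == 0:
        return 0.0  # convention chosen here; evaluation toolkits differ
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precision: List[float] = []
    recall: List[float] = []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))  # fraction of detections that are TP
        recall.append(tp / num_gt)        # fraction of positives found
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    # Sum precision over each recall increment (area under the PR curve).
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP: the mean of AP over all categories."""
    return sum(per_class_ap) / len(per_class_ap)


ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```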

4.3. Experiments on Decoupled Hierarchical Classification Refinement

4.3.1. Baselines

4.3.2. Implementation Details

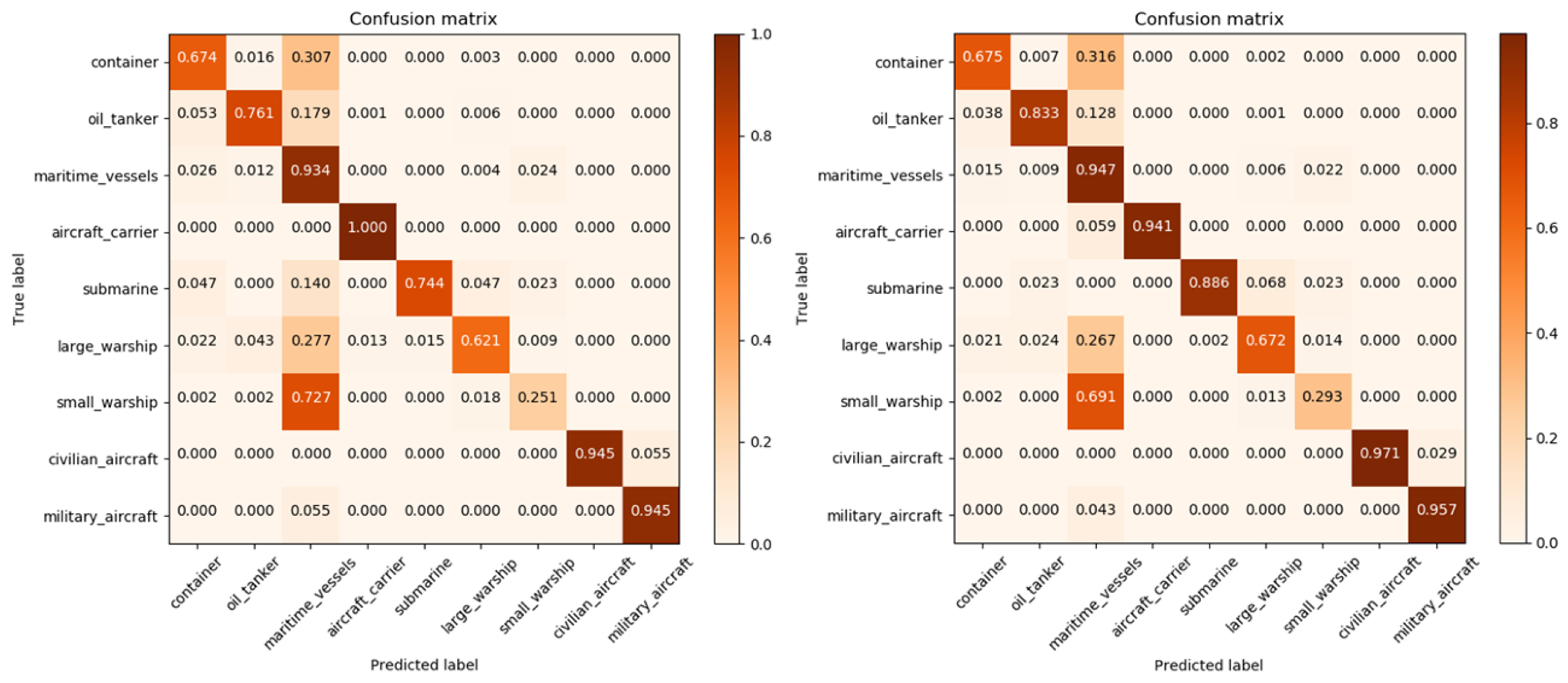

4.3.3. Quantitative Results

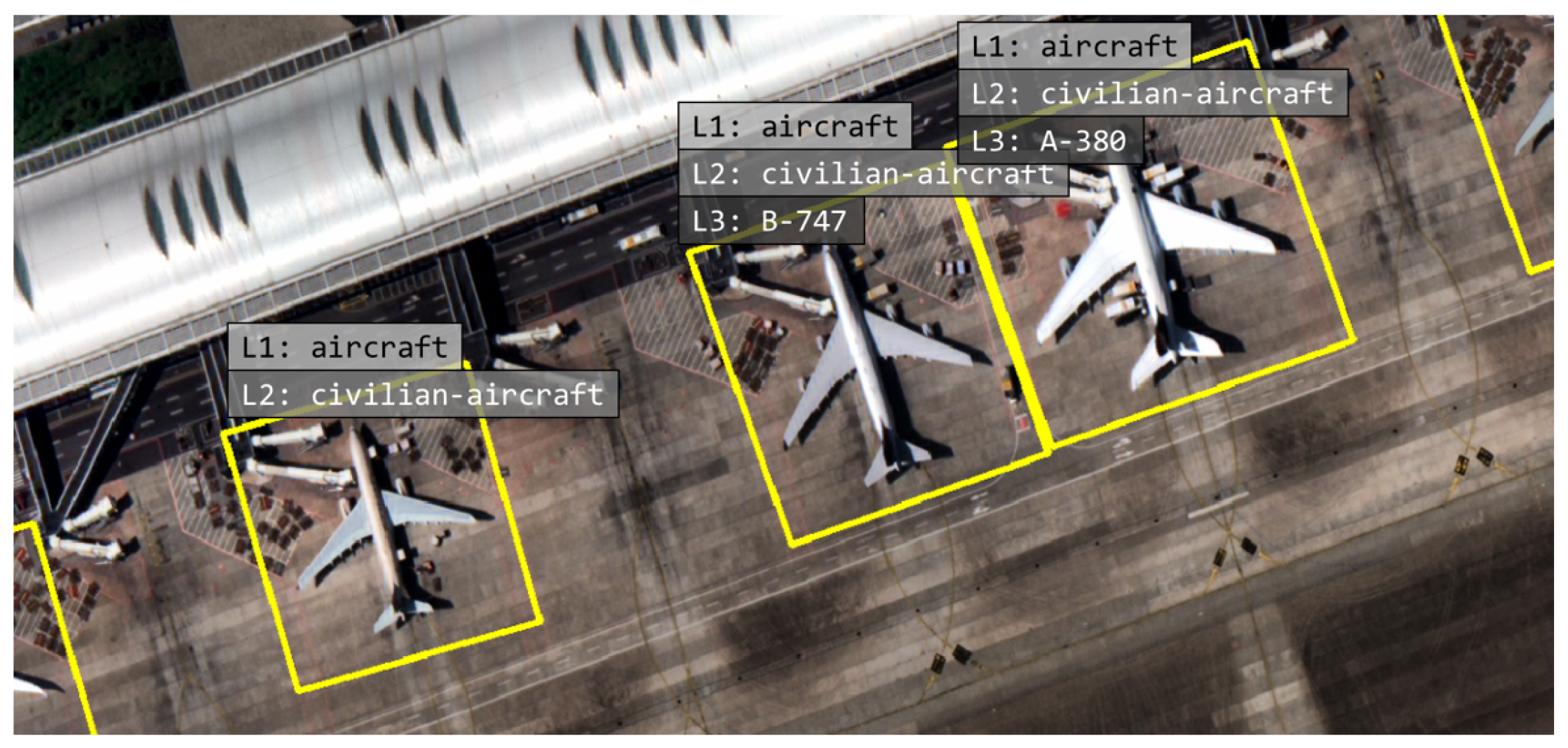

4.3.4. Qualitative Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Ahmad, K.; Pogorelov, K.; Riegler, M.; Ostroukhova, O.; Halvorsen, P.; Conci, N.; Dahyot, R. Automatic detection of passable roads after floods in remote sensed and social media data. Signal Process. Image Commun. 2019, 74, 110–118.

- Fu, K.; Li, Y.; Sun, H.; Yang, X.; Xu, G.; Li, Y.; Sun, X. A ship rotation detection model in remote sensing images based on feature fusion pyramid network and deep reinforcement learning. Remote Sens. 2018, 10, 1922.

- Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of fir trees (Abies sibirica) damaged by the bark beetle in unmanned aerial vehicle images with deep learning. Remote Sens. 2019, 11, 643.

- Tuermer, S.; Kurz, F.; Reinartz, P.; Stilla, U. Airborne vehicle detection in dense urban areas using HoG features and disparity maps. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2327–2337.

- Zhong, J.; Lei, T.; Yao, G. Robust vehicle detection in aerial images based on cascaded convolutional neural networks. Sensors 2017, 17, 2720.

- Munir, N.; Awrangjeb, M.; Stantic, B. An Automated Method for Individual Wire Extraction from Power Line Corridor using LiDAR Data. In Proceedings of the 2019 Digital Image Computing: Techniques and Applications (DICTA), Perth, Australia, 2–4 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8.

- Awrangjeb, M.; Siddiqui, F.U. A new mask for automatic building detection from high density point cloud data and multispectral imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 89.

- Zhang, F.; Du, B.; Zhang, L.; Xu, M. Weakly supervised learning based on coupled convolutional neural networks for aircraft detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5553–5563.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.

- Liu, Z.; Wang, H.; Weng, L.; Yang, Y. Ship rotated bounding box space for ship extraction from high-resolution optical satellite images with complex backgrounds. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1074–1078.

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- DigitalGlobe. Available online: http://www.digitalglobe.com/ (accessed on 1 July 2020).

- Planet Labs. Available online: https://www.planet.com/ (accessed on 1 July 2020).

- Lin, Q.; Zhao, J.; Tong, Q.; Zhang, G.; Yuan, Z.; Fu, G. Cropping Region Proposal Network Based Framework for Efficient Object Detection on Large Scale Remote Sensing Images. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1534–1539.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered object detection in aerial images. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8311–8320.

- Yan, Z.; Zhang, H.; Piramuthu, R.; Jagadeesh, V.; DeCoste, D.; Di, W.; Yu, Y. HD-CNN: Hierarchical deep convolutional neural networks for large scale visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2740–2748.

- Ouyang, W.; Wang, X.; Zhang, C.; Yang, X. Factors in Finetuning deep model for object detection with long-tail distribution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 864–873.

- Cheng, B.; Wei, Y.; Shi, H.; Feris, R.; Xiong, J.; Huang, T. Revisiting rcnn: On awakening the classification power of faster rcnn. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 453–468.

- Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep learning approach for car detection in UAV imagery. Remote Sens. 2017, 9, 312.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368.

- Xu, Y.; Zhu, M.; Li, S.; Feng, H.; Ma, S.; Che, J. End-to-end airport detection in remote sensing images combining cascade region proposal networks and multi-threshold detection networks. Remote Sens. 2018, 10, 1516.

- Chen, F.; Ren, R.; Van de Voorde, T.; Xu, W.; Zhou, G.; Zhou, Y. Fast automatic airport detection in remote sensing images using convolutional neural networks. Remote Sens. 2018, 10, 443.

- Yang, X.; Sun, H.; Fu, K.; Yang, J.; Sun, X.; Yan, M.; Guo, Z. Automatic ship detection in remote sensing images from google earth of complex scenes based on multiscale rotation dense feature pyramid networks. Remote Sens. 2018, 10, 132.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Chen, Z.; Zhang, T.; Ouyang, C. End-to-end airplane detection using transfer learning in remote sensing images. Remote Sens. 2018, 10, 139.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep learning based oil palm tree detection and counting for high-resolution remote sensing images. Remote Sens. 2017, 9, 22.

- Pang, J.; Li, C.; Shi, J.; Xu, Z.; Feng, H. R2-CNN: Fast Tiny Object Detection in Large-scale Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5512–5524.

- Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density Map Guided Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 190–191.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Zhu, X.; Bain, M. B-CNN: Branch convolutional neural network for hierarchical classification. arXiv 2017, arXiv:1709.09890.

- Parag, T.; Wang, H. Multilayer Dense Connections for Hierarchical Concept Classification. arXiv 2020, arXiv:2003.09015.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Hussain, T.; Muhammad, K.; Del Ser, J.; Baik, S.W.; de Albuquerque, V.H.C. Intelligent Embedded Vision for Summarization of Multiview Videos in IIoT. IEEE Trans. Ind. Informatics 2019, 16, 2592–2602.

- Hussain, T.; Muhammad, K.; Ullah, A.; Cao, Z.; Baik, S.W.; de Albuquerque, V.H.C. Cloud-assisted multiview video summarization using CNN and bidirectional LSTM. IEEE Trans. Ind. Informatics 2019, 16, 77–86.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010.

- Tan, J.; Wang, C.; Li, B.; Li, Q.; Ouyang, W.; Yin, C.; Yan, J. Equalization Loss for Long-Tailed Object Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11662–11671.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

- Yang, X.; Sun, H.; Sun, X.; Yan, M.; Guo, Z.; Fu, K. Position detection and direction prediction for arbitrary-oriented ships via multitask rotation region convolutional neural network. IEEE Access 2018, 6, 50839–50849.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 2849–2858.

- Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122.

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2cnn: Rotational region cnn for orientation robust scene text detection. arXiv 2017, arXiv:1706.09579.

- Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. Scrdet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 8232–8241.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Koo, J.; Seo, J.; Jeon, S.; Choe, J.; Jeon, T. RBox-CNN: Rotated bounding box based CNN for ship detection in remote sensing image. In Proceedings of the 26th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 6–9 November 2018; pp. 420–423.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

| Raw Input Size (M) before Down-Scaling | # Patches to Be Inferred | # Groundtruth Objects | Total Inference Time (s/img) | Avg. of mAP (%) |
|---|---|---|---|---|
| Uniform Cropping | 47,510 | 24,371 | 64.07 | 61.40 |
| 3000 × 3000 | 27,803 | 22,634 | 45.46 | 60.10 |
| 5000 × 5000 | 24,477 | 21,511 | 40.42 | 59.28 |
| 7000 × 7000 | 23,331 | 21,165 | 38.76 | 59.03 |
| 9000 × 9000 | 18,346 | 19,584 | 31.33 | 57.41 |

(a) Sensitivity analysis varying the raw patch sizes before down-scaling to a fixed input size of 1024 when training and testing RPN.
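As a worked example computed from the table above, the 3000 × 3000 setting can be compared with uniform cropping:

$$
1 - \frac{27{,}803}{47{,}510} \approx 0.415, \qquad 1 - \frac{45.46}{64.07} \approx 0.290, \qquad 61.40 - 60.10 = 1.30,
$$

that is, roughly 41.5% fewer patches to infer and 29.0% less total inference time per image, at a cost of 1.30 percentage points in Avg. of mAP.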

| Confidence Threshold of RPN | # Patches to Be Inferred | # Groundtruth Objects | Total Inference Time (s/img) | Avg. of mAP (%) |
|---|---|---|---|---|
| Uniform Cropping | 47,510 | 24,371 | 64.07 | 61.40 |
| 0.980 | 25,957 | 21,928 | 42.38 | 59.49 |
| 0.985 | 24,295 | 21,672 | 40.03 | 59.21 |
| 0.990 | 22,399 | 20,616 | 37.48 | 58.50 |
| 0.995 | 19,440 | 20,294 | 33.40 | 58.08 |

(b) Sensitivity analysis varying the RPN confidence threshold; only objects detected with a confidence score higher than the threshold are clustered.

| Padding Size of Cluster Crops | # Patches to Be Inferred | # Groundtruth Objects | Total Inference Time (s/img) | Avg. of mAP (%) |
|---|---|---|---|---|
| Uniform Cropping | 47,510 | 24,371 | 64.07 | 61.40 |
| 300 | 19,075 | 21,127 | 33.05 | 58.53 |
| 600 | 22,760 | 21,127 | 37.89 | 58.56 |
| 900 | 27,231 | 21,127 | 44.04 | 59.36 |

(c) Sensitivity analysis varying the padding size added outside the cluster-crop border.

Columns are grouped by label level: L1 (Coarse1 Label), L2 (Coarse2 Label), and L3 (Fine Label).

| Base Detector | +DHCR | L1 S | L1 A | L1 mAP | L2 CS | L2 MS | L2 CA | L2 MA | L2 mAP | L3 CO | L3 OT | L3 MV | L3 AC | L3 SU | L3 LW | L3 SW | L3 CA | L3 CA | L3 MA | L3 MA | L3 mAP† | L3 mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RRPN [43] | ✗ | 52.23 | 74.92 | 63.58 | 62.72 | 41.12 | 70.13 | 43.74 | 54.43 | 65.38 | 53.56 | 52.81 | 32.94 | 45.68 | 35.01 | 29.94 | - | - | - | - | 45.05 | - |
| R2CNN [44] | ✗ | 53.43 | 74.91 | 64.17 | 69.36 | 42.13 | 81.93 | 33.06 | 56.62 | 70.58 | 56.94 | 59.04 | 33.17 | 46.26 | 39.13 | 30.81 | - | - | - | - | 47.99 | - |
| SCRDet [45] | ✗ | 54.77 | 74.71 | 64.74 | 69.91 | 42.95 | 84.54 | 35.67 | 58.27 | 71.26 | 55.62 | 60.99 | 34.99 | 47.22 | 40.41 | 28.13 | - | - | - | - | 48.37 | - |
| RBox-CNN [47] | ✗ | 64.57 | 74.48 | 69.53 | 72.12 | 44.24 | 80.47 | 50.83 | 61.92 | 70.35 | 75.37 | 61.37 | 34.04 | 50.88 | 40.05 | 34.24 | - | - | - | - | 52.33 | - |
| RetinaNet [46] | ✗ | 51.20 | 73.79 | 62.50 | 61.57 | 41.17 | 69.06 | 42.02 | 53.46 | 64.50 | 52.75 | 51.04 | 33.75 | 43.77 | 38.51 | 28.71 | - | - | - | - | 44.72 | - |
| RRPN [43] | ✔ | 61.80 | 70.40 | 66.10 | 69.80 | 43.94 | 72.18 | 35.90 | 55.46 | 77.16 | 57.85 | 58.77 | 37.45 | 55.68 | 48.17 | 39.94 | 77.88 | 55.12 | 25.22 | 40.29 | 53.57 | 52.14 |
| R2CNN [44] | ✔ | 69.75 | 76.27 | 73.01 | 76.68 | 48.96 | 75.89 | 40.78 | 60.58 | 85.48 | 69.20 | 55.75 | 39.57 | 56.26 | 59.76 | 40.81 | 77.86 | 75.52 | 20.22 | 50.00 | 58.12 | 57.31 |
| SCRDet [45] | ✔ | 71.79 | 77.70 | 74.75 | 80.06 | 46.56 | 83.66 | 36.75 | 61.76 | 85.97 | 72.32 | 58.82 | 40.56 | 57.22 | 54.74 | 38.13 | 89.29 | 76.57 | 16.88 | 45.68 | 58.25 | 57.83 |
| RBox-CNN [47] | ✔ | 81.63 | 80.67 | 81.15 | 89.93 | 51.95 | 83.28 | 51.90 | 69.27 | 93.13 | 84.28 | 60.15 | 41.65 | 60.88 | 58.80 | 44.24 | 87.14 | 70.01 | 17.98 | 68.45 | 63.30 | 62.43 |
| RetinaNet [46] | ✔ | 60.45 | 69.74 | 65.10 | 68.51 | 43.66 | 71.33 | 34.12 | 54.41 | 76.90 | 56.86 | 52.55 | 38.93 | 53.77 | 47.34 | 38.71 | 86.41 | 54.53 | 24.83 | 35.27 | 52.15 | 51.46 |

Columns are grouped by label level: L1 (Coarse1 Label), L2 (Coarse2 Label), and L3 (Fine Label).

| Base Detector | +DHCR | L1 S | L1 A | L1 mAP | L2 CS | L2 MS | L2 CA | L2 MA | L2 mAP | L3 CO | L3 OT | L3 MV | L3 AC | L3 SU | L3 LW | L3 SW | L3 CA | L3 CA | L3 MA | L3 MA | L3 mAP† | L3 mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RRPN [43] | ✗ | 42.40 | 50.01 | 46.21 | 43.29 | 40.04 | 65.20 | 30.71 | 44.81 | 48.68 | 30.09 | 32.11 | 51.57 | 32.02 | 45.54 | 28.87 | - | - | - | - | 38.41 | - |
| R2CNN [44] | ✗ | 50.32 | 54.51 | 52.42 | 50.55 | 42.75 | 64.73 | 40.98 | 49.75 | 68.90 | 36.84 | 37.76 | 53.25 | 38.57 | 46.56 | 30.50 | - | - | - | - | 44.63 | - |
| SCRDet [45] | ✗ | 49.81 | 60.14 | 54.98 | 53.44 | 40.04 | 67.17 | 43.67 | 51.08 | 68.05 | 34.68 | 40.88 | 50.59 | 40.27 | 48.88 | 31.05 | - | - | - | - | 44.91 | - |
| RBox-CNN [47] | ✗ | 55.75 | 70.24 | 63.00 | 60.05 | 43.99 | 80.43 | 45.07 | 57.39 | 85.45 | 45.12 | 45.46 | 55.11 | 41.05 | 53.21 | 33.32 | - | - | - | - | 51.25 | - |
| RetinaNet [46] | ✗ | 42.23 | 51.78 | 47.01 | 45.27 | 41.12 | 66.85 | 30.85 | 46.02 | 50.66 | 31.11 | 33.57 | 50.99 | 37.76 | 45.05 | 29.05 | - | - | - | - | 39.74 | - |
| RRPN [43] | ✔ | 44.26 | 55.41 | 49.84 | 48.97 | 40.25 | 65.43 | 31.78 | 46.61 | 59.12 | 33.40 | 36.99 | 56.93 | 43.16 | 47.72 | 30.29 | 71.69 | 41.47 | 37.63 | 6.43 | 43.94 | 42.26 |
| R2CNN [44] | ✔ | 51.83 | 62.92 | 57.38 | 53.85 | 48.97 | 73.99 | 35.76 | 53.14 | 80.63 | 51.29 | 41.82 | 56.19 | 44.24 | 49.07 | 31.85 | 83.96 | 42.12 | 47.63 | 19.51 | 50.73 | 49.85 |
| SCRDet [45] | ✔ | 55.43 | 64.23 | 59.83 | 58.70 | 44.70 | 75.23 | 34.14 | 53.19 | 81.66 | 52.76 | 44.76 | 55.71 | 46.12 | 50.88 | 33.45 | 80.89 | 35.93 | 53.25 | 20.81 | 52.19 | 50.57 |
| RBox-CNN [47] | ✔ | 61.26 | 70.85 | 66.06 | 62.97 | 50.14 | 80.29 | 40.55 | 58.49 | 90.40 | 60.32 | 45.86 | 60.12 | 47.27 | 55.63 | 34.76 | 85.79 | 35.14 | 55.21 | 23.83 | 56.34 | 54.03 |
| RetinaNet [46] | ✔ | 45.23 | 57.97 | 51.60 | 49.32 | 42.78 | 70.81 | 35.40 | 49.58 | 60.59 | 35.00 | 40.17 | 56.51 | 43.24 | 46.32 | 30.81 | 72.45 | 35.19 | 40.78 | 15.66 | 44.66 | 43.34 |

| Raw Input Size (M) before Down-Scaling | RPN TP Rate (%) | RPN FN Rate (%) | RPN mAP (%) | Training Time (h) | Inference Time (s/img) |
|---|---|---|---|---|---|
| 3000 × 3000 | 51.14 | 48.86 | 31.09 | 218.00 | 45.46 |
| 5000 × 5000 | 36.52 | 63.48 | 26.68 | 208.07 | 40.42 |
| 7000 × 7000 | 35.47 | 64.53 | 24.58 | 195.35 | 38.76 |
| 9000 × 9000 | 29.04 | 70.96 | 21.08 | 176.12 | 31.33 |
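To illustrate the effect of down-scaling, write M for the raw side length and assume that the fixed RPN input size of 1024 refers to the side of a square input, so the scale factor is s = 1024/M. A hypothetical object 30 pixels across in the raw image then shrinks to

$$
30 \cdot \frac{1024}{3000} \approx 10.2, \qquad 30 \cdot \frac{1024}{5000} \approx 6.1, \qquad 30 \cdot \frac{1024}{7000} \approx 4.4, \qquad 30 \cdot \frac{1024}{9000} \approx 3.4 \ \text{pixels}.
$$

Smaller objects after down-scaling are consistent with the lower RPN TP rates at larger raw sizes in the table above, although the table alone does not isolate this as the cause.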

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shin, S.-J.; Kim, S.; Kim, Y.; Kim, S. Hierarchical Multi-Label Object Detection Framework for Remote Sensing Images. Remote Sens. 2020, 12, 2734. https://doi.org/10.3390/rs12172734