Performance Analysis of Deep Convolutional Autoencoders with Different Patch Sizes for Change Detection from Burnt Areas

Abstract

1. Introduction

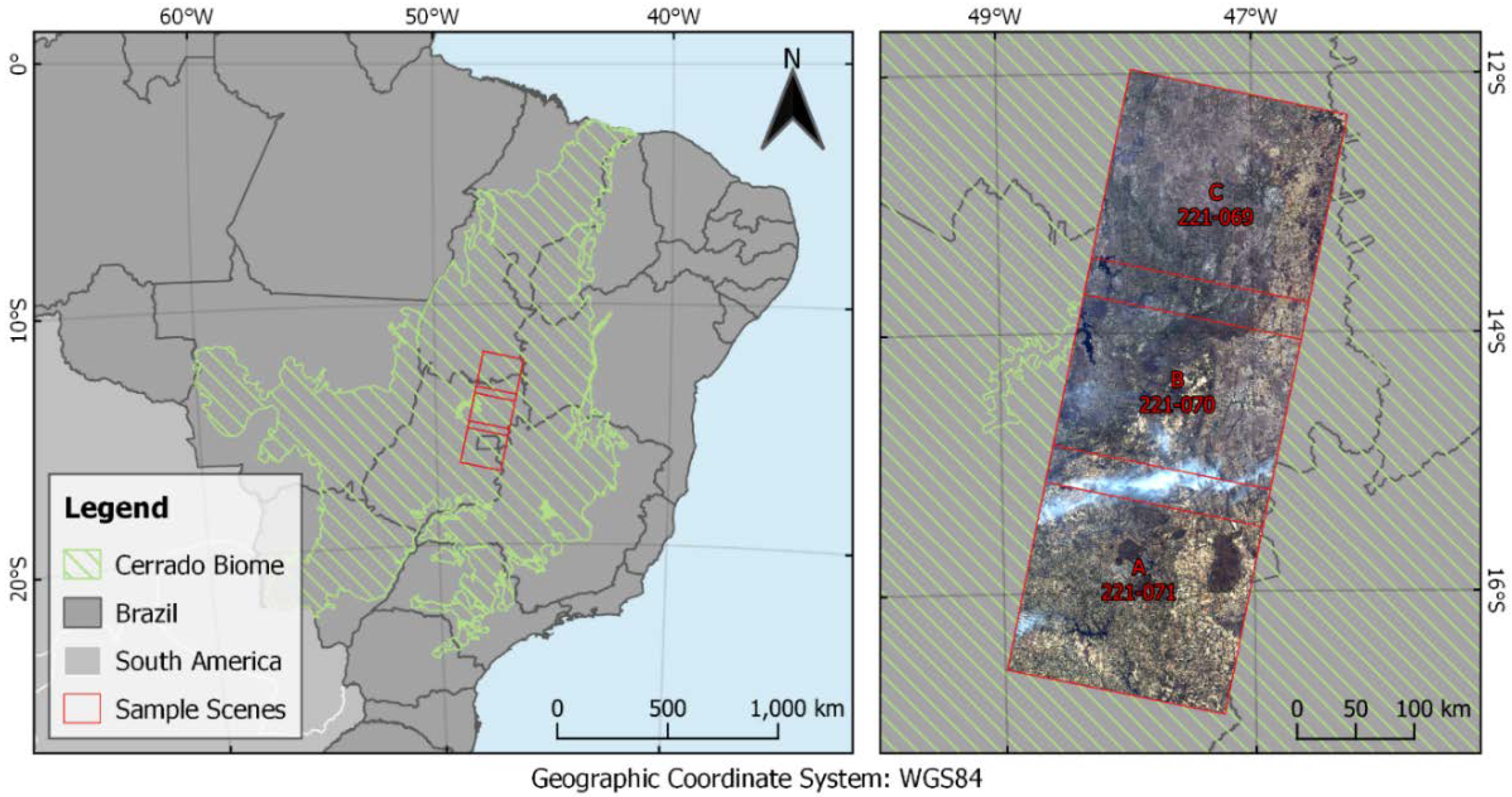

2. Methodology

2.1. Landsat Data

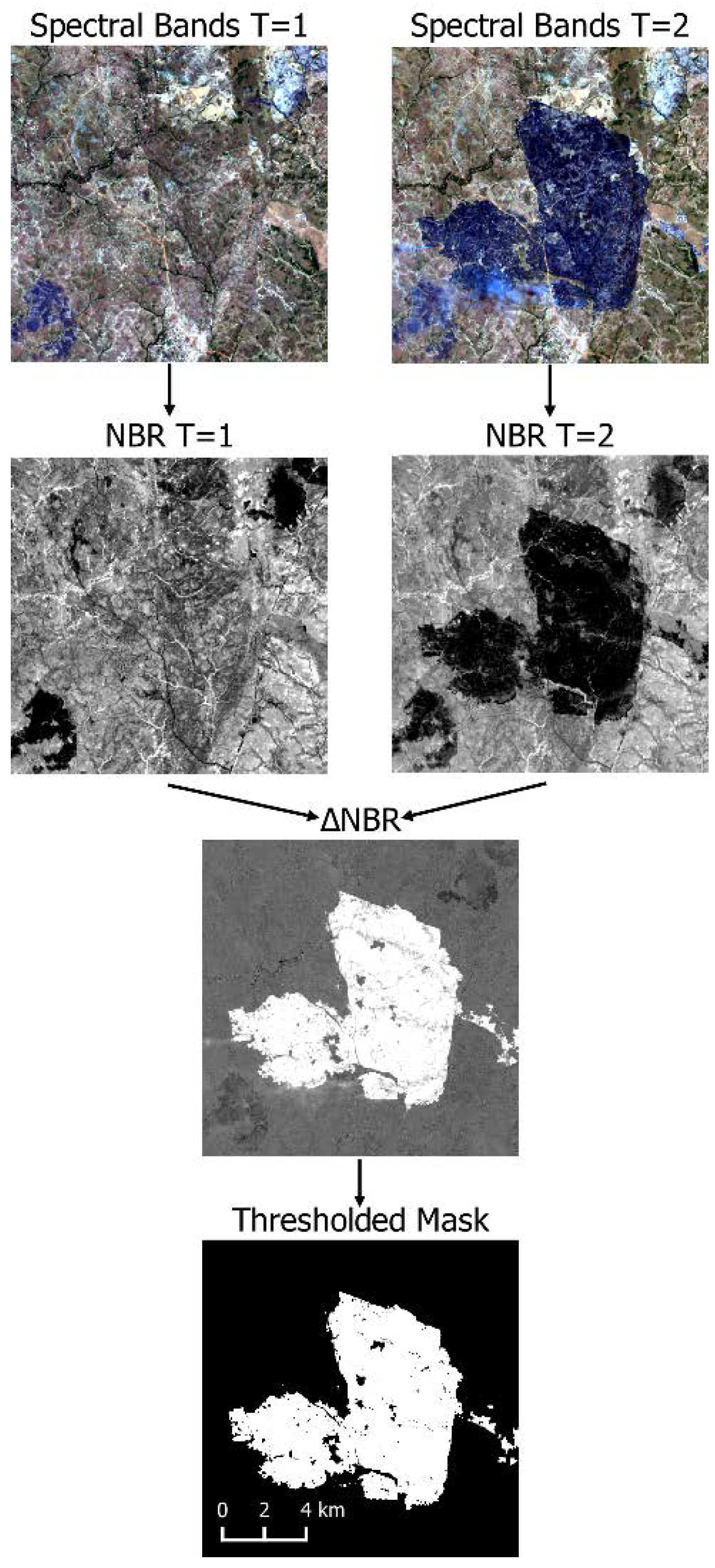

2.2. Burnt Area Change Mask

2.3. Data Structure

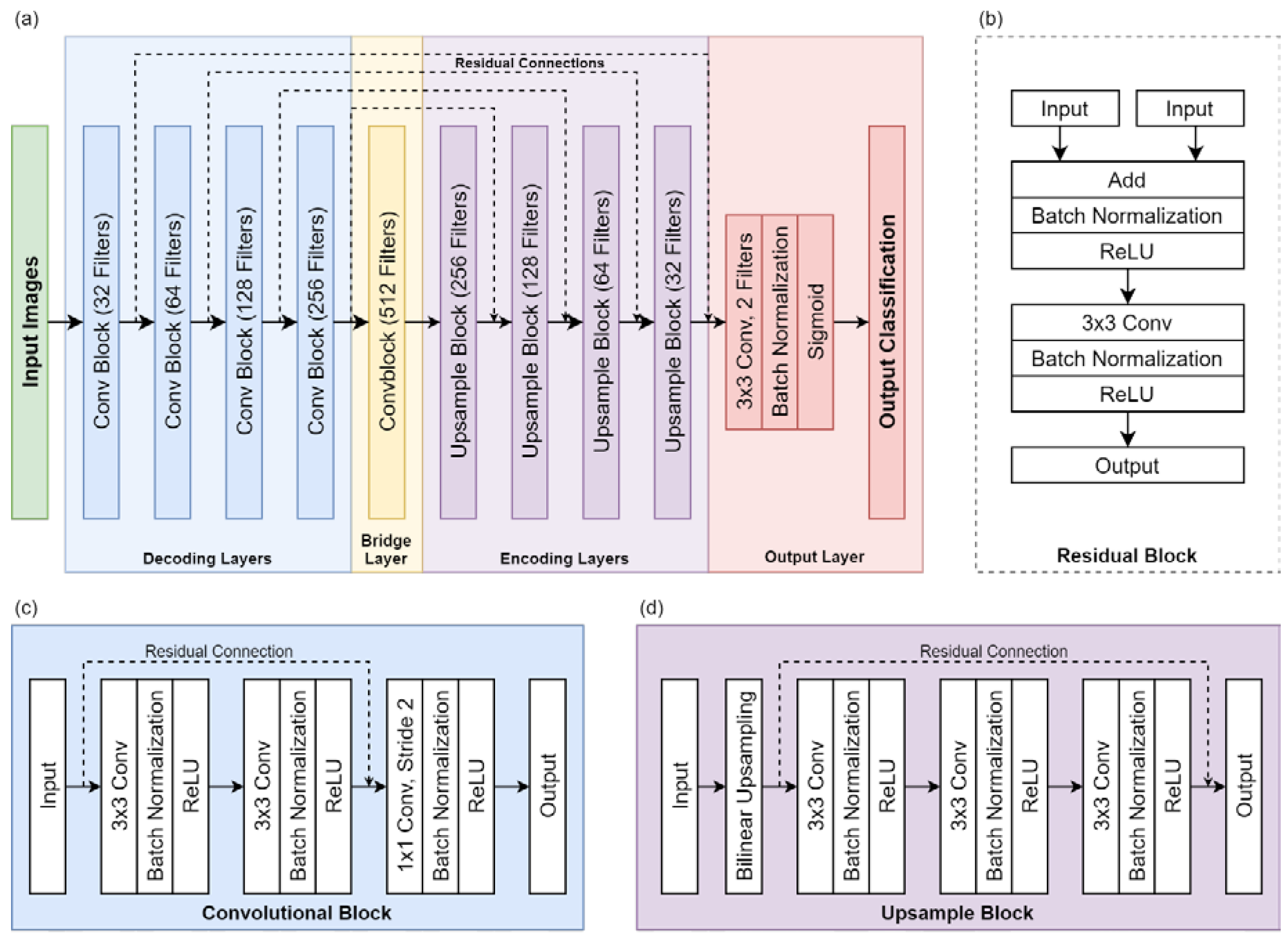

2.4. Deep Learning Models

2.5. Model Training

2.6. Model Evaluation

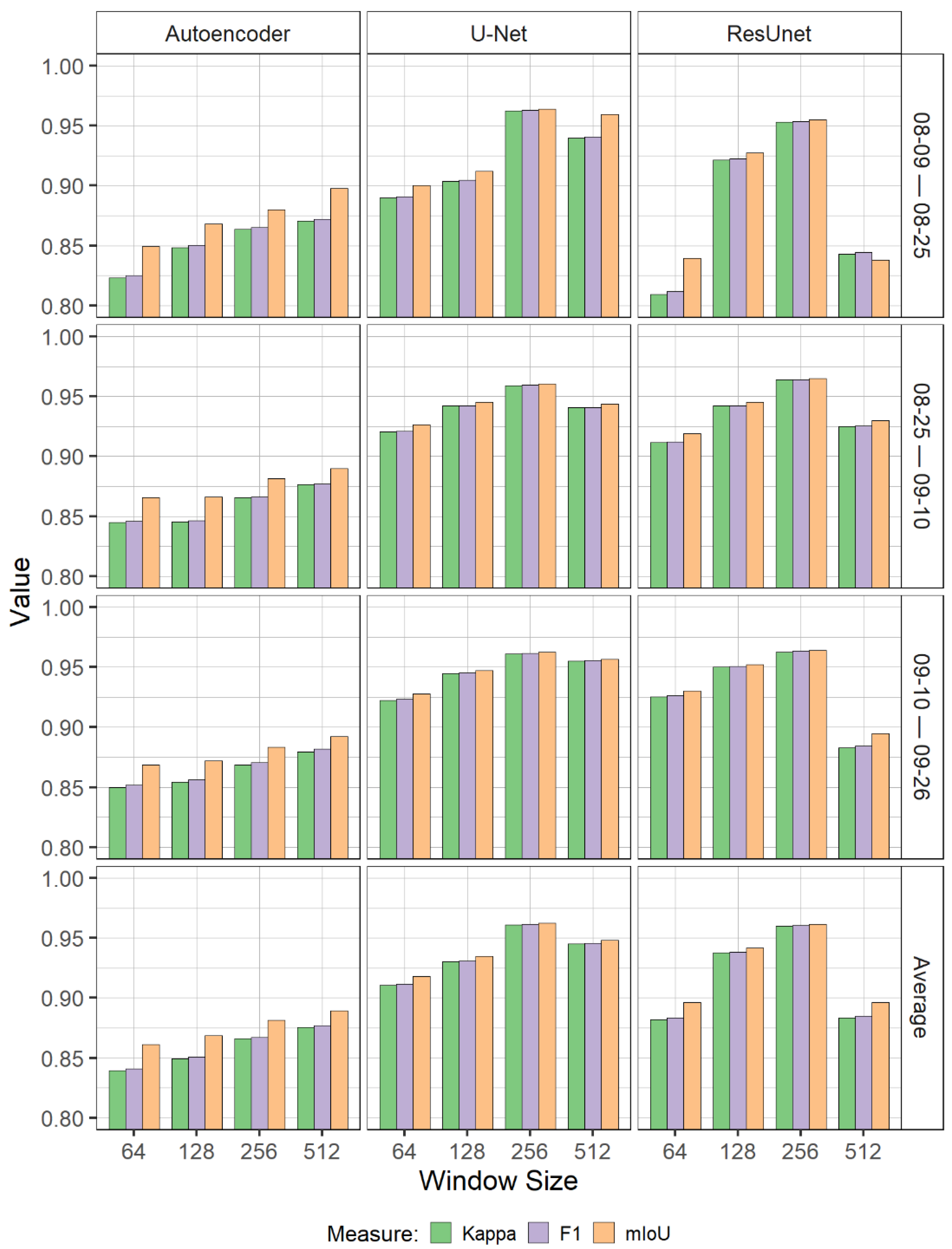

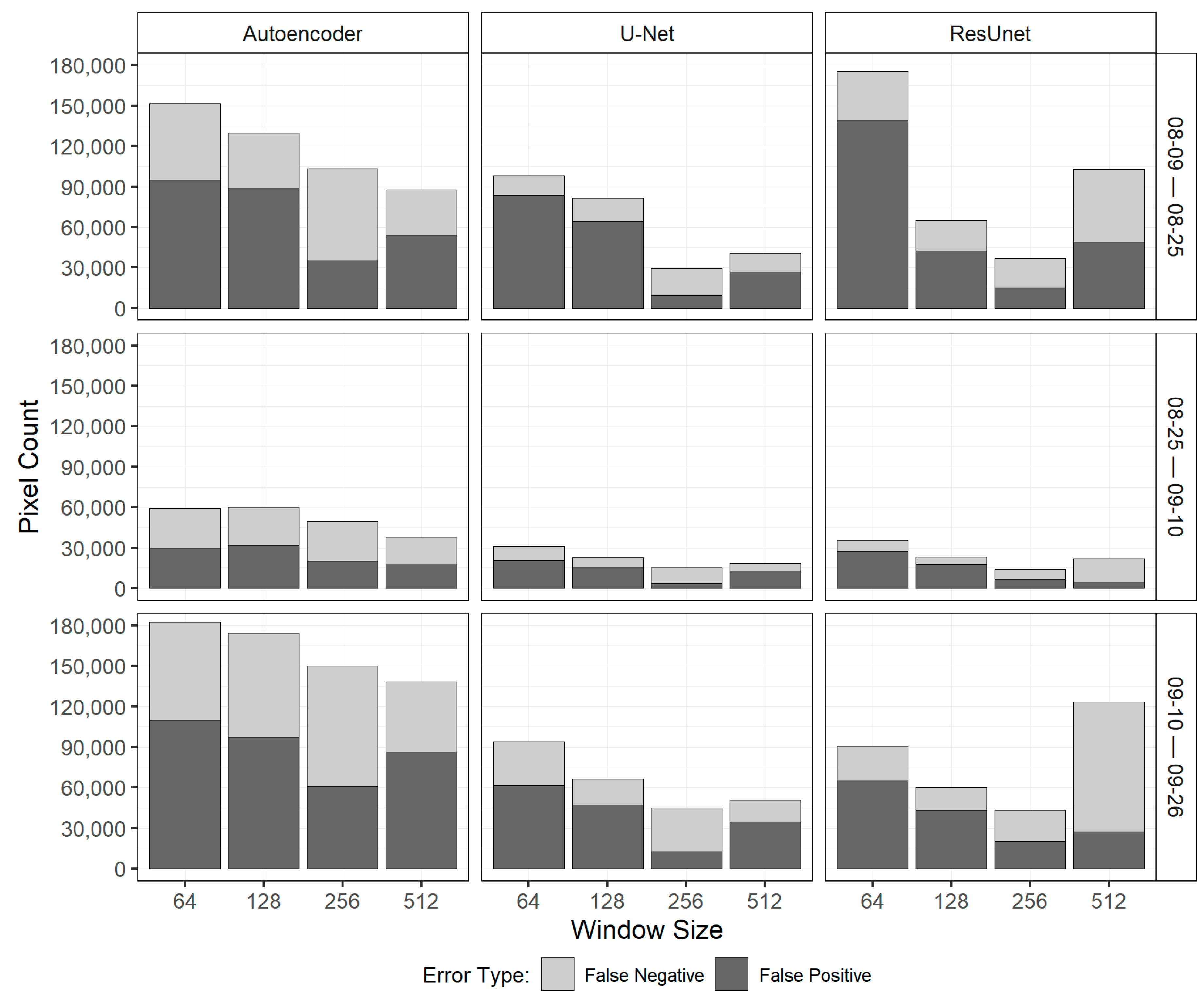

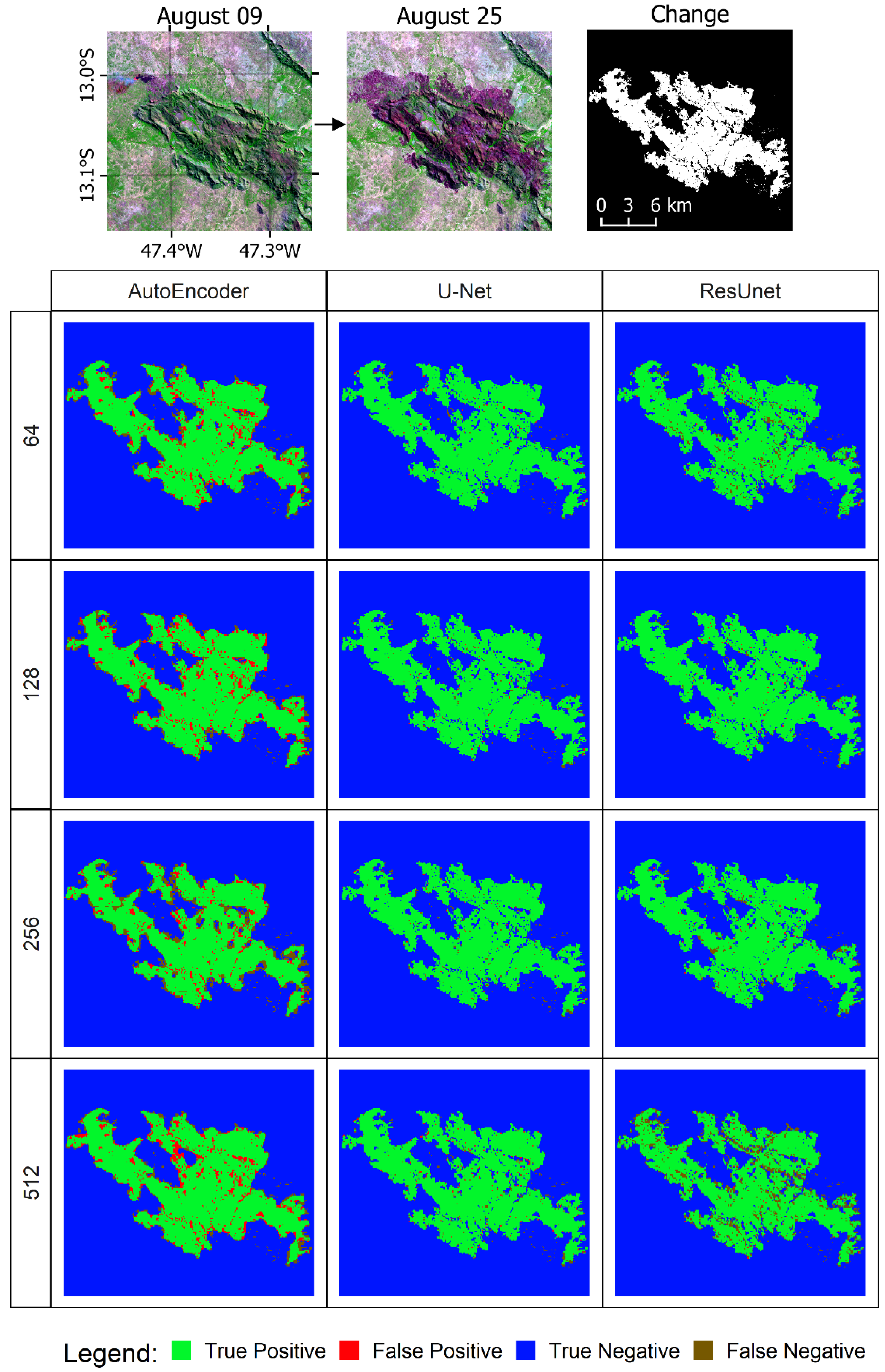

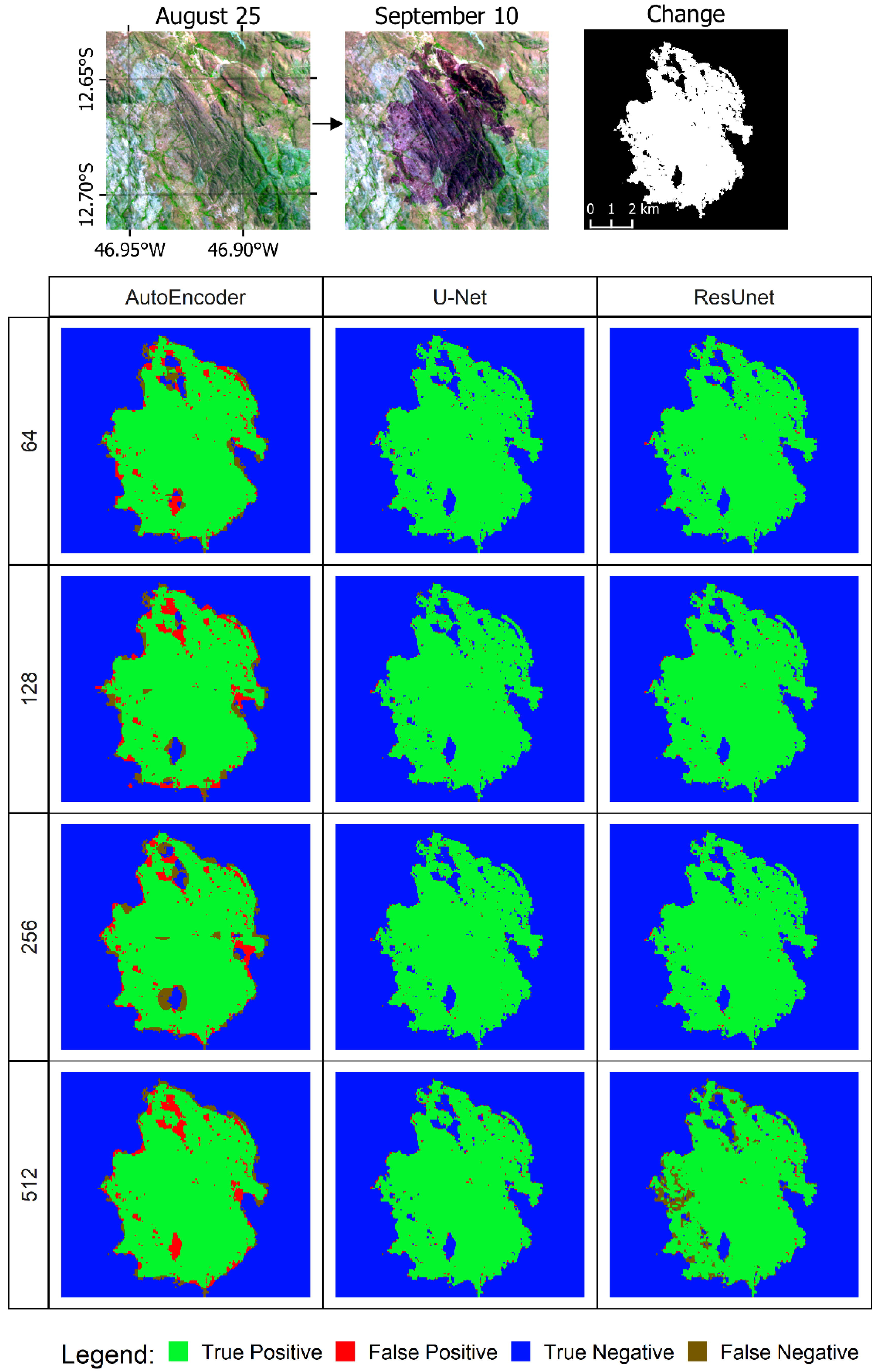

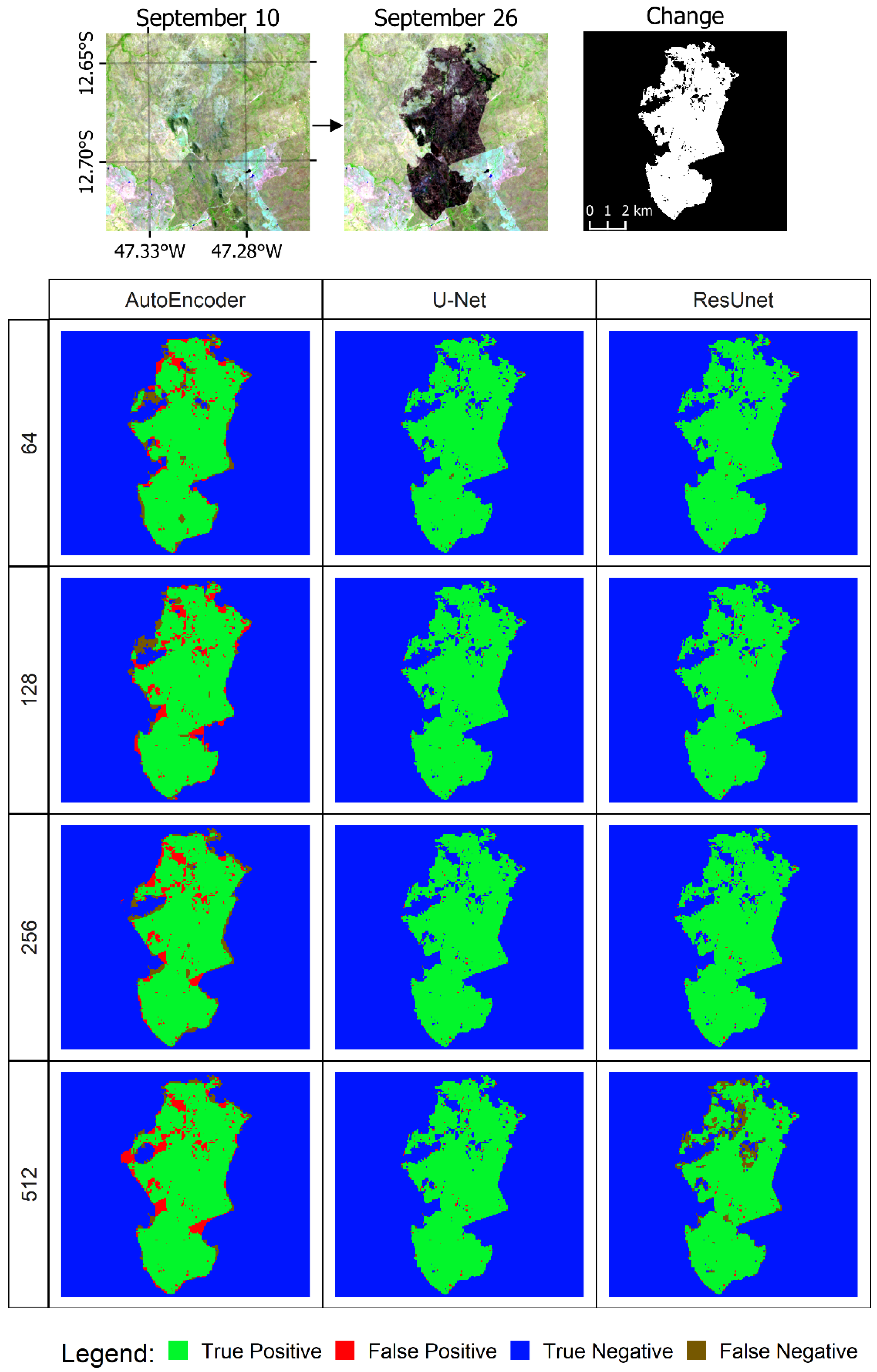

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Model | Kappa (08-09 to 08-25) | F1 (08-09 to 08-25) | mIoU (08-09 to 08-25) | Kappa (08-25 to 09-10) | F1 (08-25 to 09-10) | mIoU (08-25 to 09-10) | Kappa (09-10 to 09-26) | F1 (09-10 to 09-26) | mIoU (09-10 to 09-26) | Kappa (Average) | F1 (Average) | mIoU (Average) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Autoencoder64 | 0.823 | 0.825 | 0.849 | 0.844 | 0.845 | 0.865 | 0.849 | 0.851 | 0.868 | 0.839 | 0.840 | 0.861 |
| Autoencoder128 | 0.848 | 0.850 | 0.868 | 0.845 | 0.846 | 0.865 | 0.854 | 0.856 | 0.872 | 0.849 | 0.850 | 0.868 |
| Autoencoder256 | 0.863 | 0.865 | 0.879 | 0.865 | 0.866 | 0.881 | 0.868 | 0.870 | 0.883 | 0.865 | 0.867 | 0.881 |
| Autoencoder512 | 0.870 | 0.872 | 0.885 | 0.876 | 0.877 | 0.889 | 0.879 | 0.881 | 0.892 | 0.875 | 0.877 | 0.889 |
| U-Net64 | 0.889 | 0.890 | 0.900 | 0.920 | 0.920 | 0.926 | 0.922 | 0.923 | 0.927 | 0.910 | 0.911 | 0.918 |
| U-Net128 | 0.903 | 0.904 | 0.912 | 0.942 | 0.942 | 0.945 | 0.944 | 0.945 | 0.947 | 0.930 | 0.930 | 0.934 |
| U-Net256 | 0.962 | 0.963 | 0.964 | 0.959 | 0.959 | 0.960 | 0.960 | 0.961 | 0.962 | 0.960 | 0.961 | 0.962 |
| U-Net512 | 0.939 | 0.940 | 0.943 | 0.940 | 0.940 | 0.943 | 0.954 | 0.955 | 0.956 | 0.945 | 0.945 | 0.948 |
| ResUnet64 | 0.809 | 0.811 | 0.839 | 0.911 | 0.912 | 0.918 | 0.925 | 0.926 | 0.930 | 0.882 | 0.883 | 0.896 |
| ResUnet128 | 0.921 | 0.922 | 0.927 | 0.942 | 0.942 | 0.945 | 0.950 | 0.950 | 0.952 | 0.937 | 0.938 | 0.941 |
| ResUnet256 | 0.953 | 0.953 | 0.955 | 0.963 | 0.964 | 0.965 | 0.962 | 0.963 | 0.964 | 0.959 | 0.960 | 0.961 |
| ResUnet512 | 0.843 | 0.844 | 0.864 | 0.924 | 0.925 | 0.930 | 0.882 | 0.884 | 0.894 | 0.883 | 0.884 | 0.896 |
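The Kappa, F1 and mIoU columns above can all be derived from a binary confusion matrix. The following is a minimal sketch, not the authors' evaluation code: the function names are hypothetical, labels are assumed to be 1 for burnt and 0 for unburnt, and mIoU is assumed to be the mean of the burnt and unburnt class IoU values.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = burnt, 0 = unburnt)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def binary_metrics(tp, fp, fn, tn):
    """Return Cohen's kappa, F1 (burnt class) and two-class mean IoU.

    Assumes both classes occur, so no denominator is zero.
    """
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    # Agreement expected by chance, from the row and column marginals.
    expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n**2
    kappa = (observed - expected) / (1 - expected)
    f1 = 2 * tp / (2 * tp + fp + fn)
    iou_burnt = tp / (tp + fp + fn)
    iou_unburnt = tn / (tn + fp + fn)
    miou = (iou_burnt + iou_unburnt) / 2  # assumption: mean over both classes
    return {"kappa": kappa, "f1": f1, "miou": miou}


# Toy example with eight pixels (illustrative values only).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
metrics = binary_metrics(*confusion_counts(y_true, y_pred))
```

In practice the two label sequences would be the flattened reference mask and the thresholded model output for one image pair.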

| Model | Window | Autoencoder 64 | Autoencoder 128 | Autoencoder 256 | Autoencoder 512 | U-Net 64 | U-Net 128 | U-Net 256 | U-Net 512 | ResUnet 64 | ResUnet 128 | ResUnet 256 | ResUnet 512 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Autoencoder | 64 | | | | | | | | | | | | |
| Autoencoder | 128 | <0.001 | | | | | | | | | | | |
| Autoencoder | 256 | <0.001 | <0.001 | | | | | | | | | | |
| Autoencoder | 512 | <0.001 | <0.001 | <0.001 | | | | | | | | | |
| U-Net | 64 | <0.001 | <0.001 | <0.001 | <0.001 | | | | | | | | |
| U-Net | 128 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | | | | | | | |
| U-Net | 256 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | | | | | | |
| U-Net | 512 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | | | | | |
| ResUnet | 64 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | | | | |
| ResUnet | 128 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | | | |
| ResUnet | 256 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | 0.089 | <0.001 | <0.001 | <0.001 | | |
| ResUnet | 512 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | |
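The values above compare the models two at a time. Assuming they are p-values from McNemar's test on paired per-pixel classifications (the table itself does not name the test), a continuity-corrected version can be sketched as follows. The function name and inputs are hypothetical, and this is an illustration rather than the authors' procedure.

```python
import math


def mcnemar_test(y_true, pred_a, pred_b):
    """Continuity-corrected McNemar test for two classifiers on the same samples.

    Returns (chi-square statistic, two-sided p-value with 1 degree of freedom).
    """
    # b: samples model A classifies correctly and model B does not.
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    # c: samples model B classifies correctly and model A does not.
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    if b + c == 0:
        return 0.0, 1.0  # the two models never disagree
    stat = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Toy example: 20 pixels, A alone is right on 10, B alone is right on 2.
y_true = [1] * 12 + [0] * 8
pred_a = [1] * 10 + [0] * 2 + [0] * 8
pred_b = [0] * 10 + [1] * 2 + [0] * 8
stat, p_value = mcnemar_test(y_true, pred_a, pred_b)
```

Only the discordant pixels (where exactly one model is correct) enter the statistic, so two models with similar accuracy can still differ significantly if they err on different pixels.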

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


de Bem, P.P.; de Carvalho Júnior, O.A.; de Carvalho, O.L.F.; Gomes, R.A.T.; Fontes Guimarães, R. Performance Analysis of Deep Convolutional Autoencoders with Different Patch Sizes for Change Detection from Burnt Areas. Remote Sens. 2020, 12, 2576. https://doi.org/10.3390/rs12162576
