Abstract

Smart islands have focused on renewable energy sources, such as solar and wind, to achieve energy self-sufficiency. Because solar photovoltaic (PV) power produces less noise and is easier to install than wind power, it offers more flexibility in selecting an installation site. A PV power system can be operated more efficiently by predicting the amount of global solar radiation available for solar power generation. Thus far, most studies have addressed day-ahead probabilistic forecasting of global solar radiation. However, day-ahead probabilistic forecasting has limitations in responding quickly to sudden changes in the external environment. Although multistep-ahead (MSA) forecasting can be used for this purpose, traditional machine learning models are unsuitable because of their substantial training time. In this paper, we propose an accurate MSA global solar radiation forecasting model based on the light gradient boosting machine (LightGBM), which addresses the training-time problem and provides higher prediction performance than other boosting methods. To demonstrate the validity of the proposed model, we conducted global solar radiation prediction for two regions on Jeju Island, the largest island in South Korea. The experimental results demonstrated that the proposed model can achieve better predictive performance than tree-based ensemble and deep learning methods.

1. Introduction

Due to the serious problems caused by the use of fossil fuels, much attention has been focused on renewable energy sources (RESs) and smart grid technology to reduce greenhouse gas emissions [1,2]. Smart grid technology incorporates information and communication technology into the existing power grid using diverse smart sensors [3]. It can optimize energy supply and demand by exchanging power production and consumption information between consumers and suppliers [4]. In particular, many countries and regions, including smart islands, are replacing fossil fuels with RESs to achieve energy self-sufficiency and carbon-free energy generation [5,6,7]. Two representative RESs are wind and solar power. Although wind power requires a smaller installation area and produces more power than solar power, it suffers from higher maintenance costs and more noise. In South Korea, for example, various government support policies for renewable energy and smart grid technologies [8] have led to a rapid increase in the demand for photovoltaics (PV) [9]. PV is best known as a method of generating electric power using solar cells, which convert energy from the sun into a flow of electrons through the photovoltaic effect. Moreover, a PV power system relies on an eco-friendly and practically inexhaustible resource and is cheaper to build than other power generation systems [10].

Various meteorological factors influence a PV system, and global solar radiation is the most crucial of them [11,12]. Therefore, accurate global solar radiation forecasting is essential for the optimal operation of PV systems [13]. Recently, artificial neural network (ANN)-based global solar radiation forecasting models, such as the shallow neural network (SNN), deep neural network (DNN), and long short-term memory (LSTM) network, have been constructed to handle the nonlinearity and fluctuation of global solar radiation [14,15,16,17,18,19,20]. In addition, many studies have sought to predict global solar radiation accurately using ensemble learning, which combines several weak models. For instance, in [21], the authors constructed two global solar radiation forecasting models based on the ANN and random forest (RF) methods and demonstrated that RF, an ensemble learning technique, outperformed the ANN. In [22], the authors proposed four global solar radiation forecasting models based on bagging and boosting techniques and analyzed the predictive performance and feature importance of these ensemble learning techniques.

Because global solar radiation is affected by diverse factors, such as season, time, and weather variables, predicting it in the time domain is challenging [13]. Ensemble learning can mitigate overfitting and typically yields more accurate predictions than a single model [23]. In this paper, we propose a novel forecasting model for multistep-ahead (MSA) global solar radiation prediction based on the light gradient boosting machine (LightGBM), a tree-based ensemble learning technique. Because LightGBM trains and predicts very quickly, it reduces the time needed for MSA prediction while providing accurate predictions. Our forecasting model uses meteorological information provided by the Korea Meteorological Administration (KMA). In addition, to handle the uncertainty of PV scheduling, our MSA forecasting scheme makes hourly forecasts for the daytime hours from 8 a.m. to 6 p.m. within the 24 h following the current time. Usually, the farther the prediction point is from the training period, the more likely it is that the trends and patterns of the meteorological conditions and global solar radiation have changed. To address this issue, we used time-series cross-validation (TSCV). We conducted rigorous experiments to compare the performance of LightGBM with various tree-based ensemble and deep learning methods. Finally, we used the feature importance of the proposed model to provide interpretable forecasting results.

The contributions of this paper are as follows:

- We proposed an MSA forecasting scheme for efficient PV system operation.

- We proposed an interpretable forecasting model based on feature importance analysis.

- We increased the accuracy of global solar radiation forecasting using TSCV.

This paper is organized as follows. Section 2 describes the overall process of constructing a LightGBM-based model for MSA global solar radiation forecasting. Section 3 analyzes the experimental results and describes the interpretable forecasting results of the proposed model. Finally, Section 4 presents our conclusions and some future research directions.

2. Materials and Methods

2.1. Data Collection and Preprocessing

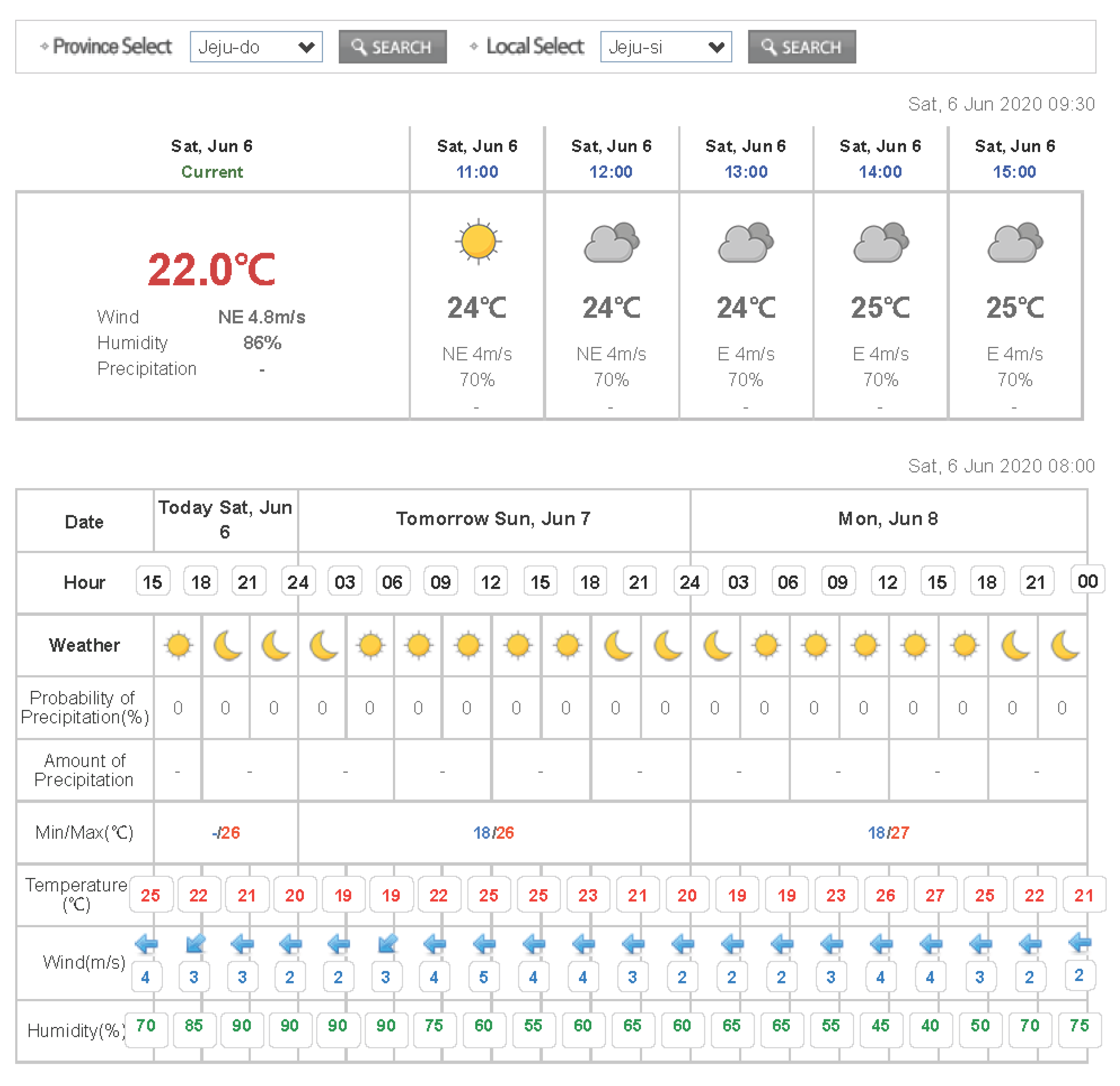

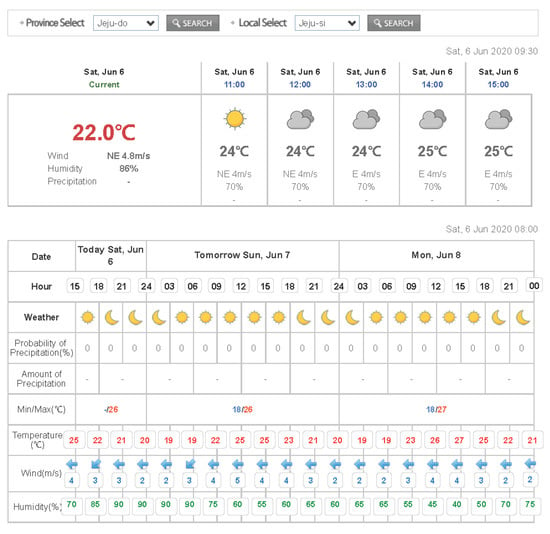

In this paper, we used the date/time, meteorological data, and historical global solar radiation data provided by the KMA as input variables to construct a global solar radiation forecasting model. We considered two regions located on Jeju Island. Jeju is the largest island in South Korea and is implementing various measures to become a smart island; for instance, it is enforcing diverse energy policies that encourage a shift from conventional fossil fuels to RESs. The two regions that we selected for validating prediction performance are Ildo-1 dong (latitude: 33.51411, longitude: 126.52969) and Gosan-ri (latitude: 33.29382, longitude: 126.16283). The data cover the hours from 8 a.m. to 6 p.m. over eight years, from 2011 to 2018, and include the sky condition, temperature, humidity, wind speed, and global solar radiation. The meteorological observation data provided by the KMA also include other variables, such as soil temperature, total cloud amount, ground-surface temperature, and sunshine duration. However, because only the sky condition (also known as the weather observation), temperature, humidity, and wind speed are provided in the KMA's short-term weather forecasts, as shown in Figure 1, we considered only these factors [24].

Figure 1.

Short-term weather forecast by the Korea Meteorological Administration.

In the meteorological data we collected, about 0.1% of the values in each category were missing, and missing values were indicated as −1. Because temperature, humidity, wind speed, and global solar radiation are continuous, their missing values were estimated using linear interpolation. The sky condition data are categorical values from 1 to 4, so their missing values were instead imputed using logistic regression to keep the estimates consistent with the adjacent data.
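The imputation step above can be sketched with pandas and scikit-learn as follows. This is a minimal sketch, not the authors' code: the column names (`temperature`, `humidity`, `wind_speed`, `solar_radiation`, `sky`) are hypothetical, and because the text does not list the logistic-regression features, the sketch assumes they are the nearest known sky condition before and after each missing hour.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression

# Hypothetical column names for the continuous KMA variables.
CONTINUOUS = ["temperature", "humidity", "wind_speed", "solar_radiation"]


def impute(df: pd.DataFrame) -> pd.DataFrame:
    """Replace the -1 missing-value marker and impute the gaps (sketch)."""
    # Caution: following the text's convention, a genuine reading of -1
    # (e.g., a temperature of -1 degC) is also treated as missing here.
    out = df.replace(-1, np.nan)

    # Continuous variables: linear interpolation between neighbouring hours.
    out[CONTINUOUS] = out[CONTINUOUS].interpolate(method="linear")

    # Sky condition (1-4): logistic regression on the nearest known sky
    # condition before and after each row (assumed features).
    prev_known = out["sky"].shift(1).ffill()
    next_known = out["sky"].shift(-1).bfill()
    X = pd.DataFrame({"prev": prev_known.fillna(next_known),
                      "next": next_known.fillna(prev_known)})
    missing = out["sky"].isna()
    if missing.any():
        clf = LogisticRegression(max_iter=1000)
        clf.fit(X[~missing], out.loc[~missing, "sky"].astype(int))
        out.loc[missing, "sky"] = clf.predict(X[missing])
    out["sky"] = out["sky"].astype(int)
    return out
```

Interpolation assumes the rows are in chronological order; gaps at the very start of a series would need a separate rule, since forward linear interpolation cannot fill them.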

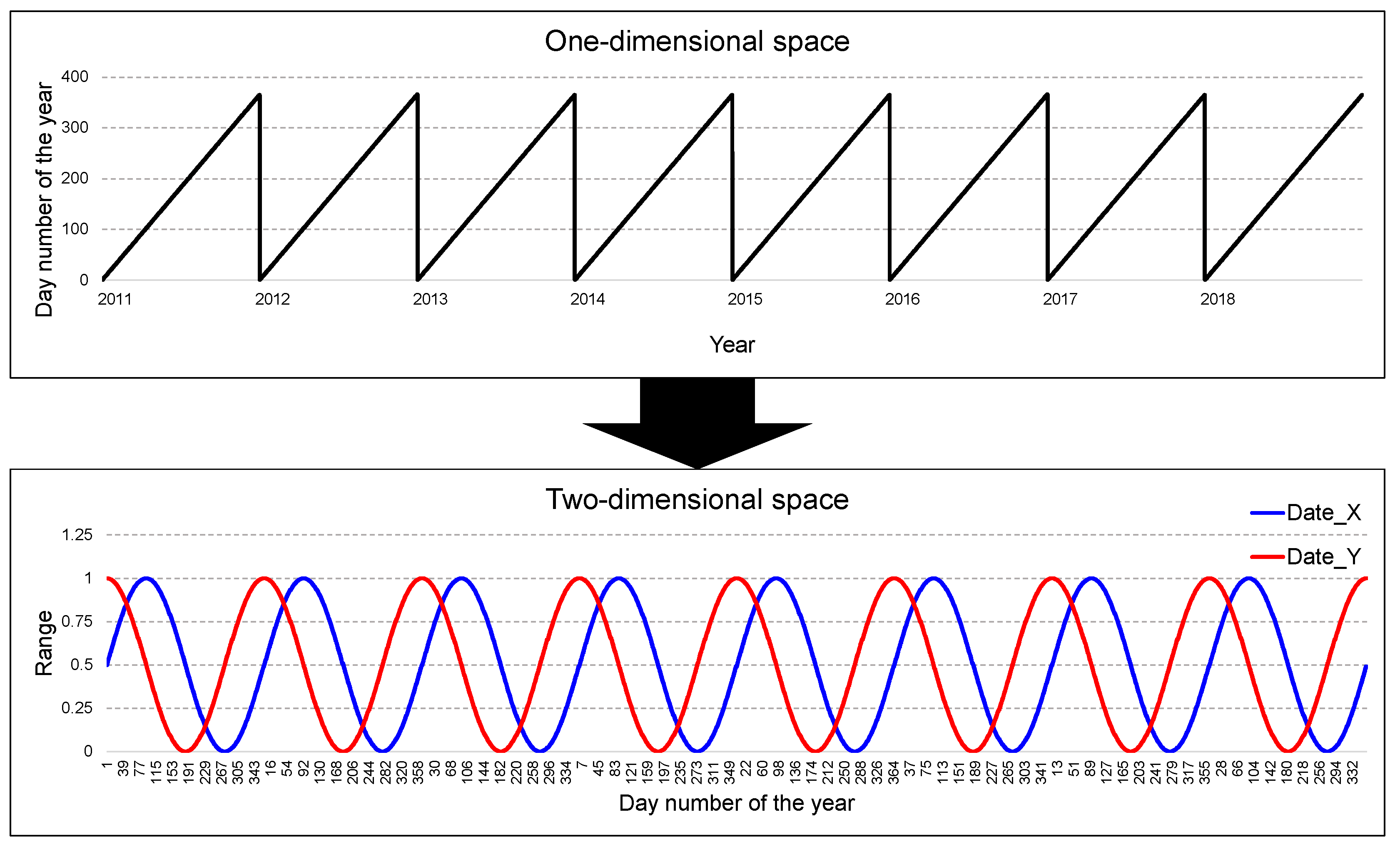

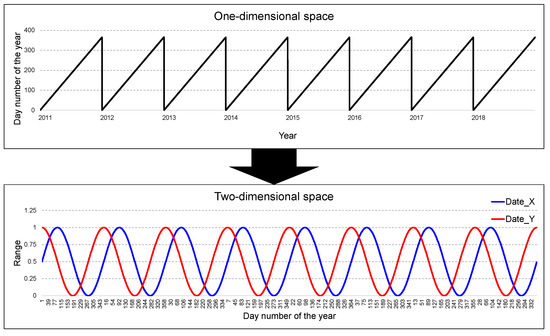

To reflect the periodicity of the date, we mapped each one-dimensional date to continuous coordinates in two-dimensional space using Equations (1) and (2) [25]. In the equations, end-of-month (EoM) indicates the last day of the month. The equations convert each date into its ordinal day of the year, a value from 1 to 365; for instance, January 1 is converted to 1, and December 31 is converted to 365. For leap years, 366 was used instead of 365 in the equations. Figure 2 illustrates an example of preprocessing the date data.
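A minimal sketch of such a periodic date encoding is shown below. It assumes the common sine/cosine form, x = sin(2π·d/N) and y = cos(2π·d/N), where d is the day of the year and N is 365 (366 in leap years); the exact form of Equations (1) and (2) may differ in detail, and `encode_day_of_year` is a hypothetical helper name.

```python
import calendar
import math
from datetime import date
from typing import Tuple


def encode_day_of_year(d: date) -> Tuple[float, float]:
    """Map a date onto the unit circle so that Dec 31 and Jan 1 end up adjacent.

    Assumes the common sin/cos form; Equations (1) and (2) may differ in detail.
    """
    day_of_year = d.timetuple().tm_yday                    # 1..365 (1..366 in leap years)
    days_in_year = 366 if calendar.isleap(d.year) else 365
    angle = 2.0 * math.pi * day_of_year / days_in_year
    return math.sin(angle), math.cos(angle)
```

The point of the two coordinates is that a single day-of-year number places December 31 and January 1 at opposite ends of the scale, whereas on the circle they are neighbours, which is what "reflecting the periodicity" requires.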

Figure 2.

Example of date data preprocessing.

The cloud amount is provided by the KMA. Of the two common conventions for reporting cloud amount, in eighths (oktas, used in meteorology) and in tenths (used in climatology), the KMA uses tenths. Hence, the cloud amount is represented on an eleven-point scale, from 0 for a clear sky to 10 for an overcast sky. The sky condition data have four categories [26,27]: 1 for clear (0 ≤ cloud amount ≤ 2), 2 for partly cloudy (3 ≤ cloud amount ≤ 5), 3 for mostly cloudy (6 ≤ cloud amount ≤ 8), and 4 for cloudy (9 ≤ cloud amount ≤ 10). Because we represent the sky condition using one-hot encoding, a value of 1 is placed in the binary variable for the observed sky condition, and 0 in the variables for the other sky conditions. Time data were also treated as categories. Because global solar radiation peaks during the day, from 12 to 2 p.m., we used one-hot encoding to represent the time of day so that the model can capture this pattern more effectively.
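The mapping from cloud amount to sky condition and the subsequent one-hot encoding can be sketched as follows. The function names are hypothetical; the category boundaries are the ones listed above.

```python
from typing import List


def sky_condition(cloud_amount: int) -> int:
    """Map KMA cloud amount (tenths, 0-10) to sky condition code 1-4."""
    if not 0 <= cloud_amount <= 10:
        raise ValueError("cloud amount must be between 0 and 10 tenths")
    if cloud_amount <= 2:
        return 1  # clear
    if cloud_amount <= 5:
        return 2  # partly cloudy
    if cloud_amount <= 8:
        return 3  # mostly cloudy
    return 4      # cloudy


def one_hot(code: int, n_categories: int = 4) -> List[int]:
    """One-hot encode a 1-based category code."""
    return [1 if code == k else 0 for k in range(1, n_categories + 1)]
```

For example, a cloud amount of 7 gives sky condition 3, which is encoded as `[0, 0, 1, 0]`. The same helper can encode the hour of day, e.g. `one_hot(hour - 7, n_categories=11)` for the eleven hours from 8 a.m. to 6 p.m.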

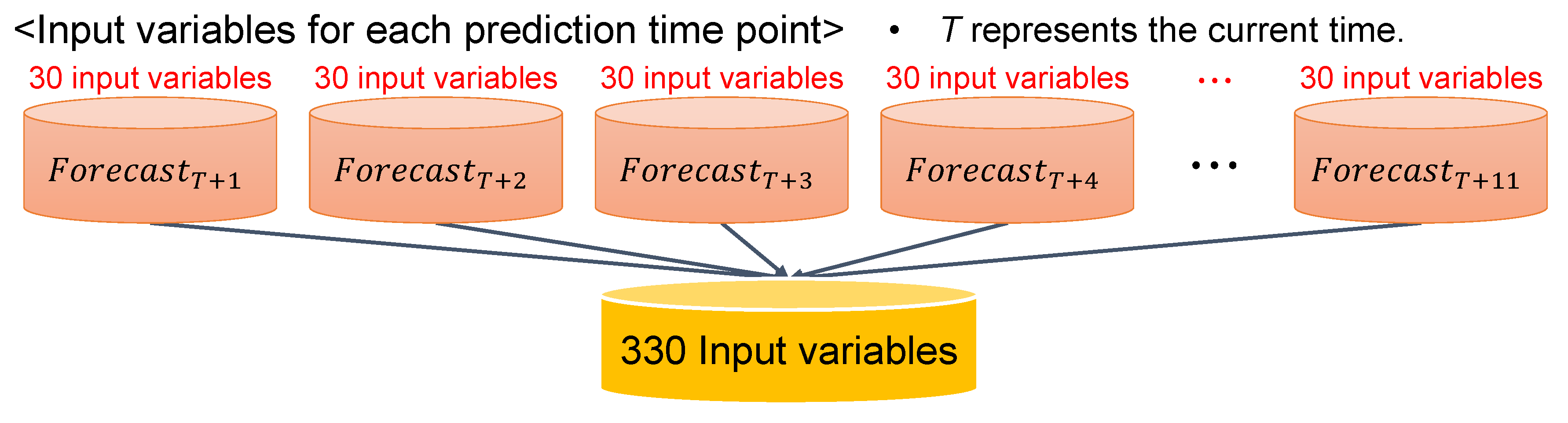

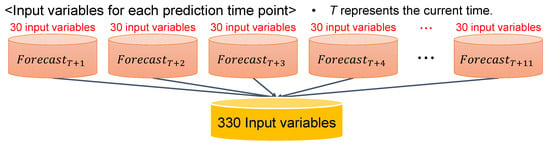

In addition, to reflect recent trends in global solar radiation, we used the sky condition, temperature, humidity, wind speed, and global solar radiation of the day before the forecast point as input variables. We considered 30 input variables to construct our prediction model, as shown in Table 1. Because our goal is MSA forecasting (all daytime time points in the next 24 h), we needed all the input variables for each of the 11 prediction time points. Therefore, we used 330 input variables (i.e., 30 input variables × 11 prediction time points) with 32,143 tuples to construct the MSA forecasting model, as shown in Figure 3.
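The 30 × 11 input layout can be sketched as a sliding window over the chronological sequence of daytime hours. This is a sketch under stated assumptions: the per-time-point inputs are assumed to be stored as a `(T, 30)` array with night hours already removed, each row (forecast origin) is assumed to predict the next 11 daytime points, and `build_msa_dataset` is a hypothetical helper.

```python
import numpy as np
from typing import Tuple

N_STEPS = 11      # hourly daytime points from 8 a.m. to 6 p.m.
N_FEATURES = 30   # input variables per time point (Table 1)


def build_msa_dataset(features: np.ndarray, target: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Build one row per forecast origin from the inputs of the next N_STEPS points.

    features: (T, N_FEATURES) per-time-point inputs, chronological daytime hours only
    target:   (T,) global solar radiation aligned with `features`
    Returns X of shape (T - N_STEPS, N_STEPS * N_FEATURES) and Y of shape (T - N_STEPS, N_STEPS).
    """
    if features.shape[1] != N_FEATURES or len(features) != len(target):
        raise ValueError("expected aligned arrays with N_FEATURES columns")
    n_samples = len(features) - N_STEPS
    # Row i concatenates time points i+1 .. i+N_STEPS (11 blocks of 30 columns).
    X = np.stack([features[i + 1:i + 1 + N_STEPS].ravel() for i in range(n_samples)])
    Y = np.stack([target[i + 1:i + 1 + N_STEPS] for i in range(n_samples)])
    return X, Y
```

Because night hours are skipped, a window starting late in the day naturally spans into the next morning, which matches the "next 11 time points within 24 h" framing.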

Table 1.

List of input variables (IV) for the proposed model.

Figure 3.

Input variable configuration for multistep-ahead (MSA) global solar radiation forecasting.

2.2. Forecasting Model Construction

The purpose of our model is to predict global solar radiation for the next 11 time points from the current time. To construct the forecasting model, we used LightGBM, a model based on the gradient boosting machine (GBM). LightGBM [28] is a gradient boosting decision tree (GBDT) implementation that applies gradient-based one-side sampling (GOSS) and exclusive feature bundling (EFB). Unlike the conventional level-wise GBM tree splitting method, LightGBM grows trees leaf-wise, which produces more complex trees and can achieve higher accuracy; this can be useful for complex time-series forecasting. Owing to GOSS and EFB, LightGBM reduces memory usage and trains faster than conventional GBDT implementations. LightGBM has various hyperparameters to tune; among them, the learning rate, number of iterations, and number of leaves are closely related to prediction accuracy. In addition, overfitting can be mitigated by adjusting the colsample_bytree and subsample hyperparameters. LightGBM also supports different boosting algorithms for its learning iterations. In this paper, we constructed two LightGBM models for comparison, using two boosting types: GBDT and dropouts meet multiple additive regression trees (DART) [29]. Both models predict multiple outputs using the MultiOutputRegressor class in scikit-learn (v. 0.22.1).

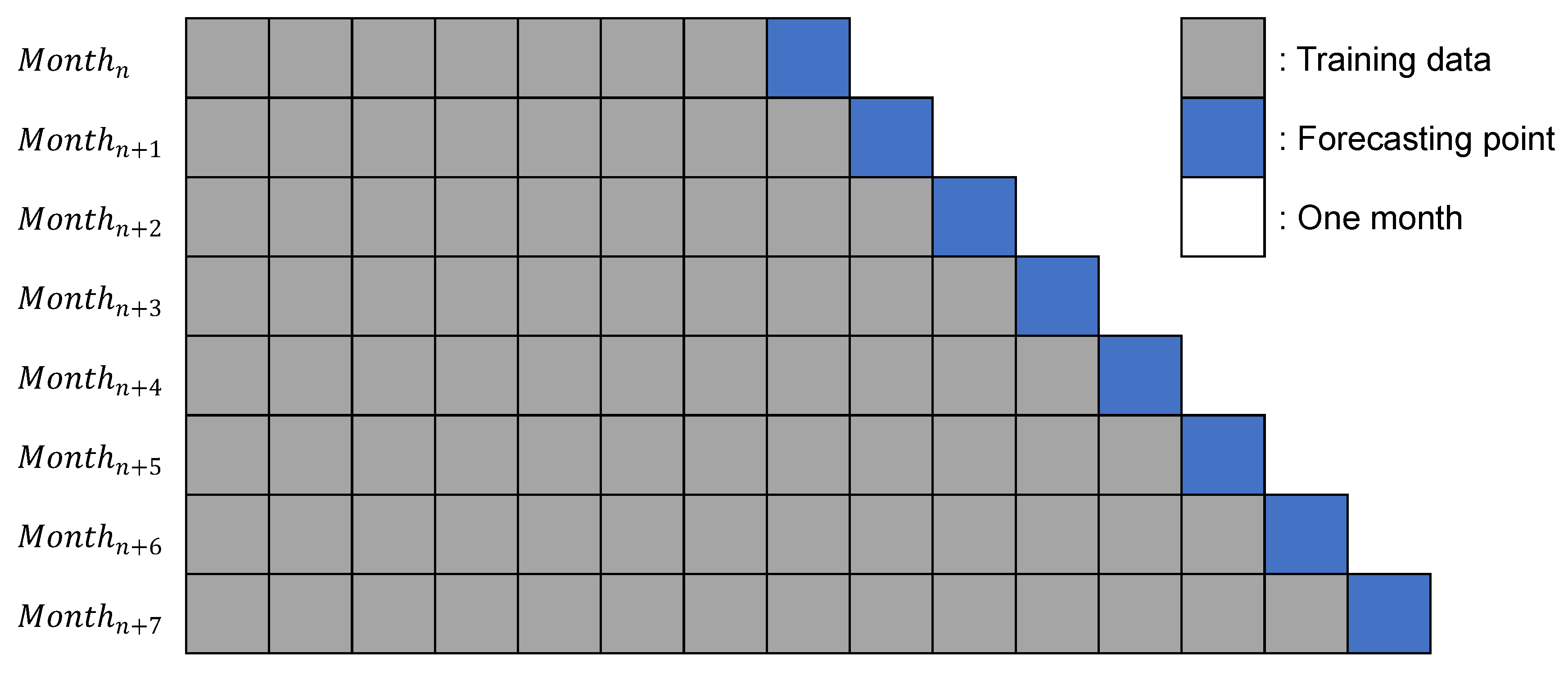

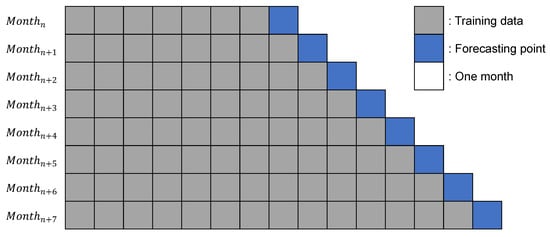

In general, to evaluate a forecasting method, we first divide a dataset into training and test sets, construct the forecasting model using the training set, and then evaluate its performance using the test set. However, the longer the time interval between training and forecasting, the lower the prediction performance [30]. To solve this problem, we applied TSCV, which is widely used when data exhibit time-series characteristics and the goal is to forecast the next point of the series [6]. TSCV iteratively uses all data before the prediction point as the training set and the next forecasting point as the test set.

However, performing TSCV at every time point requires a considerable amount of training and forecasting time. To reduce this overhead, we conducted monthly TSCV, as shown in Figure 4. In addition, for interpretable global solar radiation forecasting, we analyzed how the importance of the 30 input variables changed over time by obtaining the feature importance from LightGBM.
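Monthly TSCV with an expanding training window can be sketched as a generator of index pairs: each month from the start of the test period is one test fold, and all earlier rows form its training set. The function name and the `test_start` parameter are hypothetical.

```python
import numpy as np
import pandas as pd
from typing import Iterator, Tuple


def monthly_tscv_splits(timestamps: pd.Series, test_start: str) -> Iterator[Tuple[np.ndarray, np.ndarray]]:
    """Yield (train_idx, test_idx) for expanding-window TSCV with one test fold per month.

    Each month from `test_start` onward is a test fold; all earlier rows form its training set.
    """
    ts = pd.to_datetime(pd.Series(timestamps)).reset_index(drop=True)
    months = ts.dt.to_period("M")
    for month in months[ts >= pd.Timestamp(test_start)].unique():
        train_idx = np.flatnonzero((ts < month.start_time).to_numpy())
        test_idx = np.flatnonzero((months == month).to_numpy())
        yield train_idx, test_idx
```

scikit-learn's `TimeSeriesSplit` also produces expanding windows, but it splits into equally sized folds rather than calendar months, which is why the sketch groups rows by month explicitly. With a 2017 to 2018 test period, this yields 24 refits instead of one per time point.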

Figure 4.

Example of monthly time-series cross-validation.

2.3. Baseline Models

To demonstrate the performance of our model, we constructed various forecasting models based on the tree-based ensemble and deep learning methods.

Because tree-based ensemble learning methods combine several weak models effectively, they usually exhibit better prediction performance than a single model. In the experiment, we considered the RF, GBM, and extreme gradient boosting (XGBoost) ensemble methods to construct MSA global solar radiation forecasting models. The RF method trains each tree independently using a randomly selected sample of the data. Because the RF method reduces the correlation between trees, it provides a robust model for out-of-sample forecasting [31]. The GBM is a forward-learning ensemble method that obtains predictive results through gradually improved estimations [32]. Each new model is fitted to the residuals of the previous model, and this procedure is repeated over many iterations to build a robust model. We constructed two GBM models using the quantile regression and Huber loss functions, respectively. The XGBoost method prevents overfitting through a regularized objective combined with shrinkage and column subsampling [33], and it supports parallel processing during tree construction. XGBoost first constructs a weak model and evaluates its fit on the training set. It then adds a model fitted to the gradient of the loss, moving the ensemble in the direction that improves the fit, as in gradient descent. This procedure is repeated over many iterations to build a robust model [34]. We constructed two XGBoost models using two boosting types (i.e., GBDT and DART). To predict multiple outputs, we constructed the RF model using the MultivariateRandomForest [35] package in R (v. 3.5.1) and the GBM and XGBoost models using the MultiOutputRegressor class in scikit-learn (v. 0.22.1) [36].
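A minimal sketch of the RF and GBM baselines in scikit-learn is shown below; hyperparameter values are placeholders. Two substitutions are worth stating plainly: scikit-learn's `RandomForestRegressor`, which supports multiple outputs natively, stands in for the R MultivariateRandomForest package used in the paper, and `alpha=0.5` (median regression) is an assumed quantile level. XGBoost models could be wrapped the same way, e.g. `MultiOutputRegressor(XGBRegressor(booster="dart"))`, but are omitted here to keep the sketch free of extra dependencies.

```python
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.multioutput import MultiOutputRegressor


def make_baselines(random_state: int = 0) -> dict:
    """Tree-based MSA baselines; hyperparameter values are placeholders."""
    return {
        # scikit-learn's RF predicts multiple outputs natively (stand-in for the R package).
        "RF": RandomForestRegressor(n_estimators=100, random_state=random_state),
        # GBM supports a single output, so one model is fitted per forecast step.
        "GBM-Huber": MultiOutputRegressor(
            GradientBoostingRegressor(loss="huber", random_state=random_state)),
        # alpha=0.5 turns the quantile loss into median regression (assumed level).
        "GBM-Quantile": MultiOutputRegressor(
            GradientBoostingRegressor(loss="quantile", alpha=0.5, random_state=random_state)),
    }
```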

For deep learning-based MSA global solar radiation forecasting, we considered the SNN, DNN, LSTM network, and attention-based LSTM (ATT-LSTM) network. These models require a sufficient amount of training data for accurate prediction and can overfit if the training data are insufficient [37]. A typical ANN consists of an input layer, one or more hidden layers, and an output layer, and each layer consists of one or more nodes [38,39]. ANNs have various hyperparameters that affect prediction performance [38], including the number of hidden layers, the number of hidden nodes, and the activation function. The SNN has one hidden layer, whereas the DNN has two or more hidden layers [39]. The LSTM network [40] solves the long-term dependency problem of the conventional recurrent neural network and is useful for learning from sequence data in time-series forecasting. However, when the input sequence is long, the forecasting accuracy of a sequence-to-sequence model suffers because the encoder must summarize all input variables in a single fixed-length vector. To solve this problem, the attention mechanism [41] was developed in the field of machine translation. It is applied to an encoder–decoder architecture, in which the encoder builds vectors from the input variables and the decoder outputs the dependent variable using the encoder's vectors as input. At each output step, the attention mechanism scores the similarity between the decoder state and each encoder vector and uses these scores as weights, so the decoder focuses on the most relevant inputs; hence, it can improve forecasting accuracy. Applying the attention mechanism to the LSTM described above focuses the model on specific vectors so that it obtains more accurate forecasting results [42].
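The weighting step of attention can be sketched in a few lines of NumPy. This is an illustration only: it uses a dot-product similarity score for brevity, whereas the mechanism in [41] uses an additive score with learned parameters, and the encoder and decoder states here are plain arrays rather than outputs of trained LSTM layers.

```python
import numpy as np


def attention(decoder_state: np.ndarray, encoder_states: np.ndarray):
    """Dot-product attention: weight encoder states by similarity to the decoder state.

    decoder_state:  (d,) current decoder hidden state
    encoder_states: (T, d) one hidden state per input time step
    Returns the context vector (d,) and the attention weights (T,).
    """
    scores = encoder_states @ decoder_state       # similarity of each input step
    weights = np.exp(scores - scores.max())       # numerically stable softmax
    weights /= weights.sum()
    context = weights @ encoder_states            # weighted sum of encoder states
    return context, weights
```

The decoder then consumes `context` instead of a single fixed summary vector, so input steps that resemble the current decoder state contribute most.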

In our previous work [7], we constructed several deep learning models for MSA global solar radiation forecasting in the same experimental environment. We applied dropout to the hidden layers to prevent overfitting and found the optimal hyperparameter values for each deep learning model, as indicated in Table 2.

Table 2.

Selected optimal hyperparameters for each deep learning model.

3. Results and Discussion

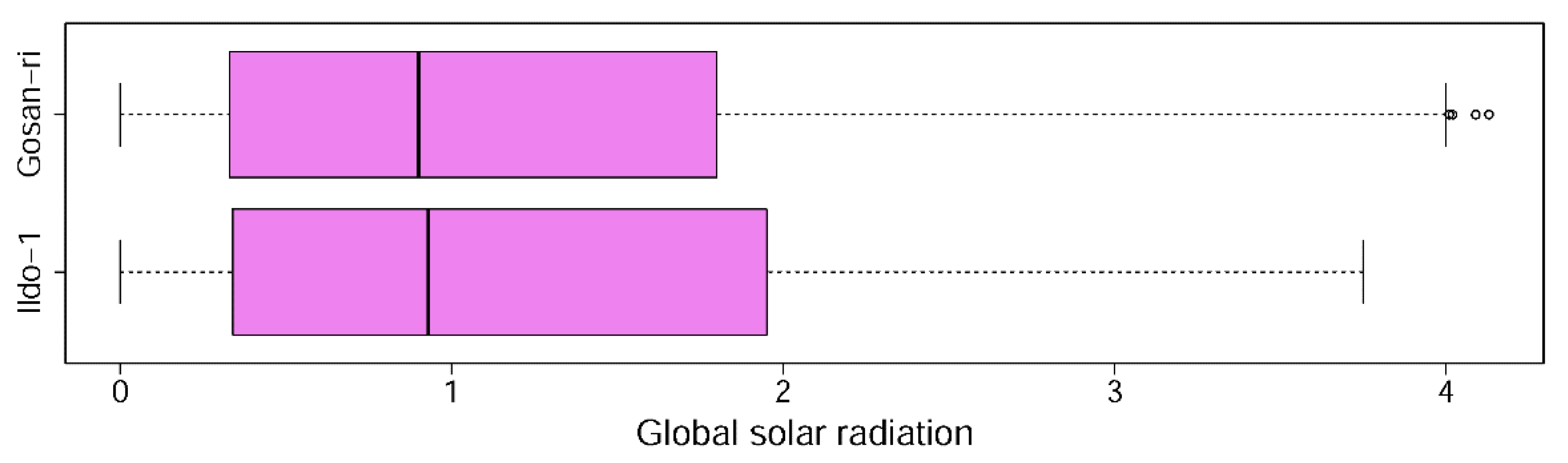

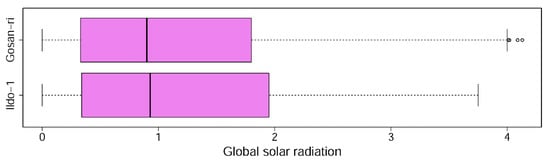

In the experiments, we used two global solar radiation datasets collected from two regions on Jeju Island, Ildo-1 and Gosan-ri, from 2011 to 2018. We divided each dataset into two parts at a ratio of 75:25: a training set (in-sample) spanning 2011 to 2016 and a test set (out-of-sample) spanning 2017 to 2018. Table 3 lists descriptive statistics of the training and test sets for each dataset, computed using Excel's Descriptive Statistics data analysis tool. Figure 5 shows boxplots of the global solar radiation data for each region.

Table 3.

Statistical analysis of global solar radiation data by region ().

Figure 5.

Boxplots by region ().

For continuous data, such as humidity, wind speed, temperature, and historical global solar radiation, we performed standardization using Equation (3). In the equation, x_i and x denote the standardized input variable and the original data, respectively, and μ and σ denote the mean and standard deviation of the original data, respectively.
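Assuming Equation (3) is the usual z-score, x_i = (x − μ)/σ, the standardization can be sketched as below. Estimating μ and σ on the training set only and reusing them for the test set is our assumption, made so that test-period statistics do not leak into training; the helper names are hypothetical.

```python
import numpy as np


def fit_standardizer(train: np.ndarray):
    """Return per-column mean and standard deviation of a 2-D training array."""
    mu = train.mean(axis=0)
    sigma = train.std(axis=0)
    sigma[sigma == 0] = 1.0   # guard against constant columns
    return mu, sigma


def standardize(x: np.ndarray, mu: np.ndarray, sigma: np.ndarray) -> np.ndarray:
    """Apply x_i = (x - mu) / sigma column-wise."""
    return (x - mu) / sigma
```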

To evaluate the prediction performance of the models, we used four metrics: mean bias error (MBE), mean absolute error (MAE), root mean square error (RMSE), and normalized root mean square error (NRMSE), as shown in Equations (4)–(7). Here, A_t and F_t represent the actual and forecasted values, respectively, at time t; n indicates the number of observations; and Ā represents the average of the actual values.
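The four metrics can be sketched as follows. Two conventions are assumptions, since Equations (4)–(7) are not reproduced in the text: the error is taken as F_t − A_t (so a positive MBE means over-forecasting), and NRMSE is RMSE divided by Ā and expressed in percent.

```python
import numpy as np


def forecast_metrics(actual, forecast) -> dict:
    """MBE, MAE, RMSE, and NRMSE under assumed sign and normalization conventions."""
    a = np.asarray(actual, dtype=float)
    f = np.asarray(forecast, dtype=float)
    err = f - a                                     # assumption: positive MBE = over-forecast
    rmse = float(np.sqrt(np.mean(err ** 2)))
    return {
        "MBE": float(np.mean(err)),
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": rmse,
        "NRMSE": 100.0 * rmse / float(np.mean(a)),  # percent of the mean actual value
    }
```

For actual values [1, 2, 3, 4] and forecasts [2, 2, 2, 6], the errors are [1, 0, −1, 2], giving MBE = 0.5, MAE = 1.0, and RMSE = √1.5 ≈ 1.22.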

We implemented the RF-based forecasting model in R (v. 3.5.1) and all other forecasting models in Python (v. 3.6). We found optimal hyperparameter values for the tree-based ensemble learning models via GridSearchCV in scikit-learn (v. 0.22.1), as displayed in Table 4. The two regions are geographically close, and the search selected the same hyperparameter values for both regions.
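A grid search over a multi-output model can be sketched with `GridSearchCV`; the `estimator__` prefix routes each parameter to the regressor wrapped by `MultiOutputRegressor`. The grid values are hypothetical (the values actually searched are listed in Table 4), the Huber GBM stands in for any of the tree-based models, and the time-ordered folds from `TimeSeriesSplit` are our assumption, since the text does not state which cross-validation splitter was used.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.multioutput import MultiOutputRegressor

# Hypothetical grid; the values actually searched are those listed in Table 4.
PARAM_GRID = {
    "estimator__learning_rate": [0.05, 0.1],
    "estimator__n_estimators": [20, 50],
    "estimator__max_depth": [2, 3],
}


def tune(X: np.ndarray, Y: np.ndarray) -> GridSearchCV:
    """Grid-search a multi-output GBM; `estimator__` reaches the wrapped model."""
    search = GridSearchCV(
        MultiOutputRegressor(GradientBoostingRegressor(loss="huber", random_state=0)),
        PARAM_GRID,
        cv=TimeSeriesSplit(n_splits=3),   # assumption: folds keep temporal order
        scoring="neg_mean_absolute_error",
    )
    return search.fit(X, Y)
```

After fitting, `best_params_` holds the selected combination and the refitted best model is available through `predict`.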

Table 4.

Selected hyperparameters for each ensemble learning model. Selected values are bold.

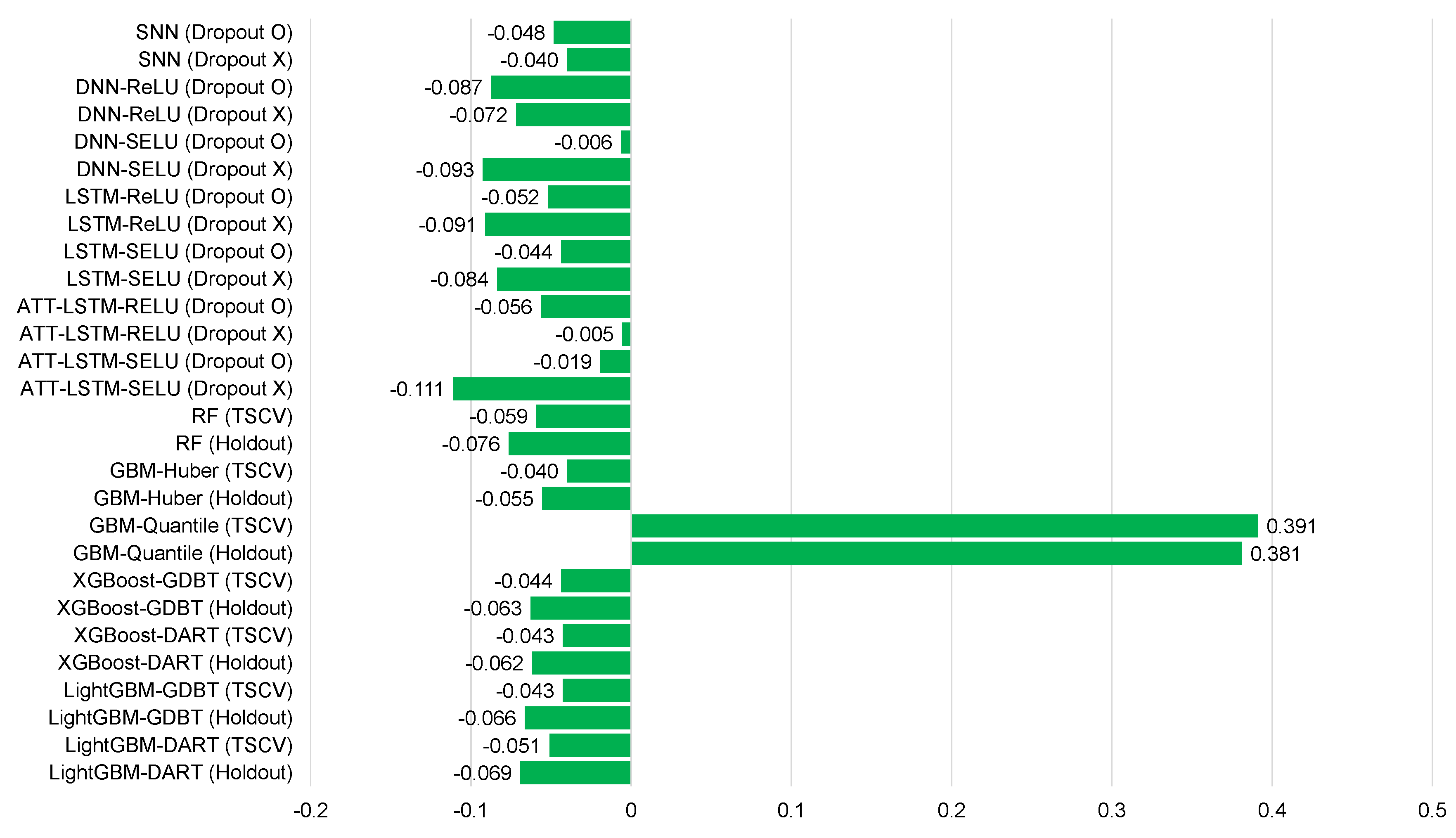

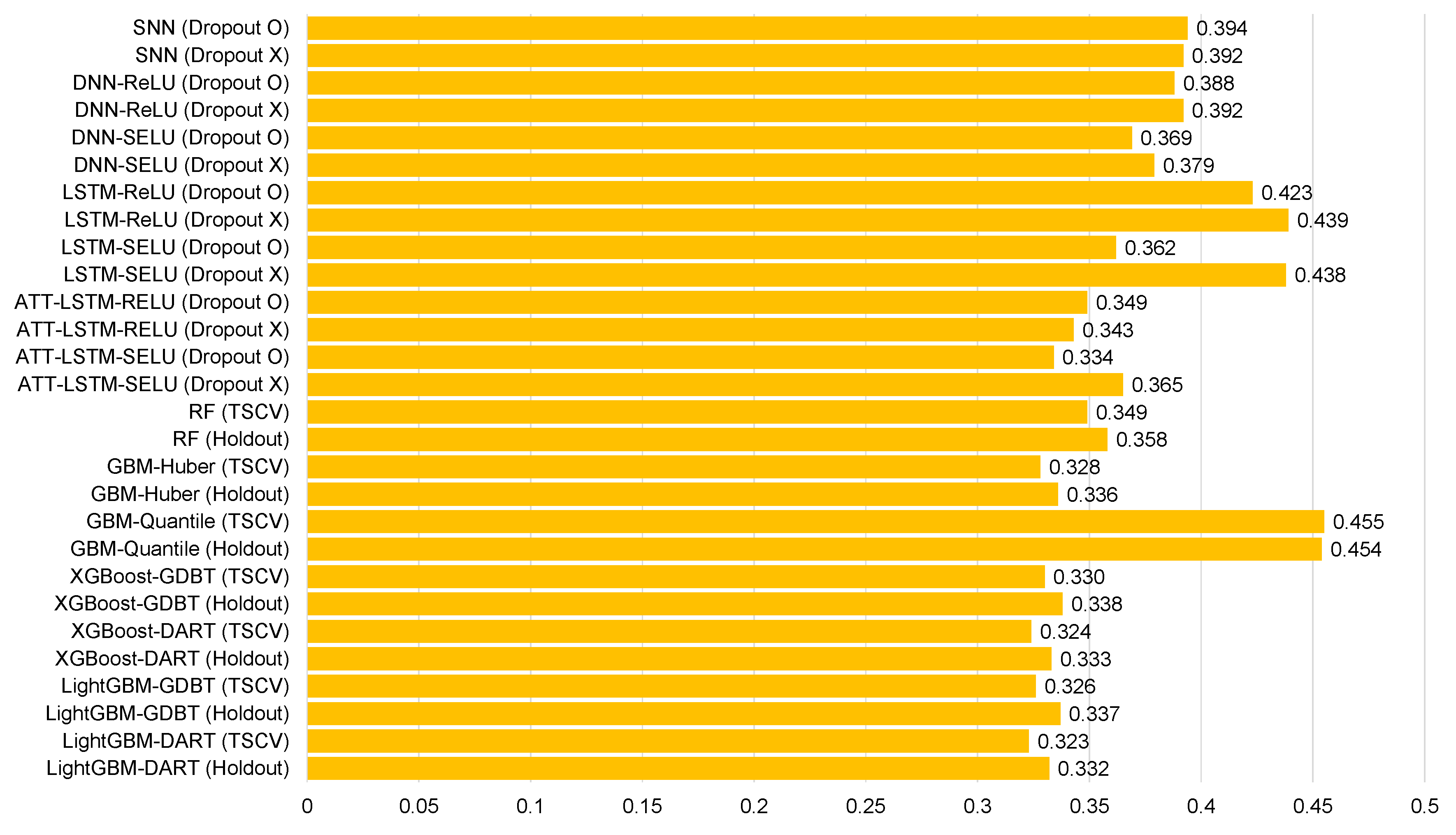

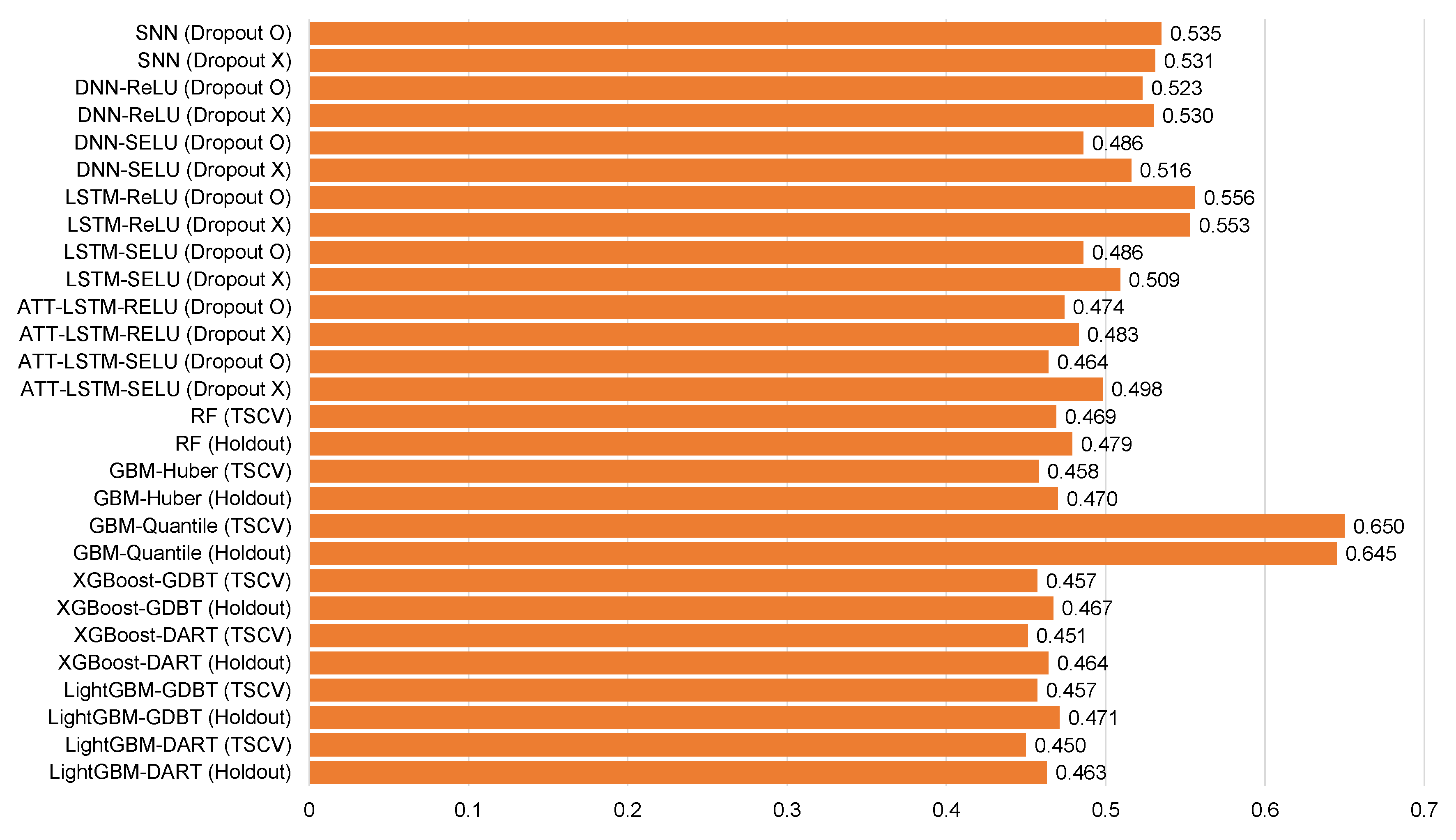

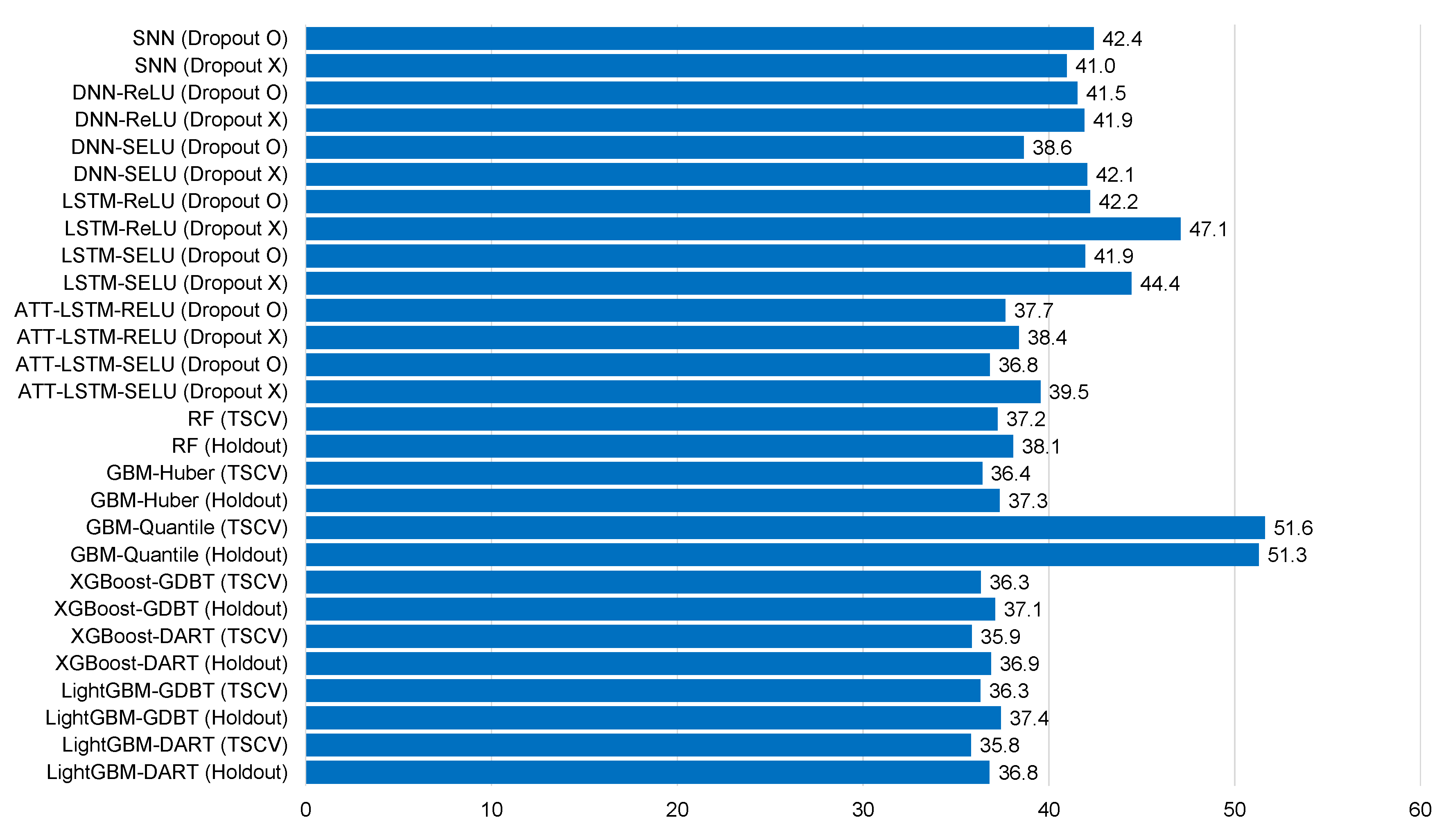

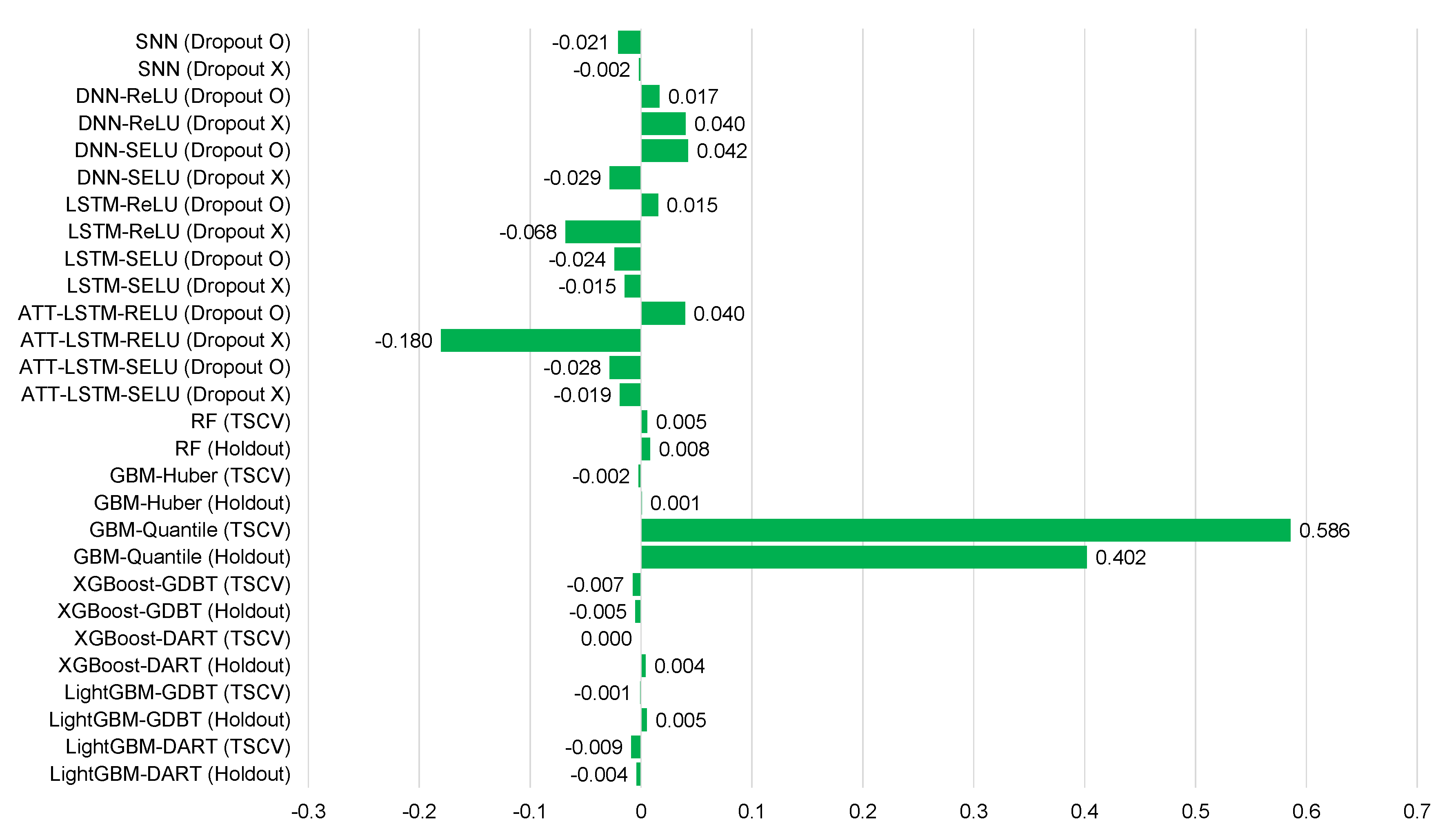

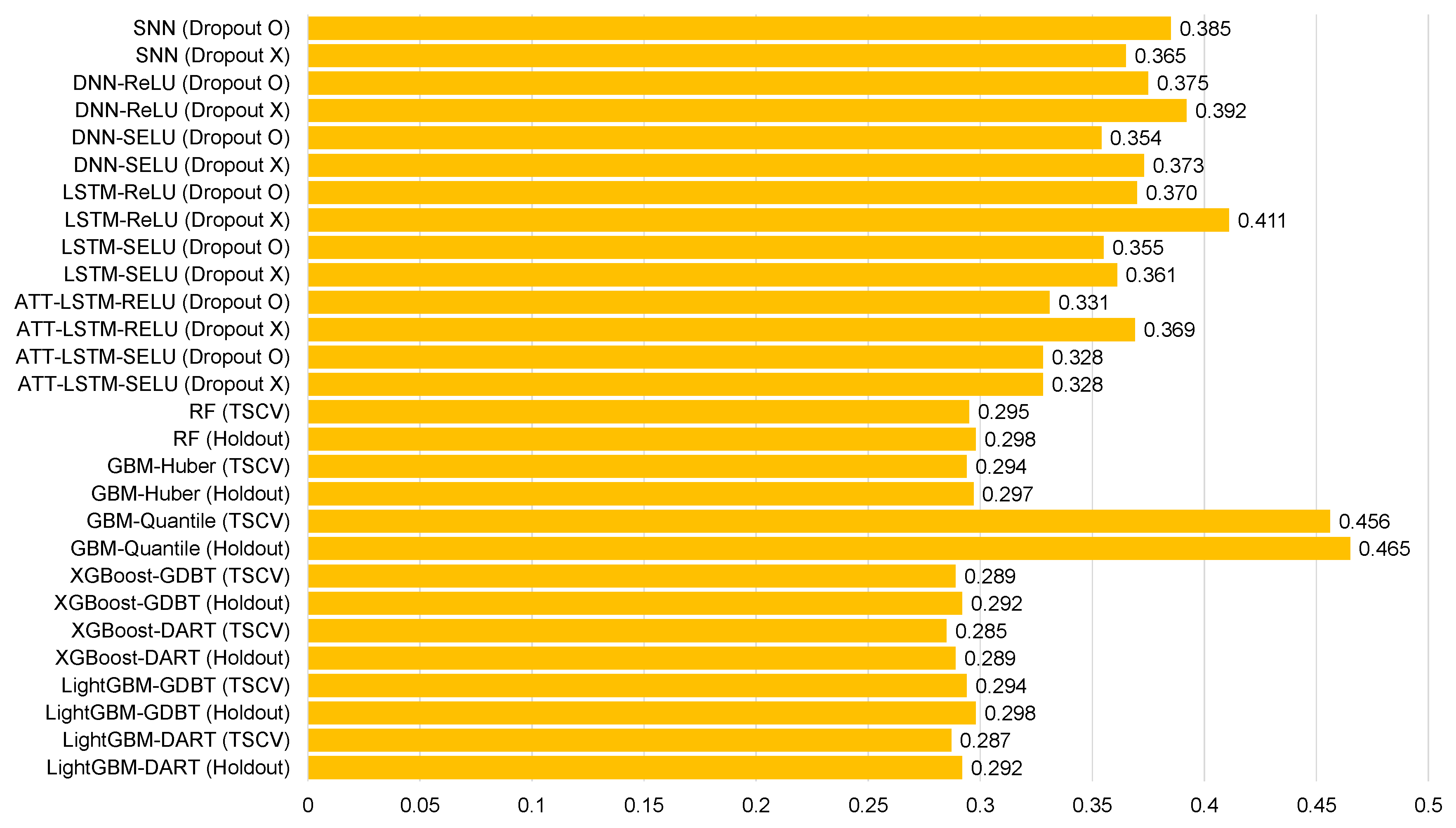

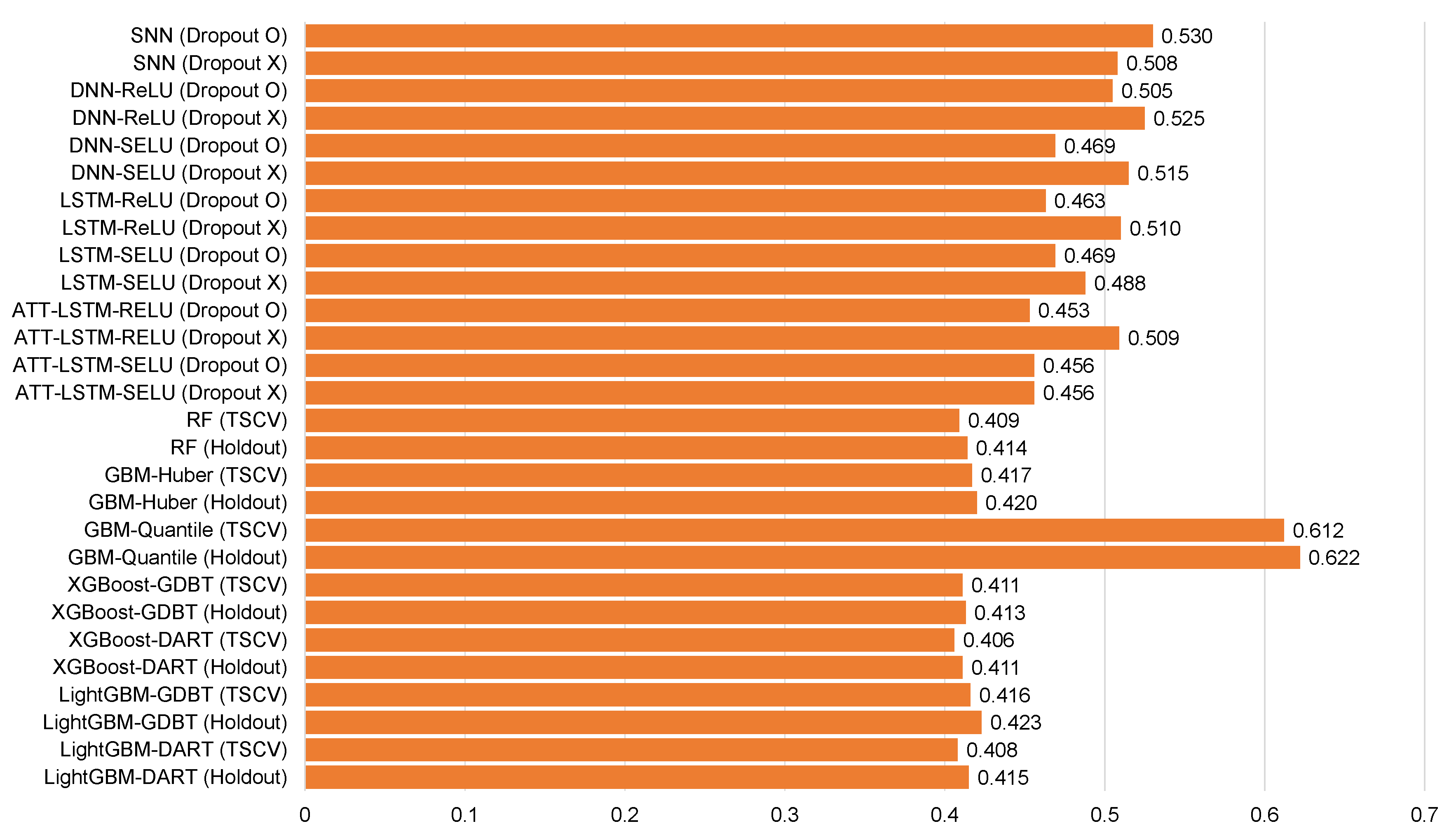

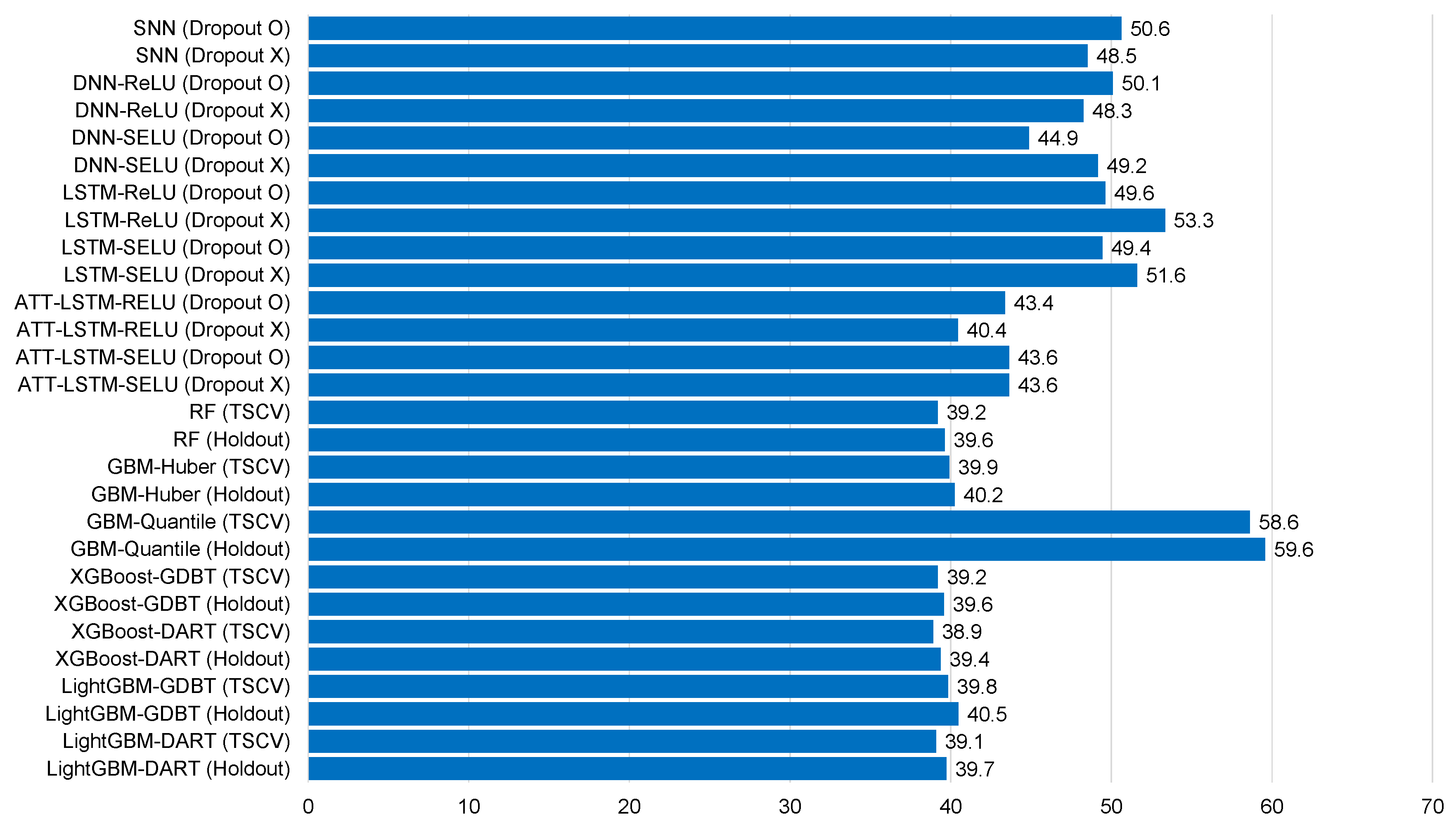

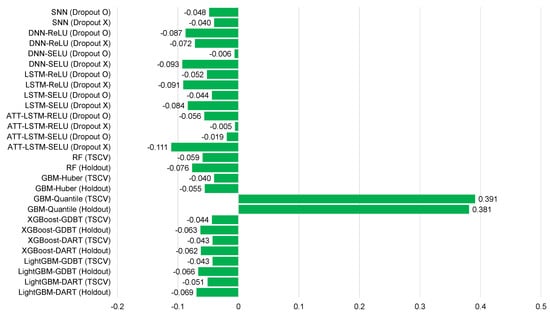

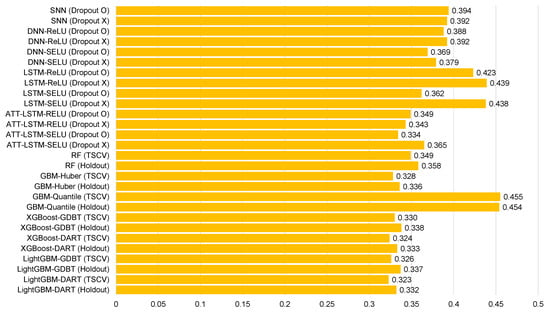

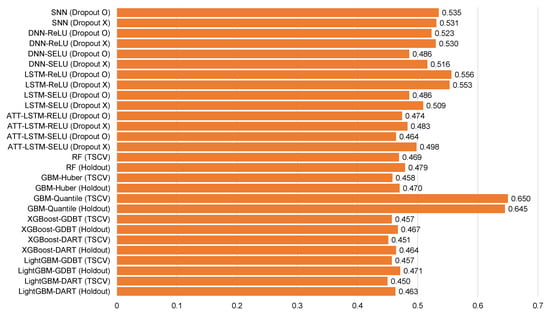

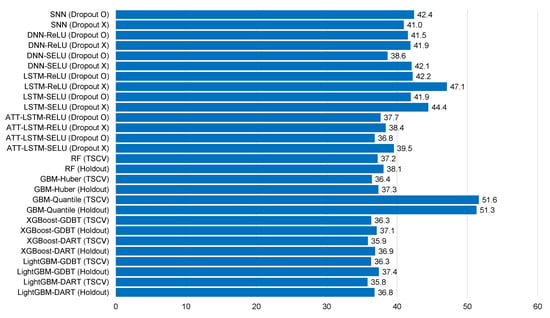

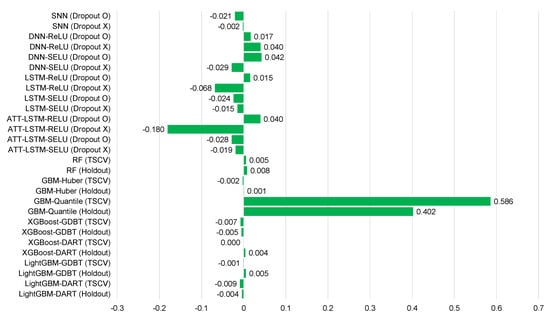

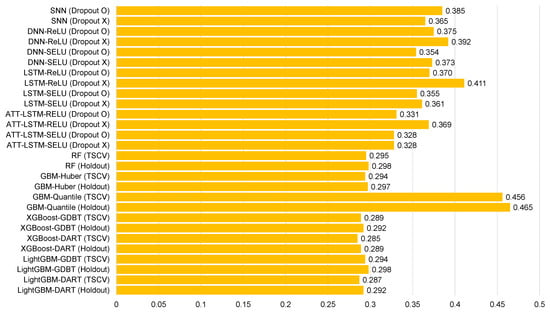

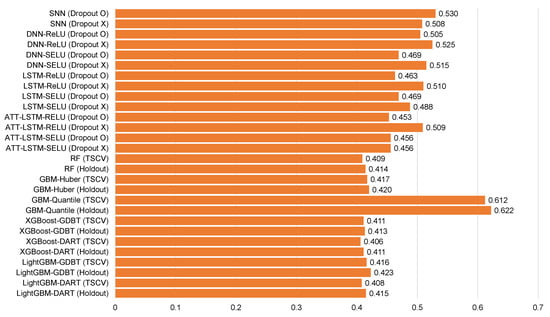

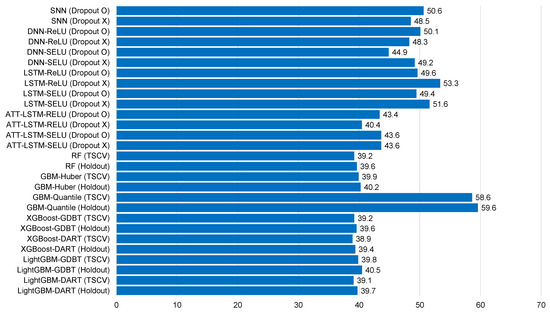

Tables 5–13 and Figures 6–13 demonstrate that our model achieved lower RMSE and MAE values than all other forecasting models considered, except the XGBoost model. In addition, the tree-based ensemble models performed better than the deep learning-based models. Moreover, the TSCV scheme yielded better prediction performance than the holdout scheme, as presented in Table 13. XGBoost and LightGBM exhibited similar prediction performance; however, LightGBM required 220 s for training and testing, whereas XGBoost required 3798 s, making LightGBM about 17 times faster. Hence, considering accuracy and time together, LightGBM offers a clear advantage. In the forecasting results of LightGBM, the MAE and RMSE values were lowest at the first time point and increased as the forecast horizon lengthened.

Table 5.

Mean bias error (MBE) distribution for each model for Ildo-1 ().

Table 6.

Mean absolute error (MAE) distribution for each model for Ildo-1. A cooler color indicates a lower MAE value, whereas a warmer color indicates a higher MAE value ().

Table 7.

Root mean square error (RMSE) distribution for each model for Ildo-1. A cooler color indicates a lower RMSE value, whereas a warmer color indicates a higher RMSE value ().

Table 8.

Normalized root mean square error (NRMSE) distribution for each model for Ildo-1. A cooler color indicates a lower NRMSE value, whereas a warmer color indicates a higher NRMSE value ().

Table 9.

Mean bias error (MBE) distribution for each model for Gosan-ri ().

Table 10.

Mean absolute error (MAE) distribution for each model for Gosan-ri. A cooler color indicates a lower MAE value, whereas a warmer color indicates a higher MAE value ().

Table 11.

Root mean square error (RMSE) distribution for each model for Gosan-ri. A cooler color indicates a lower RMSE value, whereas a warmer color indicates a higher RMSE value ().

Table 12.

Normalized root mean square error (NRMSE) distribution for each model for Gosan-ri. A cooler color indicates a lower NRMSE value, whereas a warmer color indicates a higher NRMSE value ().

Table 13.

Comparison of the average mean bias error, mean absolute error, root mean square error, and normalized root mean square error across forecasting models.

Figure 6.

Average mean bias error for each model for Ildo-1 ().

Figure 7.

Average mean absolute error for each model for Ildo-1 ().

Figure 8.

Average root mean square error for each model for Ildo-1 ().

Figure 9.

Average normalized root mean square error for each model for Ildo-1 ().

Figure 10.

Average mean bias error for each model for Gosan-ri ().

Figure 11.

Average mean absolute error for each model for Gosan-ri ().

Figure 12.

Average root mean square error for each model for Gosan-ri ().

Figure 13.

Average normalized root mean square error for each model for Gosan-ri ().

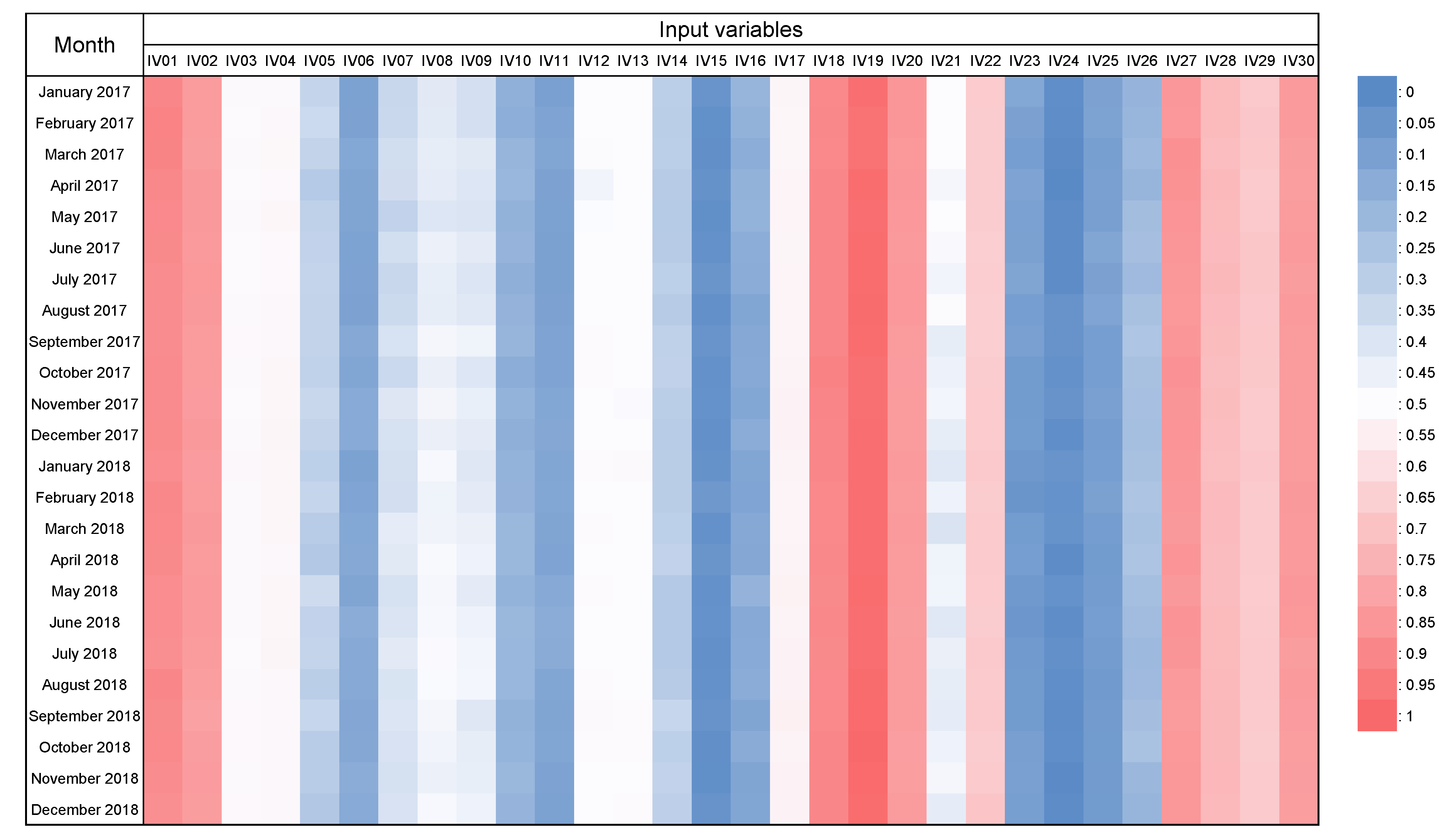

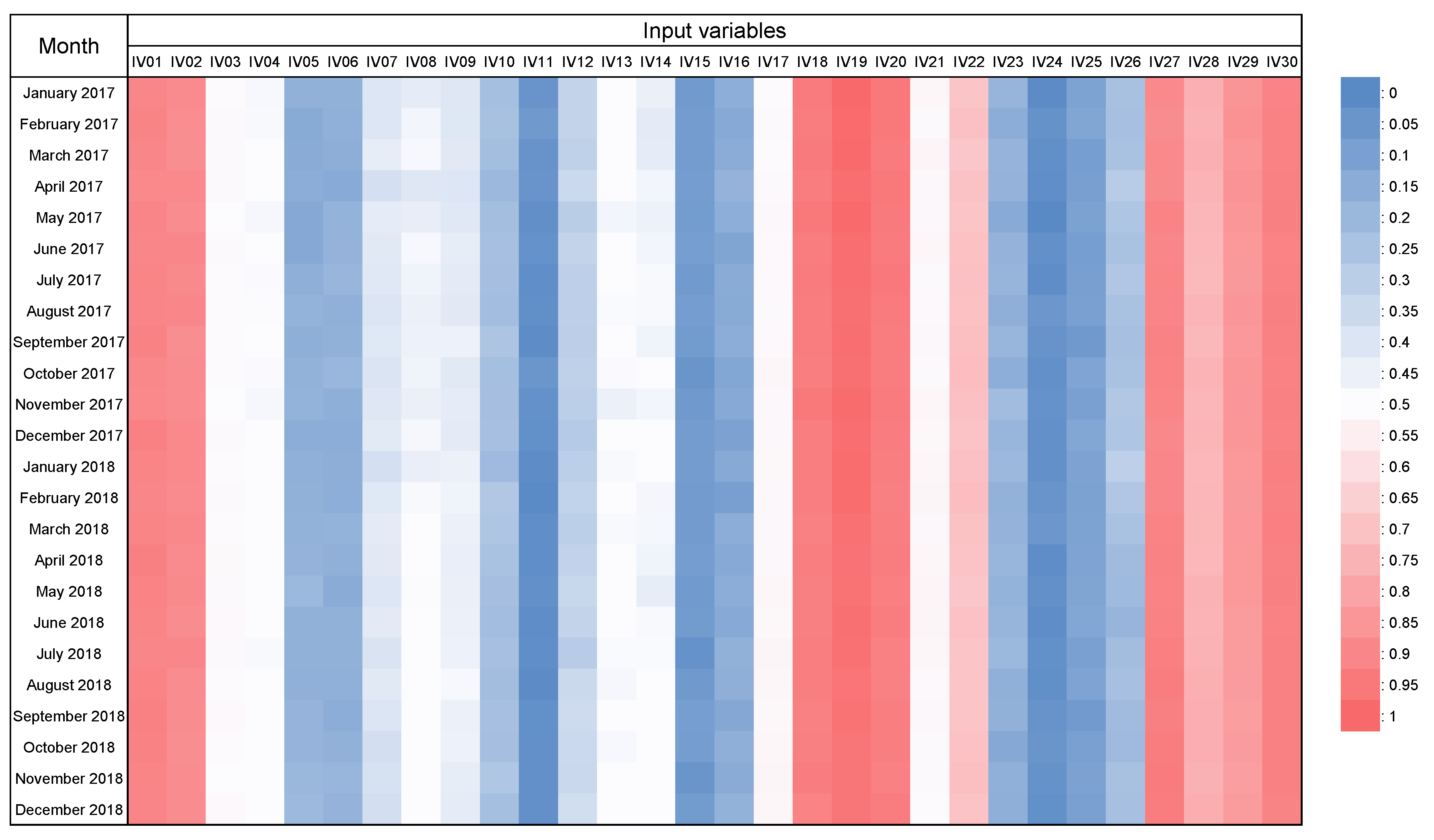

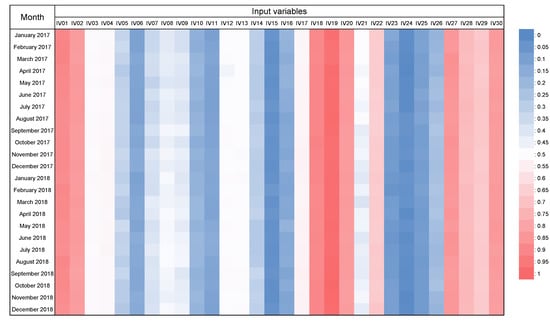

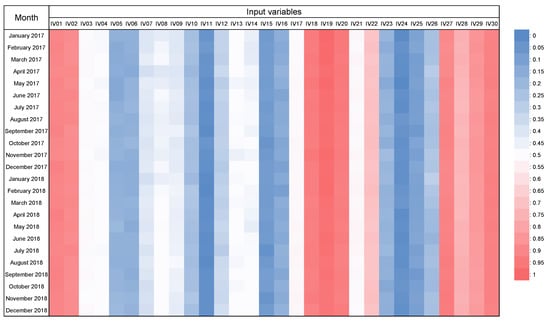

Feature importance measures how much each input variable contributes to a model's predictions. It can be obtained from models such as logistic regression and tree-based models. We computed the feature importance of our model, LightGBM-DART (TSCV), at each test point (one month) of the TSCV cycle. Figures 14 and 15 present heat maps of the feature importance of the input variables listed in Table 1 for both regions. For readability, the importance values were scaled to the range 0 to 1 using min–max normalization. From the figures, we confirmed that the two day-of-year variables consistently exhibited high feature importance and that, among the meteorological variables, temperature, humidity, and wind speed also exhibited high feature importance. In particular, the importance of humidity increased over time.
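One way to obtain a heat-map row of 30 variable importances from a fitted multi-output model is sketched below. It assumes that the 330 columns are ordered as 11 time-point blocks of 30 variables, that importances are averaged over the 11 per-step estimators and summed over each variable's 11 copies, and that min–max scaling is applied per test month. These aggregation choices and the helper names are ours, not necessarily the authors'. Note that LightGBM's `feature_importances_` counts splits by default, whereas scikit-learn's tree models report normalized impurity reductions; min–max scaling puts either on the same 0 to 1 scale.

```python
import numpy as np

N_STEPS, N_VARS = 11, 30   # 330 columns assumed to be 11 blocks of 30 variables


def variable_importance(multi_output_model) -> np.ndarray:
    """Average importances over per-step estimators, then sum each variable's 11 copies."""
    per_column = np.mean(
        [est.feature_importances_ for est in multi_output_model.estimators_], axis=0)
    return per_column.reshape(N_STEPS, N_VARS).sum(axis=0)


def min_max(values: np.ndarray) -> np.ndarray:
    """Scale one heat-map row (one TSCV test month) to the range [0, 1]."""
    lo, hi = values.min(), values.max()
    if hi == lo:
        return np.zeros_like(values, dtype=float)
    return (values - lo) / (hi - lo)
```

Stacking `min_max(variable_importance(model))` for each monthly fold gives a months × variables matrix of the kind shown in the heat maps.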

Figure 14.

Results of feature importance analysis via time-series cross-validation using the input variables in Table 1 for Ildo-1. A cooler color indicates a lower feature importance value, whereas a warmer color indicates a higher feature importance value.

Figure 15.

Results of feature importance analysis via time-series cross-validation using the input variables in Table 1 for Gosan-ri. A cooler color indicates a lower feature importance value, whereas a warmer color indicates a higher feature importance value.

4. Conclusions

In this paper, we proposed an MSA global solar radiation forecasting method based on LightGBM. We first configured 330 input variables from the time and weather information provided by the KMA to forecast global solar radiation at multiple time points over the next 24 h. We then constructed a LightGBM-based forecasting model with DART boosting. To evaluate its performance, we implemented diverse ensemble-based and deep learning-based models and compared their performance using global solar radiation data from Jeju Island. The comparison confirmed that our model achieved forecasting performance better than, or comparable to, that of the other methods at a much lower training cost. In future work, we plan to develop a forecasting model that uses only historical global solar radiation data, to provide accurate forecasts in regions where meteorological information is not available. We also plan to study smart grid scheduling based on photovoltaic forecasting.

Author Contributions

Conceptualization, J.P. and J.M.; methodology, J.P.; software, J.P. and S.J.; validation, J.P. and S.J.; formal analysis, J.M.; investigation, S.J.; data curation, J.P. and S.J.; writing—original draft preparation, J.P. and J.M.; writing—review and editing, E.H.; visualization, J.M.; supervision, E.H.; project administration, E.H.; funding acquisition, E.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Korea Electric Power Corporation (grant number: R18XA05) and in part by Energy Cloud R&D Program (grant number: 2019M3F2A1073179) through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moon, J.; Kim, K.-H.; Kim, Y.; Hwang, E. A Short-Term Electric Load Forecasting Scheme Using 2-Stage Predictive Analytics. In Proceedings of the IEEE International Conference on Big Data and Smart Computing (BigComp), Shanghai, China, 15–17 January 2018; pp. 219–226.

- Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput. Appl. 2019, 31, 2727–2740.

- Son, M.; Moon, J.; Jung, S.; Hwang, E. A Short-Term Load Forecasting Scheme Based on Auto-Encoder and Random Forest. In Proceedings of the International Conference on Applied Physics, System Science and Computers, Dubrovnik, Croatia, 26–28 September 2018; pp. 138–144.

- Moon, J.; Park, J.; Hwang, E.; Jun, S. Forecasting power consumption for higher educational institutions based on machine learning. J. Supercomput. 2018, 74, 3778–3800.

- Wang, H.Z.; Li, G.Q.; Wang, G.B.; Peng, J.C.; Jiang, H.; Liu, Y.T. Deep learning based ensemble approach for probabilistic wind power forecasting. Appl. Energy 2017, 188, 56–70.

- Moon, J.; Kim, Y.; Son, M.; Hwang, E. Hybrid Short-Term Load Forecasting Scheme Using Random Forest and Multilayer Perceptron. Energies 2018, 11, 3283.

- Jung, S.; Moon, J.; Park, S.; Hwang, E. A Probabilistic Short-Term Solar Radiation Prediction Scheme Based on Attention Mechanism for Smart Island. KIISE Trans. Comput. Pract. 2019, 25, 602–609.

- Park, S.; Moon, J.; Jung, S.; Rho, S.; Baik, S.W.; Hwang, E. A Two-Stage Industrial Load Forecasting Scheme for Day-Ahead Combined Cooling, Heating and Power Scheduling. Energies 2020, 13, 443.

- Lee, M.; Lee, W.; Jung, J. 24-Hour photovoltaic generation forecasting using combined very-short-term and short-term multivariate time series model. In Proceedings of the 2017 IEEE Power & Energy Society General Meeting, Chicago, IL, USA, 16–20 July 2017; pp. 1–5.

- Khosravi, A.; Koury, R.N.N.; Machado, L.; Pabon, J.J.G. Prediction of hourly solar radiation in Abu Musa Island using machine learning algorithms. J. Clean. Prod. 2018, 176, 63–75.

- Meenal, R.; Selvakumar, A.I. Assessment of SVM, empirical and ANN based solar radiation prediction models with most influencing input parameters. Renew. Energy 2018, 121, 324–343.

- Aggarwal, S.; Saini, L. Solar energy prediction using linear and non-linear regularization models: A study on AMS (American Meteorological Society) 2013–14 Solar Energy Prediction Contest. Energy 2014, 78, 247–256.

- Yadav, A.K.; Chandel, S. Solar radiation prediction using Artificial Neural Network techniques: A review. Renew. Sustain. Energy Rev. 2014, 33, 772–781.

- Rao, K.D.V.S.K.; Premalatha, M.; Naveen, C. Analysis of different combinations of meteorological parameters in predicting the horizontal global solar radiation with ANN approach: A case study. Renew. Sustain. Energy Rev. 2018, 91, 248–258.

- Cornaro, C.; Pierro, M.; Bucci, F. Master optimization process based on neural networks ensemble for 24-h solar irradiance forecast. Sol. Energy 2015, 111, 297–312.

- Dahmani, K.; Dizene, R.; Notton, G.; Paoli, C.; Voyant, C.; Nivet, M.L. Estimation of 5-min time-step data of tilted solar global irradiation using ANN (Artificial Neural Network) model. Energy 2014, 70, 374–381.

- Leva, S.; Dolara, A.; Grimaccia, F.; Mussetta, M.; Sahin, E. Analysis and validation of 24 hours ahead neural network forecasting for photovoltaic output power. Math. Comput. Simul. 2017, 131, 88–100.

- Amrouche, B.; le Pivert, X. Artificial neural network based daily local forecasting for global solar radiation. Appl. Energy 2014, 130, 333–341.

- Kaba, K.; Sarıgül, M.; Avcı, M.; Kandırmaz, H.M. Estimation of daily global solar radiation using deep learning model. Energy 2018, 162, 126–135.

- Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

- Benali, L.; Notton, G.; Fouilloy, A.; Voyant, C.; Dizene, R. Solar radiation forecasting using artificial neural network and random forest methods: Application to normal beam, horizontal diffuse and global components. Renew. Energy 2019, 132, 871–884.

- Lee, J.; Wang, W.; Harrou, F.; Sun, Y. Reliable solar irradiance prediction using ensemble learning-based models: A comparative study. Energy Conv. Manag. 2020, 208, 112582.

- Rew, J.; Cho, Y.; Moon, J.; Hwang, E. Habitat Suitability Estimation Using a Two-Stage Ensemble Approach. Remote Sens. 2020, 12, 1475.

- Kim, J.; Moon, J.; Hwang, E.; Kang, P. Recurrent inception convolution neural network for multi short-term load forecasting. Energy Build. 2019, 194, 328–341.

- Jung, S.; Moon, J.; Park, S.; Rho, S.; Baik, S.W.; Hwang, E. Bagging Ensemble of Multilayer Perceptrons for Missing Electricity Consumption Data Imputation. Sensors 2020, 20, 1772.

- Kim, K.H.; Oh, J.K.-W.; Jeong, W. Study on Solar Radiation Models in South Korea for Improving Office Building Energy Performance Analysis. Sustainability 2016, 8, 589.

- Lee, M.; Park, J.; Na, S.-I.; Choi, H.S.; Bu, B.-S.; Kim, J. An Analysis of Battery Degradation in the Integrated Energy Storage System with Solar Photovoltaic Generation. Electronics 2020, 9, 701.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T. LightGBM: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems; Morgan Kaufmann Publishers: San Mateo, CA, USA, 2017; pp. 3148–3156.

- Rashmi, K.V.; Gilad-Bachrach, R. DART: Dropouts meet Multiple Additive Regression Trees. In Proceedings of the AISTATS, San Diego, CA, USA, 9–12 May 2015.

- De Livera, A.M.; Hyndman, R.J.; Snyder, R.D. Forecasting time series with complex seasonal patterns using exponential smoothing. J. Am. Stat. Assoc. 2011, 106, 1513–1527.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Natekin, A.; Knoll, A. Gradient Boosting Machines: A Tutorial. Front. Neurorobot. 2013, 7, 21.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Park, S.; Moon, J.; Hwang, E. 2-Stage Electric Load Forecasting Scheme for Day-Ahead CCHP Scheduling. In Proceedings of the IEEE International Conference on Power Electronics and Drive System (PEDS), Toulouse, France, 9–12 July 2019.

- Rahman, R.; Otridge, J.; Pal, R. IntegratedMRF: Random forest-based framework for integrating prediction from different data types. Bioinformatics 2017, 33, 1407–1410.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Feng, C.; Cui, M.; Hodge, B.-M.; Zhang, J. A data-driven multi-model methodology with deep feature selection for short-term wind forecasting. Appl. Energy 2017, 190, 1245–1257.

- Moon, J.; Park, S.; Rho, S.; Hwang, E. A comparative analysis of artificial neural network architectures for building energy consumption forecasting. Int. J. Distrib. Sens. Netw. 2019, 15.

- Moon, J.; Jung, S.; Rew, J.; Rho, S.; Hwang, E. Combination of short-term load forecasting models based on a stacking ensemble approach. Energy Build. 2020, 216, 109921.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Li, H.; Shen, Y.; Zhu, Y. Stock Price Prediction Using Attention-based Multi-Input LSTM. In Proceedings of the 10th Asian Conference on Machine Learning (ACML 2018), Beijing, China, 14–16 November 2018; pp. 454–469.

- Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-Normalizing Neural Networks. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 971–980.

- Moon, J.; Kim, J.; Kang, P.; Hwang, E. Solving the Cold-Start Problem in Short-Term Load Forecasting Using Tree-Based Methods. Energies 2020, 13, 886.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).