Abstract

Radar-based classification of human activities and gait has attracted significant attention, with a large number of approaches proposed in terms of features and classification algorithms. A common approach in activity classification attempts to find the algorithm (features plus classifier) that can deal with the multiple activities analysed in one study, such as walking, sitting, drinking and crawling. However, using the same set of features for multiple activities can be suboptimal for individual activities and does not take into account the diversity of kinematic movements that could be captured by diverse features. In this paper, we propose a hierarchical classification approach that uses a large variety of features extracted from multiple radar data domains, including but not limited to energy features such as entropy and the energy curve, physical features such as centroid and bandwidth, and image-based features such as skewness. Feature selection is used at each step of the hierarchical model to select the best set of features to discriminate the target activity from the others, showing improvements with respect to the more conventional approach of using a multiclass model. The proposed approach is validated on a large experimental dataset of 1078 recorded samples, ranging in length from 5 s to 10 s, yielding 95.4% accuracy in classifying six activities. The approach is also validated on a personnel recognition task to identify individual subjects from their walking gait, yielding 83.7% accuracy for ten subjects and 68.2% for a significantly larger group of 60 subjects.

1. Introduction

Sensor-based home health monitoring, including human activity recognition (HAR) and its applications, has gained significant interest and is widely used in healthcare. By monitoring the health parameters and activity patterns of elderly people and patients, patient care and re-examination workflows can be better managed, increasing efficiency and reducing the stress on healthcare systems [1]. Additionally, activity monitoring can make people more aware of their own health conditions and support assisted living services. For instance, promptly reporting dangerous situations such as stumbles and falls, which can lead to severe injuries, helps emergency centres respond in time and reduces potential risks [2]. Recording gait information can provide parameters such as walking speed and stride length, which are essential indicators of wider health conditions and can also serve as a biometric measure for personnel recognition [3].

Currently, many activity monitoring methods [4,5,6] are based on cameras or wearable sensors, which can be limited in some cases. For instance, camera monitoring can be affected by direct sunlight and the brightness of the environment, and may raise privacy issues. Wearable sensors need to be worn, charged, and carried around to be effective, which can be an issue for cognitively impaired patients. Compared to these technologies, radar monitoring offers contactless sensing and insensitivity to lighting conditions. Radar-based sensing can capture the motion and micro-motions generated by the human body and its individual parts, and features can then be extracted from radar signatures to discriminate between activities while respecting the privacy of users.

In the past few years, a significant body of research has focused on radar-based HAR. Ding et al. [7] used an ultra-wideband (UWB) radar to recognise daily human motions with physical empirical features and features based on principal component analysis, achieving an average classification accuracy of 89.7% for six in situ motions and 88.9% for another six non-in situ motions. In [8], a Frequency Modulated Continuous Wave (FMCW) radar was used in conjunction with wearable sensors to enhance the discrimination results; the average accuracy reached 97.8% when a decision-level fusion scheme was exploited. In [9], the singular value decomposition (SVD) was used to extract features from the spectrogram, with human micro-Doppler signatures measured by the NetRAD system developed at University College London; the classification accuracy was approximately 99%. In [10], features extracted from the cadence velocity diagram (CVD) were used with a support vector machine and achieved approximately 91% accuracy, compared with 87% for features extracted from the spectrogram. Jokanovic et al. [11] applied a deep neural network (DNN) to radar spectrograms for fall detection, achieving an overall accuracy of 87%. The DNN consisted of two stacked auto-encoders and a SoftMax regression classifier, and four human activities were considered, namely, walking, falling, bending, and sitting.

Gait analysis is a more specific study of human activities. For most people, walking gait tends to present stable and steady signatures once the initial acceleration and final deceleration phases are removed, and this can provide clear and distinctive micro-Doppler signatures. In [12], it was shown that the micro-Doppler signature can provide valuable information for the gait analysis of pedestrians, with walking and running distinguished by comparing the Doppler shift of the torso and the cadence frequency of the legs. In [13], micro-Doppler signatures, the cadence velocity diagram and autocorrelation were used to extract features, including the central Doppler frequency of walking and the fundamental gait cadence frequency, to distinguish running and walking actions of a single person and of a group of people. Seifert et al. [14] proposed extracting gait patterns from time-frequency representations with sum-of-harmonics modelling, obtaining an average accuracy of 88%. Recently, personnel recognition based on gait analysis has also received increasing attention. In [15], the authors first proposed the voted monostatic DCNN (VMo-DCNN) method and then, by merging the fusion step into the network architecture, the multi-static DCNN (Mul-DCNN) method. Mul-DCNN performed slightly better than VMo-DCNN, with highest average accuracies of 99.94% and 99.75%, respectively. In [16], different statistical learning algorithms were compared with the number of subjects ranging from two to seven. Both the random forest and Naïve Bayes algorithms obtained their best performance, 90% accuracy, with only two subjects, highlighting how challenging this task is.

The vast majority of human activity recognition research tends to apply the same algorithm (the features extracted from the radar data and the classifier) to recognise all activities in a given multiclass problem, i.e., one algorithm fits all cases, and there have been few attempts to capitalise on the diversity of kinematics that can be captured by diverse features or diverse radar data domains. For example, in [7] the authors showed that in situ and non-in situ actions can be classified using different features and classifiers to improve the overall performance. Different domains of radar data, for example the Cadence Velocity Diagram (CVD) and range information, can complement the information traditionally used in the micro-Doppler spectrogram (MDS) [17]. Investigating different radar data domains can provide more degrees of freedom to optimise both human activity and personnel recognition tasks using different fusion schemes [18]. Combining diverse features and diverse radar data domains can enable novel approaches based on hierarchical classification, where each activity of interest is separated from the others in a one-versus-all approach, rather than classifying all activities at once. This can also be applied to personnel identification, i.e., recognising an individual from their gait out of a pool of known subjects. In this paper, we present a possible approach along these lines, with hierarchical classification models for human activity and personnel recognition validated on a large set of experimental radar data. The main contributions are summarised as follows:

- A novel hierarchical human activity recognition scheme with optimised feature sets and classification algorithm combinations for improved performance on one-vs-all schemes;

- The provision of insight into the robustness of features for different activities by analysing which features are consistently selected for classification and which ones are flexibly selected for different activities;

- A novel personnel recognition algorithm that combines multiple domains (spectrogram, cadence velocity diagram, velocity diagram, and acceleration diagram), first to identify stable walking phases suitable for classification and then to identify the individual;

- The provision of insight into what affects personnel recognition performance, based on the quality of the radar signatures.

This paper is organised as follows: Section 2 presents the experimental setup for data collection and radar signal pre-processing. Section 3 introduces the feature extraction approaches for both activity classification and personnel recognition based on gait analysis, as well as the validation of the proposed feature measurement algorithms. Section 4 presents the classification results and the improvements obtained from feature analysis. Section 5 concludes the paper and discusses further directions.

2. Data Collection and Pre-Processing

In this study, the radar is an off-the-shelf Frequency Modulated Continuous Wave (FMCW) system, the Ancortek SDR-580AD. It operates at a 5.8 GHz centre frequency, with an instantaneous bandwidth of 400 MHz and a chirp duration of 1 ms. This yields an unambiguous Doppler frequency range of ±500 Hz, which is sufficient to capture human activities performed indoors. The transmitted power was set to +19 dBm, and two linearly polarised Yagi antennas with a gain of 17 dBi and a beamwidth of approximately 24° in azimuth and elevation were used [8]. According to [19], the radar uses hardware dechirping (stretch processing) for downconversion [20], which consists of mixing the received signal with the transmitted signal. The downconverted signal (the beat frequency) is then low-pass filtered and amplified before digitization. There are two receive channels, one in-phase and one in quadrature, to capture complex samples. With 128 samples per sweep (1 ms), the ADC sampling rate is 128 kHz, and samples are encoded on 12 bits.
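As a quick consistency check on these figures, the short Python sketch below applies the standard FMCW relations: range resolution c/(2B), an unambiguous Doppler span of ±PRF/2 with one chirp per 1 ms repetition interval, and radial velocity λf_D/2. Rounding c to 3×10^8 m/s and reusing the 1.25 Hz Doppler bin spacing quoted later in this section are our assumptions for illustration; the function name is hypothetical.

```python
C = 3e8  # speed of light in m/s (rounded)

def fmcw_figures(fc_hz, bandwidth_hz, chirp_s, doppler_bin_hz):
    """Derived FMCW quantities from the radar settings (illustrative helper)."""
    wavelength = C / fc_hz                       # carrier wavelength (m)
    range_res = C / (2 * bandwidth_hz)           # range resolution c/(2B) (m)
    prf = 1.0 / chirp_s                          # one chirp per repetition interval
    doppler_max = prf / 2                        # unambiguous Doppler span is +/- PRF/2
    v_max = wavelength * doppler_max / 2         # radial velocity v = lambda * f_D / 2
    v_res = wavelength * doppler_bin_hz / 2      # velocity step of one Doppler bin
    return wavelength, range_res, doppler_max, v_max, v_res

wl, dr, fd_max, v_max, v_res = fmcw_figures(5.8e9, 400e6, 1e-3, 1.25)
print(f"wavelength     {wl * 100:.2f} cm")
print(f"range res      {dr * 100:.1f} cm")
print(f"Doppler span   +/-{fd_max:.0f} Hz")
print(f"max |v|        {v_max:.2f} m/s")
print(f"velocity step  {v_res:.3f} m/s")
print(f"bins 5..25     {5 * dr:.3f} m to {25 * dr:.3f} m")
```

The printed values (37.5 cm range resolution, ±500 Hz, about 0.03 m/s per Doppler bin, and 1.875 m to 9.375 m for range bins 5 to 25) agree with the figures given in this section.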

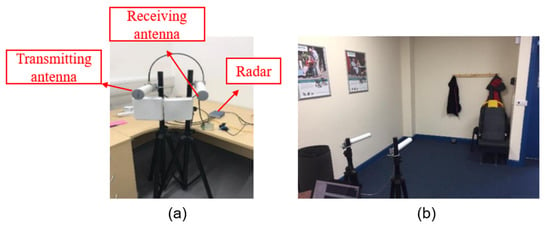

Figure 1 presents the radar equipment in two of the recording locations. The data [21] were recorded at three locations: the University of Glasgow, the North Glasgow Housing Association facility, and the Age UK West Cumbria centre. Sixty volunteers aged from 21 to 98, including 39 males and 21 females, were asked to perform six activities, namely, walking (1), sitting (2), standing up (3), drinking water (4), picking an object from the floor (5), and falling (6), with each action repeated three times per volunteer. Falls were not performed by volunteers recorded outside the University of Glasgow, i.e., in the care facilities (the North Glasgow and West Cumbria data samples). In the recorded database, walking files last 10 s and files for the other activities last 5 s; in total, 1078 samples are collected and analysed for feature extraction and activity classification. Of these, 180 walking samples are used for gait analysis and personnel recognition.

Figure 1.

Radar configuration in the laboratory setup at the University of Glasgow (a), and in the West Cumbria Age UK centre (b).

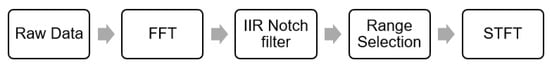

Figure 2 presents the signal pre-processing steps. Processing starts by reading and reshaping the raw FMCW data in the time domain, i.e., the digitized, complex-valued, time-delayed and frequency-shifted reflected signals, before a Fast Fourier Transform (FFT) is applied to extract the range information. In this study, the data matrix is reshaped into 128 × M, where 128 is the number of time samples per sweep (also the FFT size) and M is the number of chirps in one activity sample. An FFT is then applied to each column of the data matrix to obtain the spectrum of each chirp; its peak lies at the beat frequency, which is proportional to the range of the detected target, so the range profile can be extracted. Next, a 9th-order infinite impulse response (IIR) high-pass Butterworth filter with a cutoff frequency of 0.0075 Hz is applied to the range profile as a moving target indicator, acting as a notch that removes the components near 0 Hz caused by stationary objects in the environment. After that, only the range bins of interest where the subjects are detected, bins 5 to 25 (1.875 m to 9.375 m), are retained, and a Short-Time Fourier Transform (STFT) with a 0.2 s Hamming window and 95% overlap is applied to the resulting range-time data matrix to extract the time-varying micro-Doppler signatures. For an FMCW radar system, the range resolution is inversely proportional to the bandwidth, while the Doppler resolution depends on the chirp duration and the number of FFT points used in the STFT. For the data used in this study, the range resolution is 37.5 cm and the Doppler resolution is 1.25 Hz (about 0.03 m/s), which enables accurate velocity analysis and activity classification.
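The pre-processing chain can be sketched in Python with NumPy and SciPy as below. This is an illustration under stated assumptions, not the processing code used for the dataset: the raw samples are assumed to be a flat complex array with 128 samples per chirp, the 0.0075 cutoff is passed to `scipy.signal.butter` as a normalised frequency (a fraction of the Nyquist frequency), the filter is run in second-order sections for numerical stability, an 800-point STFT is assumed because it gives the 1.25 Hz bin spacing quoted above at a 1 kHz chirp rate, and the selected range bins are summed after the STFT. The function name is hypothetical.

```python
import numpy as np
from scipy import signal

def micro_doppler_spectrogram(raw, samples_per_chirp=128, prf=1000.0,
                              range_bins=(5, 25), win_s=0.2, overlap=0.95,
                              nfft=800):
    """Reshape -> range FFT -> MTI high-pass -> range-bin selection -> STFT."""
    m = raw.size // samples_per_chirp
    # 128 x M matrix: fast time along rows, one column per chirp
    data = raw[: m * samples_per_chirp].reshape(m, samples_per_chirp).T
    # Range FFT down each column turns every chirp into a range profile
    range_profiles = np.fft.fft(data, axis=0)
    # MTI: 9th-order Butterworth high-pass along slow time (across chirps);
    # 0.0075 is treated as a normalised cutoff (assumption)
    sos = signal.butter(9, 0.0075, btype="highpass", output="sos")
    mti = signal.sosfilt(sos, range_profiles, axis=1)
    # Keep only the range bins where the subject is expected (inclusive)
    roi = mti[range_bins[0]:range_bins[1] + 1, :]
    # STFT along slow time: 0.2 s Hamming window, 95 % overlap
    nperseg = int(round(win_s * prf))
    noverlap = int(round(overlap * nperseg))
    f, t, z = signal.stft(roi, fs=prf, window="hamming", nperseg=nperseg,
                          noverlap=noverlap, nfft=nfft,
                          return_onesided=False, axis=-1)
    # z has shape (range bins, Doppler bins, time bins); centre 0 Hz, sum bins
    spec = np.abs(np.fft.fftshift(z, axes=1)).sum(axis=0)
    return np.fft.fftshift(f), t, spec
```

A two-sided STFT is used because the complex I/Q data distinguish positive (approaching) from negative (receding) Doppler shifts.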

Figure 2.

Block diagram of signal pre-processing.

3. Feature Extraction and Validation

This section describes the proposed approaches for feature extraction, applied to both scenarios of monitoring human activities and gait analysis of specific individuals. The approaches proposed for gait analysis are also validated on simple simulated radar data of human gait and through a preliminary test on the experimental data.

3.1. Activity Feature Extraction

For activity classification, two feature extraction approaches are considered. The first is a set of handcrafted features, including empirical features calculated directly from the data matrices in the range-time (RT), range-Doppler and micro-Doppler spectrogram (D) domains, and transform-based features inspired by [8,9], which are calculated on the cadence velocity diagram and on the singular value decomposition of the base data matrices (spectrogram, range-time and range-Doppler data) in multiple radar data domains. The second is the set of TSFRESH features [22].

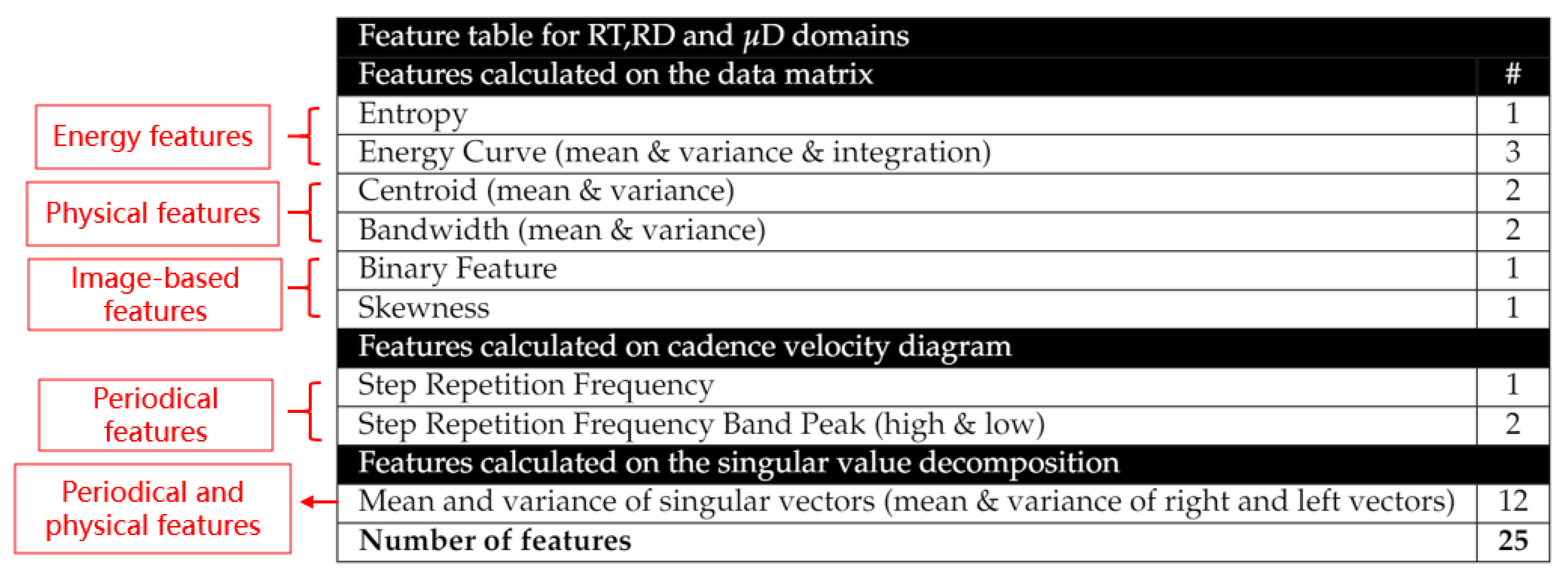

Table 1 shows the features calculated for activity classification. The left column lists the feature names and the right column lists how many of each are calculated. In total, 25 features can be calculated in one domain, and the same features are applied in the range-time, range-Doppler and micro-Doppler (spectrogram) domains to describe the different characteristics and information held in each domain; the exception is the Cadence Velocity Diagram, which by definition is the FFT of the spectrogram along the time axis. These features fall into the following types: energy features, which describe how the energy depends on position and how unpredictable the energy distribution is; physical features, which describe the mass centre of the Doppler patterns and the spread of the Doppler shifts; image-based features, which describe the symmetry and shape of the spectrogram patterns; cadence velocity diagram (CVD) features; and singular value decomposition (SVD) features, which capture periodic and physical properties such as the velocity of the activity.

Table 1.

List of the features extracted for activity classification.

Some features in the table have interesting properties. For instance, the entropy and energy curve of the spectrogram are used to analyse the energy properties of activities. For a time-frequency spectrogram S(i,j), the entropy is given by Equations (1) and (2), where P is the probability distribution of the energy.

The energy curve shows how the energy of the target depends on its position. In the Doppler spectrogram, it is calculated by summing all spectrogram values in each time bin. In this study, the entropy and the mean, standard deviation and trapezoidal numerical integral of the energy curve are used as features, as they follow the energy status of the activity during the movement. If an activity involves a random, unpredictable and intense movement, such as a sudden fall to the ground, it produces a more widely spread energy curve and a larger entropy, which can be a distinctive characteristic for classification.
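A minimal sketch of these energy features follows. It assumes the usual forms P(i,j) = S(i,j) / Σ_i Σ_j S(i,j) and H = -Σ_i Σ_j P(i,j) log P(i,j) for Equations (1) and (2), a base-2 logarithm, and a spectrogram given as non-negative linear values (not dB); the function name and the time-step argument are illustrative.

```python
import numpy as np

def energy_features(spec, dt=1.0):
    """Entropy plus energy-curve statistics of a non-negative spectrogram.

    spec: array of shape (n_doppler, n_time); dt: time-bin spacing in seconds.
    """
    s = np.asarray(spec, dtype=float)
    p = s / s.sum()                        # energy normalised to a distribution
    nz = p[p > 0]                          # 0 * log(0) is taken as 0
    entropy = -np.sum(nz * np.log2(nz))    # base-2 log is an assumption
    curve = s.sum(axis=0)                  # energy curve: sum over Doppler bins
    # trapezoidal rule written out to avoid NumPy-version differences
    area = np.sum((curve[1:] + curve[:-1]) / 2.0) * dt
    return {"entropy": float(entropy), "curve_mean": float(curve.mean()),
            "curve_std": float(curve.std()), "curve_trapz": float(area)}
```

Energy concentrated in a few time-Doppler cells gives a low entropy, while energy spread over many cells, as in a sudden fall, gives a high one.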

The centroid and bandwidth of the spectrogram represent the mass centre of the Doppler signature and the bandwidth around it. The centroid is given by Equation (3), following [23], where $f_c(j)$ is the centre frequency at the jth time bin, $f(i)$ is the Doppler frequency at the ith Doppler bin and $S(i,j)$ is the corresponding spectrogram value.

Given the centre frequency, the bandwidth of the Doppler signature indicates the spread and extent of the Doppler shifts around it, and is calculated using Equation (4).
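Both quantities can be computed per time bin as below. Because Equations (3) and (4) are not reproduced in this text, the sketch assumes the common weighted forms f_c(j) = Σ_i f(i) S(i,j) / Σ_i S(i,j) and B(j) = sqrt(Σ_i (f(i) - f_c(j))² S(i,j) / Σ_i S(i,j)), with S given as non-negative linear values; the function name is hypothetical.

```python
import numpy as np

def centroid_bandwidth(spec, freqs):
    """Per-time-bin Doppler centroid f_c(j) and bandwidth B(j).

    spec: non-negative array (n_doppler, n_time); freqs: (n_doppler,) in Hz.
    """
    s = np.asarray(spec, dtype=float)
    f = np.asarray(freqs, dtype=float)[:, None]   # column vector for broadcasting
    w = s.sum(axis=0)
    w = np.where(w > 0, w, np.nan)                 # empty time bins give NaN
    fc = (f * s).sum(axis=0) / w                   # weighted mean Doppler
    bw = np.sqrt(((f - fc) ** 2 * s).sum(axis=0) / w)  # weighted spread
    return fc, bw
```

Each column of the spectrogram is treated as a weighting over Doppler frequency, so f_c is its mean and B its standard deviation.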

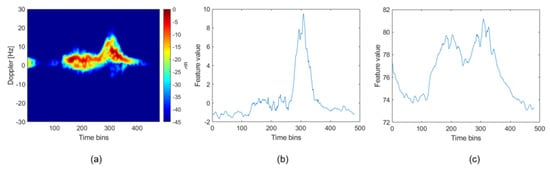

For instance, Figure 3a shows the micro-Doppler spectrogram of the sitting activity, with a color map indicating the normalised spectrogram amplitudes in dB, and Figure 3b,c show the centre frequency and bandwidth calculated at each time bin. The centre frequencies follow the shape and mass centre of the Doppler pattern, and the bandwidth captures the range of Doppler shifts in each time bin, so together they capture the characteristics of the sitting spectrogram.

Figure 3.

(a) micro-Doppler spectrogram of the sitting activity, (b) its centroid features, (c) its bandwidth features.

Furthermore, the skewness feature, which describes the symmetry of the shape of the motion pattern in the spectrogram, is given in Equation (5), where $\mu$ and $\sigma$ are the mean and standard deviation of $x$. In this study, E is the histogram obtained by converting the spectrogram into a grayscale image, which indicates the energy distribution of the spectrogram. The skewness s is close to 0 if the pattern is symmetrically distributed, and negative or positive for left-skewed or right-skewed patterns, respectively.

Patterns of continuous activities such as walking typically show a more uniform and periodic distribution in the spectrogram. Likewise, activities with symmetric motion, such as drinking (first raising the arm and then lowering it), can show more symmetric patterns than split-second activities such as standing up or sitting down, and can thus be discriminated using skewness.
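One possible reading of Equation (5) is sketched below: the spectrogram (in dB) is mapped linearly to grayscale intensities in [0, 1], and the skewness s = E[(x - μ)³] / σ³ is computed over those pixel intensities, i.e., over the distribution that the grayscale histogram describes. The min-max mapping and the function name are assumptions, not details taken from the text.

```python
import numpy as np

def grayscale_skewness(spec_db):
    """Skewness of the grayscale pixel-intensity distribution of a spectrogram."""
    x = np.asarray(spec_db, dtype=float)
    span = x.max() - x.min()
    if span == 0:
        return 0.0                        # flat image: no asymmetry to measure
    gray = (x - x.min()) / span           # linear map to [0, 1] grayscale
    mu, sigma = gray.mean(), gray.std()
    return float(np.mean((gray - mu) ** 3) / sigma ** 3)
```

A long tail of bright pixels gives a positive value, and a long tail of dark pixels a negative one.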

Singular value decomposition (SVD) is a data compression method that has been applied to time-frequency spectrogram analysis [24]. It is also used to reduce feature-space dimensionality and can infer physical parameters such as velocity and periodicity from the spectrogram, which has proved useful in gait analysis for distinguishing armed from unarmed walking personnel [25]. The SVD in Equation (6) factorises a matrix as $M = U S V^{T}$, where U and V are the matrices of left and right singular vectors, S contains the singular values of M, and M is the spectrogram in this study. After obtaining U and V, their mean and standard deviation are calculated as features.
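A sketch of the SVD features with NumPy follows. The text does not specify which singular vectors are summarised, so this version assumes the dominant (first) left and right singular vectors. Since singular vectors are only defined up to a joint sign flip, it also fixes the sign so that the features do not change sign arbitrarily between samples. The function name is hypothetical.

```python
import numpy as np

def svd_features(spec):
    """Mean and std of the dominant left/right singular vectors of M = U S V^T."""
    m = np.asarray(spec, dtype=float)
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    u1, v1 = u[:, 0], vt[0, :]            # vectors of the largest singular value
    # (u1, v1) and (-u1, -v1) give the same M; pick a consistent sign
    if u1.sum() < 0:
        u1, v1 = -u1, -v1
    return {"u_mean": float(u1.mean()), "u_std": float(u1.std()),
            "v_mean": float(v1.mean()), "v_std": float(v1.std())}
```

For a spectrogram with Doppler bins along the rows, u1 summarises the dominant Doppler profile and v1 its evolution over time.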

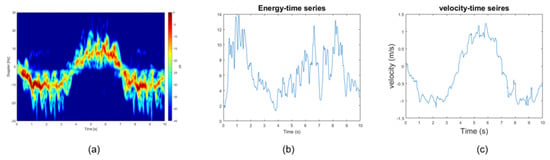

Time Series Feature extraction based on scalable hypothesis tests (TSFRESH) is a Python package that automatically calculates a large number of features from input time series [22]. The extracted characteristics include, but are not limited to, the number of consecutive changes, the autocorrelation and the linear trend, which complement the handcrafted features presented above. Because TSFRESH accepts one-dimensional time series as inputs and a micro-Doppler spectrogram contains one time series per Doppler bin, the spectrograms are first pre-processed to reduce the dimension and complexity of the input data and of the extracted features. For all activity samples, the energy curve is used as the input to TSFRESH; it is the sum of the spectrogram values over the Doppler bins and describes the energy status of the motion as a function of time. In gait analysis, because the spectrogram pattern is continuous and periodic, a complete velocity-time curve can be extracted by noise filtering and locating the strongest Doppler response in each time bin (the extraction procedure is detailed in Section 3.2), and this curve is used as the second input to TSFRESH. Figure 4 presents an example of the TSFRESH input series. Figure 4a is the micro-Doppler spectrogram of a walking activity, with a colorbar indicating the normalised spectrogram amplitudes in dB. Figure 4b,c show the one-dimensional time series calculated from Figure 4a: the energy curve, showing the energy status of the target over time, and the velocity-time series of walking. From these input time series, TSFRESH extracts 627 HAR features from all six activities and 349 features from the walking activity alone.
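To illustrate the kind of characteristics TSFRESH computes, the sketch below hand-codes three simple descriptors of a one-dimensional input such as the energy curve: the autocorrelation at a given lag, the slope of a least-squares linear trend, and the mean absolute change between consecutive samples. These are our own NumPy versions for illustration, not TSFRESH's implementations, which cover many more features and their own parameterisations; the function name is hypothetical.

```python
import numpy as np

def simple_series_features(x, lag=1):
    """Three illustrative descriptors of a 1-D series x (lag must be >= 1)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    var = np.sum(d ** 2)
    # normalised autocorrelation at the given lag
    acf = np.sum(d[:-lag] * d[lag:]) / var if var > 0 else 0.0
    # slope of a least-squares straight line fitted against the sample index
    slope = np.polyfit(np.arange(x.size), x, 1)[0]
    # average absolute change between consecutive samples
    mac = np.mean(np.abs(np.diff(x)))
    return {"autocorr": float(acf), "linear_trend": float(slope),
            "mean_abs_change": float(mac)}
```

Applied to an energy curve, a steady periodic activity tends to give a high autocorrelation at its period, while a fall shows up as a strong trend and large changes.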

Figure 4.

(a) micro-Doppler spectrogram and (b) extracted TSFRESH input energy-time series, (c) velocity-time series.

3.2. Walking Sample Processing and Gait Feature Extraction

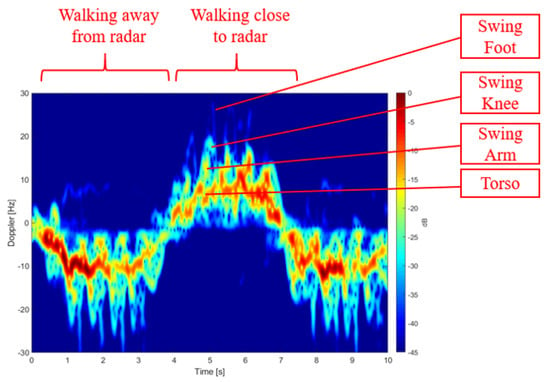

To collect walking samples, the volunteers were asked to walk back and forth at their normal speed, with arms and legs swinging naturally, and three samples were recorded per volunteer. In total, 180 samples from 60 people aged from 21 to 98 are used for the analysis. A complete walking cycle in the recorded samples is divided into three periods: the acceleration phase, the stable phase and the deceleration phase. In the acceleration phase, the person starts walking from a static position and the walking velocity increases. In the stable phase, the walking velocity oscillates around a constant value and can be regarded as uniform. After that, the velocity decreases to zero and the person stops in the deceleration phase. These phases are repeated for 2 to 3 cycles in each recorded sample. Figure 5 presents the micro-Doppler spectrogram of one sample from person ID 01 (gender: male, age: 27, height: 182 cm). For details of all the volunteers and samples, please refer to [21]. In the figure, negative Doppler shifts are caused by the subject moving away from the radar, and positive Doppler shifts indicate movement towards the radar. The movement of the main body, as well as the motion of the arms, knees and feet, can be observed. These attributions are empirical extrapolations from [26] and from our experience in reading measured spectrograms; this information is not used to separate body parts in the processing, but highlights the contributions of various body parts to the radar signature. In the processing of gait samples, features are extracted to capture the variability in walking patterns among the 60 volunteers of different ages, in terms of characteristics such as walking velocity, step length and step repetition frequency (step rate), which can be used for personnel recognition.

Figure 5.

Example of micro-Doppler spectrogram of a walking sample.

To extract more accurate gait parameters, a stable phase extraction based on image processing is first performed to reduce the influence of, and possible errors caused by, the acceleration and deceleration phases of walking. This processing isolates the uniform walking period, so that stable measurements of gait parameters such as velocity and step length can be obtained.

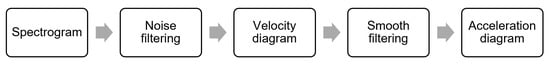

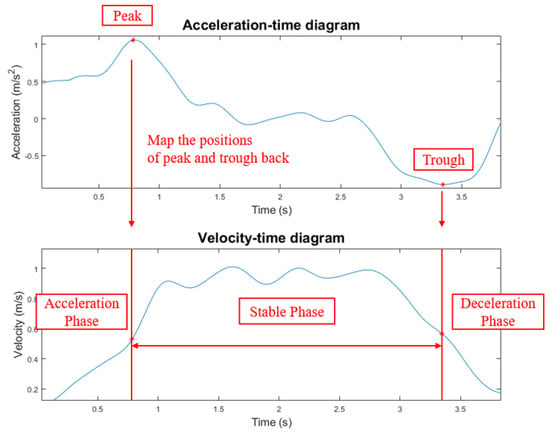

Figure 6 shows the stable phase extraction procedure, which starts from the micro-Doppler signatures of walking activities. The Doppler shift with the strongest response in each time bin of the spectrogram is recorded to obtain the Doppler shift caused by the torso. Noise filtering is then carried out by removing outliers caused by noise, i.e., velocity values greater than 3 m/s, far beyond the normal walking speed of around 1 m/s, to obtain the velocity-time graph. After that, a Gaussian filter with a length of 50 samples is applied to smooth the data, and differentiation is performed to obtain the acceleration, to which a running-average filter over a sliding window of length 50 is applied to further smooth it and suppress minor changes. As shown in Figure 7, each complete walking cycle produces a peak and a trough in the acceleration-time curve, which indicate the end of the acceleration phase and the start of the deceleration phase. The stable phase of walking is the period in which the velocity can be regarded as approximately constant. It is extracted by locating the positions of the waveform between the peak and the trough in the acceleration data and mapping them back onto the velocity data, as shown in Figure 7.
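The stable-phase extraction can be sketched for a single walking cycle as follows. Several details are assumptions: outliers above 3 m/s are replaced by linear interpolation rather than dropped, the 50-sample Gaussian window uses a standard deviation of one fifth of its length, both filters are plain convolutions with zero padding at the edges, and radial velocity is obtained from Doppler as v = λf_D/2 with λ for 5.8 GHz. The function name is hypothetical; it returns the smoothed velocity, the smoothed acceleration and the index range between the acceleration peak and trough.

```python
import numpy as np

def stable_phase(spec, freqs, dt, wavelength=3e8 / 5.8e9, v_max=3.0, win=50):
    """Smoothed velocity, smoothed acceleration and stable-phase index range
    for one walking cycle. spec: (n_doppler, n_time); freqs in Hz; dt in s."""
    s = np.asarray(spec, dtype=float)
    # 1) torso velocity: Doppler bin with the strongest response per time bin
    fd = np.asarray(freqs, dtype=float)[np.argmax(s, axis=0)]
    v = wavelength * fd / 2.0
    # 2) noise filtering: |v| > v_max is an outlier, replaced by interpolation
    bad = np.abs(v) > v_max
    idx = np.arange(v.size)
    v[bad] = np.interp(idx[bad], idx[~bad], v[~bad])
    # 3) Gaussian smoothing, 50-sample window, std = win / 5 (assumption)
    n = np.arange(win) - (win - 1) / 2.0
    g = np.exp(-0.5 * (n / (win / 5.0)) ** 2)
    v_s = np.convolve(v, g / g.sum(), mode="same")
    # 4) differentiate, then a 50-sample running average of the acceleration
    acc = np.gradient(v_s, dt)
    acc = np.convolve(acc, np.ones(win) / win, mode="same")
    # 5) stable phase: samples between the acceleration peak and trough
    i0, i1 = sorted((int(np.argmax(acc)), int(np.argmin(acc))))
    return v_s, acc, (i0, i1)
```

Slicing `v_s[i0:i1 + 1]` then gives the stable-phase velocity; for recordings with 2 to 3 cycles, the same search would be repeated within each cycle.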

Figure 6.

Block diagram of walking sample processing to obtain acceleration-time plots.

Figure 7.

Example of results from the stable phase extraction.

Additional gait features are extracted for personnel recognition based on walking data. Two approaches are considered: the cadence velocity diagram (CVD) and image-processing-based velocity-time diagram analysis, which analyse the walking patterns in the frequency and time domains, respectively.

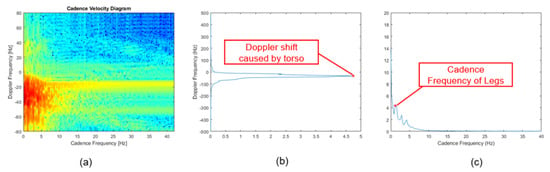

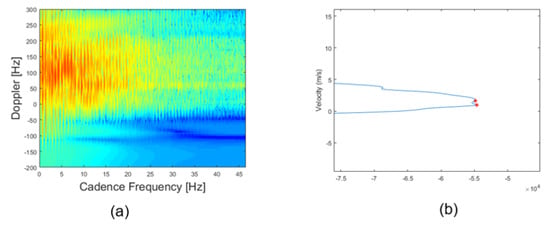

- CVD features: the CVD is obtained by performing an FFT along the time axis for every Doppler bin of the micro-Doppler signatures (MDS) in the spectrogram, and it can show the periodic motions of the limbs during walking within the MDS [27]. In this study, the FFT is applied to the extracted stable phase of walking instead of the entire sample, to obtain more accurate measurements by removing the influence of the acceleration and deceleration phases. Figure 8a shows the CVD of the stable walking phase of an example walking sample. Figure 8b,c give the sums of the CVD along the cadence frequency axis and the Doppler frequency axis, respectively, in which the Doppler shift and the cadence frequency of the legs can be observed, so that the gait velocity and the step repetition frequency can be measured.

Figure 8. (a) Cadence velocity diagram (b) Horizontal projection of CVD (c) Vertical projection of CVD.

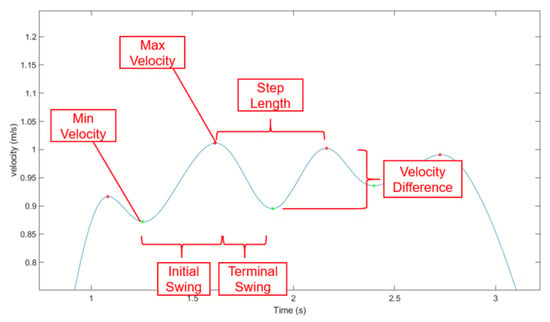

- Image-processing-based velocity-time diagram features: the velocity-time graph of walking (Figure 9) can be obtained after pre-processing and stable phase extraction. The features extracted from this representation are listed in Table 2, where the left column lists the name of each feature and the right column lists how many of each are extracted. The minimum, maximum and average velocity can be measured directly from the curve using the velocity axis. In Figure 9, peaks are shown with red marks and troughs with green marks. Although the waveform tracks the movement of the torso, the torso movement is synchronised with the swing of the foot and has the same period. Therefore, the interval between two consecutive peaks or two consecutive troughs can be associated with a step [26], and an adjacent peak and trough can represent the initial or terminal swing of a step. Given the resolution of the time axis, the step length l can be calculated by integrating the instantaneous velocity over time t, as given in Equation (7).
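The CVD processing described in the first item above, and its two projections, can be sketched as below, together with simple read-outs of the mean gait velocity (from the peak of the horizontal projection, converted with v = λf_D/2) and the cadence (from the peak of the vertical projection, skipping the 0 Hz cadence bin, which holds the constant torso component). Using the magnitude of a real FFT along time is an assumption, and the function names are hypothetical.

```python
import numpy as np

def cvd_projections(spec, dt):
    """CVD of a stable-phase spectrogram (n_doppler, n_time) and its projections."""
    s = np.asarray(spec, dtype=float)
    cvd = np.abs(np.fft.rfft(s, axis=1))          # FFT along time, per Doppler bin
    cadence = np.fft.rfftfreq(s.shape[1], d=dt)   # cadence frequency axis (Hz)
    horizontal = cvd.sum(axis=1)                  # summed over cadence: vs Doppler
    vertical = cvd.sum(axis=0)                    # summed over Doppler: vs cadence
    return cvd, cadence, horizontal, vertical

def gait_readouts(horizontal, vertical, doppler_freqs, cadence, wavelength):
    """Mean gait velocity (m/s) and cadence (Hz) from the CVD projections."""
    fd = doppler_freqs[np.argmax(horizontal)]
    k = 1 + int(np.argmax(vertical[1:]))          # skip the 0 Hz cadence bin
    return wavelength * fd / 2.0, cadence[k]
```

The cadence resolution is 1/(N·dt) for N stable-phase time bins, which is one reason short stable phases limit the precision of these read-outs.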

Figure 9. Velocity-time diagram of the walking period in the stable phase.

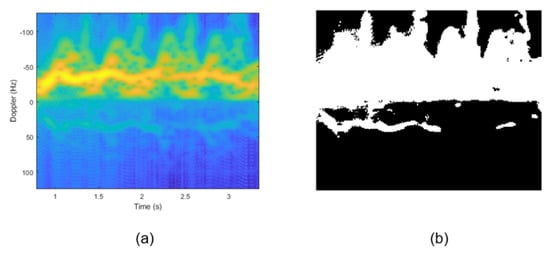

Table 2.

List of the features extracted for gait analysis.

The time for a half swing and the velocity difference over a half swing are also considered as potential features. Additionally, a binary feature R is calculated using Equation (8). R is the ratio between the area of the white pattern in the binary figure (Figure 10b) and the whole surface area of the stable-phase walking spectrogram (Figure 10a); the binary figure is converted from the spectrogram using a threshold determined by the average pixel value of all the walking spectrograms in the dataset. In this way, a walking pattern with more intense energy and faster velocity generates a more widely spread binary pattern, so the ratio can indicate the intensity and shape of the walking activity in the stable phase.
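The step-based features of the velocity-time diagram can be sketched with `scipy.signal.find_peaks`. Following Equation (7), each step length is taken here as the integral of the torso speed between two consecutive velocity peaks (trapezoidal rule), and each half swing pairs a peak with the next trough. The 0.3 s minimum peak spacing, the use of |v| so that steps away from the radar also give positive lengths, and the function name are our assumptions.

```python
import numpy as np
from scipy.signal import find_peaks

def step_features(v, dt, min_gap_s=0.3):
    """Step lengths and half-swing features from a stable-phase torso
    velocity curve v (m/s) sampled every dt seconds."""
    v = np.asarray(v, dtype=float)
    dist = max(1, int(round(min_gap_s / dt)))   # minimum peak spacing (assumed)
    peaks, _ = find_peaks(v, distance=dist)
    troughs, _ = find_peaks(-v, distance=dist)
    # step length: trapezoidal integral of |v| between consecutive peaks
    steps = []
    for a, b in zip(peaks[:-1], peaks[1:]):
        seg = np.abs(v[a:b + 1])
        steps.append(np.sum((seg[1:] + seg[:-1]) / 2.0) * dt)
    # half swing: each peak paired with the first trough after it
    half_t, half_dv = [], []
    for p in peaks:
        later = troughs[troughs > p]
        if later.size:
            half_t.append((later[0] - p) * dt)
            half_dv.append(v[p] - v[later[0]])
    return np.array(steps), np.array(half_t), np.array(half_dv)
```

For example, a torso velocity of 1 + 0.2 cos(2π·2t) m/s gives steps of 0.5 m, half swings of 0.25 s and a half-swing velocity difference of 0.4 m/s.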


Figure 10. (a) The spectrogram of walking in stable phase (b) binary figure converted from the spectrogram.
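The binary area ratio R can be sketched as below, reading it as the fraction of the stable-phase spectrogram covered by the white (above-threshold) pattern. Taking the dataset threshold as the mean of the per-spectrogram average pixel values, and thresholding the spectrogram values directly rather than a separately rendered grayscale image, are assumptions; the function names are hypothetical.

```python
import numpy as np

def dataset_threshold(spectrograms):
    """Threshold = mean of the average pixel value of each walking spectrogram."""
    return float(np.mean([np.mean(s) for s in spectrograms]))

def binary_area_ratio(spec, threshold):
    """R = (area of the above-threshold 'white' pattern) / (whole image area)."""
    white = np.asarray(spec, dtype=float) > threshold
    return float(white.sum() / white.size)
```

Using one threshold for the whole dataset keeps R comparable across subjects, so a more energetic gait covers a larger fraction of the image.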

In this study, since both the CVD analysis and the image-processing-based velocity diagram analysis can measure the gait velocity, the accuracy of the two approaches is compared and validated using a simulated walking model that provides the ground-truth velocity.

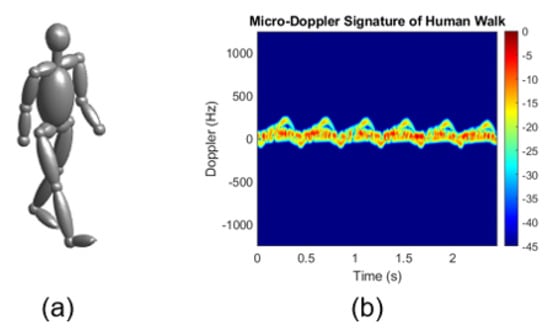

The construction of the simulation model and the radar setup follow the work in [28]. The human model is constructed using the Boulic kinematic model [29] with 17 joints (e.g., head, neck, shoulder, knee, ankle, toe), which represent the skeleton of a person (Figure 11a), and the sampling rate is 2048 Hz per walking cycle. A simulated pulse-Doppler radar operating at 5.8 GHz, the same frequency as the real radar used in this study, then captures the returns from the walking human model. Radar signal processing is performed on these data to extract the MDS of the simulated motion (Figure 11b).

Figure 11.

(a) human walking model, (b) micro-Doppler spectrogram of the simulated motion.

In the simulation, the preset walking velocity provides the ground truth for validating the proposed gait features. The two approaches, CVD analysis and image-processing-based velocity diagram analysis, are then applied to the MDS from the radar simulation to measure the gait velocity.

Table 3 compares the measurement results from both methods. While the CVD measurements deviate from the simulated velocity by about 0.5 m/s, the image-processing-based velocity diagram yields results much closer to the ground truth, with approximately 95% accuracy. The deviation of the CVD analysis could be caused by the limited resolution of the FFT and by the blurring of the mean velocity peak due to the accumulated contributions of the arms and feet. This phenomenon may be emphasised in simulations, where the contrast between the torso returns and those of the rest of the body is lower than in measurements.

Table 3.

Comparison of the measured velocity with the simulated velocity.

After comparing the gait velocity measured by both methods on simulated data, the two approaches are further evaluated on the collected experimental samples. This simple validation checks whether adding either of the two mean-gait-velocity estimates to the feature set already identified for activity monitoring increases the classification accuracy. The results are shown in Table 4, where the basic feature set combines the original features in Table 1 with a subset of the robust features in Table 2, and the two candidate additions are the mean velocity measured from the velocity-time diagram and the mean velocity measured by CVD. Adding the velocity-time-diagram estimate increases the classification accuracy by 1.48%, whereas adding the CVD estimate reduces it by 0.29%. The degraded CVD measurements can be attributed to reflections from the environment, which have been shown to affect the estimation of the foot/torso ratio [13]. Such interference in the pattern can shift the peak value when accumulated in the horizontal projection of the CVD (Figure 12b) and thus worsen the estimation of the mean gait velocity. Therefore, the velocity features measured from the velocity-time diagram using image processing are retained, and the CVD-based features are discarded.
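The comparison in Table 4 is an ablation: the same classifier is evaluated with and without one additional feature on identical cross-validation folds. A minimal scikit-learn sketch follows; approximating the cubic SVM with a degree-3 polynomial kernel (coef0=1), the standard scaling, the 10 repeats and the synthetic data are all assumptions rather than the setup used for Table 4.

```python
import numpy as np
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def accuracy_gain(X_base, extra, y, n_repeats=10, seed=0):
    """Mean CV accuracy with one extra feature column minus accuracy without it.

    The splitter has a fixed random_state, so both scores use identical folds.
    """
    cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=n_repeats, random_state=seed)
    # Degree-3 polynomial kernel with coef0=1 as a stand-in for a cubic SVM.
    model = make_pipeline(StandardScaler(), SVC(kernel="poly", degree=3, coef0=1.0))
    base = cross_val_score(model, X_base, y, cv=cv).mean()
    augmented = cross_val_score(model, np.column_stack([X_base, extra]), y, cv=cv).mean()
    return augmented - base


# Synthetic stand-in data: weakly informative base features and one strongly
# informative candidate feature (e.g. a well-estimated mean velocity).
rng = np.random.default_rng(0)
y = rng.integers(0, 3, size=300)
X_base = rng.normal(size=(300, 4)) + 0.3 * y[:, None]
good_extra = y + 0.2 * rng.normal(size=300)
gain = accuracy_gain(X_base, good_extra, y)
print(f"accuracy change: {gain:+.3f}")
```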

Table 4.

Classification results comparing the use of estimated mean gait velocity from the CVD and velocity-time diagram methods.

Figure 12.

(a) CVD of walking activity from experimental data; (b) horizontal projection of CVD from a noisy spectrogram.

4. Results and Discussion

4.1. Human Activity Classification

The features designed in Table 1 are applied to the micro-Doppler, range-Doppler and range-time domains and, together with the features extracted by TSFRESH, are used to train multiclass classification models with several commonly used statistical learning algorithms: decision trees, nearest-neighbour classifiers, support vector machines (SVM) and ensemble classifiers. Each model is evaluated with 5-fold cross-validation repeated 50 times, and the average classification accuracies are given in Table 5.
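The evaluation protocol (several classifier families, 5-fold cross-validation repeated 50 times, averaged accuracy) can be sketched with scikit-learn. The specific estimators and hyper-parameters are assumptions: a random forest stands in for the ensemble classifiers, the cubic SVM is approximated by a degree-3 polynomial kernel with coef0=1, and the data are synthetic.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# One representative per classifier family; hyper-parameters are illustrative.
CLASSIFIERS = {
    "decision tree": DecisionTreeClassifier(random_state=0),
    "k-nearest neighbours": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "cubic SVM": make_pipeline(StandardScaler(), SVC(kernel="poly", degree=3, coef0=1.0)),
    "ensemble (random forest)": RandomForestClassifier(n_estimators=50, random_state=0),
}


def evaluate(X, y, n_repeats=50, seed=0):
    """Mean accuracy of each classifier over repeated stratified 5-fold CV."""
    cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=n_repeats, random_state=seed)
    return {name: cross_val_score(clf, X, y, cv=cv).mean() for name, clf in CLASSIFIERS.items()}


# Synthetic six-class stand-in for the activity feature matrix.
X, y = make_classification(n_samples=300, n_features=20, n_informative=8,
                           n_classes=6, n_clusters_per_class=1, random_state=0)
results = evaluate(X, y, n_repeats=5)  # the text uses 50 repeats; 5 keeps the demo fast
for name, acc in results.items():
    print(f"{name:26s} {acc:.3f}")
```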

Table 5.

Classification results from the multiclass model for different classifiers and combinations of sets of features.

In these initial results, the SVM with a cubic kernel achieves the highest accuracy, 91.8%. However, adding the multidomain and TSFRESH features to the set does not improve the results, which reach 90.7% with an ensemble classifier, 72% with SVM, and 89.2% with an ensemble classifier when all are combined. These results show the advantage of the micro-Doppler spectrogram, which better characterises the kinematic properties of the activities. In this case, the additional features from other domains and from TSFRESH increase the dimensionality and complexity of the feature set without adding useful information, which can explain the severe drop in performance. Therefore, feature selection is applied to improve prediction performance, increase prediction speed and cost-effectiveness, and provide a better understanding of the data-generation process [30].

Feature selection algorithms are generally divided into the following three categories:

- Filter methods, which look into the intrinsic properties of the features regardless of the training model used.

- Wrapper methods, which iterate over subsets of the original features and compare their prediction errors.

- Embedded methods, which generate feature scores during the model learning process.

Filter methods are computationally inexpensive. However, because wrapper methods directly evaluate the classification accuracy obtained with different feature subsets, they generally perform better than filter methods [31]. Therefore, a wrapper method, sequential forward selection (SFS), which has also been shown to yield larger accuracy improvements for radar HAR feature selection [32], is chosen in this study. SFS starts from an empty set and, at each iteration, adds the single feature that gives the best classification performance when combined with those already selected. The process iterates until the validation accuracy of the model reaches its maximum.
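A minimal wrapper-style SFS can be sketched as follows. It records the accuracy after each addition and returns the subset at the peak of that curve, which is one reading of "iterates until the validation accuracy reaches the maximum"; the LDA scorer, the stratified 5-fold splitter and the synthetic data are assumptions.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_score


def sequential_forward_selection(model, X, y, max_features=None, n_splits=5, seed=0):
    """Greedy SFS wrapper: at each step add the feature that maximises CV accuracy.

    Returns the accuracy curve (one value per subset size) and the subset at
    which the curve peaks.
    """
    n_features = X.shape[1]
    max_features = n_features if max_features is None else max_features
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    selected, remaining = [], list(range(n_features))
    curve, subsets = [], []
    while remaining and len(selected) < max_features:
        # Score every remaining candidate added to the current subset.
        scores = {f: cross_val_score(model, X[:, selected + [f]], y, cv=cv).mean()
                  for f in remaining}
        best = max(scores, key=scores.get)
        selected.append(best)
        remaining.remove(best)
        curve.append(scores[best])
        subsets.append(list(selected))
    peak = int(np.argmax(curve))
    return curve, subsets[peak]


# Synthetic data: features 2 and 5 carry class information, the rest are noise.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200)
X = rng.normal(size=(200, 8))
X[:, 2] += 3.0 * y
X[:, 5] += 1.5 * y
curve, best_subset = sequential_forward_selection(LinearDiscriminantAnalysis(), X, y, max_features=4)
print([round(a, 3) for a in curve], best_subset)
```

scikit-learn also provides `SequentialFeatureSelector` with `direction="forward"`; the explicit loop is shown here so that the full accuracy curve, of the kind plotted in Figure 13, is available.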

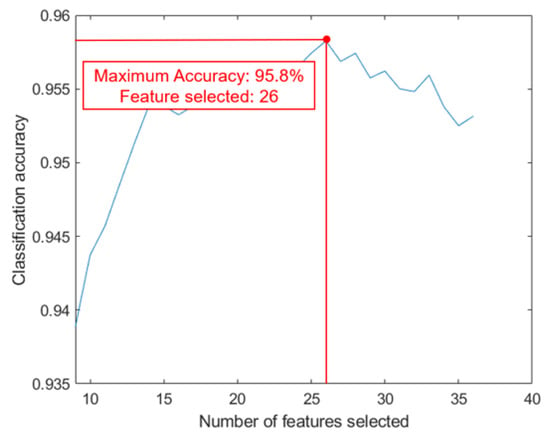

Figure 13 shows how the classification accuracy evolves during feature selection. Removing redundant and correlated features yields a better model with 95.8% accuracy, an improvement of 4 percentage points.

Figure 13.

Classification accuracy evolution with SFS applied to SVM cubic model.

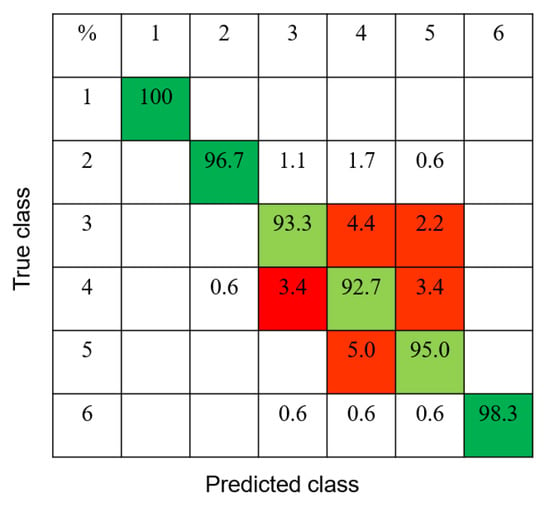

Figure 14 shows the confusion matrix of the multiclass activity classification model with feature selection applied. Labels 1 to 6 represent walking, sitting, standing up, drinking water, picking up an object from the floor and falling, respectively. Activities 1 and 6 reach 100% and 98.3% classification accuracy, whereas activity 4 reaches only 92.7%. These differences are mainly due to the physical characteristics of the motions. For instance, the periodic character of walking can be described by SVD and CVD features, and the intense, rapid movement of a fall can be captured by energy features such as the energy curve and entropy. However, the small-amplitude motion of drinking (Figure 15a) makes it less distinctive than the other activities, and its similarity with picking up an object (Figure 15b) can explain 10% of the misclassifications.
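Per-activity accuracies such as those quoted above are the diagonal of the confusion matrix divided by its row sums (rows being the true classes), and the largest off-diagonal cells identify confused pairs such as drinking and picking up an object. A small NumPy sketch follows; the 3-class counts are toy values, not the results of Figure 14.

```python
import numpy as np


def per_class_accuracy(cm):
    """Fraction of each true class (row) that is predicted correctly."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


def top_confusions(cm, labels, k=3):
    """The k largest off-diagonal cells as (true label, predicted label, count)."""
    off = np.asarray(cm, dtype=float).copy()
    np.fill_diagonal(off, 0.0)
    flat = np.argsort(off, axis=None)[::-1][:k]
    rows, cols = np.unravel_index(flat, off.shape)
    return [(labels[r], labels[c], int(off[r, c])) for r, c in zip(rows, cols)]


# Toy 3-class counts for illustration only (not the results in Figure 14).
toy_cm = [[50, 0, 0],
          [0, 45, 5],
          [0, 8, 42]]
print(per_class_accuracy(toy_cm))
print(top_confusions(toy_cm, ["A", "B", "C"], k=1))
```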

Figure 14.

Confusion matrix of the multiclass activity classification model with feature selection applied.

Figure 15.

Micro-Doppler spectrograms of (a) activity 4 (drinking water) and (b) activity 5 (picking up an object).

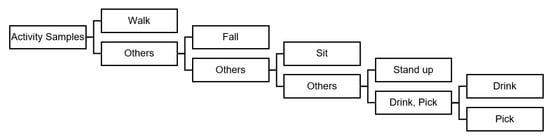

Further analysis is performed with a hierarchical classification framework based on the one-versus-all approach. In the confusion matrix of the multiclass analysis, activities A1 and A6 reach 100% and 98.3% classification accuracy and can thus be classified easily, whereas activities such as A3, A4 and A5 perform worse with the selected feature combination. Therefore, the hierarchical classification structure shown in Figure 16 is designed to optimise the classification model at each stage. In this structure, five separate models are trained, each classifying one specific activity. Activities classified at an earlier stage are removed from the training data of the subsequent stages, which reduces the number of classes and the complexity of the training data. Additionally, the training algorithm, parameters and feature combination can be chosen flexibly for each model, so the overall performance can be improved.
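The cascade of Figure 16 can be sketched as a sequence of binary classifiers, each separating one activity from those not yet classified, with earlier classes removed from later training sets. This is a minimal illustration under assumptions: the stage order, the k-nearest-neighbour models and the feature subsets are placeholders for the per-stage algorithms and SFS-selected features described in the text, and the data are synthetic.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_blobs
from sklearn.neighbors import KNeighborsClassifier


class HierarchicalOneVsAll:
    """Cascade of binary 'target class vs remaining classes' models.

    Stage k separates order[k] from every class not yet peeled off; classes
    handled at earlier stages are removed from the training data of later
    stages, so the final stage is one-versus-one between the last two classes.
    """

    def __init__(self, order, stage_models, stage_features):
        if not len(stage_models) == len(stage_features) == len(order) - 1:
            raise ValueError("need one model and one feature subset per stage")
        self.order = list(order)
        self.stage_models = stage_models
        self.stage_features = stage_features  # column indices used at each stage

    def fit(self, X, y):
        active = np.ones(len(y), dtype=bool)
        self.fitted_ = []
        for target, model, cols in zip(self.order, self.stage_models, self.stage_features):
            Xs, ys = X[active][:, cols], (y[active] == target).astype(int)
            self.fitted_.append(clone(model).fit(Xs, ys))
            active &= y != target  # drop this class from subsequent stages
        return self

    def predict(self, X):
        pred = np.full(len(X), self.order[-1], dtype=object)  # default: last class
        undecided = np.arange(len(X))
        for target, model, cols in zip(self.order, self.fitted_, self.stage_features):
            if undecided.size == 0:
                break
            hit = model.predict(X[undecided][:, cols]) == 1
            pred[undecided[hit]] = target
            undecided = undecided[~hit]
        return pred


# Demo on four well-separated synthetic classes; models and feature subsets
# are placeholders for the per-stage choices made by SFS in the text.
X, y = make_blobs(n_samples=400, centers=[[0, 0], [5, 0], [0, 5], [5, 5]],
                  cluster_std=0.6, random_state=0)
cascade = HierarchicalOneVsAll(order=[0, 1, 2, 3],
                               stage_models=[KNeighborsClassifier(n_neighbors=5)] * 3,
                               stage_features=[[0, 1]] * 3)
pred = cascade.fit(X, y).predict(X)
print(f"training accuracy: {np.mean(pred == y):.3f}")
```

Cloning each stage model lets the same template be reused safely, and keeping one feature subset per stage mirrors the idea of customised feature combinations for each activity.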

Figure 16.

Hierarchical classification structure.

The results of the hierarchical approach for each activity are reported in Table 6. Comparing the accuracy before and after feature selection shows that walking can be classified with 100% accuracy and that, with the most suitable models and feature selection, the accuracy for falling (6) and sitting (2) improves by approximately 2% each, while the accuracy for standing up (3), picking up an object (5) and drinking (4) increases by 5%, 11% and 12%, respectively. It is worth noting that, because the designed structure (Figure 16) progressively removes the data of previously classified activities, the last-stage model is effectively a one-versus-one classifier designed and optimised specifically to distinguish picking up an object (5) from drinking (4).

Table 6.

Classification results from one-versus-all models with and without feature selection vs multiclass accuracy.

Table 7 shows the most robust features, i.e., those most commonly kept across the five models after feature selection. The centroid and bandwidth of the spectrogram are key features describing the physical properties of the motions. The skewness feature indicates the symmetry of the MDS patterns, and the SVD features can also reveal physical parameters and periodic properties of the spectrogram. Notably, only the standard deviations of the first columns of the SVD matrices U and V are selected, possibly because these dominant singular vectors carry the largest weight and best capture the properties of the motion. Additionally, the FFT coefficients from TSFRESH, which represent the strength of repetitive patterns, are also significant features. However, which specific FFT coefficients should be used depends on the activities to be classified, as different activities show different characteristics in different frequency components.
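The centroid, bandwidth and SVD features discussed above can be computed directly from a power spectrogram. The sketch assumes the commonly used weighted-mean definitions of centroid and bandwidth and takes the standard deviation of the first left and right singular vectors; the exact definitions in Table 1 may differ, and `mds_features` is a hypothetical summary helper.

```python
import numpy as np


def centroid_bandwidth(spec, axis_values):
    """Per-frame centroid and bandwidth of a power spectrogram (bins x frames)."""
    total = spec.sum(axis=0, keepdims=True)
    weights = spec / np.maximum(total, np.finfo(float).tiny)  # guard empty frames
    centroid = (axis_values[:, None] * weights).sum(axis=0)
    spread = (axis_values[:, None] - centroid[None, :]) ** 2
    bandwidth = np.sqrt((spread * weights).sum(axis=0))
    return centroid, bandwidth


def svd_first_vector_std(spec):
    """Standard deviations of the first columns of U and V in spec = U S V^T."""
    U, _, Vt = np.linalg.svd(spec, full_matrices=False)
    return float(U[:, 0].std()), float(Vt[0, :].std())  # row 0 of V^T is column 0 of V


def mds_features(spec, axis_values):
    """Hypothetical summary vector: temporal mean/std of centroid and bandwidth plus SVD stds."""
    c, b = centroid_bandwidth(spec, axis_values)
    u_std, v_std = svd_first_vector_std(spec)
    return np.array([c.mean(), c.std(), b.mean(), b.std(), u_std, v_std])


# Toy check: two equal lines at 0 and 2 give centroid 1 and bandwidth 1 in every frame.
v = np.array([0.0, 1.0, 2.0])
two_lines = np.array([[1.0, 1.0], [0.0, 0.0], [1.0, 1.0]])
print(centroid_bandwidth(two_lines, v))
print(svd_first_vector_std(np.outer([1.0, 2.0, 3.0], np.ones(4))))
```

The standard deviation is insensitive to the arbitrary sign of singular vectors returned by the SVD, which makes it a convenient scalar summary.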

Table 7.

Most robust features from the feature selection of 5 models.

Apart from the features in Table 7, other features are selected flexibly in different models to exploit the distinguishing characteristics of different activities. For instance, model 1 uses CVD features to classify walking, which has salient periodic patterns compared with the other activities. The entropy feature, which reflects the randomness and unpredictability of the energy distribution, is used in models 2 and 3 to distinguish falling and sitting, which involve rapid and impulsive motions, but it is dropped when classifying the relatively slow and more similar activities of drinking (4) and picking up an object (5). Instead, energy curve features are selected to separate picking up an object (5) from drinking (4), as they describe finer motions and the position-dependent changes in energy during the two activities.
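The entropy and energy curve features are not defined in this section; the sketch below assumes two simple forms: the Shannon entropy (in bits) of the normalised time-Doppler energy distribution, and the per-frame sum of spectrogram power. Energy spread evenly over the time-Doppler plane gives a high entropy, whereas energy concentrated in a few cells gives a low one. The toy arrays are illustrative.

```python
import numpy as np


def energy_curve(spec):
    """Total power in each time frame (sum over Doppler bins) of a power spectrogram."""
    return spec.sum(axis=0)


def spectrogram_entropy(spec):
    """Shannon entropy, in bits, of the spectrogram's normalised energy distribution."""
    p = spec.ravel() / spec.sum()
    p = p[p > 0]  # empty cells contribute nothing (0 * log 0 is taken as 0)
    return float((p * np.log2(1.0 / p)).sum())


# Evenly spread energy versus energy concentrated in a single cell (toy arrays).
spread = np.ones((4, 4))
concentrated = np.zeros((4, 4))
concentrated[1, 2] = 5.0
print(spectrogram_entropy(spread), spectrogram_entropy(concentrated))
print(energy_curve(np.array([[1.0, 2.0], [3.0, 4.0]])))
```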

4.2. Personnel Recognition Based on Gait Analysis

To perform personnel recognition on the dataset analysed in this paper, an ensemble classifier using the subspace discriminant method is applied to recognise 60 people. For validation, 5-fold cross-validation is repeated 50 times, and the average accuracy is reported.
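A "subspace discriminant" ensemble is commonly understood as a random-subspace ensemble of linear discriminant learners. Assuming that reading, it can be sketched with scikit-learn's `BaggingClassifier` by disabling sample bootstrapping and drawing a random half of the features for each learner; the number of learners, the subspace fraction, the 3 repeats (instead of 50) and the synthetic 10-class data are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score


def subspace_discriminant(n_learners=30, subspace_fraction=0.5, seed=0):
    """Random-subspace ensemble of LDA learners.

    Every learner sees all training samples (no bootstrap) but only a random
    subset of the features, drawn without replacement; because LDA provides
    predict_proba, BaggingClassifier averages the learners' class probabilities.
    """
    return BaggingClassifier(LinearDiscriminantAnalysis(), n_estimators=n_learners,
                             max_samples=1.0, bootstrap=False,
                             max_features=subspace_fraction, bootstrap_features=False,
                             random_state=seed)


X, y = make_classification(n_samples=200, n_features=40, n_informative=15, n_redundant=0,
                           n_classes=10, n_clusters_per_class=1, random_state=0)
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)  # 50 repeats in the text
scores = cross_val_score(subspace_discriminant(), X, y, cv=cv)
print(f"mean accuracy over {scores.size} folds: {scores.mean():.3f}")
```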

Table 8 summarises the features that are used for personnel recognition. It contains 75 multidomain features from those mentioned in Table 1 that describe the general motion characteristics of walking activity, 11 gait features mentioned in Table 2 that focus on detailed gait parameters for each person, and 349 TSFRESH features including different coefficients and properties of the velocity-time and energy-time series. In total, 435 features are considered for the analysis of personnel recognition.
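Feature-level fusion of the three blocks in Table 8 amounts to column-wise concatenation, giving 75 + 11 + 349 = 435 features per sample. The sketch below uses random placeholders for the blocks and assumes 60 × 3 = 180 walking samples, following the three walking samples per person mentioned later in this section.

```python
import numpy as np

N_SUBJECTS, WALKS_PER_SUBJECT = 60, 3
BLOCK_SIZES = {"multidomain": 75, "gait": 11, "tsfresh": 349}


def fuse(blocks):
    """Feature-level fusion: concatenate per-sample feature blocks column-wise."""
    n_rows = {block.shape[0] for block in blocks.values()}
    if len(n_rows) != 1:
        raise ValueError("all feature blocks must describe the same samples")
    return np.hstack([blocks[name] for name in BLOCK_SIZES])


rng = np.random.default_rng(0)
n_samples = N_SUBJECTS * WALKS_PER_SUBJECT
# Random placeholders standing in for the real feature blocks.
blocks = {name: rng.normal(size=(n_samples, width)) for name, width in BLOCK_SIZES.items()}
fused = fuse(blocks)
print(fused.shape)
```

Any standardisation of the fused matrix is best fitted inside the cross-validation loop (for example in a pipeline), so that test folds do not leak into the scaling statistics.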

Table 8.

Summary of features extracted for the personnel recognition task.

Table 9 shows the classification results obtained with the multidomain, gait and TSFRESH features, respectively. The multidomain and TSFRESH features reach 50.9% and 47.4% accuracy in identifying 60 people. In contrast, the gait features are less relevant for distinguishing people and yield only 16.4%, even though they measure essential walking parameters such as velocity and stride length. The best accuracy, 54.2%, is obtained by combining all three feature types.

Table 9.

Classification accuracy using different features.

This can be explained by the fact that, while some elderly participants (older than 65) walked more slowly, between 0.6 and 0.8 m/s, most people walked at a rather similar speed of around 1 m/s, making this parameter alone a weak characteristic for personnel recognition. Additionally, only three walking samples were recorded per person, which makes it difficult to obtain robust and consistent gait features. The multidomain and TSFRESH features, which focus more on periodic characteristics, energy features and elements of the whole recorded time series, therefore contain more useful information for identification.

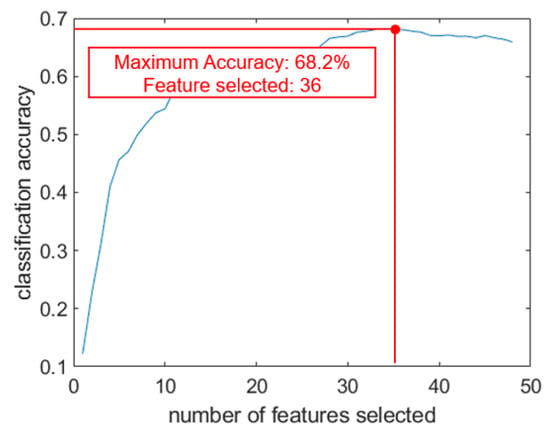

Starting from the combination of multidomain, gait and TSFRESH features, SFS is used to remove redundant and correlated features and further improve the classification accuracy. Figure 17 shows the classification accuracy as a function of the number of selected features. The highest accuracy for identifying 60 people, 68.2% (up from 54.2%), is obtained when 36 features are selected.

Figure 17.

Feature selection results with the combination of 3 feature types.

Table 10 lists the selected features. Ten multidomain features are selected. Notably, the variances of the SVD features and the spectrogram centroid and bandwidth features, which were the most robust features in the activity classification analysis, are also selected, showing their robustness and consistency in the personnel recognition task as well. Among the gait features, the maximum step length and the ratio of initial swing to terminal swing within one step are found to be the most salient. Among the TSFRESH features, those describing sequence properties are chosen, such as the 0.1 quantile, a measure of non-linearity, FFT coefficients, continuous wavelet transform coefficients and the cross power spectral density of the time series. These automatically computed features characterise the energy, shape, frequency content, magnitude and distribution of the input velocity-time series, and combining them with the handcrafted features and measured gait parameters further improves the classification accuracy.

Table 10.

Feature combination after selection.

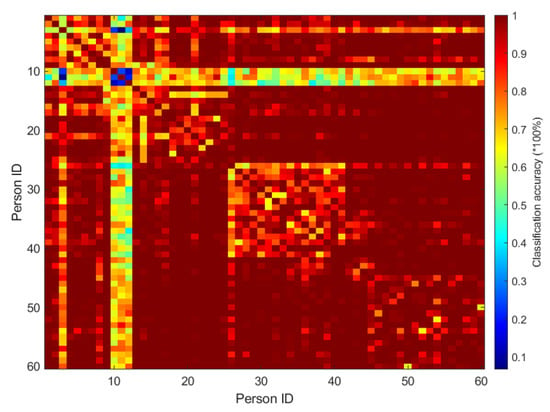

After feature extraction, classification and feature selection, a personnel recognition model with 68.2% accuracy in identifying 60 people is obtained. One-versus-one personnel recognition is then performed on all 60 people to evaluate how well the samples recorded from each individual are recognised.

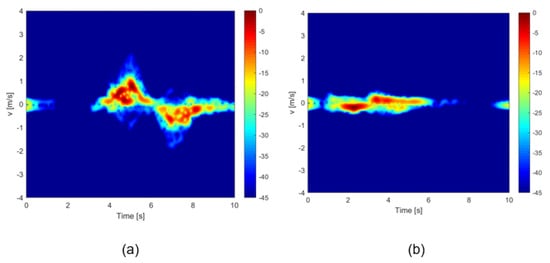

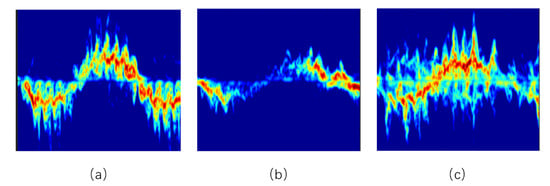

Figure 18 provides the 60 × 60 confusion matrix of this classification. The samples recorded for some specific people, with IDs 10 to 12, perform poorly and cannot be identified in most of the one-versus-one combinations. Three main reasons may account for these poor results. A well-recorded sample (Figure 19a) contains the full acceleration, stable and deceleration phases of walking and a clear track of the motion of the torso, feet and arms. However, some samples, such as the one in Figure 19b, contain an incomplete walking waveform within the 10 s recording window, which hampers the extraction of the stable phase and leaves less data for feature extraction.

Figure 18.

Confusion matrix of one versus one classification with the trained model.

Figure 19.

Comparison of spectrograms of recorded walking samples from different subjects: (a) well-recorded sample; (b) incomplete sample; (c) noisy sample.

Furthermore, some data (e.g., Figure 19c) are noisy, which can obscure the contribution of the torso and degrade the step length measurement. Finally, each person recorded only three walking samples, which may be insufficient for 60 classes, so the limited training data may not yield sufficiently consistent features. For instance, the walking speed and step length in the three samples of one person can vary to some extent and overlap with those of other people with similar features, so errors can arise from intra-subject variability. Higher accuracy is therefore expected if more data per person can be collected.

The samples of the individuals whose poorly recorded data led to the worst classification performance are then removed, and the personnel recognition accuracy is analysed again over the remaining people. The results are listed in Table 11, where the horizontal axis gives the number of people removed and the vertical axis gives the number of people classified by the model.
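The removal analysis can be sketched as: estimate each subject's cross-validated recall, drop the lowest-ranked subjects, and re-evaluate on the rest. Ranking by cross-validated recall is one assumed way to identify the worst-performing subjects, the LDA model and 3-fold splitter (chosen because each synthetic subject has only six samples) are placeholders, and the synthetic data make two subjects pure noise. Ranking and re-scoring on the same data is optimistic, so such figures are best read as an upper bound.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_predict, cross_val_score


def per_subject_recall(model, X, y, cv):
    """Cross-validated fraction of each subject's samples that are recognised."""
    pred = cross_val_predict(model, X, y, cv=cv)
    subjects = np.unique(y)
    return subjects, np.array([np.mean(pred[y == s] == s) for s in subjects])


def accuracy_after_removal(model, X, y, n_remove, cv):
    """Drop the n_remove subjects with the lowest recall, then re-score the rest."""
    subjects, recall = per_subject_recall(model, X, y, cv)
    worst = subjects[np.argsort(recall, kind="stable")[:n_remove]]
    keep = ~np.isin(y, worst)
    return cross_val_score(model, X[keep], y[keep], cv=cv).mean(), worst


# Synthetic stand-in: 12 subjects x 6 samples; subjects 10 and 11 are pure noise,
# mimicking poorly recorded samples.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(12), 6)
X = rng.normal(scale=3.0, size=(12, 5))[y] + rng.normal(scale=0.5, size=(72, 5))
for s in (10, 11):
    X[y == s] = rng.normal(scale=5.0, size=(6, 5))

cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=0)
model = LinearDiscriminantAnalysis()
acc_before = cross_val_score(model, X, y, cv=cv).mean()
acc_after, removed = accuracy_after_removal(model, X, y, n_remove=2, cv=cv)
print(f"{acc_before:.3f} -> {acc_after:.3f} after removing subjects {removed.tolist()}")
```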

Table 11.

Classification accuracy over the number of subjects classified by the model and number of subjects removed from the classification task.

In Table 11, the first column gives the classification accuracy with all data samples included. As expected, the classification accuracy increases markedly as more subjects with poorly performing samples are removed. The best result for a given number of people is obtained in the last row (in red) of each column: 68.2% for 60 people, 76.7% for 50 people, 84.4% for 40 people, 91.4% for 30 people, 96.2% for 20 people and 99.8% for 10 people. These results show that identification accuracy improves substantially when properly recorded data, with a complete track of the walking activity and less noise interference, are used. In an ideal situation, where samples from 10 people with clearly distinguishable walking patterns are chosen, the accuracy reaches 99.8%. In general, a promising average accuracy of 88.3% can be achieved when identifying fewer than 20 people.

5. Conclusions

In this study, human activity classification and individual personnel recognition were performed on a large dataset of radar signatures collected at the University of Glasgow. A large and diverse set of features was considered, namely empirical features, transform-based features, image features in multiple radar data formats, and TSFRESH features, which, to the best of our knowledge, have not previously been used for radar-based analysis of human signatures.

The most suitable features for discriminating human activities, both individually and overall, were discussed. Additionally, a hierarchical classification structure was designed to further improve the results by customising the combination of features and model for each activity, raising the accuracy to 95.4% from 91.2%.

Furthermore, image-based gait analysis was validated and compared with CVD. For more accurate measurements, the stable phase of walking was extracted and additional gait features were calculated. A feature-level fusion approach then achieved a 20% increase in classification accuracy, reaching approximately 68% in identifying 60 people and up to 91.4% in identifying 30 people from their walking samples. These results compare favourably with previous work, such as 68% in identifying 7 people [16] and 87% in identifying 13 people [33].

In future work, the proposed scheme will be further improved. While the first three models of the proposed hierarchical classification, which classify walking (A1), falling (A6) and sitting (A2), yield accuracies above 98%, the last two models, which classify standing up (A3), drinking (A4) and picking up an object (A5), reach 95% and 97%. Therefore, more customised features could be developed to capture the key differences between these specific activities in the dataset. Additionally, the influence of pre-processing choices on the classification accuracy could be investigated, such as the use of windowing at different stages of the pre-processing chain, or the efficiency of a zero-phase moving target indicator compared with a classic moving target indicator filter.

For gait analysis, more data from more people, with more repetitions, should be collected to further evaluate the robustness of the trained model and the measured gait features. This will also include situations where the aspect angle between the radar line of sight and the walking trajectory is unfavourable, or where walking segments are more fragmented, with stop-and-go patterns. Nevertheless, in the context of assisted living, the performance is promising for a regular household of up to 10 people. Most studies consider only one person in the household; however, multi-occupancy raises the question of associating the recorded data with the right individual. Personnel recognition would support tracking individuals more accurately, so that activity monitoring data can be associated with the right individual automatically.

Author Contributions

X.L. and J.L.K. performed the analysis of the data and designed the algorithms; Z.L., S.Y. and O.R. provided inputs on the algorithms’ development and on the manuscript; F.F. coordinated the data collection and experimental setup and helped with the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the British Council 515095884 and Campus France 44764WK—PHC Alliance France-UK for their financial support.

Acknowledgments

The authors would like to acknowledge all the volunteers who participated in recording the dataset, specifically A. Shrestha, H. Li, and S. A. Shah for the data collection, and the staff at North Glasgow Home and Age UK West Cumbria for providing access to their facilities.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chavan, M.; Pardeshi, P.; Khoje, S.A.; Patil, M. Study of Health Monitoring System. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 1779–1784.

- Terroso, M.; Rosa, N.; Marques, A.; Simoes, R. Physical consequences of falls in the elderly: A literature review from 1995 to 2010. Eur. Rev. Aging Phys. Act. 2013, 11, 51–59.

- Lee, L.; Grimson, W.E.L. Gait analysis for recognition and classification. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21–21 May 2002; pp. 155–162.

- Ozcan, K.; Mahabalagiri, A.K.; Casares, M.; Velipasalar, S. Automatic Fall Detection and Activity Classification by a Wearable Embedded Smart Camera. IEEE J. Emerg. Sel. Top. Circuits Syst. 2013, 3, 125–136.

- Alfuadi, R.; Mutijarsa, K. Classification method for prediction of human activity using stereo camera. In Proceedings of the 2016 International Seminar on Application for Technology of Information and Communication (ISemantic), Semarang, Indonesia, 5–6 August 2016; pp. 51–57.

- Zebin, T.; Scully, P.J.; Ozanyan, K.B. Evaluation of supervised classification algorithms for human activity recognition with inertial sensors. In Proceedings of the 2017 IEEE SENSORS, Glasgow, UK, 29 October–1 November 2017; pp. 1–3.

- Ding, C.; Zhang, L.; Gu, C.; Bai, L.; Liao, Z.; Hong, H.; Li, Y.; Zhu, X. Non-Contact Human Motion Recognition Based on UWB Radar. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 306–315.

- Li, H.; Shrestha, A.; Heidari, H.; Le Kernec, J.; Fioranelli, F. A Multisensory Approach for Remote Health Monitoring of Older People. IEEE J. Electromagn. RF Microw. Med. Biol. 2018, 2, 102–108.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Performance Analysis of Centroid and SVD Features for Personnel Recognition Using Multistatic Micro-Doppler. IEEE Geosci. Remote Sens. Lett. 2016, 13, 725–729.

- Björklund, S.; Petersson, H.; Hendeby, G. Features for micro-Doppler based activity classification. IET Radar Sonar Navig. 2015, 9, 1181–1187.

- Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

- Ghaleb, A.; Vignaud, L.; Nicolas, J.M. Micro-Doppler analysis of wheels and pedestrians in ISAR imaging. IET Signal Process. 2008, 2, 301–311.

- Zrnic, B.; Andrić, M.S.; Bondzulic, B.P.; Simic, S.; Bujaković, D.M. Analysis of Radar Doppler Signature from Human Data. Radioengineering 2014, 23, 11–19.

- Seifert, A.; Zoubir, A.M.; Amin, M.G. Radar classification of human gait abnormality based on sum-of-harmonics analysis. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 0940–0945.

- Chen, Z.; Li, G.; Fioranelli, F.; Griffiths, H. Personnel Recognition and Gait Classification Based on Multistatic Micro-Doppler Signatures Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 669–673.

- Johansson, J.; Daniel, D. Human Identification with Radar. Bachelor’s Thesis, Halmstad University, Halmstad, Sweden, 2016.

- Gurbuz, S.Z.; Amin, M.G. Radar-Based Human-Motion Recognition With Deep Learning: Promising applications for indoor monitoring. IEEE Signal Process. Mag. 2019, 36, 16–28.

- Le Kernec, J.; Fioranelli, F.; Ding, C.; Zhao, H.; Sun, L.; Hong, H.; Lorandel, J.; Romain, O. Radar Signal Processing for Sensing in Assisted Living: The Challenges Associated With Real-Time Implementation of Emerging Algorithms. IEEE Signal Process. Mag. 2019, 36, 29–41.

- Ancortek. Frequency-Modulated Continuous-Wave Radar; Ancortek: Fairfax, VA, USA, 2019.

- Stimson, G.W. Introduction to Airborne Radar; SciTech Publishing: Mendham, NJ, USA, 1998; pp. 163–176.

- Fioranelli, F.; Shah, S.A.; Li, H.; Shrestha, A.; Yang, S.; Le Kernec, J. Radar Signatures of Human Activities [Data Collection]. 2019. Available online: http://researchdata.gla.ac.uk/848/ (accessed on 5 July 2020).

- Christ, M.; Braun, N.; Neuffer, J.; Kempa-Liehr, A.W. Time Series FeatuRe Extraction on basis of Scalable Hypothesis tests (tsfresh – A Python package). Neurocomputing 2018, 307, 72–77.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Centroid features for classification of armed/unarmed multiple personnel using multistatic human micro-Doppler. IET Radar Sonar Navig. 2016, 10, 1702–1710.

- Marinovic, N.; Eichmann, G. An expansion of Wigner distribution and its applications. In Proceedings of the ICASSP ’85, IEEE International Conference on Acoustics, Speech, and Signal Processing, Tampa, FL, USA, 26–29 April 1985; Volume 10, pp. 1021–1024.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Classification of Unarmed/Armed Personnel Using the NetRAD Multistatic Radar for Micro-Doppler and Singular Value Decomposition Features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1933–1937.

- Tahmoush, D.; Silvious, J. Radar micro-doppler for long range front-view gait recognition. In Proceedings of the 2009 IEEE 3rd International Conference on Biometrics: Theory, Applications, and Systems, Washington, DC, USA, 29–30 September 2009; pp. 1–6.

- Björklund, S.; Johansson, T.; Petersson, H. Evaluation of a micro-Doppler classification method on mm-wave data. In Proceedings of the 2012 IEEE Radar Conference, Atlanta, GA, USA, 7–11 May 2012; pp. 0934–0939.

- Li, J.; Shrestha, A.; Le Kernec, J.; Fioranelli, F. From Kinect skeleton data to hand gesture recognition with radar. J. Eng. 2019, 2019, 6914–6919.

- Boulic, R.; Magnenat-Thalmann, N.; Thalmann, D. A Global Human Walking Model with Real-Time Kinematic Personification. Vis. Comput. 1990, 6, 344–358.

- Guyon, I.; Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- Dash, M.; Liu, H. Feature Selection for Classification. Intell. Data Anal. 1997, 1, 131–156.

- Li, H.; Shrestha, A.; Fioranelli, F.; Le Kernec, J.; Heidari, H. FMCW radar and inertial sensing synergy for assisted living. J. Eng. 2019, 2019, 6784–6789.

- Garreau, G.; Andreou, C.M.; Andreou, A.G.; Georgiou, J.; Dura-Bernal, S.; Wennekers, T.; Denham, S. Gait-based person and gender recognition using micro-doppler signatures. In Proceedings of the 2011 IEEE Biomedical Circuits and Systems Conference (BioCAS), San Diego, CA, USA, 10–12 November 2011; pp. 444–447.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).