2. The WILLIAM Imaging System

The original idea of the WILLIAM system was to create a completely autonomous ground-based all-sky detecting system, which would monitor the night sky and would detect visible stellar objects and variable stars [

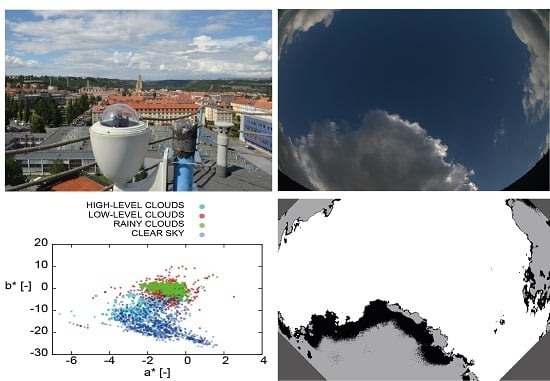

18]. We then aimed to evaluate weather conditions in real-time as a support experiment for autonomous robotic telescopes. The WILLIAM system automatically captures image data at 10 min intervals throughout the day by default, but until now, there has been no exploitation of the image data.

Currently, the WILLIAM system operates in Jarošov nad Nežárkou (South Bohemia, Czechia, GPS N, E). This station is equipped with a NIKON D5100 camera with a CMOS 23.5 × 15.6 mm sensor, which provides a maximum resolution of 3264 × 4928 pixels and 24 bit color depth. An all-sky scenery display with a field-of-view equal to ( with a Nikon full-frame camera) is provided by a Sigma 10 mm diagonal fish-eye lens. The standard setting for daytime image acquisition is an aperture of f/2.8, an exposure time of 1/2000 s, and ISO 100. Images are automatically saved on the remote server in the RAW data format.

The camera with supporting electronics is enclosed in a weather-proof housing box, allowing observation throughout the year, irrespective of the weather conditions. The housing was also tested in the climate chamber.

The WILLIAM system captures a huge amount of image data each year. One set of data has been manually labeled and made available in the WILLIAM Meteo Database (WMD, http://william.multimediatech.cz/meteorology-camera/william-meteo-database/). WMD contains 2044 daytime images with labeled cloud phenomena. The images have been preselected to cover a variety of conditions at different times of the day and throughout the year. The clouds in the images have been classified into ten fundamental cloud classes, according to the WMO (World Meteorological Organization [19]) classification. No division into cloud species and varieties has been specified. The cloud cover data for various cloud levels from the ALADIN (Aire Limitée, Adaptation Dynamique, Development International [20]) numerical weather model has also been exploited for the classification. The cloud types have been split into four cloud groups, also according to the WMO cloud level classification system. These cloud groups have been customized for the data from the WILLIAM system. Each cloud group incorporates the clouds with similar characteristics and appearance on all-sky images. The cloud groups, their color labels used in the diagrams, and their qualitative descriptors are listed in Table 1. The WMD contains 492 images with high-level clouds, 833 images with low-level cumulus-type clouds, 793 images with rain clouds, and 1411 images with clear sky. An image can contain more than one cloud group, so these counts sum to more than the 2044 images. The cloud groups serve as the primary classification categories and are based on the WMO system. The division into the cloud groups also helps to minimize the systematic classification bias. To obtain consistent color processing results, all RAW all-sky images have been saved in TIFF format and white-balanced to noon daylight of 6000 K.

3. Methods and Algorithms

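Let the input all-sky color RGB (Red, Green, Blue) image be represented as a set of slices $I(i, j, k)$, where the indices are used for the spatial and color dimensions: $i = 1, \dots, W$, $j = 1, \dots, H$, and $k = 1, \dots, C$. The symbols $W$ and $H$ are used for the width and the height of the image, and $C$ is the number of color channels that are used. Based on the results presented in Blažek [16], we selected the two most suitable color-spaces for cloud segmentation, CIE $L^*a^*b^*$ [21] and CIE XYZ [22]. These two color-spaces offer the greatest sensitivity in the chromaticity diagram for separating the cloud types from the blue sky tone. Applying the equations from [23], the input $I$ can be transformed into a new color basis as follows.

The RGB to CIE XYZ transformation can be described as:

$$\begin{pmatrix} X \\ Y \\ Z \end{pmatrix} = M \begin{pmatrix} R \\ G \\ B \end{pmatrix},$$

where, assuming the CIE standard illuminant D65, $M$ is the $3 \times 3$ conversion matrix given in [24].

To obtain the chromaticity x, y, z values from the CIE XYZ space, the tristimulus X, Y, Z values have to be normalized as:

$$x = \frac{X}{X + Y + Z}, \qquad y = \frac{Y}{X + Y + Z}, \qquad z = \frac{Z}{X + Y + Z}.$$

However, $x + y + z = 1$, and therefore, only two chromaticity components are necessary for defining the chrominance. Subsequently, the RGB to CIE $L^*a^*b^*$ transformation builds on the previous RGB to CIE XYZ transformation and is defined as:

$$L = 116\, f\!\left(\frac{Y}{Y_n}\right) - 16, \qquad a^* = 500 \left[ f\!\left(\frac{X}{X_n}\right) - f\!\left(\frac{Y}{Y_n}\right) \right], \qquad b^* = 200 \left[ f\!\left(\frac{Y}{Y_n}\right) - f\!\left(\frac{Z}{Z_n}\right) \right],$$

for:

$$f(t) = \begin{cases} t^{1/3}, & t > \left(\frac{6}{29}\right)^3, \\ \frac{1}{3} \left(\frac{29}{6}\right)^2 t + \frac{4}{29}, & \text{otherwise}, \end{cases}$$

and for the tristimulus values $X_n$, $Y_n$, and $Z_n$ of the reference white. For the purpose of this work, $a^*$, $b^*$ and x, z are chromaticity channels and L, Y are luminosity channels. The luminosity channels are used only for equalizing the sensor illumination, and they can be omitted for the segmentation method. Reducing the color-space dimension to a set of two independent chromaticity components leads to more effective convergence.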
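The chain of transformations described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the matrix `M_SRGB_D65` assumes linear RGB with sRGB primaries (the matrix from [24] may differ), the second color-space is assumed to be CIE L\*a\*b\*, and the function names are hypothetical.

```python
# Assumption: linear RGB in [0, 1] with sRGB primaries and a D65 white point.
M_SRGB_D65 = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
# Reference white taken as M * (1, 1, 1), so that RGB white maps to a* = b* = 0.
WHITE = tuple(sum(row) for row in M_SRGB_D65)


def rgb_to_xyz(rgb):
    """Linear RGB triple -> CIE XYZ tristimulus values."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in M_SRGB_D65)


def xyz_to_xz(xyz):
    """Chromaticity pair (x, z); y is redundant because x + y + z = 1."""
    total = sum(xyz)
    if total == 0.0:
        # Assumption: a black pixel has no defined chromaticity, use the white point.
        xyz, total = WHITE, sum(WHITE)
    return xyz[0] / total, xyz[2] / total


def _f(t):
    """Piecewise cube-root function of the CIE L*a*b* definition."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def xyz_to_lab(xyz):
    """CIE XYZ -> (L, a*, b*) relative to the reference white."""
    fx, fy, fz = (_f(v / w) for v, w in zip(xyz, WHITE))
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)
```

For segmentation, only the two chromaticity values per pixel would be kept, for example `xyz_to_lab(rgb_to_xyz(pixel))[1:]` or `xyz_to_xz(rgb_to_xyz(pixel))`. A bluish pixel such as `(0.2, 0.4, 0.9)` yields a negative b\* value, which is the kind of property that separates clear sky from clouds in the chromaticity plane.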

The proposed segmentation method was based on a heuristic approach derived from the modified k-means++ algorithm [25]. The maximum number of different cloud classes in the analyzed image is $K$. Typically, the maximum number can be set to the four cloud groups (see Table 1), plus other classes in reserve to cover other disturbing details in the image, e.g., parts of buildings, planes, and other artificial objects. The cost function for the analysis is set to:

$$J^{(s)} = \sum_{k=1}^{K} \sum_{\mathbf{p} \in S_k^{(s)}} \left\| \mathbf{p} - \mathbf{c}_k^{(s-1)} \right\|^2,$$

where $s$ is the index of the current iteration, $k$ is used for the index of the cloud group, and $S_k^{(s)}$ is the set of pixels assigned to group $k$. The vectors $\mathbf{p}$ are pixels of the image in the CIE $L^*a^*b^*$ ($a^*$, $b^*$) and CIE XYZ (x, z) representation, and two chromaticity channels were used. The luminosity channel (L or Y) was omitted for the cloud groups separation. The aim was to minimize the Euclidean distance of the image pixel color values to the centroids $\mathbf{c}_k$. The centroids were derived each time in the previous iteration $s - 1$. The initial approximation of the centroid position can be set as:

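The value of the criterion function $J^{(s)}$ was calculated in each step. The distance between a pixel $\mathbf{p}$ and the center of the cloud centroid $\mathbf{c}_k$ is expressed as:

$$d_k(\mathbf{p}) = \left\| \mathbf{p} - \mathbf{c}_k \right\| = \sqrt{(p_1 - c_{k,1})^2 + (p_2 - c_{k,2})^2},$$

where the subscripts 1 and 2 denote the two chromaticity channels. Pixels with the distance-satisfying condition: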

are marked as potentially classified as a single cloud class. A sensitivity parameter controls the convergence of the method. The segmentation process is completed when each pixel can be distinguished as a single cloud group (see Table 1) in the chromaticity color-space, or when the maximum number of iterations has been reached. The input image can also contain unwanted artificial objects. To remove these areas of the image, a special pixel color mask is applied to the images. These objects can be simply masked out as an additional color cluster.
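To make the clustering step more concrete, the following sketch runs a plain k-means++ clustering on two-channel chromaticity values. It is a stand-in under stated assumptions, not the modified algorithm of this work: the seeding is the standard k-means++ rule, the stopping test uses a hypothetical tolerance `tol` on the centroid shift in place of the sensitivity parameter, and all names are illustrative.

```python
import random


def sq_dist(p, c):
    """Squared Euclidean distance between two chromaticity pairs."""
    return (p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2


def kmeans_pp(points, k, max_iter=50, tol=1e-6, seed=0):
    """Cluster 2-D points; returns (labels, centroids)."""
    rng = random.Random(seed)
    # k-means++ seeding: first centroid uniformly at random, the others with
    # probability proportional to the squared distance to the nearest centroid.
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        weights = [min(sq_dist(p, c) for c in centroids) for p in points]
        if sum(weights) == 0.0:
            centroids.append(rng.choice(points))
        else:
            centroids.append(rng.choices(points, weights=weights, k=1)[0])

    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: every pixel goes to its nearest centroid.
        labels = [
            min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points
        ]
        # Update step: each centroid becomes the mean of its members.
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                updated.append((
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                ))
            else:
                updated.append(centroids[j])  # keep an empty cluster in place
        shift = max(sq_dist(a, b) for a, b in zip(centroids, updated))
        centroids = updated
        if shift < tol:  # converged: centroids no longer move
            break
    return labels, centroids
```

In use, `points` would hold one chromaticity pair per unmasked pixel and `k` would be the number of cloud groups plus the reserve classes for artificial objects. The generated segments would then still need to be assigned to cloud groups, as described in the evaluation below.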

4. Results and Discussion

Since all of the images have been classified and evaluated, the next step was an investigation of the segmentation accuracy of the proposed method. Two different color-spaces were chosen for the comparison: CIE and the CIE XYZ space.

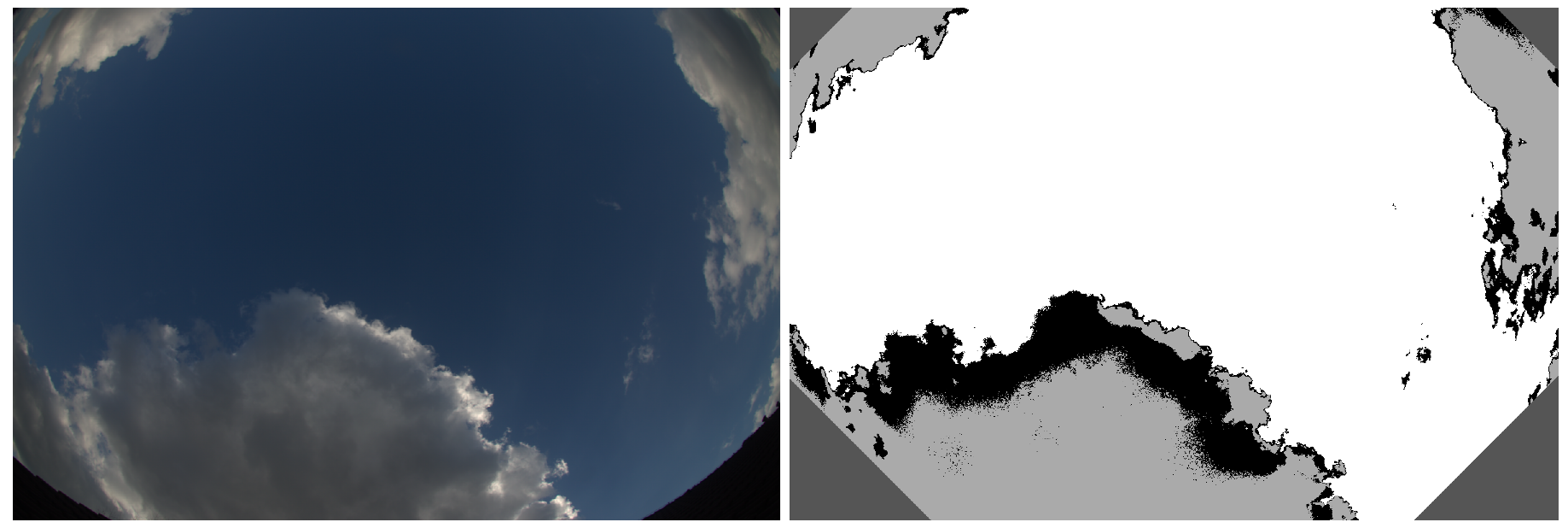

Figure 2 presents the segmentation of the phenomena in the

,

color channels.

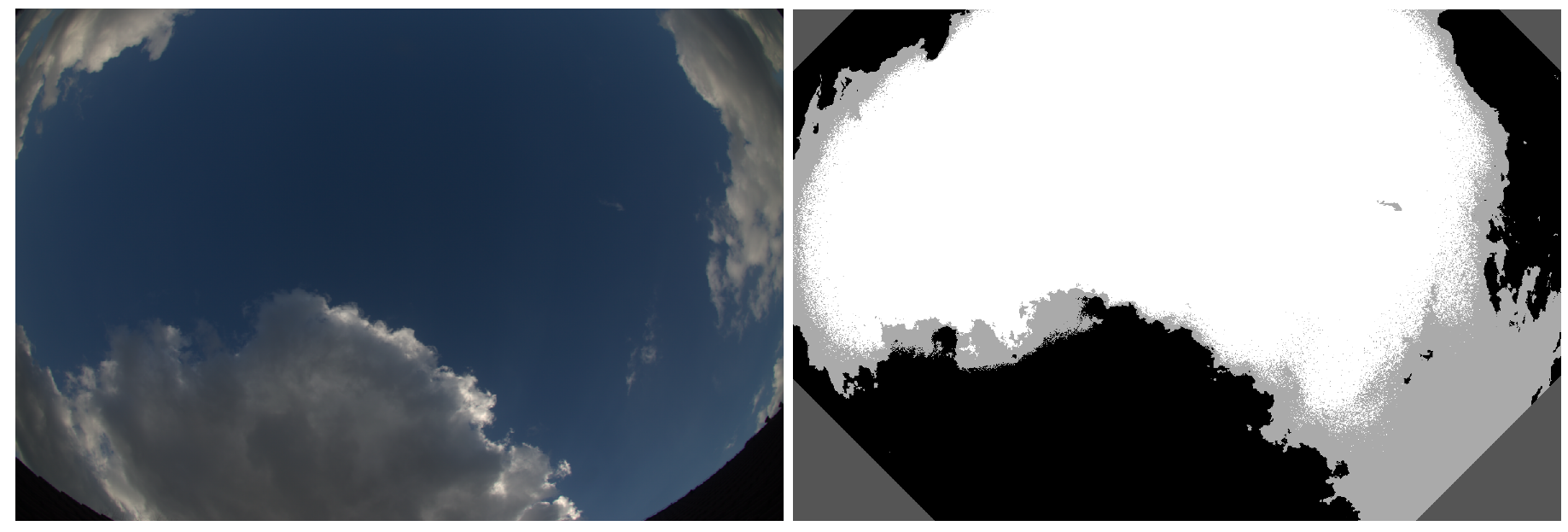

Figure 3 presents a similar segmentation in the

x,

z channels. Firstly, a visual inspection of the segmentation accuracy was done for all images in the WMD database. In general, the separation of the clouds and the clear sky segments was more precise in the

,

channels than in the

x,

z channels. In particular, parts of low-level clouds were frequently separated into one of the produced segments together with a clear sky in the

x,

z channels (see the light-gray segment in

Figure 3). By contrast, the clear sky and the clouds were very precisely separated into stand-alone segments in the

,

channels. We supported these observations by several segmentation accuracy metrics such as pixel accuracy, the F-score, also known as the Dice coefficient, and IoU (Intersection over Union), also known as the Jaccard coefficient.

In the WMD, the cloud groups were annotated (classified) for each image. In addition, each cloud group had its own manually labeled ground truth segments. Ground truth represents the pixel areas that contained a particular cloud group, or artificial objects and edges, in an image. If an image contained several cloud groups, each group in the image had its own ground truth. The artificial objects also had their own ground truth pixel area. The k-means++ method produced several segments, which had to be manually assigned to one of the cloud groups or to the artificial objects. One cloud group could comprise several generated segments, which were joined together. Subsequently, the pixels within the generated and classified segment could be compared with the pixels of the equally classified ground truth. The number of different ground truth segments in the image was equal to $N$. The number of generated and classified segments (pixel areas that belonged to the cloud groups or artificial objects) also had to be equal to $N$. Therefore, the score of each metric for one image had to be calculated separately for $N$ cases and then averaged over $N$. Assume a binary representation, where pixels are either zero if they do not belong to the selected area (segment) or one if they belong to it. Let there be a pixel-wise task, where pixels within a generated segment are evaluated against pixels of a ground truth segment as TP (True Positive), TN (True Negative), FP (False Positive), and FN (False Negative). For one image, assume $N$ such pixel-wise tasks. Then, the pixel accuracy metric (PA), F-score, and IoU, respectively, are defined as:

$$\mathrm{PA} = \frac{1}{N} \sum_{n=1}^{N} \frac{TP_n + TN_n}{TP_n + TN_n + FP_n + FN_n},$$

$$\text{F-score} = \frac{1}{N} \sum_{n=1}^{N} \frac{2\,TP_n}{2\,TP_n + FP_n + FN_n},$$

$$\mathrm{IoU} = \frac{1}{N} \sum_{n=1}^{N} \frac{TP_n}{TP_n + FP_n + FN_n}.$$

Figure 4 presents the results of the metrics for our segmented all-sky images. All three metrics showed higher values and lower variation for the cloud group segmentation in the $a^*$, $b^*$ color channels than in the x, z color channels. This, therefore, indicated higher segmentation accuracy of the cloud groups within the $a^*$, $b^*$ channels.
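The per-image evaluation described above (one binary pixel-wise task per ground truth segment, averaged over all tasks) can be sketched as follows. The function names and the flat 0/1 mask representation are assumptions made for illustration only.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for two flat binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, tn, fp, fn


def segmentation_scores(mask_pairs):
    """Mean pixel accuracy, F-score and IoU over (generated, ground truth) pairs."""
    if not mask_pairs:
        raise ValueError("at least one mask pair is required")
    acc = f_score = iou = 0.0
    for pred, truth in mask_pairs:
        tp, tn, fp, fn = confusion_counts(pred, truth)
        acc += (tp + tn) / (tp + tn + fp + fn)
        overlap = tp + fp + fn
        # Convention (assumption): two empty masks count as a perfect match.
        f_score += 2 * tp / (2 * tp + fp + fn) if overlap else 1.0
        iou += tp / overlap if overlap else 1.0
    n = len(mask_pairs)
    return acc / n, f_score / n, iou / n
```

For one image, `mask_pairs` would contain one pair per cloud group (and one for the artificial objects), each pairing the joined generated segments with the equally classified ground truth area.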

After the visual inspection combined with the results of the introduced objective metrics, we could conclude that the $a^*$, $b^*$ color channels were more suitable than the x, z color channels for color-based segmentation of all-sky images. Due to the inaccurate separation in the x, z channels, an additional bias in the color values of the cloud segments was anticipated.

For the investigation of the color features of the cloud groups, it was convenient to extract the color values (features) from the generated segments. Subsequently, the distribution of these cloud-segment color features was investigated within the color-spaces that were introduced. The original images were again transformed into the CIE XYZ and CIE $L^*a^*b^*$ color-spaces. During the previous evaluation of the segmentation accuracy, the segments generated by k-means++ could be merged and classified as one of the cloud groups. The pixel areas in the re-transformed images that belonged to the classified segments were extracted. For such segments, the mean values of each color-space channel were calculated. Due to the repeated color transformations, we were also able to extract the luminosity channels Y and L of the cloud group segments. It was possible to compare the features of the cloud-group segments in the chrominance and luminosity channels, even though the luminosity channels were previously omitted from the segmentation process. For the investigation of cloud-segment color features, we exploited the diagrams of the selected color-space channels plotted against each other (Figure 5, Figure 6 and Figure 7). To clarify, each data point in the following diagrams represents a segment classified into one of the four main cloud groups. For example, Figure 2 represents two segments joined and classified as low-level clouds and one segment of the clear sky. However, due to the inaccurate segmentation, Figure 3 represents one classified segment of low-level clouds and two joined and classified segments of clear sky. The segments of masked out artificial objects were generally omitted from the color evaluation.
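The feature extraction step, in which the mean value of every color channel is computed for each classified segment, can be sketched as below. The function name, the label strings, and the tuple-per-pixel layout are illustrative assumptions and not part of the original processing chain.

```python
from statistics import mean


def segment_features(pixels, labels):
    """Mean of every color channel for each classified segment.

    pixels: sequence of per-pixel channel tuples, e.g. (L, a, b)
    labels: sequence of segment labels, one per pixel
    """
    groups = {}
    for pixel, label in zip(pixels, labels):
        groups.setdefault(label, []).append(pixel)
    # zip(*members) regroups the pixel tuples into one sequence per channel.
    return {
        label: tuple(mean(channel) for channel in zip(*members))
        for label, members in groups.items()
    }
```

Each returned tuple corresponds to one data point in the color diagrams. Pixels belonging to masked out artificial objects would simply be left out of `pixels` before the call.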

In this paper, we present and discuss only those color diagrams with the most promising and most significant results. The color diagrams that we introduce here mainly show the color components of the CIE $L^*a^*b^*$ color-space. Only to support the exclusion of the CIE XYZ color-space from the cloud-segment color analysis, we also present a color diagram of the x, z components in which the segmentation proceeded. As expected, the previous segmentation inaccuracy significantly influenced the distribution of our segments within the x, z diagram (Figure 5). There was massive overlapping of the cloud segment data points by the clear sky data points (blue). Due to this overlapping distribution, it was not possible to perform any further statistical analysis or to make any other use of the data. In general, we considered that the CIE XYZ color-space was unsuitable for cloud color segmentation of all-sky images.

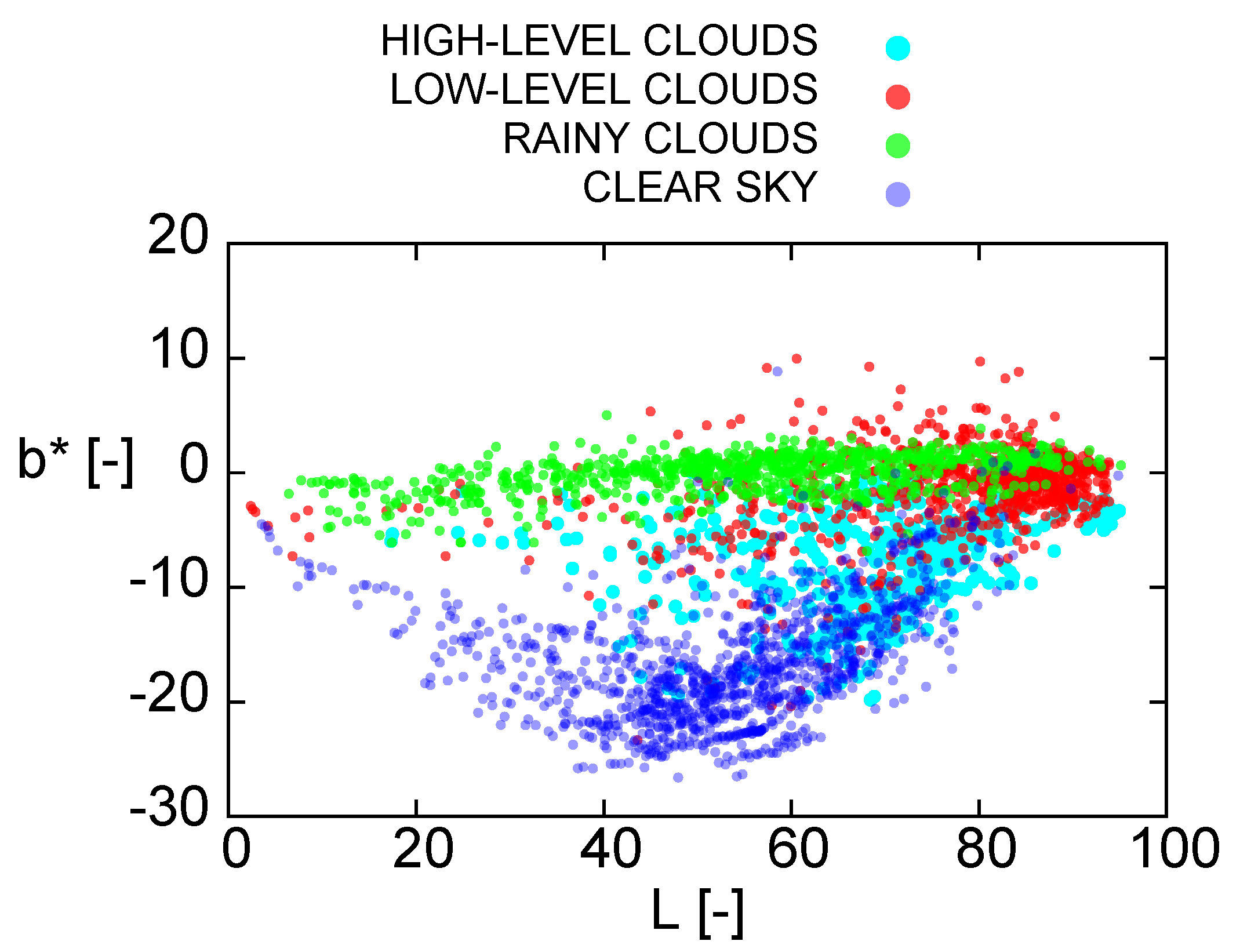

The diagram of L against a chromaticity channel (Figure 6) and the $a^*$, $b^*$ diagram (Figure 7) offered much more promising data distributions. The inaccurate segmentation and cloud separation in the image data taken close to sunrise or sunset were already observed during the classification process. This inaccuracy was imprinted in the diagrams. Although we segmented the data only in the chrominance channels, the low-light conditions affected the segmentation results. The F-score lower extreme was close to 0.79. This conclusion was confirmed by the diagram in Figure 6. The points representing clear sky (blue) with low L values were closer to the points representing rain clouds (green). However, with average L values, the clear sky points were completely separated from the rain cloud points. A scene with high solar irradiation also significantly affected the color values of the segments. This could be observed in the same diagram, in which the points of all groups with L values greater than 80 significantly overlapped each other.

Despite the frequent overlapping caused by the significant number of segments and the segmentation bias, it was apparent that each of the cloud groups formed a cluster within the diagram. If we evaluated each cloud group separately and computed the mean value with the standard deviation for each group, we obtained the diagram in Figure 8, in which these centroids were clearly separated. The separation between the cloud group data distributions was measured by the Bhattacharyya coefficient [26,27], which approximates the relative closeness between the distributions. BC values are equal to one when two data distributions are identical and equal to zero when two data distributions have no intersection. The Bhattacharyya coefficient (BC) is defined as:

$$BC = \exp(-D_B),$$

where $D_B$ represents the Bhattacharyya distance between two multivariate distributions with their mean values $\mu_1$, $\mu_2$ and covariance matrices $\Sigma_1$, $\Sigma_2$. The Bhattacharyya distance is expressed as:

$$D_B = \frac{1}{8} (\mu_1 - \mu_2)^{T} \Sigma^{-1} (\mu_1 - \mu_2) + \frac{1}{2} \ln \frac{\det \Sigma}{\sqrt{\det \Sigma_1 \det \Sigma_2}}, \qquad \Sigma = \frac{\Sigma_1 + \Sigma_2}{2}.$$

In particular, the rain cloud (green) and clear sky (blue) segments, and the low-level cumulus-type cloud (red) and clear sky (blue) segments, reported low values of the BC, 0.05 and 0.13, respectively, and therefore, a high separation between them within the channels of Figure 6. All values of the BC for the cloud groups within these channels are listed in Table 2. The values of BC in the table indicated that the cloud groups’ data distributions were non-identical in this space. It was, therefore, possible to exploit the cloud groups in this space as classes for a classification task. However, due to the frequent overlapping of some data points, a classification task carried out only in this space may be problematic. It was apparent that even if the luminosity was omitted from the segmentation process, the illumination conditions of a captured scene affected the color values of the cloud segments. The color of the cloud groups varied during the day. For future work, it is essential to incorporate machine learning techniques into the development of the cloud classification. Additional parameters, which would take into consideration the illumination of the scene and the variation of the cloud color during the day, have to be introduced.
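As an illustration of how such BC values can be obtained, the sketch below evaluates the Bhattacharyya coefficient for two bivariate distributions summarized by their means and 2 × 2 covariance matrices. It uses the closed form for normal distributions, which is an assumption about how the cloud-group clusters are modeled, and the function names are hypothetical.

```python
import math


def det2(m):
    """Determinant of a 2 x 2 matrix given as nested sequences."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def bhattacharyya_coefficient(mu1, cov1, mu2, cov2):
    """BC = exp(-D_B) for two bivariate normal distributions."""
    # Averaged covariance matrix of the two distributions.
    cov = [[(cov1[r][c] + cov2[r][c]) / 2.0 for c in range(2)] for r in range(2)]
    d = det2(cov)
    inv = [[cov[1][1] / d, -cov[0][1] / d], [-cov[1][0] / d, cov[0][0] / d]]
    dx, dy = mu1[0] - mu2[0], mu1[1] - mu2[1]
    # Mahalanobis-type term (mu1 - mu2)^T cov^-1 (mu1 - mu2).
    maha = dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy)
    dist = maha / 8.0 + 0.5 * math.log(d / math.sqrt(det2(cov1) * det2(cov2)))
    return math.exp(-dist)
```

Two identical clusters give a coefficient of one, and the value decays towards zero as the cluster means move apart relative to their spread, which matches the interpretation of the BC values in the tables.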

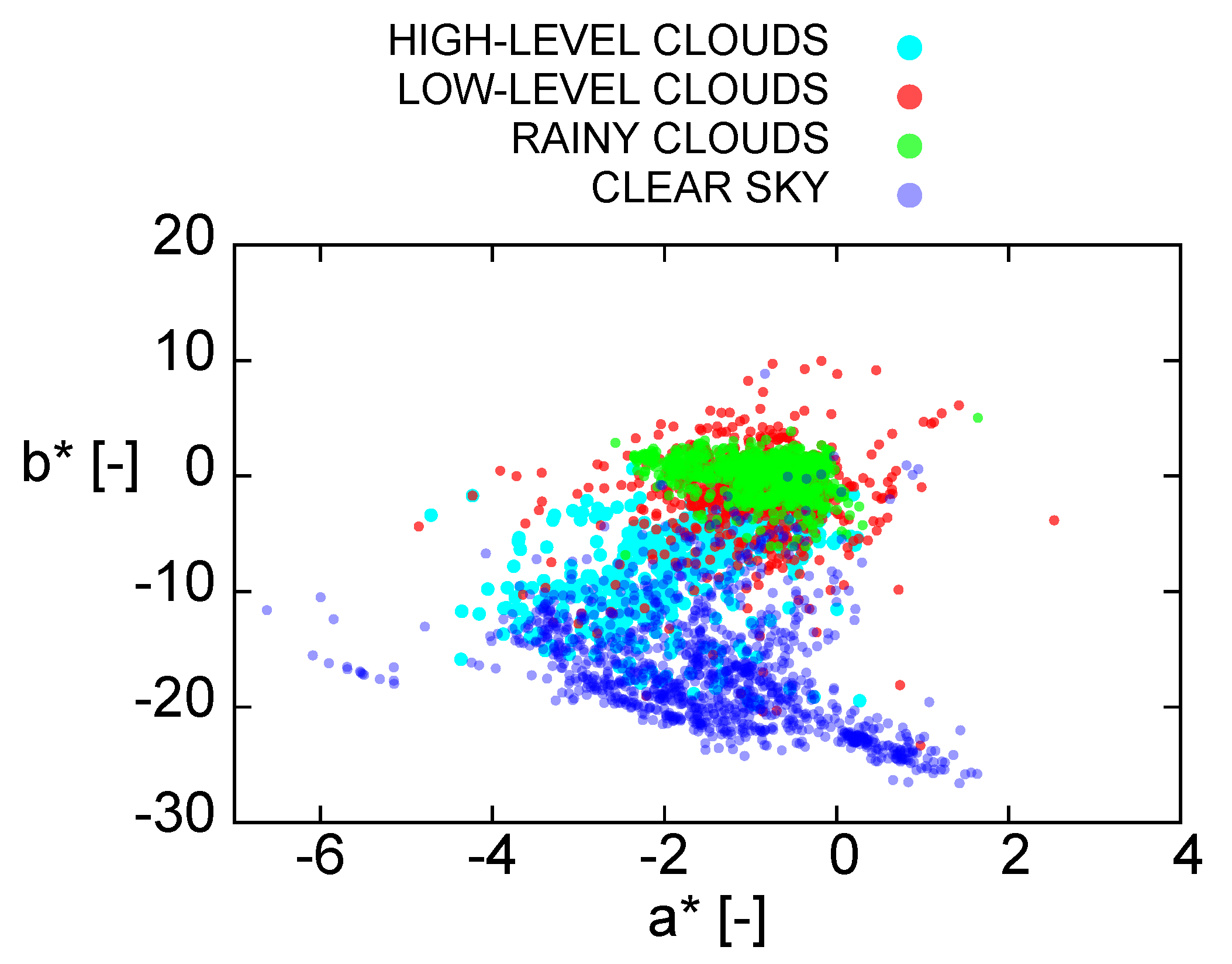

The next color diagram (Figure 7), which plots the two chromaticity components $a^*$ and $b^*$ against each other, also provided interesting results. In this diagram, the rain cloud segment points (green) had similar color features to those of the low-level cumulus-type clouds (red). This supported similar claims in [16]. Similarly, the high-level clouds (magenta) were located close to the points of clear sky (blue).

Despite the overlapping of some points, it was apparent that the low-level clouds (red) and the rain clouds (green) formed their own clusters within these channels. These clusters were clearly separated from the high-level clouds and the clear sky points, which formed clusters of their own. We therefore decided to calculate the mean value of these clusters with the standard deviation and to plot these centroids in the new color diagram (Figure 9). The separation of the centroids of the cloud groups confirmed the formation of the cloud-group clusters within the $a^*$, $b^*$ space. For the inspection of the separation between the cloud groups within the $a^*$, $b^*$ channels, the Bhattacharyya coefficient was again calculated. The values of the coefficient are listed in Table 3. In the $a^*$, $b^*$ space, BC also indicated that the cloud group distributions were non-identical. However, rain clouds and low-level clouds were more closely related in this space (higher BC), so it was more problematic to differentiate between them. The differentiation of the cloud groups in this space was still possible, and this space was valuable for a possible classification task. In the future, it would be necessary to incorporate all the cloud color features from the CIE $L^*a^*b^*$ color-space and also to investigate the cloud color variations during the day. In addition, for the classification of cloud types, the use of machine learning techniques, especially soft-classification methods, would be beneficial.

In general, the results confirmed that cloud detection on all-sky images could be performed by color segmentation within the CIE $L^*a^*b^*$ color-space. In addition, the color diagrams explored the diverse color features of the clouds merged into the defined groups. It was therefore possible to distinguish a variety of clouds on all-sky images according to their color features. Due to the sufficient quantity of the classified data from the WMD, the cloud color features could be exploited as training data for developing a new automatic cloud classification algorithm. The separation of the cloud groups within the color diagrams implied that a properly designed algorithm, based for example on machine learning techniques or on neural networks, could automatically classify the clouds on all-sky images after k-means++ preprocessing and extraction of the color features. This cloud classification algorithm could then be extended for detecting rain clouds or for predicting the probability of rain. The proposed method based on k-means++ and other required image processing techniques could be optimized further, and the algorithm could even operate in real-time on all-sky imaging systems. Color-based classification of clouds in all-sky images could therefore be a promising field of investigation for research groups with their own ground-based all-sky imaging system.