Localization in Unstructured Environments: Towards Autonomous Robots in Forests with Delaunay Triangulation

Abstract

1. Introduction

1.1. Research Hypotheses and Objectives

1.2. Localization in Autonomous Mobile Robots

1.3. Contribution and Structure

- the introduction of a Delaunay triangulation graph map for localization in forests; and

- the design and development of a framework to solve the vehicle tracking problem in a local coordinate system relying solely on lidar data, without GNSS or inertial sensor data.

2. Material and Methods

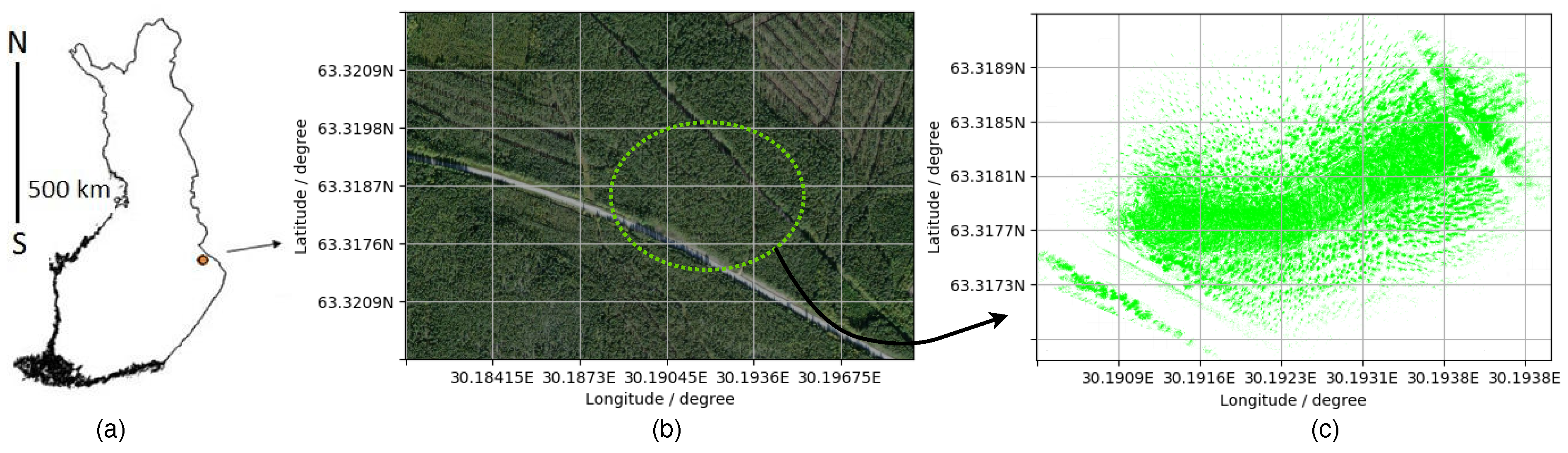

2.1. Study Area

2.2. System Hardware

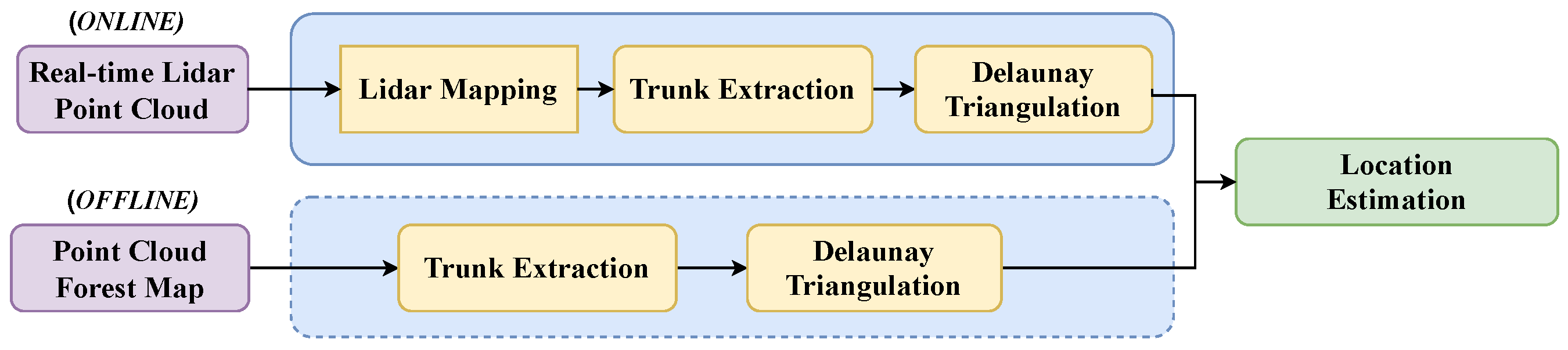

2.3. Methodology

- Point-cloud trunk segmentation from the global map (offline).

- Delaunay triangulation of the global map from the segmented trunk points (offline).

- Aggregation of 3D lidar scans into a local point cloud defining the robot’s position (online).

- Segmentation of trunks from the local point cloud (online).

- Delaunay triangulation from the local segmented trunk points (online).

- Estimation of a geometrical transformation that matches the local Delaunay triangulation with a subset of the global Delaunay triangulation (online).
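The triangulation and matching steps listed above can be illustrated with a minimal sketch. It assumes that each segmented trunk has already been reduced to a 2D position, builds the Delaunay triangulation by brute force from the empty-circumcircle property (adequate for a handful of points, not for a full map), and pairs local and global triangles by comparing sorted side lengths. The function names, the side-length descriptor and the tolerance `tol` are illustrative assumptions, not the implementation used in this work.

```python
from itertools import combinations
from math import dist


def circumcircle(a, b, c):
    """Return ((cx, cy), r_squared) of the circle through a, b, c, or None if collinear."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None  # degenerate (collinear) triple has no circumcircle
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy), (ax - ux) ** 2 + (ay - uy) ** 2


def delaunay(points):
    """Brute-force Delaunay triangulation: keep every triple whose circumcircle
    contains no other point. Cost grows as O(n^4); cocircular points may yield
    overlapping triangles."""
    triangles = []
    for i, j, k in combinations(range(len(points)), 3):
        cc = circumcircle(points[i], points[j], points[k])
        if cc is None:
            continue
        (ux, uy), r2 = cc
        if all((px - ux) ** 2 + (py - uy) ** 2 >= r2 - 1e-9
               for n, (px, py) in enumerate(points) if n not in (i, j, k)):
            triangles.append((i, j, k))
    return triangles


def side_lengths(points, tri):
    """Sorted side lengths of a triangle; unchanged by rotation and translation."""
    a, b, c = (points[n] for n in tri)
    return tuple(sorted((dist(a, b), dist(b, c), dist(c, a))))


def match_triangles(local_pts, global_pts, tol=0.05):
    """Pair local and global triangles whose sorted side lengths agree within tol
    (hypothetical tolerance, in the same unit as the coordinates)."""
    global_tris = [(t, side_lengths(global_pts, t)) for t in delaunay(global_pts)]
    matches = []
    for lt in delaunay(local_pts):
        ls = side_lengths(local_pts, lt)
        for gt, gs in global_tris:
            if all(abs(x - y) <= tol for x, y in zip(ls, gs)):
                matches.append((lt, gt))
    return matches
```

Because a Delaunay triangulation is preserved under rotation and translation, a local trunk set that is a rigidly moved copy of the global one yields the same index triples. In practice the local map covers only part of the global map, so only some triangles are expected to match.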

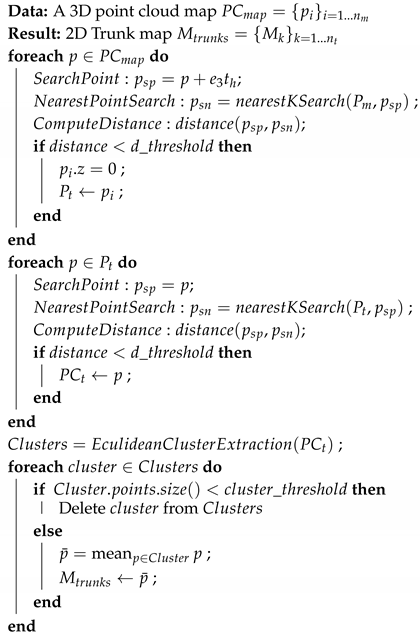

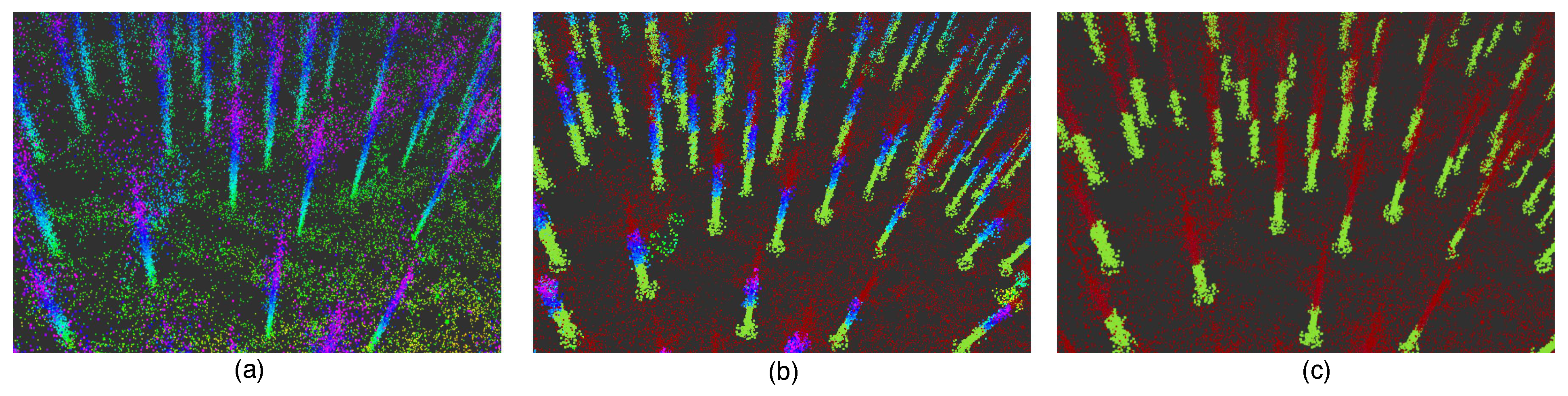

2.4. Trunk Point Cloud Segmentation

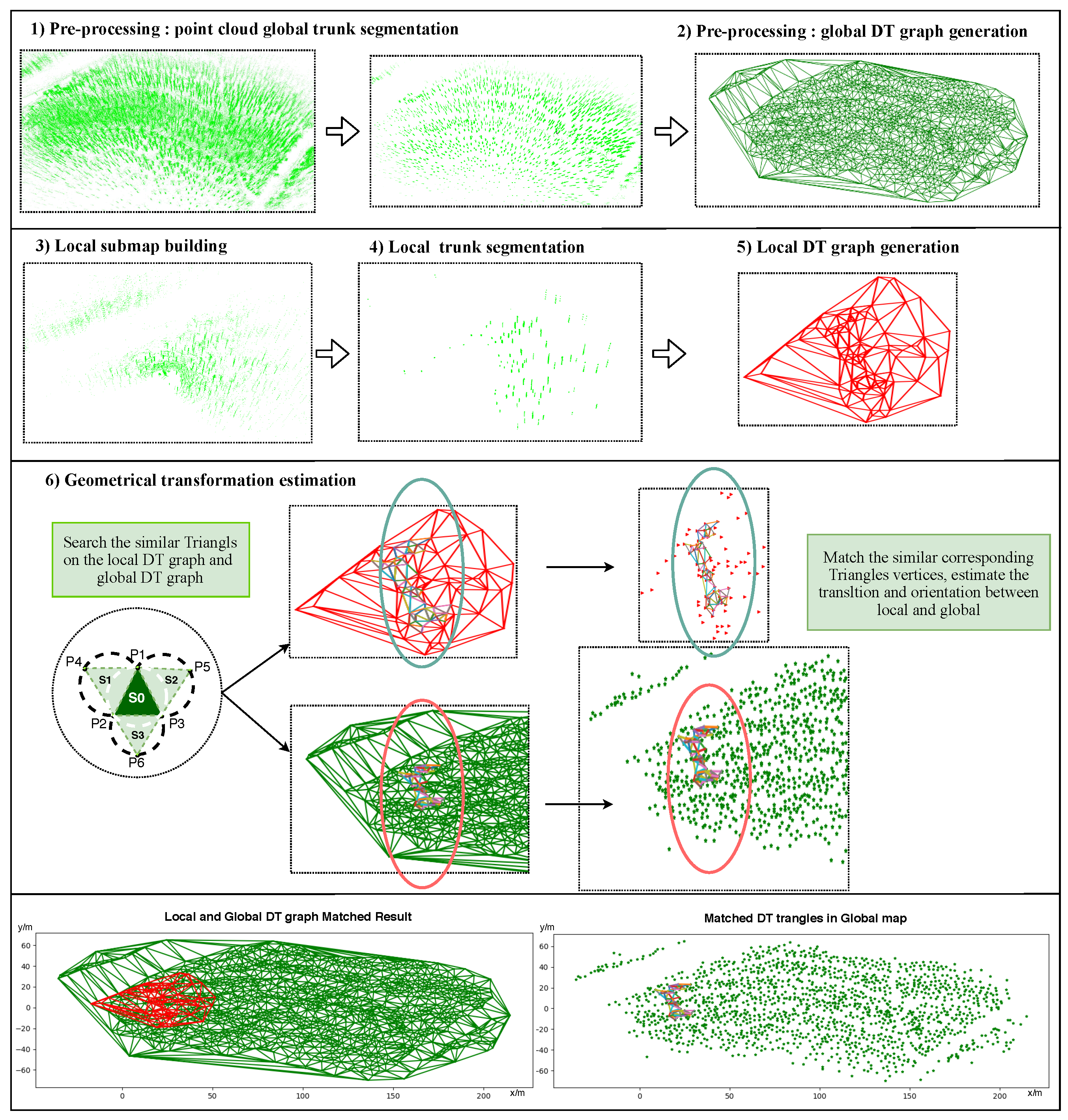

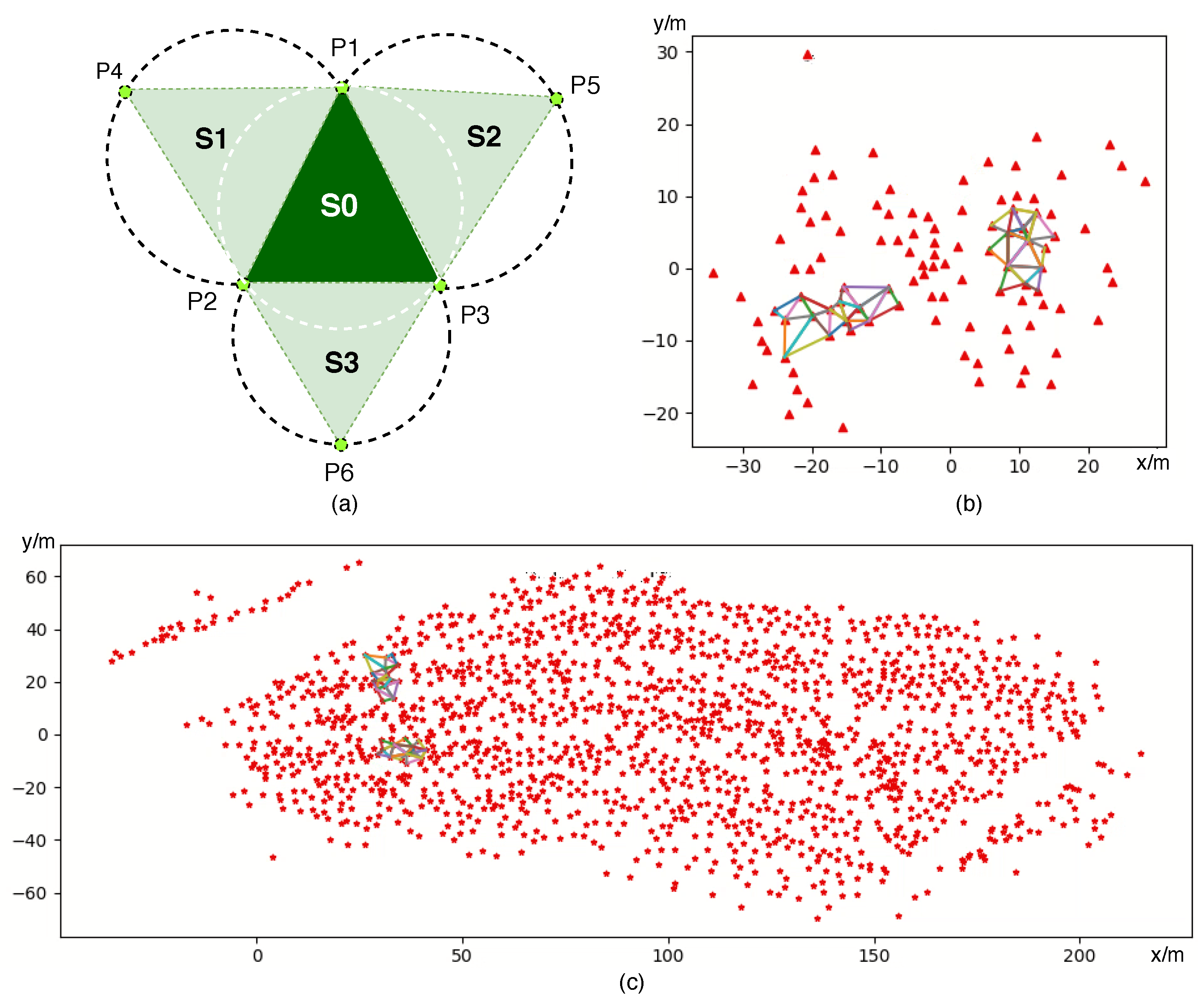

2.5. Global Map DT Graph Generation

Algorithm 1: Extracting trunks from the point cloud map.

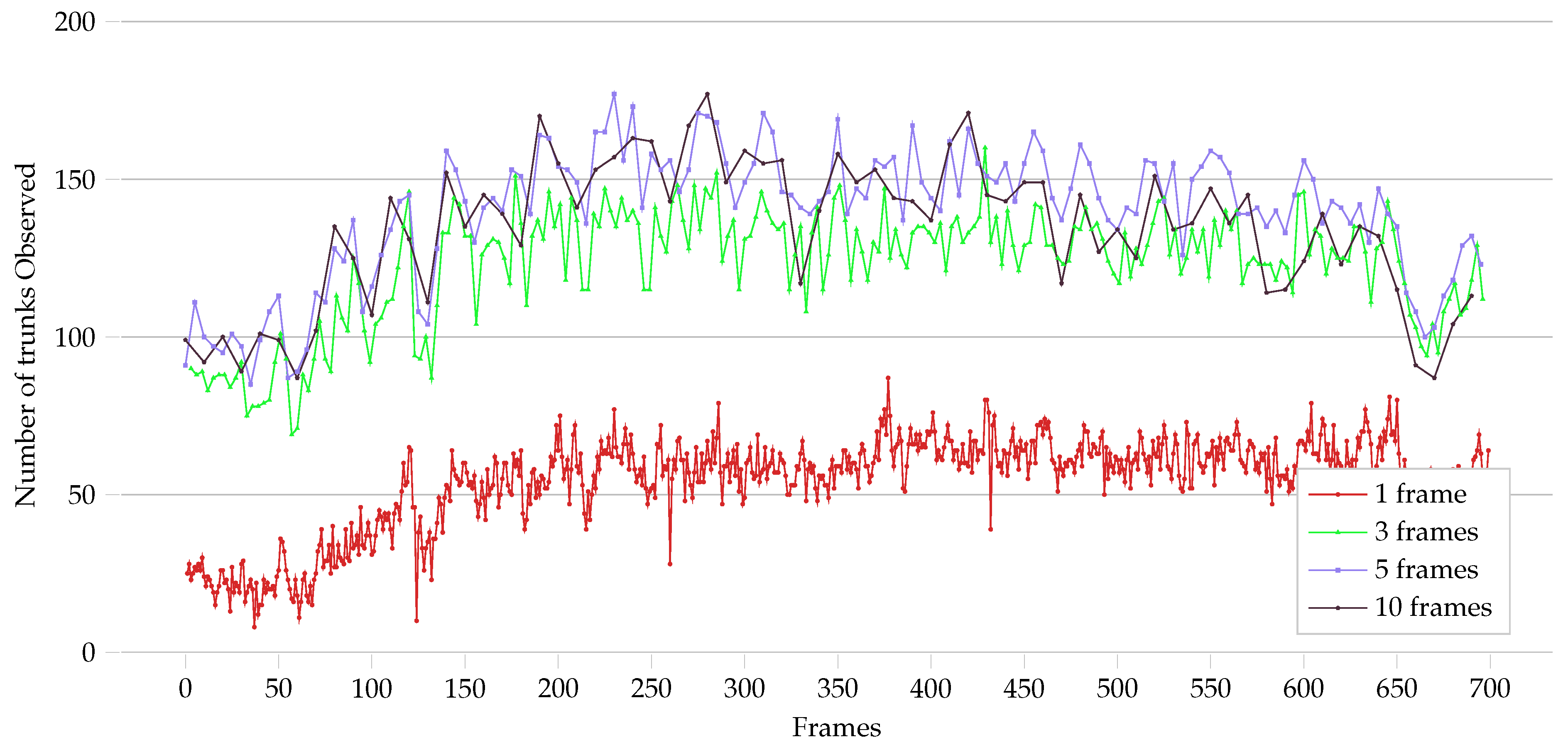

2.6. Local Map and Point Cloud Aggregation

2.7. Local DT Graph Generation

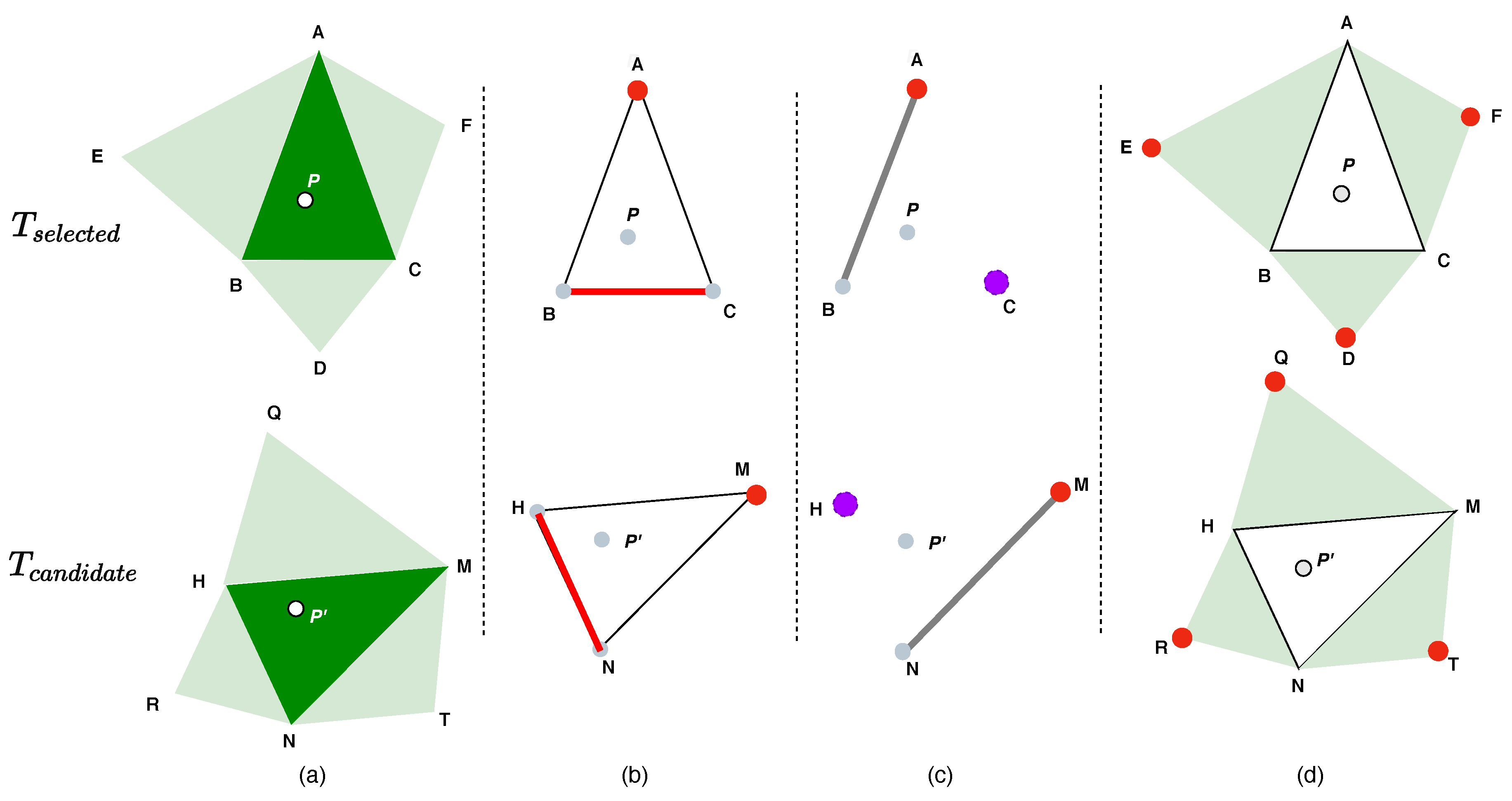

2.8. Matching Local and Global DT

2.8.1. Rotation and Translation Estimation
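As a hedged illustration of this step, the sketch below estimates a planar rotation angle and translation from already matched point pairs, for example the vertices of a matched local and global triangle. It uses the standard closed-form least-squares solution for a 2D rigid transform; the function name and the assumption that correspondences are given in matching order are illustrative and are not taken from the method described in this work.

```python
from math import atan2, cos, sin


def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle theta and translation (tx, ty) such that
    R(theta) * src[i] + t is as close as possible to dst[i]."""
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Sums of dot and cross products of the centered correspondences.
    a = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy)
            for p, q in zip(src, dst))
    b = sum((p[0] - sx) * (q[1] - dy) - (p[1] - sy) * (q[0] - dx)
            for p, q in zip(src, dst))
    theta = atan2(b, a)
    # The translation maps the rotated source centroid onto the target centroid.
    tx = dx - (cos(theta) * sx - sin(theta) * sy)
    ty = dy - (sin(theta) * sx + cos(theta) * sy)
    return theta, (tx, ty)
```

With noise-free correspondences from a single triangle the transform is recovered exactly up to floating-point error; with noisy trunk positions, pooling the vertices of several matched triangles gives a more stable estimate.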

2.8.2. Geometric Verification, Final Translation and Rotation Estimation

3. Results

3.1. Trunk Segmentation Performance

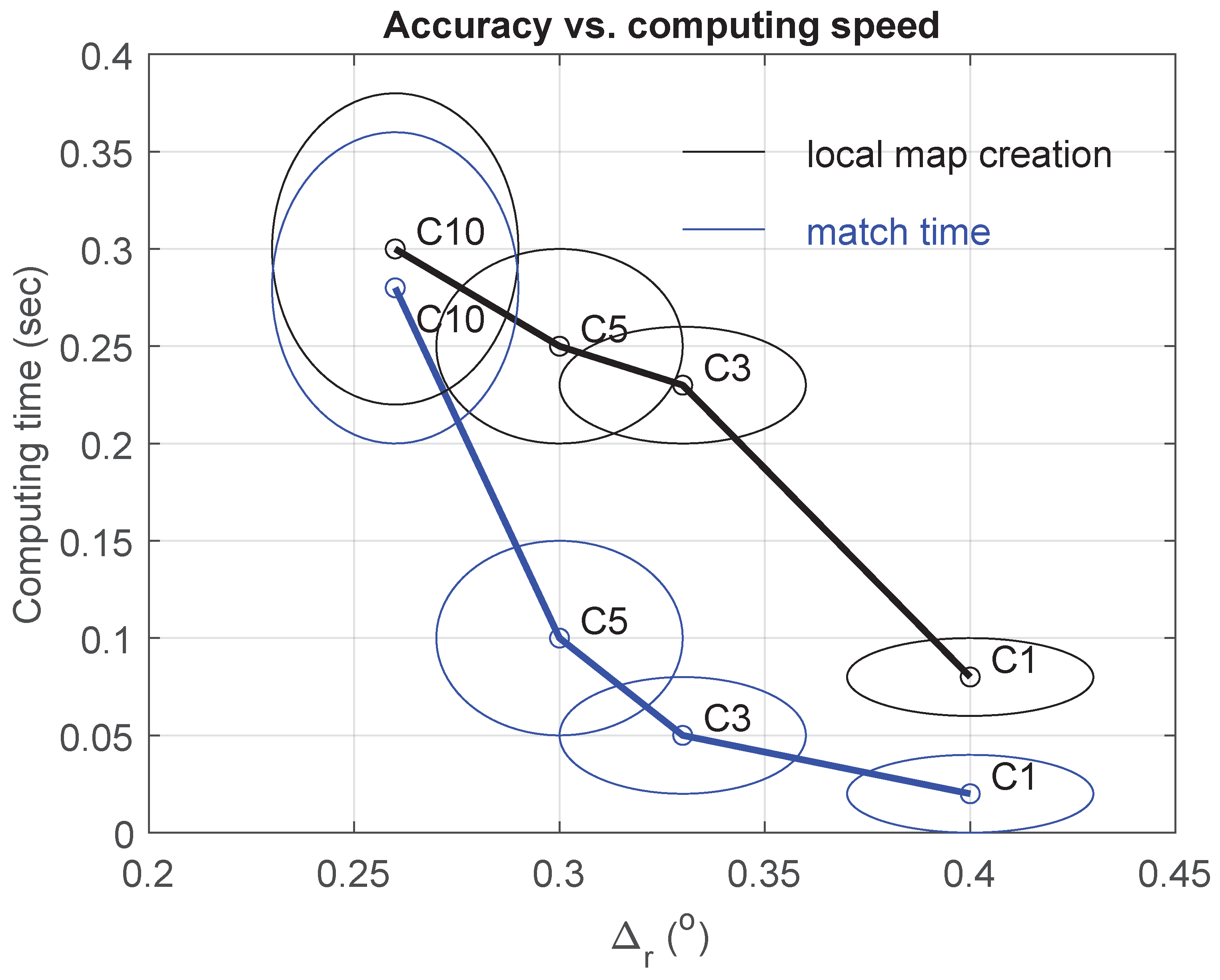

3.2. Computational Time and Accuracy

4. Discussion

4.1. Topology Mapping

4.2. Potential Accuracy Improvements

4.3. Potential Computational Improvements

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DT | Delaunay triangulation |
| SLAM | Simultaneous localization and mapping |

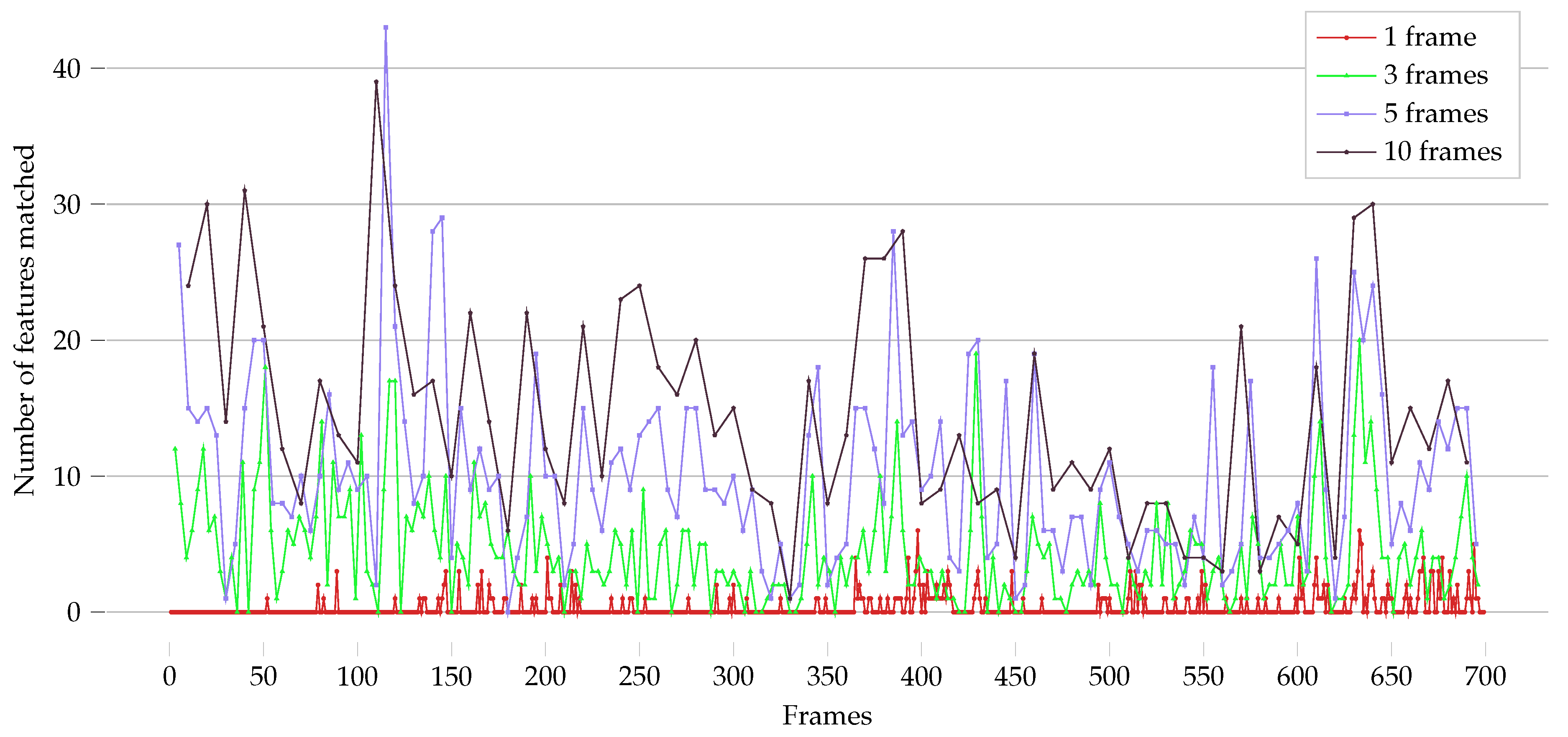

| Aggregated frames | Average number of trunks | Average number of matching triangles | Overall match success rate |
|---|---|---|---|
| 1 | 54.09 | 1.71 | 22.89% |
| 3 | 122.98 | 4.97 | 88.79% |
| 5 | 139.54 | 10.22 | 99.28% |
| 10 | 133.96 | 14.37 | 100% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Q.; Nevalainen, P.; Peña Queralta, J.; Heikkonen, J.; Westerlund, T. Localization in Unstructured Environments: Towards Autonomous Robots in Forests with Delaunay Triangulation. Remote Sens. 2020, 12, 1870. https://doi.org/10.3390/rs12111870