Abstract

Joint intrinsic and extrinsic calibration from a single snapshot is a common requirement in coastal monitoring practice. This work analyzes the influence of different aspects, such as the distribution of Ground Control Points (GCPs) or the image obliquity, on the quality of the calibration for two different mathematical models (one being a simplification of the other). The performance of the two models is assessed using extensive laboratory data (i.e., snapshots of a grid). While both models are able to properly adjust the GCPs, the simpler model gives a better overall performance when the GCPs are not well distributed over the image. Furthermore, the simpler model allows for better recovery of the camera position and orientation.

1. Introduction

Coastal monitoring systems using digital video cameras have become a widely used tool to study near-shore processes since the advent of the ARGUS system over 20 years ago [1,2]. At present, besides the original ARGUS developments, there exists a wide variety of packages to manage image acquisition and processing ([3,4,5,6], among others). Video monitoring systems have been shown to be useful, to cite just a few examples, in obtaining intertidal and subaquatic bathymetries [7,8,9], in detecting and analyzing shoreline dynamics [10,11], and in studying the morphodynamics of beach systems [12,13]. Camera calibration is critical in coastal video monitoring systems, as it allows us to relate pixels in the images to real-world co-ordinates and vice versa.

Camera calibration in coastal video monitoring follows close-range photogrammetric procedures [1,14]. Even though the distances to the objects monitored (i.e., beaches) are up to ∼1000 m, the hypotheses of close-range calibration apply (e.g., atmospheric refraction is negligible, whereas lens distortion is not). Actually, in ARGUS-like fixed stations, it is common practice to obtain the parameters related to lens distortion (intrinsic calibration parameters) prior to final deployment through classic close-range methods, using chessboard or similar patterns [1,6]. The camera position and orientation (extrinsic calibration parameters) are then obtained through Ground Control Points (GCPs); that is, pixels whose real-world co-ordinates are known. The literature on full (intrinsic and extrinsic parameters) close-range photogrammetric camera calibration is extensive, and includes studies on the governing equations [15,16,17], the calibration procedures [17,18,19,20,21], and applications including structure-from-motion and multi-camera approaches [22,23,24]. However, there have been few works dealing with the full calibration from a single image using a few GCPs.

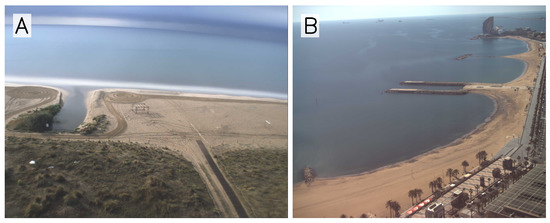

In most coastal ARGUS-like monitoring systems, the intrinsic parameters are obtained prior to the final deployment of the camera, as mentioned above, and the extrinsic parameters are then obtained through GCPs. In many practical situations, however, intrinsic calibration of the camera is not available. This is the case, for example, when using available surfcams around the globe to obtain morphodynamic information [25] or in the CoastSnap project [14], a citizen science project in which citizens provide smartphone images for some given beaches. In general, taking advantage of the huge amount of freely available coastal images for morphodynamic studies and coastal management is a challenge for the research community. In such situations, all the calibration parameters (i.e., both intrinsic and extrinsic) must be obtained from the GCPs [25]. In a calibration campaign, a large number of targets (GCPs) can be spread over the entire image and high quality calibrations can be obtained. In the practical situation we want to address, it is only possible to use fixed features and, as large portions of the images are sand, water, or sky, the GCPs are restricted to a relatively small part of the image. For illustrative purposes, Figure 1 includes two snapshots from Castelldefels and Barcelona beaches (Spain, see coo.icm.csic.es): in Figure 1A, the GCPs are usually in the lower half of the image, while in Figure 1B they mainly lie in the right and lower parts. In addition, the number of points used is usually small; for example, [14] used only seven GCPs for georectification of community-contributed images. Such a low number of GCPs also raises the question of which calibration model is the most suitable while remaining in the domain of close-range photogrammetry. Please note that this is very different from what is usually found in close-range photogrammetry, where the calibration is done using a large number of points. In summary, new demands on coastal monitoring systems require further understanding of image calibration when a reduced number of GCPs must be chosen within only a small region of the image.

Figure 1.

Images from Castelldefels (A, at N, E) and Barcelona (B, at N, E) video monitoring stations (coo.icm.csic.es).

The main objective of this contribution is to determine the most suitable GCP distributions and calibration model to georectify images on coastal monitoring systems. To do this, we assume that there is only a single snapshot available to obtain a full camera calibration (intrinsic and extrinsic parameters) with a reduced number of GCPs. In addition, the premises of close-range photogrammetry and the non-use of wide angle lenses are considered. Two mathematical models are considered, one being a simplification of the other. The influence of the obliquity of the snapshot or the GCP distribution throughout the image on the calibration quality is analyzed. The ability to accurately recover some useful calibration parameters (e.g., camera position) is also discussed.

2. Materials and Methods

2.1. Camera Mathematical Models

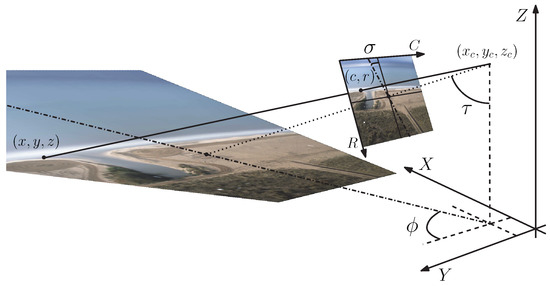

The pinhole model [26], together with the Brown–Conrady [27] model for decentered lens distortion, are the governing equations typically used for cameras in coastal video monitoring systems; see Figure 2. Given the real-world co-ordinates of a point, , its pixel position, in terms of column c and row r, is given by:

where , , , , , , , and are free parameters; higher order distortion terms are avoided for we do not consider wide angle lenses. Furthermore, and and are given by

where is the optical center (camera position); , , and are orthogonal unit vectors given by the Eulerian angles of the camera (azimuth , roll , and tilt ); and and stand for the pixel co-ordinates of the center of the image (known). The inversion of the above Equations (1) and (2) allows us to obtain the real-world co-ordinates of a pixel if an extra condition is given (typically, the point being in a horizontal plane ); this inversion requires the use of iterative procedures to solve the implicit equations.
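As an illustration, the real-world to pixel mapping described above can be sketched in a few lines of Python. The sketch assumes one common form of the pinhole model with Brown–Conrady distortion (the ordering of the tangential terms follows the convention used, e.g., in OpenCV). The function name `project` and the parameter names `k1`, `k2`, `p1`, `p2`, `s_c`, `s_r`, `o_c`, and `o_r` are labels chosen here for the radial distortion, tangential distortion, pixel size, and principal point in pixels; the camera unit vectors are passed in directly rather than built from the Eulerian angles. It is a sketch under these assumptions, not a reference implementation.

```python
def project(point, cam, e_u, e_v, e_f,
            k1=0.0, k2=0.0, p1=0.0, p2=0.0,
            s_c=1.0, s_r=1.0, o_c=0.0, o_r=0.0):
    """Map a real-world point to (column, row).

    point, cam    : (x, y, z) of the point and of the optical center
    e_u, e_v, e_f : orthogonal unit vectors of the camera (assumed given)
    All parameter names are illustrative assumptions.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    rel = [p - c for p, c in zip(point, cam)]
    depth = dot(rel, e_f)              # distance along the optical axis
    u = dot(rel, e_u) / depth          # undistorted, dimensionless co-ordinates
    v = dot(rel, e_v) / depth
    d2 = u * u + v * v
    radial = 1.0 + k1 * d2 + k2 * d2 * d2
    # radial plus tangential (decentered lens) distortion
    u_d = u * radial + p2 * (d2 + 2.0 * u * u) + 2.0 * p1 * u * v
    v_d = v * radial + p1 * (d2 + 2.0 * v * v) + 2.0 * p2 * u * v
    # dimensionless co-ordinates to pixels
    return u_d / s_c + o_c, v_d / s_r + o_r


# Example: camera at the origin looking along +z, with and without distortion.
axes = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
print(project((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), *axes,
              s_c=0.001, s_r=0.001, o_c=500.0, o_r=300.0))
print(project((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), *axes, k1=0.1,
              s_c=0.001, s_r=0.001, o_c=500.0, o_r=300.0))
```

Setting `k2`, `p1`, and `p2` to zero and using a single pixel size gives a model with one radial term only, which is the kind of simplification discussed in the text.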

Figure 2.

Real-world to pixel transformation: camera position (, , ) and Eulerian angles (, and ).

Overall, 14 parameters need to be established to calibrate the above (mathematical) camera model. The intrinsic parameters are as follows:

- radial and tangential distortions: , , , and (dimensionless);

- pixel size: and (dimensionless); and

- decentering: and (in pixels),

and the extrinsic parameters are:

- real world co-ordinates of the center of vision: , , and (in units of length); and

- Eulerian angles: , , and (in radians).

The above equations (including the set of 14 parameters) are referred to as the “complete” model, or . For most present-day cameras, it is reasonable to assume that the radial distortion is parabolic (i.e., ), the tangential distortion is negligible (), the pixels are square (), and the decentering is also negligible ( and ). These hypotheses lead to a “reduced” model, herein called , with only 8 free parameters (, , , , , , , and ). Interestingly, the inversion of the model equations becomes explicit when model is considered (i.e., it reduces to solving a cubic equation).
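The explicit inversion mentioned for the reduced model can be illustrated as follows. The sketch assumes that, with a single radial term, the distorted radius relates to the undistorted one as d_dist = d (1 + k1 d²), so that recovering d amounts to solving the cubic k1 d³ + d − d_dist = 0 in closed form. The names `undistort_radius`, `pixel_to_uv`, `k1`, `s`, `o_c`, and `o_r` are hypothetical, and the closed-form solution is only meaningful while the distortion is monotonic over the image.

```python
import math


def _cbrt(x):
    """Real cube root, valid for negative arguments too."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def undistort_radius(d_dist, k1):
    """Solve k1*d**3 + d - d_dist = 0 for the undistorted radius d >= 0."""
    if k1 == 0.0:
        return d_dist
    p, q = 1.0 / k1, -d_dist / k1          # depressed cubic d**3 + p*d + q = 0
    disc = (q / 2.0) ** 2 + (p / 3.0) ** 3
    if disc >= 0.0:                        # single real root (Cardano)
        s = math.sqrt(disc)
        return _cbrt(-q / 2.0 + s) + _cbrt(-q / 2.0 - s)
    # three real roots (only possible for k1 < 0): trigonometric form
    m = 2.0 * math.sqrt(-p / 3.0)
    arg = max(-1.0, min(1.0, 3.0 * q / (p * m)))
    theta = math.acos(arg) / 3.0
    roots = [m * math.cos(theta - 2.0 * math.pi * k / 3.0) for k in range(3)]
    return min(r for r in roots if r >= 0.0)   # root continuous with d_dist -> 0


def pixel_to_uv(c, r, k1, s, o_c, o_r):
    """Pixel (c, r) to undistorted dimensionless co-ordinates (u, v)."""
    u_d, v_d = (c - o_c) * s, (r - o_r) * s
    d_dist = math.hypot(u_d, v_d)
    if d_dist == 0.0:
        return 0.0, 0.0
    scale = undistort_radius(d_dist, k1) / d_dist
    return u_d * scale, v_d * scale


# Round trip: distort (u, v) = (0.3, -0.4) with k1 = 0.1, then invert.
u, v, k1, s = 0.3, -0.4, 0.1, 0.001
factor = 1.0 + k1 * (u * u + v * v)
c, r = u * factor / s + 500.0, v * factor / s + 300.0
print(pixel_to_uv(c, r, k1, s, 500.0, 300.0))
```

Once (u, v) is known, intersecting the corresponding ray with a horizontal plane is a linear operation, which is why no iteration is needed in this case.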

2.2. Error Definition and Calibration Procedure

A Ground Control Point (GCP) is a 5-tuple including the real-world co-ordinates of a point and the corresponding pixel co-ordinates (column c and row r) in an image (i.e., ). For a set of n GCPs and a camera model with given intrinsic and extrinsic parameters, following [6], the calibration error is defined as

where and are the pixel co-ordinates obtained from the corresponding real-world co-ordinates (i.e., ) through the camera model for the given parameters. For a certain set of GCPs, an image is here calibrated by finding the parameters (intrinsic and extrinsic) which minimize the above error. The optimization method considered is Broyden–Fletcher–Goldfarb–Shanno (BFGS, [28]) combined with a Monte-Carlo-like seeding method. Usually, the calibration takes only a few CPU seconds.
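A minimal sketch of such an error function is given below, assuming that the calibration error is the root mean square of the pixel distances between the digitized and the modelled GCP positions. The names `calibration_error`, `project`, and `toy_project` are hypothetical, and the toy camera is an affine map used only to exercise the function; in practice this function would be the objective passed to the optimizer, started from several random seeds.

```python
import math


def calibration_error(gcps, project, params):
    """RMS pixel distance between digitized and modelled GCP positions.

    gcps    : list of (x, y, z, c, r) tuples
    project : callable (x, y, z, params) -> (c_model, r_model)  [assumed interface]
    """
    total = 0.0
    for x, y, z, c, r in gcps:
        c_m, r_m = project(x, y, z, params)
        total += (c_m - c) ** 2 + (r_m - r) ** 2
    return math.sqrt(total / len(gcps))


def toy_project(x, y, z, params):
    """Stand-in camera (affine map), for illustration only."""
    scale, c0, r0 = params
    return c0 + scale * x, r0 + scale * y


gcps = [(0.0, 0.0, 0.0, 100.0, 50.0), (1.0, 2.0, 0.0, 113.0, 74.0)]
# First GCP fits exactly; the second is off by (3, 4) px, so the RMS is sqrt(25 / 2).
print(calibration_error(gcps, toy_project, (10.0, 100.0, 50.0)))
```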

In real practice, the pixel co-ordinates of GCPs are manually digitized by an expert user, with an unavoidable error that is usually on the order of a few pixels. Understanding the influence of different factors (e.g., the obliquity, the amount and distribution of the GCPs, or the mathematical model) on the propagation of this error to the calibration quality is a key issue. For this reason, J “perturbed” calibrations are performed for each of the analyzed cases in the following section. For each j of these J calibrations, each of the n pixel co-ordinates of the GCPs, originally digitized at , was randomly perturbed with a noise of pixels (); that is, , where and are realizations of a uniformly distributed random variable in the range . The calibration errors for each of these perturbations are referred to as ; that is,

where and are the pixel co-ordinates obtained from the corresponding real-world co-ordinates, , for the calibration parameters of the perturbation. The errors in the real-world GCP co-ordinates are usually negligible in coastal studies (as they are orders of magnitude smaller than the size that a pixel represents in the real world). The errors and give a measure of the ability of the camera model to fit the GCPs, either original or perturbed. A different error is introduced below.
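The perturbation step can be sketched as follows, assuming that the noise added to each pixel co-ordinate is drawn independently from a uniform distribution on a symmetric interval of half-width `sigma` pixels. The function names, the `sigma` and `seed` arguments, and the symmetric range are assumptions made for this illustration; the real-world co-ordinates are left untouched, in line with the remark that their errors are negligible.

```python
import random


def perturb_gcps(gcps, sigma, rng):
    """Return a copy of the GCPs with uniform noise added to the pixel co-ordinates."""
    perturbed = []
    for x, y, z, c, r in gcps:
        dc = rng.uniform(-sigma, sigma)   # column noise, in pixels
        dr = rng.uniform(-sigma, sigma)   # row noise, in pixels
        perturbed.append((x, y, z, c + dc, r + dr))
    return perturbed


def perturbed_sets(gcps, sigma, n_sets, seed=0):
    """Generate n_sets independently perturbed copies of the GCP list."""
    rng = random.Random(seed)             # seeded for reproducibility
    return [perturb_gcps(gcps, sigma, rng) for _ in range(n_sets)]


gcps = [(0.0, 0.0, 0.0, 100.0, 50.0), (1.0, 2.0, 0.0, 113.0, 74.0)]
sets = perturbed_sets(gcps, sigma=2.0, n_sets=5)
print(len(sets), len(sets[0]))
```

Each perturbed set would then be calibrated independently, yielding one set of calibration parameters per perturbation.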

Consider J perturbed calibrations and a set of GCPs (here not necessarily those used for the calibration): for each GCP i, , the error is defined as

where is the pixel co-ordinate obtained from using the camera mathematical model and the perturbed calibration parameters. The above error is defined for each pixel of the set of GCPs. The Root Mean Square (RMS) over the set of GCPs is

with i running over all the pixels of the GCPs. The error gives a measure of the quality of the calibration for a given set of GCPs. If the GCPs are the same set used to obtain the perturbed calibrations, the error will be referred to as . When there are no pixel perturbations, for all j (and i) and Equation (4) reduces to Equation (3) (i.e., for all j). Furthermore, in the unperturbed case, as the perturbed calibrations become the original unperturbed ones, (for all j), such that, from Equation (5), it is and, from Equation (6), .
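The two error measures above can be sketched as follows, under one plausible reading of the definitions: the error at a GCP is taken as the root mean square, over the perturbed calibrations, of the pixel distance between the modelled and the digitized position, and the overall error is the RMS of those values over the set of GCPs. The names `per_gcp_errors`, `rms`, `project`, and `toy_project` are hypothetical, and the toy camera is only a stand-in.

```python
import math


def per_gcp_errors(gcps, project, param_sets):
    """Error at each GCP: RMS pixel distance over the perturbed calibrations.

    gcps       : list of (x, y, z, c, r) tuples (not necessarily the calibration GCPs)
    project    : callable (x, y, z, params) -> (c_model, r_model)  [assumed interface]
    param_sets : one set of calibration parameters per perturbation
    """
    errors = []
    for x, y, z, c, r in gcps:
        acc = 0.0
        for params in param_sets:
            c_m, r_m = project(x, y, z, params)
            acc += (c_m - c) ** 2 + (r_m - r) ** 2
        errors.append(math.sqrt(acc / len(param_sets)))
    return errors


def rms(values):
    """Root mean square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def toy_project(x, y, z, params):
    """Stand-in camera (affine map), for illustration only."""
    scale, c0, r0 = params
    return c0 + scale * x, r0 + scale * y


gcps = [(0.0, 0.0, 0.0, 100.0, 50.0), (1.0, 2.0, 0.0, 113.0, 74.0)]
# Two identical parameter sets mimic the unperturbed case.
errors = per_gcp_errors(gcps, toy_project, [(10.0, 100.0, 50.0)] * 2)
print(errors, rms(errors))
```

With identical parameter sets, the per-GCP error reduces to the plain pixel distance at each GCP and the RMS coincides with the calibration error of the unperturbed case, consistent with the limiting behaviour described above.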

2.3. Experimental Setup

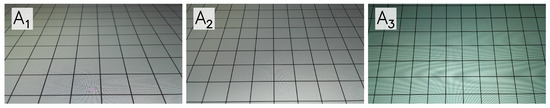

To gain a better understanding of the influence of different aspects on the quality of the calibration and the accuracy of the calibration parameters, a wide range of scenarios was analyzed. Two smartphone cameras were employed: a Samsung Galaxy Grand Prime ( pixels) and a Xiaomi Redmi 10 ( pixels). As both cameras gave equivalent results, only one of them (the Samsung) is introduced below, for the sake of clarity. Three different snapshots were taken of the same grid (see Figure 3), in order to consider a range of obliquities (tilt ): (angle ), (), and (, which is almost zenithal). The GCPs were easily obtained in these images as the intersections of the grid lines. The pixel co-ordinates of the GCPs were manually digitized with an error estimated as ∼2 px. The unit length in the real-world, “u”, was the side of the squares of the grid (around 5 cm).

Figure 3.

Angles (), (), and () to analyze the influence of obliquity.

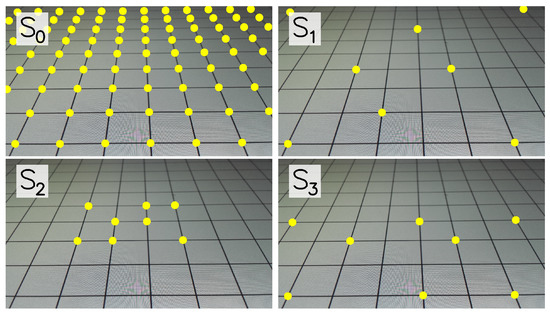

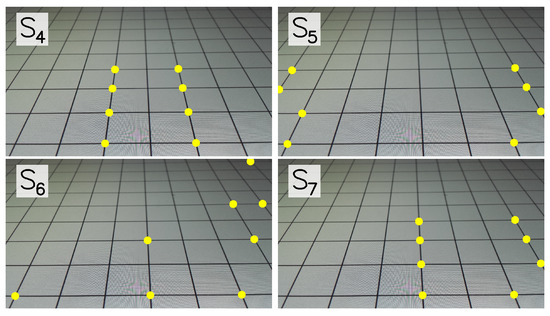

For each of the three angles, eight different subsets ( to ) of the whole set of grid intersections (∼80) were considered to be the GCPs for calibration. Figure 4 shows the eight subsets for the angle ; similar displays were considered for the other images in Figure 3 (although, necessarily, with some differences between the images). While considers all the available intersections of the grid as GCPs, the rest of the sets include eight GCPs distributed in different ways. Leaving aside the special case , some sets correspond to (and are motivated by) real case conditions. For instance, the set resembles Figure 1A and set resembles Figure 1B. The other sets were designed to analyze the results from a more theoretical point of view (e.g., see and ). The set would correspond to Figure 1B if the horizon line were included (the horizon line is not analyzed in this work). While eight GCPs is a reasonable number of GCPs in usual practice [3,14], and was considered for the reference case, similar displays with 6 and 12 GCPs were also considered for sets to .

Figure 4.

Subsets to , for angle , considered to analyze the influence of the GCPs distribution.

For each angle and subset of GCPs, and for each of the two models ( and ), perturbed calibrations were performed for analysis.

3. Results

The results for the two cameras, three angles, three series of number of GCPs, the eight GCP distributions, and for the two methods, are given in the Supplementary Materials. The main results are presented in this section.

3.1. Error Analysis

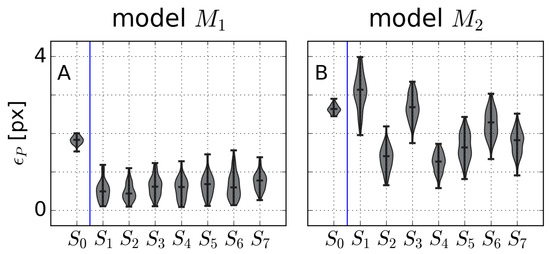

Figure 5 shows the distribution of the perturbed calibration errors for all the subsets of GCPs, for both models and for angle (the results for and were similar in this regard; not shown). Each boxplot contains information of the perturbations. The calibration errors were smaller for than for for all subsets; this is a natural consequence of the model (with eight parameters) being a particular case of model (with 14 free parameters). However, it is noteworthy that model , with around half as many parameters as , still had small calibration errors, with px. Also, from Figure 5, we can see that: (1) for , the errors were larger for (i.e., using all the available points as GCPs); and (2) for , the error was smallest for and . Furthermore, there were no outliers; that is, all the calibrations can be considered to be satisfactory.

Figure 5.

Errors for angle and models (A) and (B) as a function of the GCP calibration subset ( to ).

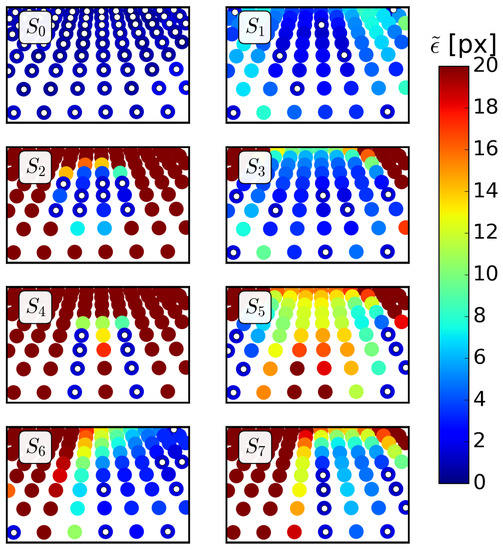

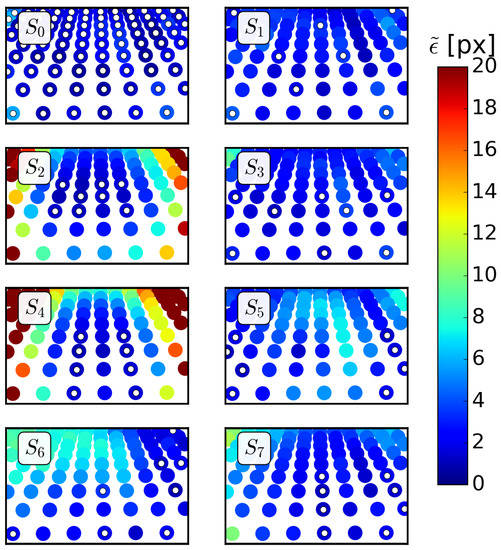

As argued above, the error defined in Equation (5) gives us a better idea about the usability of the calibrations along the image. Figure 6 and Figure 7 show the errors for all the available points for models and , respectively, using the perturbed calibrations of the different subsets (the GCPs of the subsets are highlighted with small white circles, for ease of viewing). The results in Figure 6 and Figure 7 are for the angle (the angles and showed the same trends, although with higher errors, as shown below through ). As depicted in the figures, the errors remained small at the GCPs of each subset . The behaviour outside the region was better for model than for , especially for the cases and –; it can be seen that the color was saturated at px, but the errors increased up to ∼ px for and in model .

Figure 6.

Errors for and angle at all the available points for the different sets . The GCPs for each set are here highlighted with white circles in the center and correspond to the points in Figure 4.

Figure 7.

Errors for and angle at all the available points for the different sets . The GCPs for each set are here highlighted with white circles in the center and correspond to the points in Figure 4.

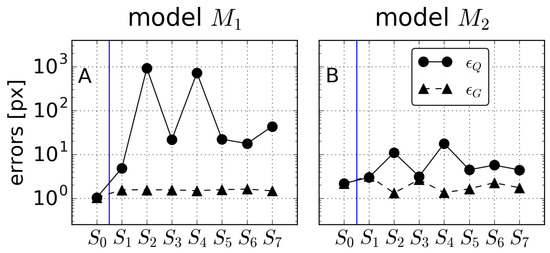

Recalling Equation (6), Figure 8 shows the RMS of the errors in Figure 6 and Figure 7 for angle and for all subsets . The error considers all the pixels in the image (), while the error only considers the pixels used for the calibration (i.e., those highlighted in Figure 6 and Figure 7) for the RMS. Naturally, both errors coincided for . As already suggested from Figure 6 and Figure 7, the errors were small in all cases; these errors were related to the errors in Figure 5. With regard to the error , which evaluates the quality of calibration in the whole image, model yielded significantly smaller errors than , except for the very particular set . For model (Figure 8B), all sets yielded overall errors below 10, except for (pixels near the center of the image) and (centered in the lower half of the image). The sets and were those with smaller errors and . The sets with smaller overall errors were (ideal uniform distribution all over the image) and (lower half of the image), while sets – gave similar results.

Figure 8.

Errors and for angle and models (A) and (B) as a function of the GCP calibration subset ( to ).

3.2. Influence of the Obliquity and of the Number of GCPs

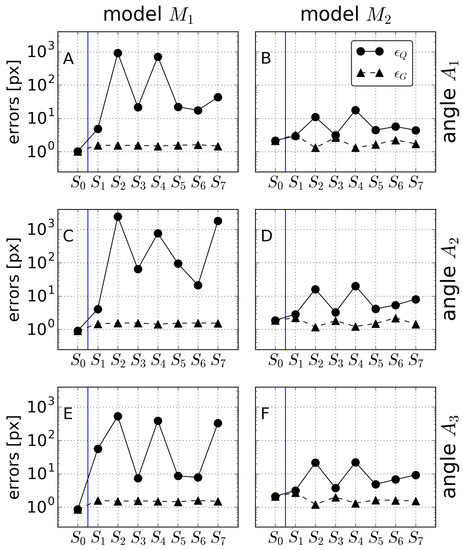

The influence of the obliquity of the image on the errors (as well as on ) is shown in Figure 9. This figure, an extension of Figure 8, includes the results for all three angles. The trends for angles and were, with respect to the model and the set , similar to those described above for . In particular, the errors were, in general, too large for (despite the errors being very small). For model , the errors slightly increased for and , subsets and giving the highest overall errors .

Figure 9.

Errors and for angle with (A,B); with (C,D); and with (E,F); and for models (A,C,E) and (B,D,F) as a function of the GCP calibration set ( to ).

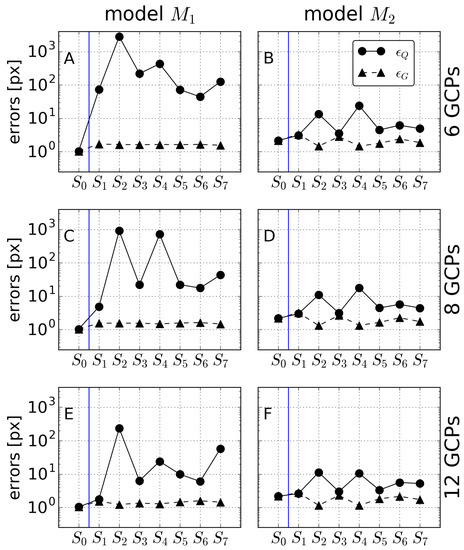

Similarly, the influence of the number of GCPs (for sets to ) on the errors and is shown in Figure 10 for . Figure 10 is an extension of Figure 8 and includes the results also for 6 and 12 GCPs. From Figure 10, it can be seen that the model keeps the overall errors small, even with only 6 GCPs; except for and . With regard to model , while the errors decreased for 12 GCPs (relative to 8 GCPs), they were still larger than for . In the following section, we restrict again to 8 GCPs for to .

Figure 10.

Errors and for angle for different numbers of GCPs (for sets to ): 6 GCPs (A,B); 8 GCPs (C,D); and 12 GCPs (E,F) and for models (A,C,E) and (B,D,F).

3.3. Calibration Parameters

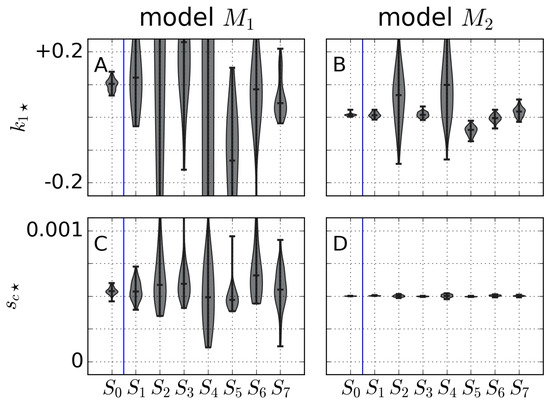

From a practical point of view, the above errors are the most interesting results in the camera calibration problem. However, the recovery of the calibration parameters is also an issue of practical interest (e.g., recovering the camera position or the intrinsic parameters from a single snapshot). Figure 11 shows the results (always using all the J perturbations) for the radial distortion and for both models and for angle . Please note that the intrinsic parameters ( and for , and many others in the complete model ) must be independent of the angle considered; the extrinsic parameters, on the contrary, depend on the image (angle). The figure shows the results for , the results for and being similar (not shown). From Figure 11, the results for show a large variability when compared to those for model . Model , except for sets and (and in particular for the radial distortion ), shows small standard deviations in the boxplots. Small standard deviations mean that all perturbed calibrations give similar parameter values, so that the results are reliable. The rest of the intrinsic parameters in model (, , …) behave similarly to and (i.e., with large standard deviations; not shown).

Figure 11.

Radial distortion (A,B) and pixel size (C,D) for models (A,C) and (B,D) for angle .

Given that the model performed similarly to in terms of , while giving smaller overall errors (Figure 8) and, further, providing more reliable results for the intrinsic parameters, we focus on for the extrinsic parameters (model provides noisy results for the extrinsic parameters, as it does for the intrinsic ones; not shown).

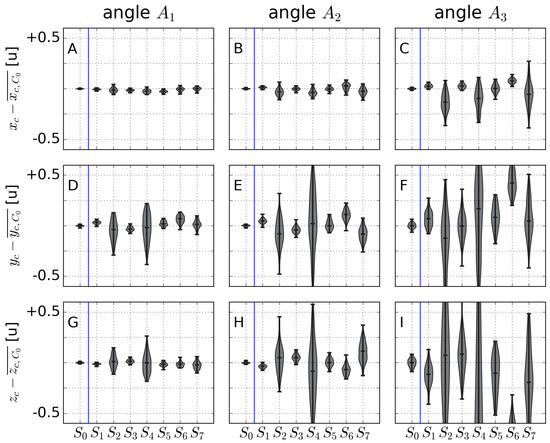

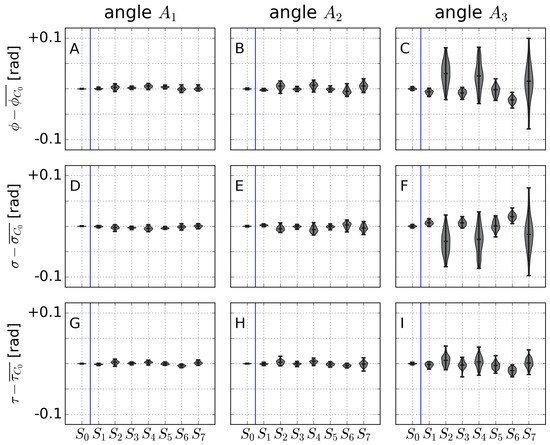

The extrinsic parameters (, , , , , and ) depend on the image (angle) considered, as already mentioned. Figure 12 shows the results for the camera position (, , and ) for angles – using the reduced model . For each angle, given that the results for (with ∼80 GCPs) had the smallest standard deviation (i.e., were the most reliable), the mean value for was subtracted in all cases (, , ). In this way, the variability of the parameter is shown for each angle independently of the actual values of the parameters, which are of minor interest here (and different for all three cases). From Figure 12, angle (with the largest obliquity) produced good estimates of the camera position, except (again) when using sets and . The results worsened for angles and, especially, (∼zenithal). The results for the Eulerian angles , , and are shown in Figure 13. The results followed the same trends as for the camera position; that is, case gave more robust results than and much more robust results than , and and performed especially poorly. It is worth noting that for , for , and for .

Figure 12.

Demeaned camera position co-ordinates , , and for angles (A,D,G), (B,E,H), and (C,F,I) for model . The unit length “u” corresponds to the side of the squares of the grid.

Figure 13.

Demeaned camera Eulerian angles , , and for angles (A,D,G), (B,E,H), and (C,F,I) for model .

4. Discussion

The above results—on full calibration of a camera from one single snapshot—show that there is no correlation of the overall quality of the calibration (which can be measured in terms of ) with the error obtained in the optimization process to obtain the calibration parameters. However, in real calibrations, the error cannot be known, while only (similar to and ) can be obtained. The latter errors being small only ensures, in general, good performance of the calibration around the calibration GCPs (Figure 6 and Figure 7 are clear, in this regard).

The results show that the choice of GCPs is crucial to obtain an effective real calibration (i.e., minimal values). Ideally, the overall calibration errors should be minimized by using a large number of GCPs covering the entire image. However, in real situations, the calibration GCPs are limited to a small region of the image, while other parts of the image can be of interest to the research. For example, in Figure 1B, the GCPs would usually be located in the promenade (where there are many observable features), while the focus is on the shoreline or the water area. Furthermore, the number of GCPs is limited by functional requirements. Our findings show that good quality calibrations can be obtained with a limited number of GCPs when at least some of them are placed at the edges of the image. In these cases, even without having the smallest , the errors are small. On the other hand, when all the GCPs are centered in the image, the calibration quality may be poor (large ), even if are small. The justification for this and other behaviours is presented below.

The selection of an appropriate calibration model is essential. Ideally, when a large number of GCPs are available and cover the whole image, the complete model () is the best, both with regard to and (Figure 8 for ). This can typically be done under laboratory conditions but is not the case in coastal studies, particularly when taking advantage of freely available coastal images. For a realistic set of GCPs, the reduced model provided, in all studied cases, the highest quality calibrations. Again, we found the somewhat paradoxical result that the best were obtained with the model , although the calibration errors were always smaller in model , which could therefore seem to be more robust. From the above results (Figure 10), the advantage of the model compared to is evident for a reduced number of GCPs (6), and remains even when the number is increased to more reasonable values (12).

The model behaving better than is related to the noise in the recovery of the calibration parameters for model (illustrated in Figure 11 for and ), as explained below. Having just one snapshot to perform the calibration may lead, especially if the GCP distribution is not favourable (as in or ), to many different combinations of parameters providing small calibration errors () but large overall errors . In the complete model, , this compensation of different calibration variables to give similar calibration errors is much more pronounced, as it contains more parameters: this explains the large deviations of the parameters and in Figure 11 (and also in the rest of the calibration parameters; not shown) and the larger errors , except for in (Figure 9 and Figure 10). Model was overparametrized for 6 GCPs and, for 8 and 12 GCPs, still showed symptoms of overparametrization. Focusing on the simple model, , the above compensation mechanism shows up in the worst case (and in ). In the model , the roles of the physical distance from the camera position to the GCPs (i.e., the co-ordinates of ), the size , and the distortion can compensate one another if the GCPs fall near the center of the image, where the role of the distortion cannot be clearly distinguished. This is the reason the set showed large deviations in the camera position (see Figure 12) and (Figure 11 for ). For this model, these mechanisms were enhanced for small (angle , Figure 12 and Figure 13), giving slightly larger errors in Figure 9. The angle gave more robust results (in the calibration parameters) because, by increasing the relative distances between the different GCPs, the calibration parameters were more accurately captured. Zenithal images with the GCPs concentrated in the center of the image led to the worst quality calibration errors , despite achieving an excellent calibration error (Figure 9, set ).

For calibration purposes, we recommend the use of model and the selection of the GCPs such that some of them fall near the edges of the image. Whenever the recovery of the camera position and orientation is of interest, using zenithal views should be avoided. The use of the simple model to properly georeference images obtained by different devices using just a few GCPs opens up a range of possibilities for the analysis of images from webcams or beach users and the quantification of different parameters of interest (e.g., position and shape of the coastline, …). Furthermore, in fixed video monitoring systems, even if the camera has been intrinsically calibrated prior to its final deployment, the intrinsic calibration (as well as the extrinsic one) can change in time, due to changing external conditions, and continuous re-calibration of the parameters may be required.

5. Conclusions

In this work, we analyzed the influence that the distribution of GCPs and image obliquity have on the overall quality of full (intrinsic and extrinsic) camera calibration using only a single snapshot. This was done by analyzing the performance of two calibration mathematical models. We conclude that, for the calibration of coastal images, especially when only one image is available, the reduced model should be used. This reduced model provided robust camera calibration parameters (camera position, Eulerian angles, pixel size, and radial distortion) in our tests, allowing for an explicit transformation from pixel to real-world co-ordinates and, most importantly, yielded smaller overall calibration errors. With respect to the distribution of the GCPs over the image, using calibration points only near the center of the image must be avoided, and we recommend using the maximum number of points distributed along the edges of the image. Finally, zenithal views complicate the recovery of the calibration parameters, although the obliquity does not have a significant influence on the overall performance of the calibration.

Supplementary Materials

The following are available online at https://www.mdpi.com/2072-4292/12/11/1840/s1, Camera GCPs and angle (A1, A2 and A3).

Author Contributions

Conceptualization, G.S., D.C., P.S., and J.G.; methodology, G.S. and D.C.; software, G.S. and D.C.; validation, G.S., D.C., and P.S.; formal analysis, G.S. and D.C.; investigation, G.S., D.C., P.S., and J.G.; resources, G.S., D.C., and J.G.; writing–original draft preparation, G.S. and D.C.; writing–review and editing, G.S., D.C., P.S., and J.G.; visualization, G.S. and D.C.; supervision, G.S., D.C., P.S., and J.G.; project administration, G.S. and D.C.; funding acquisition, G.S., D.C., and J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Spanish Government (MINECO/MICINN/FEDER), grant numbers RTI2018-093941-B-C32 and RTI2018-093941-B-C33.

Acknowledgments

The authors also acknowledge the useful suggestions from R. Álvarez and G. Grande.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CPU | Central Processing Unit |
| GCP(s) | Ground Control Point(s) |
| RMS | Root Mean Square |

References

1. Holland, K.; Holman, R.; Lippmann, T.; Stanley, J.; Plant, N. Practical use of video imagery in nearshore oceanographic field studies. IEEE J. Ocean. Eng. 1997, 22, 81–91.
2. Holman, R.; Stanley, J. The history and technical capabilities of Argus. Coast. Eng. 2007, 54, 477–491.
3. Nieto, M.; Garau, B.; Balle, S.; Simarro, G.; Zarruk, G.; Ortiz, A.; Tintoré, J.; Álvarez Ellacuría, A.; Gómez-Pujol, L.; Orfila, A. An open source, low cost video-based coastal monitoring system. Earth Surf. Process. Landf. 2010, 35, 1712–1719.
4. Taborda, R.; Silva, A. COSMOS: A lightweight coastal video monitoring system. Comput. Geosci. 2012, 49, 248–255.
5. Brignone, M.; Schiaffino, C.; Isla, F.; Ferrari, M. A system for beach video-monitoring: Beachkeeper plus. Comput. Geosci. 2012, 49, 53–61.
6. Simarro, G.; Ribas, F.; Álvarez, A.; Guillén, J.; Chic, O.; Orfila, A. ULISES: An open source code for extrinsic calibrations and planview generations in coastal video monitoring systems. J. Coast. Res. 2017, 33, 1217–1227.
7. Aarninkhof, S.; Turner, I.; Dronkers, T.; Caljouw, M.; Nipius, L. A video-based technique for mapping intertidal beach bathymetry. Coast. Eng. 2003, 49, 275–289.
8. Holman, R.; Plant, N.; Holland, T. CBathy: A robust algorithm for estimating nearshore bathymetry. J. Geophys. Res. Ocean. 2013, 118, 2595–2609.
9. Simarro, G.; Calvete, D.; Luque, P.; Orfila, A.; Ribas, F. UBathy: A new approach for bathymetric inversion from video imagery. Remote Sens. 2019, 11, 2722.
10. Ojeda, E.; Guillén, J. Shoreline dynamics and beach rotation of artificial embayed beaches. Mar. Geol. 2008, 253, 51–62.
11. Alvarez-Ellacuria, A.; Orfila, A.; Gómez-Pujol, L.; Simarro, G.; Obregon, N. Decoupling spatial and temporal patterns in short-term beach shoreline response to wave climate. Geomorphology 2011, 128, 199–208.
12. Alexander, P.; Holman, R. Quantification of nearshore morphology based on video imaging. Mar. Geol. 2004, 208, 101–111.
13. Armaroli, C.; Ciavola, P. Dynamics of a nearshore bar system in the northern Adriatic: A video-based morphological classification. Geomorphology 2011, 126, 201–216.
14. Harley, M.; Kinsela, M.; Sánchez-García, E.; Vos, K. Shoreline change mapping using crowd-sourced smartphone images. Coast. Eng. 2019, 150, 175–189.
15. Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.
16. Faig, W. Calibration of close-range photogrammetric systems: Mathematical formulation. Photogramm. Eng. Remote Sens. 1975, 41, 1479–1486.
17. Zhang, Z.; Zhao, R.; Liu, E.; Yan, K.; Ma, Y. A single-image linear calibration method for camera. Meas. J. Int. Meas. Confed. 2018, 130, 298–305.
18. Tsai, R. A Versatile Camera Calibration Technique for High-Accuracy 3D Machine Vision Metrology Using Off-the-Shelf TV Cameras and Lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.
19. Bouguet, J.Y. Visual Methods for Three-Dimensional Modeling. Ph.D. Thesis, California Institute of Technology, Pasadena, CA, USA, 1999.
20. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
21. Ricolfe-Viala, C.; Sánchez-Salmerón, A.J. Robust metric calibration of non-linear camera lens distortion. Pattern Recognit. 2010, 43, 1688–1699.
22. Galantucci, L.; Ferrandes, R.; Percoco, G. Digital photogrammetry for facial recognition. J. Comput. Inf. Sci. Eng. 2006, 6, 390–396.
23. Strecha, C.; Von Hansen, W.; Van Gool, L.; Fua, P.; Thoennessen, U. On benchmarking camera calibration and multi-view stereo for high resolution imagery. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.
24. Westoby, M.; Brasington, J.; Glasser, N.; Hambrey, M.; Reynolds, J. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.
25. Andriolo, U.; Sánchez-García, E.; Taborda, R. Operational use of surfcam online streaming images for coastal morphodynamic studies. Remote Sens. 2019, 11, 78.
26. Shapiro, L.; Stockman, G. Computer Vision; Prentice Hall: Upper Saddle River, NJ, USA, 2001; ISBN-10: 0130307963; ISBN-13: 9780130307965.
27. Conrady, A. Decentred Lens-Systems. Mon. Not. R. Astron. Soc. 1919, 79, 384–390.
28. Fletcher, R. Practical Methods of Optimization; John Wiley & Sons: Hoboken, NJ, USA, 1987.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).