1. Introduction

Scene classification is the process of automatically assigning an image to the class label that correctly describes it. In remote sensing, scene classification has gained a lot of attention, and several methods have been introduced in this field, such as the bag-of-words model [1], compressive sensing [2], sparse representation [3], and, more recently, deep learning [4]. To classify a scene correctly, effective features are extracted from a given image and then passed to a classifier that assigns the label. Early studies of remote sensing scene classification were based on handcrafted features [1,5,6]. In this context, deep learning techniques have proven to be very effective compared to standard solutions based on handcrafted features. Convolutional neural networks (CNNs) are the most common deep learning technique for learning visual features, and they are widely used to classify remote sensing images [7,8,9]. Several approaches have been built around these methods to boost the classification results, such as integrating local and global features [10,11,12], recurrent neural networks (RNNs) [13,14], and generative adversarial networks (GANs) [15].

The number of remote sensing images has been steadily increasing over the years. These images are collected using different sensors mounted on satellites or airborne platforms. The type of sensor determines the spatial, spectral, and temporal resolution of the images, which leads to a huge number of images with different spatial and spectral resolutions [16]. In many real-world applications, the training data used to learn a model may have a different distribution from the data used for testing, for instance when images are acquired over different locations and with different sensors. In such cases, it becomes necessary to reduce the distribution gap between the source and target domains to obtain acceptable classification results [17]. The main goal of domain adaptation is to learn a classification model from a labeled source domain and apply it to classify an unlabeled target domain. Several domain adaptation methods have been introduced in the field of remote sensing [18,19,20,21].

The above methods assume that each sample belongs to one of a fixed number of known classes. This is called closed set domain adaptation, where the source and target domains contain shared classes only. This assumption is violated in many cases. In fact, many real applications follow an open set environment, where some test samples belong to classes that are unknown during training [22]. These samples should be classified as unknown instead of being assigned to one of the shared classes. Assigning an unknown image to one of the shared classes leads to negative transfer.

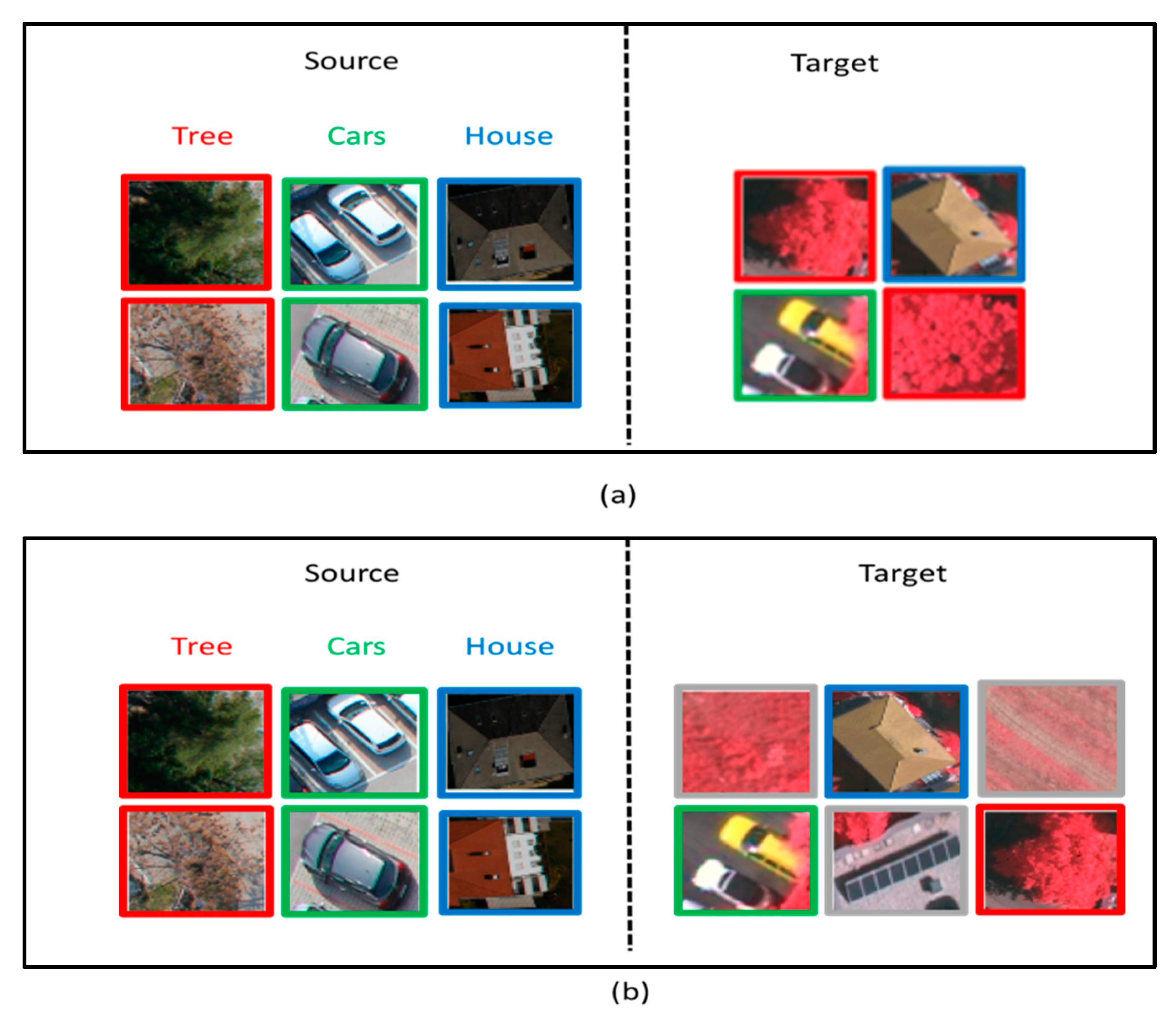

Figure 1 shows the difference between open and closed set classification. This problem is known in the machine learning community as open set domain adaptation. Thus, in open set domain adaptation, one has to learn robust feature representations for the labeled source data, reduce the data-shift problem between the source and target distributions, and detect the presence of new classes in the target domain.

Open set classification is a new research area in the remote sensing field, and only a few works have addressed it. Anne Pavy and Edmund Zelnio [23] introduced a method for Synthetic Aperture Radar (SAR) images that classifies test samples belonging to classes present in the training set and rejects those belonging to other classes as unknown. The method uses a CNN as a feature extractor and an SVM for classification and rejection of unknowns. Wang et al. [24] addressed the open set problem in high range resolution profile (HRRP) recognition. Their method is based on random forest (RF) and extreme value theory: the RF extracts high-level features from the input, which are then used as input to the open set module. Both approaches use a single domain for training and testing.

To the best of our knowledge, no previous work has addressed the open-set domain adaptation problem for remote sensing images, where the source domain is different from the target domain. To address this issue, we propose a method based on adversarial learning and Pareto-based ranking. In particular, the method reduces the distribution discrepancy between the source and target domains using min-max entropy optimization. During the alignment process, it identifies candidate samples of the unknown class in the target domain through a Pareto-based ranking scheme that uses ambiguity criteria based on entropy and the distance to the source class prototypes.

2. Related Work on Open Set Classification

Open set classification is more challenging and more realistic than closed set classification; it has therefore attracted considerable attention from researchers lately, and many works have been published in this field. Early research on open set classification relied on traditional machine learning techniques, such as support vector machines (SVMs). Scheirer et al. [25] first proposed the 1-vs-Set method, which uses a binary SVM classifier to detect unknown classes. The method introduces a new open set margin to decrease the region of the known class for each binary SVM. Jain et al. [26] invoked extreme value theory (EVT) to build a multi-class open set classifier that rejects the unknowns. The authors introduced the Pi-SVM algorithm to estimate the un-normalized posterior class inclusion likelihood. The probabilistic open set SVM (POS-SVM) classifier proposed by Scherreik et al. [27] empirically determines a unique reject threshold for each known class. Sparse representation techniques have also been used in open set classification, where the sparse representation-based classifier (SRC) [28] looks for the sparsest possible representation of the test sample in order to classify it correctly [29]. Bendale and Boult [30] presented the nearest non-outlier (NNO) method to actively detect and learn new classes, taking into account the open space risk and metric learning.

Deep neural networks (DNNs) have recently attracted great interest in several tasks, including open set classification. Bendale and Boult [31] first introduced the OpenMax model, a DNN that performs open set classification. The OpenMax layer replaces the softmax layer in a CNN to check whether a given sample belongs to an unknown class. Hassen and Chan [32] presented a method that solves the open set problem by keeping instances belonging to the same class near each other and instances belonging to different or unknown classes farther apart. Shu et al. [33] proposed deep open classification (DOC), which builds a multi-class classifier that replaces the last softmax layer with a 1-vs-rest layer of sigmoids to make the open space risk as small as possible. Later, Shu et al. [34] presented a model for discovering unknown classes that combines two neural networks: an open classification network (OCN) for seen class classification and unseen class rejection, and a pairwise classification network (PCN), which learns a binary classifier to predict whether two samples come from the same class or from different classes.
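
As a rough illustration of the 1-vs-rest rejection idea behind DOC described above, the following sketch applies an independent sigmoid to each class score and rejects a sample when no class probability reaches a threshold. The function name, the fixed threshold of 0.5, and the example scores are assumptions made for illustration, not details taken from [33].

```python
import math

def one_vs_rest_predict(logits, threshold=0.5):
    """Return the index of the predicted known class, or None for 'unknown'.

    Each class has its own sigmoid, so the probabilities need not sum to 1.
    The sample is rejected when every class probability is below `threshold`
    (an illustrative value, not one taken from the cited work).
    """
    probs = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None

known = one_vs_rest_predict([-2.0, 3.1, -0.7])     # class 1 is confident
unknown = one_vs_rest_predict([-2.0, -1.5, -0.7])  # no class is confident
```

Because the sigmoids are independent, a sample far from all known classes can receive a low probability for every class, which a softmax layer cannot express.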

In recent years, generative adversarial networks (GANs) [35] have been introduced to the field of open set classification. Ge et al. [36] presented the Generative OpenMax (G-OpenMax) method, which adapts OpenMax to generative adversarial networks for open set classification. The GAN is used to generate unknown samples for training the network, and it is combined with an OpenMax layer to reject samples belonging to the unknown class. Neal et al. [37] proposed another GAN-based algorithm that generates counterfactual images, which do not belong to any known class and are used to train a classifier to correctly identify unknown images. Yu et al. [38] also proposed a GAN that generates negative samples for the known classes to train the classifier to distinguish between known and unknown samples.

Most of the previous studies in the scene classification literature assume that one domain is used for both training and testing. This assumption is not always satisfied: some domains have labeled images, whereas many new domains suffer from a shortage of labeled images, and generating and collecting large datasets of labeled images is time-consuming and expensive. One way to address this issue is to use labeled images from one domain as training data for different domains. Domain adaptation is a branch of transfer learning in which knowledge is transferred between two domains, the source and the target. Domain adaptation approaches differ from each other in the percentage of labeled images in the target domain. Some works have addressed open set domain adaptation. Busto et al. [22] first introduced open set domain adaptation by allowing the target domain to contain samples of classes not belonging to the source domain and vice versa. The classes that are not shared are grouped into a negative class called “unknown”. The goal is to assign target samples to the correct class if it is shared between the source and target, and to label them as unknown otherwise. Saito et al. [39] proposed a method for the setting where unknown samples appear only in the target domain, which is more challenging. Their approach uses adversarial learning in which the generator can separate target samples into known and unknown classes. The generator can decide to reject or accept a target image. If accepted, the image is classified into one of the classes of the source domain; if rejected, it is classified as unknown.

Cao et al. [40] introduced a partial domain adaptation method, in which the classes of the target dataset are assumed to be a subset of the source dataset classes. This makes the domain adaptation problem more challenging because the extra source classes could cause negative transfer. The authors used a multi-discriminator domain adversarial network, where each discriminator is responsible for matching the source and target domain data after filtering out the unknown source classes. Zhang et al. [41] also addressed the problem of transferring from a large source domain to a target domain whose classes are a subset of the source classes. Their method requires only two domain classifiers instead of multiple classifiers, one for each domain, as in the previous method. Furthermore, Baktashmotlagh et al. [42] proposed an approach that factorizes the data into shared and private subspaces. Source and target samples coming from the same known classes are represented by a shared subspace, while target samples from unknown classes are modeled with a private subspace.

Lian et al. [43] proposed Known-class Aware Self-Ensemble (KASE), which is able to reject unknown classes. The model consists of two modules that identify known and unknown classes and perform domain adaptation based on the likelihood of target images belonging to known classes. Liu et al. [44] presented Separate to Adapt (STA), a method for separating known from unknown samples. The method works in two steps: first, a classifier is trained on source samples to measure the similarity between target samples and every source class. Then, target samples with high and low similarity values are selected as known and unknown samples, respectively. These samples are used to train a classifier to correctly classify target images. Tan et al. [45] proposed a weakly supervised method, where the source and target domains both have some labeled images. The two domains learn from each other through the few labeled images to correctly classify the unlabeled images in both domains. The method aligns the source and target domains in a collaborative way and then maximizes the margin between the shared and unshared classes.

In the context of remote sensing, open set domain adaptation is a new research field and, to the best of our knowledge, no previous work has addressed it.

3. Description of the Proposed Method

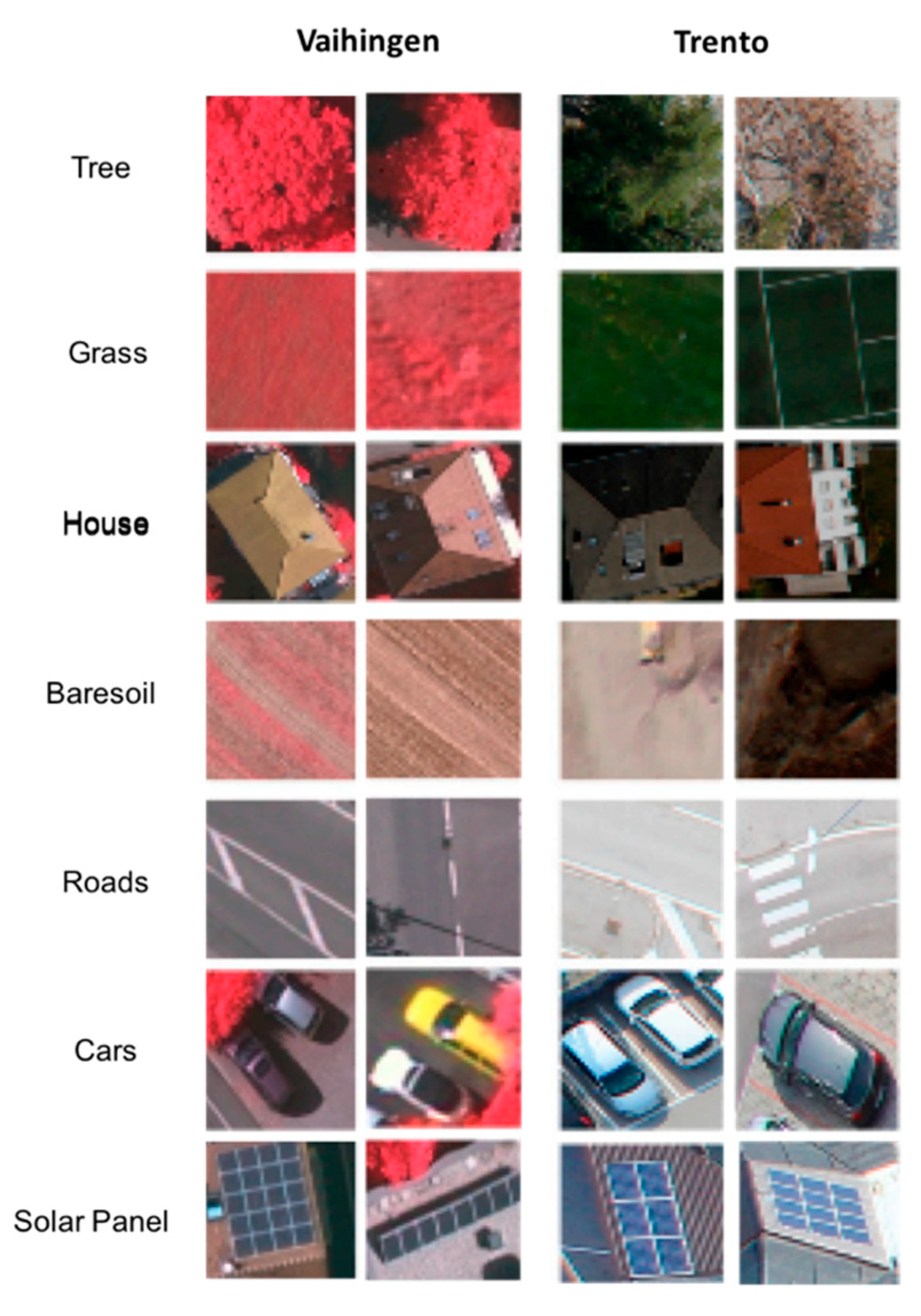

Assume a labeled source domain composed of images and their corresponding class labels, where the labels span K classes. Additionally, we assume a target domain composed of unlabeled images. In an open set setting, the target domain contains K+1 classes, where K classes are shared with the source domain, plus an additional unknown class (there may be several unknown classes, but they are grouped into one). The objective of this work is twofold: (1) reduce the distribution discrepancy between the source and target domains, and (2) detect the presence of the unknown class in the target domain.
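
The grouping of all non-shared target classes into a single unknown class can be illustrated with a short sketch. The class names and the helper `to_open_set_label` are hypothetical and serve only to show how the K+1 label space arises.

```python
def to_open_set_label(class_name, source_classes):
    """Map a target class name to an open-set label in 1..K+1.

    Shared classes keep their (1-based) position in the source class list;
    any class absent from the source domain is mapped to the unknown
    label K+1.
    """
    k = len(source_classes)
    if class_name in source_classes:
        return source_classes.index(class_name) + 1
    return k + 1

# Hypothetical class names, for illustration only.
source_classes = ["airport", "forest", "harbor"]          # K = 3
target_names = ["forest", "stadium", "harbor", "desert"]
labels = [to_open_set_label(n, source_classes) for n in target_names]
```

Here both "stadium" and "desert" receive the same label K+1 = 4, even though they are distinct classes in the target domain.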

Figure 2 shows the overall description of the proposed adversarial learning method, which relies on the idea of min-max entropy for carrying out the domain adaptation and uses an unknown class detector based on Pareto ranking.

3.1. Network Architecture

Our model uses the EfficientNet-B3 network [46] from Google as a feature extractor, although other networks could be used as well, since the method is independent of the pre-trained CNN. The choice of this network is motivated by its ability to achieve high classification accuracy with fewer parameters than other architectures. EfficientNets are based on the concept of scaling up CNNs by means of a compound coefficient, which jointly scales the width, depth, and resolution. Basically, each dimension is scaled in a balanced way using a set of scaling coefficients. We truncate this network by removing its original ImageNet-based softmax classification layer. For computational convenience, we take the outputs of this trimmed CNN as the feature representations of the source and target data (each feature is a vector of dimension 1536). These features are further subject to dimensionality reduction via a fully-connected layer acting as a feature extractor, which yields new source and target feature representations, each of dimension 128. The output of this layer is then normalized and fed as input to a decoder and a similarity-based classifier.

The decoder has the task of constraining the mapping space of the feature extractor by requiring that the original features provided by the pre-trained CNN can be reconstructed, in order to reduce the overlap between classes during adaptation. The similarity classifier, in turn, assigns images to their corresponding classes, including the unknown one (identified using ranking criteria), by computing the similarity of their representations to its weights. These weights are viewed as estimated prototypes for the K source classes and the unknown class with index K+1.
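
A minimal sketch of a similarity-based classifier of this kind is given below. It assumes L2 normalization of the features and prototypes, cosine similarity, and a softmax with a temperature parameter; these choices, along with the toy dimensions and values, are assumptions for illustration rather than the exact configuration of the proposed network.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length (zero vectors are left as-is)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def similarity_classifier(feature, prototypes, temperature=0.05):
    """Softmax over cosine similarities between a feature and K+1 prototypes."""
    f = l2_normalize(feature)
    sims = [sum(a * b for a, b in zip(f, l2_normalize(w))) for w in prototypes]
    scaled = [s / temperature for s in sims]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Toy 3-D features with K = 2 source prototypes plus one unknown-class prototype.
prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
probs = similarity_classifier([0.9, 0.1, 0.0], prototypes)
predicted = probs.index(max(probs)) + 1  # 1-based class index
```

In this sketch each row of `prototypes` plays the role of one classifier weight vector, so moving a feature closer to a prototype directly raises the probability of the corresponding class.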

3.2. Adversarial Learning with Reconstruction Ability

We reduce the distribution discrepancy using an adversarial learning approach with reconstruction ability based on min-max entropy optimization [47]. To learn the weights of the feature extractor, the decoder, and the classifier, we use both labeled source samples and unlabeled target samples. We train the network to discriminate between the labeled classes in the source domain and the unknown class samples identified iteratively in the target domain (using the proposed Pareto ranking scheme), while clustering the remaining target samples around the most suitable class prototypes. For this purpose, we jointly optimize the loss functions of Equation (1), in which a regularization parameter controls the contribution of the entropy to the total loss. These loss functions combine four terms: (1) the categorical cross-entropy loss computed for the source domain, (2) the cross-entropy loss computed for the samples iteratively identified as the unknown class, (3) the entropy computed for the samples of the target domain, and (4) the reconstruction loss.
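
The individual loss terms can be sketched as follows for a single sample. The exact form of Equation (1) is not reproduced here; the squared-error reconstruction loss and the function names are assumptions made for illustration.

```python
import math

def cross_entropy(probs, label):
    """Categorical cross-entropy for one sample; `label` is a 0-based index."""
    return -math.log(max(probs[label], 1e-12))

def entropy(probs):
    """Shannon entropy of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def reconstruction_loss(original, reconstructed):
    """Mean squared error between CNN features and the decoder output (assumed form)."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)

confident = entropy([0.98, 0.01, 0.01])     # low entropy: sample near one prototype
ambiguous = entropy([1 / 3, 1 / 3, 1 / 3])  # maximal entropy for three classes
```

A target sample assigned almost entirely to one prototype has an entropy close to zero, whereas a sample spread evenly over the classes reaches the maximum, which is why entropy serves as an ambiguity measure.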

From Equation (1), we observe that both cross-entropy losses are used to learn discriminative features for the labeled samples. The classifier makes the target samples closer to the estimated prototypes by increasing the entropy. In contrast, the feature extractor tries to decrease it by assigning the target samples to the most suitable class prototype. Meanwhile, the decoder constrains the projection to the reconstruction space to control the overlap between the samples of the different classes. In the experiments, we show that this learning mechanism boosts the classification accuracy of the target samples. In practice, we insert a gradient reversal layer [48] between the classifier and the feature extractor to flip the sign of the gradient of the entropy term, which simplifies the training process. The gradient reversal layer multiplies the gradient by a negative scalar during backpropagation, while leaving its input unchanged in the forward pass.
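
The behavior of a gradient reversal layer can be sketched without any deep learning framework. The class below is a hypothetical stand-in, with `scale` denoting the assumed positive scalar; in practice the layer would be implemented as a custom differentiable operation in the chosen framework.

```python
class GradientReversal:
    """Identity in the forward pass; negates (and scales) gradients in the backward pass."""

    def __init__(self, scale=1.0):
        self.scale = scale

    def forward(self, x):
        # Activations pass through unchanged.
        return list(x)

    def backward(self, grad_output):
        # Gradients flowing back toward the feature extractor change sign.
        return [-self.scale * g for g in grad_output]

grl = GradientReversal(scale=1.0)
activations = grl.forward([0.2, -0.5])
grads = grl.backward([0.1, -0.3])
```

With this layer in place, a single backward pass lets the classifier and the feature extractor move the entropy in opposite directions, instead of alternating two separate optimization steps.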

3.3. Pareto Ranking for Unknown Class Sample Selection

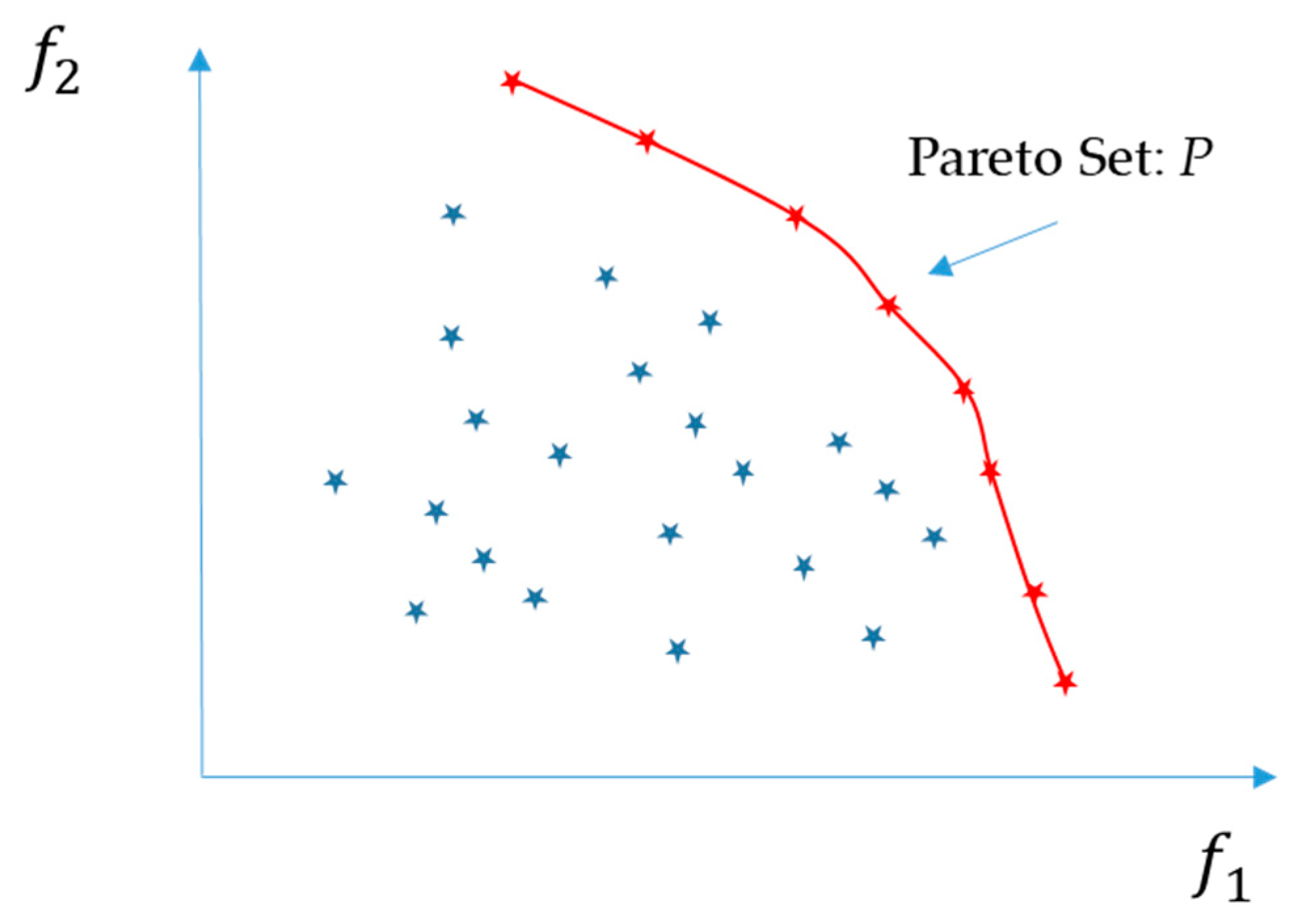

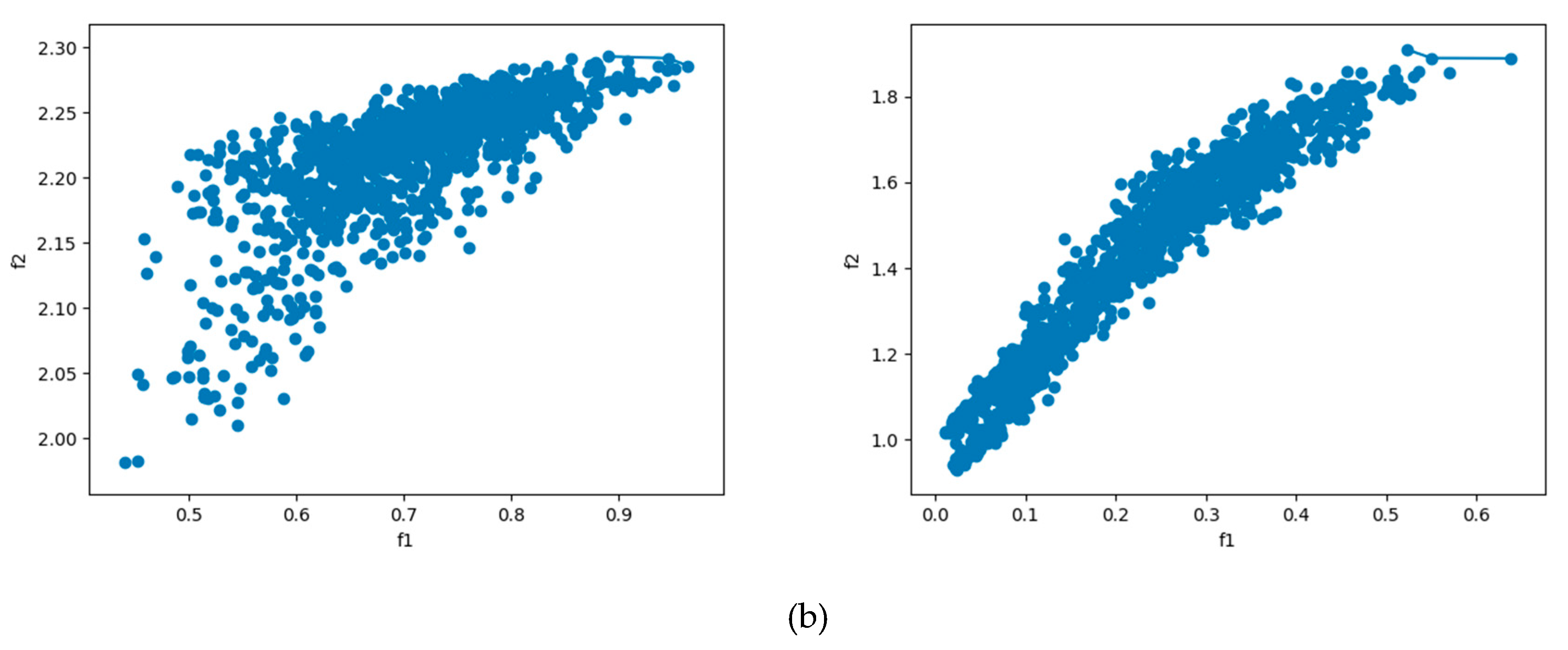

During the alignment of the source and target distributions, we seek to detect the most ambiguous samples and assign a soft label to them (unknown class K+1). Indeed, adversarial learning pushes the target samples toward the most suitable class prototypes in the source domain, while the most ambiguous ones potentially indicate the presence of a new class. In this work, we use the entropy measure and the distance from the class prototypes to identify these samples. In particular, we propose to rank the target samples using both measures.

An important aspect of Pareto ranking is the concept of dominance, widely applied in multi-objective optimization, which involves finding a set of Pareto optimal solutions rather than a single one. This set contains solutions that cannot be improved on one of the objective functions without degrading another. In our case, we formulate the problem as finding a subset of the unlabeled target samples that maximizes two objective functions: the first is the cosine distance between the representation of a target sample and the class prototypes of the source classes, and the second is the entropy computed for that target sample.
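
The two ranking criteria can be sketched for one target sample as follows. Taking the distance to the nearest source prototype as the distance objective is an assumption, as are the function names and the toy values.

```python
import math

def cosine_distance(u, v):
    """One minus the cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def ambiguity_criteria(feature, source_prototypes, probs):
    """Return (distance, entropy) for a target sample; larger means more ambiguous."""
    # Assumed aggregation: distance to the closest source class prototype.
    distance = min(cosine_distance(feature, w) for w in source_prototypes)
    ent = -sum(p * math.log(p) for p in probs if p > 0.0)
    return distance, ent

prototypes = [[1.0, 0.0], [0.0, 1.0]]
near = ambiguity_criteria([1.0, 0.05], prototypes, [0.95, 0.05])
far = ambiguity_criteria([1.0, 1.0], prototypes, [0.5, 0.5])
```

The sample lying between the two prototypes scores higher on both criteria, making it a stronger candidate for the unknown class than the sample close to the first prototype.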

Many samples in Figure 3 are undesirable choices because they have low values of entropy and distance and are therefore dominated by other points. The samples of the Pareto set are not dominated by any other sample in the target domain. Thus, the samples in this set are said to be non-dominated and form the so-called Pareto front of optimal solutions.
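
The selection of non-dominated samples can be sketched as below for two objectives that are both maximized. The function names and the example scores are hypothetical, and this quadratic-time search is only one simple way to extract a Pareto front.

```python
def dominates(a, b):
    """True if point `a` is at least as good as `b` in every objective and
    strictly better in at least one (both objectives are maximized)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(points):
    """Return the indices of the non-dominated points."""
    return [
        i for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]

# (distance, entropy) pairs for five hypothetical target samples.
scores = [(0.10, 0.20), (0.80, 0.30), (0.50, 0.90), (0.40, 0.40), (0.70, 0.60)]
unknown_candidates = pareto_front(scores)
```

In this toy example, the samples at indices 1, 2, and 4 form the front: none of them can be beaten on distance without losing on entropy, or vice versa.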

Algorithm 1 summarizes the main steps for training the open-set DA, together with its nominal parameters:

| Algorithm 1: Open-Set DA |
| --- |
| Input: source domain and target domain |
| Output: target labels |
| 1: Network parameters: number of iterations; mini-batch size b = 100; Adam optimizer with learning rate 0.0001 (together with its exponential decay rates for the first and second moments and its epsilon) |
| 2: Get the feature representations of the source and target images from EfficientNet-B3 |
| 3: Train the extra network on the source domain only by optimizing the loss on the labeled source samples |
| 4: Classify the target domain, get the samples forming the Pareto set, and assign them to class K+1 |
| 5: for each iteration: |
| 5.1: Shuffle the labeled samples and organize them into groups, each of size b |
| 5.2: for each group: pick a mini-batch from these labeled samples; randomly pick another mini-batch of size b from the target domain; update the parameters of the network by optimizing the loss in (1) |
| 5.3: Feed the target domain samples to the network and form a new Pareto set |
| 6: Classify the target domain data. |
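
The mini-batch organization in steps 5.1 and 5.2 can be sketched as below. The `update` callback stands in for the optimization of the loss in (1) and is hypothetical, as are the function name and the toy data; the Pareto set refresh of step 5.3 is omitted.

```python
import random

def run_epoch(labeled, target, batch_size, update, rng):
    """One pass of steps 5.1-5.2: shuffle labeled samples, split them into
    groups, and pair each group with a random target mini-batch."""
    shuffled = labeled[:]
    rng.shuffle(shuffled)
    n_updates = 0
    for start in range(0, len(shuffled), batch_size):
        labeled_batch = shuffled[start:start + batch_size]
        target_batch = rng.sample(target, min(batch_size, len(target)))
        update(labeled_batch, target_batch)  # placeholder for the loss in (1)
        n_updates += 1
    return n_updates

seen = []  # records the (labeled, target) batch sizes passed to each update
labeled = [("img%d" % i, i % 3) for i in range(10)]
target = ["tgt%d" % i for i in range(8)]
n_updates = run_epoch(labeled, target, 4,
                      lambda lb, tb: seen.append((len(lb), len(tb))),
                      random.Random(0))
```

In this sketch, `labeled` would hold both the source samples and the target samples currently assigned to class K+1, so that each update sees the two kinds of labeled data alongside a fresh unlabeled target mini-batch.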

6. Conclusions

In this paper, we addressed the problem of open-set domain adaptation in remote sensing imagery. Unlike the widely known closed set domain adaptation, in open set domain adaptation the source and target domains share only a subset of classes, and some of the target domain samples belong to classes unknown to the source domain. Our proposed method aims to reduce the domain discrepancy between the source and target domains using adversarial learning, while detecting the samples of the unknown class using a Pareto-based ranking scheme that relies on two metrics based on distance and entropy. Experimental results obtained on several remote sensing datasets showed promising performance: the proposed method achieved an open-set accuracy of 82.64% on the VHR dataset, outperforming the method with no adaptation by 23.06%. On the EHR dataset, the Pareto approach achieved an open-set accuracy of 80.27%. For future developments, we plan to investigate other criteria for identifying the unknown samples to further improve the performance of the model. In addition, we plan to extend this method to more general domain adaptation problems, such as universal domain adaptation.