Figure 1.

Schematic illustration of the proposed 3D-SaSiResNet.

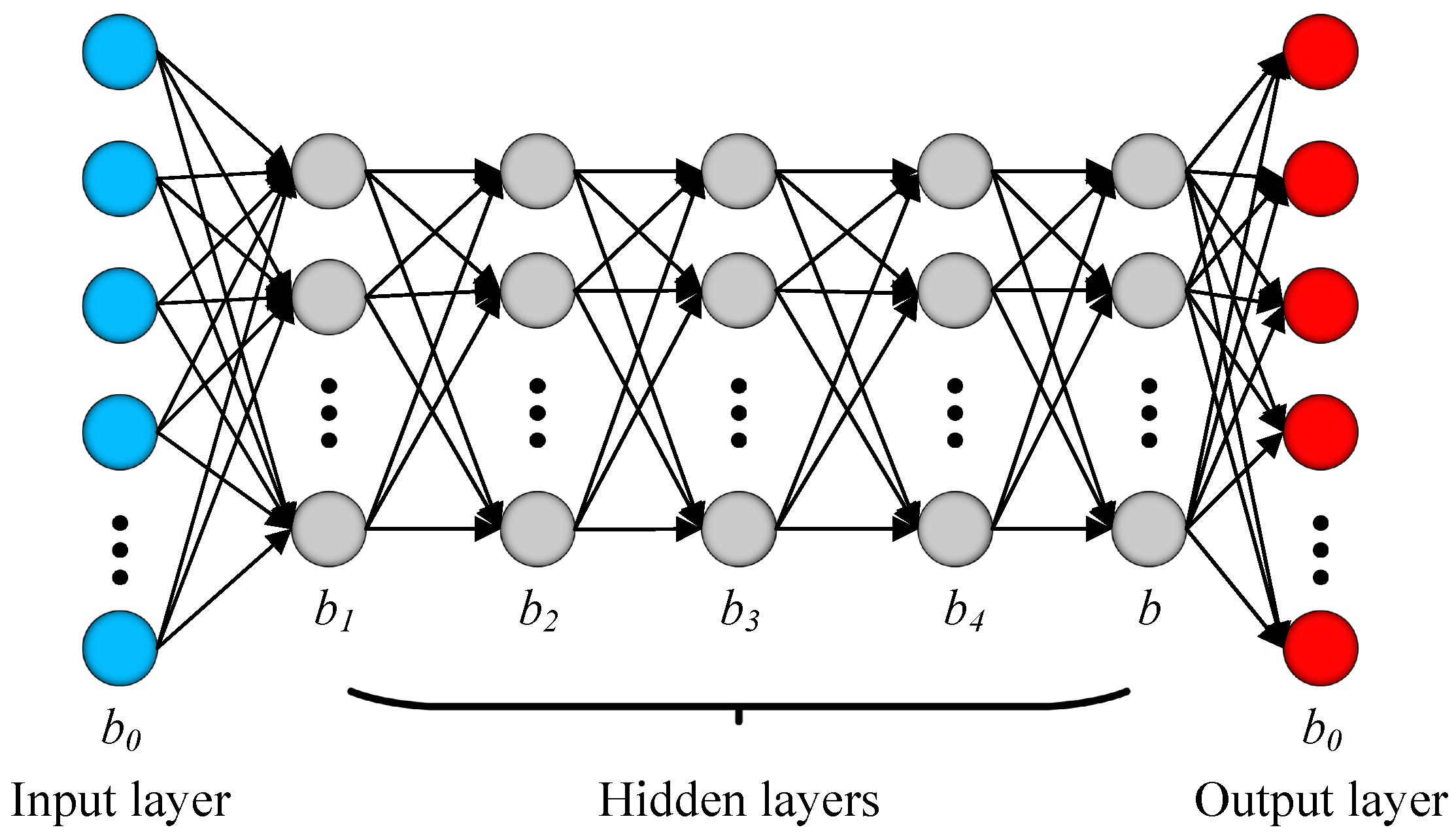

Figure 2.

Structure of the SAE (with five hidden layers) for band reduction.

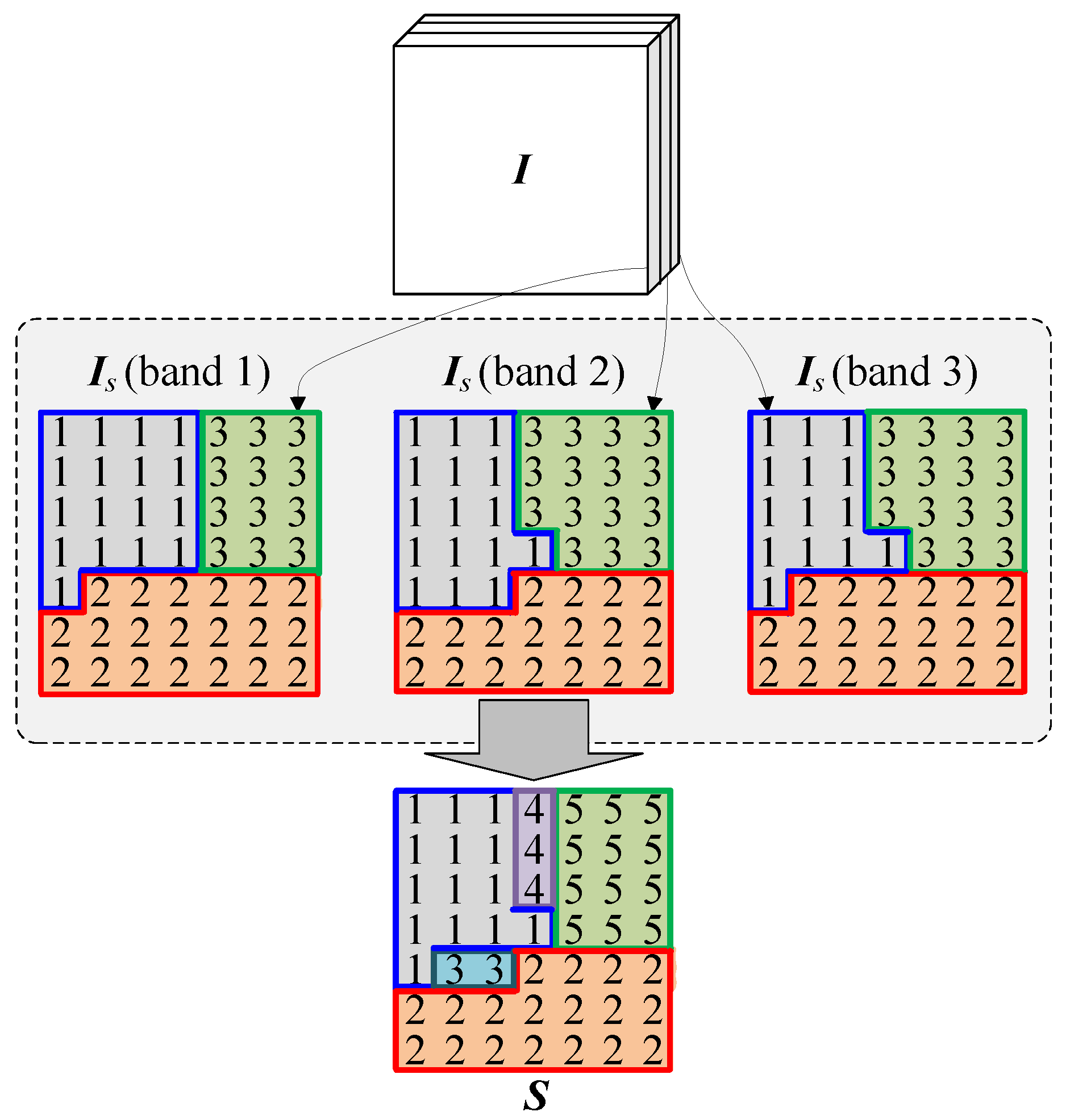
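
Figure 2 specifies a five-hidden-layer SAE for band reduction but not its training details. The NumPy sketch below illustrates the idea under assumed settings: sigmoid hidden units, a linear decoder, full-batch gradient descent, and greedy layer-wise pretraining. The function names `train_autoencoder` and `stacked_encode` and all hyperparameters are hypothetical, not the authors' code. Each pixel spectrum is a row of `X`, and the activations of the last hidden layer serve as the reduced bands.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_autoencoder(X, n_hidden, lr=0.5, epochs=200, seed=0):
    """Train one autoencoder layer (sigmoid encoder, linear decoder) on X.

    X has shape (n_pixels, n_bands), ideally scaled to [0, 1].
    Returns the encoder weights, encoder bias, and per-epoch reconstruction MSE.
    """
    rng = np.random.default_rng(seed)
    n_in = X.shape[1]
    W1 = rng.normal(0.0, 0.1, (n_in, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.1, (n_hidden, n_in))
    b2 = np.zeros(n_in)
    losses = []
    for _ in range(epochs):
        H = _sigmoid(X @ W1 + b1)   # encode
        X_hat = H @ W2 + b2         # decode (linear)
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean-squared reconstruction error.
        g_out = 2.0 * err / err.size
        gW2, gb2 = H.T @ g_out, g_out.sum(axis=0)
        g_hid = (g_out @ W2.T) * H * (1.0 - H)
        gW1, gb1 = X.T @ g_hid, g_hid.sum(axis=0)
        W1 -= lr * gW1
        b1 -= lr * gb1
        W2 -= lr * gW2
        b2 -= lr * gb2
    return W1, b1, losses


def stacked_encode(X, hidden_sizes, **train_kwargs):
    """Greedy layer-wise SAE: each layer is trained on the previous layer's codes."""
    H = X
    for n_hidden in hidden_sizes:
        W, b, _ = train_autoencoder(H, n_hidden, **train_kwargs)
        H = _sigmoid(H @ W + b)
    return H  # reduced representation, shape (n_pixels, hidden_sizes[-1])
```

Passing five hidden sizes to `stacked_encode` would mirror the five hidden layers in Figure 2; the actual layer widths are not given in this section, and any supervised fine-tuning step is omitted here.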

Figure 3.

Schematic example of how to obtain the superpixel map.

Figure 4.

Schematic example of the patch generation procedure: (a) extracting a 3D patch centered on the pixel; (b) extracting a 3D spatial-adaptive patch.

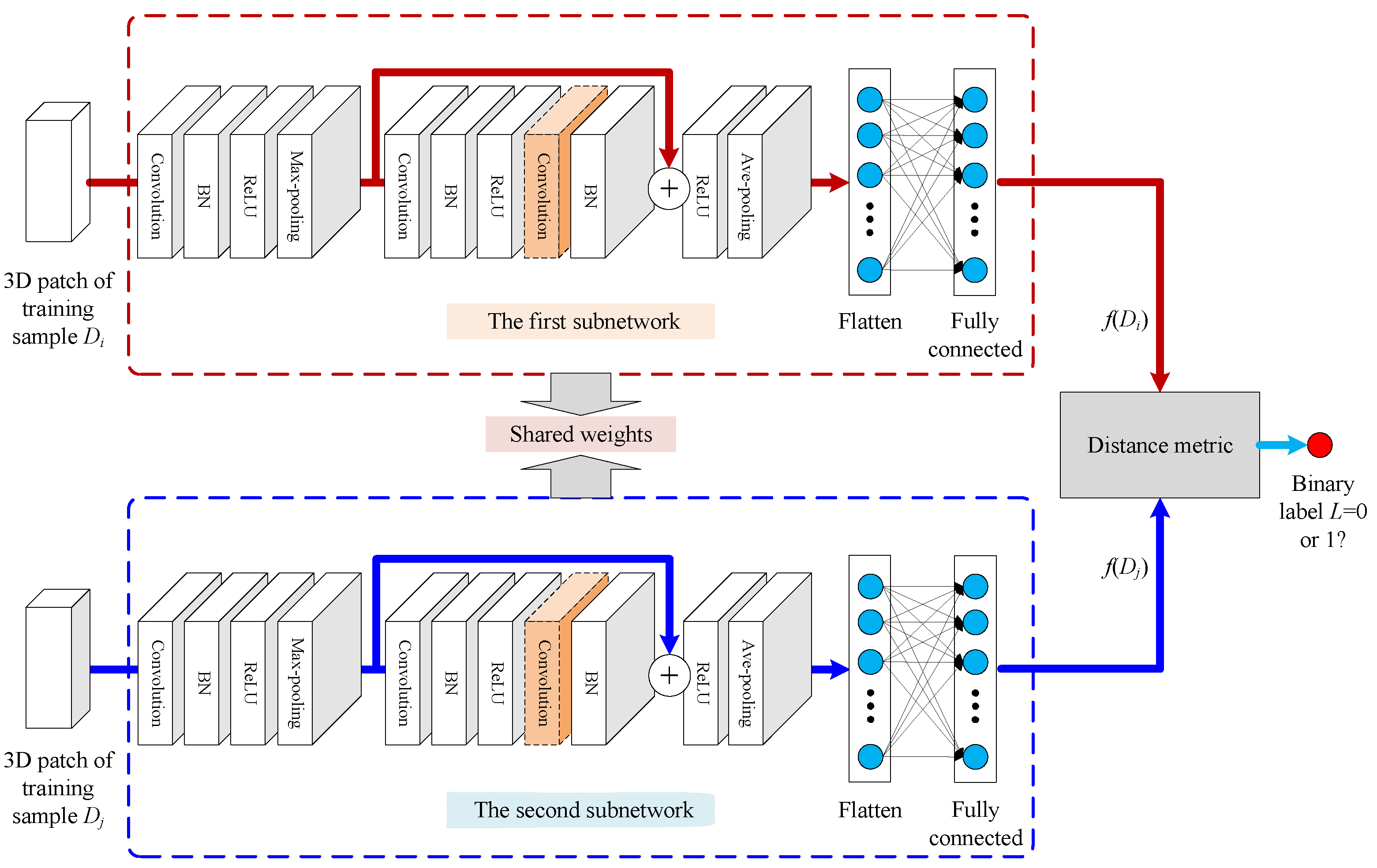
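
Panel (a) of Figure 4 corresponds to the usual fixed-center patch. A minimal NumPy sketch of that baseline follows, assuming an (H, W, B) cube, an odd patch size, and reflect padding at the image borders; the padding mode is an assumption not stated in the caption, and `extract_center_patch` is a hypothetical name. The spatial-adaptive rule of panel (b) depends on the superpixel map and is not reproduced here.

```python
import numpy as np


def extract_center_patch(cube, row, col, size):
    """Return a (size, size, bands) patch whose center pixel is cube[row, col].

    Borders are handled by reflect-padding the image; this padding mode is an
    assumption made for the sketch.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the pixel sits exactly at the center")
    r = size // 2
    padded = np.pad(cube, ((r, r), (r, r), (0, 0)), mode="reflect")
    # Pixel (row, col) lies at (row + r, col + r) in the padded image, so the
    # window starting at (row, col) with side `size` is centered on it.
    return padded[row:row + size, col:col + size, :]
```

Because the window is always centered on the pixel, patches near class boundaries mix pixels from neighboring objects, which is the limitation the spatial-adaptive patch in panel (b) is meant to address.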

Figure 5.

Architecture of the 3D-SaSiResNet. The 3D residual basic block in this architecture can also be replaced by a 3D ResNeXt basic block, in which the 3D convolution layer highlighted by the orange dotted cube is replaced with a grouped convolution.

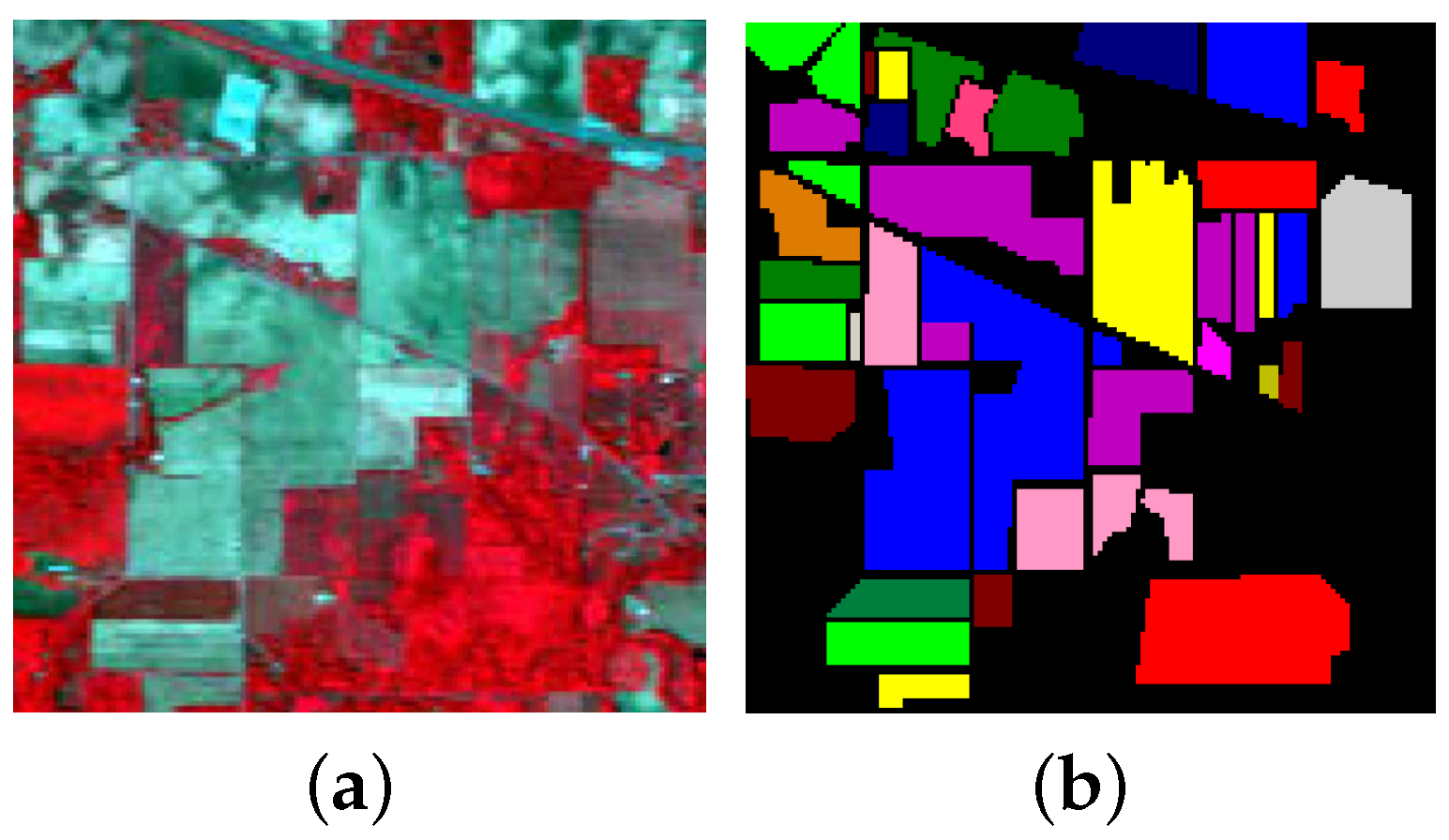
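
Figure 5 notes that a ResNeXt block replaces the highlighted 3D convolution with a grouped convolution, and Tables 6 and 9 list a cardinality of 8 for the corresponding networks. The hypothetical helper `conv3d_params` below counts the weights of a 3D convolution to show the effect: with g groups, each output channel is connected to only c_in / g input channels, so the weight count drops by a factor of g. The 64-channel, 3 × 3 × 3 example is illustrative only; the actual layer sizes are not recoverable from the tables in this section.

```python
def conv3d_params(c_in, c_out, kernel, groups=1, bias=False):
    """Count the learnable parameters of a 3D convolution layer.

    With `groups` = g (the ResNeXt cardinality), the input and output channels
    are split into g groups and each output channel only sees c_in // g inputs.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must both be divisible by groups")
    kd, kh, kw = kernel
    weights = c_out * (c_in // groups) * kd * kh * kw
    return weights + (c_out if bias else 0)


standard = conv3d_params(64, 64, (3, 3, 3))           # ordinary convolution
grouped = conv3d_params(64, 64, (3, 3, 3), groups=8)  # cardinality 8
print(standard, grouped, standard // grouped)  # 110592 13824 8
```

The saved parameters are what let a ResNeXt block widen its channels at roughly the cost of a plain residual block.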

Figure 6.

Indian Pines data. (a) three-band false color composite, (b) ground truth data with 16 classes.

Figure 7.

University of Pavia data. (a) three-band false color composite, (b) ground truth data with nine classes.

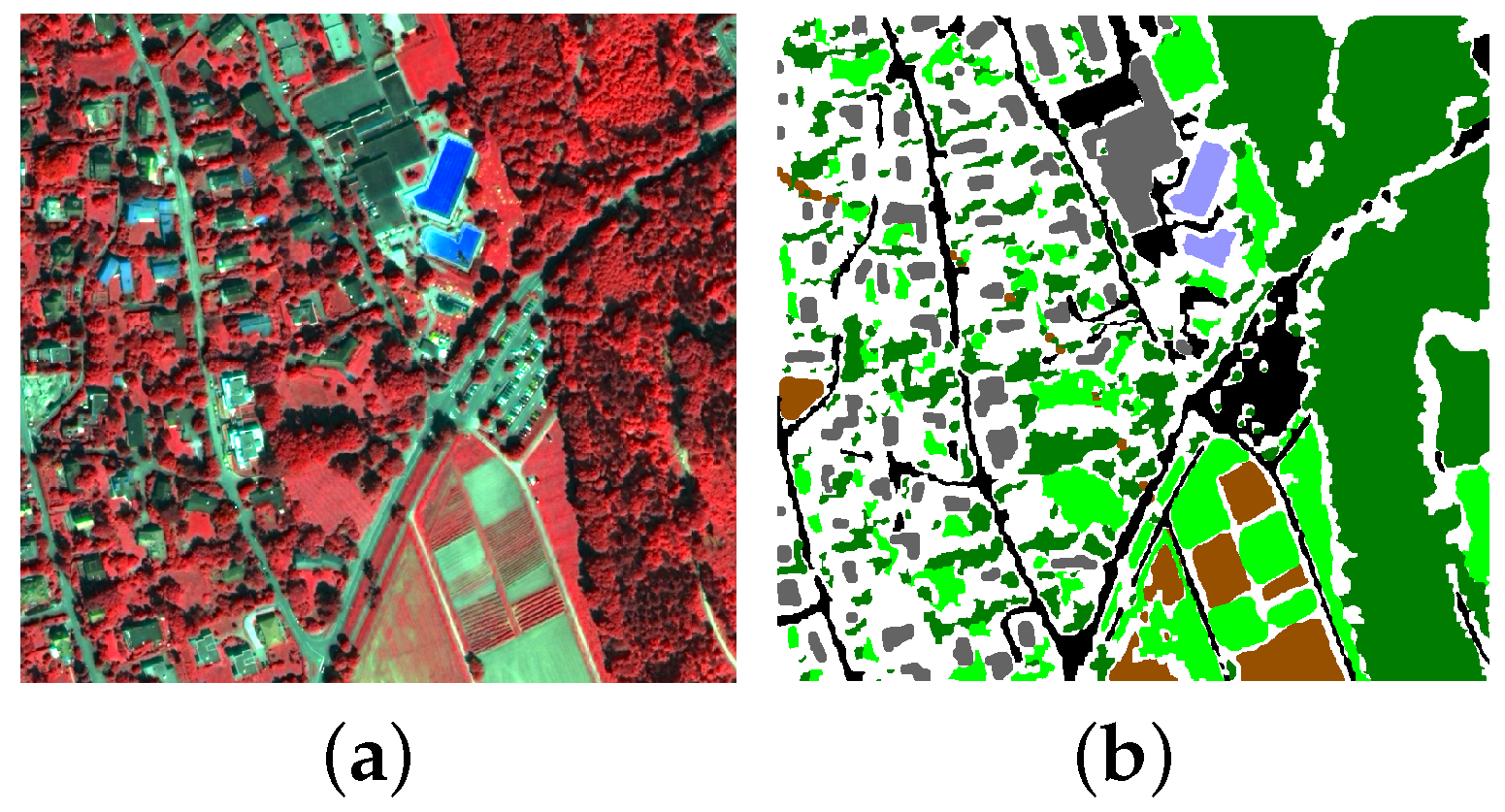

Figure 8.

Zurich data. (a) three-band false color composite, (b) ground truth data with six classes.

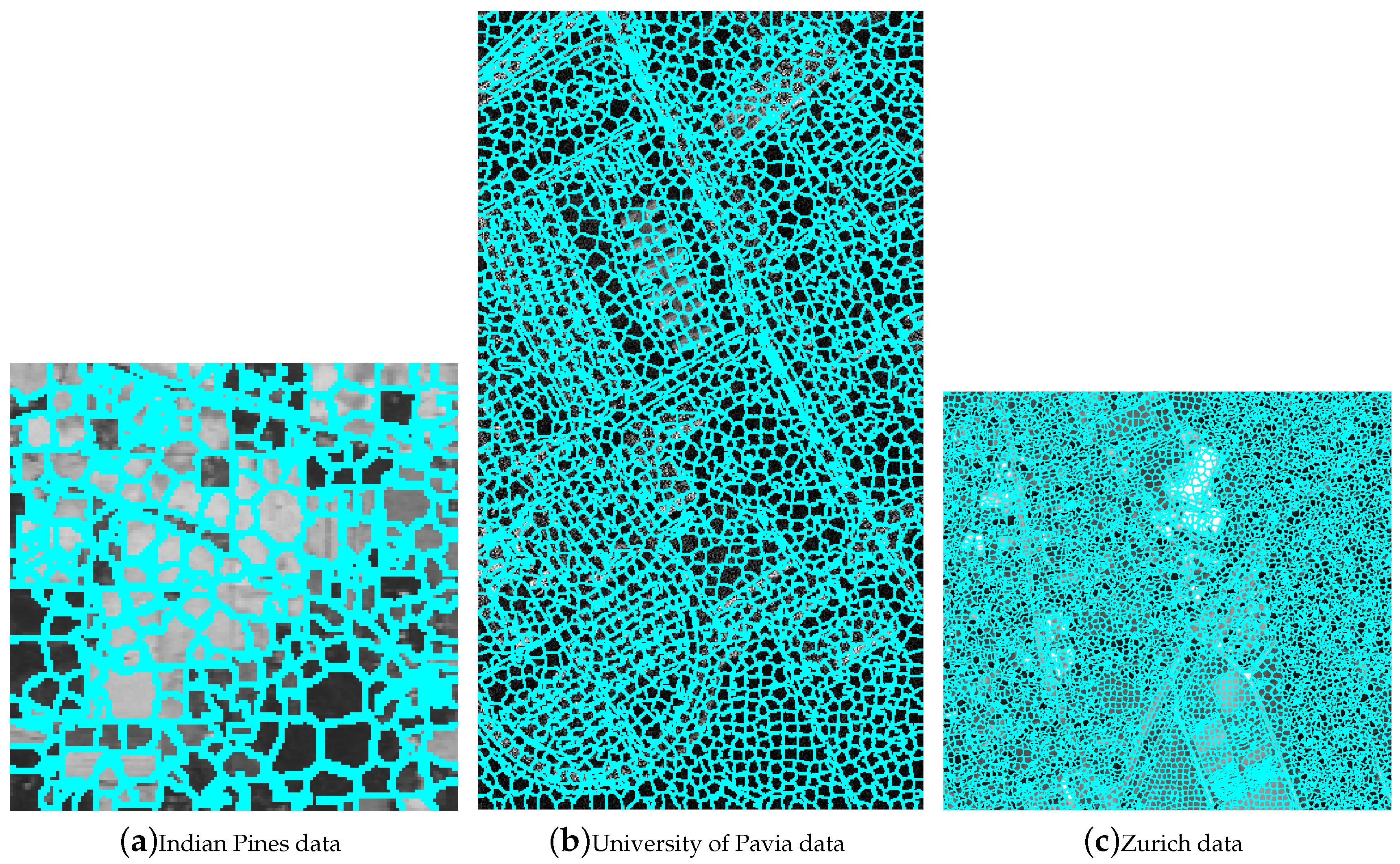

Figure 9.

Final superpixel segmentation results of the three experimental datasets. (a) Indian Pines data, (b) University of Pavia data, and (c) Zurich data.

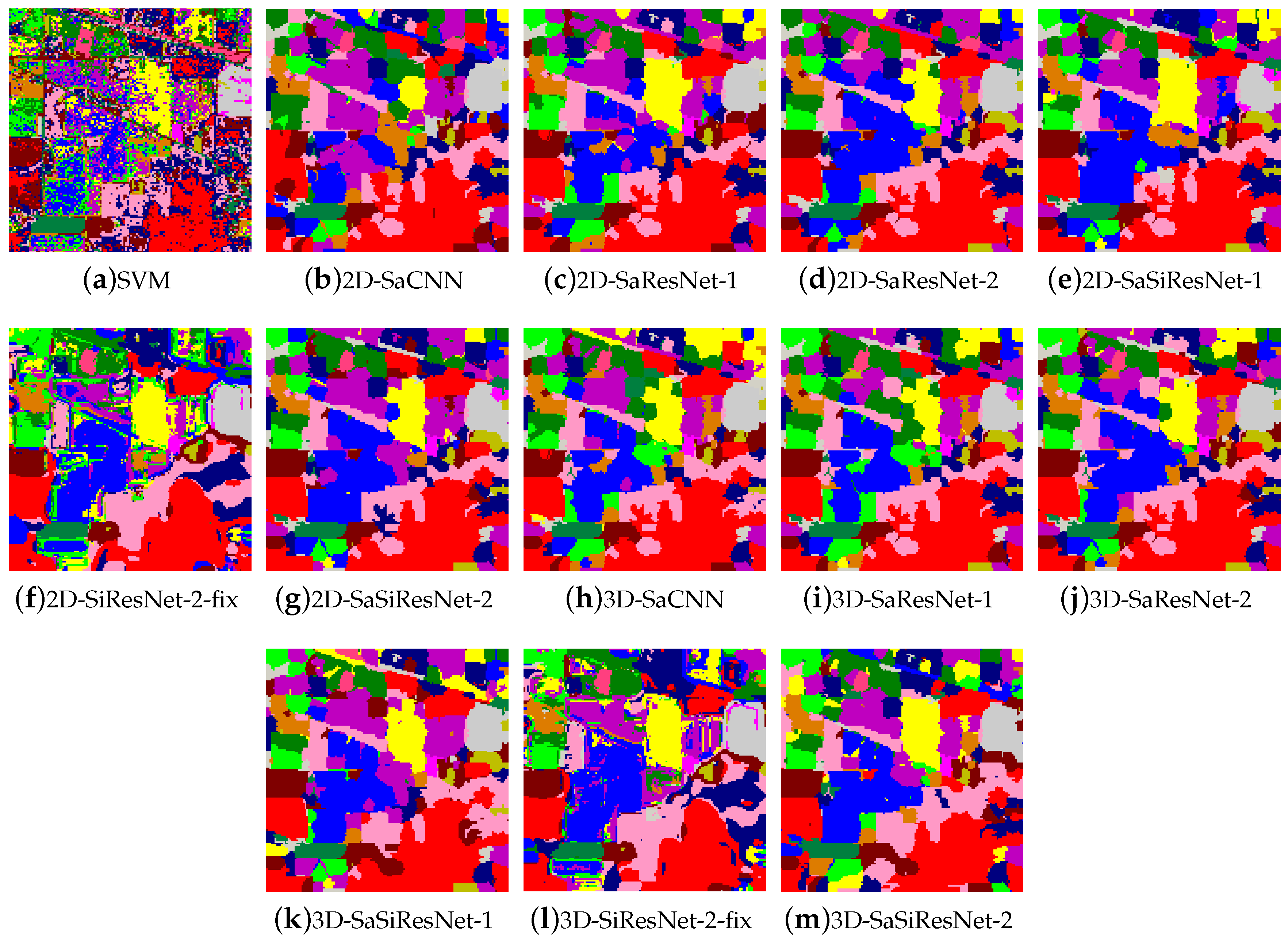

Figure 10.

Classification maps of the Indian Pines data obtained by (a) SVM, (b) 2D-SaCNN, (c) 2D-SaResNet-1, (d) 2D-SaResNet-2, (e) 2D-SaSiResNet-1, (f) 2D-SiResNet-2-fix, (g) 2D-SaSiResNet-2, (h) 3D-SaCNN, (i) 3D-SaResNet-1, (j) 3D-SaResNet-2, (k) 3D-SaSiResNet-1, (l) 3D-SiResNet-2-fix, and (m) 3D-SaSiResNet-2.

Figure 11.

Classification maps of the University of Pavia data obtained by (a) SVM, (b) 2D-SaCNN, (c) 2D-SaResNet-1, (d) 2D-SaResNet-2, (e) 2D-SaSiResNet-1, (f) 2D-SiResNet-2-fix, (g) 2D-SaSiResNet-2, (h) 3D-SaCNN, (i) 3D-SaResNet-1, (j) 3D-SaResNet-2, (k) 3D-SaSiResNet-1, (l) 3D-SiResNet-2-fix, and (m) 3D-SaSiResNet-2.

Figure 12.

Classification maps of the Zurich data obtained by (a) SVM, (b) 2D-SaCNN, (c) 2D-SaResNet-1, (d) 2D-SaResNet-2, (e) 2D-SaSiResNet-1, (f) 2D-SiResNet-2-fix, (g) 2D-SaSiResNet-2, (h) 3D-SaCNN, (i) 3D-SaResNet-1, (j) 3D-SaResNet-2, (k) 3D-SaSiResNet-1, (l) 3D-SiResNet-2-fix, and (m) 3D-SaSiResNet-2.

Figure 13.

Scatter plots of the two-dimensional features obtained from (a) the original data, (b) 2D-SaCNN, (c) 2D-SaSiResNet-2, (d) 3D-SiResNet-2-fix, and (e) 3D-SaSiResNet-2 for the Indian Pines data. Panels (a,d) show one feature point per pixel, whereas (b,c,e) show one feature point per patch; therefore, the object-based methods (b,c,e) yield far fewer feature points than the original data (a) and the pixel-based method (d).

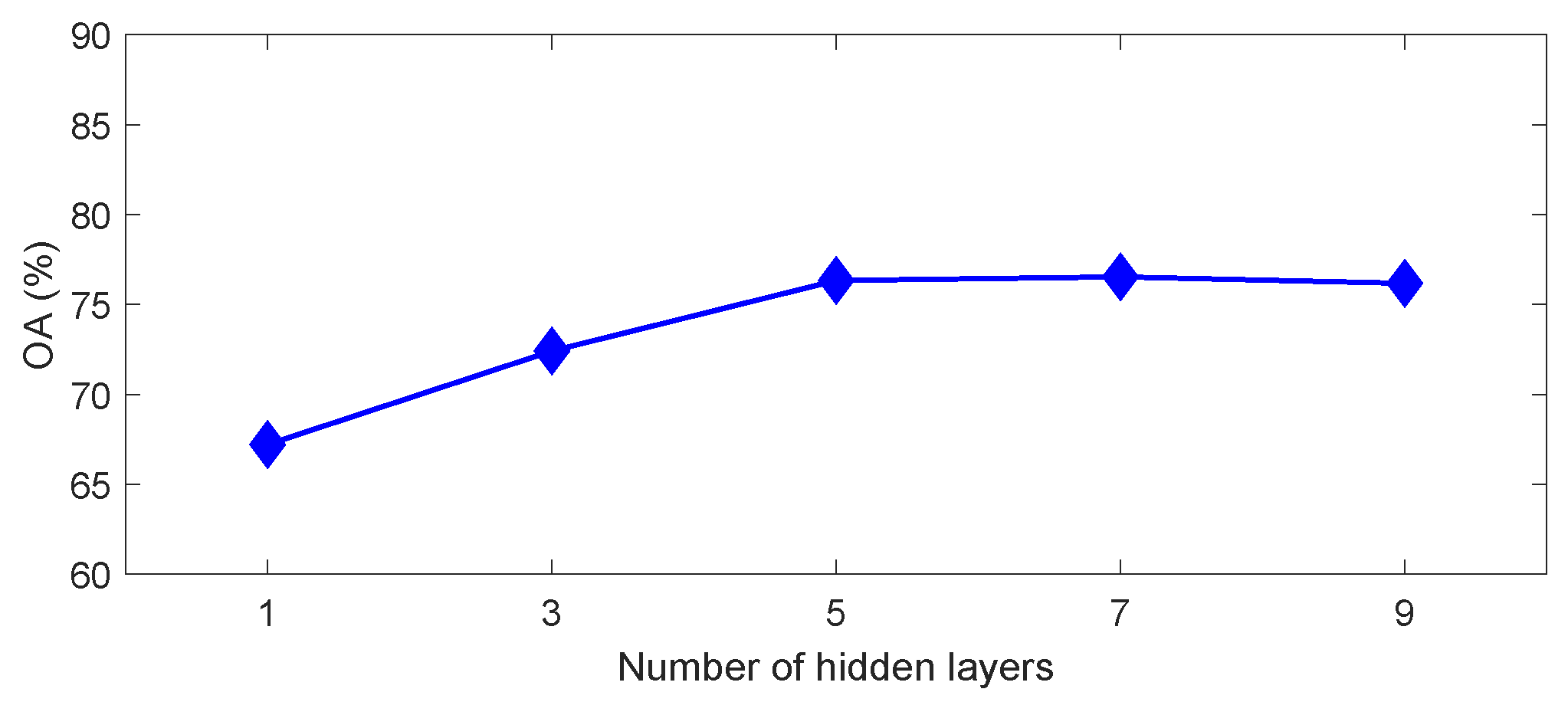

Figure 14.

Impact of the number of hidden layers in the SAE on the OA for the Indian Pines data.

Figure 15.

Overall accuracy (%) of different methods with various numbers of training samples for the Indian Pines data. The number of training samples in classes 1, 7, and 9 is fixed at 10 in all cases.

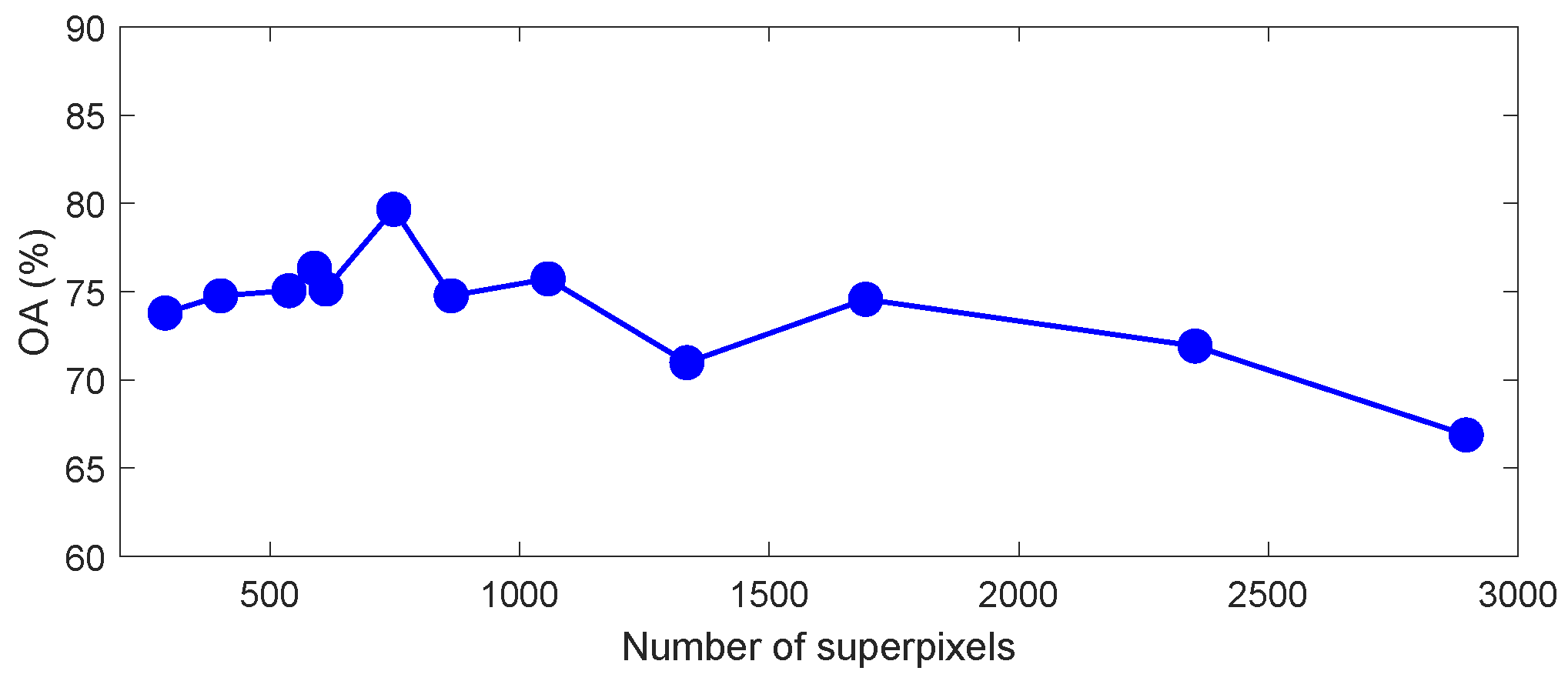

Figure 16.

Impact of the number of superpixels on the OA of the proposed 3D-SaSiResNet method for the Indian Pines data.

Figure 17.

Impact of the number of training epochs on the OA of the proposed 3D-SaSiResNet-2 method for the Indian Pines data.

Table 1.

Number of training, validation, and testing samples used in the Indian Pines data.

| Class | Name | Training | Validation | Testing | Total |
|---|---|---|---|---|---|
| 1 | Alfalfa | 10 | 10 | 34 | 54 |
| 2 | Corn-no till | 10 | 10 | 1414 | 1434 |
| 3 | Corn-min till | 10 | 10 | 814 | 834 |
| 4 | Corn | 10 | 10 | 214 | 234 |
| 5 | Grass/pasture | 10 | 10 | 477 | 497 |
| 6 | Grass/trees | 10 | 10 | 727 | 747 |
| 7 | Grass/pasture-mowed | 10 | 10 | 6 | 26 |
| 8 | Hay-windrowed | 10 | 10 | 469 | 489 |
| 9 | Oats | 10 | 5 | 5 | 20 |
| 10 | Soybean-no till | 10 | 10 | 948 | 968 |
| 11 | Soybean-min till | 10 | 10 | 2448 | 2468 |
| 12 | Soybean-clean till | 10 | 10 | 594 | 614 |
| 13 | Wheat | 10 | 10 | 192 | 212 |
| 14 | Woods | 10 | 10 | 1274 | 1294 |
| 15 | Bldg-grass-tree-drives | 10 | 10 | 360 | 380 |
| 16 | Stone-steel towers | 10 | 10 | 75 | 95 |
| | Total | 160 | 155 | 10,051 | 10,366 |

Table 2.

Number of training, validation, and testing samples used in the University of Pavia data.

| Class | Name | Training | Validation | Testing | Total |
|---|---|---|---|---|---|
| 1 | Asphalt | 10 | 10 | 6611 | 6631 |
| 2 | Meadows | 10 | 10 | 18,629 | 18,649 |
| 3 | Gravel | 10 | 10 | 2079 | 2099 |
| 4 | Trees | 10 | 10 | 3044 | 3064 |
| 5 | Metal sheets | 10 | 10 | 1325 | 1345 |
| 6 | Bare soil | 10 | 10 | 5009 | 5029 |
| 7 | Bitumen | 10 | 10 | 1310 | 1330 |
| 8 | Bricks | 10 | 10 | 3662 | 3682 |
| 9 | Shadows | 10 | 10 | 927 | 947 |
| | Total | 90 | 90 | 42,596 | 42,776 |

Table 3.

Number of training, validation, and testing samples used in the Zurich data.

| Class | Name | Training | Validation | Testing | Total |
|---|---|---|---|---|---|
| 1 | Roads | 10 | 10 | 51,162 | 51,182 |
| 2 | Trees | 10 | 10 | 185,116 | 185,136 |
| 3 | Grass | 10 | 10 | 65,616 | 65,636 |
| 4 | Buildings | 10 | 10 | 40,214 | 40,234 |
| 5 | Bare soil | 10 | 10 | 19,925 | 19,945 |
| 6 | Swimming pools | 10 | 10 | 4385 | 4405 |
| | Total | 60 | 60 | 366,418 | 366,538 |
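
Tables 1-3 use 10 training and 10 validation samples per class, with all remaining labeled pixels used for testing; the one exception is Oats in Table 1, which has only 20 labeled pixels and is given 5 validation samples. A hedged NumPy sketch of such a random per-class split follows. The function name, the use of 0 for unlabeled pixels, and the `val_override` mechanism for small classes are assumptions for illustration, not the authors' procedure.

```python
import numpy as np


def split_per_class(labels, n_train=10, n_val=10, val_override=None, seed=0):
    """Randomly split labeled pixel indices into train/validation/test sets.

    labels: 1D array of class ids, with 0 marking unlabeled pixels (assumption).
    val_override: optional {class_id: n_val} for classes with few samples.
    """
    rng = np.random.default_rng(seed)
    val_override = val_override or {}
    train, val, test = [], [], []
    for c in np.unique(labels):
        if c == 0:  # skip unlabeled pixels
            continue
        idx = rng.permutation(np.flatnonzero(labels == c))
        nv = val_override.get(int(c), n_val)
        train.append(idx[:n_train])
        val.append(idx[n_train:n_train + nv])
        test.append(idx[n_train + nv:])  # everything left is used for testing
    return np.concatenate(train), np.concatenate(val), np.concatenate(test)
```

With `val_override={9: 5}`, the Oats class would be split 10/5/5, matching its row in Table 1.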

Table 4.

Detailed configuration of the 2D-SaCNN. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1) | (0,0) | 1 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2) | (0,0) | – | |
| Convolution-2 | | | (1,1) | (1,1) | 1 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1) | (1,1) | 1 | |
| BN-3 | | – | – | – | – | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | C |
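
The input and output sizes in Tables 4-9 did not survive extraction, but for convolution and pooling layers they follow the standard relation out = floor((in + 2 * padding - kernel) / stride) + 1 along each axis. The sketch below applies it; the 27 × 27 input and the 4 × 4, 2 × 2, and 3 × 3 kernels are hypothetical values chosen only to illustrate the pattern. It also suggests why Convolution-2 and Convolution-3 use stride 1 and padding 1: if their kernels have size 3 (an assumption), the feature-map size is preserved, which is what allows the shortcut in Tables 5, 6, 8, and 9 to add the Max-pooling-1 output to the BN-3 output element-wise.

```python
def conv_out_size(in_size, kernel, stride=1, padding=0):
    """Output length along one axis of a convolution or pooling layer."""
    return (in_size + 2 * padding - kernel) // stride + 1


def layer_output_shape(in_shape, kernel, stride, padding):
    """Apply conv_out_size axis by axis, e.g. (rows, cols) or (rows, cols, bands)."""
    return tuple(
        conv_out_size(i, k, s, p)
        for i, k, s, p in zip(in_shape, kernel, stride, padding)
    )


# Hypothetical 2D pipeline: 27x27 input -> Convolution-1 -> Max-pooling-1 -> Convolution-2
shape = layer_output_shape((27, 27), (4, 4), (1, 1), (0, 0))          # Convolution-1
after_pool = layer_output_shape(shape, (2, 2), (2, 2), (0, 0))        # Max-pooling-1
after_conv2 = layer_output_shape(after_pool, (3, 3), (1, 1), (1, 1))  # Convolution-2
print(shape, after_pool, after_conv2)  # (24, 24) (12, 12) (12, 12)
```

The same function handles the 3D networks in Tables 7-9 by passing three-element tuples for the kernel, stride, and padding.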

Table 5.

Detailed configuration of the 2D-SaResNet-1 and a subnetwork of the 2D-SaSiResNet-1. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1) | (0,0) | 1 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2) | (0,0) | – | |
| Convolution-2 | | | (1,1) | (1,1) | 1 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1) | (1,1) | 1 | |
| BN-3 | | – | – | – | – | |
| Shortcut | Output of Max-pooling-1 + Output of BN-3 | | | | | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | |

Table 6.

Detailed configuration of the 2D-SaResNet-2, and a subnetwork of the 2D-SaSiResNet-2 and 2D-SiResNet-2-fix. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1) | (0,0) | 8 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2) | (0,0) | – | |
| Convolution-2 | | | (1,1) | (1,1) | 8 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1) | (1,1) | 8 | |
| BN-3 | | – | – | – | – | |
| Shortcut | Output of Max-pooling-1 + Output of BN-3 | | | | | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | |

Table 7.

Detailed configuration of the 3D-SaCNN. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1,1) | (0,0,0) | 1 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2,2) | (0,0,0) | – | |
| Convolution-2 | | | (1,1,1) | (1,1,1) | 1 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1,1) | (1,1,1) | 1 | |
| BN-3 | | – | – | – | – | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | |

Table 8.

Detailed configuration of the 3D-SaResNet-1 and a subnetwork of the 3D-SaSiResNet-1. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1,1) | (0,0,0) | 1 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2,2) | (0,0,0) | – | |
| Convolution-2 | | | (1,1,1) | (1,1,1) | 1 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1,1) | (1,1,1) | 1 | |
| BN-3 | | – | – | – | – | |
| Shortcut | Output of Max-pooling-1 + Output of BN-3 | | | | | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | |

Table 9.

Detailed configuration of the 3D-SaResNet-2, and a subnetwork of the 3D-SaSiResNet-2 and 3D-SiResNet-2-fix. Bold type indicates the number of feature maps in each layer.

| Layer | Input Size | Kernel Size | Stride | Padding | Cardinality | Output Size |
|---|---|---|---|---|---|---|
| Convolution-1 | | | (1,1,1) | (0,0,0) | 8 | |
| BN-1 | | – | – | – | – | |
| ReLU-1 | | – | – | – | – | |
| Max-pooling-1 | | | (2,2,2) | (0,0,0) | – | |
| Convolution-2 | | | (1,1,1) | (1,1,1) | 8 | |
| BN-2 | | – | – | – | – | |
| ReLU-2 | | – | – | – | – | |
| Convolution-3 | | | (1,1,1) | (1,1,1) | 8 | |
| BN-3 | | – | – | – | – | |
| Shortcut | Output of Max-pooling-1 + Output of BN-3 | | | | | |
| ReLU-3 | | – | – | – | – | |
| Flatten | | – | – | – | – | |
| Output | | – | – | – | – | |

Table 10.

Classification accuracy (%) of various methods for the Indian Pines data with 10 labeled training samples per class. Bold values indicate the best result in each row.

| Class | SVM | 2D-SaCNN | 2D-SaResNet-1 | 2D-SaResNet-2 | 2D-SaSiResNet-1 | 2D-SiResNet-2-fix | 2D-SaSiResNet-2 | 3D-SaCNN | 3D-SaResNet-1 | 3D-SaResNet-2 | 3D-SaSiResNet-1 | 3D-SiResNet-2-fix | 3D-SaSiResNet-2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 80.29 | 8.24 | 84.97 | 92.06 | 94.41 | 95.00 | 84.41 | 94.71 | 94.71 | 94.71 | 94.71 | 95.00 | **95.88** |
| 2 | 40.57 | 50.48 | 51.54 | 48.68 | 59.43 | 49.29 | 54.51 | 44.06 | 43.47 | 37.99 | **66.85** | 55.37 | 66.61 |
| 3 | 53.51 | 43.21 | 63.36 | 64.66 | 77.17 | 54.84 | 76.41 | 77.81 | 79.18 | 68.05 | 79.30 | 65.43 | **81.92** |
| 4 | 68.74 | 77.38 | 78.71 | 77.99 | 84.21 | 87.24 | 76.65 | 88.27 | 83.55 | 75.19 | 81.73 | **88.41** | 80.51 |
| 5 | 72.73 | 39.71 | 49.36 | 64.68 | 84.93 | 78.43 | 86.76 | 83.14 | 72.62 | 83.33 | **90.17** | 82.35 | 89.18 |
| 6 | 71.02 | 77.59 | 90.91 | **95.25** | 89.08 | 87.79 | 86.85 | 89.63 | 90.50 | 90.83 | 88.78 | 90.54 | 89.71 |
| 7 | 85.00 | 90.00 | **100.00** | **100.00** | **100.00** | 96.67 | **100.00** | **100.00** | **100.00** | **100.00** | **100.00** | 98.33 | **100.00** |
| 8 | 83.45 | 98.06 | **99.57** | **99.57** | 94.95 | 91.83 | 94.90 | 95.61 | 96.67 | 97.42 | 96.25 | 92.75 | 95.65 |
| 9 | 94.00 | 18.00 | 35.56 | 36.00 | 30.00 | **100.00** | 44.50 | 36.00 | 36.00 | 36.00 | 38.00 | **100.00** | 36.00 |
| 10 | 54.56 | 32.41 | 62.68 | 54.02 | 75.81 | 67.45 | 72.15 | 62.80 | 63.48 | 70.04 | 72.61 | 68.63 | **77.68** |
| 11 | 35.16 | 38.42 | 52.99 | 51.90 | 53.52 | 52.39 | **65.60** | 43.00 | 45.92 | 56.26 | 59.44 | 61.87 | 59.23 |
| 12 | 48.38 | 66.68 | 55.33 | 68.97 | 72.29 | 56.14 | **79.29** | 72.05 | 76.18 | 69.12 | 76.11 | 70.77 | 75.96 |
| 13 | 91.93 | 85.73 | 98.61 | 98.59 | 98.59 | **98.96** | 98.54 | 98.59 | 98.59 | 98.59 | 95.42 | 98.02 | 98.59 |
| 14 | 79.80 | 83.30 | 94.37 | **95.64** | 91.00 | 89.18 | 92.90 | 92.84 | 92.01 | 89.22 | 93.15 | 89.59 | 90.08 |
| 15 | 52.28 | 73.50 | 77.75 | 77.78 | 83.47 | 77.47 | 83.80 | **86.75** | 79.69 | 77.64 | 79.97 | 73.58 | 81.14 |
| 16 | 79.33 | 80.53 | 78.52 | 78.67 | 78.27 | **99.60** | 80.29 | 79.07 | 79.87 | 79.07 | 77.20 | 94.67 | 77.47 |
| OA (%) | 55.26 | 56.20 | 67.29 | 67.93 | 73.15 | 67.17 | 75.41 | 67.57 | 67.88 | 68.88 | 76.05 | 72.48 | **76.33** |
| AA (%) | 68.17 | 60.20 | 73.39 | 75.28 | 79.19 | 80.14 | 79.85 | 77.78 | 77.03 | 76.47 | 80.60 | **82.83** | 80.98 |
| κ (%) | 50.09 | 50.88 | 63.00 | 63.77 | 69.84 | 63.16 | 72.16 | 63.77 | 64.04 | 64.87 | 73.00 | 68.93 | **73.33** |
| Time (min) | 0 + 0.02 | 1.86 + 1.11 | 1.86 + 1.59 | 1.86 + 1.54 | 1.86 + 14.25 | 0.11 + 58.33 | 1.86 + 14.11 | 1.86 + 1.55 | 1.86 + 1.64 | 1.86 + 1.58 | 1.86 + 14.42 | 0.11 + 76.47 | 1.86 + 14.31 |
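
Tables 10-12 report overall accuracy (OA), average accuracy (AA), and the kappa coefficient κ. The sketch below computes all three from a confusion matrix using their standard definitions: OA is the fraction of correctly labeled test pixels, AA is the mean of the per-class accuracies, and κ = (OA - p_e) / (1 - p_e), where p_e is the chance agreement derived from the row and column totals. It assumes integer labels 0..n_classes-1 and at least one test sample per class; `accuracy_metrics` is a hypothetical name.

```python
import numpy as np


def accuracy_metrics(y_true, y_pred, n_classes):
    """Return OA, AA, kappa (all in %) and the per-class accuracies (%)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: reference, columns: prediction
    n = cm.sum()
    oa = np.trace(cm) / n
    per_class = np.diag(cm) / cm.sum(axis=1)  # class-wise accuracy
    aa = per_class.mean()
    # Expected agreement by chance, from the row and column totals.
    p_e = float((cm.sum(axis=0) * cm.sum(axis=1)).sum()) / float(n) ** 2
    kappa = (oa - p_e) / (1.0 - p_e)
    return 100 * oa, 100 * aa, 100 * kappa, 100 * per_class
```

For example, with reference labels [0, 0, 0, 0, 1, 1, 1, 1] and predictions [0, 0, 0, 1, 1, 1, 1, 1], one class-0 pixel is misclassified, giving OA = AA = 87.5% and κ = 75%. AA weights every class equally, which is why small classes such as Oats can move AA much more than OA.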

Table 11.

Classification accuracy (%) of various methods for the University of Pavia data with 10 labeled training samples per class. Bold values indicate the best result in each row.

| Class | SVM | 2D-SaCNN | 2D-SaResNet-1 | 2D-SaResNet-2 | 2D-SaSiResNet-1 | 2D-SiResNet-2-fix | 2D-SaSiResNet-2 | 3D-SaCNN | 3D-SaResNet-1 | 3D-SaResNet-2 | 3D-SaSiResNet-1 | 3D-SiResNet-2-fix | 3D-SaSiResNet-2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 66.83 | 64.19 | 80.45 | 84.73 | 82.90 | 74.12 | 83.78 | 81.15 | 86.79 | 85.64 | 87.23 | 88.12 | **89.83** |
| 2 | 67.36 | 62.34 | 80.27 | 82.78 | 76.82 | **84.83** | 76.34 | 73.35 | 74.93 | 76.34 | 75.83 | 78.04 | 79.12 |
| 3 | 65.63 | **87.98** | 82.33 | 80.01 | 82.38 | 73.87 | 76.18 | 79.69 | 72.59 | 78.07 | 80.43 | 84.13 | 84.29 |
| 4 | 87.26 | 92.40 | **93.61** | 93.18 | 91.50 | 91.60 | 91.14 | 90.93 | 88.85 | 88.57 | 89.63 | 90.65 | 90.78 |
| 5 | 96.39 | 97.92 | 96.77 | 95.25 | 94.27 | 97.82 | 93.51 | 97.67 | 97.35 | **98.01** | 95.55 | 96.52 | 95.56 |
| 6 | 50.92 | 52.61 | 44.29 | 46.37 | 73.86 | 67.10 | **79.23** | 70.46 | 72.11 | 66.80 | 78.12 | 75.46 | 73.80 |
| 7 | 72.24 | 95.31 | 96.40 | 95.53 | 93.75 | 85.20 | 93.90 | 93.83 | 96.18 | 96.00 | **97.90** | 97.75 | 97.60 |
| 8 | 53.44 | 66.42 | 64.90 | 62.29 | 62.49 | 65.24 | 71.12 | 64.80 | 67.99 | 71.92 | **83.14** | 79.87 | 79.57 |
| 9 | 89.05 | 83.60 | 81.27 | 78.99 | 77.72 | **96.30** | 74.95 | 80.58 | 80.81 | 80.50 | 79.34 | 78.92 | 77.13 |
| OA (%) | 67.01 | 67.82 | 76.83 | 78.35 | 78.59 | 80.02 | 79.48 | 76.61 | 78.20 | 78.61 | 81.08 | 81.86 | **82.32** |
| AA (%) | 72.13 | 78.09 | 80.03 | 79.90 | 81.74 | 81.79 | 82.24 | 81.38 | 81.96 | 82.43 | 85.24 | **85.49** | 85.30 |
| κ (%) | 58.14 | 59.97 | 69.86 | 71.65 | 72.74 | 74.07 | 73.93 | 70.48 | 72.30 | 72.59 | 75.94 | 76.78 | **77.31** |
| Time (min) | 0 + 0.03 | 10.11 + 1.05 | 10.11 + 1.37 | 10.11 + 1.24 | 10.11 + 9.24 | 1.28 + 13.22 | 10.11 + 8.37 | 10.11 + 1.01 | 10.11 + 1.32 | 10.11 + 1.21 | 10.11 + 8.35 | 1.28 + 13.19 | 10.11 + 8.05 |

Table 12.

Classification accuracy (%) of various methods for the Zurich data with 10 labeled training samples per class. Bold values indicate the best result in each row.

| Class | SVM | 2D-SaCNN | 2D-SaResNet-1 | 2D-SaResNet-2 | 2D-SaSiResNet-1 | 2D-SiResNet-2-fix | 2D-SaSiResNet-2 | 3D-SaCNN | 3D-SaResNet-1 | 3D-SaResNet-2 | 3D-SaSiResNet-1 | 3D-SiResNet-2-fix | 3D-SaSiResNet-2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 32.15 | 52.49 | 45.69 | 45.49 | 57.68 | **62.17** | 57.47 | 54.98 | 51.63 | 49.13 | 58.12 | 57.90 | 53.17 |
| 2 | 66.36 | 50.45 | 79.92 | 84.76 | 82.83 | 86.31 | 84.29 | 78.93 | 81.30 | 83.27 | 82.88 | 83.00 | **86.71** |
| 3 | **90.57** | 63.58 | 88.78 | 81.09 | 81.31 | 74.14 | 80.80 | 84.32 | 82.86 | 80.57 | 81.19 | 82.90 | 78.50 |
| 4 | 27.79 | 37.35 | 64.53 | 61.41 | 58.47 | 52.53 | 58.31 | 65.55 | 65.59 | **66.32** | 58.45 | 58.15 | 64.00 |
| 5 | 70.08 | 76.54 | 77.65 | 78.15 | 79.12 | 79.45 | 79.42 | 81.42 | 81.53 | 77.20 | 79.81 | **83.17** | 80.35 |
| 6 | 95.50 | **98.79** | 94.37 | 94.99 | 93.68 | 96.66 | 93.99 | 94.04 | 94.76 | 92.80 | 94.18 | 95.52 | 92.97 |
| OA (%) | 62.23 | 53.65 | 75.09 | 75.82 | 76.30 | 76.80 | 76.92 | 75.40 | 75.89 | 75.95 | 76.41 | 76.91 | **77.79** |
| AA (%) | 63.74 | 63.20 | 75.16 | 74.32 | 75.51 | 75.21 | 75.71 | 76.54 | 76.28 | 74.88 | 75.77 | **76.77** | 75.95 |
| κ (%) | 47.48 | 40.40 | 64.68 | 65.08 | 66.08 | 66.72 | 66.81 | 65.31 | 65.77 | 65.55 | 66.32 | 67.40 | **67.95** |
| Time (min) | 0 + 0.13 | 3.22 + 1.01 | 3.22 + 0.94 | 3.22 + 0.86 | 3.22 + 3.54 | 5.62 + 7.94 | 3.22 + 3.23 | 3.22 + 0.98 | 3.22 + 0.92 | 3.22 + 0.68 | 3.22 + 2.91 | 5.62 + 6.54 | 3.22 + 3.12 |

Table 13.

McNemar’s test (Z values) between 3D-SaSiResNet-2 and the other methods.

| Methods | Z (Indian Pines Data) | Z (University of Pavia Data) | Z (Zurich Data) |
|---|---|---|---|
| 3D-SaSiResNet-2 vs. SVM | 51.35 | 78.65 | 194.55 |
| 3D-SaSiResNet-2 vs. 2D-SaCNN | 49.05 | 74.38 | 283.45 |
| 3D-SaSiResNet-2 vs. 2D-SaResNet-1 | 21.96 | 28.61 | 29.12 |
| 3D-SaSiResNet-2 vs. 2D-SaResNet-2 | 20.40 | 20.87 | 20.14 |
| 3D-SaSiResNet-2 vs. 2D-SaSiResNet-1 | 7.65 | 19.64 | 12.97 |
| 3D-SaSiResNet-2 vs. 2D-SiResNet-2-fix | 22.25 | 12.38 | 6.45 |
| 3D-SaSiResNet-2 vs. 2D-SaSiResNet-2 | 2.13 | 15.31 | 4.90 |
| 3D-SaSiResNet-2 vs. 3D-SaCNN | 21.28 | 29.51 | 24.52 |
| 3D-SaSiResNet-2 vs. 3D-SaResNet-1 | 20.01 | 21.54 | 18.66 |
| 3D-SaSiResNet-2 vs. 3D-SaResNet-2 | 19.35 | 18.72 | 17.42 |
| 3D-SaSiResNet-2 vs. 3D-SaSiResNet-1 | 1.19 | 7.12 | 10.54 |
| 3D-SaSiResNet-2 vs. 3D-SiResNet-2-fix | 7.89 | 2.94 | 5.51 |
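
Table 13 reports McNemar’s Z statistic. A common form in remote-sensing comparisons is Z = (f12 - f21) / sqrt(f12 + f21), where f12 counts test pixels that the first method classifies correctly and the second misclassifies, and f21 counts the reverse; a positive Z favors the first method, and |Z| > 1.96 indicates a difference significant at the 5% level. Assuming the table uses this form with 3D-SaSiResNet-2 as the first method, the statistic can be computed as follows (`mcnemar_z` is a hypothetical helper).

```python
import math

import numpy as np


def mcnemar_z(y_true, pred_a, pred_b):
    """McNemar's Z for method A versus method B on the same test pixels."""
    y_true, pred_a, pred_b = map(np.asarray, (y_true, pred_a, pred_b))
    correct_a = pred_a == y_true
    correct_b = pred_b == y_true
    f12 = int(np.sum(correct_a & ~correct_b))  # A correct, B wrong
    f21 = int(np.sum(~correct_a & correct_b))  # A wrong, B correct
    if f12 + f21 == 0:
        return 0.0  # the two methods never disagree in correctness
    return (f12 - f21) / math.sqrt(f12 + f21)
```

Only pixels on which the two methods disagree in correctness enter the statistic, so pixels that both methods get right (or both get wrong) do not affect Z.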