1. Introduction

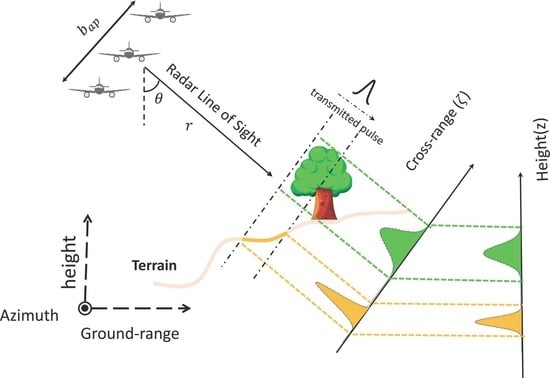

Synthetic Aperture Radar Tomography (TomoSAR) is generally referred to as an active microwave imaging technology to resolve the illuminated targets in the three dimensional (3D) space using multiple SAR acquisitions [

1]. The rationale of TomoSAR is easily understood by considering it as an extension of SAR imaging from 2D to 3D. Conventional SAR systems transmit short pulses and receive the echoes backscattered by the illuminated targets along single flight trajectories, i.e., a 1D synthetic aperture in the SAR jargon. In this way, the received signal can be focused through digital signal processing techniques to produce a 2D image of the illuminated area, where targets are resolved in the range/azimuth plane [

2]. TomoSAR imaging is based on the collection of multiple flight trajectories to form a 2D synthetic aperture. This allows focusing the received signal not only in the range/azimuth plane, as in conventional 2D SAR imaging, but also in elevation, hence resulting in the possibility to resolve the targets in three-dimensions (3D). Leveraging this relatively simple principle, the introduction of SAR tomography has opened up a completely new way to investigate natural media. Indeed, Radar waves can penetrate by meters, or even tens of meters, into natural media that are opaque at optical frequency, as it is the case of vegetation, snow, ice, and sand, hence providing sensitivity to the internal structure of those media. The downside is of course that penetration entails that the received signal is determined by a variety of different scattering mechanisms, including surface scattering, volume scattering, scattering from internal layers, as well as multiple-scattering phenomena, which hinders physical interpretation based on 2D imaging. In this context, the use of SAR tomography has provided researchers with the possibility to directly see the structure of electromagnetic reflections in the interior of the illuminated media, resulting in a significant advancement of the understanding of the way Radar waves interact with natural media. Numerous excellent works on this topic are found in the recent literature, concerning the analysis of forested areas [

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16], snow [

17,

18,

19,

20], ice sheets and glaciers [

21,

22,

23,

24,

25,

26].

In a large part of the literature, 3D imaging is achieved by assuming mono-dimensional signal processing approaches following from models developed in the field of SAR Interferometry, where the vector formed by taking all pixels at the same range/azimuth location in multiple SAR images is transformed into a new vector of pixels representing the profile of the scene reflectivity along elevation. This class of approaches, which we will refer to throughout this paper as 1D TomoSAR processing, is strictly based on the assumption that the position of a target along elevation has a negligible impact on the range/azimuth position at which the same target appears in different SAR images. This assumption is only verified in the presence of very closely-spaced and parallel trajectories, which strongly limits the achievable vertical resolution. For this reason, 1D processing approaches are often implemented by resorting to model-based methods and/or super-resolution methods from spectral analysis. The case of urban scenarios is often treated by using processing methods for target detection and position estimation, under the assumption of a conveniently small number of targets displaced in the direction of elevation [

27,

28]. The case of natural scenarios is more problematic, since the objects of interests are assumed to be continuous media. In this case, super-resolution methods from spectral analysis can be used to provide super-resolution capabilities [

29,

30,

31], but the inversion problem is intrinsically under-determined, leading to poor radiometric accuracy. For this reason, the achievement of fine vertical resolution and radiometric accuracy for 3D imaging of natural scenarios is essentially related to the use of large 2D apertures formed by flying different trajectories. Still, the use of largely separated trajectories results in range migration as a function of target elevation, thus violating the assumption underpinning the use of 1D processing methods. In this case, 3D imaging can only be achieved by processing approaches that consider multiple range samples from each SAR image, which we will refer to as 2D TomoSAR processing. The situation becomes even more complicated in the presence of irregular trajectories with variable headings, in which case azimuth migration occurs as well. In this case, the one theoretically exact approach consists in going back to raw SAR data to resolve the targets by 3D back-projection [

32,

33,

34]. This approach, which we will refer to as 3D TomoSAR processing, is suitable for treating the case of arbitrary trajectories, but it suffers from two important drawbacks: (

i) the high computational burden, which is likely to exceed the capabilities of computers normally available to researchers; (

) the need for raw data, which are not available to final users in the vast majority of cases.

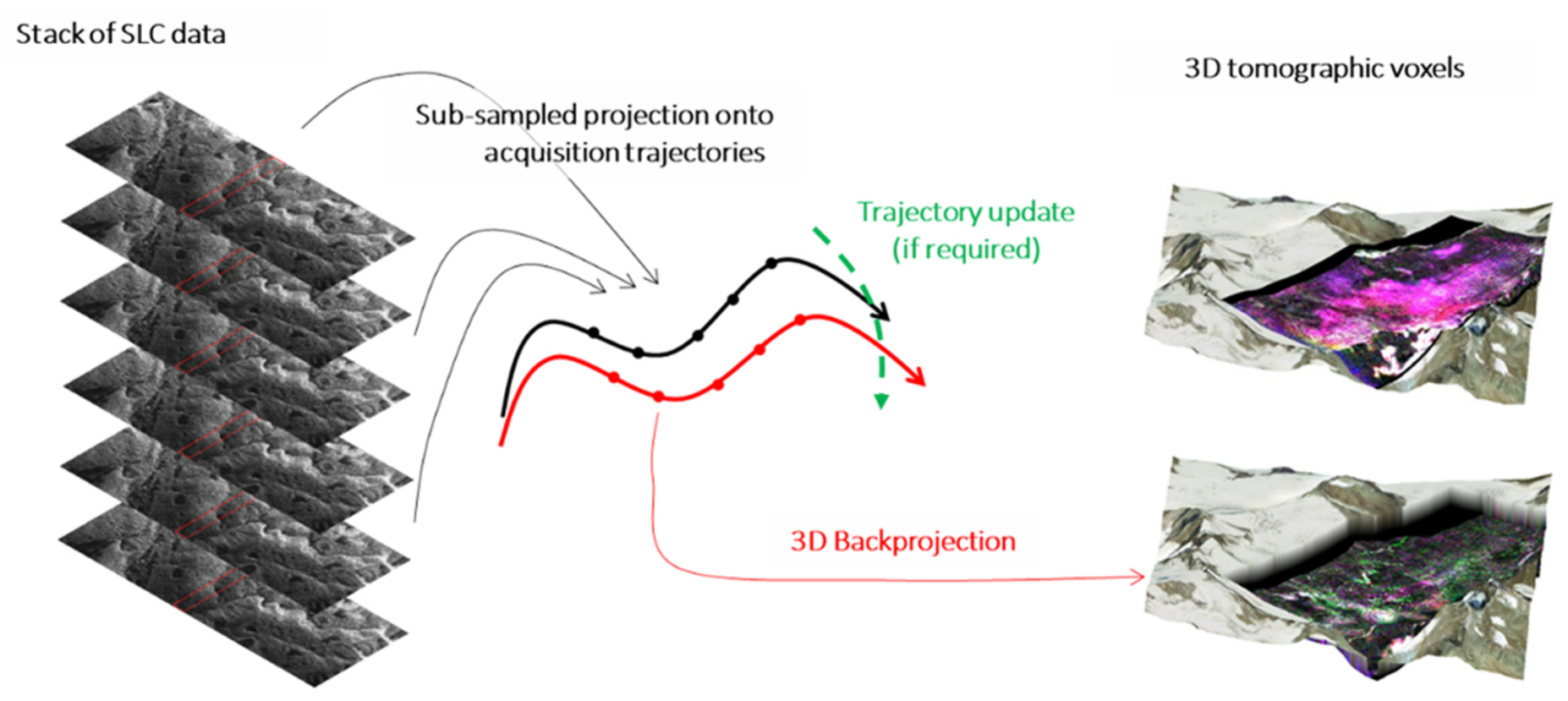

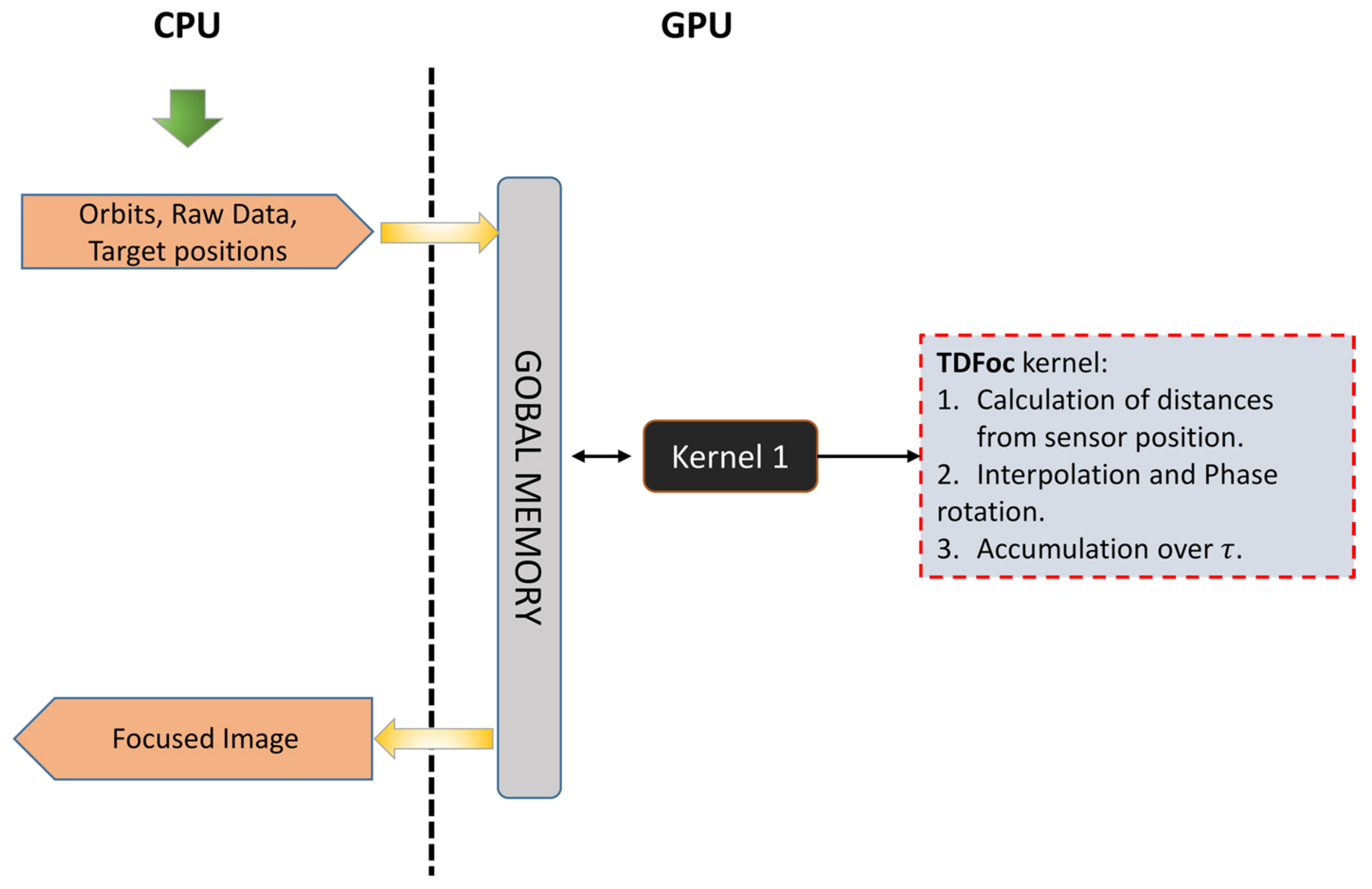

The aim of this paper is twofold. In the first place, we intend to provide an exhaustive discussion of the quality of 3D imagery achievable by 1D, 2D, or 3D TomoSAR processing methods. To do this, we will analyze in depth the conditions under which each approach can be applied, and cast precise requirements concerning acquisition geometry in relation with to vertical extent of the imaged scene. The case of 3D TomoSAR processing will then be further discussed, and a new processing method based on fast defocusing and refocusing will be proposed to achieve high-quality imaging while largely reducing the computational burden, and without having to process the original raw data. Furthermore, this new method will be demonstrated to be easily parallelized and implemented using GPU processing. The analysis will be supported by results from numerical simulations as well as from real airborne data from the ESA campaign AlpTomoSAR, for which we will show that the proposed 3D method is capable of providing very accurate results at a processing burden compatible with the capabilities of standard computers.

This paper is organized as follows:

Section 2 is devoted to illustrating the basic signal models of SAR and TomoSAR imaging, and to introducing the notation to be used throughout the paper. The 1D, 2D and 3D TomoSAR processing approaches are derived and discussed in

Section 3. The proposed 3D processing approach is presented and discussed in

Section 4.

Section 5 presents results from numerical simulations. The case of real data is considered in

Section 6, where the airborne data set acquired during the ESA campaign AlpTomoSAR is analyzed by 1D, 2D, and 3D methods. Finally, conclusions are drawn in

Section 7.

2. Signal Models and Notations

SAR data are multi-dimensional signals, obtained by operating a Radar sensor to transmit and receive short electromagnetic pulses as it is carried on-board a moving platform. In the remainder, we will denote raw range-compressed data by

, where

t is fast time (that is time with respect to the instant of transmission), and

is the slow time (or flight time). Neglecting amplitude terms related to antenna illumination, range compressed SAR data can be expressed mathematically as:

where

denotes the complex reflectivity of a target at

,

is the cardinal since function,

is the carrier wavelength.

denotes the distance between the sensor position at time

and the target at

.

Azimuth compression is carried out by projecting range-compressed data onto a 2D coordinate system, which can either be represented by range/azimuth coordinates

relative to each single trajectory, or by ground range and azimuth coordinates of a given surface, i.e.,

. Focused data are referred to as Single Look Complex (SLC) data, and can be obtained by assuming different processing methods, which might largely differ one from another concerning computational burden and accuracy. For the sake of generality, we will consider here Time Domain Back Projection (TDBP), which provides an exact approach even in the case of strongly nonlinear trajectories [

35,

36]. The back-projection operator is expressed as:

in the range/azimuth coordinate system, or

if focusing is performed with respect to a given surface

expressed in ground range/azimuth coordinates. In both cases, spatial resolution of SLC images is obtained as:

in the range direction, with c the speed of light and

B the transmitted bandwidth, and

in the azimuth direction, with

the length of synthetic aperture, and

r the closest-approach distance [

37].

2.1. The Interferometric SAR Model

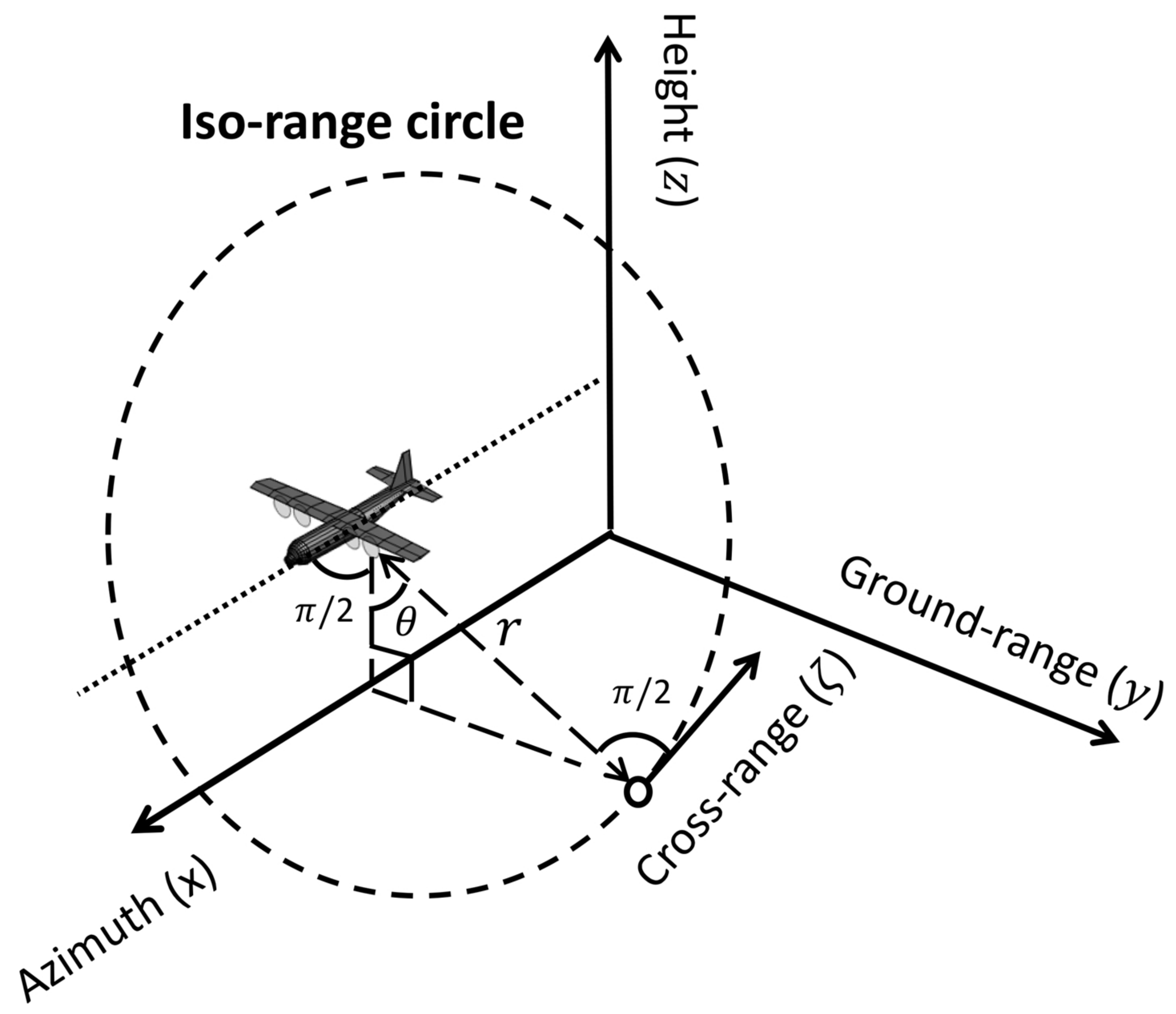

Under the assumption of a linear trajectory, the same raw data is generated by targets lying at different spatial coordinates. The geometrical locus defined by all these spatial coordinates is the iso-range circle orthogonal to the trajectory and centered on it, as represented in

Figure 1. Hence, under the assumption of a linear trajectory, SLC SAR images can be represented mathematically in a conveniently simple form as [

38,

39]:

where

is the closest approach to a target at

. The equation above is often expressed in a cylindrical coordinate system centered on sensor trajectory as:

where the integration is carried out along the iso-range circle spanned by the incidence angle (

).

In large part of the literature, the expression in Equation (

7) is further simplified by approximating the iso-range circle to a segment along its tangent to at the position of the target (see

Figure 1), and expressed as:

where the linear coordinate

is generally referred to as cross-range, or elevation, and the integration with respect to

is intended to be limited by the vertical extent of the targets.

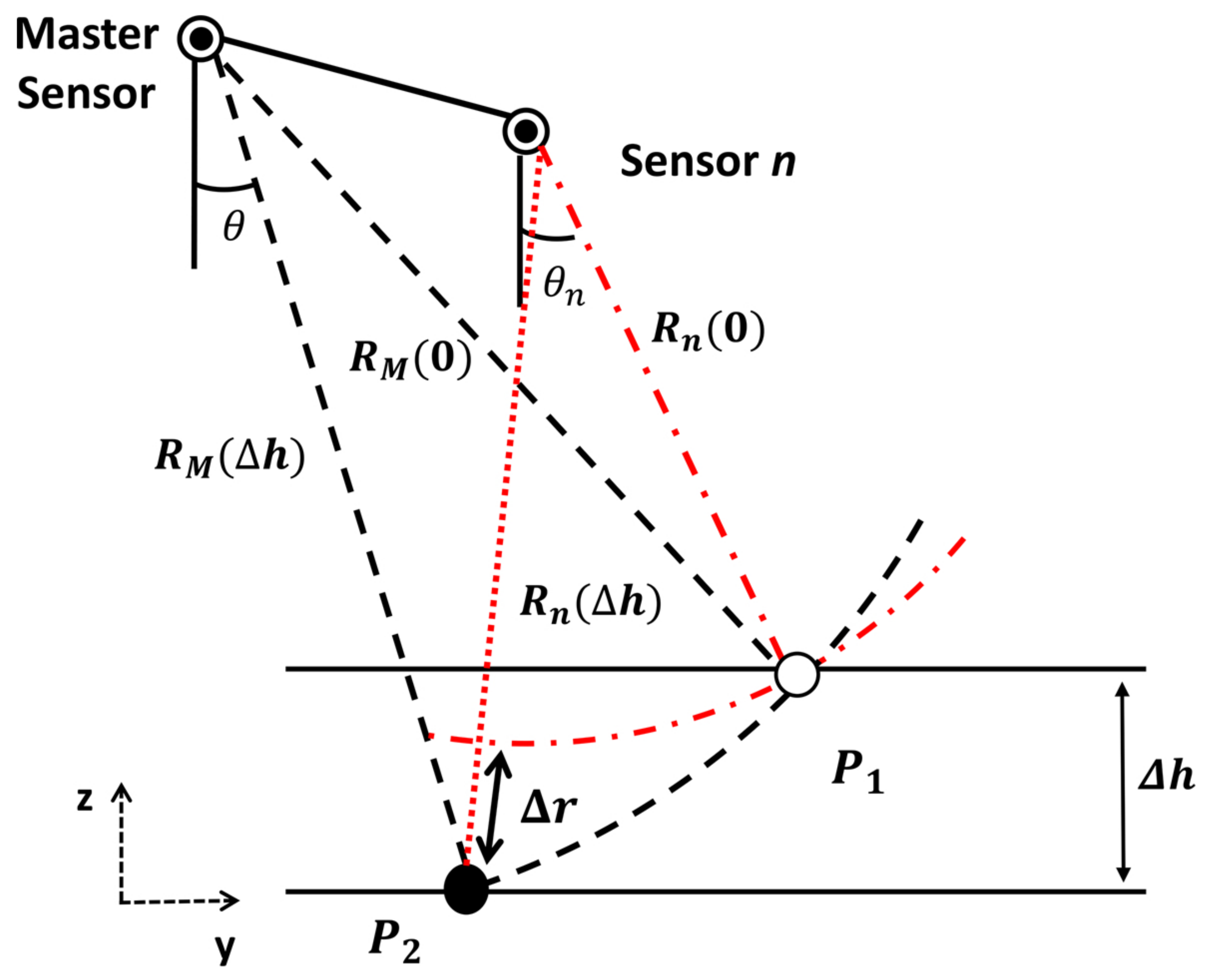

An underlying assumption of conventional SAR Interferometry (InSAR) theory is that SLC images from different trajectories can be properly co-registered onto a common reference range/azimuth coordinate systems, and subsequently expressed mathematically using a common range/azimuth/elevation coordinate system, while the role of trajectory diversity is only accounted for in the expression of phase terms. Mathematically, the InSAR model is expressed as:

where

represents the SLC image acquired along the

nth trajectory, and

represents the closest-approach distance to a target at

. Note that this expression implies the choice of a reference image, commonly referred to as

in the InSAR jargon, used for defining the reference system, so that

by definition.

This expression can be further approximated by expressing distances with respect to a reference surface

, yielding the well-known InSAR model:

where

is the elevation wavenumber, defined as:

with

the incidence angle with respect to the

nth trajectory, and

the incidence angle with respect to the Master trajectory.

The InSAR model in Equation (

10) provides the basis to understand formally the underlying principles of tomographic imaging. Noting that the one term associated with the use of different trajectories is associated with elevation, the integral in Equation (

10) can be solved with respect to range and azimuth, yielding:

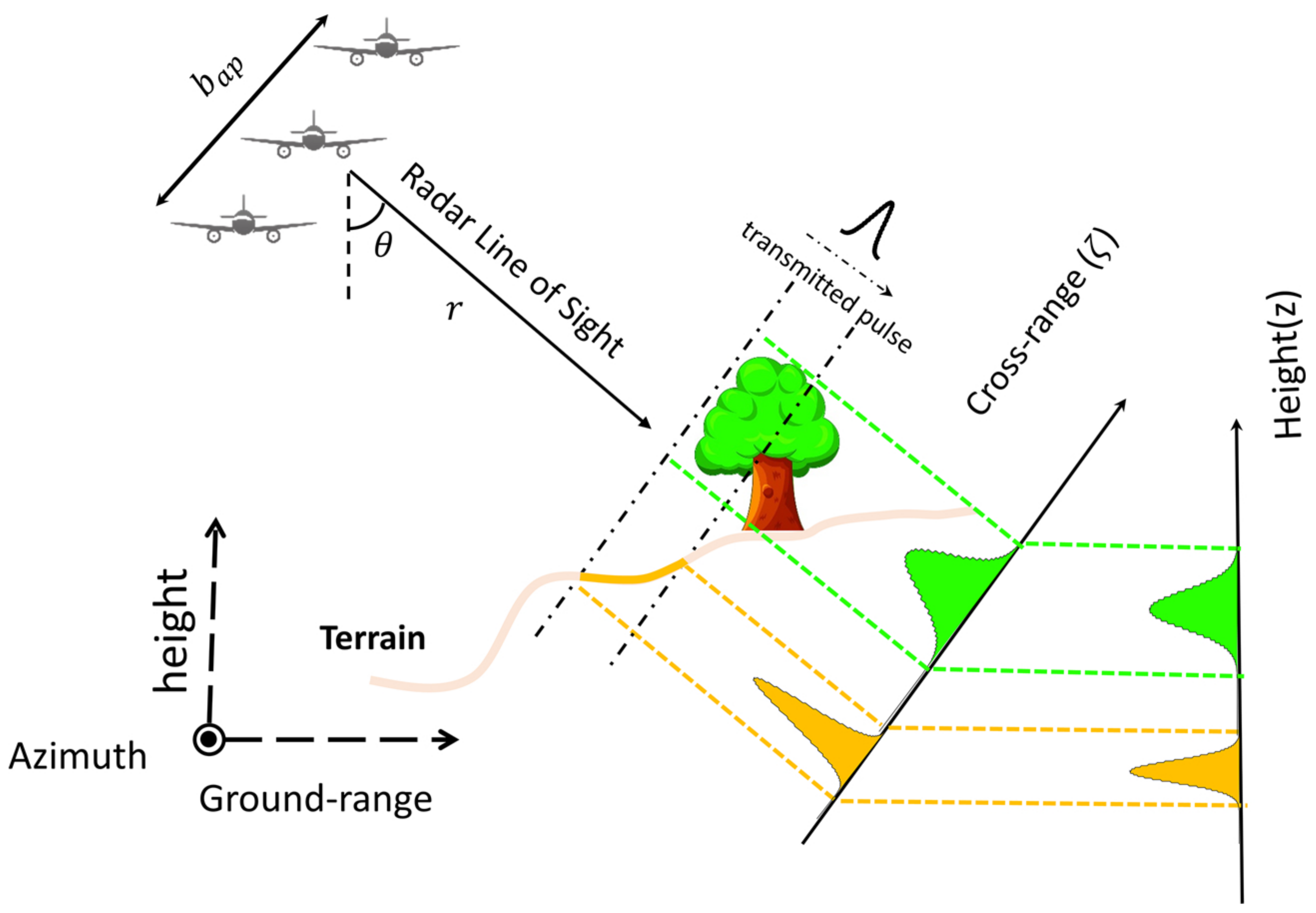

where

represents the projection of target reflectivity along elevation. A pictorial representation of this projection is given in

Figure 2. As highlighted in many papers [

1,

38,

40], this equation can be thought of as the key equation for SAR Tomography. Indeed, the integral in Equation (

12) is easily recognized as the Fourier Transform of (the projection of) target reflectivity along elevation, which can therefore be retrieved in a straightforward fashion simple by removing the phase terms outside the integral and by taking the anti-Fourier transform of SLC SAR data at any fixed range/azimuth location. For completeness, we recall that Equation (

12) is often expressed by representing the elevation coordinate as a function of height, according to the relationship:

, as shown in

Figure 2. Up to amplitude terms, this leads to

where integration is performed along the vertical extent of the target and

is called the phase-to-height conversion factor:

The achievable vertical resolution is also easily derived from standard Fourier theory, and is:

5. Numerical Simulations

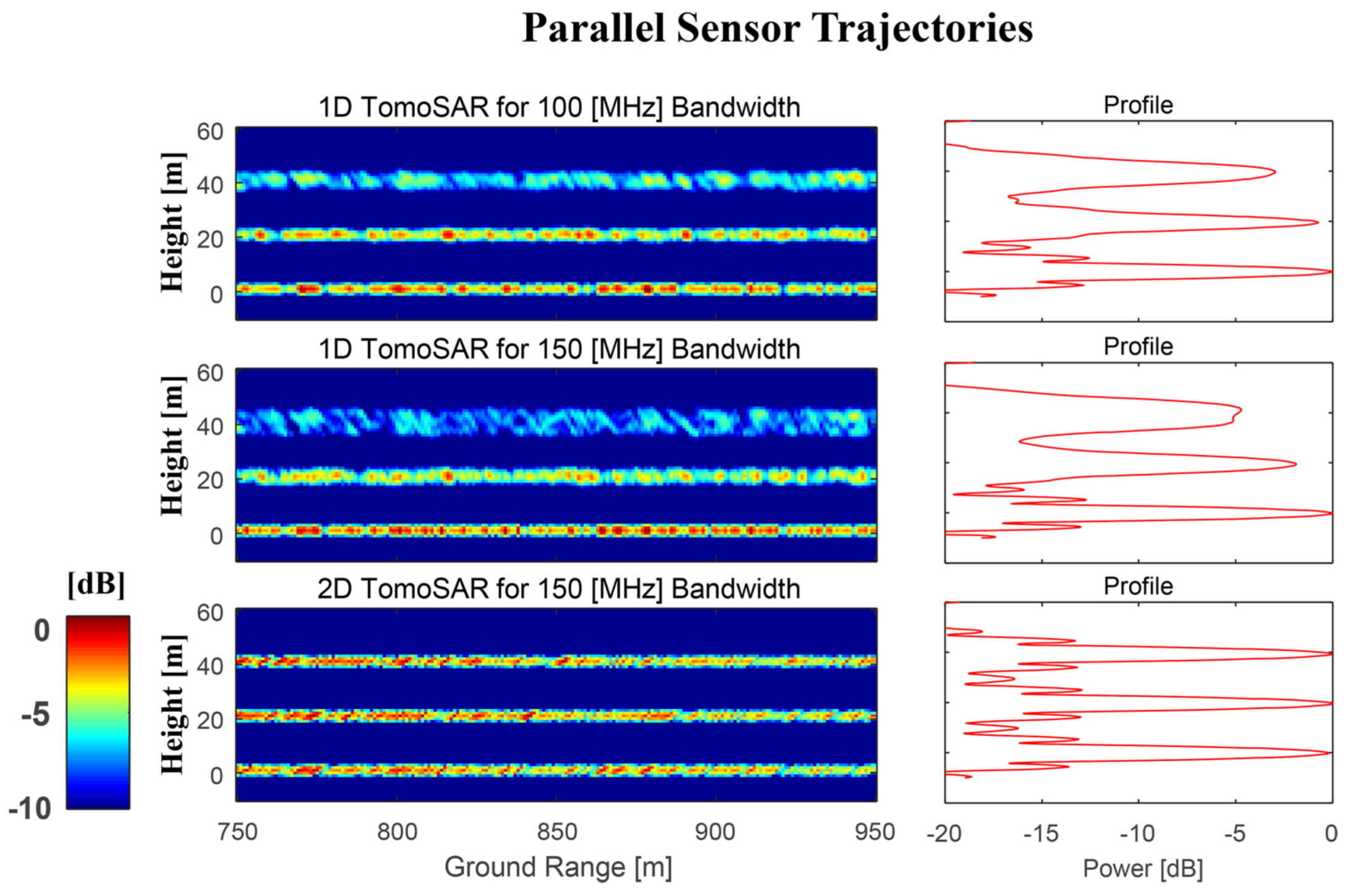

The simulated data were used to give a stepwise analysis of the advantages and limitations of 1D, 2D, and 3D TomoSAR approaches. Two kinds of simulated experiments are carried out to evaluate the tomographic performance of each method regarding the sensor trajectory stability, spatial resolutions, and target thickness. The first test assumed parallel trajectories and explored the tomographic performance differences between 1D and 2D TomoSAR processing with respect to low and high resolution configurations. The second test intended to compare each approach under high resolution systems with nonparallel trajectories. The relevant simulated parameters are summarized in

Table 2. The simulated scene includes distributed targets drawn from a circular normal complex distribution, and consists of three horizontal layers at 0, 20, and 40 m above a flat topography, which is assumed as the reference surface for the generation of SLC data.

In the first test, raw data were simulated under parallel sensor trajectories with 100 and 150 MHz bandwidths, respectively. One-dimension TomoSAR approaches retrieved the tomographic profiles from SAR data in both bandwidth configurations. In contrast, 2D TomoSAR methods only reconstruct the higher resolution tomographic profiles (see

Figure 8).

Results from the first test (parallel trajectories) are shown in

Figure 8. The panel in the first two rows were obtained by 1D TomoSAR processing, assuming a bandwidth 100 and 150 MHz. It is immediate to see that the surface at 0 m is correctly focused in all cases. This is because the 0 m surface has been taken as the reference in the generation of SLC data, hence all SLC are perfectly co-registered at this surface. This is not the case that surfaces at 20 m and 40 m, for which residual range migration leads to defocusing. As expected, the impact on 1D TomoSAR processing is more severe in the case of 150 MHz data, for which range migration is larger as compared to range resolution, leading to power losses of around 5 dB at the 40 m surface.

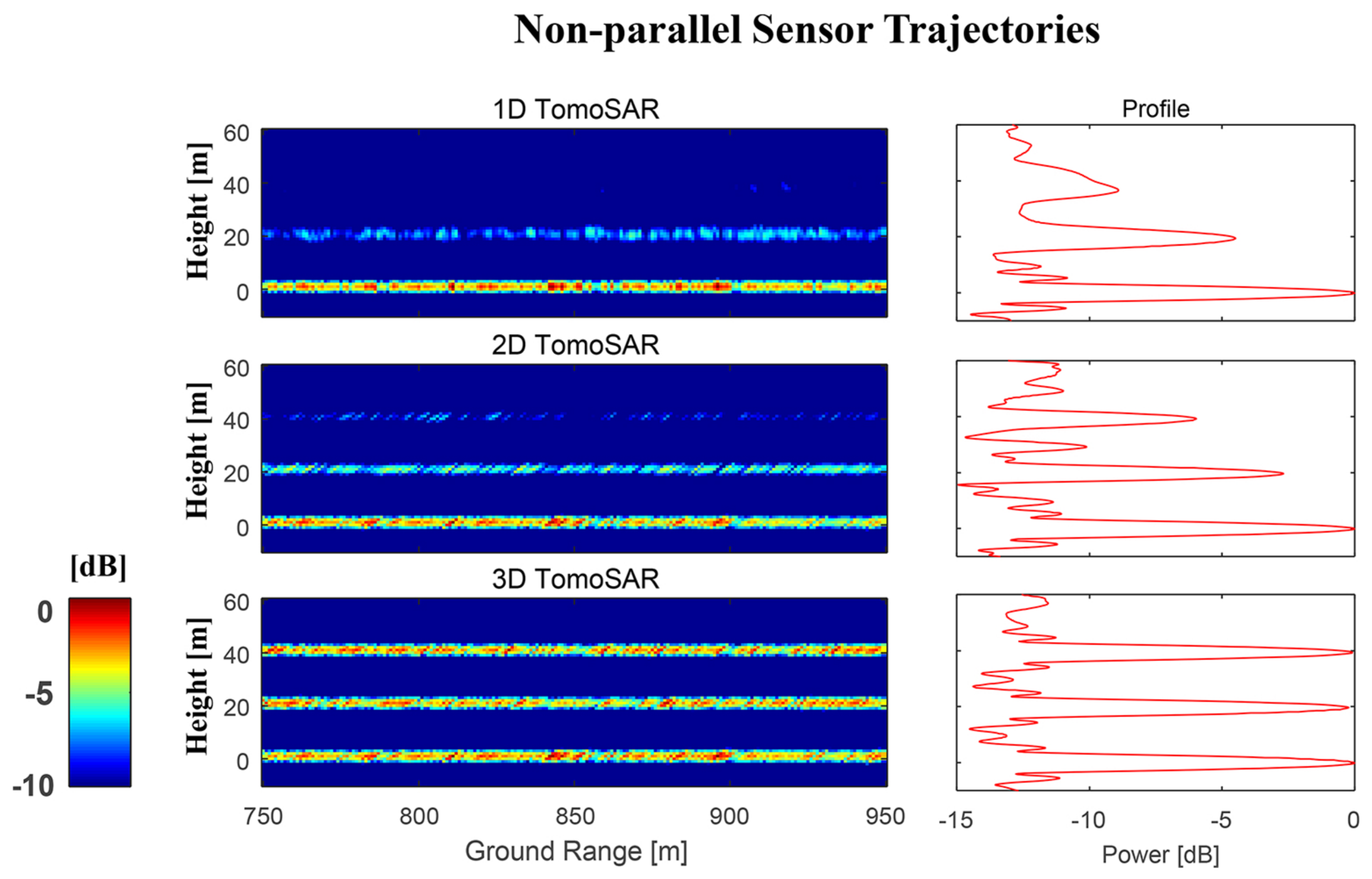

In the second test, the raw data were generated assuming a 150 MHz bandwidth and nonparallel trajectories. Trajectories were simulated assuming using different combinations of yaw and pitch angles, drawn from a random normal distribution with a standard deviation of 1.5 deg. Results are shown in

Figure 9, where the top, middle, and bottom rows refer to the cases of 1D, 2D, and 3D TomoSAR processing. In this case, the presence of residual range and azimuth migration leads to strong defocusing when using 1D and 2D TomoSAR processing, resulting in power losses of 10 dB at the 40 m surface.

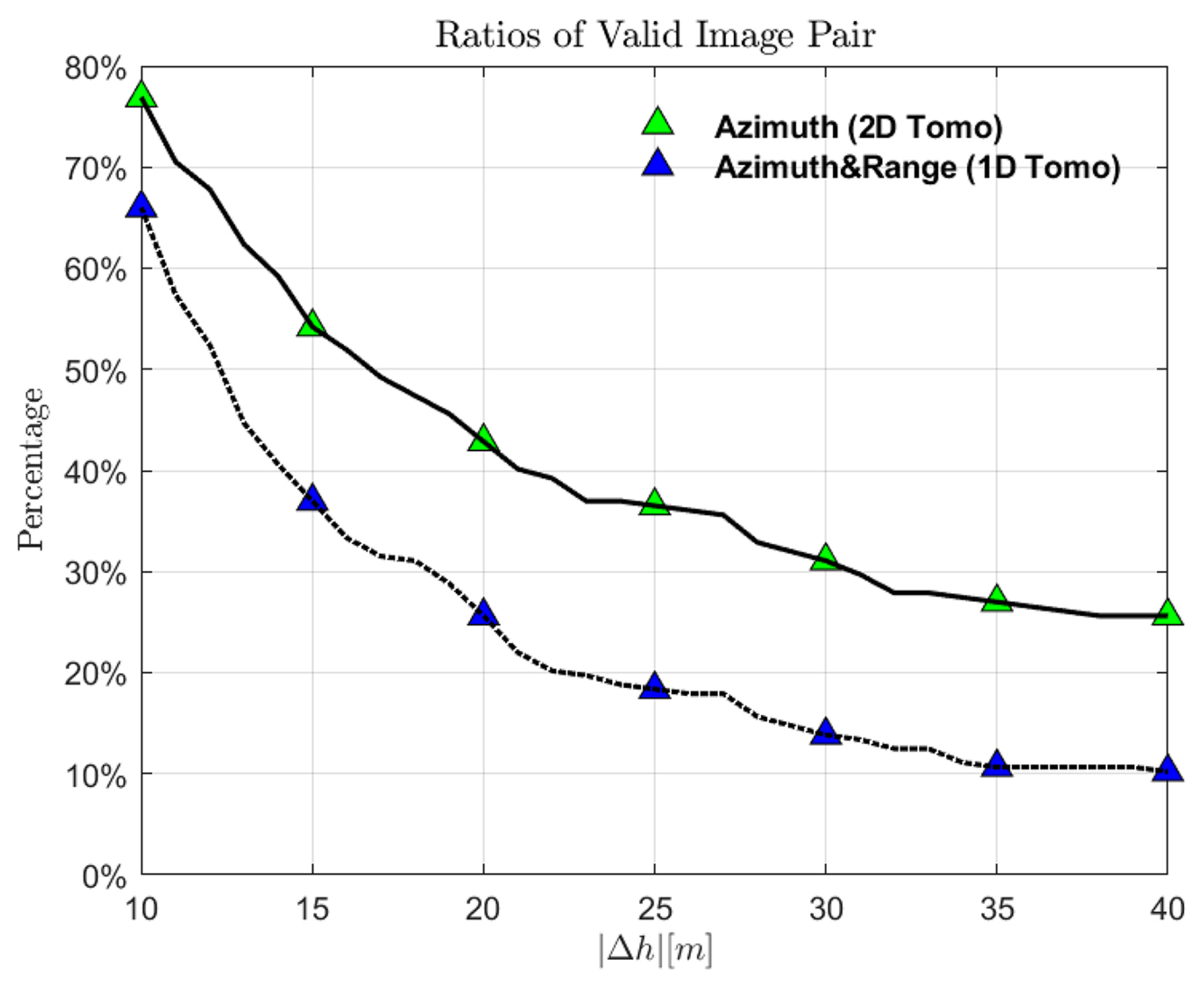

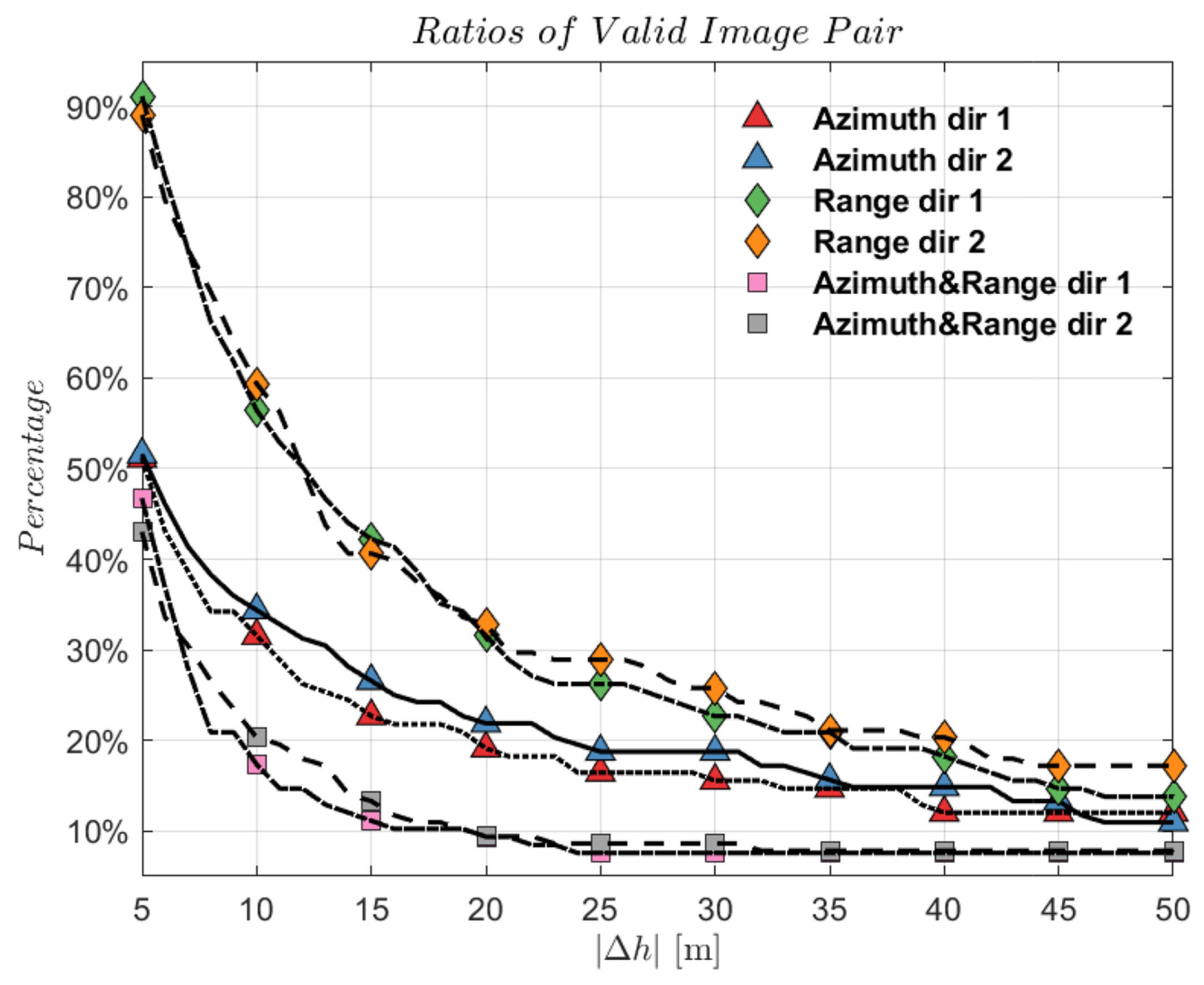

To gain more insights, we plot in

Figure 10 the percentage of valid image pairs as a function of the vertical extent of the scene. An image pair is defined “valid” if range and azimuth residual migration are less than half a resolution cell, that is if the conditions cast in Equations (

20) and (

24) hold. In the considered example, the percentage of valid pairs is

at 20 m and

at 40 m, which causes severe defocusing for height locations far from the reference surface. The use of 2D TomoSAR partly improves the percentage of valid pairs to

at 20 m and

at 40 m by compensating for residual range migration. Still, imaging quality cannot be fully recovered, as residual azimuth migration remains uncompensated.

6. Experiments on AlpTomoSAR Data

The ESA campaign AlpTomoSAR was flown by MetaSensing in 2014 at the Mittelbergferner glacier, in the Austrian study [

23], with the aim of demonstrating the use of SAR tomography for studying the internal structure of glaciers. SAR data were acquired at a central frequency of 1275 MHz, with a pulse bandwidth of 150 MHz. The sensor was flown along roughly 1300 m above the surface along an oval race-track configuration, to provide two independent data-sets from two opposite views. The flight lines were designed to have 10 parallel, closely spaced tracks plus other more largely spaced tracks to improve resolution. Flight trajectories have been perturbed by turbulent phenomena in proximity of the peaks, resulting in a dispersion of about 13 and 1m rms in the horizontal and vertical direction, respectively. In both views, the total baseline aperture resulted in a vertical resolution of approximately 2 m in free-space. SLC data have been focused through time domain back-projection onto a reference surface derived from a Lidar survey acquired in 2010.

Flight trajectories and the reference surface are included in the final data delivery along with SLC data. AlpTomoSAR data were processed in [

38] using the Phase Center Double Localization algorithm to produce accurate information about flight trajectories, since the accuracy of original navigational data was found not to be sufficient for tomographic imaging. In this study, tomographic focusing is carried out using the same trajectories produced in [

38].

Residual range and azimuth migrations are analyzed in

Figure 11. For both views, it is observed that residual azimuth migrations are the main factor that limits the number of valid pairs in 1D and 2D TomoSAR processing, which gets as low as

at 50 m from the reference surface. As shown in [

23], AlpTomoSAR data provide sensitivity to the whole ice body, including the ice surface and the bedrock at approximately 60 m below. This value has to be scaled for the ice refractivity index (about 1.8), yielding an equivalent vertical extent over 100 m in free-space. Accordingly, a height offset of at least 50 m with respect to the reference surface has to be accounted for, even considering the best case where all SLC data are co-registered in the middle of the glacier vertical extent.

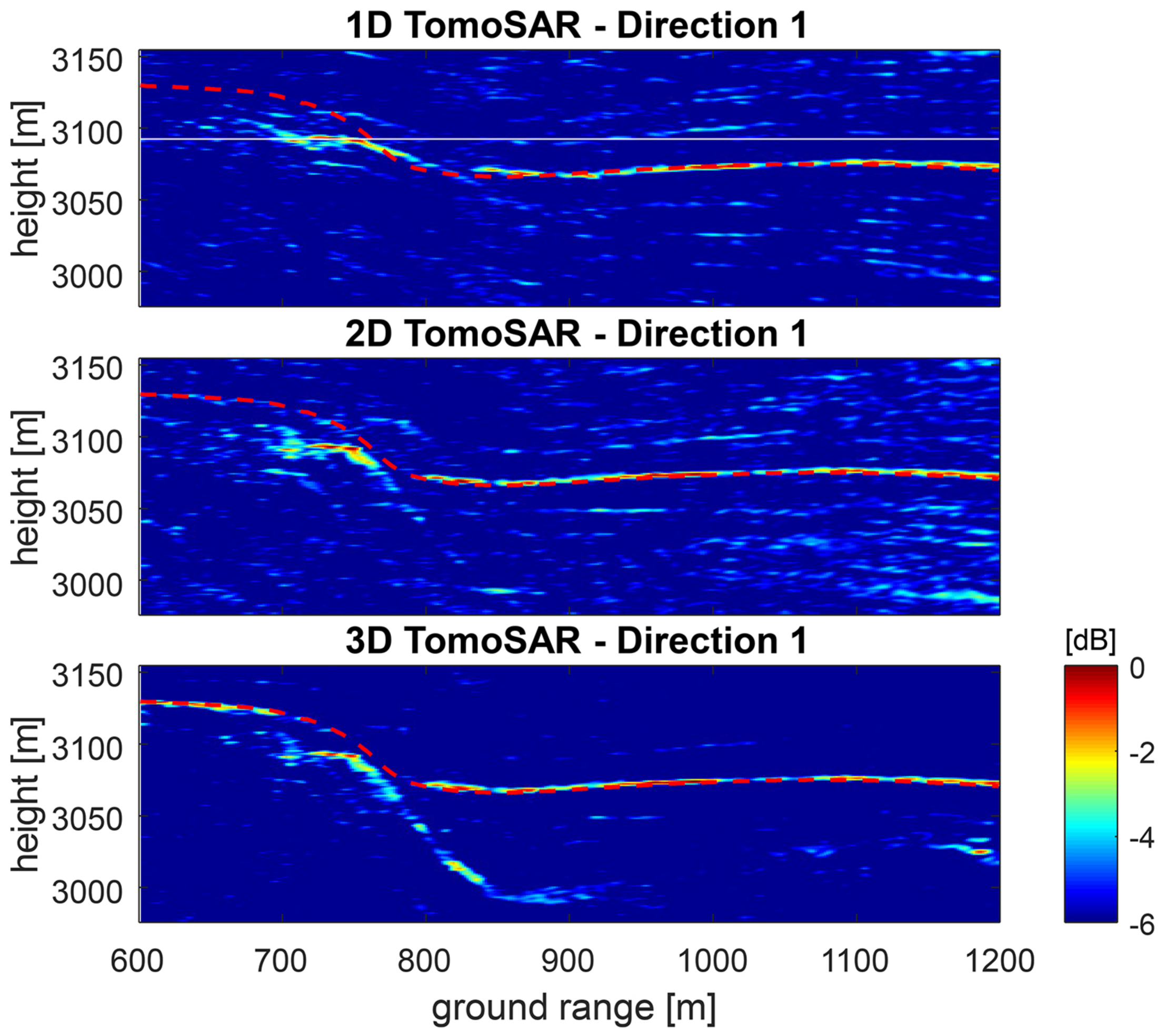

Tomographic vertical sections generated using 1D, 2D, and 3D TomoSAR processing are displayed in

Figure 12. For each panel, the overlaid red line indicates ice surface topography from the Lidar DTM. Similar to the case of numerical simulations, by comparing the three panels, it is immediate to see that only using 3D TomoSAR processing, it is possible to image subsurface layers. One-dimension TomoSAR processing is clearly seriously affected by both azimuth and range migration, so that only small neighborhood about the reference surface can be recovered. Two-dimension TomoSAR processing is only partly capable of detecting the presence of internal structures, but imaging quality is strongly degraded as a result of uncompensated residual azimuth migration.

Sub-Sampled Defocusing/Refocusing: Imaging Quality and Efficiency

In this section, we analyze the quality of 3D Tomographic processing as implemented using different sub-sampling factors. Considering all passes, the maximum residual azimuth migration turned out to be

= 15 m at a height of 100 m from the reference topography, which sets the extent of the azimuth extent to be defocused for generating a vertical section at any single azimuth location (

= 0 m). Following the discussion in

Section 4.2, such a value corresponds to a maximum PRI of approximately

s, where we conventionally assumed to simulate platform motion at 1 m/s (platform velocity being irrelevant since temporal decorrelation is not considered).

To provide a term of reference against which to evaluate imaging quality and computational time, tomographic processing is also implemented through a processing approach hereafter referred to as Global Algorithm, which consists of defocusing SLC data over a large azimuth interval at once, and by subsequently generating tomographic section at all azimuth locations. In this case, raw data are generated by sampling the synthetic aperture at a spatial interval of two wavelengths (0.47 m), which corresponds to approximately half the azimuth resolution of SLC data. Assuming a platform velocity of 1 m/s, the resulting PRI is approximately s. Accordingly, the sub-sampled algorithm allows for a maximum PRI relaxation factor up to 11 with respect to the global algorithm.

Imaging quality is addressed in

Figure 13, which displays the vertical sections obtained by the global algorithm (first panel from top), and by the sub-sampled one, assuming a PRI relaxation factor of 4, 8, 16 (second to last panels from top). Consistently with our analysis in

Section 4, we observe no degradation with respect to the tomographic section produced by the global algorithm in the top panel as long as the relaxation factor is lower than 11, that is when raw data are generated at a PRI faster than 5.4 s.

Table 3 reports the total computational times required for the generation of a tomographic cube of size: 300 m in azimuth, 1000 m in ground range, 180 m in height. Three algorithms are compared; namely, the global algorithm (first row), the sub-sampled algorithm as implemented by subsequently focusing single azimuth lines (

= 0 m), and the sub-sampled algorithms as implemented by subsequently focusing intervals of 15 m in azimuth (

= 7.5 m), resulting in a maximum PRI relaxation factor equal to seven.

Here, it is easy to see that the use of the sub-sampled algorithm provides a substantial benefit, resulting in a net improvement of up to 9.5 with respect to the global algorithm.

Total computational times required by the GPU implementation are shown in

Table 4, where we compare the global algorithm against the sub-sampled algorithm as implemented by subsequently focusing intervals of 15 m in azimuth.

The experiments were carried out on a platform with an Intel(R) Core i7-8700K CPU and an Nvidia Titan V GPU.

Table 5 shows the specifications of the GPUs used in our experiments. The driver mode of GPUs was all set as Tesla Compute Cluster (TCC) mode for a better performance. The source code was compiled with -O2 optimization under the Microsoft Visual Studio 2017 and CUDA Toolkit 10.2.

The GPU implementation entails a dramatic improvement, allowing the generation of a large tomographic cube in few seconds. Also in this case, though, use of the sub-sampled algorithm allows a significant cost reduction with respect to the global one. Finally, it is worth noting that the global algorithm could not be used to generate tomographic cubes with an azimuth extent larger than 2000 m, due to saturation of on-board memory. This problem does not affect the sub-sampled algorithm, for which memory occupation only depends on the extent of the azimuth interval to be defocused.

Furthermore,

Table 6 shows the processing time required by 1D, 2D tomographic approaches on CPU. It follows that both approaches have such low computational burdens that only tens of seconds are needed to generate the 3D cube from SLC images. It also indicates that our proposed 3D full processing implementation has effectively reduced the computational time into the same level as the simplified tomographic algorithms.

7. Conclusions

This paper was intended to shed light on which processing strategies are suitable for the correct generation of high-resolution and accurate tomographic products. We have shown that the popular vector-to-vector 1D processing approach, largely assumed in the literature and declared in many different forms using spectral estimation techniques, is only applicable for cases where residual range migration does not exceed range resolution, that is the case where data are characterized by coarse vertical resolution. Accurate processing of high-resolution tomographic data can be carried out by assuming a 2D processing approach, where bi-dimensional back-projection is carried out at each azimuth location. Still, this approach can only be applied in the case of approximately parallel trajectories, for which residual azimuth migration does not exceed azimuth resolution. The assumption of parallel trajectories is, in general, well-justified whenever data acquisition is carried out using very stable aircrafts under non-turbulent conditions. This is, however, not always the case, as the AlpTomoSAR campaign demonstrates. For such cases, tomographic processing should then be implemented by focusing the raw data in the three-dimensional space by 3D back-projection. This processing option clearly has two important drawbacks, represented by the enormous demands in terms of computational costs (processing time and memory allocation) and the need to access raw data, which are usually not provided as part of standard data delivery. Both these topics were addressed in the second part of this paper, where we proposed a processing algorithm based on sub-sampled defocusing and refocusing, consisting of the generation of sub-sampled raw data from available SLC data, to be focused afterward in the three-dimensional space at a largely reduced computational cost. The proposed algorithm was applied to process data from AlpTomoSAR, and demonstrated to produce accurate results, with hardly distinguishable differences with respect to reference data produced without applying any sub-sampled factor. Finally, the proposed algorithm is also easily implemented using GPU processing, which grants a dramatic reduction in computational time. Accordingly, we deem the present work fully demonstrates that the quality of a tomographic airborne survey is not limited in any sense by platform stability during the acquisition, clearly as long as tomographic processing is correctly implemented by correctly accounting for the sensor trajectories. With regard to the last point, we remark the importance that any information used to produce SLC data is made available to final users, including, in particular, sensor trajectories and the reference surface elevation map used for focusing or co-registering SLC data.