A Cross-Resolution, Spatiotemporal Geostatistical Fusion Model for Combining Satellite Image Time-Series of Different Spatial and Temporal Resolutions

Abstract

1. Introduction

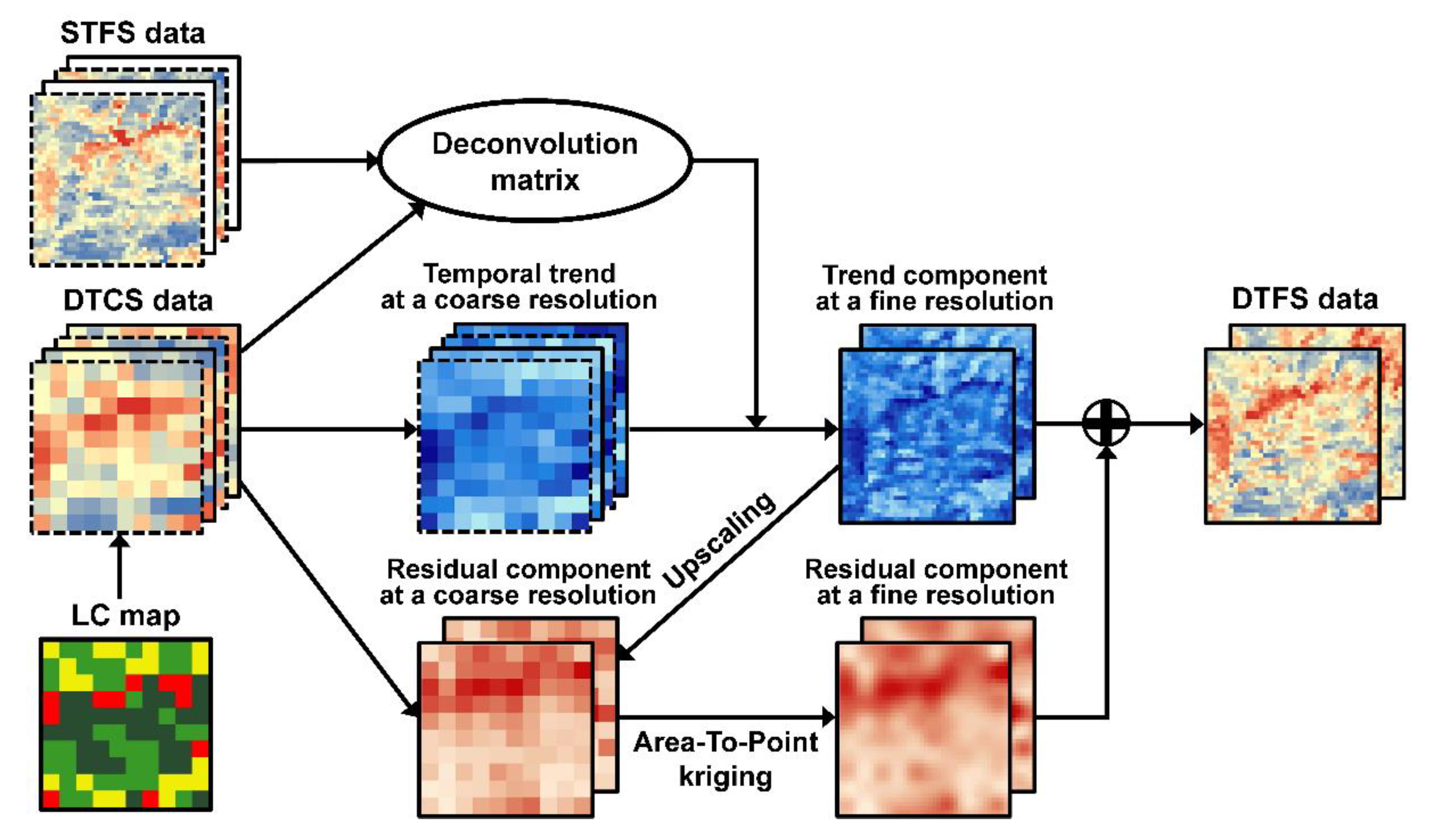

2. Methodology

2.1. Generic Formulation

2.2. Quantification of Temporal Trends at Coarse Spatial Resolution

2.3. Estimation of Temporal Trends at a Fine Spatial Resolution

2.4. Estimation of Residuals at a Fine Spatial Resolution

3. Experimental Design

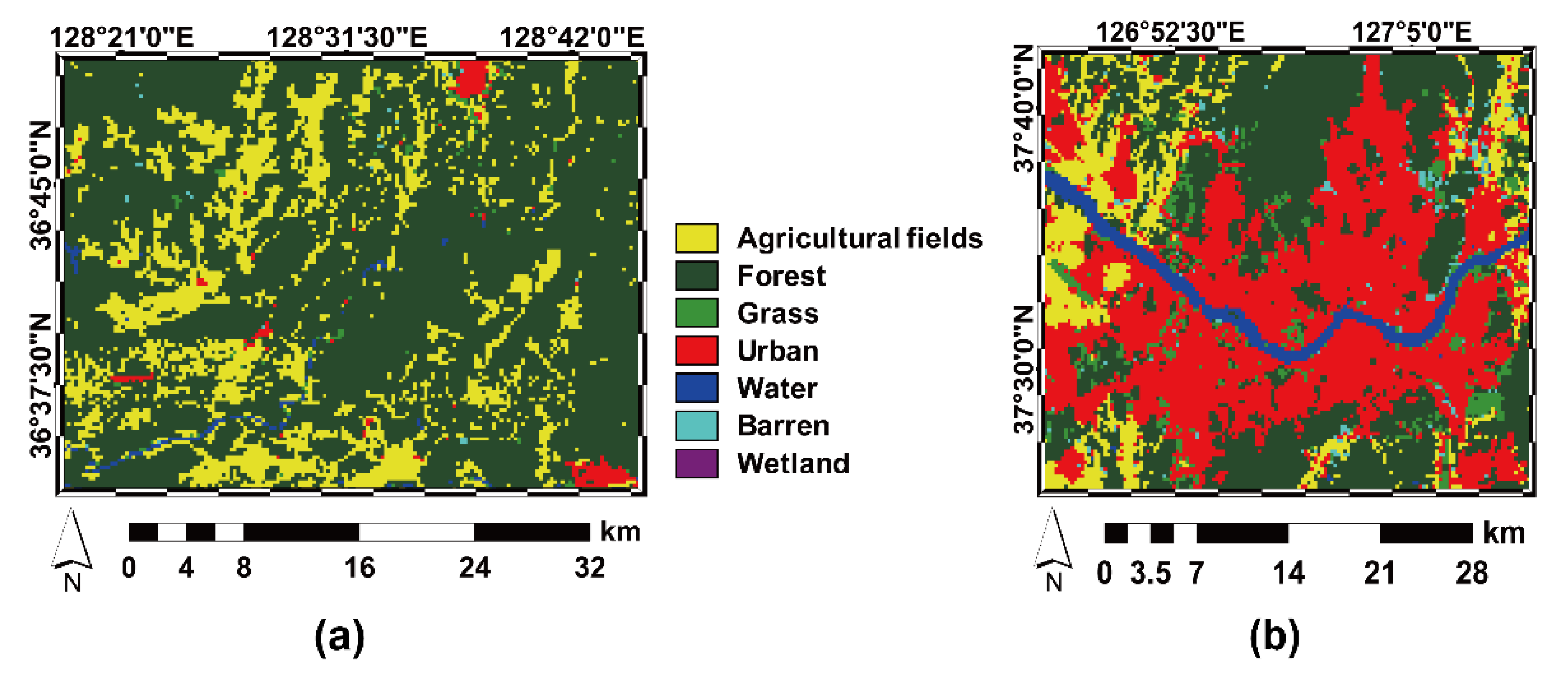

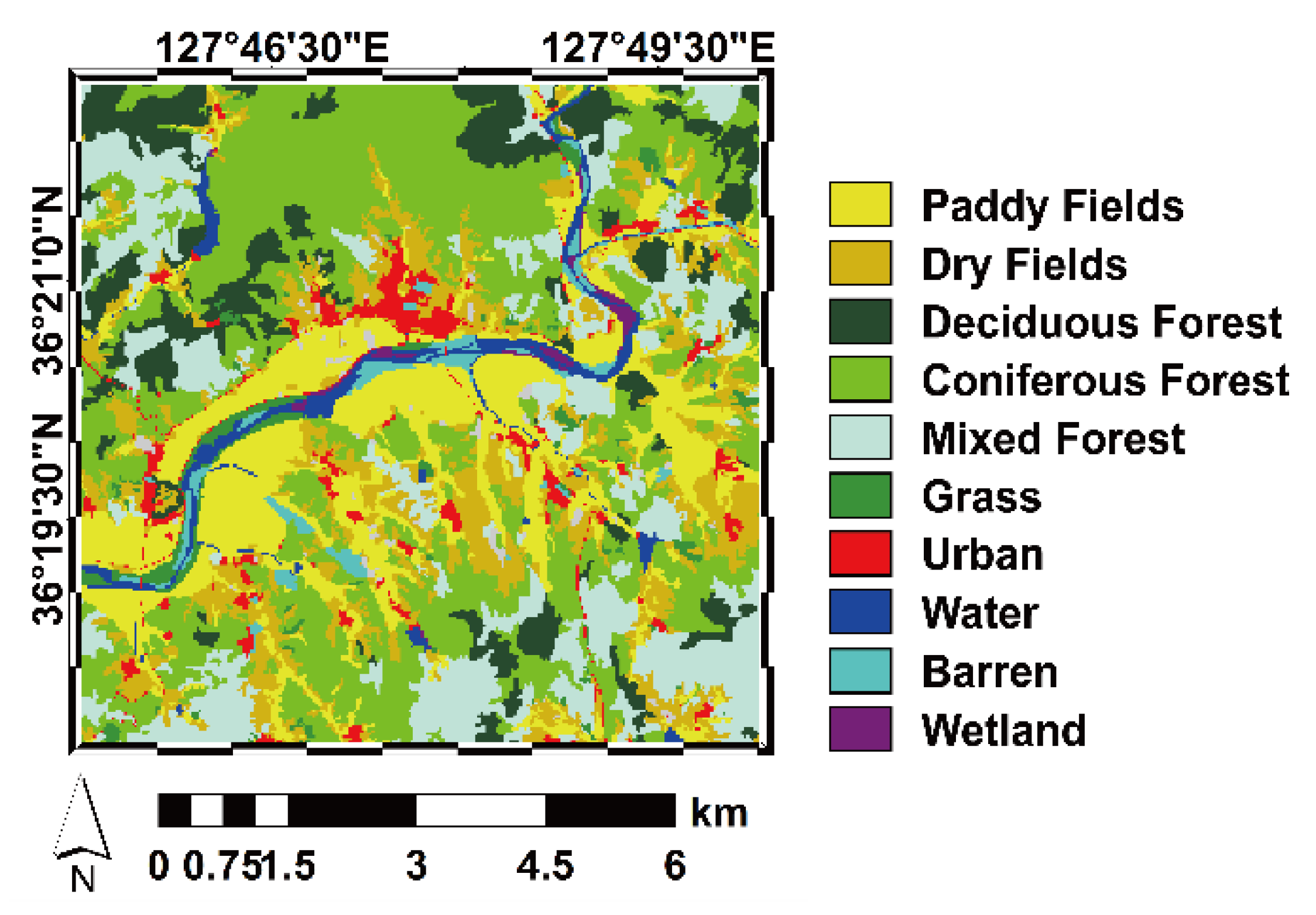

3.1. Study Area and Dataset

3.1.1. Experiments Using Spatially Degraded Datasets

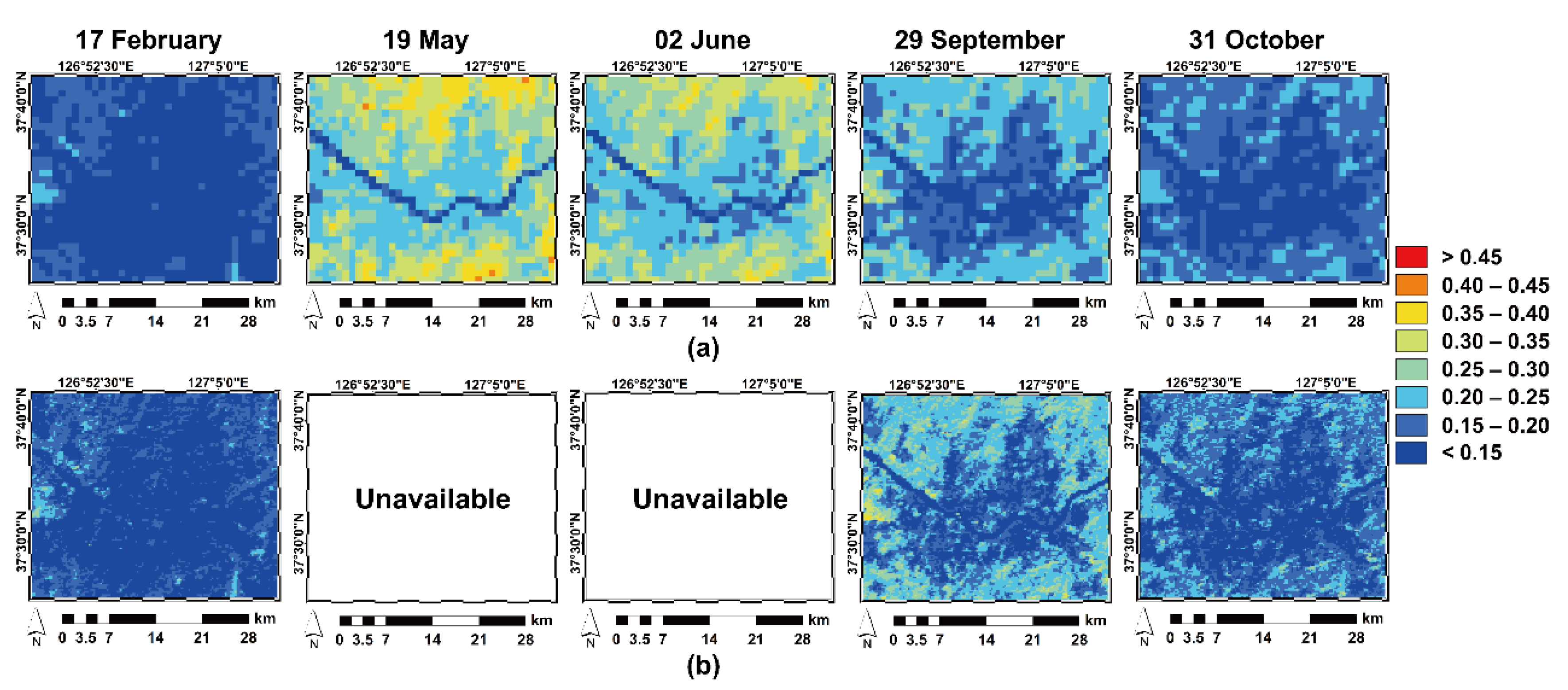

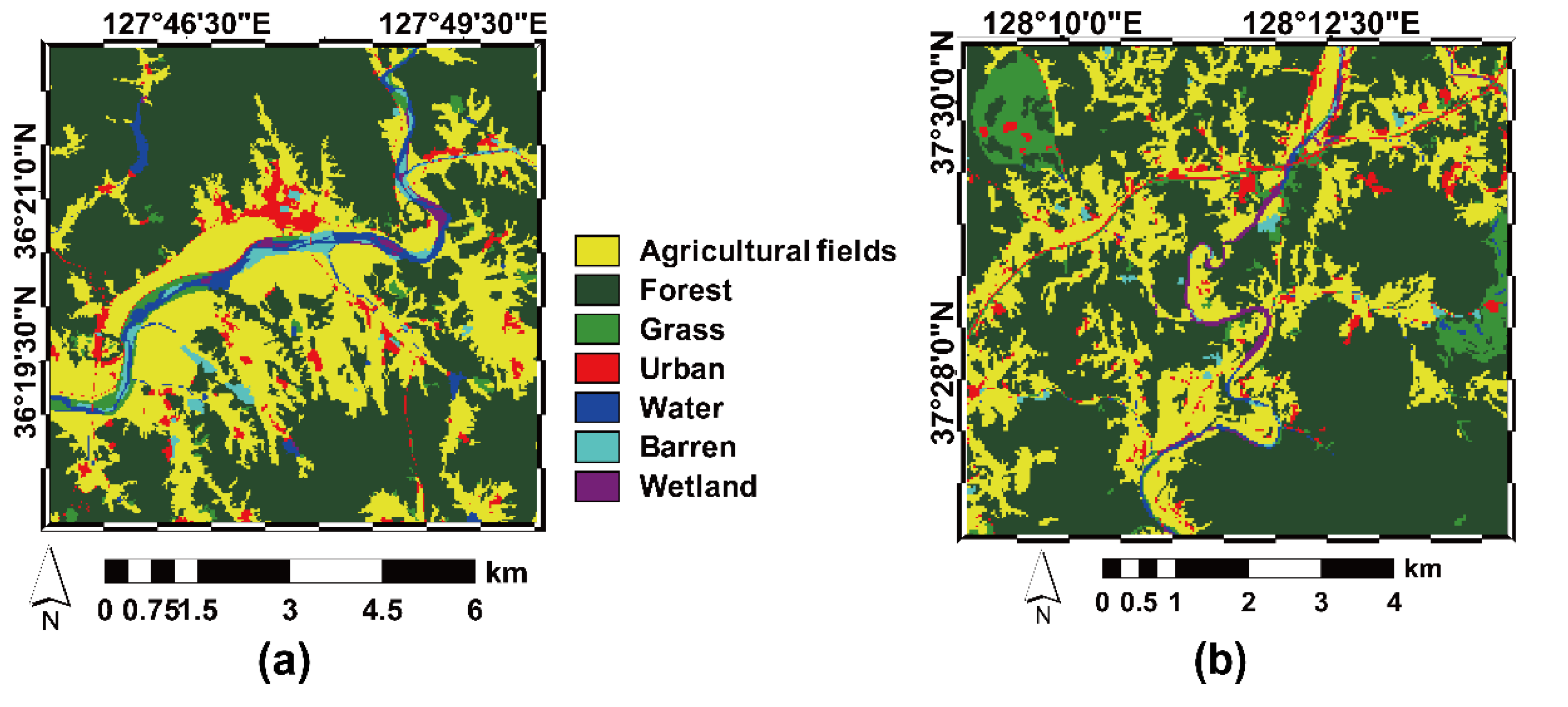

3.1.2. Experiments Using Real Satellite Images

3.2. Evaluation Method

4. Results

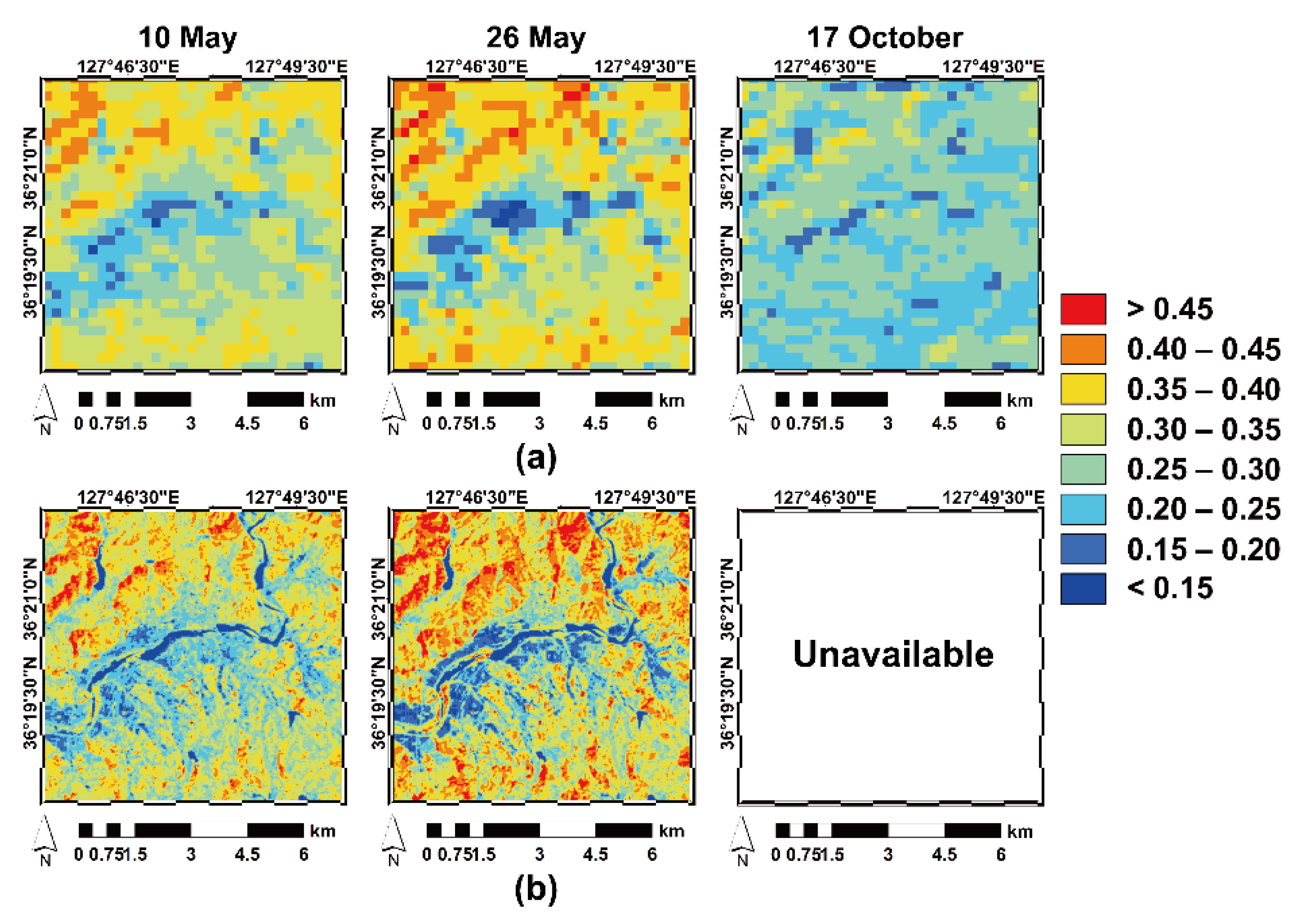

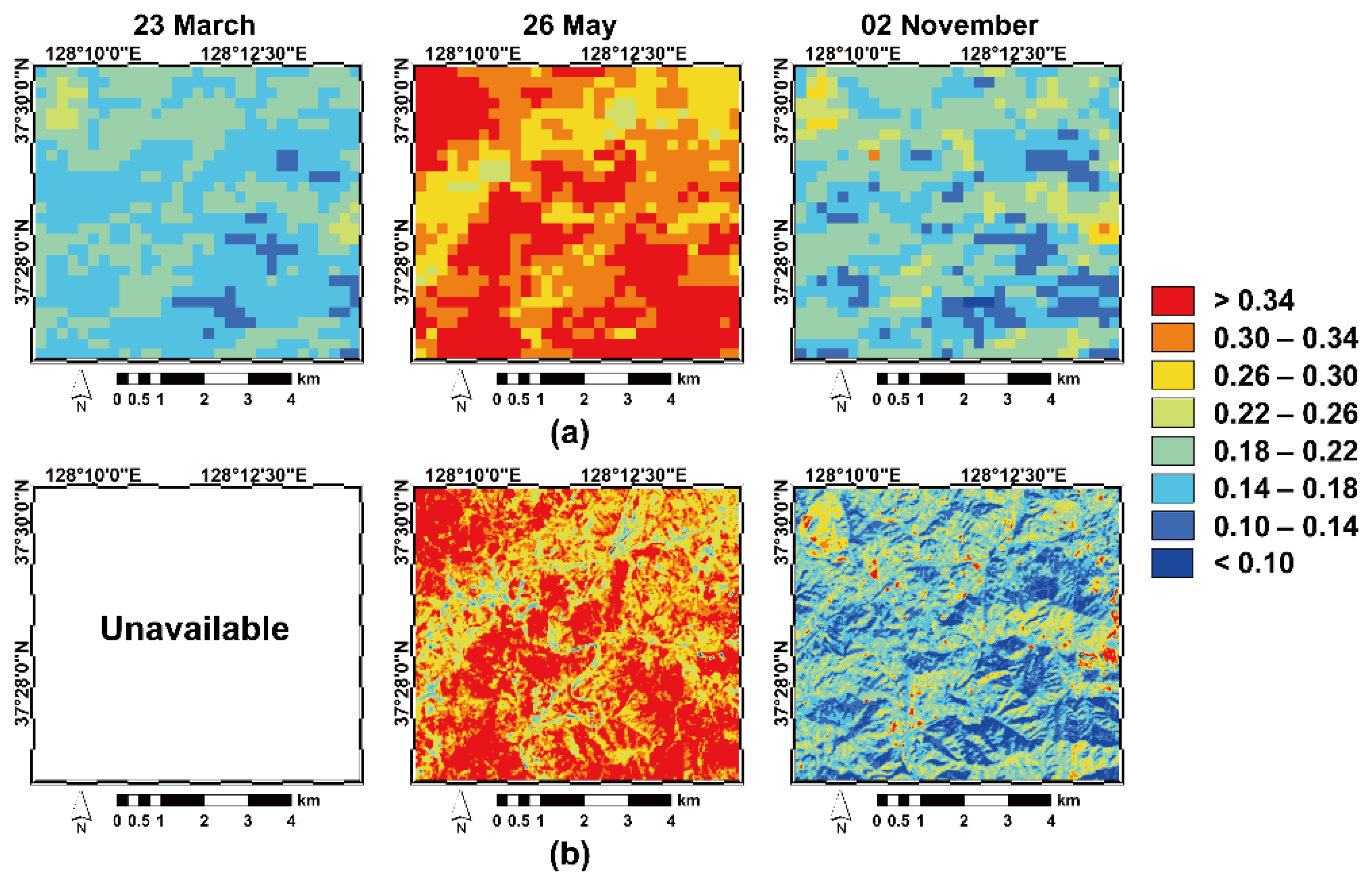

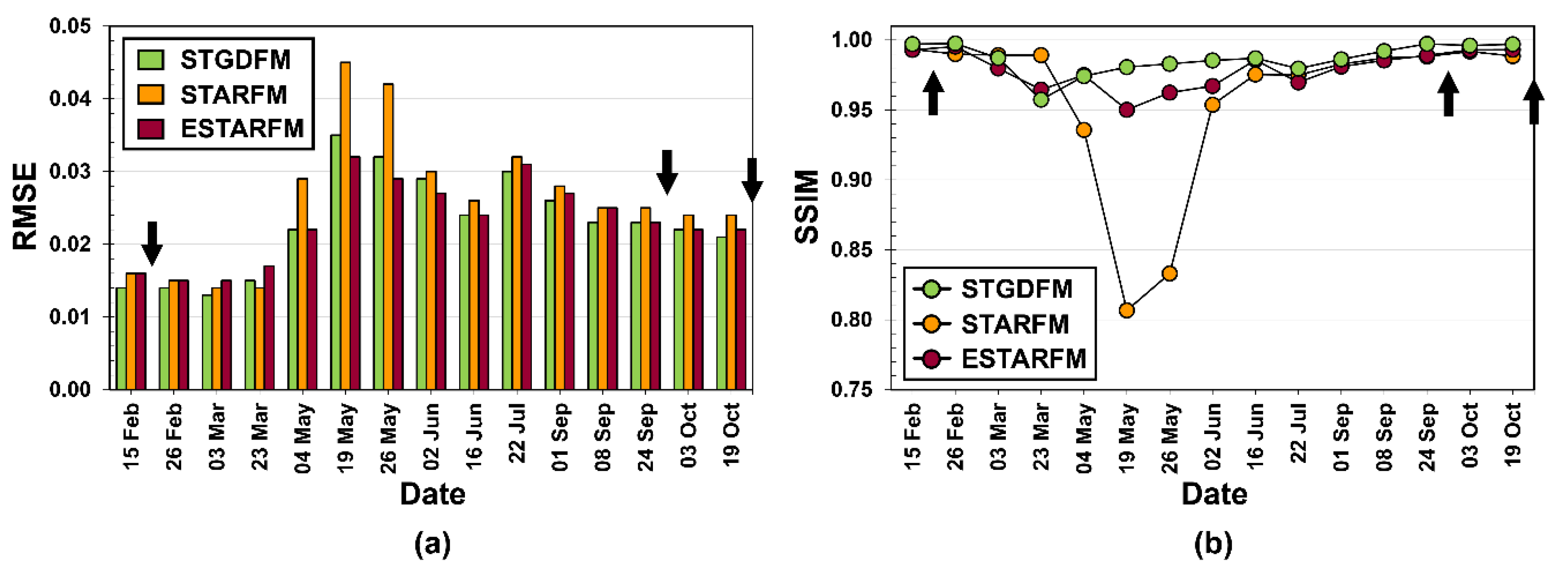

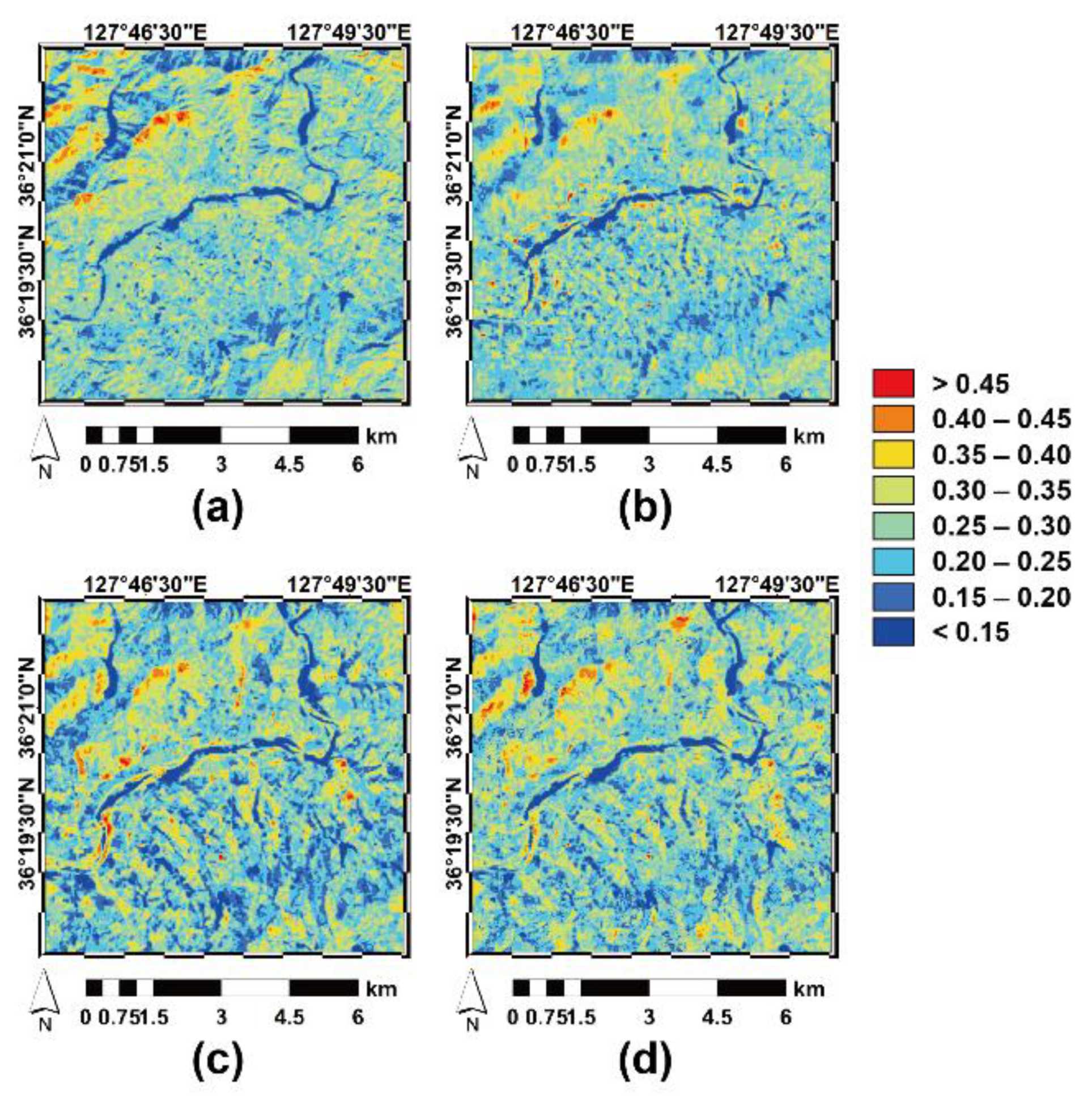

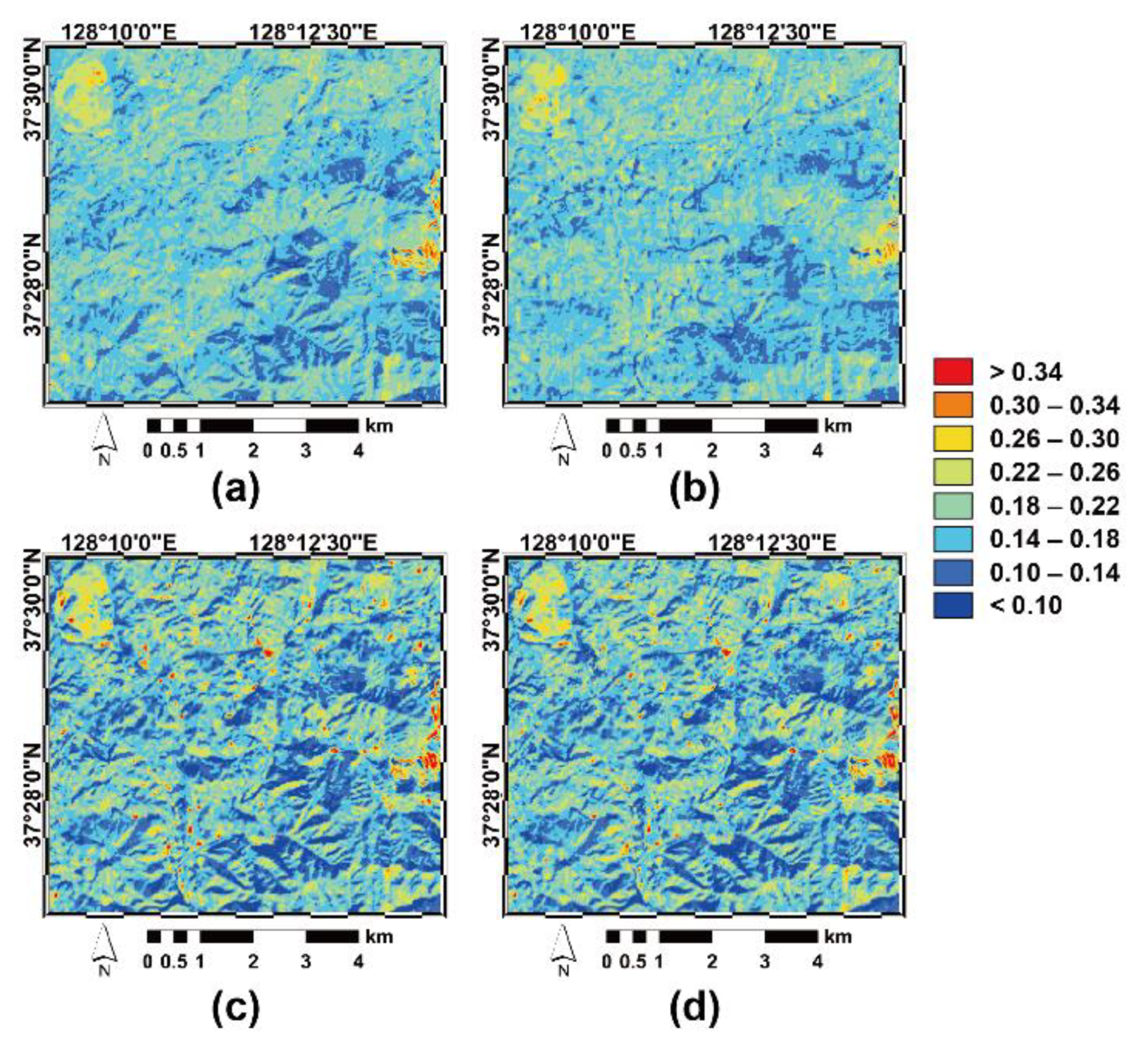

4.1. Results for Experiments Conducted on Spatially Degraded Datasets

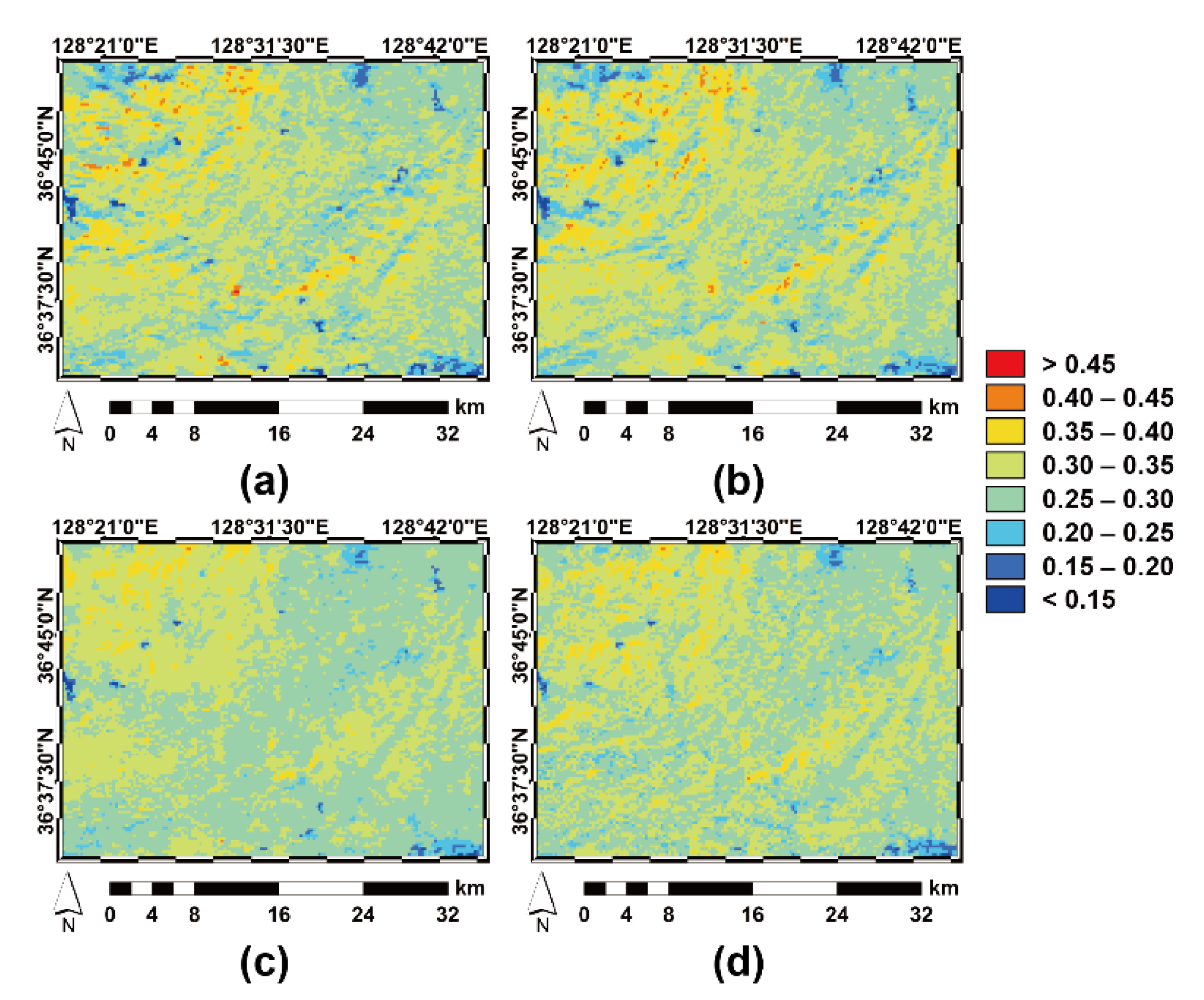

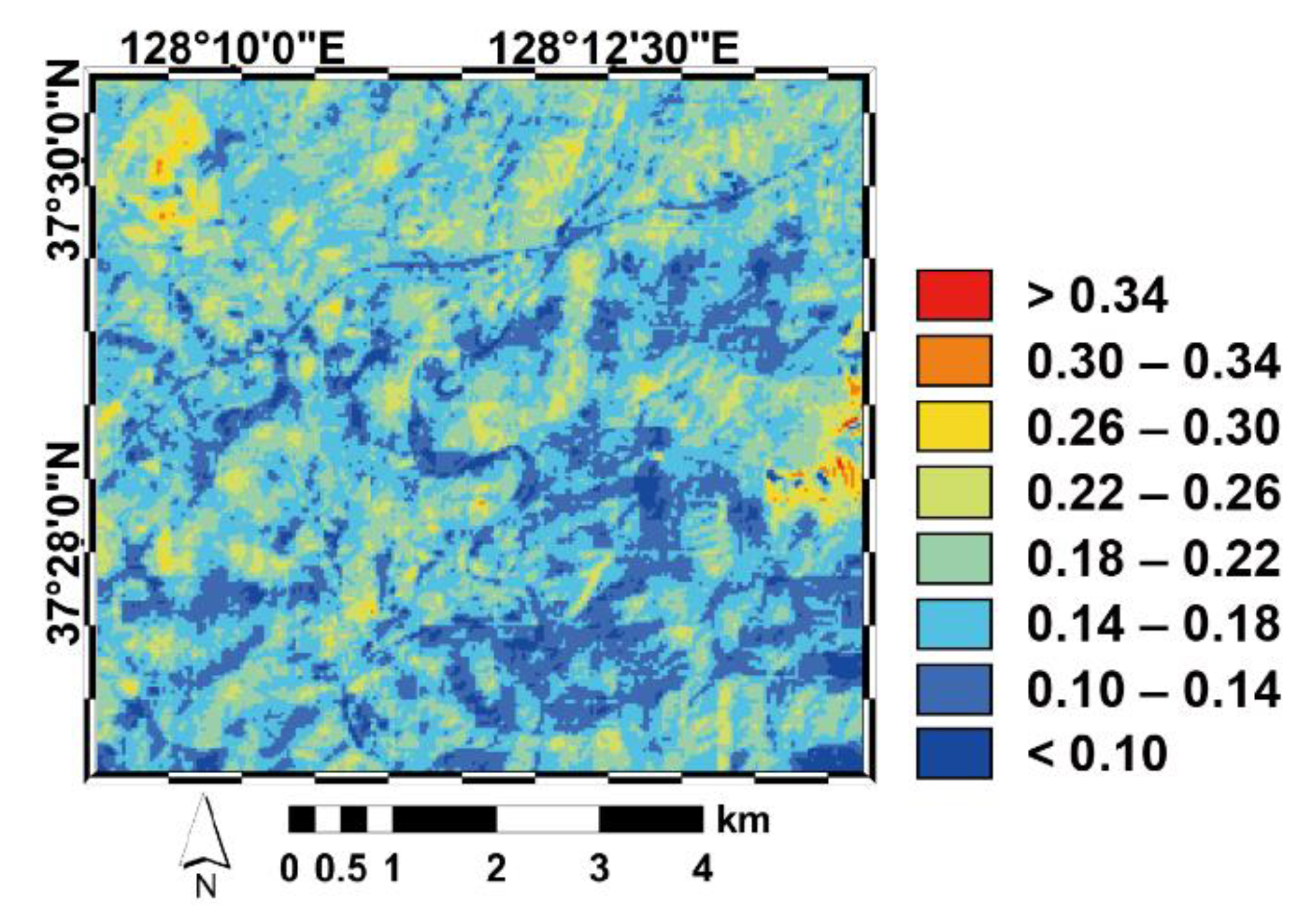

4.2. Results for the Experiment on Real Satellite Images

5. Discussion

5.1. Novelty of STGDFM

5.2. Further Improvement of STGDFM

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| 01 February | 04 February | 05 February | 06 February | 07 February | 08 February | 13 February | 15 February | 17 February |

| ● | ○ | ○ | ● | |||||

| 3 March | 12 March | 14 March | 23 March | 25 March | 26 March | 27 March | 28 March | 10 April |

| ○ | ○ | ○ | ○ | ○ | ||||

| 12 April | 18 April | 19 April | 21 April | 28 April | 14 May | 24 May | 26 May | 01 June |

| ○ | ○ | ● | ○ | |||||

| 07 June | 22 June | 01 August | 02 August | 12 October | 20 October | 21 October | 30 October | 31 October |

| ○ | ○ | ○ | ○ | ○ |

| 15 February | 17 February | 21 February | 26 February | 03 March | 23 March | 25 March | 12 April | 17 April | 18 April |

| ○ | ● | ○ | ○ | ○ | |||||

| 20 April | 21 April | 25 April | 28 April | 04 May | 19 May | 21 May | 23 May | 26 May | 28 May |

| ○ | ○ | ○ | |||||||

| 01 June | 02 June | 16 June | 22 June | 22 July | 17 August | 01 September | 08 September | 24 September | 25 September |

| ○ | ○ | ○ | ○ | ○ | ○ | ||||

| 29 September | 03 October | 12 October | 13 October | 14 October | 19 October | 20 October | 21 October | 30 October | 31 October |

| ● | ○ | ○ | ● |

| 01 February | 07 February | 15 February | 03 March | 12 March | 14 March | 25 March |

| 27 March | 28 March | 08 April | 10 April | 19 April | 10 May | 24 May |

| ● | ||||||

| 26 May | 01 June | 02 June | 06 June | 16 June | 22 June | 03 October |

| ● | ||||||

| 12 October | 13 October | 17 October | 19 October | 21 October | 24 October | 30 October |

| ○ |

| 01 February | 05 February | 07 February | 17 February | 12 March | 23 March | 25 March | 28 March |

| ○ | |||||||

| 10 April | 19 April | 21 April | 28 April | 29 April | 21 May | 24 May | 26 May |

| ● | |||||||

| 02 June | 06 June | 16 June | 22 June | 22 July | 02 August | 08 September | 03 October |

| 12 October | 21 October | 24 October | 31 October | 02 November | 04 November | 20 November | 30 November |

| ● |

| Case | Metric | STGDFM | STARFM | ESTARFM |
|---|---|---|---|---|
| Case 3 | RMSE | 0.0511 | 0.0579 | 0.0554 |
| Case 3 | SSIM | 0.935 | 0.924 | 0.943 |
| Case 4 | RMSE | 0.0264 | 0.0492 | 0.0315 |
| Case 4 | SSIM | 0.961 | 0.845 | 0.856 |
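To help read the RMSE rows of the table, recall that RMSE is the square root of the mean squared difference between a fused prediction and the corresponding reference image. The sketch below assumes both images are NumPy arrays of surface reflectance with the same shape and that the error is pooled over all pixels of a band. The helper name `rmse` and the toy values are hypothetical and are not taken from the study.

```python
import numpy as np


def rmse(predicted: np.ndarray, reference: np.ndarray) -> float:
    """Root-mean-square error pooled over all pixels (hypothetical helper)."""
    if predicted.shape != reference.shape:
        raise ValueError("predicted and reference must have the same shape")
    # Cast to float64 so integer-scaled reflectance does not overflow or truncate
    diff = predicted.astype(np.float64) - reference.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


# Toy 2x2 reflectance patches (illustrative values, not data from the study)
ref = np.array([[0.10, 0.20], [0.30, 0.40]])
pred = np.array([[0.12, 0.18], [0.30, 0.44]])
print(round(rmse(pred, ref), 4))  # prints 0.0245
```

Lower RMSE indicates a closer match to the reference image. SSIM, by contrast, compares local means, variances, and covariances, so it captures structural agreement that a pixel-wise error such as RMSE does not.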

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, Y.; Kyriakidis, P.C.; Park, N.-W. A Cross-Resolution, Spatiotemporal Geostatistical Fusion Model for Combining Satellite Image Time-Series of Different Spatial and Temporal Resolutions. Remote Sens. 2020, 12, 1553. https://doi.org/10.3390/rs12101553
