Generating High Resolution LAI Based on a Modified FSDAF Model

Abstract

1. Introduction

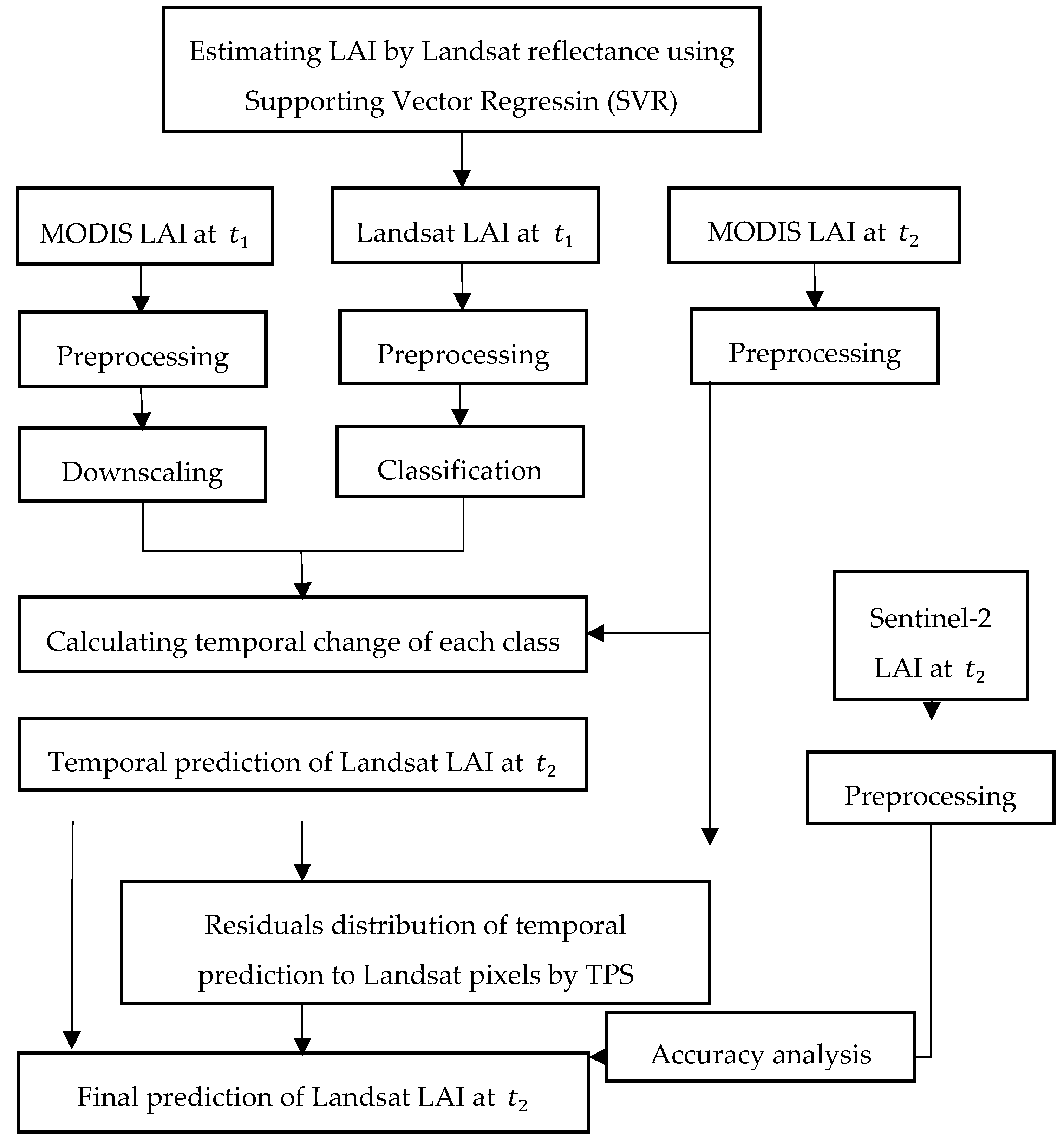

2. Methods

2.1. Downscaling of MODIS LAI Data Based on Linear Spectral Unmixing

2.2. Retrieval of LAI from Landsat Images

2.3. The Modified FSDAF Model

3. Experiment and Results

3.1. Test Area

3.2. Data and Preprocessing

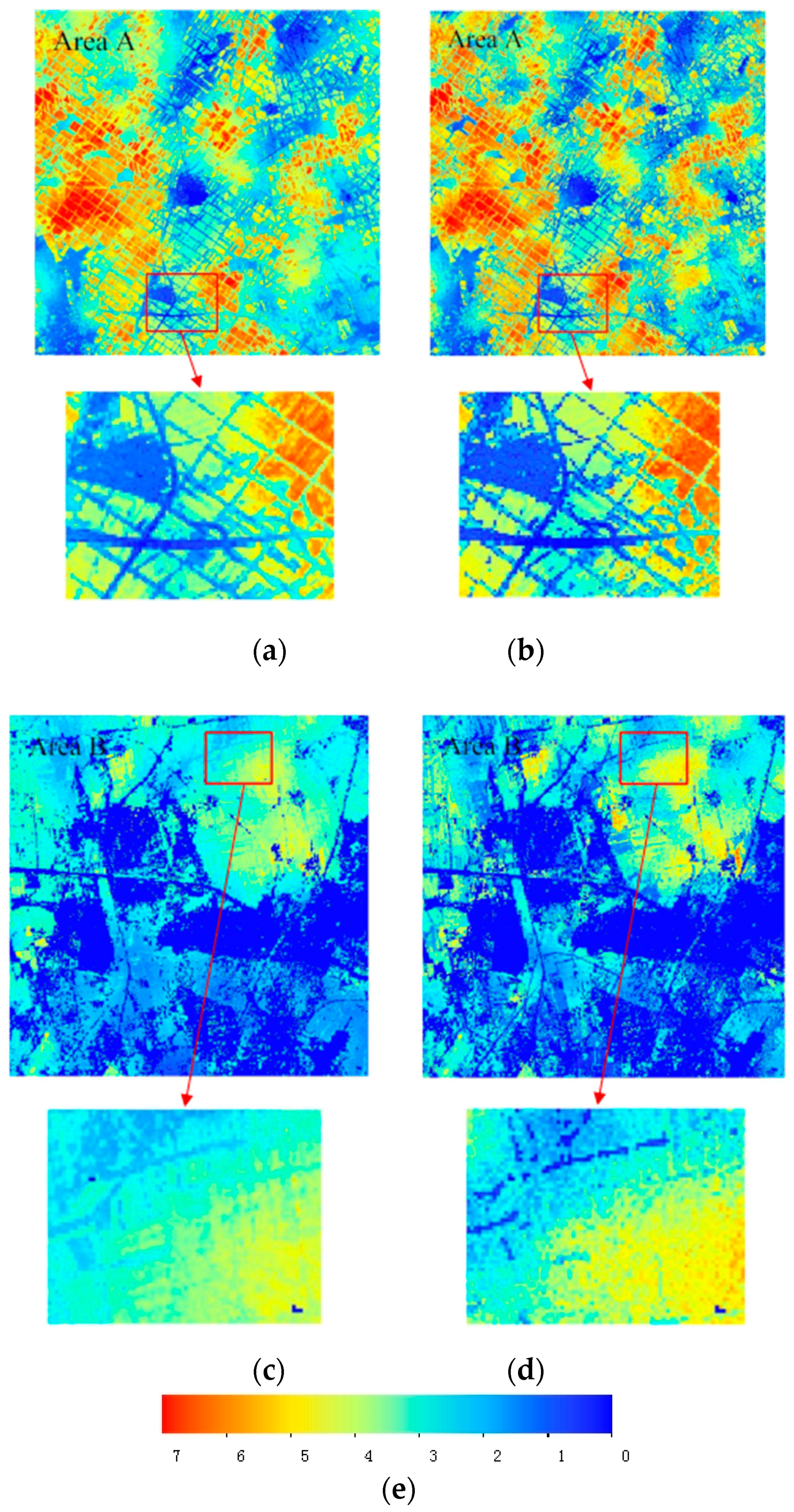

3.3. LAI Inversion from Landsat-8 Data by Support Vector Regression Model

4. Discussion

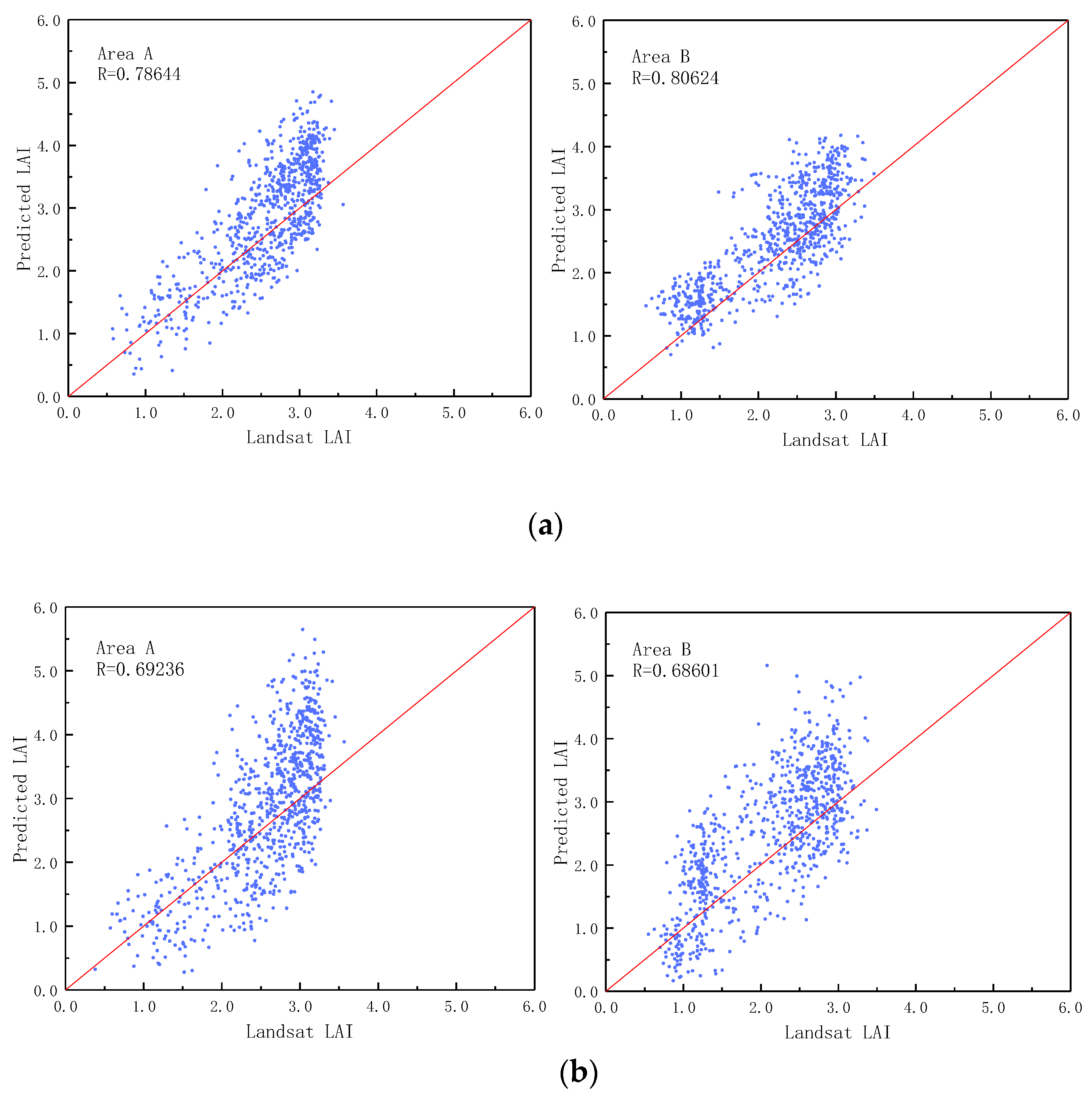

4.1. Analysis of the Accuracy of Landsat LAI Inversion

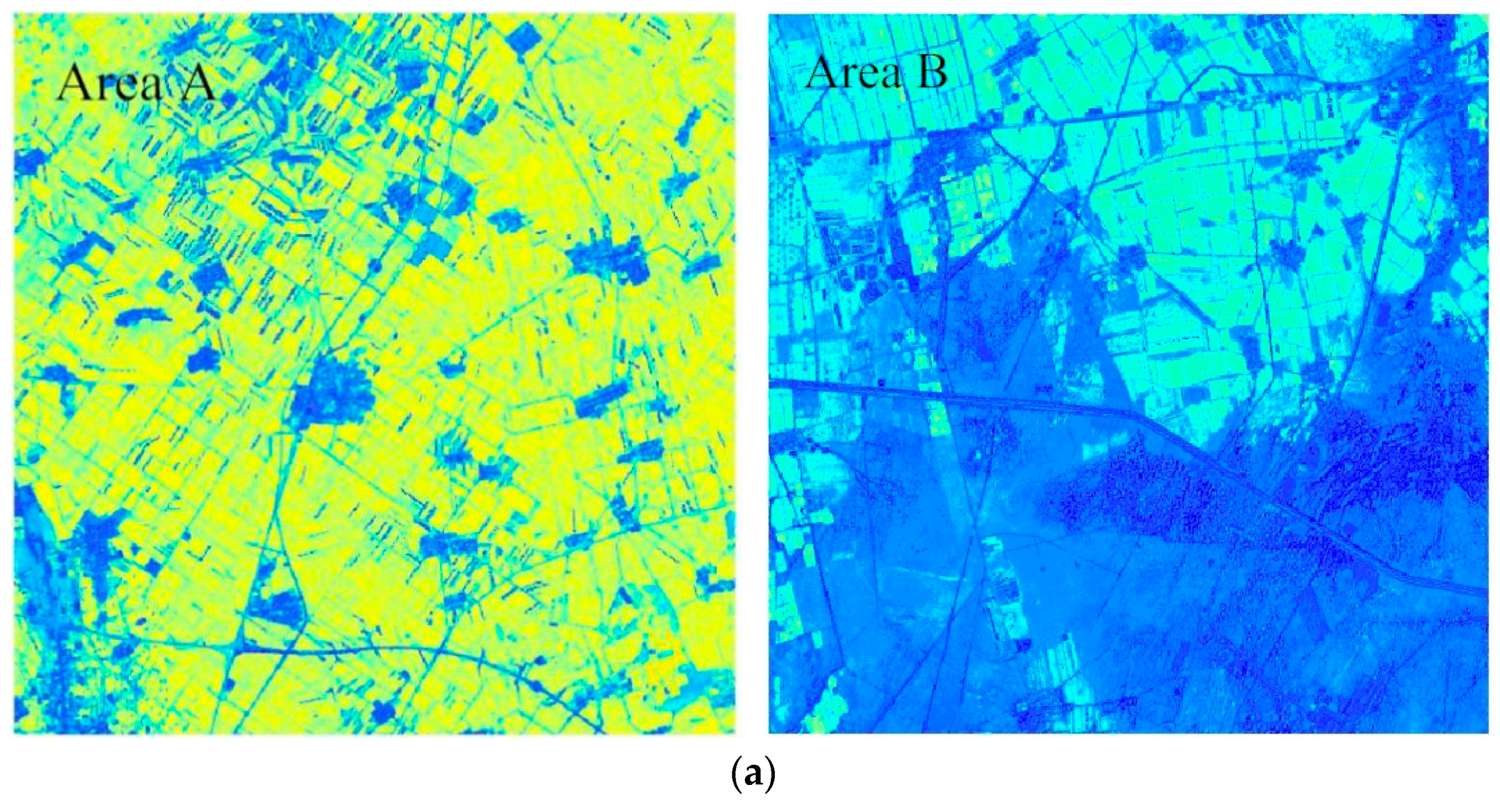

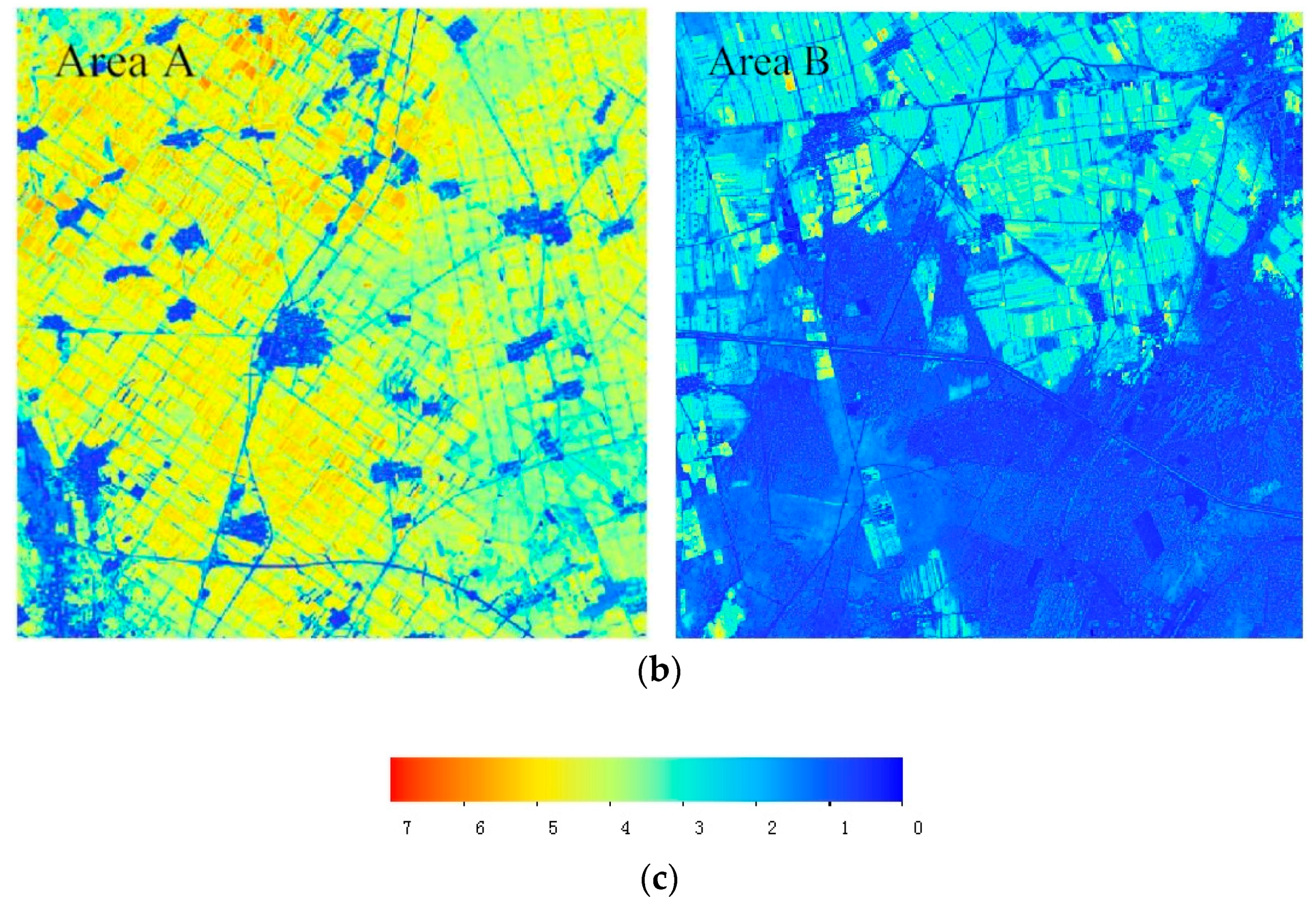

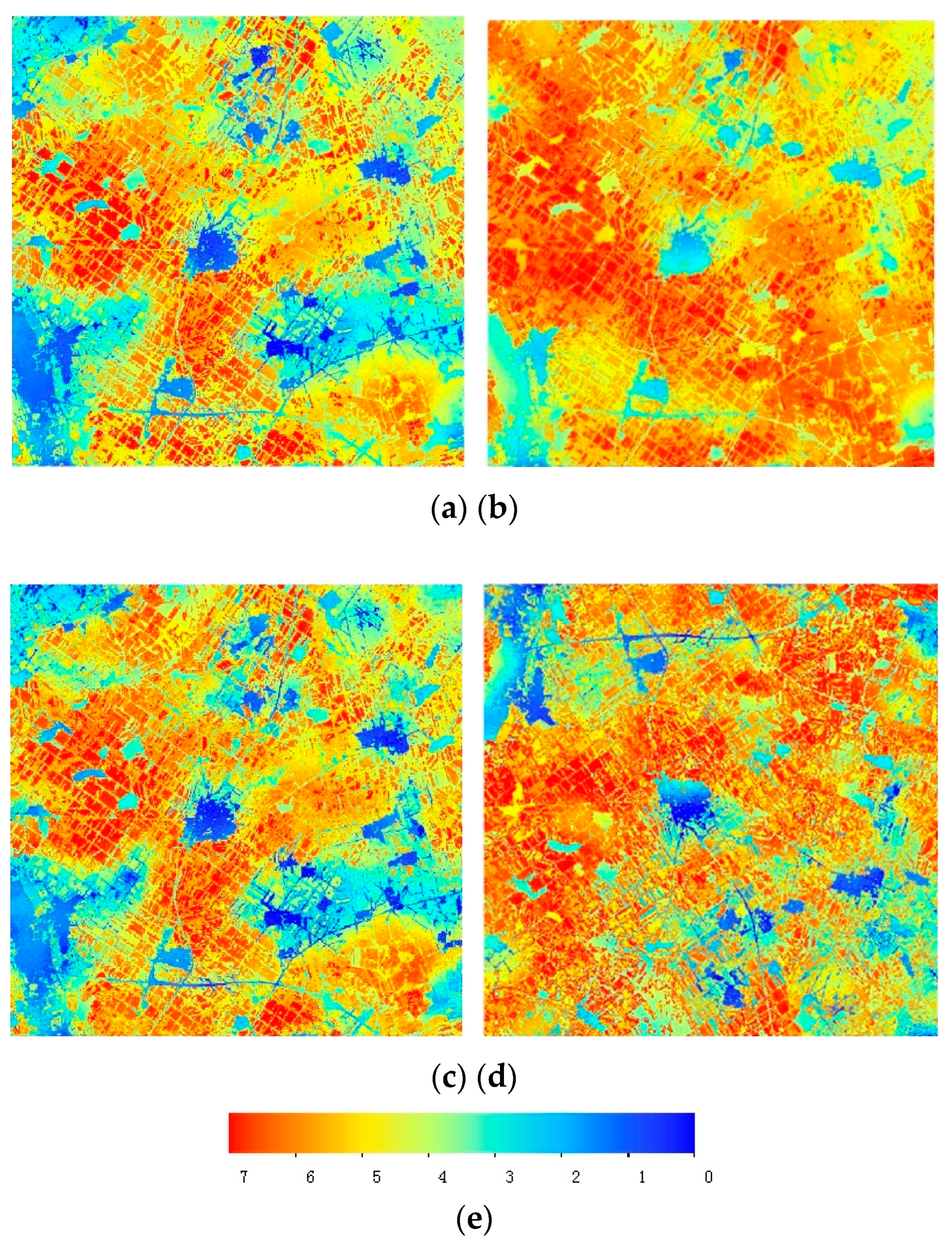

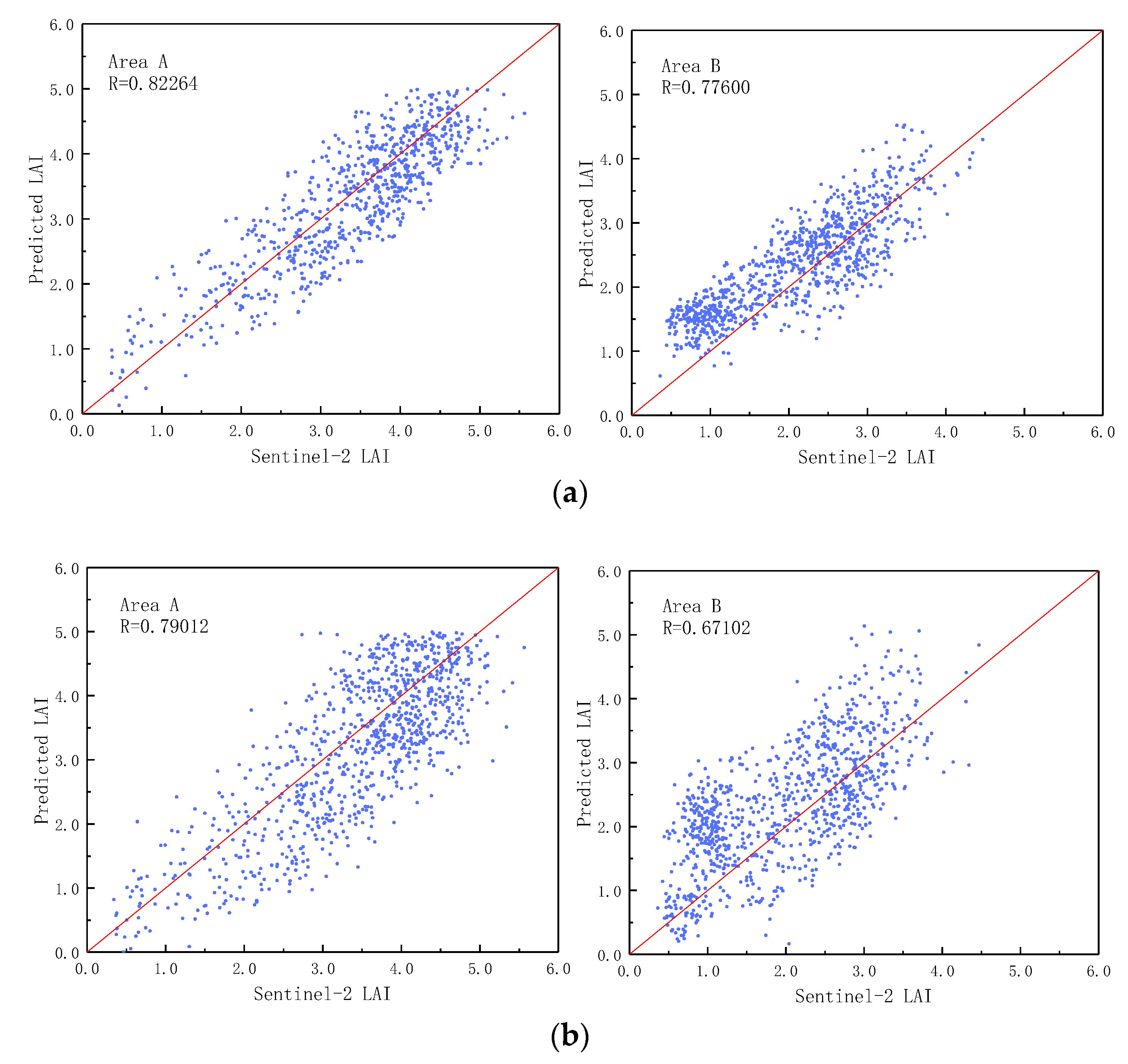

4.2. The Comparison of the Predicted LAI Using the Improved FSDAF and FSDAF Model

4.3. Limitations

5. Conclusions

- (1) A linear spectral mixture model was introduced to downscale the input MODIS data, replacing the resampled low-resolution data used in FSDAF. The improved FSDAF method can generate high-precision predicted LAI data with high spatiotemporal resolution and could potentially be extended to the prediction of other biophysical variables.

- (2) The experiments were conducted on two sample sites to reduce the influence of chance on the fusion results. The correlation coefficients between the LAI predicted by the data fusion methods and the real data are high. The scatter plots of the improved FSDAF model showed a more concentrated distribution than those of FSDAF, and the fused LAI from the improved FSDAF algorithm showed higher accuracy.

- (3) The improved FSDAF method could be applied in highly heterogeneous areas. The imagery produced by combining linear pixel unmixing with the FSDAF model revealed better spatial detail and reduced the blurring of boundaries between different ground objects.
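The linear spectral mixture idea behind the downscaling step can be illustrated with a minimal sketch. It assumes that each coarse (MODIS-scale) LAI value is the area-weighted sum of one LAI value per land-cover class, and solves for those class values by unconstrained least squares. The function names, the toy fractions and the absence of constraints or a moving window are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def unmix_coarse_lai(fractions, coarse_lai):
    """Estimate one LAI value per land-cover class from coarse pixels.

    fractions  : (n_pixels, n_classes) class area fractions in each coarse pixel
    coarse_lai : (n_pixels,) observed coarse-resolution LAI
    Linear mixing assumption: coarse_lai ~= fractions @ class_lai
    """
    # Unconstrained least squares (an assumption; real use may add bounds)
    class_lai, *_ = np.linalg.lstsq(fractions, coarse_lai, rcond=None)
    return class_lai

def downscale(class_map, class_lai):
    """Assign each fine pixel the LAI estimated for its class."""
    return class_lai[class_map]

# Toy data: four coarse pixels, two classes (hypothetical values)
fractions = np.array([[1.0, 0.0],
                      [0.0, 1.0],
                      [0.5, 0.5],
                      [0.25, 0.75]])
true_class_lai = np.array([1.0, 3.0])
coarse_lai = fractions @ true_class_lai  # noise-free mixed observations

class_lai = unmix_coarse_lai(fractions, coarse_lai)

# Fine-resolution class map (class index per fine pixel)
class_map = np.array([[0, 1],
                      [1, 0]])
fine_lai = downscale(class_map, class_lai)
print(class_lai)
print(fine_lai)
```

In this sketch the downscaled image, rather than a simply resampled coarse image, would be the low-resolution input passed on to the fusion step.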

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Band | MODIS (μm) | TM (μm) | ETM (μm) | OLI (μm) |
|---|---|---|---|---|
| Red | 0.62–0.67 | 0.63–0.69 | 0.63–0.69 | 0.64–0.67 |
| NIR | 0.841–0.876 | 0.76–0.90 | 0.76–0.90 | 0.85–0.88 |
| Blue | 0.459–0.479 | 0.45–0.52 | 0.45–0.52 | 0.45–0.51 |
| Green | 0.545–0.565 | 0.52–0.60 | 0.52–0.60 | 0.53–0.59 |
| SWIR | 1.628–1.652 | 1.55–1.75 | 1.55–1.75 | 1.57–1.65 |

| Test Area | Landsat by SVR (SD) | Sentinel-2 (SD) | Improved FSDAF (SD) | FSDAF (SD) |
|---|---|---|---|---|
| Area A | 0.65052 | 1.05779 | 0.98252 | 1.18621 |
| Area B | 0.76216 | 0.91251 | 0.73485 | 0.99363 |
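Statistics of the kind reported in the table above, along with the correlation coefficient mentioned in the conclusions, could be computed as in the following sketch. It assumes that SD denotes the standard deviation of the LAI values (population form, `ddof=0`) and that pixels missing in either image are excluded. The function name `lai_stats` and the sample arrays are hypothetical.

```python
import numpy as np

def lai_stats(predicted, reference):
    """Summary statistics comparing a predicted LAI image with a reference."""
    p = np.asarray(predicted, dtype=float).ravel()
    r = np.asarray(reference, dtype=float).ravel()
    # Keep only pixels that are valid in both images
    valid = np.isfinite(p) & np.isfinite(r)
    p, r = p[valid], r[valid]
    return {
        "sd_predicted": float(np.std(p)),      # population SD (assumption)
        "sd_reference": float(np.std(r)),
        "r": float(np.corrcoef(p, r)[0, 1]),   # Pearson correlation
        "rmse": float(np.sqrt(np.mean((p - r) ** 2))),
    }

# Hypothetical 2x2 images; NaN marks a missing reference pixel
reference = np.array([[1.0, 2.0], [3.0, np.nan]])
predicted = np.array([[1.5, 2.5], [3.5, 4.0]])
stats = lai_stats(predicted, reference)
print(stats)
```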

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhai, H.; Huang, F.; Qi, H. Generating High Resolution LAI Based on a Modified FSDAF Model. Remote Sens. 2020, 12, 150. https://doi.org/10.3390/rs12010150
