GNSS/INS/LiDAR-SLAM Integrated Navigation System Based on Graph Optimization

Abstract

1. Introduction

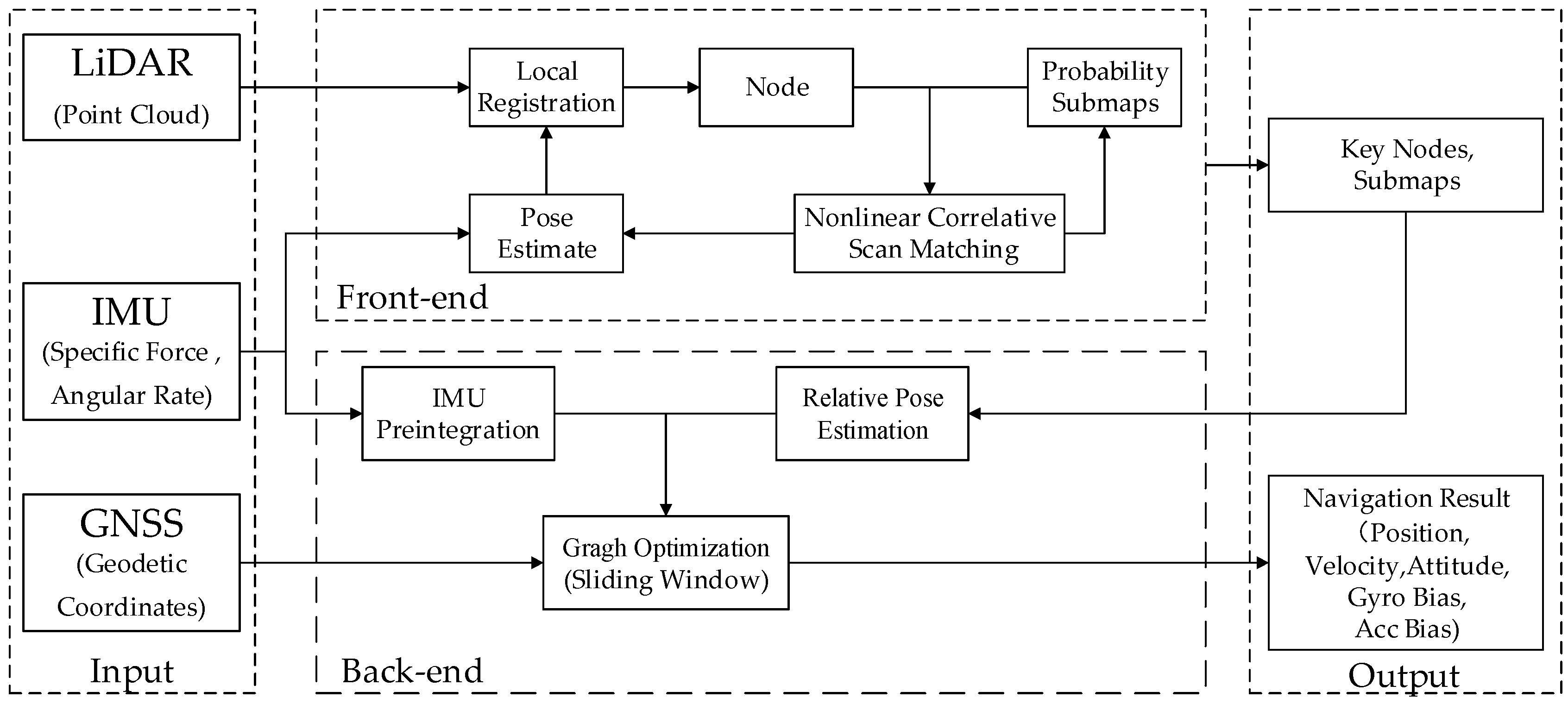

2. GNSS/INS/LiDAR-SLAM Integrated Navigation System

2.1. Coordinate Frame

2.1.1. Coordinate System

2.1.2. Transformation Among Coordinate Systems

2.2. Front-End Scan Matching

2.2.1. Pose Estimate

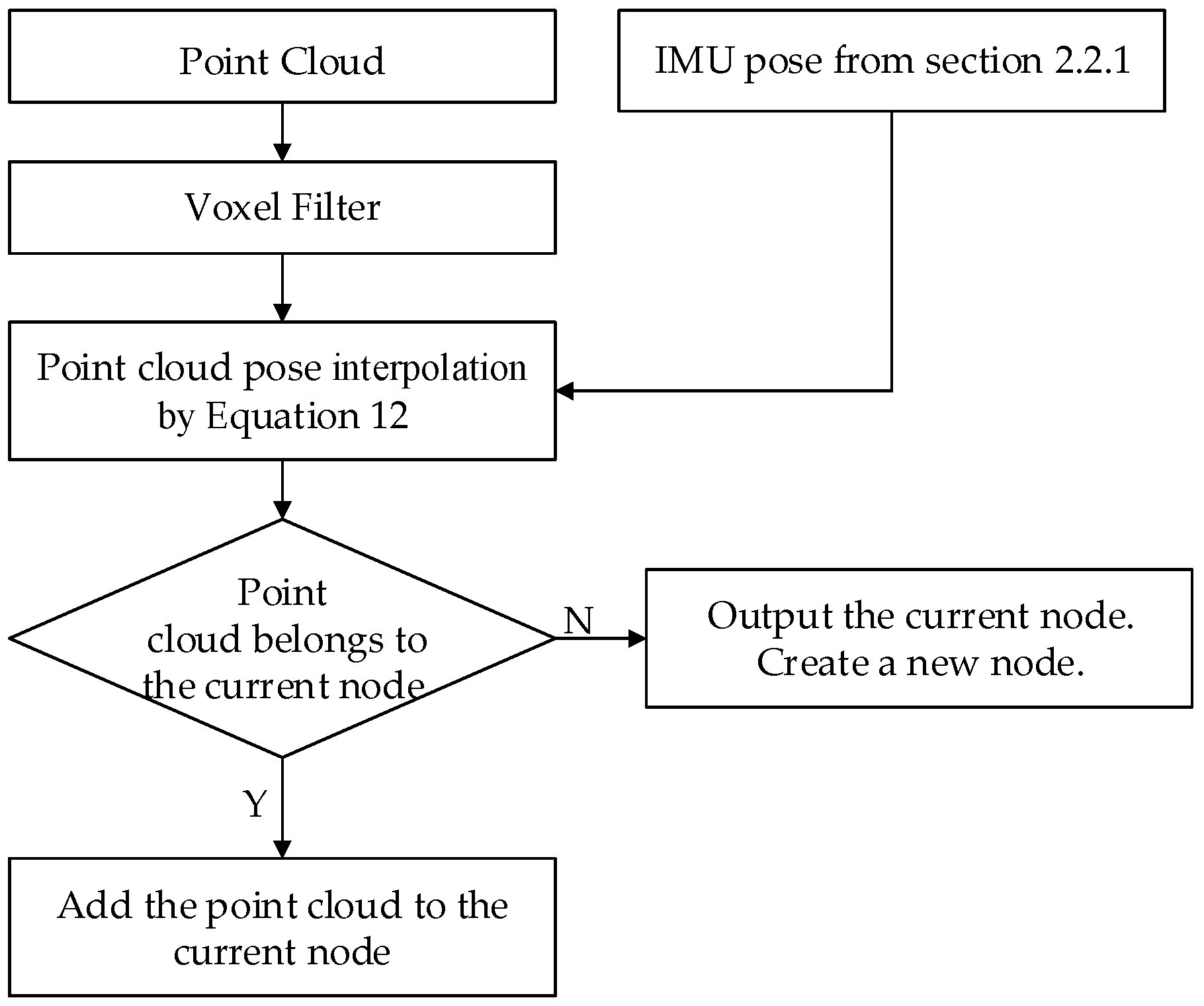

2.2.2. Local Registration

2.2.3. Probability Map

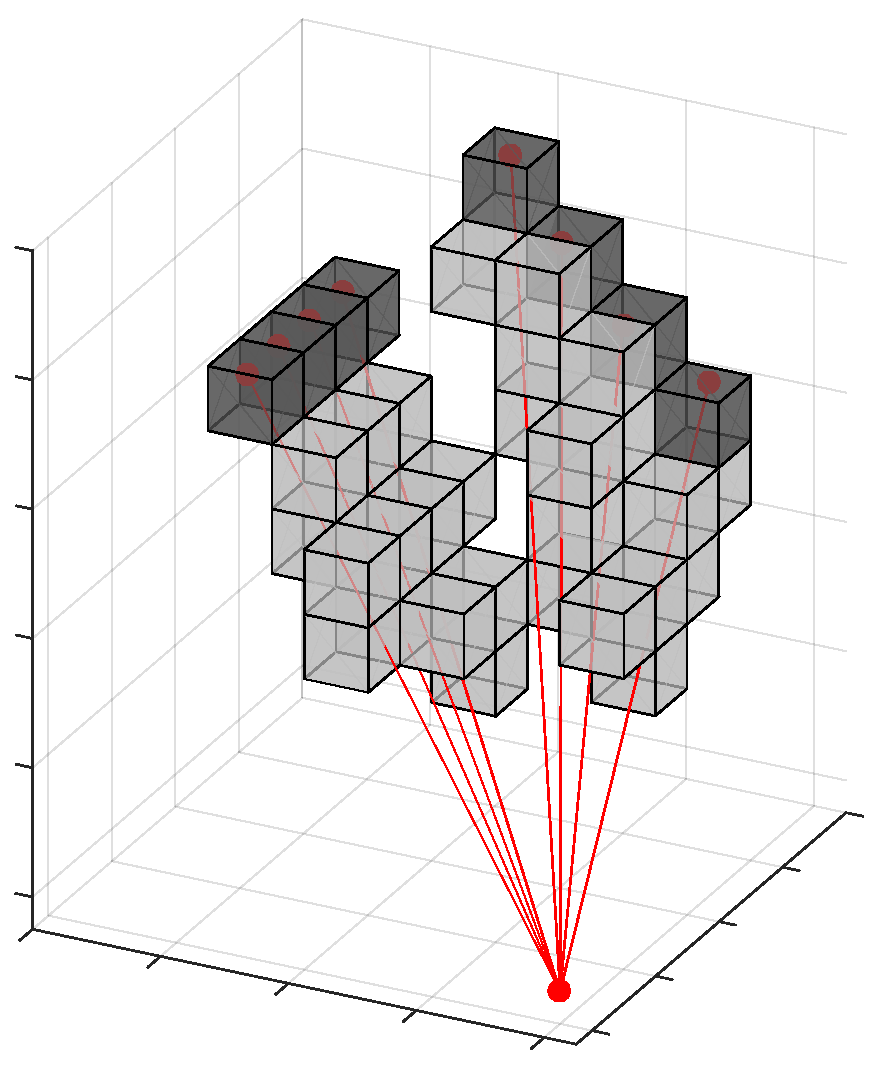

2.2.4. Nonlinear Correlative Scan Matching

2.3. Relative Pose Estimation

2.4. Graph Optimization

2.4.1. Sensor Calibration

2.4.2. GNSS Cost Function

2.4.3. IMU Preintegration Cost Function

2.4.4. LiDAR Cost Function

2.4.5. Sliding Window

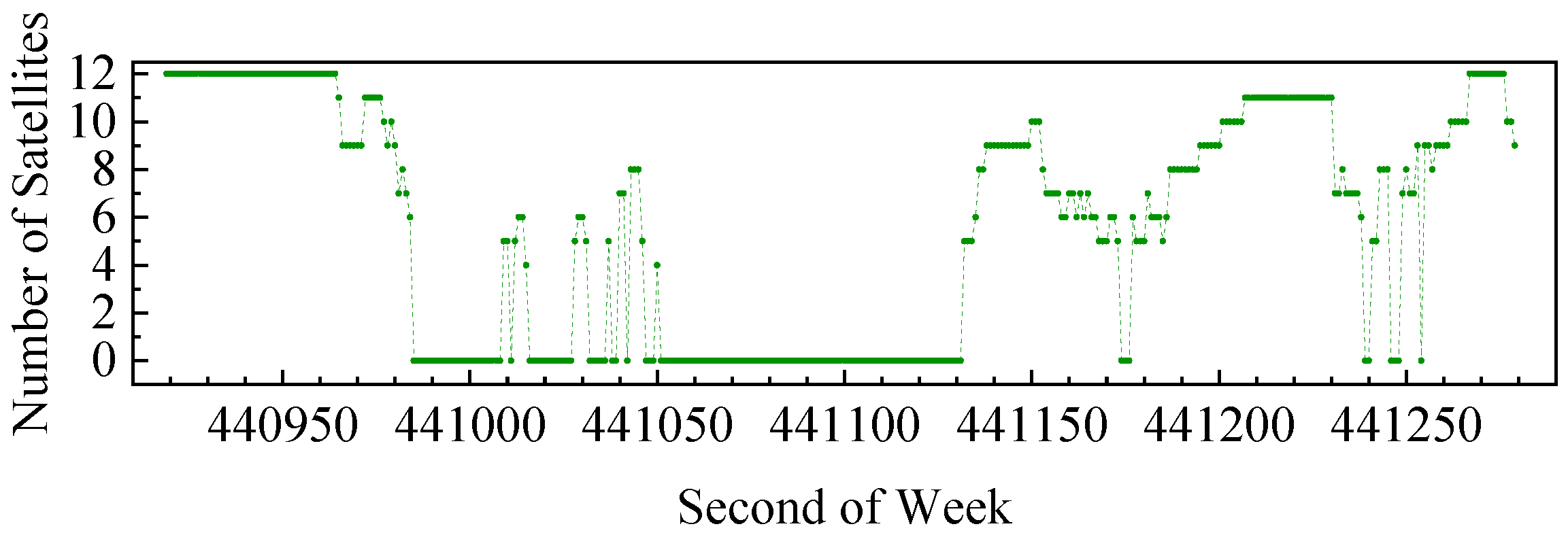

3. Experiment

4. Results and Discussion

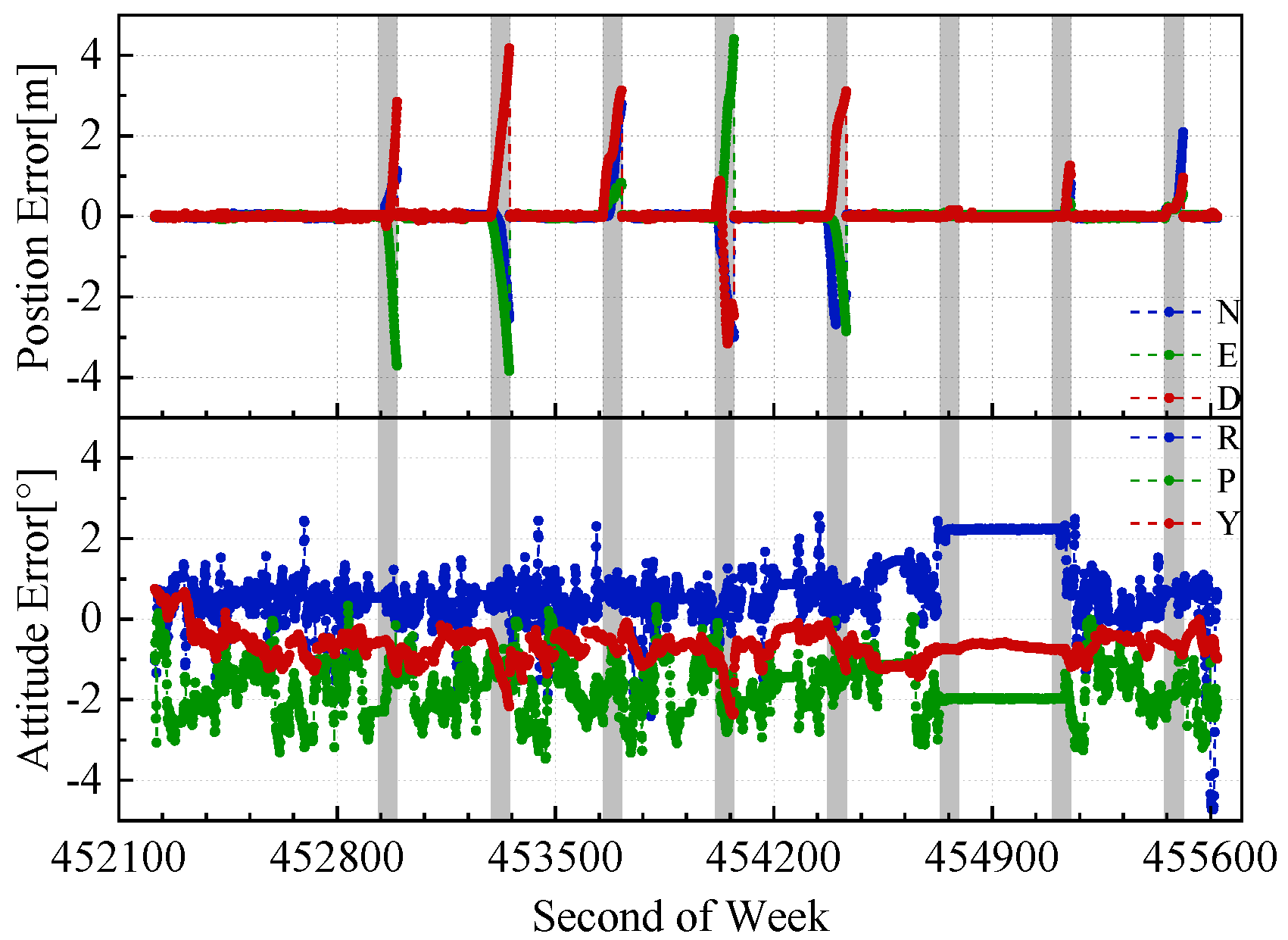

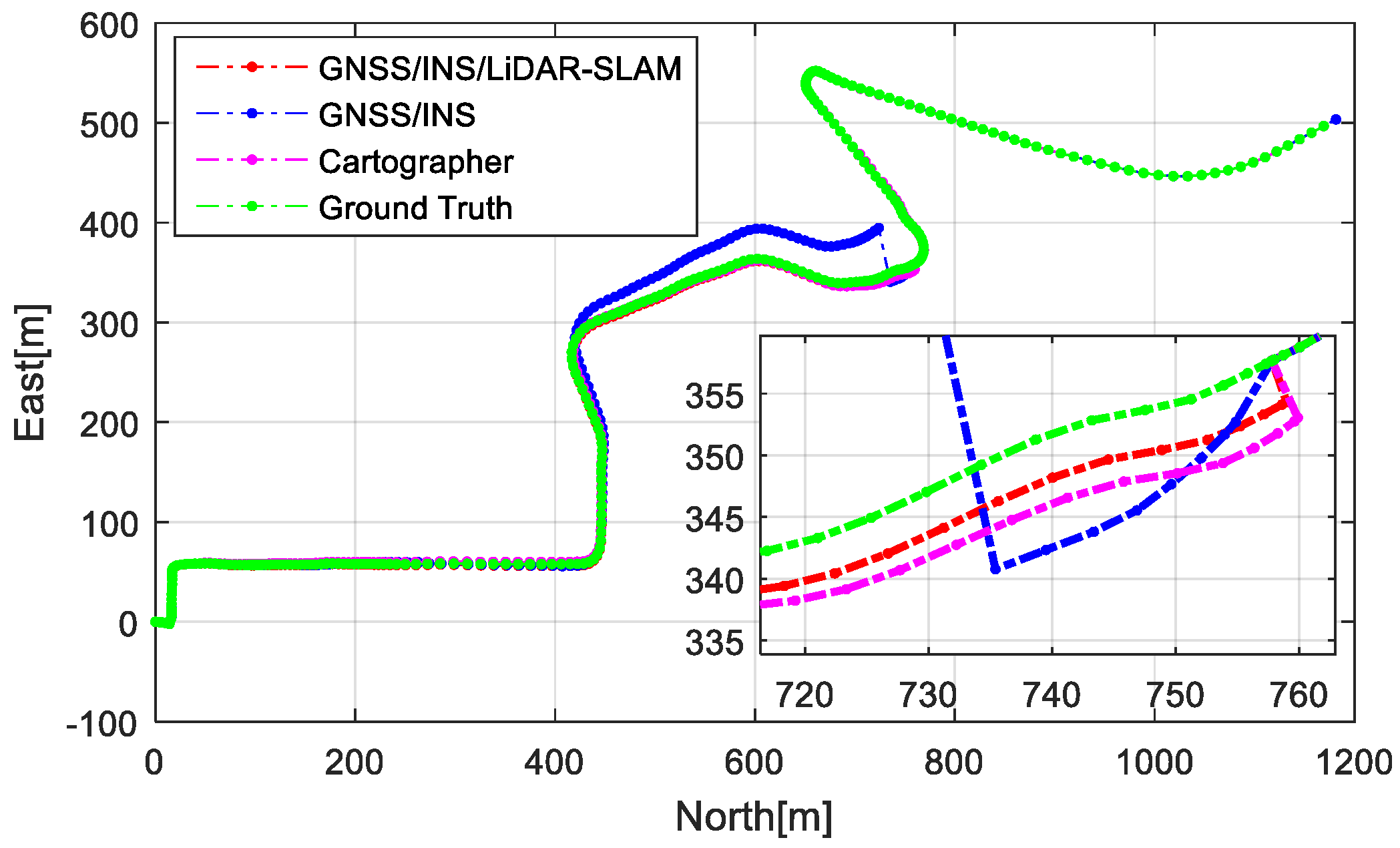

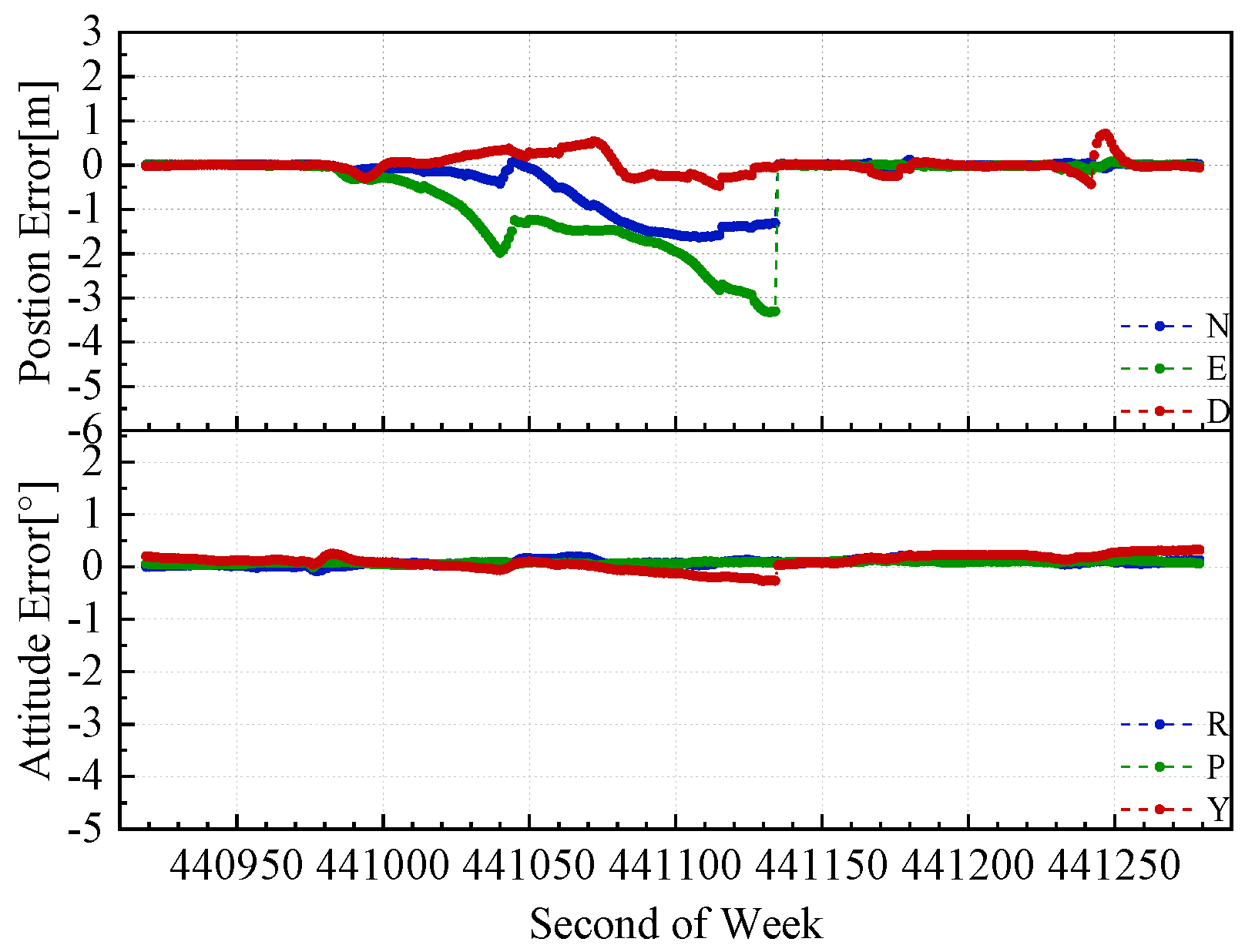

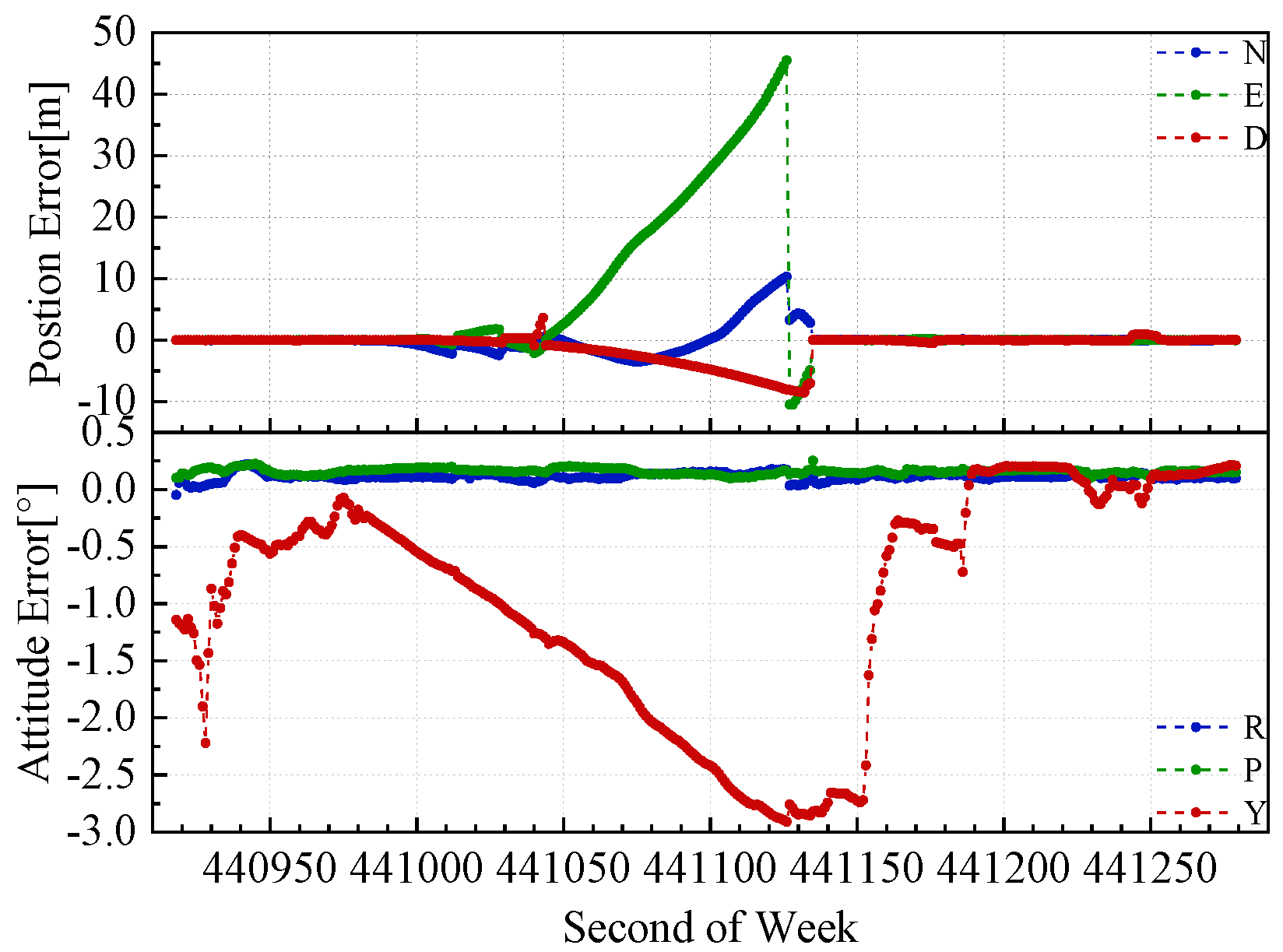

- (1) For the horizontal position error (i.e., in the North and East directions), the proposed GNSS/INS/LiDAR-SLAM integrated navigation system had the smallest error and the GNSS/INS integrated navigation system had the largest. The vertical position error of the proposed GNSS/INS/LiDAR-SLAM integrated navigation system was similar to that of the GNSS/INS integrated navigation system, and Cartographer had the largest vertical position error. In the sixth outage, when the vehicle was at rest, both Cartographer and the GNSS/INS/LiDAR-SLAM integrated navigation system significantly reduced the horizontal positioning drift relative to the GNSS/INS integrated navigation system, thanks to the aid of LiDAR-SLAM.

- (2) Cartographer had the largest attitude error, especially in roll and pitch, because it assumes low-dynamic vehicle motion and uses gravity to solve for the horizontal attitude (similar to the inclinometer principle). The attitude error of the proposed GNSS/INS/LiDAR-SLAM integrated navigation system was comparable to that of the GNSS/INS integrated navigation system.
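
The inclinometer principle mentioned above can be illustrated with a minimal sketch. It is not Cartographer's implementation; it assumes a forward-right-down body frame, a ZYX Euler convention, and a hypothetical helper name `tilt_from_specific_force`. It shows why gravity-based levelling is exact at rest but is biased as soon as the vehicle accelerates.

```python
import math

G = 9.81  # assumed gravity magnitude [m/s^2]


def tilt_from_specific_force(fx, fy, fz):
    """Roll and pitch [rad] from accelerometer specific force.

    Assumes a forward-right-down body frame and that the only sensed
    force is the reaction to gravity (i.e., the vehicle is not
    accelerating). This is the inclinometer assumption.
    """
    roll = math.atan2(-fy, -fz)
    pitch = math.atan2(fx, math.hypot(fy, fz))
    return roll, pitch


# Case 1: vehicle at rest, pitched up by 10 degrees.
theta = math.radians(10.0)
static_force = (G * math.sin(theta), 0.0, -G * math.cos(theta))
_, pitch_static = tilt_from_specific_force(*static_force)

# Case 2: level vehicle accelerating forward at 1 m/s^2.
# The true pitch is zero, but the extra forward force is read as tilt.
_, pitch_dynamic = tilt_from_specific_force(1.0, 0.0, -G)

print(f"static case:  estimated pitch = {math.degrees(pitch_static):.2f} deg")
print(f"dynamic case: estimated pitch = {math.degrees(pitch_dynamic):.2f} deg")
```

In the static case the estimate recovers the 10° pitch; in the dynamic case a modest 1 m/s² forward acceleration already produces a pitch error of several degrees, which is consistent with the larger roll and pitch errors reported for Cartographer under vehicle dynamics.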

- (a) Relative to the GNSS/INS integrated navigation system, the position error RMS of the proposed GNSS/INS/LiDAR-SLAM integrated navigation system in the North, East, and vertical directions was reduced by 82.2%, 79.6%, and 17.2%, respectively. Cartographer's North and East position error RMS was reduced by 47.4% and 44.8%, respectively, but its vertical position error RMS increased by 2.3 times.

- (b) The aid of LiDAR-SLAM did not significantly improve the attitude accuracy of the GNSS/INS/LiDAR-SLAM integrated navigation system. The RMS values of Cartographer's roll, pitch, and yaw errors increased by 9.5, 13.7, and 7.2 times, respectively, because the algorithm is designed for low-speed, smooth motion, which does not fit the test cases in this paper.
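
The percentages in (a) can be reproduced from the position error RMS values listed in the first error-statistics table of this article, taking the GNSS/INS solution as the baseline. The sketch below is only a worked check; the function names are illustrative, and the pairing of (a) with that table is inferred from the matching numbers.

```python
def percent_reduction(baseline, value):
    """Reduction of `value` relative to `baseline`, in percent."""
    return (1.0 - value / baseline) * 100.0


def times_increase(baseline, value):
    """How many times `value` grew relative to `baseline` (0 = unchanged)."""
    return value / baseline - 1.0


# Position error RMS [m] in the North, East, and Down directions.
gnss_ins = {"N": 5.298, "E": 5.469, "D": 0.871}
proposed = {"N": 0.943, "E": 1.114, "D": 0.721}
cartographer = {"N": 2.789, "E": 3.019, "D": 2.898}

proposed_reduction = {
    axis: percent_reduction(gnss_ins[axis], proposed[axis]) for axis in "NED"
}
cartographer_reduction = {
    axis: percent_reduction(gnss_ins[axis], cartographer[axis]) for axis in "NE"
}
cartographer_vertical_increase = times_increase(gnss_ins["D"], cartographer["D"])

for axis, value in proposed_reduction.items():
    print(f"proposed, {axis}: {value:.1f}% lower RMS")
for axis, value in cartographer_reduction.items():
    print(f"Cartographer, {axis}: {value:.1f}% lower RMS")
print(f"Cartographer, D: RMS increased by {cartographer_vertical_increase:.1f} times")
```

Note that "increased by 2.3 times" means the vertical RMS of Cartographer is roughly 3.3 times the GNSS/INS value, not 2.3 times.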

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| IMU | Bias | | Random Walk | |
|---|---|---|---|---|
| | Gyro. [°/h] | Acc. [mGal] | | |
| LD-A15 (Reference system) | 0.02 | 15 | 0.003 | 0.03 |
| NV-POS1100 (Tested system) | 10 | 1000 | 0.2 | 0.18 |

| System | Statistic | Position Error N [m] | Position Error E [m] | Position Error D [m] | Attitude Error R [°] | Attitude Error P [°] | Attitude Error Y [°] |
|---|---|---|---|---|---|---|---|
| Proposed GNSS/INS/LiDAR-SLAM | RMS | 0.943 | 1.114 | 0.721 | 0.151 | 0.182 | 0.213 |
| Proposed GNSS/INS/LiDAR-SLAM | MAX | 3.212 | 3.161 | 1.112 | 0.234 | 0.253 | 0.323 |
| GNSS/INS | RMS | 5.298 | 5.469 | 0.871 | 0.136 | 0.175 | 0.225 |
| GNSS/INS | MAX | 12.475 | 11.328 | 2.185 | 0.197 | 0.217 | 0.562 |
| Cartographer | RMS | 2.789 | 3.019 | 2.898 | 1.438 | 2.589 | 1.865 |
| Cartographer | MAX | 4.835 | 4.439 | 4.169 | 2.320 | 3.602 | 2.896 |

| System | Statistic | Position Error N [m] | Position Error E [m] | Position Error D [m] | Attitude Error R [°] | Attitude Error P [°] | Attitude Error Y [°] |
|---|---|---|---|---|---|---|---|
| Proposed GNSS/INS/LiDAR-SLAM | RMS | 0.615 | 1.105 | 0.198 | 0.099 | 0.083 | 0.166 |
| Proposed GNSS/INS/LiDAR-SLAM | MAX | 1.635 | 3.328 | 0.707 | 0.215 | 0.142 | 0.323 |
| GNSS/INS | RMS | 2.031 | 11.757 | 2.389 | 0.116 | 0.160 | 1.375 |
| GNSS/INS | MAX | 10.338 | 45.485 | 8.499 | 0.223 | 0.253 | 2.910 |
| Cartographer | RMS | 1.051 | 1.395 | 0.463 | 0.780 | 2.042 | 0.867 |
| Cartographer | MAX | 2.891 | 5.166 | 1.488 | 2.517 | 3.427 | 1.637 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chang, L.; Niu, X.; Liu, T.; Tang, J.; Qian, C. GNSS/INS/LiDAR-SLAM Integrated Navigation System Based on Graph Optimization. Remote Sens. 2019, 11, 1009. https://doi.org/10.3390/rs11091009
