An Imaging Algorithm for Multireceiver Synthetic Aperture Sonar

Abstract

1. Introduction

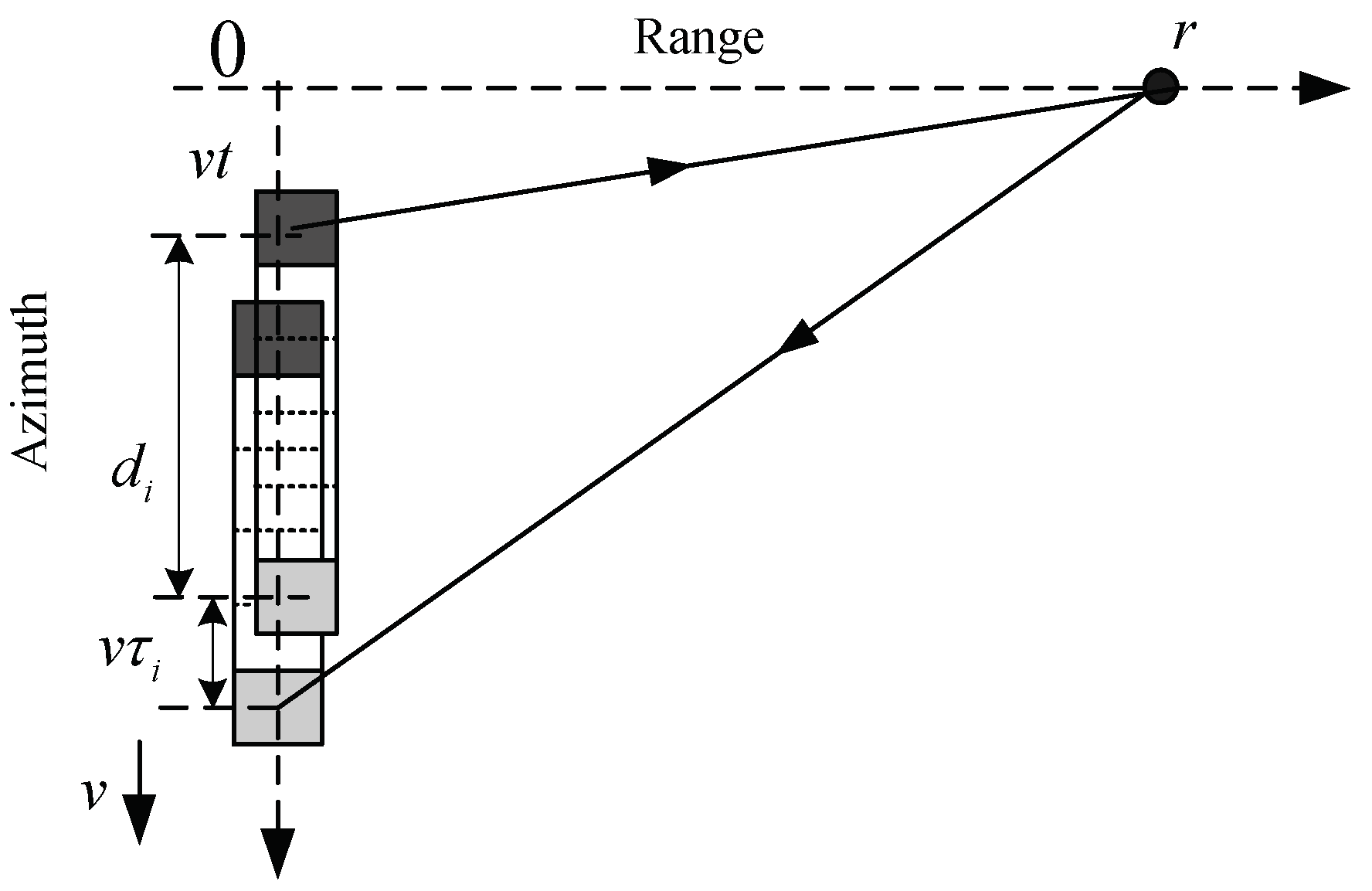

2. Imaging Geometry and Signal Model

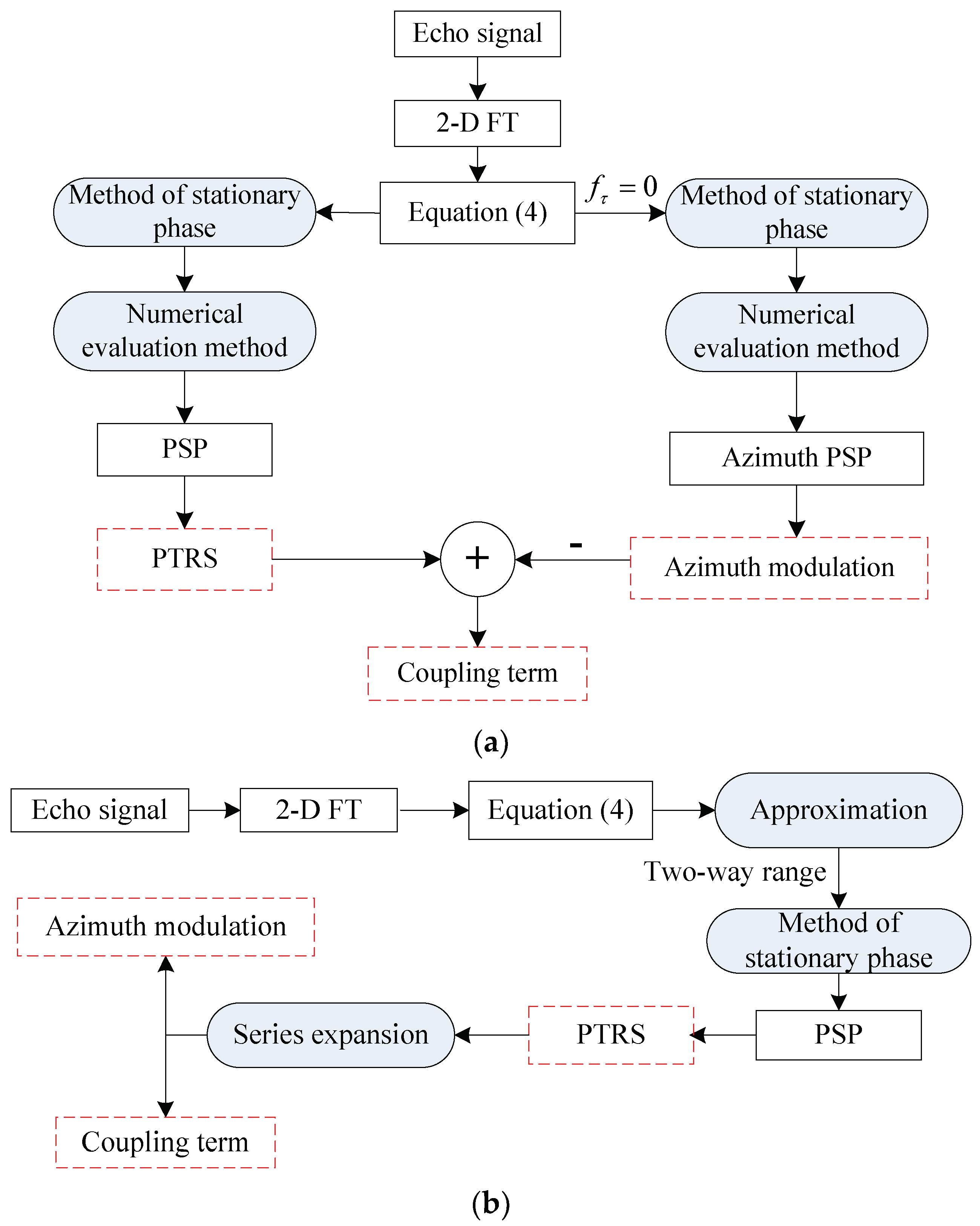

3. PTRS, Azimuth Modulation and Coupling Term

3.1. PTRS

3.2. Azimuth Modulation

3.3. Coupling Term

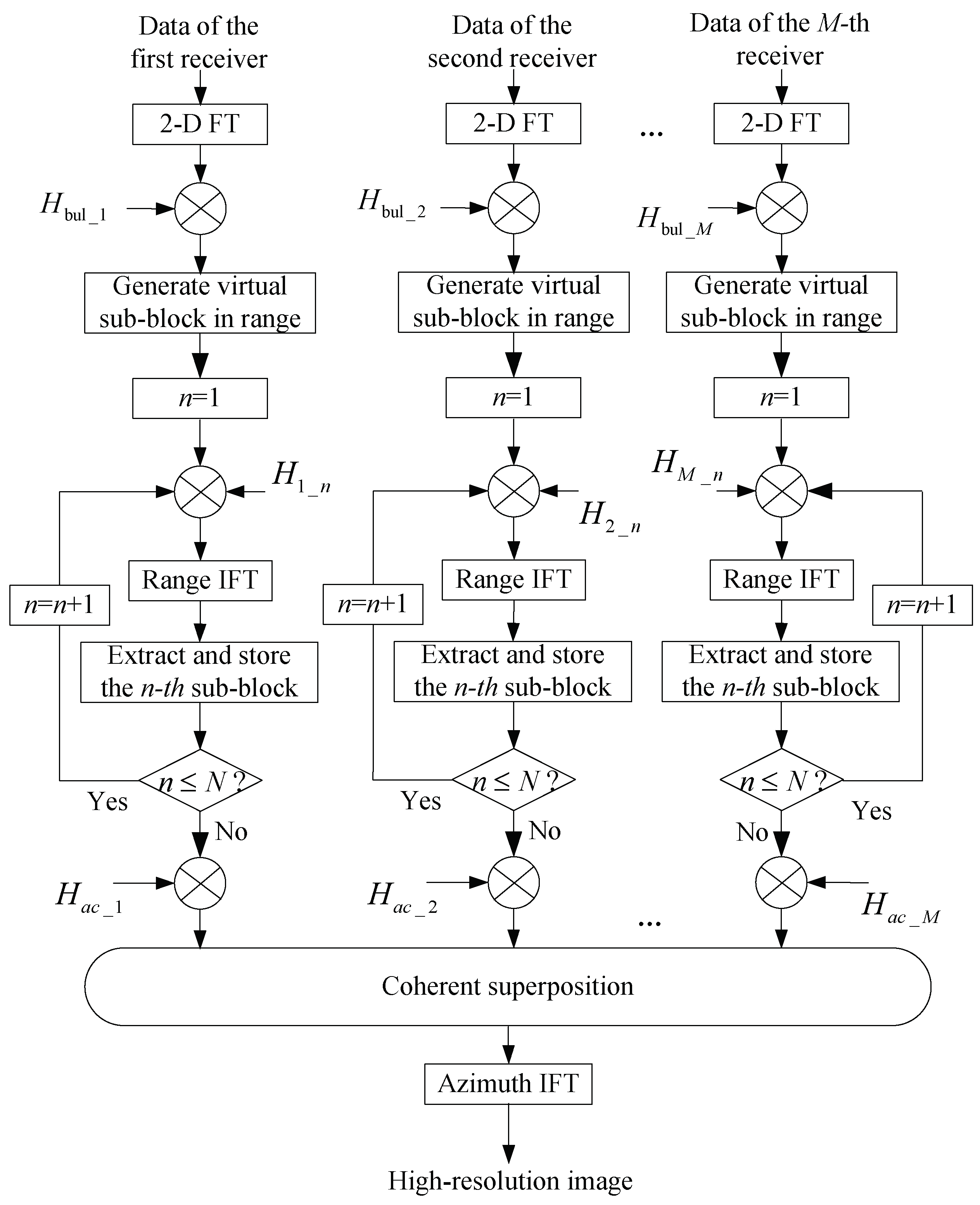

4. Imaging Algorithm

4.1. 2D FT

4.2. Decoupling

4.3. Azimuth Compression

4.4. Coherent Superposition

5. Comparison with Traditional Methods

6. Simulations and Real Data Processing

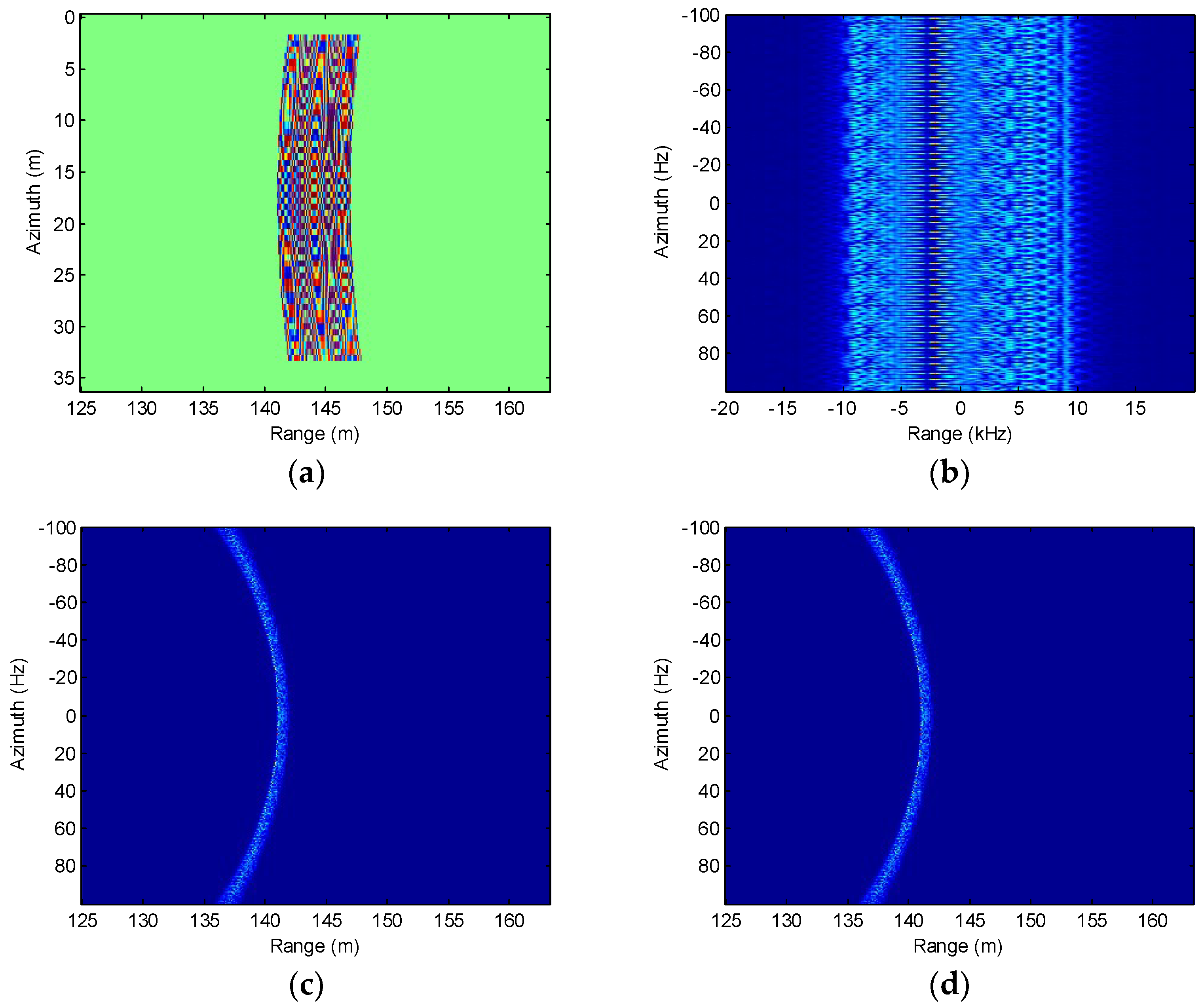

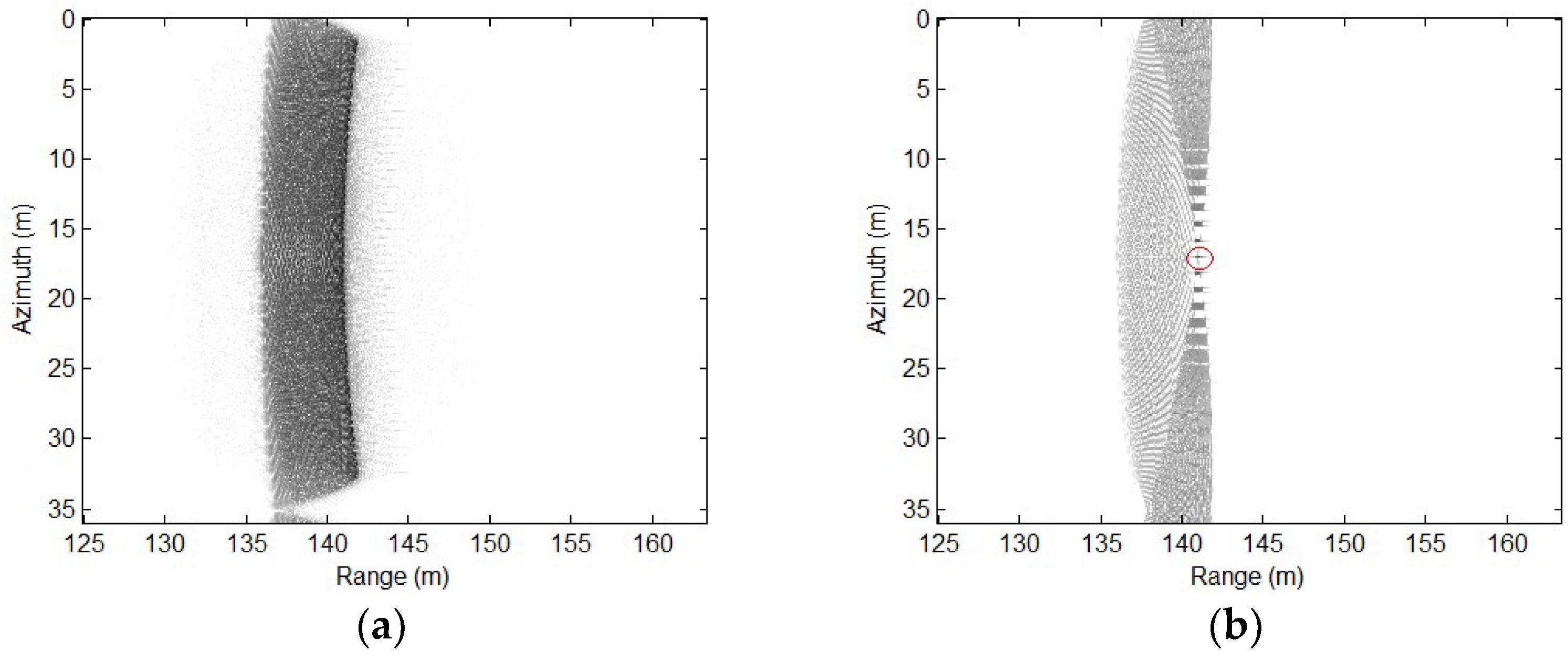

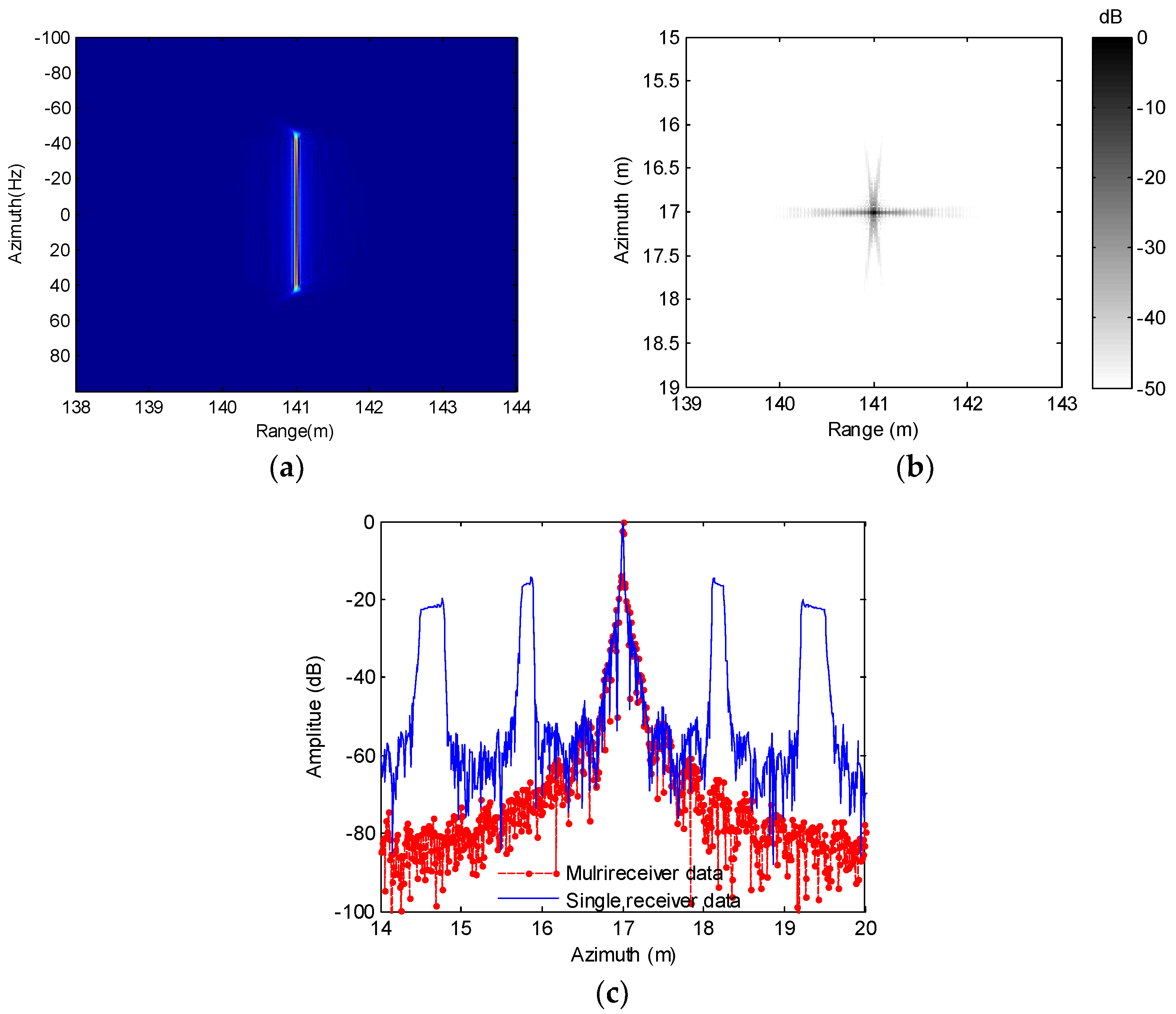

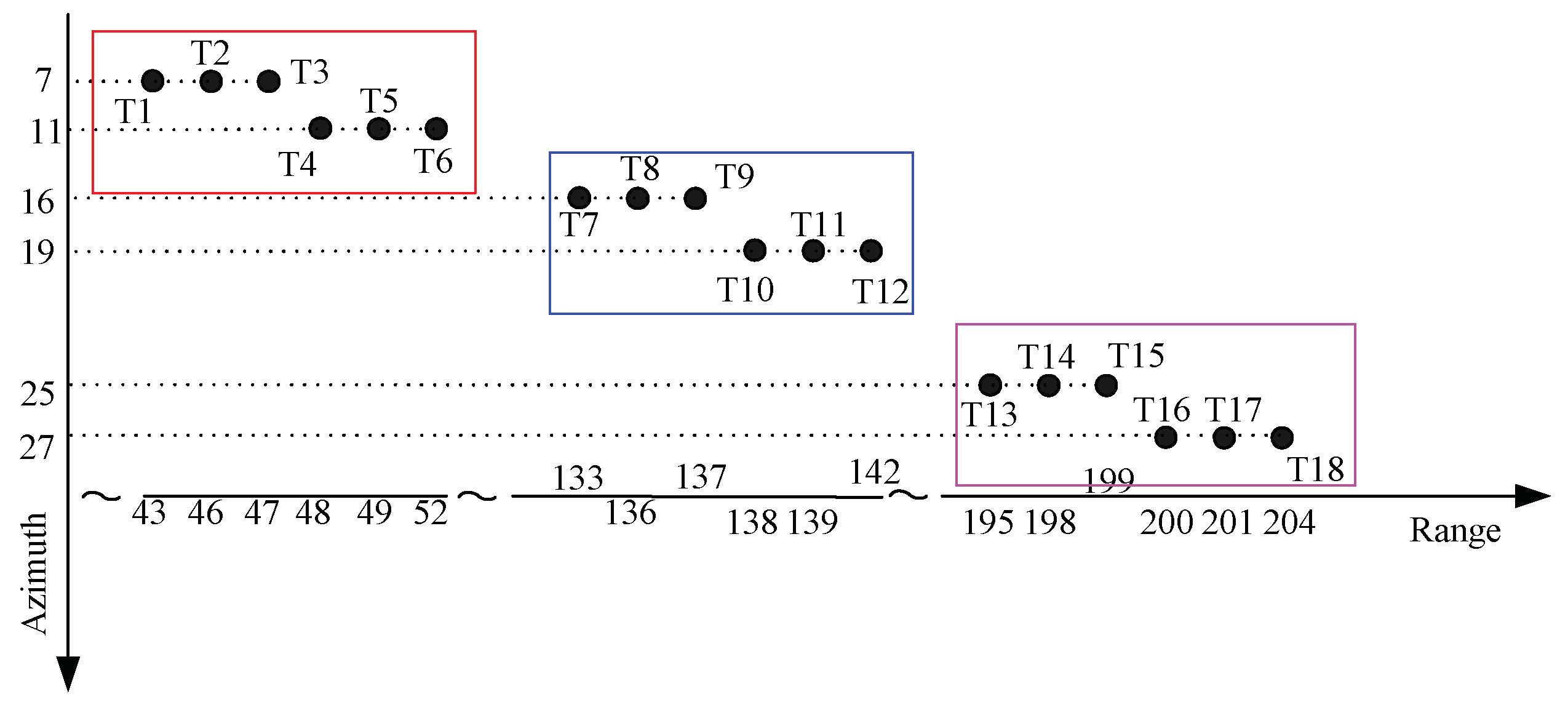

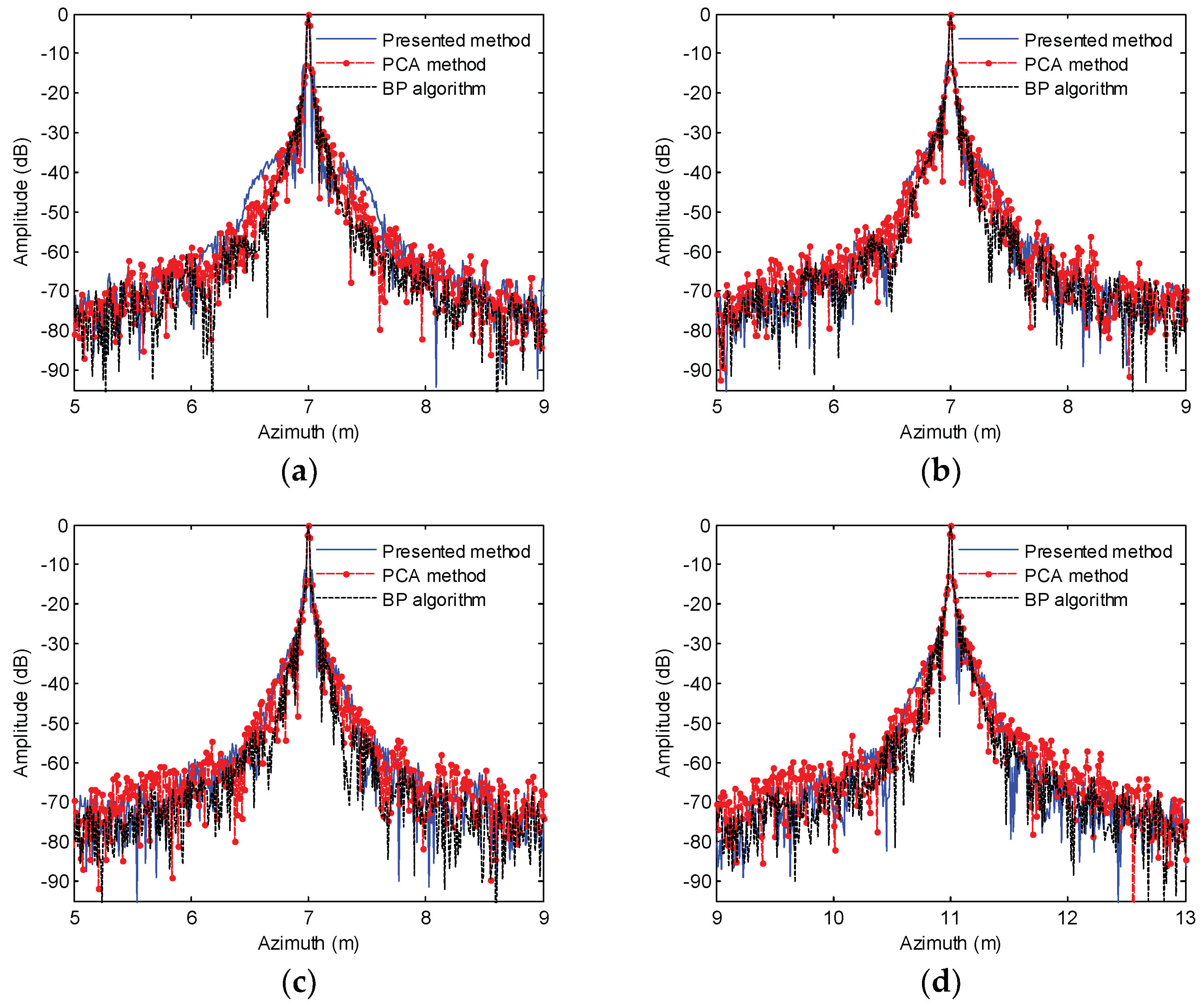

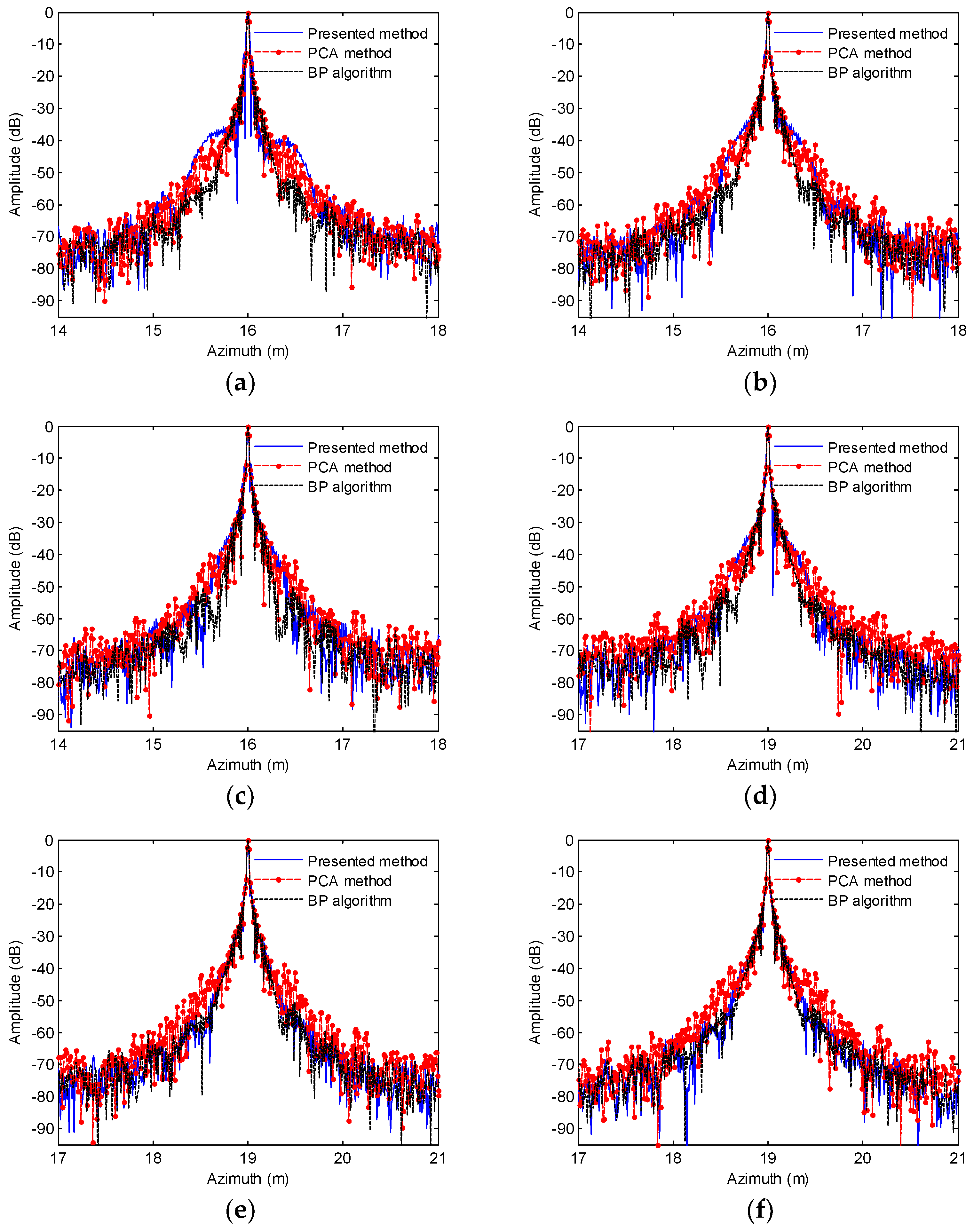

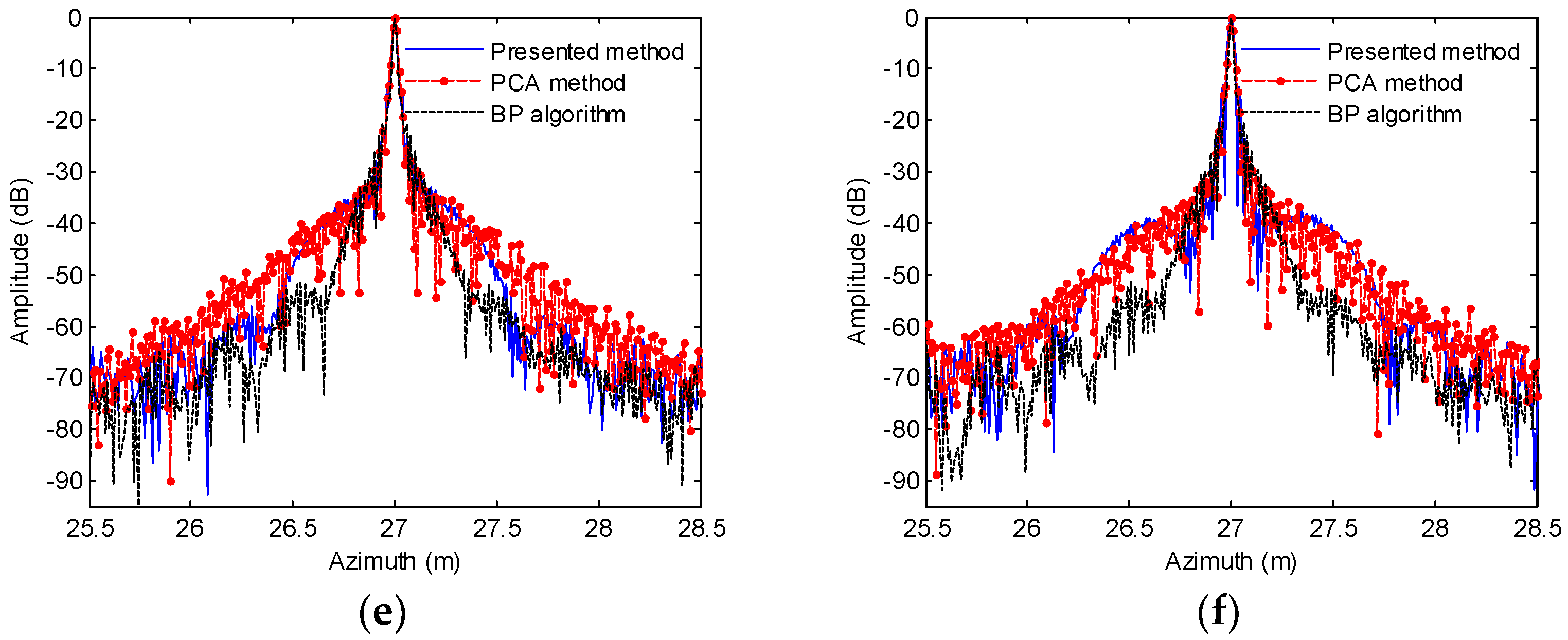

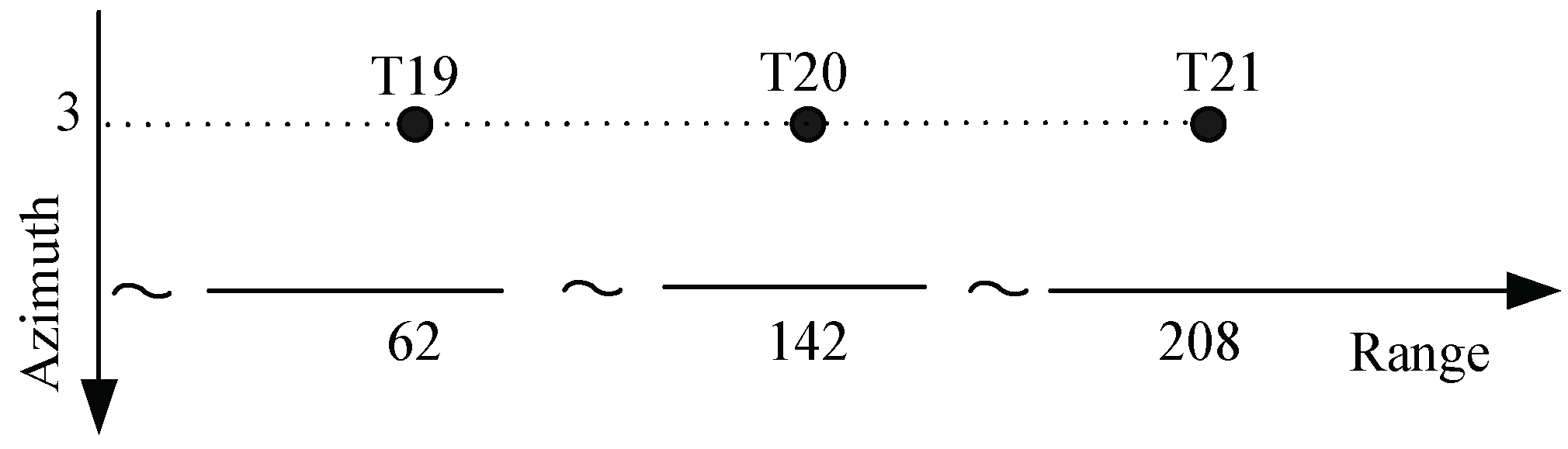

6.1. Simulation Results

6.1.1. Processing Results of Presented Method

6.1.2. Influence of Sub-Block Width on Imagery

6.1.3. Imaging Performance at Scenario Edge

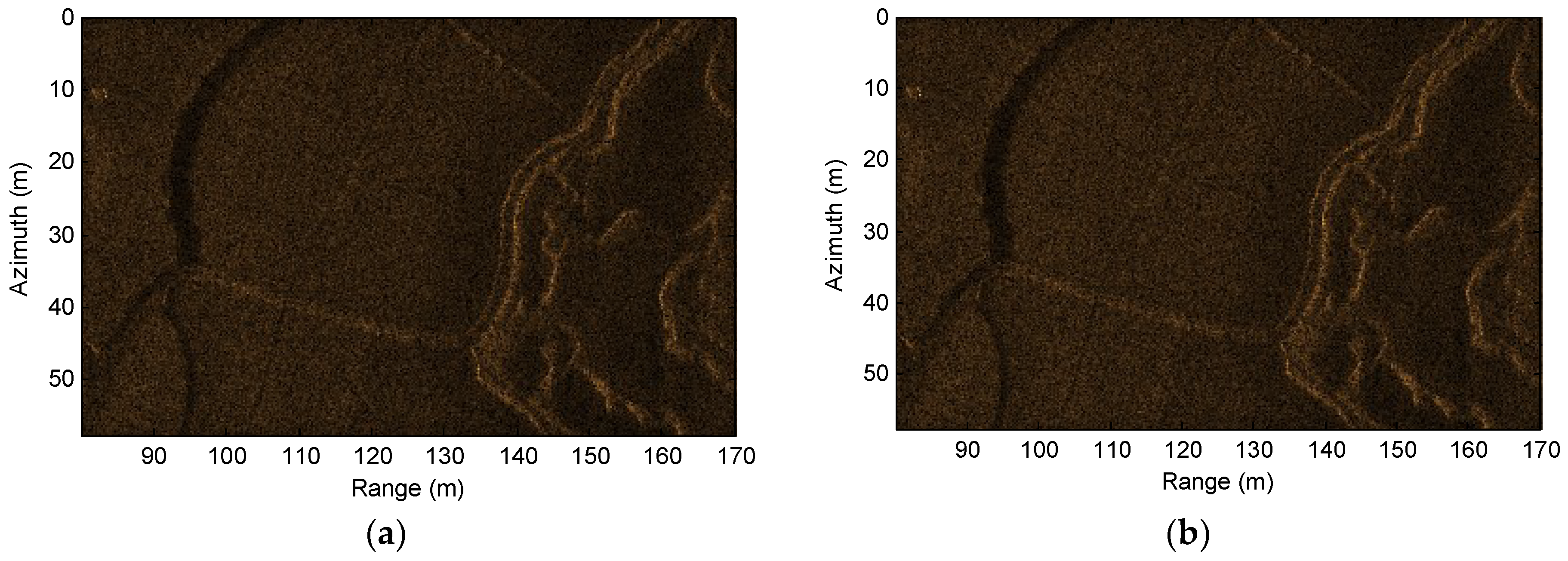

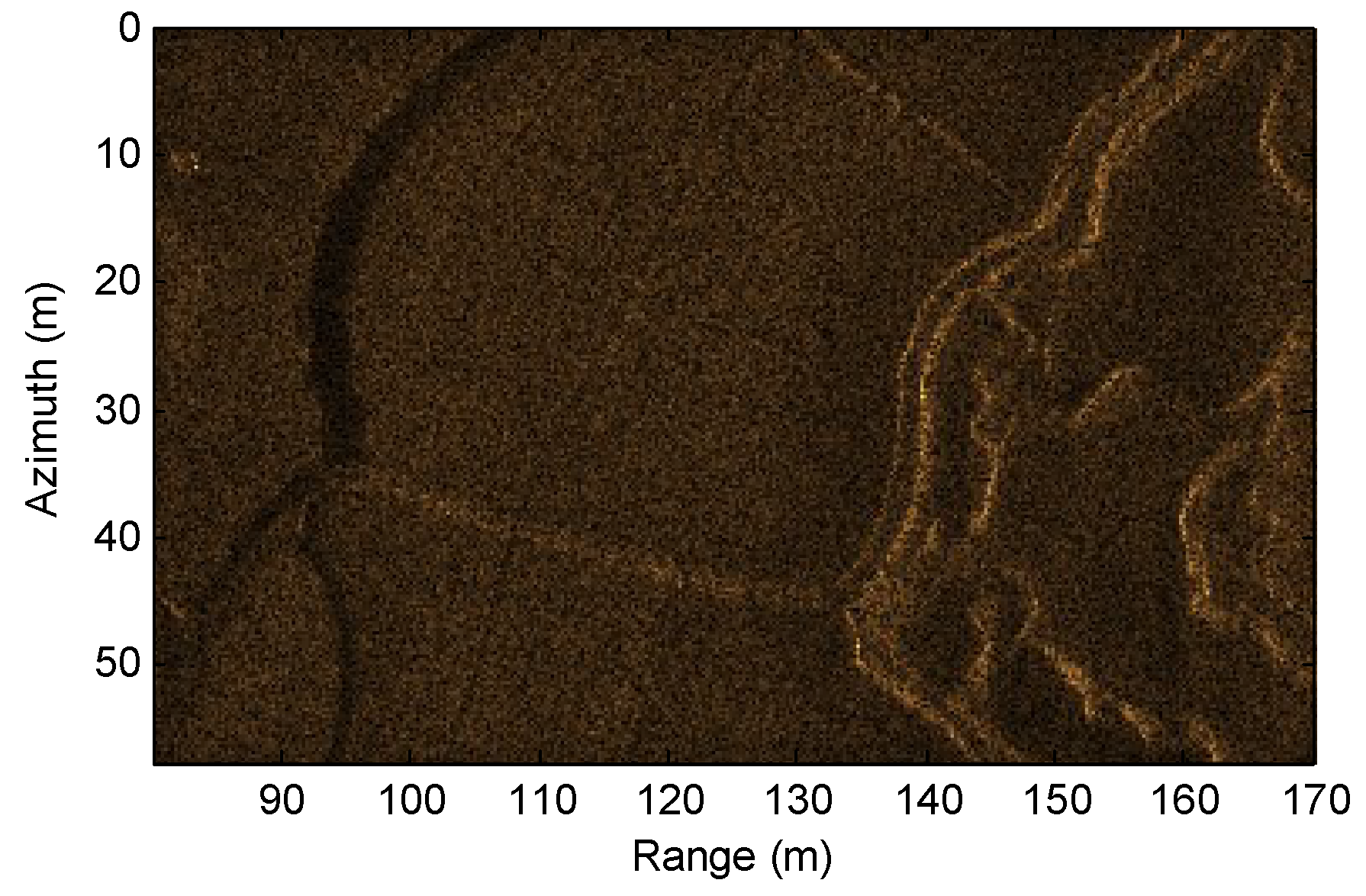

6.2. Real Data Processing

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Value | Unit |
|---|---|---|
| Center frequency | 150 | kHz |
| Bandwidth | 20 | kHz |
| Platform velocity | 2 | m/s |
| Receiver length in azimuth | 0.02 | m |
| Length of receiver array | 0.6 | m |
| Transmitter length in azimuth | 0.04 | m |
| Pulse repetition interval | 0.3 | s |
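As a rough illustration of what these parameters imply, the sketch below derives a few standard quantities from the table. The sound speed of 1500 m/s is an assumption (the table does not list it), as is the premise that the receiver array is filled with contiguous elements. All variable names are illustrative only.

```python
# Values taken from the parameter table above.
center_frequency_hz = 150e3
bandwidth_hz = 20e3
platform_velocity_mps = 2.0
receiver_length_m = 0.02
receiver_array_length_m = 0.6
pulse_repetition_interval_s = 0.3

# ASSUMPTION: nominal sound speed in water; not given in the table.
sound_speed_mps = 1500.0

# Wavelength at the center frequency.
wavelength_m = sound_speed_mps / center_frequency_hz

# ASSUMPTION: the array consists of contiguous receivers of equal length.
num_receivers = round(receiver_array_length_m / receiver_length_m)

# Distance the platform travels between two successive pings.
advance_per_ping_m = platform_velocity_mps * pulse_repetition_interval_s

# Slant-range resolution of a pulse-compressed signal with this bandwidth.
range_resolution_m = sound_speed_mps / (2.0 * bandwidth_hz)

# Largest two-way range whose echo returns before the next ping.
max_unambiguous_range_m = sound_speed_mps * pulse_repetition_interval_s / 2.0

print(f"wavelength:              {wavelength_m:.4f} m")
print(f"number of receivers:     {num_receivers}")
print(f"advance per ping:        {advance_per_ping_m:.2f} m")
print(f"range resolution:        {range_resolution_m:.4f} m")
print(f"max unambiguous range:   {max_unambiguous_range_m:.1f} m")
```

A different sound speed changes the wavelength, range resolution, and unambiguous range proportionally; the receiver count and the advance per ping do not depend on it.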

| Target | Presented Method PSLR/dB | Presented Method ISLR/dB | PCA Method PSLR/dB | PCA Method ISLR/dB | BP Method PSLR/dB | BP Method ISLR/dB |
|---|---|---|---|---|---|---|
| T1 | −11.61 | −6.44 | −14.38 | −9.57 | −14.83 | −10.34 |
| T2 | −13.01 | −8.36 | −14.45 | −9.49 | −14.88 | −10.25 |
| T3 | −10.82 | −8.21 | −14.54 | −9.98 | −14.90 | −10.82 |
| T4 | −13.41 | −9.39 | −14.63 | −9.68 | −14.77 | −10.44 |
| T5 | −14.61 | −9.87 | −14.44 | −9.59 | −14.81 | −10.15 |
| T6 | −14.69 | −10.12 | −14.26 | −9.60 | −14.69 | −10.06 |

| Target | Presented Method PSLR/dB | Presented Method ISLR/dB | PCA Method PSLR/dB | PCA Method ISLR/dB | BP Method PSLR/dB | BP Method ISLR/dB |
|---|---|---|---|---|---|---|
| T7 | −11.61 | −6.44 | −13.92 | −9.52 | −14.81 | −10.23 |
| T8 | −13.26 | −8.67 | −13.87 | −9.37 | −14.92 | −10.25 |
| T9 | −10.36 | −7.87 | −13.86 | −9.32 | −14.77 | −10.09 |
| T10 | −13.86 | −9.38 | −14.01 | −9.71 | −14.78 | −10.28 |
| T11 | −14.02 | −9.53 | −13.73 | −9.22 | −14.87 | −10.21 |
| T12 | −14.13 | −10.13 | −13.69 | −9.17 | −14.7 | −10.12 |

| Target | Presented Method PSLR/dB | Presented Method ISLR/dB | PCA Method PSLR/dB | PCA Method ISLR/dB | BP Method PSLR/dB | BP Method ISLR/dB |
|---|---|---|---|---|---|---|
| T13 | −14.49 | −10.22 | −13.23 | −9.93 | −14.76 | −10.26 |
| T14 | −14.17 | −9.97 | −13.13 | −9.8 | −14.80 | −10.22 |
| T15 | −13.23 | −9.60 | −12.87 | −9.47 | −14.72 | −10.35 |
| T16 | −12.45 | −7.83 | −12.99 | −9.39 | −14.83 | −10.18 |
| T17 | −11.96 | −8.80 | −13.15 | −9.79 | −14.86 | −10.41 |
| T18 | −11.28 | −6.51 | −13.55 | −9.67 | −14.78 | −10.21 |
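The tables above report the peak sidelobe ratio (PSLR) and integrated sidelobe ratio (ISLR) per target. How the authors measured them is not stated here, so the following is only a minimal sketch of one common definition: the main lobe is taken null to null, PSLR is the highest sidelobe relative to the peak, and ISLR is the sidelobe energy relative to the main-lobe energy. The function name `pslr_islr_db` and the ideal sinc test response are illustrative assumptions, not the paper's procedure.

```python
import math


def pslr_islr_db(power):
    """Return (PSLR, ISLR) in dB for a sampled 1D power response.

    ASSUMPTION: the main lobe extends from the peak to the first local
    minimum on each side (null-to-null); everything else is sidelobe.
    """
    peak = max(range(len(power)), key=power.__getitem__)
    # Walk outward from the peak while the response keeps decreasing.
    left = peak
    while left > 0 and power[left - 1] < power[left]:
        left -= 1
    right = peak
    while right < len(power) - 1 and power[right + 1] < power[right]:
        right += 1
    main_lobe = power[left:right + 1]
    side_lobes = power[:left] + power[right + 1:]
    pslr = 10.0 * math.log10(max(side_lobes) / power[peak])
    islr = 10.0 * math.log10(sum(side_lobes) / sum(main_lobe))
    return pslr, islr


def sinc_power(x):
    """Power of an ideal, unweighted sinc impulse response."""
    if x == 0.0:
        return 1.0
    return (math.sin(math.pi * x) / (math.pi * x)) ** 2


# Sample the response over ten resolution cells on each side of the peak.
samples = [sinc_power((i - 1000) / 100.0) for i in range(2001)]
pslr_db, islr_db = pslr_islr_db(samples)
print(f"PSLR = {pslr_db:.2f} dB, ISLR = {islr_db:.2f} dB")
```

For an unweighted sinc the PSLR comes out near −13.3 dB. The ISLR depends on how far the sidelobe integration extends, so values are only comparable when computed over the same window.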

| | Presented Method (Four Sub-Blocks) | Presented Method (Eight Sub-Blocks) | BP Algorithm |
|---|---|---|---|
| Processing time/s | 608 | 1149 | 35234 |
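To put the processing times in relative terms, the short sketch below computes the speed-up of the presented method over the BP algorithm directly from the tabulated values. The dictionary keys are illustrative labels, not names from the paper.

```python
# Processing times in seconds, copied from the table above.
processing_time_s = {
    "presented, four sub-blocks": 608,
    "presented, eight sub-blocks": 1149,
    "BP": 35234,
}

# Speed-up factor of each presented-method configuration relative to BP.
speedup_vs_bp = {
    name: processing_time_s["BP"] / seconds
    for name, seconds in processing_time_s.items()
    if name != "BP"
}

for name, factor in speedup_vs_bp.items():
    print(f"{name}: about {factor:.1f}x faster than BP")
```

With these figures the presented method is roughly 58 times faster than BP with four sub-blocks and roughly 31 times faster with eight.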

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Tan, C.; Ying, W. An Imaging Algorithm for Multireceiver Synthetic Aperture Sonar. Remote Sens. 2019, 11, 672. https://doi.org/10.3390/rs11060672
