1. Introduction

Taiga and Boreal forests play an important role in the global climate through the carbon, water and energy balance (Bonan [

1]). In Russia, fir forests provide multiple provisioning, regulating and cultural ecosystem services and are considered a national asset of the country. Despite forests are a renewable resource, in vast areas of the world, forest degradation is too high and not compensated by regeneration (Hansen et al. [

2]). One of the main problems of Russian forest degradation is the spatial spread acceleration of the four-eyed fir bark beetle (

Polygraphus proximus Blandford) invasion that causes fast death of fir trees (

Abies sibirica Ledeb) in various forest ecosystems. The geographic origin of

P. proximus propagation comes from its natural habitat in Japan, the Korean Peninsula, Eastern China and the Russian Far East (Khabarovsk and Primorskii krai, Sakhalin and Kuril Islands) (Kuznetsov et al. [

3]. In Siberia, the first beetle occurrence was registered in 2008 (Kerchev [

4]) and within the last 10 years, massive outbreaks of

P. proximus occurred on large forested areas in the south-eastern part of the West Siberian Plain: Tomsk, Kemerovo and Novosibirsk oblasts, Altai region, in the Altai Republic as well as in Krasnoyarsk region (Pashenova et al. and Baranchikov et al. [

5,

6]), where the problem became catastrophic and got out of control. The invasion of this type of beetle can lead not only to degradation of fir forests but also to create a threat to fir existence as a forest type species, with the subsequent broad implications for the regional and global climate (Helbig et al., Ma [

7,

8]).

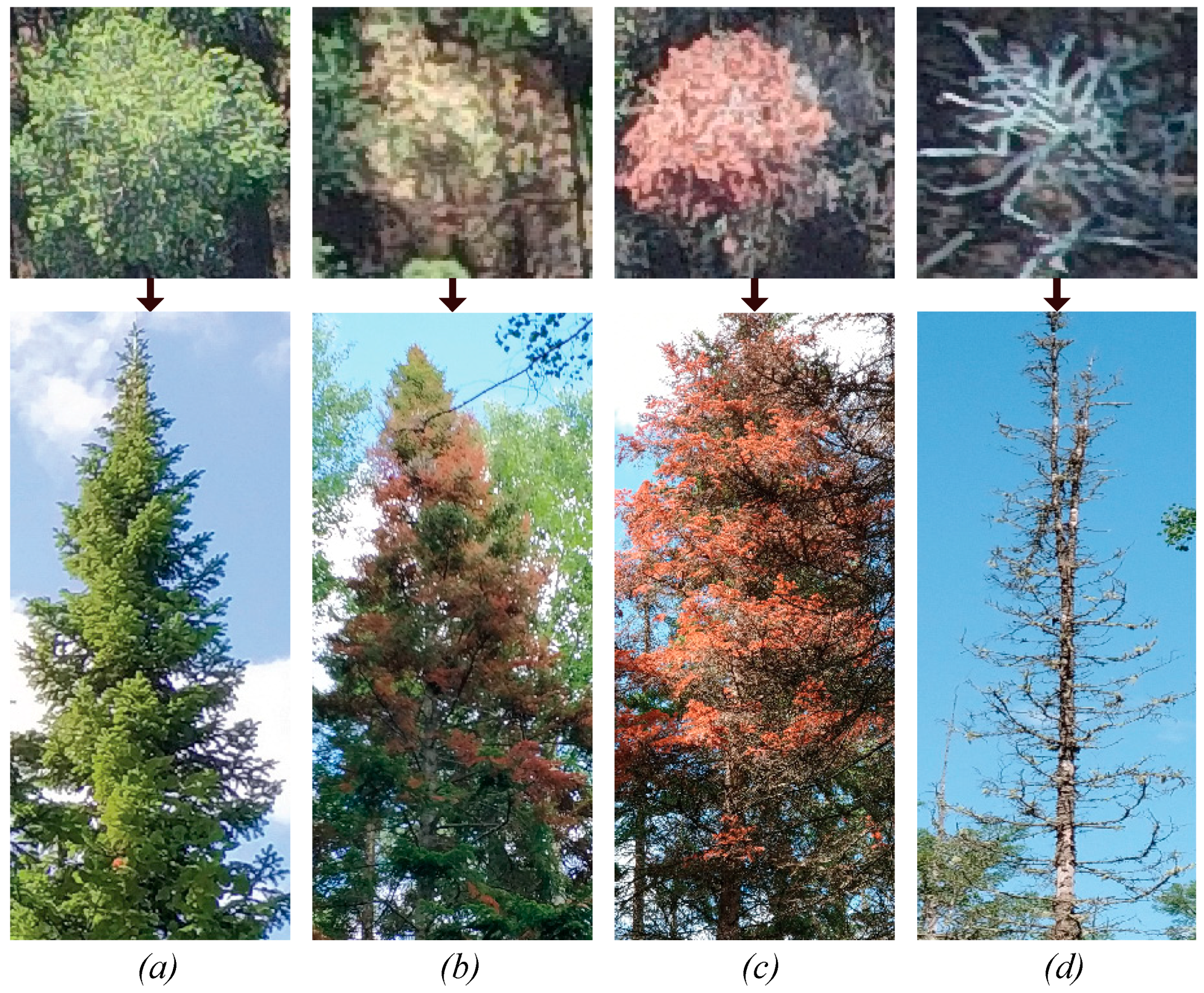

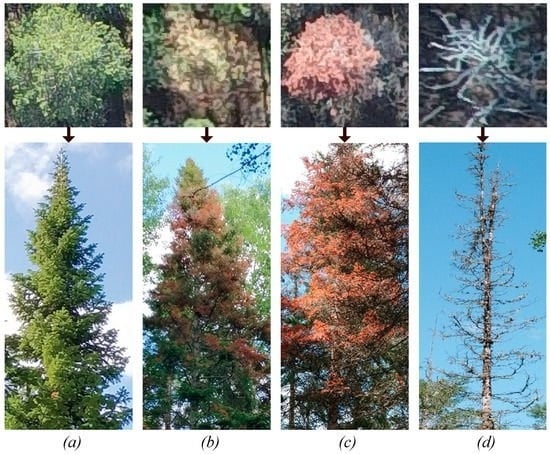

Death of fir trees due to P. proximus outbreaks occurs after several stages. The beetle usually attacks trunks of weakened trees, fallen deadwood and newly harvested wood. In case of massive outbreaks, P. proximus also attacks healthy trees, which can resist against the beetle attack during 2–3 years. Penetration of P. proximus under tree bark results in pervasion and reproduction of ophiostomatoid fungi (different species) and their phytopathogenic activity leads to the gradual weakening of fir trees. As the beetle further colonizes the tree, it begins to dry out. Increase of beetles quantity in a local forest stand leads to a massive death of fir trees. Usually, fir trees die within 2–4 years from the moment of first beetle attack, so the task of the beetle invasion monitoring is really important and poses a challenge to develop a remote survey and early-warning system for precise estimation of forest damage states.

Advances in Earth remote sensing techniques, particularly very high resolution satellite and airborne imagery, open the possibility to develop tools for regional mapping of consequences of forest pest activities. Nowadays, application of unmanned aerial vehicles (UAV) tends to be more popular in local scale forestry research because of better spatial resolution (Lehmann et al. [

9]). Also, UAV data provides the basis for development of new methods in data analysis which can be applied later at larger scales (satellite data). Recently, application of deep learning, in particular convolutional neural networks (CNN), in processing of colour (RGB) images of the Earth surface from various data sources have provided high accuracy results in recognition of different plant species [

10,

11,

12,

13,

14]. However, there are still no published studies on the application of CNN methods to very high resolution imagery for detection of forest health decline caused by pest invasions. The goal of this study was to test the possibilities of neural networks as a new approach to detect bark beetle outbreaks in fir forests. In particular, our aim was to develop and test a CNN method to automatically detect individual fir trees disturbed by

P. proximus in very high resolution imagery of mixed forests.

The main contributions of this paper can be listed as follows:

As far as we know, this is the first work in addressing the problem of forest damage detection caused by the P. proximus beetle in very high resolution images from UAVs with deep learning.

We built a new labelled dataset of orthoimages with four categories of damage stages in fir trees.

We designed a new CNN architecture to accurately classify trees in UAV images into different damage categories of trees and compared it to the most powerful CNN models in the stat.

We developed the detection model as follows. First, a new detection method selects the candidate regions that contain trees in UAV images. Then, these candidate regions are processed by the multiclass model to finally predict the category of tree damage.

We provide a complete description of the used methodology and the source code so that it can be replicated by other researchers to detect and classify tree damage by bark beetle in other images. Our source code can be found at

https://github.com/ansafo/OurCNN.

The rest of the paper is organized as follows. An introduction to Deep learning and CNNs is presented in

Section 2, which includes a description of the CNN models and of the auxiliary techniques of transfer learning and data augmentation. The related works on tree classification are provided in

Section 3. The study area and data acquisition are presented in

Section 4. The proposed methodology is presented in

Section 5, which includes a definition of fir trees damage categories, pre-processing dataset and data augmentation techniques of sample patches for training CNN models, description of the development of the classification model algorithm and creation of a data subset for independent model verification.

Section 6 presents the obtained results and data analysis. Discussion and conclusions are provided in

Section 7.

2. Introduction to Deep learning and CNNs

In this Section, we make a brief introduction to deep learning and particularly to CNN models (

Section 2.1), including the two main approaches used to improve the learning of CNN: that is, transfer learning and data augmentation (

Section 2.2).

Deep Neural Networks (DNNs) are a subset of machine learning algorithms that learn from a first set of data to make predictions on new data. Unlike most machine learning algorithms, DNNs are capable of extracting the existent patterns from data automatically, without the need of external hand-crafted features. Their computation is organized into layers; each layer is composed of a number of artificial neurons. To introduce non-linearity in the network, non-linear functions are introduced at the neuron level, such as the Rectified Linear Unit (ReLU) function. Deep networks are built by stacking a very large number of layers. They provide a powerful framework for supervised learning when trained on a large number of labelled samples (Goodfellow et al. [

15]). A DNN includes an input layer, an output layer and hidden layers between them where the calculations take place.

One of the most powerful types of DNNs are Convolutional Neural Networks (CNNs), which have shown impressive results in image classification (given an input image, the CNN produces a label that indicates the visual content of that image) and object detection in images (given an input image the CNN produces a label together with a bounding box that indicates the region where the object-class is located in the image).

There are three main types of hidden layers in a CNN:

A convolution layer, the main building block of a CNN. It is based on a fundamental operation in image processing called convolution, which consists of filtering a 2D input image with a small 2D-matrix called filter or kernel.

A pooling layer, which reduces the input matrix in general by half. Conceptually, this operation is applied to increase the abstraction of the extracted features and it usually follows the convolution layer.

A fully connected layer, which is used as a classifier of the previously calculated high level features to derive scores for each target class.

Deep CNNs are built by alternating several convolutional layers with pooling layers. At the end of the sequence, the obtained convolutions and pooling layers are connected to one or more fully connected layers. Softmax function is used to convert the scores of the output of the final fully connected layer into a set of probabilities between 0 and 1, which represent the final output layer.

Before starting the training process, the weights of the CNN are initialized with random values. The CNN is then trained iteratively until convergence during a number of epochs. The loss function is used to measure how good the training process is by quantifying the distance between the prediction and the expected value. To find the best weights that correctly assign the input to the correct answer, the training process is reformulated into an optimization problem that aims to minimize the loss function. This minimization can be achieved using several optimization algorithms depending on the problem. One example is the ADAptive Moment estimation (ADAM) optimizer that computes the best weights.

2.1. CNNs Models

In

Appendix B, we provide a brief description of the CNNs that are compared against the CNN developed in this work, that is, VGG, ResNet, Inception-V3, InceptionResNet-V2, Xception and DensNet.

2.2. Transfer Learning and Data Augmentation

CNNs need a large volume of data to achieve good results, however, this is not always possible since building new labelled dataset is costly and time consuming. To overcome this limitation in practice, CNNs are seldom trained from scratch. Instead, their weights are initialized by the pre-trained weights on a large dataset such as, the massive ImageNet. This approach is called transfer learning. In addition, to address a new problem, not all the weights are re-trained but only the weights of the last layers, for example, the fully connected layers. This process is called fine-tuning.

Data-augmentation technique consists of creating new training examples by applying geometrical transformations such as rotation and translation to the original samples. Its objective is artificially increasing the volume of the dataset. In fact, it was demonstrated in several works (Tabik et al. [

16]). that increasing the volume of the dataset improves the learning of CNNs and reduces overfitting. In this paper we used the transformations explained in

Table 1.

3. Classification of Trees in High Resolution Imagery and Related Works

In this section, we consider related works devoted to the problems of classification and detection of objects (from land-covers to organisms) on Earth remote sensing (ERS) data. Scientists from around the world are trying to solve this problem by using various ERS information with different methods and algorithms. In general, few works have addressed the detection of damaged trees using satellite or UAV images, in general they have only considered few health classes (e.g., healthy and dead trees) and did not apply CNNs but classical image classification methods.

For example, Deli et al. [

17] developed a new CNN-based method for classifying four land-cover classes (crops, houses, soil and forests) in land surface images. They trained the algorithm with 100 images per class. Längkvist et al. [

18] proposed the classification and segmentation of satellite orthoimagery (including five classes: vegetation, ground, road, parking, railroad, building and water) using CNNs in a small city for a full, fast and accurate per-pixel classification. They selected the parameters and analysed their influence on the neural network model architecture. They also found a better performance of the CNN model compared to object-oriented methods of classification, reaching a maximum CNN classification accuracy of 94.49%.

Few papers have used neural network classifiers to recognize plant species and plant growth using images from a digital camera and a cell phone camera. Dyrmann et al. [

19] created a CNN capable of recognizing 22 plant species on colour images with an accuracy of 86.2%. For this, the authors used different data sets, depending on the lighting, image resolution and soil type. Razavi and Yalcin [

20] proposed a CNN architecture to classify types of plants growing in agro stations. To evaluate the effectiveness of their approach, the results of the created CNN model were compared to Support Vector Machine (SVM; RBF kernel and polynomial kernel) classifiers. The accuracy of the CNN classification was greater than the SVM, reaching 97.47%.

Few studies have also used CNN on satellite imagery. Li et al. used deep learning models [

10] to detect oil palm trees in QiuckBird images (0.6 m/pix). Guirado et al. in Reference [

11] detected

Ziziphus lotus shrubs on RGB satellite images from Google Earth using TensorFlow and got better accuracies than using object-based image analysis (OBIA). Baeta et al. [

12] detected coffee crops in high-resolution SPOT images (2.5 m/pix). They used a binary classification model and presented a comparison of the accuracy of various CNN and OBIA methods. In addition, the authors used a large number of methods of preparation and preliminary processing of the image, which in the final result leads to a high accuracy of more than 95%.

Very few woks have used CNNs to detect plants in UAV images. Noteworthy is the work by Ferreira et al. [

21], where weed was detected in soybean cultures using CNN based on the CaffeNet architecture. The authors used a set of data acquired with an UAV in manual mode at a height of 4 m above the Earth level using an RGB camera. The accuracy of the classification was 99.5%. Though, according to the authors, the accuracy may be lower in operational circumstances due to the heterogeneity in types of soils and weeds, since testing was performed on an experimental field. In 2018, Onishi and Ise [

22] classified 7 types of trees (deciduous broad-leaved tree, deciduous coniferous tree, evergreen broad-leaved tree,

Chamaecyparis obtuse, pinus, others) on UAV images using supervised deep learning (GoogLeNet) with an accuracy of 89%.

A similar problem to ours, which is the detection of damaged trees, is revised in the next series of works, though they mainly used classical image classification methods. In 2009, Abdullah et al. [

23] identified healthy and infested trees in satellite imagery (Sentinel-2, WorldView-2 and 3 (up to 0.5 m/pix)) using partial least squares regression (PLSR). Heurich et al. [

24] presented semi-automatic detection of dead trees following a spruce bark beetle outbreak in CIR aerial photographs using OBIA with accuracy up to 91%. In this work, authors used several classes, as deadwood areas, vital vegetation, non-woodland and assistant classes (shadows among dead trees and shadows among vital trees). In 2013, Ortiz et al. [

25] detected a bark beetle attack with TerraSAR-X and RapidEye Data (up to 1.18 m/pix) using generalized linear models (GLM), maximum entropy (ME) and random forest (RF), with accuracies up to 74%. Meddens et al. [

26] used multi-temporal disturbance detection methods to detect bark beetle-caused tree mortality and to map the subsequent forest disturbance on Landsat images with different classes (green trees, red damage stage, grey damage stage, herbaceous vegetation, bare soil, shadows, water). Kussul et al. [

13] classified tree species and different levels of ash tree (

Fraxinus sp.) mortality in WorldView-2 images with resolution of 1.85 m/pix using classical methods, in particular, the linear discriminant analysis (LDA), principal component analysis (PCA), stepwise regression and OBIA methods. Species diversity and the magnitude of ash loss were assessed. The authors needed to use a wide set of remote sensing indices to obtain good accuracies. The overall accuracy varied between 83% for the seven tree species and 77% for the four different levels of ash damage. In 2015, Näsi et al. [

27] also identified damaged trees (with three classes: healthy, infected and dead) using UAV images and OBIA methods. In 2017, Dash et al. [

28] used a non-parametric approach based on classification trees on UAV images to monitor forest health for disease outbreak in mature

Pinus radiata trees. In 2018, Näsi et al. [

29] published a paper that most closely relates to ours. They identified of bark beetle infestation at the individual tree level (healthy, infested and dead) in urban forests on UAV images using support vector machine (SVM), with accuracies up to 93%.

As far as we know, our work is the first in addressing the detection of damaged fir trees caused by the bark beetle using CNN methods on images acquired by UAVs (resolution below 0.1 m/pix). We considered four tree-health categories, which is higher than in previous works and allows to better assess the stage of the infestation. We also showed that it is possible to achieve good results using a relatively small training set of data.

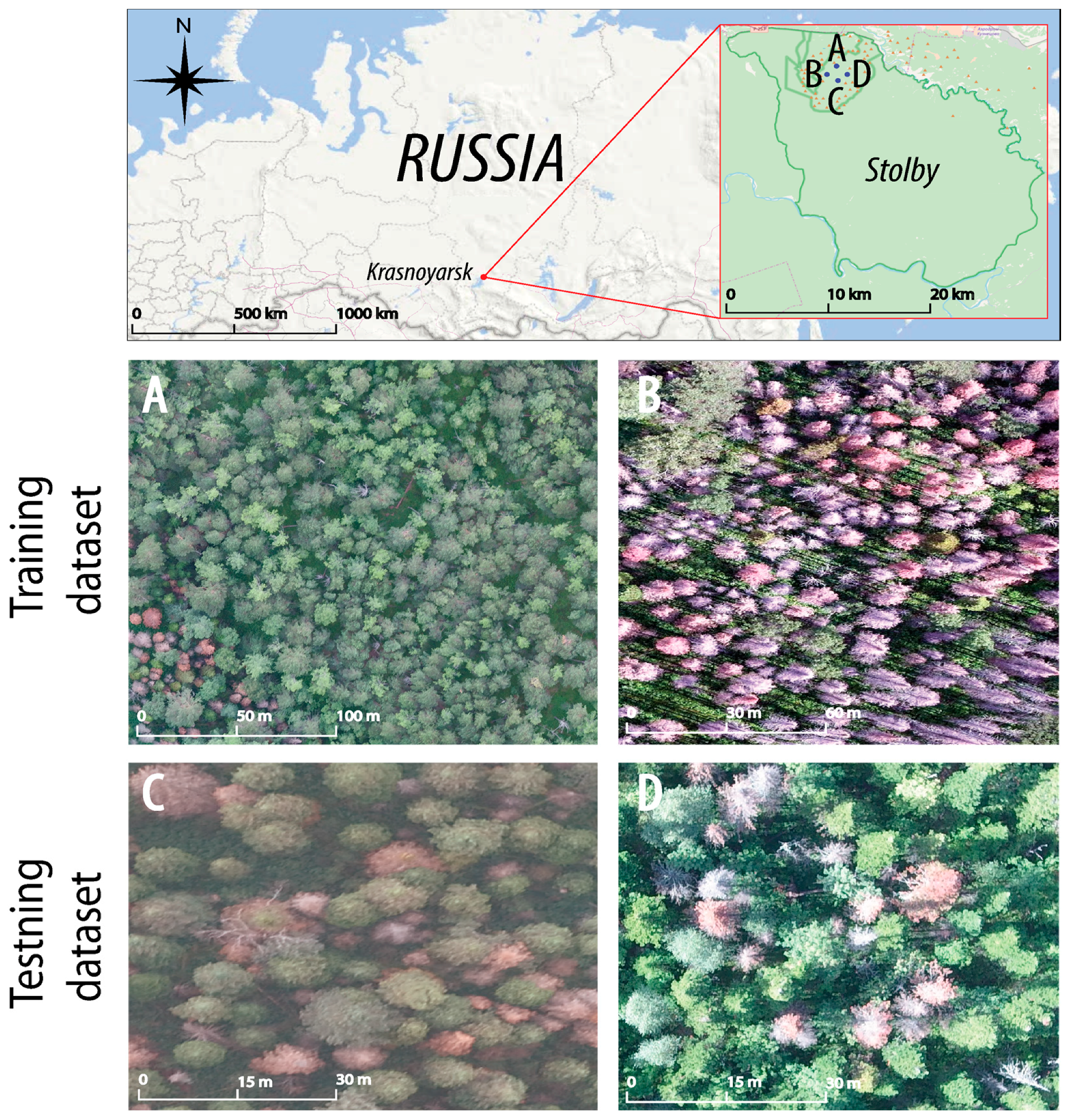

4. Study Area and Data Acquisition

The study area is located in the territory of the “Stolby” State Nature Reserve, near Krasnoyarsk city in Central Siberia of the Russian Federation. Most of the territory (80%) constitutes the mid-mountain belt (500–800 m a.s.l.), mainly covered by mixed forests composed by seven tree species in different proportions: conifers such as pines (

Pinus sylvestris,

Pinus sibirica), larch (

Larix sibirica), fir (

Abies sibirica), spruces (

Picea abies,

Picea obovata) and parvifoliate trees such as birch (

Betula pendula,

Betula pubescens) and aspen (

Populus tremula) (Ryabovol [

30]). Pine trees dominate among other species and occupy 41% of the total forested area, mainly in low-mountains (200–500 m a.s.l.). Distribution of Siberian fir expands on 25% of the Stolby forest.

First appearance of P. proximus in the Stolby nature reserve was registered in 2011 and nowadays the roughly estimated forest damage by the beetle is about 25–30% of the fir area.

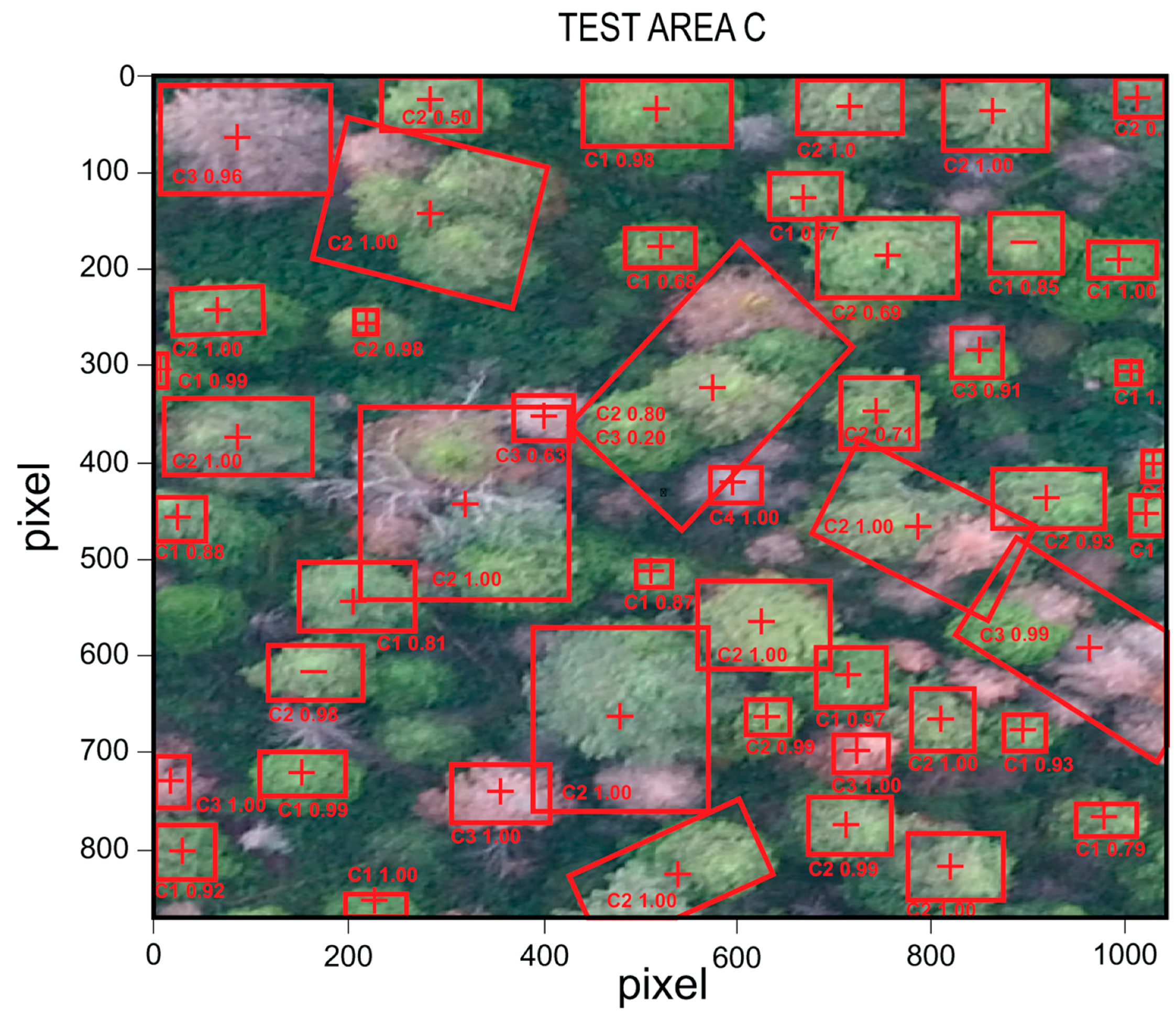

Four plots with different rates of the

P. proximus invasion were chosen for our study at Stolby (

Figure 1).

A set of RGB images with ultra-high spatial resolution (≈5–10 cm/pix) were obtained for the research sites during multiple flights of the DJI Phantom 3 Pro quadcopter (with standard built-in camera) in July 2016 (plot A and C) and of the Yuneec Typhoon H hexacopter (with CGO3+ camera) in May 2016 (plot B) and August 2018 (plot D). Imagery for research plots A and C were recorded in cloudy weather conditions at 670 m (A) and 700 m (C) altitudes (120–150 m elevation above ground), plot B and D was surveyed in sunny weather at 120 m height. Default camera settings (auto white balance, ISO 100) were applied in all aerial shots. Image composites (orthophotomosaics) were created for each plot from a set of overlapping geolocated images (300–400 partially overlapping images per plot) using the Agisoft Photoscan Professional v1.4.0 (64-bit) software (

www.agisoft.com, Agisoft LLC, St. Petersburg, Russia). This software provides photogrammetric and 3D-model reconstruction tools [

31]. The data processing workflow in Photoscan consisted of the following steps: alignment of each image location, generation of dense point cloud (3D-model), creation of digital elevation model (DEM) and eventually production of a georeferenced orthophotomosaic (orthophotos or orthoimages from now). The workflow was run several times with varying settings in order to achieve better stich of images over the complex mountain landscape of the study sites. As a result, we got four orthophotos for each one of the four sites (A, B, C and D for 2016 and 2018).

7. Conclusions

Damage to fir trees caused by the attacks of bark beetles is a major problem in the Taiga and Boreal Forests, particularly in Central Siberia of Russia. An efficient method for recognizing categories of tree damage using remote sensing imagery could substantially help as an early warning system that allows to eliminate fir trees colonized by the P. proximus beetle, to reduce its spread to new territories. The presented results are of great interest, both for scientific and practical purposes, since such work in the task of classifying categories of tree damage caused by beetle attacks to fir trees using CNN methods on UAV images has not yet been encountered. Recognizing the categories of damage to fir trees is a difficult task due to the fact that the forest canopy is very dense and diverse.

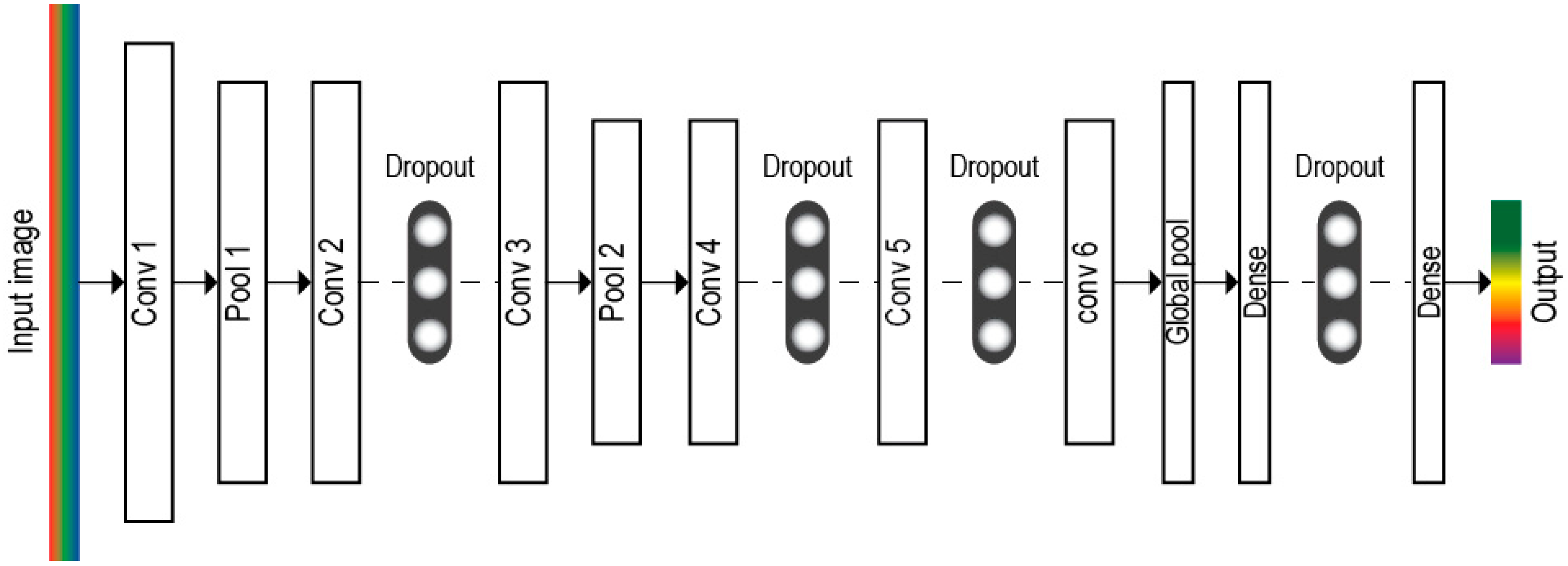

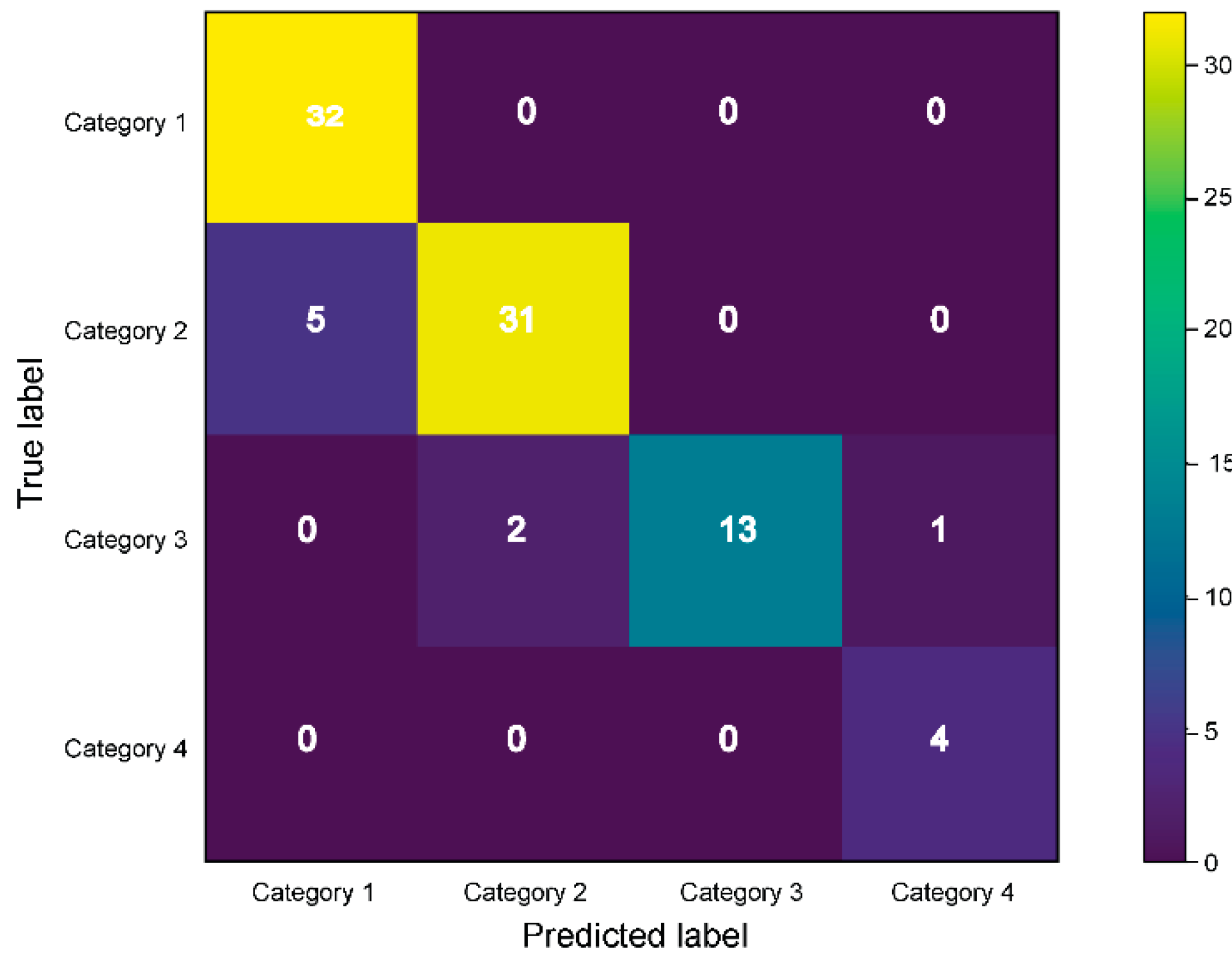

In this article, we developed a model based on CNNs to detect in RGB UAV images the damage to fir trees caused by bark beetles. Our network consists of six convolutional blocks. The network can recognize four damage categories in Siberian firs, from healthy to dead, with high prediction accuracy on images from different survey data. We have shown that our model provides better performance than the most powerful state-of-the-art CNN models, such as Xception, VGG, ResNet, Inception, InceptionResNet, DenseNet. We showed that data augmentation substantially increased the performance of our CNN model. Our model, trained with data augmentation, showed up to 98.77% accuracy for damage categories one, three and four. The second category may have the lowest recognition accuracy due to the fact that we had to merge three categories traditionally assessed in the field (weakened trees, heavily weakened trees and dying trees) into one category—trees colonized by the beetle, since it was not possible to visually observe any differences between these three categories in the UAV imagery.

Regarding alternative models, the VGG-16 model, on average, showed higher results, among other models considered in the paper. VGG-16 model recognized the first, second and fourth categories with an accuracy of 85.9%, 79.76 and 94.37%, respectively. Recognition of the third category with an accuracy higher than 88.89% is achieved by ResNet-50 model. The lowest accuracies were reached by InceptionResNet-V2.

In future works, we will focus on improving the segmentation of tree categories, introducing time series of data and trying to implement the three missing classes: weakened trees, heavily weakened trees and dying trees. It would also be interesting to explore if we can transfer our model to a new area with the same species and to other species, by only changing the last output layer in the network.