Improving Details of Building Façades in Open LiDAR Data Using Ground Images

Abstract

1. Introduction

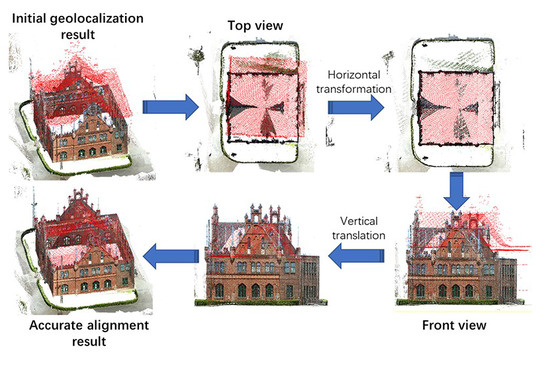

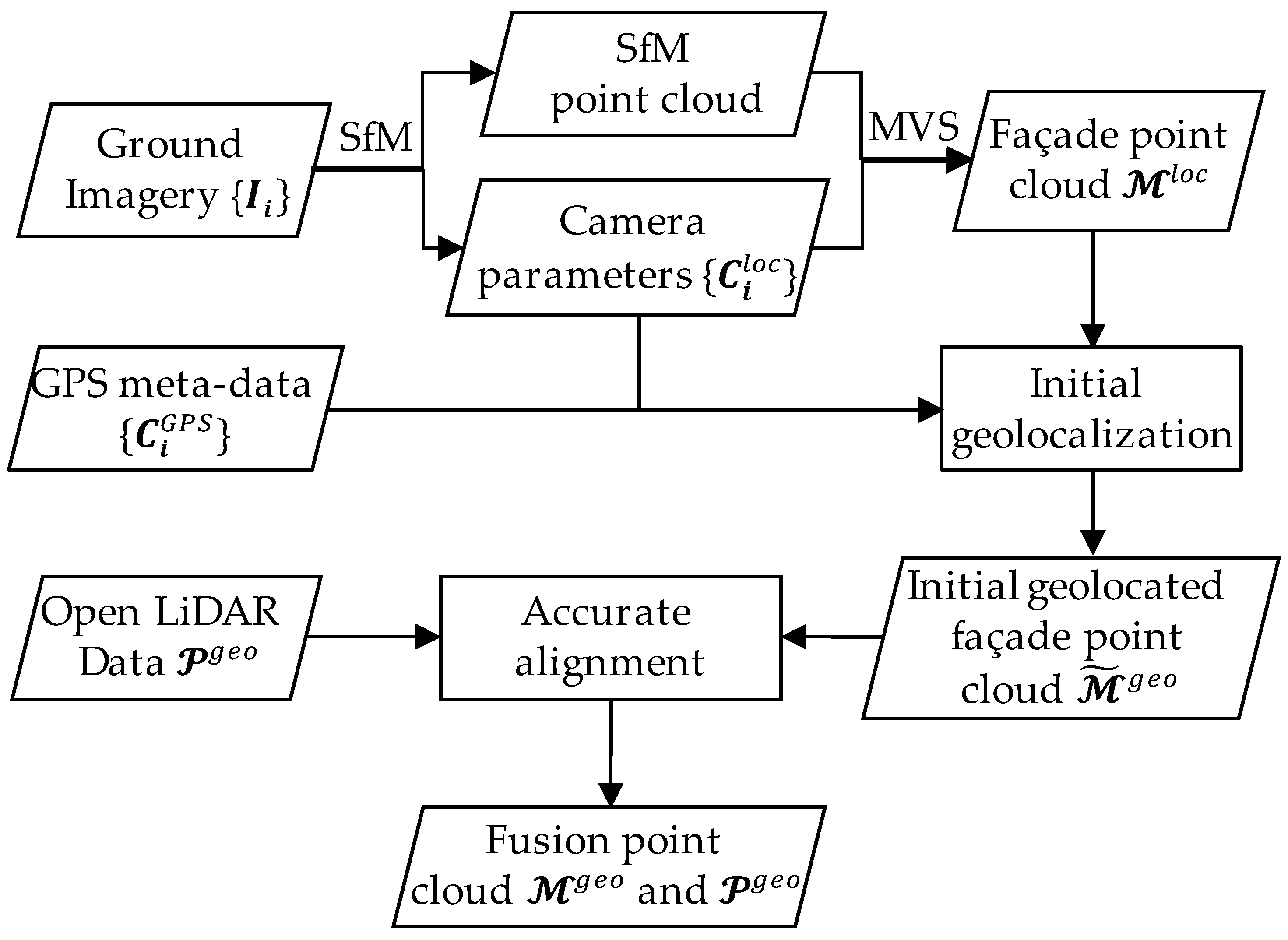

2. Methodology

2.1. Initial Geolocalization

2.1.1. Leveling the Façade Point Cloud

2.1.2. Geolocalization of the Leveled Façade Point Cloud Using GPS Metadata

2.2. Modified Coherent Point Drift with Normal Consistency (NC-CPD)

2.2.1. Coherent Point Drift Algorithm

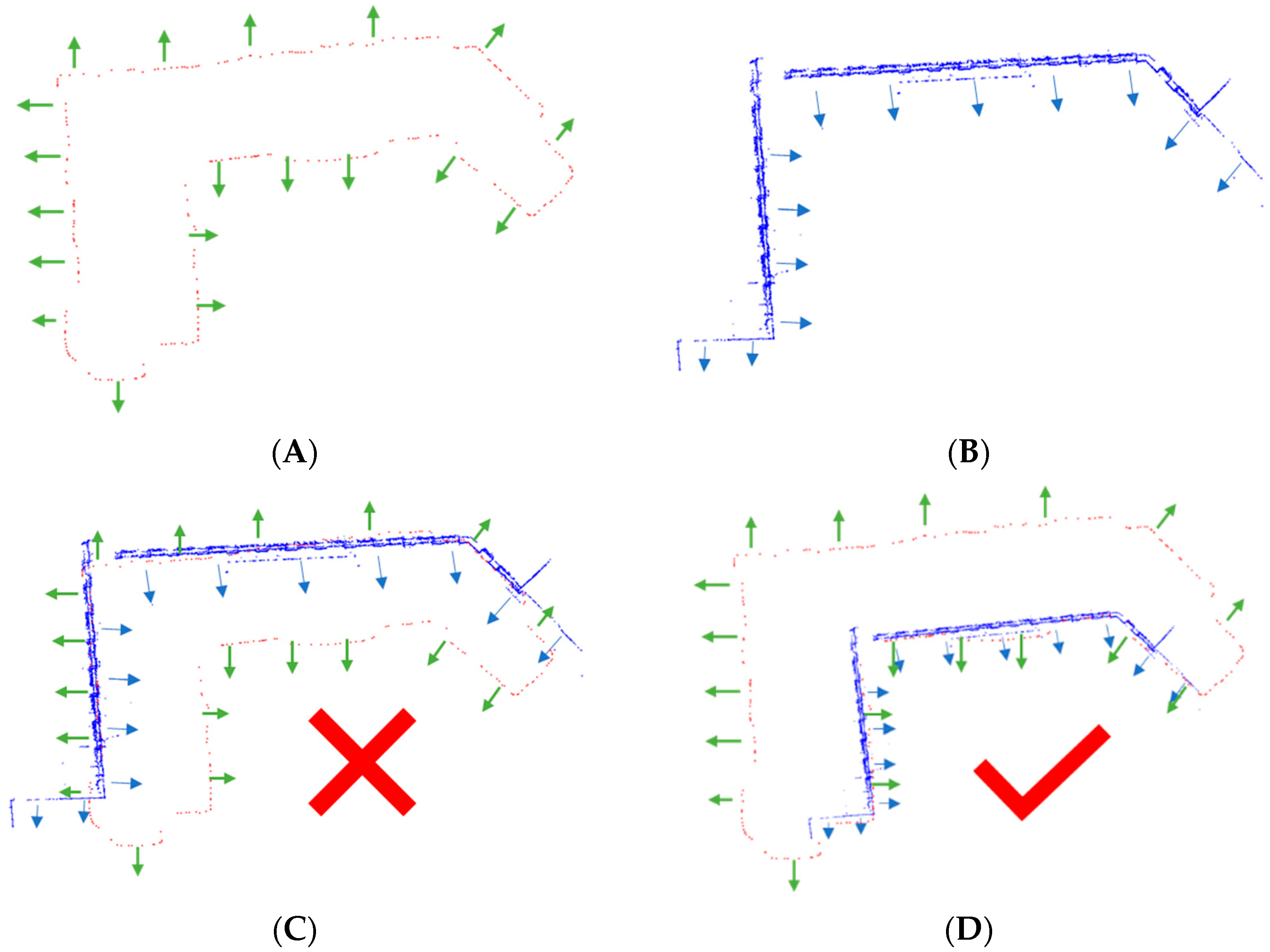

2.2.2. Coherent Point Drift with Normal Consistency

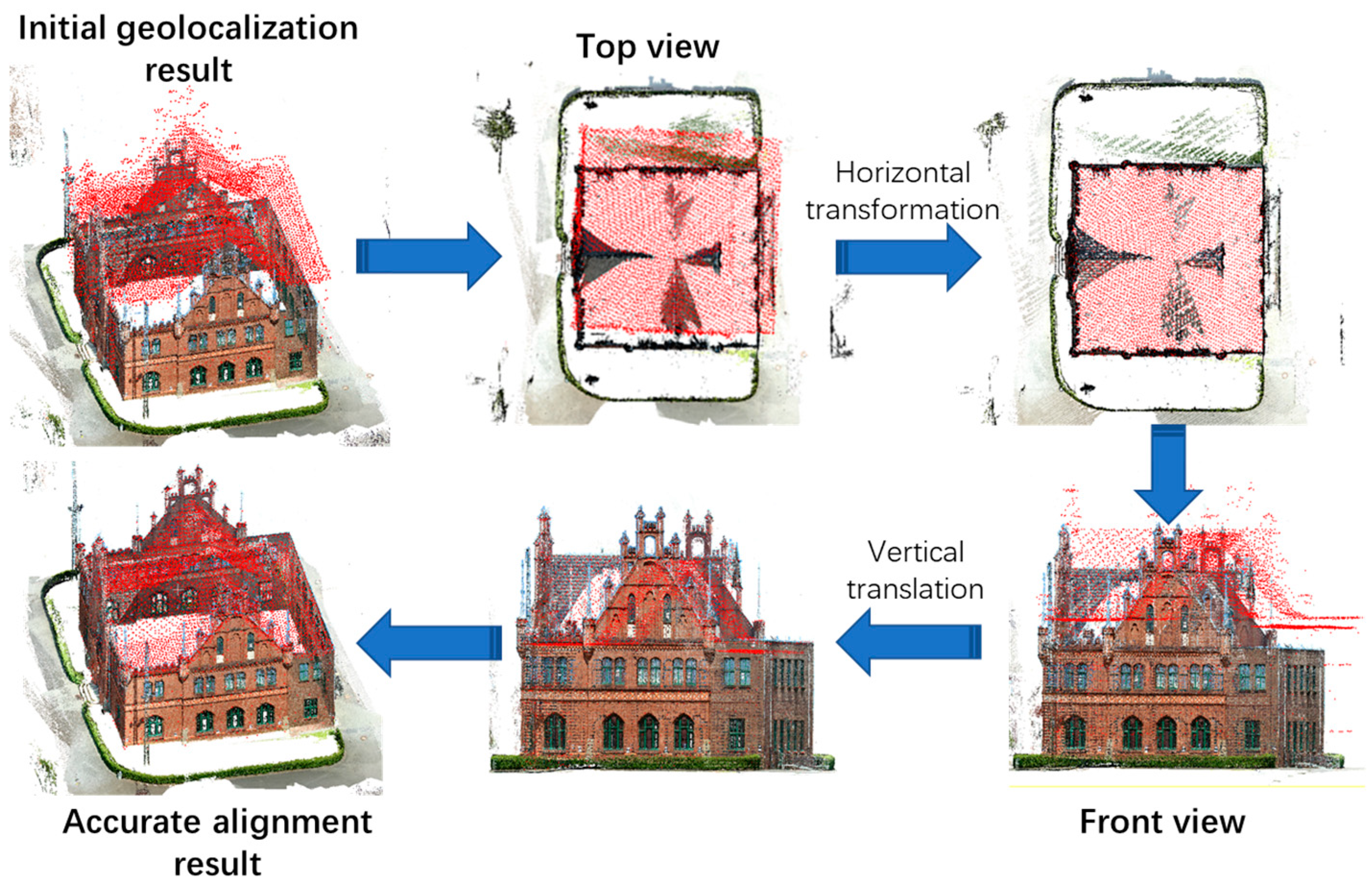

2.3. Accurate Alignment Using NC-CPD

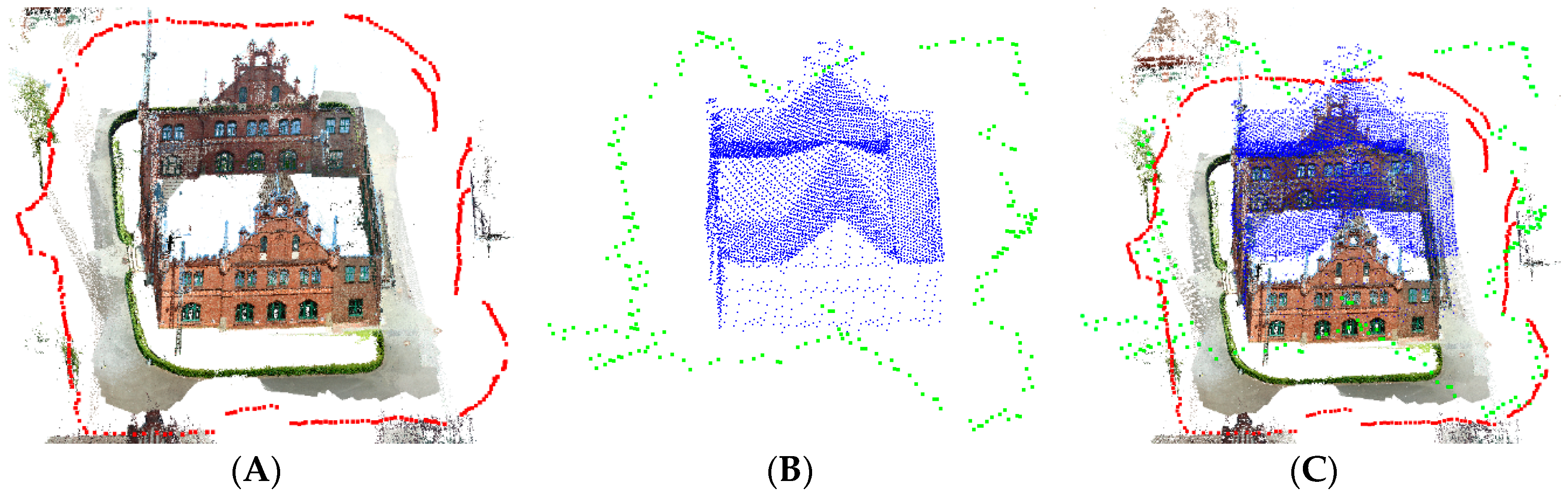

2.3.1. 2D Façade Point Extraction from the Façade Point Cloud

2.3.2. 2D Boundary Point Extraction of Open LiDAR Data

2.3.3. Horizontal Alignment Using NC-CPD

| Algorithm 1: Horizontal Alignment Using Normal Consistency Coherent Point Drift (NC-CPD) | |
|---|---|
| Input | 2D boundary points and the corresponding normal vectors; 2D façade points and the corresponding initial normal vectors |
| Output | Accurately aligned 2D façade points |
| 1 | Initialization: assign initial parameters: … |
| 2 | Calculate initial …, … |
| 3 | Construct the initial normal consistency in Equation (7) |
| 4 | EM optimization: repeat steps 5–7 until convergence to obtain the final … |
| 5 | E-step: update … with … |
| 6 | M-step: solve for the new … by minimizing Equation (6) |
| 7 | Update … |
| 8 | The accurately aligned 2D façade points are given by … = … |
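The EM structure of Algorithm 1 can be illustrated with a minimal sketch of the standard rigid CPD loop (Myronenko and Song), which NC-CPD modifies. This is not the authors' implementation: the normal consistency term of Equation (7) and the objective of Equation (6) are not reproduced in this section, so they are left out here. The names `rigid_cpd` and `sample_outline`, the outlier weight `w`, and the synthetic L-shaped footprint are all assumptions made for illustration only.

```python
import numpy as np


def sample_outline(vertices, step=0.5):
    """Sample points along a closed 2D polygon outline (hypothetical helper)."""
    pts = []
    for a, b in zip(vertices, np.roll(vertices, -1, axis=0)):
        n = max(int(np.linalg.norm(b - a) / step), 1)
        pts.append(np.linspace(a, b, n, endpoint=False))
    return np.vstack(pts)


def rigid_cpd(X, Y, w=0.1, max_iter=200, tol=1e-10):
    """Standard rigid CPD (no normal consistency): align Y (M x D) to X (N x D)."""
    N, D = X.shape
    M = Y.shape[0]
    # Initialization: identity rotation, zero translation, unit scale.
    R, t, s = np.eye(D), np.zeros(D), 1.0
    sigma2 = np.sum((X[None, :, :] - Y[:, None, :]) ** 2) / (D * N * M)
    for _ in range(max_iter):
        # E-step: posterior probability P[m, n] that y_m generated x_n.
        TY = s * Y @ R.T + t
        d2 = np.sum((X[None, :, :] - TY[:, None, :]) ** 2, axis=2)
        num = np.exp(-d2 / (2.0 * sigma2))
        c = (2.0 * np.pi * sigma2) ** (D / 2.0) * w / (1.0 - w) * M / N
        P = num / (num.sum(axis=0, keepdims=True) + c)
        Np = P.sum()
        if Np < 1e-12:
            break
        # M-step: closed-form update of rotation, scale, translation, variance.
        P1, Pt1 = P.sum(axis=1), P.sum(axis=0)
        mu_x, mu_y = X.T @ Pt1 / Np, Y.T @ P1 / Np
        Xh, Yh = X - mu_x, Y - mu_y
        A = Xh.T @ P.T @ Yh
        U, _, Vt = np.linalg.svd(A)
        C = np.eye(D)
        C[-1, -1] = np.linalg.det(U @ Vt)  # guard against reflections
        R = U @ C @ Vt
        tr_AR = np.trace(A.T @ R)
        s = tr_AR / np.sum(P1[:, None] * Yh ** 2)
        t = mu_x - s * R @ mu_y
        new_sigma2 = (np.sum(Pt1[:, None] * Xh ** 2) - s * tr_AR) / (Np * D)
        if new_sigma2 <= tol or abs(sigma2 - new_sigma2) < tol:
            break
        sigma2 = new_sigma2
    return s * Y @ R.T + t, (s, R, t)


# Synthetic example (assumed data): an L-shaped footprint stands in for the
# 2D façade points Y; a rotated, shifted copy stands in for the boundary points X.
footprint = np.array([[0, 0], [10, 0], [10, 4], [4, 4], [4, 7], [0, 7]], dtype=float)
Y = sample_outline(footprint)
theta = np.deg2rad(10.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta), np.cos(theta)]])
X = Y @ R_true.T + np.array([1.5, -0.8])

aligned, (s_est, R_est, t_est) = rigid_cpd(X, Y)
rms = np.sqrt(np.mean(np.sum((aligned - X) ** 2, axis=1)))
print(f"scale={s_est:.3f}, RMS residual={rms:.4f}")
```

In this sketch the E-step and M-step correspond to steps 5 and 6 of Algorithm 1, and the final transformed points correspond to step 8. A normal-aware variant would additionally weight or constrain correspondences by the agreement of the point normals, which this plain CPD loop does not do.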

2.3.4. Vertical Alignment

3. Experiments and Discussion

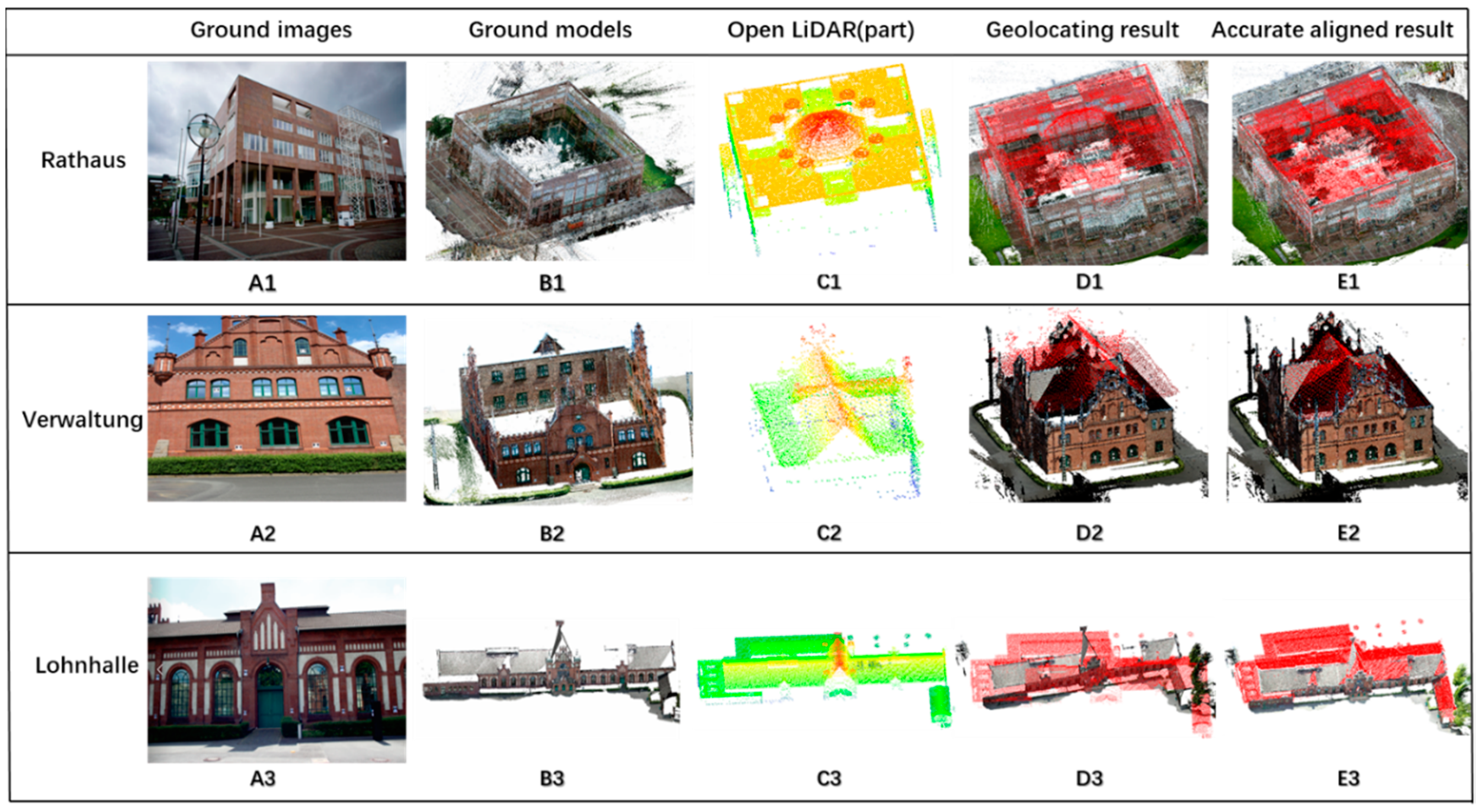

3.1. Dataset Description

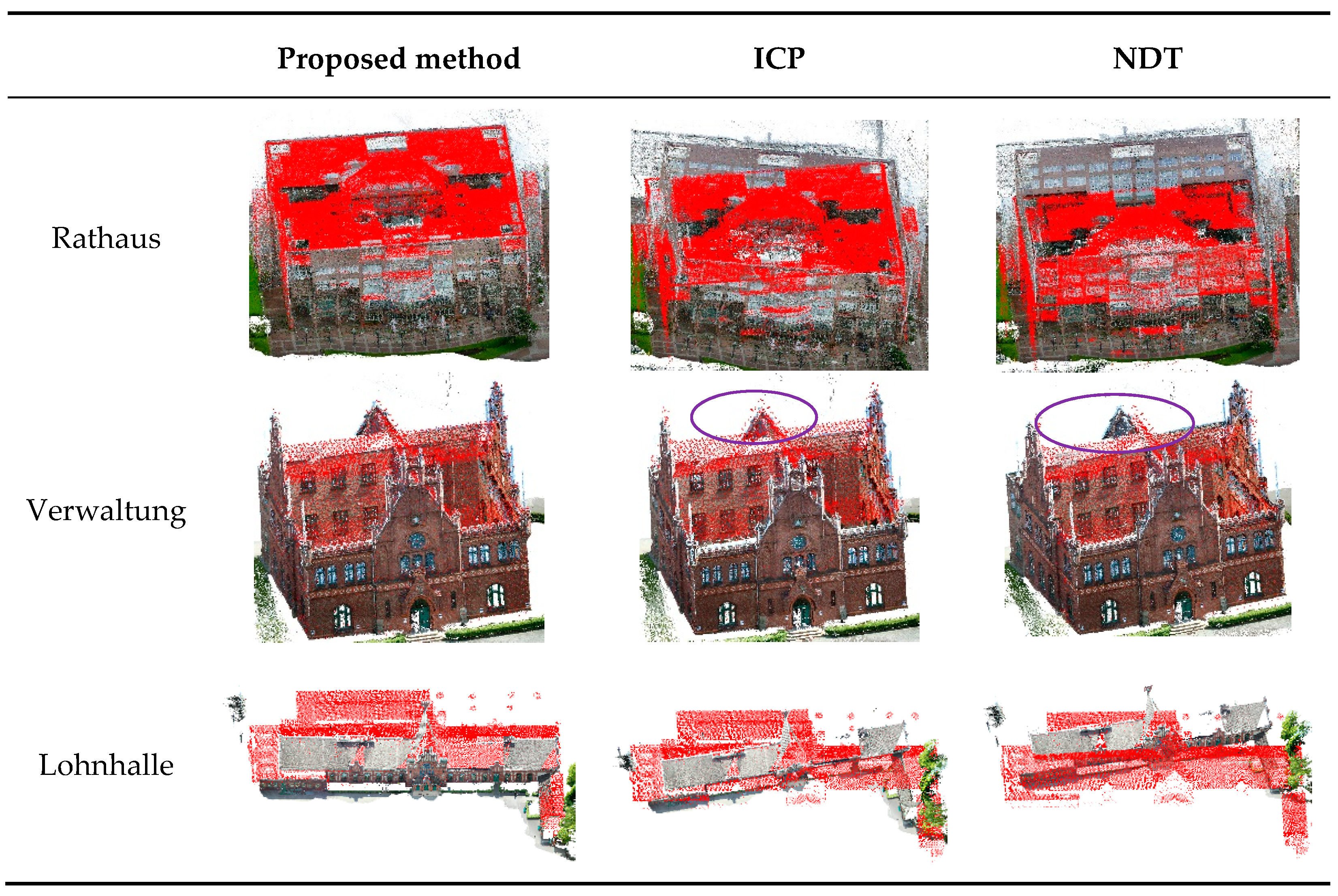

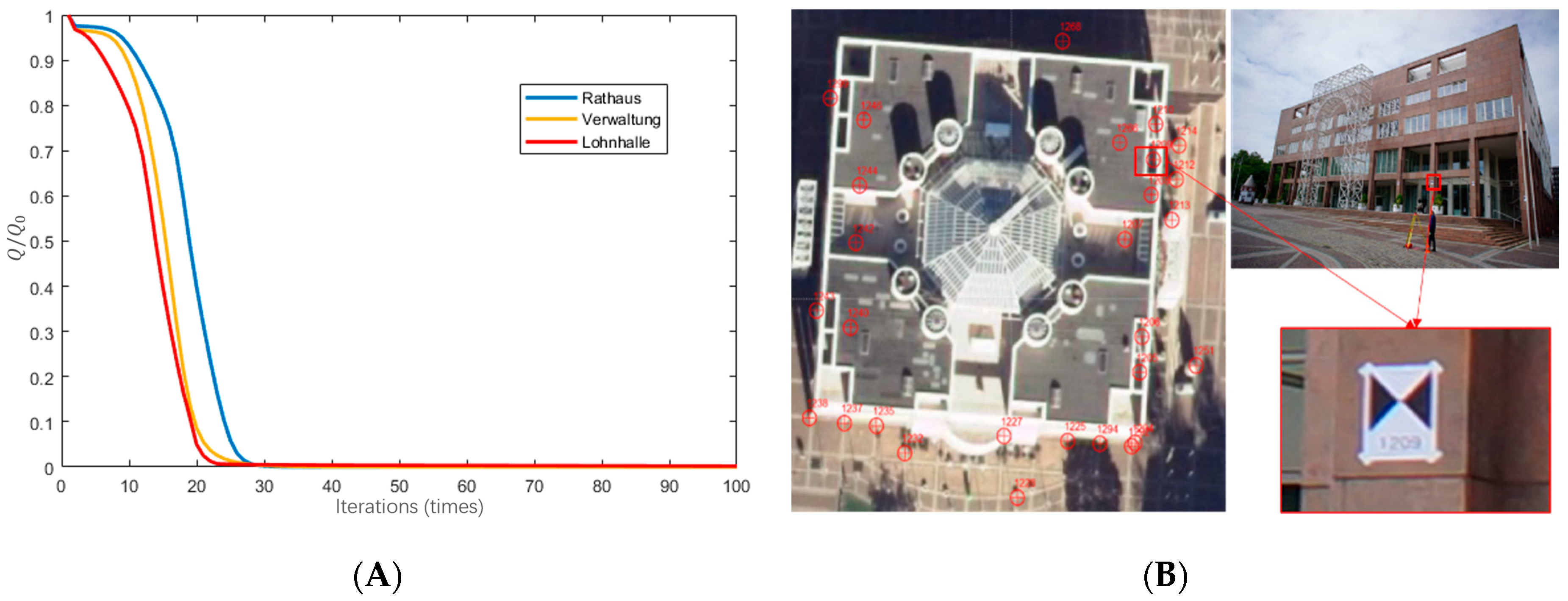

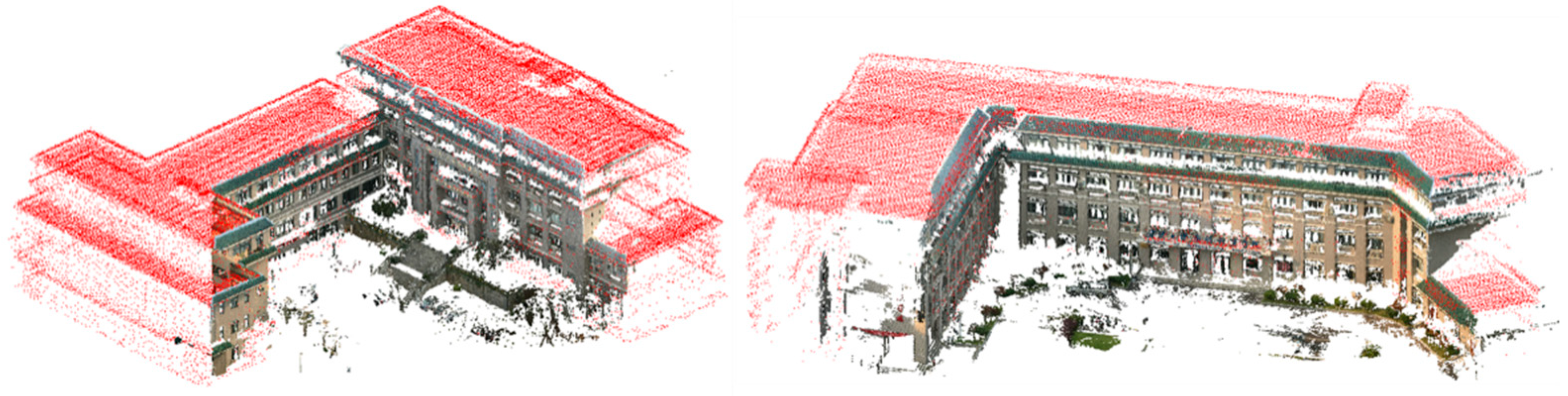

3.2. Qualitative Analysis

3.3. Quantitative Analysis

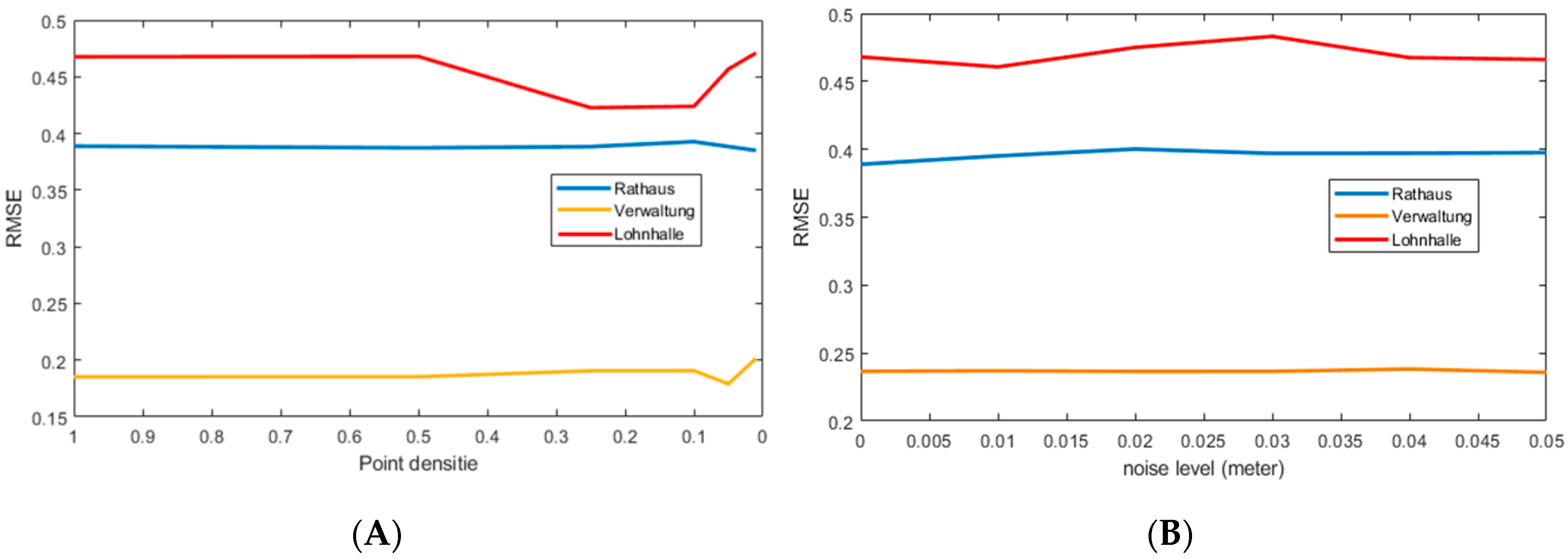

3.4. Robustness Analysis

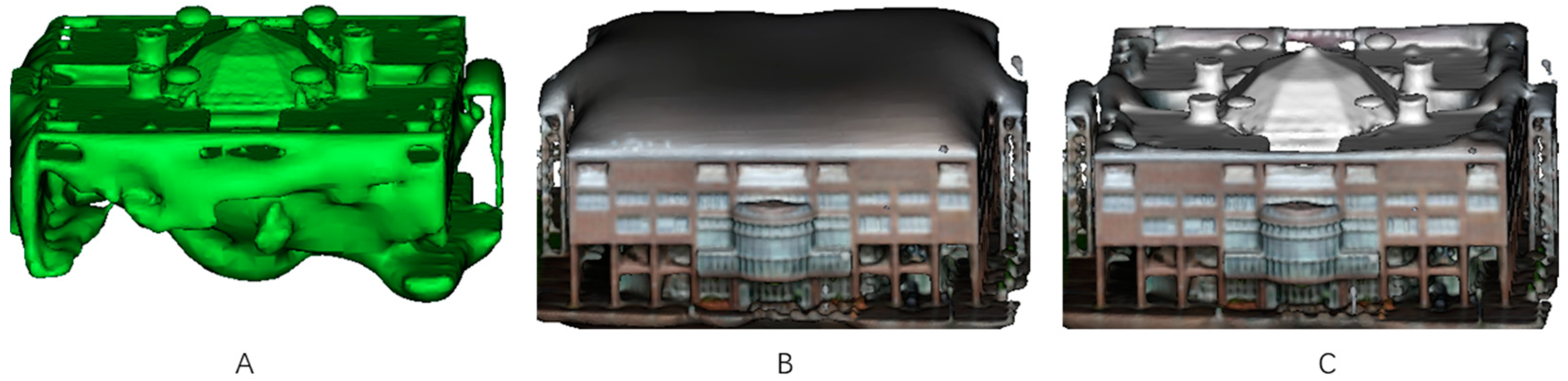

3.5. Expandability for Crowdsourcing Images

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | Rathaus | Lohnhalle | Verwaltung |
|---|---|---|---|
| Number of images | 1211 | 194 | 351 |
| Façade model points | 36,085,050 | 8,004,604 | 11,176,836 |
| Capturing device | SONY NEX-7 | Canon EOS 600D | Canon EOS 600D |
| Focal length | 16 mm | 20 mm | 20 mm |
| Image size (pixels) | 4000 × 6000 | 5184 × 3456 | 5184 × 3456 |
| Ground resolution | 7.6 mm/pixel | 3.1 mm/pixel | 1.72 mm/pixel |
| GPS information | ✓ | ✓ | ✓ |

| Dataset | Number of targets | Method | RMSE (m) | Mean error (m) | Standard deviation (m) |
|---|---|---|---|---|---|
| Rathaus | 20 | TCR | 0.192 | 0.164 | 0.197 |
| | | ICP | 4.283 | 3.548 | 4.280 |
| | | NDT | 6.814 | 6.663 | 1.575 |
| | | Proposed method | 0.389 | 0.342 | 0.304 |
| Verwaltung | 40 | TCR | 0.049 | 0.030 | 0.050 |
| | | ICP | 0.336 | 0.288 | 0.300 |
| | | NDT | 1.700 | 1.452 | 1.489 |
| | | Proposed method | 0.185 | 0.161 | 0.173 |
| Lohnhalle | 31 | TCR | 0.188 | 0.164 | 0.189 |
| | | ICP | 10.039 | 8.537 | 5.672 |
| | | NDT | 23.225 | 22.336 | 6.495 |
| | | Proposed method | 0.468 | 0.380 | 0.423 |
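As a rough illustration of how error statistics like those in the table above could be computed from check targets, the following sketch takes the error at each target to be the Euclidean distance between its estimated and reference coordinates. That definition is an assumption, since this section does not state how the errors were measured, and the function name `error_stats` and the coordinates are hypothetical.

```python
import numpy as np


def error_stats(estimated, reference):
    """RMSE, mean, and population standard deviation of per-target distances.

    Assumes the error at each target is the Euclidean distance between the
    estimated and reference coordinates (an illustrative definition only).
    """
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    err = np.linalg.norm(est - ref, axis=1)
    return {
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mean": float(np.mean(err)),
        "std": float(np.std(err)),
    }


# Hypothetical target coordinates in metres (not taken from the paper).
reference = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [10.0, 5.0, 3.0]])
estimated = reference + np.array([[0.3, 0.4, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.5]])
stats = error_stats(estimated, reference)
print(stats)
```

Under this particular definition the three quantities are linked by RMSE² = mean² + std². The paper may use a different convention, for example per-axis or signed errors.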

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Tao, P.; Wang, L.; Hou, Y.; Hu, Z. Improving Details of Building Façades in Open LiDAR Data Using Ground Images. Remote Sens. 2019, 11, 420. https://doi.org/10.3390/rs11040420
