1. Introduction

Airborne lidar, or laser scanning, from small unoccupied aerial systems (UAS) has gained a reputation as a viable mapping tool for both academic researchers and commercial users within the past decade (e.g., [

1,

2,

3]). Accompanying the release of the lightweight Velodyne VLP-16 in 2014 was the emergence of highly-accurate, lightweight navigational hardware, eliminating the need for auxiliary sensor data, such as computer vision techniques used in the earliest UAS lidar studies [

4]. These two core components form a payload that is functionally similar to the conventional airborne lidar payloads aboard piloted aircraft, but at a fraction of the weight and cost. Commercial outfits (e.g., LiDARUSA, YellowScan, Phoenix LiDAR Systems) have offered turnkey UAS lidar systems which utilize the VLP-16 since at least 2015 [

2]. From an accessible platform, the UAS, users can now collect an established and familiar data product, the lidar point cloud, which is compatible with existing data processing workflows and analysis.

As with all geospatial data, it is important to characterize the spatial accuracy of lidar products to inform their appropriate use. Similarly, a measure of accuracy is important for rigorously adjusting lidar data, correcting for systematic errors, and fusing it with other geospatial datasets, such as photography [

5]. Accuracy assessment of geospatial data typically involves the use of check-points, independently surveyed targets, or features within the captured scene, to produce statistical measures of accuracy often reported using the root mean square error (RMSE). However, comparison of lidar point clouds relative to check points can be problematic since individual lidar points are never exactly coincident with surveyed reference points. Often, only the elevation errors of points or derived raster digital elevation model (DEM) cells in the neighborhood of a reference surface are used, neglecting any measure of horizontal errors. This is the case for both conventional occupied-platform lidar and UAS lidar [

6].

Historically, the development of accuracy assessment methods for lidar was limited due to the relatively sparse spatial density of the points [

7]. Continual advancements in technology have led to rapidly-increasing point densities, with contemporary large systems capable of collecting over 1 million point measurements per second, thus allowing for the use of artificial targets for full 3D accuracy assessment of the data. Targets designed to intercept several points enable a more holistic assessment of the positional accuracy of point clouds and are more appropriate for characterizing accuracy for applications that involve using several points to reconstruct and measure features, such as the synthesizing and measurement of buildings, roadway structures, and tree morphology models.

Hardware calibration is a critical component of processing lidar data and consists of estimating systematic parameter errors associated with the physical alignment of sensor mechanisms [

8]. These include the lever arm and boresight parameters: the location and angular orientation, respectively, of the scanner head relative to the navigation reference point of the system, usually the inertial measurement unit (IMU). In addition to these, other parameters are often captured in the calibration procedure associated with, for example, biases in the laser scanning mechanism.

Like calibration, lidar strip adjustment is used to bring collection units, swaths associated with flight lines, into alignment. The adjustment is often generic [

9]. That is, it does not attempt to model actual physical parameters due to the inaccessibility of requisite data, such as vehicle trajectories. The effects of errors in physical calibration parameters as well as errors not modeled in calibration, e.g., biases in trajectory due to navigation solution drift, may be absorbed into the geometric transformation parameters.

Both calibration and strip adjustment algorithms often use the identification of points associated with geometric primitives, such as planes or lines in the scene, to detect discrepancies between overlapping collection units to be used as observations in optimization schemes for resolving the parameters. However, many scenes do not have planar surfaces or features composed of these primitives, or the features are not distributed in an optimal geometric configuration to best resolve the parameters. Some algorithms avoid the use of primitives by using the points themselves, diminishing structural requirements on the scene. These include iterative closest point (ICP) and iterative closest plane variants [

10]. These methods can be time-consuming, and require close initial approximations. Furthermore, some scenes are dynamic and complex, such as forests in windy conditions, and the fundamental assumption that features or points are in the same place from collection unit to collection unit is violated. Effects are amplified for smaller mapping regions and higher point densities associated with UAS lidar. They can be mitigated by using large portions or all of the point cloud in the adjustment, but at the cost of processing time. Artificial lidar targets may provide a way to address these issues associated with calibration and strip adjustment.

Past studies on lidar targets employed a variety of different approaches. The study presented in [

11] used circular targets with a bright white center on a dark black background to exploit point intensity data in determining which points fell within the central circle. A method similar to the generalized Hough-transform circle-finding method was used to find the horizontal location of the circle within the point cloud, and the average point height was used to estimate the vertical component. The estimated positioning accuracy of the elevated 2 m diameter targets was around 5–10 cm and 2–3 cm for the horizontal and vertical components, respectively, at a lidar point density of 5 pts/m

2. The study in [

12] used white L-shaped targets painted on asphalt to similarly locate 3D positions within lidar-derived intensity images using least-squares matching [

13]. The study in [

14] used retro-reflective targets in a hexagonal configuration to find a location within a relatively sparse point cloud (approximately 2 pts/m

2) to aid in boresight calibration, with reported location precision of 5 cm and 4 cm in the vertical and horizontal components, respectively. The dimensions of a proposed, but not yet implemented, foldable 3D target were illustrated by [

15], designed to use solely the point positions, and not intensity.

The current applied methods for targets in UAS lidar primarily employ bright flat targets which make use of the intensity value of lidar returns available in most scanners. For example, [

16] used ICP to align UAS lidar swaths, ground control points (GCPs) marked with flat sheet targets to apply an absolute correction, and targeted and untargeted ground-surveyed checkpoints for accuracy analysis. The method in [

17] used the average location of points striking reflective ground targets for accuracy assessment. That study described critical issues with this approach, including assumed distribution of points striking the target and effects of the laser beam divergence leading to bias and/or lower accuracy of measured locations in the point cloud compared to expectations from stochastic modeling. Similarly, [

17] used reflective ground targets manually measured in UAS point clouds, but details of the method were not described. There is not much exploration of mensuration uncertainty, the uncertainty of target location within the point cloud, in the literature, and thus a direct comparison of the various targeting methods is not possible.

Often, the use of intensity is not a robust solution for artificial targeting in laser scanner data. For example, the Velodyne VLP-16 is one of the most commonly-used UAS laser scanning systems. As reported by [

18] and in the present authors’ experience, the VLP-16 intensity reading quality is highly dependent on range and the incidence angle of the beam with the intercepting object. Additionally, intensity is recorded at a lower quantization level than larger and more-expensive laser scanners [

19]. At the low altitudes associated with UAS platforms, incidence angles for small homogenous areas and objects in the scene can have very diverse incidence angles, and therefore objects with similar reflectivity can yield substantially diverse intensity readings when surveyed in the typical boustrophedonic overlapping-swath flight pattern. It is often difficult, for example, to locate points from overlapping swaths associated with a white paper plate on healthy grass based on intensity from the VLP-16 as illustrated in Materials and Methods section. Use of very bright objects may be one approach to addressing this issue. However, [

18] indicates that high-intensity VLP-16 readings are associated with range bias on the order of a centimeter, which has also been observed by the present authors. In addition to issues associated with locating target points by intensity, the flatness of targets can also be problematic. As [

4] mentioned, non-uniform point distribution on the target can lead to poor, biased resolution of the horizontal reference point of the target, often located in the center of extreme target points, e.g.,

x = (max

x − min

x)/2. This is the case for both automatic methods and manual methods, where an operator estimates the center location of the target and interpolates the elevation of the target center based on surrounding points. To address these issues, this study introduces an alternative to intensity-based artificial targets for UAS lidar. More specifically, this study presents:

The description of a developed small-UAS laser scanning system;

The design of geometric structure-based targets which can be used for accuracy assessment, calibration, and strip adjustment;

Mensuration algorithms for precise target location within point clouds;

Two case studies illustrating the efficacy of the targets and associated algorithms for accuracy assessment.

While the accuracy results of the UAS system are presented and are of interest, they are not the focus of the paper. Instead, we focus on the methods to achieve the accuracy assessment, which can be used for other similar UAS laser scanning systems. It should be noted that UAS lidar accuracy may vary depending on site location, control configuration and accuracy, system characteristics, and mission planning.

2. Materials and Methods

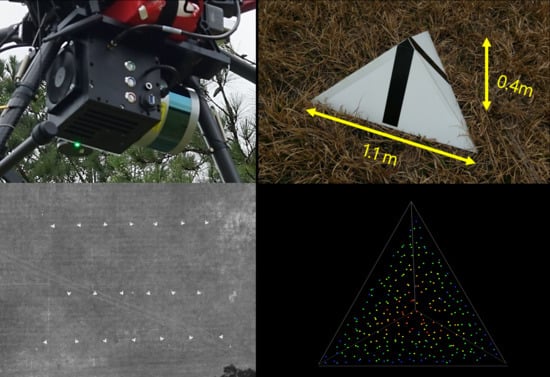

The UAS laser scanning system used in this study (

Figure 1) comprised a DJI s1000 octocopter with a sensor payload consisting of a Velodyne VLP-16 Puck Lite laser scanner head, NovAtel OEM615 Global Navigation Satellite System (GNSS) receiver, STIM300 IMU, Garmin GPS18x—for time stamping outgoing lidar pulses, a NovAtel GPS-702-GG antenna (Experiment Site 1 only), and a Maxtena M1227HCT-A2-SMA antenna (Experiment Site 2 only). Although this system was designed and built by our team, it can be considered typical, with various commercially-available turnkey analogues. Similarly, processing algorithms to generate point clouds from raw laser scanner and navigation sensor data, calibrate the sensor, and perform strip adjustment were developed internally. They can also be considered standard, except that they were designed to use our developed targets, and had the capability to propagate and carry forward uncertainties from the navigation and scanner observations to the point cloud. This allowed the estimated standard deviations of the X, Y, and Z components of each point in the point cloud to be stored and accessed, a benefit that we explored in our target mensuration experiments.

A primary objective of this study was to create portable, inexpensive, and multimodal (measurable in both lidar point clouds and imagery) targets capable of establishing accurate 3D locations within a point cloud from associated point observations with practical densities and distributions associated with UAS–lidar systems. The resulting design is shown in

Figure 2. The targets were foldable, corner-cube trilateral pyramids made from white corrugated plastic. The base of the pyramid was 1.1 m and the peak was 0.4 m above the ground when deployed. The reference location, to where ground-survey and point cloud coordinates were measured, was where the three planes intersected at the peak. The targets’ foldability allowed for easy transport and storage.

Figure 2b shows a stack of 22 targets, which could be easily carried by a single person. Black tape was placed on the pyramids to allow for measurement of the reference point, the intersection of the centerline of the three black lines, in photography taken from any perspective showing at least two sides of the target.

The mensuration algorithm for locating targets in the lidar point cloud was an iterative least-squares process to fit a known target template, three intersecting planes based on the design dimensions of the target, to points associated with it using rigid-body six-parameter transformation (3D rotation followed by translation) [

20]. The template’s untransformed coordinate system origin was located at the reference point at the tip of the pyramid, the base was parallel with the horizontal plane (close to what will likely be the fitted pose, hastening convergence), and one of the edges was aligned such that

y = 0 (

Figure 3). This edge orientation was arbitrarily chosen, with any orientation leading to convergence of the mensuration algorithm, meaning that actual targets need not be aligned with any particular orientation when placed in the field.

The first step was to identify and isolate points associated with the target. In this study, segmentation of target points was done manually, based on the relative height of the points above the ground. Automatic extraction of these points was feasible by using a random sample consensus (RANSAC) [

21] variant of the mensuration algorithm, which is proposed for future work. Nevertheless, manual segmentation was straightforward due to the structure of the targets.

Figure 4 and

Figure 5 illustrate the identifiability of target points based on height compared to that based on intensity. The mensuration algorithm presented here allowed the use of any subset of the points on the target, as long as some points on each facet were found. The distribution of the points did not bias the estimated target location, a quality that is explored in the

Appendix A. This allowed the user to be conservative in the confident identification of target points, reducing the possibility of blundered selection of non-target points.

The target transformation parameters were initialized by setting the orientation angles equal to zero: , , and , where , . are sequential rotations about the target , and axes, respectively. Translation was initialized to be equal to the coordinates of the highest of the target points.

Each iteration of the least-squares solution begins with identifying the closest facet,

, of the template in its current pose for each point. This is accomplished by finding the minimum dot product of the unit normal vectors of each facet

,

with

, where

is the position of the point

. Jacobian matrix

. is formed (Equation (1), where

is the number of points), containing the partial derivatives of the distance,

, of each point

to its associated plane with respect to the six transformation parameters:

Finally, a vector containing the negatives of the point-to-plane distances is formed,

enabling the least-squares solution to the linearized observation equations using Equation (2):

In Equation (2), contains corrections to the current approximations of the transformation parameters. The process is repeated until convergence of the standard deviation of unit weight, with the final resolved translation components, serving as the estimated position of the reference point.

If propagated uncertainties for point coordinates are available, the algorithm has the same steps, except that the solution is:

In Equation (3),

where

is the Jacobean matrix with rows containing the partial derivatives of the distances of each point,

to their associated planes with respect to

:

is the covariance matrix associated with lidar point coordinate uncertainty. The effect of including this weighting in the mensuration algorithm was explored in the experiments for Site 2.

The covariance matrix of the target template transformation parameters,

, may be calculated using Equation (5). The elements of

. associated with the translation components,

can be used as estimates of the target mensuration uncertainty:

Note that in this study, point position uncertainties were based on propagated variance from navigation parameters and scanner-head hardware. Inter-state correlations among these components were not resolved (i.e., the weight matrix associated with point-to-plane distances, was a diagonal matrix), and thus it was expected that propagated uncertainties of the target positions would be optimistic, since the assumed independence of point positions, and therefore point-to-plane distances, was violated. Obtaining estimates of the inter and intra-state correlation among navigation and scanner head parameters was not readily achieved, as they are not fully available in commercial navigation processing software and from scanner hardware vendors, respectively. Still, the outcomes from this study presented in the Results and Analysis section suggest that the propagated uncertainties used, even without accounting for correlation among parameters, were valuable.

Since lidar points near target edges may have convoluted echoes and lead to spurious observations, each point is tested for edge proximity. If a point is closer to an edge than a selected tolerance it is removed from the solution. In this study, points were culled if they were closer than one fourth of the beam diameter to an edge, a tolerance found empirically to improve the fidelity of the observations without overly decreasing redundancy. Similarly, points were removed if their orthogonal projection onto their associated facet-planes fell outside of the facet triangle. Points were tested in each iteration of the solution after the first two iterations were completed (the algorithm requires at least three iterations before convergence is established). The distance of a lidar point

to a target edge

associated with vertices

and

, where

, is:

The projection of a point

onto the plane containing target vertices

,

and

. associated with the closest target facet

is:

If is inside of the triangle formed by , and , the barycentric coordinates of with respect to the triangle will all be positive. This condition is used to test if the orthogonal projections of points fall on their associated target facets.

Two separate study areas were used to illustrate the efficacy of the pyramidal target methods. The first (Site 1) was used to illustrate precision and how lidar point density affects target mensuration within the point cloud, and the other (Site 2) to illustrate the benefit of using error propagation to locate targets.

Site 1 was located in Jonesville, Florida, at a recreation/sports park. Site 2 was located within Ordway Swisher Biological Station (OSBS), a 9500-acre University of Florida research facility in Melrose, Florida, which is primarily used for ecosystem monitoring and management research. The planned mission parameters are shown in

Table 1.

Site 1 consisted of three north-to-south flight paths (

Figure 6). Data were collected along these flight paths and associated turns twice at 40 m and once at 30 m flying height above ground level (AGL). The area was scanned with the full outgoing pulse rate of the VLP-16, 300 kHz, yielding an average combined-swath pulse density of 3000 pts/m

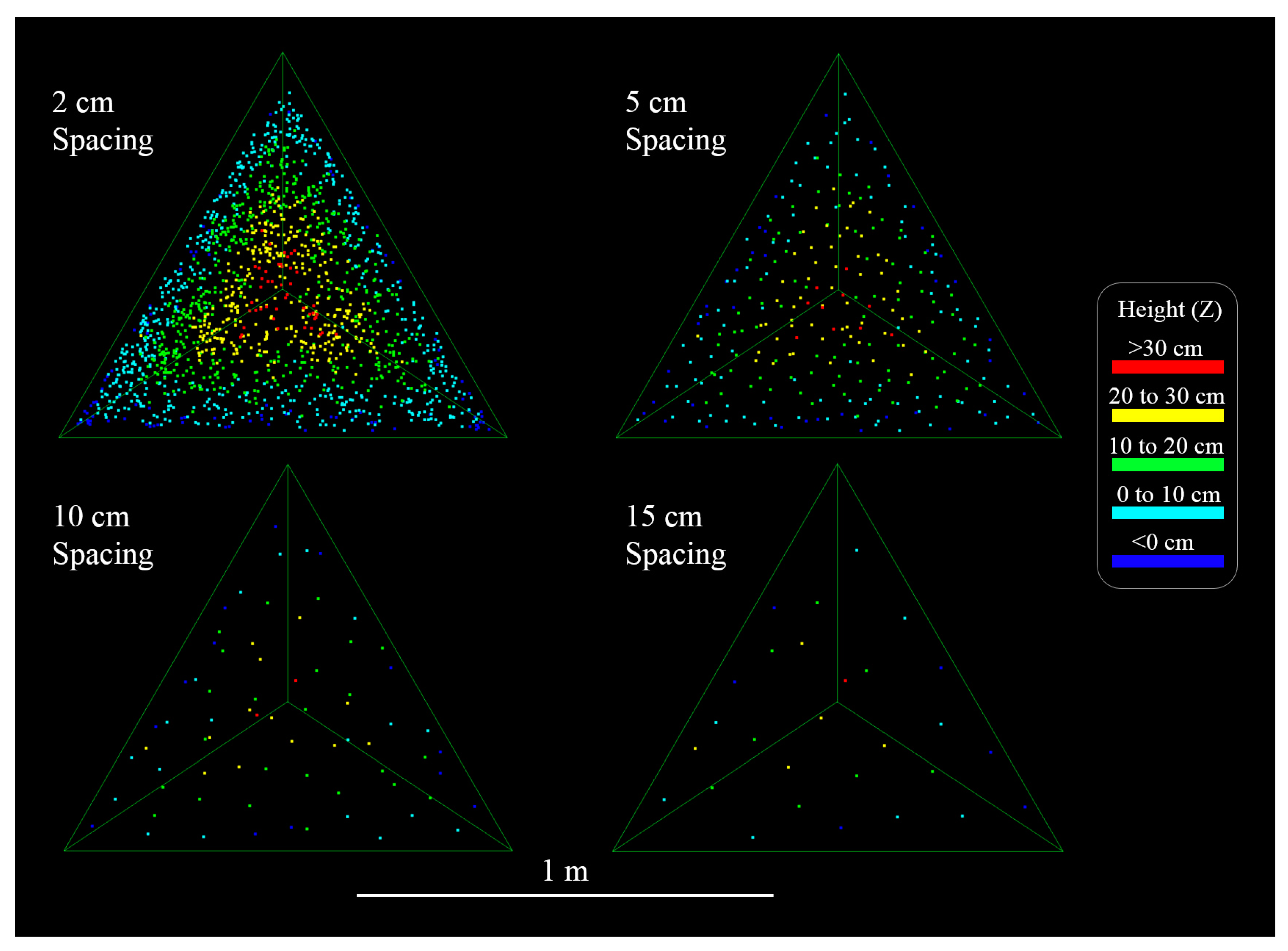

2, or an approximate point spacing of 1.8 cm in the center of the project area. The scan rate was 10 Hz, although it should be noted that later investigation found maximizing the scan rate of the VLP-16 yielded better point distribution, reducing clustering of points, albeit with lowered point accuracy due to lower resolution of the encoded scan angles. In order to investigate the effect of point density on target mensuration, the original processed point cloud was decimated three times to generate target point clouds with 5 cm, 10 cm, and 15 cm minimum 3D point distances, by iteratively and randomly selecting points and removing all neighbors within less than the minimum 3D point distance. Initially, 20 cm point spacing was also attempted, but it sometimes (2 of 20 targets) led to failure of the target mensuration algorithm and was excluded from analysis.

Site 2 data were collected via a single repetition of six flight lines stretching 200 m north-to-south, and with 25 m line separation (

Figure 7). The area was scanned at 30 m flying height above ground, with a pulse rate of 300 kHz, and 10 Hz scan rate. The average combined point cloud pulse density was approximately 800 pts/m

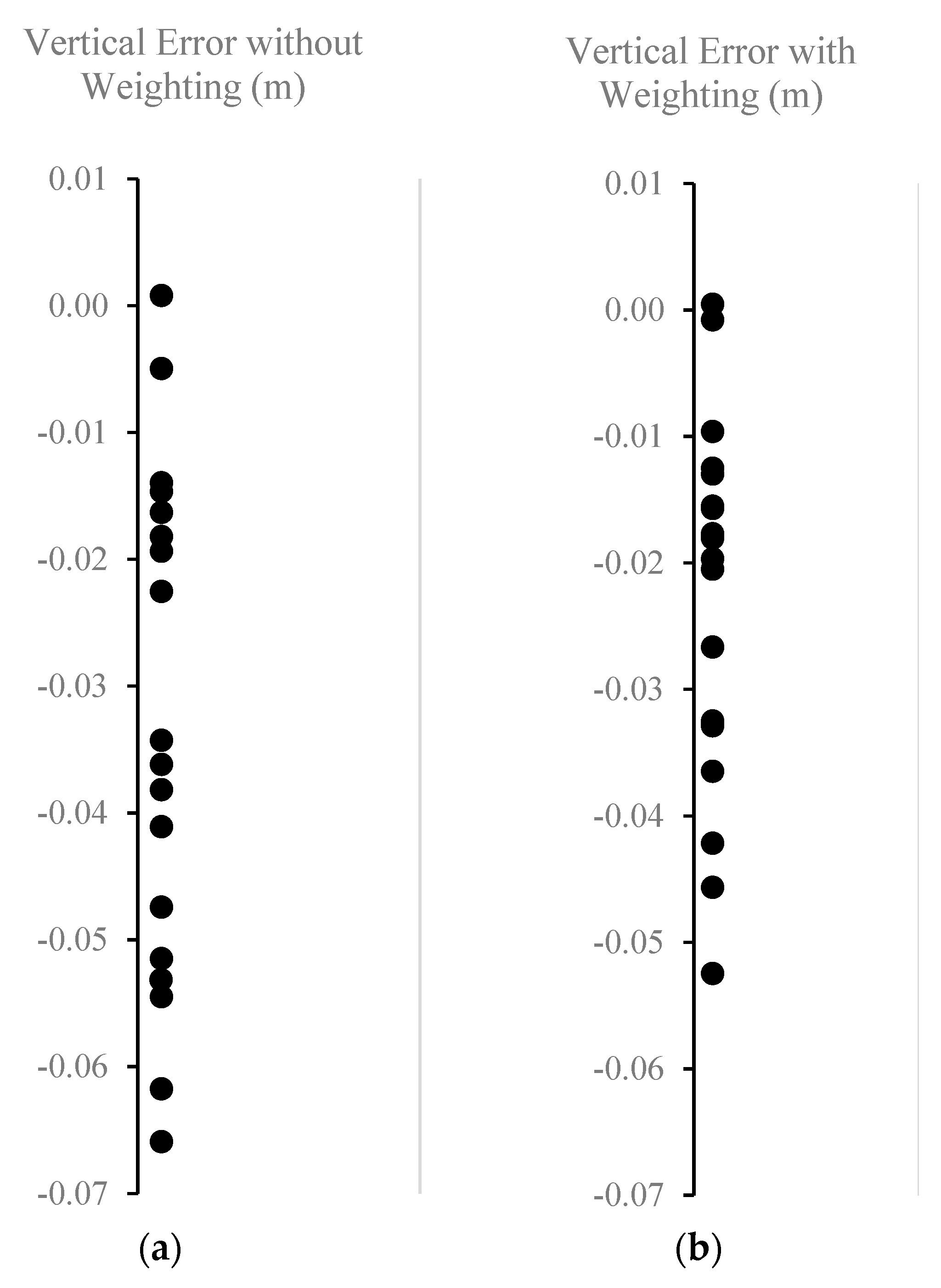

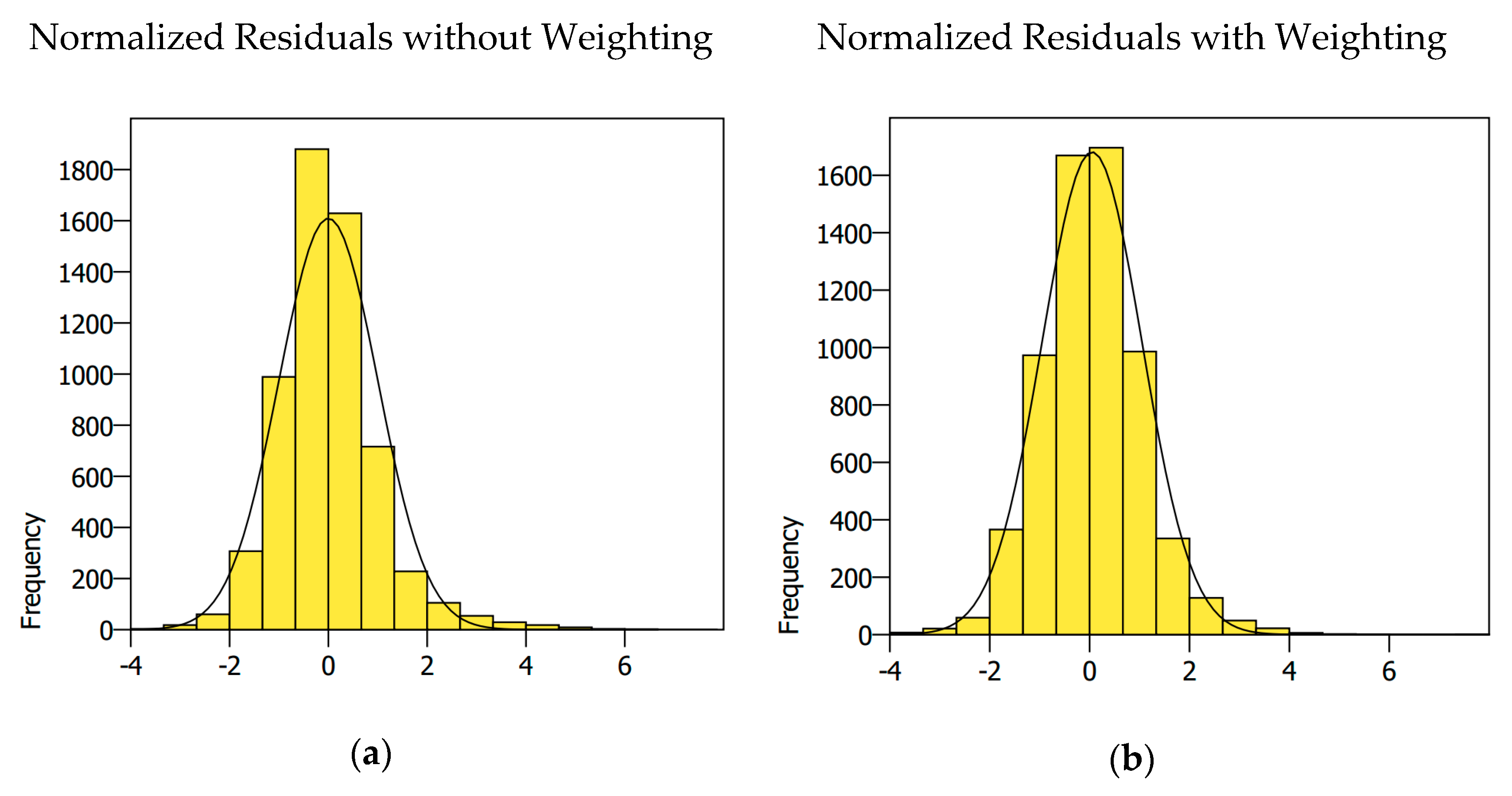

2, or a point spacing of approximately 3.5 cm. Note that since the Site 2 flight paths were longer, the increase in the average point density associated with points collected on turns between primary lines was smaller than that for Site 1. Similarly, the longer lines for this flight led to higher uncertainty for some target-associated points, since uncertainty is largely dependent on laser range, making this dataset well-suited for the analysis of uncertainty-based weighting on target mensuration. To study this, the target locations were estimated using both the weighted and unweighted versions of the mensuration algorithm.

Boresight calibration of the laser scanner sensor suite, estimation of the angular alignment of the scanner head with respect to the platform axes corresponding to the filtered trajectory (IMU axes), was carried out for both missions, each time using three targets without using ground survey coordinate observations. The details of the boresight procedure are beyond the scope of this paper, but details will be given in a future article. Strip adjustment, alignment of the overlapping swaths, was not necessary to bring collection units into alignment for this study, since there was no observable discrepancy between flight strips after the boresight calibration procedure.

At each site, the targets were used to mark ground-surveyed checkpoints, which were well-distributed across the project area. There were 20 checkpoints within Site 1 that were nominally spaced at 7 m along three north–south rows. The eastern-most row was approximately 14 m from the central row, and the western-most row was 20 m from the central row. These can be seen in

Figure 6. Site 1 target coordinates were established using post-processed kinematic (PPK) GNSS. The rover GNSS antenna was held above the targets so that the processed coordinates could be reduced to the target reference points. It is important to mention that the target survey used the same base station that was used to calculate the UAS trajectory, albeit at different times, and thus the two were not entirely independent. Site 2 had 18 widely distributed checkpoints, with a mean nearest neighbor distance of 22 m.

Figure 7 shows the distribution of Site 2 targets. Site 2 target coordinates were established using a total station, with distances corrected for map projection distance scale. A reflective surveying prism was held above the targets so coordinates could be reduced to the target reference points. Similar to Site 1, since total station back sight observations were made to a (non-lidar-targeted) point with coordinates established by the same GNSS observations that were used for calculating the UAS trajectory, target coordinates were not completely independent from the estimated UAS trajectory.

4. Discussion

In Site 1, the estimated mensuration uncertainty and standard deviations of ground-surveyed checkpoints were less than 2 cm for point spacings up to 15 cm in all components, X, Y, and Z. To guarantee mensuration within the expected error of ground-surveyed points, it is recommended to not exceed 10 cm. Although the experiments indicated a significant decrease in mensuration accuracy in the sparsest clouds, the approach enabled target location and yielded reliable measured coordinates up to a spacing of 15 cm. When comparing against field-surveyed check point coordinates, the target coordinate accuracy decreased with decreased point density. However, even for the sparsest point clouds tested in this study, the lowest accuracy measured using RMSE was in the order of what is expected for the ground survey and the theoretical limits of the UAS lidar system, ~2 cm, illustrating that mensuration accuracy for the targets and associated algorithm were sufficient for accuracy assessment. The estimated uncertainties associated with the mensuration algorithm generally agreed with the results of the check-point accuracy experiment when taking into account scanner and check-point error, and could be used for weighting in rigorous adjustment and fusion of UAS lidar data. For example, when combining lidar data from two separate collections, the targets can be used to register the point clouds with each other via a weighted coordinate transformation to bring them into coincidence. Further exploration of the value of target position uncertainty estimation is given in the

Appendix A.

Although useable results were achieved with and without it, the Site 2 experiment illustrated the potentially significant impact on mensuration that can be made by including the uncertainty of each point as input in the algorithm. The RMSE was lower for each component, X, Y, and Z, when incorporating weights into the mensuration algorithm. Similarly, mean error magnitudes and standard deviations were generally lower when weighting. While rigorous point coordinate uncertainty requires navigation uncertainty metadata, which may preclude use by some practitioners, surrogate generic methods based on manufacturer-specified scanner encoder uncertainty, range uncertainty, and estimates of platform exterior orientation uncertainty may be as effective as rigorous physical modeling. Users are encouraged to explore either option for their systems, since beyond the benefits of carrying forward propagated uncertainties for post-processing and analysis, they also increase the accuracy of general location measurement, and avoid the reduced point cloud density and target-placement restriction associated with more naïve methods, such as point removal based on, e.g., long range or across-track distance.

The targets had some, albeit minor, drawbacks. For sparse point clouds, the targets generated noticeable systematic error in the form of lower Z coordinates relative to denser point clouds (and check point coordinates). This may be remedied by constraining tilt to zero, the approach used in the presented Site 1 experiment, since the ground was flat and level at the study site. However, on sloped ground one may need to level the targets when placing them or record the tilt to enable constraint to non-zero values.

Black tape was placed on the targets for use in fusion with photogrammetry. While it was effective in its purpose, drop-outs (no point returned) were observed for some points falling on the tape (some of these voids are visible in

Figure 4a). Although this did not seem to have a significant effect on the mensuration of the targets in the point clouds, it could be remedied by using a different colored tape or only placing tape on targets that must be seen from imagery. Another approach the present authors utilized is the placement of flat targets on the ground next to the target (e.g., adjacent to the north facet) to obtain more reliable height, the weakest-resolved component for the targets. The targets were very light weight, since they were made from corrugated plastic. In windy conditions, they must be staked to the ground, or, depending on the ground composition, secured with sandbags on the corners.

The design of our targets precluded viewing any monument, such as capped rebar, underneath the targets when placed. To place a target associated with a monumented point, an effective approach has been to place a tripod with a leveled and centered optical-plummet tribrach over the monumented point, then place the target above it, centering the reference point of the pyramid visually using the tribrach. Height was measured using tape and checked using the design specifications of the target (0.4 m). The target points were easily and rapidly manually extracted from point clouds, and non-target and dubious points were identified and culled using tests based on Equations (6) and (7). However, for a large number of targets, manual extraction can be somewhat time consuming. Future work will include the development of automatic identification and extraction of target points to include a RANSAC-approach to discriminate target points and identify outliers.