Abstract

Hyperspectral remote sensing obtains abundant spectral and spatial information of the observed object simultaneously. This provides an opportunity to classify hyperspectral imagery (HSI) in a fine-grained manner. In this study, the fine-grained classification of HSI, which involves a large number of classes, is investigated. On one hand, traditional classification methods cannot handle fine-grained classification of HSI well; on the other hand, deep learning methods have shown their power in fine-grained classification. Therefore, in this paper, deep learning is explored for supervised and semi-supervised fine-grained classification of HSI. For supervised HSI fine-grained classification, a densely connected convolutional neural network (DenseNet) is explored for accurate classification. Moreover, DenseNet is combined with a pre-processing technique (i.e., principal component analysis or auto-encoder) or a post-processing technique (i.e., conditional random field) to further improve classification performance. For semi-supervised HSI fine-grained classification, a generative adversarial network (GAN), which includes a discriminative CNN and a generative CNN, is carefully designed. The GAN fully uses the labeled and unlabeled samples to improve classification accuracy. The proposed methods were tested on the Indian Pines data set, which contains 333,951 samples with 52 classes. The experimental results show that the deep learning-based methods provide great improvements compared with other traditional methods, which demonstrates that deep models have huge potential for HSI fine-grained classification.

1. Introduction

Hyperspectral imaging obtains data of the observed target with spectral and spatial information simultaneously and has become a useful tool for a wide range of users. Among the hyperspectral imagery (HSI) processing methods, classification is one of the core techniques, which tries to allocate a specific class to each pixel in the scene. HSI classification is widely used in applications including urban development, land change monitoring, scene interpretation, and resource management [1].

The data acquisition capability of remote sensing has largely improved in recent decades. In the context of hyperspectral remote sensing, a variety of instruments will be available for Earth observation. These advanced technologies have increased the types of satellite images available, with different resolutions in the spectral and spatial dimensions. In general, this leads to both difficulties and opportunities for data processing techniques [2].

In general, the data in hyperspectral remote sensing have the following features: an abundance of object labels, a large number of pixels, and high-dimensional features. To the best of our knowledge, most hyperspectral datasets do not contain more than 20 classes each. How to handle HSI classification with a large number of classes is a challenging task in real applications. In this study, we investigate the classification of the Indian Pines dataset, which contains 52 classes. As far as we know, it is the only public dataset with more than 50 classes. Furthermore, the Indian Pines dataset contains fine-grained classes, which carry more specific and detailed information than the traditional coarse definition of classes.

Among the HSI processing techniques, classification is one of the most vibrant topics. There are three types of HSI classification methods: supervised, unsupervised, and semi-supervised ones. Most of the existing HSI classifiers are based on supervised learning methods.

Due to the abundant spectral information of HSI, traditional methods have been focused on spectral classifiers including multinomial logistic regression [3], random forest [4], neural network [5], support vector machine [6,7], sparse representation [8], and deep learning [9,10,11].

HSI contains both spectral and spatial information. With the help of spatial information, the classification performance can be significantly improved. Therefore, spectral-spatial classifiers are the mainstream of supervised HSI classification [12]. Typical spectral-spatial classification techniques are based on morphological profiles [13,14], multiple kernels [15], and sparse representation [8]. The morphological profile and its extensions extract the spatial features of HSI, and support vector machines (SVMs) or random forests are then applied to obtain the final classification map [16,17]. On the other hand, multiple kernel-based methods use different kernels to handle the heterogeneous spectral and spatial features in an efficient manner [18]. In sparse representation-based methods, spatial information is incorporated to formulate spectral-spatial classifiers [8].

The collection of labeled training samples is costly and time-consuming. Therefore, the number of training samples is usually limited in practice. On the other hand, there are many unlabeled samples in the dataset. Semi-supervised classification, which uses the labeled and unlabeled samples together, is a promising way to solve the problem of limited training samples [19,20,21]. A transductive support vector machine has been introduced to classify remote sensing images [22]. The transductive SVM significantly increased the classification accuracy compared to the standard SVM. In [23], a semi-supervised graph-based method, which combined composite kernels, was developed for hyperspectral image classification. Although the number of publications on semi-supervised classification in the literature is smaller than that on supervised learning, semi-supervised classification is very important in remote sensing applications.

The aforementioned supervised and semi-supervised methods do not classify HSI in a “deep” manner. Deep learning-based models have advantages in feature extraction and have shown their capability in many research areas, such as computer vision [24], speech recognition [25], machine translation [26], and remote sensing [27,28].

Most popular deep learning models, including the stacked auto-encoder [29,30], deep belief network [12,31], convolutional neural network (CNN) [32,33,34,35], and recurrent neural network [36], have been explored for HSI classification. Among these deep models, CNN-based methods are widely used for HSI classification. A deep CNN was used in [37] to extract spectral-spatial features from pixels. Li et al. [38] leveraged a CNN to extract pixel-pair features of HSI, followed by a majority voting strategy to predict the final classification result. Zhong et al. proposed a 3D deep network that receives 3D blocks containing both spatial and spectral information from HSI and calculates 3D convolution kernels for each pixel [39]. In [40], a light 3D convolution was proposed for extracting deep spectral-spatial-combined features, and the proposed model was less prone to overfitting and easy to train. Furthermore, in [41], band selection was used to select informative and discriminative bands, and then the labeled and unlabeled samples were fed into a 3D convolutional Auto-Encoder to obtain the encoded features for semi-supervised HSI classification. Meanwhile, Romero et al. [34] proposed an unsupervised deep CNN to extract sparse features in order to cope with the limitation of a small training set. In 2018, a generative adversarial network (GAN) was used as a supervised classification method to obtain accurate classification of HSI [42], and in [43] a GAN-based semi-supervised classification method utilizing spectral features was proposed.

Although deep learning-based methods have shown their capability in HSI processing, deep learning is still in the early stage for HSI classification. There are many specific classification problems, including few training samples and HSI with a great number of classes, to be solved by deep learning methods.

Fine-grained classification tries to classify data with a relatively large number of classes that differ only slightly from one another. Few methods have been proposed for HSI fine-grained classification. As far as we know, only in [44] was SVM used to classify HSI with a great number of classes. How to classify HSI with such volume and variety is an urgent task to be tackled. Given the advantages of deep learning, it is necessary to explore deep learning methods for HSI fine-grained classification.

Furthermore, because labeling samples is difficult and time-consuming, labeled training samples are usually limited. It is therefore necessary to use the unlabeled samples, which can improve the classification performance.

Moreover, the processing time is another important factor in practical applications. HSI classification with many training samples is time-consuming. In order to speed up the classification procedure, preprocessing of HSI, which reduces the computational complexity of classification, is usually needed. On one hand, these preprocessing techniques are traditionally performed through spectral dimensionality reduction algorithms (e.g., principal component analysis (PCA) [45] and Auto-Encoder (AE)). On the other hand, deep learning methods, which combine feature extraction and classification, can reduce the classification time via the feature extraction stage. In [30,46], spectral-spatial HSI classification methods based on deep feature extraction using stacked auto-encoders (SAE) were proposed, which achieved effective performance on HSI classification.

Generally speaking, a further refinement process can produce an improved classification output. Considering that, some post-processing methods that combine probabilistic graphical models, such as the Markov random field (MRF) and conditional random field (CRF), with CNN have been explored in [47,48]. For example, Liu et al. [49] used CRF to improve the segmentation outputs by explicitly modeling the contextual information between regions. Furthermore, Chen et al. [50] proposed a fully connected Gaussian CRF model with unary potentials obtained from a CNN instead of using a disconnected approach. Zheng et al. [51] formulated a dense CRF with Gaussian pairwise potentials as a recurrent neural network to improve the low-resolution prediction of a traditional CNN.

In this study, deep learning-based methods for hyperspectral supervised and semi-supervised fine-grained classification are investigated. With the help of deep learning models, the proposed methods achieve significant improvements in terms of classification accuracy. Besides, compared with traditional methods such as SVM-based methods, the deep learning models can reduce the total running time (e.g., training and test time). In more detail, the main contributions of this study are summarized as follows.

(1) The deep learning-based methods are explored for supervised and semi-supervised fine-grained classification of HSI for the first time.

(2) Densely connected convolutional neural network (DenseNet) is explored for supervised classification of HSI. Moreover, pre-processing (i.e., PCA and AE) and post-processing (i.e., CRF) techniques are combined with DenseNet to further improve the classification performance.

(3) A semi-supervised deep model, Semi-GAN, is proposed for semi-supervised classification of HSI. The Semi-GAN effectively utilizes the unlabeled samples to improve the classification performance.

(4) The proposed methods are tested on HSI under the condition of limited training samples, and the deep learning models obtain astounding classification performance.

The rest of the paper is organized as follows. Section 2 presents the densely connected CNN for HSI supervised fine-grained classification and Section 3 presents the GAN for HSI semi-supervised fine-grained classification. The details of experimental results are reported in Section 4. In Section 5, the conclusions and discussions are presented.

2. Densely Connected CNN for HSI Supervised Fine-grained Classification

HSI usually covers a wide range of the observed scene, which means that the data contain a complex data distribution and dozens of different classes at the same time. Without effective feature extraction, it is difficult to classify HSI accurately. Deep models, which use multiple processing layers to hierarchically extract abstract and discriminant features of the inputs, have the potential to handle accurate classification of complex data. In this section, CNNs are explored for HSI classification.

2.1. Deep Learning and Convolutional Neural Network

In general, deep learning-based methods use multiple layers, each composed of simple but nonlinear operations, to gradually learn a semantically meaningful representation of the inputs. By accumulating enough nonlinear layers, complex functions can be learned by a deep model. The deep model starts with raw pixels and ends with abstract features, and the learned discriminant features suppress irrelevant variations. This procedure is extremely important for a classification task.

There are many different ways to implement the idea of deep learning and the mainstream implementations include stacked Auto-Encoder, deep belief network, deep CNN, and deep recurrent neural network. Among the popular deep models, CNN is the most widely-used method for image processing due to the advantages of local connections, shared weights, and pooling.

The convolutional operation with nonlinear transform is the core part of a CNN and it is formulated as follows:

$$x_j^{l} = f\left(\sum_{i=1}^{M} x_i^{l-1} * k_{ij}^{l} + b_j^{l}\right)$$

where matrix $x_i^{l-1}$ is the $i$-th feature map of the $(l-1)$-th layer, $x_j^{l}$ is the $j$-th feature map of the $l$-th layer, and $M$ is the total number of feature maps. $k_{ij}^{l}$ is the convolution filter and $b_j^{l}$ is the corresponding bias. $f(\cdot)$ is a nonlinear transform such as the rectified linear unit (ReLU) and $*$ denotes the convolution operation. All the parameters including weights and biases are determined by back-propagation learning.
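As a minimal sketch of the convolution-plus-nonlinearity step described above, the following NumPy code computes each output feature map as a ReLU of the summed filter responses over all input maps plus a bias. The function names and array layouts are assumptions made for illustration, and, as in most deep learning frameworks, the sliding-window operation is implemented as a cross-correlation without padding.

```python
import numpy as np


def correlate2d_valid(feature_map, kernel):
    """Slide `kernel` over `feature_map` without padding (valid mode)."""
    kh, kw = kernel.shape
    out_h = feature_map.shape[0] - kh + 1
    out_w = feature_map.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(feature_map[r:r + kh, c:c + kw] * kernel)
    return out


def conv_layer(prev_maps, kernels, biases):
    """Compute ReLU(sum_i x_i * k_ij + b_j) for every output map j.

    prev_maps: (M, H, W) feature maps of the previous layer
    kernels:   (M, J, kh, kw) one filter per (input map, output map) pair
    biases:    (J,) one bias per output map
    """
    num_in, num_out = kernels.shape[:2]
    out_maps = []
    for j in range(num_out):
        total = sum(correlate2d_valid(prev_maps[i], kernels[i, j])
                    for i in range(num_in))
        out_maps.append(np.maximum(total + biases[j], 0.0))  # ReLU
    return np.stack(out_maps)


# Toy input: two 4x4 maps of ones, 3x3 filters of ones, three output maps.
maps = conv_layer(np.ones((2, 4, 4)),
                  np.ones((2, 3, 3, 3)),
                  np.array([0.0, -100.0, 1.0]))
print(maps.shape)  # (3, 2, 2)
```

In the toy call, the second output map is entirely zero because its large negative bias is clipped by the ReLU, which illustrates how the nonlinearity suppresses weak responses.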

The pooling operation merges the semantically similar features into one, which brings invariance to the feature extraction procedure. There are several pooling strategies and the most common pooling operation is max pooling.

By stacking convolution and pooling layers, a deep CNN can be established. In the training of a deep CNN, there are some problems such as vanishing gradients and the difficulty of weight initialization. Batch normalization (BN) [52] can stabilize the distributions of layer inputs, which is achieved by inserting additional BN layers that control the mean and variance of these distributions. In [53], it was shown that the effectiveness of batch normalization does not lie in reducing the so-called internal covariate shift but in making the landscape of the corresponding optimization problem significantly smoother. Let $B = \{x_1, \ldots, x_m\}$ contain a mini-batch of inputs; then the BN mechanism can be formulated as follows:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad \sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2$$

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad y_i = \gamma \hat{x}_i + \beta$$

The normalized result $\hat{x}_i$ is scaled and shifted by the learnable parameters $\gamma$ and $\beta$. $\epsilon$ is a constant for numerical stability.

The smoother optimization landscape implies that the gradients used in training are more predictive and well-behaved, which helps to cope with the vanishing gradient problem. BN is a practical tool in the training of a deep neural network and it usually speeds up the training procedure.
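The batch normalization step can be sketched in a few lines of NumPy. This is an illustrative training-time forward pass only, assuming per-feature statistics over the batch axis; the function name and the default value of `eps` are assumptions, and the running averages used at test time are omitted.

```python
import numpy as np


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each feature over the mini-batch, then scale and shift.

    batch: (m, features) mini-batch of inputs
    gamma, beta: learnable scale and shift (scalars or per-feature arrays)
    eps: small constant for numerical stability (assumed default)
    """
    mean = batch.mean(axis=0)
    var = batch.var(axis=0)  # biased variance, i.e. divided by m
    normalized = (batch - mean) / np.sqrt(var + eps)
    return gamma * normalized + beta


batch = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
out = batch_norm(batch, gamma=2.0, beta=3.0)
```

After normalization, each feature of `out` has a mean equal to `beta` and a standard deviation very close to `gamma`, regardless of the scale of the original inputs.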

2.2. Densely Connected CNN for HSI Supervised Fine-grained Classification

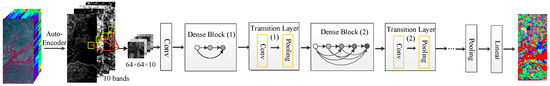

The proposed DenseNet framework for HSI fine-grained classification is illustrated in Figure 1. From Figure 1, one can see that there are three parts: data preparation, feature extraction, and classification. In the data preparation part, an Auto-Encoder is used to condense the information in the spectral domain, and then the neighbors of the pixel to be classified are selected as input. DenseNet, which is used for feature extraction, is the core part of the framework. Finally, a softmax classifier is used to obtain the final classification result.

Figure 1.

The framework of DenseNet for HSI supervised fine-grained classification.

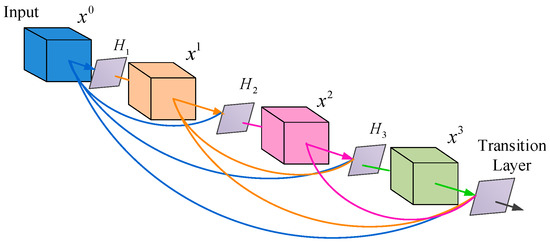

Traditional CNNs stack hidden layers to form a deep net. Simple stacking of layers leads to serious problems, including vanishing gradients and inefficient feature propagation. Although some techniques, including BN, alleviate these problems, the classification performance of CNNs can be further improved by modifying the architecture. DenseNet is a relatively new type of CNN [54]. In DenseNet, each layer obtains additional inputs from all preceding layers. Hence, the $l$-th layer has $l$ inputs obtained by connections. This scheme introduces $L(L+1)/2$ connections in an $L$-layer network, while a traditional CNN of $L$ layers has only $L$ connections. Figure 2 shows the situation when $L = 4$. There are three composite functions, which are denoted by $H_l$, and one transition layer. From the figure, we can see that the total number of connections (colored lines) is $4 \times 5 / 2 = 10$.

Figure 2.

A four-layer dense block.

In dense connection, each layer is connected to all subsequent layers. Let $x_l$ represent the feature maps of the $l$-th layer; $x_l$ is obtained through the combination of all previous layers:

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$$

where $[x_0, x_1, \ldots, x_{l-1}]$ represents the concatenation of the feature maps produced in layers 0, 1, …, l − 1, and $H_l(\cdot)$ is a composite function of operations: batch normalization, followed by an activation function (ReLU) and a convolution (Conv).

DenseNet can extract the discriminant features of similar classes, which are useful for fine-grained classification.
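To make the dense connectivity concrete, the sketch below concatenates all earlier feature maps before each layer and counts the resulting connections. It is only an illustration: the composite function is replaced by a hypothetical stand-in (ReLU followed by a random 1 × 1 channel mixing) rather than the BN-ReLU-Conv used in DenseNet, and all names and sizes are assumptions.

```python
import numpy as np


def count_dense_connections(num_layers):
    """Number of direct connections in a dense block with `num_layers` layers."""
    return num_layers * (num_layers + 1) // 2


def dense_block(x0, num_layers, growth_rate, rng):
    """Run a toy dense block on x0 of shape (C0, H, W).

    Each layer receives the concatenation of all earlier feature maps
    and contributes `growth_rate` new maps.
    """
    features = [x0]
    for _ in range(num_layers):
        stacked = np.concatenate(features, axis=0)  # all earlier maps
        # Stand-in for the composite function: ReLU, then a random 1x1
        # convolution (pure channel mixing). Not the real BN-ReLU-Conv.
        weights = rng.standard_normal((growth_rate, stacked.shape[0]))
        new_maps = np.einsum("oc,chw->ohw", weights, np.maximum(stacked, 0.0))
        features.append(new_maps)
    return np.concatenate(features, axis=0)


rng = np.random.default_rng(0)
out = dense_block(np.ones((10, 5, 5)), num_layers=4, growth_rate=16, rng=rng)
print(out.shape)                   # (74, 5, 5)
print(count_dense_connections(4))  # 10
```

The output keeps the 10 input maps untouched and appends 4 × 16 new ones, which is why feature reuse comes at a modest cost per layer.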

2.3. Dimensionality Reduction with DenseNet for HSI Fine-Grained Classification

HSI usually contains hundreds of spectral bands of the same scene, which provide abundant spectral information. As the number of bands increases, most traditional algorithms suffer dramatically from the curse of dimensionality (i.e., the Hughes phenomenon). In this study, two dimensionality reduction methods (i.e., whitening principal component analysis and Auto-Encoder) are combined with DenseNet for HSI fine-grained classification.

The whitening PCA, which is a modified PCA whose output has an identity covariance matrix, is a common way of dimensionality reduction. PCA condenses the data by reducing the dimensions to a suitable scale. In HSI dimensionality reduction, the whitening PCA is executed to extract the principal information along the spectral dimension, and then the reduced image is regarded as the input of deep models. With PCA, the computational complexity is dramatically reduced, which alleviates the overfitting problem and improves the classification performance.
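A minimal NumPy sketch of whitening PCA along the spectral dimension is given below. It assumes the image has already been flattened to one spectrum per row; the function name, the `eps` safeguard, and the synthetic data are assumptions for illustration, not the implementation used in the experiments.

```python
import numpy as np


def whitening_pca(pixels, n_components, eps=1e-8):
    """Project spectra onto the leading principal axes and whiten them.

    pixels: (N, d) array, one spectrum of d bands per pixel
    Returns an (N, n_components) array whose covariance is close to identity.
    """
    centered = pixels - pixels.mean(axis=0)
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)            # ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]  # largest first
    projected = centered @ eigvecs[:, order]
    return projected / np.sqrt(eigvals[order] + eps)


rng = np.random.default_rng(0)
# Synthetic correlated "spectra": 500 pixels with 20 bands.
spectra = rng.standard_normal((500, 20)) @ rng.standard_normal((20, 20))
reduced = whitening_pca(spectra, n_components=10)
```

For an image cube of shape (rows, cols, bands), the spectra would first be reshaped to (rows × cols, bands), reduced, and then reshaped back to (rows, cols, n_components) before neighborhoods are extracted.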

The Auto-Encoder is another way of dimensionality reduction. An Auto-Encoder can non-linearly transform data into a latent space. When this latent space has a lower dimension than the original one, this can be viewed as a form of non-linear dimensionality reduction. An Auto-Encoder typically consists of an encoder and a decoder, which together define the data reconstruction cost. The encoder mapping $f$ adopts the feed-forward process of the neural network to get the embedded feature, while the decoder mapping $g$ aims to reconstruct the original input. The process can be formulated as:

$$h = f(x), \qquad \hat{x} = g(h)$$

where $x$ denotes the input pixel vector, $\hat{x}$ denotes the reconstructed vector, and $h$ denotes the corresponding latent vector for classification. The difference between the original input vector and the reconstructed vector is reduced by minimizing the cost function:

$$J = \frac{1}{N}\sum_{i=1}^{N} \left\| x_i - \hat{x}_i \right\|^2$$

where $N$ is the number of pixels of an HSI.

The aforementioned whitening PCA or Auto-Encoder, which is used as a pre-processing technique, can be combined with DenseNet to build an end-to-end system to fulfill the HSI fine-grained classification task.
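The encoder, decoder, and reconstruction cost can be sketched as follows. This is a forward pass only, under several assumptions made for illustration: a single hidden layer, a tanh encoder, a linear decoder, randomly initialized weights, and a mean squared reconstruction error. The layer sizes (220 bands reduced to 10) mirror the numbers reported in this paper, but the architecture itself is hypothetical.

```python
import numpy as np


def encode(x, w_enc, b_enc):
    """Encoder f: map spectra to the latent space (tanh is an assumed choice)."""
    return np.tanh(x @ w_enc + b_enc)


def decode(h, w_dec, b_dec):
    """Decoder g: map latent vectors back to the spectral space."""
    return h @ w_dec + b_dec


def reconstruction_cost(x, x_hat):
    """Mean over pixels of the squared reconstruction error."""
    return np.mean(np.sum((x - x_hat) ** 2, axis=1))


rng = np.random.default_rng(0)
x = rng.standard_normal((100, 220))            # 100 pixels, 220 bands
w_enc = 0.01 * rng.standard_normal((220, 10))  # untrained, random weights
w_dec = 0.01 * rng.standard_normal((10, 220))
h = encode(x, w_enc, np.zeros(10))
x_hat = decode(h, w_dec, np.zeros(220))
cost = reconstruction_cost(x, x_hat)
```

Training would adjust the weights by gradient descent on `cost`; after training, only `encode` is needed to produce the reduced input for the classifier.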

2.4. CRF with DenseNet for HSI Fine-grained Classification

Besides combining dimensionality reduction with DenseNet as a pre-processing step, classification performance can also be improved by post-processing the output of DenseNet. Therefore, in this study, the conditional random field (CRF) is combined with DenseNet to further improve the classification accuracy of HSI.

In general, CRFs have been widely used in semantic segmentation to refine an initial coarse pixel-level class label, which is predicted from the local interactions of pixels and edges [55,56]. The goal of CRFs is to encourage pixels in a local neighborhood to take the same class label; in particular, they have been applied to smoothing noisy segmentation maps.

To overcome the limitations of short-range CRFs, we use the fully connected pairwise CRF proposed in [57] for its efficient computation and its ability to capture fine details based on long-range dependencies. In detail, we perform the CRF as a post-processing method on top of the convolutional network, treating every pixel as a CRF node that receives unary potentials from the CNN and Auto-Encoder-DenseNet.

The fully connected CRF employs the energy function:

$$E(x) = \sum_{i} \theta_i(x_i) + \sum_{i<j} \theta_{ij}(x_i, x_j)$$

where $x$ is the label assignment for pixels. The unary potential is $\theta_i(x_i) = -\log P(x_i)$, where $P(x_i)$ is the predicted label probability at pixel $i$ as computed by the convolutional network. The pairwise potential uses a fully connected graph; when all pairs of image pixels $i, j$ are connected, it takes the form:

$$\theta_{ij}(x_i, x_j) = \mu(x_i, x_j)\left[ w_1 \exp\left(-\frac{\|p_i - p_j\|^2}{2\sigma_\alpha^2} - \frac{\|I_i - I_j\|^2}{2\sigma_\beta^2}\right) + w_2 \exp\left(-\frac{\|p_i - p_j\|^2}{2\sigma_\gamma^2}\right) \right]$$

where $\mu(x_i, x_j) = 1$ if $x_i \neq x_j$ and zero otherwise. This function includes two Gaussian kernels, which stand for different feature spaces: the first kernel is based on both pixel positions $p$ and spectral bands $I$, and the second kernel depends only on pixel positions. The scales of the Gaussian kernels are decided by the hyperparameters $\sigma_\alpha$, $\sigma_\beta$, and $\sigma_\gamma$. The Gaussian CRF potentials in the fully connected CRF model of [57] that we adopt can capture long-range dependencies, and at the same time the model is amenable to fast mean field inference. The first kernel encourages pixels with similar positions and homogeneous spectral bands to take similar labels, and the second kernel takes only spatial proximity into consideration.
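The two-kernel pairwise term can be illustrated for a single pair of pixels as below. The sketch follows the commonly used form of the fully connected CRF with an appearance kernel and a smoothness kernel; the function names, the weights `w1` and `w2`, the three scale parameters, and the Potts-style label compatibility are stated here as assumptions, and the mean field inference itself is not shown.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((u - v) ** 2 for u, v in zip(a, b))


def pairwise_potential(label_i, label_j, pos_i, pos_j, spec_i, spec_j,
                       w1, w2, sigma_alpha, sigma_beta, sigma_gamma):
    """Penalty for giving two pixels different labels.

    The first (appearance) kernel uses positions and spectra; the second
    (smoothness) kernel uses positions only.
    """
    if label_i == label_j:  # Potts compatibility: no penalty for equal labels
        return 0.0
    d_pos = squared_distance(pos_i, pos_j)
    d_spec = squared_distance(spec_i, spec_j)
    appearance = w1 * math.exp(-d_pos / (2 * sigma_alpha ** 2)
                               - d_spec / (2 * sigma_beta ** 2))
    smoothness = w2 * math.exp(-d_pos / (2 * sigma_gamma ** 2))
    return appearance + smoothness


# Hypothetical parameter values, chosen only for the demonstration.
params = dict(w1=3.0, w2=1.0, sigma_alpha=10.0, sigma_beta=0.5, sigma_gamma=3.0)
near = pairwise_potential(0, 1, (5, 5), (5, 6), (0.2, 0.3), (0.2, 0.3), **params)
far = pairwise_potential(0, 1, (5, 5), (60, 80), (0.2, 0.3), (0.9, 0.1), **params)
```

Nearby pixels with similar spectra receive a large penalty for disagreeing labels (`near`), while distant, spectrally different pixels are almost unconstrained (`far`).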

3. Generative Adversarial Networks for HSI Semi-Supervised Fine-grained Classification

The collection of labeled training samples is costly and time-consuming. In addition, there is a tremendous number of unlabeled samples in the dataset. How to effectively combine the labeled and unlabeled samples is an urgent task in remote sensing processing. In this section, a GAN-based semi-supervised classification method is proposed for HSI fine-grained classification.

3.1. Generative Adversarial Network (GAN)

As a novel way to train a generative and a discriminative model, the GAN, which was proposed by Goodfellow et al. [58], has been successfully developed in many fields. Later, various GANs were proposed, such as the conditional GAN (cGAN) used for image generation [59], SRGAN for super-resolution [60], and CycleGAN [61] and DualGAN [62] for image-to-image translation. Other models were also developed for specific applications including video prediction [63], texture synthesis [64], and natural language processing [65].

Commonly, a GAN consists of two parts: the generative network $G$ and the discriminative model $D$. The generator $G$ learns the potential distribution of the real data and generates new, similar data samples, while the discriminator $D$ is a binary classifier that distinguishes the real input samples from the fake samples.

Assume that the input noise variable $z$ possesses a prior $p_z(z)$ and that the real samples follow the data distribution $p_{data}(x)$. After accepting random noise $z$ as input, the generator produces a mapping to data space, $G(z)$, where $G$ represents the function of the generative model. Similarly, $D(x)$ stands for the mapping function of the discriminative model.

In the optimization procedure, the aim of the discriminator $D$ is to maximize $\log D(x)$, which is the log-probability of assigning the correct labels to the correct sources, while the generator tries to make the distribution of the generated samples more similar to that of the real data; hence, we can train the generator $G$ to minimize $\log(1 - D(G(z)))$. Therefore, the ultimate aim of the training procedure is to solve the minimax problem:

$$\min_G \max_D V(D, G) = E_{x \sim p_{data}(x)}[\log D(x)] + E_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

where $E$ is the expectation operator. However, shallow multilayer perceptrons are usually inferior to deep models in dealing with complex data. Considering that deep learning-based methods have achieved many novel implementations in a variety of areas, deep networks (CNNs) are adopted to compose the models $G$ and $D$ in this paper [66].
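The value function of the minimax game can be estimated from discriminator outputs alone, as the following sketch shows. It assumes the standard GAN objective and takes the discriminator's probabilities on real and generated samples as plain lists; the function name and the toy probabilities are illustrative assumptions.

```python
import math


def gan_value(d_on_real, d_on_fake):
    """Monte Carlo estimate of the GAN value function.

    d_on_real: discriminator outputs D(x) on real samples, each in (0, 1)
    d_on_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_on_real) / len(d_on_real)
    fake_term = sum(math.log(1.0 - p) for p in d_on_fake) / len(d_on_fake)
    return real_term + fake_term


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
undecided = gan_value([0.5, 0.5], [0.5, 0.5])
# A confident discriminator scores real samples high and fake samples low.
confident = gan_value([0.9, 0.95], [0.1, 0.05])
```

The discriminator is updated to increase this value and the generator to decrease it; when the discriminator outputs 0.5 everywhere, the value equals −log 4.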

3.2. Generative Adversarial Networks for HSI Semi-Supervised Fine-grained Classification

Although GAN has exhibited promising applications in image synthesis [67] and many other areas [61], the discriminative model of the traditional GAN can only distinguish the real samples from the generated samples, which is not suitable for multiclass image classification. Recently, the concept of GAN has been extended to a conditional model with semi-supervised methods, where the labels of the true training data are imported into the discriminator $D$.

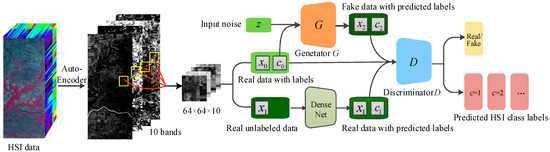

To adapt GAN to the multiclass HSI classification problem, we need some additional information for both $G$ and $D$. The introduced information usually consists of class labels used to train the conditional GAN. In this study, the proposed Semi-GAN, whose discriminator is modified to be a softmax classifier that outputs multi-class label probabilities, can be used for HSI classification. Besides the additional information from the training data with real class labels, the training data equipped with predicted labels are also introduced into the discriminator network. The main framework of Semi-GAN for HSI fine-grained classification is shown in Figure 3.

Figure 3.

The framework of HSI semi-supervised fine-grained classification.

From Figure 3, one can see that the network can extract spectral and spatial features together. First of all, the HSI data are fed into an Auto-Encoder to obtain the dimensionality-reduced data. In the whole training process, the dimensionality-reduced real data are divided into two parts: the labeled samples and the unlabeled samples. The labeled samples are introduced into both models $G$ and $D$, while the unlabeled samples are fed into the DenseNet to obtain the corresponding predicted labels. The input of the discriminator $D$ consists of the real labeled training data, the fake data generated by the generator $G$, and the real unlabeled training data with predicted labels; $D$ then outputs the probability distributions over sources and over class labels, $P(S \mid X), P(C \mid X) = D(X)$. Therefore, the ultimate aim of the discriminator $D$ is to maximize the log-likelihood of the right source:

$$L = E[\log P(S = \text{real} \mid X_{\text{real}})] + E[\log P(S = \text{fake} \mid X_{\text{fake}})]$$

Similarly, the aim of the $G$ network is to minimize the log-likelihood of the right source.

In the network, one can see that the real training data are composed of two parts: the labeled real data and the unlabeled real data with labels predicted by the trained DenseNet. The generator $G$ also accepts two inputs: the hyperspectral image class labels $c$ and the noise $z$; the output of $G$ can be defined by $X_{\text{fake}} = G(c, z)$. The probability distribution of sources $P(S \mid X)$ and the probability distribution of class labels $P(C \mid X)$ are obtained from the network $D$ [68]. Considering the different sources and labels of data, the objective function can be divided into two parts: the log-likelihood of the right source of the input data, $L_S$, and the log-likelihood of the right class labels, $L_C$:

$$L_S = E[\log P(S = \text{real} \mid X_l)] + E[\log P(S = \text{real} \mid X_u)] + E[\log P(S = \text{fake} \mid X_{\text{fake}})]$$

$$L_C = E[\log P(C = c_l \mid X_l)] + E[\log P(C = c_u \mid X_u)] + E[\log P(C = c_{\text{fake}} \mid X_{\text{fake}})]$$

where $X_l$ and $c_l$ represent the real labeled training samples and their true labels, respectively; $X_u$ and $c_u$ stand for the real unlabeled training samples and the predicted labels obtained by DenseNet; and $X_{\text{fake}}$ and $c_{\text{fake}}$ signify the generated samples from model $G$ and their corresponding labels. In the whole training process, $D$ is trained to maximize $L_S + L_C$, while $G$ is optimized to maximize $L_C - L_S$.
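The split of the objective into a source term and a class term can be sketched numerically as follows. The sketch assumes an auxiliary-classifier style objective with three groups of samples (labeled real, unlabeled real with predicted labels, and generated); the function names and the toy probabilities are hypothetical and only illustrate how the two players combine the two terms.

```python
import math


def mean_log(probabilities):
    """Average log-probability over a group of samples."""
    return sum(math.log(p) for p in probabilities) / len(probabilities)


def source_log_likelihood(p_real_labeled, p_real_unlabeled, p_fake_generated):
    """Log-likelihood that the discriminator names the right source."""
    return (mean_log(p_real_labeled) + mean_log(p_real_unlabeled)
            + mean_log(p_fake_generated))


def class_log_likelihood(p_class_labeled, p_class_unlabeled, p_class_generated):
    """Log-likelihood of the right class label for each group of samples."""
    return (mean_log(p_class_labeled) + mean_log(p_class_unlabeled)
            + mean_log(p_class_generated))


def discriminator_objective(l_s, l_c):
    """The discriminator is trained to maximize the sum of both terms."""
    return l_s + l_c


def generator_objective(l_s, l_c):
    """The generator wants correct classes but a fooled source prediction."""
    return l_c - l_s


# Toy probabilities assigned by the discriminator to the correct answer.
l_s = source_log_likelihood([0.9, 0.8], [0.7, 0.8], [0.6, 0.9])
l_c = class_log_likelihood([0.8, 0.9], [0.6, 0.7], [0.5, 0.6])
d_obj = discriminator_objective(l_s, l_c)
g_obj = generator_objective(l_s, l_c)
```

Because every term is a log-probability, both `l_s` and `l_c` are negative; a generator that fools the source prediction lowers `l_s` and therefore raises its own objective.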

4. Experimental Results

4.1. Data Description and Environmental Setup

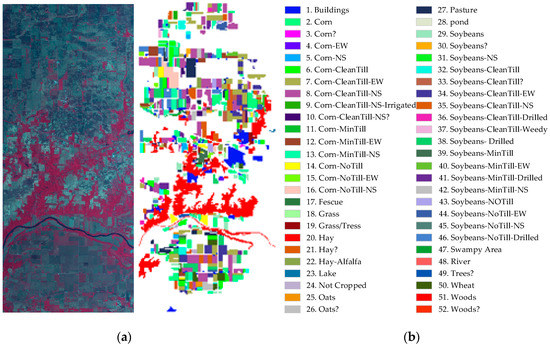

In this study, the Indian Pines dataset, which contains 333,951 samples with 52 classes, was adopted to validate the proposed methods. It covers a mixed vegetation site over the Indian Pines test area in north-western Indiana.

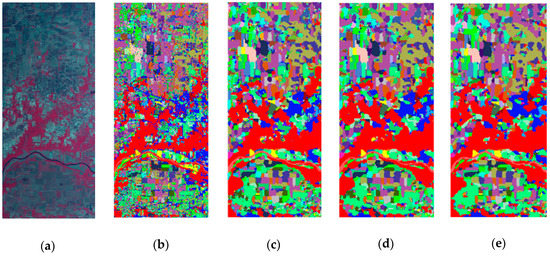

The dataset was collected by the Airborne Visible/Infrared Imaging Spectrometer over the Purdue University Agronomy farm northwest of West Lafayette and the surrounding area. In this experiment, we used the North-South scene because a North-South ground reference map is available. The scene has a size of 1403 × 614 pixels and 220 spectral bands in the wavelength range of 0.4–2.5 μm. The false-color image is shown in Figure 4a. Fifty-two different land-cover classes (each with more than 100 samples) are provided in the ground reference map, as shown in Figure 4b. In this study, the classification accuracy is mainly evaluated using the overall accuracy (OA), average accuracy (AA), and Kappa coefficient (K).

Figure 4.

The Indian Pines dataset. (a) False-color composite image (Band 40, 25, 10); (b) ground reference map.
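The three accuracy measures used in this study (OA, AA, and the Kappa coefficient) can all be computed from a confusion matrix. The sketch below assumes rows are reference classes and columns are predicted classes; the function name and the small two-class matrix are illustrative only.

```python
import numpy as np


def accuracy_metrics(confusion):
    """Return (OA, AA, Kappa) from a confusion matrix.

    confusion[i, j] counts samples of reference class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    oa = np.trace(confusion) / total                         # overall accuracy
    per_class = np.diag(confusion) / confusion.sum(axis=1)   # per-class accuracy
    aa = per_class.mean()                                    # average accuracy
    # Agreement expected by chance, from the row and column marginals.
    expected = (confusion.sum(axis=0) * confusion.sum(axis=1)).sum() / total ** 2
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa


oa, aa, kappa = accuracy_metrics([[50, 0], [10, 40]])
print(round(oa, 3), round(aa, 3), round(kappa, 3))  # 0.9 0.9 0.8
```

With many classes of very different sizes, as in this dataset, OA is dominated by the large classes while AA weights every class equally, which is why both are reported.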

For the dataset, the labeled samples were divided into two subsets, which contain the training and test samples. In the training process of the supervised methods, 8000 training samples were used to learn the weights and biases of each neuron. In the test process, 20,000 test samples were used to estimate the performance of the trained network. In the semi-supervised methods, besides the labeled data, unlabeled data were also used to improve the performance of the trained network: 20,000 unlabeled samples were fed into the training process in this experiment, and 20,000 samples were used to test the classification performance. The training and test samples were randomly chosen from all the samples. In order to obtain reliable results, all the experiments were run five times and the experimental results are given in the form of mean ± standard deviation. The number of training samples for the same class may differ between runs. Table 1 shows the distribution of the 52 classes and the number of training/test samples for each class in two runs (denoted by I and II).
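The random split and the mean ± standard deviation reporting can be sketched with the Python standard library. The sample counts come from the text above; the function names, the seeds, the use of the sample standard deviation, and the accuracy values in the last line are assumptions made purely for illustration.

```python
import random
import statistics


def split_labeled_samples(num_labeled, num_train, num_test, seed):
    """Randomly draw disjoint training and test index sets."""
    rng = random.Random(seed)
    chosen = rng.sample(range(num_labeled), num_train + num_test)
    return chosen[:num_train], chosen[num_train:]


def mean_and_std(values):
    """Mean and sample standard deviation over repeated runs."""
    return statistics.mean(values), statistics.stdev(values)


# One split per run; five runs with different (hypothetical) seeds.
splits = [split_labeled_samples(333951, 8000, 20000, seed=run) for run in range(5)]
train_idx, test_idx = splits[0]

# Placeholder accuracies for five runs, NOT results from this paper.
mean_acc, std_acc = mean_and_std([0.91, 0.92, 0.90, 0.93, 0.91])
```

Because the draw is not stratified, the number of training samples per class varies from run to run, which matches the behavior described for Table 1.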

Table 1.

The distribution of the 52 classes.

4.2. HSI Supervised Fine-Grained Classification

In this supervised experiment, the DenseNet was also compared with the traditional CNN used in HSI classification. The training and test samples were randomly chosen from the whole dataset, and 8000 labeled samples over all classes were used as the training data in view of the large number of classes.

The details of the basic DenseNet framework are shown in Table 2. As shown in Table 2, the DenseNet in the experiment had four dense blocks and three transition layers. Each dense block used the BN-ReLU-Conv ($1 \times 1$)-BN-ReLU-Conv ($3 \times 3$) version of $H_l$. The $1 \times 1$ convolution introduced before the $3 \times 3$ convolution was used to reduce the number of input feature maps, and thus improved computational efficiency. The growth rate in the experiment was set to 16, which meant that the number of input feature maps of each layer increased by 16 compared with the preceding layer. The numbers of $H_l$ in the four dense blocks were 2, 4, 6, and 8, respectively. For the $3 \times 3$ convolutional layer in a dense block, each side of the inputs was zero-padded by one pixel to keep the feature-map size fixed. Between two contiguous dense blocks, we used a transition layer that contained a $1 \times 1$ convolution followed by average pooling to reduce the size of the feature maps. At the end of the last dense block, global average pooling was executed, and then a softmax classifier was attached to get the predicted labels. In the Auto-Encoder-DenseNet, ten principal components of the hyperspectral data were preserved after the Auto-Encoder. The classification results with different numbers of principal components are shown in Table 3. From Table 3, one can see that Auto-Encoder-DenseNet with ten principal components obtained the best classification performance, which is why ten principal components were preserved. Similarly, for comparison, the spectral channels were condensed to ten dimensions through PCA in the PCA-DenseNet.
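The effect of the growth rate on the number of feature maps can be traced with a short bookkeeping function. The block sizes (2, 4, 6, 8) and the growth rate of 16 are taken from the text; the initial channel count of 32 and the assumption that transition layers keep the number of channels unchanged are hypothetical, since those details are given only in Table 2.

```python
def dense_block_channels(initial_channels, layers_per_block, growth_rate):
    """Track input channels per layer and output channels per dense block.

    Assumes transition layers between blocks do not change the channel count.
    """
    summary = []
    channels = initial_channels
    for num_layers in layers_per_block:
        # Layer i of a block sees the block input plus i * growth_rate new maps.
        layer_inputs = [channels + i * growth_rate for i in range(num_layers)]
        channels += num_layers * growth_rate
        summary.append({"layer_inputs": layer_inputs, "output_channels": channels})
    return summary


# initial_channels=32 is an assumed value for illustration.
summary = dense_block_channels(32, (2, 4, 6, 8), growth_rate=16)
for index, block in enumerate(summary, start=1):
    print(index, block["output_channels"])
```

Under these assumptions the four blocks end with 64, 128, 224, and 352 feature maps, which shows why a small growth rate keeps even a 20-layer dense network compact.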

Table 2.

The detailed framework of DenseNet.

Table 3.

Test accuracy of Auto-Encoder-DenseNet with different numbers of principal components.

In the CNN-based methods and DenseNet-based methods, we used where the d represented the number of spectral bands and neighbors of each pixel as the input 3D images without compressing and with compressing, respectively. For the RBF-SVM method, we used a “grid-search” method to find the most appropriate $C$ and $\gamma$ [69]. In this manner, pairs of $(C, \gamma)$ were tried and the one with the best classification accuracy on the validation samples was picked. This method was convenient and straightforward. In this experiment, the ranges of $C$ and $\gamma$ were and , respectively. Furthermore, in the RF-based methods, we preserved three principal components of the hyperspectral data after the AE stage, and the neighbors of each pixel were regarded as the input 3D images. The input images were normalized into the range [−0.5, 0.5]. The parameters of the deep models were generally selected by trial and error. Table 4 shows the detailed parameter settings of the deep models. The learning rate was chosen from {0.1, 0.01, 0.005, 0.002, 0.001, 0.0005, 0.0002, 0.0001}. The number of epochs was chosen from {100, 150, 200, 250, 300, 350}. The batch size was chosen from {50, 100, 200, 500, 1000, 5000}. We carefully trained and optimized the involved models for a fair comparison.
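The grid-search procedure for the RBF-SVM can be sketched independently of any SVM library. In the sketch below, `validation_score` is a hypothetical callback that would train an SVM with the given pair of parameters and return its validation accuracy; here it is replaced by a toy function with a known optimum, and the candidate grids are illustrative values rather than the ranges used in the experiments.

```python
import itertools
import math


def grid_search(c_values, gamma_values, validation_score):
    """Try every (C, gamma) pair and keep the best-scoring one."""
    best_pair, best_score = None, float("-inf")
    for c, gamma in itertools.product(c_values, gamma_values):
        score = validation_score(c, gamma)
        if score > best_score:
            best_pair, best_score = (c, gamma), score
    return best_pair, best_score


def toy_score(c, gamma):
    """Stand-in for validation accuracy, peaking at C = 8 and gamma = 0.5."""
    return -((math.log2(c) - 3) ** 2) - (math.log2(gamma) + 1) ** 2


# Illustrative exponential grids (assumed, not the paper's ranges).
c_grid = [2.0 ** k for k in range(-2, 7)]
gamma_grid = [2.0 ** k for k in range(-4, 3)]
best_pair, best_score = grid_search(c_grid, gamma_grid, toy_score)
print(best_pair)  # (8.0, 0.5)
```

Exponentially spaced candidates are a common choice for these two parameters because the accuracy typically changes slowly over several orders of magnitude.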

Table 4.

The detailed parameter settings of deep models.

In this study, L2 regularization was used as the regularization technique in DenseNet and Semi-GAN (described later). L2 regularization, which drives the weights toward smaller values, is a commonly used technique for handling overfitting. In the experiments, the weight decay hyperparameter was set to 0.0001. Furthermore, global average pooling (GAP) was used in DenseNet to reduce the number of parameters and thereby alleviate overfitting. The details of global average pooling can be found in the network structure.
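The effect of weight decay on a single update can be sketched as follows. This is a minimal example of plain gradient descent with an L2 penalty, assuming the penalty is written as (weight_decay / 2) · Σ w²; the learning rate of 0.01 and the toy weights are illustrative choices, and the optimizer actually used in the experiments is not specified here.

```python
def sgd_step_with_weight_decay(weights, grads, lr=0.01, weight_decay=1e-4):
    """One gradient step on loss + (weight_decay / 2) * sum(w ** 2)."""
    # The L2 penalty adds weight_decay * w to each gradient, pulling w toward zero.
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


weights = [1.0, -2.0]
# With a zero data gradient, only the penalty acts and every weight shrinks in magnitude.
shrunk = sgd_step_with_weight_decay(weights, grads=[0.0, 0.0])
print(shrunk)
```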

The classification results obtained from the different methods are shown in Table 5, which includes the RF-based and original CNN-based methods to give a comprehensive comparison. To exploit spectral-spatial features, extended morphological profiles with RF (EMP-RF), a popular method in hyperspectral classification, were also evaluated. In the EMP-RF method, three principal components of the HSI were computed, and opening and closing operations were then applied to these three components to extract spatial information. The structuring element (SE) was a disk whose size increased from 1 to 4. Therefore, 24 spatial features were generated. The extracted features were fed to Random Forest [70] to obtain the final classification results. We also used extended multi-attribute profiles (EMAPs), which are an extension of attribute profiles (APs) using different types of attributes [71,72]. For the EMAP-RF method [73], four types of morphological attributes (area, diagonal, inertia, and standard deviation) were computed for every connected component of a grayscale image and stacked together. For every attribute, we set four thresholds and applied thinning or thickening operations according to how each connected component compared with the defined thresholds. Thus, for every band obtained from PCA, if N was the number of thresholds considered in the analysis, the AP was composed of 2N+1 images. With the three retained principal components and 16 thresholds in total over the four attributes, we obtained 3 × (2 × 16 + 1) = 99 feature maps in the EMAPs. In RF, 50 trees were used in the forest to train on the samples and predict the labels of the test data. In the CRF-based methods, we used the fully connected pairwise CRF proposed in [57] for its efficient computation and its ability to capture fine details based on long-range dependencies. The CRF was regarded as a post-processing method on top of the convolutional network, which treats every pixel as a CRF node receiving unary potentials from the CNN or the Auto-Encoder-DenseNet. Furthermore, the original CNN was also used as a benchmark method.
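The feature counts quoted for the two morphological baselines follow from simple arithmetic, which can be checked with the short sketch below. The function names are illustrative only; the numbers of components, SE sizes, and thresholds are those stated in the text.

```python
def emp_feature_count(n_components=3, n_se_sizes=4):
    # One opening and one closing per structuring-element size and component.
    return n_components * n_se_sizes * 2


def emap_feature_count(n_components=3, n_thresholds=16):
    # Each profile stacks N thinnings, N thickenings, and the original band: 2N + 1 images.
    return n_components * (2 * n_thresholds + 1)


print(emp_feature_count(), emap_feature_count())  # 24 99
```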

Table 5.

Test accuracy with different supervised methods on the Indian Pines dataset.

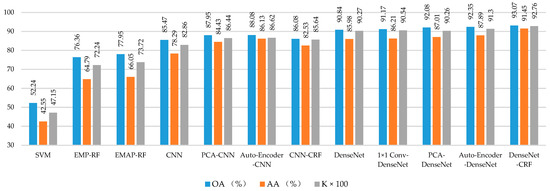

Table 5 shows the classification results of the different supervised methods, and Table 6 shows the classification results of the different preprocessing methods, on the Indian Pines dataset. For further comparison, Figure 5 illustrates the test accuracy of the relevant methods.

Table 6.

Test accuracy with different preprocessing methods on the Indian Pines dataset.

Figure 5.

Test accuracy of different supervised methods on the Indian Pines dataset.

Figure 5 presents the OA, AA, and K of the different classification methods on the Indian Pines dataset. The traditional methods (i.e., SVM, EMP-RF, and EMAP-RF) obtained relatively low classification accuracy compared with the deep learning-based methods. Among the deep CNN-based methods, DenseNet obtained better classification performance than the classical CNN. Moreover, combining DenseNet with pre-processing or post-processing improved the classification accuracy over the original DenseNet.

For example, the OA, AA, and K of CNN were 85.47%, 78.29%, and 0.8286, respectively, which were 33.23%, 35.74%, and 0.3571 higher than those of RBF-SVM. In turn, DenseNet performed better than CNN. On one hand, one can see that the preprocessing methods helped improve the classification performance. For example, the Auto-Encoder-DenseNet outperformed the DenseNet by 1.51%, 5.47%, and 0.0103 in OA, AA, and K, respectively. In addition, Auto-Encoder-DenseNet obtained the best classification performance in terms of OA, which showed that the auto-encoder-based methods achieved better classification accuracy than the PCA-based methods. On the other hand, the supervised methods combined with CRF achieved superior results compared with those without CRF. For example, the DenseNet-CRF obtained the highest scores in OA, AA, and K, exceeding the DenseNet by 2.23%, 4.44%, and 0.0249, respectively. This showed that CRF could be used as a post-processing technique to further improve the classification performance of deep models.
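For reference, the three scores compared above can be computed from a confusion matrix as sketched below. The sketch assumes the usual definitions (OA as overall accuracy, AA as the mean of the per-class accuracies, and K as Cohen's kappa coefficient) and assumes that every class has at least one sample; the toy confusion matrix contains made-up counts, not results from the experiments.

```python
def classification_scores(confusion):
    """Return (OA, AA, kappa) from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    oa = correct / total
    # AA averages the per-class accuracy, so small classes weigh as much as large ones.
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(n_classes)]
    aa = sum(per_class) / n_classes
    # Expected chance agreement from the row and column marginals.
    col_sums = [sum(row[j] for row in confusion) for j in range(n_classes)]
    pe = sum(sum(confusion[i]) * col_sums[i] for i in range(n_classes)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Toy two-class confusion matrix with made-up counts.
oa, aa, kappa = classification_scores([[40, 10], [5, 45]])
print(round(oa, 4), round(aa, 4), round(kappa, 4))
```

Because K is a coefficient rather than a percentage, differences in K are reported as plain numbers, while differences in OA and AA are reported in percentage points.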

In general, a deeper network may perform better than a shallow network because it composes a larger number of non-linear operations. However, arbitrarily increasing the depth of a network does not necessarily bring benefits; it may degrade the generalization ability of the network and cause overfitting.

To evaluate the sensitivity of DenseNet to depth, we performed several experiments with different network depths; the classification results and the time cost for five repeated experiments are shown in Table 7. In these experiments, the depth of DenseNet was controlled by the numbers of composite functions in the four dense blocks (i.e., (1, 2, 3, 4) and (2, 4, 6, 8)). The parameter (1, 2, 3, 4) means that the numbers of the aforementioned composite functions in the four dense blocks are 1, 2, 3, and 4, respectively. If we regard each composite function as a composite layer, then this network possesses 1 + 2 + 3 + 4 = 10 layers, and for the parameter (2, 4, 6, 8), it possesses 2 + 4 + 6 + 8 = 20 layers.

Table 7.

Test accuracy of DenseNet over different depth in Indian Pines dataset.

From the results, one can see that the classification accuracy first increased and then decreased as the network depth grew. This demonstrated that suitably increasing the depth of DenseNet could improve its classification ability, whereas an overly deep architecture may lead to overfitting. Overfitting degrades the generalization ability of the network, which is one reason for the drop in classification accuracy.

4.3. HSI Semi-Supervised Fine-grained Classification

From the aforementioned results, one can see that supervised methods usually require a large number of labeled samples to learn their parameters. However, labeled samples are commonly very limited in real remote sensing applications because of the high labeling cost. Semi-supervised methods, which exploit both labeled and unlabeled samples, have therefore been widely used to increase the accuracy and robustness of class predictions.

In this experiment, other semi-supervised classification methods, including transductive SVM (TSVM) and Label Propagation, were also evaluated to make a comprehensive comparison with the proposed Semi-GAN method. In TSVM, we used n-fold cross-validation for model selection. Considering a multiclass problem defined by a set of N class labels, the transductive process of TSVM was originally based on a structured architecture of binary classifiers [22], which was not suitable for multiclass classification of unlabeled samples. Therefore, a one-against-all multiclass strategy, which involved a parallel architecture of N different TSVMs, was adopted in this experiment. The training and test data were chosen randomly from the whole dataset under the assumption that there was at least one sample for each class. To assess the effectiveness of TSVM, the chosen test samples were regarded as unlabeled samples.

However, these samples were not used for model selection because their labels were assumed to be unavailable. In the graph-based Label Propagation method, we used an RBF kernel to construct the graph, and the clamping factor was set to 0.2, which means that 80 percent of the original label distribution was always retained and the confidence of the distribution could change within 20 percent [23]. This method iterated on a modified version of the original graph and normalized the edge weights by computing the normalized graph Laplacian matrix. In addition, it minimized a loss function with regularization properties to make the classification performance robust against noise.
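The role of the clamping factor can be illustrated with a small, self-contained sketch. It assumes the standard label-spreading update F ← αSF + (1 − α)Y with a symmetrically normalized RBF affinity matrix S, which matches the description above (normalized graph Laplacian, clamping factor of 0.2) but is not taken from the authors' code; the 1-D toy points, the kernel width `gamma`, and the iteration count are illustrative assumptions.

```python
import math


def label_spreading(points, labels, n_classes, gamma=1.0, alpha=0.2, n_iter=50):
    """Spread labels over an RBF graph; labels[i] == -1 marks an unlabeled point."""
    n = len(points)
    # RBF affinities with a zero diagonal.
    w = [[0.0 if i == j else math.exp(-gamma * (points[i] - points[j]) ** 2)
          for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in w]
    # Symmetrically normalized affinity S = D^{-1/2} W D^{-1/2}.
    s = [[w[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # One-hot initial label matrix; unlabeled rows stay all-zero.
    y = [[1.0 if labels[i] == c else 0.0 for c in range(n_classes)] for i in range(n)]
    f = [row[:] for row in y]
    for _ in range(n_iter):
        # alpha weights the neighbors' information; (1 - alpha) keeps the initial labels.
        f = [[alpha * sum(s[i][j] * f[j][c] for j in range(n)) + (1 - alpha) * y[i][c]
              for c in range(n_classes)] for i in range(n)]
    return [max(range(n_classes), key=lambda c: f[i][c]) for i in range(n)]


# Toy 1-D example: two well-separated clusters with one labeled point each.
points = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2]
labels = [0, -1, -1, 1, -1, -1]
print(label_spreading(points, labels, n_classes=2))
```

With α = 0.2, each update keeps 80 percent of the initial label information and takes 20 percent from the neighbors, so labeled points rarely change class while unlabeled points inherit the label of their cluster.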

In the proposed Semi-GAN, the real training samples from the dataset, which were reduced to 10 principal components through PCA, were first divided into a labeled part and an unlabeled part. The real labeled samples were introduced into both the discriminator and the generator, while the unlabeled samples were fed into the DenseNet to obtain the corresponding predicted labels. The size of the input noise to the generator is , and the generator converted the inputs to fake samples with the size of . The data received by the discriminator came from three sources: the real labeled training data, the fake data generated by the generator, and the real unlabeled training data with predicted labels. In addition, label smoothing, a technique that replaces the 0 and 1 targets of a classifier with smoothed values (here 0.2 and 0.8), was adopted to reduce the vulnerability of the neural networks [74]. The experimental arrangement and the details of the models and architectures followed [42].
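The label smoothing step can be sketched in a few lines. This is an illustration under stated assumptions: the smoothed values 0.2 and 0.8 are those quoted above, while the binary cross-entropy function is a generic stand-in for the discriminator's real/fake loss and is not taken from the authors' code.

```python
import math


def smooth_targets(targets, low=0.2, high=0.8):
    """Replace hard 0/1 targets with smoothed values (0 -> low, 1 -> high)."""
    return [high if t == 1 else low for t in targets]


def binary_cross_entropy(pred, target):
    """Cross-entropy between a predicted probability and a (possibly soft) target."""
    return -(target * math.log(pred) + (1 - target) * math.log(1 - pred))


print(smooth_targets([1, 0, 1]))
# With a smoothed target of 0.8, an over-confident prediction is penalized more
# than a prediction that matches the target.
print(binary_cross_entropy(0.8, 0.8) < binary_cross_entropy(0.99, 0.8))
```

Because the loss is no longer minimized by pushing the output toward exactly 0 or 1, the discriminator is discouraged from producing extreme, over-confident predictions.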

The classification results obtained from the different semi-supervised approaches are listed in Table 8. From Table 8, one can see that the proposed Semi-GAN obtained the best performance compared with Label Propagation and TSVM. The OA, AA, and K of our approach were 94.02%, 90.11%, and 0.9276, which were 34%, 38.91%, and 0.3359 higher than those of Label Propagation, respectively. Furthermore, Label Propagation coped with complex data better than TSVM. The OA, AA, and K of Label Propagation were higher than those of TSVM by 5.53%, 9.18%, and 0.0848, respectively.

Table 8.

Test accuracy with different semi-supervised methods on the Indian Pines dataset.

Moreover, to explore the capacity of our Semi-GAN, several experiments with progressively reduced training data were performed. Here, N denotes the number of labeled training samples fed into Semi-GAN to train the network. N was set to 4000, 6000, and 8000 in this experiment, and the classification accuracies obtained with the different numbers of training samples are shown in Table 9. The number of unlabeled samples used to train Semi-GAN was set to 20,000 in all of these experiments.

Table 9.

Test accuracy with different training samples numbers on Indian Pines dataset.

From the results, one can see that the performance of Semi-GAN deteriorated gradually as the number of labeled training samples decreased. For example, the network trained with 8000 labeled samples and 20,000 unlabeled samples obtained the highest scores in OA, AA, and K, exceeding those obtained with 6000 training samples by 1.49%, 1.97%, and 0.0101, respectively, and those obtained with 4000 training samples by 4.57%, 6.70%, and 0.05, respectively.

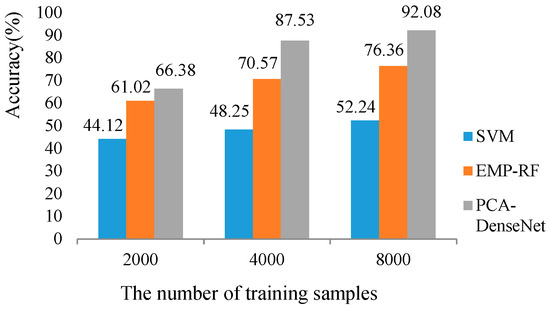

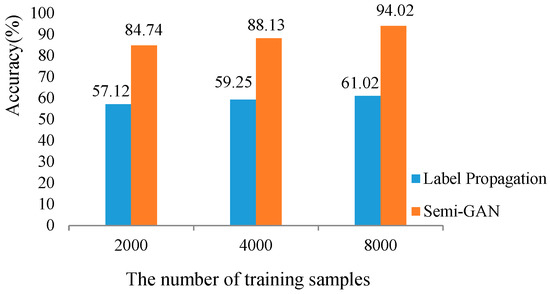

4.4. Limited Training Samples and Classification Maps

In this experiment, to compare the different supervised and semi-supervised methods comprehensively, we calculated the OA while the number of training samples was varied. The results of the supervised and semi-supervised methods are shown in Figure 6 and Figure 7, respectively.

Figure 6.

Test accuracy of different supervised methods with changed number of training samples.

Figure 7.

Test accuracy of different semi-supervised methods with changed number of training samples.

For the supervised methods, we chose SVM, EMP-RF, and PCA-DenseNet to report their different capacities in coping with complex data when the number of training samples was reduced. For the semi-supervised methods, Label Propagation and Semi-GAN were chosen for comparison. Considering the two figures separately, PCA-DenseNet and Semi-GAN always obtained the highest OA under the three conditions for supervised and semi-supervised classification, respectively. Compared with the traditional approaches used in HSI classification, the results demonstrated that the deep learning methods have a large capacity for coping with complex data. Considering the two figures together, Semi-GAN showed the best performance among all supervised and semi-supervised methods. Furthermore, the accuracy of Semi-GAN dropped less than that of PCA-DenseNet when the number of available training samples decreased, which indicated that the semi-supervised method coped better than the supervised approaches with limited training samples.

Moreover, we visually analyzed the classification results. The investigated methods include SVM, EMP-RF, PCA-DenseNet, and Semi-GAN. The classification maps of the different approaches are shown in Figure 8. From the maps, one can see how the different methods affected the classification results. The EMP-RF method had the lowest accuracy on the dataset (see Figure 8b). Compared with the traditional methods, the deep learning methods achieved superior classification performance. Furthermore, the proposed Semi-GAN gave a more detailed classification map than PCA-DenseNet.

Figure 8.

(a) False-color composite image of Indian Pines dataset; The classification maps using (b) EMP-RF; (c) PCA-DenseNet; (d) DenseNet-CRF; (e) Semi-GAN.

4.5. Consuming Time

In this study, the total running times for five repeated experiments of five methods, i.e., the CNN-based models and the traditional SVM model, on this dataset are shown in Table 10. In the SVM method, we preserved three principal components of the HSI after PCA, and the 27 × 27 × 3 neighbors of each pixel were regarded as the input 3D image. In the CNN-based methods, the size of each input image was 64 × 64 × d in CNN, where d represents the number of spectral bands, and 64 × 64 × 10 in Semi-GAN and PCA-DenseNet. All the experiments were run on a 3.2-GHz CPU with a GTX 1060 GPU card. The CNN-based methods were implemented on the PyTorch platform, and the SVM method was implemented with the LibSVM library.

Table 10.

Running time of five different methods.

From Table 10, one can see that the SVM method had the longest running time. When coping with complicated, large-volume data, the running time of the SVM-based method increased sharply with the number of training samples, which makes SVM unsuitable for classification with many training samples.

Compared with the SVM model, the deep models drastically reduced the total running time and improved the classification performance at the same time. In addition, preprocessing operations such as PCA and Auto-Encoder greatly reduced the running time, which makes deep learning methods more applicable to HSI fine-grained classification with a large number of classes.

5. Conclusions

Fine-grained classification of HSI remains an open task. In this study, deep learning-based methods were investigated for supervised and semi-supervised HSI fine-grained classification for the first time. The experimental results show that the proposed deep learning-based methods achieved superior classification accuracy.

For supervised fine-grained classification of HSI, a densely connected CNN was proposed for accurate classification. The deep learning-based methods significantly outperformed the traditional spectral-spatial classifiers such as SVM, EMP-RF, and EMAP-RF in terms of classification accuracy. Moreover, the combination of DenseNet with a pre-processing or post-processing technique was proposed to further improve classification accuracy.

For semi-supervised fine-grained classification of HSI, GAN was used to handle the labeled and unlabeled samples in the training stage. The proposed 3D Semi-GAN achieved better classification performance compared with traditional semi-supervised classifiers such as TSVM and Label Propagation.

The proposed deep learning models worked effectively with different numbers of training samples. The deep models exhibited good classification performance (e.g., OA was 88.23%) even under limited training samples (e.g., 4000 training samples were available, which meant there were only 77 training samples for each class on average in Auto-Encoder-DenseNet). The study demonstrates that deep learning has a huge potential for HSI fine-grained classification.

Author Contributions

Conceptualization, Y.C.; methodology, L.H., L.Z., and Y.C.; writing—original draft preparation, Y.C., L.H., L.Z., N.Y., and X.J.

Funding

This research was funded by the Natural Science Foundation of China under the Grant 61971164.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fauvel, M.; Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J.; Tilton, J.C. Advances in Spectral-Spatial Classification of Hyperspectral Images. Proc. IEEE 2012, 101, 652–675.

- Chang, C.-I. Hyperspectral Imaging: Techniques for Spectral Detection and Classification; Kluwer Academic Publishers: New York, NY, USA, 2003; pp. 15–35.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Spectral–spatial hyperspectral image segmentation using subspace multinomial logistic regression and Markov random fields. IEEE Trans. Geosci. Remote Sens. 2011, 50, 809–823.

- Ham, J.; Chen, Y.; Crawford, M.M.; Ghosh, J. Investigation of the random forest framework for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 492–501.

- Yang, H. A back-propagation neural network for mineralogical mapping from AVIRIS data. Int. J. Remote Sens. 1999, 20, 97–110.

- Gualtieri, J.A.; Cromp, R.F. Support vector machines for hyperspectral remote sensing classification. In Proceedings of the 27th AIPR Workshop: Advances in Computer-Assisted Recognition, Washington, DC, USA, 14–16 October 1998; pp. 221–232.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32.

- Xu, X.; Li, W.; Ran, Q.; Du, Q.; Gao, L.; Zhang, B. Multisource remote sensing data classification based on convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2017, 56, 937–949.

- Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.; Zhang, X.; Huang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423.

- Chen, Y.; Zhao, X.; Jia, X. Spectral–spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

- Palmason, J.A.; Benediktsson, J.A.; Sveinsson, J.R.; Chanussot, J. Classification of hyperspectral data from urban areas using morphological preprocessing and independent component analysis. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 25–29 July 2005; pp. 176–179.

- Pesaresi, M.; Benediktsson, J.A. A new approach for the morphological segmentation of high-resolution satellite imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320.

- Gu, Y.; Chanussot, J.; Jia, X.; Benediktsson, J.A. Multiple kernel learning for hyperspectral image classification: A review. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6547–6565.

- Fauvel, M.; Benediktsson, J.A.; Chanussot, J.; Sveinsson, J.R. Spectral and spatial classification of hyperspectral data using SVMs and morphological profiles. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3804–3814.

- Song, B.; Li, J.; Dalla Mura, M.; Li, P.; Plaza, A.; Bioucas-Dias, J.M.; Benediktsson, J.A.; Chanussot, J. Remotely sensed image classification using sparse representations of morphological attribute profiles. IEEE Trans. Geosci. Remote Sens. 2013, 52, 5122–5136.

- Camps-Valls, G.; Gomez-Chova, L.; Muñoz-Marí, J.; Vila-Francés, J.; Calpe-Maravilla, J. Composite kernels for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2006, 3, 93–97.

- Baraldi, A.; Bruzzone, L.; Blonda, P. Quality assessment of classification and cluster maps without ground truth knowledge. IEEE Trans. Geosci. Remote Sens. 2005, 43, 857–873.

- Chi, M.; Bruzzone, L. A semilabeled-sample-driven bagging technique for ill-posed classification problems. IEEE Geosci. Remote Sens. Lett. 2005, 2, 69–73.

- Shahshahani, B.M.; Landgrebe, D.A. The effect of unlabeled samples in reducing the small sample size problem and mitigating the Hughes phenomenon. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1087–1095.

- Bruzzone, L.; Chi, M.; Marconcini, M. A novel transductive SVM for semisupervised classification of remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3363–3373.

- Camps-Valls, G.; Marsheva, T.V.B.; Zhou, D. Semi-supervised graph-based hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3044–3054.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.-r.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Kingsbury, B. Deep neural networks for acoustic modeling in speech recognition. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Gao, J.; He, X.; Yih, W.-T.; Deng, L. Learning semantic representations for the phrase translation model. arXiv 2013, arXiv:1312.0482.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep learning-based classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Ma, X.; Wang, H.; Geng, J. Spectral–spatial classification of hyperspectral image based on deep auto-encoder. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4073–4085.

- Zhong, P.; Gong, Z.; Li, S.; Schönlieb, C.-B. Learning to diversify deep belief networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3516–3530.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 1–12.

- Romero, A.; Gatta, C.; Camps-Valls, G. Unsupervised deep feature extraction for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2015, 54, 1349–1362.

- Tao, Y.; Xu, M.; Lu, Z.; Zhong, Y. DenseNet-based depth-width double reinforced deep learning neural network for high-resolution remote sensing image per-pixel classification. Remote Sens. 2018, 10, 779.

- Mou, L.; Ghamisi, P.; Zhu, X.X. Deep recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3639–3655.

- Zhao, W.; Du, S. Spectral–spatial feature extraction for hyperspectral image classification: A dimension reduction and deep learning approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554.

- Li, W.; Wu, G.; Zhang, F.; Du, Q. Hyperspectral image classification using deep pixel-pair features. IEEE Trans. Geosci. Remote Sens. 2016, 55, 844–853.

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral–spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858.

- Li, Y.; Zhang, H.; Shen, Q. Spectral–spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sens. 2017, 9, 67.

- Sellami, A.; Farah, M.; Farah, I.R.; Solaiman, B. Hyperspectral imagery classification based on semi-supervised 3-D deep neural network and adaptive band selection. Expert Syst. Appl. 2019, 129, 246–259.

- Zhu, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Generative adversarial networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5046–5063.

- Zhan, Y.; Hu, D.; Wang, Y.; Yu, X. Semisupervised hyperspectral image classification based on generative adversarial networks. IEEE Geosci. Remote Sens. Lett. 2017, 15, 212–216.

- Cavallaro, G.; Riedel, M.; Richerzhagen, M.; Benediktsson, J.A.; Plaza, A. On understanding big data impacts in remotely sensed image classification using support vector machine methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4634–4646.

- Richards, J.A.; Richards, J. Remote Sensing Digital Image Analysis; Springer: Berlin, Germany, 1999; pp. 161–201.

- Mughees, A.; Tao, L. Efficient deep auto-encoder learning for the classification of hyperspectral images. In Proceedings of the 2016 International Conference on Virtual Reality and Visualization (ICVRV), Hangzhou, China, 23–25 September 2016; pp. 44–51.

- Chu, X.; Ouyang, W.; Wang, X. Crf-cnn: Modeling structured information in human pose estimation. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 316–324.

- Kirillov, A.; Schlesinger, D.; Zheng, S.; Savchynskyy, B.; Torr, P.H.; Rother, C. Joint training of generic CNN-CRF models with stochastic optimization. In Proceedings of the Asian Conference on Computer Vision, Taipei, China, 20–24 November 2016; pp. 221–236.

- Liu, F.; Lin, G.; Shen, C. CRF learning with CNN features for image segmentation. Pattern Recognit. 2015, 48, 2983–2992.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv 2014, arXiv:1412.7062.

- Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H. Conditional random fields as recurrent neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1529–1537.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How does batch normalization help optimization? In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 2–8 December 2018; pp. 2483–2493.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Rother, C.; Kolmogorov, V.; Blake, A. Grabcut: Interactive foreground extraction using iterated graph cuts. ACM Trans. Gr. (TOG) 2004, 23, 309–314.

- Shotton, J.; Winn, J.; Rother, C.; Criminisi, A. Textonboost for image understanding: Multi-class object recognition and segmentation by jointly modeling texture, layout, and context. Int. J. Comput. Vision 2009, 81, 2–23.

- Krähenbühl, P.; Koltun, V. Efficient inference in fully connected crfs with gaussian edge potentials. In Proceedings of the Advances in Neural Information Processing Systems, Granada, Spain, 12–17 December 2011; pp. 109–117.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Yi, Z.; Zhang, H.; Tan, P.; Gong, M. Dualgan: Unsupervised dual learning for image-to-image translation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2849–2857.

- Mathieu, M.; Couprie, C.; LeCun, Y. Deep multi-scale video prediction beyond mean square error. arXiv 2015, arXiv:1511.05440.

- Li, C.; Wand, M. Precomputed real-time texture synthesis with markovian generative adversarial networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 702–716.

- Yu, L.; Zhang, W.; Wang, J.; Yu, Y. Seqgan: Sequence generative adversarial nets with policy gradient. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein gan. arXiv 2017, arXiv:1701.07875.

- Odena, A.; Olah, C.; Shlens, J. Conditional image synthesis with auxiliary classifier gans. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 2642–2651.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- Breiman, L. RF/tools: A class of two-eyed algorithms. In Proceedings of the SIAM Workshop, San Francisco, CA, USA, 1–3 May 2003; pp. 1–56.

- Dalla Mura, M.; Atli Benediktsson, J.; Waske, B.; Bruzzone, L. Extended profiles with morphological attribute filters for the analysis of hyperspectral data. Int. J. Remote Sens. 2010, 31, 5975–5991.

- Dalla Mura, M.; Benediktsson, J.A.; Waske, B.; Bruzzone, L. Morphological attribute profiles for the analysis of very high resolution images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3747–3762.

- Ghamisi, P.; Benediktsson, J.A.; Cavallaro, G.; Plaza, A. Automatic framework for spectral–spatial classification based on supervised feature extraction and morphological attribute profiles. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2147–2160.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved techniques for training gans. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2234–2242.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).