Self-Adjusting Thresholding for Burnt Area Detection Based on Optical Images

Abstract

1. Introduction

2. Materials and Methods

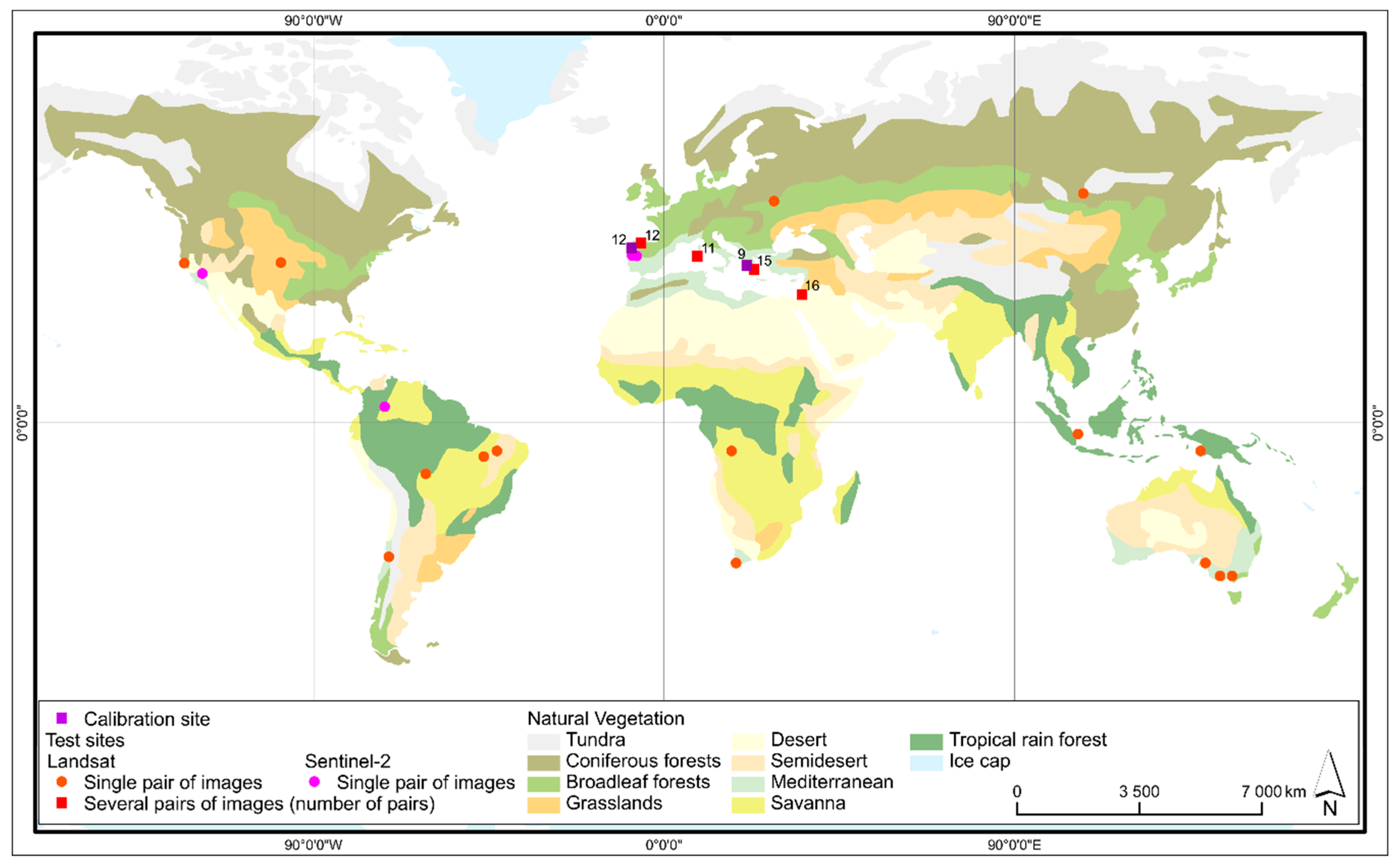

2.1. Data

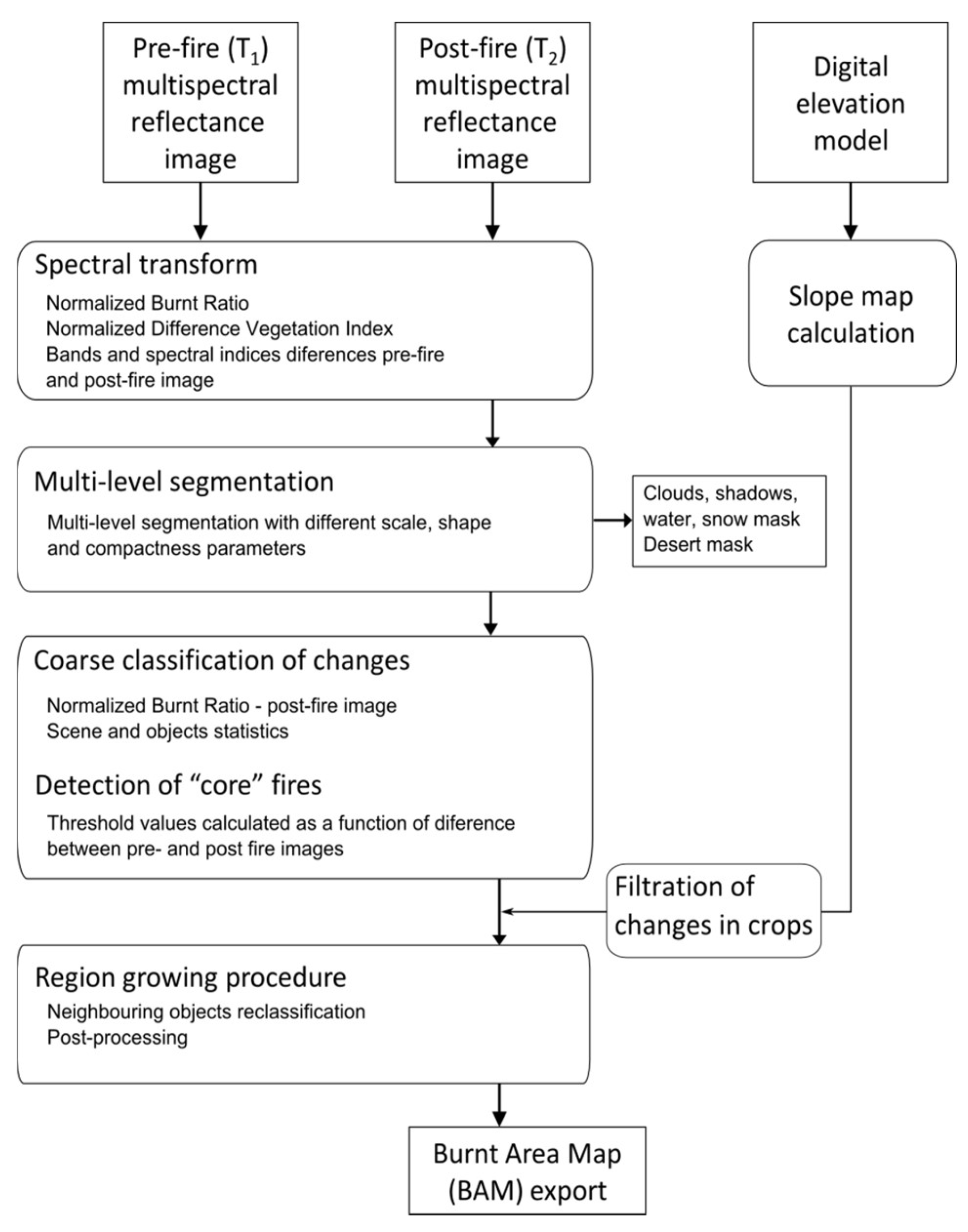

2.2. Method

2.2.1. Band Arithmetic

2.2.2. Segmentation and Masking

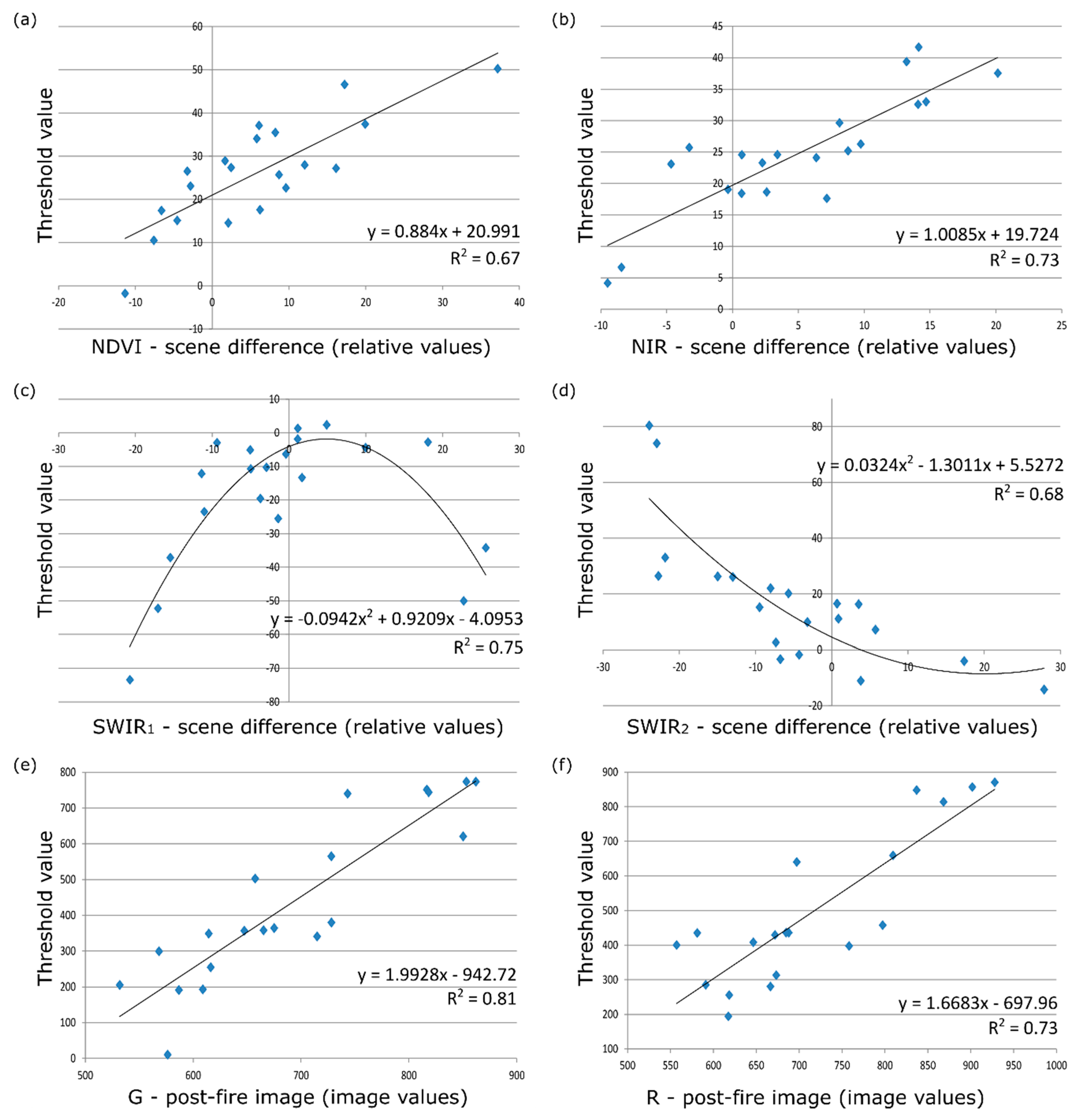

2.2.3. Core Burnt Areas Classification

2.2.4. Region Growing

2.3. Validation

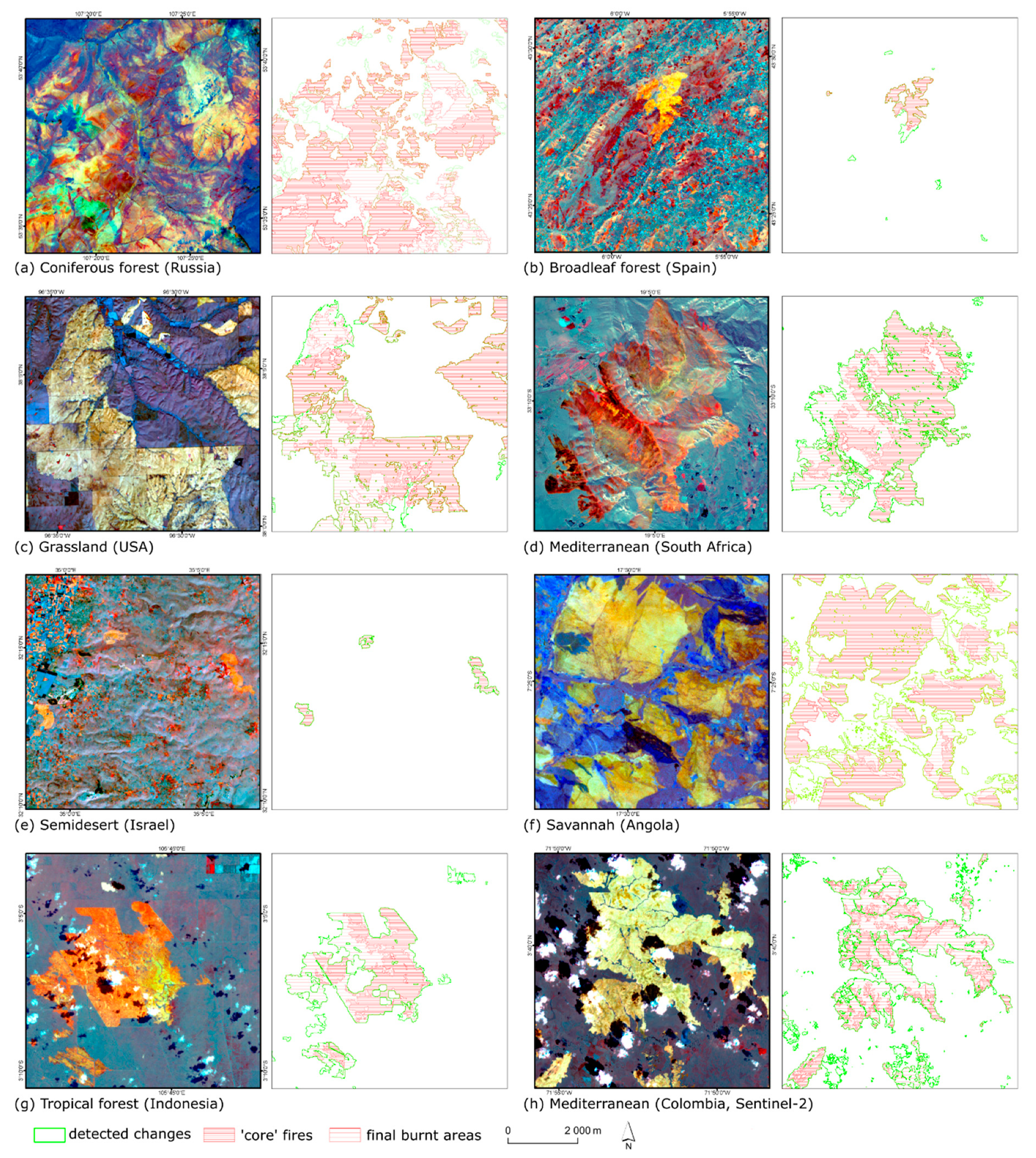

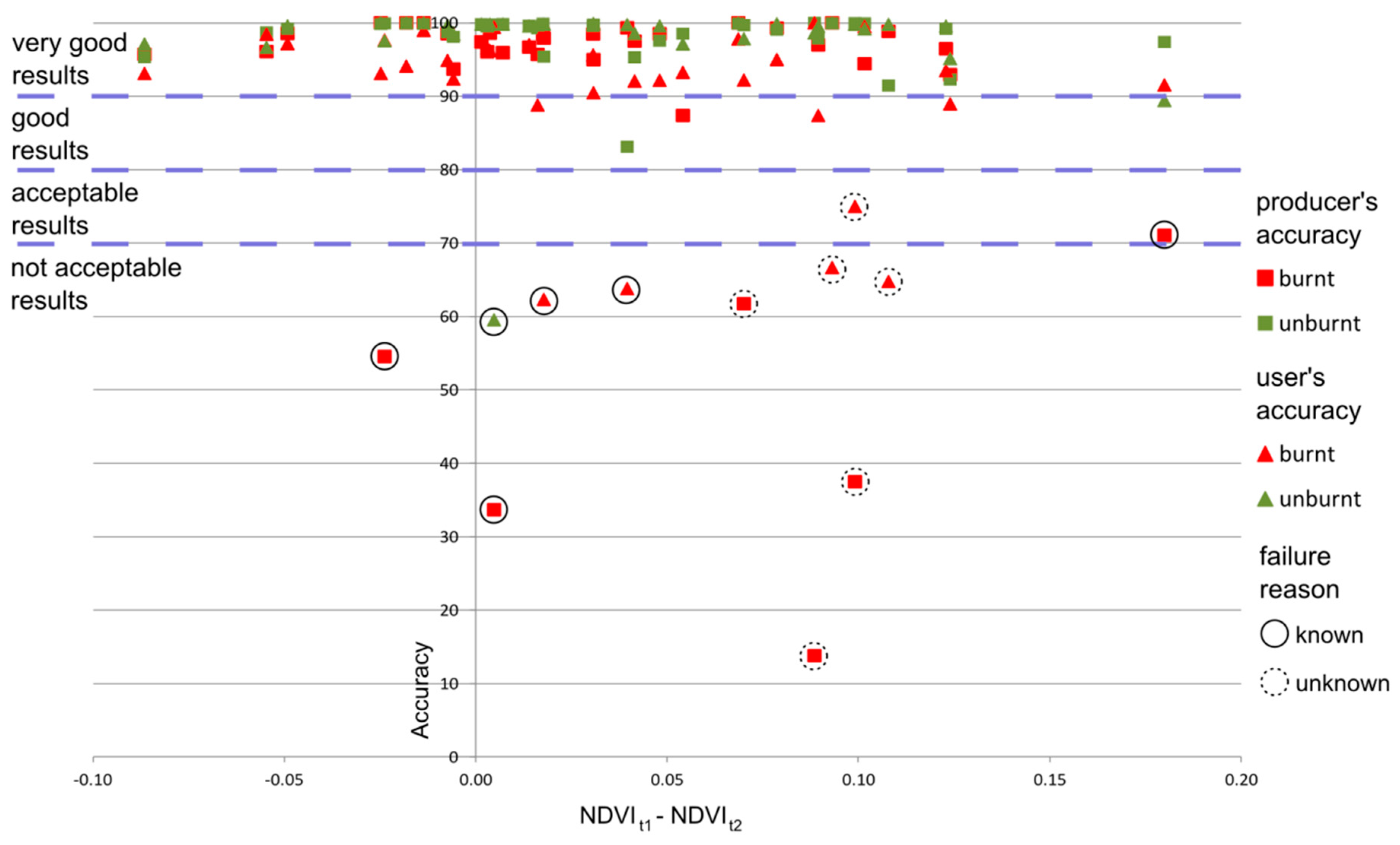

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

| Path 185 Row 33, pre-fire year | Path 185 Row 33, pre-fire day of year | Path 185 Row 33, post-fire year | Path 185 Row 33, post-fire day of year | Path 204 Row 31, pre-fire year | Path 204 Row 31, pre-fire day of year | Path 204 Row 31, post-fire year | Path 204 Row 31, post-fire day of year |
|---|---|---|---|---|---|---|---|
| 1986 | 92 | 1986 | 236 | 2000 | 248 | 2001 | 250 |
| 1986 | 204 | 1986 | 236 | 2003 | 192 | 2003 | 256 |
| 1999 | 216 | 1999 | 280 | 2006 | 120 | 2006 | 216 |
| 2002 | 176 | 2002 | 304 | 2007 | 107 | 2007 | 251 |
| 2003 | 187 | 2003 | 203 | 2009 | 112 | 2009 | 288 |
| 2010 | 190 | 2010 | 238 | 2010 | 115 | 2010 | 291 |
| 2011 | 113 | 2011 | 193 | 2013 | 107 | 2013 | 251 |
| 2011 | 193 | 2011 | 241 | 2013 | 187 | 2013 | 251 |
| 2013 | 182 | 2013 | 294 | 2014 | 71 | 2014 | 206 |
| 2016 | 176 | 2016 | 275 | | | | |
| 2017 | 102 | 2017 | 262 | | | | |

| Conditions using constant thresholds | | Conditions using variable thresholds (T) derived from the function | |
|---|---|---|---|
| NDVI_T2 | | dNIR | |
| dNBR | depending on mode of dNBR values | dSWIR1 | |
| | | dSWIR2 | |
| | | R_T2 | |
| | | G_T2 | |
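The indices named in the table above can be illustrated with a minimal sketch. It assumes the standard definitions NDVI = (NIR − R) / (NIR + R) and NBR = (NIR − SWIR2) / (NIR + SWIR2), and it assumes the common pre-fire minus post-fire sign convention for dNBR; the function names and reflectance values are hypothetical, and the thresholds applied in the article are not reproduced here.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    denom = a + b
    return (a - b) / denom if denom != 0 else 0.0


def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def nbr(nir, swir2):
    """Normalized Burn Ratio from NIR and SWIR2 reflectance."""
    return normalized_difference(nir, swir2)


def dnbr(nir_t1, swir2_t1, nir_t2, swir2_t2):
    """Differenced NBR; assumed convention: pre-fire (T1) minus post-fire (T2)."""
    return nbr(nir_t1, swir2_t1) - nbr(nir_t2, swir2_t2)


# Hypothetical reflectances: healthy vegetation at T1, charred surface at T2.
example_dnbr = dnbr(nir_t1=0.40, swir2_t1=0.10, nir_t2=0.15, swir2_t2=0.25)
print(round(example_dnbr, 2))
```

Under this convention a burnt pixel gives a positive dNBR, because NIR reflectance drops and SWIR2 reflectance rises after the fire.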

| Coniferous forest (Russia T1: 2015/169, T2: 2015/233) | Burnt | Unburnt | Semidesert (Israel T1: 1986/095, T2: 1987/162) | Burnt | Unburnt |
|---|---|---|---|---|---|
| Burnt | 14,083 | 1069 | Burnt | 391 | 23 |
| Unburnt | 1746 | 20,892 | Unburnt | 2 | 2776 |
| User accuracy (%) | 89.0 | 95.1 | User accuracy (%) | 99.5 | 99.2 |
| Producer accuracy (%) | 92.9 | 92.3 | Producer accuracy (%) | 94.4 | 99.9 |
| Overall accuracy (%) | 92.6 | | Overall accuracy (%) | 99.2 | |

| Broadleaf forest (Spain T1: 1984/165, T2: 1985/119) | Burnt | Unburnt | Savannah (Angola T1: 2003/144, T2: 2004/155) | Burnt | Unburnt |
|---|---|---|---|---|---|
| Burnt | 1540 | 69 | Burnt | 10,829 | 1939 |
| Unburnt | 114 | 2336 | Unburnt | 142 | 30,162 |
| User accuracy (%) | 93.1 | 97.1 | User accuracy (%) | 98.7 | 94.0 |
| Producer accuracy (%) | 95.7 | 95.3 | Producer accuracy (%) | 84.8 | 99.5 |
| Overall accuracy (%) | 95.5 | | Overall accuracy (%) | 95.2 | |

| Grassland (USA T1: 2016/003, T2: 2016/099) | Burnt | Unburnt | Tropical forest (Indonesia T1: 2009/217, T2: 2009/265) | Burnt | Unburnt |
|---|---|---|---|---|---|
| Burnt | 28,447 | 1162 | Burnt | 392 | 0 |
| Unburnt | 446 | 33,774 | Unburnt | 41 | 1079 |
| User accuracy (%) | 98.5 | 96.7 | User accuracy (%) | 90.5 | 100.0 |
| Producer accuracy (%) | 96.1 | 98.7 | Producer accuracy (%) | 100.0 | 96.3 |
| Overall accuracy (%) | 97.5 | | Overall accuracy (%) | 97.3 | |

| Mediterranean (South Africa T1: 2014/115, T2: 2015/070) | Burnt | Unburnt | Mediterranean / Sentinel-2 (Colombia T1: 2015/12/09, T2: 2016/01/19) | Burnt | Unburnt |
|---|---|---|---|---|---|
| Burnt | 841 | 37 | Burnt | 6091 | 155 |
| Unburnt | 37 | 4682 | Unburnt | 524 | 10,652 |
| User accuracy (%) | 95.8 | 99.2 | User accuracy (%) | 92.1 | 98.6 |
| Producer accuracy (%) | 95.8 | 99.2 | Producer accuracy (%) | 97.5 | 95.3 |
| Overall accuracy (%) | 98.7 | | Overall accuracy (%) | 96.1 | |
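The accuracy figures in the confusion matrices above can be recomputed from the pixel counts. The following is a minimal sketch rather than the authors' validation code: it assumes that rows hold the reference class and columns the mapped class, an orientation inferred from the fact that column totals reproduce the reported user accuracies. The function name `accuracy_metrics` is hypothetical.

```python
def accuracy_metrics(matrix):
    """Compute user, producer and overall accuracy (in percent).

    Assumed layout: matrix[i][j] is the number of samples whose
    reference class is i and whose mapped class is j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    # User accuracy: correct samples divided by all samples mapped as that class.
    user = [100.0 * matrix[k][k] / col_totals[k] for k in range(n)]
    # Producer accuracy: correct samples divided by all reference samples of that class.
    producer = [100.0 * matrix[k][k] / row_totals[k] for k in range(n)]
    overall = 100.0 * sum(matrix[k][k] for k in range(n)) / total
    return user, producer, overall


# Counts for the coniferous forest site (Russia): classes are [burnt, unburnt].
russia = [[14083, 1069], [1746, 20892]]
user, producer, overall = accuracy_metrics(russia)
print([round(u, 1) for u in user])      # [89.0, 95.1]
print([round(p, 1) for p in producer])  # [92.9, 92.3]
print(round(overall, 1))                # 92.6
```

The sketch does not guard against a class with no mapped or no reference samples, which would cause a division by zero.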

| Portugal (West) (T1: 2017/07/14, T2: 2017/09/02) | Burnt | Unburnt | Portugal (East) (T1: 2017/07/14, T2: 2017/09/02) | Burnt | Unburnt | California (T1: 2017/07/11, T2: 2017/10/19) | Burnt | Unburnt |
|---|---|---|---|---|---|---|---|---|
| Burnt | 6223 | 33 | Burnt | 32,330 | 63 | Burnt | 11,710 | 58 |
| Unburnt | 42 | 10,409 | Unburnt | 176 | 11,545 | Unburnt | 74 | 8568 |
| User accuracy (%) | 99.3 | 99.7 | User accuracy (%) | 99.5 | 99.6 | User accuracy (%) | 99.4 | 99.3 |
| Producer accuracy (%) | 99.5 | 99.6 | Producer accuracy (%) | 99.8 | 98.5 | Producer accuracy (%) | 99.5 | 99.1 |
| Overall accuracy (%) | 99.6 | | Overall accuracy (%) | 99.5 | | Overall accuracy (%) | 99.4 | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Woźniak, E.; Aleksandrowicz, S. Self-Adjusting Thresholding for Burnt Area Detection Based on Optical Images. Remote Sens. 2019, 11, 2669. https://doi.org/10.3390/rs11222669