Abstract

In this paper, we develop a hyperspectral feature extraction method called sparse and smooth low-rank analysis (SSLRA). First, we propose a new low-rank model for hyperspectral images (HSIs) where we decompose the HSI into smooth and sparse components. Then, these components are simultaneously estimated using a nonconvex constrained penalized cost function (CPCF). The proposed CPCF exploits a total variation (TV) penalty, an $\ell_1$ penalty, and an orthogonality constraint. The TV penalty is used to promote piecewise smoothness, and, therefore, it extracts spatial (local neighborhood) information. The $\ell_1$ penalty encourages sparse and spatial structures. Additionally, we show that this new type of decomposition improves the classification of the HSIs. In the experiments, SSLRA was applied on the Houston (urban) and the Trento (rural) datasets. The extracted features were used as an input into a classifier (either support vector machines (SVM) or random forest (RF)) to produce the final classification map. The results confirm improvement in classification accuracy compared to the state-of-the-art feature extraction approaches.

1. Introduction

Hyperspectral cameras can acquire remotely sensed images for a large number of contiguous spectral bands. Thus, a hyperspectral image (HSI) contains detailed spectral information of a scene. Since many kinds of materials have unique spectral signatures, this type of image is useful for recognizing the types of materials in a captured scene []. On the other hand, due to the Hughes effect [], which is also known as the curse of dimensionality, the high spectral dimensionality makes the analysis of HSIs a challenging task from both computational and statistical perspectives. The limited availability of training samples is a common issue in this kind of analysis since their collection can be both time-consuming and expensive []. When the number of training samples is limited, increasing the number of spectral features beyond a certain point usually decreases the classification accuracy. As a result, reducing the spectral dimensionality (or feature reduction) is of great interest in HSI analysis []. In general, dimensionality reduction (DR) techniques can be divided into feature selection (FS) and feature extraction (FE). In this paper, we focus on FE.

FE is the process of finding a set of vectors that represent an observation while reducing the dimensionality. For data classification, it is desirable to extract informative features that are useful for differentiating between classes of interest. Although DR is important for HSI analysis, the reduction in dimension must not sacrifice the discriminative power of the classifier [].

FE techniques can be broadly divided, based on the availability of training data, into two main groups: supervised FE (SFE) and unsupervised FE (USFE). The SFE methods require training samples while the USFE techniques are used to extract distinctive features in the absence of labeled training data.

SFE has been widely studied in the hyperspectral community []. Discriminant analysis feature extraction (DAFE) [] is a classical SFE approach. It is a parametric method that extracts features that maximize the ratio of the between-class variance to the within-class variance. The main shortcoming of DAFE is that it is not full rank: its rank is at most equal to the number of classes minus one. In addition, the class mean values can highly affect the performance of DAFE. Therefore, decision boundary feature extraction (DBFE) [] and nonparametric weighted feature extraction (NWFE) [] are suggested for HSI classification. In DBFE, the decision boundary is defined by applying the Bayes decision rules on the training samples, and from that a decision boundary matrix transformation is calculated to extract the feature vectors. Hence, DBFE could fail in the case of having too few training samples since it directly works with the training samples to determine the location of the effective decision boundaries. NWFE is designed to overcome the limitations of parametric feature extraction by putting different weights on samples to compute the local means and by defining new nonparametric between-class and within-class scatter matrices to produce more features than DAFE. In addition, discriminant analysis based techniques such as the linear constraint distance-based discriminant analysis (LCDA) [], the modified Fisher’s linear discriminant analysis (MFLDA) [], and a tensor representation-based discriminant analysis [] were all proposed to improve the performance of DAFE.

Recent SFE approaches take advantage of the local neighborhood properties (spatial information) of the data. Li et al. [] considered local Fisher’s discriminant analysis [] to perform DR while preserving the corresponding multi-modal structure. In [], local neighborhood information is taken into account in both spectral and spatial domains to obtain a discriminative projection for dimensionality reduction of hyperspectral data. Xue et al. [] introduced a nonlinear FE approach based on spatial and spectral regularized local discriminant embedding to address spatial variability and spectral multi-modality.

USFE techniques are usually based on optimizing an objective function to project the original features into a lower dimensional feature space. Principal component analysis (PCA) searches for a projection to maximize the signal variance []. Maximum noise fraction (MNF) [] and noise adjusted principal components (NAPC) [] seek a projection that maximizes the signal-to-noise ratio (SNR). Such FE approaches are mostly used for data representation, usually as a preprocessing step, and address the large size of hyperspectral datasets. Independent component analysis (ICA) [,], non-negative matrix factorization (NMF) [,], and hyperspectral unmixing [,] are other examples of USFE techniques.

Some FE techniques are proposed based on preserving local (spatial) information [,]. Neighborhood preserving embedding (NPE) [], locality preserving projection (LPP) [] and linear local tangent space alignment (LLTSA) [] are proposed for hyperspectral FE [,]. The work in [] develops a tensor version of the LPP algorithm for hyperspectral DR and classification. The work in [] proposes a common minimization framework called graph-embedding (GE), which is based on estimating an undirected weighted graph to describe the desired intrinsic (statistical or geometrical) properties of the data. The method uses either scale normalization or penalty graph constraints that describe undesirable properties. In [], a sparse graph-based discriminant analysis (SGDA) technique that induces sparsity on the graph construction is proposed for hyperspectral DR and classification. SGDA may not obtain acceptable results when the input data have a nonlinear and complex nature. To address this issue, a kernel extension of SGDA is proposed in []. An approach based on image fusion and recursive filtering [] is designed in [], which incorporates spatial information to extract informative features. In [], a DR approach is developed to estimate a sparse and low-rank projection matrix by fulfilling the restricted isometry property condition on all secants of hyperspectral data, which preserves the nearest neighbor points of all pixels and thereby improves the subsequent classification step. Total variation (TV) regularization is suggested in [] for HSI feature extraction. Wavelet-based sparse reduced-rank regression [] and sparse and low-rank modeling [] are suggested for hyperspectral feature extraction. Recently, in [], orthogonal total variation component analysis (OTVCA) is proposed, where a non-convex cost function is optimized to find the best representation for HSI in a low dimensional feature space while controlling the spatial smoothness of the features by using a TV regularization. The TV penalty promotes piecewise smoothness (homogeneous spatial regions) on the extracted features, and thus substantially helps to extract spatial (local neighborhood) information that is very useful for classification.

In this paper, we propose a USFE method for the classification of HSI called sparse and smooth low-rank analysis (SSLRA). SSLRA decomposes the HSI into sparse and piecewise smooth components. To capture the spectral redundancy of HSI and perform DR, we assume that these components can be represented in a lower dimensional space. Therefore, we propose a low-rank model for HSI in which the HSI is modeled based on a combination of sparse and smooth components. The components are estimated simultaneously by optimizing a constrained penalized cost function (CPCF). The TV and $\ell_1$ penalties are exploited by the CPCF to promote the smoothness and the sparsity of the corresponding components, respectively. We assume that the unknown bases are orthogonal, and therefore we solve the CPCF by enforcing an orthogonality constraint. In the experiments, we used two HSIs: (1) an urban HSI of the University of Houston campus; and (2) a rural HSI of the Italian city of Trento. The experiments confirmed that SSLRA outperforms both OTVCA and state-of-the-art FE techniques concerning classification accuracy.

The organization of the paper is as follows. The proposed hyperspectral feature extraction technique (SSLRA) and the corresponding algorithm are derived and explained in Section 2. Section 3 is devoted to the experimental results. Finally, Section 4 concludes the paper.

Notation

The notations used in this paper are as follows. The number of spectral bands and pixels in each band are denoted by p and n, respectively. r indicates the rank of the HSI. Matrices are represented by bold and capital letters, vectors by bold letters, the $(i,j)$th element of $\mathbf{X}$ by $x_{ij}$, and the ith column by $\mathbf{x}_{(i)}$. $\mathbf{I}_n$ denotes the identity matrix of size $n \times n$. $\hat{\mathbf{X}}$ stands for the estimate of $\mathbf{X}$. The Frobenius norm and TV-norm are denoted by $\|\cdot\|_F$ and $\|\cdot\|_{TV}$, respectively. The definitions of the symbols used in the paper are given in Table 1.

Table 1.

The definitions of the symbols used in this paper.

2. Hyperspectral Modeling and Sparse and Smooth Low-Rank Analysis

HSIs are often represented by using low-rank models. Such models have, for example, been shown to be more appropriate for HSI in terms of mean squared error than full-rank models []. However, the rank is unknown and has to be estimated [,]. We model the observed HSI as

$$\mathbf{H} = \mathbf{X} + \mathbf{N}, \tag{1}$$

where $\mathbf{H}$ is an $n \times p$ matrix containing the vectorized observed image at band i in its ith column, $\mathbf{X}$ is an $n \times p$ matrix representing the HSI, and $\mathbf{N}$ is an $n \times p$ matrix that represents the noise and model error. Here, we assume that $\mathbf{X}$ is a low-rank matrix, i.e., it has rank $r \ll p$. The low-rank property can be enforced by representing $\mathbf{X}$ as a product of two rank r matrices, $\mathbf{F} + \mathbf{S}$ and $\mathbf{V}^T$, which leads to the following low-rank model:

$$\mathbf{H} = (\mathbf{F} + \mathbf{S})\mathbf{V}^T + \mathbf{N}, \tag{2}$$

where $\mathbf{F}$ and $\mathbf{S}$ are matrices of size $n \times r$ containing the unknown smooth and sparse components, respectively, and $\mathbf{V}$ is an unknown $p \times r$ subspace matrix. The model in Equation (2) separates the sparse features from the smooth features. The smooth features can be used to promote smooth regions of interest in the classification maps.

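Assuming the model in Equation (2), we propose a CPCF to simultaneously estimate $\mathbf{F}$, $\mathbf{S}$, and $\mathbf{V}$ by solving

$$(\hat{\mathbf{F}}, \hat{\mathbf{S}}, \hat{\mathbf{V}}) = \arg\min_{\mathbf{F}, \mathbf{S}, \mathbf{V}} J(\mathbf{F}, \mathbf{S}, \mathbf{V}) \quad \text{s.t.} \quad \mathbf{V}^T\mathbf{V} = \mathbf{I}_r, \tag{3}$$

where

$$J(\mathbf{F}, \mathbf{S}, \mathbf{V}) = \frac{1}{2}\left\|\mathbf{H} - (\mathbf{F} + \mathbf{S})\mathbf{V}^T\right\|_F^2 + \lambda_1 \|\mathbf{F}\|_{TV} + \lambda_2 \|\mathbf{S}\|_1.$$

In the cost function $J$ of Equation (3), the TV-norm (see Appendix A) promotes piecewise smoothness on $\mathbf{F}$, the $\ell_1$ norm promotes sparsity on $\mathbf{S}$, and the constraint $\mathbf{V}^T\mathbf{V} = \mathbf{I}_r$ enforces the orthogonality condition on the subspace. Note that the minimization in Equation (3) is non-convex and therefore the solution might be a local minimum.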

To solve Equation (3), we use a cyclic descent (CD) algorithm [,,] where the problem is solved with respect to one matrix at a time while the others are assumed to be fixed. As a result, the proposed CD approach consists of the four steps discussed below: initialization, the $\mathbf{F}$ step, the $\mathbf{S}$ step, and the $\mathbf{V}$ step.

2.1. Initialization

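Decompose $\mathbf{H}$ by using truncated (rank-r) singular value decomposition (SVD), i.e., $\mathbf{H} \approx \mathbf{U}_r \boldsymbol{\Sigma}_r \mathbf{V}_r^T$. Then, initialize $\mathbf{V}^0 = \mathbf{V}_r$ and $\mathbf{S}^0 = \mathbf{0}$.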
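The initialization can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function name `init_sslra` is hypothetical, the data matrix is assumed to be stored as an n × p array with one vectorized band per column, and starting the sparse component at zero is an assumption of the sketch rather than something stated above.

```python
import numpy as np

def init_sslra(H, r):
    """Initialize the subspace from a rank-r truncated SVD of H (n x p)."""
    # Economy-size SVD: rows of Vt are the right singular vectors of H.
    _, _, Vt = np.linalg.svd(H, full_matrices=False)
    V0 = Vt[:r].T                      # p x r, orthonormal columns
    # Assumption of this sketch: the sparse component starts at zero.
    S0 = np.zeros((H.shape[0], r))     # n x r
    return V0, S0

rng = np.random.default_rng(0)
H_demo = rng.standard_normal((50, 8))  # toy data: 50 pixels, 8 bands
V0, S0 = init_sslra(H_demo, 3)
```

The columns of `V0` are orthonormal by construction, so the orthogonality constraint already holds at the first iteration.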

2.2. F-Step

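Given fixed $\mathbf{S}$ and $\mathbf{V}$, get $\mathbf{F}$ by solving Equation (3) which can be reduced to

$$\hat{\mathbf{F}} = \arg\min_{\mathbf{F}} \frac{1}{2}\left\|\mathbf{G} - \mathbf{F}\right\|_F^2 + \lambda_1 \|\mathbf{F}\|_{TV}, \tag{4}$$

where $\mathbf{G} = \mathbf{H}\mathbf{V} - \mathbf{S}$. The problem in Equation (4) can be thought of as r separable TV regularization problems [], one for each column of $\mathbf{F}$, that can be solved using the split Bregman iterations method given in [] (also known as the alternating direction method of multipliers (ADMM) []) denoted by

$$\hat{\mathbf{f}}_{(i)} = \mathrm{SplitBregman}(\mathbf{g}_{(i)}, \lambda_1), \quad i = 1, \ldots, r.$$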
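The reduction to a denoising-type problem relies only on the orthogonality of the subspace. As a short worked step, written in notation that is assumed here ($\mathbf{H}$ for the observed data matrix, $\mathbf{F}$, $\mathbf{S}$, and $\mathbf{V}$ for the smooth components, sparse components, and subspace, with $\mathbf{V}^T\mathbf{V} = \mathbf{I}_r$), the data-fidelity term can be expanded as

$$
\begin{aligned}
\left\|\mathbf{H} - (\mathbf{F}+\mathbf{S})\mathbf{V}^T\right\|_F^2
&= \|\mathbf{H}\|_F^2 - 2\,\mathrm{tr}\!\left((\mathbf{F}+\mathbf{S})^T\mathbf{H}\mathbf{V}\right) + \|\mathbf{F}+\mathbf{S}\|_F^2 \\
&= \left\|\mathbf{H}\mathbf{V} - \mathbf{S} - \mathbf{F}\right\|_F^2 + \|\mathbf{H}\|_F^2 - \|\mathbf{H}\mathbf{V}\|_F^2 .
\end{aligned}
$$

The first line uses $\|(\mathbf{F}+\mathbf{S})\mathbf{V}^T\|_F^2 = \|\mathbf{F}+\mathbf{S}\|_F^2$, which holds because $\mathbf{V}^T\mathbf{V} = \mathbf{I}_r$. The last two terms do not depend on $\mathbf{F}$, so minimizing over $\mathbf{F}$ is equivalent to a TV-regularized fit of $\mathbf{F}$ to $\mathbf{H}\mathbf{V} - \mathbf{S}$, which decouples over the r columns.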

2.3. S-Step

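Given fixed $\mathbf{F}$ and $\mathbf{V}$, get $\mathbf{S}$ by solving Equation (3), i.e.,

$$\hat{\mathbf{S}} = \arg\min_{\mathbf{S}} \frac{1}{2}\left\|\mathbf{H} - (\mathbf{F} + \mathbf{S})\mathbf{V}^T\right\|_F^2 + \lambda_2 \|\mathbf{S}\|_1. \tag{5}$$

It can be shown that Equation (5) is separable and the solution is given by

$$\hat{\mathbf{S}} = \max\left(0, |\mathbf{H}\mathbf{V} - \mathbf{F}| - \lambda_2\right) \odot \mathrm{sign}(\mathbf{H}\mathbf{V} - \mathbf{F}), \tag{6}$$

which is called soft-thresholding and often is written as

$$\hat{\mathbf{S}} = \mathrm{soft}(\mathbf{H}\mathbf{V} - \mathbf{F}, \lambda_2). \tag{7}$$

Note that the soft function in Equation (7) is applied element-wise on the matrix $\mathbf{H}\mathbf{V} - \mathbf{F}$.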
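For illustration, element-wise soft-thresholding can be written as a one-line NumPy function. The sketch assumes the standard definition soft(x, λ) = sign(x) · max(|x| − λ, 0); the function name `soft` and the toy input are chosen here for the example.

```python
import numpy as np

def soft(X, lam):
    """Element-wise soft-thresholding: shrink every entry toward zero by lam."""
    return np.sign(X) * np.maximum(np.abs(X) - lam, 0.0)

# Toy input: entries with magnitude at most lam are set to zero,
# the remaining entries are shrunk by lam.
X_demo = np.array([[3.0, -0.5],
                   [-2.0, 1.0]])
S_demo = soft(X_demo, 1.0)
```

Entries whose magnitude does not exceed the threshold become exactly zero, which is what makes the estimated component sparse.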

2.4. V-Step

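Given fixed $\mathbf{F}$ and $\mathbf{S}$, get $\mathbf{V}$ by solving Equation (3), which can be rewritten as

$$\hat{\mathbf{V}} = \arg\min_{\mathbf{V}} \left\|\mathbf{H} - (\mathbf{F} + \mathbf{S})\mathbf{V}^T\right\|_F^2 \quad \text{s.t.} \quad \mathbf{V}^T\mathbf{V} = \mathbf{I}_r. \tag{8}$$

The solution is given by a low-rank Procrustes rotation []

$$\hat{\mathbf{V}} = \mathbf{P}\mathbf{Q}^T, \tag{9}$$

where the matrices $\mathbf{P}$ and $\mathbf{Q}$ are given by the following truncated SVD of rank r

$$\mathbf{H}^T(\mathbf{F} + \mathbf{S}) = \mathbf{P}\boldsymbol{\Sigma}\mathbf{Q}^T, \tag{10}$$

where $\boldsymbol{\Sigma}$ is a diagonal matrix which contains the first r singular values of $\mathbf{H}^T(\mathbf{F} + \mathbf{S})$. The resulting algorithm is summarized in Algorithm 1.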
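A minimal NumPy sketch of the subspace update is given below. It assumes the Procrustes solution takes the form of the product of the left and right singular vectors of the p × r matrix formed from the data and the current components; the function name `v_step` and the synthetic check are illustrative only.

```python
import numpy as np

def v_step(H, F, S):
    """Orthogonal Procrustes update of the p x r subspace matrix."""
    M = H.T @ (F + S)                                  # p x r
    P, _, Qt = np.linalg.svd(M, full_matrices=False)   # P: p x r, Qt: r x r
    return P @ Qt                                      # orthonormal columns

# Noise-free check: if H = A V_true^T exactly, the update recovers V_true.
rng = np.random.default_rng(1)
n, p, r = 40, 10, 3
V_true, _ = np.linalg.qr(rng.standard_normal((p, r)))
A = rng.standard_normal((n, r))
H_demo = A @ V_true.T
V_hat = v_step(H_demo, A, np.zeros((n, r)))
```

Because the update is a product of two matrices with orthonormal columns or rows, the orthogonality constraint is satisfied exactly after every V-step without any additional projection.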

The monotonicity property of SSLRA can be observed easily since by construction the algorithm guarantees that the cost function is non-increasing with respect to the iteration index k, i.e., $J(\mathbf{F}^{k+1}, \mathbf{S}^{k+1}, \mathbf{V}^{k+1}) \le J(\mathbf{F}^{k}, \mathbf{S}^{k}, \mathbf{V}^{k})$. Therefore, the cost function is guaranteed to decrease or stay the same at each iteration of the algorithm. Since the cost function is both upper bounded (by the initial value) and lower bounded (since it is greater than or equal to zero), the sequence of cost function values converges to a finite value.

Note that the smooth features ($\mathbf{F}$) extracted using SSLRA are used for classification purposes in this paper. This is discussed further in Section 3.

Algorithm 1: SSLRA.
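The four steps can be combined into a compact NumPy sketch of the cyclic descent loop. Several choices below are assumptions made for illustration and are not taken from the text: the TV subproblem is solved with Chambolle's projection algorithm instead of split Bregman iterations, images are vectorized in row-major order, the sparse component starts at zero, and all function names are hypothetical.

```python
import numpy as np

def grad(u):
    """Forward differences; the last row/column difference is set to zero."""
    gx, gy = np.zeros_like(u), np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return gx, gy

def div(px, py):
    """Discrete divergence (negative adjoint of grad)."""
    dx, dy = np.zeros_like(px), np.zeros_like(py)
    dx[0, :] = px[0, :]
    dx[1:-1, :] = px[1:-1, :] - px[:-2, :]
    dx[-1, :] = -px[-2, :]
    dy[:, 0] = py[:, 0]
    dy[:, 1:-1] = py[:, 1:-1] - py[:, :-2]
    dy[:, -1] = -py[:, -2]
    return dx + dy

def tv_denoise(g, lam, n_iter=50, tau=0.125):
    """Approximately solve min_u 0.5*||u - g||^2 + lam*TV(u), lam > 0.

    Chambolle's projection algorithm, used here in place of split Bregman.
    """
    px, py = np.zeros_like(g), np.zeros_like(g)
    for _ in range(n_iter):
        gx, gy = grad(div(px, py) - g / lam)
        norm = np.sqrt(gx**2 + gy**2)
        px = (px + tau * gx) / (1.0 + tau * norm)
        py = (py + tau * gy) / (1.0 + tau * norm)
    return g - lam * div(px, py)

def soft(X, lam):
    """Element-wise soft-thresholding."""
    return np.sign(X) * np.maximum(np.abs(X) - lam, 0.0)

def sslra(H, shape, r, lam1, lam2, n_iter=20):
    """Cyclic descent over F (smooth), S (sparse), and V (subspace)."""
    n1, n2 = shape
    _, _, Vt = np.linalg.svd(H, full_matrices=False)
    V = Vt[:r].T                          # initialization from truncated SVD
    S = np.zeros((H.shape[0], r))         # assumed zero start
    for _ in range(n_iter):
        G = H @ V - S                     # F-step: r separate TV problems
        F = np.column_stack([
            tv_denoise(G[:, i].reshape(n1, n2), lam1).ravel()
            for i in range(r)
        ])
        S = soft(H @ V - F, lam2)         # S-step: soft-thresholding
        P, _, Qt = np.linalg.svd(H.T @ (F + S), full_matrices=False)
        V = P @ Qt                        # V-step: Procrustes rotation
    return F, S, V

rng = np.random.default_rng(0)
n1, n2, p, r = 8, 8, 6, 2
H_demo = rng.standard_normal((n1 * n2, p))   # toy 8 x 8 image with 6 bands
F, S, V = sslra(H_demo, (n1, n2), r, lam1=0.1, lam2=0.1, n_iter=5)
```

With a fixed number of inner TV iterations the F-step is only solved approximately, so this sketch does not strictly inherit the monotonicity argument given above; increasing `n_iter` in `tv_denoise` tightens the approximation.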

3. Experimental Results

Two HSIs, the Houston and Trento datasets, described below, were used in the experiments. The Houston dataset investigation is presented in Section 3.2, Section 3.3 and Section 3.4. The Trento dataset experiment is presented in Section 3.4. Two classifiers were used in the experiments: Random Forest (RF) and Support Vector Machine (SVM). For the RF, we set the number of trees to 200. For the SVM, a radial basis function (RBF) kernel was used. The penalty parameter C and spread of the RBF kernel were selected by searching in the range of and , respectively, using five-fold cross-validation. The classification metrics used in the experiments are Average Accuracy (AA), Overall Accuracy (OA), and Kappa Coefficient ($\kappa$) (see A.5 in []).
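The three metrics can be computed from a confusion matrix as in the following sketch, which assumes the standard definitions: OA is the fraction of correctly classified samples, AA is the mean of the per-class accuracies, and the Kappa coefficient corrects OA for chance agreement. The function name and the toy labels are illustrative.

```python
import numpy as np

def accuracy_metrics(y_true, y_pred, n_classes):
    """Return (OA, AA, kappa) for integer labels in 0..n_classes-1.

    Assumes every class occurs at least once in y_true.
    """
    C = np.zeros((n_classes, n_classes), dtype=float)
    np.add.at(C, (y_true, y_pred), 1)            # rows: true, columns: predicted
    total = C.sum()
    oa = np.trace(C) / total                     # overall accuracy
    aa = np.mean(np.diag(C) / C.sum(axis=1))     # mean per-class accuracy
    pe = np.sum(C.sum(axis=0) * C.sum(axis=1)) / total**2   # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa

y_true = np.array([0, 0, 0, 0, 1, 1])
y_pred = np.array([0, 0, 0, 1, 1, 1])
oa, aa, kappa = accuracy_metrics(y_true, y_pred, 2)
```

AA weights every class equally, so it is more sensitive than OA to errors in classes with few test samples.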

3.1. Datasets

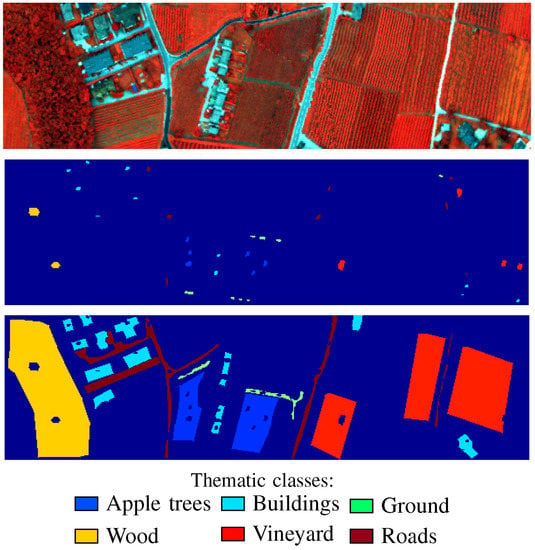

3.1.1. Trento

The first dataset is from a rural area in the south of the city of Trento, Italy. The size of the dataset is 600 by 166 pixels. The AISA Eagle sensor acquired the HSI with a spatial resolution of 1 m. The hyperspectral data consist of 63 bands ranging from 0.40 to 0.99 μm, where the spectral resolution is 9.2 nm. The available ground truth consists of six classes, i.e., Building, Wood, Apple Tree, Road, Vineyard, and Ground. Figure 1 illustrates a false color composite representation of the hyperspectral data along with the corresponding training and test samples. Table 2 provides information on the number of training and test samples for each class of interest.

Figure 1.

Trento (from top to bottom): A color composite representation of the hyperspectral data using bands 40, 20, and 10, as R, G, and B, respectively; training samples; test samples; and the corresponding color bar.

Table 2.

Trento: Number of training and test samples.

3.1.2. Houston

The Compact Airborne Spectrographic Imager (CASI) captured the second HSI over the University of Houston campus and the neighboring urban area. The data size is 349 × 1905 pixels, and the spatial resolution is 2.5 m. The hyperspectral dataset consists of 144 spectral bands ranging from 0.38 to 1.05 μm. The 15 classes of interest are: Grass Healthy, Grass Stressed, Grass Synthetic, Tree, Soil, Water, Residential, Commercial, Road, Highway, Railway, Parking Lot 1, Parking Lot 2, Tennis Court and Running Track. “Parking Lot 1” includes parking garages at the ground level and also in elevated areas, while “Parking Lot 2” corresponds to parked vehicles. Figure 2 illustrates a false color composite representation of the hyperspectral data and the corresponding training and test samples. Table 3 provides information on the number of training and test samples.

Figure 2.

Houston (from left to right): A color composite representation of the hyperspectral data using bands 70, 50, and 20 as R, G, and B, respectively; training samples; test samples; and the corresponding color bar.

Table 3.

Houston: Number of training and test samples.

It is important to note that we used the standard sets of training and test samples for the datasets mentioned above to make the results entirely comparable with most of the approaches available in the literature.

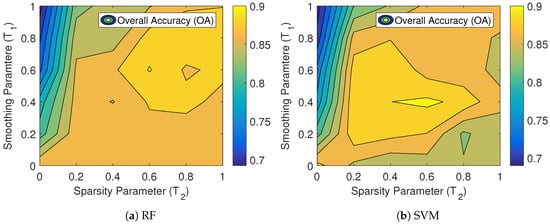

3.2. Performance of SSLRA with Respect to Tuning Parameters

We assessed the effect of the tuning parameters ($\lambda_1$ and $\lambda_2$) on the performance of the proposed algorithm. Since we were interested in the classification accuracy, we investigated the effect of the smoothing parameter ($\lambda_1$) and the sparsity parameter ($\lambda_2$) on OA. We selected the tuning parameter value with respect to a percentage of the range of the intensity value as follows:

where .

Figure 3 shows the contour plot of the OA with respect to $\lambda_1$ and $\lambda_2$ for both the random forest (RF) and the support vector machine (SVM) classifiers. It can be seen that along the diagonal line the OA has little variability and takes on high values. The results confirm, for this example, that, if the tuning parameters are selected to be equal, SSLRA is less sensitive in terms of OA with respect to $\lambda_1$ and $\lambda_2$. Tuning parameter selection is non-trivial and often a computationally expensive task. To save computations, we selected $\lambda_1 = \lambda_2$. Here, we selected $r = 15$, which is the number of classes, and we assumed that it is the dimension of the subspace. Note that one can argue that the number of classes of interest is a lower bound on the dimension of the subspace.

Figure 3.

Performance of OA with respect to the tuning parameters $\lambda_1$ and $\lambda_2$ obtained by applying RF and SVM classifiers on the extracted features from the University of Houston dataset.

3.3. Performance of SSLRA Compared to OTVCA

OTVCA is a recent FE technique whose advantages over the state-of-the-art techniques have already been confirmed [,]. For example, [] compares the performances of several USFE approaches (i.e., OTVCA [], PCA [], and LPP []), an SFE approach (i.e., NWFE []), and several semi-supervised FE approaches (i.e., SELF [], SELD [], and SEGL []). As shown in [], OTVCA considerably outperforms the aforementioned approaches in terms of classification accuracy. Therefore, we first compare the performance of SSLRA with OTVCA.

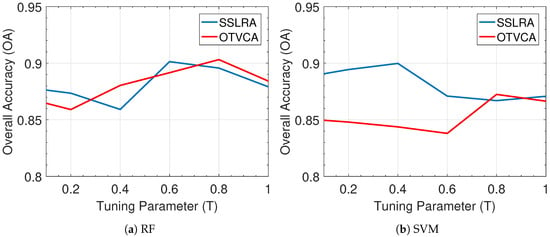

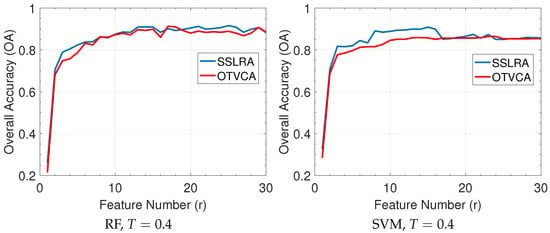

3.3.1. Comparisons with Respect to the Tuning Parameter

The tuning parameter T controls the amount of smoothness of $\mathbf{F}$, and therefore it can affect the classification accuracies. Hence, it is of interest to compare the performances of OTVCA and SSLRA with respect to T. Figure 4 shows that SSLRA and OTVCA give similar OA with respect to T for RF, but SSLRA gives higher overall accuracies for SVM except when . Note that increasing T causes oversmoothing of the extracted features, which might lead to the loss of information in the final classification map. We selected and to avoid oversmoothing of the features.

Figure 4.

Performance of OA with respect to the tuning parameter T obtained by applying RF and SVM classifiers on the extracted features from the University of Houston dataset.

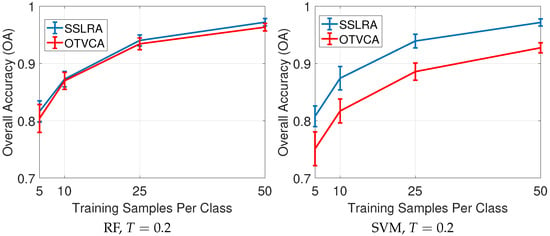

3.3.2. Comparisons with Respect to the Number of Features

A major advantage of FE techniques is their DR capability. In HSI classification, DR is of great interest since the spectral redundancy makes HSI classification computationally expensive and also DR can improve the classification task since it can address the Hughes effect or the curse of dimensionality to a large extent []. As a result, we investigated the performance of SSLRA in terms of OA with respect to r.

Figure 5 depicts the DR performance of SSLRA in terms of OA with respect to feature number r for both RF and SVM classifiers. For both SVM and RF and for both and when , SSLRA provides high OAs (close to 90%), which confirms the good performance of SSLRA concerning DR. Additionally, Figure 5 compares SSLRA with OTVCA in terms of OA with respect to r for both RF and SVM classifiers. The figure shows that SSLRA outperforms OTVCA in terms of OA and demonstrates better DR for the SVM classifier. For the RF, SSLRA and OTVCA perform similarly.

Figure 5.

Performance of OA with respect to r obtained by applying RF and SVM classifiers on the extracted features from the University of Houston dataset.

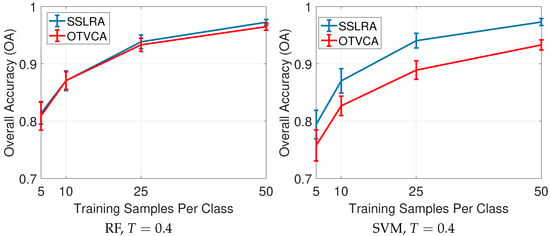

3.3.3. Comparisons with Respect to the Number of Training Samples

A major problem in classification applications is collecting reliable ground truth. The number of labeled samples per class is usually limited compared to the number of pixels. Hence, it is of interest to evaluate the performance of the proposed technique with respect to the number of samples selected per class. Figure 6 compares the performance of SSLRA with OTVCA in terms of OA obtained by applying SVM and RF on the extracted components. The experiments were performed by selecting different numbers of training samples per class (5, 10, 25, and 50) for the classification task. The reported results are the mean values over ten simulations, each time applying SVM and RF to the Houston features with randomly selected training samples (the error bars show standard deviations). The results for both SSLRA and OTVCA are shown for and .
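A random per-class draw of this kind can be sketched as follows. The helper name `sample_per_class` is hypothetical, and using all remaining labeled pixels as test samples is an assumption of the sketch, since the text does not state how the test set was formed in this experiment.

```python
import numpy as np

def sample_per_class(labels, n_per_class, rng):
    """Draw n_per_class training indices from every class without replacement.

    Raises ValueError if a class has fewer than n_per_class labeled samples.
    """
    train_idx = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        train_idx.append(rng.choice(idx, size=n_per_class, replace=False))
    train_idx = np.concatenate(train_idx)
    # Assumption: every labeled sample not drawn for training is used for testing.
    test_mask = np.ones(labels.size, dtype=bool)
    test_mask[train_idx] = False
    return train_idx, np.flatnonzero(test_mask)

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 20)      # toy ground truth: 3 classes, 20 samples each
train_idx, test_idx = sample_per_class(labels, 5, rng)
```

Repeating the draw with different seeds and averaging the resulting OA values gives the kind of mean and standard deviation reported over repeated simulations.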

Figure 6.

Performance of OA with respect to the number of training samples obtained by applying RF and SVM classifiers on the extracted features from the University of Houston dataset.

Both OTVCA and SSLRA show a similar trend in terms of OA with respect to the number of training samples. We see that SSLRA provides high accuracy by using only a few training samples (5 and 10) for both classifiers, which is of great interest in the remote sensing community. It can also be observed that, by increasing the number of training samples to only 50 samples per class, the OA reaches over 97% in all cases shown for SSLRA. We note that, only in the experiments presented in this subsection, we did not use the standard sets of test and training samples. We instead selected the samples randomly to be able to show the performance of the techniques with respect to the number of training samples.

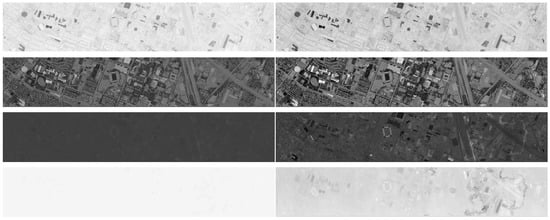

3.3.4. Visual Comparisons of Extracted Features

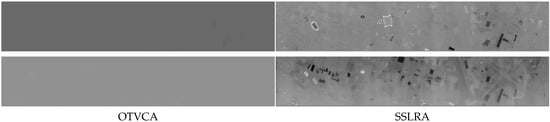

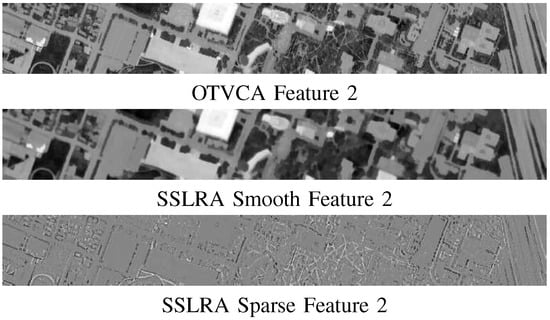

Figure 7 visually compares the components extracted by using OTVCA and the smooth components ($\mathbf{F}$) extracted by SSLRA from the Houston dataset. As can be seen, the shadow removal areas are apparent in components 4, 8, 10, and 15. The comparisons show that the sparse structures in the components extracted by OTVCA are not present in the smooth components extracted by SSLRA. The features extracted using SSLRA contain more homogeneous regions compared to the ones extracted by OTVCA. Figure 8 demonstrates this better. It shows a portion of feature 2 extracted by SSLRA compared with the corresponding OTVCA component. Figure 8 also depicts the sparse structures extracted by SSLRA. The sparse structures in the components decrease the classification accuracies since they are not frequently included in the regions of interest, and, therefore, the class labels are not available for these sparse structures. SSLRA separates the sparse structures from the smooth ones, which increases the classification accuracy and provides homogeneous class regions in the final classification map.

Figure 7.

Houston components extracted by using OTVCA and SSLRA (the smooth components (F)). From top to bottom: components 1, 2, 4, 8, 10 and 15.

Figure 8.

A portion of feature 2 of Houston extracted by using OTVCA and SSLRA.

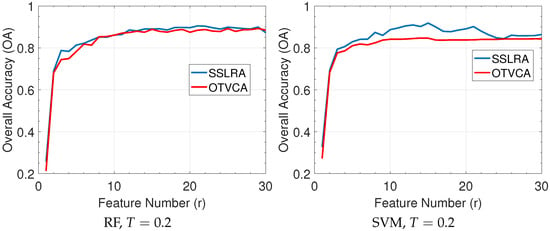

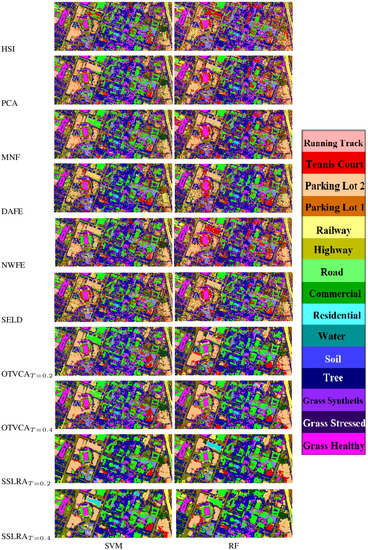

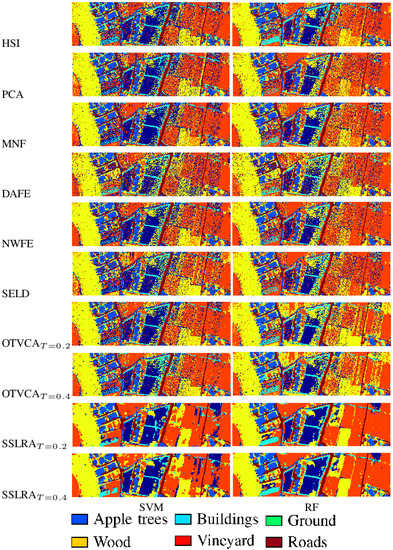

3.4. Performance of SSLRA with Respect to the State-of-the-Art

Here, we compared the performance of SSLRA with PCA, MNF [], DAFE [], NWFE [], and SELD [] as competitive FE approaches applied to Houston and Trento. The number of features was set to the number of classes of interest (i.e., 15 for Houston and 6 for Trento) except for DAFE, which extracts at most one feature fewer than the number of classes. In the tables, HSI denotes the classification results obtained by using the original spectral bands.

Table 4 and Table 5 show the classification accuracies obtained by applying SVM and RF, respectively, on Houston’s components extracted using different FE techniques. Similarly, Table 6 and Table 7 show the classification accuracies for Trento. In general, SSLRA outperforms the other FE approaches. For Houston, SSLRA improves OA by over 13% using RF and by 6% using SVM compared to HSI. For Trento, SSLRA improves OA by over 9% using RF and by 5% using SVM compared to HSI. OTVCA achieves the second best performance in terms of classification accuracy followed by MNF. As can be seen, DAFE gives the lowest OAs. Figure 9 and Figure 10 show the classification maps for the Houston and Trento datasets, respectively. These figures show that the maps obtained by SSLRA contain homogeneous class regions, which is of interest in classification applications.

Table 4.

Classification accuracies obtained by applying SVM on the features extracted from the Houston hyperspectral dataset. The highest accuracy in each row is shown in bold.

Table 5.

Classification accuracies obtained by applying RF on the features extracted from the Houston hyperspectral dataset. The highest accuracy in each row is shown in bold.

Table 6.

Classification accuracies obtained by applying SVM on the features extracted from the Trento hyperspectral dataset. The highest accuracy in each row is shown in bold.

Table 7.

Classification accuracies obtained by applying RF on the features extracted from the Trento hyperspectral dataset. The highest accuracy in each row is shown in bold.

Figure 9.

Classification maps obtained by applying SVM and RF classifiers on the features extracted from the Houston hyperspectral dataset.

Figure 10.

Classification maps obtained by applying SVM and RF classifiers on the features extracted from the Trento hyperspectral dataset.

Table 8 compares the CPU processing time (in seconds) spent by different feature extraction techniques applied on the Trento and Houston datasets. All methods were implemented in Matlab on a computer with an Intel(R) Core(TM) i7-6700 processor (3.40 GHz), 32 GB of memory and a 64-bit operating system. It can be seen that SSLRA and OTVCA are computationally expensive compared to the other techniques used in the experiments. That is mainly due to the iterative nature of those algorithms, the inner TV-regularization loop, and the SVD step. It is worth noting that the CPU time for the supervised techniques (NWFE and DAFE) is affected considerably by the number of labeled (training) samples used, and the CPU time for the semi-supervised technique (SELD) is affected by both the labeled and unlabeled samples used. In the case of unsupervised techniques, the CPU time is affected by the total size of the data.

Table 8.

CPU processing times in seconds consumed by different techniques applied on the Trento and the Houston datasets.

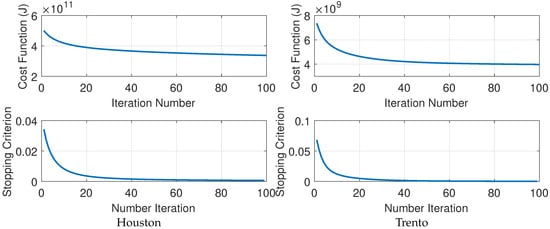

Figure 11 depicts the values of the cost function J and the values of the stopping criterion () when SSLRA was applied on the Houston and Trento datasets. It can be seen that the cost functions are strictly descending, as stated in Section 2, for both datasets. The stopping criterion values are less than 0.001 after 62 and 48 iterations for Houston and Trento, respectively. Therefore, in the experiments, we set the number of iterations to 100.

Figure 11.

The cost function and the stopping criterion values of SSLRA applied on Houston and Trento.

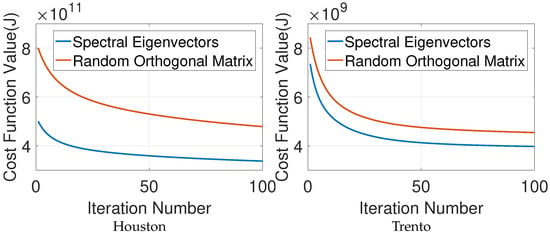

Figure 12 compares the values of the cost function (J) with respect to the number of iterations for two different initializations of SSLRA. It can be seen that random orthogonal matrix initialization gives higher cost function values for all iterations shown compared to the spectral eigenvectors initialization. Therefore, in this paper, spectral eigenvectors were used to initialize SSLRA, i.e., the leading r right singular vectors of the observed data matrix. Note that the proposed cost function is nonconvex and therefore different initializations might give different optimum values.

Figure 12.

The cost function values using spectral eigenvectors and a random orthogonal matrix for the initialization of SSLRA applied on Houston and Trento.

3.5. Discussion

The conventional FE methods used in the experiments, i.e., PCA, MNF, DAFE, and NWFE, do not take into account spatial correlation of the HSI that can considerably improve the classification results [,]. SELD incorporates the spatial correlation by using unlabeled samples. However, the number of unlabeled samples highly affects the complexity of the algorithm and having few samples does not provide satisfactory spatial information []. That drawback has been considerably improved in OTVCA. OTVCA captures both spectral and spatial redundancies while extracting informative components. The spectral redundancy of HSI is captured by the low-rank representation of HSI in the OTVCA model. TV regularization not only captures the spatial redundancy of HSI but also induces the piece-wise smoothness on HSI features that helps to extract spatial information and reduce the salt and pepper noise. However, there are sparse structures in the components extracted by OTVCA that are mostly assumed to be outliers in the classification task. SSLRA improves the classification accuracies by separating the sparse structures and providing smoother components. As a result, the classification map obtained contains less salt and pepper noise effect and more homogeneous class regions. On the other hand, SSLRA is computationally more expensive than the other methods.

4. Conclusions

Sparse and smooth low-rank analysis was proposed for hyperspectral image feature extraction. First, a low-rank model was proposed where the HSI was modeled as a combination of smooth and sparse components. A constrained penalized cost function minimization was proposed to estimate the smooth and sparse components; it uses the TV penalty and the $\ell_1$ penalty to promote smoothness and sparsity, respectively, while the orthogonality constraint is applied on the subspace basis. Then, an iterative algorithm was derived for solving the proposed non-convex minimization problem. In the experiments, it was shown that SSLRA outperforms other FE methods in terms of classification accuracy for urban and rural HSIs. It was also demonstrated that components extracted by SSLRA provide relatively high classification accuracies when only a limited number of training samples is available. Additionally, the experiments confirmed that SSLRA reduces the salt and pepper noise effect and produces homogeneous class regions in the classification maps by separating the sparse features from the smooth ones. On the other hand, SSLRA is more complicated and computationally expensive compared to the techniques used in the experiments.

Author Contributions

B.R. wrote the manuscript and performed the experiments. P.G. and M.O.U. revised the manuscript and improved its presentation.

Funding

This research received no external funding. However, the contribution of Pedram Ghamisi is supported by the High Potential Program offered by Helmholtz-Zentrum Dresden-Rossendorf.

Acknowledgments

The authors would like to thank L. Bruzzone of the University of Trento, Wenzhi Liao, Naoto Yokoya, and the National Center for Airborne Laser Mapping (NCALM) at the University of Houston for providing the Trento dataset, the Matlab script for SELD, the shadow-removed Houston hyperspectral dataset, and the CASI Houston dataset, respectively. We also thank the IEEE GRSS Image Analysis and Data Fusion Technical Committee for organizing the 2013 Data Fusion Contest.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Total Variation Norm

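The isotropic total variation of a (vectorized) two-dimensional image $\mathbf{x}$ of size $n_1 \times n_2$ is given by

$$\mathrm{TV}(\mathbf{x}) = \left\| \sqrt{(\mathbf{D}_v \mathbf{x})^2 + (\mathbf{D}_h \mathbf{x})^2} \right\|_1 = \sum_{i=1}^{n_1 n_2} \sqrt{(\mathbf{D}_v \mathbf{x})_i^2 + (\mathbf{D}_h \mathbf{x})_i^2},$$

where $\mathbf{D}_v$ and $\mathbf{D}_h$ are the matrix operators for calculating the first order vertical and horizontal differences, respectively, given (for column-wise vectorization) by $\mathbf{D}_v = \mathbf{I}_{n_2} \otimes \mathbf{R}_{n_1}$ and $\mathbf{D}_h = \mathbf{R}_{n_2} \otimes \mathbf{I}_{n_1}$. Here, $\mathbf{R}_n$ is the first order difference matrix for a signal of length $n$, whose $i$th row computes the difference $x_{i+1} - x_i$.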
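
As a minimal numerical sketch of the isotropic total variation described in this appendix (not the authors' implementation), the following Python snippet builds the vertical and horizontal difference operators as Kronecker products and compares the result with direct differencing of the image. The function names, the column-wise vectorization, and the wrap-around (periodic) last row of the difference matrix are assumptions made for illustration only; the text does not fix them.

```python
import numpy as np


def first_order_difference(n):
    """Return an n x n forward-difference matrix.

    Row i computes x[i+1] - x[i]. The wrap-around last row
    (x[0] - x[n-1]) is an assumed boundary condition.
    """
    R = -np.eye(n) + np.eye(n, k=1)
    R[-1, 0] += 1.0  # periodic boundary (assumption)
    return R


def isotropic_tv(image):
    """Isotropic TV of a 2-D array via Kronecker-structured operators."""
    n1, n2 = image.shape
    # Column-wise vectorization (assumption): x[j * n1 + i] = image[i, j]
    x = image.reshape(-1, order="F")
    # Vertical differences: apply R to every column of the image
    D_v = np.kron(np.eye(n2), first_order_difference(n1))
    # Horizontal differences: apply R along every row of the image
    D_h = np.kron(first_order_difference(n2), np.eye(n1))
    return float(np.sum(np.sqrt((D_v @ x) ** 2 + (D_h @ x) ** 2)))


def isotropic_tv_direct(image):
    """Same quantity computed by shifting the image, for cross-checking."""
    dv = np.roll(image, -1, axis=0) - image
    dh = np.roll(image, -1, axis=1) - image
    return float(np.sum(np.sqrt(dv ** 2 + dh ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.standard_normal((5, 7))
    # The two values should agree up to floating-point error
    print(isotropic_tv(img), isotropic_tv_direct(img))
```

The dense Kronecker matrices have $(n_1 n_2)^2$ entries, so this form is only practical for small images; it is meant to make the operator structure explicit, whereas the shift-based version is what one would typically use on real data.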

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).