In-Season Mapping of Irrigated Crops Using Landsat 8 and Sentinel-1 Time Series

Abstract

1. Introduction

2. Study Site and Data

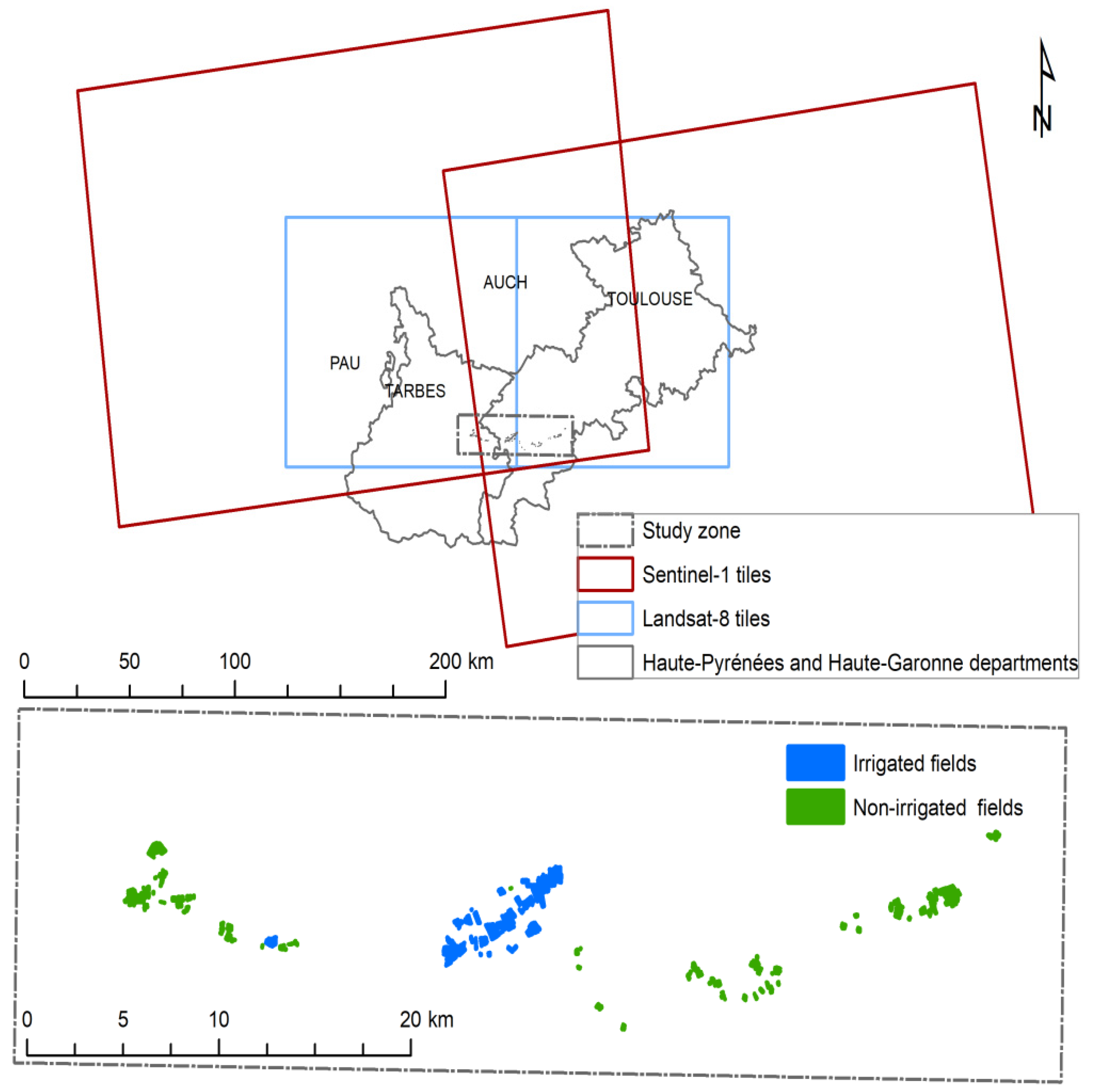

2.1. Study Site

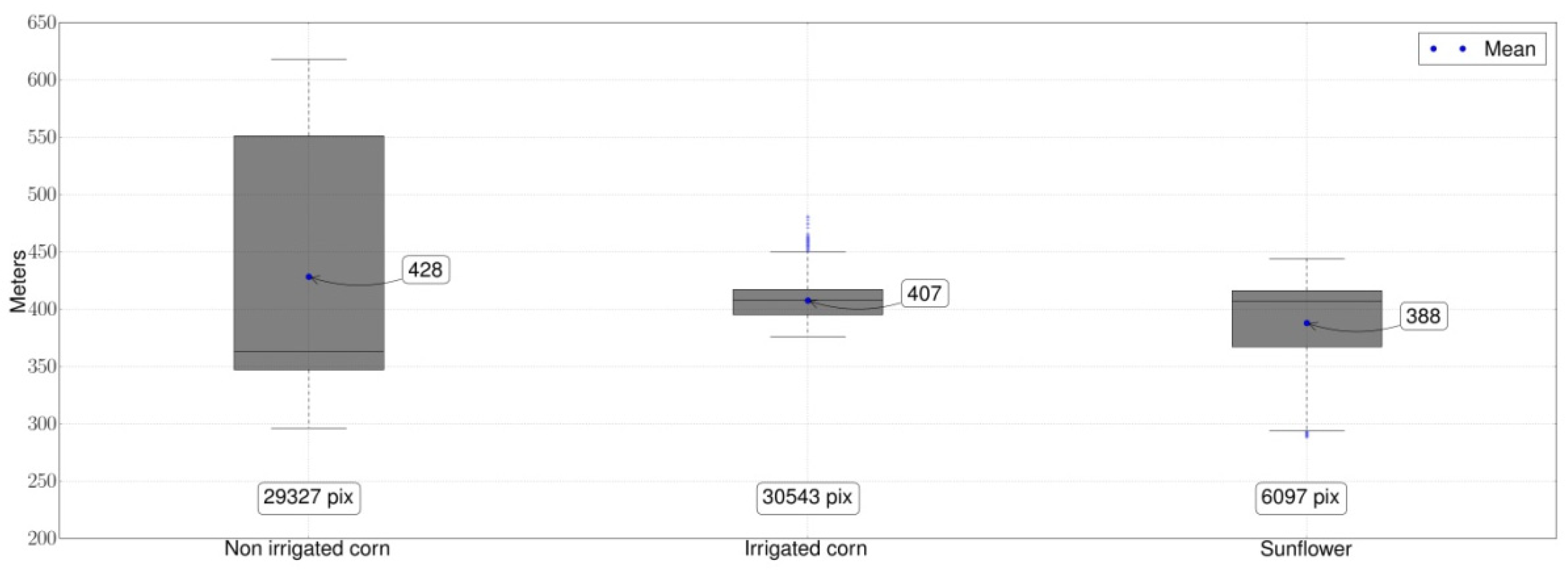

2.2. Reference Dataset

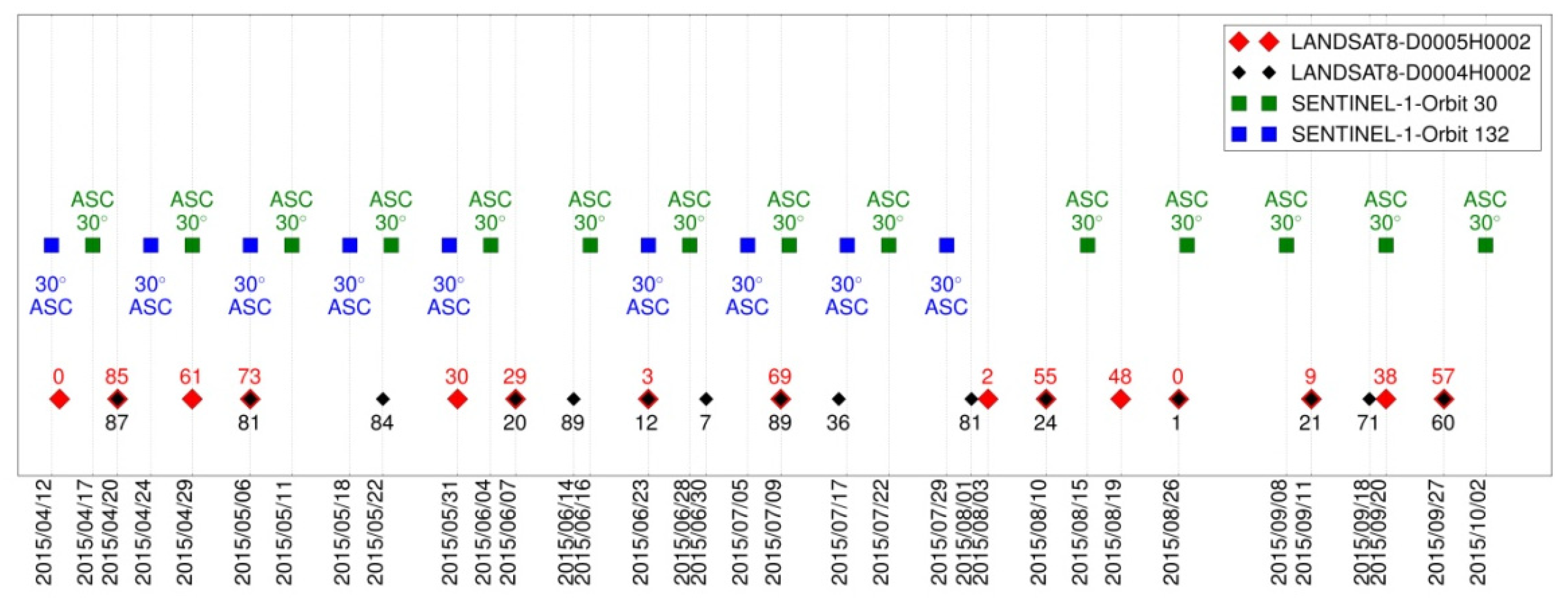

2.3. Landsat 8 Images

2.4. SAR Images

3. Method

3.1. Incremental Classification

3.2. Selected Features

3.3. Summer Crops Mask

4. Results and Discussion

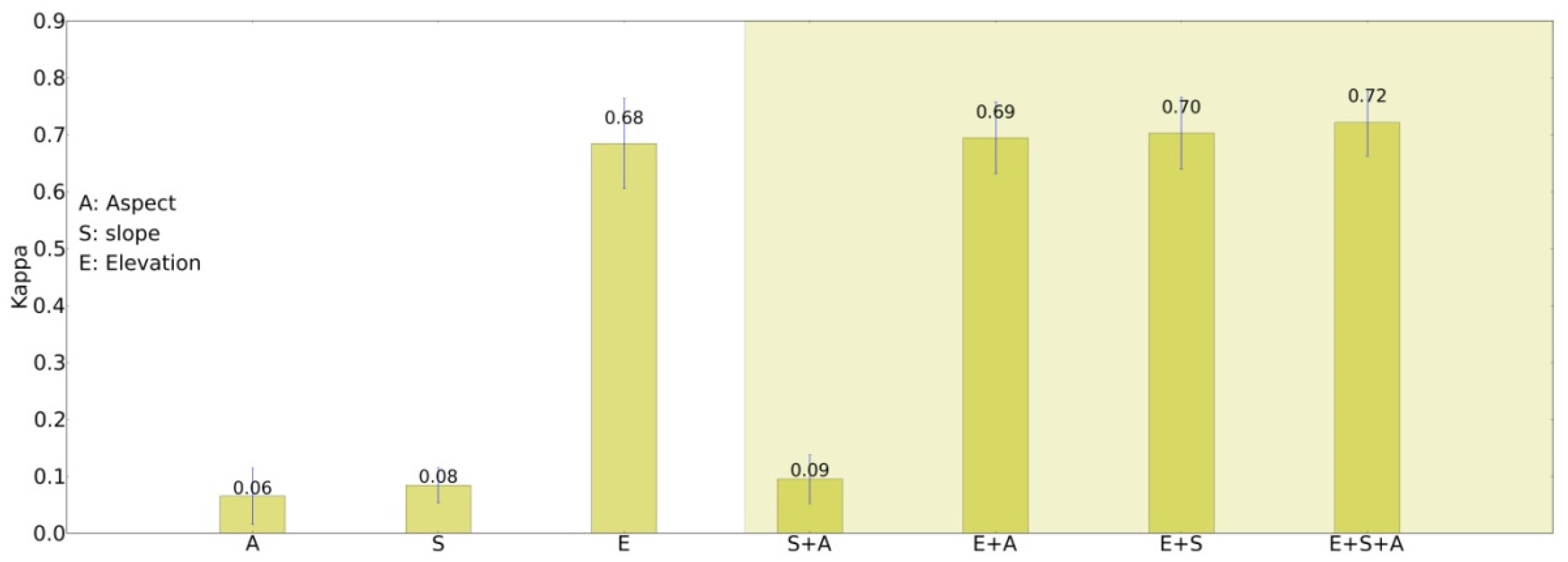

4.1. Effect of Topographic Variables

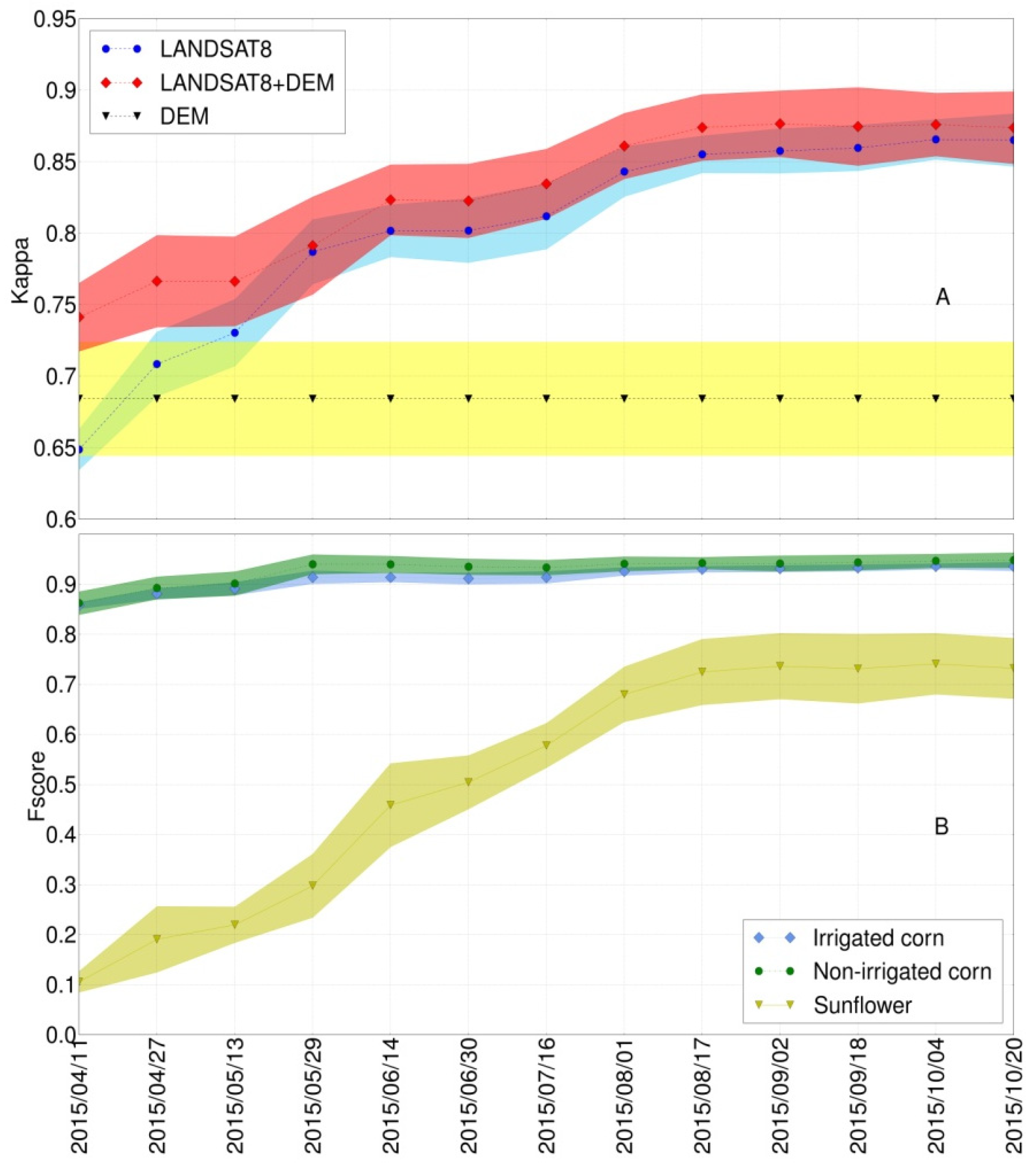

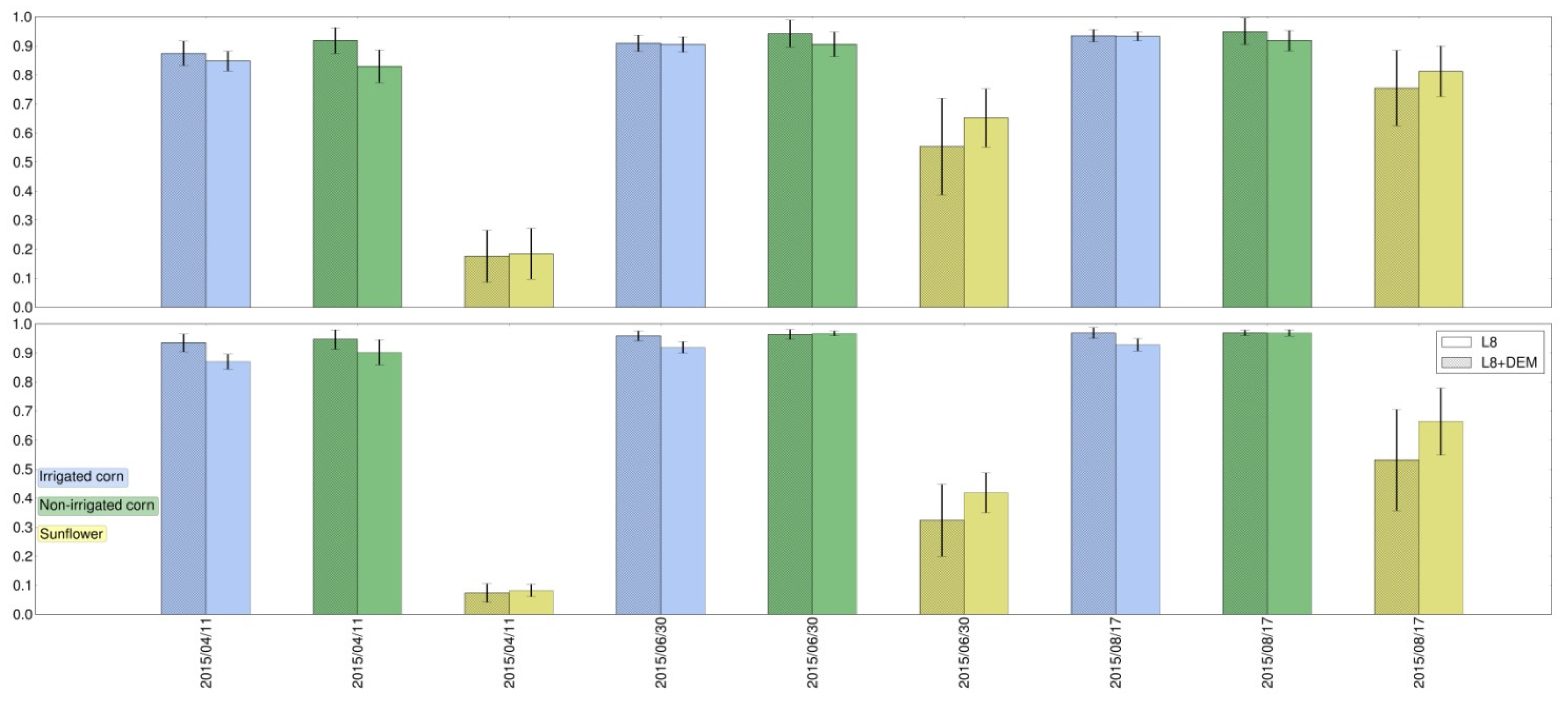

4.2. Incremental Classification Using Optical Images and Elevation Features

- (1) with the elevation feature alone (Figure 5A, yellow curve);

- (2) with the spectral features of the Landsat 8 images listed in Section 3.2 (Figure 5A, blue curve); and

- (3) with both the elevation and optical imagery (Figure 5A, red curve).

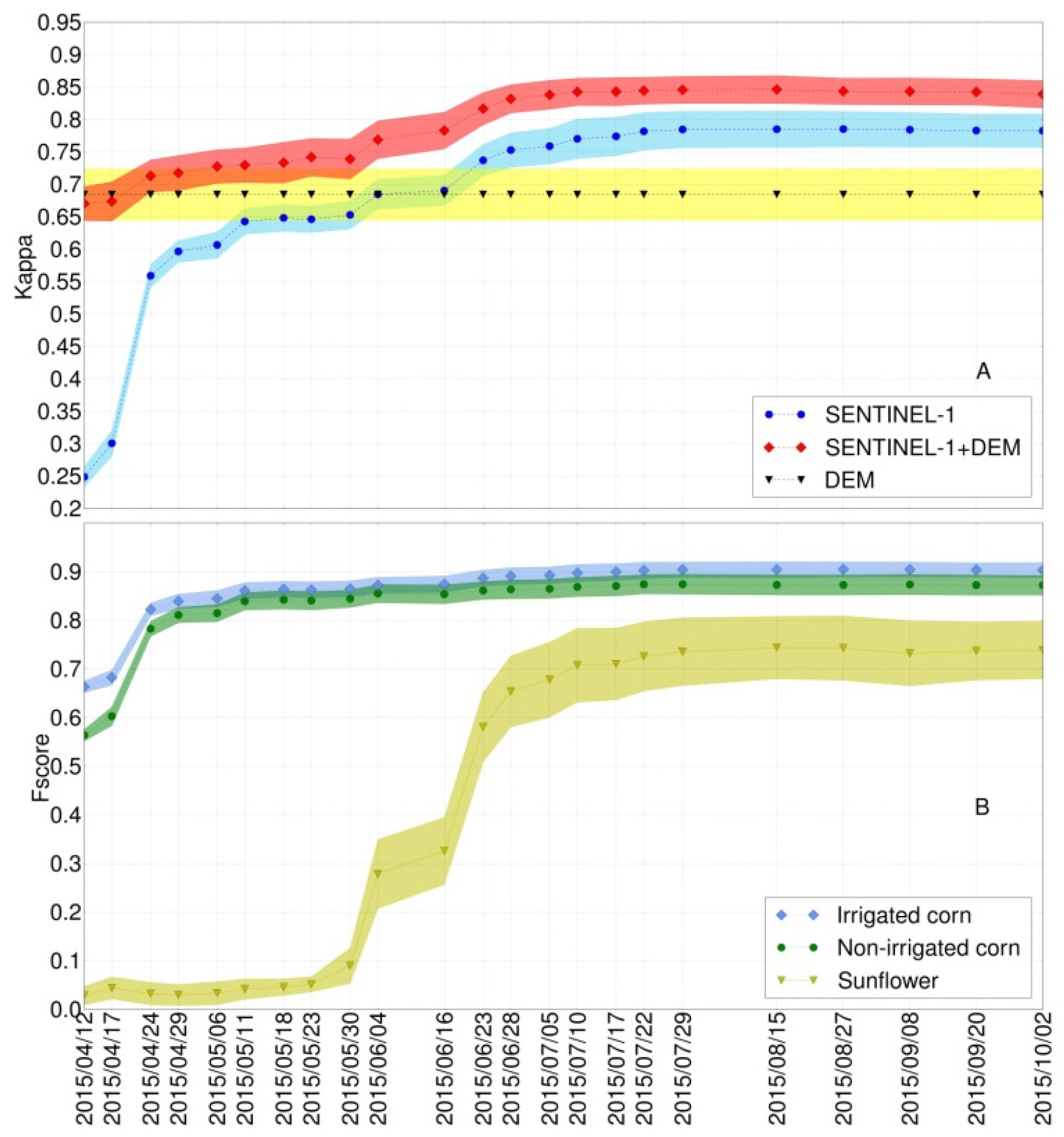

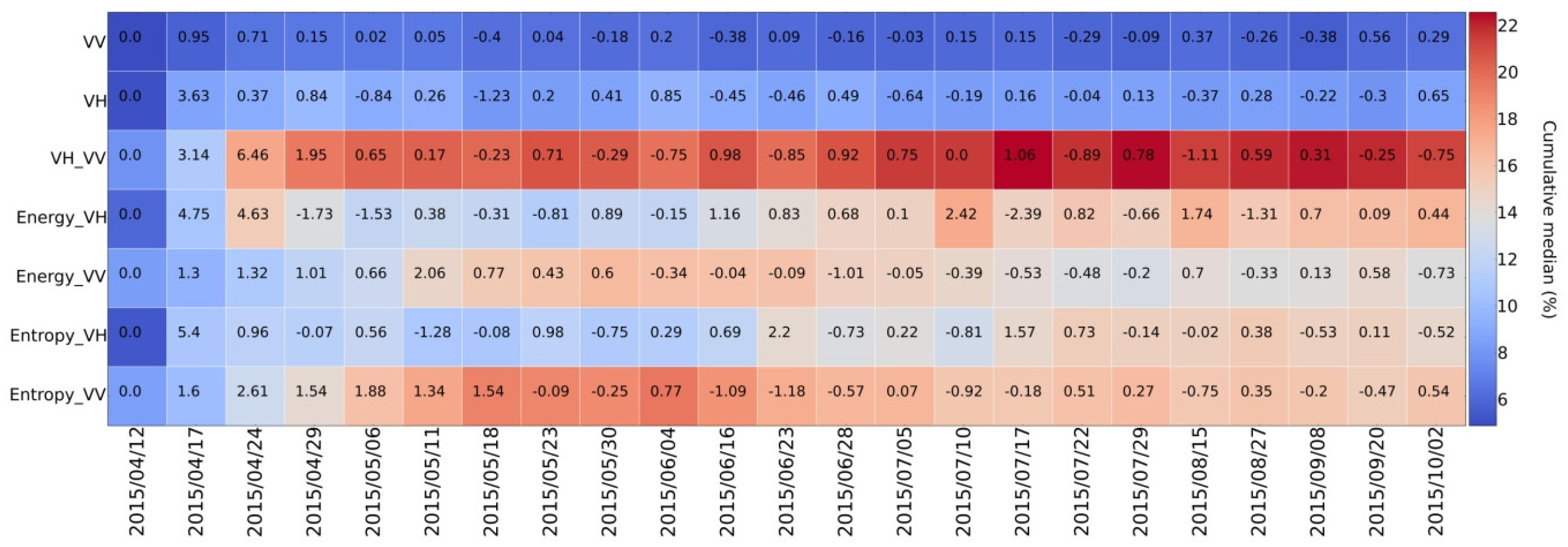

4.3. Incremental Classification Using Radar Images and Elevation

- (1) with the elevation feature only (Figure 7A, yellow curve);

- (2) with the radar features listed in Section 3.2 (Figure 7A, blue curve); and

- (3) with combined use of elevation and radar imagery (Figure 7A, red curve).

- In April (during sowing and emergence), the kappa coefficient (k) of the radar classifications (scenario 2) increases markedly, in step with the Fscore increase for irrigated and non-irrigated maize (Figure 7B). During this period, sunflower is very poorly classified (Fscore = 0.05) because its plots are mostly bare soil subject to strong variations in moisture and roughness, to which the SAR signal is very sensitive.

- From May to the end of June, k increases moderately, probably owing to better detection of sunflower, as shown by the increase in the Fscore of this class.

- From July to October, gains in k and in Fscore are negligible. At this stage, all crops have reached their maximum development.
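The two scores tracked above can be illustrated with a minimal sketch. It assumes the standard definitions of Cohen's kappa and the per-class F-score computed from a confusion matrix of sample counts; the function name and the counts are hypothetical and are not taken from the study.

```python
def kappa_and_fscores(matrix):
    """Return (kappa, per-class F-scores) for a square confusion matrix.

    matrix[i][j] is the number of samples of reference class i that the
    classifier assigned to class j (assumed layout, for illustration).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]

    # Observed agreement: share of samples on the diagonal.
    observed = sum(matrix[i][i] for i in range(n)) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / total ** 2
    kappa = (observed - expected) / (1 - expected) if expected != 1 else 0.0

    fscores = []
    for i in range(n):
        tp = matrix[i][i]
        precision = tp / col_sums[i] if col_sums[i] else 0.0
        recall = tp / row_sums[i] if row_sums[i] else 0.0
        denom = precision + recall
        fscores.append(2 * precision * recall / denom if denom else 0.0)
    return kappa, fscores


# Hypothetical counts for three classes (not the study's data).
example = [
    [90, 1, 9],
    [11, 87, 2],
    [9, 3, 8],
]
kappa, fscores = kappa_and_fscores(example)
print(f"kappa = {kappa:.2f}")
print("F-scores:", [round(f, 2) for f in fscores])
```

Because kappa is computed over all samples, a small class can score a very low Fscore while kappa still rises, provided the larger classes are separated better.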

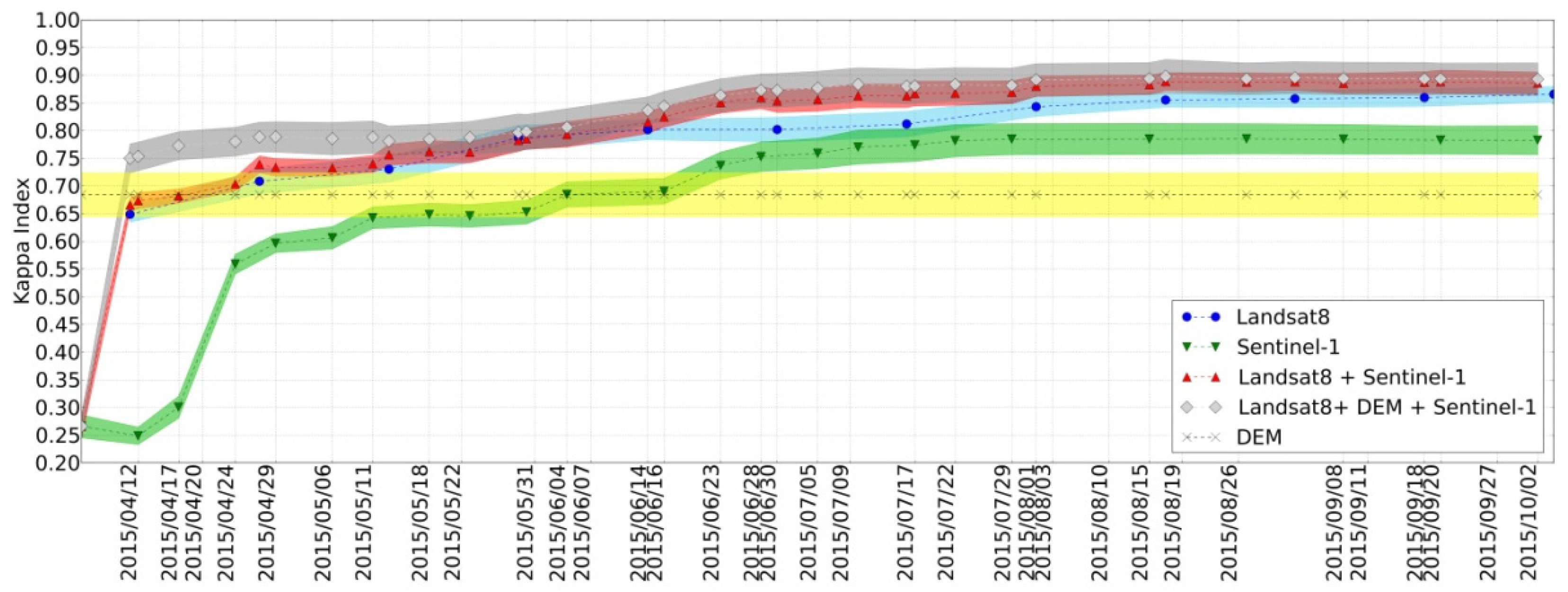

4.4. Multi-Temporal Classifications Using Optical and SAR Images

- (1) with the elevation (yellow curve);

- (2) with the optical images (blue curve);

- (3) with the radar images (green curve);

- (4) with optical and radar imagery (red curve); and

- (5) with optical and radar and elevation (grey curve).
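One way to organize such scenarios is to build, for each date in the season, the stack of features available up to that date. The sketch below assumes that "incremental" means cumulative over acquisition dates; the month keys, feature names and helper functions are hypothetical placeholders, not the features of Section 3.2.

```python
def cumulative_features(per_date, static=()):
    """Yield (date, features) pairs, where features holds the static
    features plus every dated feature acquired up to that date."""
    stack = list(static)
    for date in sorted(per_date):
        stack = stack + list(per_date[date])  # new list, earlier stacks untouched
        yield date, stack


def merge_sources(*sources):
    """Combine several {date: [features]} mappings into a single mapping."""
    merged = {}
    for source in sources:
        for date, feats in source.items():
            merged.setdefault(date, []).extend(feats)
    return merged


# Hypothetical monthly features (illustrative names only).
optical = {"04": ["optical_04"], "05": ["optical_05"], "06": ["optical_06"]}
radar = {"04": ["radar_04"], "05": ["radar_05"], "06": ["radar_06"]}
elevation = ["elevation"]

scenarios = {
    # Elevation does not change over the season, so its stack is constant.
    "elevation": {date: list(elevation) for date in sorted(optical)},
    "optical": dict(cumulative_features(optical)),
    "radar": dict(cumulative_features(radar)),
    "optical+radar": dict(cumulative_features(merge_sources(optical, radar))),
    "optical+radar+elevation": dict(
        cumulative_features(merge_sources(optical, radar), static=elevation)
    ),
}

for name, stacks in scenarios.items():
    print(name, "->", stacks["06"])
```

Each stack would then feed one classifier run, so that the curves of the scenarios can be compared date by date.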

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Class Label | Number of Fields Sampled | Total Area Sampled (ha) | Mean Field Size (ha) |
|---|---|---|---|
| Irrigated maize | 114 | 2581 | 2.2 |
| Non-irrigated maize | 171 | 2372 | 1.3 |
| Sunflower | 32 | 505 | 1.5 |

| Class Label | Irrigated Maize | Non-Irrigated Maize | Sunflower |
|---|---|---|---|
| Irrigated maize | 90.38 | 0.38 | 9.24 |
| Non-irrigated maize | 11.35 | 87.12 | 1.22 |
| Sunflower | 46.06 | 14.37 | 41.3 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Demarez, V.; Helen, F.; Marais-Sicre, C.; Baup, F. In-Season Mapping of Irrigated Crops Using Landsat 8 and Sentinel-1 Time Series. Remote Sens. 2019, 11, 118. https://doi.org/10.3390/rs11020118
