Abstract

Dimensionality Reduction (DR) models are highly useful for tackling Hyperspectral Images (HSIs) classification tasks. They mainly address two issues: the curse of dimensionality with respect to spectral features, and the limited number of labeled training samples. Among these DR techniques, the Graph-Embedding Discriminant Analysis (GEDA) framework has demonstrated its effectiveness for HSIs feature extraction. However, most of the existing GEDA-based DR methods largely rely on manually tuning the parameters so as to obtain the optimal model, which proves to be troublesome and inefficient. Motivated by the nonparametric Gaussian Process (GP) model, we propose a novel supervised DR algorithm, namely Gaussian Process Graph-based Discriminate Analysis (GPGDA). Our algorithm takes full advantage of the covariance matrix in GP to constructing the graph similarity matrix in GEDA framework. In this way, more superior performance can be provided with the model parameters tuned automatically. Experiments on three real HSIs datasets demonstrate that the proposed GPGDA outperforms some classic and state-of-the-art DR methods.

1. Introduction

Hyperspectral images (HSIs) contain considerable different reflections of electromagnetic waves from visible light to near-infrared or even far-infrared [1,2]. This characteristic allows various ground objects to be discriminated based on HSIs with abundant information. Because of it, HSIs are widely used in astronomy [3], agriculture [4], biomedical imaging [5], geosciences [6] and military surveillance [7]. However, the abundant features in HSIs could lead to significant redundancy. When using traditional classification algorithms to distinguish the class/object of each pixels in HSIs, the curse of dimensionality or the so-called “Hughes Phenomenon” would occur [8]. Chang et al. found that up to 94% of the spectral bands can be brushed aside without affecting the classification accuracy [9]. Therefore, Dimensionality Reduction (DR), a pre-processing procedure which tries to discover low-dimensional latent features from high-dimensional HSIs, plays a vital role in HSIs data analysis and classification.

In general, DR methods can be divided into two categories: feature selection and feature extraction. The former attempts to select a small subset of bands from the original bands based on some criteria, while the latter tries to find a low-dimensional subspace embedded in high-dimensional observations [10]. As reviewed in [11], discovering optimal bands from large numbers of possible feature combinations by feature selection methods could be suboptimal, so we only focus on feature extraction based DR methods for HSIs instead of feature selection in this paper.

A variety of feature extraction based DR models have been introduced for HSIs data analysis over the past decades. They can be roughly divided into two categories: unsupervised and supervised DR techniques. Unsupervised DR methods try to find low-dimensional representations that could preserve the intrinsic structure of the high-dimensional observations without using labels, while supervised methods make use of the available labels to find low-dimensional and discriminant features. The most representative unsupervised DR algorithm could be Principal Component Analysis (PCA), which tries to use the linear model to project the observed data into low-dimensional space with maximal variances [12,13]. Based on PCA, various extensions have been proposed, such as Probabilistic PCA (PPCA), Robust PCA (RPCA), Sparse PCA, Tensor PCA, etc. [11,14,15,16,17,18]. However, the aforementioned algorithms belong to the linear DR methods. When dealing with nonlinear structures embedded into the high-dimensional HSIs data, PCA and its linear extensions could be unable to provide satisfactory performance. Therefore, many nonlinear DR methods have been introduced, among which manifold learning based DR models have been widely employed in HSIs data analysis [19,20,21].

Representative manifold learning based DR algorithms include Isometric Mapping (ISOMAP) [20], Locally Linear embedding (LLE) [19], Laplacian Eigenmaps (LE) [22], Local Tangent Space Alignment (LTSA) [23], etc. The idea behind these algorithms is to assume that the data lie along a low-dimensional manifold embedded in a high-dimensional Euclidean space, and to uncover this manifold structure [24] with different criteria. For example, ISOMAP, an extension of Multi-dimensional Scaling (MDS) [25], seeks a low-dimensional embedding that preserves geodesic distances of all the pairs of points. In LLE, each sample is reconstructed by a linear combination of its neighbors and then the corresponding low-dimensional representations that could preserve the linear reconstruction relationship in original space are solved. LE utilizes a similarity graph to represent the neighbor relationships of pairwise points in low-dimensional space. The local geometry via the tangent space is modeled in LTSA to learn the low-dimensional embedding. However, most of the manifold learning models encounter the so-called out-of-sample problem [26], which means it could be ineffective to find the low-dimensional representation corresponding to a new testing sample. An effective solution to this problem is to add a linear mapping that projects observed samples to low-dimensional subspace. For instance, Locality Preserving Projections (LPP) [27], Neighborhood Preserving Embedding (NPE) [28] and Linear Local Tangent Space Alignment (LLTSA) [29] are the linear extensions of LE, LLE and LTSA, respectively. In [30], a Graph Embedding (GE) framework has been proposed to unify these manifold learning methods on the basis of geometry theory. Recently, the representation-based algorithms have also been introduced to the GE framework to construct various similarity graphs [31]. For example, Sparse Representation (SR), Collaborative Representation (CR) and Low Rank Representation (LRR) [32] are utilized to constitute the sparse graph ( graph), collaborative graph ( graph) and low-rank graph, leading to Sparsity Preserving Projection (SPP) [33], Collaborative Representation based Projection (CRP) [34] and Low Rank Preserving Projections (LRPP) [35], respectively.

Nevertheless, the aforementioned algorithms are all unsupervised DR models, which means that extra labels available in HSIs data are not utilized. To take advantage of these label information, the unsupervised DR models can be extended to the supervised versions, which could improve the discriminative power of DR models [24]. In this line, Linear Discriminant Analysis (LDA), as the most well-known supervised DR model, attempts to improve the class-separability by maximizing the distance between heterogeneous samples and minimizing the distance between homogeneous samples [36]. However, LDA can only extract up to features with c being the number of label classes. Thus, Nonparametric Weighted Feature Extraction (NWFE) was proposed to tackle this problem by using the weighted mean to calculate the nonparametric scatter matrices which could obtain more than -dimension features [37]. Other related works including Regularized LDA (RLDA) [38], Modified Fisher’s LDA (MFLDA) [39] and Supervised PPCA (SPPCA) [11,40] have been introduced for supervised HSIs feature extraction.

Apparently, the above supervised linear DR models may fail to discover the nonlinear geometric structure in HSIs data, resulting in unsatisfactory performance of DR models. Therefore, many supervised nonlinear DR methods have been introduced to find the complex geometric structure embedded in high-dimensional data. For example, Local Fisher Discriminant Analysis (LFDA) effectively combine the advantages of Fisher Discriminant Analysis (FDA) [41] and LPP by maximizing the between-class separability and minimizing the within-class distance simultaneously [42,43]. Local Discriminant Embedding (LDE) extends the concept of LDA to perform local discrimination [44,45]. Low-rank Discriminant Embedding (LRDE) learns the latent embedding space by maximizing the empirical likelihood and preserving the geometric structure [46]. Other related techniques include Discriminative Gaussian Process Latent Variable Model (DGPLVM) [47], Locally Weighted Discriminant Analysis (LWDA) [48], Multi-Feature Manifold Discriminant Analysis (MFMDA) [49], etc. Similarly, the representation based algorithms have also been introduced to supervised DR framework, such as Sparse Graph-based Discriminate Analysis (SGDA) [50], Weighted Sparse Graph-based Discriminate Analysis (WSGDA) [51], Collaborative Graph-based Discriminate Analysis (CGDA) [52], Laplacian regularized CGDA (LapCGDA) [53], Discriminant Analysis with Graph Learning (DAGL) [54], Graph-based Discriminant Analysis with Spectral Similarity (GDA-SS) [55], Local Geometric Structure Fisher Analysis (LGSFA) [56], Sparse and Low-Rank Graph-based Discriminant Analysis (SLGDA) [57], Kernel CGDA (KCGDA) [53], Laplacian Regularized Spatial-aware CGDA (LapSaCGDA) [58], etc. A good survey of these discriminant analysis models can be found in [31].

Although the graph embedding based DR methods are effective for extracting discriminative spectral features of HSIs data, these models are significantly affected by two factors: similarity graphs and model parameters. The similarity graph is the key for all the graph embedding models, while the performance of the models largely relies on the manual settings of model parameters, which is time-consuming and inefficient. Motivated by the nonparametric Gaussian Process (GP) model [59], we constitute the similarity graphs with GP in this paper. A Gaussian process is a type of continuous stochastic process, which defines a probability distribution over functions. With various covariance/kernel functions, GP can nonparametrically model complex and nonlinear mappings. Furthermore, all parameters of covariance functions typically termed hyperparameters can be learned automatically in GP. Inspired by the benefits of GP, we try to learn the similarity matrix in the graph embedding framework with GP. Specifically, the learned covariance matrix in GP is considered as the similarity graphs, giving rise to the Gaussian Process Graph based Discriminate Analysis (GPGDA), which could learn more efficient similarity graphs and avoid manually tuning parameters compared to existing algorithms. Experimental results on three HSIs datasets demonstrate that the proposed GPGDA can effectively improve the classification accuracy without time-consuming model parameters tuning.

The rest of the paper is organized as follows. In Section 2, we briefly review the related works, including the Gaussian Process (GP), Graph-Embedding Discriminate Analysis framework. The proposed Gaussian Process Graph-based Discriminate Analysis (GPGDA) is introduced in Section 3. Then, three HSIs datasets are used to evaluate the effectiveness of the proposed GPGDA in Section 4. Finally, a brief summary is given in Section 5.

2. Related Works

In this section, we briefly review the Gaussian Process and Graph-Embedding Discriminate Analysis framework. For the sake of consistency, we make use of the following notations throughout this paper: are the original high-dimensional data with each sample ; are the outputs where each sample (real values for regression tasks and discrete labels in for classification tasks); are the projected low-dimensional data with dimension and each corresponding to and . For the sake of convenience, we further denote X as an matrix, Y an vector and Z an matrix.

2.1. Gaussian Process

Gaussian Process is typically used in Gaussian Process Regression (GPR), where we assume that each output sample is generated from the unknown function with independent and identically-distributed noise variables with distributions , which is . A Gaussian Process prior is placed over the latent function f, i.e., , with the covariance matrix defined by the positive-semidefinite kernel function . are the parameters of kernel function and typically termed as hyperparameters. The choice of kernel function and its hyperparameters settings determine the behavior of GP, which are fairly significant. With Bayesian theorem, the latent function can be marginalized analytically . Generally, the parameter of Gaussian noise can be easily merged into the covariance function with . Thus, the hyperparameters of kernel function can be optimized by maximizing the log marginal likelihood

Considering a testing data point , the prediction distribution for a new test point can be calculated as follows

where Ks are the matrices of the covariance/kernel function values at the corresponding points X and/or .

For the classification task with discrete outputs, active functions such as the sigmoid function as the likelihood model in are typically introduced in Gaussian Process Classification (GPC) for binary classification. When making prediction, the predictive distributions over the and for a new test point are

It is worth noting that the two integrals become analytically intractable due to the non-Gaussianity of the logistic function , making it impossible to get the exact posterior in GPC. In such case, approximation techniques such as Laplace Approximation (LA), Expectation Propagation (EP), etc. are adopted to acquire the approximated GP posterior and conduct model optimization.

2.2. Graph-Embedding Discriminant Analysis

Many DR approaches have been proposed recently for HSIs feature extraction and classification, among which the Graph-Embedding Discriminant Analysis methods have shown promising performance [31]. Typically, Graph-Embedding Discriminant Analysis (GEDA) models try to find the projection matrix P in the mapping function by preserving the similarities of samples in the original observation space. The objective function of GEDA can be denoted by

where the similarity matrix W is an undirected intrinsic graph with each element describing the similarity between samples and , is the Laplacian matrix of graph W, T is the diagonal matrix with and is the constraint matrix defined to find a non-trivial solution of the objective function. Typically, is the diagonal matrix T for scale normalization and may also be the Laplacian matrix of penalty graph . The intrinsic graph W characterizes intraclass compactness while the penalty graph describes interclass separability. By simply re-formulating the objective function, we can obtain

When is the Laplacian matrix of penalty graph , Equation (5) is named as Marginal Fisher Analysis (MFA) [60]. The solution of Equation (5) can be easily obtained by solving the generalized eigenvalue decomposition problem

where is constructed by the eigenvectors corresponding to the d smallest eigenvalues. As we can see from the above formulations, the most significant step of GEDA is to build an intrinsic graph.

A popular approach that estimate the similarity between samples and is the heat kernel, which is utilized in unsupervised LPP

where denotes the local scaling of data samples. Different from unsupervised LPP that estimates the similarities of all the vertices, supervised discriminant analysis methods build the affinity matrix with label information, which will further improve the discriminative power. Thus, the similarity matrix W typically becomes a block-diagonal matrix

where is the affinity matrix of size only from the lth class.

Recently, representation based algorithms have been introduced to construct the within-class similarity matrix. For example, the sparse representation coefficients ( norm) is used to construct the similarity matrix in SGDA [50] ( is the number of training data from the lth class). To reduce the computational complexity of SGDA, the collaborative representation ( norm) instead of sparse representation is used in CGDA [52]. Similarly, SLGDA [57], LapCGDA [53] and LapSaCGDA [58] were developed recently with different objective functions to construct the similarity matrices as follows,

where is a training sample from lth class, denotes all training samples from lth class, is excluding , in SLGDA indicates the nuclear norm, in LapCGDA and LapSaCGDA is the Laplacian matrix constructed by Equation (7), and and are similarly defined by and with the pixel coordinate for samples in lth class , respectively.

3. The Proposed Method

To effectively learn the similarity graphs without time-consuming parameters tuning in the Graph-Embedding Discriminant Analysis (GEDA) framework, the Gaussian Process Graph based Discriminant Analysis (GPGDA) method is proposed to address it. The GPGDA method makes use of the nonparametric and nonlinear GP model to learn the similarity/affinity matrix adaptively.

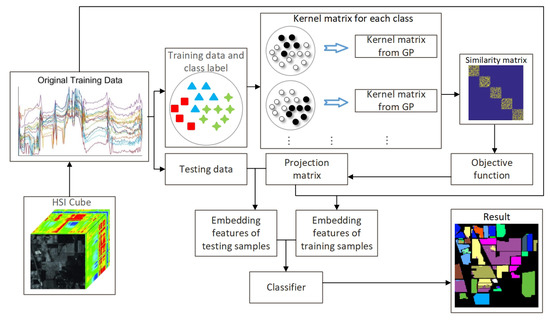

The flowchart of the proposed GPGDA method is shown in Figure 1. Firstly, the HSIs data will be divided into training and testing dataset randomly. Then, we try to construct the block-diagonal similarity matrix. Inspired by CGDA, we make use of GPR to model training samples from each class. To be specific, when we handle the training data from lth class, their labels are manually set to be 1 while the rest of the training data are labeled to be 0 conversely, which implicitly enforces interclass separability when learning the similarity graphs of each class. Thus, the learned similarity matrix should be more efficient than those from CGDA, KCGDA and other related algorithms. Subsequently, the similarity matrices of all classes are reassembled into the block-diagonal matrix, resulting a complete similarity matrix in the GEDA framework. Finally, the projection matrix can be acquired by solving the generalized eigenvalue decomposition problem and the dimension-reduced testing data can be obtained accordingly. To further measure the proposed method, the dimension-reduced training and testing data will be fed to different classifiers to get the prediction results.

Figure 1.

Flowchart of the proposed GPGDA method for HSIs feature extraction and classification.

From Section 2.1, we can learn that the convariance/kernel function is vital to GP since the corresponding kernel matrix measures the similarities between all pairs of samples. In view of this, kernel matrix in GP can be used to represent the similarity graph in GEDA framework. Because we want the intrinsic graph reflecting class-label information, it will be eventually expressed in the form of block-diagonal structure as in Equation (8). Here, we straightforwardly make use of GPR rather than GPC to model the high-dimensional training data with discrete labels, because time-consuming approximation methods in GPC could increase the model complexity. In addition, GPR is enough to model the class-specific training data.

Given a dataset , in which is a high-dimensional HSI sample and is the corresponding label. First, to efficiently learn the similar matrix, we set the label of training samples from lth class to be 1 while the rest are 0. The new binary labels can be denoted by . Then, let us model the mapping from to with nonlinear GPR,

The optimal hyperparameters of specific kernel function can be automatically estimated by optimizing the GPR objective function in Equation (1) with gradients based optimization algorithms. With the optimal hyperparameters, we could obtain the corresponding kernel matrix as follows,

where are the training samples from lth class with the number of the class-specific data , and denotes training data from other categories.

At this moment, we only care about training samples from lth class, which have been labeled to be 1, so we choose the block from the kernel matrix, which corresponds to the samples from lth class in order to form the similarity matrix . Once we have repeatedly obtained all the class-specific similarity matrix by GPR, the block-diagonal matrix W in Equation (8) can be simply constructed

Finally, based on the GEDA framework, it is easy to solve the optimal projection matrix P by solving the eigenvalue decomposition in Equation (6).

The complete GPGDA algorithm is outlined in Algorithm 1. To boost the performance of the models, we preprocess the HSIs data by a simple average filtering initially.

| Algorithm 1 GPGDA for HSIs dimensionality reduction and classification. |

| Input: High-dimensional training samples , training ground truth , pre-fixed latent dimensionality d, and testing pixels , testing ground truth . Output: s = {, P}.

|

As for the model complexity, since only small-scale training data are considered, we do not make use of the approximation methods such as Fully Independent Training Conditional (FITC) model [59] for GPR. Therefore, the time complexity of the proposed GPGDA is where C is the number of the discrete classes and is the maximum number of samples in each class. By comparison, other discriminant analysis based methods such as CGDA and LapCGDA are with as well because there are matrix inversion operation. Thus, the proposed GPGDA does not increase the model complexity theoretically.

4. Experiments

We validated the effectiveness of the proposed GPGDA for HSI feature extraction and classification by comparing with SPPCA, NWFE, DGPLVM, SLGDA, LapCGDA, KCGDA and LGSFA on three typical HSIs datasets. In addition, the traditional Support Vector Machine (SVM) and Convolutional Neural Network (CNN) [61] were applied in the original high-dimensional spectral feature space for comparison. K-Nearest Neighbors (KNN) with Euclidean distance and SVM with Radial Basis Function (RBF) kernel were adopted as classifiers in the learned low-dimensional space to verify all the DR models in terms of the classification Overall Accuracy (OA), the classification Average Accuracy (AA) and the Kappa Coefficient (KC). The parameter K in KNN was set to 5. The optimal parameters of kernel in SVM were selected by grid searching within a given set . The architecture of CNNs for each dataset is shown in Table 1 based on the experiments settings in [61]. For a fair comparison, all the data were preprocessed by average filtering with a spatial window, which is a simple and efficient method for smoothing HSIs. Experiments to verify the effect of different window sizes were also conducted; please refer to the Supplementary Materials for details.

Table 1.

Architecture of the CNN.

Firstly, the most suitable kernel in GPGDA was chosen from 18 kernels in the fast Gaussian process latent variable model toolbox (FGPLVM) (http://inverseprobability.com/fgplvm/index.html). The corresponding hyperparameters of each kernel can be learned automatically in the proposed GPGDA. The regularization parameters such as for DGPLVM, SLGDA, LapCGDA and KCGDA were selected by the grid search with a given set . Table 2 displays the best parameter values for the above four DR models. Then, after obtaining the optimal kernel and its corresponding hyperparameters, we compared and chose the best dimensionality of each DR model in the range of 1–30 in terms of the classification accuracy based on SVM in the projected low-dimensional space. Finally, we further compared all the DR models on the selected optimal dimension when different numbers of training data were chosen. All the experiments were repeated ten times and the average results are reported with standard deviation (STD). All methods were tested on MATLAB R2017a using an Intel Xeon CPU with 2.50 GHz and 64G memory PC with Linux platform.

Table 2.

Optimal parameters settings for DGPLVM, SLGDA, LapCGDA and KCGDA on three HSI datasets.

4.1. Data Description

Three popular HSIs datasets were selected in our experiments (http://www.ehu.es/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes).

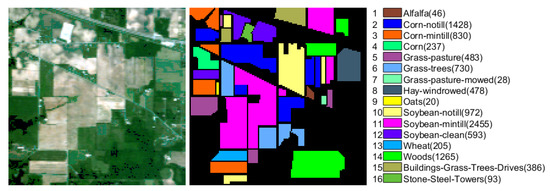

The Indian Pines scene (IndianPines) was captured by Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over Northwest Indiana in 1992, which contains pixels and 200 spectral reflectance bands after discarding 24 bands affected by water absorption. Sixteen ground truth classes are discriminated in this dataset, and the False Color Composition (FCC) and Ground Truth (GT) are shown in Figure 2.

Figure 2.

The false color composition and ground truth of Indian Pines data with numbers of samples for each class in brackets.

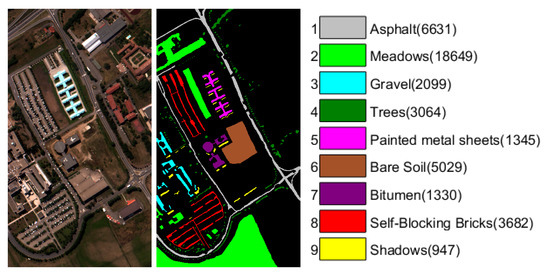

The University of Pavia scene (PaviaU) was gathered by Reflective Optics System Imaging Spectrometer (ROSIS-3) sensor over the University of Pavia, Italy in 2002. There are valid pixels with 103 spectral bands after removing some samples of the original scene containing no information. Nine ground truth classes are considered in this dataset, and the false color composition and ground truth are shown in Figure 3.

Figure 3.

The false color composition and ground truth of University of Pavia data with numbers of samples for each class in brackets.

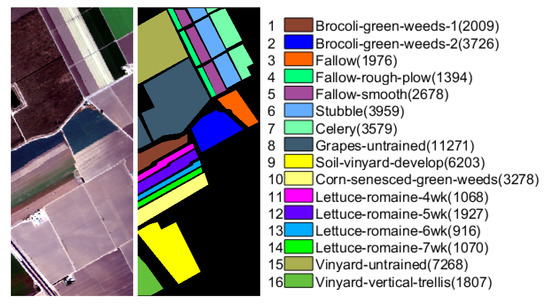

The Salinas Scene (Salinas) was collected by AVIRIS sensor over Salinas Valley, California in 1998, which consists of pixels with 224 spectral bands. Similar to Indian Pines scene, 20 water absorption and atmospheric effects bands are discarded, then the number of spectral bands becomes 204. Sixteen ground truth classes are labeled in this dataset, and the false color composition and ground truth are shown in Figure 4.

Figure 4.

The false color composition and ground truth of Salinas data with numbers of samples for each class in brackets.

4.2. Sensitivity Analysis for Kernel

In this section, we mainly analyze the impact of all the 18 kernels in terms of OAs, because the type of kernel is the only thing that has to be manually selected in our proposed model. We randomly chose 30 samples from each class as training data and the remainder were testing data with the number of reduced dimensionality being 30. Table 3 demonstrates the OAs based on the proposed GPGDA with different kernels on three HSIs datasets, where we can see that many kernels (up to 10 kernels on each dataset) could provide satisfactory results in terms of OAs in bold. Thus, choosing the best kernel function is not a big problem for our model. For the Indian Pines, University of Pavia and Salinas scenes, we have recommended ten kernels for each dataset with respect to the following experimental results. As for other HSI datasets that have not been studied in this paper, the kernels “dexp” and “lin” in the proposed GPGDA can be firstly considered as they could provide high accuracy for all the three datasets.

Table 3.

Classification accuracy based on the proposed GPGDA with 18 kernels on Indian Pines, University of Pavia and Salinas datasets.

4.3. Experiments on the Indian Pines Data

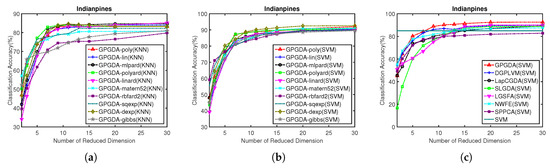

Initially, an optimal kernel was selected for the proposed GPGDA based on the KNN and SVM classification results. The corresponding hyperparameters of each kernel could be learned via empirical Bayesian approach according to the training data. In this experiment, 30 samples were randomly chosen from each class as training data. When the number of training data in a certain category was less than 30, then 60% samples were chosen. For the fair comparison, the remaining data were split into the verification set (50%) and test set (50%). The optimal number of reduced dimensionality are chosen based on the verification set, and the reported results in Figure 5 are based on the test set.

Figure 5.

Classification accuracy w.r.t. different kernels and different dimensionality of the projection space on Indian Pines data: (a) KNN classification results of GPGDA based on different kernels; (b) SVM classification results of GPGDA based on different kernels, and (c) SVM classification results of all the DR methods based on different dimensions.

As can be seen in Figure 5a,b, KNN and SVM based classification results from the proposed GPGDA with ten kernel functions are depicted. Here, we do not show all the results from 18 kernel functions because it could be chaos to plot 18 curves simultaneously. We only demonstrate ten kernels of poly, lin, mlpard, polyard, linard, matern52, rbfard2, sqexp, dexp and gibbs [59], which have better classification results than others. Although there are some differences for the ten kernels in terms of the KNN classification results, the classification results from SVM are similar, which means each one out of the ten kernels can be used efficiently. Since the ultimate goal of dimensionality reduction is classification, we only select the appropriate kernel based on SVM results, which are usually higher than KNN results. According to Figure 5b, kernel dexp is selected for the following experiments.

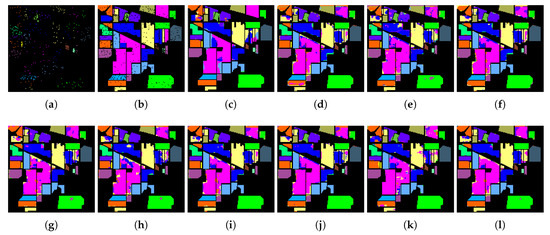

After setting the optimal kernel for GPGDA, we further conducted experiments to choose the best dimensionality of the projection space in terms of the OAs based on SVM. The optimal number of dimensionality of the projection space was selected from the range of 1–30. For the sake of fairness, the optimal regularization parameters in other DR models to be compared were set beforehand, as shown in the Table 2. It can be viewed in Figure 5c that, the optimal number of dimensionality for each DR model in Indian pines data is 30. It is also worth noting that, the OA of GPGDA surpasses other DR models on most dimensions, meaning that the learned low-dimensional features are very discriminatory. Table 4 illustrates the average classification accuracy of each class, the AAs, OAs, and KCs, as well as their STDs of eight DR models when dimensionality is 30 and classifier is SVM. Table 4 shows that the proposed GPGDA outperforms traditional CNN, SVM and other models in terms of the AA, OA and KC. Accordingly, Figure 6 tells us that classification maps from the proposed methods are more accurate than other contrastive approaches.

Table 4.

Classification results of CNN and different dimensionality reduction methods based on SVM on the Indian Pines data.

Figure 6.

Classification maps of CNN and different DR models based on SVM on the Indian Pines data: (a) Training GT; (b) Testing GT; (c) CNN (OA = 89.1%); (d) SVM (OA = 86.0%); (e) SPPCA (OA = 83.5%); (f) NWFE (OA = 87.6%); (g) DGPLVM(OA = 88.5%); (h) SLGDA (OA = 89.4%); (i) LapCGDA (OA = 89.4%); (j) KCGDA (OA = 87.9%); (k) LGSFA (OA = 89.8%); and (l) GPGDA (OA = 91.7%).

Finally, to verify the discriminating power of the proposed method even further, more classification experiments were conducted when different numbers of training data were randomly chosen: 10–60 samples were randomly chosen from each class, and the remainder were testing samples. For those classes with less samples, no more than 60% samples were randomly chosen. Table 5 shows that the OAs, AAs and KCs improve as the number of training samples increases for all methods. In addition, when classifier is KNN, LGSFA outperforms other DR models, followed by GPGDA. It is because LGSFA takes the intraclass neighbor reconstruction relationship of each training pixels into consideration, thus enhancing the class-separability of projected low-dimensional testing data. However, the local geometric structure is not utilized in GPGDA, thus it could be added in our future works. As for SVM classification accuracy, GPGDA demonstrates better OAs compared to other DR techniques. In general, the proposed GPGDA is capable of obtaining more discriminating features.

Table 5.

Classification results with different amounts of training data on the Indian pines data (OA ± STD (%)).

4.4. Experiments on the University of Pavia Data

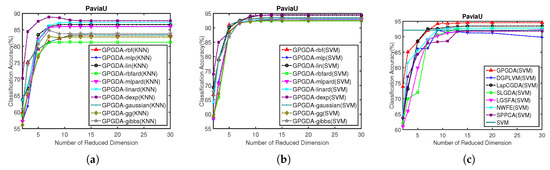

To further demonstrate the effectiveness of the proposed algorithms, we chose the University of Pavia data to conduct experiments. Similarly, we firstly selected the optimal kernel and learned their corresponding hyperparameters for the proposed GPGDA. In this experiment, 30 training samples were randomly picked from each class while the remaining data were split into the verification set (50%) and test set (50%). The optimal number of reduced dimensionality was picked based on the verification set, and the reported results in Figure 7 are based on the test set.

Figure 7.

Classification accuracy w.r.t. different kernels and different dimensionality of the projection space on University of Pavia data: (a) KNN classification results of GPGDA based on different kernels; (b) SVM classification results of GPGDA based on different kernels; and (c) SVM classification results of all the DR methods based on different dimensions.

According to Figure 7a,b, OAs based on KNN and SVM from the proposed GPGDA with ten kernel functions are illustrated. Considering the display effects, only ten well-performing kernels (rbf, mlp, lin, rgfard, mlpard, linard, dexp, gaussian, gg and gibbs) are shown. Similarly, there are some differences for the ten kernels in terms of the OAs based on KNN, but the classification results from SVM are almost coincidence with each other. Thus, choosing arbitrary kernel from these ten kernel functions would have little impact on the SVM classification results, indicating that selecting the best kernel function is not a troublesome problem for our model. In view of the fact that the OAs of dexp are the highest in both Figure 7a,b, we chose kernel dexp for the next experiments.

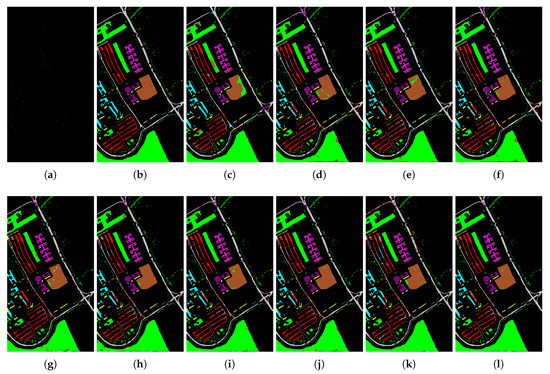

Once the optimal kernel for GPGDA was chosen, we chose the best dimensionality of the projection space in terms of the SVM results acquired in the low-dimensional space. All the experiments were conducted on low dimensions from 1 to 30. To be fair, the optimal regularization parameters in other contrastive DR models were determined via parameter sensitivity experiments, as shown in Table 2. Figure 7c shows that the proposed GPGDA is superior to other DR models in almost all the low-dimensional projection space. Moreover, the optimal number of dimensionality for each DR model in University of Pavia data is 30. Table 6 displays the AAs, OAs, KCs and detailed classification accuracy for each class based on SVM when dimensionality is 30. In Table 6 we can see that GPGDA excels traditional CNN, SVM and other DR algorithms in terms of AA, OA and KC. Accordingly, the classification maps in Figure 8 give us a similar conclusion.

Table 6.

Classification results of CNN and different DR methods based on SVM on the University of Pavia data.

Figure 8.

Classification maps of CNN and different DR models based on SVM on the University of Pavia data: (a) Training GT; (b) Testing GT; (c) CNN (OA = 90.4%); (d) SVM (OA = 91.2%); (e) SPPCA (OA = 92.5%); (f) NWFE (OA = 91.3%); (g) DGPLVM (OA = 92.6%); (h) SLGDA (OA = 91.4%); (i) LapCGDA (OA = 91.8%); (j) KCGDA (OA = 92.4%); (k) LGSFA (OA = 91.2%); and (l) GPGDA (OA = 93.9%).

Finally, based on the optimal projection dimensionality, we further compared the discriminating power of the eight DR approaches by randomly selecting different number of pixels as training data. Here, 10–60 training samples were randomly chosen from each class, and the remainder were testing samples. Table 7 shows that the OAs, AAs and KCs rise as the number of training samples increases for all methods. Specifically, LGSFA demonstrates comparative OAs based on KNN than the proposed GPGDA while GPGDA surpasses LGSFA and other methods in terms of the SVM classification results. This illustrates that GPGDA exceeds LGSFA, which preserves local geometric structure, in extracting discriminative features for HSIs data.

Table 7.

Classification results with different amounts of training data on the University of Pavia data (OA ± STD (%)).

4.5. Experiments on the Salinas Data

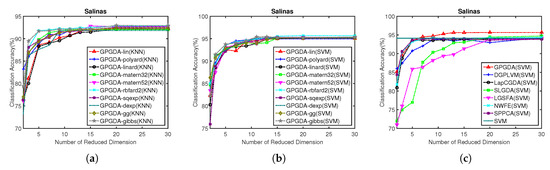

Another challenging HSI data is the Salinas data. As we did before, we firstly chose the optimal kernel for the proposed GPGDA, in which the hyperparameters can be automatically learned via empirical Bayesian approach. Thirty training samples were randomly picked from each class in this experiment, while the remaining data were split into the verification set (50%) and test set (50%). The optimal number of reduced dimensionality and the reported results in Figure 7 are based on the verification set and the test set, respectively.

Figure 9a,b shows the KNN and SVM classification accuracy of the proposed GPGDA, respectively. For the sake of simplicity, ten better-performing kernels of all the kernels are demonstrated: lin, polyard, linard, matern32, matern52, rbfard2, sqexp, dexp, gg and gibbs. As in the experiments results for Indian Pines and University of Pavia datasets, slight differences are shown in Figure 9a,b, indicating that any of the ten kernels can be used efficiently. Since the SVM classification result of rbfard2 is slightly higher than others, kernel rbfard2 is finally picked.

Figure 9.

Classification accuracy w.r.t. different kernels and different dimensionality of the projection space on Salinas data: (a) KNN classification results of GPGDA based on different kernels; (b) SVM classification results of GPGDA based on different kernels; and (c) SVM classification results of all the DR methods based on different dimensions.

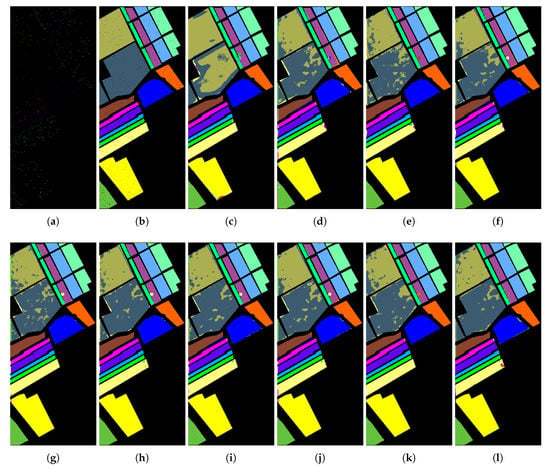

Having chosen the optimal kernel for GPGDA, the best dimensionality of the projection space can be obtained, as shown in Figure 9c, which describes the results based on SVM performed in the low-dimensional projection space. All the experiments were conducted on low-dimensional space in a range from 1 to 30. To make it fair, the best regularization parameters in other DR models were confirmed in advance. The optimal parameter values of other DR models on Salinas dataset are displayed in Table 2. Once again, the optimal number of dimensionality for all the DR models in Salinas data is 30. Table 8 displays the outperformance of GPGDA in terms of OA and KC. Accordingly, Figure 10 demonstrates that the classification map of GPGDA is more accurate than other methods.

Table 8.

Classification results of CNN and different DR methods based on SVM on the Salinas data.

Figure 10.

Classification maps of different DR models based on SVM on the Salinas data: (a) Training GT; (b) Testing GT; (c) CNN (OA = 84.2%); (d) SVM (OA = 93.2%); (e) SPPCA (OA = 94.7%); (f) NWFE (OA = 94.0%); (g) DGPLVM(OA = 94.1%); (h) SLGDA (OA = 94.1%); (i) LapCGDA (OA = 94.2%); (j) KCGDA (OA = 94.1%); (k) LGSFA (OA = 94.2%); and (l) GPGDA (OA = 95.6%).

Finally, based on the optimal projection dimensionality, we also conduct experiments when different amounts of training data were selected. In addition, 10–60 samples were randomly picked from all the labeled data, and the rest of data were the testing data. Table 9 displays that the OAs, AAs and KCs keep an upward tendency as the number of training samples increases for all methods. Moreover, the OAs of GPGDA based on SVM are higher than other DR models, while LGSFA is superior to GPGDA in terms of KNN classification results because of the preserving local geometric structure. Generally, the experimental results in Table 9 corroborate the discriminating power of the proposed GPGDA.

Table 9.

Classification results with different amounts of training data on the Salinas data (OA ± STD (%)).

5. Discussion

Based on above experimental results, we can provide the following discussions.

- (i)

- The proposed GPGDA outperforms SPPCA, NWFE, DGPLVM, SLGDA, LapCGDA, KCGDA and LGSFA in terms of OA, AA and KC based on SVM. As for the KNN classification results, LGSFA always takes the first place, but GPGDA is superior to other methods such as DGPLVM, SLGDA, and LapCGDA. The explanation could be that, although the learned similarity graph from GPGDA is more representative than that from other models, LGSFA preserves the intraclass neighbor reconstruction relationship in the objection function which considers the local manifolds. However, compared to other DR models of which regularization parameters needs be to be manually tuned via parameters sensitivity experiments, the only parameters of kernels in GPGDA can be learned automatically by gradients based optimization algorithms. Furthermore, the procedure of dividing the multi-class data into two classes enforces interclass separability when learning the kernel matrix from training data of each class.

- (ii)

- It is clear that KCGDA is a kernel based discriminant analysis method which combines the advantages of kernel and GEDA framework. However, it is quite difficult to set the optimal kernel parameters for KCGDA. Sometimes, KCGDA is even inferior to conventional CGDA because of the unsuitable parameters. DGPLVM is a GP based supervised DR model, but, unlike GPGDA, DGPLVM still has regularization parameters to be tuned. Thus, parameter sensitivity experiments should be carried out. By contrast, the proposed GPGDA outperforms the two models in terms of classification accuracy and automatic parameters tuning.

- (iii)

- The time complexity of GPGDA is , which is the same with other discriminant analysis based methods such as CGDA and LapCGDA. However, traditional discriminant analysis based methods are able to reach the close-form solutions straightforwardly, while the GPR in the proposed GPGDA is often optimized by gradients based optimization algorithms, which could be more time-consuming. Nevertheless, when the number of training samples is small, which is the case in HSIs classification, the training time can be short. In Table 4, Table 6 and Table 8, we report the running times of extracting the dimensionality reduced features with different algorithms on three HSIs datasets. It should be noted that, although the proposed GPGDA needs more running times than most contrastive DR models, the hyperparameters of GPGDA can be automatically learned, indicating that the time consumption for parameter sensitivity experiments with respect to other DR models can be saved significantly. Furthermore, if parallel computation is adopted to calculate the class-specific similarity matrix, the experiment time will be further greatly reduced.

- (iv)

- Deep Learning based methods such as Convolutional Neural Networks (CNN) can take spatial information into account while extracting spectral information from HSIs. Its performance can be sensitive to the depths and widths of the deep network. However, more layers means more parameters that needs to be learned. Due to the large number of learnable parameters, sufficient training samples are needed in CNNs to avoid the overfitting problem. Unfortunately, the lack of labeled training samples is a common bottleneck in HSI classification tasks, which could decrease the performance of CNN. By contrast, the only thing we need to select is the type of kernel in the proposed nonparametric model GPGDA, which could significantly outperform CNN especially in the small sample size scenario.

6. Conclusions

This paper introduces a novel supervised DR technique GPGDA for HSIs data based on the GEDA framework. The proposed GPGDA utilizes the kernel function in GP to calculate all the within-class matrices, and then constructs the block-diagonal intrinsic graph in the GEDA framework. Once we get the intrinsic graph, the optimal projection matrix can be evaluated based on the GEDA framework. To proves this, various experimental results illustrate that discriminative information for classification can be effectively extracted by the proposed DR methods. Our future work would focus on how to introduce the local geometric manifold structure of HSIs data into our GPGDA algorithm.

Supplementary Materials

The following are available online at https://www.mdpi.com/2072-4292/11/19/2288/s1.

Author Contributions

X.S. carried out the experiments and wrote the paper. X.J. was mainly responsible for mathematical modeling and experimental design. J.G. contributed to some ideas of this paper and revised the paper. Z.C. reviewed and edited the draft.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 61402424, 61773355, and 61403351.

Acknowledgments

The authors would like to thank Wei Li and Fulin Luo for sharing the MATLAB codes of SLGDA, LapCGDA and LGSFA for comparison purposes.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Solomon, J.; Rock, B. Imaging spectrometry for earth remote sensing. Science 1985, 228, 1147–1152. [Google Scholar]

- Vane, G.; Duval, J.; Wellman, J. Imaging spectroscopy of the Earth and other solar system bodies. Remote Geochem. Anal. Elem. Mineral. Compos. 1993, 108, 121–166. [Google Scholar]

- Hege, E.K.; O’Connell, D.; Johnson, W.; Basty, S.; Dereniak, E.L. Hyperspectral imaging for astronomy and space surviellance. Opt. Sci. Technol. 2004, 5159, 380–391. [Google Scholar]

- Lacar, F.; Lewis, M.; Grierson, I. Use of hyperspectral imagery for mapping grape varieties in the Barossa Valley, South Australia. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Sydney, Australia, 9–13 July 2001; pp. 2875–2877. [Google Scholar]

- Lu, G.; Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014, 19, 010901. [Google Scholar] [CrossRef] [PubMed]

- Kruse, F.A.; Boardman, J.W.; Huntington, J.F. Comparison of airborne hyperspectral data and EO-1 Hyperion for mineral mapping. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1388–1400. [Google Scholar] [CrossRef]

- Yuen, P.W.; Richardson, M. An introduction to hyperspectral imaging and its application for security, surveillance and target acquisition. Imaging Sci. J. 2010, 58, 241–253. [Google Scholar] [CrossRef]

- Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63. [Google Scholar] [CrossRef]

- Chang, C.I. Hyperspectral Data Exploitation: Theory and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Jia, X.; Richards, J.A. Segmented principal components transformation for efficient hyperspectral remote-sensing image display and classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 538–542. [Google Scholar]

- Xia, J.; Chanussot, J.; Du, P.; He, X. (Semi-) supervised probabilistic principal component analysis for hyperspectral remote sensing image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2224–2236. [Google Scholar] [CrossRef]

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52. [Google Scholar] [CrossRef]

- Rodarmel, C.; Shan, J. Principal component analysis for hyperspectral image classification. Surv. Land Inf. Sci. 2002, 62, 115. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM 2011, 58, 11. [Google Scholar] [CrossRef]

- Zou, H.; Hastie, T.; Tibshirani, R. Sparse principal component analysis. J. Comput. Graph. Stat. 2006, 15, 265–286. [Google Scholar] [CrossRef]

- Kutluk, S.; Kayabol, K.; Akan, A. Classification of Hyperspectral Images using Mixture of Probabilistic PCA Models. In Proceedings of the 24th European Signal Processing Conference, Budapest, Hungary, 29 August–2 September 2016; pp. 1568–1572. [Google Scholar]

- Ren, Y.; Liao, L.; Maybank, S.J.; Zhang, Y.; Liu, X. Hyperspectral image spectral-spatial feature extraction via tensor principal component analysis. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1431–1435. [Google Scholar] [CrossRef]

- Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326. [Google Scholar] [CrossRef] [PubMed]

- Tenenbaum, J.B.; De Silva, V.; Langford, J.C. A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290, 2319–2323. [Google Scholar] [CrossRef] [PubMed]

- He, J.; Zhang, L.; Wang, Q.; Li, Z. Using diffusion geometric coordinates for hyperspectral imagery representation. IEEE Geosci. Remote Sens. Lett. 2009, 6, 767–771. [Google Scholar]

- Belkin, M.; Niyogi, P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003, 15, 1373–1396. [Google Scholar] [CrossRef]

- Zhang, Z.; Zha, H. Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM J. Sci. Comput. 2004, 26, 313–338. [Google Scholar] [CrossRef]

- Lunga, D.; Prasad, S.; Crawford, M.M.; Ersoy, O. Manifold-learning-based feature extraction for classification of hyperspectral data: A review of advances in manifold learning. IEEE Signal Process. Mag. 2014, 31, 55–66. [Google Scholar] [CrossRef]

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification; John Wiley & Sons: Hoboken, NJ, USA, 2012. [Google Scholar]

- Bengio, Y.; Paiement, J.F.; Vincent, P.; Delalleau, O.; Roux, N.L.; Ouimet, M. Out-of-sample Extensions for LLE, Isomap, MDS, Eigenmaps, and Spectral Clustering. In Proceedings of the 16th International Conference on Neural Information Processing Systems, Whistler, BC, Canada, 9–11 December 2003; pp. 177–184. [Google Scholar]

- He, X.; Niyogi, P. Locality preserving projections. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2004; pp. 153–160. [Google Scholar]

- He, X.; Cai, D.; Yan, S.; Zhang, H.J. Neighborhood preserving embedding. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; pp. 1208–1213. [Google Scholar]

- Zhang, T.; Yang, J.; Zhao, D.; Ge, X. Linear local tangent space alignment and application to face recognition. Neurocomputing 2007, 70, 1547–1553. [Google Scholar] [CrossRef]

- Yan, S.; Xu, D.; Zhang, B.; Zhang, H.J.; Yang, Q.; Lin, S. Graph embedding and extensions: A general framework for dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 40–51. [Google Scholar] [CrossRef] [PubMed]

- Li, W.; Feng, F.; Li, H.; Du, Q. Discriminant analysis-based dimension reduction for hyperspectral image classification: A survey of the most recent advances and an experimental comparison of different techniques. IEEE Geosci. Remote Sens. Mag. 2018, 6, 15–34. [Google Scholar] [CrossRef]

- Li, W.; Du, Q. A survey on representation-based classification and detection in hyperspectral remote sensing imagery. Pattern Recognit. Lett. 2016, 83, 115–123. [Google Scholar] [CrossRef]

- Qiao, L.; Chen, S.; Tan, X. Sparsity preserving projections with applications to face recognition. Pattern Recognit. 2010, 43, 331–341. [Google Scholar] [CrossRef]

- Yang, W.; Wang, Z.; Sun, C. A collaborative representation based projections method for feature extraction. Pattern Recognit. 2015, 48, 20–27. [Google Scholar] [CrossRef]

- Lu, Y.; Lai, Z.; Xu, Y.; Li, X.; Zhang, D.; Yuan, C. Low-rank preserving projections. IEEE Trans. Cybern. 2015, 46, 1900–1913. [Google Scholar] [CrossRef] [PubMed]

- Friedman, J.H. Regularized discriminant analysis. J. Am. Stat. Assoc. 1989, 84, 165–175. [Google Scholar] [CrossRef]

- Kuo, B.C.; Landgrebe, D.A. Nonparametric weighted feature extraction for classification. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1096–1105. [Google Scholar]

- Bandos, T.V.; Bruzzone, L.; Camps-Valls, G. Classification of hyperspectral images with regularized linear discriminant analysis. IEEE Trans. Geosci. Remote Sens. 2009, 47, 862–873. [Google Scholar] [CrossRef]

- Du, Q. Modified Fisher’s linear discriminant analysis for hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 2007, 4, 503–507. [Google Scholar] [CrossRef]

- Yu, S.; Yu, K.; Tresp, V.; Kriegel, H.P.; Wu, M. Supervised probabilistic principal component analysis. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 464–473. [Google Scholar]

- Mika, S.; Ratsch, G.; Weston, J.; Scholkopf, B.; Mullers, K.R. Fisher discriminant analysis with kernels. In Proceedings of the Neural Networks for Signal Processing IX: Proceedings of the 1999 IEEE Signal Processing Society Workshop, Madison, WI, USA, 25 August 1999; pp. 41–48. [Google Scholar]

- Sugiyama, M. Dimensionality reduction of multimodal labeled data by local fisher discriminant analysis. J. Mach. Learn. Res. 2007, 8, 1027–1061. [Google Scholar]

- Li, W.; Prasad, S.; Fowler, J.E.; Bruce, L.M. Locality-preserving dimensionality reduction and classification for hyperspectral image analysis. IEEE Trans. Geosci. Remote Sens. 2012, 50, 1185–1198. [Google Scholar] [CrossRef]

- Chen, H.T.; Chang, H.W.; Liu, T.L. Local discriminant embedding and its variants. In Proceedings of the Computer Vision and Pattern Recognition, 2005. CVPR 2005, San Diego, CA, USA, 20–25 June 2005; pp. 846–853. [Google Scholar]

- Zhao, W.; Du, S. Spectral–spatial feature extraction for hyperspectral image classification: A dimension reduction and deep learning approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554. [Google Scholar] [CrossRef]

- Li, J.; Wu, Y.; Zhao, J.; Lu, K. Low-rank discriminant embedding for multiview learning. IEEE Trans. Cybern. 2016, 47, 3516–3529. [Google Scholar] [CrossRef] [PubMed]

- Urtasun, R.; Darrell, T. Discriminative Gaussian process latent variable model for classification. In Proceedings of the 24th International Conference on Machine Learning, Corvalis, OR, USA, 20–24 June 2007; pp. 927–934. [Google Scholar]

- Li, X.; Zhang, L.; You, J. Locally Weighted Discriminant Analysis for Hyperspectral Image Classification. Remote Sens. 2019, 11, 109. [Google Scholar] [CrossRef]

- Huang, H.; Li, Z.; Pan, Y. Multi-Feature Manifold Discriminant Analysis for Hyperspectral Image Classification. Remote Sens. 2019, 11, 651. [Google Scholar] [CrossRef]

- Ly, N.H.; Du, Q.; Fowler, J.E. Sparse graph-based discriminant analysis for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 3872–3884. [Google Scholar]

- He, W.; Zhang, H.; Zhang, L.; Philips, W.; Liao, W. Weighted sparse graph based dimensionality reduction for hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 686–690. [Google Scholar] [CrossRef]

- Ly, N.H.; Du, Q.; Fowler, J.E. Collaborative graph-based discriminant analysis for hyperspectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2688–2696. [Google Scholar] [CrossRef]

- Li, W.; Du, Q. Laplacian regularized collaborative graph for discriminant analysis of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7066–7076. [Google Scholar] [CrossRef]

- Chen, M.; Wang, Q.; Li, X. Discriminant analysis with graph learning for hyperspectral image classification. Remote Sens. 2018, 10, 836. [Google Scholar] [CrossRef]

- Feng, F.; Li, W.; Du, Q.; Zhang, B. Dimensionality reduction of hyperspectral image with graph-based discriminant analysis considering spectral similarity. Remote Sens. 2017, 9, 323. [Google Scholar] [CrossRef]

- Luo, F.; Huang, H.; Yang, Y.; Lv, Z. Dimensionality reduction of hyperspectral images with local geometric structure Fisher analysis. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 52–55. [Google Scholar]

- Li, W.; Liu, J.; Du, Q. Sparse and low-rank graph for discriminant analysis of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4094–4105. [Google Scholar] [CrossRef]

- Jiang, X.; Song, X.; Zhang, Y.; Jiang, J.; Gao, J.; Cai, Z. Laplacian regularized spatial-aware collaborative graph for discriminant analysis of hyperspectral imagery. Remote Sens. 2019, 11, 29. [Google Scholar] [CrossRef]

- Rasmussen, C.E. Gaussian processes in machine learning. In Summer School on Machine Learning; Springer: Berlin/Heidelberg, Germany, 2003; pp. 63–71. [Google Scholar]

- Xu, D.; Yan, S.; Tao, D.; Lin, S.; Zhang, H.J. Marginal fisher analysis and its variants for human gait recognition and content-based image retrieval. IEEE Trans. Image Process. 2007, 16, 2811–2821. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Zhu, L.; Ghamisi, P.; Jia, X.; Li, G.; Tang, L. Hyperspectral Images Classification With Gabor Filtering and Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2355–2359. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).