DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds

Abstract

1. Introduction

1.1. The Impact of the Refraction Effect on Structure from Motion-Multi-View-Stereo (SfM-MVS) Procedures

1.2. Fusing Image-Based and LiDAR Seabed Point Clouds

1.3. Contribution of the Present Research

2. Related Work

2.1. Analytical and Image-Based Refraction Correction

2.2. Image-Based Bathymetry Estimation Using Machine Learning and Simple Regression Models

2.3. Fusing Seabed Point Clouds

3. Datasets and Pre-Processing

3.1. Test Sites and Reference Data

3.2. Test Sites and Reference Data

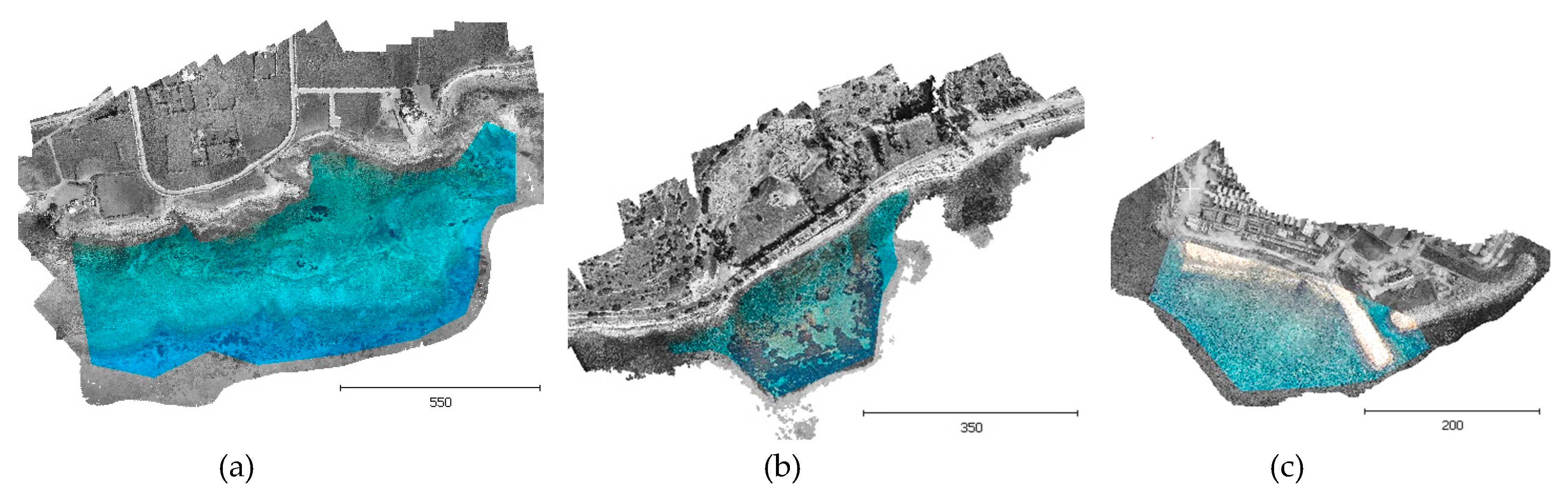

3.2.1. Agia Napa Test Area

3.2.2. Amathouda Test Area

3.2.3. Dekelia Test Area

3.3. The Influence of the Base-to-Height Ratio (B/H) of Stereopairs on the Apparent Depths

3.4. Data Pre-Processing

3.5. LiDAR Reference Data

3.6. GPS Reference Data

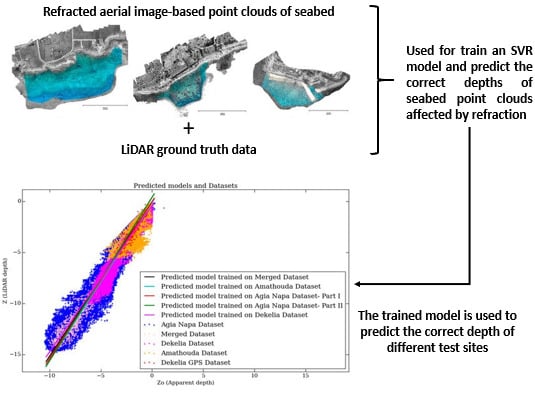

4. Proposed Methodology

4.1. Depth Correction Using SVR

4.2. The Linear SVR Approach

5. Experimental Results and Validation

5.1. Training, Validation, and Testing

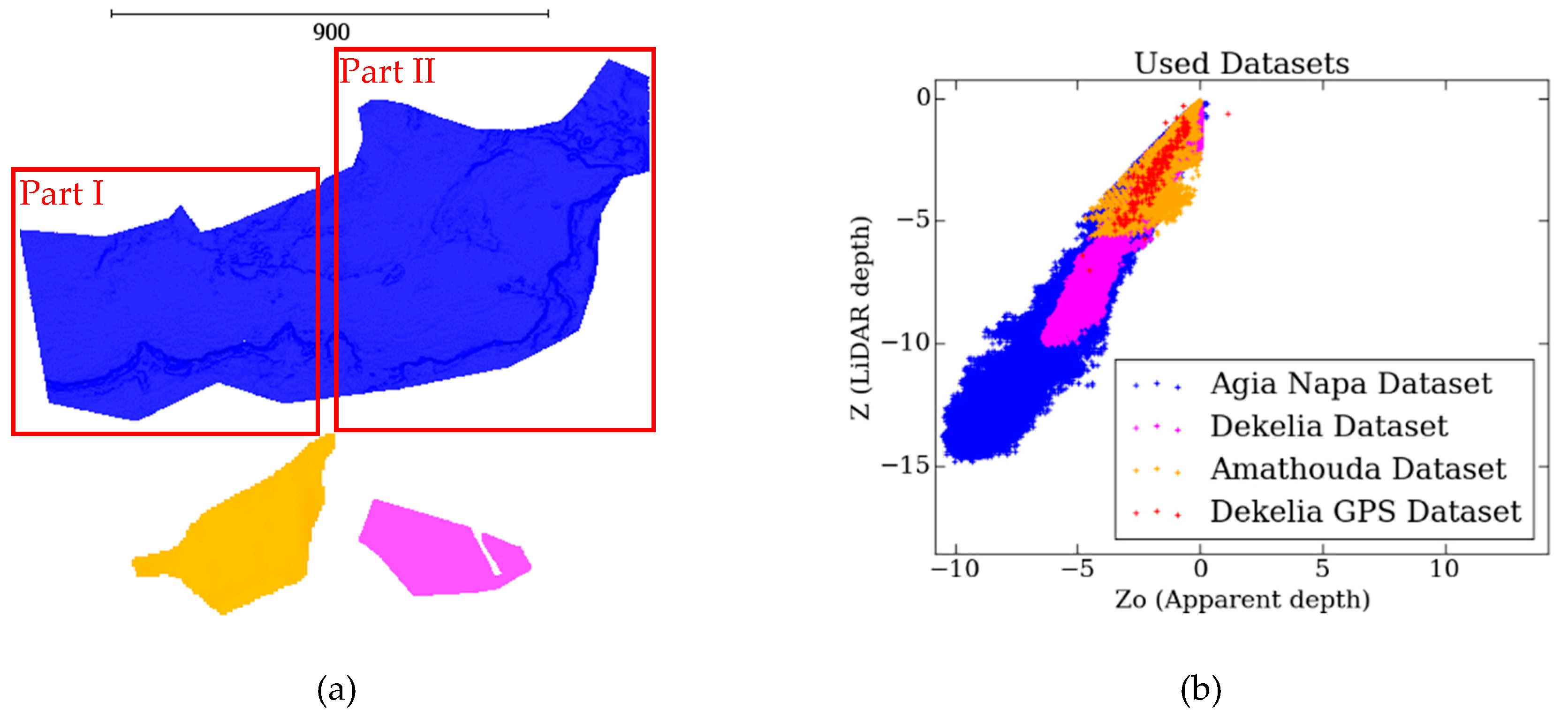

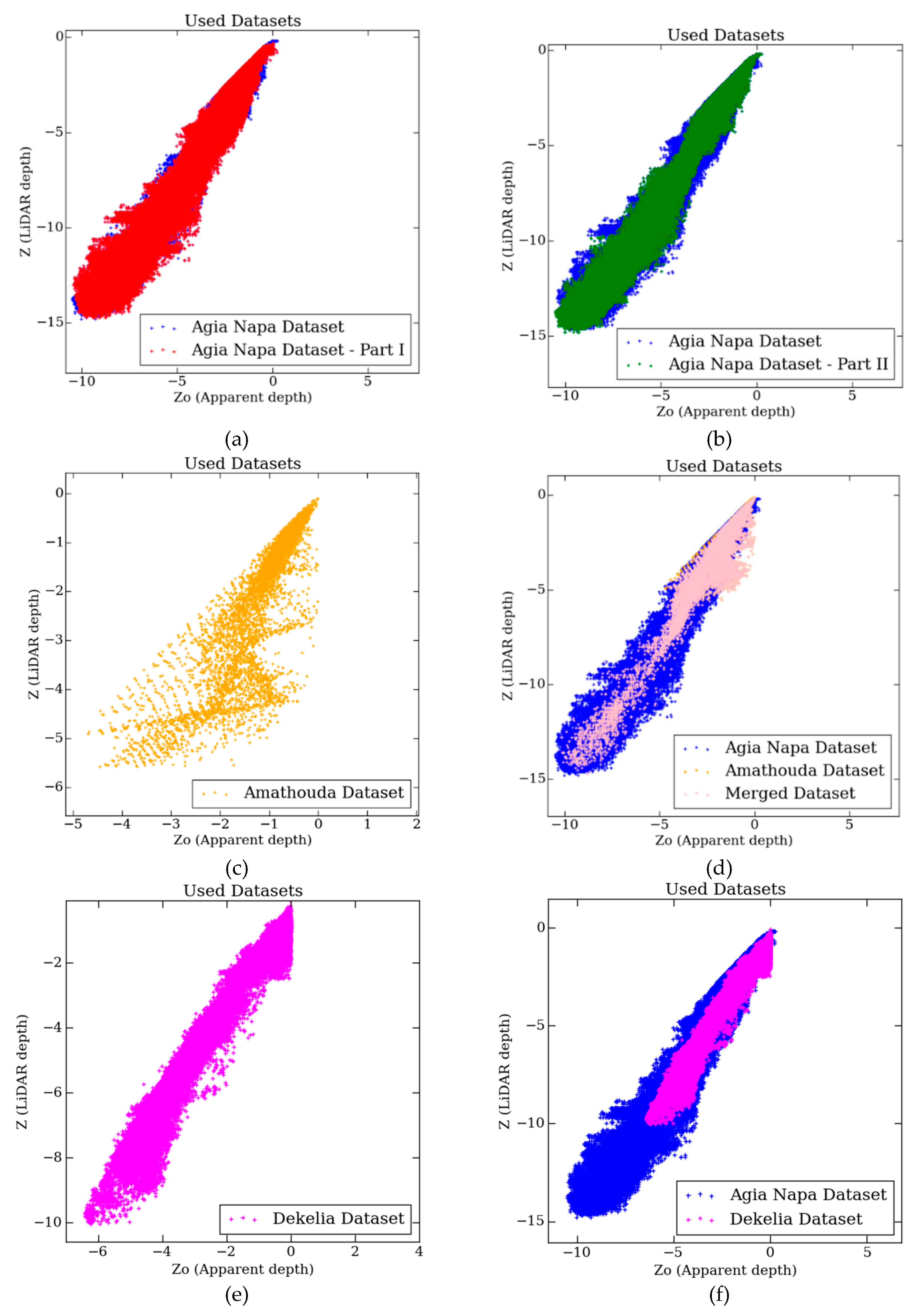

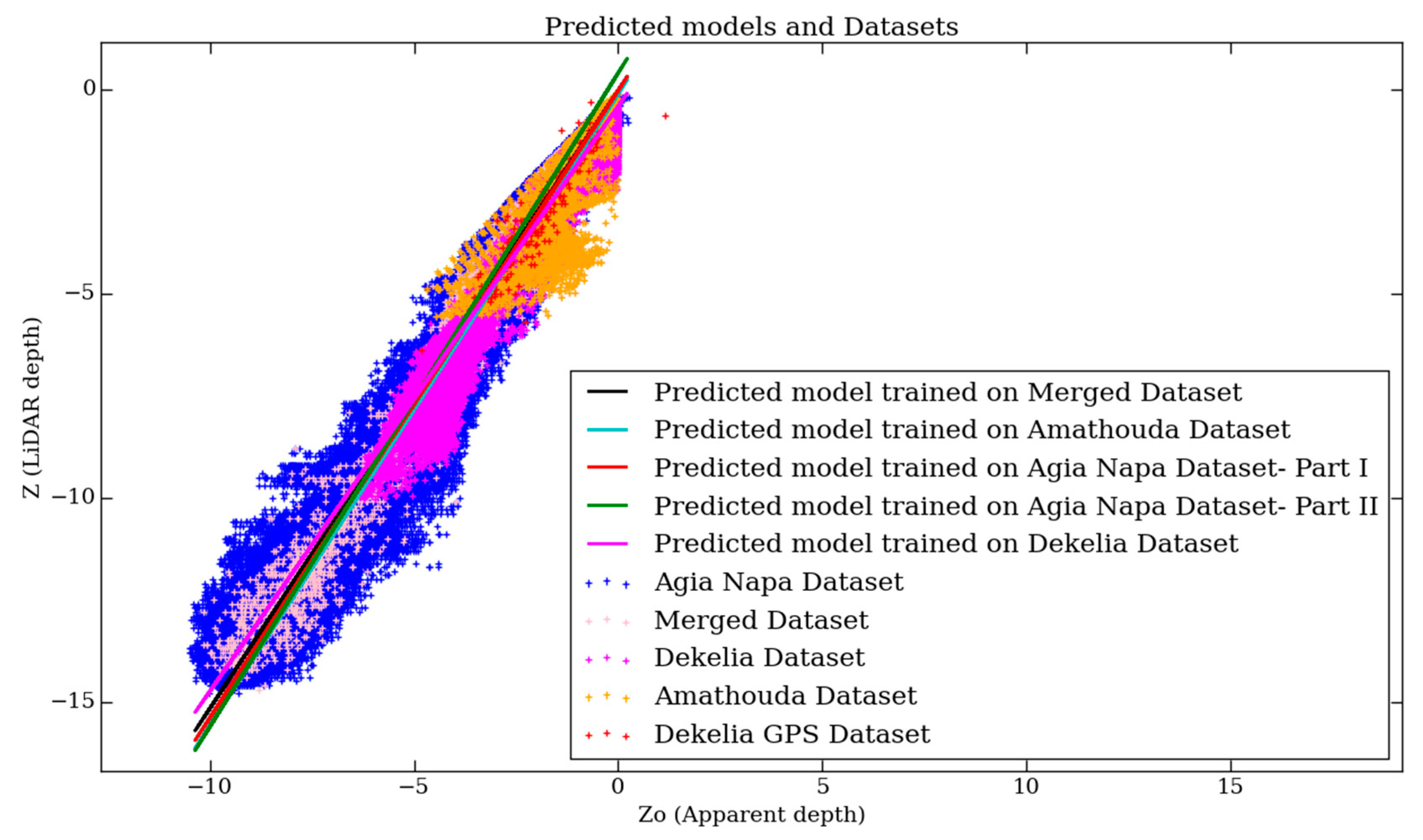

5.1.1. Agia Napa I and II, Amathouda, and Dekelia Datasets

5.1.2. Merged Dataset

5.2. Evaluation of the Results

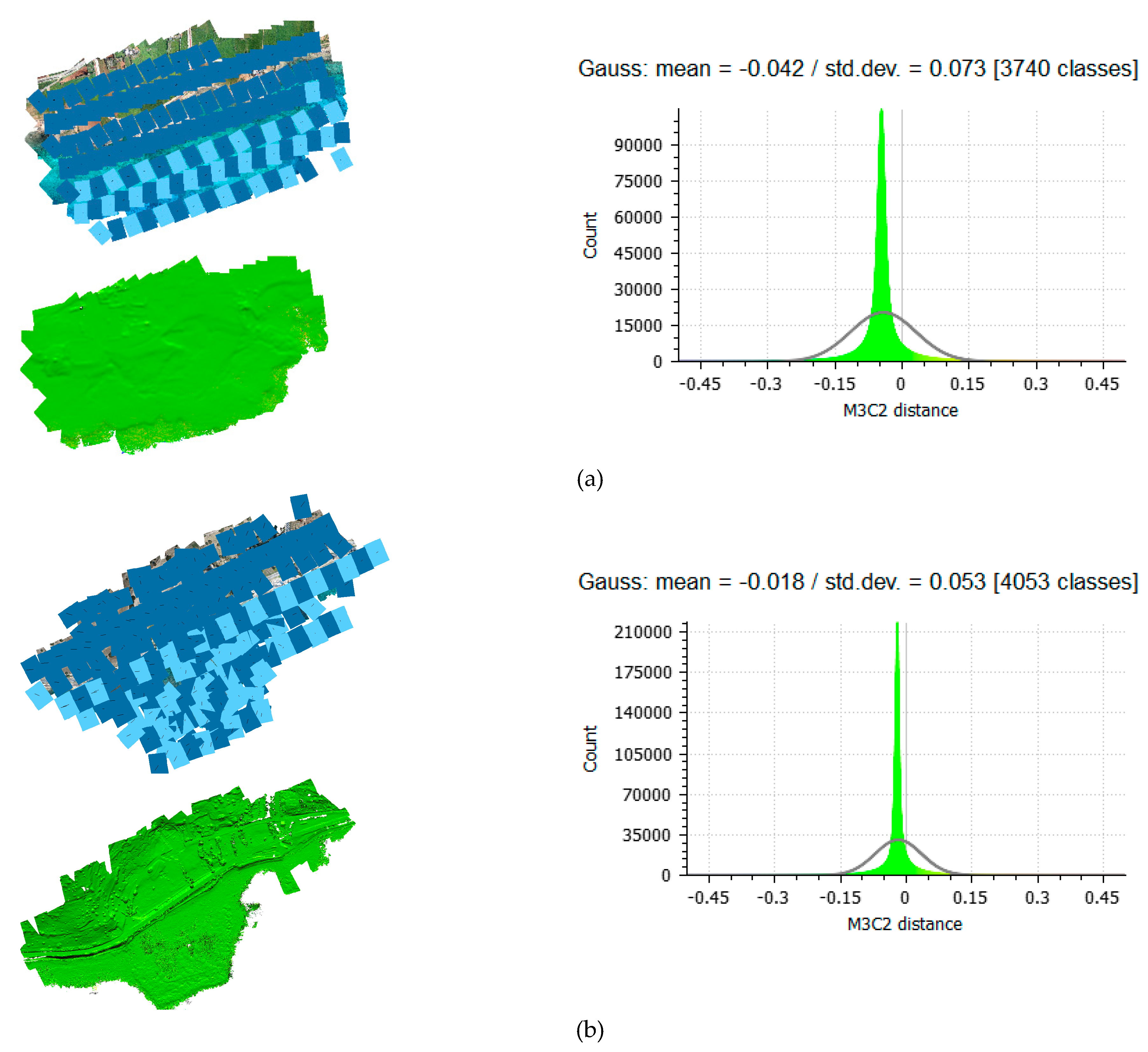

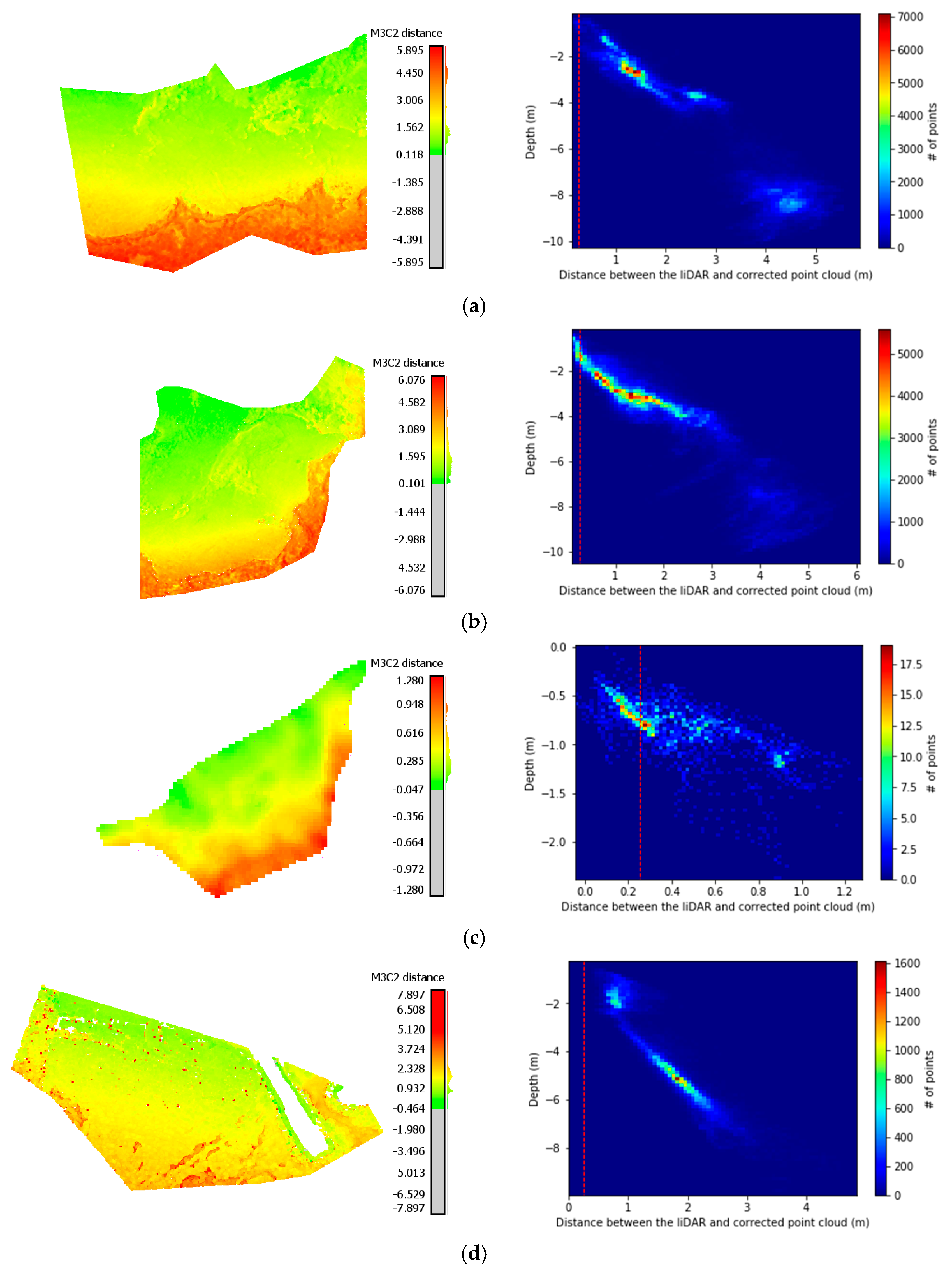

5.2.1. Comparing the Corrected Image-Based, and LiDAR Point Clouds

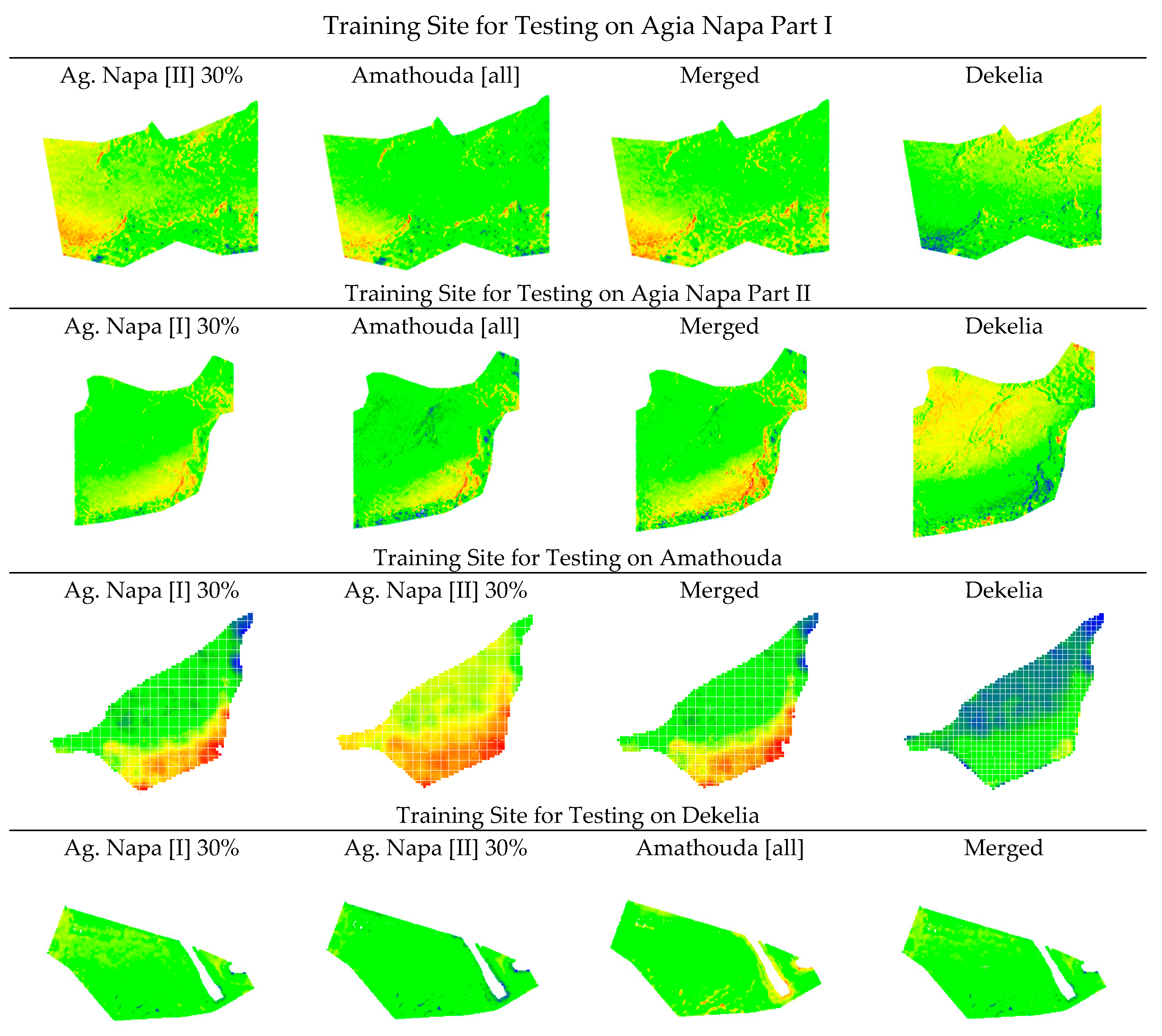

5.2.2. Fitting Score

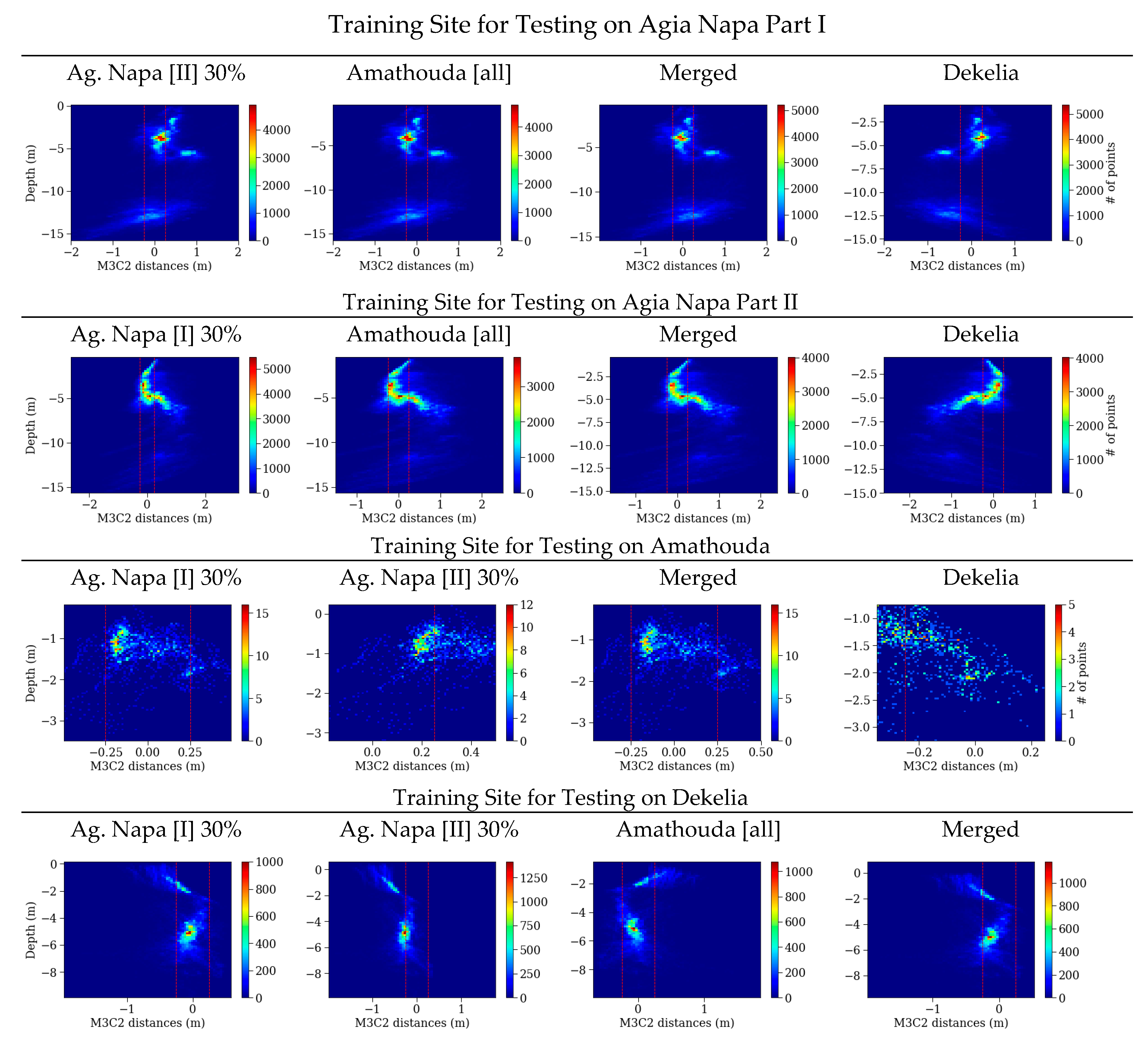

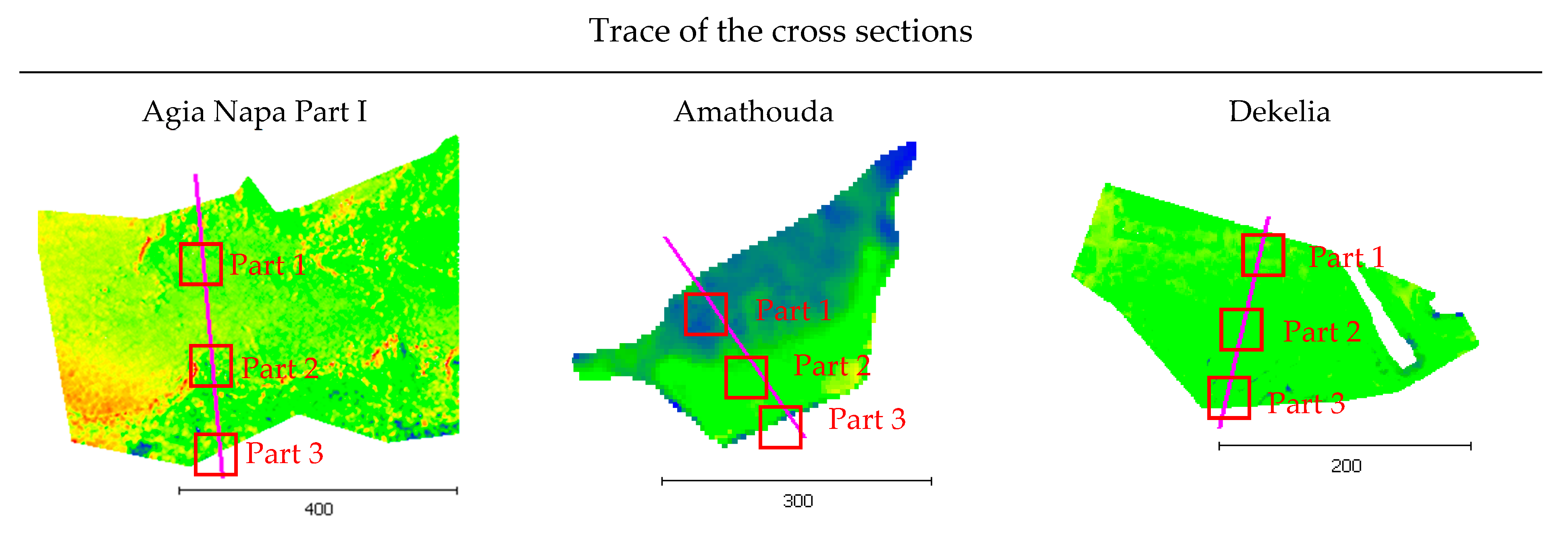

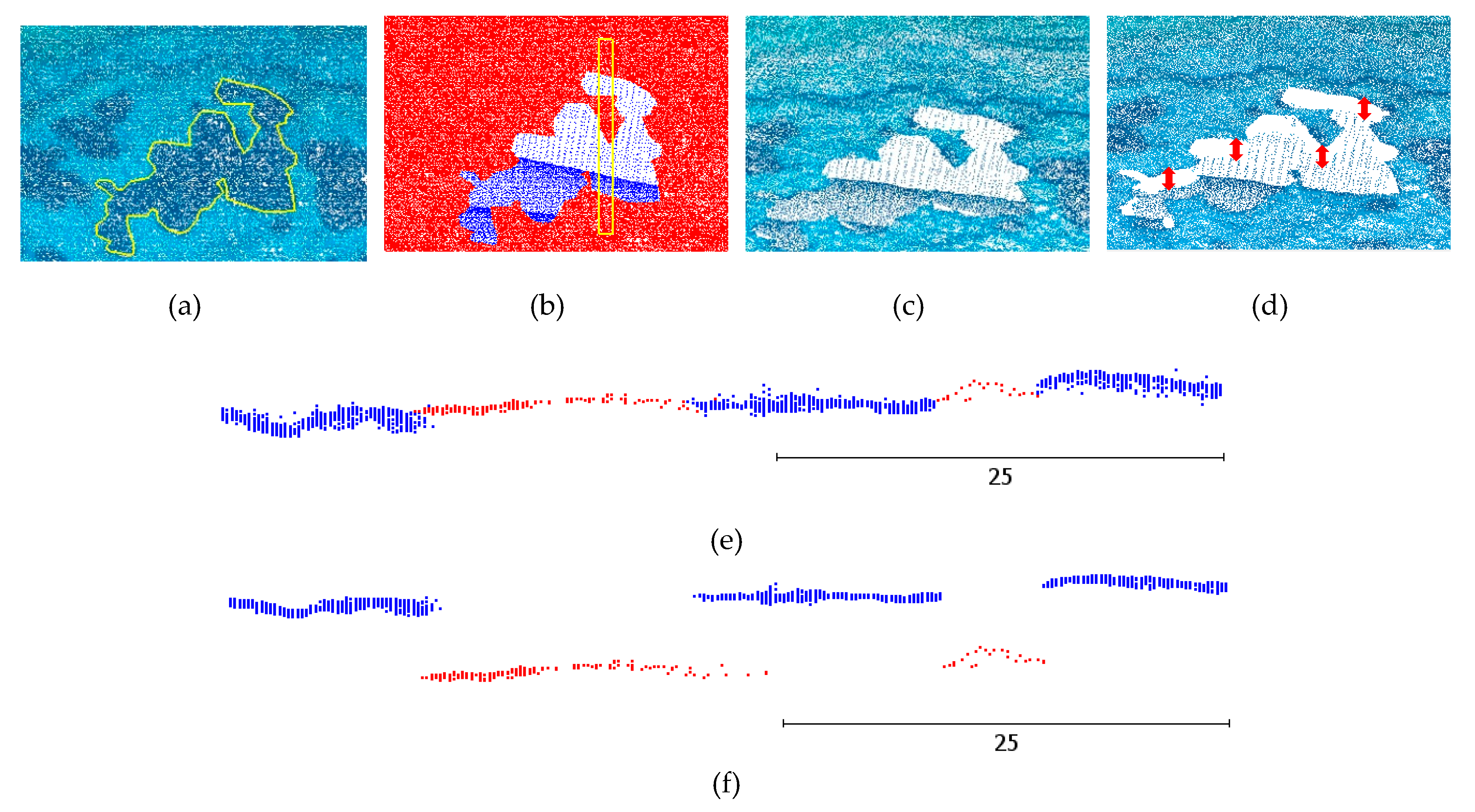

5.2.3. Seabed Cross Sections

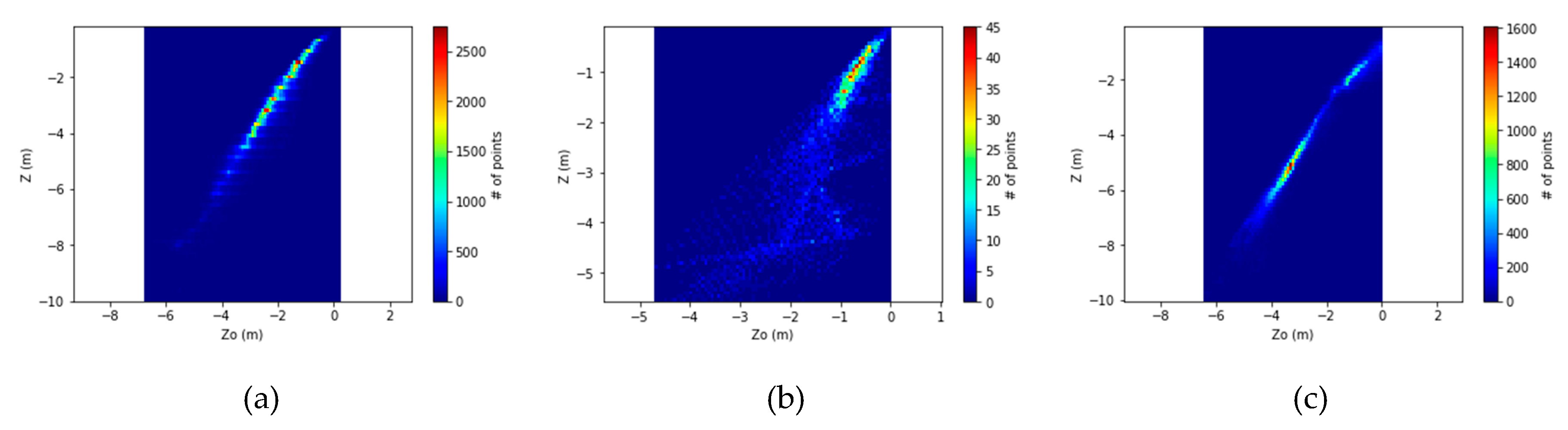

5.2.4. Distribution Patterns of Remaining Errors

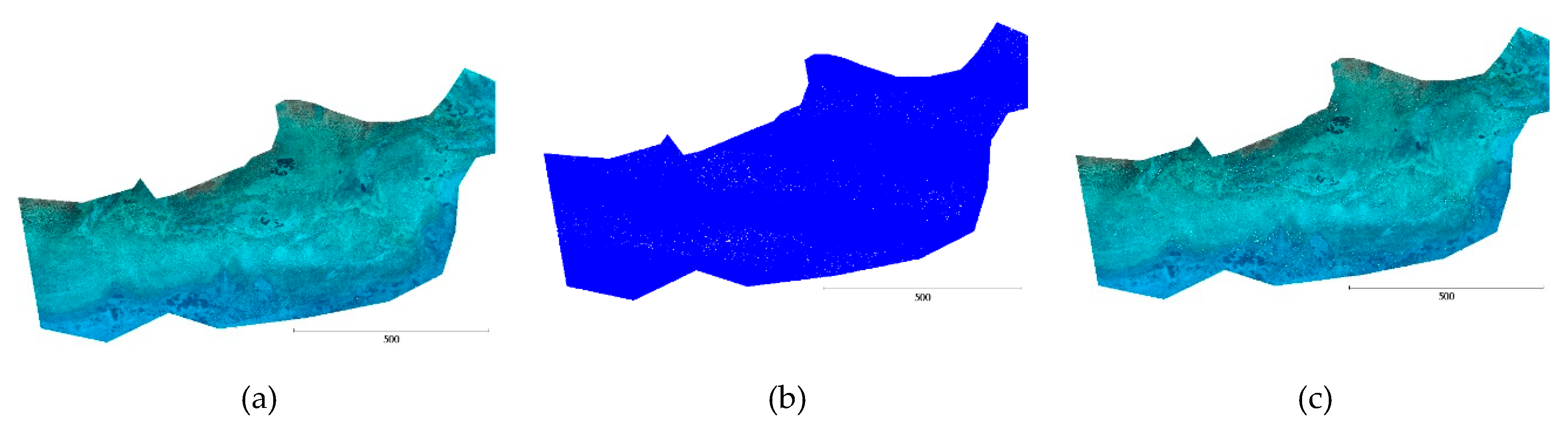

6. Fusing the Corrected Image-Based, and LiDAR Point Clouds

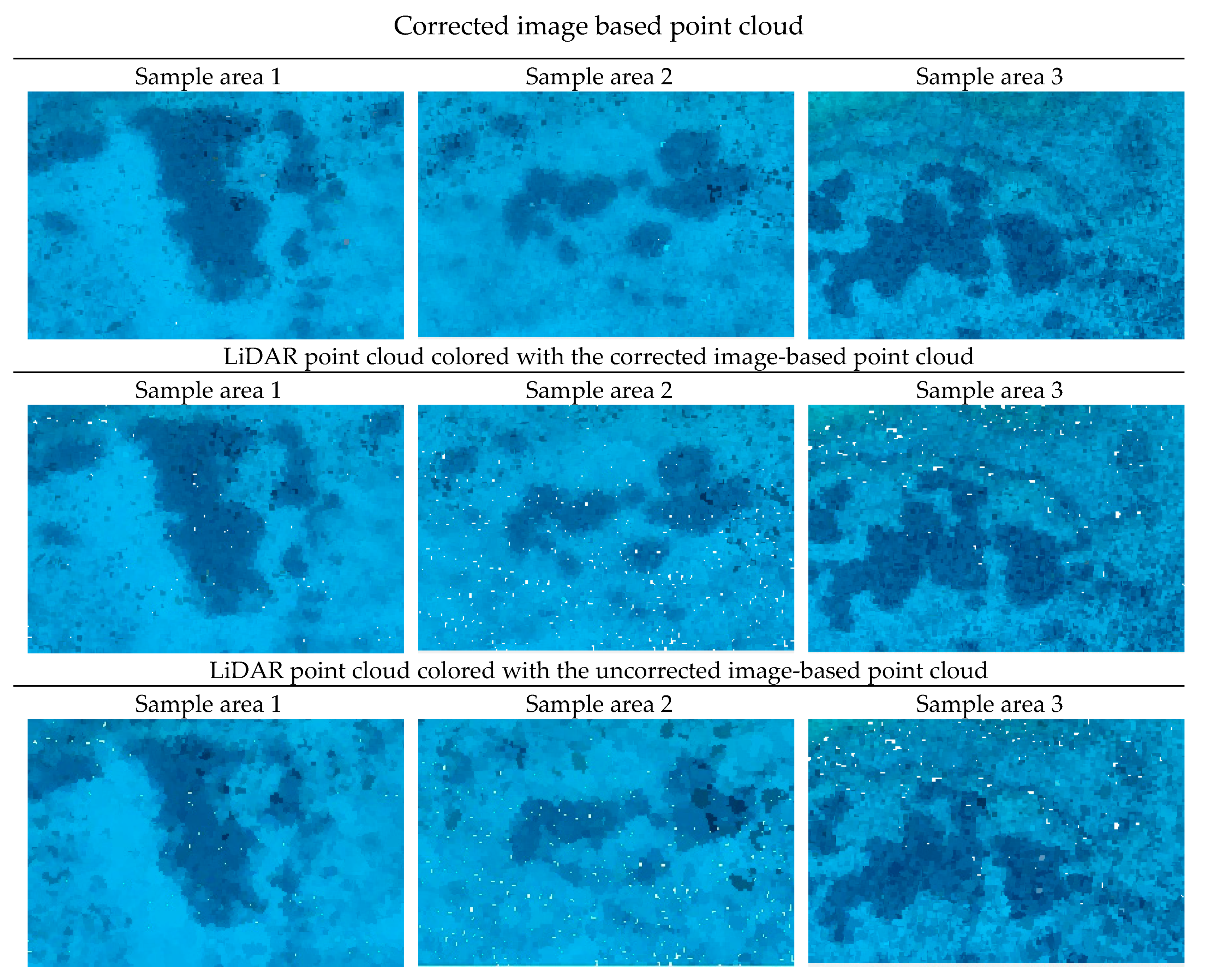

6.1. Color Transfer to LiDAR Data

6.2. Seamless Hole Filling

7. Discussion

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Agrafiotis, P.; Skarlatos, D.; Forbes, T.; Poullis, C.; Skamantzari, M.; Georgopoulos, A. Underwater photogrammetry in very shallow waters: main challenges and caustics effect removal. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 15–22. [Google Scholar] [CrossRef]

- Karara, H.M. Non-Topographic Photogrammetry, 2nd ed.; American Society for Photogrammetry and Remote Sensing: Falls Church, VA, USA, 1989. [Google Scholar]

- Menna, F.; Agrafiotis, P.; Georgopoulos, A. State of the art and applications in archaeological underwater 3D recording and mapping. J. Cult. Herit. 2018. [Google Scholar] [CrossRef]

- Skarlatos, D.; Agrafiotis, P. A Novel Iterative Water Refraction Correction Algorithm for Use in Structure from Motion Photogrammetric Pipeline. J. Mar. Sci. Eng. 2018, 6, 77. [Google Scholar] [CrossRef]

- Green, E.; Mumby, P.; Edwards, A.; Clark, C. Remote Sensing: Handbook for Tropical Coastal Management; United Nations Educational Scientific and Cultural Organization (UNESCO): London, UK, 2000. [Google Scholar]

- Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. Shallow water bathymetry mapping from UAV imagery based on machine learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W10, 9–16. [Google Scholar] [CrossRef]

- Lavest, J.; Rives, G.; Lapresté, J. Underwater camera calibration. In Computer Vision—ECCV; Vernon, D., Ed.; Springer: Berlin, Germany, 2000; pp. 654–668. [Google Scholar]

- Shortis, M. Camera Calibration Techniques for Accurate Measurement Underwater. In 3D Recording and Interpretation for Maritime Archaeology; Springer: Cham, Switzerland, 2019; pp. 11–27. [Google Scholar]

- Elnashef, B.; Filin, S. Direct linear and refraction-invariant pose estimation and calibration model for underwater imaging. ISPRS J. Photogramm. Remote Sens. 2019, 154, 259–271. [Google Scholar] [CrossRef]

- Fryer, J.G.; Kniest, H.T. Errors in Depth Determination Caused by Waves in Through-Water Photogrammetry. Photogramm. Rec. 1985, 11, 745–753. [Google Scholar] [CrossRef]

- Okamoto, A. Wave influences in two-media photogrammetry. Photogramm. Eng. Remote Sens. 1982, 48, 1487–1499. [Google Scholar]

- Agrafiotis, P.; Georgopoulos, A. Camera constant in the case of two media photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W5, 1–6. [Google Scholar] [CrossRef]

- Georgopoulos, A.; Agrafiotis, P. Documentation of a submerged monument using improved two media techniques. In Proceedings of the 2012 18th International Conference on Virtual Systems and Multimedia, Milan, Italy, 2–5 September 2012; pp. 173–180. [Google Scholar] [CrossRef]

- Maas, H.-G. On the Accuracy Potential in Underwater/Multimedia Photogrammetry. Sensors 2015, 15, 18140–18152. [Google Scholar] [CrossRef]

- Allouis, T.; Bailly, J.S.; Pastol, Y.; Le Roux, C. Comparison of LiDAR waveform processing methods for very shallow water bathymetry using Raman, near-infrared and green signals. Earth Surf. Process. Landf. 2010, 5, 640–650. [Google Scholar] [CrossRef]

- Schwarz, R.; Mandlburger, G.; Pfennigbauer, M.; Pfeifer, N. Design and evaluation of a full-wave surface and bottom-detection algorithm for LiDAR bathymetry of very shallow waters. ISPRS J. Photogramm. Remote Sens. 2019, 150, 1–10. [Google Scholar] [CrossRef]

- van den Bergh, J.; Schutz, J.; Chirayath, V.; Li, A. A 3D Active Learning Application for NeMO-Net, the NASA Neural Multi-Modal Observation and Training Network for Global Coral Reef Assessment. In Proceedings of the AGU Fall Meeting, New Orleans, LA, USA, 11–15 December 2017. [Google Scholar]

- Butler, J.B.; Lane, S.N.; Chandler, J.H.; Porfiri, E. Through-water close range digital photogrammetry in flume and field environments. Photogramm. Rec. 2002, 17, 419–439. [Google Scholar] [CrossRef]

- Tewinkel, G.C. Water depths from aerial photographs. Photogramm. Eng. 1963, 29, 1037–1042. [Google Scholar]

- Shmutter, B.; Bonfiglioli, L. Orientation problem in two-medium photogrammetry. Photogramm. Eng. 1967, 33, 1421–1428. [Google Scholar]

- Wang, Z. Principles of Photogrammetry (with Remote Sensing); Publishing House of Surveying and Mapping: Beijing, China, 1990. [Google Scholar]

- Shan, J. Relative orientation for two-media photogrammetry. Photogramm. Rec. 1994, 14, 993–999. [Google Scholar] [CrossRef]

- Fryer, J.F. Photogrammetry through shallow waters. Aust. J. Geod. Photogramm. Surv. 1983, 38, 25–38. [Google Scholar]

- Whittlesey, J.H. Elevated and airborne photogrammetry and stereo photography. In Photography in Archaeological Research; Harp, E., Jr., Ed.; University of New Mexico Press: Albuquerque, NM, USA, 1975; pp. 223–259. [Google Scholar]

- Westaway, R.; Lane, S.; Hicks, M. Remote sensing of clear-water, shallow, gravel-bed rivers using digital photogrammetry. Photogramm. Eng. Remote Sens. 2010, 67, 1271–1281. [Google Scholar]

- Elfick, M.H.; Fryer, J.G. Mapping in shallow water. Int. Arch. Photogramm. Remote Sens. 1984, 25, 240–247. [Google Scholar]

- Partama, I.G.Y.; Kanno, A.; Ueda, M.; Akamatsu, Y.; Inui, R.; Sekine, M.; Yamamoto, K.; Imai, T.; Higuchi, T. Removal of water-surface reflection effects with a temporal minimum filter for UAV-based shallow-water photogrammetry. Earth Surf. Process. Landf. 2018, 43, 2673–2682. [Google Scholar] [CrossRef]

- Ferreira, R.; Costeira, J.P.; Silvestre, C.; Sousa, I.; Santos, J.A. Using stereo image reconstruction to survey scale models of rubble-mound structures. In Proceedings of the First International Conference on the Application of Physical Modelling to Port and Coastal Protection, Porto, Portugal, 22–26 May 2006. [Google Scholar]

- Muslow, C. A flexible multi-media bundle approach. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, XXXVIII, 472–477. [Google Scholar]

- Wolff, K.; Forstner, W. Exploiting the multi view geometry for automatic surfaces reconstruction using feature based matching in multi media photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2000, XXXIII Pt B5, 900–907. [Google Scholar]

- Ke, X.; Sutton, M.A.; Lessner, S.M.; Yost, M. Robust stereo vision and calibration methodology for accurate three-dimensional digital image correlation measurements on submerged objects. J. Strain Anal. Eng. Des. 2008, 43, 689–704. [Google Scholar] [CrossRef]

- Byrne, P.M.; Honey, F.R. Air survey and satellite imagery tools for shallow water bathymetry. In Proceedings of the 20th Australian Survey Congress, Shrewsbury, UK, 20 May 1977; pp. 103–119. [Google Scholar]

- Harris, W.D.; Umbach, M.J. Underwater mapping. Photogramm. Eng. 1972, 38, 765. [Google Scholar]

- Masry, S.E. Measurement of water depth by the analytical plotter. Int. Hydrogr. Rev. 1975, 52, 75–86. [Google Scholar]

- Dietrich, J.T. Bathymetric Structure-from-Motion: Extracting shallow stream bathymetry from multi-view stereo photogrammetry. Earth Surf. Process. Landf. 2017, 42, 355–364. [Google Scholar] [CrossRef]

- Telem, G.; Filin, S. Photogrammetric modeling of underwater environments. ISPRS J. Photogramm. Remote Sens. 2010, 65, 433–444. [Google Scholar] [CrossRef]

- Woodget, A.S.; Carbonneau, P.E.; Visser, F.; Maddock, I.P. Quantifying submerged fluvial topography using hyperspatial resolution UAS imagery and structure from motion photogrammetry. Earth Surf. Process. Landf. 2015, 40, 47–64. [Google Scholar] [CrossRef]

- Murase, T.; Tanaka, M.; Tani, T.; Miyashita, Y.; Ohkawa, N.; Ishiguro, S.; Suzuki, Y.; Kayanne, H.; Yamano, H. A photogrammetric correction procedure for light refraction effects at a two-medium boundary. Photogramm. Eng. Remote Sens. 2008, 74, 1129–1136. [Google Scholar] [CrossRef]

- Hodúl, M.; Bird, S.; Knudby, A.; Chénier, R. Satellite derived photogrammetric bathymetry. ISPRS J. Photogramm. Remote Sens. 2018, 142, 268–277. [Google Scholar] [CrossRef]

- Qian, Y.; Zheng, Y.; Gong, M.; Yang, Y.H. Simultaneous 3D Reconstruction for Water Surface and Underwater Scene. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 754–770. [Google Scholar]

- Kasvi, E.; Salmela, J.; Lotsari, E.; Kumpula, T.; Lane, S.N. Comparison of remote sensing based approaches for mapping bathymetry of shallow, clear water rivers. Geomorphology 2019, 333, 180–197. [Google Scholar] [CrossRef]

- Mandlburger, G. A case study on through-water dense image matching. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2018, XLII-2, 659–666. [Google Scholar] [CrossRef]

- Mandlburger, G. Through-Water Dense Image Matching for Shallow Water Bathymetry. Photogramm. Eng. Remote Sens. 2019, 85, 445–455. [Google Scholar] [CrossRef]

- Wimmer, M. Comparison of Active and Passive Optical Methods for Mapping River Bathymetry. Master’s Thesis, Vienna University of Technology, Vienna, Austria, 2016. [Google Scholar]

- Wang, L.; Liu, H.; Su, H.; Wang, J. Bathymetry retrieval from optical images with spatially distributed support vector machines. GIScience Remote Sens. 2018, 1–15. [Google Scholar] [CrossRef]

- Misra, A.; Vojinovic, Z.; Ramakrishnan, B.; Luijendijk, A.; Ranasinghe, R. Shallow water bathymetry mapping using Support Vector Machine (SVM) technique and multispectral imagery. Int. J. Remote Sens. 2018, 1–20. [Google Scholar] [CrossRef]

- Mohamed, H.; Negm, A.; Zahran, M.; Saavedra, O.C. Bathymetry determination from high resolution satellite imagery using ensemble learning algorithms in Shallow Lakes: Case study El-Burullus Lake. Int. J. Environ. Sci. Dev. 2016, 7, 295. [Google Scholar] [CrossRef]

- Traganos, D.; Poursanidis, D.; Aggarwal, B.; Chrysoulakis, N.; Reinartz, P. Estimating satellite-derived bathymetry (SDB) with the google earth engine and sentinel-2. Remote Sens. 2018, 10, 859. [Google Scholar] [CrossRef]

- Niroumand-Jadidi, M.; Pahlevan, N.; Vitti, A. Mapping Substrate Types and Compositions in Shallow Streams. Remote Sens. 2019, 11, 262. [Google Scholar] [CrossRef]

- Shintani, C.; Fonstad, M.A. Comparing remote-sensing techniques collecting bathymetric data from a gravel-bed river. Int. J. Remote Sens. 2017, 38, 2883–2902. [Google Scholar] [CrossRef]

- Caballero, I.; Stumpf, R.P.; Meredith, A. Preliminary Assessment of Turbidity and Chlorophyll Impact on Bathymetry Derived from Sentinel-2A and Sentinel-3A Satellites in South Florida. Remote Sens. 2019, 11, 645. [Google Scholar] [CrossRef]

- Legleiter, C.J.; Fosness, R.L. Defining the Limits of Spectrally Based Bathymetric Mapping on a Large River. Remote Sens. 2019, 11, 665. [Google Scholar] [CrossRef]

- Cabezas, R.; Freifeld, O.; Rosman, G.; Fisher, J.W. Aerial reconstructions via probabilistic data fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 4010–4017. [Google Scholar]

- Alvarez, L.; Moreno, H.; Segales, A.; Pham, T.; Pillar-Little, E.; Chilson, P. Merging unmanned aerial systems (UAS) imagery and echo soundings with an adaptive sampling technique for bathymetric surveys. Remote Sens. 2018, 10, 1362. [Google Scholar] [CrossRef]

- Legleiter, C.J. Remote measurement of river morphology via fusion of LiDAR topography and spectrally based bathymetry. Earth Surf. Process. Landf. 2012, 37, 499–518. [Google Scholar] [CrossRef]

- Coleman, J.B.; Yao, X.; Jordan, T.R.; Madden, M. Holes in the ocean: Filling voids in bathymetric lidar data. Comput. Geosci. 2011, 37, 474–484. [Google Scholar] [CrossRef]

- Cheng, L.; Ma, L.; Cai, W.; Tong, L.; Li, M.; Du, P. Integration of Hyperspectral Imagery and Sparse Sonar Data for Shallow Water Bathymetry Mapping. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3235–3249. [Google Scholar] [CrossRef]

- Leon, J.X.; Phinn, S.R.; Hamylton, S.; Saunders, M.I. Filling the ‘white ribbon’—A multisource seamless digital elevation model for Lizard Island, northern Great Barrier Reef. Int. J. Remote Sens. 2013, 34, 6337–6354. [Google Scholar] [CrossRef]

- Hasegawa, H.; Matsuo, K.; Koarai, M.; Watanabe, N.; Masaharu, H.; Fukushima, Y. DEM accuracy and the base to height (B/H) ratio of stereo images. Int. Arch. Photogramm. Remote Sens. 2000, 33, 356–359. [Google Scholar]

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (NZ). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26. [Google Scholar] [CrossRef]

- CloudCompare (Version 2.11 Alpha) [GPL Software]. Available online: http://www.cloudcompare.org/ (accessed on 15 July 2019).

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F.; Gonizzi-Barsanti, S. Dense image matching: Comparisons and analyses. In Proceedings of the 2013 IEEE Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; Volume 1, pp. 47–54. [Google Scholar]

- Matthies, L.; Kanade, T.; Szeliski, R. Kalman filter-based algorithms for estimating depth from image sequences. Int. J. Comput. Vis. 1989, 3, 209–238. [Google Scholar] [CrossRef]

- Okutomi, M.; Kanade, T. A multiple-baseline stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 353–363. [Google Scholar] [CrossRef]

- Ylimäki, M.; Heikkilä, J.; Kannala, J. Accurate 3-D Reconstruction with RGB-D Cameras using Depth Map Fusion and Pose Refinement. In Proceedings of the 2018 24th IEEE International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1977–1982. [Google Scholar]

- Mangeruga, M.; Bruno, F.; Cozza, M.; Agrafiotis, P.; Skarlatos, D. Guidelines for Underwater Image Enhancement Based on Benchmarking of Different Methods. Remote Sens. 2018, 10, 1652. [Google Scholar] [CrossRef]

- Leica HawkEye III. Available online: https://leica-geosystems.com/-/media/files/leicageosystems/products/datasheets/leica_hawkeye_iii_ds.ashx?la=en (accessed on 7 August 2019).

- Skinner, K.D. Evaluation of LiDAR-Acquired Bathymetric and Topographic Data Accuracy in Various Hydrogeomorphic Settings in the Deadwood and South Fork Boise Rivers, West-Central Idaho 2007; U.S. Geological Survey: Reston, VA, USA, 2011. [Google Scholar]

- Bailly, J.S.; Le Coarer, Y.; Languille, P.; Stigermark, C.J.; Allouis, T. Geostatistical estimations of bathymetric LiDAR errors on rivers. Earth Surf. Process. Landf. 2010, 35, 1199–1210. [Google Scholar] [CrossRef]

- Fernandez-Diaz, J.C.; Glennie, C.L.; Carter, W.E.; Shrestha, R.L.; Sartori, M.P.; Singhania, A.; Legleiter, C.J.; Overstreet, B.T. Early results of simultaneous terrain and shallow water bathymetry mapping using a single-wavelength airborne LiDAR sensor. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 623–635. [Google Scholar] [CrossRef]

- Westfeld, P.; Maas, H.G.; Richter, K.; Weiß, R. Analysis and correction of ocean wave pattern induced systematic coordinate errors in airborne LiDAR bathymetry. ISPRS J. Photogramm. Remote Sens. 2017, 128, 314–325. [Google Scholar] [CrossRef]

- Smola, A.J. Regression Estimation with Support Vector Learning Machines. Ph.D. Thesis, Technische Universität München, Munich, Germany, 1996. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305. [Google Scholar]

- Guenther, G.C.; Cunningham, A.G.; LaRocque, P.E.; Reid, D.J. Meeting the Accuracy Challenge in Airborne Bathymetry; National Oceanic Atmospheric Administration/NESDIS: Silver Spring, MD, USA, 2000. [Google Scholar]

| Test Site | Amathouda | Agia Napa | Dekelia |

|---|---|---|---|

| # Images | 182 | 383 | 78 |

| Control points used | 29 | 40 | 17 |

| Average flying height [m] | 103 | 209 | 188 |

| Average Base-to-Height (B/H) ratio along strip | 0.39 | 0.35 | 0.32 |

| Average Base-to-Height (B/H) ratio across strip | 0.66 | 0.62 | 0.38 |

| Average along strip overlap | 65% | 69% | 70% |

| Average across strip overlap | 54% | 57% | 73% |

| Image footprint on the ground [m] | 149 × 111 | 301 × 226 | 271 × 203 |

| GSD [m] | 0.033 | 0.063 | 0.059 |

| RMSX [m] | 0.028 | 0.050 | 0.033 |

| RMSY [m] | 0.033 | 0.047 | 0.037 |

| RMSΖ [m] | 0.046 | 0.074 | 0.039 |

| Reprojection error on all points [pix] | 0.645 | 1.106 | 0.717 |

| Reprojection error in control points [pix] | 1.48 | 0.76 | 0.77 |

| Pixel size (μm) | 1.55 | 1.55 | 1.55 |

| Total number of tie points | 28.5 K | 404 K | 71 K |

| Initial number of dense cloud points | 1.4 M | 6.5 M | 678 K |

| Average point cloud density (points/m2) | 23.3 | 5.65 | 22.6 |

| Area of the seabed used [sq. Km] | 0.06 | 1.15 | 0.03 |

| Test Site | # of Points | Point Density [Points/m2] | Average Pulse Spacing [m] | Flying Height [m] | Nominal Bathymetric Accuracy [m] |

|---|---|---|---|---|---|

| Amathouda | 6 K | 0.4 | - | 600 | 0.15 |

| Agia Napa | 1.3 M | 1.1 | 1.65 | 600 | 0.15 |

| Dekelia | 500 K | 1.1 | 1.65 | 600 | 0.15 |

| Training Site | Training Points | Training Percentage (%) | Fitting Score | Evaluation Site | Max/Min Depth of Test Site | Evaluation Points | Corrected Data | Uncorrected Data | ||

|---|---|---|---|---|---|---|---|---|---|---|

| Mean Dist. (m) | Stdev (m) | Mean Dist. (m) | Stdev (m) | |||||||

| Ag. Napa[I] | 627.552 | 5 | 0.984 | Ag. Napa[II] | 14.7/0.30 | 661.208 | −0.15 | 0.49 | 2.23 | 1.42 |

| Ag. Napa[I] | 627.552 | 30 | 0.984 | Ag. Napa[II] | 14.7/0.30 | 661.208 | −0.14 | 0.50 | 2.23 | 1.42 |

| Ag. Napa[I] | 627.552 | 5 | 0.984 | Amathouda | 5.57/0.10 | 5400 | −0.03 | 0.19 | 0.44 | 0.26 |

| Ag. Napa[I] | 627.552 | 30 | 0.984 | Amathouda | 5.57/0.10 | 5400 | −0.03 | 0.19 | 0.44 | 0.26 |

| Ag. Napa[I] | 627.552 | 5 | 0.984 | Dekelia | 10.1/0.09 | 101.887 | −0.12 | 0.25 | 1.72 | 0.76 |

| Ag. Napa[I] | 627.552 | 30 | 0.984 | Dekelia | 10.1/0.09 | 101.887 | −0.12 | 0.25 | 1.72 | 0.76 |

| Ag. Napa[I] | 627.552 | 5 | 0.984 | Dekelia (GPS) | 7.0/0.30 | 208 | −0.12 | 0.46 | −1.15 | 0.55 |

| Ag. Napa[I] | 627.552 | 30 | 0.984 | Dekelia (GPS) | 7.0/0.30 | 208 | −0.12 | 0.46 | −1.15 | 0.55 |

| Ag. Napa[II] | 661.208 | 5 | 0.967 | Ag. Napa[I] | 14.8/0.20 | 627.552 | 0.14 | 0.49 | 2.23 | 1.42 |

| Ag. Napa[II] | 661.208 | 30 | 0.967 | Ag. Napa[I] | 14.8/0.20 | 627.552 | 0.14 | 0.49 | 2.23 | 1.42 |

| Ag. Napa[II] | 661.208 | 5 | 0.967 | Amathouda | 5.57/0.10 | 5400 | 0.25 | 0.11 | 0.44 | 0.26 |

| Ag. Napa[II] | 661.208 | 30 | 0.967 | Amathouda | 5.57/0.10 | 5400 | 0.25 | 0.11 | 0.44 | 0.26 |

| Ag. Napa[II] | 661.208 | 5 | 0.967 | Dekelia | 10.1/0.09 | 101.887 | −0.23 | 0.28 | 1.72 | 0.76 |

| Ag. Napa[II] | 661.208 | 30 | 0.967 | Dekelia | 10.1/0.09 | 101.887 | −0.22 | 0.28 | 1.72 | 0.76 |

| Ag. Napa[II] | 661.208 | 5 | 0.967 | Dekelia (GPS) | 7.0/0.30 | 208 | −0.27 | 0.48 | −1.15 | 0.55 |

| Ag. Napa[II] | 661.208 | 30 | 0.967 | Dekelia (GPS) | 7.0/0.30 | 208 | −0.25 | 0.48 | −1.15 | 0.55 |

| Amathouda | 5400 | 100 | - | Ag. Napa[I] | 14.8/0.20 | 627.552 | −0.10 | 0.45 | 2.23 | 1.42 |

| Amathouda | 5400 | 100 | - | Ag. Napa[II] | 14.7/0.30 | 661.208 | −0.26 | 0.49 | 2.23 | 1.42 |

| Amathouda | 5400 | 100 | - | Dekelia | 10.1/0.09 | 101.887 | 0.02 | 0.26 | 1.72 | 0.76 |

| Amathouda | 5400 | 100 | - | Dekelia (GPS) | 7.0/0.30 | 208 | −0.02 | 0.46 | −1.15 | 0.55 |

| Merged | 11873 | 100 | - | Ag. Napa[I] | 14.8/0.20 | 627.552 | 0.13 | 0.45 | 2.23 | 1.42 |

| Merged | 11873 | 100 | - | Ag. Napa[II] | 14.7/0.30 | 661.208 | −0.06 | 0.50 | 2.23 | 1.42 |

| Merged | 11873 | 100 | - | Amathouda | 5.57/0.10 | 5400 | 0.00 | 0.18 | 0.44 | 0.26 |

| Merged | 11873 | 100 | - | Dekelia | 10.1/0.09 | 101.887 | −0.19 | 0.24 | 1.72 | 0.76 |

| Merged | 11873 | 100 | - | Dekelia(GPS) | 7.0/0.30 | 208 | −0.17 | 0.46 | −1.15 | 0.55 |

| Dekelia | 101.887 | 100 | - | Ag. Napa[I] | 14.8/0.20 | 627.552 | 0.13 | 0.51 | 2.23 | 1.42 |

| Dekelia | 101.887 | 100 | - | Ag. Napa[II] | 14.7/0.30 | 661.208 | −0.15 | 0.56 | 2.23 | 1.42 |

| Dekelia | 101.887 | 100 | - | Amathouda | 5.57/0.10 | 5400 | −0.21 | 0.18 | 0.44 | 0.26 |

| Overall Average | −0.068 | 0.366 | 1.013 | 0.844 | ||||||

| Stdev | 0.149 | 0.142 | 1.307 | 0.461 | ||||||

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sens. 2019, 11, 2225. https://doi.org/10.3390/rs11192225

Agrafiotis P, Skarlatos D, Georgopoulos A, Karantzalos K. DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sensing. 2019; 11(19):2225. https://doi.org/10.3390/rs11192225

Chicago/Turabian StyleAgrafiotis, Panagiotis, Dimitrios Skarlatos, Andreas Georgopoulos, and Konstantinos Karantzalos. 2019. "DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds" Remote Sensing 11, no. 19: 2225. https://doi.org/10.3390/rs11192225

APA StyleAgrafiotis, P., Skarlatos, D., Georgopoulos, A., & Karantzalos, K. (2019). DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sensing, 11(19), 2225. https://doi.org/10.3390/rs11192225