Development of an Operational Algorithm for Automated Deforestation Mapping via the Bayesian Integration of Long-Term Optical and Microwave Satellite Data

Abstract

1. Introduction

- (1) Scalable: It can flexibly integrate any available data, regardless of the region of interest.

- (2) Best effort: Operation can proceed even when some input data are missing.

- (3) Robust: It is not very sensitive to sensor noise, cloud/cloud-shadow contamination, or seasonal changes in deciduous forest.

- (4) Near-real-time: It can provide a deforestation map soon after a new satellite image is obtained. In other words, a computationally expensive algorithm, or one that requires recalculating the full set of historical images, is undesirable.

- (5) Accessible: It relies substantially on free and open or cost-effective data, or its program is written with open-source software (e.g., Python, Bash, GRASS GIS).

2. Materials and Methods

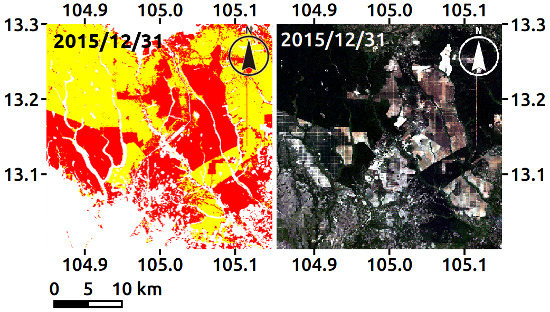

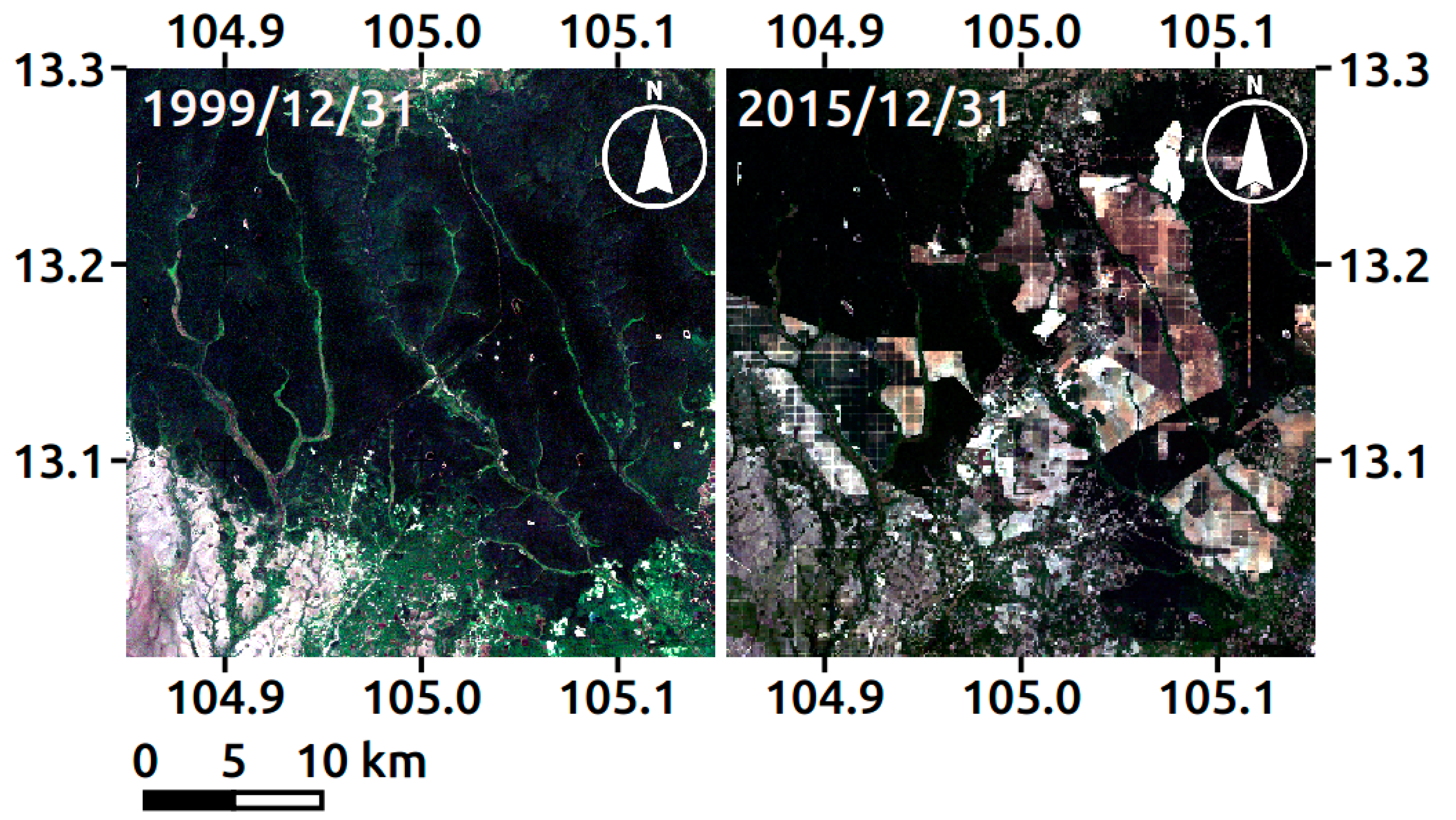

2.1. Study Site and Target Period

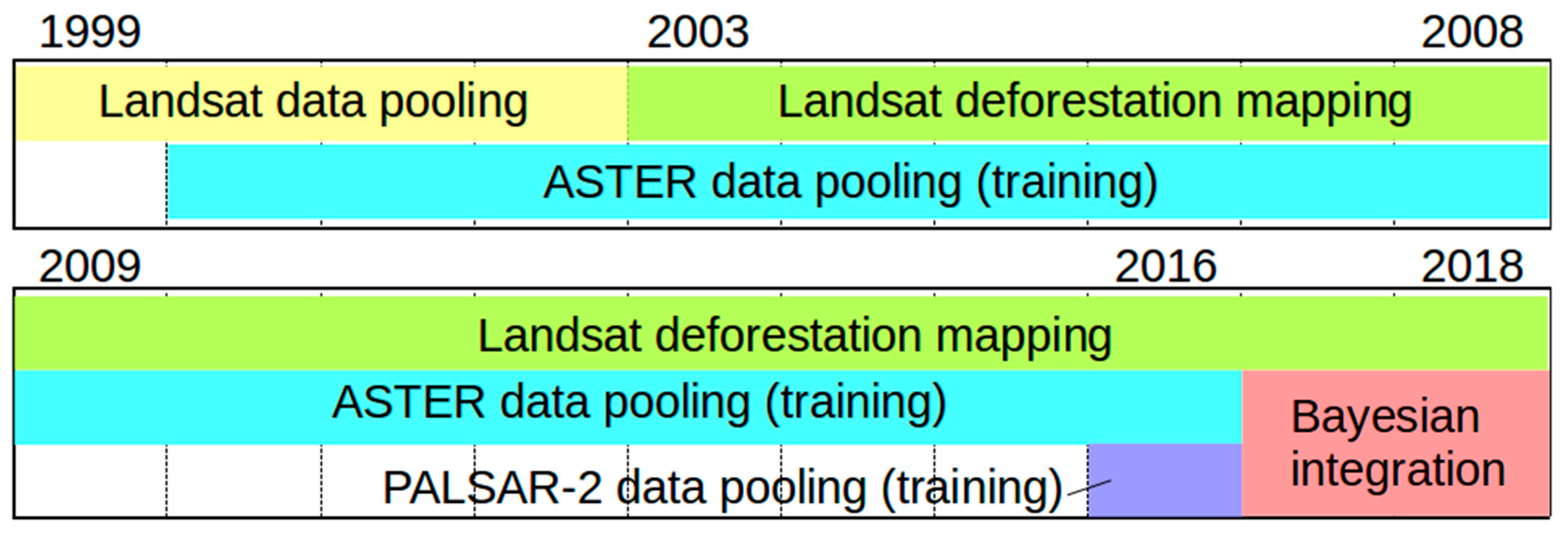

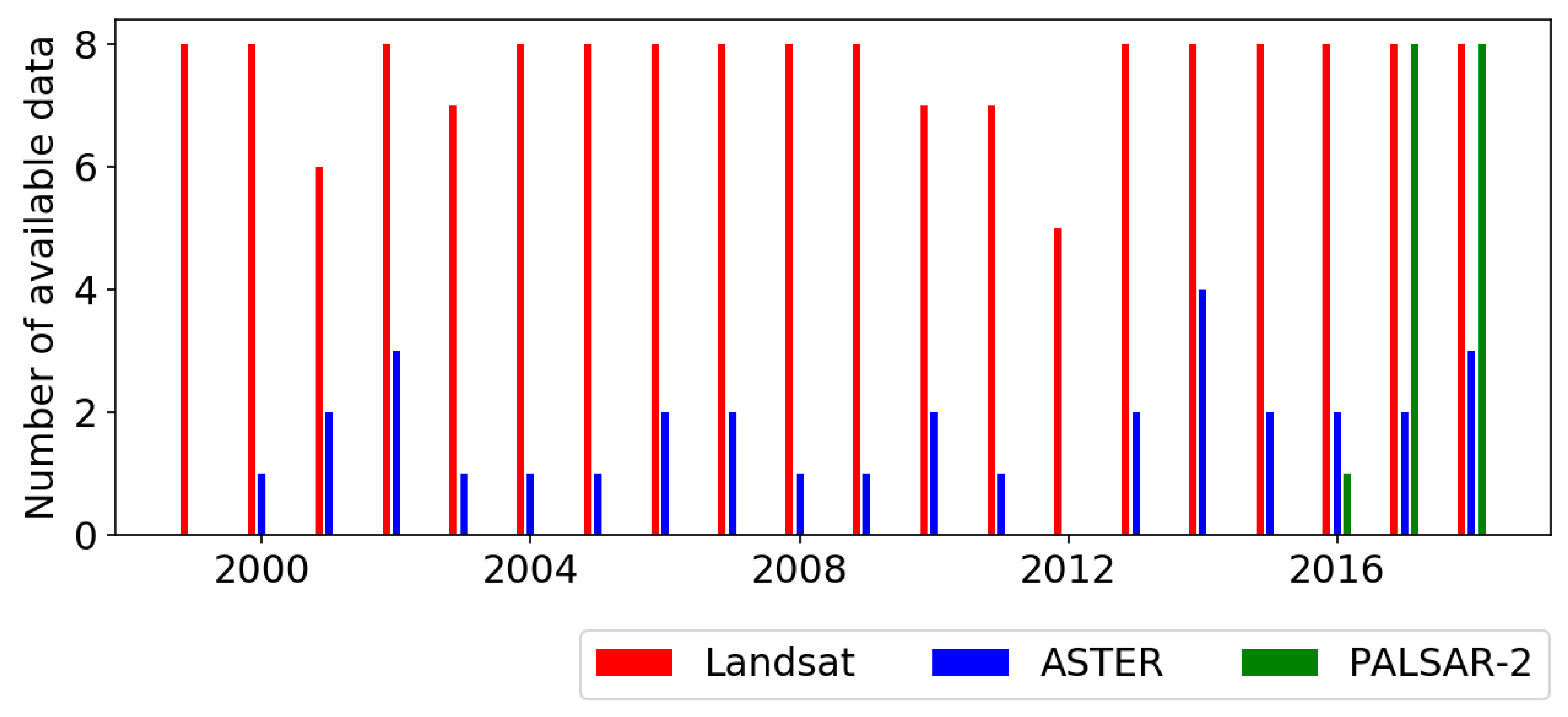

2.2. Satellite Data and Preprocessing

2.2.1. Landsat Series

2.2.2. ASTER

2.2.3. PALSAR-2

2.3. Reference Data

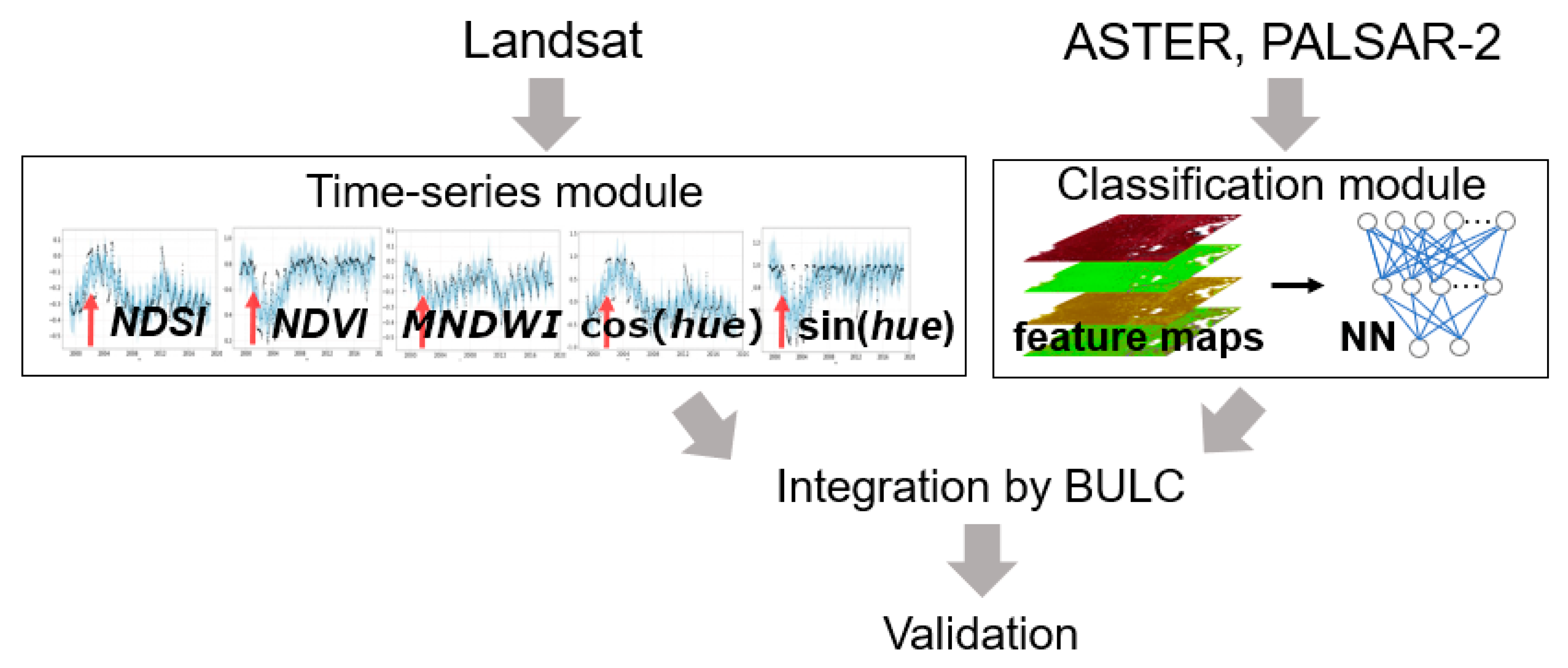

2.4. Algorithm

2.4.1. Time-Series Module

- Calculate the mean $\mu_f$ and standard deviation $\sigma_f$ of each index $f$ over days 1, 2, …, t − 1.

- Flag the pixel (i, j) if $f(t, i, j)$ satisfies either of the following conditions:

  $$f(t, i, j) < \mu_f - 2\sigma_f \quad (f: \text{NDVI}, \text{MNDWI}, \sin(\text{hue}))$$

  $$f(t, i, j) > \mu_f + 2\sigma_f \quad (f: \text{NDSI}, \cos(\text{hue}))$$

- Classify the pixel as deforested if more than four indices are flagged.
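The three steps above can be sketched for a single pixel as follows. This is a minimal illustration and not the authors' implementation: the function names, the dictionary layout, the key names `sin_hue` and `cos_hue`, and the use of the population standard deviation are all assumptions made for the example. Because five indices are listed, "more than four" means that all five must be flagged.

```python
from statistics import mean, pstdev

# Indices flagged when they fall below the historical mean,
# and indices flagged when they rise above it.
LOWER_TAIL = ("NDVI", "MNDWI", "sin_hue")
UPPER_TAIL = ("NDSI", "cos_hue")


def flag_indices(history, current, k=2.0):
    """Return the names of the indices whose day-t value is anomalous.

    history: dict mapping index name -> list of values on days 1..t-1
    current: dict mapping index name -> value on day t
    k: width of the tolerance band in standard deviations (2 in the text)
    """
    flagged = set()
    for name in LOWER_TAIL + UPPER_TAIL:
        mu = mean(history[name])
        sigma = pstdev(history[name])  # assumption: population std. dev.
        value = current[name]
        if name in LOWER_TAIL and value < mu - k * sigma:
            flagged.add(name)
        elif name in UPPER_TAIL and value > mu + k * sigma:
            flagged.add(name)
    return flagged


def is_deforested(history, current, min_flags=4):
    """True if more than `min_flags` indices are flagged."""
    return len(flag_indices(history, current)) > min_flags


# Hypothetical values for one pixel (not taken from the study).
history = {
    "NDVI": [0.80, 0.82, 0.78, 0.80],
    "MNDWI": [-0.30, -0.28, -0.32, -0.30],
    "sin_hue": [0.50, 0.52, 0.48, 0.50],
    "NDSI": [-0.40, -0.38, -0.42, -0.40],
    "cos_hue": [0.20, 0.22, 0.18, 0.20],
}
current = {"NDVI": 0.30, "MNDWI": -0.60, "sin_hue": 0.10,
           "NDSI": 0.10, "cos_hue": 0.70}
```

Only the history up to day t − 1 is needed for each new image, so the statistics could be kept as running sums rather than recomputed from the full archive; that choice is left open here.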

2.4.2. Classification Module

2.4.3. Postintegration of Multiple Deforestation Maps

- Cloud-free data should come last.

- High-resolution data should come last.
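The two ordering rules above can be expressed as a sort key. The sketch below is only an illustration: the `DeforestationMap` class, its fields, and the sample values are hypothetical, and giving cloud cover precedence over resolution when the two rules disagree is an assumption, since no tie-breaking rule is stated here.

```python
from dataclasses import dataclass


@dataclass
class DeforestationMap:
    """Hypothetical container for one per-sensor deforestation map."""
    name: str
    cloud_fraction: float  # 0.0 means cloud-free
    resolution_m: float    # smaller value means finer resolution


def integration_order(maps):
    """Order maps so that cloud-free, high-resolution data come last.

    Assumption: cloud cover is compared first, resolution second.
    """
    return sorted(maps, key=lambda m: (-m.cloud_fraction, -m.resolution_m))


# Hypothetical maps, given in arbitrary order.
maps = [
    DeforestationMap("C", cloud_fraction=0.0, resolution_m=15.0),
    DeforestationMap("A", cloud_fraction=0.4, resolution_m=30.0),
    DeforestationMap("B", cloud_fraction=0.0, resolution_m=30.0),
]
ordered_names = [m.name for m in integration_order(maps)]
```

With these sample values the cloudy map A is placed first, and the cloud-free 15 m map C is placed last.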

2.5. Validation and Evaluation

3. Results

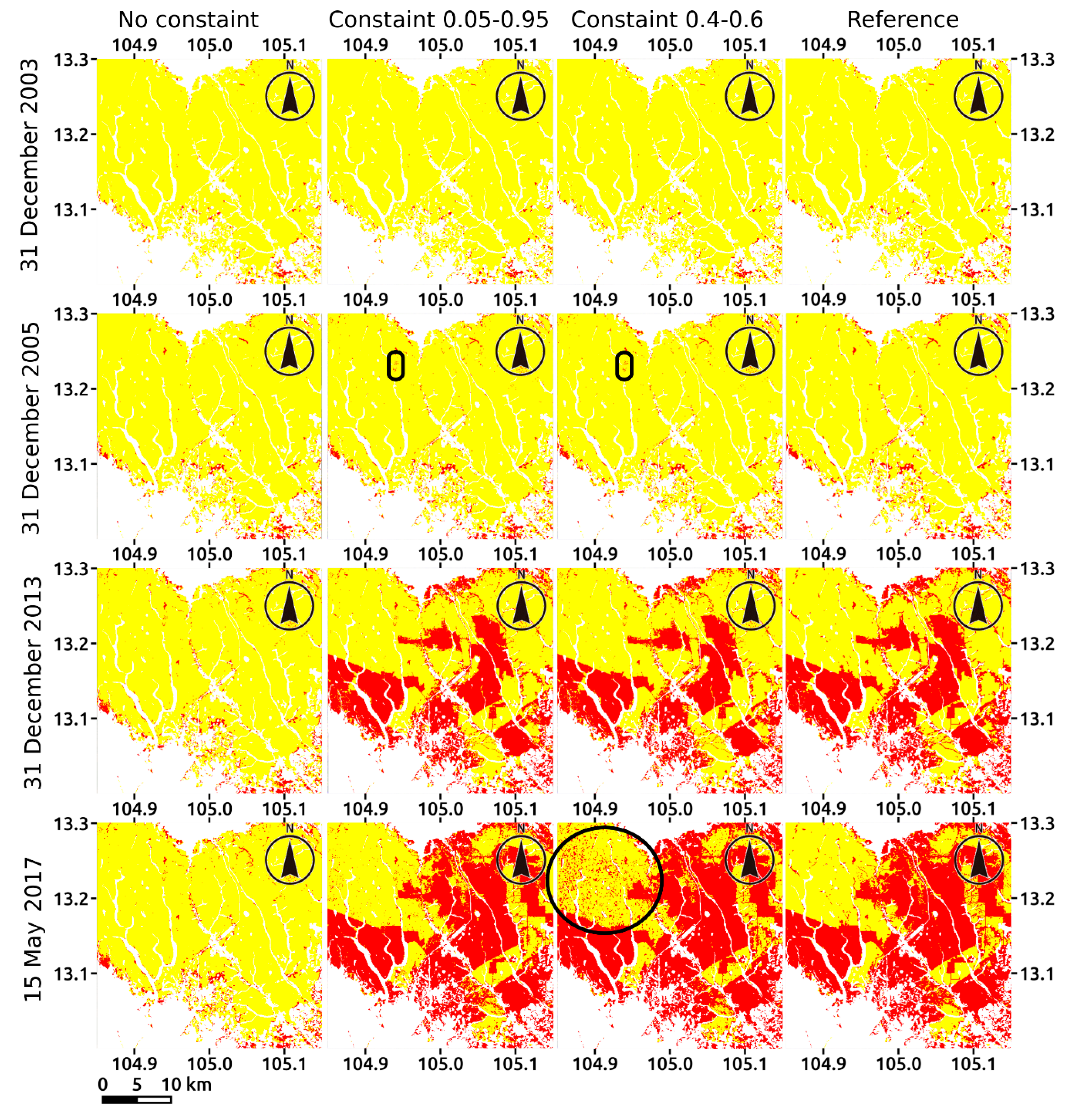

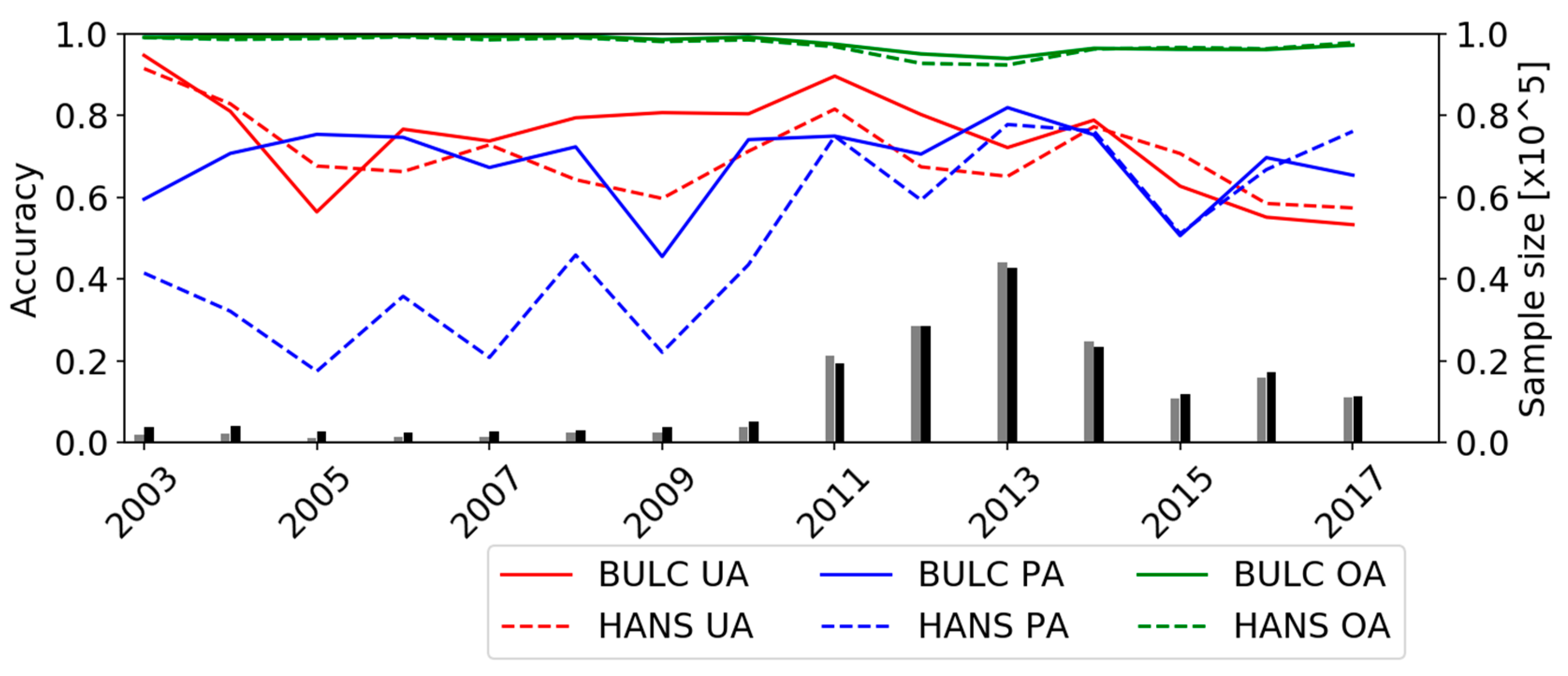

3.1. Effect of the Constraint of Posterior Probability

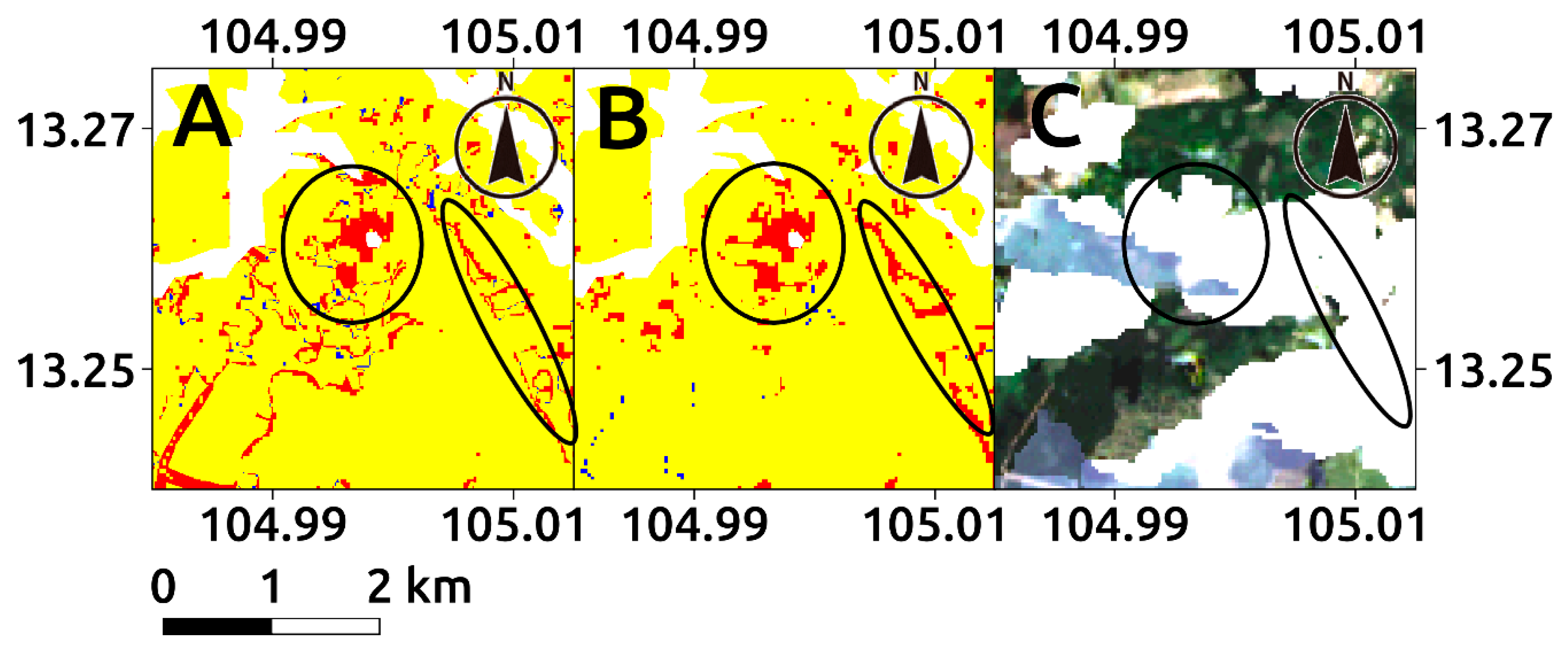

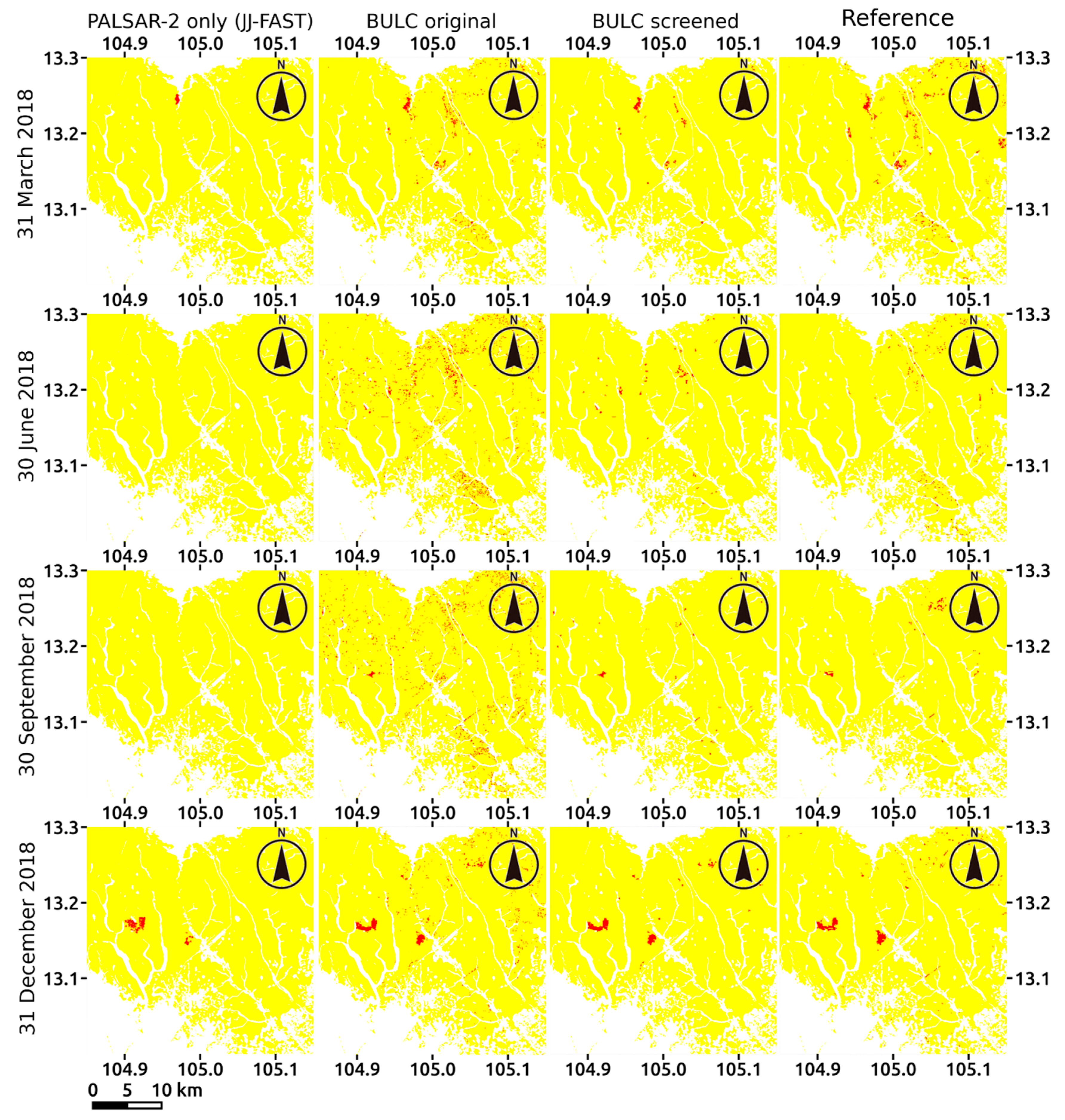

3.2. Effect of Sensor Integration

4. Discussion

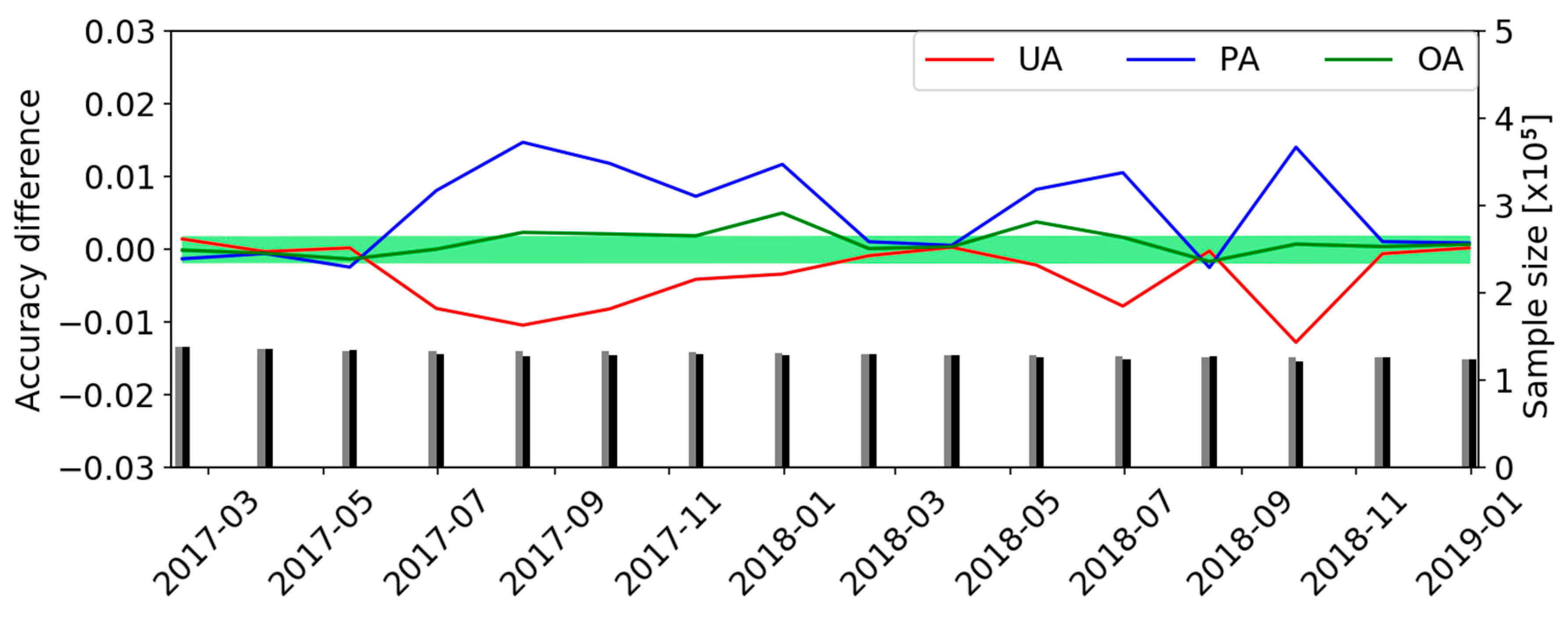

4.1. Accuracy of the Integration Map

4.2. Comparison with Other Algorithms

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Landsat | ASTER | PALSAR-2 |
|---|---|---|---|
| Duration | 1999–2018 | 2000–2018 | 2016–2018 |
| Original resolution | 30 m | 15 m | 50 m |
| Band used | Optical | Optical | Microwave |
| Calculated features | NDVI, MNDWI, NDSI, sin(hue), cos(hue) | red, green, blue, sin(hue), cos(hue), saturation, value, NDVI, NDWI, GRVI, NDVI-Entropy, NDVI-Correlation, NDVI-ASM, NDVI-Contrast | γ⁰HH, γ⁰HV, sin(hue), cos(hue), saturation, value, γ⁰HV-Entropy, γ⁰HV-Correlation, γ⁰HV-ASM, γ⁰HV-Contrast |

| Hyperparameter | Setting |
|---|---|
| Activation function | ReLU |
| Cost function | Cross entropy |
| Regularization rate (L1 regularization) | 0.0005 |
| Dropout rate | 0.15 |
| Minibatch size | 512 |
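To show how the settings in the table above fit together, the following sketch computes a cross-entropy cost with an L1 penalty in plain Python. It is not the authors' network: the two-class (binary) form of the cross entropy, the function names, and the `HYPERPARAMS` dictionary are assumptions for illustration, and dropout and minibatching are listed only as configuration values.

```python
import math

# Settings copied from the table; the key names are hypothetical.
HYPERPARAMS = {
    "activation": "relu",
    "cost": "cross_entropy",
    "l1_rate": 0.0005,
    "dropout_rate": 0.15,
    "minibatch_size": 512,
}


def relu(x):
    """Rectified linear unit: passes positive inputs, zeroes the rest."""
    return max(0.0, x)


def cross_entropy(p_pred, y_true, eps=1e-12):
    """Binary cross entropy for one sample (assumes two classes)."""
    p = min(max(p_pred, eps), 1.0 - eps)  # avoid log(0)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def regularized_cost(preds, labels, weights, l1_rate=HYPERPARAMS["l1_rate"]):
    """Mean cross entropy over a minibatch plus an L1 weight penalty."""
    data_term = sum(cross_entropy(p, y) for p, y in zip(preds, labels)) / len(preds)
    penalty = l1_rate * sum(abs(w) for w in weights)
    return data_term + penalty
```

For a single sample predicted at 0.5 with two weights of magnitude 1, the cost is ln 2 plus a penalty of 0.0005 × 2 = 0.001, which shows how small the L1 term is relative to the data term at this regularization rate.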

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mizuochi, H.; Hayashi, M.; Tadono, T. Development of an Operational Algorithm for Automated Deforestation Mapping via the Bayesian Integration of Long-Term Optical and Microwave Satellite Data. Remote Sens. 2019, 11, 2038. https://doi.org/10.3390/rs11172038
