An Accurate Visual-Inertial Integrated Geo-Tagging Method for Crowdsourcing-Based Indoor Localization

Abstract

1. Introduction

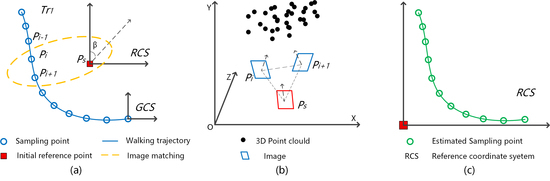

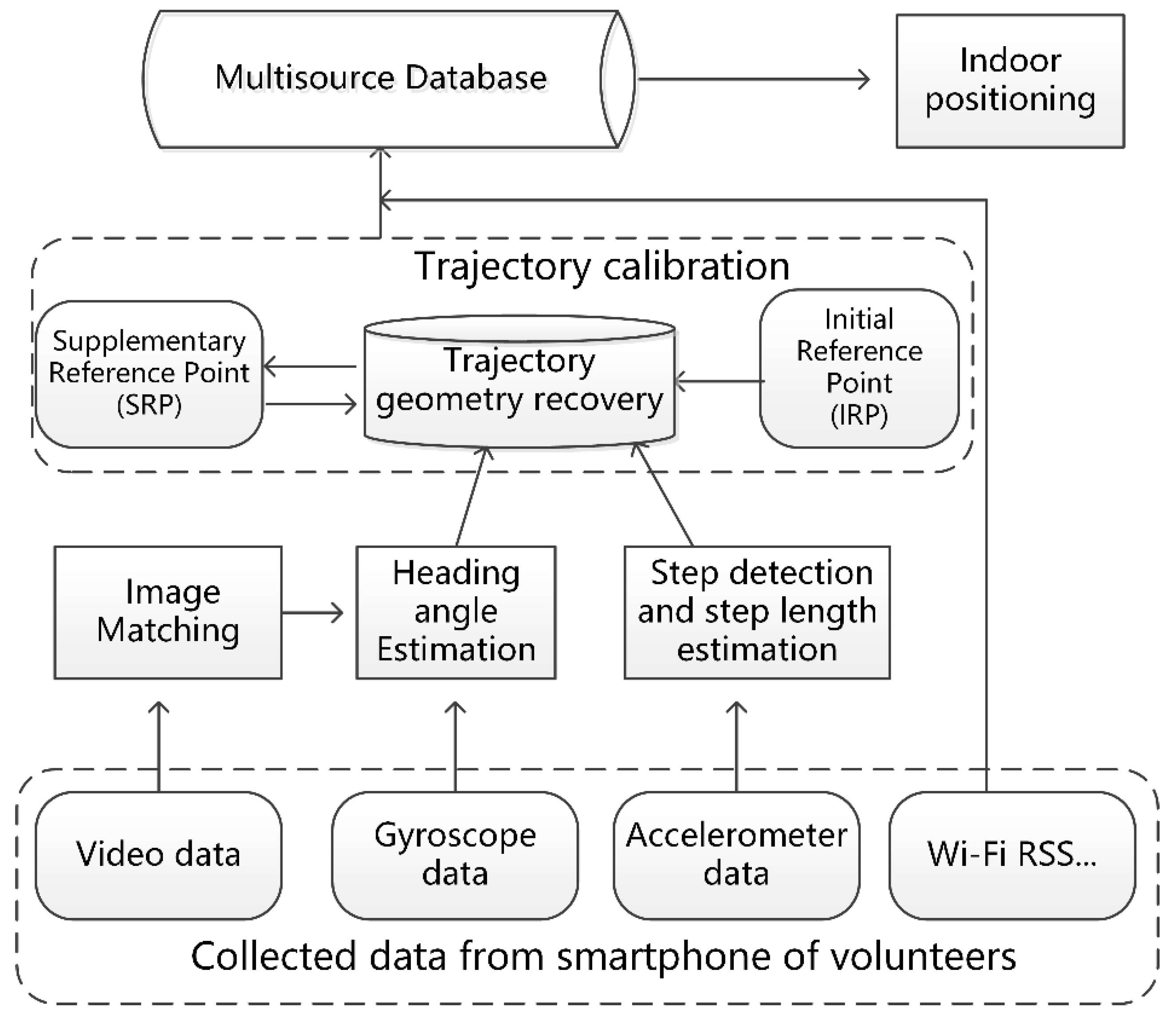

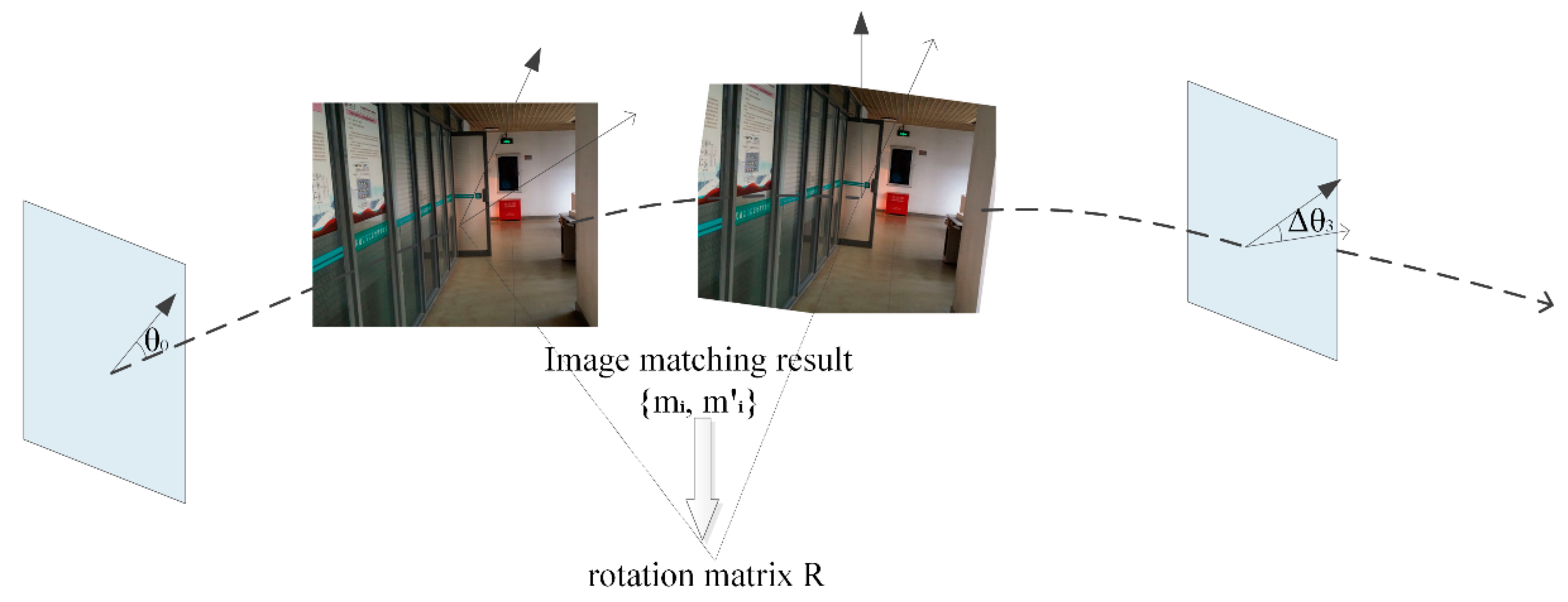

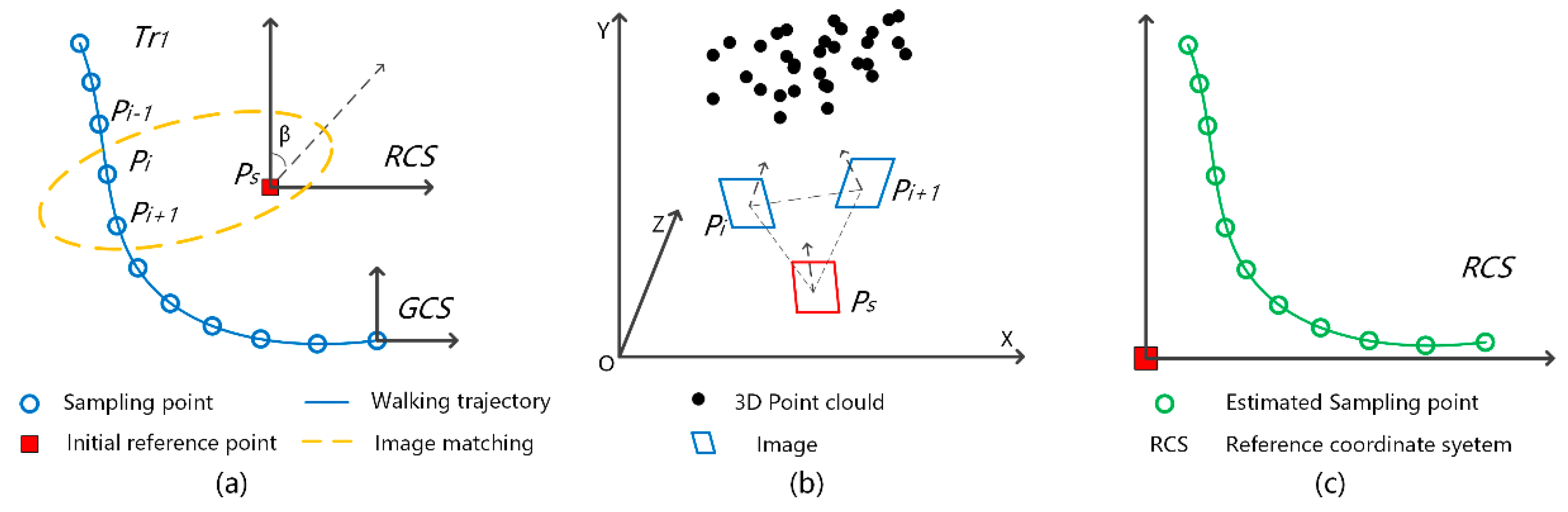

2. Visual Based Trajectory Geometry Recovery

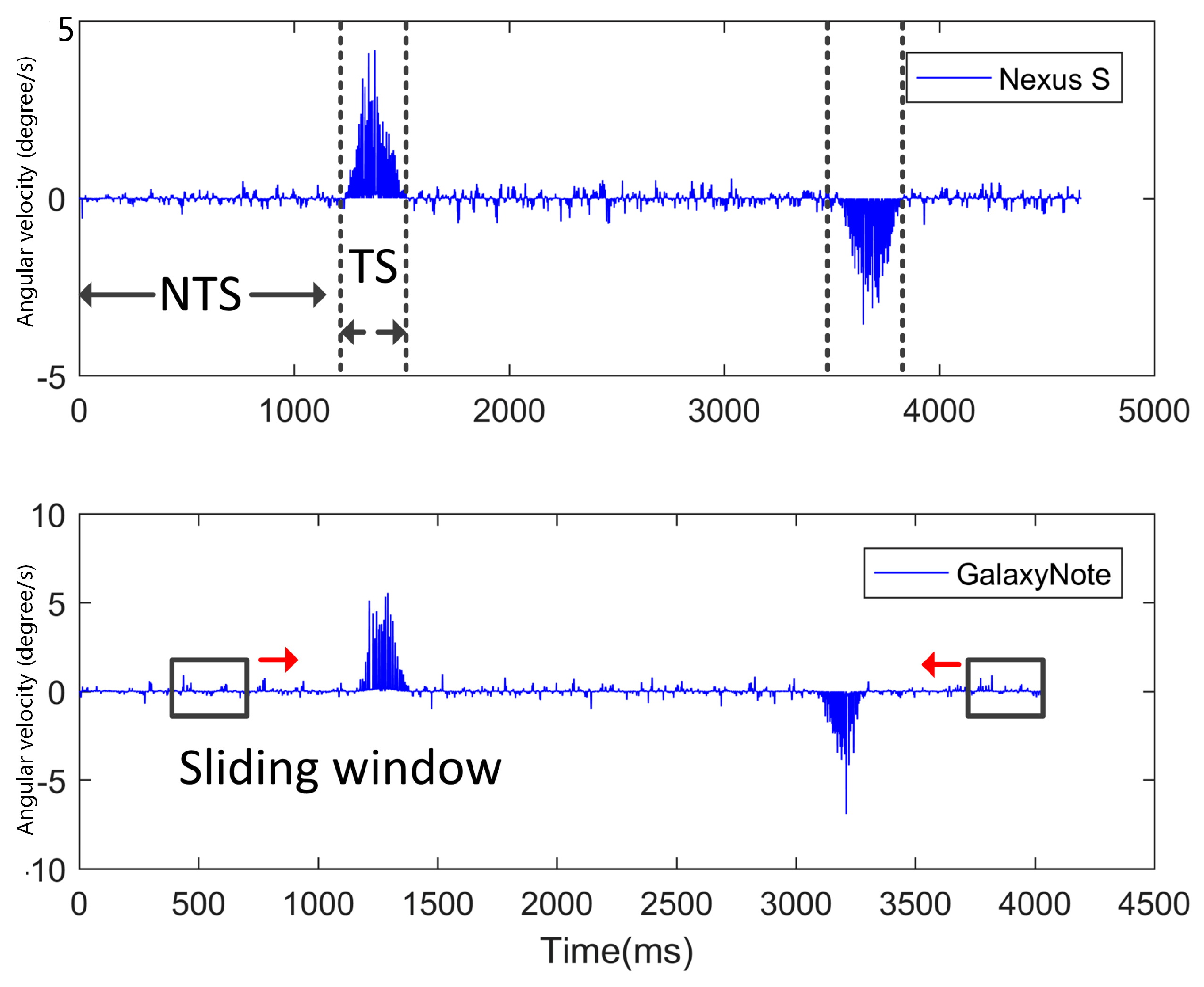

2.1. Heading Angle Estimation

2.2. Trajectory Geometry Recovery

| Algorithm 1 Sliding-window filter-based turning moment detection |

| Input: gyroscope angles |

| Input: sliding window |

| Output: Turn[,]; //a two-dimensional vector which records the starting and ending moment of each TS segment of a trajectory |

| definition: size_win; //the size of the sliding window |

| count(); // The function to count the number of positive values or negative values |

| Pair(); // The function to find the starting and ending moment of each TS segment |

| Turn_S=[]; // The vector to record the candidate moments of start turning |

| Turn_E=[];// The vector to record the candidate moments of end turning |

| Np=0;// the number of angular velocity readings which are higher than 0 |

| Ne=0;// the number of angular velocity readings which are below 0 |

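| for i=1:(length(gyroscope angle)-size_win+1) |

| sliding window=gyroscope angle[i,(i+size_win-1)]; // window of size_win readings starting at i |

| Np=count(sliding window>0); // number of positive readings in the window |

| Ne=count(sliding window<0); // number of negative readings in the window |

| if Np==size_win || Ne==size_win |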

| Turn_S.add(i); |

| end if |

| end for |

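| for i=length(gyroscope angle):-1:size_win |

| sliding window=gyroscope angle[(i-size_win+1),i]; // window of size_win readings ending at i |

| Np=count(sliding window>0); // number of positive readings in the window |

| Ne=count(sliding window<0); // number of negative readings in the window |

| if Np==size_win || Ne==size_win |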

| Turn_E.add(i); |

| end if |

| end for |

| Turn=Pair(Turn_S, Turn_E); |
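The sliding-window test in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: indices are zero-based, and `pair_turns` is a hypothetical stand-in for `Pair()`, which the pseudocode does not define. Here it is assumed to pair the first index of each run of consecutive start candidates with the last index of the corresponding run of end candidates.

```python
from typing import List, Sequence, Tuple


def same_sign(window: Sequence[float]) -> bool:
    """True if every reading in the window is positive, or every one is negative."""
    return all(v > 0 for v in window) or all(v < 0 for v in window)


def runs(indices: Sequence[int]) -> List[List[int]]:
    """Group sorted indices into runs of consecutive integers."""
    groups: List[List[int]] = []
    for idx in indices:
        if groups and idx == groups[-1][-1] + 1:
            groups[-1].append(idx)
        else:
            groups.append([idx])
    return groups


def pair_turns(starts: Sequence[int], ends: Sequence[int]) -> List[Tuple[int, int]]:
    """Assumed pairing rule: first start of each run with last end of the matching run."""
    return [(s[0], e[-1]) for s, e in zip(runs(starts), runs(ends))]


def detect_turns(gyro: Sequence[float], size_win: int) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs of turning segments in a gyroscope series."""
    n = len(gyro)
    # Forward pass: i is a start candidate if the window beginning at i is one-signed.
    starts = [i for i in range(n - size_win + 1)
              if same_sign(gyro[i:i + size_win])]
    # Backward pass: i is an end candidate if the window ending at i is one-signed.
    ends = [i for i in range(n - 1, size_win - 2, -1)
            if same_sign(gyro[i - size_win + 1:i + 1])]
    return pair_turns(starts, sorted(ends))


readings = [0, 0, 0.5, 0.6, 0.7, 0.4, 0, 0, -0.3, -0.5, -0.6, 0, 0]
print(detect_turns(readings, size_win=3))
```

With real sensor data, readings are rarely exactly zero while walking straight, so a small dead band around zero would likely be needed before the sign test; the threshold of 0 is kept here only because the pseudocode uses it.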

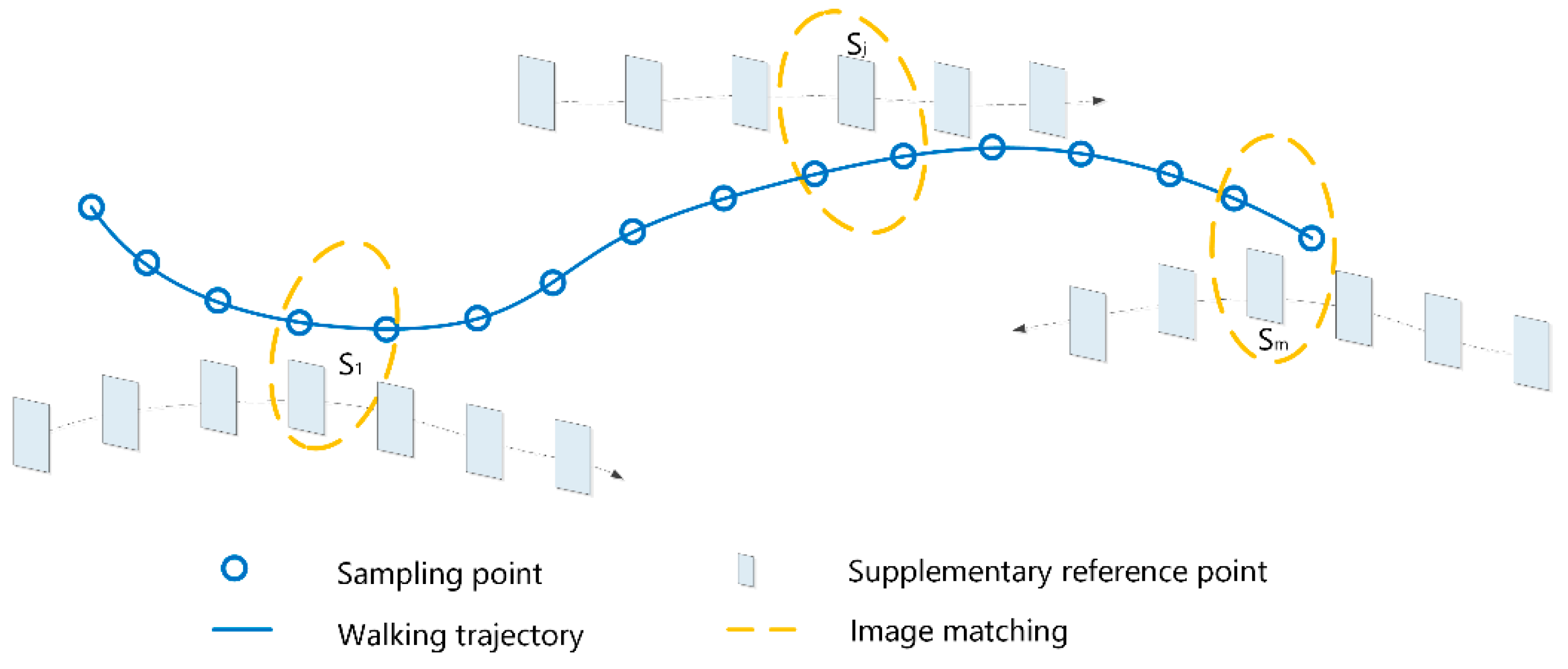

3. Trajectory Calibration and Geo-Tagging

3.1. Indoor Reference Coordinate System

3.2. Geo-Tagging Sampling Points in Reference Coordinate System

| Algorithm 2 Trajectory estimation in the RCS |

| input: N trajectories with recovered geometry |

| input: IRP[] //initial reference point |

| output: Estimated trajectories in the RCS |

| definition: Multi-IM() is the multi-constrained image-matching function, which returns the number of matched keypoints. |

| BA() is a bundle adjustment function which returns the location of two adjacent sampling points relative to an IRP |

| SRP=[]; // supplementary reference point |

| Label_trajectory=[]; //label a trajectory if it has been estimated in the RCS |

| while true |

| for ss=1:length(IRP) |

| for i=1 to N |

| if i does not exist in Label_trajectory |

| NUM=the number of sampling points of trajectory{i} |

| candidate=[]; |

| for k=1 to NUM |

| n=Multi-IM(point{k}, IRP{ss}); // returns the number of matched keypoints |

| if n>r //the number of matched keypoints is higher than threshold r |

| candidate.add(point{k}); |

| end if |

| if SRP.size>0 |

| n=Multi-IM(point{k}, SRP); |

| if n>r //the number of matched keypoints is higher than threshold r |

| candidate.add(point{k}); |

| end if |

| end if |

| end for |

| flag=0; //label whether the two sampling points have been estimated in the RCS |

| for j=1:length(candidate) |

| if points k and (k+1) exist in candidate[] |

| dist= || point{k}, point{k+1} ||; //calculate the distance between point{k} and point{k+1} |

| BA(point{k}, point{k+1}, dist, IRP); //calculate the relative location of point{k} and point{k+1} by using the bundle adjustment function |

| flag=1; |

| end if |

| if flag==1 |

| estimate the trajectory{i} in the RCS; |

| SRP.add (sampling points of trajectory{i}); |

| Label_trajectory.add(i); |

| break; |

| end if |

| end for |

| candidate.clear(); |

| end if |

| end for |

| end for |

| if Label_trajectory.size==N // every trajectory has been estimated in the RCS |

| break; |

| end if |

| end while |
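The control flow of Algorithm 2 can be sketched in Python as below. This is a simplified illustration under stated assumptions, not the authors' implementation: `match_count` is a hypothetical stand-in for `Multi-IM()`, the bundle adjustment step `BA()` is omitted entirely, and a trajectory is treated as anchored in the RCS as soon as two adjacent sampling points both match a reference point. Unlike the `while true` loop in the pseudocode, the sketch also stops when a full pass anchors nothing new, so it cannot loop forever on a trajectory that never matches.

```python
from typing import Callable, Dict, List, Sequence


def propagate_references(
    trajectories: Dict[str, List[str]],      # trajectory id -> ordered sampling point ids
    irps: Sequence[str],                     # initial reference points
    match_count: Callable[[str, str], int],  # hypothetical stand-in for Multi-IM()
    r: int,                                  # matched-keypoint threshold
) -> List[str]:
    """Return trajectory ids in the order they are anchored in the RCS."""
    references = list(irps)  # IRPs, extended with SRPs as trajectories are anchored
    anchored: List[str] = []
    progress = True
    while progress and len(anchored) < len(trajectories):
        progress = False
        for tid, points in trajectories.items():
            if tid in anchored:
                continue
            # A sampling point is a candidate if it matches any current reference.
            matched = [any(match_count(p, ref) > r for ref in references)
                       for p in points]
            # Two adjacent candidates are required; BA() would run here.
            if any(a and b for a, b in zip(matched, matched[1:])):
                anchored.append(tid)
                references.extend(points)  # its sampling points become SRPs
                progress = True
    return anchored


# Illustrative match table; the counts are made up for the example.
table = {("a1", "irp"): 50, ("a2", "irp"): 40, ("b1", "a3"): 30, ("b2", "a3"): 35}
order = propagate_references(
    {"A": ["a1", "a2", "a3"], "B": ["b1", "b2"], "C": ["c1", "c2"]},
    irps=["irp"],
    match_count=lambda p, ref: table.get((p, ref), 0),
    r=20,
)
print(order)
```

In the example, trajectory A is anchored through the IRP, B is then anchored through A's sampling points acting as SRPs, and C is left out because it matches no reference.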

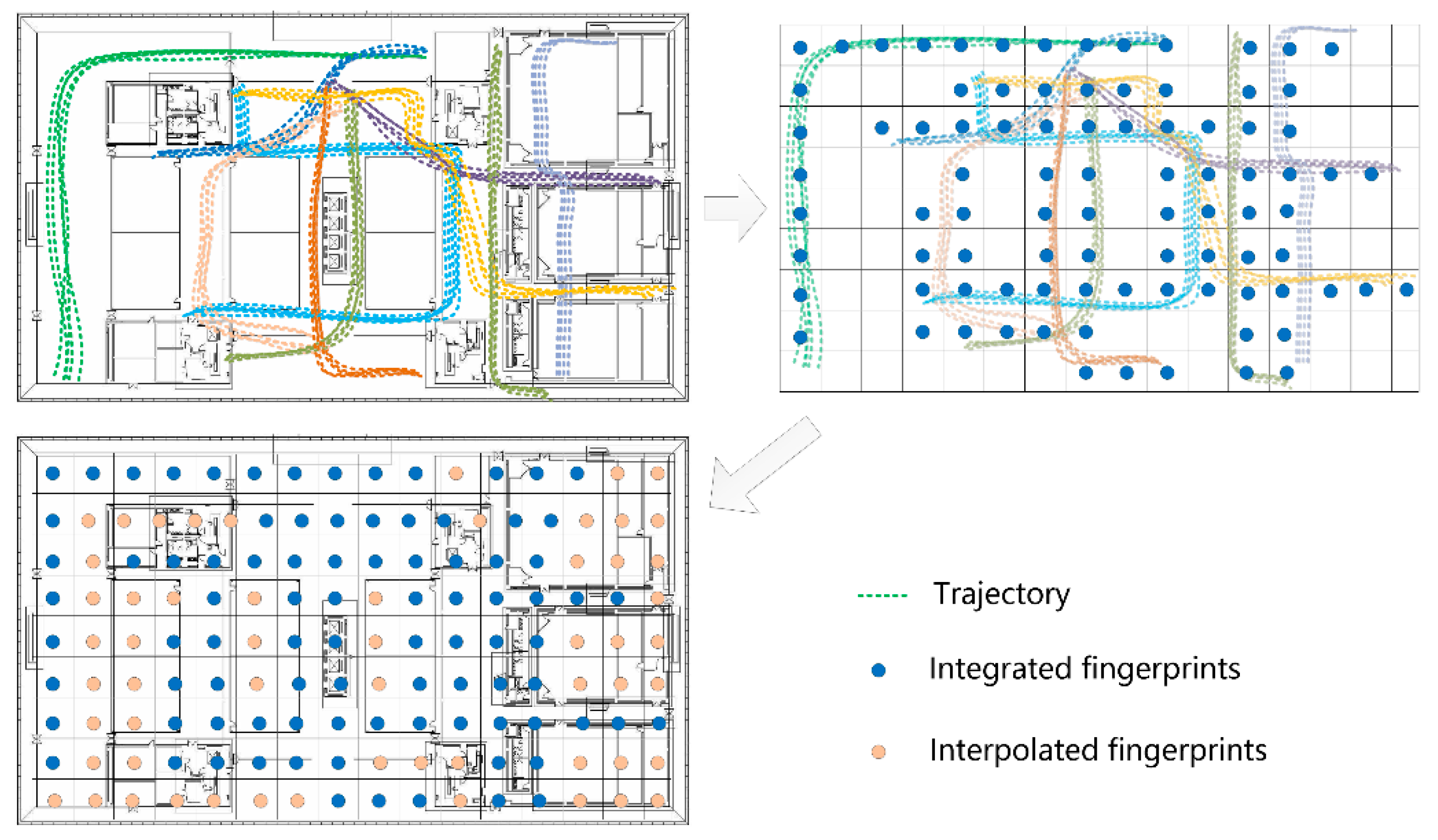

3.3. Generating Multi-Source Datasets for Indoor Positioning

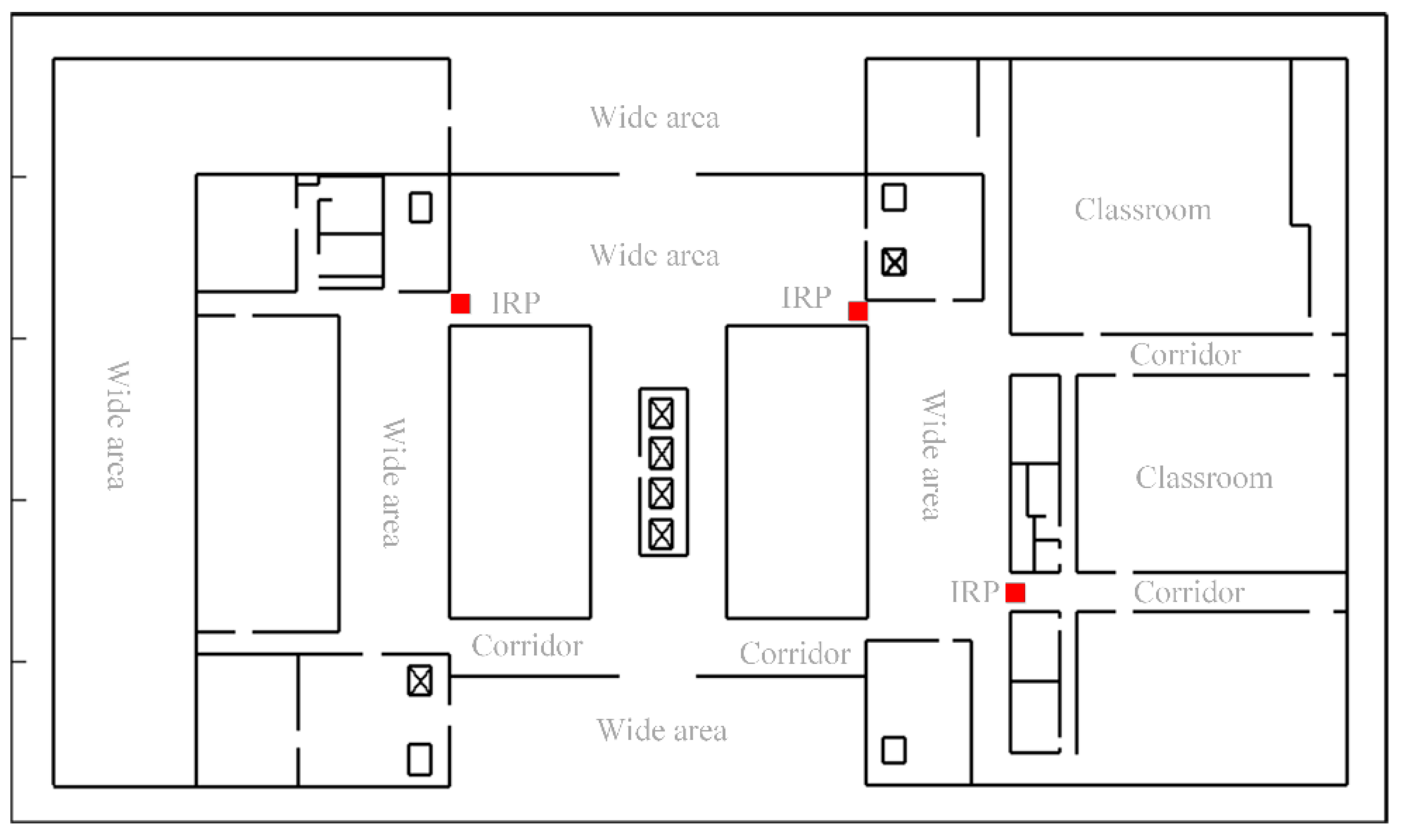

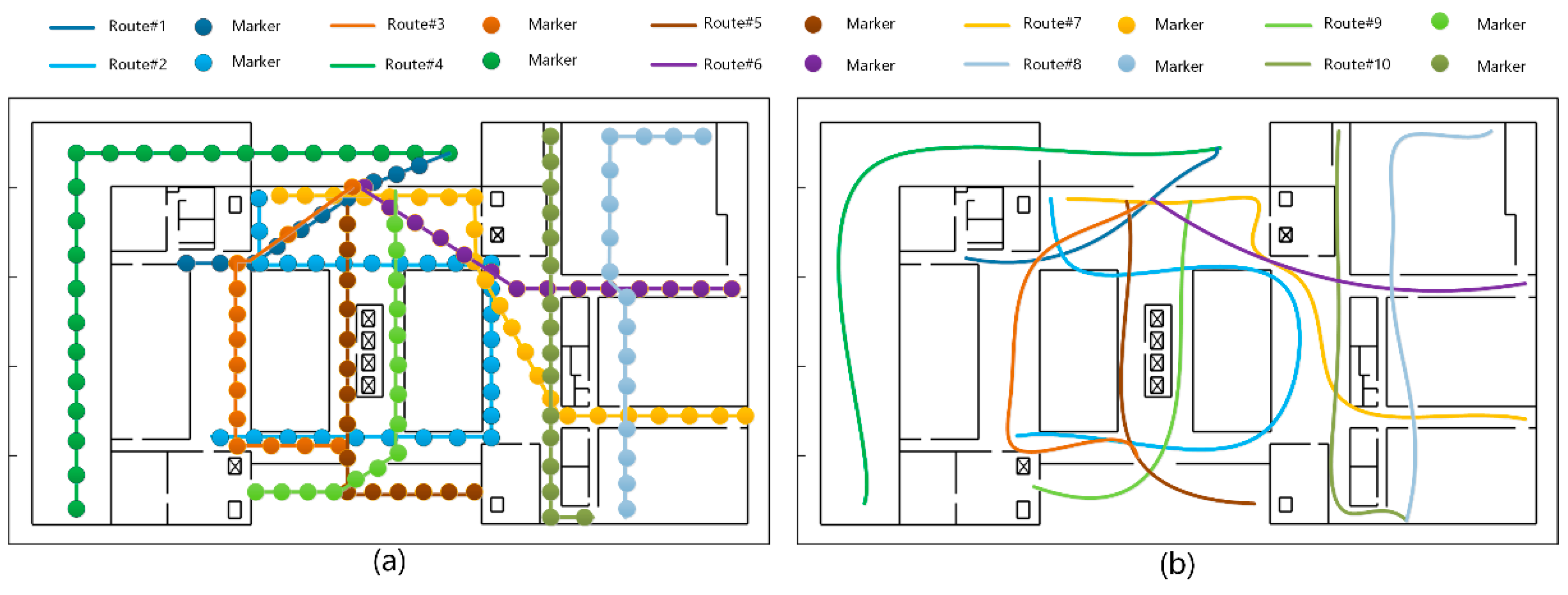

4. Evaluation

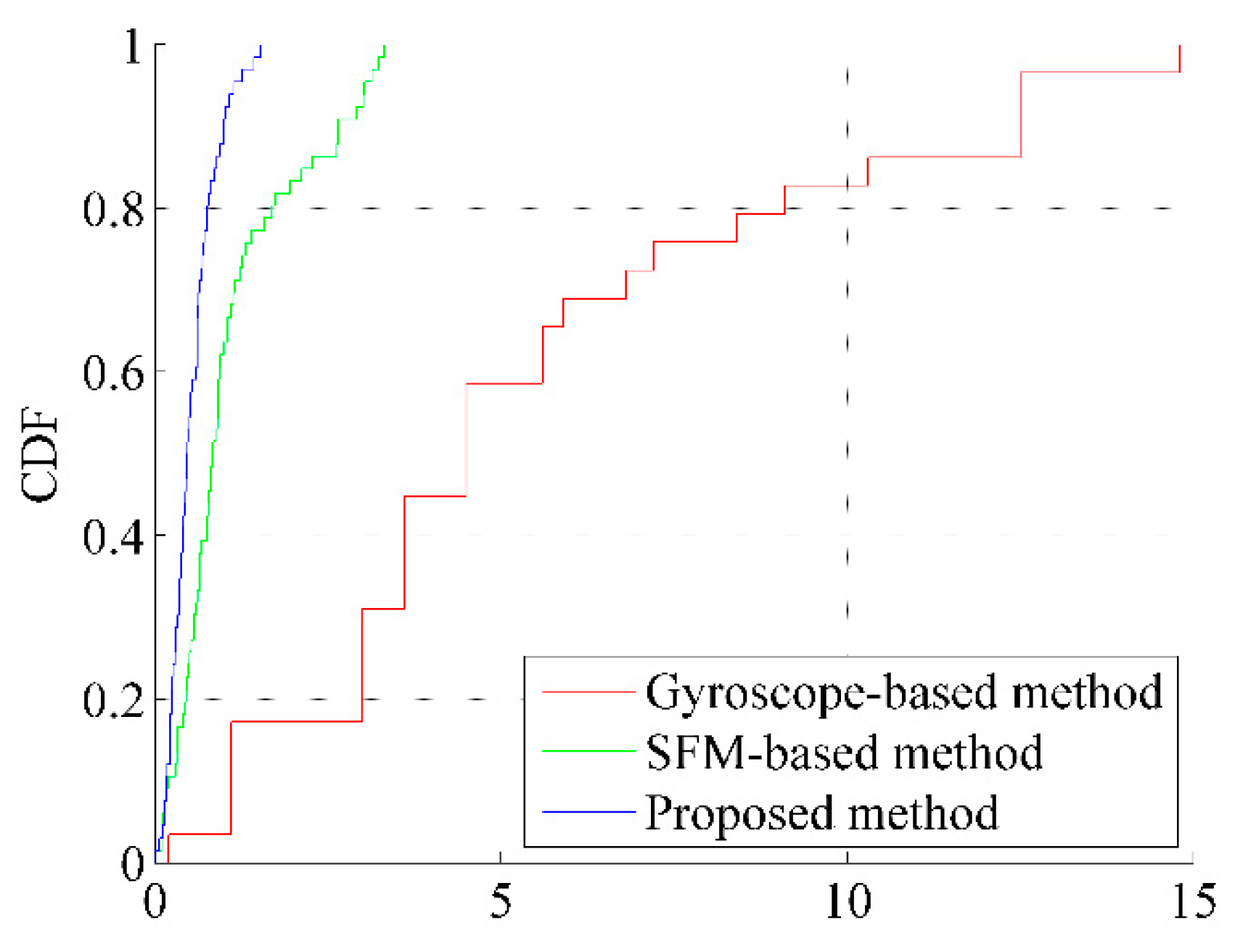

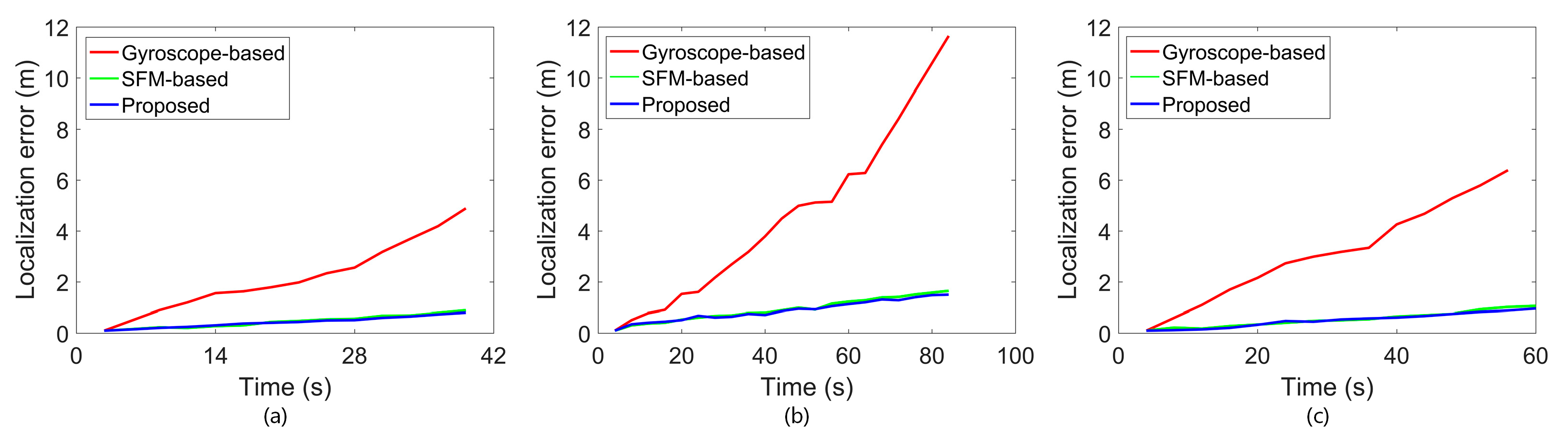

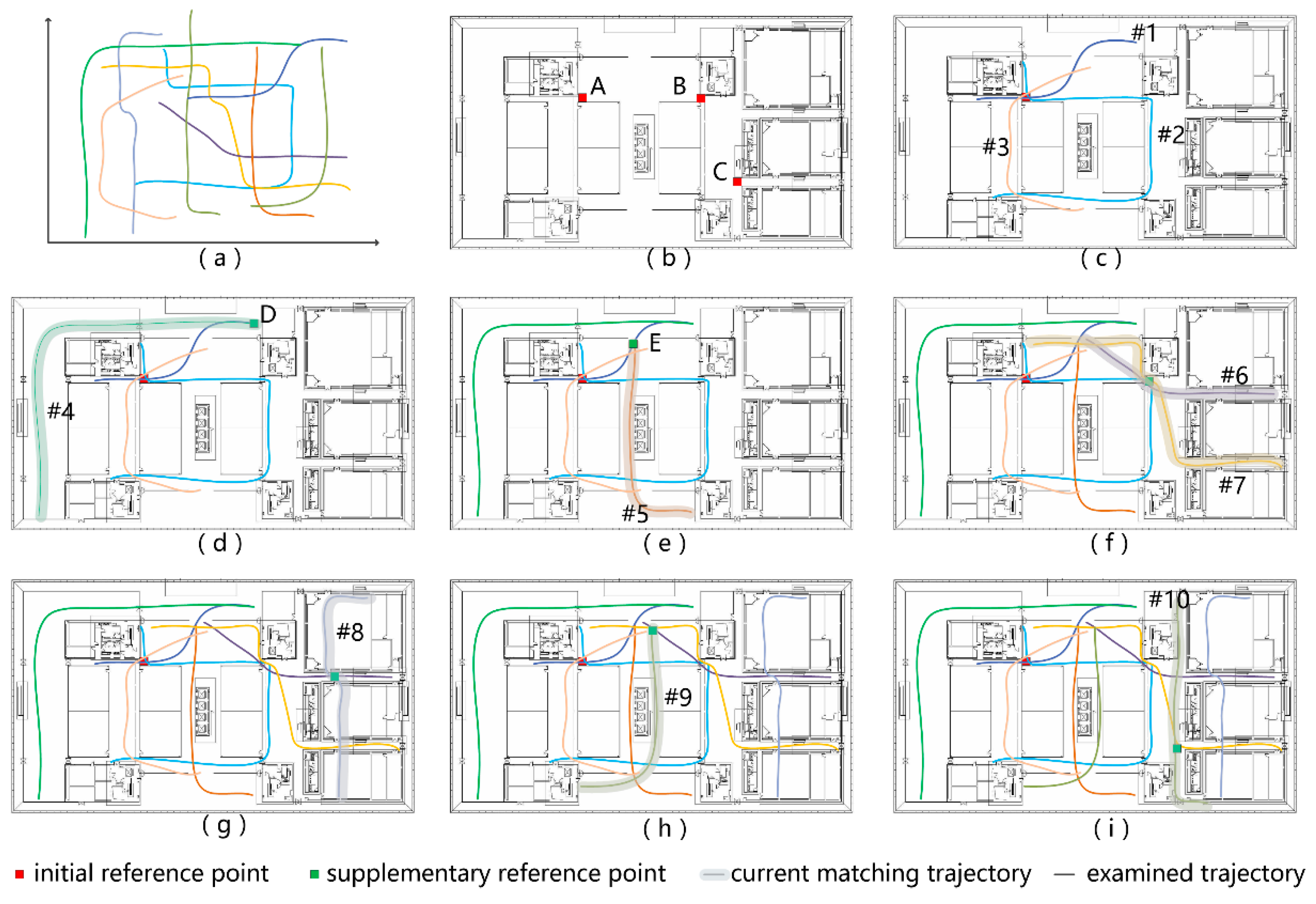

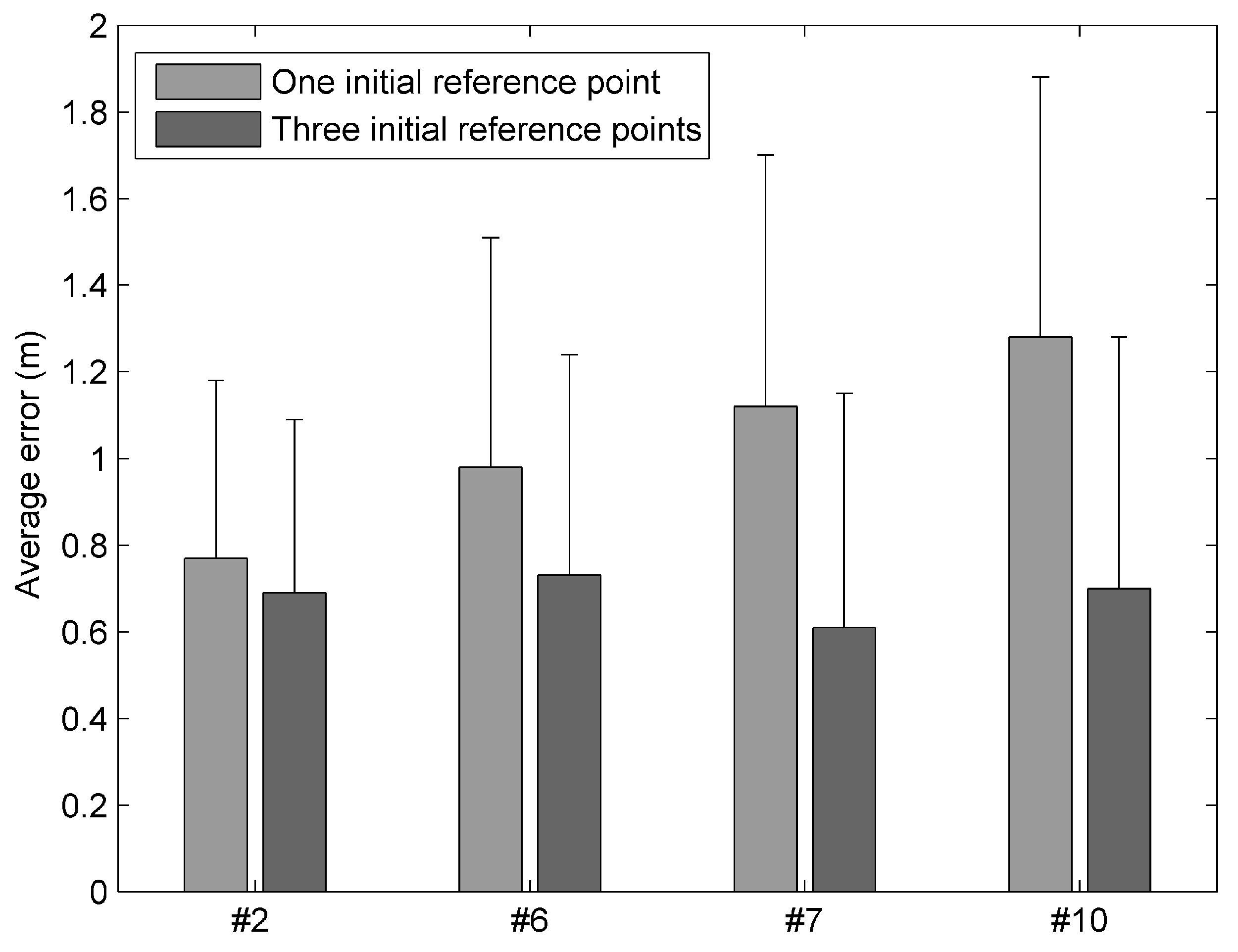

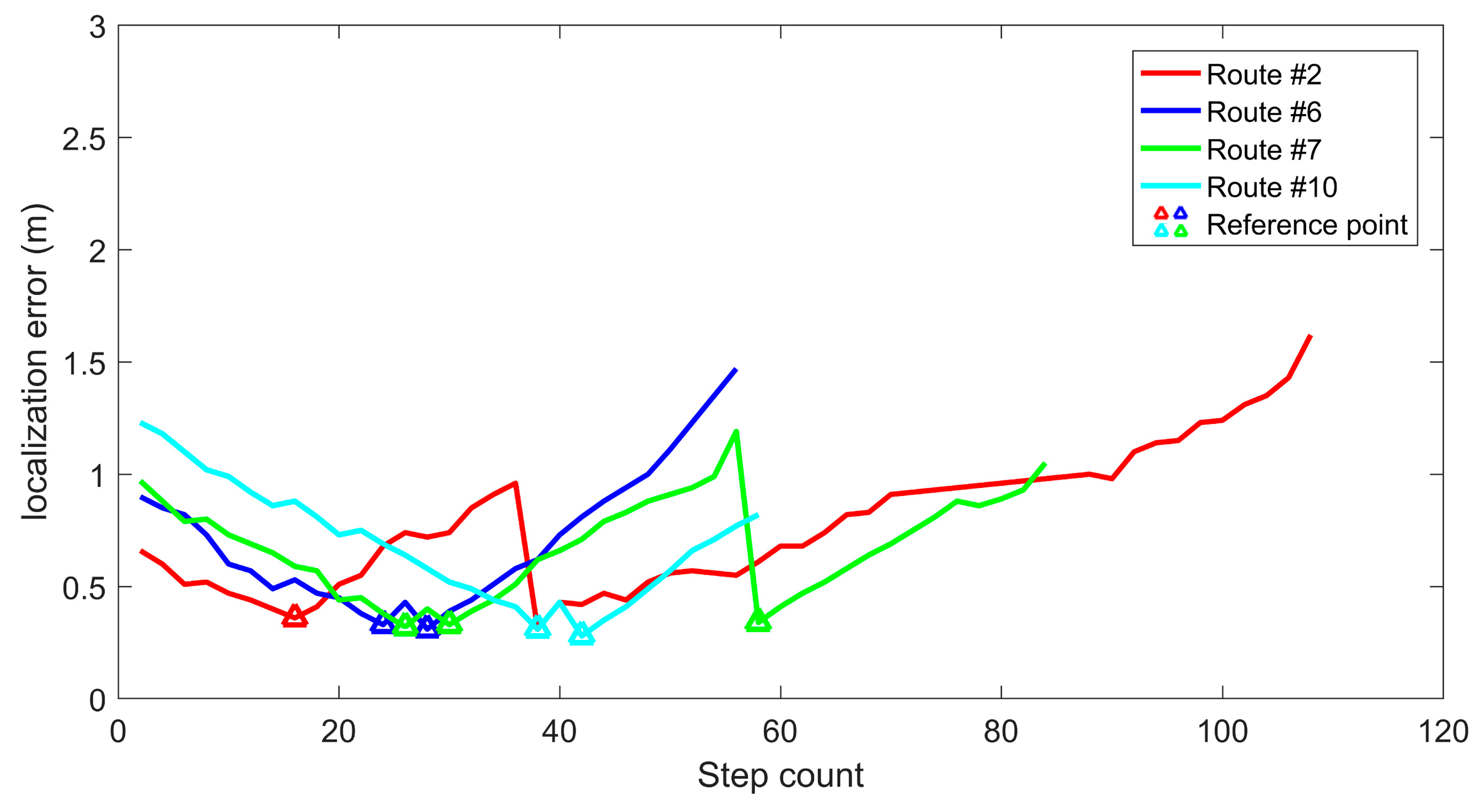

4.1. Evaluation of Trajectory Estimation

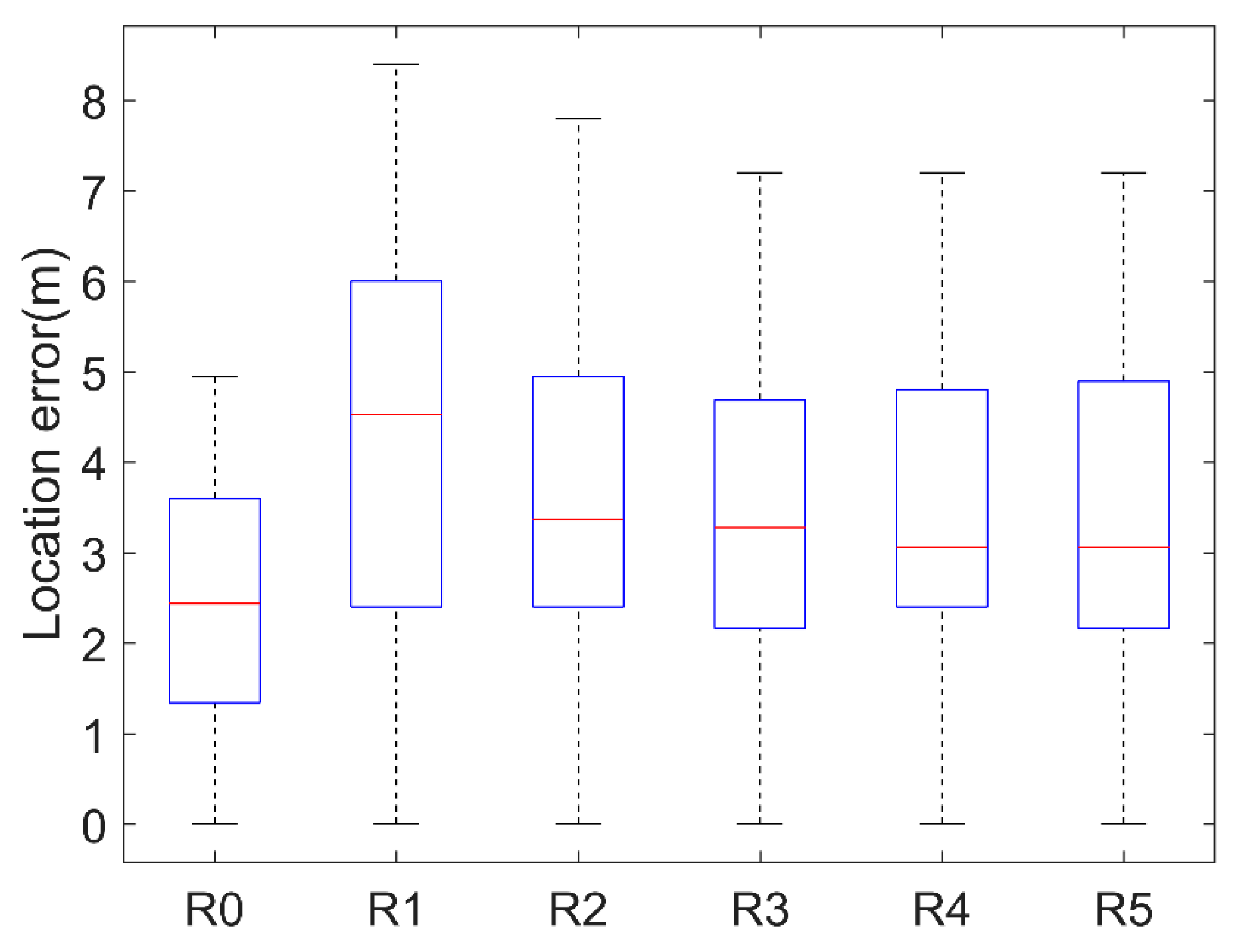

4.2. Performance of Constructed Databases for Indoor Positioning

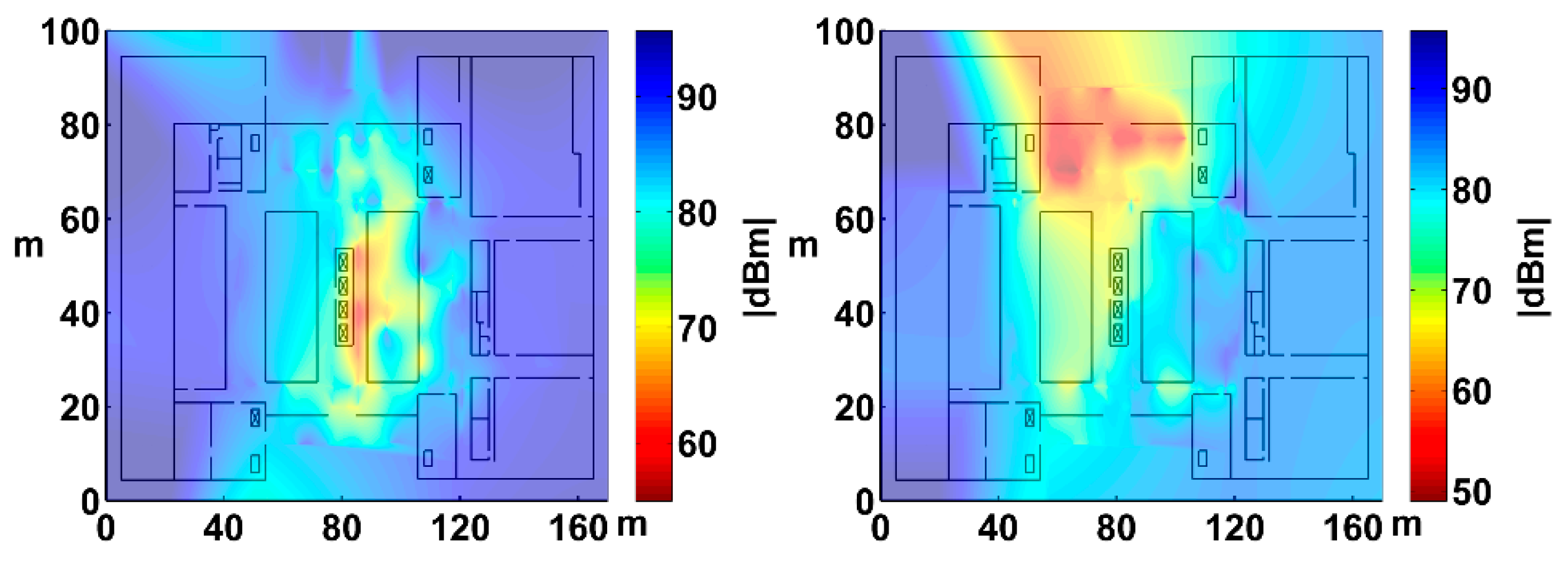

4.2.1. RSS-Based Indoor Positioning

4.2.2. Image Matching Based Indoor Positioning

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Sampling Point ID | Trajectory ID | Coordinates | RSS | Image | Direction |
|---|---|---|---|---|---|
| P1 | Tr_1 | | {(), ()…} | I1 | Azimuth1 |
| P2 | Tr_2 | | {(), ()…} | I2 | Azimuth2 |
| P3 | Tr_3 | | {(), ()…} | I3 | Azimuth2 |
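One way to hold a row of the sampling-point table in code is sketched below. The field types are assumptions that the table does not specify: coordinates are taken as a 2D position in the reference coordinate system, RSS as a mapping from an access point identifier to a signal strength, and direction as an azimuth in degrees. All values in the example are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SamplingPoint:
    point_id: str                     # e.g. "P1"
    trajectory_id: str                # e.g. "Tr_1"
    coordinates: Tuple[float, float]  # assumed (x, y) in the reference coordinate system
    rss: Dict[str, float]             # assumed: access point id -> signal strength
    image: str                        # image identifier, e.g. "I1"
    direction: float                  # assumed: azimuth in degrees


def group_by_trajectory(points: List[SamplingPoint]) -> Dict[str, List[SamplingPoint]]:
    """Index sampling points by the trajectory they belong to."""
    grouped: Dict[str, List[SamplingPoint]] = {}
    for p in points:
        grouped.setdefault(p.trajectory_id, []).append(p)
    return grouped


# Made-up values, for illustration only.
p1 = SamplingPoint("P1", "Tr_1", (1.0, 2.0), {"ap_1": -60.0, "ap_2": -72.0}, "I1", 90.0)
p2 = SamplingPoint("P2", "Tr_1", (1.5, 2.0), {"ap_1": -63.0}, "I2", 90.0)
print(sorted(group_by_trajectory([p1, p2]).keys()))
```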

| IRP Trajectory | SRP Trajectory | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Trajectory | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 | #10 |
| max error (m) | 1.35 | 1.42 | 1.28 | 1.45 | 2.1 | 2.39 | 2.52 | 2.85 | 3.05 | 2.98 |
| min error (m) | 0.2 | 0.36 | 0.3 | 0.32 | 0.67 | 0.77 | 0.68 | 0.65 | 0.72 | 0.58 |
| avg error (m) | 0.61 | 0.77 | 0.65 | 0.85 | 1.09 | 0.98 | 1.12 | 1.55 | 1.46 | 1.28 |
| Length (m) | 41.7 | 102.8 | 56.9 | 101.1 | 59.0 | 55.2 | 85.6 | 65.4 | 57.8 | 57.6 |
| Time (s) | 21 | 52 | 29 | 153 | 180 | 980 | 1505 | 924 | 435 | 1260 |

| Database | R0 | R1 | R2 | R3 | R4 | R5 |
|---|---|---|---|---|---|---|
| Max error | 4.9 | 8.4 | 7.8 | 7.2 | 7.2 | 7.2 |
| Average error | 2.6 | 4.3 | 3.7 | 3.4 | 3.2 | 3.2 |
| Error std | 1.3 | 2.2 | 1.9 | 1.8 | 1.8 | 1.8 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, T.; Zhang, X.; Li, Q.; Fang, Z.; Tahir, N. An Accurate Visual-Inertial Integrated Geo-Tagging Method for Crowdsourcing-Based Indoor Localization. Remote Sens. 2019, 11, 1912. https://doi.org/10.3390/rs11161912
