Power Pylon Reconstruction Based on Abstract Template Structures Using Airborne LiDAR Data

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

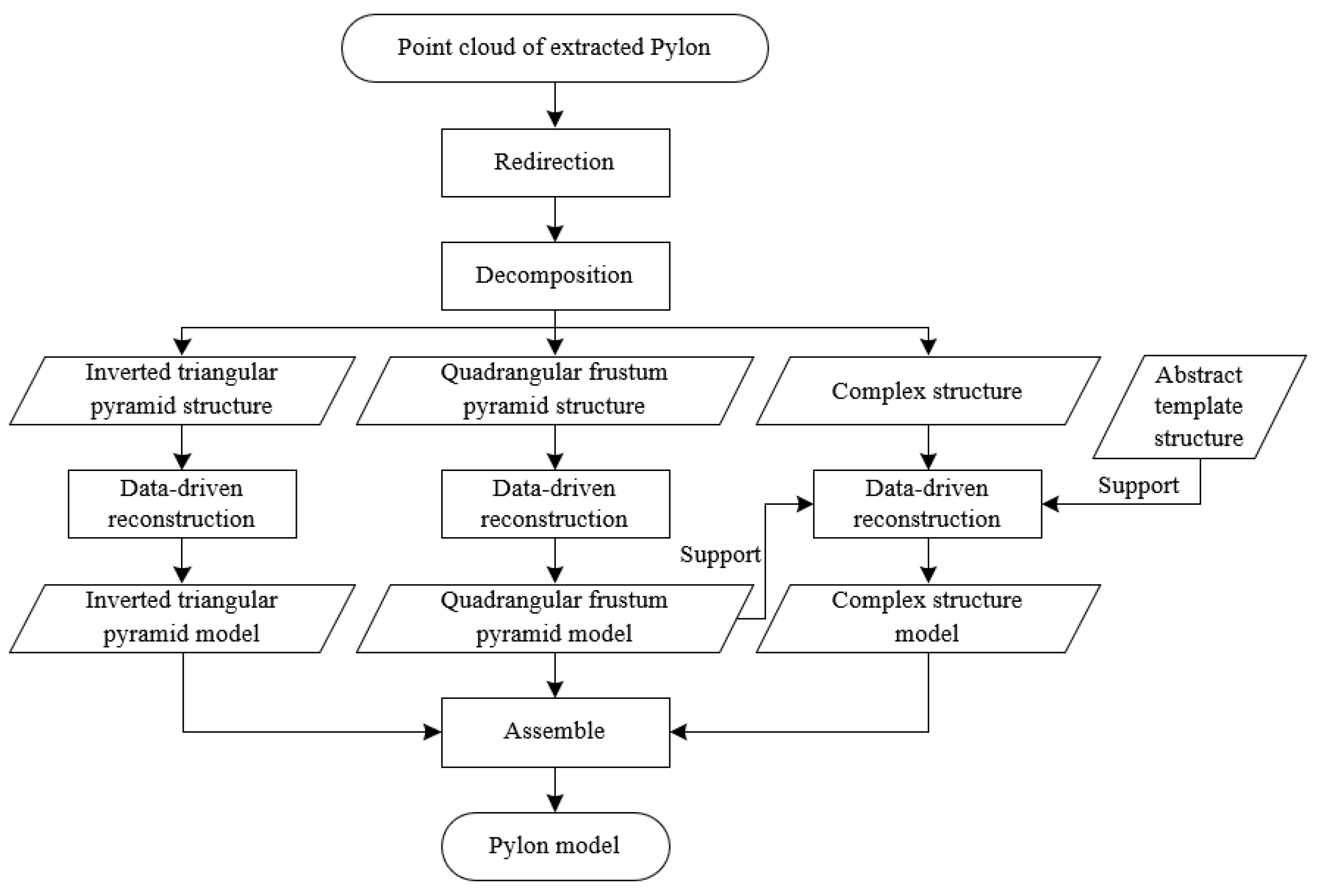

1.3. Overview

2. Methodology

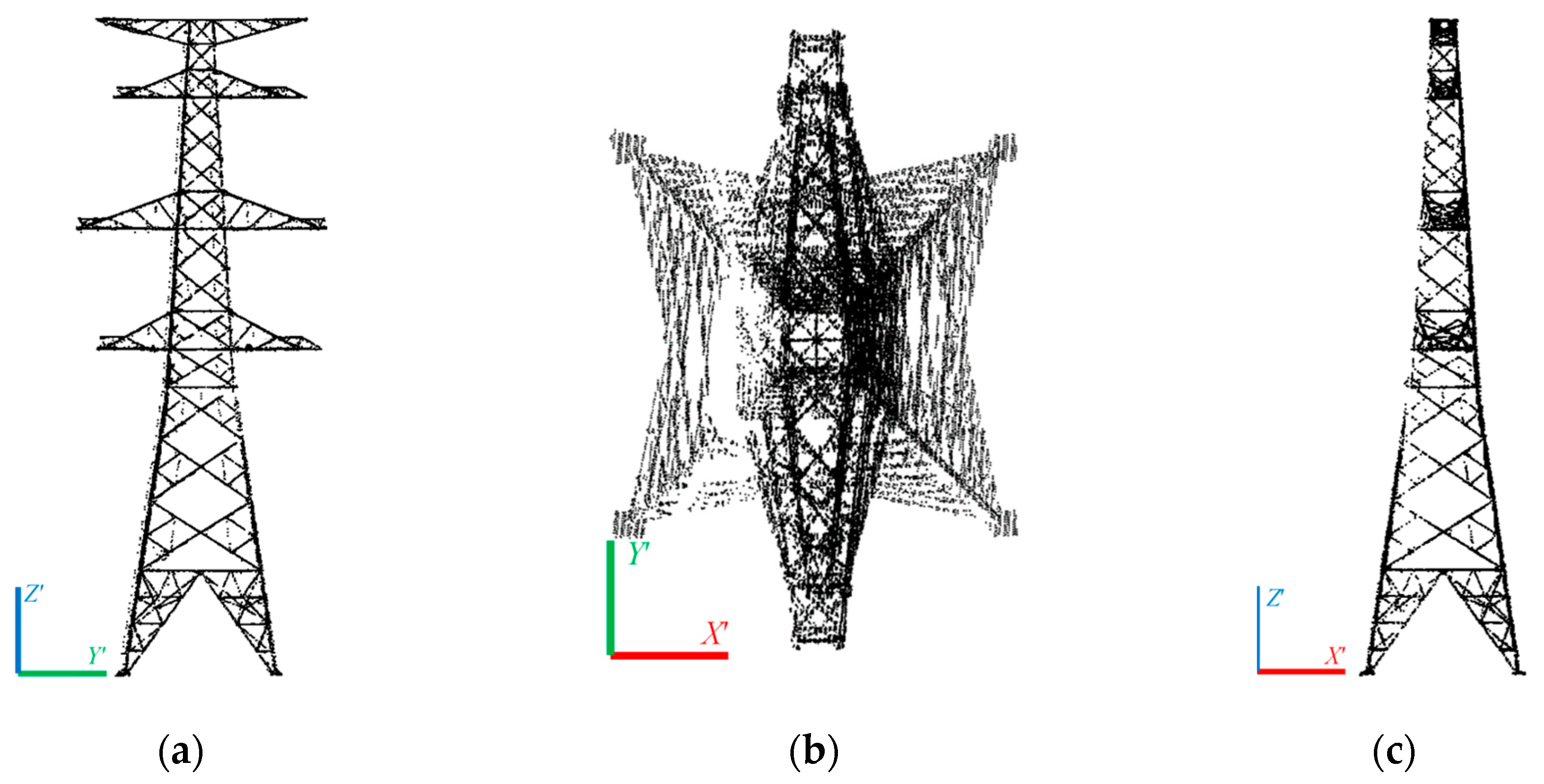

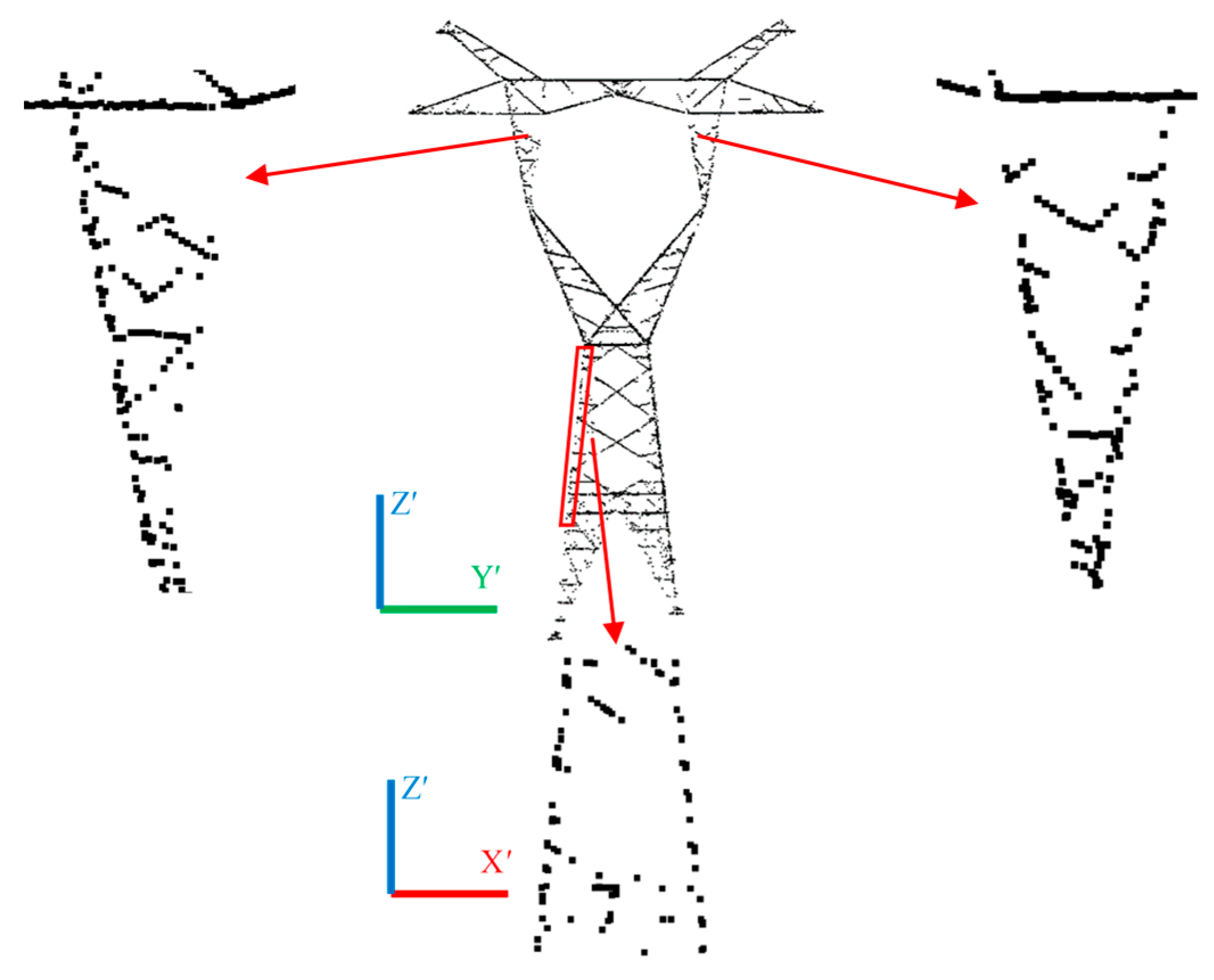

2.1. Pylon Redirection

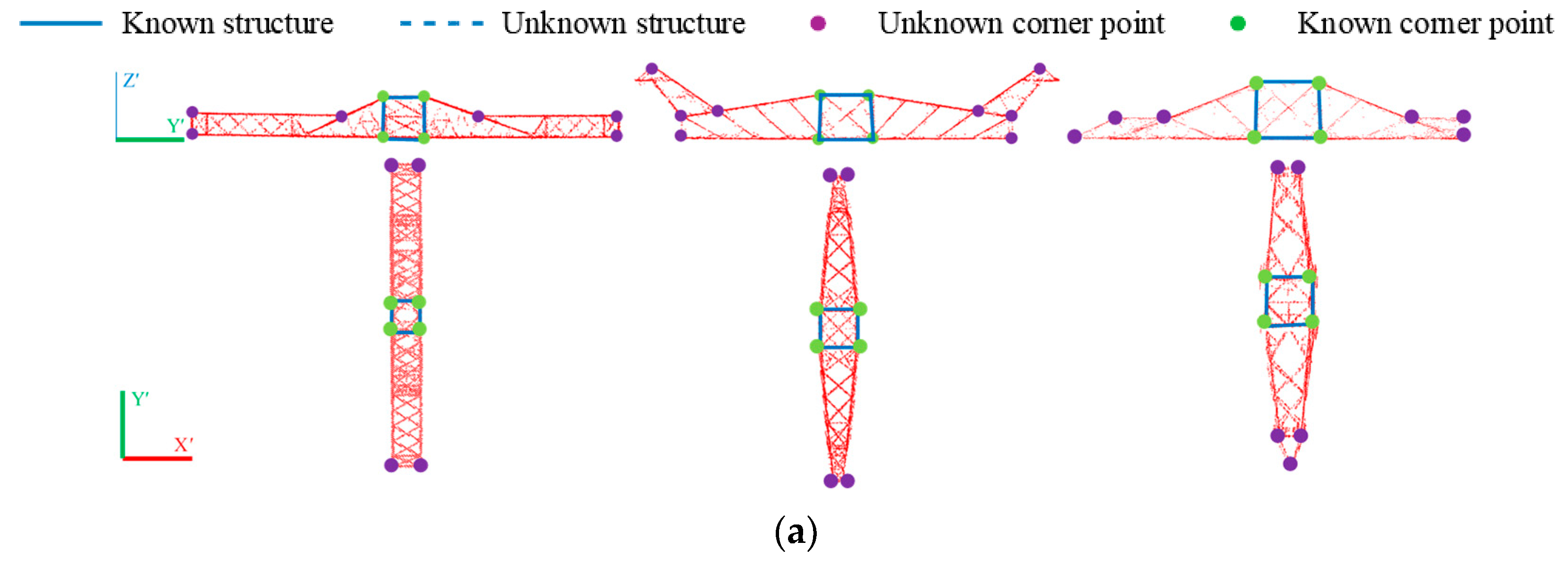

2.2. Pylon Decomposition

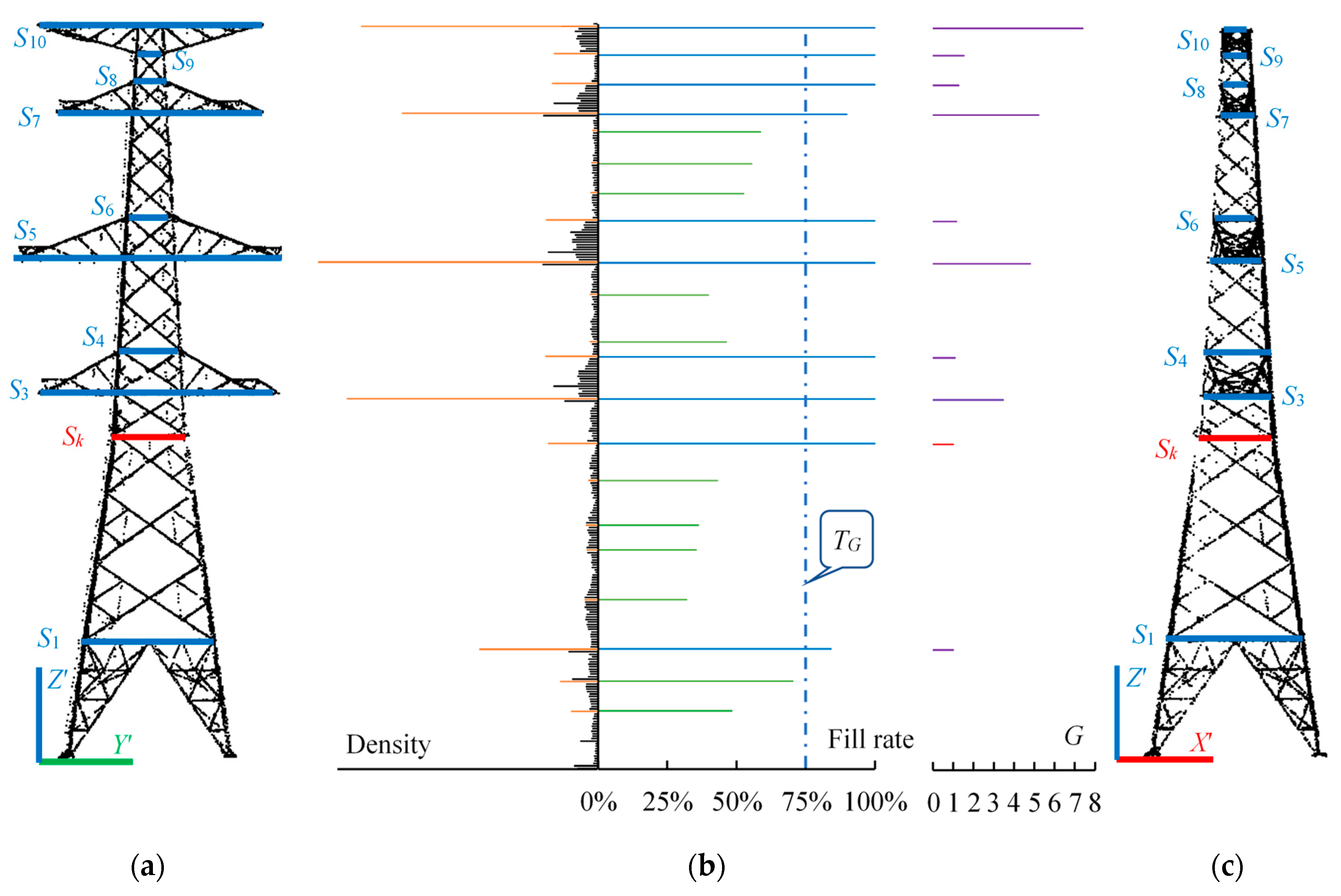

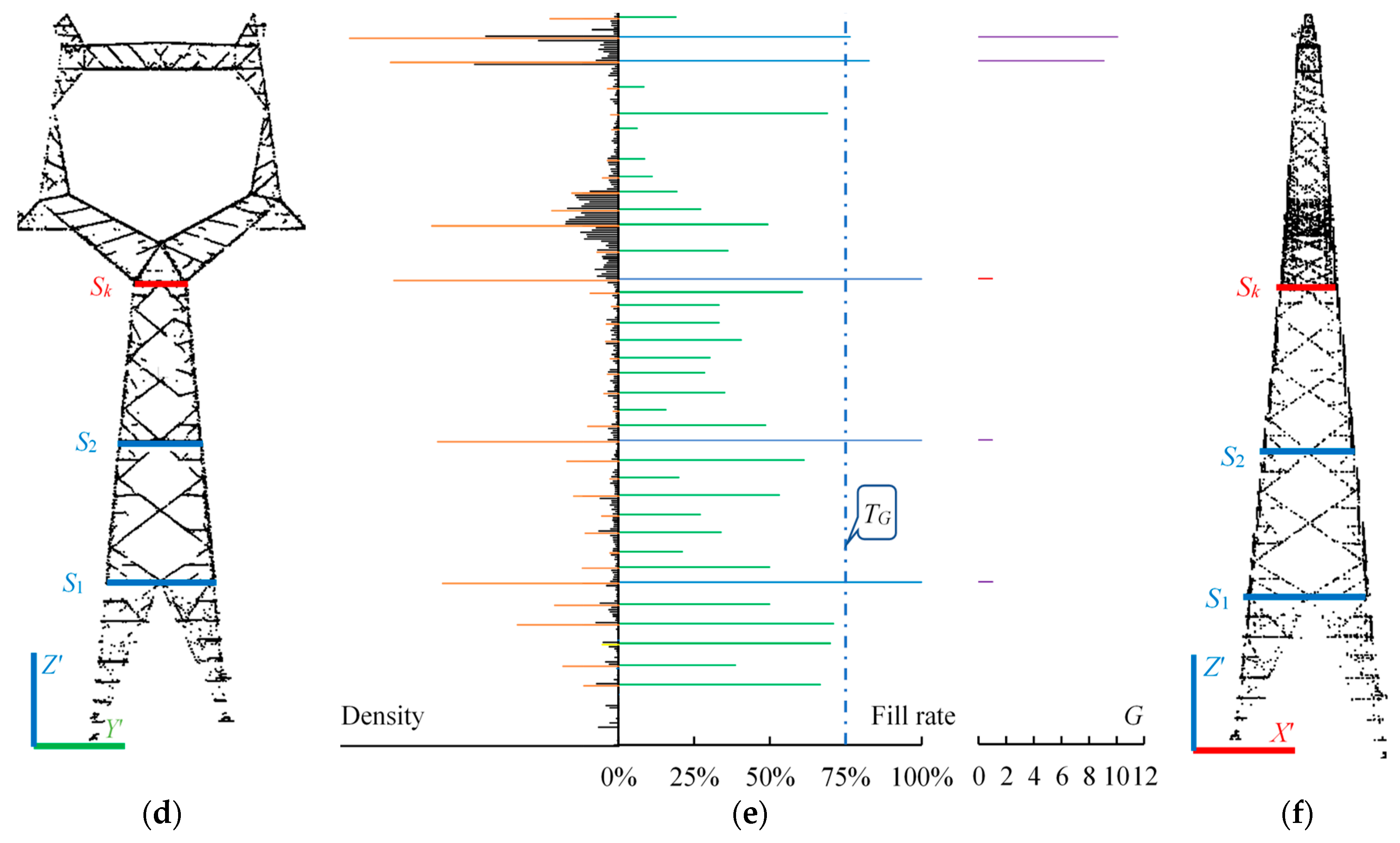

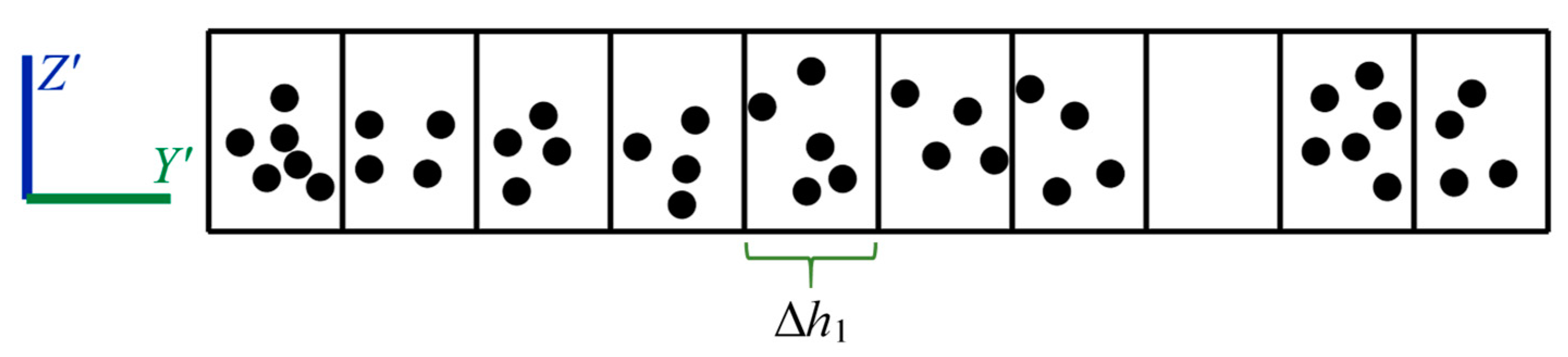

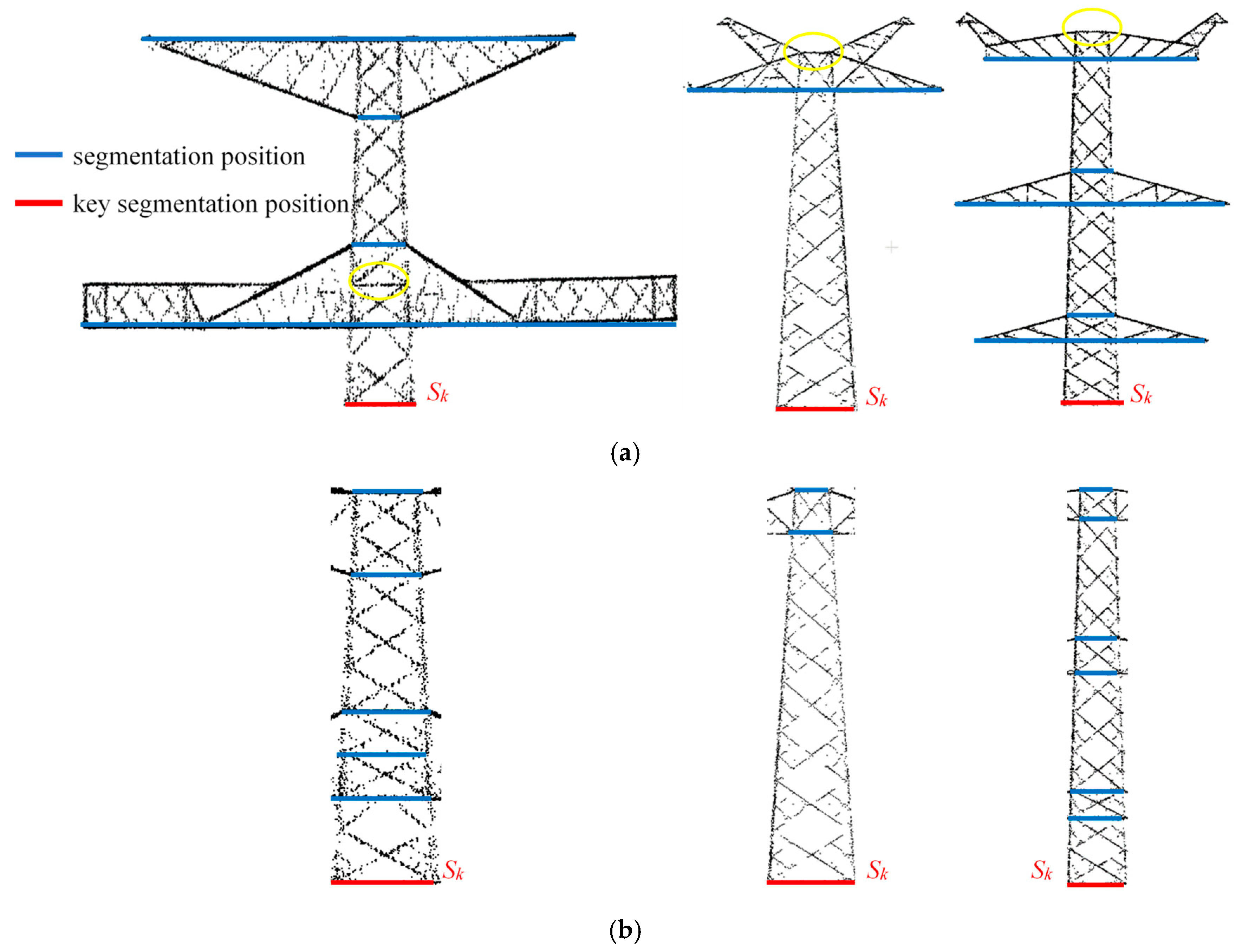

2.2.1. Segmentation Positions and Key Segmentation Position Identification

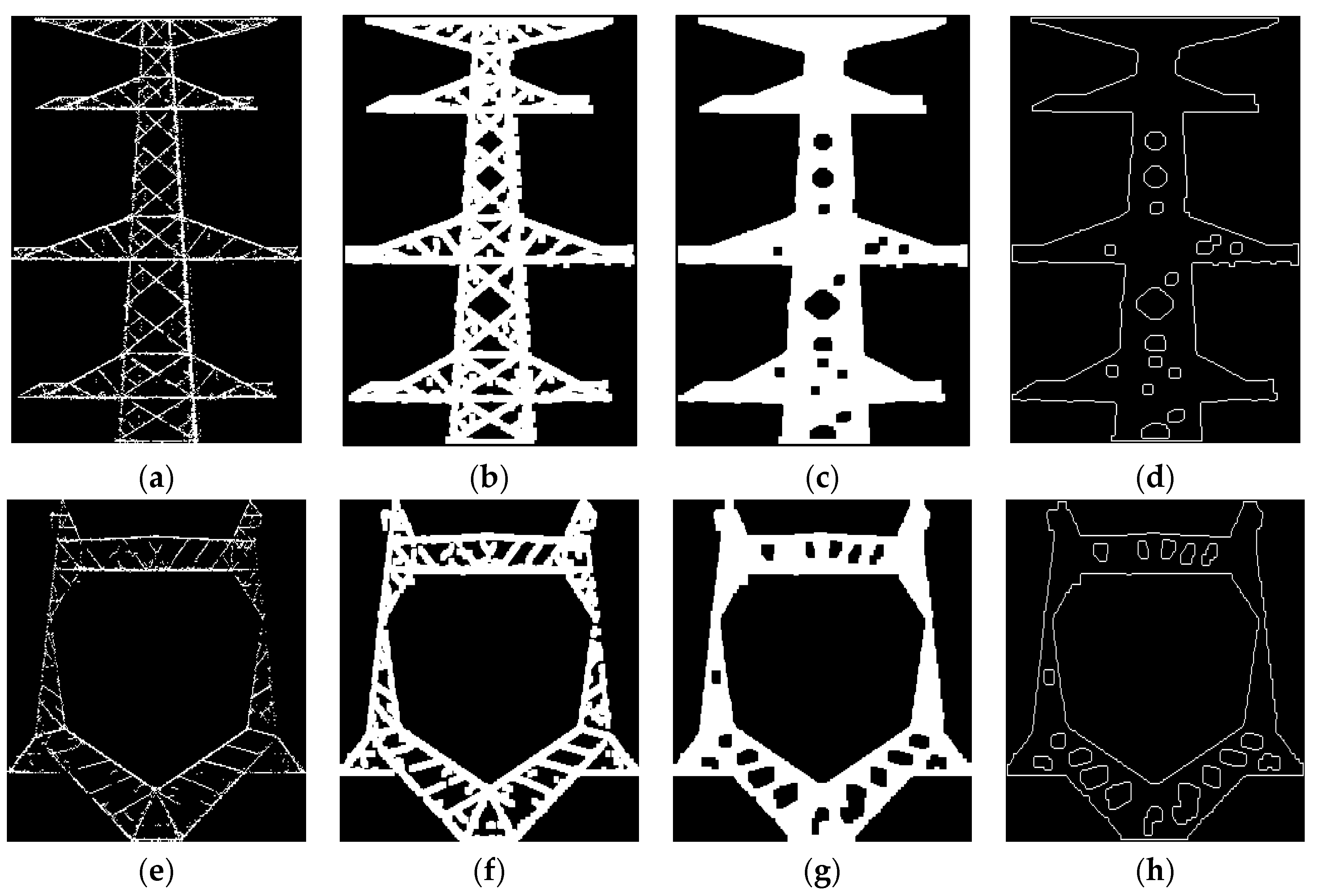

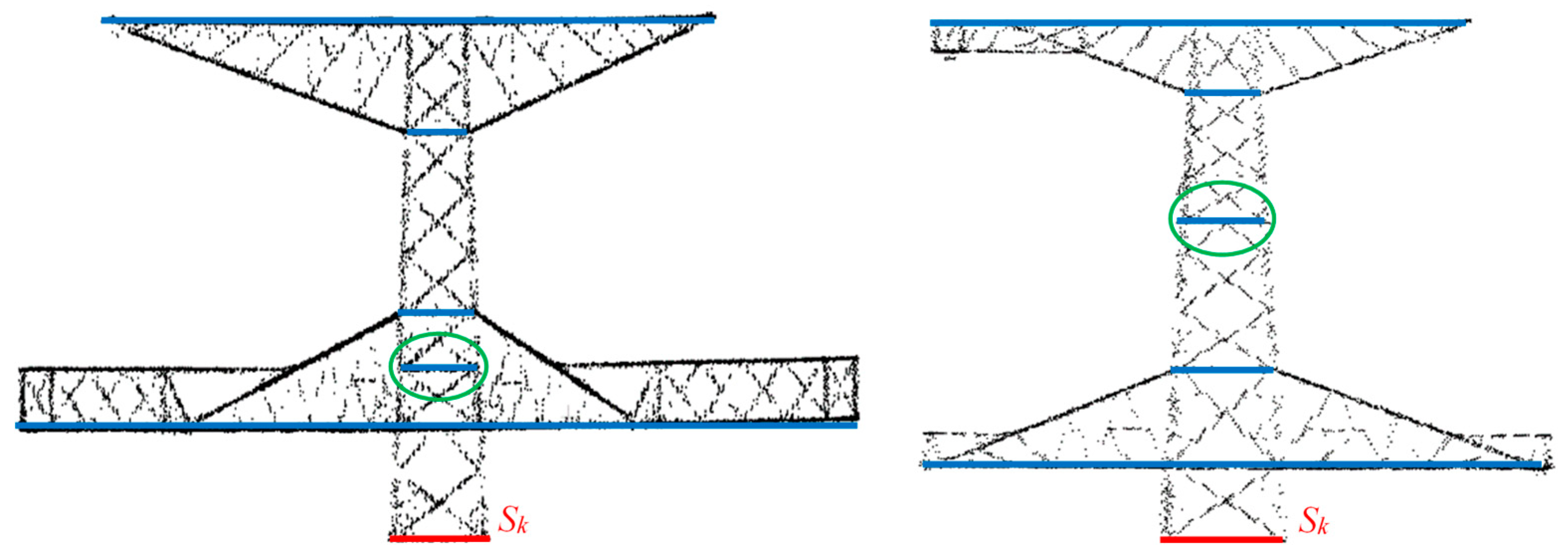

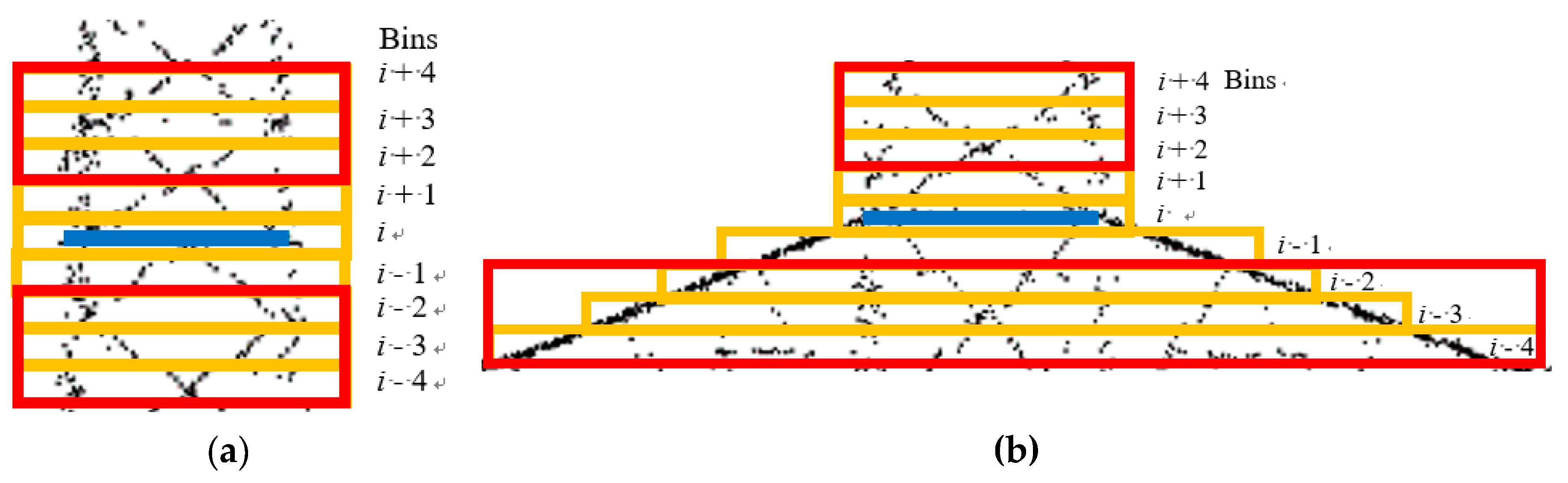

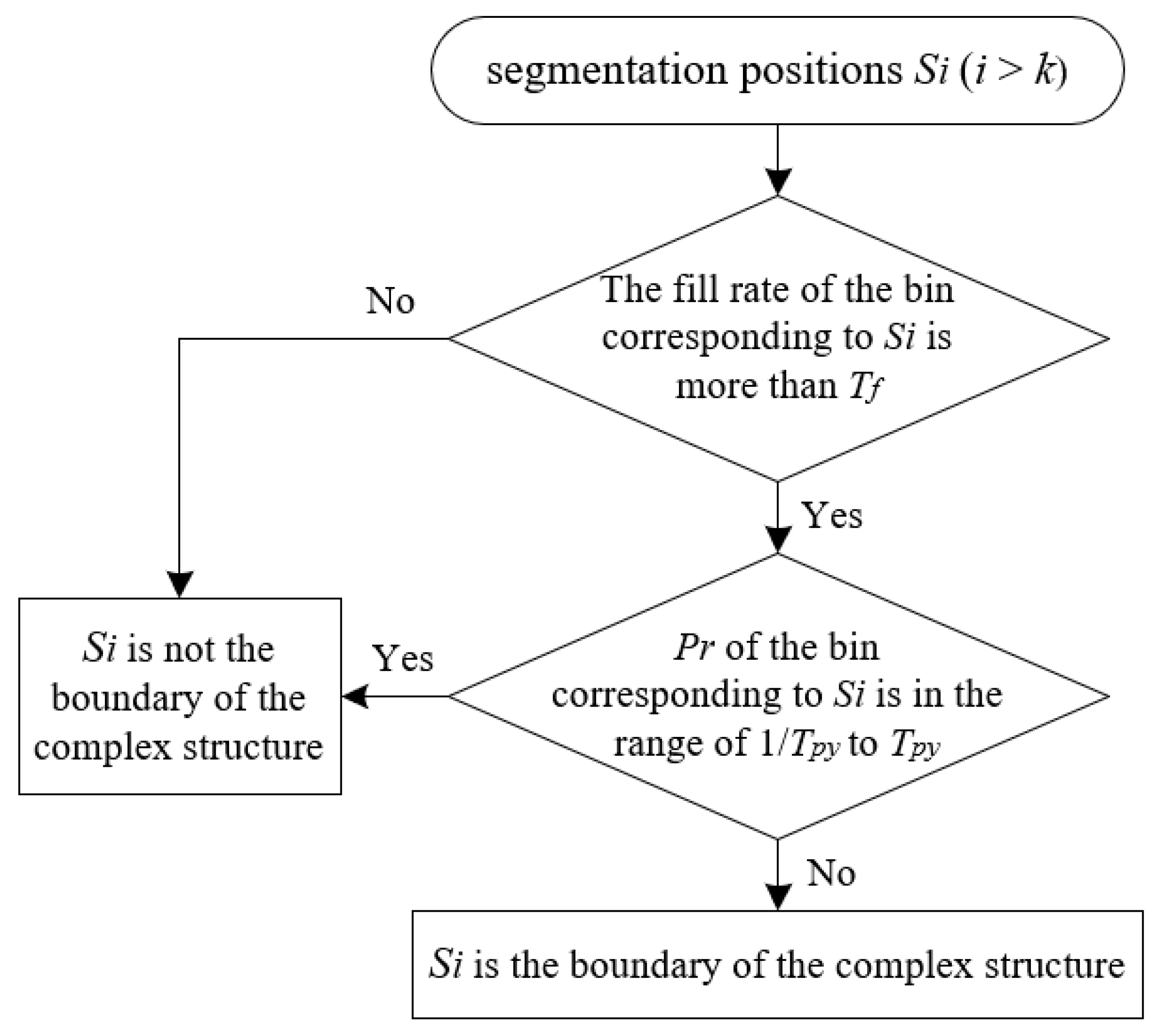

2.2.2. Complex Structure Recognition

2.3. Inverted Triangular Pyramid Reconstruction

2.4. Quadrangular Frustum Pyramid Reconstruction

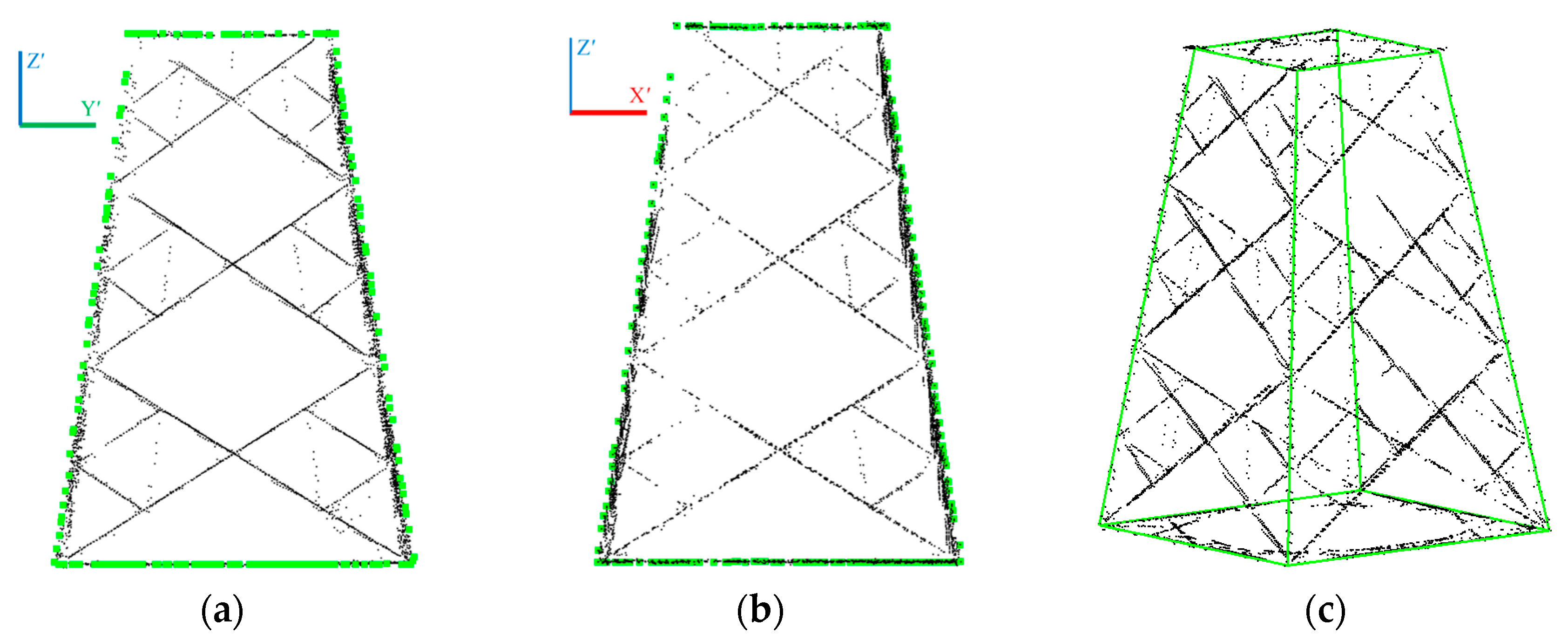

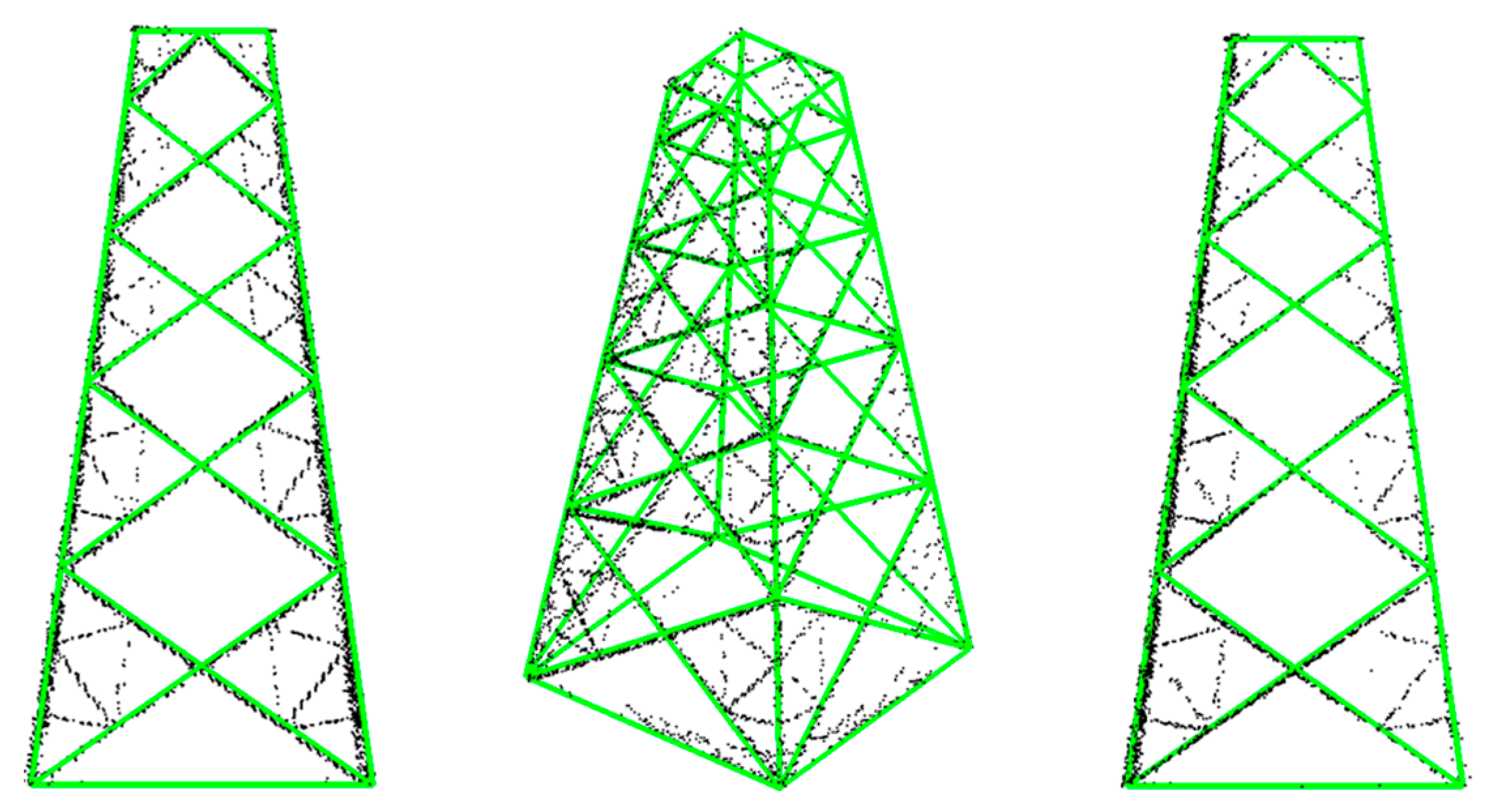

2.4.1. The Frame Reconstruction

2.4.2. The Internal Structure Reconstruction

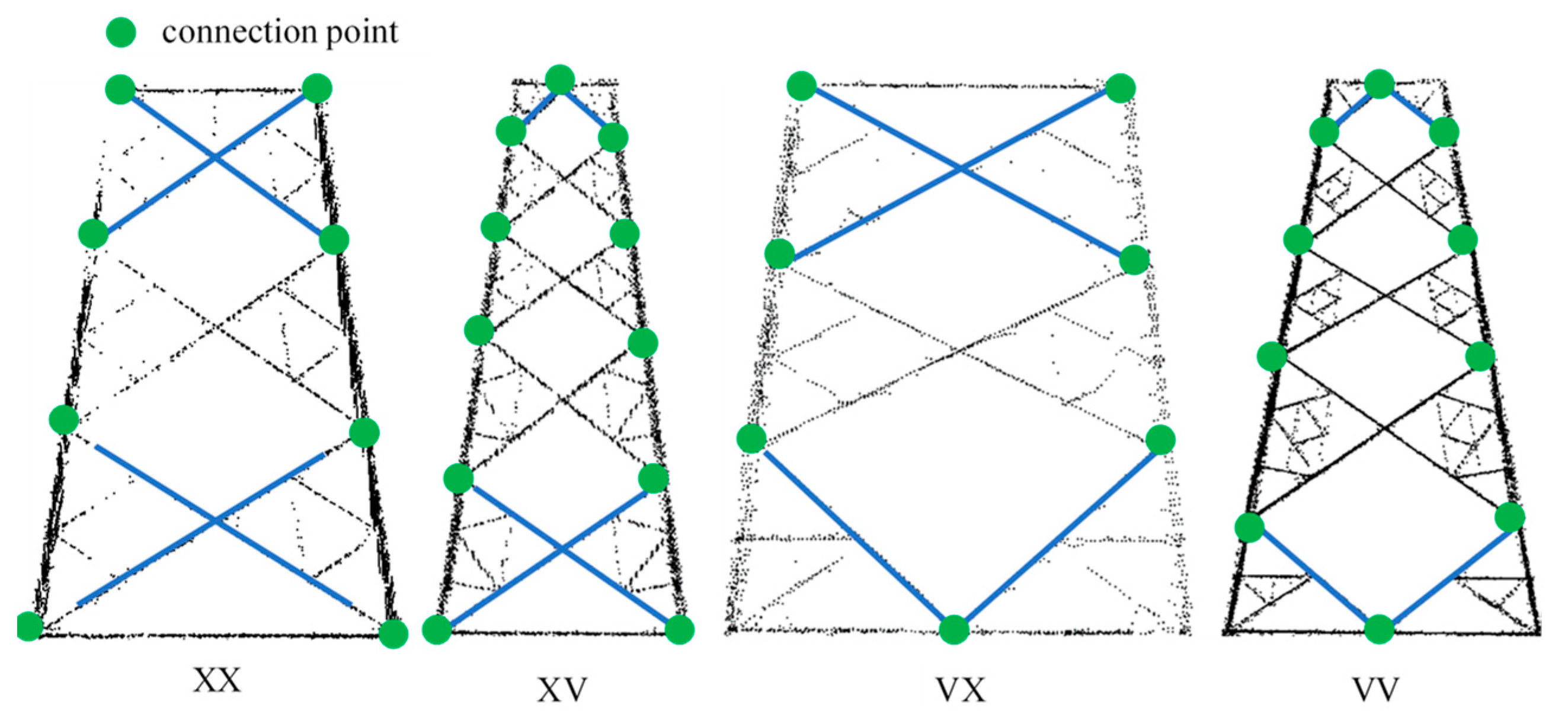

Identifying the Type of the Internal Structure

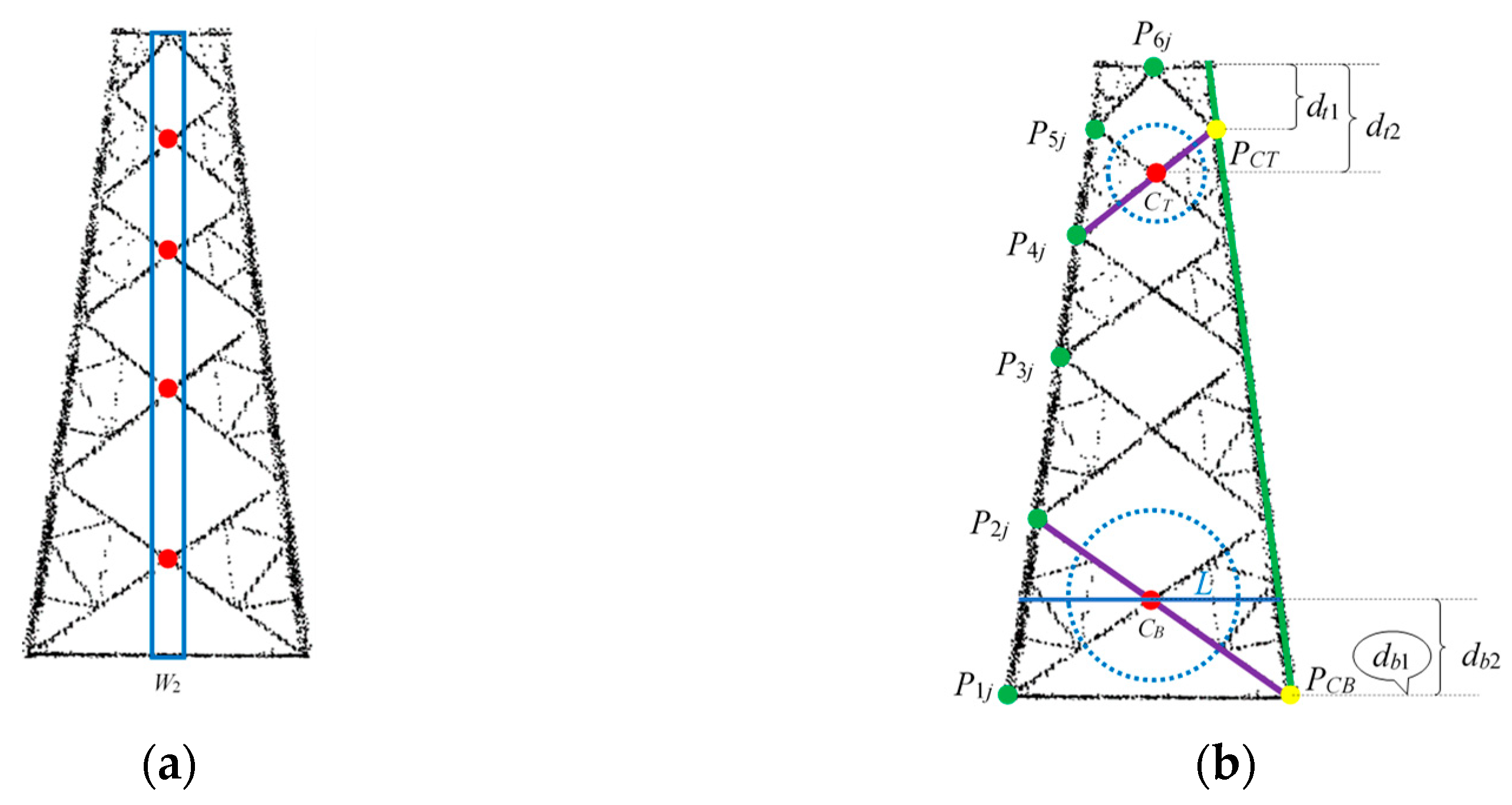

Calculating the Coordinates of the Connection Points

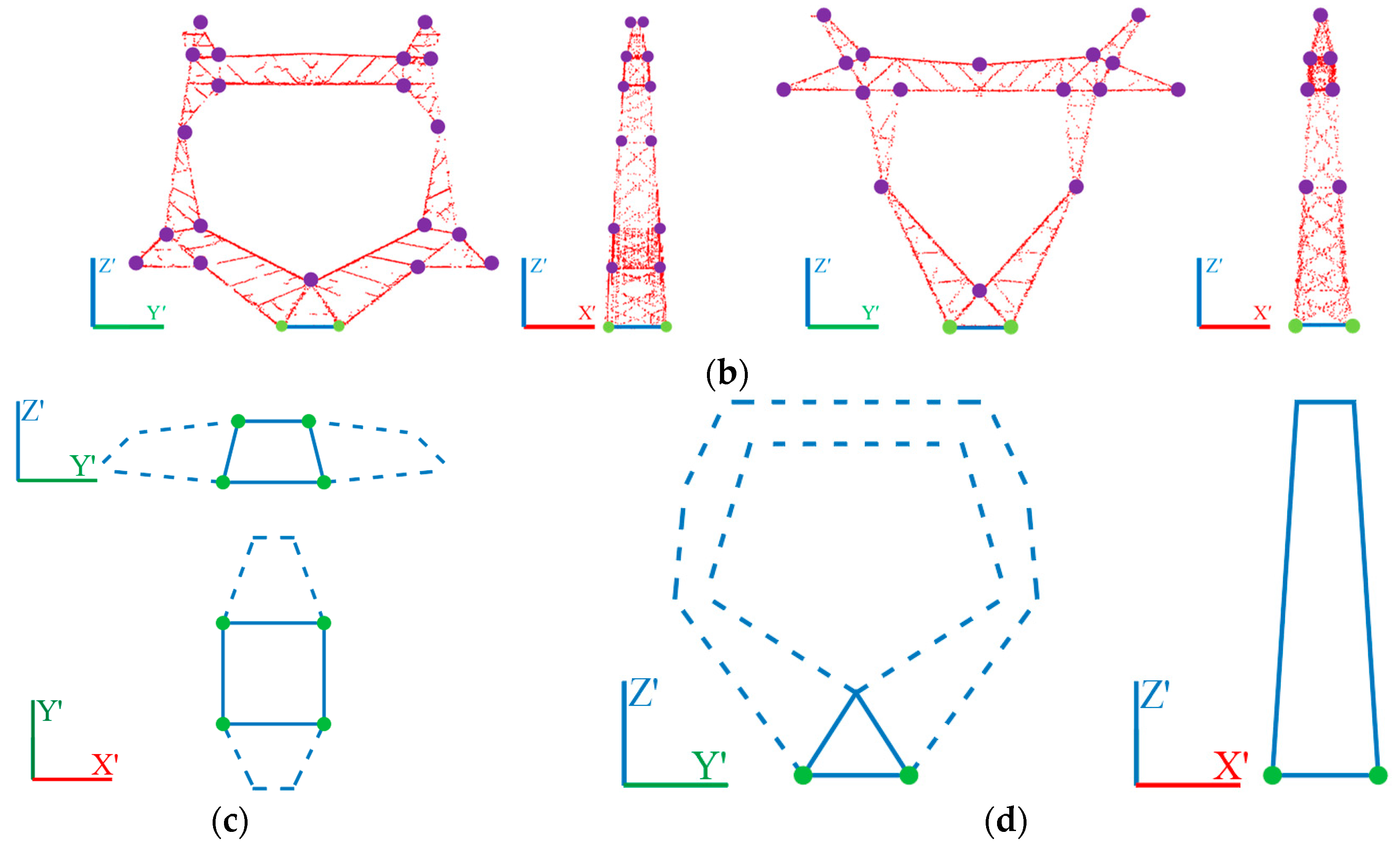

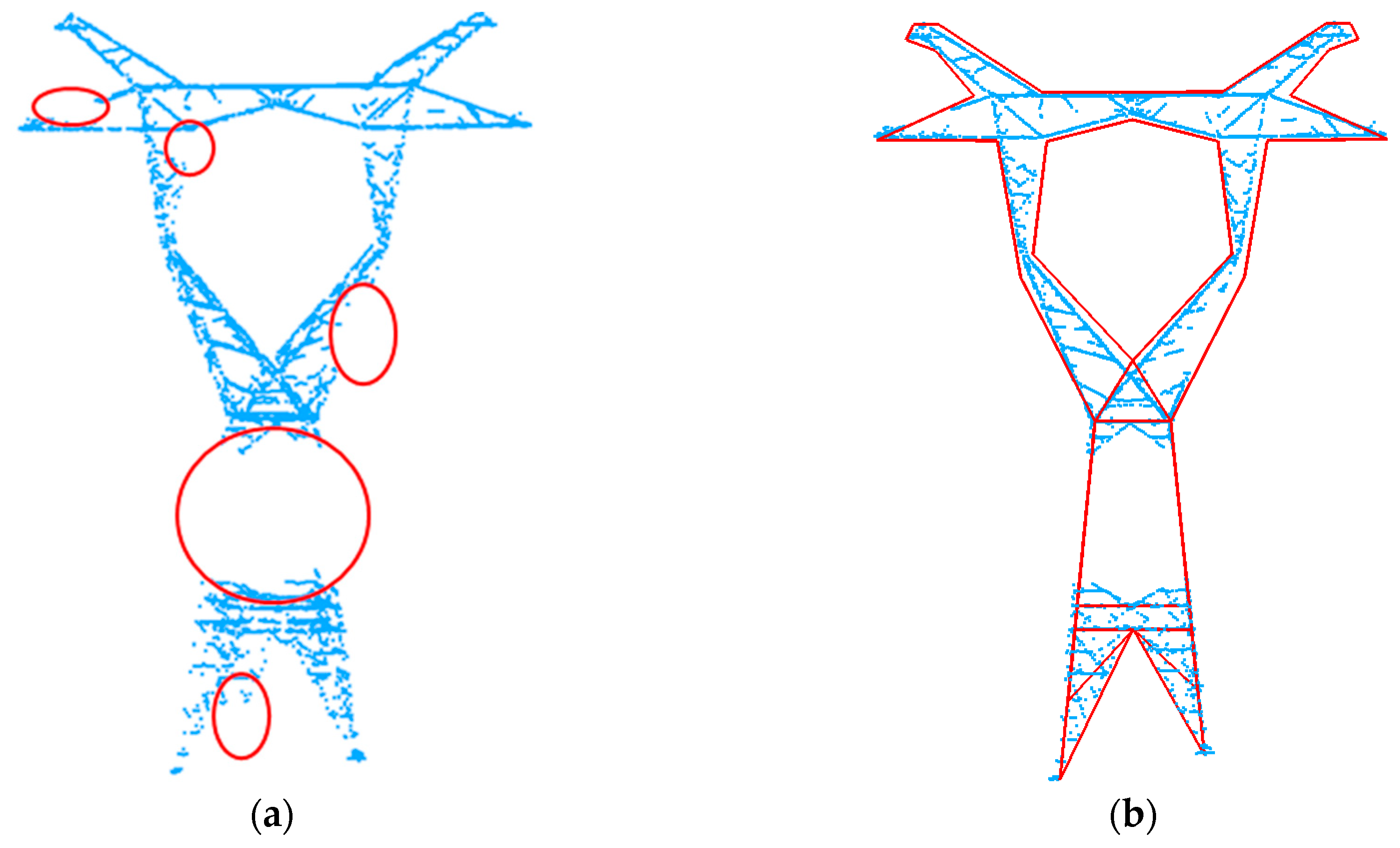

2.5. Complex Structure Reconstruction

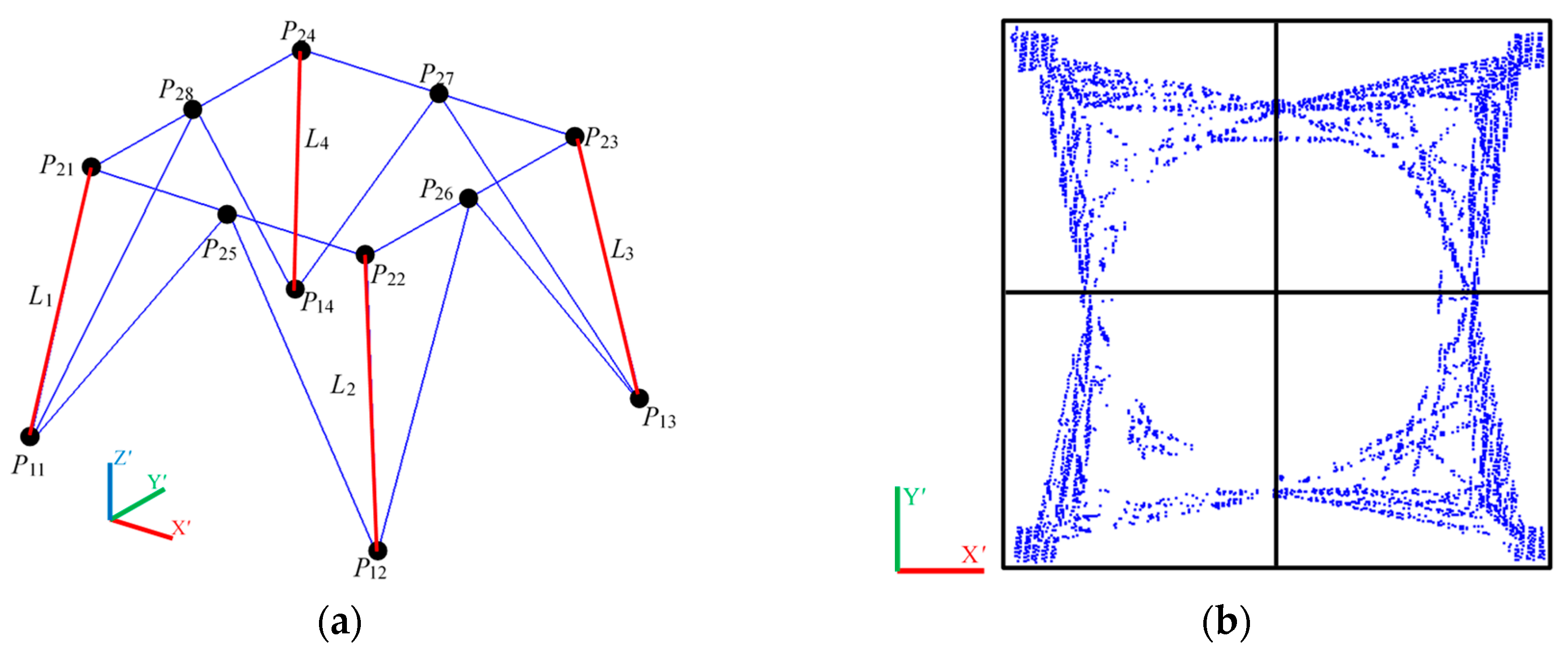

2.5.1. Establishing the Topological Relationship among Corner Points

2.5.2. Calculating 3D Coordinates of Corner Points

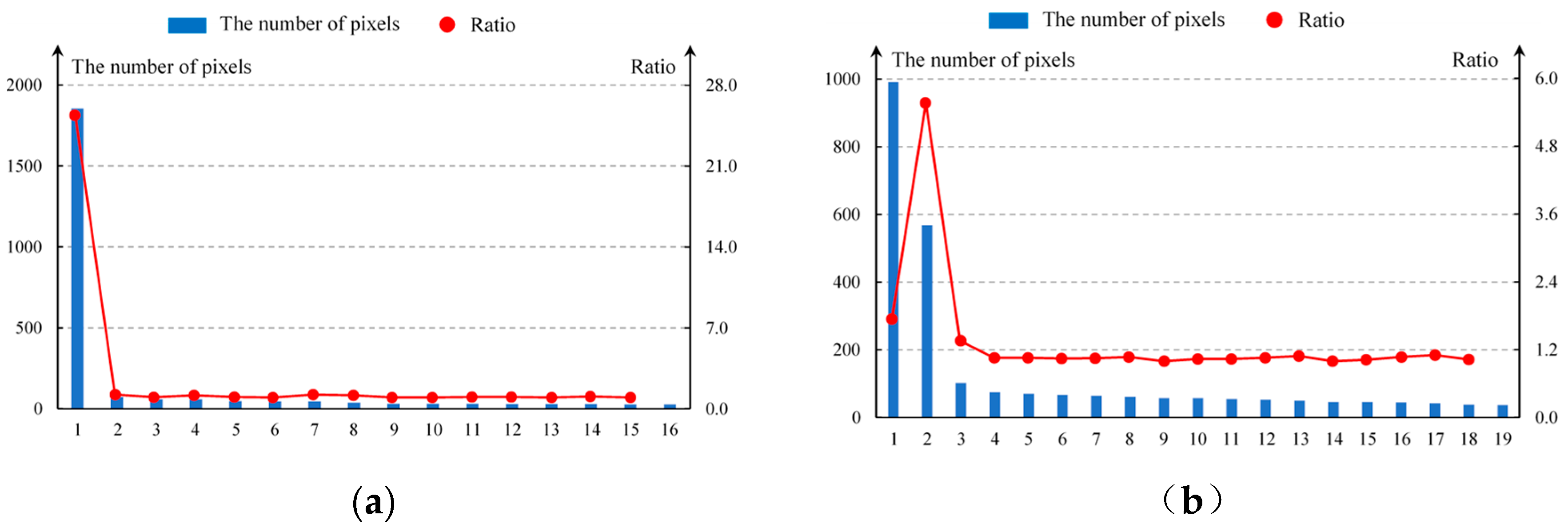

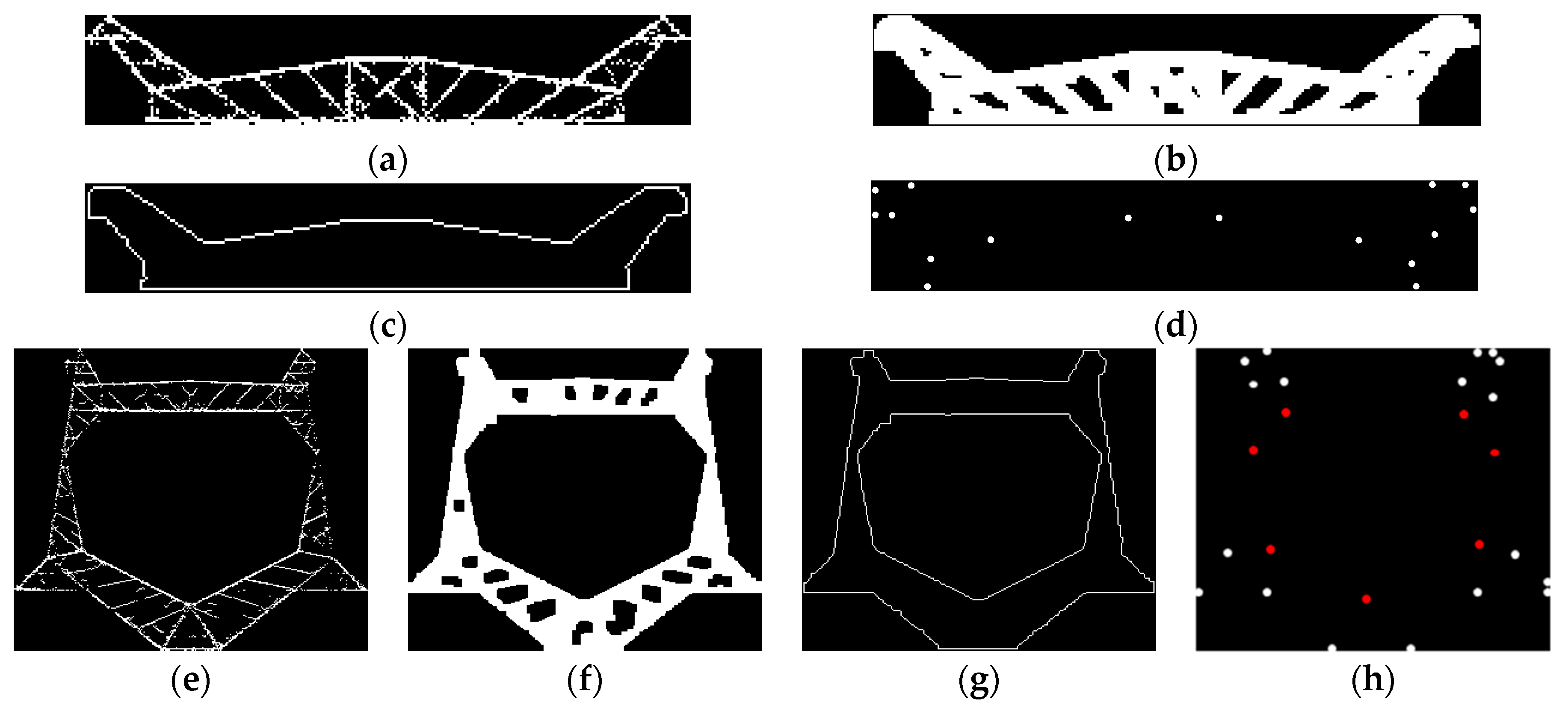

Extracting Corner Points

Optimization

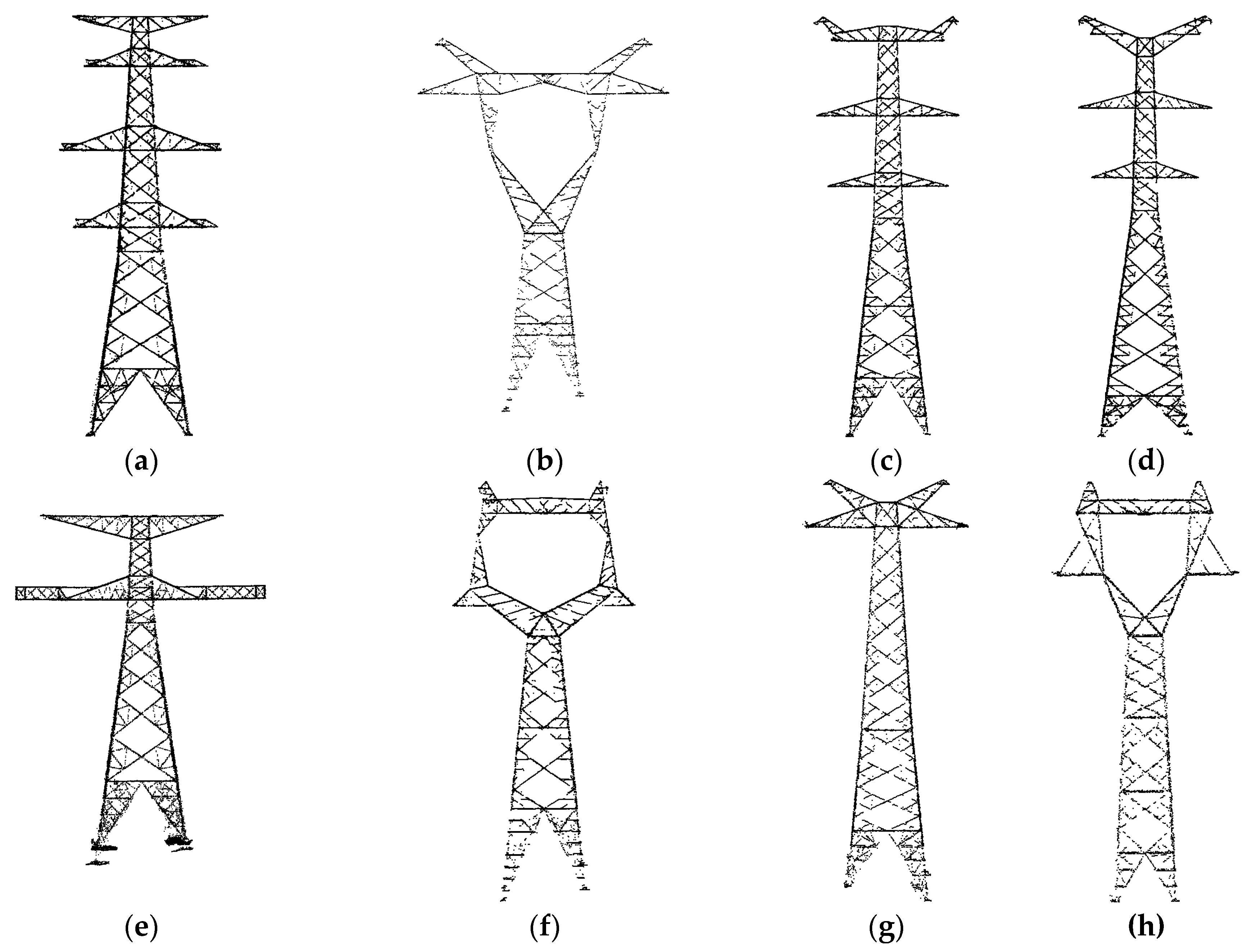

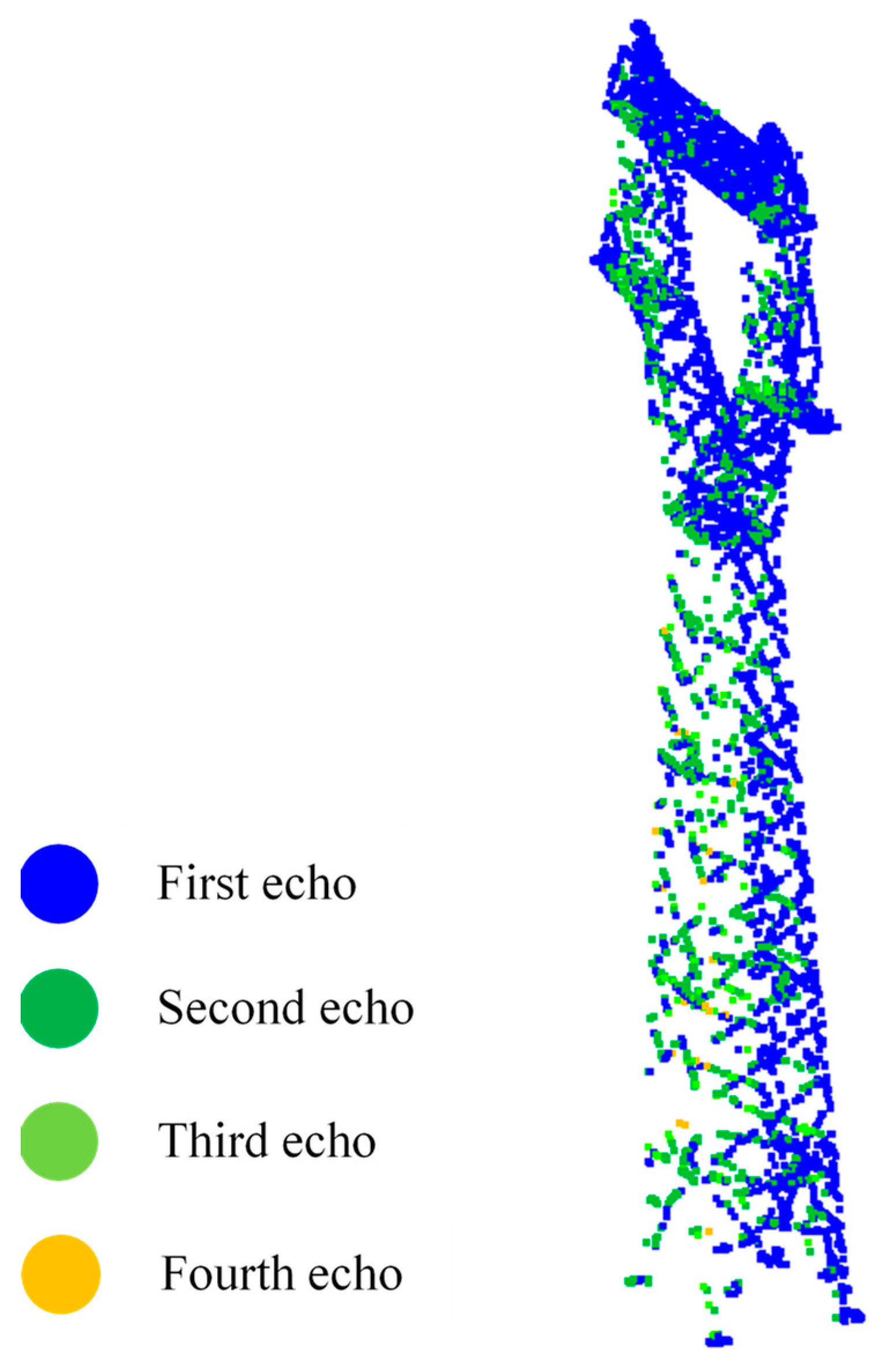

3. Experimental Data

4. Results

4.1. Accuracy of Pylon Redirection

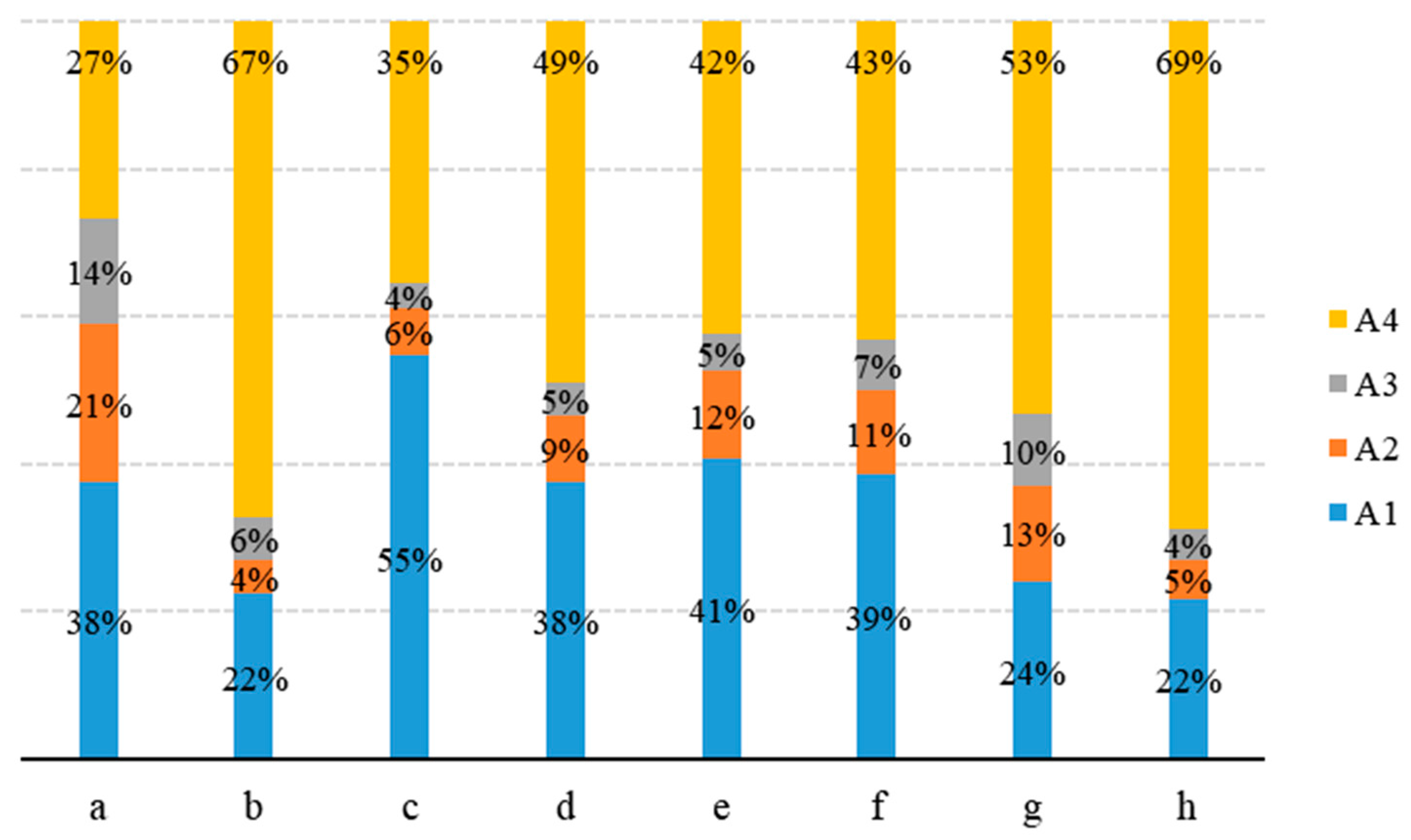

4.2. Accuracy of Pylon Decomposition

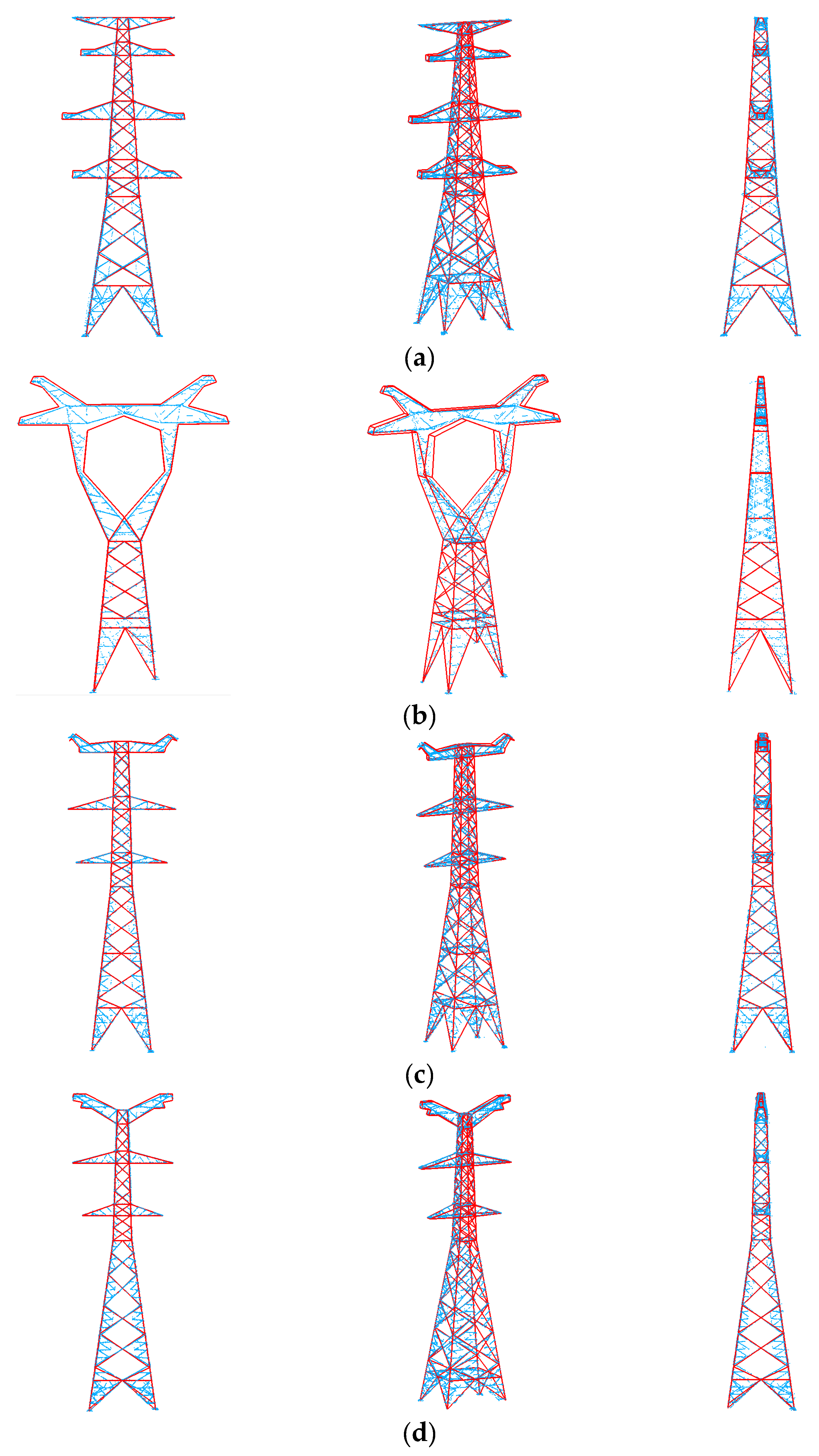

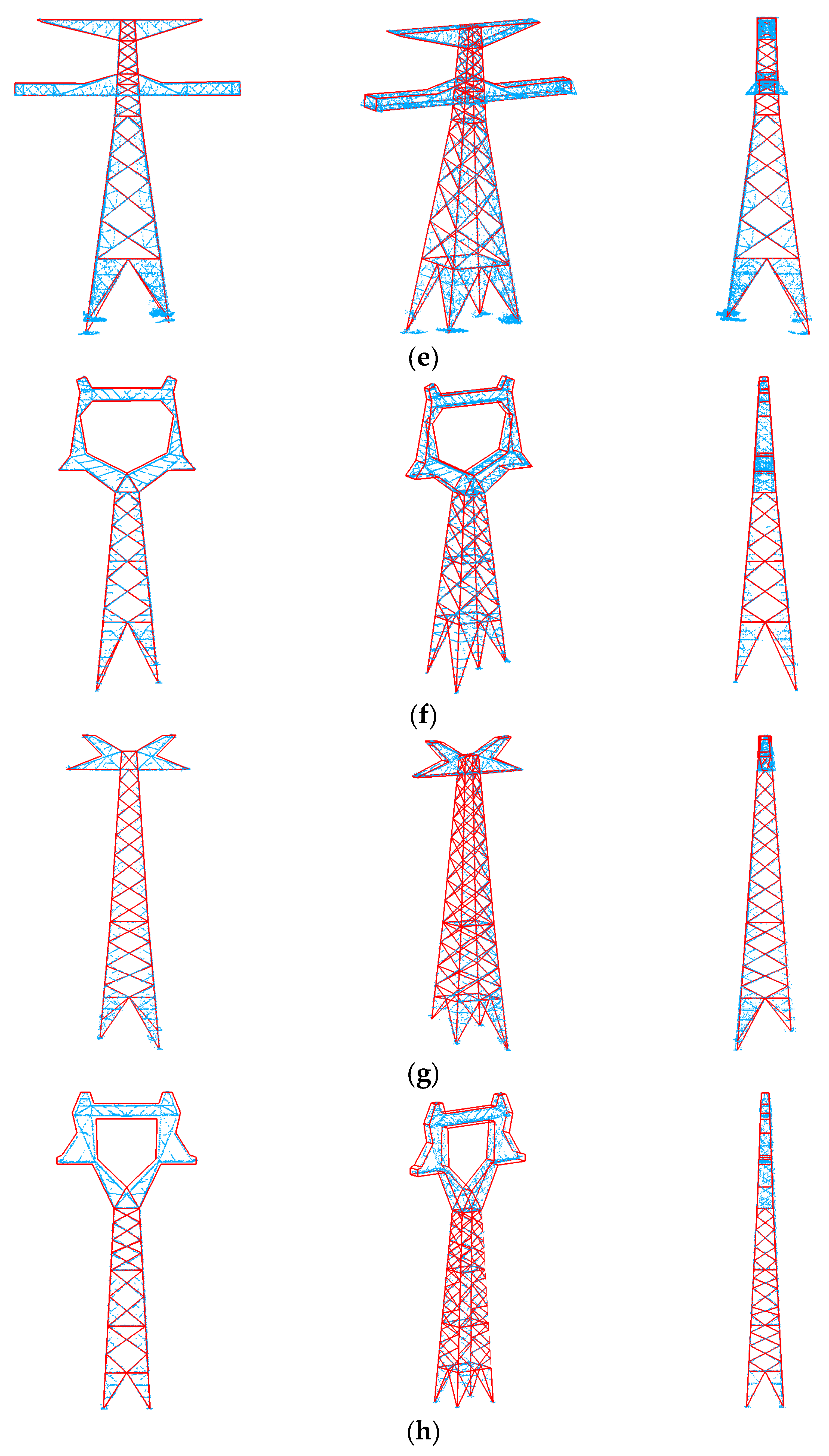

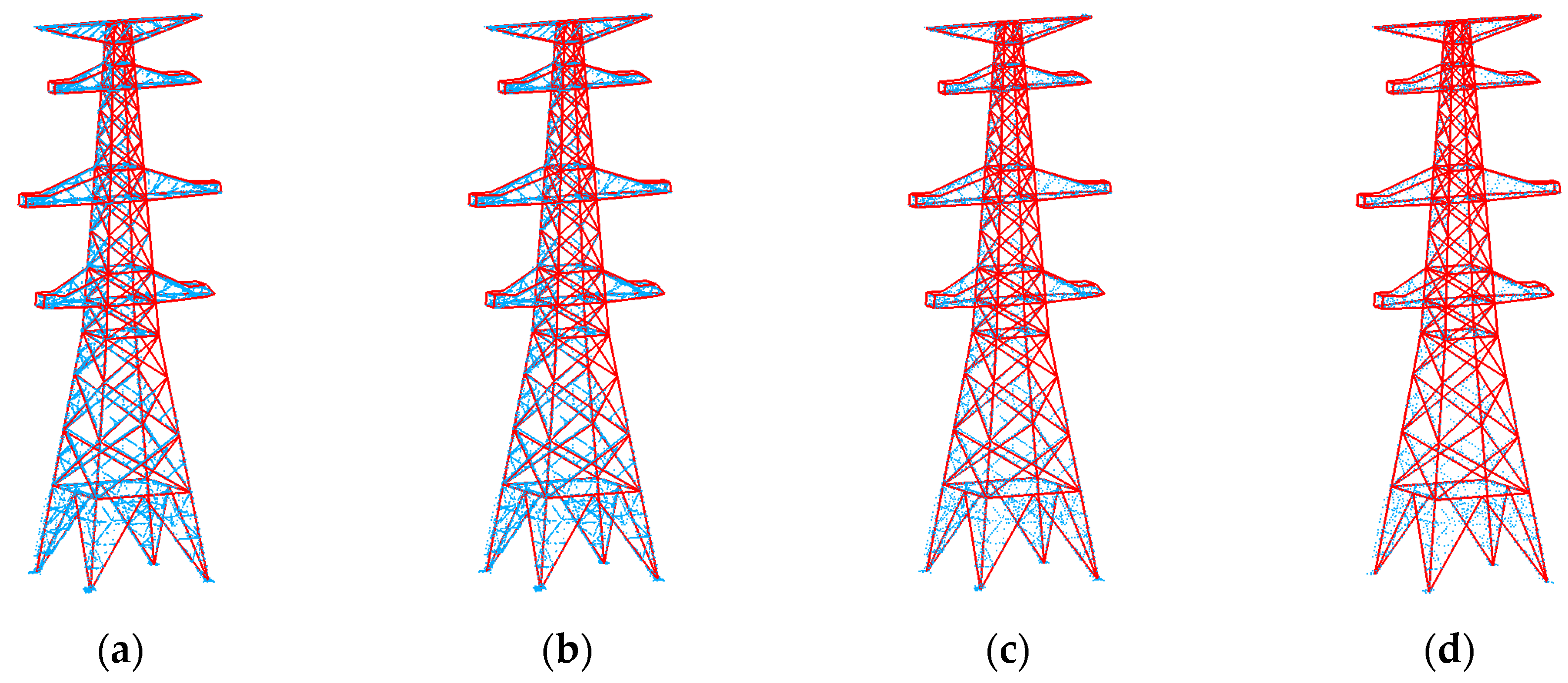

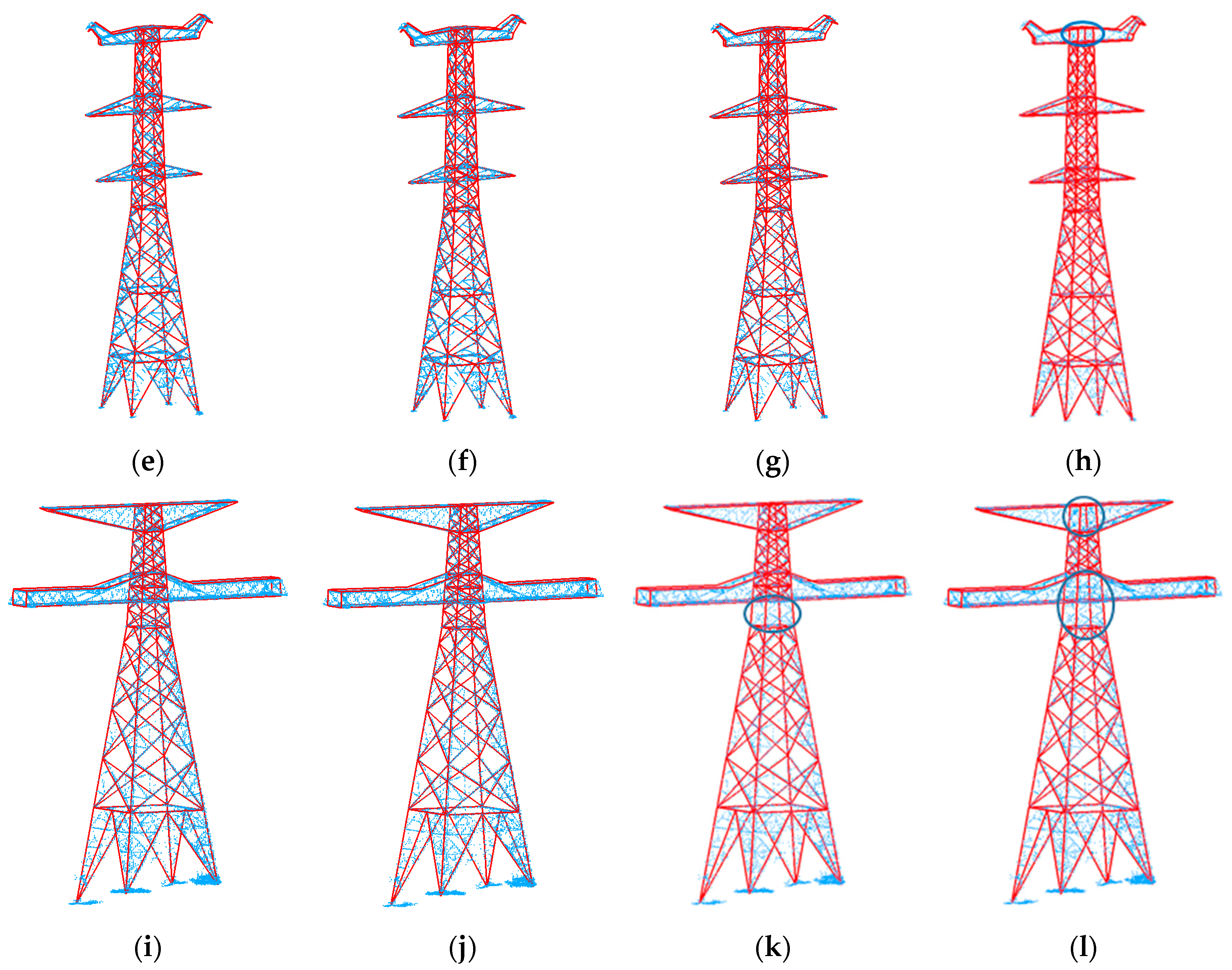

4.3. Accuracy of Pylon Reconstruction

5. Discussion

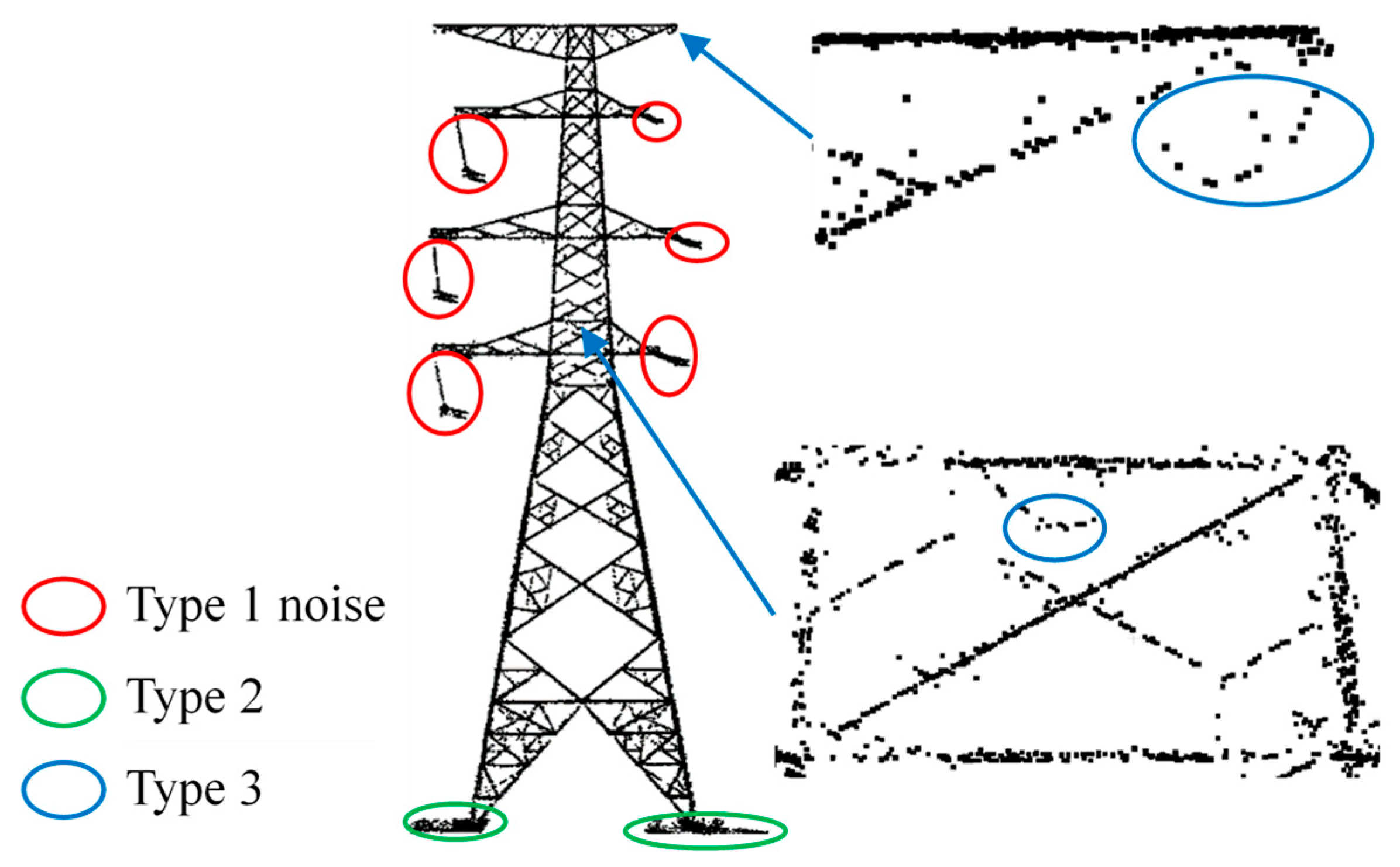

5.1. The Impact of Noise on Pylon Reconstruction

5.2. The Impact of Data Sparsity on Pylon Reconstruction

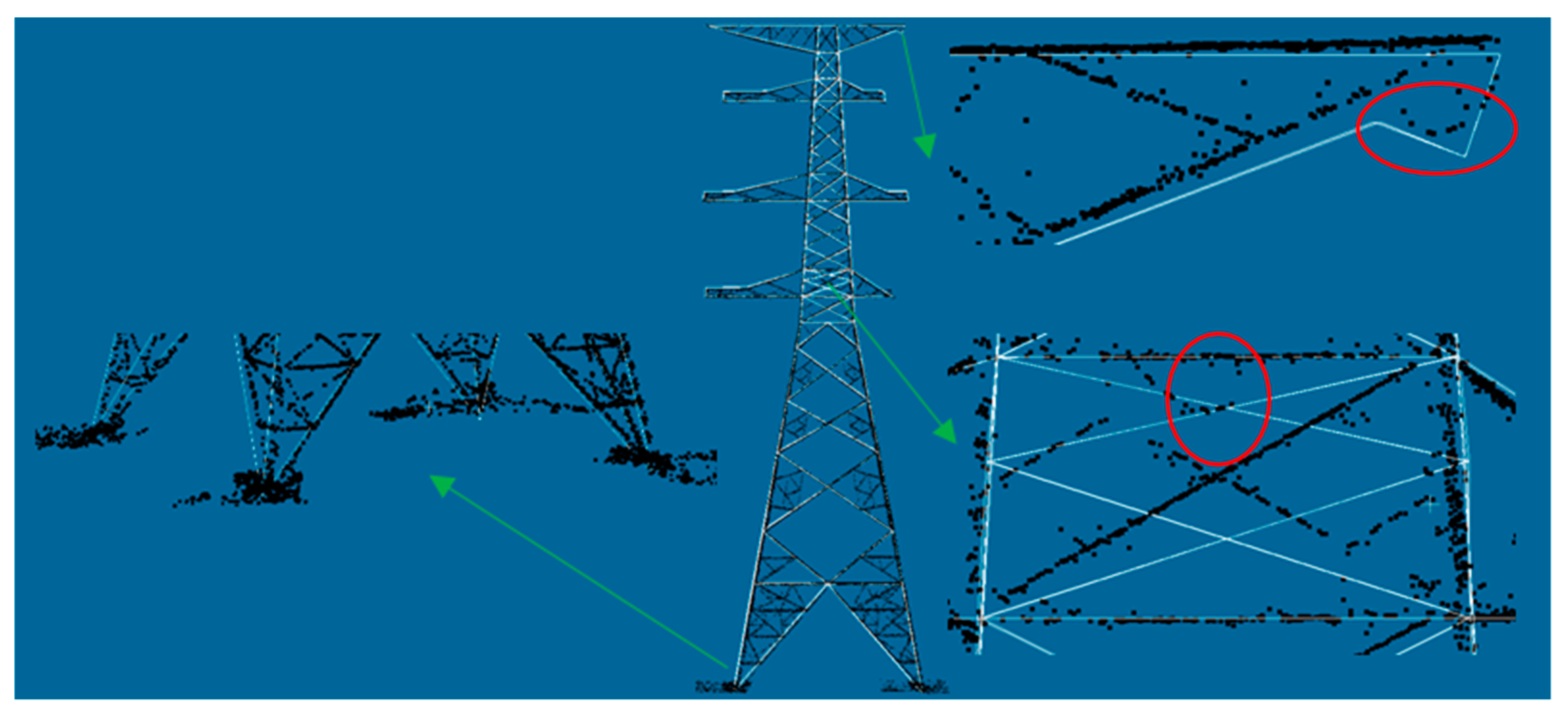

5.3. The Impact of Data Loss on Pylon Reconstruction

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Empirical Value |
|---|---|
| Δh1 | 0.2 m |
| Δh2 | 0.2 m |
| W1 | 2 m |
| Tf | 75% |
| Ce | 0.5 |
| TG | TG = G1 + Ce |

| Parameter | Empirical Value |
|---|---|
| Tf | 75% |
| Tpy | 1.5 |

| Parameter | Empirical Value |
|---|---|
| W2 | 1 m |
| r | L/3 |
| Imax | 15 |
| Tr | 0.4 |

| ALS System | Flying Height | Horizontal Distance | Flying Speed | Field of View | Scanning Speed | Pulse Repetition Rate | Laser Beam Divergence | Angle Measurement Resolution | Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| RIEGL VUX-1 | 40 m above the power line | 30 m from the power line | 30 km/h | 330° | 200 lines/s | 600 kHz | 0.5 mrad | 0.001° | 15 mm |
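As a rough check on what this scanning configuration implies, the nominal along-track and across-track point spacing and the laser footprint can be estimated from the values in the table. The sketch below is an illustration, not part of the described workflow. It assumes one full 360° mirror revolution per scan line, a return from every pulse, a range equal to the 40 m flying height, and the small-angle footprint approximation; the constant and function names are hypothetical.

```python
import math

# Configuration values from the ALS system table (RIEGL VUX-1).
FLYING_SPEED_KMH = 30.0       # flying speed
SCAN_LINES_PER_S = 200.0      # scanning speed, lines per second
PULSE_RATE_HZ = 600_000.0     # 600 kHz
BEAM_DIVERGENCE_RAD = 0.5e-3  # 0.5 mrad
RANGE_M = 40.0                # assumption: range taken as the flying height above the line


def nominal_spacing(speed_kmh, lines_per_s, pulse_rate_hz, range_m, divergence_rad):
    """Return (along_track, across_track, footprint) in metres.

    Idealised model (an assumption): one 360-degree revolution per scan line,
    a return from every pulse, and a target at `range_m` from the scanner.
    """
    speed_ms = speed_kmh / 3.6                      # km/h -> m/s
    along_track = speed_ms / lines_per_s            # distance flown between scan lines
    pulses_per_line = pulse_rate_hz / lines_per_s   # pulses per revolution
    angular_step = 2.0 * math.pi / pulses_per_line  # radians between successive pulses
    across_track = range_m * angular_step           # arc length at the target range
    footprint = range_m * divergence_rad            # small-angle beam diameter
    return along_track, across_track, footprint


along, across, footprint = nominal_spacing(
    FLYING_SPEED_KMH, SCAN_LINES_PER_S, PULSE_RATE_HZ, RANGE_M, BEAM_DIVERGENCE_RAD
)
print(f"along-track {along:.3f} m, across-track {across:.3f} m, footprint {footprint:.3f} m")
# prints: along-track 0.042 m, across-track 0.084 m, footprint 0.020 m
```

The across-track spacing and the footprint scale linearly with range, while the along-track spacing depends only on flying speed and line rate. Actual spacing on pylon members will also vary with incidence angle, strip overlap and missing returns.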

| Pylon Type | Number of Points | Length (m) | Width (m) | Height (m) | Average Point Density (pts/m³) |
|---|---|---|---|---|---|
| a | 32,798 | 23.06 | 15.143 | 60.1 | 55 |
| b | 5933 | 33.079 | 10.745 | 49.35 | 6 |
| c | 16,423 | 22.69 | 13.22 | 65.55 | 18 |
| d | 18,646 | 20.168 | 13.607 | 63.54 | 24 |
| e | 39,640 | 45.337 | 18.995 | 63.54 | 37 |
| f | 13,133 | 29.423 | 13.793 | 67.76 | 11 |
| g | 7129 | 17.39 | 8.656 | 43.57 | 16 |
| h | 6196 | 15.154 | 15.154 | 34.132 | 18 |

| Laptop | CPU | GPU | RAM | Video Memory |
|---|---|---|---|---|
| Lenovo Y700 | Intel Core i7-6700HQ | NVIDIA GeForce GTX 960M | 16 GB | 4 GB |

Columns Δh1 to Tpy are pylon decomposition parameters; columns W2 to the RANSAC distance threshold are pylon reconstruction parameters.

| Pylon Type | Δh1 (m) | Δh2 (m) | W1 (m) | Tf | Ce | Tpy | W2 (m) | r | Imax | Tr | RANSAC Line-Fitting Distance Threshold (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| a | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| b | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| c | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| d | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| e | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| f | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| g | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
| h | 0.2 | 0.2 | 2 | 75% | 0.5 | 1.5 | 1 | L/3 | 15 | 0.4 | 0.2 |
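The last column fixes a 0.2 m distance threshold for linear fitting based on RANSAC. A generic RANSAC fit of a 3D line to the points of a single member under that threshold might look like the sketch below. The function name, iteration count, random seed and the SVD refit on the inliers are assumptions for illustration; the implementation used in this work may differ, for example in its sampling strategy or stopping rule.

```python
import numpy as np


def ransac_line_3d(points, dist_threshold=0.2, n_iter=500, seed=0):
    """Fit a 3D line to an (N, 3) array with RANSAC.

    Returns (centroid, unit_direction, inlier_mask). The 0.2 m default mirrors
    the threshold in the parameter table; n_iter and seed are assumptions.
    """
    pts = np.asarray(points, dtype=float)
    if len(pts) < 2:
        raise ValueError("need at least two points")
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(pts), dtype=bool)
    for _ in range(n_iter):
        # Minimal sample: two distinct points define a candidate line.
        i, j = rng.choice(len(pts), size=2, replace=False)
        direction = pts[j] - pts[i]
        length = np.linalg.norm(direction)
        if length == 0.0:
            continue  # duplicate points define no line
        direction /= length
        # Perpendicular distance from every point to the line through pts[i].
        dist = np.linalg.norm(np.cross(pts - pts[i], direction), axis=1)
        mask = dist <= dist_threshold
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < 2:
        raise ValueError("no line found")
    inliers = pts[best_mask]
    centroid = inliers.mean(axis=0)
    # Refit: the first right-singular vector of the centred inliers is the
    # orthogonal least-squares direction of the line.
    _, _, vt = np.linalg.svd(inliers - centroid)
    return centroid, vt[0], best_mask
```

Refitting on all inliers, instead of keeping the two-point hypothesis, reduces the influence of noise in the sampled pair on the final direction.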

| Pylon Type | a | b | c | d | e | f | g | h |
|---|---|---|---|---|---|---|---|---|
| Δθ (°) | 0.25 | 0.31 | 0.24 | 0.22 | 0.35 | 0.41 | 0.19 | 0.15 |
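The table above reports Δθ, the angular error of pylon redirection. The headings in this section do not specify how the pylon direction is estimated or how Δθ is measured, so the following is only an illustration under two assumptions: the pylon orientation is taken as the major axis of its horizontal (x, y) point distribution, and Δθ is the smallest angle between that axis and a reference axis, treated as undirected (so 179° and 1° differ by 2°). Both function names are hypothetical.

```python
import math


def principal_azimuth_deg(points_xy):
    """Azimuth in [0, 180) degrees of the major axis of a 2D point set."""
    n = len(points_xy)
    mx = sum(p[0] for p in points_xy) / n
    my = sum(p[1] for p in points_xy) / n
    sxx = sum((p[0] - mx) ** 2 for p in points_xy) / n
    syy = sum((p[1] - my) ** 2 for p in points_xy) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points_xy) / n
    # Closed-form orientation of the covariance ellipse: tan(2θ) = 2·sxy / (sxx − syy).
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.degrees(theta) % 180.0


def axial_angle_error_deg(a_deg, b_deg):
    """Smallest difference in [0, 90] degrees between two undirected axes."""
    d = abs(a_deg - b_deg) % 180.0
    return min(d, 180.0 - d)
```

Treating directions as axes rather than vectors avoids reporting a near-180° error when the estimated and reference directions point opposite ways along the same line.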

| Pylon Type | ΔS1 (m) | ΔS2 (m) | ΔS3 (m) | ΔS4 (m) | ΔS5 (m) | ΔS6 (m) | ΔS7 (m) | ΔS8 (m) | ΔS9 (m) | ΔS10 (m) | Average Value (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| a | 0.05 | 0.02 | 0.1 | 0.05 | 0.09 | 0.02 | 0.11 | 0.07 | 0.05 | 0.07 | 0.06 |
| b | 0.03 | 0.08 | 0.02 | | | | | | | | 0.04 |
| c | 0.01 | 0.02 | 0.02 | 0.03 | 0.11 | 0.1 | 0.11 | 0.03 | 0.04 | | 0.05 |
| d | 0.02 | 0.03 | 0.01 | 0.09 | 0.03 | 0.12 | 0.08 | 0.1 | | | 0.06 |
| e | 0.05 | 0.03 | 0.02 | 0.1 | 0.03 | 0.02 | 0.14 | | | | 0.06 |
| f | 0.03 | 0.03 | 0.07 | | | | | | | | 0.05 |
| g | 0.06 | 0.02 | 0.05 | 0.07 | | | | | | | 0.05 |
| h | 0.05 | 0.04 | 0.03 | 0.08 | | | | | | | 0.05 |
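Reading the last value in each row of the ΔS table as that pylon's average, the averages can be checked by recomputing the mean of the per-segment values. That the average is a plain arithmetic mean is an assumption; the dictionary and function names below are hypothetical. Seven of the eight rows reproduce the reported value at 0.01 m precision; for type f the three listed values average 0.04 m against a reported 0.05 m, which may reflect rounding of the underlying values before tabulation.

```python
# Per-segment ΔS values (m) from the table, with the last value of each
# row kept separately as the reported average.
rows = {
    "a": ([0.05, 0.02, 0.1, 0.05, 0.09, 0.02, 0.11, 0.07, 0.05, 0.07], 0.06),
    "b": ([0.03, 0.08, 0.02], 0.04),
    "c": ([0.01, 0.02, 0.02, 0.03, 0.11, 0.1, 0.11, 0.03, 0.04], 0.05),
    "d": ([0.02, 0.03, 0.01, 0.09, 0.03, 0.12, 0.08, 0.1], 0.06),
    "e": ([0.05, 0.03, 0.02, 0.1, 0.03, 0.02, 0.14], 0.06),
    "f": ([0.03, 0.03, 0.07], 0.05),
    "g": ([0.06, 0.02, 0.05, 0.07], 0.05),
    "h": ([0.05, 0.04, 0.03, 0.08], 0.05),
}


def check_averages(table):
    """Map pylon type -> (recomputed mean rounded to 0.01 m, reported average)."""
    return {k: (round(sum(v) / len(v), 2), avg) for k, (v, avg) in table.items()}


for pylon, (recomputed, reported) in check_averages(rows).items():
    flag = "matches" if recomputed == reported else "differs"
    print(f"{pylon}: recomputed {recomputed:.2f} m, reported {reported:.2f} m ({flag})")
```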

| Pylon Type | A1 Average (m) | A1 Maximum (m) | A2 Average (m) | A2 Maximum (m) | A3 Average (m) | A3 Maximum (m) | A4 Average (m) | A4 Maximum (m) |
|---|---|---|---|---|---|---|---|---|
| a | 0.21 | 0.35 | 0.12 | 0.27 | 0.08 | 0.39 | 0.15 | 0.33 |
| b | 0.15 | 0.26 | 0.03 | 0.05 | 0.04 | 0.05 | 0.45 | 0.81 |
| c | 0.45 | 0.79 | 0.05 | 0.07 | 0.03 | 0.06 | 0.29 | 0.75 |
| d | 0.33 | 0.64 | 0.08 | 0.12 | 0.04 | 0.07 | 0.43 | 1.31 |
| e | 0.24 | 0.29 | 0.07 | 0.13 | 0.03 | 0.07 | 0.25 | 0.68 |
| f | 0.17 | 0.23 | 0.05 | 0.08 | 0.03 | 0.06 | 0.19 | 0.72 |
| g | 0.13 | 0.18 | 0.07 | 0.11 | 0.04 | 0.07 | 0.33 | 0.67 |
| h | 0.16 | 0.21 | 0.04 | 0.09 | 0.03 | 0.09 | 0.51 | 0.82 |
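The average and maximum errors for A1 to A4 summarise distances between reconstructed and reference geometry. How points are paired is not stated in these sections; assuming each reconstructed point (for example, a corner point) is matched one-to-one with a reference point, both statistics reduce to the mean and maximum of the Euclidean distances, as in this sketch with a hypothetical function name.

```python
import math


def point_errors(reconstructed, reference):
    """Mean and maximum Euclidean distance (m) between matched 3D points.

    Assumes reconstructed[k] corresponds to reference[k] (one-to-one matching).
    """
    if len(reconstructed) != len(reference):
        raise ValueError("point sets must be matched one-to-one")
    if not reconstructed:
        raise ValueError("no points to compare")
    dists = [math.dist(p, q) for p, q in zip(reconstructed, reference)]
    return sum(dists) / len(dists), max(dists)


mean_err, max_err = point_errors(
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],   # reconstructed corners (illustrative)
    [(0.0, 0.0, 0.3), (1.0, 0.4, 0.0)],   # reference corners (illustrative)
)
```

If the pairing were instead nearest-neighbour from reference points to the reconstructed model, the same two statistics would apply, but the distances would be computed against model surfaces or edges rather than matched points.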

| Pylon Type | a | b | c | d | e | f | g | h |
|---|---|---|---|---|---|---|---|---|
| Time Consumption (s) | 1.9 | 0.2 | 0.7 | 0.8 | 1.5 | 0.3 | 0.4 | 0.3 |

| Pylon Type | Points in Original Cloud | Points at 0.1 m Sampling Distance | Points at 0.2 m Sampling Distance | Points at 0.3 m Sampling Distance |
|---|---|---|---|---|
| a | 32,798 | 15,391 | 7353 | 4358 |
| c | 16,423 | 11,417 | 5871 | 3598 |
| e | 39,640 | 17,568 | 10,768 | 7105 |
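The table above lists point counts after thinning clouds a, c and e at sampling distances of 0.1, 0.2 and 0.3 m. The thinning procedure is not described in these sections; one common choice, shown here purely as an assumption, is a voxel grid whose cell edge equals the sampling distance, with each occupied cell replaced by the centroid of its points. The function name is hypothetical, and other schemes (random or minimum-distance thinning) would give different counts, so this sketch is not expected to reproduce the tabulated numbers.

```python
import math
from collections import defaultdict


def voxel_downsample(points, sampling_distance):
    """Replace all points in each cubic voxel of edge `sampling_distance` by their centroid."""
    # Anchor the grid at the minimum corner of the cloud.
    origin = [min(p[i] for p in points) for i in range(3)]
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor((p[i] - origin[i]) / sampling_distance) for i in range(3))
        cells[key].append(p)
    # One output point per occupied voxel, in first-seen order.
    return [
        tuple(sum(q[i] for q in members) / len(members) for i in range(3))
        for members in cells.values()
    ]
```

Using the centroid rather than an arbitrary member point keeps the thinned cloud centred on the original geometry, at the cost of producing points that were not actually measured.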

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, S.; Wang, C.; Dai, H.; Zhang, H.; Pan, F.; Xi, X.; Yan, Y.; Wang, P.; Yang, X.; Zhu, X.; Aben, A. Power Pylon Reconstruction Based on Abstract Template Structures Using Airborne LiDAR Data. Remote Sens. 2019, 11, 1579. https://doi.org/10.3390/rs11131579