1. Introduction

Oil spills are one of the main causes of marine pollution. In the past, major ecological disasters have occurred on the coasts and oceans around the world. Moreover, a large number of ships illegally clean their tanks at sea, worsening this problem. Early detection of oil slicks is very important to limit pollution and mitigate the environmental damage caused by accidents and illegal discharges.

Nowadays, research focuses on the detection of spills and of the boats that cause them, as shown in recent works such as [1,2]. Remote sensing technologies have proven effective for oil spill monitoring and detection [3], reducing the emergency response time of authorities and governments. The European Maritime Safety Agency (EMSA) runs an observation service called CleanSeaNet, which uses satellite-based observation (e.g., ENVISAT, RADARSAT, SENTINEL) for oil spill monitoring and vessel detection. The Spanish Maritime Safety Agency (SASEMAR) also operates three EADS-CASA CN 235-300 aircraft to locate shipwrecks and vessels at sea, detect discharges into the marine environment, and identify the infringing ships. These airplanes are equipped with a Millimetre-Wave Radar (MWR) on each wing and can carry out maritime patrol missions with a maximum range exceeding 3706 km and an endurance of more than 9 flight hours. The detection of possible targets is done manually: the SLAR signal is digitized as an image and analyzed by expert operators. To confirm the detections, marine samples are also taken when necessary.

A variety of sensors are used for oil spill detection [4,5]. Among them, the best solutions for wide-area surveillance, by day or night and in rainy or cloudy weather, are Synthetic Aperture Radar (SAR) and Side-Looking Airborne Radar (SLAR), as shown in [6,7]. The main difference between them is that SAR is generally installed on satellites, whereas SLAR is usually mounted on airplanes, with two SAR antennas assembled under the wings. As a consequence, SLAR images, in contrast with SAR images, contain artifacts caused by small alignment differences between the two antennas or by loss of the return (reflected) signal, which makes the representation of ground scenes more complex. Moreover, the size of these artifacts is variable and depends on the flight altitude and the ascent/descent speed. However, SLAR has the advantage of being able to monitor an area with greater precision and at any time (without having to wait for a satellite pass).

The basic principle of remote sensing with SAR and SLAR sensors is the emission of a microwave beam, the received signal being the portion of that beam backscattered by the surface features. From this response, the sensor builds a two-dimensional image in which the brightness is a function of the properties of the target surface. This brightness is related to the normalized radar cross section (NRCS), which represents the power of the backscattered radar signal. Oil spills can be detected in a SAR or SLAR image because the oil film decreases the backscattering of the sea surface, producing a dark formation that contrasts with the brightness of the surrounding spill-free sea [8]. In addition, oil slick features such as thickness, shape, or size, which depend mainly on the weather, sea, and wind conditions and on the time elapsed since the spill, also determine the appearance of the dark spot in the image.
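The dark-spot principle can be illustrated with a minimal sketch. It is not the method proposed in this paper: it simply flags pixels whose backscatter falls well below the mean of their neighborhood, and the window size and contrast ratio are hypothetical values chosen for the example. Such a rule cannot, by itself, tell oil apart from the lookalikes discussed next.

```python
import numpy as np

def dark_spot_mask(image, window=5, ratio=0.5):
    """Flag pixels that are much darker than their local neighbourhood.

    image  : 2-D array of backscatter intensities (brighter = rougher sea).
    window : odd side length of the square neighbourhood (hypothetical).
    ratio  : a pixel is 'dark' if it is below ratio * local mean (hypothetical).
    """
    pad = window // 2
    padded = np.pad(image.astype(float), pad, mode="edge")
    local_mean = np.zeros(image.shape, dtype=float)
    rows, cols = image.shape
    for r in range(rows):
        for c in range(cols):
            # Mean of the window centred on (r, c) in the original image.
            local_mean[r, c] = padded[r:r + window, c:c + window].mean()
    return image < ratio * local_mean

# Toy scene: uniform bright sea with one low-backscatter pixel.
sea = np.full((9, 9), 200.0)
sea[4, 4] = 20.0
mask = dark_spot_mask(sea)
```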

However, dark areas (areas of reduced NRCS) do not always originate from oil spills: they can also be caused by other ocean phenomena, called “lookalikes”, such as very calm sea areas, currents, eddies, or weather conditions such as low wind, and they may also have a biological origin, such as shoals of fish, seaweed, or plankton. For this reason, it is very difficult to differentiate between mineral oil spills and effects caused by biogenic surface films.

Image processing techniques are commonly used for the extraction of textural, geometric, and physical features, along with segmentation methods, in order to identify the regions of oil slicks within an image. Supervised machine learning classifiers can then be applied to discriminate between oil slicks and lookalikes, although they can produce false positives because lookalikes are similar in appearance to the regions that represent spills.

The statistical distributions of dark spots and background can also be modeled in order to differentiate between oil slicks and sea, as in [9], by applying a Generalized Likelihood Ratio Test (GLRT) [10], by using spatial density features [11], or by using strategies based on energy minimization such as Region Scalable Fitting (RSF) methods [12], the Global Minimization Active Contour Model (GMACM) [13], or the Globally Statistical Active Contour Model (GSACM) [14], among others.

However, these approaches are very limited when there are environmental changes and when SLAR images contain artifacts, such as those caused by aircraft maneuvers, which do not follow any statistical distribution and can therefore be confused with oil slicks or other artifacts. To avoid this problem, Gil and Alacid [7] presented a method to identify oil slicks in SLAR imagery that uses an image processing technique to eliminate the artifact regions caused by maneuvers. Other authors applied a segmentation process guided by a saliency map to identify oil spills. For example, Li et al. [15] proposed a simplified graph-based visual saliency model to extract bottom-up saliency. This method is able to detect oil slicks and to exclude other salient regions caused by other targets, such as artifacts.

In general, the previously mentioned methods use image processing techniques to segment candidate regions representing oil spills and/or to extract features, which are then fed into machine learning classifiers to detect oil slicks. Some of these methods (such as [16,17]) use the geometry of the image or of the elements to be classified as features for oil spill detection. However, they are very dependent on the dataset used to select the most relevant features: they fail as soon as the characteristics of the image change and therefore lack generalization capability.

Unlike with SAR, in SLAR it is not convenient to define descriptors using characteristics extracted from the whole image, as is done in well-known state-of-the-art methods. SLAR data are obtained as a set of scanlines, each of which represents an observation at a given time. It is therefore very difficult to extract spatial characteristics from a representative neighborhood that would allow robust descriptors to be designed for each target class.

Deep Learning, and in particular Convolutional Neural Networks (CNN), have recently been used to perform classification without applying any hand-crafted feature extraction or pre-processing techniques. They also obtain reliable results in image segmentation and recognition tasks, in some cases with a performance close to (or even better than) human level when working with signals such as images, video, or audio [18]. Deep learning techniques are being applied to overcome the limitations of traditional machine learning methods, which require extracting hand-crafted features from the input data.

Each kernel of the convolutional layers of a CNN is a learned numerical matrix that transforms the input it receives. Each kernel therefore learns to transform its input in a different way, highlighting different elements that are relevant for the detection of the target class. Low-level features are learned in the first layers, where small kernels are applied over the entire image. These results are combined and passed on layer after layer up to the last layers of the network, which extract the highest-level features.
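As a toy illustration of how one kernel transforms its input, the sketch below slides a hand-set 1-D kernel along a scanline that contains a dark region. The kernel [−1, 0, 1] is a hypothetical choice (in a CNN these weights are learned); its response is negative at the bright-to-dark edge, positive at the dark-to-bright edge, and zero over uniform areas.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Cross-correlation (what CNN 'convolution' layers compute), no padding."""
    k = len(kernel)
    return np.array([np.dot(signal[i:i + k], kernel)
                     for i in range(len(signal) - k + 1)])

# Bright sea (9) with a dark patch (2) in the middle of the scanline.
scanline = np.array([9, 9, 9, 2, 2, 2, 9, 9, 9], dtype=float)
edge_kernel = np.array([-1.0, 0.0, 1.0])   # hypothetical, normally learned
response = conv1d_valid(scanline, edge_kernel)
print(response.tolist())  # [0.0, -7.0, -7.0, 0.0, 7.0, 7.0, 0.0]
```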

Among deep learning approaches, previous research has used a CNN for the oil spill detection task, as in [19], or pixel-level segmentation techniques [20] to identify dark spots representing oil spills, as in [21,22]. The work in [23] successfully combined ResNet [24] and GoogLeNet [25] with Fully Convolutional Networks (FCN) [26] for this task.

Segmentation networks can be applied to SAR or SLAR images for the detection of spills or other classes, as in [27], where a Selectional Auto-Encoder (SAE) network was proposed. This architecture modifies the topology of an FCN to specialize it in the segmentation of one class (oil spills in this case) and to return a probability distribution to which a threshold is applied to select the pixels to segment.

Most existing methods for oil spill detection need to process the full image; therefore, their use in environments that require a quick response may not be feasible. In contrast with [27], which applies an SAE to the entire image of the flight sequence, in this work we present a new approach to segment SLAR images in real time. The proposed method combines Convolutional Long Short-Term Memory (ConvLSTM) networks [28] with a variation of the SAE topology in order to enable faster processing. The main reason for adding recurrent neurons (ConvLSTM) is to perform the segmentation using only the current reading of the sensor along with a small number of previous readings. In this way, it is possible to return a response during the flight, without having to wait for more readings to complete an image or to obtain a larger context of the area to be classified, which would delay the response. In addition, the proposed process simultaneously segments other elements of the image, such as ships, coast, or the artifacts generated by the aircraft sensors, and combines this information in a final classifier. The results obtained for these additional classes are used to improve the segmentation of oil spills and ships. In this way, the final classifier can make a high-level decision by combining the results of the specialized classifiers and thus discard, for example, the segmentation of a ship or an oil spill when it is surrounded by coast or noise.

In summary, the main contributions of this paper are:

A method designed to work in real time (during the flight), in contrast to previous techniques such as [27], which can only be used offline (once all the scanlines are available). For this, an SAE topology was modified to work directly with SLAR scanlines, and recurrent neurons were added to take advantage of the information in the previous readings.

The proposed method uses a parallel set of specialized supervised classifiers, one for each class, and finally combines their outputs to provide an answer. Combining the classifiers' decisions consistently improves the results, as demonstrated with statistical tests in Section 3.

The model was evaluated using 51 different flight sequences with a total of 5.4 flight hours, including a wide range of examples of the different elements to be classified as well as different meteorological and flight conditions (altitude, flight speed, wind speed, etc.).

The proposed approach is compared with other state-of-the-art methods, reporting better results in both detection and segmentation tasks at the pixel level, as well as shorter processing times.

The rest of the paper is structured as follows. Section 2 describes the proposed method and the data used for evaluation, Section 3 shows the results obtained with the proposed method, Section 4 discusses the results and how they can be interpreted in the perspective of previous studies, and, finally, conclusions and future work are given in Section 5.

2. Materials and Methods

This section first describes the materials used for the experiments. Then, we introduce the proposed method, which uses a combination of ConvLSTM networks with SAE to process the SLAR signals during flight.

2.1. Materials

For the experiments with the proposed method, we used a dataset composed of 51 flight sequences supplied by SASEMAR. SASEMAR is the public authority responsible for monitoring the Exclusive Economic Zones (EEZ) of Spain, and its procedures are based on EMSA reports.

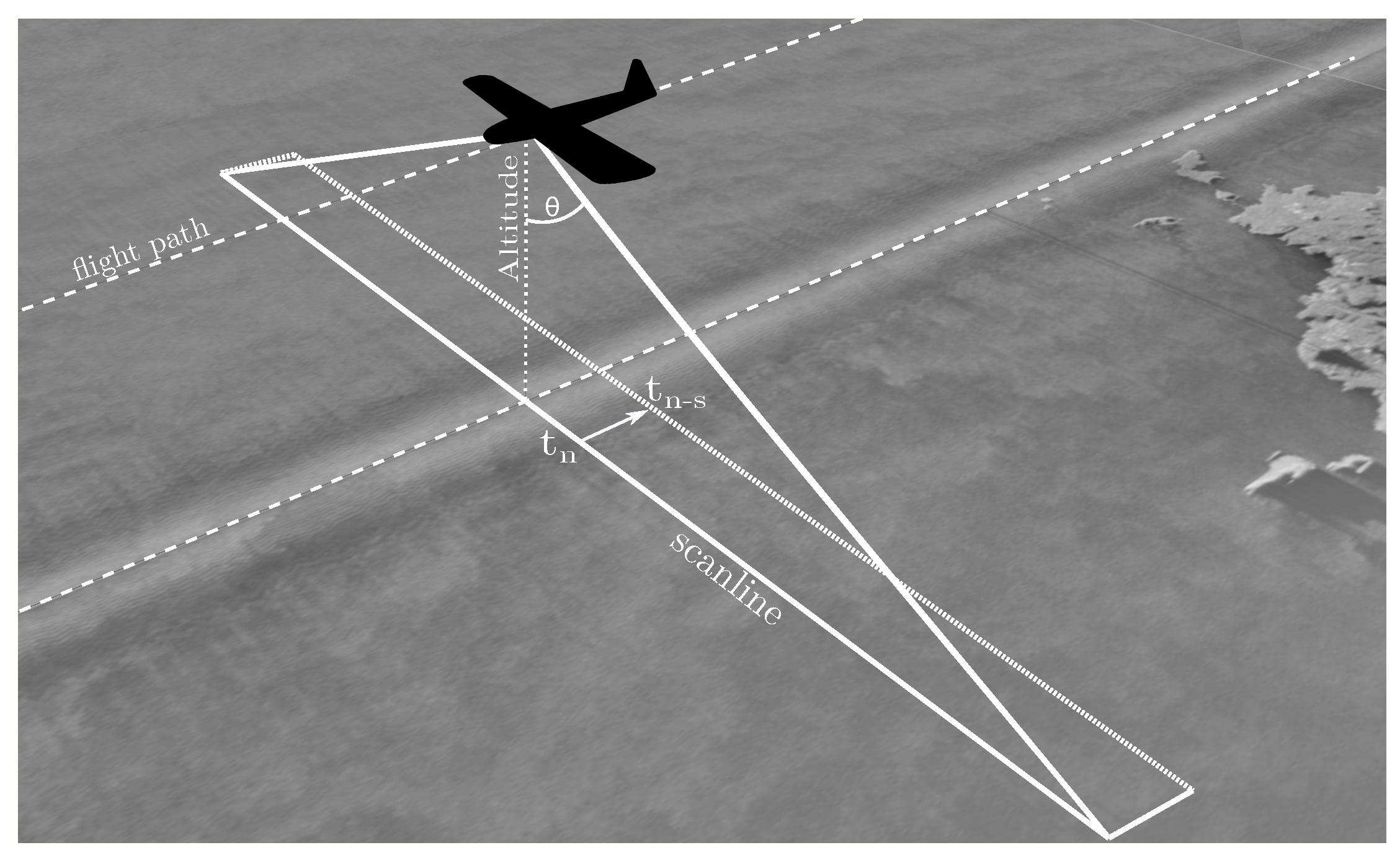

Figure 1 shows the scheme of the EADS-CASA CN 235-300 aircraft used, with its data acquisition system. This aircraft has two TERMA SLAR-9000 antennas, one under each wing, pointing perpendicular to the flight direction. At the average flight altitude of our dataset, they cover around 23 km on each side of the aircraft. Each antenna scans the surface at a given angle (the so-called off-nadir angle), returning two signals that are combined into a single scanline. This scanline is the input to the proposed approach at each time $t$.

The flight sequences of the dataset have an average duration of 6.1 min ( min), making a total of 5.4 h of flight and 24,582 scanlines. They were captured at an approximate altitude of 3100 feet ( ft), with a flight speed of about 183 Kn ( Kn), and with a wind speed between 0 Kn and 32 Kn.

For each flight sequence, the raw data of the SLAR sensor were stored. These data were subsequently digitized as 8-bit integers (grayscale images) with an image resolution of 1157 × 482 pixels (width × height) due to the constraints of the monitoring equipment installed on the aircraft. A scanline, as used in the rest of the paper, is one row of this image, so each flight sequence consists of 482 scanlines. The ground area covered by each scanline depends on the altitude and on the flight speed; at the average values, this means approximately 47.3 × 69.6 m per pixel. Therefore, at the average altitude, a complete scanline (1157 pixels wide and 1 pixel high) covers a ground area of about 54.8 km wide and 69.6 m high (27.4 km per antenna).
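The dataset figures above can be cross-checked with simple arithmetic. The sketch below uses only values stated in the text; the swath obtained from the rounded 47.3 m pixel width is 54.7 km, so the 54.8 km quoted above presumably comes from the unrounded pixel width.

```python
# Figures taken from the text (average flight conditions).
N_SEQUENCES = 51           # flight sequences in the dataset
SCANLINES_PER_SEQ = 482    # rows of each digitized SLAR image
PIXELS_PER_SCANLINE = 1157 # columns of each digitized SLAR image
PIXEL_WIDTH_M = 47.3       # across-track ground size of one pixel

total_scanlines = N_SEQUENCES * SCANLINES_PER_SEQ
swath_km = PIXELS_PER_SCANLINE * PIXEL_WIDTH_M / 1000  # both antennas
per_antenna_km = swath_km / 2

print(total_scanlines)           # 24582
print(round(swath_km, 1))        # 54.7
print(round(per_antenna_km, 1))  # 27.4
```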

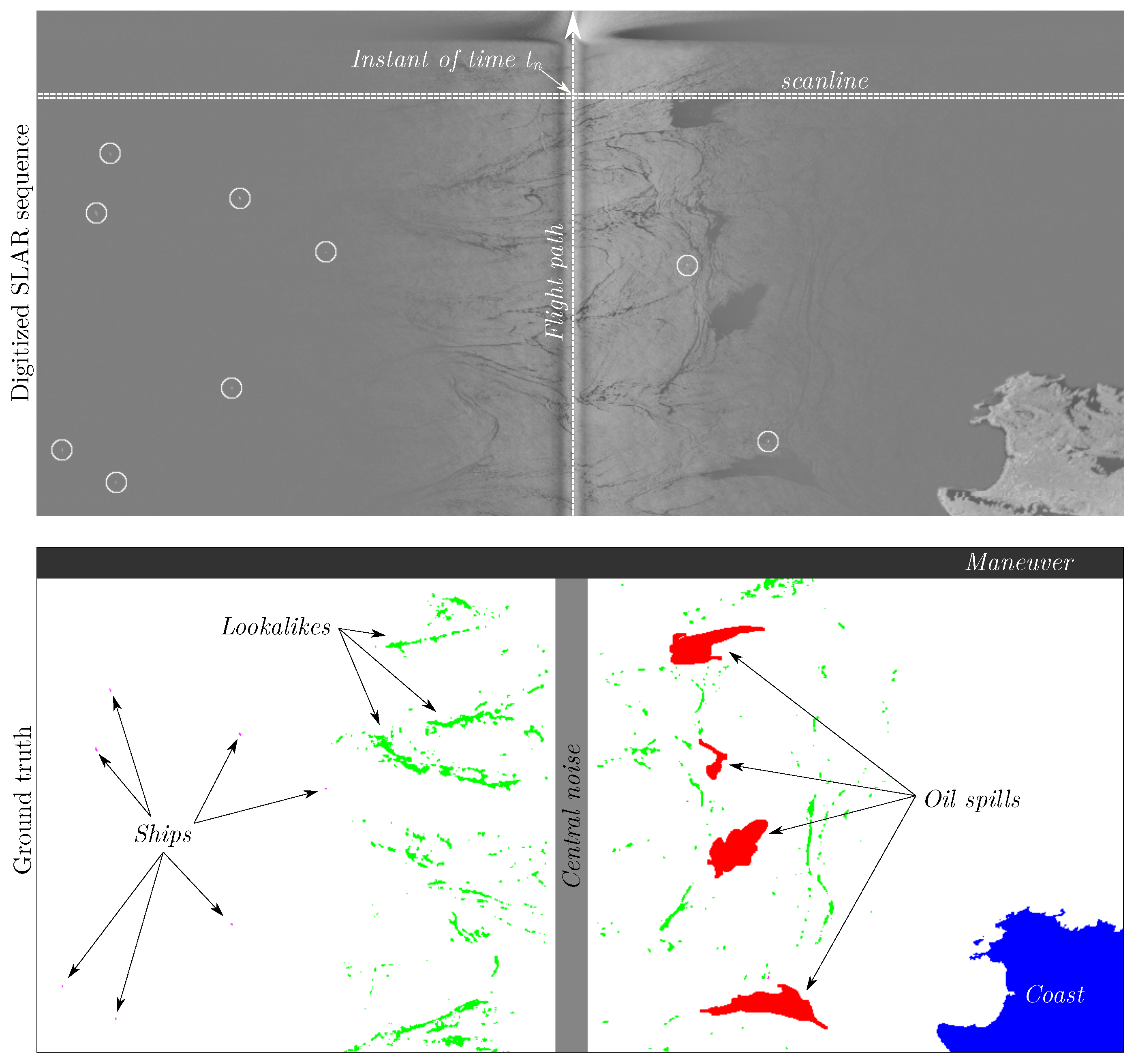

The scanlines of the 51 flight sequences were concatenated, giving the 24,582 scanlines mentioned above. As ground truth, we used a grayscale mask for each SLAR image that delimits the pixels of the target classes (ship, oil spill, lookalike, coast, central noise, lateral turns, and water), each with a different gray value. Note that this labeling was performed at the pixel level, since we want to evaluate both the detection and the precise location of the instances of each class in the digitized SLAR images.

Figure 2 shows an example of a SLAR sequence (top) and its corresponding ground truth (bottom), with the seven classes labeled using different colors. This sample contains several instances of boats, coast, and lookalikes, as well as the two types of noise generated by the sensor: the central noise, generated by the union of the radar signals, and the noise caused by the aircraft turning maneuvers. The central noise appears in the center of the image in light gray (coinciding with the trajectory of the plane), and the maneuver noise appears in the top area of the image in dark gray. The instances of ships are marked with a circle in the left image. This image also contains some examples of lookalikes (in green) around the central noise, with elongated shapes very similar to those of actual spills.

At first glance, the lookalike class is very difficult to differentiate from an actual oil spill, because the two can have very similar shapes and intensity values. These classes were labeled by an expert flight operator (from SASEMAR) who was able to analyze the scene recorded in each sequence. Given the difficulty of distinguishing oil spills from lookalikes, in case of doubt it is recommended (for operational reasons) to notify an operator who can review the data.

Table 1 shows a summary with statistical information about the dataset, including the number of instances of each class, the percentage of pixels that each class occupies in total, the mean size of the samples considering the bounding box that contains them, and the number of scanlines with information of each class. As can be seen, the dataset has a significant number of samples of the main classes to be detected (ship and oil spills), whose sizes are also small with respect to the total size. The lookalike and coast classes also have many samples, although in this case they usually correspond to fragmented pieces of the same spot or small portions of land labeled separately due to the noise caused by the airplane maneuvers.

Based on these data, it may seem that certain classes are easy to differentiate simply by their size but, as can be seen, the standard deviation values are very high, so area alone does not allow us to differentiate an oil spill from a lookalike, or even from a ship. The shapes of the different classes are also similar: for instance, oil spills and lookalikes sometimes have similar shapes, as do ships and small islets. Therefore, it is not reliable to differentiate between classes only according to their size or shape.

To train and evaluate the proposed method, we used sequences of SLAR scanlines of length $s$; hence, we obtain a dataset containing a total of $24{,}582 - 51(s - 1)$ sequences (the algorithm cannot process the first $s - 1$ scanlines of each flight, since it does not yet have a complete sequence; that is, a minimum of $s$ lines of context is needed to return a response). For example, if the sequence length is 20 scanlines, we obtain $24{,}582 - 51 \times 19 = 23{,}613$ sequences for which a prediction can be calculated.
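The sequence construction can be sketched as a sliding window applied separately to each flight, so that no window spans two flights. This is a minimal illustration assuming each flight is stored as a (scanlines × width) array; the function name `make_sequences` is hypothetical. The last lines reproduce the count given above for $s = 20$.

```python
import numpy as np

def make_sequences(flight_scanlines, s):
    """Stack sliding windows of s consecutive scanlines for one flight.

    flight_scanlines: array of shape (n_lines, width).
    Returns an array of shape (n_lines - s + 1, s, width). Window i ends at
    scanline i + s - 1, the 'current' reading for which a prediction is made.
    """
    n = flight_scanlines.shape[0]
    return np.stack([flight_scanlines[i:i + s] for i in range(n - s + 1)])

# Count check with the figures from the text: 51 flights of 482 scanlines.
s = 20
n_sequences = 51 * (482 - s + 1)
print(n_sequences)  # 23613
```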

2.2. Method

Using the SLAR sensor signals, our method aims to detect the presence of different targets: ships, oil spills, lookalikes, coast, central noise, aircraft maneuvers (which cause image artifacts), and water (or background). Therefore, the proposed method receives as input a scanline digitized from the sensor signal and returns as output the pixel-level segmentation with the labels of the considered classes.

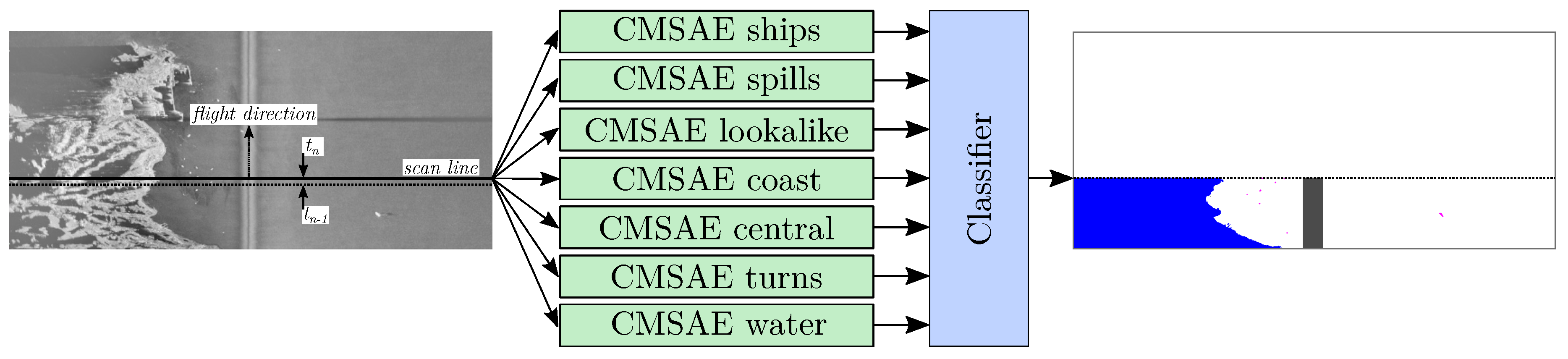

To perform this segmentation, we use an ensemble of ConvLSTM Selectional AutoEncoders (CMSAE), which are SAE networks with Convolutional LSTM layers, as detailed in Section 2.2.1. This ensemble follows a one-against-all strategy: for each class, a CMSAE is trained to specialize in the segmentation of that class (positive class), considering all the other classes (including water) as background (negative class). All the CMSAE results are then combined by another classifier to obtain the final segmentation.
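The one-against-all targets can be derived from the seven-class ground truth as sketched below. This assumes that the gray values of the masks have already been mapped to integer indices in the order listed (a hypothetical convention); each CMSAE would then be trained with one of the resulting binary masks.

```python
import numpy as np

# Class order is an assumption made for this example.
CLASSES = ["ship", "oil spill", "lookalike", "coast",
           "central noise", "lateral turns", "water"]

def one_vs_all_targets(label_map):
    """Return one binary target mask per class from an integer label map.

    label_map: array of class indices (0..6) following CLASSES.
    In each mask, the positive class is 1 and every other class
    (water included) is 0, i.e. the negative/background class.
    """
    return {name: (label_map == idx).astype(np.float32)
            for idx, name in enumerate(CLASSES)}

labels = np.array([[6, 6, 1, 1, 0, 6]])   # water, water, spill, spill, ship, water
masks = one_vs_all_targets(labels)
print(masks["oil spill"])  # [[0. 0. 1. 1. 0. 0.]]
```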

Figure 3 shows the proposed architecture. As can be seen, all CMSAE networks can be run in parallel; therefore, given sufficient parallel computing resources, the inference time is equal to that of a single network.

We evaluated different topologies and parameter configurations for the CMSAE networks as well as different machine learning methods for the final classifier. The following sections describe in detail the architecture of the networks and the classifiers evaluated.

2.2.1. CMSAE

Autoencoders were proposed decades ago by Hinton and Zemel [29], and they have been actively researched since then [30]. They consist of feed-forward neural networks trained to reconstruct their input and are usually divided into two stages. The first part (called the encoder) receives the input and creates a latent representation of it, and the second part (the decoder) takes this intermediate representation and tries to reconstruct the input. Formally, given an input $x$, the network must minimize the loss $\mathcal{L}(x, g(f(x)))$, where $f$ and $g$ represent the encoder and decoder functions, respectively.

Several variations of autoencoders have been proposed in the literature to solve other kinds of problems. For example, denoising autoencoders are an extension trained to reconstruct the input $x$ from a corrupted version of it (usually generated using Gaussian noise), denoted as $\tilde{x}$. These networks are thus trained to minimize the loss $\mathcal{L}(x, g(f(\tilde{x})))$; therefore, they focus not only on copying the input but also on removing the noise [31,32,33].

For the detection of each of the classes from the SLAR sensor data, we based our approach on the SAE architecture introduced in Gallego et al. [27]. This type of architecture is not intended to learn the identity function, as autoencoders do, nor to remove an underlying corruption, as denoising autoencoders do. Instead, it learns a codification that keeps only those input pixels that we select as relevant (i.e., the class to be segmented). This goal is achieved by modifying the training function so that the input $x$ is mapped to a ground-truth classification image. For this, we use the ground truth $y$ of the pixels of the input image that we want to select. The network is therefore trained to minimize the loss $\mathcal{L}(y, g(f(x)))$, learning a mapping $g \circ f : \mathbb{R}^{w \times h} \rightarrow [0,1]^{w \times h}$, or, in other words, a probability map over a $w \times h$ image that preserves the input shape and outputs, in the range $[0,1]$, the likelihood that each pixel of the input image belongs to the target class.
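A minimal sketch of the selectional objective follows, assuming the pixel-wise cross-entropy loss described in Section 2.3 and a hypothetical selection threshold of 0.5 (the threshold value is not given in this section). A prediction close to the ground-truth mask obtains a lower loss, and thresholding the probability map yields the selected pixels.

```python
import numpy as np

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean pixel-wise cross-entropy between a 0/1 mask and a probability map."""
    p = np.clip(y_prob, eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

def select_pixels(y_prob, threshold=0.5):
    """Keep the pixels whose selectional level reaches the threshold."""
    return (y_prob >= threshold).astype(np.uint8)

y = np.array([[0, 1, 1, 0]], dtype=float)   # ground truth for one scanline
good = np.array([[0.1, 0.9, 0.8, 0.2]])     # output close to y
bad = np.array([[0.9, 0.1, 0.2, 0.8]])      # output far from y
print(binary_cross_entropy(y, good) < binary_cross_entropy(y, bad))  # True
print(select_pixels(good))  # [[0 1 1 0]]
```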

We modified this topology to use only one sensor scanline, so in this case $w$ is the width of the image and $h$ is set to 1. We also added a first layer with recurrent neurons to take advantage of the information from the previous $s - 1$ sensor readings, where $s$ is the length of the input sequence (various values of $s$ were evaluated). Recurrent neurons are a special type of artificial neuron that uses an internal state to process sequences of inputs. These neurons process an input sequence one element at a time, maintaining a state (or memory) that implicitly contains information about the history of all the past elements of the sequence [18].

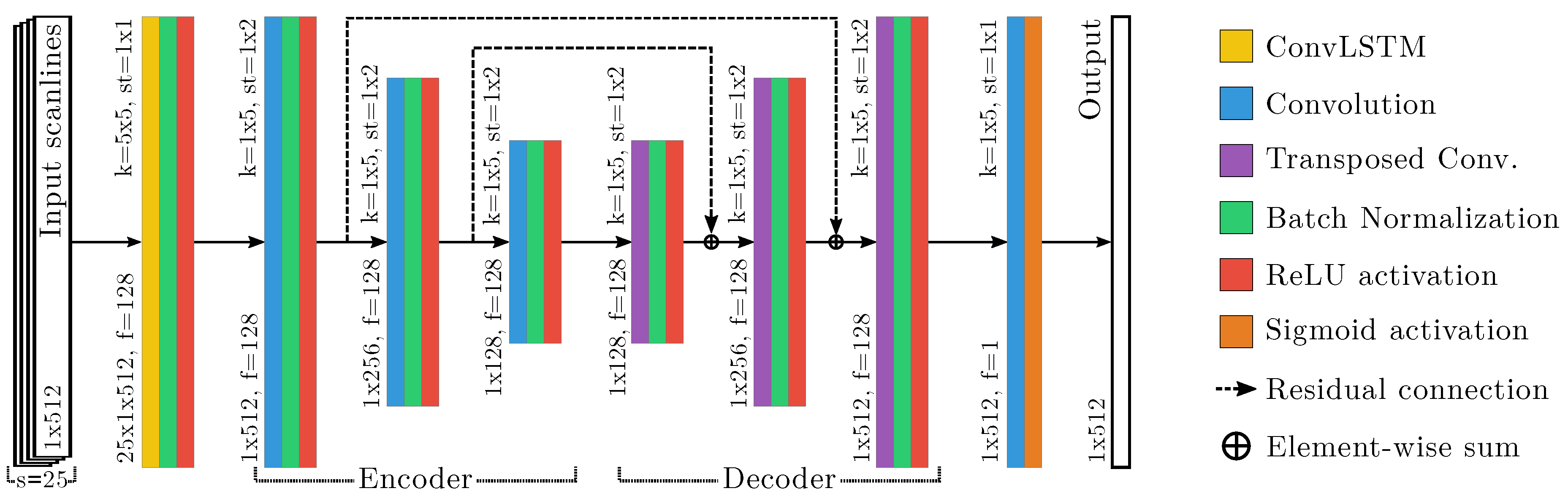

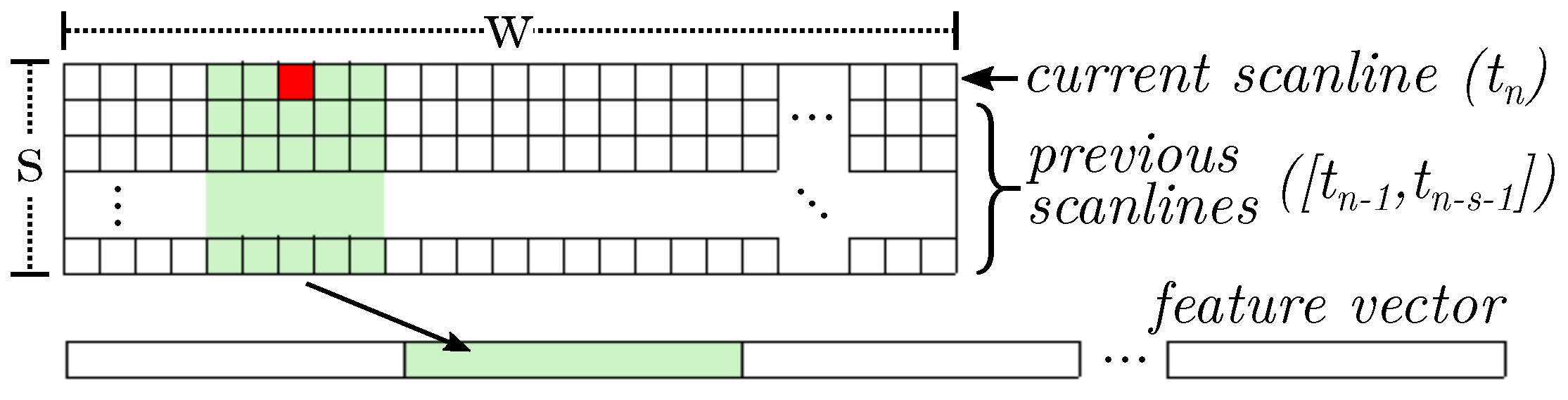

Figure 4 shows the scheme of the CMSAE network topology specialized in the segmentation of oil spills. As can be seen, the first layer uses ConvLSTM recurrent neurons [28], followed by a Batch Normalization layer [34] and ReLU as the activation function [35]. The encoding part of the network consists of a series of layers with three elements: convolutions, Batch Normalization, and ReLU activation functions. These layers are replicated up to the intermediate layer, in which the encoded representation of the input is attained. This representation is then followed by a series of transposed convolutions plus Batch Normalization layers, also with ReLU activation functions, which generate an output image of the same size as the input (that is, the same size as the data supplied to the CMSAE network). In addition, we added residual connections from each encoding layer to its analogous decoding layer, which facilitate convergence and improve the results. The last layer consists of a single convolution with a sigmoid activation that predicts, for each input pixel, a selectional level in the range $[0,1]$.

Downsampling in the encoder is performed by strided convolutions instead of pooling layers. Upsampling is achieved through transposed convolution layers, which reverse the spatial transformation of a convolution, increasing rather than decreasing the resolution of the output.
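The effect of strided and transposed convolutions on the resolution can be sketched in one dimension. The kernels below are hypothetical fixed values (in the CMSAE they are learned) and no padding is used; with a kernel of size 2 and a stride of 2, the transposed convolution restores exactly the length that the strided convolution halved.

```python
import numpy as np

def strided_conv1d(x, kernel, stride):
    """Valid 1-D cross-correlation with stride: downsampling without pooling."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return np.array([np.dot(x[i * stride:i * stride + k], kernel)
                     for i in range(n_out)])

def transposed_conv1d(y, kernel, stride):
    """1-D transposed convolution: each input value scatters a scaled copy
    of the kernel into a longer output, increasing the resolution."""
    k = len(kernel)
    out = np.zeros((len(y) - 1) * stride + k)
    for i, v in enumerate(y):
        out[i * stride:i * stride + k] += v * kernel
    return out

x = np.arange(16, dtype=float)                              # toy scanline
down = strided_conv1d(x, np.array([0.5, 0.5]), stride=2)    # pairwise means
up = transposed_conv1d(down, np.array([1.0, 1.0]), stride=2)
print(down.shape, up.shape)  # (8,) (16,)
```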

ConvLSTM neurons [28] are an extension of the fully connected LSTM (FC-LSTM) with convolutional structures in both the input-to-state and the state-to-state transitions. In [28], ConvLSTM was shown to capture spatiotemporal correlations better than FC-LSTM, consistently outperforming it on spatiotemporal data.
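To make the ConvLSTM transitions concrete, the sketch below implements one cell step for a single-channel 1-D input, where every gate is computed from convolutions of both the current scanline and the previous hidden state. It is a simplified, untrained illustration: the kernels are random, there is one channel instead of many filters, and the peephole terms of the formulation in [28] are omitted. Deep learning frameworks provide multi-filter ConvLSTM layers for actual training.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv_same(x, k):
    """1-D convolution with 'same' output length along the scanline
    (np.convolve flips the kernel, which is irrelevant for learned weights)."""
    return np.convolve(x, k, mode="same")

def convlstm1d_step(x_t, h_prev, c_prev, W, U, b):
    """One ConvLSTM step. W[g] / U[g] are the input-to-state and
    state-to-state kernels of gate g in {'i', 'f', 'o', 'c'}; b[g] is a bias."""
    i = sigmoid(conv_same(x_t, W["i"]) + conv_same(h_prev, U["i"]) + b["i"])
    f = sigmoid(conv_same(x_t, W["f"]) + conv_same(h_prev, U["f"]) + b["f"])
    o = sigmoid(conv_same(x_t, W["o"]) + conv_same(h_prev, U["o"]) + b["o"])
    g = np.tanh(conv_same(x_t, W["c"]) + conv_same(h_prev, U["c"]) + b["c"])
    c_t = f * c_prev + i * g      # cell state carries the memory of past scanlines
    h_t = o * np.tanh(c_t)        # hidden state keeps the scanline width
    return h_t, c_t

def convlstm1d(sequence, W, U, b):
    """Run the cell over s scanlines (oldest first); return the last hidden state."""
    h = np.zeros(sequence.shape[1])
    c = np.zeros(sequence.shape[1])
    for x_t in sequence:
        h, c = convlstm1d_step(x_t, h, c, W, U, b)
    return h

rng = np.random.default_rng(0)
gates = ["i", "f", "o", "c"]
W = {g: rng.normal(scale=0.1, size=3) for g in gates}   # random, untrained
U = {g: rng.normal(scale=0.1, size=3) for g in gates}
b = {g: 0.0 for g in gates}
seq = rng.normal(size=(12, 1157))   # s = 12 scanlines of 1157 pixels
h_last = convlstm1d(seq, W, U, b)
print(h_last.shape)  # (1157,)
```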

Batch Normalization layers [34] normalize the activations of each layer using the statistics of each training mini-batch. They speed up training, reduce overfitting, and improve the overall success rate. We chose the Rectified Linear Unit (ReLU) [35] as the activation function because it is computationally efficient and improves gradient propagation during training, mitigating the vanishing gradient problem.
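For reference, the training-mode forward pass of Batch Normalization followed by ReLU can be sketched as below. At inference time, frameworks replace the mini-batch statistics with running averages collected during training; that part is omitted here, and the input values are arbitrary.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode Batch Normalization over the mini-batch axis (axis 0).

    Each feature is standardized with the mean and variance of the current
    mini-batch, then scaled and shifted by the learned gamma and beta.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta

def relu(x):
    return np.maximum(0.0, x)

batch = np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]])  # 3 samples, 2 features
out = relu(batch_norm_train(batch, gamma=np.ones(2), beta=np.zeros(2)))
```

Both features end up on the same scale despite their tenfold difference in magnitude, and ReLU zeroes the below-mean activations.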

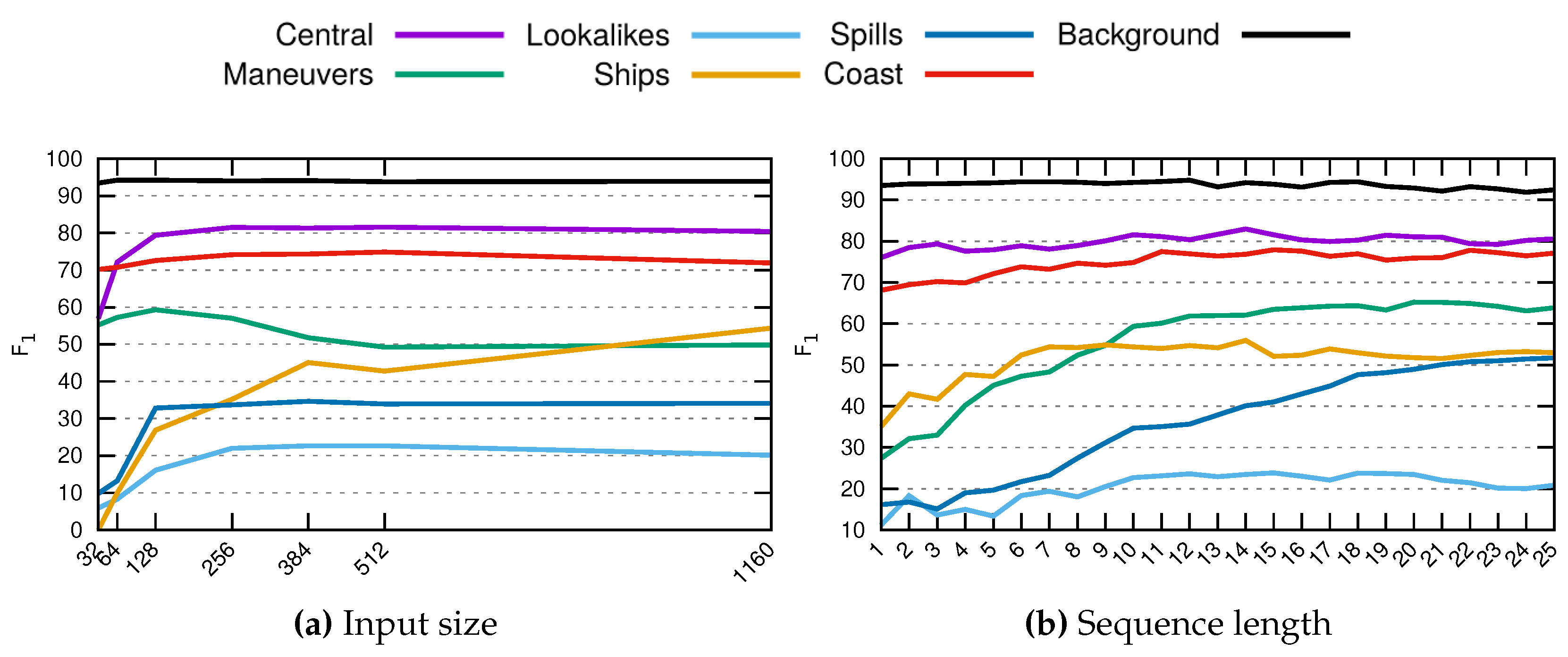

The topology of this type of network can easily be varied, since we can modify the number of layers, the number of filters, the kernel size, etc. In addition, the appropriate configuration is expected to vary depending on the class to be detected. For example, segmenting small ships, which are represented by only a few pixels in the image, is not the same as segmenting a coastal area, whose size is significantly larger. To find the network architecture with the best configuration of layers and hyperparameters, we applied a grid-search technique [36]. The results of this experimentation are included in Section 3.1, and the best topologies found for each network are reported in Table 2.
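The grid search can be sketched as an exhaustive loop over a hyperparameter grid that keeps the configuration with the best validation score. The grid values and the `evaluate` callable (which would train one CMSAE and score it on the validation subset described in Section 2.3) are hypothetical placeholders; one such search would be run per class.

```python
from itertools import product

# Hypothetical search space, for illustration only.
GRID = {
    "n_layers": [2, 3, 4],
    "n_filters": [32, 64, 128],
    "kernel_size": [3, 5, 7],
    "seq_length": [12, 16, 20],
}

def grid_search(evaluate, grid):
    """Try every combination and keep the best one (higher score is better).

    evaluate: callable(config dict) -> validation score.
    Ties keep the first configuration found.
    """
    best_cfg, best_score = None, float("-inf")
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score

# Toy scoring function standing in for "train, then validate".
toy_eval = lambda cfg: -abs(cfg["n_filters"] - 64) - cfg["kernel_size"]
best_cfg, best_score = grid_search(toy_eval, GRID)
```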

2.2.2. Classifier Integration

The segmentation result obtained from each network is supplied to a classifier. To do this, we extract the feature vector (neural codes, or NC) of the penultimate layer of each network, normalize it using the norm described in [37], and concatenate the normalized vectors into a single feature vector that can be supplied to a classifier. In previous experiments, we tried using the intermediate encoding of the autoencoder, but worse results were obtained, possibly because the precision and position of the information were degraded or lost.

Since the input size of the autoencoder used for each class (and its corresponding output size) differs (see Table 2), the sizes must be normalized in order to combine this information. This process is performed on the NC extracted from each network, which form a matrix of size $s \times w$, where we set $s$ to 12 scanlines (the minimum common sequence length used) and $w$ is the width of the output. To this matrix, we apply bicubic interpolation to adjust the network's output to a common matrix size, which is the precision with which the classification result will be returned.

Once the sizes are normalized, we prepare the data to be supplied to the classifier, which has to predict one of the seven possible classes for each input pixel. For this, we create a feature vector by combining the NC in the neighborhood of each pixel. Specifically, a window around each pixel is taken, adding zero padding for the edge pixels. This information is extracted from the NC of each of the seven networks and concatenated into a single feature vector per pixel.

Figure 5 shows a graphical representation of this process.
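The per-pixel feature vector can be sketched as follows, assuming that the seven size-normalized NC maps are stacked in one array. The window size $k = 3$ and the map dimensions are hypothetical values used only for illustration, and `pixel_features` is a hypothetical name.

```python
import numpy as np

def pixel_features(nc_maps, row, col, k):
    """Concatenate the k x k neighbourhood of one pixel from every NC map.

    nc_maps: array (n_networks, height, width) of size-normalized neural codes.
    k: odd window size. Positions outside the map are zero-padded.
    Returns a vector of n_networks * k * k features.
    """
    r = k // 2
    padded = np.pad(nc_maps, ((0, 0), (r, r), (r, r)), mode="constant")
    window = padded[:, row:row + k, col:col + k]   # centred on (row, col)
    return window.reshape(-1)

nc = np.random.default_rng(0).random((7, 12, 1157))  # 7 networks, 12 x 1157 maps
v = pixel_features(nc, row=0, col=0, k=3)            # corner pixel: uses padding
print(v.shape)  # (63,)
```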

We evaluated different machine learning methods to choose the most suitable one for this task (that is, classifying the red pixel in Figure 5). Specifically, we tested the following methods and parameter settings:

k-Nearest Neighbors (kNN) [38]: This classifier is one of the most widely used schemes for supervised learning tasks. It classifies a given input element by assigning the most common label among its k nearest prototypes in the training set according to a similarity function. Different numbers of neighbors k were evaluated in this work.

Support Vector Machines (SVM) [39]: An SVM learns a hyperplane that tries to maximize the distance to the nearest samples (support vectors) of each class. In our case, we use the “one-against-rest” approach [40] for multi-class classification and a Radial Basis Function (or Gaussian) kernel to handle non-linear decision boundaries. An SVM also considers a parameter that measures the cost of learning a non-optimal hyperplane, usually referred to as parameter c; in these experiments, we tuned this parameter over a range of values.

Random Forest (RaF) [41]: It builds an ensemble classifier by generating several random decision trees at the training stage. The final output is obtained by combining the individual decisions of the trees. The number of random trees was established experimentally over a range of values.
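Of the three classifiers, kNN is the simplest to write down. The sketch below assumes Euclidean distance as the similarity function and uses toy two-dimensional features with hypothetical class ids; in the proposed method, the inputs would be the concatenated NC windows. The SVM and Random Forest are available in standard machine learning libraries and are not reimplemented here.

```python
import numpy as np

def knn_predict(train_X, train_y, query, k=3):
    """Majority vote among the k nearest training prototypes (Euclidean distance)."""
    dists = np.linalg.norm(train_X - query, axis=1)
    nearest = np.argsort(dists)[:k]
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]

X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [1.0, 1.0], [0.9, 1.0]])
y = np.array([6, 6, 6, 1, 1])   # hypothetical ids: 6 = water, 1 = oil spill
print(knn_predict(X, y, np.array([0.95, 0.95]), k=3))  # 1
```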

As a result of this evaluation (detailed in Section 3.2), the best results were obtained with an SVM. Therefore, we chose the SVM as the final classifier of the proposed method.

2.3. Training Process

CMSAE can be trained using conventional optimization algorithms such as gradient descent. In this case, the network parameters were tuned by means of stochastic gradient descent [42] with the adaptive learning rate proposed by Zeiler [43]. The loss function (usually called the reconstruction loss in autoencoders) can be defined as the squared error between the ground truth and the generated output. In the proposed method, we use the cross-entropy loss function instead, since both the ground truth and the network output lie in the range $[0,1]$.
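Zeiler's adaptive learning rate (Adadelta) can be sketched as a single update rule that keeps running averages of squared gradients and squared updates, so that no global learning rate is needed. The decay rate and epsilon below are typical values assumed for the example, and the quadratic objective is a toy problem rather than the CMSAE loss.

```python
import numpy as np

def adadelta_step(x, grad, state, rho=0.95, eps=1e-6):
    """One Adadelta update.

    state["Eg2"]  : running average of squared gradients.
    state["Edx2"] : running average of squared parameter updates.
    """
    state["Eg2"] = rho * state["Eg2"] + (1 - rho) * grad ** 2
    dx = -np.sqrt(state["Edx2"] + eps) / np.sqrt(state["Eg2"] + eps) * grad
    state["Edx2"] = rho * state["Edx2"] + (1 - rho) * dx ** 2
    return x + dx, state

# Toy problem: minimize f(x) = x^2, whose gradient is 2x.
x = np.array([3.0])
state = {"Eg2": np.zeros_like(x), "Edx2": np.zeros_like(x)}
for _ in range(100):
    x, state = adadelta_step(x, 2 * x, state)
```

The step size starts very small (of the order of the square root of epsilon) and grows as the running average of updates accumulates, which is the characteristic warm-up behavior of this rule.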

In all of the experiments, we used $n$-fold cross-validation, which yields a better Monte Carlo estimation than performing the tests with a single random partition [44]. Our dataset was consequently divided into $n$ mutually exclusive subsets, with all the data from each flight sequence assigned to a single partition. For each fold, we used one of the partitions for testing ($1/n$ of the samples) and the rest for training ($(n-1)/n$). In addition, a validation subset with 10% of the training samples was used in the grid search process (see Section 3.1). The training and testing processes were repeated $n$ times using different partitions of the dataset, and the average result is reported along with its standard deviation.
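The partitioning constraint (all the data from a flight in a single fold) can be sketched as a group-wise fold assignment. The round-robin assignment of shuffled flights and the fold count in the usage are assumptions for illustration; because flights differ in length, each test fold holds only approximately $1/n$ of the samples. scikit-learn's `GroupKFold` implements the same idea.

```python
import numpy as np

def flight_folds(flight_ids, n_folds, seed=0):
    """Assign whole flight sequences to folds so that no flight is split.

    flight_ids: one flight id per sample (e.g., per scanline sequence).
    Returns a list of (train_idx, test_idx) index arrays, one per fold.
    """
    flight_ids = np.asarray(flight_ids)
    flights = np.unique(flight_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(flights)
    fold_of_flight = {f: i % n_folds for i, f in enumerate(flights)}
    fold = np.array([fold_of_flight[f] for f in flight_ids])
    return [(np.where(fold != k)[0], np.where(fold == k)[0])
            for k in range(n_folds)]

ids = np.repeat(np.arange(10), 5)   # 10 toy flights, 5 samples each
folds = flight_folds(ids, n_folds=5)
```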

The data supplied to the networks during both training and inference were standardized. For this, we apply the equation $X' = \frac{X - \mu}{\sigma}$, where $X$ is the input matrix containing the raw pixel values of the training set, $\mu$ is the sample mean, and $\sigma$ is the standard deviation. To normalize the test set, we used the same mean and standard deviation computed on the training set. As seen in Gallego et al. [27], this kind of normalization is suitable for this type of data, with improvements of up to 25% in some cases.
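A minimal sketch of this normalization follows: the statistics are computed once on the training pixels and reused, unchanged, for the test data. The toy arrays are arbitrary.

```python
import numpy as np

def fit_standardizer(train):
    """Mean and standard deviation computed on the training pixels only."""
    return float(train.mean()), float(train.std())

def standardize(x, mean, std):
    """Apply (X - mu) / sigma using the training-set statistics."""
    return (x.astype(np.float32) - mean) / std

train = np.array([[0, 100, 200], [50, 150, 250]], dtype=np.uint8)
test = np.array([[125, 125, 125]], dtype=np.uint8)
mu, sigma = fit_standardizer(train)
print(mu)                            # 125.0
print(standardize(test, mu, sigma))  # [[0. 0. 0.]]
```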

In the dataset used for training and evaluation, nearly 80% of the pixels are water, and the most relevant targets (oil spills and ships) are represented by less than 0.25% of the pixels (see Table 1). The dataset is therefore unbalanced. To solve this issue, we relied on data augmentation to balance the classes by adding samples. For training each CMSAE network, the sequences of the positive and negative classes were counted, and samples of the minority class were then artificially generated by randomly applying different transformations to the original samples of that class. These transformations include horizontal and vertical flips and horizontal translations in the range [−10, 10]% of the sample width.
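The balancing step can be sketched as random oversampling of the minority class with the transformations listed above, applied jointly to each sample and its mask. Interpreting a vertical flip as reversing the order of the scanlines and filling translated pixels with zeros are assumptions for the example, and the function names are hypothetical.

```python
import numpy as np

def shift_cols(a, shift):
    """Translate columns by `shift` pixels, filling the uncovered part with zeros."""
    out = np.zeros_like(a)
    if shift > 0:
        out[:, shift:] = a[:, :-shift]
    elif shift < 0:
        out[:, :shift] = a[:, -shift:]
    else:
        out[:] = a
    return out

def augment(seq, mask, rng, max_shift=0.10):
    """Random flips and horizontal translation applied identically to a
    sample (s x w scanline sequence) and its ground-truth mask."""
    if rng.random() < 0.5:                       # horizontal flip (across track)
        seq, mask = seq[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:                       # vertical flip (scanline order)
        seq, mask = seq[::-1, :], mask[::-1, :]
    limit = int(max_shift * seq.shape[1])        # up to 10% of the width
    shift = int(rng.integers(-limit, limit + 1))
    return shift_cols(seq, shift), shift_cols(mask, shift)

def oversample(samples, masks, n_target, seed=0):
    """Add augmented copies of minority-class samples until n_target is reached."""
    rng = np.random.default_rng(seed)
    out_s, out_m = list(samples), list(masks)
    while len(out_s) < n_target:
        i = rng.integers(len(samples))
        s_aug, m_aug = augment(samples[i], masks[i], rng)
        out_s.append(s_aug)
        out_m.append(m_aug)
    return out_s, out_m

seq = np.arange(240, dtype=float).reshape(12, 20)   # 12 scanlines, 20 pixels
mask = (seq % 2 == 0).astype(np.uint8)
S, M = oversample([seq], [mask], n_target=5)
```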

4. Discussion

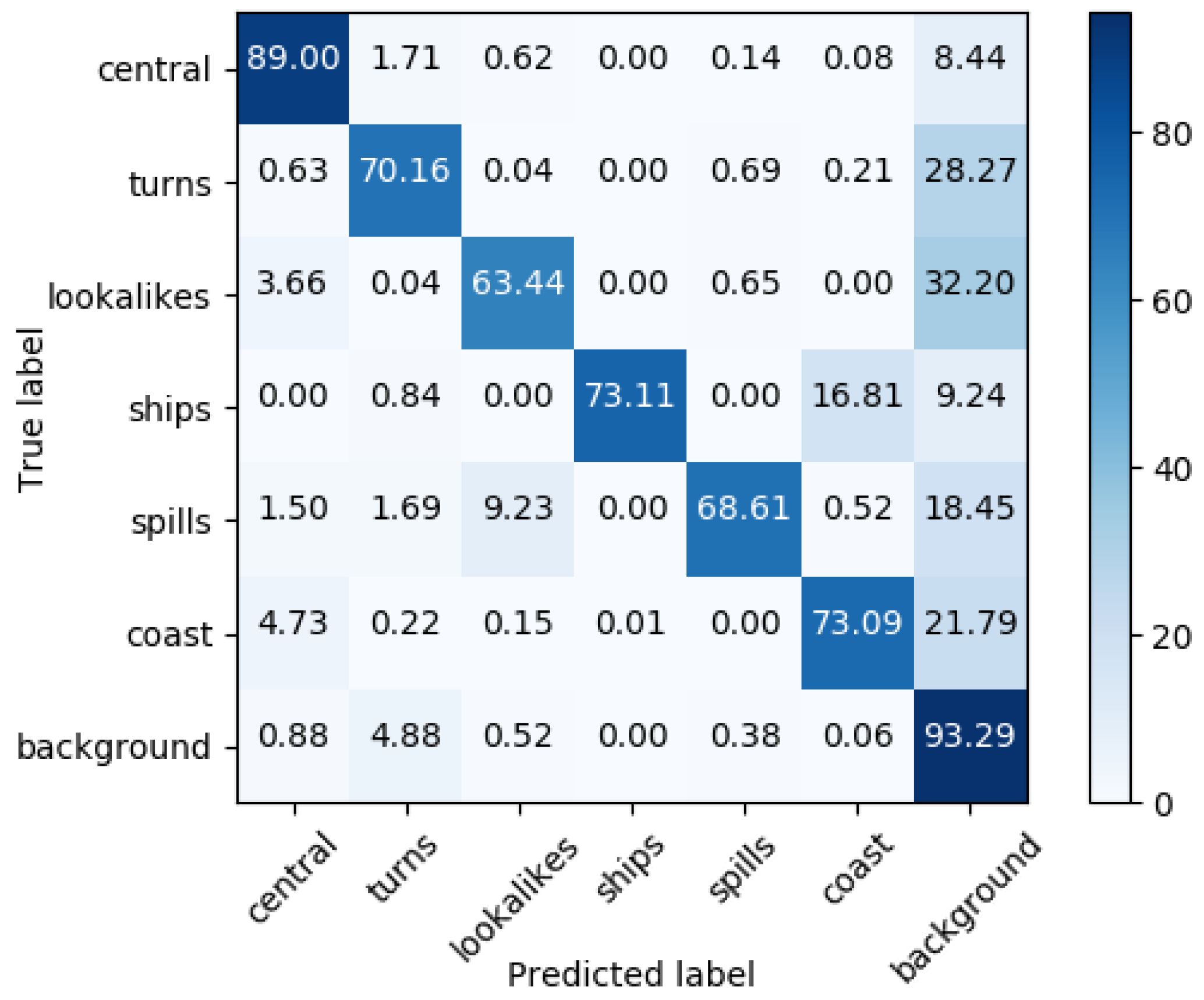

As shown in the previous section, the proposed method (CMSAE+SVM) obtains an average result of 67.39% for classifying the different targets at the pixel level. Some classes, such as central noise, coast, and water, obtain accurate results (87.92%, 83.92%, and 95.45%, respectively). For the two most relevant classes, ships and spills, good results are also obtained (58.03% and 54.36%), especially considering that the discrimination is performed at the pixel level. The worst result is obtained for the lookalike class, since this class groups together different types of noise that can be confused with oil spills and that are very difficult to distinguish even for a human expert.

To better discuss these results and analyze how they can be interpreted in the perspective of previous studies, we compare the results of the proposed approach with those of the following state-of-the-art methods:

BiRNN [45]: The authors also proposed the use of recurrent neurons to process the scanlines of the SLAR sensor in order to detect oil spills. In this case, they use Bidirectional RNNs [46]; thus, in this model, the output at time $t$ also depends on future elements.

TSCNN [2]: This method employs a two-stage architecture composed of three pairs of CNNs. Each pair of networks is trained to recognize a single class (ship, oil spill, or coast) by following two steps: a first network performs a coarse detection, and then, a second CNN obtains the precise localization. After classification, a postprocessing stage is performed to improve the results.

U-Net [47]: This CNN was developed for biomedical image segmentation. It uses a fully convolutional network (FCN) divided into two phases: a contracting path and an expansive path. The feature activations of the contracting path are concatenated with the corresponding layers of the expansive path. The last layer uses a 1 × 1 convolution with a Softmax activation function to output class labels.

SegNet [20]: It uses a fully convolutional neural network architecture for semantic pixel-wise segmentation, containing an encoder network, a corresponding decoder network, and a pixel-wise multiclass classification layer. The architecture of the encoder network is topologically identical to the 13 convolutional layers in the VGG16 network [48].

DeepLabv3 [49]: It uses atrous spatial pyramid pooling, with filters at multiple sampling rates, to robustly segment objects at multiple scales and to explicitly control the resolution at which feature responses are computed within Deep Convolutional Neural Networks. It also includes image-level features to capture longer-range information and uses batch normalization to facilitate training.

SelAE [27]: This approach uses an SAE network specialized in the segmentation of oil spills. The network returns a probability distribution. A threshold is then applied to this distribution to select the pixels to segment.

The BiRNN and SelAE methods were originally proposed for the detection of oil spills. In this experiment, however, they are also applied to the detection of the remaining classes. To this end, the originally proposed architectures were used with the same configuration, but a separate network model was trained and evaluated for each class.

In the case of U-Net, SegNet and DeepLabv3, we also used the original architectures, modifying only the last layer to classify the seven classes of our dataset. The TSCNN method could only be used for the classification of ships, oil spills and coast. This method proposes a specific architecture for these three classes and then applies a post-processing step that combines their information to improve the result. Therefore, it was not possible to extend this method to the remaining classes.

Table 5 shows the results of this comparison, giving the accuracy for each class as well as the average obtained by each method. For TSCNN, the average is not shown because results are not available for all classes. However, it can be seen that this method performs worse for these classes than other methods, such as SelAE or our proposal (CMSAE+SVM). The SegNet network obtains the worst average result because some classes have significantly low accuracy. This applies especially to classes with very small or thin elements, such as ships or lookalikes. The BiRNN, U-Net and DeepLabv3 methods obtain slightly better results. However, they also have difficulties detecting small objects.

Comparing the results obtained by SelAE for the detection of oil spills with those presented in the original paper [27], it can be observed that this method performs significantly worse here. Its accuracy decreases from 93.01% on the dataset used in [27] to 54.52% on the new dataset used in this work. However, many new sequences have been added to the new dataset. These include many examples of lookalikes and coast, more complex representations of oil spills, and some very noisy images.

On average, the proposed method (CMSAE+SVM) obtains the best results. The SelAE and DeepLabv3 algorithms obtain slightly better results for three of the classes. However, the differences are not significant: 0.26% for maneuvers, 0.16% for oil spills, and 0.65% for coast. These are less than 1% in all cases, and the standard deviation is larger than these differences. Moreover, the proposed method works with only a few scanlines, so its response time is much shorter (as will be discussed in the next section).

To assess the significance of the result obtained by the proposed method in comparison with the second-best result (the one obtained by SelAE), we performed a statistical significance comparison using the paired-sample non-parametric Wilcoxon signed-rank test [50]. On average, the proposed method (CMSAE+SVM) obtains a p-value of , so this test indicates that it significantly outperforms SelAE.
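For reference, the paired test can be computed as shown below. The sketch implements a one-sided exact Wilcoxon signed-rank test. Zero differences are dropped, tied absolute differences receive average ranks, and all sign assignments are counted with dynamic programming. Two aspects are assumptions: which paired values were compared (for instance, per-sequence accuracies of CMSAE+SVM and SelAE) and the one-sided alternative. The function name is hypothetical. SciPy's `scipy.stats.wilcoxon` provides a library implementation of this test.

```python
def wilcoxon_signed_rank_greater(x, y):
    """One-sided exact Wilcoxon signed-rank test of H1: values in x tend to exceed y.

    Returns (W+, p_value). Pairs with zero difference are discarded, and tied
    absolute differences receive the average of the ranks they span.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    doubled = [0] * n  # 2 * rank, kept integral so that x.5 ranks from ties stay exact
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            doubled[order[k]] = i + j + 2  # 2 * average of 1-based ranks i+1 .. j+1
        i = j + 1
    w2 = sum(r for r, di in zip(doubled, d) if di > 0)
    # Under H0 each rank is equally likely to carry a positive or negative sign:
    # count how many of the 2**n sign assignments give each (doubled) W+ value.
    dist = {0: 1}
    for r in doubled:
        nxt = {}
        for s, c in dist.items():
            nxt[s] = nxt.get(s, 0) + c          # rank r signed negative
            nxt[s + r] = nxt.get(s + r, 0) + c  # rank r signed positive
        dist = nxt
    p_value = sum(c for s, c in dist.items() if s >= w2) / 2 ** n
    return w2 / 2, p_value
```

For example, five pairs in which the first method is always better give W+ = 15 and p = 1/32 = 0.03125.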

The results at the pixel level indicate the precision with which the method detects the position and shape of each class. However, this metric does not reveal whether all the targets are actually being detected. For this reason, the Intersection over Union (IoU) metric is also calculated to evaluate whether all the targets present in the image are correctly detected. This evaluation is also performed at the class level. First, we compute a binary mask from the network output using a threshold of 0.5, setting the pixels of that class to 1 and the rest to 0. We then apply a morphological opening followed by a closing operation with a circular kernel of  in order to eliminate small gaps as well as isolated pixels. Finally, we compute the blobs from the network prediction ($B_p$), where a blob is a group of connected pixels with value 1 in the binary mask. Each predicted blob is paired with the ground-truth blob ($B_{gt}$) with which it has the highest IoU, computed as follows:

$$\mathrm{IoU} = \frac{\left| B_p \cap B_{gt} \right|}{\left| B_p \cup B_{gt} \right|}$$

where $B_p \cap B_{gt}$ denotes the intersection between the object proposal and the ground-truth blob, and $B_p \cup B_{gt}$ denotes their union. A detection is considered positive when the IoU exceeds a threshold, which is set to 0.5 since this is the value normally used for this type of task.
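The blob-level matching can be sketched as follows. This is a simplified illustration under several assumptions. Blobs are taken as 4-connected components, and the 0.5 probability threshold is applied with `>=`. The opening/closing step is omitted because its kernel size is not given here. Each predicted blob is paired with the ground-truth blob of highest IoU and counted as positive when that IoU exceeds 0.5. All function names are hypothetical.

```python
from collections import deque

import numpy as np


def label_blobs(mask: np.ndarray):
    """Return the 4-connected components of a binary mask as sets of (row, col) pixels."""
    h, w = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    blobs = []
    for r in range(h):
        for c in range(w):
            if mask[r, c] and not seen[r, c]:
                blob, queue = set(), deque([(r, c)])
                seen[r, c] = True
                while queue:  # breadth-first flood fill of one component
                    y, x = queue.popleft()
                    blob.add((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
                blobs.append(blob)
    return blobs


def iou(a: set, b: set) -> float:
    """Intersection over Union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match_blobs(prob_map: np.ndarray, gt_mask: np.ndarray,
                prob_threshold: float = 0.5, iou_threshold: float = 0.5):
    """Pair each predicted blob with its best ground-truth blob.

    Returns a list of (pred_index, gt_index or None, iou, is_positive) tuples.
    """
    pred_blobs = label_blobs(prob_map >= prob_threshold)
    gt_blobs = label_blobs(gt_mask.astype(bool))
    results = []
    for i, pb in enumerate(pred_blobs):
        scores = [iou(pb, gb) for gb in gt_blobs]
        if scores:
            j = int(np.argmax(scores))
            results.append((i, j, scores[j], scores[j] > iou_threshold))
        else:
            results.append((i, None, 0.0, False))
    return results
```

In practice, the morphological filtering would be applied to the thresholded mask before `label_blobs`, for example with `scipy.ndimage.binary_opening` and `binary_closing`.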

Table 6 shows the results of this experiment. As can be seen, the proposed method again obtains the best result. With this metric, however, the difference with respect to SelAE is smaller, since both methods perfectly detect the central noise, maneuvers and water. Therefore, we can conclude that the proposed method detects the presence of the different targets slightly better. It also delineates their shape with greater precision and in significantly less time (as will be seen in the next section).

All methods were trained and evaluated with the dataset described in Section 2.1. As stated before, this dataset comprises more than 5 h of flight from varied missions, which allows an evaluation under different conditions. The weights learned by the networks depend on the data used for training. To mitigate this dependence, Batch Normalization layers were used together with data augmentation, which increases the generalization capabilities of the method. Even so, the proposed method may still produce a wrong detection in a particular situation. In that case, the sample could be included in the training set and the network retrained, so that the updated weights avoid similar mistakes. Therefore, unlike hand-crafted methods, it is easily adaptable to unseen data.

Runtime Analysis

It is also relevant to analyze the time required by each method to give a prediction. The SelAE, SegNet, U-Net and DeepLabv3 algorithms process a complete image. In principle, they would therefore have to wait until a complete image (requiring 6.1 min of flight) is recorded before yielding a result. However, they could wait for that time at the beginning of the flight and then build new images incrementally as each scanline arrives. Each new scanline would be added to the top of the image and the oldest data removed from the bottom, so that a complete image is always available and a result can be given for every new scanline. The prediction at each time instant would then be for the last scanline, that is, the top row of the image. In our experiments, however, we observed that this technique halves the precision of the results, because the top row lacks context from subsequent scanlines. To maintain the same precision with this technique, it would be necessary to wait 24 s to accumulate 32 scanlines after the one being predicted. In other words, a result for a given scanline can only be produced after the 32 following scanlines arrive, which delays the response (we will refer to this delay as "lag time").
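The incremental image construction described above amounts to a fixed-length rolling buffer. The following is a minimal sketch, assuming that each scanline arrives as a 1-D array and that the image height (in scanlines) is a parameter. The class name `RollingImage` is hypothetical.

```python
from collections import deque
from typing import Optional

import numpy as np


class RollingImage:
    """Keep the most recent `height` scanlines, with the newest scanline as row 0 (top)."""

    def __init__(self, height: int):
        if height <= 0:
            raise ValueError("height must be positive")
        # With maxlen set, appendleft on a full deque discards the item at the right end,
        # i.e. the oldest scanline at the bottom of the image.
        self.rows = deque(maxlen=height)

    def push(self, scanline) -> Optional[np.ndarray]:
        """Add a new scanline; return the current image once enough scanlines have arrived."""
        self.rows.appendleft(np.asarray(scanline))
        if len(self.rows) < self.rows.maxlen:
            return None  # still filling the first complete image
        return np.stack(list(self.rows))
```

After the initial fill, every call to `push` returns a complete image whose top row is the scanline that has just arrived.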

The TSCNN and BiRNN methods can be applied directly to a part of the sequence. Specifically, TSCNN uses sliding windows of different sizes that can be applied horizontally to a set of scanlines. The largest window size used is 50 × 50, and the result obtained is for the central pixel of the window. It would therefore be necessary to wait 19 s (25 scanlines) for each answer. According to the specifications of the original article, the BiRNN method uses only 3 scanlines. However, as it is bidirectional, it needs to wait for the following scanlines to obtain the current prediction, which takes 2.28 s. With the proposed method, it is possible to use only the previous information and obtain a response at each time frame, so the response time would be only 0.76 s. A summary of these results is shown in the column "lag time" of Table 7.
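The lag figures above are consistent with roughly 0.76 s per scanline (19 s / 25 and 2.28 s / 3). Lag time can therefore be approximated as the number of scanlines a method must wait for, multiplied by that period. The sketch below encodes this reading. The per-scanline period and the per-method scanline counts are taken from the text, while the linear model itself is an assumption. Note that 32 scanlines give about 24.3 s, which the text rounds to 24 s.

```python
SCANLINE_PERIOD_S = 0.76  # approximate seconds per SLAR scanline, inferred from the text


def lag_time(scanlines_to_wait: int, period_s: float = SCANLINE_PERIOD_S) -> float:
    """Approximate lag: scanlines that must be acquired before answering, times the period."""
    return round(scanlines_to_wait * period_s, 2)


# Scanlines each method must wait for before producing the current prediction.
SCANLINES_TO_WAIT = {
    "CMSAE+SVM": 1,
    "BiRNN": 3,
    "TSCNN (50 x 50 window)": 25,
    "SelAE / SegNet / U-Net / DeepLabv3 (rolling image)": 32,
}

if __name__ == "__main__":
    for method, n in SCANLINES_TO_WAIT.items():
        print(f"{method}: {lag_time(n):.2f} s")
```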

We have also added to this table the column "runtime" to compare the execution times once all the necessary data (scanlines) are available. These runtimes were obtained using an Intel(R) Core(TM) i7-6700 CPU @ 3.40 GHz with 16 GB of DDR4 RAM and an Nvidia GeForce GTX 1070 GPU. The fastest methods are BiRNN, SelAE and TSCNN, since these are networks with few parameters and binary outputs. The processing time of our proposal is divided between two components: the CMSAE networks, which take 0.0039 s on average, and the selected classifier, which for SVM with  takes 0.5220 s. Since the CMSAE networks can be run in parallel, we calculated the average time of the network with the most parameters. In this case, these are the networks with 6 layers, 128 filters and a kernel of . Our proposal has a slower runtime than the compared approaches. However, its perceived runtime (column "Total time") would be by far the smallest. In addition, its runtime is shorter than both the lag time and the time the sensor needs to return each scanline. The method could therefore be used for the real-time detection of oil spills and ships during flight.
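The sketch below shows one way to run the class-specific networks concurrently and feed their stacked outputs to the final classifier. The model interface is hypothetical: each model is a callable that returns one probability per pixel of the current scanline. The classifier is passed in as a callable. A trained SVM (for example, scikit-learn's `SVC.predict`) would fit there, while the test below uses a trivial argmax stand-in rather than an SVM. `critical_path_time` mirrors the reasoning above: with parallel execution, the runtime is bounded by the slowest network plus the classifier.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence

import numpy as np


def run_ensemble(models: Sequence[Callable[[np.ndarray], np.ndarray]],
                 scanlines: np.ndarray,
                 classifier: Callable[[np.ndarray], np.ndarray]) -> np.ndarray:
    """Run one binary model per class in parallel and classify the stacked outputs.

    Each model maps a (lines, width) array to a length-`width` probability vector
    for its own class (hypothetical interface).
    """
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        outputs = list(pool.map(lambda model: model(scanlines), models))
    features = np.stack(outputs, axis=1)  # shape (width, n_models): one column per class
    return classifier(features)


def critical_path_time(network_times: Sequence[float], classifier_time: float) -> float:
    """With the networks running in parallel, the slowest one bounds the wait."""
    return max(network_times) + classifier_time
```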

Having evaluated the classification performance and the runtime as stand-alone measures of merit, we now analyze them jointly from a Multi-objective Optimization Problem (MOP) perspective. Note that classification performance and runtime could be opposing goals, as improving one of them could deteriorate the other.

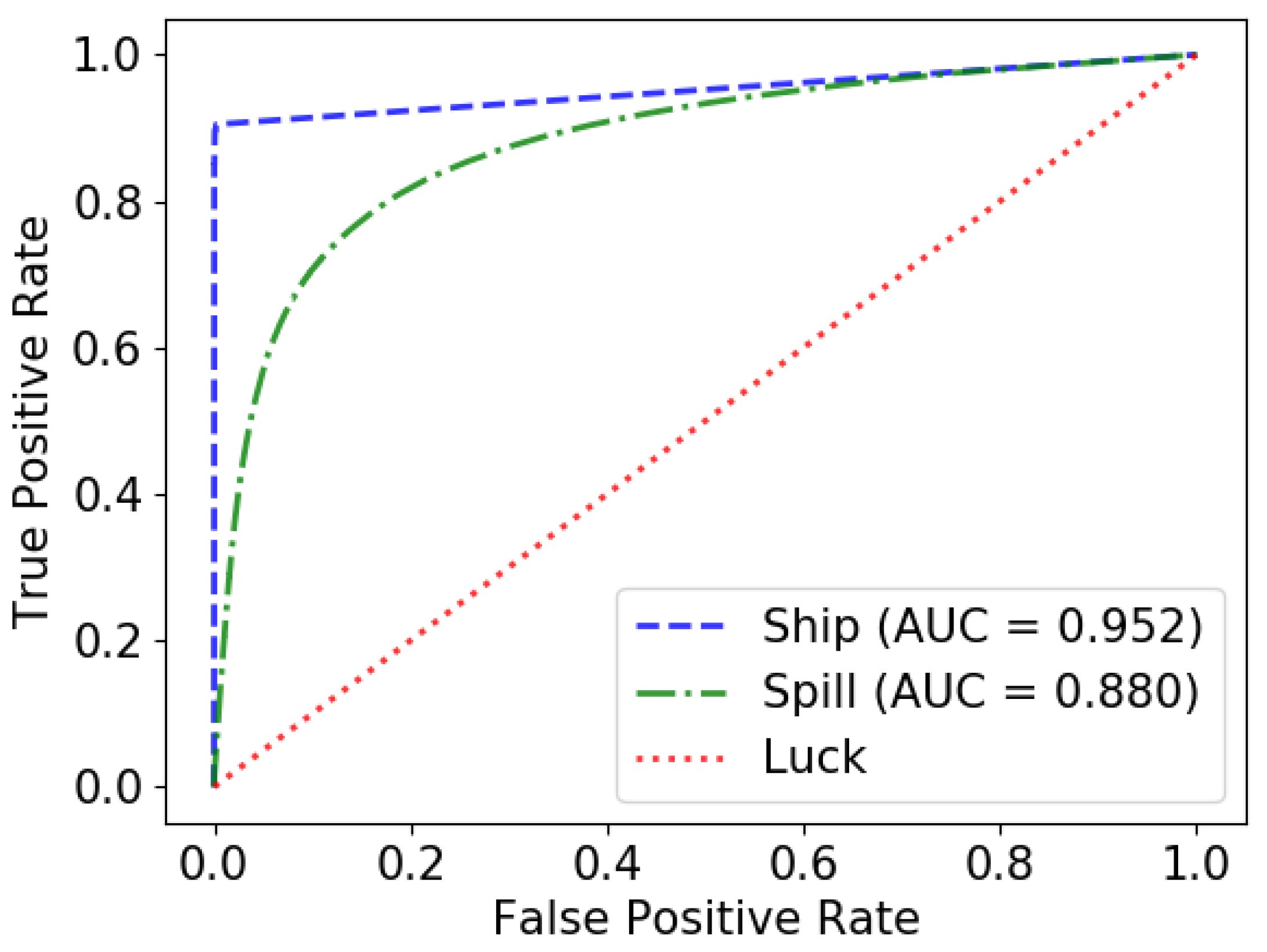

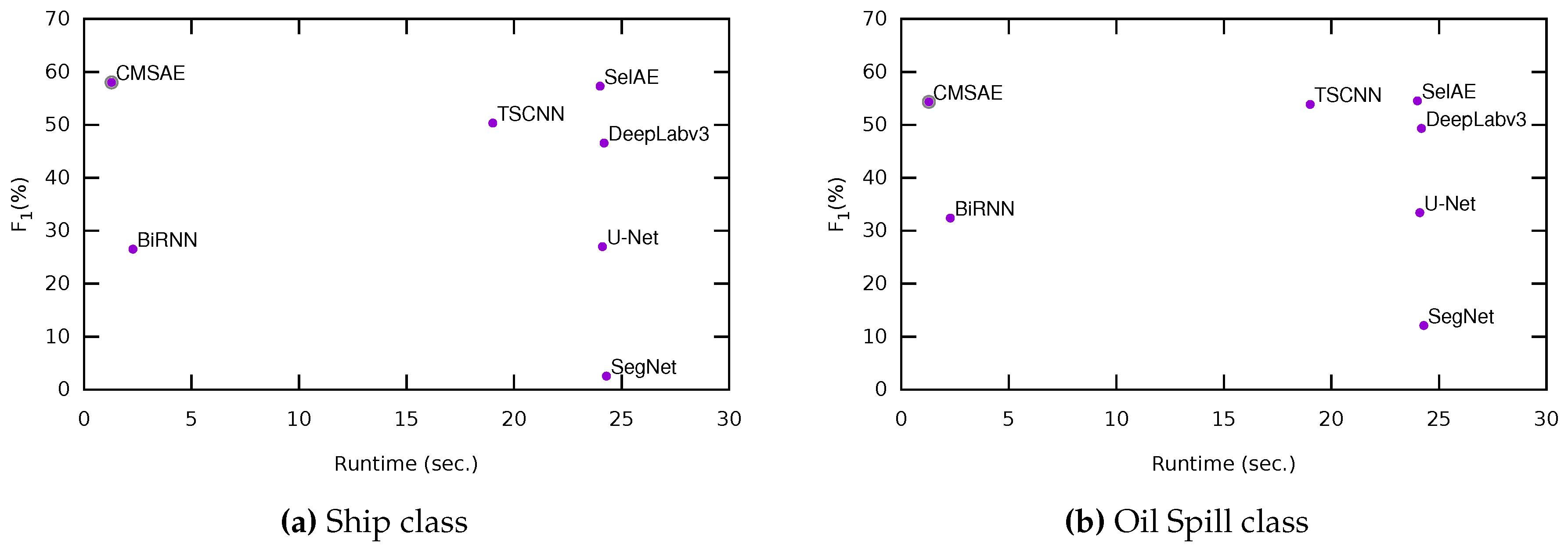

Figure 10 compares these two variables graphically for the ship and oil spill classes. It clearly shows that the proposed method lies much closer to the target: it is much more efficient and also obtains the best result for these two classes.
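One way to make the multi-objective comparison explicit is to determine which methods are Pareto-optimal in terms of (accuracy, time) and how far each lies from an ideal point. The sketch below is illustrative only. The ideal point (100% accuracy, 0 s), the scaling between percentage points and seconds, and the example values in the test are assumptions. They are not the values plotted in Figure 10.

```python
from typing import Dict, Set, Tuple

Point = Tuple[float, float]  # (accuracy in %, time in s)


def dominates(a: Point, b: Point) -> bool:
    """True if a is at least as accurate and as fast as b, and strictly better in one."""
    return a[0] >= b[0] and a[1] <= b[1] and (a[0] > b[0] or a[1] < b[1])


def pareto_front(points: Dict[str, Point]) -> Set[str]:
    """Names of the methods that no other method dominates."""
    return {name for name, p in points.items()
            if not any(dominates(q, p) for other, q in points.items() if other != name)}


def distance_to_ideal(p: Point, ideal: Point = (100.0, 0.0),
                      seconds_per_point: float = 1.0) -> float:
    """Euclidean distance to the ideal point; `seconds_per_point` rescales time (assumption)."""
    d_acc = ideal[0] - p[0]
    d_time = (p[1] - ideal[1]) / seconds_per_point
    return (d_acc ** 2 + d_time ** 2) ** 0.5
```

The choice of `seconds_per_point` decides how many seconds of delay are traded against one percentage point of accuracy. Different choices can change the ranking by distance, but they do not change the Pareto front.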

5. Conclusions

In this work we propose a new architecture called Convolutional LSTM Selectional AutoEncoders (CMSAE) to detect multiple targets, such as coast, oil spills and ships, in SLAR images. The method runs multiple CMSAE networks in parallel and combines their outputs with a machine learning classifier (SVM). As a result, it needs only a few scanlines to obtain reliable results and can provide a quick response during live aircraft flights.

Different configurations of the networks and final classifier were evaluated. The best selected setup (CMSAE+SVM with ) was compared to previous methods from the state of the art (BiRNN, TSCNN, U-Net, SegNet, DeepLabv3, and SelAE) using a dataset with 51 flight sequences (with a total of 24,582 scanlines).

The proposed approach obtained the highest pixel-level average (67.39%) with the lowest lag time. This result was validated using statistical significance tests, which show that the presented method significantly outperforms SelAE. In addition to the evaluation at the pixel level, the proposed approach also obtained the best results in blob-level detection (90.85%) using the Intersection over Union metric.

One reason why the proposed approach obtains better results than the other state-of-the-art methods is that it uses specialized classifiers, which allows the networks to learn the particular characteristics of each class. Another reason is the use of a higher resolution for the scanline amplitude. This benefits the detection of ships and yields more accurate edges for the different classes.

With respect to the time needed to process each scanline of the SLAR sensor during flight, the proposed method achieved the best result (1.28 s). This is far better than the algorithm with the second-best classification results (SelAE, which takes 20 s).

In summary, the proposed method (CMSAE+SVM) obtains better average results when detecting the targets. It also detects their shape with higher precision and requires significantly less time.

With respect to the ship and spill classes, the proposed method detects 90.13% and 92.11% of these targets, respectively. It should be noted that SLAR sensors generate a large amount of noise, and in our case, the dataset contains noise in all flight sequences. This noise hinders the detection of these classes and can sometimes be confused with them.

Based only on SLAR intensity data, the accuracy of the state-of-the-art algorithms, including the proposed method, is still too low to rely exclusively on them for ship and oil spill detection. However, they can be used to assist a human operator, who can visually inspect the candidate targets.

Future work will consider the metadata provided by the sensor and the aircraft to improve the classification results. Information such as flight altitude, airplane speed or wind speed could help to better discriminate between the true targets and the noise generated by the sensors.