Influence of Drone Altitude, Image Overlap, and Optical Sensor Resolution on Multi-View Reconstruction of Forest Images

Abstract

1. Introduction

1.1. Background—Objectives and Challenges of Drone Operation in Forestry

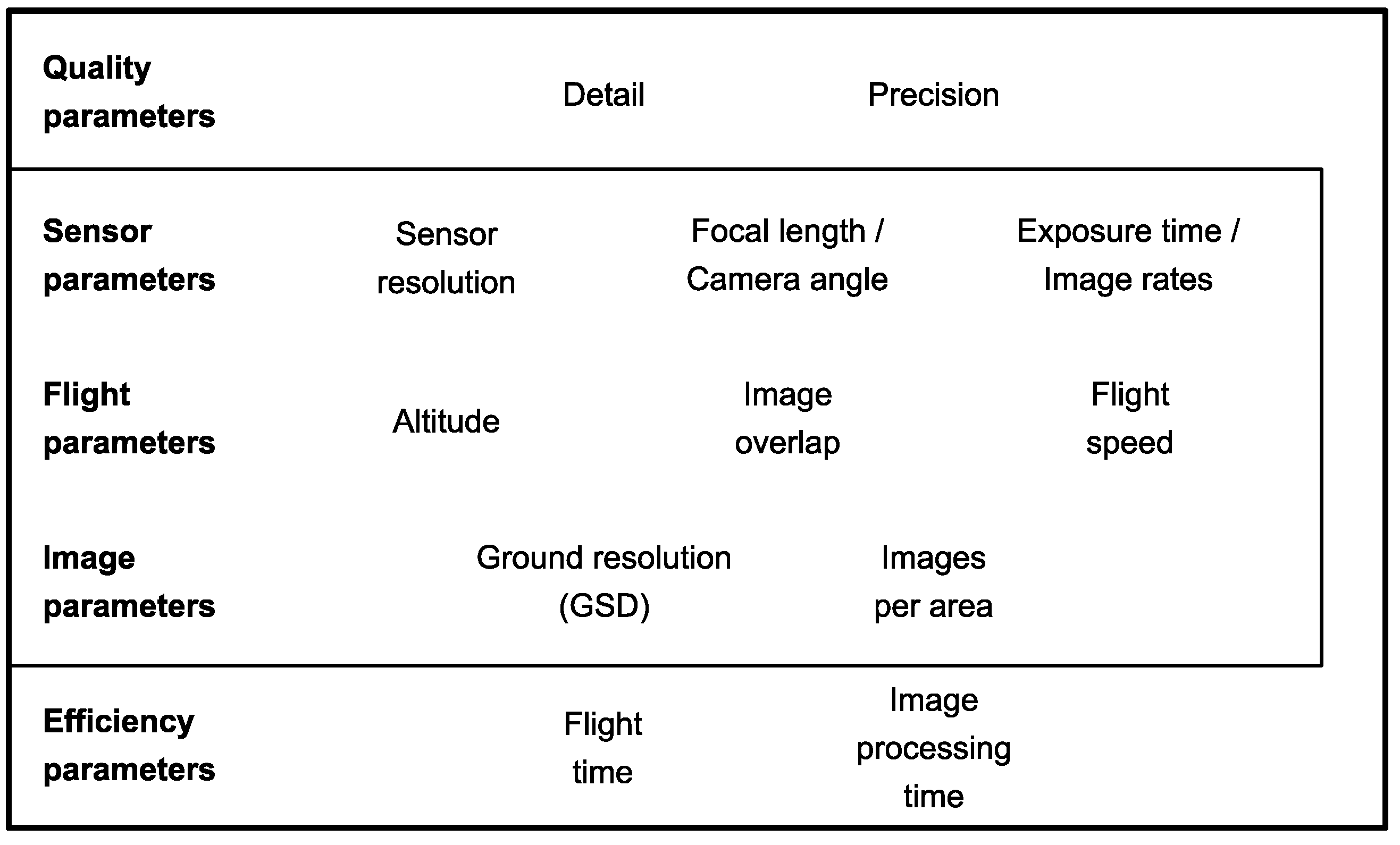

1.2. Trade-Offs of Drone Operations

1.3. Objectives

2. Materials and Methods

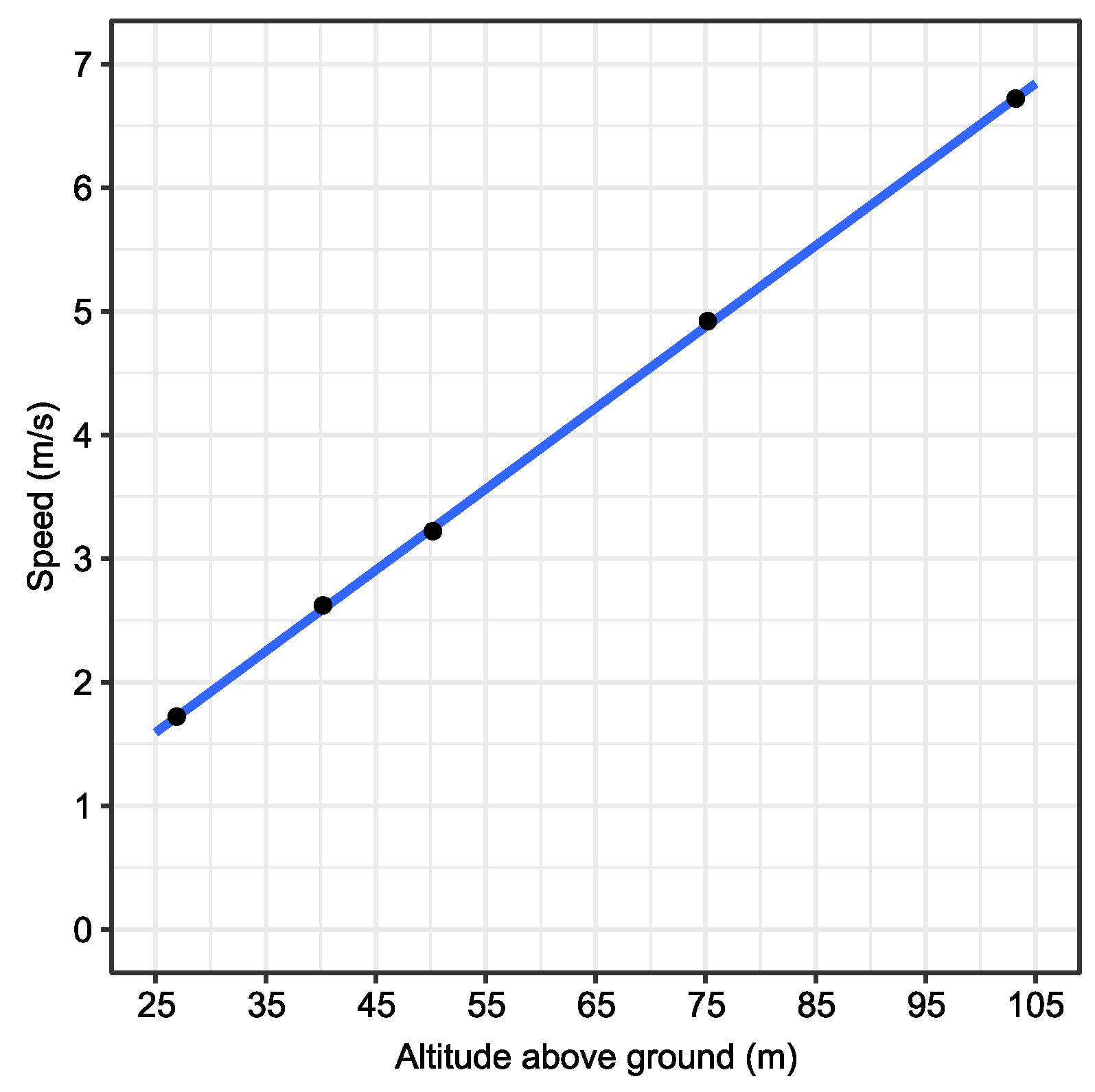

2.1. Study Site and Flight Planning

2.2. A Metric for Multi-View Reconstruction Quality

2.3. Statistical Analyses

3. Results

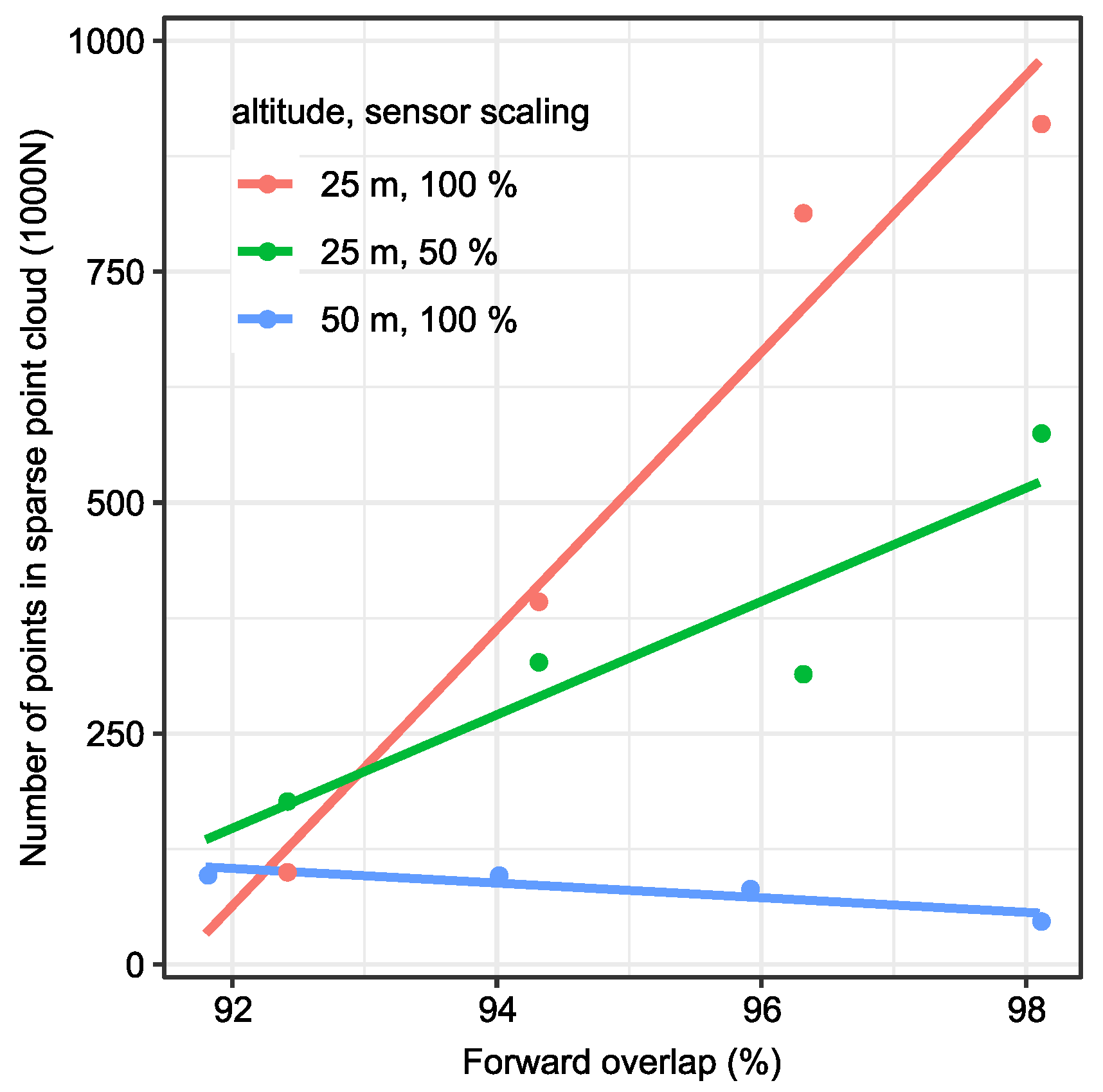

3.1. Relation between Sparse and Dense Point Clouds

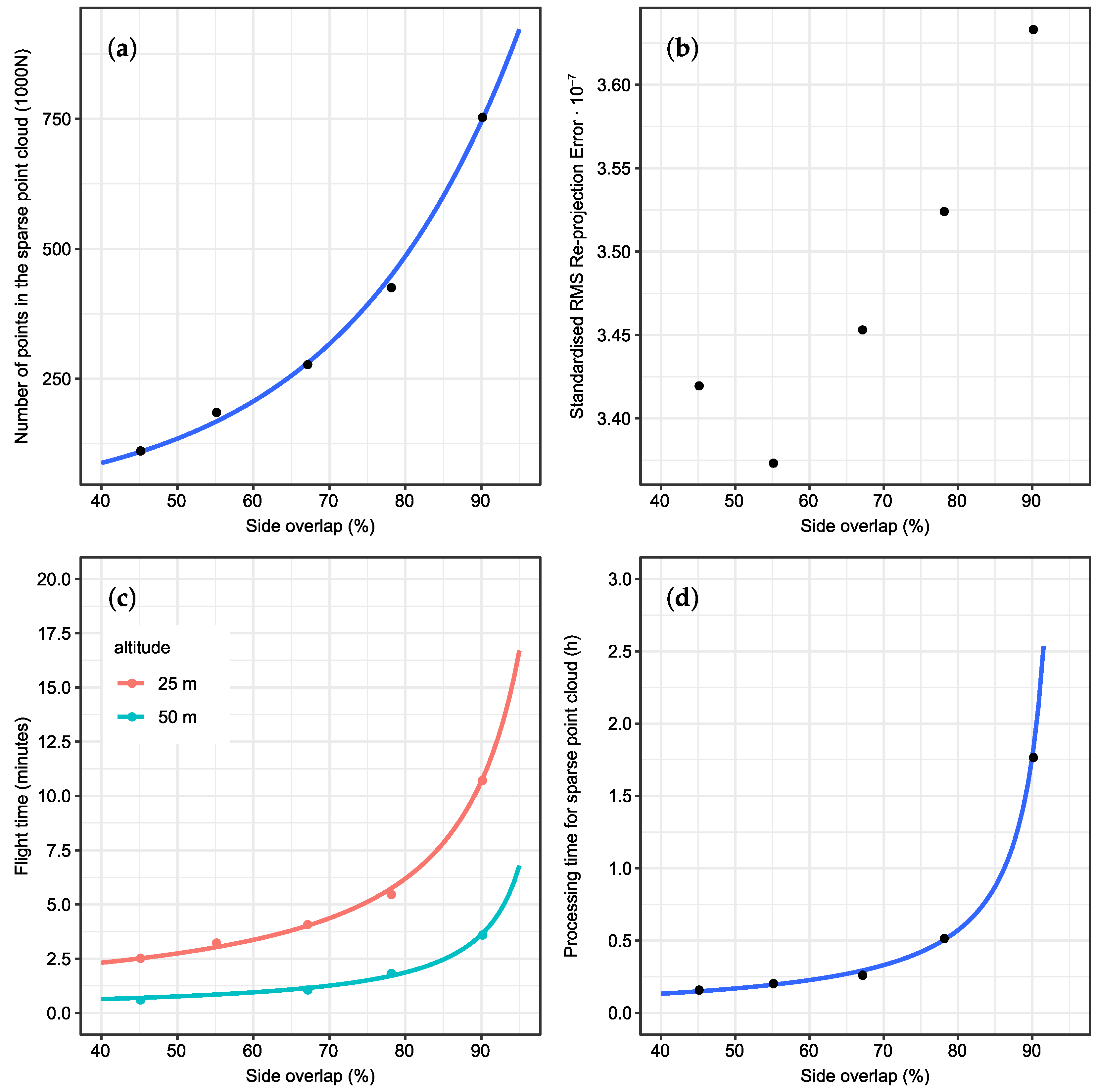

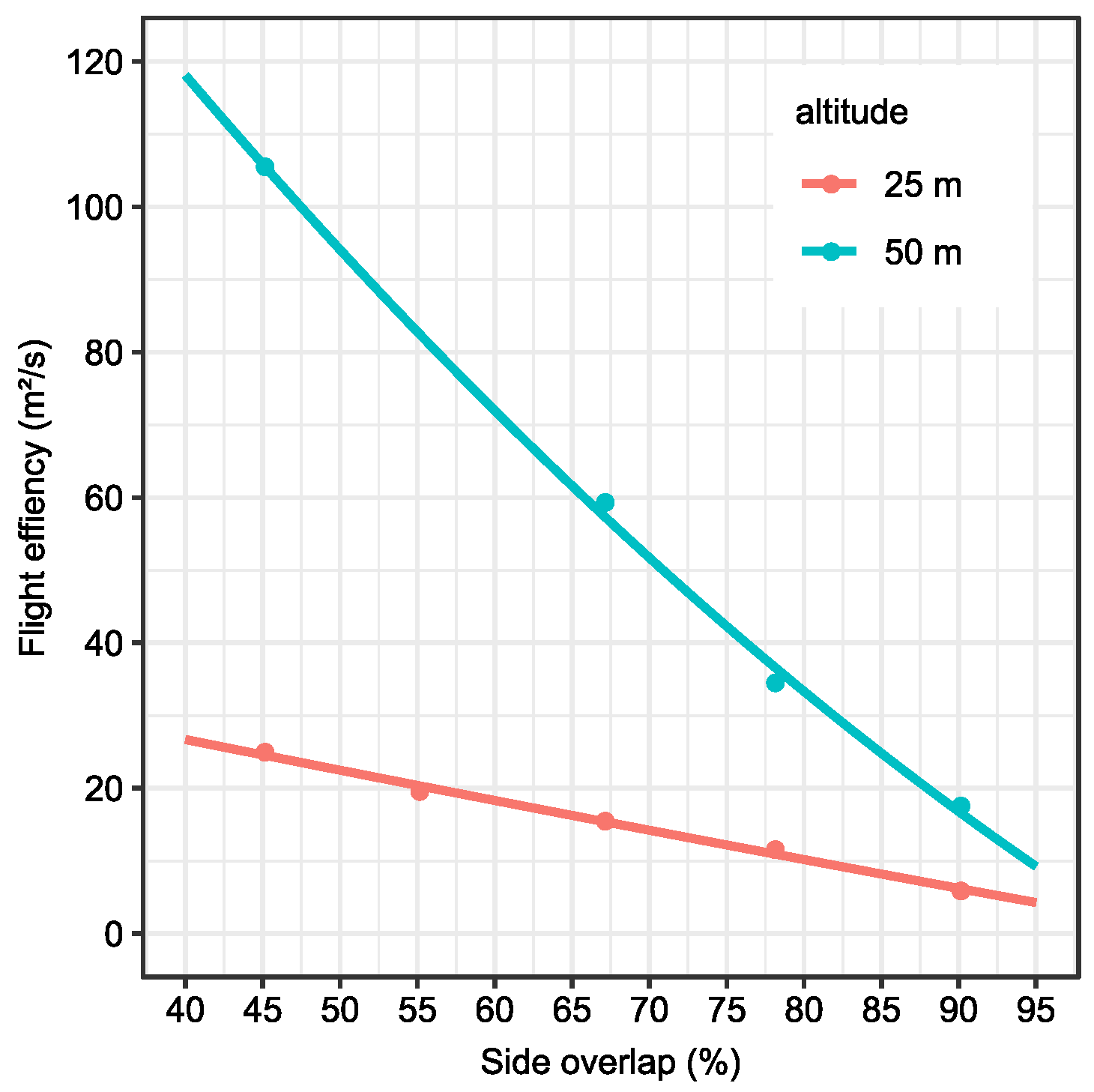

3.2. Influence of Side Overlap

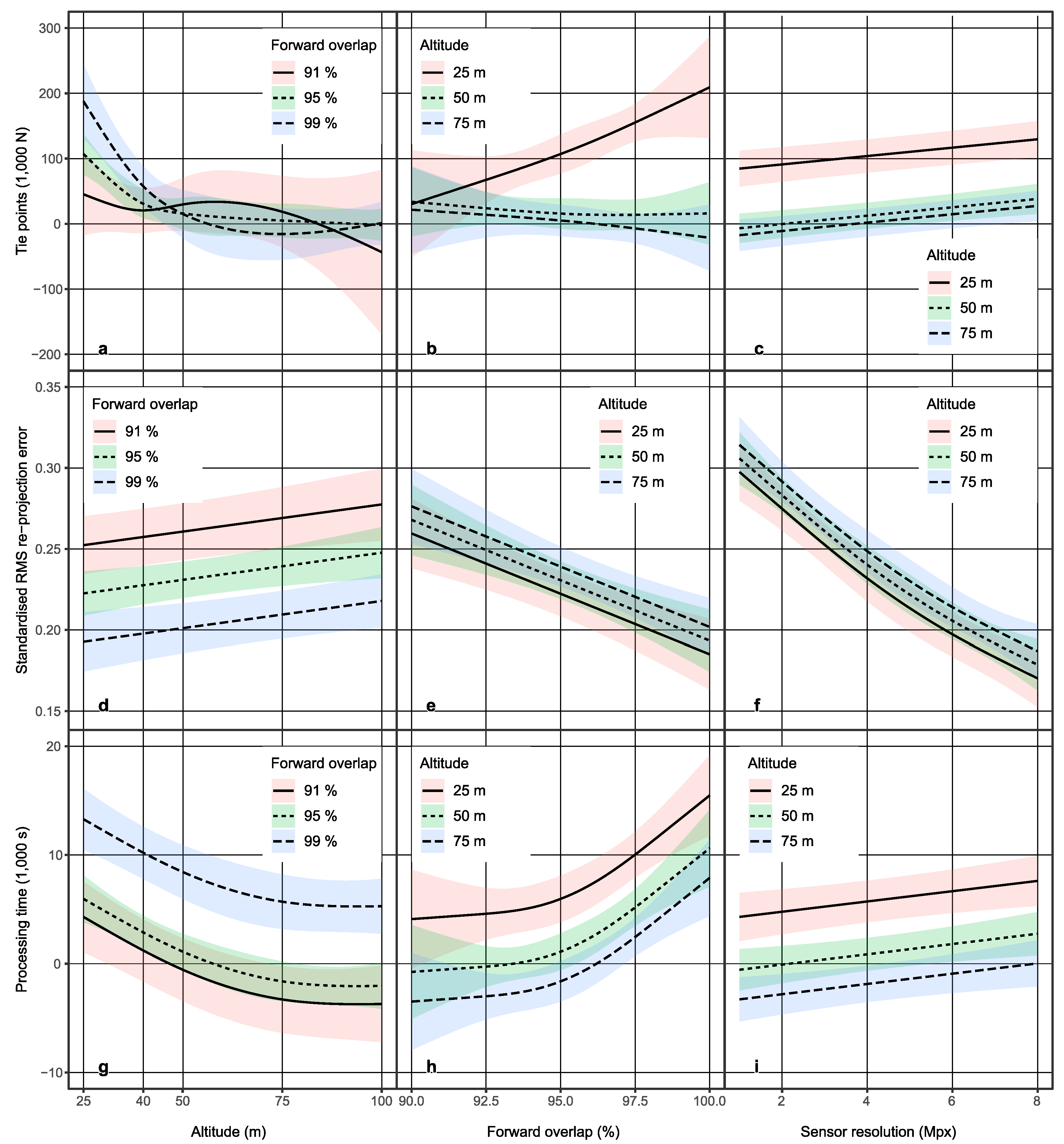

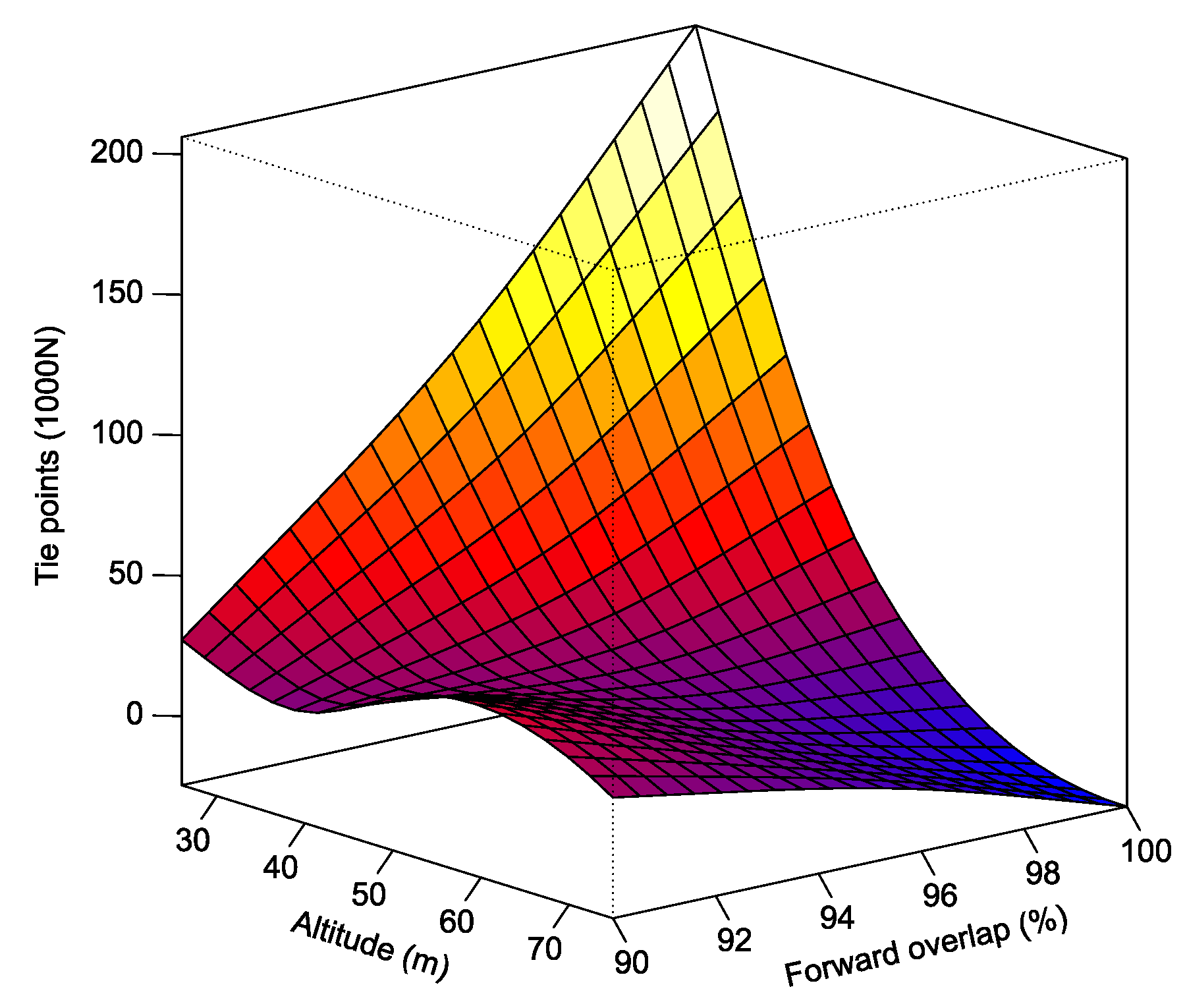

3.3. Models

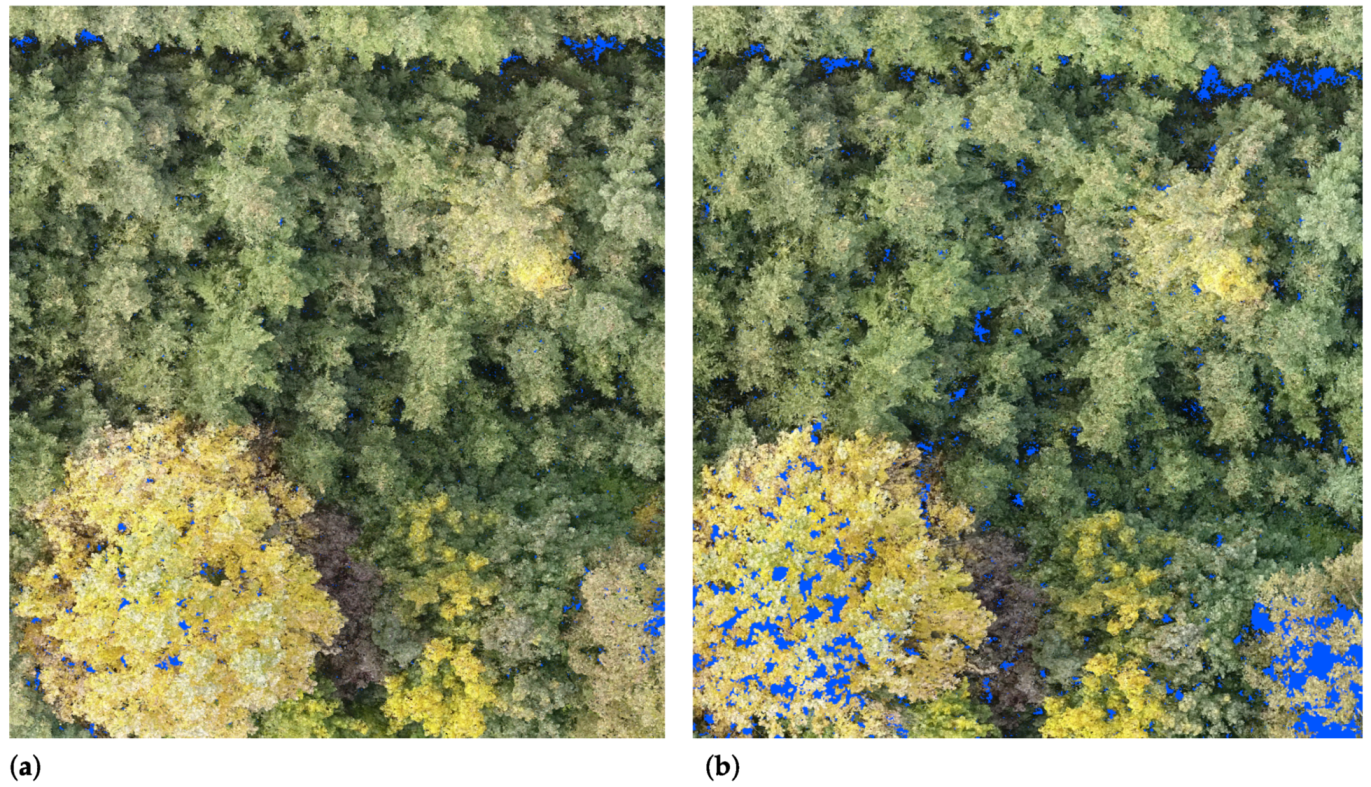

3.4. Reconstruction Details

3.5. Reconstruction Precision

3.6. Processing Time of the Sparse Reconstruction

4. Discussion

4.1. Major Findings

4.2. Comparison to Findings by Other Authors

4.3. Reasonable Ranges for Flight Parameters

4.4. Contextualisation of Our Results and Future Opportunities

5. Conclusions

- Processing video stream data in the MVG reconstruction proved successful and efficient: it allowed a constant flight speed and enabled high forward overlap rates.

- Low altitudes of 15–30 m above canopy, combined with high forward overlap rates close to 99%, gave the best reconstruction detail and accuracy. High detail in object geometry was identified as the most likely cause of this effect. This compound effect could not easily be reproduced by increasing sensor resolution at higher altitudes, since the effect of sensor resolution was only linear, whereas the compound effect was nonlinear.

- The nonlinear effects of forward overlap and altitude on processing time may constrain the use of forward overlap rates above 95% where processing time is the main limitation.

- In contrast to forward overlap, side overlap showed an optimum in reconstruction accuracy between 50% and 70%.

- This study provides a first set of reasonable ranges for selecting flight parameters.
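
The side overlap range named above can be turned into a spacing between adjacent flight lines. The following is a minimal sketch, assuming a nadir-pointing camera over a flat reference surface; the function names and the 90° across-track field of view are hypothetical illustration values and are not taken from the study.

```python
import math


def footprint_width_m(altitude_m: float, fov_deg: float) -> float:
    """Across-track ground footprint of a nadir camera over a flat surface."""
    if altitude_m <= 0 or not 0 < fov_deg < 180:
        raise ValueError("altitude must be > 0 and FOV must be in (0, 180) degrees")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def line_spacing_m(altitude_m: float, fov_deg: float, side_overlap: float) -> float:
    """Distance between adjacent flight lines for a given side overlap fraction."""
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be a fraction in [0, 1)")
    return footprint_width_m(altitude_m, fov_deg) * (1.0 - side_overlap)


# Hypothetical example: 30 m above the reference surface, 90 degree FOV (assumed).
for overlap in (0.5, 0.7):
    spacing = line_spacing_m(30.0, 90.0, overlap)
    print(f"side overlap {overlap:.0%}: line spacing {spacing:.1f} m")
```

Because the footprint grows linearly with altitude, the same side overlap needs proportionally closer flight lines at lower altitudes, which is one reason low-altitude flights take more passes to cover the same area.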

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALS | Airborne LiDAR |
| GNSS | Global Navigation Satellite System |
| GSD | Ground Sample Distance |
| FOV | Field-Of-View |
| LiDAR | Light Detection And Ranging |
| Mpx | Megapixels |
| MVG | Multi-View Reconstruction |
| px | Pixels |
| RMSRE | Root Mean Squared Re-projection Error |
| SIFT | Scale-Invariant Feature Transform |
| SRMSRE | Standardised Root Mean Squared Re-projection Error |
| UAV | Unmanned Aerial Vehicle |

| Altitude above ground (m) | Spatial resolution (cm/px) | Altitude above canopy tips (m) | Spatial resolution (cm/px) |
|---|---|---|---|
| 25 | 1.2 | 15.5 | 0.7 |
| 40 | 1.7 | 30.5 | 1.3 |
| 50 | 2.2 | 40.5 | 1.8 |
| 75 | 3.2 | 65.5 | 2.8 |
| 100 | 4.5 | 90.5 | 4.1 |
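
The tabulated values suggest that spatial resolution grows roughly in proportion to the distance between sensor and surface. As a hedged illustration, the sketch below fits a line through the origin to each pair of columns by least squares; the variable and function names are hypothetical, and the proportional model is an assumption used for illustration rather than the authors' method.

```python
# Values copied from the table above.
ground_altitude_m = [25.0, 40.0, 50.0, 75.0, 100.0]
ground_gsd_cm_px = [1.2, 1.7, 2.2, 3.2, 4.5]
canopy_altitude_m = [15.5, 30.5, 40.5, 65.5, 90.5]
canopy_gsd_cm_px = [0.7, 1.3, 1.8, 2.8, 4.1]


def slope_through_origin(x: list[float], y: list[float]) -> float:
    """Least-squares slope k of the model y = k * x (no intercept)."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


k_ground = slope_through_origin(ground_altitude_m, ground_gsd_cm_px)
k_canopy = slope_through_origin(canopy_altitude_m, canopy_gsd_cm_px)

print(f"ground reference: {k_ground:.4f} cm/px per metre of altitude")
print(f"canopy reference: {k_canopy:.4f} cm/px per metre of altitude")
```

Both fits give a similar slope, which is what a fixed camera geometry would predict: the two column pairs describe the same flights measured against different reference surfaces.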

| Sampling rate (images/s) | 25 m above ground | 40 m above ground | 50 m above ground | 75 m above ground | 100 m above ground |
|---|---|---|---|---|---|
| 4 | 98.1% | 98.2% | 98.1% | 98.3% | 98.8% |
| 3 | 96.3% | 96.2% | 95.9% | 96.3% | 97.5% |
| 2 | 94.3% | 94.4% | 94.0% | 94.5% | 96.0% |
| 1 | 92.5% | 92.2% | 91.9% | 92.5% | 94.4% |
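
When frames are sampled from a video stream, forward overlap follows from the distance flown between two consecutive frames relative to the along-track image footprint. The sketch below shows this standard geometric relation, assuming a nadir camera over a flat surface; the function name, the flight speed, and the along-track field of view are hypothetical values chosen for illustration and do not come from the study.

```python
import math


def forward_overlap(altitude_m: float, fov_along_deg: float,
                    speed_m_s: float, frames_per_s: float) -> float:
    """Fraction of the along-track footprint shared by consecutive frames."""
    if altitude_m <= 0 or speed_m_s < 0 or frames_per_s <= 0:
        raise ValueError("altitude and frame rate must be > 0, speed must be >= 0")
    # Along-track ground footprint of one frame.
    footprint_m = 2.0 * altitude_m * math.tan(math.radians(fov_along_deg) / 2.0)
    # Distance flown between two sampled frames.
    baseline_m = speed_m_s / frames_per_s
    # Clamp at zero: frames further apart than one footprint do not overlap.
    return max(0.0, 1.0 - baseline_m / footprint_m)


# Hypothetical flight: 25 m altitude, 60 degree along-track FOV, 2 m/s speed.
for rate in (4, 3, 2, 1):
    overlap = forward_overlap(25.0, 60.0, 2.0, rate)
    print(f"{rate} images/s: forward overlap {overlap:.1%}")
```

Halving the sampling rate doubles the baseline between frames, so the non-overlapping fraction doubles as well. This is why overlap rates drop only gradually when frames are thinned from a dense video stream.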

| Scaling Factor (%) | Image Dimensions (px) | Sensor Area (Mpx) |
|---|---|---|
| 100 | 3840 × 2160 | 8.3 |
| 75 | 2880 × 1620 | 4.7 |
| 50 | 1920 × 1080 | 2.1 |
| 25 | 960 × 540 | 0.5 |
| 20 | 768 × 432 | 0.3 |
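
The image dimensions and sensor areas in the table follow directly from applying the scaling factor to each side of the 3840 × 2160 px frame. A minimal sketch of that arithmetic is given below; the function names are hypothetical, and rounding to whole pixels is an assumption that happens to be exact for the listed factors.

```python
FULL_FRAME_PX = (3840, 2160)  # full-resolution frame size from the table


def scaled_dimensions(scale_percent: float,
                      full_frame: tuple[int, int] = FULL_FRAME_PX) -> tuple[int, int]:
    """Image width and height after scaling each side by scale_percent."""
    if not 0 < scale_percent <= 100:
        raise ValueError("scale_percent must be in (0, 100]")
    width, height = full_frame
    return round(width * scale_percent / 100), round(height * scale_percent / 100)


def megapixels(width_px: int, height_px: int) -> float:
    """Pixel count expressed in megapixels (10^6 px)."""
    return width_px * height_px / 1e6


for scale in (100, 75, 50, 25, 20):
    w, h = scaled_dimensions(scale)
    print(f"{scale:>3}%: {w} x {h} px, {megapixels(w, h):.1f} Mpx")
```

Since the factor applies to both sides, the pixel count falls with the square of the scaling factor: a 50% scale leaves only a quarter of the pixels.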

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; van Aardt, J.; Kunneke, A.; Seifert, T. Influence of Drone Altitude, Image Overlap, and Optical Sensor Resolution on Multi-View Reconstruction of Forest Images. Remote Sens. 2019, 11, 1252. https://doi.org/10.3390/rs11101252